Towards an End-to-End Digital Framework for Precision Crop Disease Diagnosis and Management Based on Emerging Sensing and Computing Technologies: State over Past Decade and Prospects

Abstract

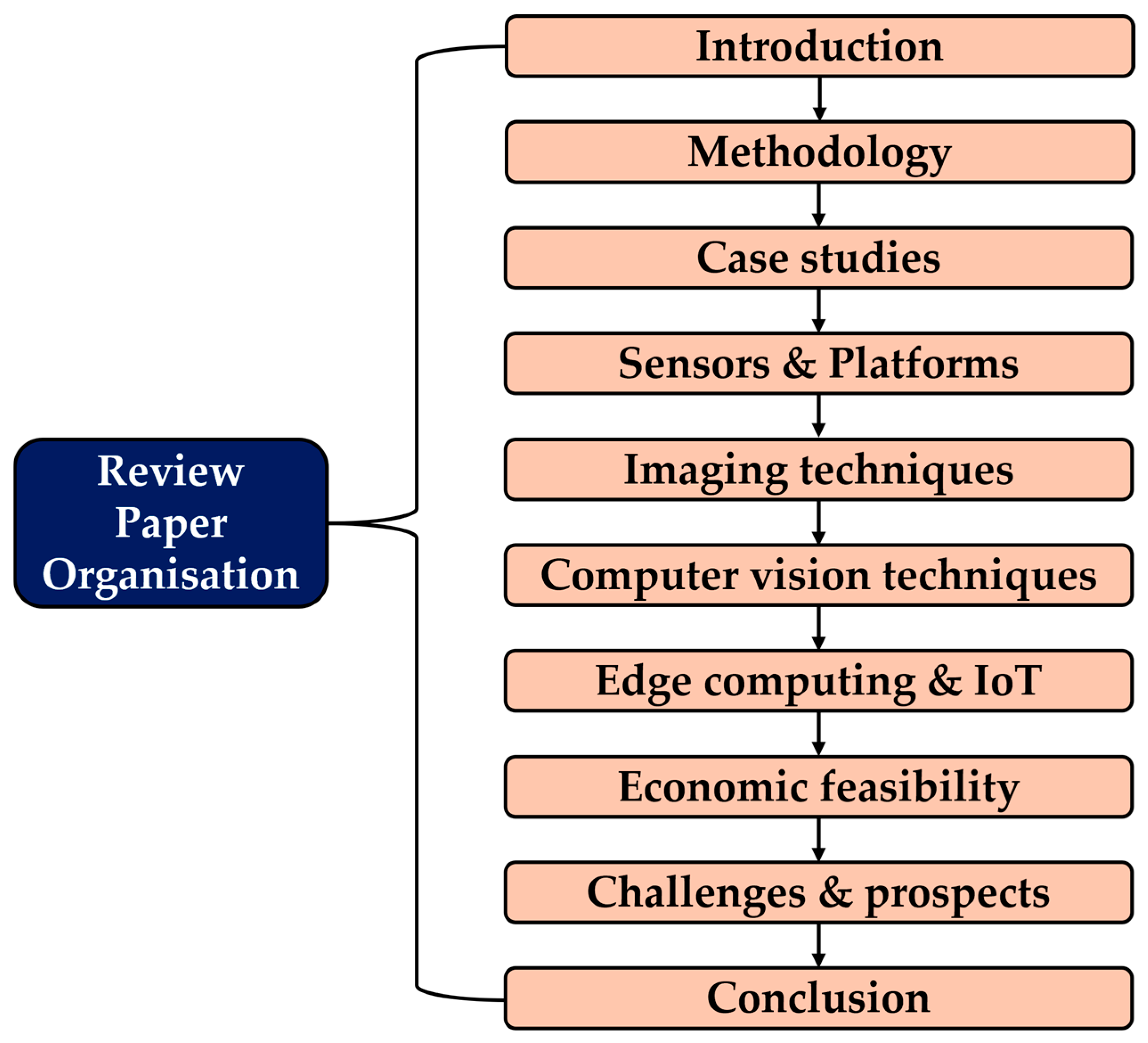

1. Introduction

- How can sensor fusion from multiple platforms (e.g., UGVs, UAVs, satellites) be optimized for accurate field-scale disease mapping?

- What are the most effective models for deploying edge-AI systems that process and act on data locally in resource-limited agricultural environments?

- How can IoT-based disease monitoring networks be designed for long-term scalability and sustainability in smallholder farming systems?

- How can real-time disease forecasts be integrated into automated treatment systems for closed-loop precision crop protection?

- How do the various sensing platforms vary in resolution, scalability, and effectiveness for disease detection above and below the crop canopy?

- How has imaging evolved from 2D to advanced multispectral and 3D methods, and which technique leads in early disease diagnosis?

- How have DL and CNN-based computer vision outperformed traditional methods in automating and improving disease detection accuracy?

- How do IoT and edge computing enable real-time, resource-efficient crop disease detection and decision-making across varied farm settings?

- What are the key economic, infrastructural, and policy factors influencing the adoption and scalability of emerging sensing and AI-driven technologies for end-to-end crop disease diagnosis?

2. Review Methodology and Article Selection

2.1. Article Retrieval Criteria

2.1.1. Inclusion Criteria

- Relevance to subject of review: A key criterion for inclusion was the study’s alignment with the central theme of plant disease detection and its associated technologies. Selected studies were required to cover at least one relevant aspect of the review, such as sensors and platforms used for plant disease detection, imaging techniques for plant disease detection, development of ML/DL models and computer vision techniques for plant image analysis, or edge computing and IoT for end-to-end integrated plant disease management systems. Relevance was assessed by reviewing the study’s title, abstract, objectives, and methodology to confirm its consistency with the scope of the paper.

- Publication timeframe: The review emphasizes recent innovations and emerging technologies in the field over the past decade (2015–2025), although earlier studies were also examined to gain a comprehensive understanding of how the field has advanced over time.

- Article type and subject areas: The literature search for this review primarily targeted review and research articles published in the subject areas of agricultural and biological sciences, computer science, and engineering. The selected articles comprise journal articles, conference papers, theses, and dissertations.

- Language: To maintain consistency and ensure broad accessibility, only English-language publications were considered in this review. This approach supported clarity and uniformity in the analysis of the selected literature.

2.1.2. Exclusion Criteria

- Irrelevance to subject of review: Studies that do not directly address any aspect of the review topic were excluded. Irrelevance was determined mostly by critically examining the study’s abstract.

- Out-of-scope publications: Studies published prior to 2015 were completely excluded from this review, as they do not fall within the scope of the review.

- Non-journal, non-conference paper, non-thesis/dissertation: Articles such as book chapters, editorials, and short communications, which lack a substantial research component, were excluded from this review.

- Non-English publications: To maintain consistency and accessibility, studies published in languages other than English were excluded. This also ensured that the reviewed literature could be accurately interpreted by the broader scientific community.
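The inclusion and exclusion criteria above can be read as a sequence of filters applied to each retrieved record. The following is a minimal sketch of that idea, not the screening tool used in this review: the `Record` fields, the `exclusion_reason` and `screen` helpers, and the reason labels are all hypothetical names introduced for illustration. Relevance is assumed to be a flag set by a human reviewer after reading the title and abstract, the year bounds follow the 2015–2025 window and the pre-2015 exclusion in Section 2.1.2, and the subject-area criterion is omitted for brevity.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Record:
    """Hypothetical bibliographic record; field names are illustrative only."""
    title: str
    year: int
    language: str
    doc_type: str   # e.g. "journal", "conference", "thesis", "book chapter"
    relevant: bool  # outcome of the manual title/abstract relevance check


# Article types retained by the inclusion criteria.
ALLOWED_TYPES = {"journal", "conference", "thesis", "dissertation"}
# Assumed bounds of the review window (2015-2025).
FIRST_YEAR, LAST_YEAR = 2015, 2025


def exclusion_reason(rec: Record) -> Optional[str]:
    """Return the first exclusion criterion the record meets, or None if retained."""
    if not rec.relevant:
        return "irrelevant to subject of review"
    if not (FIRST_YEAR <= rec.year <= LAST_YEAR):
        return "outside publication timeframe"
    if rec.doc_type.lower() not in ALLOWED_TYPES:
        return "excluded article type"
    if rec.language.lower() != "english":
        return "non-English publication"
    return None


def screen(records: List[Record]) -> Tuple[List[Record], List[Tuple[Record, str]]]:
    """Split records into retained ones and (record, reason) pairs for excluded ones."""
    kept: List[Record] = []
    dropped: List[Tuple[Record, str]] = []
    for rec in records:
        reason = exclusion_reason(rec)
        if reason is None:
            kept.append(rec)
        else:
            dropped.append((rec, reason))
    return kept, dropped


if __name__ == "__main__":
    # Made-up records, used only to exercise the filters.
    sample = [
        Record("UAV hyperspectral disease mapping", 2019, "English", "journal", True),
        Record("Early rice disease recognition", 2008, "English", "journal", True),
    ]
    kept, dropped = screen(sample)
    print(len(kept), "retained;", [reason for _, reason in dropped])
```

Recording a reason for every excluded record, rather than only a pass/fail flag, makes it straightforward to report how many articles were removed at each screening stage.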

2.2. Article Selection Process

2.2.1. Database Search

2.2.2. Keywords Search

2.2.3. Initial Screening

2.2.4. Text Evaluation and Final Selection

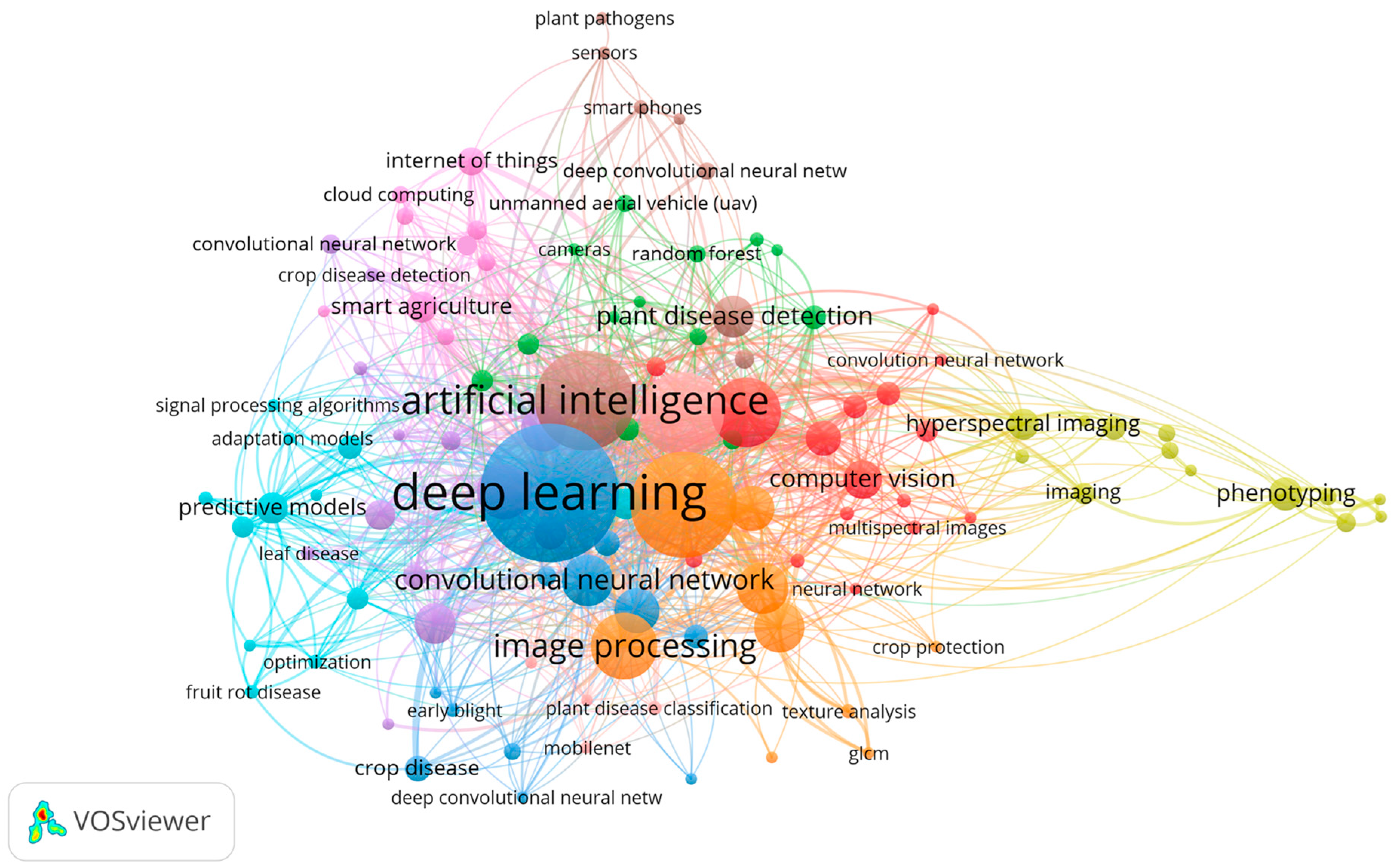

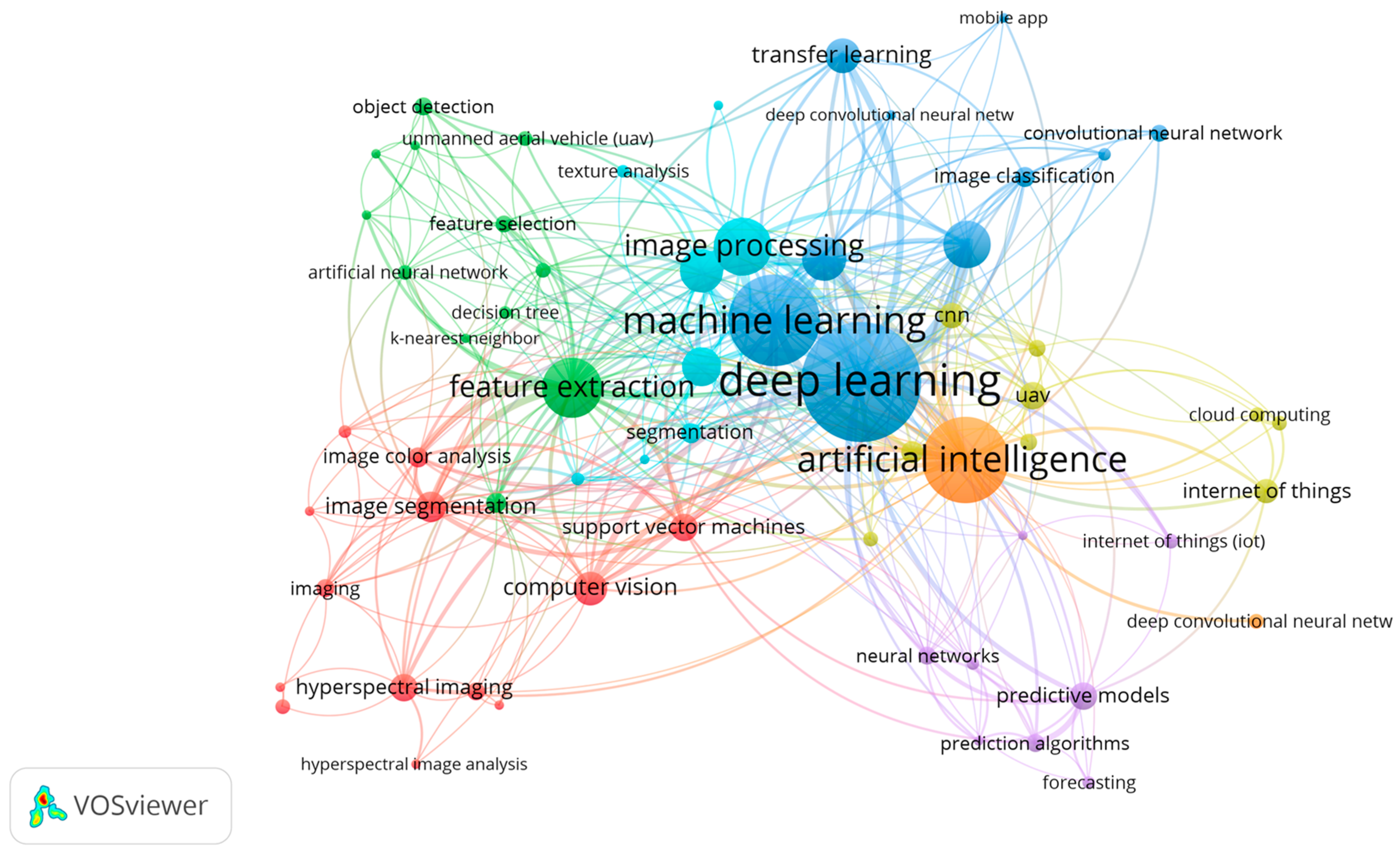

2.3. Keyword Analysis

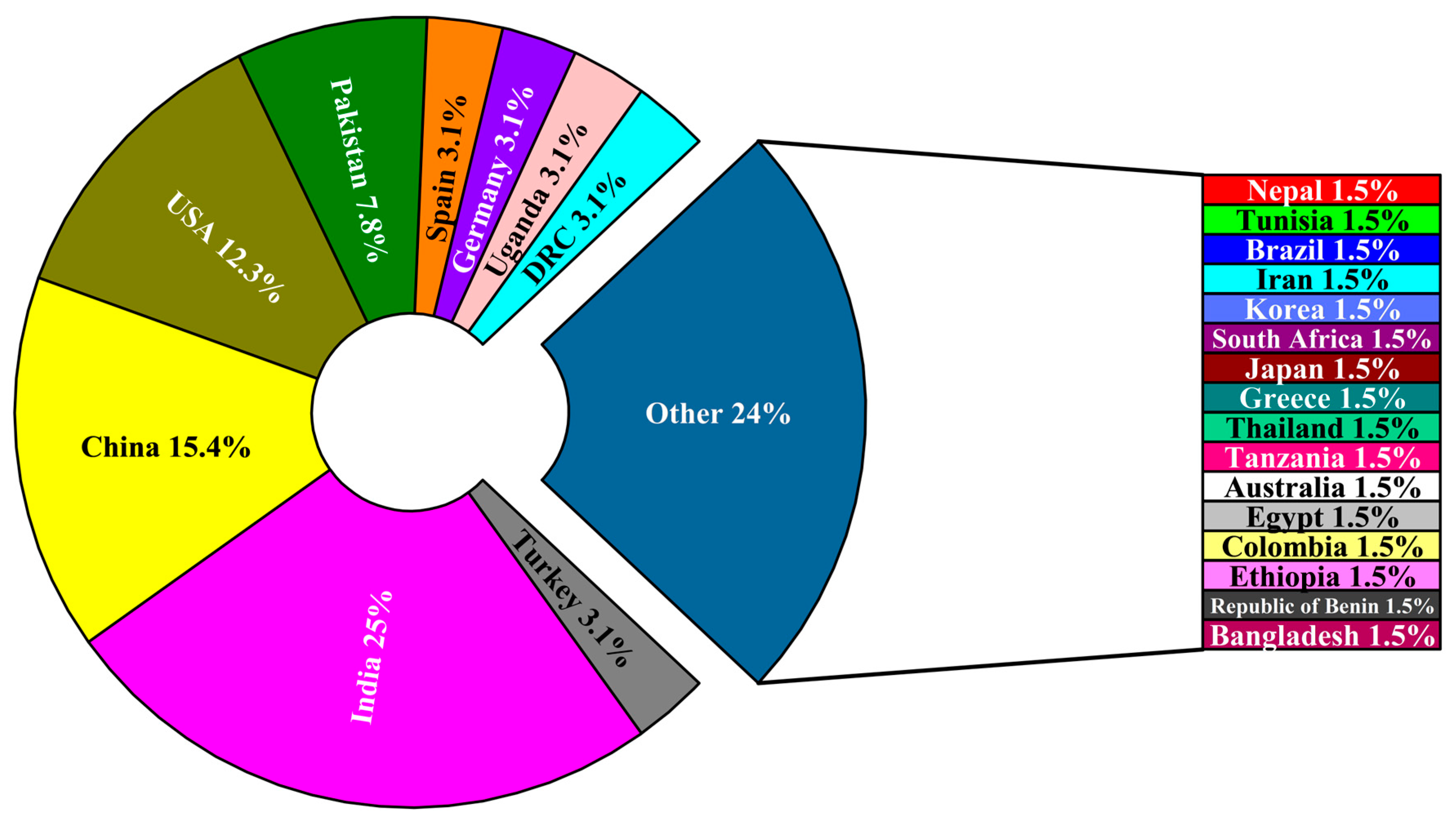

3. Case Studies in Crop Disease Detection

4. Sensors and Platforms Used for Crop Disease Detection

4.1. Handheld Biosensors and Laboratory Setups

4.2. Smartphones and Mobile Apps

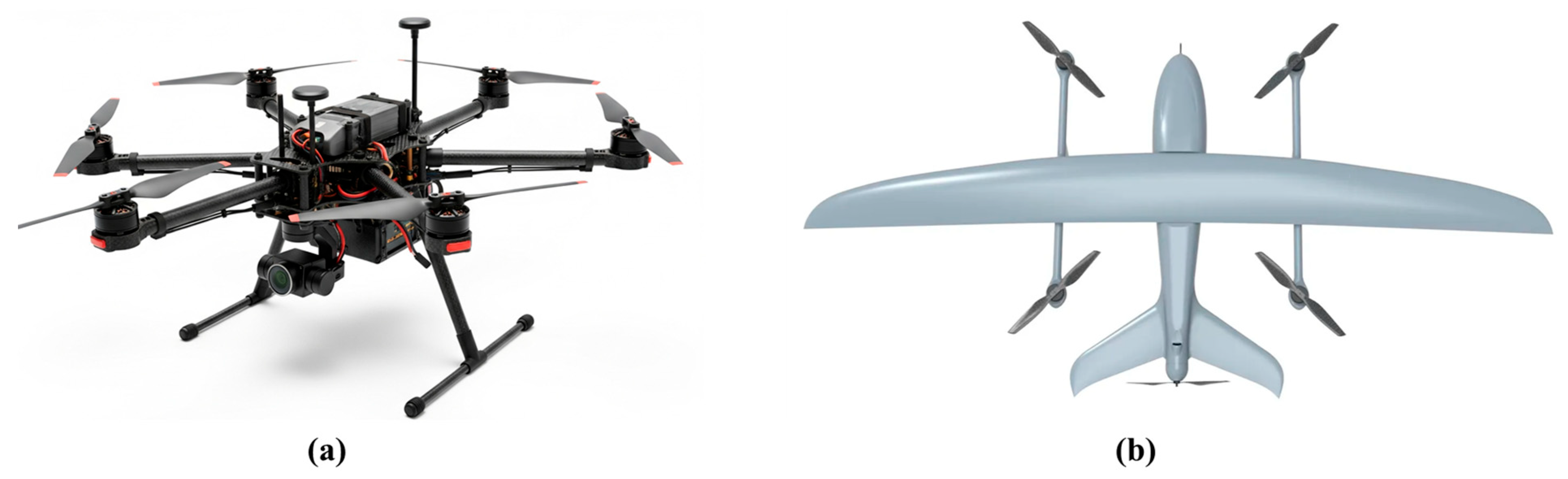

4.3. Unoccupied Aerial Vehicles

4.4. Unoccupied Ground Vehicles

| Reference | Technique | Platform/Sensor | Platform Design | Findings | Limitation |
|---|---|---|---|---|---|
| [138] | Artificial neural network (ANN) | UAV and laboratory setup | (a) DJI Matrice 600 Pro Hexacopter (DJI, Shenzhen, China) with hyperspectral camera, (b) benchtop hyperspectral imaging system | Satisfactory results were obtained in detecting diseases under both laboratory and field (UAV-based) conditions | Lack of real-time capability, as data processing and analysis rely solely on computer software |
| [109] | Deep learning and image processing | Smartphone | Smartphone mobile app | The developed model achieved a detection accuracy of 98.79% | High computational resource requirements |
| [111] | Deep learning and image processing | Smartphone | Smartphone mobile app | The developed system achieved high accuracy when tested | Reliance on a relatively small dataset of 659 images |
| [139] | Image processing and deep learning | UAV | Quadcopter UAV with MAPIR Survey2 camera sensor (MAPIR, Inc., San Diego, CA, USA) | The proposed method enabled the detection of vine symptoms | Small training sample, which reduced the performance of the model |
| [67] | Deep learning | Smartphone | Smartphone mobile app | The developed system was able to detect and classify diseases with a high confidence score | Low throughput, as a phone cannot be used to cover a large area |
| [140] | Deep multiple instance learning | Smartphone | Smartphone mobile app | Processing speed of 1 s/image over a mobile 4G service, which satisfies real-time application | Inability to handle the high storage and computational demands of DL models |
| [141] | Deep neural networks | UAS | DJI Mavic 2 Pro (DJI, Shenzhen, China) equipped with ZED depth camera (StereoLabs, San Francisco, CA, USA) and Jetson Nano (NVIDIA, Santa Clara, CA, USA) | Allows for efficient data collection and real-time analysis | Payload constraints, high data bandwidth, and high power consumption |
| [142] | Machine learning (Random Forest Classifier) | UAV | DJI Spreading Wings S1000 Octocopter (DJI, Shenzhen, China) with multispectral camera | The developed system achieved good performance in distinguishing healthy from infected wheat | Reduced spatial resolution at altitude, reliance on ground calibration, and lack of real-time capability |
| [2] | Deep transfer learning | Handheld | Android-based application | The developed system achieved a recognition accuracy of 99.53% in real time | The developed system was tested only on images collected under laboratory conditions |

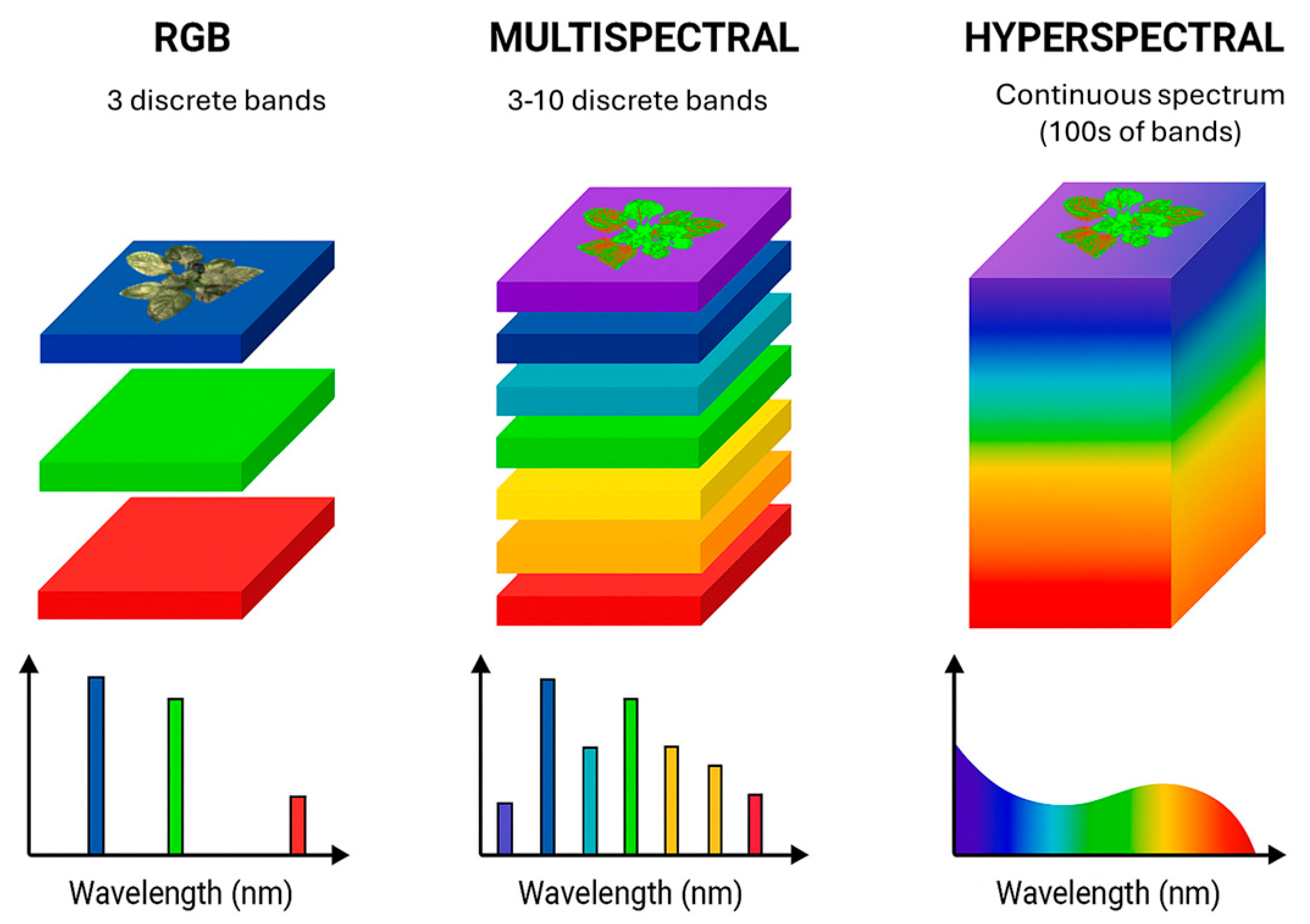

5. Imaging Techniques for Crop Disease Detection

5.1. Visible Light Imaging

5.2. Multispectral Imaging

5.3. Hyperspectral Imaging

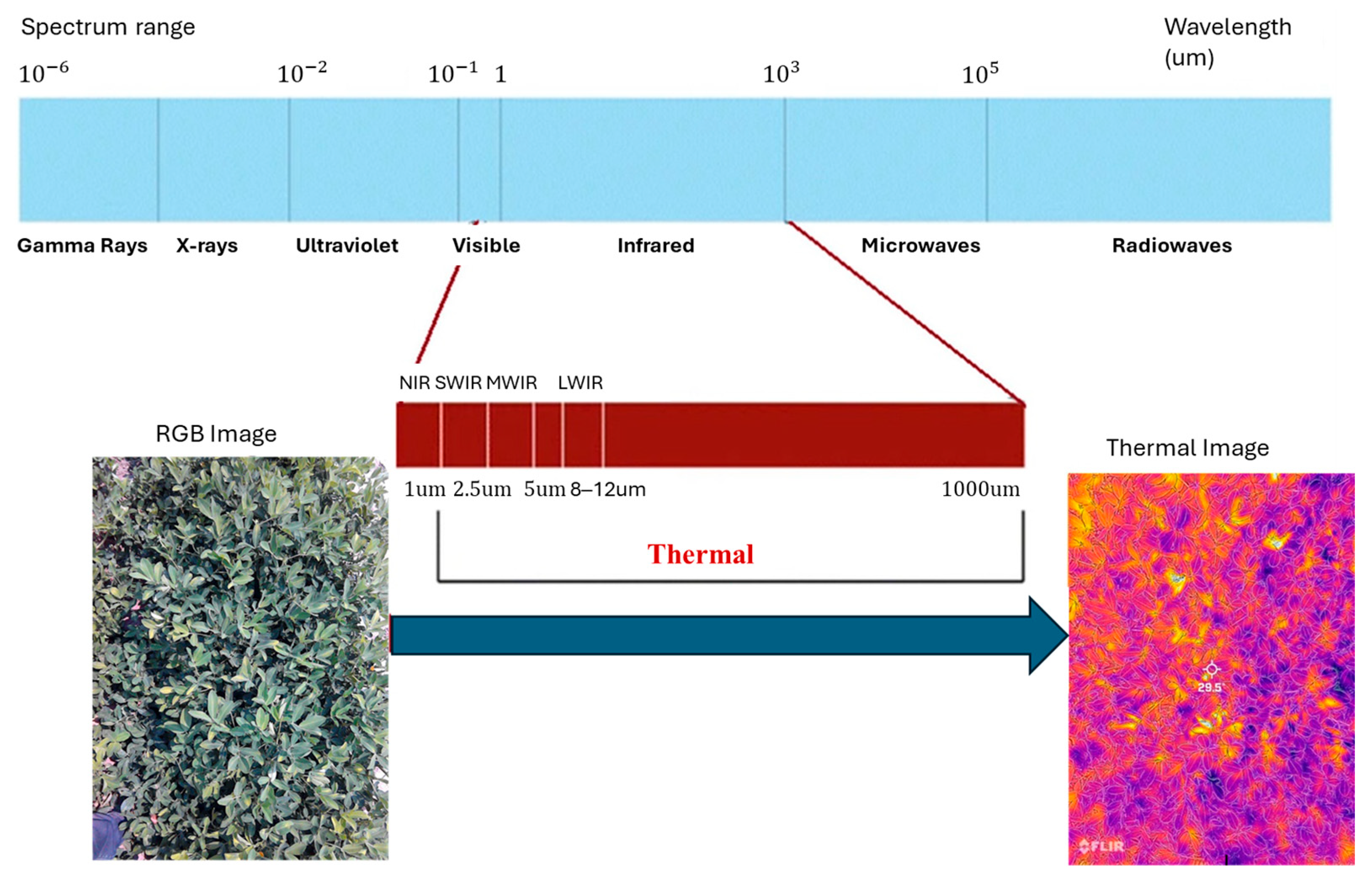

5.4. Thermal Imaging

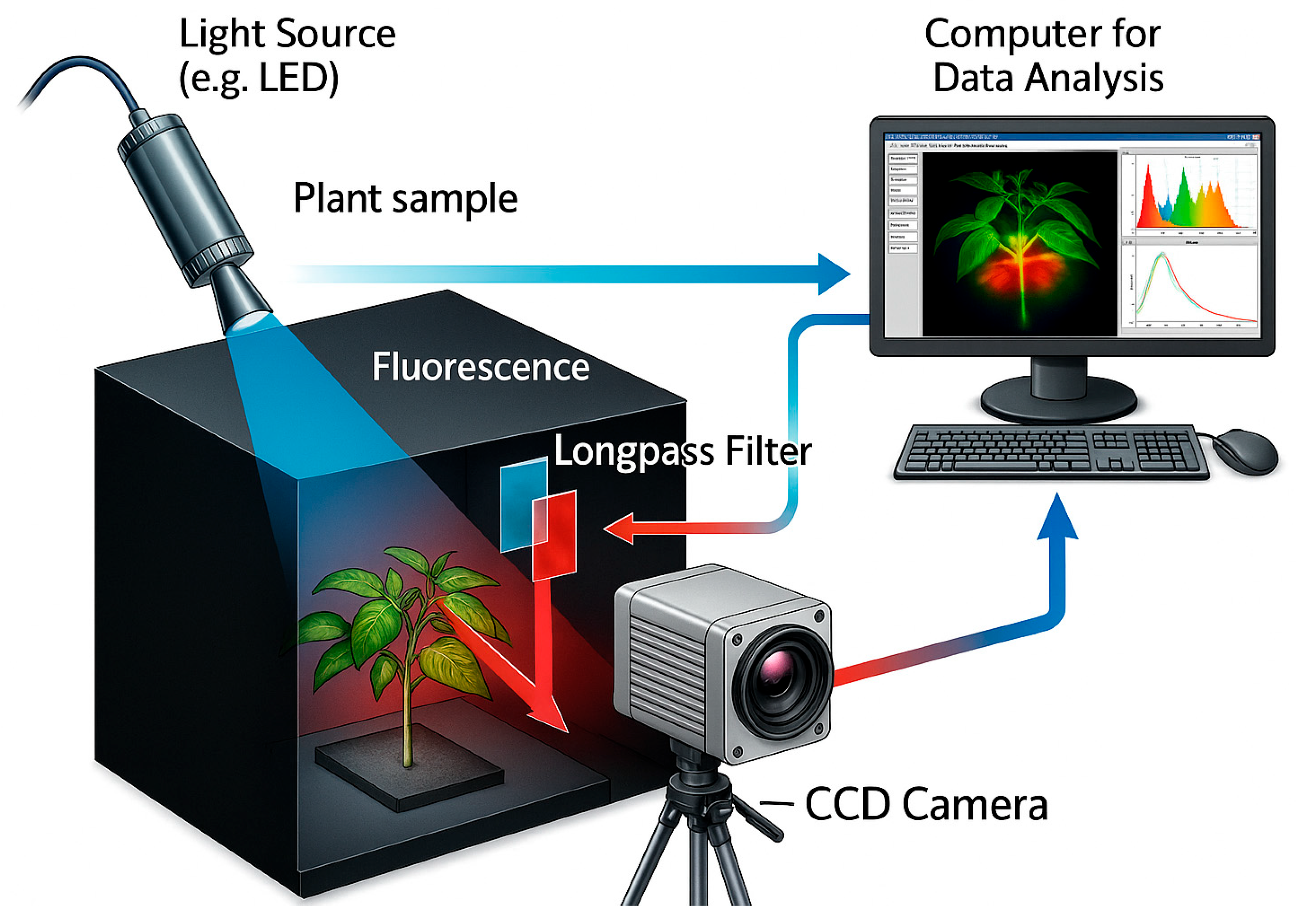

5.5. Fluorescence Imaging

5.6. Three-Dimensional Imaging

6. Computer Vision Techniques for Crop Disease Detection

6.1. Traditional Image Processing Techniques

6.2. Classical Machine Learning Techniques

6.3. Deep Learning Techniques

7. Edge Computing and Internet of Things (IoT) for Crop Diagnosis

8. Economic Feasibility, Accessibility, and Recommendations for Emerging End-to-End Solutions for Crop Disease Diagnosis

9. Challenges and Prospects

10. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using Deep Learning for Image-Based Plant Disease Detection. Front. Plant Sci. 2016, 7, 215232.

- Yang, M.; Seklouli, A.S.; Ren, L.; He, Y.; Yu, X.; Ouzrout, Y. A New Mobile Diagnosis System for Estimation of Crop Disease Severity Using Deep Transfer Learning. Crop Prot. 2024, 184, 106776.

- Singh, R.P.; Singh, P.K.; Rutkoski, J.; Hodson, D.P.; He, X.; Jørgensen, L.N.; Hovmøller, M.S.; Huerta-Espino, J. Disease Impact on Wheat Yield Potential and Prospects of Genetic Control. Annu. Rev. Phytopathol. 2016, 54, 303–322.

- Ji, M.; Zhang, K.; Wu, Q.; Deng, Z. Multi-Label Learning for Crop Leaf Diseases Recognition and Severity Estimation Based on Convolutional Neural Networks. Soft Comput. 2020, 24, 15327–15340.

- Ampatzidis, Y.; De Bellis, L.; Luvisi, A. iPathology: Robotic Applications and Management of Plants and Plant Diseases. Sustainability 2017, 9, 1010.

- Cruz, A.C.; Luvisi, A.; De Bellis, L.; Ampatzidis, Y. X-FIDO: An Effective Application for Detecting Olive Quick Decline Syndrome with Deep Learning and Data Fusion. Front. Plant Sci. 2017, 8, 1741.

- Ma, J.; Du, K.; Zheng, F.; Zhang, L.; Gong, Z.; Sun, Z. A Recognition Method for Cucumber Diseases Using Leaf Symptom Images Based on Deep Convolutional Neural Network. Comput. Electron. Agric. 2018, 154, 18–24.

- Wang, Q.; Qi, F. Tomato Diseases Recognition Based on Faster RCNN. In Proceedings of the 2019 10th International Conference on Information Technology in Medicine and Education (ITME), Qingdao, China, 23–25 August 2019; IEEE: New York, NY, USA, 2019; pp. 772–776.

- George, R.; Thuseethan, S.; Ragel, R.G.; Mahendrakumaran, K.; Nimishan, S.; Wimalasooriya, C.; Alazab, M. Past, Present and Future of Deep Plant Leaf Disease Recognition: A Survey. Comput. Electron. Agric. 2025, 234, 110128.

- USDA. Precision Agriculture in Crop Production|NIFA. Available online: https://www.nifa.usda.gov/grants/programs/precision-geospatial-sensor-technologies-programs/precision-agriculture-crop-production (accessed on 3 October 2025).

- European Commission. The Farm to Fork Strategy|Fact Sheets on the European Union|European Parliament. Available online: https://www.europarl.europa.eu/factsheets/en/sheet/293547/the-farm-to-fork-strategy (accessed on 3 October 2025).

- Adem, K.; Ozguven, M.M.; Altas, Z. A Sugar Beet Leaf Disease Classification Method Based on Image Processing and Deep Learning. Multimed. Tools Appl. 2023, 82, 12577–12594.

- Bai, X.; Li, X.; Fu, Z.; Lv, X.; Zhang, L. A Fuzzy Clustering Segmentation Method Based on Neighborhood Grayscale Information for Defining Cucumber Leaf Spot Disease Images. Comput. Electron. Agric. 2017, 136, 157–165.

- Cruz, A.; Ampatzidis, Y.; Pierro, R.; Materazzi, A.; Panattoni, A.; De Bellis, L.; Luvisi, A. Detection of Grapevine Yellows Symptoms in Vitis vinifera L. with Artificial Intelligence. Comput. Electron. Agric. 2019, 157, 63–76.

- Liu, C.; Zhu, H.; Guo, W.; Han, X.; Chen, C.; Wu, H. EFDet: An Efficient Detection Method for Cucumber Disease under Natural Complex Environments. Comput. Electron. Agric. 2021, 189, 106378.

- Mutka, A.M.; Bart, R.S. Image-Based Phenotyping of Plant Disease Symptoms. Front. Plant Sci. 2015, 5, 734.

- Zhao, C.; Chan, S.S.F.; Cham, W.-K.; Chu, L.M. Plant Identification Using Leaf Shapes—A Pattern Counting Approach. Pattern Recognit. 2015, 48, 3203–3215.

- Sinha, A.; Shekhawat, R.S. Review of Image Processing Approaches for Detecting Plant Diseases. IET Image Process. 2020, 14, 1427–1439.

- Jasim, M.A.; AL-Tuwaijari, J.M. Plant Leaf Diseases Detection and Classification Using Image Processing and Deep Learning Techniques. In Proceedings of the 2020 International Conference on Computer Science and Software Engineering (CSASE), Duhok, Iraq, 16–18 April 2020; IEEE: New York, NY, USA, 2020; pp. 259–265.

- Nagaraju, M.; Chawla, P. Systematic Review of Deep Learning Techniques in Plant Disease Detection. Int. J. Syst. Assur. Eng. Manag. 2020, 11, 547–560.

- Nagasubramanian, K.; Jones, S.; Singh, A.K.; Sarkar, S.; Singh, A.; Ganapathysubramanian, B. Plant Disease Identification Using Explainable 3D Deep Learning on Hyperspectral Images. Plant Methods 2019, 15, 98.

- Singh, V.; Sharma, N.; Singh, S. A Review of Imaging Techniques for Plant Disease Detection. Artif. Intell. Agric. 2020, 4, 229–242.

- Sun, J.; Yang, Y.; He, X.; Wu, X. Northern Maize Leaf Blight Detection Under Complex Field Environment Based on Deep Learning. IEEE Access 2020, 8, 33679–33688.

- Lowe, A.; Harrison, N.; French, A.P. Hyperspectral Image Analysis Techniques for the Detection and Classification of the Early Onset of Plant Disease and Stress. Plant Methods 2017, 13, 80.

- Grujić, K. A Review of Thermal Spectral Imaging Methods for Monitoring High-Temperature Molten Material Streams. Sensors 2023, 23, 1130.

- Altas, Z.; Ozguven, M.M.; Yanar, Y. Determination of Sugar Beet Leaf Spot Disease Level (Cercospora beticola Sacc.) with Image Processing Technique by Using Drone. Curr. Investig. Agric. Curr. Res. 2018, 5, 669–678.

- Ozguven, M.M.; Altas, Z. A New Approach to Detect Mildew Disease on Cucumber (Pseudoperonospora cubensis) Leaves with Image Processing. J. Plant Pathol. 2022, 104, 1397–1406.

- Ozguven, M.M. Deep Learning Algorithms for Automatic Detection and Classification of Mildew Disease in Cucumber. Fresenius Environ. Bull. 2020, 29, 7081–7087.

- Liang, Q.; Xiang, S.; Hu, Y.; Coppola, G.; Zhang, D.; Sun, W. PD2SE-Net: Computer-Assisted Plant Disease Diagnosis and Severity Estimation Network. Comput. Electron. Agric. 2019, 157, 518–529.

- Wang, C.; Du, P.; Wu, H.; Li, J.; Zhao, C.; Zhu, H. A Cucumber Leaf Disease Severity Classification Method Based on the Fusion of DeepLabV3+ and U-Net. Comput. Electron. Agric. 2021, 189, 106373.

- Zhang, S.; Zhang, S.; Zhang, C.; Wang, X.; Shi, Y. Cucumber Leaf Disease Identification with Global Pooling Dilated Convolutional Neural Network. Comput. Electron. Agric. 2019, 162, 422–430.

- Aravind, K.R.; Raja, P.; Mukesh, K.V.; Aniirudh, R.; Ashiwin, R.; Szczepanski, C. Disease Classification in Maize Crop Using Bag of Features and Multiclass Support Vector Machine. In Proceedings of the 2018 2nd International Conference on Inventive Systems and Control (ICISC), Coimbatore, India, 19–20 January 2018; IEEE: New York, NY, USA, 2018; pp. 1191–1196.

- Sanyal, P.; Patel, S.C. Pattern Recognition Method to Detect Two Diseases in Rice Plants. Imaging Sci. J. 2008, 56, 319–325.

- Abdulridha, J.; Batuman, O.; Ampatzidis, Y. UAV-Based Remote Sensing Technique to Detect Citrus Canker Disease Utilizing Hyperspectral Imaging and Machine Learning. Remote Sens. 2019, 11, 1373.

- Saberi Anari, M. A Hybrid Model for Leaf Diseases Classification Based on the Modified Deep Transfer Learning and Ensemble Approach for Agricultural AIoT-Based Monitoring. Comput. Intell. Neurosci. 2022, 2022, 6504616.

- Too, E.C.; Yujian, L.; Njuki, S.; Yingchun, L. A Comparative Study of Fine-Tuning Deep Learning Models for Plant Disease Identification. Comput. Electron. Agric. 2019, 161, 272–279.

- Zhao, Y.; Sun, C.; Xu, X.; Chen, J. RIC-Net: A Plant Disease Classification Model Based on the Fusion of Inception and Residual Structure and Embedded Attention Mechanism. Comput. Electron. Agric. 2022, 193, 106644.

- Yadav, A.; Yadav, K. Portable Solutions for Plant Pathogen Diagnostics: Development, Usage, and Future Potential. Front. Microbiol. 2025, 16, 1516723.

- Upadhyay, A.; Chandel, N.S.; Singh, K.P.; Chakraborty, S.K.; Nandede, B.M.; Kumar, M.; Subeesh, A.; Upendar, K.; Salem, A.; Elbeltagi, A. Deep Learning and Computer Vision in Plant Disease Detection: A Comprehensive Review of Techniques, Models, and Trends in Precision Agriculture. Artif. Intell. Rev. 2025, 58, 92.

- Ashwini, C.; Sellam, V. EOS-3D-DCNN: Ebola Optimization Search-Based 3D-Dense Convolutional Neural Network for Corn Leaf Disease Prediction. Neural Comput. Appl. 2023, 35, 11125–11139.

- Singla, A.; Nehra, A.; Joshi, K.; Kumar, A.; Tuteja, N.; Varshney, R.K.; Gill, S.S.; Gill, R. Exploration of Machine Learning Approaches for Automated Crop Disease Detection. Curr. Plant Biol. 2024, 40, 100382.

- Poutanen, K.S.; Kårlund, A.O.; Gómez-Gallego, C.; Johansson, D.P.; Scheers, N.M.; Marklinder, I.M.; Eriksen, A.K.; Silventoinen, P.C.; Nordlund, E.; Sozer, N.; et al. Grains—A Major Source of Sustainable Protein for Health. Nutr. Rev. 2022, 80, 1648–1663.

- Waldamichael, F.G.; Debelee, T.G.; Schwenker, F.; Ayano, Y.M.; Kebede, S.R. Machine Learning in Cereal Crops Disease Detection: A Review. Algorithms 2022, 15, 75.

- Han, G.; Liu, S.; Wang, J.; Jin, Y.; Zhou, Y.; Luo, Q.; Liu, H.; Zhao, H.; An, D. Identification of an Elite Wheat-Rye T1RS·1BL Translocation Line Conferring High Resistance to Powdery Mildew and Stripe Rust. Plant Dis. 2020, 104, 2940–2948.

- Sharma, I.; Tyagi, B.S.; Singh, G.; Venkatesh, K.; Gupta, O.P. Enhancing Wheat Production- A Global Perspective. Indian J. Agric. Sci. 2015, 85, 3–13.

- Sudhesh, K.M.; Sowmya, V.; Sainamole Kurian, P.; Sikha, O.K. AI Based Rice Leaf Disease Identification Enhanced by Dynamic Mode Decomposition. Eng. Appl. Artif. Intell. 2023, 120, 105836.

- Haque, M.A.; Marwaha, S.; Deb, C.K.; Nigam, S.; Arora, A. Recognition of Diseases of Maize Crop Using Deep Learning Models. Neural Comput. Appl. 2023, 35, 7407–7421.

- Adhikari, S.; Shrestha, B.; Baiju, B.; Saban Kumar, K.C. Tomato Plant Diseases Detection System Using Image Processing. In Proceedings of the 1st KEC Conference on Engineering and Technology, Lalitpur, Nepal, 27 September 2018.

- Amara, J.; Bouaziz, B.; Algergawy, A. A Deep Learning-Based Approach for Banana Leaf Diseases Classification. In Proceedings of the Datenbanksysteme für Business, Technologie und Web (BTW 2017)-Workshopband, Stuttgart, Germany, 6–10 March 2017; Gesellschaft für Informatik e.V.: Hamburg, Germany, 2017; pp. 79–88.

- Arnal Barbedo, J.G. Plant Disease Identification from Individual Lesions and Spots Using Deep Learning. Biosyst. Eng. 2019, 180, 96–107.

- Ashourloo, D.; Aghighi, H.; Matkan, A.A.; Mobasheri, M.R.; Rad, A.M. An Investigation Into Machine Learning Regression Techniques for the Leaf Rust Disease Detection Using Hyperspectral Measurement. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4344–4351.

- Balasundaram, A.; Sundaresan, P.; Bhavsar, A.; Mattu, M.; Kavitha, M.S.; Shaik, A. Tea Leaf Disease Detection Using Segment Anything Model and Deep Convolutional Neural Networks. Results Eng. 2025, 25, 103784.

- Bhange, M.; Hingoliwala, H.A. Smart Farming: Pomegranate Disease Detection Using Image Processing. Procedia Comput. Sci. 2015, 58, 280–288.

- Chen, J.; Chen, J.; Zhang, D.; Sun, Y.; Nanehkaran, Y.A. Using Deep Transfer Learning for Image-Based Plant Disease Identification. Comput. Electron. Agric. 2020, 173, 105393.

- Chen, J.; Yin, H.; Zhang, D. A Self-Adaptive Classification Method for Plant Disease Detection Using GMDH-Logistic Model. Sustain. Comput. Inform. Syst. 2020, 28, 100415.

- Chug, A.; Bhatia, A.; Singh, A.P.; Singh, D. A Novel Framework for Image-Based Plant Disease Detection Using Hybrid Deep Learning Approach. Soft Comput. 2023, 27, 13613–13638.

- DeChant, C.; Wiesner-Hanks, T.; Chen, S.; Stewart, E.L.; Yosinski, J.; Gore, M.A.; Nelson, R.J.; Lipson, H. Automated Identification of Northern Leaf Blight-Infected Maize Plants from Field Imagery Using Deep Learning. Phytopathology 2017, 107, 1426–1432.

- Divyanth, L.G.; Ahmad, A.; Saraswat, D. A Two-Stage Deep-Learning Based Segmentation Model for Crop Disease Quantification Based on Corn Field Imagery. Smart Agric. Technol. 2023, 3, 100108.

- Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A Robust Deep-Learning-Based Detector for Real-Time Tomato Plant Diseases and Pests Recognition. Sensors 2017, 17, 2022.

- Haridasan, A.; Thomas, J.; Raj, E.D. Deep Learning System for Paddy Plant Disease Detection and Classification. Environ. Monit. Assess. 2022, 195, 120.

- Huang, X.; Chen, A.; Zhou, G.; Zhang, X.; Wang, J.; Peng, N.; Yan, N.; Jiang, C. Tomato Leaf Disease Detection System Based on FC-SNDPN. Multimed. Tools Appl. 2023, 82, 2121–2144.

- Isinkaye, F.O.; Olusanya, M.O.; Akinyelu, A.A. A Multi-Class Hybrid Variational Autoencoder and Vision Transformer Model for Enhanced Plant Disease Identification. Intell. Syst. Appl. 2025, 26, 200490.

- Jiang, P.; Chen, Y.; Liu, B.; He, D.; Liang, C. Real-Time Detection of Apple Leaf Diseases Using Deep Learning Approach Based on Improved Convolutional Neural Networks. IEEE Access 2019, 7, 59069–59080.

- Kaur, G.; Rajni; Sivia, J.S. Development of Deep and Machine Learning Convolutional Networks of Variable Spatial Resolution for Automatic Detection of Leaf Blast Disease of Rice. Comput. Electron. Agric. 2024, 224, 109210.

- Kawasaki, Y.; Uga, H.; Kagiwada, S.; Iyatomi, H. Basic Study of Automated Diagnosis of Viral Plant Diseases Using Convolutional Neural Networks. In Advances in Visual Computing; Bebis, G., Boyle, R., Parvin, B., Koracin, D., Pavlidis, I., Feris, R., McGraw, T., Elendt, M., Kopper, R., Ragan, E., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 638–645.

- Khan, A.I.; Quadri, S.M.K.; Banday, S.; Latief Shah, J. Deep Diagnosis: A Real-Time Apple Leaf Disease Detection System Based on Deep Learning. Comput. Electron. Agric. 2022, 198, 107093.

- Khan, F.; Zafar, N.; Tahir, M.N.; Aqib, M.; Waheed, H.; Haroon, Z. A Mobile-Based System for Maize Plant Leaf Disease Detection and Classification Using Deep Learning. Front. Plant Sci. 2023, 14, 1079366.

- Kumar, R.R.; Jain, A.K.; Sharma, V.; Jain, N.; Das, P.; Sahni, P. Advanced Deep Learning Model for Multi-Disease Prediction in Potato Crops: A Precision Agriculture Approach. In Proceedings of the 2024 IEEE International Conference on Information Technology, Electronics and Intelligent Communication Systems (ICITEICS), Bangalore, India, 28–29 June 2024; pp. 1–7.

- Lu, Y.; Yi, S.; Zeng, N.; Liu, Y.; Zhang, Y. Identification of Rice Diseases Using Deep Convolutional Neural Networks. Neurocomputing 2017, 267, 378–384.

- Mahum, R.; Munir, H.; Mughal, Z.-U.-N.; Awais, M.; Sher Khan, F.; Saqlain, M.; Mahamad, S.; Tlili, I. A Novel Framework for Potato Leaf Disease Detection Using an Efficient Deep Learning Model. Hum. Ecol. Risk Assess. Int. J. 2023, 29, 303–326.

- Mallick, M.T.; Biswas, S.; Das, A.K.; Saha, H.N.; Chakrabarti, A.; Deb, N. Deep Learning Based Automated Disease Detection and Pest Classification in Indian Mung Bean. Multimed. Tools Appl. 2023, 82, 12017–12041.

- Naeem, A.B.; Senapati, B.; Zaidi, A.; Maaliw, R.R.; Sudman, M.S.I.; Das, D.; Almeida, F.; Sakr, H.A. Detecting Three Different Diseases of Plants by Using CNN Model and Image Processing. J. Electr. Syst. 2024, 20, 1519–1525.

- Pantazi, X.E.; Moshou, D.; Tamouridou, A.A. Automated Leaf Disease Detection in Different Crop Species through Image Features Analysis and One Class Classifiers. Comput. Electron. Agric. 2019, 156, 96–104.

- Padhi, J.; Mishra, K.; Ratha, A.K.; Behera, S.K.; Sethy, P.K.; Nanthaamornphong, A. Enhancing Paddy Leaf Disease Diagnosis—A Hybrid CNN Model Using Simulated Thermal Imaging. Smart Agric. Technol. 2025, 10, 100814.

- Picon, A.; Seitz, M.; Alvarez-Gila, A.; Mohnke, P.; Ortiz-Barredo, A.; Echazarra, J. Crop Conditional Convolutional Neural Networks for Massive Multi-Crop Plant Disease Classification over Cell Phone Acquired Images Taken on Real Field Conditions. Comput. Electron. Agric. 2019, 167, 105093.

- Picon, A.; Alvarez-Gila, A.; Seitz, M.; Ortiz-Barredo, A.; Echazarra, J.; Johannes, A. Deep Convolutional Neural Networks for Mobile Capture Device-Based Crop Disease Classification in the Wild. Comput. Electron. Agric. 2019, 161, 280–290.

- Prajapati, H.B.; Shah, J.P.; Dabhi, V.K. Detection and Classification of Rice Plant Diseases. Intell. Decis. Technol. 2017, 11, 357–373.

- Rahman, H.; Imran, H.M.; Hossain, A.; Siddiqui, I.H.; Sakib, A.H. Explainable Vision Transformers for Real-time Chili and Onion Leaf Disease Identification and Diagnosis. Int. J. Sci. Res. Arch. 2025, 15, 1823–1833.

- Rahman, S.U.; Alam, F.; Ahmad, N.; Arshad, S. Image Processing Based System for the Detection, Identification and Treatment of Tomato Leaf Diseases. Multimed. Tools Appl. 2023, 82, 9431–9445.

- Ramcharan, A.; Baranowski, K.; McCloskey, P.; Ahmed, B.; Legg, J.; Hughes, D.P. Deep Learning for Image-Based Cassava Disease Detection. Front. Plant Sci. 2017, 8, 1852.

- Rangarajan, A.K.; Purushothaman, R.; Prabhakar, M.; Szczepański, C. Crop Identification and Disease Classification Using Traditional Machine Learning and Deep Learning Approaches. J. Eng. Res. 2023, 11, 228–252.

- Rezaei, M.; Diepeveen, D.; Laga, H.; Gupta, S.; Jones, M.G.K.; Sohel, F. A Transformer-Based Few-Shot Learning Pipeline for Barley Disease Detection from Field-Collected Imagery. Comput. Electron. Agric. 2025, 229, 109751.

- Saad, M.H.; Salman, A.E. A Plant Disease Classification Using One-Shot Learning Technique with Field Images. Multimed. Tools Appl. 2024, 83, 58935–58960.

- Sambasivam, G.; Opiyo, G.D. A Predictive Machine Learning Application in Agriculture: Cassava Disease Detection and Classification with Imbalanced Dataset Using Convolutional Neural Networks. Egypt. Inform. J. 2021, 22, 27–34.

- Selvaraj, M.G.; Vergara, A.; Ruiz, H.; Safari, N.; Elayabalan, S.; Ocimati, W.; Blomme, G. AI-Powered Banana Diseases and Pest Detection. Plant Methods 2019, 15, 92.

- Gomez Selvaraj, M.; Vergara, A.; Montenegro, F.; Alonso Ruiz, H.; Safari, N.; Raymaekers, D.; Ocimati, W.; Ntamwira, J.; Tits, L.; Omondi, A.B.; et al. Detection of Banana Plants and Their Major Diseases Through Aerial Images and Machine Learning Methods: A Case Study in DR Congo and Republic of Benin. ISPRS J. Photogramm. Remote Sens. 2020, 169, 110–124.

- Sharif, M.; Khan, M.A.; Iqbal, Z.; Azam, M.F.; Lali, M.I.U.; Javed, M.Y. Detection and Classification of Citrus Diseases in Agriculture Based on Optimized Weighted Segmentation and Feature Selection. Comput. Electron. Agric. 2018, 150, 220–234.

- Sharma, P.; Berwal, Y.P.S.; Ghai, W. Performance Analysis of Deep Learning CNN Models for Disease Detection in Plants Using Image Segmentation. Inf. Process. Agric. 2020, 7, 566–574.

- Shovon, M.S.H.; Mozumder, S.J.; Pal, O.K.; Mridha, M.F.; Asai, N.; Shin, J. PlantDet: A Robust Multi-Model Ensemble Method Based on Deep Learning For Plant Disease Detection. IEEE Access 2023, 11, 34846–34859.

- Singh, V.; Misra, A.K. Detection of Plant Leaf Diseases Using Image Segmentation and Soft Computing Techniques. Inf. Process. Agric. 2017, 4, 41–49.

- Singh, U.P.; Chouhan, S.S.; Jain, S.; Jain, S. Multilayer Convolution Neural Network for the Classification of Mango Leaves Infected by Anthracnose Disease. IEEE Access 2019, 7, 43721–43729.

- Sujatha, R.; Chatterjee, J.M.; Jhanjhi, N.; Brohi, S.N. Performance of Deep Learning vs Machine Learning in Plant Leaf Disease Detection. Microprocess. Microsyst. 2021, 80, 103615.

- Sunil, C.K.; Jaidhar, C.D.; Patil, N. Cardamom Plant Disease Detection Approach Using EfficientNetV2. IEEE Access 2022, 10, 789–804.

- Türkoğlu, M.; Hanbay, D. Plant Disease and Pest Detection Using Deep Learning-Based Features. Turk. J. Electr. Eng. Comput. Sci. 2019, 27, 1636–1651.

- Wang, X.; Liu, J. Vegetable Disease Detection Using an Improved YOLOv8 Algorithm in the Greenhouse Plant Environment. Sci. Rep. 2024, 14, 4261.

- Xu, L.; Cao, B.; Zhao, F.; Ning, S.; Xu, P.; Zhang, W.; Hou, X. Wheat Leaf Disease Identification Based on Deep Learning Algorithms. Physiol. Mol. Plant Pathol. 2023, 123, 101940.

- Zhang, S.; Wu, X.; You, Z.; Zhang, L. Leaf Image Based Cucumber Disease Recognition Using Sparse Representation Classification. Comput. Electron. Agric. 2017, 134, 135–141.

- Zhang, S.; Wang, H.; Huang, W.; You, Z. Plant Diseased Leaf Segmentation and Recognition by Fusion of Superpixel, K-Means and PHOG. Optik 2018, 157, 866–872.

- FAOSTAT. World Food and Agriculture—Statistical Yearbook 2024; FAO: Rome, Italy, 2024; ISBN 978-92-5-139255-3.

- Su, J.; Zhu, X.; Li, S.; Chen, W.-H. AI Meets UAVs: A Survey on AI Empowered UAV Perception Systems for Precision Agriculture. Neurocomputing 2023, 518, 242–270.

- Zhang, T.; Cai, Y.; Zhuang, P.; Li, J. Remotely Sensed Crop Disease Monitoring by Machine Learning Algorithms: A Review. Unmanned Syst. 2024, 12, 161–171.

- Konica Minolta. Chlorophyll Meter. Available online: https://sensing.konicaminolta.eu/products/colour-measurement/chlorophyll-meter (accessed on 6 October 2025).

- Bruker. BRAVO. Handheld Raman Spectrometer. Available online: https://www.bruker.com/en/products-and-solutions/raman-spectroscopy/raman-spectrometers/bravo-handheld-raman-spectrometer.html (accessed on 6 October 2025).

- Aurora QPCR Device–UltraFast (FQ-8A). Aurora Biomed. Available online: https://www.aurorabiomed.com/product/ultrafast-qpcr-fq-8a/ (accessed on 13 October 2025).

- Thomas, S.; Kuska, M.T.; Bohnenkamp, D.; Brugger, A.; Alisaac, E.; Wahabzada, M.; Behmann, J.; Mahlein, A.-K. Benefits of Hyperspectral Imaging for Plant Disease Detection and Plant Protection: A Technical Perspective. J. Plant Dis. Prot. 2018, 125, 5–20.

- Wang, L.; Jin, J.; Song, Z.; Wang, J.; Zhang, L.; Rehman, T.U.; Ma, D.; Carpenter, N.R.; Tuinstra, M.R. LeafSpec: An Accurate and Portable Hyperspectral Corn Leaf Imager. Comput. Electron. Agric. 2020, 169, 105209.

- Kumari, K.; Parray, R.; Basavaraj, Y.B.; Godara, S.; Mani, I.; Kumar, R.; Khura, T.; Sarkar, S.; Ranjan, R.; Mirzakhaninafchi, H. Spectral Sensor-Based Device for Real-Time Detection and Severity Estimation of Groundnut Bud Necrosis Virus in Tomato. J. Field Robot. 2025, 42, 5–19.

- Hussain, R.; Zhao, B.-Y.; Aarti, A.; Yin, W.-X.; Luo, C.-X. A Single Tube RPA/Cas12a-Based Diagnostic Assay for Early, Rapid, and Efficient Detection of Botrytis Cinerea in Sweet Cherry. Plant Dis. 2025, 109, 1244–1253.

- Babatunde, R.S.; Babatunde, A.N.; Ogundokun, R.O.; Yusuf, O.K.; Sadiku, P.O.; Shah, M.A. A Novel Smartphone Application for Early Detection of Habanero Disease. Sci. Rep. 2024, 14, 1423.

- Li, T.; Xia, T.; Wang, H.; Tu, Z.; Tarkoma, S.; Han, Z.; Hui, P. Smartphone App Usage Analysis: Datasets, Methods, and Applications. IEEE Commun. Surv. Tutor. 2022, 24, 937–966.

- Christakakis, P.; Papadopoulou, G.; Mikos, G.; Kalogiannidis, N.; Ioannidis, D.; Tzovaras, D.; Pechlivani, E.M. Smartphone-Based Citizen Science Tool for Plant Disease and Insect Pest Detection Using Artificial Intelligence. Technologies 2024, 12, 101.

- Giakoumoglou, N.; Pechlivani, E.M.; Tzovaras, D. Generate-Paste-Blend-Detect: Synthetic Dataset for Object Detection in the Agriculture Domain. Smart Agric. Technol. 2023, 5, 100258.

- Siddiqua, A.; Kabir, M.A.; Ferdous, T.; Ali, I.B.; Weston, L.A. Evaluating Plant Disease Detection Mobile Applications: Quality and Limitations. Agronomy 2022, 12, 1869.

- Huang, Y.; Feng, G.; Tewolde, H. Multisource Remote Sensing Field Monitoring for Improving Crop Production Management. In Proceedings of the 2021 ASABE Annual International Meeting, Virtual, 12–16 July 2021; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2021.

- Abbas, A.; Zhang, Z.; Zheng, H.; Alami, M.M.; Alrefaei, A.F.; Abbas, Q.; Naqvi, S.A.H.; Rao, M.J.; Mosa, W.F.A.; Abbas, Q.; et al. Drones in Plant Disease Assessment, Efficient Monitoring, and Detection: A Way Forward to Smart Agriculture. Agronomy 2023, 13, 1524. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. Factors Influencing the Use of Deep Learning for Plant Disease Recognition. Biosyst. Eng. 2018, 172, 84–91. [Google Scholar] [CrossRef]

- Al-Saddik, H.; Laybros, A.; Simon, J.C.; Cointault, F. Protocol for the Definition of a Multi-Spectral Sensor for Specific Foliar Disease Detection: Case of “Flavescence Dorée”. In Phytoplasmas: Methods and Protocols; Musetti, R., Pagliari, L., Eds.; Springer: New York, NY, USA, 2019; pp. 213–238. ISBN 978-1-4939-8837-2. [Google Scholar]

- Pande, C.B.; Moharir, K.N. Application of Hyperspectral Remote Sensing Role in Precision Farming and Sustainable Agriculture Under Climate Change: A Review. In Climate Change Impacts on Natural Resources, Ecosystems and Agricultural Systems; Pande, C.B., Moharir, K.N., Singh, S.K., Pham, Q.B., Elbeltagi, A., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 503–520. ISBN 978-3-031-19059-9. [Google Scholar]

- Dash, J.P.; Watt, M.S.; Pearse, G.D.; Heaphy, M.; Dungey, H.S. Assessing Very High Resolution UAV Imagery for Monitoring Forest Health during a Simulated Disease Outbreak. ISPRS J. Photogramm. Remote Sens. 2017, 131, 1–14. [Google Scholar] [CrossRef]

- Zhang, T.; Xu, Z.; Su, J.; Yang, Z.; Liu, C.; Chen, W.-H.; Li, J. Ir-UNet: Irregular Segmentation U-Shape Network for Wheat Yellow Rust Detection by UAV Multispectral Imagery. Remote Sens. 2021, 13, 3892. [Google Scholar] [CrossRef]

- Su, J.; Yi, D.; Su, B.; Mi, Z.; Liu, C.; Hu, X.; Xu, X.; Guo, L.; Chen, W.-H. Aerial Visual Perception in Smart Farming: Field Study of Wheat Yellow Rust Monitoring. IEEE Trans. Ind. Inform. 2021, 17, 2242–2249. [Google Scholar] [CrossRef]

- Liu, L.; Dong, Y.; Huang, W.; Du, X.; Ma, H. Monitoring Wheat Fusarium Head Blight Using Unmanned Aerial Vehicle Hyperspectral Imagery. Remote Sens. 2020, 12, 3811. [Google Scholar] [CrossRef]

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Zhang, L.; Wen, S.; Zhang, H.; Zhang, Y.; Deng, Y. Detection of Helminthosporium Leaf Blotch Disease Based on UAV Imagery. Appl. Sci. 2019, 9, 558. [Google Scholar] [CrossRef]

- Stewart, E.L.; Wiesner-Hanks, T.; Kaczmar, N.; DeChant, C.; Wu, H.; Lipson, H.; Nelson, R.J.; Gore, M.A. Quantitative Phenotyping of Northern Leaf Blight in UAV Images Using Deep Learning. Remote Sens. 2019, 11, 2209. [Google Scholar] [CrossRef]

- Dstechuas. Humpback Whale 360 VTOL FIXED WING UAV. Available online: https://www.dstechuas.com/Humpback-Whales-320-VTOL-p3595339.html (accessed on 6 October 2025).

- Farella, A.; Paciolla, F.; Quartarella, T.; Pascuzzi, S. Agricultural Unmanned Ground Vehicle (UGV): A Brief Overview. In Farm Machinery and Processes Management in Sustainable Agriculture; Lorencowicz, E., Huyghebaert, B., Uziak, J., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 137–146. [Google Scholar]

- Liu, B. Recent Advancements in Autonomous Robots and Their Technical Analysis. Math. Probl. Eng. 2021, 2021, 6634773. [Google Scholar] [CrossRef]

- Xie, D.; Chen, L.; Liu, L.; Chen, L.; Wang, H. Actuators and Sensors for Application in Agricultural Robots: A Review. Machines 2022, 10, 913. [Google Scholar] [CrossRef]

- Liu, Q.; Li, Z.; Yuan, S.; Zhu, Y.; Li, X. Review on Vehicle Detection Technology for Unmanned Ground Vehicles. Sensors 2021, 21, 1354. [Google Scholar] [CrossRef]

- Ahmad, A.; Aggarwal, V.; Saraswat, D.; Johal, G.S. UAS and UGV-Based Disease Management System for Diagnosing Corn Diseases Above and Below the Canopy Using Deep Learning. J. ASABE 2024, 67, 161–179. [Google Scholar] [CrossRef]

- Agelli, M.; Corona, N.; Maggio, F.; Moi, P.V. Unmanned Ground Vehicles for Continuous Crop Monitoring in Agriculture: Assessing the Readiness of Current ICT Technology. Machines 2024, 12, 750. [Google Scholar] [CrossRef]

- Mahmud, M.S.A.; Abidin, M.S.Z.; Emmanuel, A.A.; Hasan, S. Robotics and Automation in Agriculture: Present and Future Applications. Appl. Model. Simul. 2020, 4, 130–140. [Google Scholar]

- Menendez-Aponte, P.; Garcia, C.; Freese, D.; Defterli, S.; Xu, Y. Software and Hardware Architectures in Cooperative Aerial and Ground Robots for Agricultural Disease Detection. In Proceedings of the 2016 International Conference on Collaboration Technologies and Systems (CTS), Orlando, FL, USA, 31 October–4 November 2016; IEEE: New York, NY, USA, 2016; pp. 354–358. [Google Scholar]

- Quartarella, T.; Farella, A.; Paciolla, F.; Pascuzzi, S. UVC Rays Devices Mounted on Autonomous Terrestrial Rovers: A Brief Overview. In Farm Machinery and Processes Management in Sustainable Agriculture; Lorencowicz, E., Huyghebaert, B., Uziak, J., Eds.; Lecture Notes in Civil Engineering; Springer Nature: Cham, Switzerland, 2024; Volume 609, pp. 378–386. ISBN 978-3-031-70954-8. [Google Scholar]

- Sujatha, K.; Reddy, T.K.; Bhavani, N.P.G.; Ponmagal, R.S.; Srividhya, V.; Janaki, N. UGVs for Agri Spray with AI Assisted Paddy Crop Disease Identification. Procedia Comput. Sci. 2023, 230, 70–81. [Google Scholar] [CrossRef]

- JSUMO. ATLAS All Terrain Robot 4x4 Explorer Robot Kit (UGV Robot)|JSumo. Available online: https://www.jsumo.com/atlas-4x4-robot-mechanical-kit (accessed on 6 October 2025).

- SuperDroid. Configurable-HD2 Treaded ATR Tank Robot Platform. Available online: https://www.superdroidrobots.com/store/product=789 (accessed on 6 October 2025).

- Abdulridha, J.; Ampatzidis, Y.; Kakarla, S.C.; Roberts, P. Detection of Target Spot and Bacterial Spot Diseases in Tomato Using UAV-Based and Benchtop-Based Hyperspectral Imaging Techniques. Precis. Agric. 2020, 21, 955–978. [Google Scholar] [CrossRef]

- Kerkech, M.; Hafiane, A.; Canals, R. Vine Disease Detection in UAV Multispectral Images Using Optimized Image Registration and Deep Learning Segmentation Approach. Comput. Electron. Agric. 2020, 174, 105446. [Google Scholar] [CrossRef]

- Lu, J.; Hu, J.; Zhao, G.; Mei, F.; Zhang, C. An In-Field Automatic Wheat Disease Diagnosis System. Comput. Electron. Agric. 2017, 142, 369–379. [Google Scholar] [CrossRef]

- Mishra, P. Disease Detection in Plants Using UAS and Deep Neural Networks. Ph.D. Thesis, Tennessee State University, Nashville, TN, USA, 2024. [Google Scholar]

- Su, J.; Liu, C.; Coombes, M.; Hu, X.; Wang, C.; Xu, X.; Li, Q.; Guo, L.; Chen, W.-H. Wheat Yellow Rust Monitoring by Learning from Multispectral UAV Aerial Imagery. Comput. Electron. Agric. 2018, 155, 157–166. [Google Scholar] [CrossRef]

- Farber, C.; Mahnke, M.; Sanchez, L.; Kurouski, D. Advanced Spectroscopic Techniques for Plant Disease Diagnostics. A Review. TrAC Trends Anal. Chem. 2019, 118, 43–49. [Google Scholar] [CrossRef]

- Dolatabadian, A.; Neik, T.X.; Danilevicz, M.F.; Upadhyaya, S.R.; Batley, J.; Edwards, D. Image-Based Crop Disease Detection Using Machine Learning. Plant Pathol. 2025, 74, 18–38. [Google Scholar] [CrossRef]

- Tominaga, S.; Nishi, S.; Ohtera, R. Measurement and Estimation of Spectral Sensitivity Functions for Mobile Phone Cameras. Sensors 2021, 21, 4985. [Google Scholar] [CrossRef]

- Giakoumoglou, N.; Kalogeropoulou, E.; Klaridopoulos, C.; Pechlivani, E.M.; Christakakis, P.; Markellou, E.; Frangakis, N.; Tzovaras, D. Early Detection of Botrytis Cinerea Symptoms Using Deep Learning Multi-Spectral Image Segmentation. Smart Agric. Technol. 2024, 8, 100481. [Google Scholar] [CrossRef]

- Terentev, A.; Dolzhenko, V.; Fedotov, A.; Eremenko, D. Current State of Hyperspectral Remote Sensing for Early Plant Disease Detection: A Review. Sensors 2022, 22, 757. [Google Scholar] [CrossRef]

- Pechlivani, E.M.; Papadimitriou, A.; Pemas, S.; Giakoumoglou, N.; Tzovaras, D. Low-Cost Hyperspectral Imaging Device for Portable Remote Sensing. Instruments 2023, 7, 32. [Google Scholar] [CrossRef]

- Fahrentrapp, J.; Ria, F.; Geilhausen, M.; Panassiti, B. Detection of Gray Mold Leaf Infections Prior to Visual Symptom Appearance Using a Five-Band Multispectral Sensor. Front. Plant Sci. 2019, 10, 628. [Google Scholar] [CrossRef]

- Su, W.-H.; Sun, D.-W. Multispectral Imaging for Plant Food Quality Analysis and Visualization. Compr. Rev. Food Sci. Food Saf. 2018, 17, 220–239. [Google Scholar] [CrossRef]

- De Silva, M.; Brown, D. Tomato Disease Detection Using Multispectral Imaging with Deep Learning Models. In Proceedings of the 2024 International Conference on Artificial Intelligence, Big Data, Computing and Data Communication Systems (icABCD), Port Louis, Mauritius, 1–2 August 2024; pp. 1–9. [Google Scholar]

- Gewali, U.B.; Monteiro, S.T.; Saber, E. Machine Learning Based Hyperspectral Image Analysis: A Survey. arXiv 2019, arXiv:1802.08701. [Google Scholar] [CrossRef]

- Gao, C.; Ji, X.; He, Q.; Gong, Z.; Sun, H.; Wen, T.; Guo, W. Monitoring of Wheat Fusarium Head Blight on Spectral and Textural Analysis of UAV Multispectral Imagery. Agriculture 2023, 13, 293. [Google Scholar] [CrossRef]

- Liu, L.; Dong, Y.; Huang, W.; Du, X.; Ren, B.; Huang, L.; Zheng, Q.; Ma, H. A Disease Index for Efficiently Detecting Wheat Fusarium Head Blight Using Sentinel-2 Multispectral Imagery. IEEE Access 2020, 8, 52181–52191. [Google Scholar] [CrossRef]

- Han, S.; Zhao, Y.; Cheng, J.; Zhao, F.; Yang, H.; Feng, H.; Li, Z.; Ma, X.; Zhao, C.; Yang, G. Monitoring Key Wheat Growth Variables by Integrating Phenology and UAV Multispectral Imagery Data into Random Forest Model. Remote Sens. 2022, 14, 3723. [Google Scholar] [CrossRef]

- Han, X.; Wei, Z.; Chen, H.; Zhang, B.; Li, Y.; Du, T. Inversion of Winter Wheat Growth Parameters and Yield Under Different Water Treatments Based on UAV Multispectral Remote Sensing. Front. Plant Sci. 2021, 12, 609876. [Google Scholar] [CrossRef]

- Xu, X.Q.; Lu, J.S.; Zhang, N.; Yang, T.C.; He, J.Y.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Inversion of Rice Canopy Chlorophyll Content and Leaf Area Index Based on Coupling of Radiative Transfer and Bayesian Network Models. ISPRS J. Photogramm. Remote Sens. 2019, 150, 185–196. [Google Scholar] [CrossRef]

- Zheng, H.; Li, W.; Jiang, J.; Liu, Y.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; Zhang, Y.; Yao, X. A Comparative Assessment of Different Modeling Algorithms for Estimating Leaf Nitrogen Content in Winter Wheat Using Multispectral Images from an Unmanned Aerial Vehicle. Remote Sens. 2018, 10, 2026. [Google Scholar] [CrossRef]

- Giakoumoglou, N.; Pechlivani, E.M.; Sakelliou, A.; Klaridopoulos, C.; Frangakis, N.; Tzovaras, D. Deep Learning-Based Multi-Spectral Identification of Grey Mould. Smart Agric. Technol. 2023, 4, 100174. [Google Scholar] [CrossRef]

- Fernández, C.I.; Leblon, B.; Wang, J.; Haddadi, A.; Wang, K. Detecting Infected Cucumber Plants with Close-Range Multispectral Imagery. Remote Sens. 2021, 13, 2948. [Google Scholar] [CrossRef]

- Albetis, J.; Duthoit, S.; Guttler, F.; Jacquin, A.; Goulard, M.; Poilvé, H.; Féret, J.-B.; Dedieu, G. Detection of Flavescence Dorée Grapevine Disease Using Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2017, 9, 308. [Google Scholar] [CrossRef]

- Albetis, J.; Jacquin, A.; Goulard, M.; Poilvé, H.; Rousseau, J.; Clenet, H.; Dedieu, G.; Duthoit, S. On the Potentiality of UAV Multispectral Imagery to Detect Flavescence Dorée and Grapevine Trunk Diseases. Remote Sens. 2018, 11, 23. [Google Scholar] [CrossRef]

- De Silva, M.; Brown, D. Early Plant Disease Detection Using Infrared and Mobile Photographs in Natural Environment. In Intelligent Computing; Arai, K., Ed.; Springer Nature: Cham, Switzerland, 2023; pp. 307–321. [Google Scholar]

- Lei, S.; Luo, J.; Tao, X.; Qiu, Z. Remote Sensing Detecting of Yellow Leaf Disease of Arecanut Based on UAV Multisource Sensors. Remote Sens. 2021, 13, 4562. [Google Scholar] [CrossRef]

- Rodríguez, J.; Lizarazo, I.; Prieto, F.; Angulo-Morales, V. Assessment of Potato Late Blight from UAV-Based Multispectral Imagery. Comput. Electron. Agric. 2021, 184, 106061. [Google Scholar] [CrossRef]

- Ye, H.; Huang, W.; Huang, S.; Cui, B.; Dong, Y.; Guo, A.; Ren, Y.; Jin, Y. Identification of Banana Fusarium Wilt Using Supervised Classification Algorithms with UAV-Based Multi-Spectral Imagery. Int. J. Agric. Biol. Eng. 2020, 13, 136–142. [Google Scholar] [CrossRef]

- Lucasbosch Spectral Sampling Rgb Multispectral Hyperspectral Imaging.Svg 2021. Available online: https://commons.wikimedia.org/wiki/File:Spectral_sampling_RGB_multispectral_hyperspectral_imaging.svg (accessed on 25 January 2025).

- Zou, X.; Mõttus, M. Sensitivity of Common Vegetation Indices to the Canopy Structure of Field Crops. Remote Sens. 2017, 9, 994. [Google Scholar] [CrossRef]

- Mutanga, O.; Masenyama, A.; Sibanda, M. Spectral Saturation in the Remote Sensing of High-Density Vegetation Traits: A Systematic Review of Progress, Challenges, and Prospects. ISPRS J. Photogramm. Remote Sens. 2023, 198, 297–309. [Google Scholar] [CrossRef]

- Rastogi, V.; Srivastava, S.; Jaiswal, G.; Sharma, A. Detecting Document Forgery Using Hyperspectral Imaging and Machine Learning. In Computer Vision and Image Processing; Raman, B., Murala, S., Chowdhury, A., Dhall, A., Goyal, P., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 14–25. [Google Scholar]

- Adetutu, A.E.; Bayo, Y.F.; Emmanuel, A.A.; Opeyemi, A.-A.A. A Review of Hyperspectral Imaging Analysis Techniques for Onset Crop Disease Detection, Identification and Classification. J. For. Environ. Sci. 2024, 40, 1–8. [Google Scholar] [CrossRef]

- Jaiswal, G.; Rani, R.; Mangotra, H.; Sharma, A. Integration of Hyperspectral Imaging and Autoencoders: Benefits, Applications, Hyperparameter Tunning and Challenges. Comput. Sci. Rev. 2023, 50, 100584. [Google Scholar] [CrossRef]

- Zhou, W.; Zhang, J.; Zou, M.; Liu, X.; Du, X.; Wang, Q.; Liu, Y.; Liu, Y.; Li, J. Prediction of Cadmium Concentration in Brown Rice before Harvest by Hyperspectral Remote Sensing. Environ. Sci. Pollut. Res. 2019, 26, 1848–1856. [Google Scholar] [CrossRef]

- Mahlein, A.-K.; Hammersley, S.; Oerke, E.-C.; Dehne, H.-W.; Goldbach, H.; Grieve, B. Supplemental Blue LED Lighting Array to Improve the Signal Quality in Hyperspectral Imaging of Plants. Sensors 2015, 15, 12834–12840. [Google Scholar] [CrossRef]

- Virlet, N.; Sabermanesh, K.; Sadeghi-Tehran, P.; Hawkesford, M.J. Field Scanalyzer: An Automated Robotic Field Phenotyping Platform for Detailed Crop Monitoring. Funct. Plant Biol. 2017, 44, 143. [Google Scholar] [CrossRef]

- Zhang, N.; Yang, G.; Pan, Y.; Yang, X.; Chen, L.; Zhao, C. A Review of Advanced Technologies and Development for Hyperspectral-Based Plant Disease Detection in the Past Three Decades. Remote Sens. 2020, 12, 3188. [Google Scholar] [CrossRef]

- Moghadam, P. Early Plant Disease Detection Using Hyperspectral Imaging Combined with Machine Learning and IoT. Available online: https://research.csiro.au/robotics/early-plant-disease-detection-using-hyperspectral-imaging-combined-with-machine-learning-and-iot/ (accessed on 20 January 2025).

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110. [Google Scholar] [CrossRef]

- Zhang, N.; Pan, Y.; Feng, H.; Zhao, X.; Yang, X.; Ding, C.; Yang, G. Development of Fusarium Head Blight Classification Index Using Hyperspectral Microscopy Images of Winter Wheat Spikelets. Biosyst. Eng. 2019, 186, 83–99. [Google Scholar] [CrossRef]

- Couture, J.J.; Singh, A.; Charkowski, A.O.; Groves, R.L.; Gray, S.M.; Bethke, P.C.; Townsend, P.A. Integrating Spectroscopy with Potato Disease Management. Plant Dis. 2018, 102, 2233–2240. [Google Scholar] [CrossRef] [PubMed]

- Jin, X.; Jie, L.; Wang, S.; Qi, H.; Li, S. Classifying Wheat Hyperspectral Pixels of Healthy Heads and Fusarium Head Blight Disease Using a Deep Neural Network in the Wild Field. Remote Sens. 2018, 10, 395. [Google Scholar] [CrossRef]

- Ghimire, A.; Lee, H.S.; Yoon, Y.; Kim, Y. Prediction of Soybean Yellow Mottle Mosaic Virus in Soybean Using Hyperspectral Imaging. Plant Methods 2025, 21, 112. [Google Scholar] [CrossRef] [PubMed]

- Ban, S.; Tian, M.; Hu, D.; Xu, M.; Yuan, T.; Zheng, X.; Li, L.; Wei, S. Evaluation and Early Detection of Downy Mildew of Lettuce Using Hyperspectral Imagery. Agriculture 2025, 15, 444. [Google Scholar] [CrossRef]

- Nguyen, D.H.D.; Tan, A.J.H.; Lee, R.; Lim, W.F.; Wong, J.Y.; Suhaimi, F. Monitoring of Plant Diseases Caused by Fusarium Commune and Rhizoctonia Solani in Bok Choy Using Hyperspectral Remote Sensing and Machine Learning. Pest Manag. Sci. 2025, 81, 149–159. [Google Scholar] [CrossRef]

- Liu, T.; Qi, Y.; Yang, F.; Yi, X.; Guo, S.; Wu, P.; Yuan, Q.; Xu, T. Early Detection of Rice Blast Using UAV Hyperspectral Imagery and Multi-Scale Integrator Selection Attention Transformer Network (MS-STNet). Comput. Electron. Agric. 2025, 231, 110007. [Google Scholar] [CrossRef]

- Li, X.; Peng, F.; Wei, Z.; Han, G. Identification of Yellow Vein Clearing Disease in Lemons Based on Hyperspectral Imaging and Deep Learning. Front. Plant Sci. 2025, 16, 1554514. [Google Scholar] [CrossRef]

- Almoujahed, M.B.; Rangarajan, A.K.; Whetton, R.L.; Vincke, D.; Eylenbosch, D.; Vermeulen, P.; Mouazen, A.M. Detection of Fusarium Head Blight in Wheat under Field Conditions Using a Hyperspectral Camera and Machine Learning. Comput. Electron. Agric. 2022, 203, 107456. [Google Scholar] [CrossRef]

- Xie, Y.; Plett, D.; Liu, H. The Promise of Hyperspectral Imaging for the Early Detection of Crown Rot in Wheat. AgriEngineering 2021, 3, 924–941. [Google Scholar] [CrossRef]

- Jiao, L.Z.; Wu, W.B.; Zheng, W.G.; Dong, D.M. The Infrared Thermal Image-Based Monitoring Process of Peach Decay under Uncontrolled Temperature Conditions. J. Anim. Plant Sci. 2015, 25, 202–207. [Google Scholar]

- Zhu, W.; Chen, H.; Ciechanowska, I.; Spaner, D. Application of Infrared Thermal Imaging for the Rapid Diagnosis of Crop Disease. IFAC-PapersOnLine 2018, 51, 424–430. [Google Scholar] [CrossRef]

- Lillesand, T.; Kiefer, R.W.; Chipman, J. Remote Sensing and Image Interpretation; John Wiley & Sons: Hoboken, NJ, USA, 2015; ISBN 978-1-118-34328-9. [Google Scholar]

- Pineda, M.; Barón, M.; Pérez-Bueno, M.-L. Thermal Imaging for Plant Stress Detection and Phenotyping. Remote Sens. 2020, 13, 68. [Google Scholar] [CrossRef]

- Wei, X.; Johnson, M.A.; Langston, D.B.; Mehl, H.L.; Li, S. Identifying Optimal Wavelengths as Disease Signatures Using Hyperspectral Sensor and Machine Learning. Remote Sens. 2021, 13, 2833. [Google Scholar] [CrossRef]

- Yang, N.; Yuan, M.; Wang, P.; Zhang, R.; Sun, J.; Mao, H. Tea Diseases Detection Based on Fast Infrared Thermal Image Processing Technology. J. Sci. Food Agric. 2019, 99, 3459–3466. [Google Scholar] [CrossRef]

- Gohad, P.R.; Khan, S.S. Diagnosis of Leaf Health Using Grape Leaf Thermal Imaging and Convolutional Neural Networks. In Proceedings of the 2021 6th IEEE International Conference on Recent Advances and Innovations in Engineering (ICRAIE), Kedah, Malaysia, 1–3 December 2021; IEEE: New York, NY, USA, 2021; pp. 1–5. [Google Scholar]

- Mastrodimos, N.; Lentzou, D.; Templalexis, C.; Tsitsigiannis, D.I.; Xanthopoulos, G. Thermal and Digital Imaging Information Acquisition Regarding the Development of Aspergillus Flavus in Pistachios against Aspergillus Carbonarius in Table Grapes. Comput. Electron. Agric. 2022, 192, 106628. [Google Scholar] [CrossRef]

- Haidekker, M.A.; Dong, K.; Mattos, E.; van Iersel, M.W. A Very Low-Cost Pulse-Amplitude Modulated Chlorophyll Fluorometer. Comput. Electron. Agric. 2022, 203, 107438. [Google Scholar] [CrossRef]

- Mahlein, A.-K. Plant Disease Detection by Imaging Sensors—Parallels and Specific Demands for Precision Agriculture and Plant Phenotyping. Plant Dis. 2016, 100, 241–251. [Google Scholar] [CrossRef] [PubMed]

- Yang, Y.; Mali, P.; Arthur, L.; Molaei, F.; Atsyo, S.; Geng, J.; He, L.; Ghatrehsamani, S. Advanced Technologies for Precision Tree Fruit Disease Management: A Review. Comput. Electron. Agric. 2025, 229, 109704. [Google Scholar] [CrossRef]

- Zhao, X.; Qi, J.; Xu, H.; Yu, Z.; Yuan, L.; Chen, Y.; Huang, H. Evaluating the Potential of Airborne Hyperspectral LiDAR for Assessing Forest Insects and Diseases with 3D Radiative Transfer Modeling. Remote Sens. Environ. 2023, 297, 113759. [Google Scholar] [CrossRef]

- Chauhan, C.; Rani, V.; Kumar, M. Advance Remote Sensing Technologies for Crop Disease and Pest Detection. In Hyperautomation in Precision Agriculture; Elsevier: Amsterdam, The Netherlands, 2025; pp. 181–190. ISBN 978-0-443-24139-0. [Google Scholar]

- Yan, W.Y.; Shaker, A.; El-Ashmawy, N. Urban Land Cover Classification Using Airborne LiDAR Data: A Review. Remote Sens. Environ. 2015, 158, 295–310. [Google Scholar] [CrossRef]

- Zhang, J.; Huang, Y.; Pu, R.; Gonzalez-Moreno, P.; Yuan, L.; Wu, K.; Huang, W. Monitoring Plant Diseases and Pests through Remote Sensing Technology: A Review. Comput. Electron. Agric. 2019, 165, 104943. [Google Scholar] [CrossRef]

- Husin, N.A.; Khairunniza-Bejo, S.; Abdullah, A.F.; Kassim, M.S.M.; Ahmad, D.; Azmi, A.N.N. Application of Ground-Based LiDAR for Analysing Oil Palm Canopy Properties on the Occurrence of Basal Stem Rot (BSR) Disease. Sci. Rep. 2020, 10, 6464. [Google Scholar] [CrossRef]

- Bao, D.; Zhou, J.; Bhuiyan, S.A.; Adhikari, P.; Tuxworth, G.; Ford, R.; Gao, Y. Early Detection of Sugarcane Smut and Mosaic Diseases via Hyperspectral Imaging and Spectral-Spatial Attention Deep Neural Networks. J. Agric. Food Res. 2024, 18, 101369. [Google Scholar] [CrossRef]

- Bhakta, I.; Phadikar, S.; Majumder, K.; Mukherjee, H.; Sau, A. A Novel Plant Disease Prediction Model Based on Thermal Images Using Modified Deep Convolutional Neural Network. Precis. Agric. 2023, 24, 23–39. [Google Scholar] [CrossRef]

- Bohnenkamp, D.; Behmann, J.; Mahlein, A.-K. In-Field Detection of Yellow Rust in Wheat on the Ground Canopy and UAV Scale. Remote Sens. 2019, 11, 2495. [Google Scholar] [CrossRef]

- Bohnenkamp, D.; Kuska, M.T.; Mahlein, A.-K.; Behmann, J. Hyperspectral Signal Decomposition and Symptom Detection of Wheat Rust Disease at the Leaf Scale Using Pure Fungal Spore Spectra as Reference. Plant Pathol. 2019, 68, 1188–1195. [Google Scholar] [CrossRef]

- Brown, D.; De Silva, M. Plant Disease Detection on Multispectral Images Using Vision Transformers. In Proceedings of the 25th Irish Machine Vision and Image Processing Conference (IMVIP), Galway, Ireland, 30 August–1 September 2023. [Google Scholar] [CrossRef]

- Chen, X.; Shi, D.; Zhang, H.; Pérez, J.A.S.; Yang, X.; Li, M. Early Diagnosis of Greenhouse Cucumber Downy Mildew in Seedling Stage Using Chlorophyll Fluorescence Imaging Technology. Biosyst. Eng. 2024, 242, 107–122. [Google Scholar] [CrossRef]

- Dasari, K.; Yadav, S.A.; Kansal, L.; Adilakshmi, J.; Kaliyaperumal, G.; Albawi, A. Fusion of Hyperspectral Imaging and Convolutional Neural Networks for Early Detection of Crop Diseases in Precision Agriculture. In Proceedings of the 2024 International Conference on Communication, Computer Sciences and Engineering (IC3SE), Gautam Buddha Nagar, India, 9–11 May 2024; IEEE: New York, NY, USA, 2024; pp. 1172–1177. [Google Scholar]

- Deng, J.; Hong, D.; Li, C.; Yao, J.; Yang, Z.; Zhang, Z.; Chanussot, J. RustQNet: Multimodal Deep Learning for Quantitative Inversion of Wheat Stripe Rust Disease Index. Comput. Electron. Agric. 2024, 225, 109245. [Google Scholar] [CrossRef]

- Deng, J.; Zhou, H.; Lv, X.; Yang, L.; Shang, J.; Sun, Q.; Zheng, X.; Zhou, C.; Zhao, B.; Wu, J.; et al. Applying Convolutional Neural Networks for Detecting Wheat Stripe Rust Transmission Centers under Complex Field Conditions Using RGB-Based High Spatial Resolution Images from UAVs. Comput. Electron. Agric. 2022, 200, 107211. [Google Scholar] [CrossRef]

- Haider, I.; Khan, M.A.; Nazir, M.; Hamza, A.; Alqahtani, O.; Alouane, M.T.-H.; Masood, A. Crops Leaf Disease Recognition From Digital and RS Imaging Using Fusion of Multi Self-Attention RBNet Deep Architectures and Modified Dragonfly Optimization. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7260–7277. [Google Scholar] [CrossRef]

- Win Kent, O.; Weng Chun, T.; Lee Choo, T.; Weng Kin, L. Early Symptom Detection of Basal Stem Rot Disease in Oil Palm Trees Using a Deep Learning Approach on UAV Images. Comput. Electron. Agric. 2023, 213, 108192. [Google Scholar] [CrossRef]

- Kerkech, M.; Hafiane, A.; Canals, R. Deep Leaning Approach with Colorimetric Spaces and Vegetation Indices for Vine Diseases Detection in UAV Images. Comput. Electron. Agric. 2018, 155, 237–243. [Google Scholar] [CrossRef]

- Khan, I.H.; Liu, H.; Li, W.; Cao, A.; Wang, X.; Liu, H.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; et al. Early Detection of Powdery Mildew Disease and Accurate Quantification of Its Severity Using Hyperspectral Images in Wheat. Remote Sens. 2021, 13, 3612. [Google Scholar] [CrossRef]

- Knauer, U.; Matros, A.; Petrovic, T.; Zanker, T.; Scott, E.S.; Seiffert, U. Improved Classification Accuracy of Powdery Mildew Infection Levels of Wine Grapes by Spatial-Spectral Analysis of Hyperspectral Images. Plant Methods 2017, 13, 47. [Google Scholar] [CrossRef]

- Lay, L.; Lee, H.S.; Tayade, R.; Ghimire, A.; Chung, Y.S.; Yoon, Y.; Kim, Y. Evaluation of Soybean Wildfire Prediction via Hyperspectral Imaging. Plants 2023, 12, 901. [Google Scholar] [CrossRef]

- Leucker, M.; Wahabzada, M.; Kersting, K.; Peter, M.; Beyer, W.; Steiner, U.; Mahlein, A.-K.; Oerke, E.-C. Hyperspectral Imaging Reveals the Effect of Sugar Beet Quantitative Trait Loci on Cercospora Leaf Spot Resistance. Funct. Plant Biol. 2016, 44, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Ma, H.; Huang, W.; Dong, Y.; Liu, L.; Guo, A. Using UAV-Based Hyperspectral Imagery to Detect Winter Wheat Fusarium Head Blight. Remote Sens. 2021, 13, 3024. [Google Scholar] [CrossRef]

- Mahlein, A.-K.; Alisaac, E.; Al Masri, A.; Behmann, J.; Dehne, H.-W.; Oerke, E.-C. Comparison and Combination of Thermal, Fluorescence, and Hyperspectral Imaging for Monitoring Fusarium Head Blight of Wheat on Spikelet Scale. Sensors 2019, 19, 2281. [Google Scholar] [CrossRef]

- Mahlein, A.-K.; Kuska, M.T.; Thomas, S.; Bohnenkamp, D.; Alisaac, E.; Behmann, J.; Wahabzada, M.; Kersting, K. Plant Disease Detection by Hyperspectral Imaging: From the Lab to the Field. Adv. Anim. Biosci. 2017, 8, 238–243. [Google Scholar] [CrossRef]

- Moghadam, P.; Ward, D.; Goan, E.; Jayawardena, S.; Sikka, P.; Hernandez, E. Plant Disease Detection Using Hyperspectral Imaging. In Proceedings of the 2017 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Sydney, Australia, 29 November–1 December 2017; IEEE: New York, NY, USA, 2017; pp. 1–8. [Google Scholar]

- Nguyen, C.; Sagan, V.; Maimaitiyiming, M.; Maimaitijiang, M.; Bhadra, S.; Kwasniewski, M.T. Early Detection of Plant Viral Disease Using Hyperspectral Imaging and Deep Learning. Sensors 2021, 21, 742. [Google Scholar] [CrossRef]

- Shao, Y.; Ji, S.; Xuan, G.; Ren, Y.; Feng, W.; Jia, H.; Wang, Q.; He, S. Detection and Analysis of Chili Pepper Root Rot by Hyperspectral Imaging Technology. Agronomy 2024, 14, 226. [Google Scholar] [CrossRef]

- Tong, J.; Zhang, L.; Tian, J.; Yu, Q.; Lang, C. ToT-Net: A Generalized and Real-Time Crop Disease Detection Framework via Task-Level Meta-Learning and Lightweight Multi-Scale Transformer. Smart Agric. Technol. 2025, 12, 101249. [Google Scholar] [CrossRef]

- Vásconez, J.P.; Vásconez, I.N.; Moya, V.; Calderón-Díaz, M.J.; Valenzuela, M.; Besoain, X.; Seeger, M.; Auat Cheein, F. Deep Learning-Based Classification of Visual Symptoms of Bacterial Wilt Disease Caused by Ralstonia Solanacearum in Tomato Plants. Comput. Electron. Agric. 2024, 227, 109617. [Google Scholar] [CrossRef]
