SADAMB: Advancing Spatially-Aware Vision-Language Modeling Through Datasets, Metrics, and Benchmarks

Abstract

1. Introduction

2. Related Work

2.1. Existing Datasets

- COCO (Common Objects in Context):

- Visual Genome:

- Clevr:

- GQA:

- Spatial Commonsense:

- Visual Spatial Reasoning:

2.2. Conventional Metrics for Generic IC

- BLEU:

- ROUGE:

- METEOR:

2.3. Lack of Rich Spatial Descriptions in Existing Datasets

2.4. Inadequate Evaluation Metrics for Spatial Reasoning

2.5. Limited Scope of Existing Datasets for Spatially Aware VQA

- Generated Caption:

- Caption: “a car is to the left of a person.”

- Generated Q & A:

- Question: “where is the car?” Answer: “to the left of the person.”

- Enhances the Utilization of Spatial Captions: The spatial captions generated by this work not only describe the objects in an image, but also provide context for more complex VQA tasks that require an understanding of spatial relationships.

- Bridges the Gap between Captioning and VQA: By generating question–answer pairs from the same spatially enriched captions, we enable a seamless connection between the two tasks, allowing for multi-task learning where models can simultaneously generate captions and answer spatially aware questions about images. This cross-task capability is critical for models that aim to tackle a broader range of vision-language tasks, making them more versatile and efficient in real-world applications.
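The caption and question–answer example above can be reproduced with a small template rule. The sketch below is only an illustration and not the authors' generation procedure: the regular expression, the assumed caption template ("a SUBJECT is RELATION a OBJECT.") and the function name `caption_to_qa` are all hypothetical.

```python
import re
from typing import Optional, Tuple

# Assumed caption template: "<a|an> SUBJECT is RELATION <a|an> OBJECT."
# This pattern is an illustrative assumption, not taken from the paper.
CAPTION_PATTERN = re.compile(
    r"^(?:a|an) (?P<subj>.+?) is (?P<rel>.+) (?:a|an) (?P<obj>.+?)\.?$"
)


def caption_to_qa(caption: str) -> Optional[Tuple[str, str]]:
    """Turn one spatial caption into a (question, answer) pair, or None."""
    match = CAPTION_PATTERN.match(caption.strip().lower())
    if match is None:
        return None  # caption does not follow the assumed template
    question = f"where is the {match['subj']}?"
    answer = f"{match['rel']} the {match['obj']}."
    return question, answer


qa = caption_to_qa("a car is to the left of a person.")
print(qa)
```

Because both outputs derive from the same caption, a single annotation can serve the captioning and the VQA task.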

3. Dataset

3.1. Dataset Creation Process

- The YOLOv8 [23] object detector assigns the wrong class to an object or misses it entirely.

- The ZoeDepth [24] depth estimator produces depth estimates that are inconsistent with the objects' true ordering along the Z (depth) axis.

- Our heuristics, which operate on the estimated 3D bounding boxes of the objects (computed by combining the outputs of the two pre-trained models above), infer relations that a human evaluator would not, owing to the imperfect, over-simplified nature of the underlying logical checks.

- There are more than four well-sized objects in the scene, while we consider at most four. This arbitrary hard limit was enforced to prevent the creation of very long image descriptions (since we consider all vs. all objects) that would require very large token sizes to be processed, rendering a good portion of the dataset irrelevant for training models compatible with our targeted edge devices. This strategy can occasionally leave some individual objects of a class untreated (e.g., resulting in an incorrect object count). The distribution of object counts for all data splits is shown in Figure 3.
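To make the failure modes above concrete, here is a minimal sketch of the kind of rule-based pass they describe. It is not the authors' implementation: the `Box3D` fields, the use of box area to pick the "well-sized" objects, the relation vocabulary and the strict comparisons are all assumptions chosen for illustration. Only the limit of four objects and the all vs. all comparison come from the text.

```python
from dataclasses import dataclass
from itertools import permutations
from typing import List

MAX_OBJECTS = 4  # hard limit stated in the text


@dataclass(frozen=True)
class Box3D:
    """Hypothetical per-object summary built from detector and depth outputs."""
    label: str
    x_center: float  # horizontal center in image coordinates (assumed)
    depth: float     # estimated distance from the camera (assumed: larger = farther)
    area: float      # 2D box area, used here to rank object size (assumed)


def describe_scene(boxes: List[Box3D]) -> List[str]:
    """Emit one caption per ordered object pair and per relation that holds."""
    # Keep only the largest objects; anything beyond the limit is ignored,
    # which is how some instances of a class can go undescribed.
    kept = sorted(boxes, key=lambda b: b.area, reverse=True)[:MAX_OBJECTS]
    captions = []
    for a, b in permutations(kept, 2):  # all vs. all, in both directions
        if a.x_center < b.x_center:
            captions.append(f"a {a.label} is to the left of a {b.label}.")
        elif b.x_center < a.x_center:
            captions.append(f"a {a.label} is to the right of a {b.label}.")
        if a.depth < b.depth:
            captions.append(f"a {a.label} is in front of a {b.label}.")
        elif b.depth < a.depth:
            captions.append(f"a {a.label} is behind a {b.label}.")
    return captions


scene = [
    Box3D("car", x_center=10.0, depth=5.0, area=100.0),
    Box3D("person", x_center=50.0, depth=5.0, area=80.0),
]
captions = describe_scene(scene)
print(captions)
```

Any error in the detected label or the estimated depth propagates directly into the emitted captions, and the number of ordered pairs grows quadratically with the object limit, which is why a cap keeps descriptions short.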

3.2. Dataset Extension for Spatial VQA

4. Evaluation Metrics

4.1. Definitions and Formulation

4.2. Formulaic Representation

- A: What is the probability that a generated caption accurately describes a valid spatial relationship?

- B: What is the probability that an image is correctly captioned, i.e., that at least one of its captions correctly describes a spatial relationship in it?

4.2.1. Sentence-Level Accuracy ()

- is the total number of generated captions that exactly match any ground truth caption for their corresponding images.

- is the total number of generated captions that do not match any ground truth caption.
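As a sketch of how the two counts above could be combined, the code below computes the share of generated captions that exactly match a ground truth caption of their image, which is one natural reading of question A. The ratio itself, the function name and the toy captions are assumptions, since the formula is not reproduced in this text.

```python
from typing import Dict, List, Set


def sentence_level_accuracy(
    generated: Dict[str, List[str]],
    ground_truth: Dict[str, Set[str]],
) -> float:
    """Matched captions divided by all generated captions (assumed formula)."""
    matched = 0    # captions that exactly match any ground truth caption
    unmatched = 0  # captions that match no ground truth caption
    for image_id, captions in generated.items():
        references = ground_truth.get(image_id, set())
        for caption in captions:
            if caption in references:
                matched += 1
            else:
                unmatched += 1
    total = matched + unmatched
    return matched / total if total else 0.0


# Toy data for illustration only.
generated = {
    "img1": ["a car is to the left of a person.", "a dog is behind a car."],
    "img2": ["a cup is to the left of a plate."],
}
ground_truth = {
    "img1": {"a car is to the left of a person."},
    "img2": {"a cup is to the right of a plate."},
}
score = sentence_level_accuracy(generated, ground_truth)
print(f"sentence-level accuracy: {score:.3f}")
```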

4.2.2. Image-Level Accuracy ()

- N is the total number of images;

- is the number of generated captions for image i that exactly match any ground truth caption;

- is the total number of captions generated for image i.
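The per-image quantities above can be gathered in a single pass. The sketch below then scores an image as correct when at least one of its captions matches, following the wording of question B. That aggregation is an assumption, because the exact formula is not reproduced in this text, and the function names and toy captions are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def per_image_counts(
    generated: Dict[str, List[str]],
    ground_truth: Dict[str, Set[str]],
) -> Dict[str, Tuple[int, int]]:
    """Map each image to (exactly matching captions, total generated captions)."""
    counts = {}
    for image_id, captions in generated.items():
        references = ground_truth.get(image_id, set())
        matches = sum(1 for caption in captions if caption in references)
        counts[image_id] = (matches, len(captions))
    return counts


def image_level_accuracy(
    generated: Dict[str, List[str]],
    ground_truth: Dict[str, Set[str]],
) -> float:
    """Fraction of images with at least one exactly matching caption (assumed)."""
    counts = per_image_counts(generated, ground_truth)
    if not counts:
        return 0.0
    hits = sum(1 for matches, _ in counts.values() if matches >= 1)
    return hits / len(counts)  # here N is the number of images with captions


# Toy data for illustration only.
generated = {
    "img1": ["a car is to the left of a person.", "a dog is behind a car."],
    "img2": ["a cup is to the left of a plate."],
}
ground_truth = {
    "img1": {"a car is to the left of a person."},
    "img2": {"a cup is to the right of a plate."},
}
image_score = image_level_accuracy(generated, ground_truth)
print(per_image_counts(generated, ground_truth), image_score)
```

Keeping the per-image pairs separate from the aggregation makes it easy to swap in a different rule, such as averaging the per-image match ratio.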

4.3. Properties of the Metric

5. Benchmark and Results

5.1. Description of the Models Tested

5.1.1. Vision Encoders

5.1.2. Text Decoders

5.2. Fine-Tuning

5.3. Benchmarking and Experimental Setup

5.4. Results and Analysis

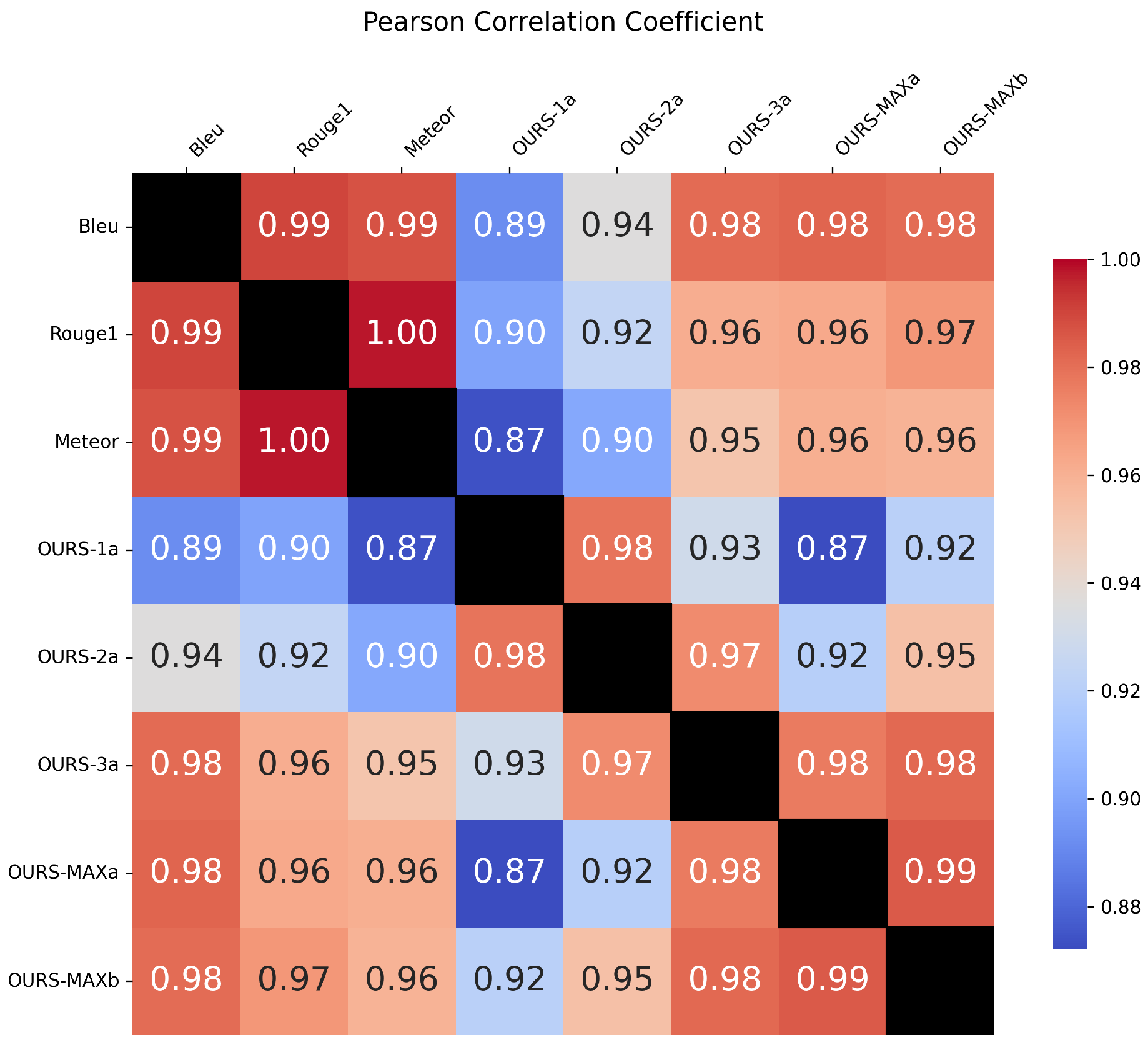

6. Metrics Observations

- A value of 1.0 indicates perfect positive correlation.

- A value of 0.0 indicates no correlation.

- A value of <0 would indicate negative correlation (none observed here).
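For readers who want to reproduce this kind of analysis, the sketch below computes a correlation coefficient between two metrics across the six benchmarked models, using the Bleu and Meteor rows of the results table. Pearson's coefficient is an assumption, as the text here does not name the coefficient used, and the values reported in this section may have been computed over a different set of scores.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Scores per model, in the column order of the results table:
# ViT/GPT2, ViT/BERT, DeiT/GPT2, DeiT/BERT, DPT/GPT2, DPT/BERT
BLEU = [68.9205, 69.1500, 69.5859, 70.6920, 69.3144, 69.1059]
METEOR = [77.0582, 77.1043, 77.2755, 78.0631, 76.8082, 76.6636]

r = pearson(BLEU, METEOR)
print(f"Bleu vs. Meteor: r = {r:.3f}")
```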

6.1. Key Observations

- Standard metrics are tightly correlated. Metrics like Bleu and Rouge1 correlate strongly (≥0.99), indicating

  - High agreement on the quality of the caption.

  - Redundancy: using all of them may provide limited additional insight.

- Custom spatial metrics correlate strongly among themselves. Examples include

  - Acc1A vs. Acc2A: 0.978;

  - Acc3A vs. AccmaxA: 0.976.

  This suggests coherence across custom metrics targeting different prediction depths.

- Custom and standard metrics exhibit a correlation gap that varies by metric.

  - Meteor vs. Acc1A: 0.87;

  - Meteor vs. Acc2A: 0.90;

  - Meteor vs. Acc3A: 0.95.

  This indicates that the level of alignment depends on the targeted prediction depth. Acc1A and Acc2A seem the most valuable, providing insight that might be lost if only standard metrics were used.

- MAX metrics are robust aggregators. AccmaxA and AccmaxB show a very high correlation (0.985), implying near interchangeability. They also correlate consistently with other metrics, reflecting their role as composite indicators.

6.2. Implications

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: A neural image caption generator. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3156–3164.

2. Li, X.; Yin, X.; Li, C.; Zhang, P.; Hu, X.; Zhang, L.; Wang, L.; Hu, H.; Dong, L.; Wei, F.; et al. Oscar: Object-semantics aligned pre-training for vision-language tasks. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXX 16. Springer: Cham, Switzerland, 2020; pp. 121–137.

3. Lu, J.; Batra, D.; Parikh, D.; Lee, S. Vilbert: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. Adv. Neural Inf. Process. Syst. 2019, 32, 13–23.

4. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

5. Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to end learning for self-driving cars. arXiv 2016, arXiv:1604.07316.

6. Anderson, P.; Wu, Q.; Teney, D.; Bruce, J.; Johnson, M.; Sünderhauf, N.; Reid, I.; Gould, S.; Van Den Hengel, A. Vision-and-language navigation: Interpreting visually-grounded navigation instructions in real environments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3674–3683.

7. Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3389–3396.

8. Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

9. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

10. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

11. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.

12. Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72.

13. Lin, C.Y. Rouge: A package for automatic evaluation of summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81.

14. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Cham, Switzerland, 2014; pp. 740–755.

15. Antol, S.; Agrawal, A.; Lu, J.; Mitchell, M.; Batra, D.; Zitnick, C.L.; Parikh, D. Vqa: Visual question answering. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2425–2433.

16. Liu, R.; Liu, C.; Bai, Y.; Yuille, A.L. Clevr-ref+: Diagnosing visual reasoning with referring expressions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4185–4194.

17. Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. Adv. Neural Inf. Process. Syst. 2014, 27, 3104–3112.

18. Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.J.; Shamma, D.A.; et al. Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations. Int. J. Comput. Vis. (IJCV) 2017, 123, 32–73.

19. Johnson, J.; Hariharan, B.; Van Der Maaten, L.; Fei-Fei, L.; Lawrence Zitnick, C.; Girshick, R. Clevr: A diagnostic dataset for compositional language and elementary visual reasoning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2901–2910.

20. Hudson, D.A.; Manning, C.D. GQA: A New Dataset for Real-World Visual Reasoning and Compositional Question Answering. arXiv 2019, arXiv:1902.09506.

21. Storks, S.; Gao, Q.; Chai, J.Y. Commonsense reasoning for natural language understanding: A survey of benchmarks, resources, and approaches. arXiv 2019, arXiv:1904.01172, 1–60.

22. Liu, F.; Emerson, G.; Collier, N. Visual Spatial Reasoning. Trans. Assoc. Comput. Linguist. 2023, 11, 635–651.

23. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

24. Bhat, S.F.; Birkl, R.; Wofk, D.; Wonka, P.; Müller, M. ZoeDepth: Zero-shot Transfer by Combining Relative and Metric Depth. arXiv 2023, arXiv:2302.12288.

25. Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-Art Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; pp. 38–45.

26. Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 10347–10357.

27. Ranftl, R.; Bochkovskiy, A.; Koltun, V. Vision transformers for dense prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 12179–12188.

28. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186.

29. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

30. Yadan, O. Hydra: A Framework for Elegantly Configuring Complex Applications. Github. 2019. Available online: https://hydra.cc/ (accessed on 17 September 2025).

| Data Split | Original Images | Spatial Captions | Spatial Q&A |
|---|---|---|---|
| train | 72,784 | 314,159 | 989,350 |
| validation | 35,359 | 149,487 | 472,004 |
| test | 71,027 | 294,422 | 933,211 |
| Total | 179,170 | 758,068 | 2,394,565 |

| Performance Scores | ViT/GPT2 | ViT/BERT | DeiT/GPT2 | DeiT/BERT | DPT/GPT2 | DPT/BERT |
|---|---|---|---|---|---|---|
| Bleu | 68.9205 | 69.1500 | 69.5859 | 70.6920 | 69.3144 | 69.1059 |
| Rouge | 78.7030 | 78.8027 | 78.7846 | 79.6154 | 78.5726 | 78.2929 |
| Meteor | 77.0582 | 77.1043 | 77.2755 | 78.0631 | 76.8082 | 76.6636 |
|  | 51.4664 | 51.5399 | 50.6547 | 51.4155 | 47.7672 | 48.2565 |
|  | 44.8609 | 45.8751 | 45.6122 | 46.7670 | 43.9423 | 43.6683 |
|  | 42.1286 | 43.0079 | 43.8455 | 45.2108 | 41.9176 | 42.6292 |
|  | 38.6304 | 38.4833 | 39.8153 | 41.4448 | 39.2559 | 39.3343 |
|  | 46.7449 | 46.6486 | 46.4213 | 47.4918 | 44.8170 | 44.8145 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Papadopoulos, G.; Drakoulis, P.; Ntovas, A.; Doumanoglou, A.; Zarpalas, D. SADAMB: Advancing Spatially-Aware Vision-Language Modeling Through Datasets, Metrics, and Benchmarks. Computers 2025, 14, 413. https://doi.org/10.3390/computers14100413
