Do LLMs Offer a Robust Defense Mechanism Against Membership Inference Attacks on Graph Neural Networks?

Abstract

1. Introduction

2. Related Works

2.1. Background

- Convolutional Layers (used in GCN, ARMA, and ChebNet) update node representations through neighborhood aggregation, in which an aggregation function processes neighboring nodes and weights their contributions.

- Attention Layers (used in GATs, MoNet, GaAN) implement dynamic neighbor weighting, in which an attention coefficient a determines neighbor importance through learned attention mechanisms.

- Message Passing Layers (used in GraphSAGE, MPNNs) control information flow between nodes through a message function that defines the message content passed between nodes.
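The three layer families above can be illustrated with a minimal NumPy sketch. This is not the formulation used in the paper: the function names, the mean aggregator, the LeakyReLU slope of 0.2, and the ReLU nonlinearity are illustrative assumptions chosen to keep the example short.

```python
import numpy as np

def conv_layer(H, A, W):
    """Convolution-style update: average each node's neighborhood
    (including the node itself), then apply a linear map and ReLU."""
    A_hat = A + np.eye(A.shape[0])            # add self-loops
    deg = A_hat.sum(axis=1, keepdims=True)    # degree of each node (>= 1)
    return np.maximum((A_hat / deg) @ H @ W, 0.0)

def attention_layer(H, A, W, a):
    """Attention-style update: neighbors are weighted by a softmax
    over learned pairwise scores (assumed GAT-like scoring)."""
    Z = H @ W
    n, d = Z.shape
    A_hat = A + np.eye(n)
    # score[i, j] = a^T [z_i || z_j], split into its two halves
    scores = (Z @ a[:d])[:, None] + (Z @ a[d:])[None, :]
    scores = np.where(scores > 0, scores, 0.2 * scores)   # LeakyReLU
    scores = np.where(A_hat > 0, scores, -np.inf)         # mask non-neighbors
    scores = scores - scores.max(axis=1, keepdims=True)   # numerical stability
    alpha = np.exp(scores)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)      # rows sum to 1
    return alpha @ Z

def message_passing_layer(H, A, W_self, W_msg):
    """Message-passing update: each node combines its own state with
    the mean of the messages sent by its neighbors."""
    deg = np.maximum(A.sum(axis=1, keepdims=True), 1.0)   # avoid divide-by-zero
    messages = (A @ (H @ W_msg)) / deg
    return np.maximum(H @ W_self + messages, 0.0)
```

With an all-zero attention vector `a`, every neighbor receives the same weight, so `attention_layer` reduces to the plain neighborhood average computed by `conv_layer` (before the nonlinearity). The learned scores are what let an attention layer depart from that uniform weighting.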

2.2. Membership Inference Attacks and Defense Mechanisms

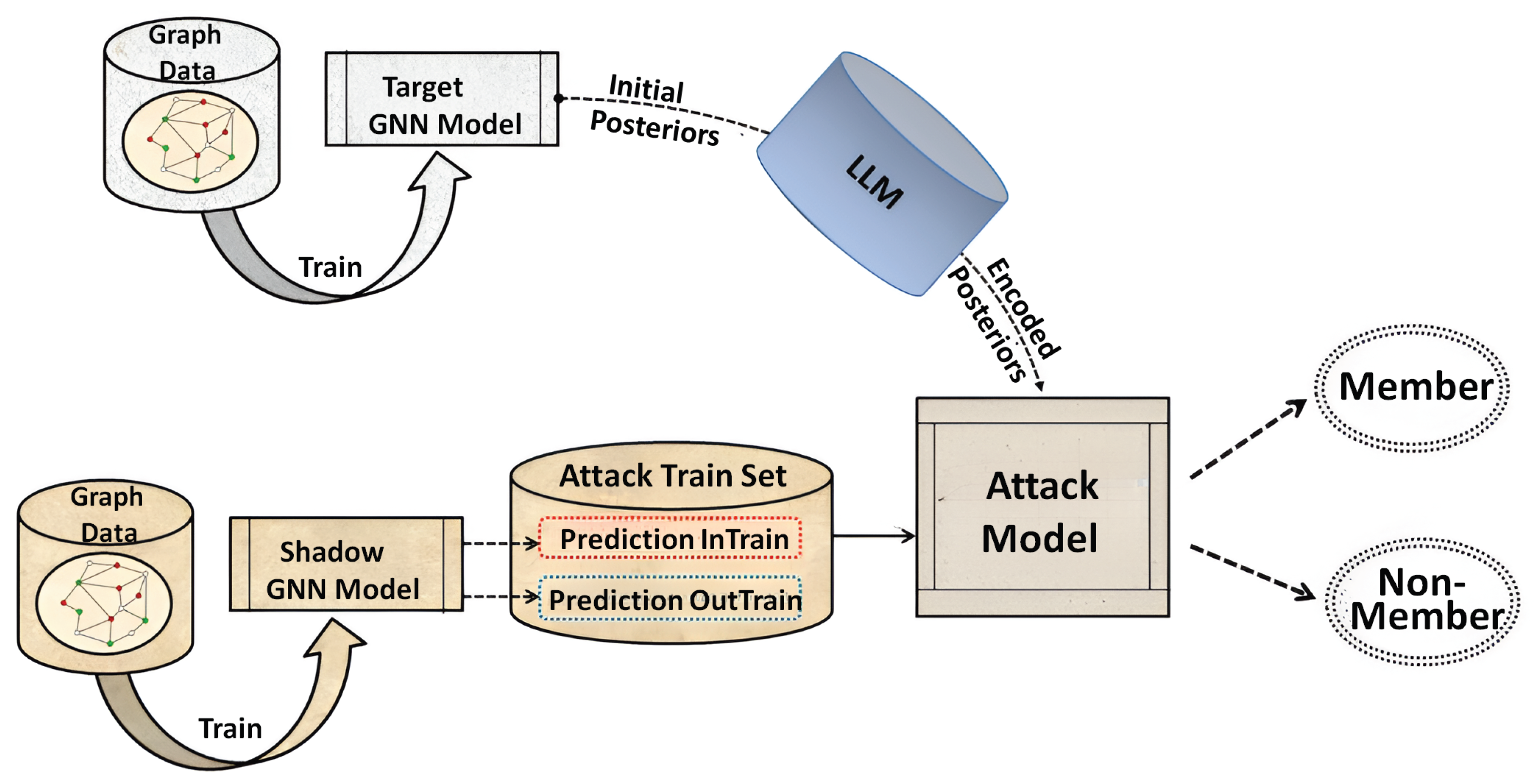

3. Proposed Model

3.1. Attack Methodology

3.2. Proposed Defense Methodologies

3.2.1. Posterior Encoding Using LLM-Guided Knowledge Distillation

3.2.2. Posterior Encoding Using LLM-Guided Secure Aggregation

3.2.3. Posterior Encoding with LLM-Guided Noise Injection

3.3. LLM Architectural Details

4. Experiments

4.1. Datasets

- Cora: a citation dataset consisting of machine learning papers grouped into seven classes. The nodes represent papers, and the edges represent citation links.

- CiteSeer: another citation network, with publications categorized into six classes. Features are expressed as binary-valued word vectors.

- PubMed: a biomedical dataset of abstracts of medical papers classified into three classes.

4.2. Proposed Approach

4.3. Experimental Setup

4.4. Metrics

5. Results and Discussion

5.1. Reduction of Membership Inference Attack Accuracy Using Three LLM-Guided Defense Strategies

5.2. Outperforming Previous Defense Approaches

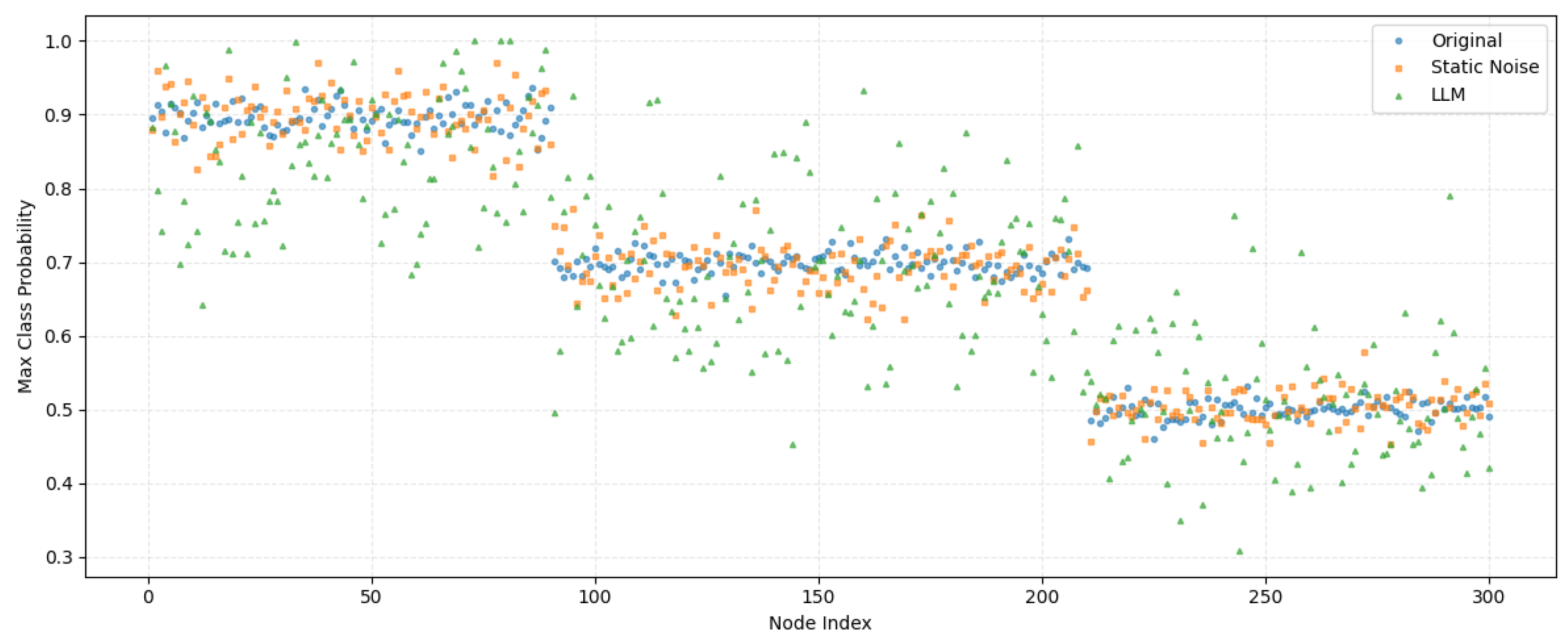

5.3. Maintaining Node Classification Accuracy in GNN

5.4. Computational Overhead and Practical Deployment

5.5. Why LLMs?

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Dataset | Nodes | Edges | Features | Classes |
|---|---|---|---|---|
| Cora | 2708 | 10,565 | 1433 | 7 |
| CiteSeer | 3327 | 9104 | 3703 | 6 |
| PubMed | 19,717 | 88,648 | 500 | 3 |

| Dataset | Defense | GCN | ARMA | GAT | Cheb | SAGE |
|---|---|---|---|---|---|---|
| Cora | MIA | 97.30 | 93.50 | 98.30 | 98.40 | 96.10 |
| | Shuffle ENC. | 52.90 | 58.30 | 58.00 | 59.10 | 54.70 |
| | Dropout | 57.10 | 54.80 | 56.30 | 62.80 | 54.40 |
| | One-Hot ENC. | 50.00 | 50.00 | 50.00 | 50.00 | 50.00 |
| | RELU | 63.40 | 58.10 | 60.10 | 63.90 | 60.30 |
| | K TOP | 67.30 | 70.20 | 63.80 | 66.20 | 63.50 |
| | VAE | 57.30 | 57.50 | 55.40 | 54.70 | 54.90 |
| | Graph per 1 | 55.10 | 55.70 | 54.70 | 56.20 | 54.90 |
| | Graph per 2 | 54.90 | 55.20 | 54.30 | 55.30 | 53.80 |
| | LLM* | 47.20 | 48.10 | 47.00 | 48.40 | 47.20 |
| | LLM** | 44.30 | 47.40 | 45.10 | 44.90 | 43.70 |
| | LLM*** | 42.80 | 45.60 | 46.00 | 42.90 | 43.40 |
| PubMed | MIA | 87.30 | 85.60 | 94.60 | 96.40 | 92.30 |
| | Shuffle ENC. | 58.10 | 53.50 | 61.10 | 54.10 | 54.70 |
| | Dropout | 61.80 | 57.70 | 63.40 | 52.20 | 56.60 |
| | One-Hot ENC. | 50.00 | 50.00 | 50.00 | 50.00 | 50.00 |
| | RELU | 64.60 | 59.10 | 63.10 | 55.20 | 56.80 |
| | K TOP | 67.50 | 66.40 | 69.80 | 61.30 | 63.10 |
| | VAE | 57.10 | 58.70 | 57.70 | 56.40 | 53.90 |
| | Graph per 1 | 56.20 | 56.30 | 54.90 | 56.50 | 56.20 |
| | Graph per 2 | 56.10 | 55.50 | 54.10 | 55.70 | 55.10 |
| | LLM* | 45.00 | 45.20 | 47.30 | 48.60 | 46.50 |
| | LLM** | 42.80 | 44.90 | 45.40 | 46.10 | 47.30 |
| | LLM*** | 42.30 | 45.10 | 42.30 | 45.00 | 43.60 |
| CiteSeer | MIA | 96.00 | 94.10 | 98.40 | 99.70 | 96.40 |
| | Shuffle ENC. | 53.60 | 56.40 | 57.20 | 54.80 | 55.70 |
| | Dropout | 56.90 | 59.70 | 60.80 | 58.80 | 57.70 |
| | One-Hot ENC. | 50.00 | 50.00 | 50.00 | 50.00 | 50.00 |
| | RELU | 57.80 | 62.10 | 64.60 | 60.80 | 59.90 |
| | K TOP | 63.30 | 64.80 | 65.90 | 62.90 | 64.30 |
| | VAE | 56.10 | 57.10 | 56.30 | 55.70 | 58.10 |
| | Graph per 1 | 54.80 | 55.70 | 54.60 | 55.30 | 54.80 |
| | Graph per 2 | 54.70 | 54.60 | 54.20 | 54.10 | 54.30 |
| | LLM* | 47.00 | 47.80 | 48.30 | 48.90 | 47.70 |
| | LLM** | 44.60 | 45.40 | 46.70 | 47.30 | 43.20 |
| | LLM*** | 43.30 | 46.70 | 45.50 | 46.00 | 44.10 |
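To compare defenses at a glance, the per-architecture values can be averaged across the five GNNs. The sketch below does this for a subset of the Cora rows of the table above. The dictionary layout and the helper name `mean_attack_accuracy` are illustrative assumptions, and averaging over architectures is a convenience summary rather than a metric reported by the authors.

```python
# Cora rows copied from the table above (columns: GCN, ARMA, GAT, Cheb, SAGE).
cora = {
    "MIA":         [97.30, 93.50, 98.30, 98.40, 96.10],
    "Graph per 2": [54.90, 55.20, 54.30, 55.30, 53.80],
    "LLM*":        [47.20, 48.10, 47.00, 48.40, 47.20],
    "LLM**":       [44.30, 47.40, 45.10, 44.90, 43.70],
    "LLM***":      [42.80, 45.60, 46.00, 42.90, 43.40],
}

def mean_attack_accuracy(rows):
    """Return the mean value of each row, averaged over the GNN columns."""
    return {name: sum(vals) / len(vals) for name, vals in rows.items()}

means = mean_attack_accuracy(cora)

# Print defenses from lowest to highest mean value.
for name, value in sorted(means.items(), key=lambda kv: kv[1]):
    print(f"{name:12s} {value:6.2f}")
```

On these rows, the three LLM-guided variants have the lowest means, and the undefended MIA row has the highest.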

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jnaini, A.; Koulali, M.-A. Do LLMs Offer a Robust Defense Mechanism Against Membership Inference Attacks on Graph Neural Networks? Computers 2025, 14, 414. https://doi.org/10.3390/computers14100414
