Abstract

This study investigates the adoption of PyChatAI, a bilingual AI-powered chatbot for Python programming education, among female computer science students at Jouf University. Guided by the Technology Acceptance Model 3 (TAM3), it examines the determinants of user acceptance and usage behaviour. A Solomon Four-Group experimental design (N = 300) was used to control pre-test effects and isolate the impact of the intervention. PyChatAI provides interactive problem-solving, code explanations, and topic-based tutorials in English and Arabic. Measurement and structural models were validated via Confirmatory Factor Analysis (CFA) and Structural Equation Modelling (SEM), achieving excellent fit (CFI = 0.980, RMSEA = 0.039). Results show that perceived usefulness (β = 0.446, p < 0.001) and perceived ease of use (β = 0.243, p = 0.005) significantly influence intention to use, which in turn predicts actual usage (β = 0.406, p < 0.001). Trust, facilitating conditions, and hedonic motivation emerged as strong antecedents of ease of use, while social influence and cognitive factors had limited impact. These findings demonstrate that AI-driven bilingual tools can effectively enhance programming engagement in gender-specific, culturally sensitive contexts, offering practical guidance for integrating intelligent tutoring systems into computer science curricula.

1. Introduction

1.1. Background and Motivation

Artificial intelligence (AI) has emerged as a transformative force in computer science, significantly influencing both theoretical research and real-world applications. This study builds upon our previous research [1,2] by extending the analysis to include additional constructs and providing further empirical validation, thereby offering new insights into the acceptance and educational impact of PyChatAI.

In educational contexts, AI-powered technologies, particularly chatbots and virtual assistants, are increasingly being integrated into instructional design to support personalised, scalable, and adaptive learning experiences [3]. These systems aim to simulate intelligent behaviour by acquiring knowledge from the environment and providing context-sensitive feedback, making them particularly valuable in complex learning domains such as programming. In programming education, AI has been shown to enhance learning efficiency, reduce coding-related anxiety, and improve overall student performance [4]. Tools such as Iris, an AI-driven tutor, have proven useful in helping students grasp programming concepts and solve exercises. However, the influence of such tools on student motivation has been mixed, with most learners preferring to use them as supplementary aids rather than replacements for human instruction [5]. Intelligent tutoring systems enable personalised learning paths, emulate expert guidance, and foster greater engagement, particularly for self-paced learners [6].

1.2. Theoretical Framing

As AI tools proliferate in educational environments, researchers and instructors alike are increasingly focused on understanding what drives their adoption among students. The Technology Acceptance Model (TAM) and its extended version, TAM3, are among the most widely applied frameworks for evaluating technology usage intention, perceived usefulness, and ease of use in educational settings [7,8]. These models account for a range of mediating and moderating variables, including social influence, cognitive load, trust, and hedonic motivation. TAM-based studies have demonstrated that the behavioural intention to adopt AI tools is often shaped by perceptions of educational support and ease of use. For instance, students supported by AI tools performed better on thread-related tasks but showed gaps in process-based understanding [9]. Meanwhile, TAM3-based analyses have shown that job relevance and robot-related anxiety exert minimal influence on students’ willingness to adopt AI technologies, particularly in higher education [10]. Among Saudi university students, perceived usefulness and satisfaction have emerged as dominant predictors of acceptance [11].

1.3. Context and Research Gap

Despite advancements in AI-driven instruction, there remains a lack of empirical research on AI tool adoption within specific sociocultural contexts, such as bilingual education environments or female-only institutions in the Middle East. Most existing studies focus on general student populations, often overlooking the unique challenges and acceptance patterns that may emerge in conservative or gender-segregated academic settings in Saudi Arabia [12,13]. Furthermore, computer science students in Saudi Arabia frequently encounter linguistic barriers, as programming is typically taught in English while Arabic remains the primary medium of communication [14]. This combination of a bilingual and female-only setting introduces distinct dynamics that have not been sufficiently explored in prior research, representing a critical gap that this study seeks to address.

Moreover, the growing use of large language models (LLMs) such as ChatGPT-4 and Bard has introduced new layers of complexity to programming education. Students frequently rely on these tools for code generation, debugging, and problem-solving [15,16], yet few studies explore how these models are utilised in structured curricula or formal assessment environments [17,18]. Although hybrid platforms combining generative AI and community-driven knowledge, such as Stack Overflow, show pedagogical promise, their role in fostering deep learning remains underexamined [19].

1.4. Research Purpose and Contribution

This study seeks to address the aforementioned gaps by evaluating the adoption and behavioural usage of PyChatAI, a bilingual AI-powered learning assistant designed to support Python (Version 3.8.19) programming education. PyChatAI enables students to engage with coding tutorials, receive AI-generated feedback on code, and navigate guided problem-solving scenarios, all in a conversational user interface available in both English and Arabic. To systematically examine user acceptance, the study applies the TAM3 framework alongside a Solomon Four-Group experimental design, which allows for the control of pre-test effects and isolated evaluation of the AI intervention. The research specifically targets female computer science students at Jouf University, offering valuable insight into AI adoption patterns in a culturally specific and underrepresented demographic. By integrating a validated acceptance model with a robust experimental design, this research contributes to the growing body of literature on intelligent tutoring systems, provides practical guidance for technology implementation in higher education, and highlights the importance of culturally aware design in educational AI tools.

2. Literature Review

2.1. AI Adoption in K–12 and Higher Education

Numerous studies have explored the integration of artificial intelligence (AI) into educational institutions, focusing on factors influencing student engagement with AI-based learning systems. Han et al. applied the Technology Acceptance Model 3 (TAM3) to investigate AI adoption in rural Chinese middle schools [20]. Their analysis identified key determinants such as system quality, feedback perception, computer self-efficacy, and perceived enjoyment. The study offers practical insights for enhancing adaptive education through AI and provides actionable recommendations for academic leadership seeking to improve student learning outcomes. In a higher education context, Al-Mamary evaluated the adoption of learning management systems (LMS) using the TAM [21]. The study analysed responses from 228 students at the University of Hail and tested five hypotheses using structural equation modelling (SEM). Results confirmed that perceived usefulness (PU) and perceived ease of use (PEOU) significantly influence students’ intent to use LMS platforms. These findings suggest that institutional strategies promoting LMS integration can yield improvements in resource management, instructional design, and administrative efficiency. Sánchez-Prieto, Cruz-Benito, Therón Sánchez, and García-Peñalvo implemented a TAM-based model to examine students’ acceptance of AI-based assessment tools [22]. Using a 30-item Likert-type instrument grounded in established technology adoption theories, the researchers found that PU, PEOU, behavioural intention, attitude toward use, and actual system use were critical dimensions shaping technology adoption in AI-supported assessment. From a policymaker perspective, Helmiatin, Hidayat, and Kahar investigated AI integration in higher education at a public university in Indonesia [23]. Their survey of 348 lecturers and 39 administrative personnel was analysed using partial least squares structural equation modelling (PLS-SEM). 
The findings demonstrated that effort expectancy and performance expectancy positively influenced AI adoption, whereas perceived risk had a negative effect. In addition, facilitating conditions supported effort expectancy but were found to negatively influence behavioural intention. Attitudinal and intentional variables significantly impacted AI adoption outcomes.

2.2. ChatGPT and Conversational AI Tools

Several studies have examined the integration and perception of conversational AI tools such as ChatGPT in educational settings. Goh, Dai, and Yang explored the use of ChatGPT as a conceptual research benchmark. Through two experiments, they assessed ChatGPT responses in relation to TAM constructs. While results suggested an alignment between ChatGPT output and TAM theory, a high Heterotrait–Monotrait (HTMT) ratio indicated issues with discriminant validity among the constructs [24]. In a large-scale study involving 921 respondents, Bouteraa et al. investigated the role of student integrity in the adoption of ChatGPT. The researchers employed three theoretical frameworks: Social Cognitive Theory (SCT), Unified Theory of Acceptance and Use of Technology (UTAUT), and the Technology Acceptance Model. Results highlighted several influencing factors, including social influence, performance expectancy, technological and educational self-efficacy, and personal anxiety. While a positive moderating effect was found between ChatGPT usage and effort expectancy, performance expectancy and self-efficacy had a negative effect. Moreover, student integrity emerged as a negatively impacted construct in the adoption process [25]. Kleine, Schaffernak, and Lermer conducted a two-part study using TAM and TAM3 to analyse AI chatbot usage among university students. In the first study, involving 72 participants, the researchers identified that chatbot usage correlated strongly with PU and PEOU. A second study with 153 students validated these findings and introduced new predictors for PU and PEOU, including subjective norms and facilitating conditions, emphasising the role of both individual and contextual influences in AI adoption [26]. Essel, Vlachopoulos, Essuman, and Amankwa focused on the cognitive impact of ChatGPT usage among undergraduates. 
Their analysis underscored that instant feedback and conversational support from ChatGPT improved learners’ problem-solving skills and engagement, although the extent of learning gains varied depending on the instructional context [18]. Moon and Kim assessed elementary teachers’ perceptions of ChatGPT by deploying a structured questionnaire across a balanced sample in terms of gender and teaching experience. Rather than naming the tool explicitly, the term “System” was used to ensure unbiased responses. Analysis using TAM3 revealed that perceptions differed significantly by teaching course, experience level, and instructional medium [27]. In a study conducted in Jakarta, Sitanaya, Luhukay, and Syahchari explored student experiences with ChatGPT through a design-based methodology. The research illustrated that AI chatbots such as ChatGPT played a supportive role in enhancing conceptual understanding, especially in programming courses. However, it also revealed challenges including overreliance and lack of deep critical engagement [28]. Garcia et al. compared AI-driven responses from ChatGPT with peer-supported systems such as Stack Overflow. Their study highlighted a student preference for human-curated platforms in complex scenarios, although ChatGPT was favoured for rapid troubleshooting and concept clarification [19]. Finally, Almulla analysed the effect of ChatGPT on university students’ learning satisfaction. Based on data from 262 participants (175 male, remainder female), the study used SEM to confirm that PU and PEOU were the dominant factors influencing ChatGPT adoption in academic settings [29].

2.3. Broader Applications of TAM and UTAUT in EdTech

The TAM and its extensions have been widely employed to evaluate AI-driven tools across diverse educational settings. Al-Mamary applied TAM to study student adoption of LMS at the University of Hail. Based on responses from 228 students and using structural equation modelling, the study confirmed that both PU and PEOU significantly influenced LMS adoption [21]. The findings highlight the potential of LMS platforms to support resource planning, administrative efficiency, and strategic educational development. At the Ho Chi Minh City University of Industry, Duy et al. investigated AI adoption using a hybrid model combining TAM and the Theory of Planned Behaviour. Surveying 390 students via stratified sampling and applying a least-squares structural model, the study identified key influencing factors such as system output quality, relevance, image, subjective norms, self-efficacy, perceived playfulness, demonstrability, ease of use, usefulness, and anxiety. PU and PEOU emerged as the most influential variables, underscoring their centrality in AI tool adoption [30]. To assess acceptance of Industry 4.0 technologies among technical students, Castillo-Vergara et al. developed a TAM-based model. Their study, involving 326 rural students, focused on technologies including big data, artificial intelligence, 3D simulation, augmented reality, cybersecurity, collaborative robots, and additive manufacturing. Results showed that PU and PEOU significantly impacted students’ technology acceptance [31]. Madni et al. conducted a meta-analysis across various databases (Scopus, Web of Science, IEEE Xplore, and Google Scholar) covering 2016 to 2021, focusing on e-learning adoption in higher education through Internet of Things (IoT) technologies. Their analysis revealed that adoption factors extended beyond PU and PEOU to include privacy, infrastructure readiness, financial constraints, faculty support, network and data security, and interaction quality. 
The study emphasised the role of individual, technological, organisational, and cultural factors in technology adoption [32]. Sánchez-Prieto et al. applied TAM to understand student perspectives on AI-based assessments. By developing a 30-item Likert-scale questionnaire based on earlier TAM literature, they identified PU, PEOU, behavioural intention, attitude toward use, and actual use as central elements in adoption decisions [22].

In Indonesia, Helmiatin et al. surveyed 348 lecturers and 39 administrators in public universities to examine AI acceptance using SEM. Findings indicated that effort expectancy and performance expectancy positively influenced AI adoption, while perceived risk had a negative effect. Facilitating conditions were found to positively affect effort expectancy, although they had a negative influence on behavioural intention. Users’ attitudes and behavioural intentions were also confirmed as key determinants [23]. Yazdanpanahi et al. explored teleorthodontic technology acceptance among 300 orthodontists in Iran. Using TAM3, they identified determinants such as job relevance, output quality, subjective norms, demonstrability, and PU as critical to technology acceptance. The relationship between PEOU and behavioural intention was also statistically significant [33]. Faqih and Jaradat assessed mobile commerce adoption in Jordan by applying TAM3 and examining moderating effects of gender and individualism–collectivism. Based on 425 responses from students at 14 private universities, they confirmed that both PU and PEOU were strong predictors of adoption [34]. Ma and Lei extended TAM by incorporating artificial intelligence literacy, subjective norms, and output quality to assess willingness among teacher education students in China to adopt AI tools. Their SEM-based analysis of 359 samples revealed that PU and AI literacy were the most influential factors on behavioural intention [35]. In the context of mobile learning (M-learning), Qashou surveyed 402 university students in Palestine to examine TAM-based adoption patterns. Multiple regression, Pearson correlation, and SEM results confirmed PU and PEOU as the primary adoption drivers [36]. Li et al. studied student adoption of intelligent systems in physical education using an expanded TAM framework. 
Analysing 502 responses, they identified six critical variables: self-efficacy, subjective norms, technological complexity, facilitating conditions, knowledge acquisition, and knowledge sharing. Self-efficacy, PU, attitude toward use (ATU), and knowledge sharing were particularly influential in shaping students’ behavioural intention [37]. Finally, Dewi and Budiman evaluated the e-Rapor system in Indonesian middle schools using TAM3 and the DeLone and McLean model. Surveying 93 teachers, they found PU and PEOU significantly impacted system adoption. Additional variables such as self-efficacy, information quality, and perceived enjoyment were also influential, especially in shaping PEOU [38].

2.4. Faculty and Institutional Perspectives on AI Adoption

Faculty and institutional perspectives play a critical role in shaping the broader ecosystem for adoption of AI in education. Kavitha and Joshith examined how higher education instructors in India perceive the use of AI tools in pedagogical and professional settings. Using the TAM framework, their study surveyed 400 respondents ranging from assistant professors to full professors. The data were analysed using CB-SEM, and nine of the fifteen hypothesised relationships were supported. The findings suggest that successful AI integration in higher education hinges on adequate training and institutional support to foster user confidence and acceptance [39]. Moon and Kim explored elementary school teachers’ attitudes toward ChatGPT by examining balanced profiles in terms of gender and years of teaching experience. To eliminate bias, the term “System” was used instead of “ChatGPT” in the survey. TAM3 was employed to assess nine variables aligned with the study objectives. The results revealed that teachers’ perceptions varied based on their teaching domain, instructional language, and experience, suggesting that context-specific factors significantly influence AI acceptance in the classroom [27]. In the domain of telehealth education, Yazdanpanahi et al. conducted a study on Iranian orthodontists to assess their acceptance of teleorthodontic technologies. Employing TAM3 and collecting responses from 300 members of the Iranian Orthodontic Association, the study found that factors such as job relevance, quality of output, demonstrability, and subjective norms were significant predictors of AI adoption. The results also established a strong relationship between PEOU, PU, and behavioural intention, underscoring the practical applicability of TAM3 in specialised medical education contexts [33]. Helmiatin et al. further extended the investigation into institutional dynamics by surveying 348 lecturers and 39 administrative staff at public universities in Indonesia. 
Using PLS-SEM for analysis, their study demonstrated that effort expectancy and performance expectancy had a positive influence on AI adoption, while perceived risk negatively affected acceptance. Facilitating conditions were positively linked to effort expectancy but had a counterintuitive negative effect on behavioural intention. Nevertheless, user attitude and behavioural intention remained key drivers of AI integration across the academic institution [23].

Together, these studies emphasise that while student-focused TAM research is abundant, understanding institutional and faculty attitudes, particularly through validated models such as TAM3, adds a critical layer to AI adoption frameworks in education. Insights from educators, administrators, and subject matter professionals provide not only technical and pedagogical validation but also inform policy decisions for scalable and sustainable AI use.

2.5. Thematic Synthesis and Observed Gaps

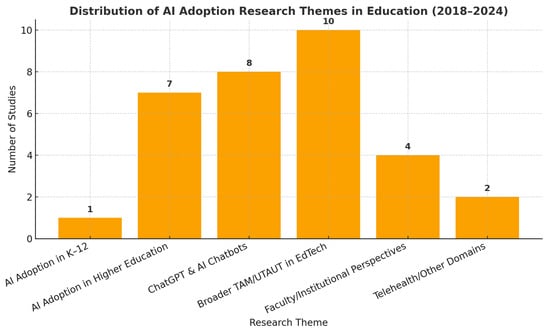

A thematic synthesis of existing studies on AI adoption in education reveals clear patterns and gaps. As shown in Figure 1 and Table 1, the majority of empirical research focuses on TAM-based applications in higher education and conversational AI tools such as ChatGPT. These studies frequently adopt established frameworks such as TAM, TAM3, and UTAUT to evaluate student adoption, system usability, and learning satisfaction.

Figure 1.

Thematic Distribution of Studies Included in the Literature Review: Distribution of AI adoption research themes in education (2018–2024). The figure presents the number of studies grouped by thematic focus: AI adoption in K–12 (n = 1), higher education (n = 7), ChatGPT and AI chatbots (n = 8), broader TAM/UTAUT applications in EdTech (n = 10), faculty/institutional perspectives (n = 4), and telehealth/other domains (n = 2). This distribution highlights the strong emphasis on higher education and EdTech contexts, with limited research in K–12 and culturally specific domains, underscoring existing gaps in the literature.

Table 1.

Summary of Reviewed Studies on AI Adoption in Education (2018–2024). The table lists first author, study objective, and theoretical framework. Reviewed studies cover a wide range of contexts including K–12, higher education, faculty perspectives, and conversational AI (e.g., ChatGPT, chatbots).

Table 1 (see Appendix A for details) presents a consolidated overview of these reviewed studies, detailing their objectives, participant demographics, methodological designs, and theoretical foundations. The studies span a range of domains, including K–12, higher education, faculty perspectives, conversational AI, and specialised areas such as teleorthodontics and physical education. Despite this diversity, the table highlights the underrepresentation of research in K–12 settings, gender-specific adoption behaviour, bilingual learning environments, and cross-cultural comparisons. The concentration on university students and generalised TAM constructs suggests a lack of contextual depth in addressing educational equity and localised adoption challenges.

Key conclusions from the synthesis include:

- PU and PEOU consistently emerge as the strongest predictors of technology acceptance across the reviewed contexts.

- Constructs such as trust, ethics, and sociocultural variables (e.g., gender, language, institutional support) are becoming more prominent, indicating a shift toward more nuanced models of technology adoption.

- Studies on AI chatbots and generative AI reveal mixed cognitive outcomes, suggesting that while these tools may enhance engagement and access, they require carefully designed instructional scaffolding to avoid superficial learning.

This synthesis not only confirms the robustness of TAM-based models in EdTech but also emphasises the need for targeted research in culturally diverse, underrepresented, and pedagogically complex settings.

2.6. Research Objectives and Questions

2.6.1. Research Objectives

The objectives of this research are threefold. First, it aims to empirically assess student acceptance and behavioural usage of the PyChatAI tool within the framework of the Technology Acceptance Model 3 (TAM3). Second, the study seeks to determine the predictive power of key factors such as perceived usefulness, perceived ease of use, and other TAM3 antecedents, including trust and hedonic motivation, on students’ behavioural intention and actual usage of the tool. Finally, the research intends to evaluate the effectiveness of a bilingual AI learning assistant in a gender-specific, culturally sensitive educational setting, employing a controlled experimental design to ensure robust and reliable findings.

2.6.2. Research Questions

This study is guided by the following research questions:

- RQ1: Which TAM3 constructs significantly influence the intention of female computer science students to use PyChatAI?

- RQ2: How do behavioural intention and perceived usefulness predict the actual usage of the chatbot in programming education?

- RQ3: What are the indirect (mediated) effects through PEOU and PU by which antecedents such as trust and facilitating conditions influence behavioural intention and actual usage?

- RQ4: Does the use of a bilingual AI assistant enhance user perceptions of both usability and educational value?

2.6.3. Research Hypotheses

Drawing on the TAM3 framework, this study proposes the following hypotheses concerning female computer science students’ acceptance and use of PyChatAI:

H1. Social Influence (SI) positively affects Perceived Ease of Use (PEOU).

H2. Perceived Value (PV) positively affects PEOU.

H3. Cognitive Factors (CF) positively affect PEOU.

H4. Facilitating Conditions (FC) positively affect PEOU.

H5. Hedonic Motivation (HM) positively affects PEOU.

H6. Trust (TR) positively affects PEOU.

H7. PEOU positively affects Perceived Usefulness (PU).

H8. SI positively affects PU.

H9. PV positively affects PU.

H10. PU positively affects Intention to Use (IU).

H11. SI positively affects IU.

H12. PEOU positively affects IU.

H13. IU positively affects Actual Usage Behaviour (AUB).
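The hypothesised structure can be read as a directed graph, which makes the mediated routes relevant to RQ3 explicit. The following Python sketch is illustrative only: the edge list is transcribed from H1 to H13, the three coefficients are the standardised estimates reported in the abstract, and the names `HYPOTHESES`, `paths_to`, and `indirect_effect` are hypothetical. It applies the standard product-of-coefficients rule for an indirect effect; the study's own mediation estimates may have been obtained differently (for example, by bootstrapping).

```python
# Hypothesised paths H1-H13, transcribed from the list above as (source, target).
HYPOTHESES = {
    "H1": ("SI", "PEOU"), "H2": ("PV", "PEOU"), "H3": ("CF", "PEOU"),
    "H4": ("FC", "PEOU"), "H5": ("HM", "PEOU"), "H6": ("TR", "PEOU"),
    "H7": ("PEOU", "PU"), "H8": ("SI", "PU"), "H9": ("PV", "PU"),
    "H10": ("PU", "IU"), "H11": ("SI", "IU"), "H12": ("PEOU", "IU"),
    "H13": ("IU", "AUB"),
}

# Standardised coefficients reported in the abstract; other paths are left unknown.
REPORTED_BETAS = {("PU", "IU"): 0.446, ("PEOU", "IU"): 0.243, ("IU", "AUB"): 0.406}


def paths_to(source, target, edges):
    """Return every directed path from source to target (the graph is acyclic)."""
    if source == target:
        return [[target]]
    found = []
    for start, end in edges:
        if start == source:
            for tail in paths_to(end, target, edges):
                found.append([source] + tail)
    return found


def indirect_effect(path, betas):
    """Product of coefficients along a path; None if any coefficient is unknown."""
    effect = 1.0
    for edge in zip(path, path[1:]):
        if edge not in betas:
            return None
        effect *= betas[edge]
    return effect


edges = list(HYPOTHESES.values())
trust_paths = paths_to("TR", "AUB", edges)
pu_via_iu = indirect_effect(["PU", "IU", "AUB"], REPORTED_BETAS)
peou_via_iu = indirect_effect(["PEOU", "IU", "AUB"], REPORTED_BETAS)

for path in trust_paths:
    print(" -> ".join(path))
print(f"PU -> IU -> AUB indirect effect: {pu_via_iu:.3f}")
print(f"PEOU -> IU -> AUB indirect effect: {peou_via_iu:.3f}")
```

Under these assumptions, trust reaches actual usage only through PEOU, by two routes (one via PU, one directly via IU). The PEOU to IU to AUB figure covers only the second route, because the coefficient for H7 is not reported in this section.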

3. PyChatAI Learning Tool: Design and Features

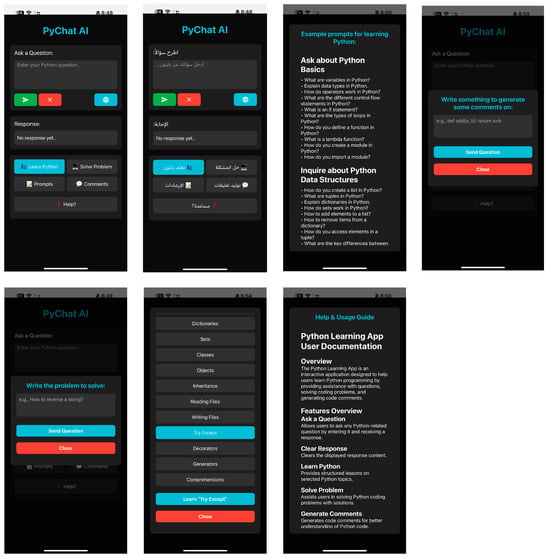

3.1. Overview of PyChatAI

PyChatAI (see Figure 2) is an interactive, bilingual mobile application designed to support Python programming education through conversational learning. It provides students with real-time, AI-driven assistance across a range of tasks, including question-answering, code commenting, and problem-solving. The system supports both Arabic and English languages and offers an intuitive dark-themed user interface enhanced by a typewriter-style animation to simulate human-like interaction. PyChatAI was developed with the objective of reducing programming anxiety, scaffolding problem-solving skills, and promoting self-paced learning. It aligns with the constructivist view of education by enabling learners to engage directly with coding content through active exploration, guided support, and immediate feedback.

Figure 2.

The core functional screens of the PyChatAI app (Version 1) [1].

3.2. Functional Components and Features

Each feature in PyChatAI serves a specific instructional and usability function aligned with TAM3 constructs.

- Ask a Question

  - Functionality: Users can pose Python-related queries and receive AI-generated responses with animated delivery.

  - Use Case: Ideal for asking about syntax rules, logic flows, or specific programming functions.

  - Pedagogical Role: Facilitates self-directed learning and reinforces understanding through direct AI interaction.

- Clear Response

  - Functionality: Resets the conversation interface.

  - Use Case: Allows students to begin a new query session without distraction.

  - Pedagogical Role: Supports cognitive reset and iterative questioning, minimising overload.

- Learn Python

  - Functionality: Offers structured lessons categorised by topics.

  - Use Case: Students choose modules such as loops, inheritance, or file handling to receive explanations and mini exercises.

  - Pedagogical Role: Delivers microlearning experiences for just-in-time concept reinforcement.

- Solve a Problem

  - Functionality: Accepts problem descriptions and outputs guided solutions.

  - Use Case: Prompts such as “reverse a string” are broken into logic steps with corresponding Python code.

  - Pedagogical Role: Cultivates computational thinking and debugging skills through modelled reasoning.

- Generate Comments

  - Functionality: Analyses submitted code and returns annotated lines.

  - Use Case: Suitable for reinforcing understanding of code structure and logic.

  - Pedagogical Role: Enhances code readability and promotes reflection through automated explanation.

- Prompts

  - Functionality: Offers categorised example prompts.

  - Use Case: Helps users formulate better queries by referencing model examples grouped by topic (e.g., data structures, OOP).

  - Pedagogical Role: Scaffolds effective engagement and supports inquiry-based learning.

- Help

  - Functionality: Provides access to usage guidelines in a help module.

  - Use Case: Users can review functional descriptions and interaction tips.

  - Pedagogical Role: Reduces cognitive load and enhances PEOU by supporting autonomy and system familiarity.

3.3. Educational Alignment

PyChatAI is grounded in the principles of TAM3, with features that directly map to key constructs:

- PEOU: Simple interface, bilingual support, structured prompts, and clear guidance reduce interaction friction.

- PU: Real-time answers, task-based modules, and annotated code outputs enhance learning efficiency and effectiveness.

- Trust and Self-Efficacy: Clear explanations and consistent AI responses build confidence, especially among novice learners.

- Facilitating Conditions: Mobile accessibility, offline guidance, and multi-language support remove common adoption barriers.

4. Research Methodology

4.1. Study Design

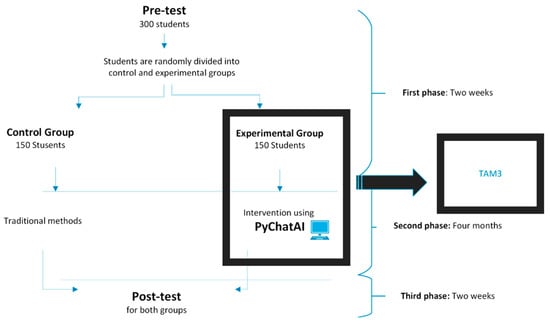

This study employed a Solomon Four-Group Design, a rigorous experimental approach designed to control pre-test sensitisation effects and to isolate the true causal impact of the educational intervention, in this case the use of PyChatAI. The design compares learning outcomes across groups that differ in both exposure to a pre-test and participation in the AI-driven intervention. This methodological structure enhances internal validity and supports causal inference regarding the effects of PyChatAI [9].

4.1.1. Participant Distribution and Group Configuration

In this study, randomisation was conducted using a computerised random number generator to ensure equal probability of selection and minimise bias. To establish group comparability at baseline, an independent-samples t-test was performed on the pre-test scores of the two pre-tested groups (G1 and G2) across all programming skill dimensions (theoretical understanding, code writing, software design, problem-solving and analysis, and software testing/debugging). Results showed no statistically significant differences between these groups (all p-values > 0.05), indicating that they were statistically equivalent prior to the intervention.
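As a minimal sketch of the baseline check described above, the following Python code computes a pooled-variance (Student) independent-samples t statistic using only the standard library. The scores are synthetic placeholders, not study data, and the function name `pooled_t` is hypothetical; the statistical software and exact test variant used by the authors are not stated in this section.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(sample_a, sample_b):
    """Student's independent-samples t statistic and its degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / standard_error, df


# Synthetic pre-test scores for two groups (illustrative values only).
group_one = [1, 2, 3, 4, 5]
group_two = [2, 3, 4, 5, 6]

t_stat, df = pooled_t(group_one, group_two)
print(f"t({df}) = {t_stat:.3f}")
```

Converting the statistic to a p-value requires the t distribution, which the standard library does not provide; a routine such as `scipy.stats.ttest_ind` returns both values directly. In the study, the check would be repeated for each of the five skill dimensions.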

A total of 300 students were randomly assigned into four equal groups (n = 75 per group). As shown in Table 2, two groups received a pre-test, and two did not. Two groups used PyChatAI as an instructional supplement, while the other two followed traditional learning methods.

Table 2.

Research design showing the distribution of 300 participants across four groups (n = 75 each). Groups G1 and G3 used PyChatAI (experimental), while G2 and G4 received traditional instruction (control). G1 and G2 completed a pre-test to measure baseline knowledge, while G3 and G4 did not. Post-test results were used to isolate the effects of both pre-testing and the AI intervention.
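The allocation summarised in Table 2 can be illustrated with a short Python sketch. The seed, the participant identifiers, and the function name `assign_solomon_groups` are assumptions made for illustration; the study states only that a computerised random number generator was used.

```python
import random

# Group conditions as described in Table 2: (pre-test given, PyChatAI used).
CONDITIONS = {
    "G1": {"pre_test": True, "pychatai": True},
    "G2": {"pre_test": True, "pychatai": False},
    "G3": {"pre_test": False, "pychatai": True},
    "G4": {"pre_test": False, "pychatai": False},
}


def assign_solomon_groups(participant_ids, seed=None):
    """Shuffle participants and split them into four equal-sized groups."""
    ids = list(participant_ids)
    if len(ids) % len(CONDITIONS) != 0:
        raise ValueError("participants must divide evenly into four groups")
    random.Random(seed).shuffle(ids)
    size = len(ids) // len(CONDITIONS)
    return {
        group: ids[i * size:(i + 1) * size]
        for i, group in enumerate(CONDITIONS)
    }


# Hypothetical identifiers 1..300; the seed is arbitrary and only fixes the example.
groups = assign_solomon_groups(range(1, 301), seed=2024)
for name, members in groups.items():
    flags = CONDITIONS[name]
    print(name, len(members), flags["pre_test"], flags["pychatai"])
```

Shuffling the full list once and slicing it guarantees equal group sizes (n = 75) while giving every participant the same chance of landing in any group.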

4.1.2. Phase Timeline and Workflow

Figure 3 illustrates the research phases and flow of participants through the experimental process.

- Phase 1—Pre-Test (2 Weeks):

Students in Groups G1 and G2 completed a baseline programming test to measure existing knowledge. This phase allows for assessment of pre-intervention equivalence and analysis of pre-test effects.

- Phase 2—Intervention (4 Months):

Students in Groups G1 and G3 engaged with PyChatAI, an AI-based educational chatbot designed to support programming instruction. The other two groups (G2 and G4) followed traditional instructional methods. The use of PyChatAI was aligned with the TAM3 framework [50], with student interaction and feedback captured for further SEM analysis.

- Phase 3—Post-Test (2 Weeks):

All four groups completed a standardised outcome test to evaluate learning gains and compare performance based on group condition (intervention vs. control; pre-tested vs. not pre-tested).

Figure 3.

Timeline of the study phases (pre-test, intervention, and post-test) and participant allocation under the Solomon Four-Group design. This flow highlights how intervention and control groups progressed, ensuring control for pre-test sensitisation and enabling fair comparison of learning outcomes.

4.1.3. TAM3 Integration and Measurement Mapping

The TAM3 framework was applied to Groups G1 and G3, the two cohorts that engaged with PyChatAI. These groups provided data on key TAM3 constructs, including perceived ease of use (PEOU), perceived usefulness (PU), social influence (SI), trust (TR), hedonic motivation (HM), behavioural intention to use (IU), and actual usage behaviour (AUB), which were subsequently modelled using structural equation modelling (SEM). In contrast, the control groups (G2 and G4) served as benchmarks to assess the impact of pre-testing on learning outcomes and to evaluate the comparative efficacy of PyChatAI against traditional instructional methods. AUB was operationalised through three self-report survey items (Q28–Q30). These items asked participants to indicate whether they (a) used PyChatAI regularly for studying and completing assignments, (b) used PyChatAI to review before exams, and (c) relied on PyChatAI to learn new programming techniques and concepts. Responses were collected on a 5-point Likert scale (1 = strongly disagree to 5 = strongly agree). This operationalisation ensured that AUB was quantified in a transparent and replicable manner.

4.1.4. Clarified Summary

The study followed a structured sequence across the four groups. Groups G1 and G2 first completed a pre-test to establish baseline knowledge. The intervention phase involved Groups G1 and G3 using PyChatAI over a four-month period. Following the intervention, all participants (G1–G4) completed the same post-test to assess learning outcomes. Additionally, the TAM3 framework was applied to Groups G1 and G3 to evaluate AI acceptance and usage behaviour, using validated measurement constructs.

4.1.5. Justification for Design

This comprehensive research design supports causal inference by controlling for potential confounding variables, such as pre-test reactivity, and ensures the validity of learning outcome comparisons through rigorous post-test administration across all groups. It also enables robust structural equation modelling (SEM) by aligning TAM3 constructs with AI-tool usage data from the experimental cohorts. By integrating the TAM3 framework in a Solomon Four-Group design, the study achieves both methodological rigour and theoretical depth, providing nuanced insights into student engagement with educational AI technologies.
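To make the logic of the design concrete, the sketch below computes the three standard 2 × 2 contrasts from post-test cell means. The numeric means are hypothetical placeholders and not results from this study, and the function name `solomon_contrasts` is introduced purely for illustration.

```python
def solomon_contrasts(post_means):
    """Return 2x2 factorial contrasts from post-test means keyed G1..G4.

    G1 = pre-test + PyChatAI, G2 = pre-test + traditional,
    G3 = no pre-test + PyChatAI, G4 = no pre-test + traditional.
    """
    g1, g2, g3, g4 = (post_means[g] for g in ("G1", "G2", "G3", "G4"))
    return {
        # Intervention effect, averaged over pre-tested and non-pre-tested groups.
        "intervention": ((g1 + g3) - (g2 + g4)) / 2,
        # Pre-test effect, averaged over intervention and control groups.
        "pre_test": ((g1 + g2) - (g3 + g4)) / 2,
        # Sensitisation: does the intervention effect differ when a pre-test was taken?
        "interaction": (g1 - g2) - (g3 - g4),
    }

# Hypothetical post-test means, NOT data from the study.
example = solomon_contrasts({"G1": 80.0, "G2": 70.0, "G3": 78.0, "G4": 69.0})
print(example)
```

An interaction contrast near zero would suggest that pre-testing did not sensitise participants to the intervention, which is the condition under which the intervention contrast can be read as a clean treatment effect.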

4.2. Measurement Model Analysis

This section outlines the steps taken to validate the measurement model employed in this study. A comprehensive set of analyses was conducted to evaluate common method bias, model fit, construct reliability, convergent validity, and discriminant validity using SPSS 30.0 and AMOS 30.0. Prior to the analysis, the dataset was screened and found to be complete, with no missing values, ensuring the integrity of the statistical analysis.

4.2.1. Common Method Bias

To assess the presence of common method bias (CMB), Harman’s single-factor test was conducted using Principal Axis Factoring in SPSS, following established methodological guidance [51,52]. This approach evaluates whether a single factor accounts for the majority of variance in the data, which would suggest a risk of method variance. The unrotated factor solution revealed that the first factor explained only 19.561% of the total variance, well below the accepted threshold of 50%. The initial eigenvalue for this factor was 6.515, and nine factors had eigenvalues exceeding 1, indicating a multi-factorial structure rather than dominance by a single factor. These results indicate that common method bias is not a significant concern in this dataset and that the measurement responses are unlikely to be inflated by systematic error.

4.2.2. Measurement Model Evaluation

The measurement model was tested through Confirmatory Factor Analysis (CFA) using AMOS to assess the structural soundness of the model and ensure that the observed variables appropriately reflect the underlying latent constructs. This evaluation included tests for overall model fit, construct reliability, convergent validity, and discriminant validity.

4.2.3. Model Fit

Multiple fit indices were used to evaluate the measurement model, in accordance with standards proposed by Hu and Bentler [53], Jöreskog and Sörbom [54], Barrett [55], and Fornell and Larcker [56]. The results confirmed that the model fits the data exceptionally well:

- Chi-square to degrees of freedom ratio (CMIN/DF) = 1.231 (threshold < 3),

- Comparative Fit Index (CFI) = 0.980 (threshold > 0.95),

- Standardised Root Mean Square Residual (SRMR) = 0.061 (threshold < 0.08),

- Root Mean Square Error of Approximation (RMSEA) = 0.039 (threshold < 0.06),

- PClose = 0.934 (threshold > 0.05).

These statistics provide robust evidence of excellent model fit.
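The comparison of each index against its cut-off can be expressed as a short check. The values and thresholds below are those reported in this section; the dictionary layout and the helper name `check_fit` are assumptions made for illustration.

```python
import operator

# (reported value, comparison, threshold) as listed in Section 4.2.3.
FIT_INDICES = {
    "CMIN/DF": (1.231, operator.lt, 3.0),
    "CFI":     (0.980, operator.gt, 0.95),
    "SRMR":    (0.061, operator.lt, 0.08),
    "RMSEA":   (0.039, operator.lt, 0.06),
    "PClose":  (0.934, operator.gt, 0.05),
}

def check_fit(indices):
    """Map each index name to True when its value satisfies the threshold."""
    return {
        name: compare(value, cutoff)
        for name, (value, compare, cutoff) in indices.items()
    }

fit_report = check_fit(FIT_INDICES)
for name, passed in fit_report.items():
    print(f"{name}: {'meets' if passed else 'fails'} threshold")
```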

4.2.4. Construct Reliability

Construct reliability was evaluated using Cronbach’s Alpha and Composite Reliability (CR), based on criteria from Hair et al. [57,58]. Values above 0.70 suggest acceptable reliability. As shown in Table 3, Cronbach’s alpha values ranged from 0.891 to 0.975, and CR values from 0.895 to 0.975, indicating that all constructs demonstrate strong internal consistency.

Table 3.

Reliability and convergent validity of TAM3 constructs. The table reports item loadings, Cronbach’s Alpha, Composite Reliability (CR), and Average Variance Extracted (AVE) for each construct. All factor loadings exceeded 0.70, Cronbach’s Alpha and CR values exceeded 0.70, and AVE values were above 0.50, confirming internal consistency and convergent validity of the measurement model.

4.2.5. Convergent Validity

Convergent validity was assessed based on three core criteria:

- Standardised factor loadings ≥ 0.70,

- CR ≥ 0.70,

- Average Variance Extracted (AVE) ≥ 0.50 ([56,57]).

As shown in Table 3, standardised loadings ranged from 0.774 to 0.974, and AVE values ranged from 0.740 to 0.928. These values confirm that the items in each construct adequately reflect their intended latent variables.
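For readers unfamiliar with how CR and AVE follow from the loadings, the sketch below applies the usual Fornell-Larcker formulas, assuming standardised loadings and uncorrelated measurement errors. The three loadings are hypothetical and are not taken from Table 3.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    # With standardised loadings, each item's error variance is 1 - loading^2.
    error = sum(1 - loading ** 2 for loading in loadings)
    return total ** 2 / (total ** 2 + error)

def average_variance_extracted(loadings):
    """AVE = mean of the squared standardised loadings."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)

# Hypothetical loadings for a three-item construct, NOT values from Table 3.
hypothetical_loadings = [0.80, 0.85, 0.90]
cr = composite_reliability(hypothetical_loadings)
ave = average_variance_extracted(hypothetical_loadings)
print(f"CR = {cr:.3f}, AVE = {ave:.3f}")
print("convergent validity criteria met:",
      min(hypothetical_loadings) >= 0.70 and cr >= 0.70 and ave >= 0.50)
```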

4.2.6. Discriminant Validity

Discriminant validity examines the extent to which each construct is empirically distinct from others in the model. This was assessed using the Heterotrait-Monotrait Ratio of Correlations (HTMT), an advanced technique that offers greater sensitivity than traditional methods such as the Fornell-Larcker criterion [59,60].

As shown in Table 4, all HTMT values were below the conservative threshold of 0.85, confirming that each construct measures a unique concept. For instance:

- The HTMT value between PU and IU was 0.521,

- Between PU and AUB was 0.401,

- Between TR and IU was 0.351,

- Between TR and AUB was 0.255.

Low values such as 0.008 between Facilitating Conditions (FC) and Perceived Value (PV), or 0.000 between SI and Cognitive Factors (CF), indicate minimal conceptual overlap. These results reinforce the empirical distinctiveness of the constructs used to evaluate user experiences with PyChatAI.
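As a rough illustration of what the HTMT ratio measures, the sketch below computes it for two constructs from an item correlation matrix: the mean between-construct item correlation divided by the geometric mean of the average within-construct item correlations. The 4 × 4 matrix is hypothetical, the use of absolute correlations is an assumption of this sketch, and the code is not the procedure used to produce Table 4.

```python
from itertools import combinations
from math import sqrt
from statistics import mean

def htmt(corr, items_a, items_b):
    """HTMT ratio for two constructs given item indices into `corr`."""
    # Heterotrait-heteromethod: correlations between items of different constructs.
    hetero = mean(abs(corr[i][j]) for i in items_a for j in items_b)
    # Monotrait-heteromethod: correlations among items of the same construct.
    mono_a = mean(abs(corr[i][j]) for i, j in combinations(items_a, 2))
    mono_b = mean(abs(corr[i][j]) for i, j in combinations(items_b, 2))
    return hetero / sqrt(mono_a * mono_b)

# Hypothetical item correlations: items 0-1 form construct A, items 2-3 construct B.
corr = [
    [1.0, 0.8, 0.2, 0.2],
    [0.8, 1.0, 0.2, 0.2],
    [0.2, 0.2, 1.0, 0.5],
    [0.2, 0.2, 0.5, 1.0],
]
ratio = htmt(corr, items_a=[0, 1], items_b=[2, 3])
print(f"HTMT = {ratio:.3f}, below 0.85: {ratio < 0.85}")
```

Each construct needs at least two items for the within-construct average to be defined.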

Table 4.

Discriminant Validity (HTMT Criterion).

| | PU | FC | PV | PEOU | HM | CF | SI | TR | IU | AUB |
|---|---|---|---|---|---|---|---|---|---|---|
| PU | – | | | | | | | | | |
| FC | 0.076 | – | | | | | | | | |
| PV | 0.120 | 0.008 | – | | | | | | | |
| PEOU | 0.352 | 0.313 | 0.002 | – | | | | | | |
| HM | 0.204 | 0.012 | 0.048 | 0.171 | – | | | | | |
| CF | 0.001 | 0.081 | 0.098 | 0.028 | 0.052 | – | | | | |
| SI | 0.494 | 0.088 | 0.141 | 0.122 | 0.046 | 0.000 | – | | | |
| TR | 0.249 | 0.033 | 0.077 | 0.428 | 0.070 | 0.018 | 0.048 | – | | |
| IU | 0.521 | 0.187 | 0.113 | 0.441 | 0.039 | 0.061 | 0.081 | 0.351 | – | |
| AUB | 0.401 | 0.025 | 0.028 | 0.374 | 0.220 | 0.037 | 0.083 | 0.255 | 0.453 | – |

5. Structural Model Assessment

This section presents the empirical findings derived from the SEM analysis. With the measurement model validated, the structural model assessment tests the hypothesised relationships among the latent variables. The results include both direct effects (hypothesis testing for H1 to H13) and indirect effects (mediation analysis), providing insight into how key TAM3 constructs such as trust, perceived usefulness, and behavioural intention interact to influence the actual usage of the PyChatAI tool. The significance of these paths is evaluated using standardised coefficients, p-values, and bootstrapped confidence intervals.

5.1. Structural Model Results (Direct Effects)

To validate the hypothesised relationships, the structural model was analysed using SEM in AMOS, with the results summarising the strength and significance of direct paths among TAM3 variables, behavioural intention, and actual usage of the PyChatAI tool (Table 5). Perceived ease of use (PEOU) was significantly influenced by facilitating conditions (β = 0.225, p < 0.001), hedonic motivation (β = 0.142, p = 0.004), and trust (β = 0.354, p < 0.001), indicating that technical support, enjoyment, and system trust enhance users’ perceptions of ease of use. In contrast, social influence (β = −0.064, p = 0.137), perceived value (β = −0.032, p = 0.568), and cognitive factors (β = −0.026, p = 0.521) had no significant effect on PEOU, suggesting they do not meaningfully shape perceived ease. Perceived usefulness (PU) was significantly affected by PEOU (β = 0.467, p < 0.001), social influence (β = 0.369, p < 0.001), and perceived value (β = 0.165, p = 0.004), demonstrating that users who find the system easy to use, socially supported, and valuable are more likely to perceive it as useful. Behavioural intention to use (IU) was strongly predicted by PU (β = 0.446, p < 0.001) and PEOU (β = 0.243, p = 0.005), but not by social influence (β = −0.087, p = 0.106), indicating that usability and usefulness are stronger drivers of intention than peer influence. Finally, IU (β = 0.406, p < 0.001) significantly predicted the actual usage behaviour (AUB), confirming that intention effectively translates into actual usage. Overall, out of the 13 hypothesised direct paths, 10 were supported, with usability (PEOU), motivational factors (HM), trust (TR), and behavioural intention (IU) emerging as key predictors of AI tool adoption and sustained use.

Table 5.

Results of structural equation modelling (SEM) testing the hypothesised direct paths (H1–H13) among TAM3 constructs in relation to PyChatAI adoption. Of the 13 hypotheses, 10 were supported. Perceived ease of use (PEOU) was significantly predicted by facilitating conditions (β = 0.225, p < 0.001), hedonic motivation (β = 0.142, p = 0.004), and trust (β = 0.354, p < 0.001), while social influence (SI), perceived value (PV), and cognitive factors (CF) had no significant effects. Perceived usefulness (PU) was significantly influenced by PEOU (β = 0.467, p < 0.001), SI (β = 0.369, p < 0.001), and PV (β = 0.165, p = 0.004). Behavioural intention to use (IU) was strongly predicted by PU (β = 0.446, p < 0.001) and PEOU (β = 0.243, p = 0.005), but not by SI (β = –0.087, p = 0.106). Actual usage behaviour (AUB) was significantly determined by IU (β = 0.406, p < 0.001). These results highlight the central roles of trust, facilitating conditions, hedonic motivation, perceived usefulness, and perceived ease of use in driving behavioural intention and, ultimately, actual adoption of PyChatAI.

5.2. Indirect Effects/Mediation Analysis

To evaluate the indirect effects among the TAM3 constructs, a bootstrapping approach was applied using 5000 resamples with bias-corrected confidence intervals in AMOS. The results, summarised in Table 5, include standardised path estimates, confidence intervals, and p-values, helping identify both statistically significant and non-significant mediating paths.

5.2.1. Key Mediation Paths

Trust (TR) exhibited one of the most prominent sequential mediation effects, operating through the full TAM3 chain, TR → PEOU → PU → IU → AUB (β = 0.030, p < 0.001), indicating that trust indirectly shapes actual usage behaviour by enhancing perceived ease of use, perceived usefulness, and behavioural intention. Facilitating conditions (FC) also showed strong indirect effects on perceived usefulness (β = 0.115, p < 0.001), intention to use (β = 0.068, p = 0.007), and actual usage behaviour (β = 0.068, p = 0.004). Similarly, hedonic motivation (HM) contributed significantly through indirect pathways, influencing perceived usefulness (β = 0.090, p = 0.003), intention to use (β = 0.053, p = 0.005), and actual usage behaviour (β = 0.053, p = 0.003). Collectively, these results suggest that enjoyment in using PyChatAI, along with adequate support and trust in the system, exerts a positive cascading influence on user perceptions and subsequent behaviour.

5.2.2. Non-Significant Mediation Paths

Certain constructs did not demonstrate meaningful indirect effects in the model. The pathway from social influence (SI) through perceived ease of use (PEOU), perceived usefulness (PU), and intention to use (IU) to actual usage behaviour (AUB) was non-significant (β = −0.005, p = 0.061). Similarly, the pathway from cognitive factors (CF) to PEOU and PU lacked significance (β = −0.020, p = 0.549). These results suggest that neither peer encouragement nor mental effort alone is sufficient to drive actual usage unless these influences are channelled through core system perceptions such as ease of use and perceived usefulness.

5.2.3. Additional Supporting Paths

The analysis revealed that the indirect pathway from perceived ease of use (PEOU) to perceived usefulness (PU), then to intention to use (IU), and finally to actual usage behaviour (AUB), was statistically significant (β = 0.085, p < 0.001), confirming ease of use as a foundational construct that influences behaviour through both perceived usefulness and behavioural intention. Similarly, the pathway from PU to IU and subsequently to AUB (β = 0.181, p < 0.001) reinforces perceived usefulness as a critical determinant of behavioural intention and eventual usage. Overall, the findings indicate that trust, facilitating conditions, and hedonic motivation drive actual usage through both direct and indirect mechanisms involving PEOU and PU, while social influence, perceived value, and cognitive factors exert an effect only when mediated through these core TAM3 constructs. This layered mediation pattern underscores the robustness of the model in explaining user behaviour in AI-supported learning environments such as PyChatAI.
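The sequential indirect effects reported in this section are consistent with the product of the standardised direct paths along each chain. The sketch below illustrates this under the assumption that the point estimate of a single mediation chain is the product of its path coefficients; it uses the direct-effect coefficients from Section 5.1 and does not reproduce the bootstrapped confidence intervals or p-values. The names `DIRECT` and `indirect_effect` are introduced for illustration.

```python
from math import prod

# Standardised direct paths reported in Section 5.1.
DIRECT = {
    ("TR", "PEOU"): 0.354,
    ("SI", "PEOU"): -0.064,
    ("PEOU", "PU"): 0.467,
    ("PU", "IU"): 0.446,
    ("IU", "AUB"): 0.406,
}

def indirect_effect(chain, direct=DIRECT):
    """Multiply the direct coefficients along consecutive links of `chain`."""
    return prod(direct[(src, dst)] for src, dst in zip(chain, chain[1:]))

chains = {
    "PU -> IU -> AUB": ("PU", "IU", "AUB"),
    "PEOU -> PU -> IU -> AUB": ("PEOU", "PU", "IU", "AUB"),
    "TR -> PEOU -> PU -> IU -> AUB": ("TR", "PEOU", "PU", "IU", "AUB"),
    "SI -> PEOU -> PU -> IU -> AUB": ("SI", "PEOU", "PU", "IU", "AUB"),
}
for label, chain in chains.items():
    print(f"{label}: {indirect_effect(chain):.3f}")
```

To three decimals these products match the reported estimates for the four chains (0.181, 0.085, 0.030, and −0.005). Total indirect effects that combine several routes, such as those reported for facilitating conditions and hedonic motivation, are not covered by this single-chain calculation.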

6. Discussion

This study examined the adoption and use of PyChatAI, an AI-driven, bilingual chatbot designed for Python programming education, through the lens of the TAM3. Using SEM in a Solomon Four-Group experimental design, both direct and indirect effects were tested, offering a multi-layered understanding of how students engage with AI-powered learning tools. The analysis provides theoretical advancement of TAM3 constructs and actionable recommendations for EdTech developers and educators, particularly in culturally specific, gender-segregated learning contexts.

6.1. Theoretical Implications

The study advances TAM3 by empirically validating the roles of trust, hedonic motivation, and facilitating conditions as significant antecedents of perceived ease of use, which in turn shapes perceived usefulness and behavioural intention. These findings confirm the multi-tiered belief structure of TAM3, offering robust support for its hierarchical and mediational pathways in AI-enhanced education settings.

A particularly novel insight lies in the cascading mediation sequence, from trust to ease of use, then usefulness, intention, and finally actual behaviour, which reinforces the proposition of TAM3 that behavioural outcomes are indirectly governed by a layered progression of cognitive and affective constructs. This “trust → ease → usefulness → intention → behaviour” chain is particularly salient in contexts involving emerging, intelligent technologies where initial user scepticism or lack of familiarity may impede adoption.

Moreover, the relative insignificance of social influence, cognitive readiness, and perceived value, unless mediated through ease and usefulness, underscores the importance of designing systems that are intrinsically rewarding and cognitively effortless, rather than relying solely on external pressures or technical competence.

These theoretical implications align with and extend prior TAM3-based AI studies [33,50], and also offer differentiation: unlike generalised technology adoption, this study highlights how affective trust and system enjoyability function as essential prerequisites in environments where learners may have heightened privacy concerns, language barriers, or limited prior exposure to generative AI. In particular, the bilingual and female-only context of Jouf University provided a unique testbed for TAM3, where language barriers and gender-specific norms shaped trust and ease of use more strongly than in mixed or monolingual settings. This extends TAM3 by showing how sociocultural factors can amplify or attenuate established adoption constructs.

6.2. Practical Implications of Effect Sizes and Statistical Significance

The findings carry strong practical implications for the design and deployment of AI-based educational tools. By highlighting the importance of transparent decision-making, explainable outputs, and consistent system behaviour, the study offers concrete strategies for building trust among novice learners and students in conservative academic settings.

The effect sizes observed in this study underscore the practical weight of key adoption drivers. Perceived usefulness (β = 0.446, p < 0.001) and perceived ease of use (β = 0.243, p = 0.005) emerged as the strongest predictors of behavioural intention, together accounting for a substantial proportion of variance in whether students intended to use the tool. In practical terms, this means that if students perceive the tool as both effective for their learning tasks and straightforward to navigate, they are significantly more likely to adopt it. Moreover, behavioural intention strongly predicted actual usage (β = 0.406, p < 0.001), confirming that intention reliably translates into classroom behaviour.

Equally notable are the indirect effects. Trust (β = 0.354 → PEOU), facilitating conditions (β = 0.225 → PEOU), and hedonic motivation (β = 0.142 → PEOU) exerted meaningful effects not only directly but also through cascading mediation pathways (e.g., trust → ease of use → usefulness → intention → usage). Although the numerical coefficients appear moderate, in educational terms they highlight how seemingly intangible factors such as enjoyment, reliability of support, and perceived credibility can shape sustained engagement. For example, the mediation effect of trust (β = 0.030, p < 0.001) confirms that a trustworthy system does not just build confidence; it indirectly improves usage by making the system feel easier, more useful, and therefore worth adopting.

These results carry direct implications for classroom practice. First, tool design should not rely on peer influence or students’ prior programming ability, as these factors showed no significant effects. Instead, adoption hinges on whether students personally experience ease and usefulness in their interactions. For educators, this suggests that demonstrations, structured first-use experiences, and opportunities for immediate success are more impactful than awareness campaigns or peer-led encouragement.

Second, the moderate-to-strong effect sizes for bilingual and culturally sensitive features suggest that inclusivity is not optional but instrumental to adoption. In a female-only, bilingual setting, students were more likely to engage with the AI assistant when it supported both languages and addressed privacy concerns. In educational terms, this means that system design aligned with students’ sociocultural realities can reduce cognitive and emotional barriers, enabling more equitable access to programming education.

Finally, the relative strength of facilitating conditions highlights the need for robust institutional strategies. The presence of technical support, endorsement from academic leadership, and seamless LMS integration are not peripheral but central to shaping adoption outcomes. For universities, this translates into a clear directive: investment in institutional scaffolding will directly boost students’ ease of use perceptions and indirectly promote sustained adoption.

While statistical significance establishes that the observed effects are unlikely due to chance, effect sizes illustrate their educational and practical weight. For instance, the medium-to-strong effects of perceived usefulness on behavioural intention (β = 0.446) and behavioural intention on actual usage behaviour (β = 0.406) show that these relationships are not only statistically valid but also pedagogically meaningful. In practical terms, this means that when students perceive the system as useful and easy to use, their likelihood of sustained adoption increases substantially, leading to more consistent engagement in programming education. Considering both statistical significance and effect sizes therefore ensures that theoretical findings translate into actionable strategies for educators and institutions.

6.3. Limitations and Future Research Directions

Despite the methodological rigour, including the use of the Solomon Four-Group design to control pre-test reactivity, several limitations should be acknowledged. The study was conducted in a single institutional and cultural context, female computer science students at Jouf University, which constrains the generalisability of the findings across genders, disciplines, and cultural settings. Future research should consider cross-institutional and cross-cultural designs to explore how factors such as gender norms, language proficiency, and prior AI exposure influence TAM3 constructs. Although SEM provided robust validation of behavioural intention and actual usage constructs, the reliance on self-reported data introduces potential recall bias and social desirability effects; integrating log-based behavioural data (e.g., clickstream or usage frequency) could offer objective triangulation and more accurate modelling of actual behaviour. Moreover, while TAM3 is comprehensive, it may not fully capture longitudinal commitment, confirmation expectations, or emotional dissonance experienced by students interacting with AI, suggesting the potential value of incorporating elements from Expectation-Confirmation Theory (ECT), Self-Determination Theory (SDT), or Cognitive Load Theory (CLT). The quantitative SEM design, though statistically strong, provides limited insight into student reasoning, preferences, and anxieties, highlighting the importance of complementary qualitative methods, such as focus groups, think-aloud protocols, or diary studies, to reveal nuanced experiences and misalignments between student expectations and system output. Finally, given the rapid evolution of large language models and AI-powered tutoring tools, theoretical frameworks like TAM3 may struggle to keep pace, underscoring the need for ongoing research to track how user acceptance evolves alongside advances in system capability, transparency, and societal adoption.

7. Conclusions

This study explored the adoption and usage of PyChatAI, a bilingual AI-driven chatbot for Python programming education, among female computer science students in Saudi Arabia. Grounded in TAM3 and implemented through a Solomon Four-Group experimental design (N = 300), the results confirmed that perceived usefulness and ease of use are the strongest predictors of behavioural intention, which in turn drives actual usage. Trust, facilitating conditions, and hedonic motivation further supported ease of use, while other factors had limited or indirect effects.

Overall, the findings highlight the layered mechanisms through which AI adoption occurs in education, with trust emerging as a key driver. However, given the focus of the study on a single cultural and institutional context, the conclusions should be applied cautiously and not overgeneralised beyond similar settings.

Author Contributions

Conceptualisation, M.A., A.L. and B.S.; methodology, M.A. and B.S.; software, M.A.; validation, M.A., B.S. and H.S.; formal analysis, M.A., B.S. and H.S.; investigation, M.A., B.S. and H.S.; resources, M.A., B.S. and A.L.; data curation, M.A. and H.S.; writing—original draft preparation, M.A.; writing—review and editing, B.S., A.L. and H.S.; visualisation, M.A., A.L. and H.S.; supervision, B.S. and A.L.; project administration, M.A., B.S., H.S. and A.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

All experiments in this study were performed in accordance with relevant guidelines and regulations, adhering to the Declaration of Helsinki. The study was approved by the Human Research Ethics Committee at La Trobe University (Project no. HEC23394).

Informed Consent Statement

Informed consent was obtained from all participants in accordance with HEC23394.

Data Availability Statement

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

| First Author | Objective | Participants | Design | Theory |

| Han et al. [20] | Analyse the factors that influence students’ use of AI-based learning systems | 66 students from two middle schools in China | 260 questionnaires | TAM3 |

| Goh et al. [24] | To explore the leveraging of the ChatGPT paradigm in conceptual research | The study used ChatGPT-generated responses for analysis | Two experimental studies where ChatGPT was prompted with scenarios related. | TAM |

| Bouteraa et al. [25] | To determine the role of student integrity in adopting ChatGPT | 41 measurement items based on 921 individuals | Online survey | SCT, UTA, and UTAUT |

| Al-Mamary et al. [21] | To determine students’ intention to adopt LMS | 228 students at the University of Hail | Internet questionnaire | TAM |

| Kleine et al. [26] | Identify factors associated with the usage of AI chatbots by university students | Study 1 (N = 72), Study 2 (N = 153) | Survey forms | TAM and TAM3 |

| Duy et al. [30] | To determine the factors influencing students’ use of AI | 390 students at the University | Survey using the stratified sampling method | TAM and planning behaviour theory |

| Castillo et al. [31] | To identify the factors that capture students’ attention toward the acceptance of technologies | 326 samples from the students | Self-administered questionnaire | TAM |

| Madni et al. [32] | To determine the factors that appeal to the students to adopt different technologies in higher education | Existing studies from 2016 to 2021 | Web of Science, Scopus, Science Direct, Taylor & Francis, Springer, Google Scholar, IEEE Xplore, and ACM Digital Library | Designed Adoption Model |

| Sánchez et al. [22] | To take students’ perceptions about their assessment by machine learning models | 30 Likert-type items obtained from previous theories | Questionnaire | TAM |

| Helmiatin et al. [23] | To investigate how AI can be used by policymakers in education | 348 lecturers and 39 administrative personnel | Survey conducted | PLS-SEM Model |

| Moon et al. [27] | To develop a positive perception of teachers towards the use of ChatGPT | Responses of 100 elementary teachers based on their profiles | Questionnaire | TAM3 model |

| Yazdanpanah et al. [33] | To demonstrate the orthodontists’ acceptance of teleorthodontic technology | Gathered the responses from 300 Iranian orthodontists | Accommodated questionnaire | TAM3 model |

| Faqih et al. [34] | To investigate mobile commerce adoption in Jordan | Data was collected from 14 private Jordanian universities based on 425 valid datasets | Paper-based questionnaire | TAM3 model |

| AlGerafi et al. [10] | To evaluate the intention of Chinese higher education students toward AI-based robots for study | Analysis was performed on 425 valid datasets | Questionnaires | TAM3 model |

| Kavitha et al. [39] | To assess the intention and willingness of use in higher pedagogical and professional domains | 400 responses data collected from assistant professors to professors | Cross-sectional survey design | TAM based on CB-SEM |

| Almulla et al. [29] | To assess the impact of learning satisfaction and adoption of ChatGPT by university students | 252 University Students in Saudi Arabia | Questionnaire | TAM, PLS-SEM, and an SEM approach |

| Qashou et al. [36] | To analyse the adoption of mobile learning in university students | 402 university students involved in the survey | Questionnaire | TAM |

| Li et al. [37] | To determine the important factors that influence students to adopt intelligent methods in physical education | 502 responses from university students | Questionnaire Star software | TAM |

| Ma et al. [35] | To assess the teacher education students’ influential factors for AI adoption | 359 samples collected from a survey | Survey Questionnaire | TAM and SEM models |

| Goli et al. [40] | To examine various factors that affect the behavioural intention of an individual to use chatbots | Data from 378 individuals was collected | A questionnaire was designed | TAM 2, TAM 3, UTAUT 2, and Delone and McLean models |

| Dewi et al. [38] | To analyse the user acceptance level of the e-Report system | 93 samples from 1201 teachers were collected | Questionnaire was distributed | TAM3 and the DeLone & McLean methods |

| Handoko et al. [41] | Examine the factors influencing students’ use of AI and its impact on learning satisfaction | 130 university students | Quantitative study using convenience sampling and SEM | (UTAUT) |

| Musyaffi et al. [42] | Examine the factors influencing accounting students’ acceptance of AI in learning | 147 higher-education students | Quantitative study using a survey and analysed using SmartPLS 4.0 with the partial least square approach | (TAM) and Information Systems Success Model |

| Chiu et al. [43] | Compare the effectiveness of traditional programming instruction versus Robot-Assisted Instruction (RAI) in enhancing student learning and satisfaction | 81 junior high school students (41 in traditional programming course, 40 in RAI course using Dash robots) | Quantitative study using a questionnaire survey and pre-test/post-test analysis based on the UTAUT model | (UTAUT) |

| Pan et al. [44] | Investigate key factors influencing college students’ willingness to use AI Coding Assistant Tools (AICATs) by extending the Technology Acceptance Model (TAM) | 303 Chinese college students, 251 valid responses after data cleaning | Quantitative study using an online survey, factor analysis, and SEM | Technology Acceptance Model (TAM) with extended variables: Perceived Trust, Perceived Risk, Self-Efficacy, and Dependence Worry |

| Ho et al. [45] | Investigate the impact of Generative AI intervention in teaching App Inventor programming courses, comparing UI materials designed by traditional teachers and Generative AI | 6 students in a preliminary test, with plans to expand the sample size in future research | Experimental study with a single-group pre-test/post-test design, comparing traditional teacher-designed UI materials with Generative AI-generated materials | TAM, User Satisfaction Model |

| Liu et al. [46] | Summarise the current status of AI-assisted programming technology in education and explore the efficiency mechanisms of AI-human paired programming | Not specified (literature review-based study) | Systematic literature review following thematic coding methodology | TAM, Learning Analytics, Grounded Theory |

| Ferri et al. [47] | To ascertain risk managers’ intentions to use artificial intelligence in performing their tasks, and to examine the factors affecting their motivation to use it | 782 Italian risk managers were surveyed, with 208 providing full responses (response rate of 26.59%) | PLS-SEM applied to data from a Likert-based questionnaire, within an integrated theoretical framework combining TAM3 and UTAUT | TAM3 and UTAUT |

| Kleine et al. [26] | Investigate factors influencing AI chatbot usage intensity among university students | University students (Study 1: N = 72, Study 2: N = 153) | Daily diary study (5 days), Multilevel SEM, between-person and within-person variation | TAM, TAM3, Cognitive Load Theory, Task-Technology Fit Theory (TTF), Social Influence Theory |

| Cippelletti et al. [48] | To assess the perceived acceptability of collaborative robots (“cobots”) among French workers | 442 French workers | Quantitative study | TAM3, the Meaning of Work Inventory, and the Ethical, Legal and Social Implications (ELSI) scale |

| Abdi et al. [49] | To explore the predictors of behavioural intention to use ChatGPT for academic purposes among university students in Somalia | 299 university students who have used ChatGPT for academic purposes | Quantitative study | TAM |

References

- Alanazi, M.; Soh, B.; Samra, H.; Li, A. PyChatAI: Enhancing Python Programming Skills—An Empirical Study of a Smart Learning System. Computers 2025, 14, 158.

- Alanazi, M.; Soh, B.; Samra, H.; Li, A. The Influence of Artificial Intelligence Tools on Learning Outcomes in Computer Programming: A Systematic Review and Meta-Analysis. Computers 2025, 14, 185.

- Aznar-Díaz, I.; Cáceres-Reche, M.P.; Romero-Rodríguez, J.M. Artificial intelligence in higher education: A bibliometric study on its impact in the scientific literature. Educ. Sci. 2019, 9, 51.

- Fan, G.; Liu, D.; Zhang, R.; Pan, L. The impact of AI-assisted pair programming on student motivation, programming anxiety, collaborative learning, and programming performance: A comparative study with traditional pair programming and individual approaches. Int. J. STEM Educ. 2025, 12, 16.

- Bassner, P.; Frankford, E.; Krusche, S. Iris: An AI-driven virtual tutor for computer science education. In Proceedings of the 2024 on Innovation and Technology in Computer Science Education, Milan, Italy, 8–10 July 2024; Volume 1, pp. 394–400.

- Kashive, N.; Powale, L.; Kashive, K. Understanding user perception toward artificial intelligence (AI) enabled e-learning. Int. J. Inf. Learn. Technol. 2020, 38, 1–19.

- Ghimire, A.; Edwards, J. Generative AI adoption in the classroom: A contextual exploration using the Technology Acceptance Model (TAM) and the Innovation Diffusion Theory (IDT). In Proceedings of the 2024 Intermountain Engineering, Technology and Computing (IETC), Logan, UT, USA, 13–14 May 2024; pp. 129–134.

- Aldraiweesh, A.A.; Alturki, U. The influence of Social Support Theory on AI acceptance: Examining educational support and perceived usefulness using SEM analysis. IEEE Access 2025, 13, 18366–18385.

- Daleiden, P.; Stefik, A.; Uesbeck, P.M.; Pedersen, J. Analysis of a randomized controlled trial of student performance in parallel programming using a new measurement technique. ACM Trans. Comput. Educ. (TOCE) 2020, 20, 1–28.

- Algerafi, M.A.; Zhou, Y.; Alfadda, H.; Wijaya, T.T. Understanding the factors influencing higher education students’ intention to adopt artificial intelligence-based robots. IEEE Access 2023, 11, 99752–99764.

- Almogren, A.S. Creating a novel approach to examine how King Saud University’s Arts College students utilize AI programs. IEEE Access 2025, 13, 63895–63917.

- Binyamin, S.; Rutter, M.; Smith, S. The Moderating Effect of Gender and Age on the Students’ Acceptance of Learning Management Systems in Saudi Higher Education. Int. J. Educ. Technol. High. Educ. 2020, 17, 1–18.

- Alanazi, A.; Li, A.; Soh, B. Barriers and Potential Solutions to Women in Studying Computer Programming in Saudi Arabia. Int. J. Enhanc. Res. Manag. Comput. Appl. 2023, 12, 55–70.

- Alharbi, A. English Medium Instruction in Saudi Arabia: A Systematic Review. Lang. Teach. Res. Q. 2024, 42, 21–37.

- Chan, C.K.Y.; Hu, W. Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 2023, 20, 43.

- Groothuijsen, S.; van den Beemt, A.; Remmers, J.C.; van Meeuwen, L.W. AI chatbots in programming education: Students’ use in a scientific computing course and consequences for learning. Comput. Educ. Artif. Intell. 2024, 7, 100290.

- Gu, X.; Cai, H. Predicting the future of artificial intelligence and its educational impact: A thought experiment based on social science fiction. Educ. Res. 2021, 42, 137–147.

- Essel, H.B.; Vlachopoulos, D.; Essuman, A.B.; Amankwa, J.O. ChatGPT effects on cognitive skills of undergraduate students: Receiving instant responses from AI-based conversational large language models (LLMs). Comput. Educ. Artif. Intell. 2024, 6, 100198.

- Garcia, M.B.; Revano, T.F.; Maaliw, R.R.; Lagrazon, P.G.G.; Valderama, A.M.C.; Happonen, A.; Qureshi, B.; Yilmaz, R. Exploring student preference between AI-powered ChatGPT and human-curated Stack Overflow in resolving programming problems and queries. In Proceedings of the 2023 IEEE 15th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Coron, Philippines, 19–23 November 2023; pp. 1–6.

- Han, J.; Liu, G.; Liu, X.; Yang, Y.; Quan, W.; Chen, Y. Continue using or gathering dust? A mixed method research on the factors influencing the continuous use intention for an AI-powered adaptive learning system for rural middle school students. Heliyon 2024, 10, e33251.

- Al-Mamary, Y.H.S. Why do students adopt and use learning management systems?: Insights from Saudi Arabia. Int. J. Inf. Manag. Data Insights 2022, 2, 100088.

- Sánchez-Prieto, J.C.; Cruz-Benito, J.; Therón Sánchez, R.; García-Peñalvo, F.J. Assessed by machines: Development of a TAM-based tool to measure AI-based assessment acceptance among students. Int. J. Interact. Multimed. Artif. Intell. 2020, 6, 80.

- Helmiatin; Hidayat, A.; Kahar, M.R. Investigating the adoption of AI in higher education: A study of public universities in Indonesia. Cogent Educ. 2024, 11, 2380175.

- Goh, T.T.; Dai, X.; Yang, Y. Benchmarking ChatGPT for prototyping theories: Experimental studies using the Technology Acceptance Model. BenchCouncil Trans. Benchmarks Stand. Eval. 2023, 3, 100153.

- Bouteraa, M.; Bin-Nashwan, S.A.; Al-Daihani, M.; Dirie, K.A.; Benlahcene, A.; Sadallah, M.; Zaki, H.O.; Lada, S.; Ansar, R.; Fook, L.M.; et al. Understanding the diffusion of AI-generative (ChatGPT) in higher education: Does students’ integrity matter? Comput. Hum. Behav. Rep. 2024, 14, 100402.

- Kleine, A.K.; Schaffernak, I.; Lermer, E. Exploring predictors of AI chatbot usage intensity among students: Within- and between-person relationships based on the Technology Acceptance Model. Comput. Hum. Behav. Artif. Hum. 2025, 3, 100113.

- Moon, J.; Kim, S.B. Analyzing elementary teachers’ perception of ChatGPT. In Proceedings of the 2023 5th International Workshop on Artificial Intelligence and Education (WAIE), Tokyo, Japan, 28–30 September 2023; pp. 43–47.

- Sitanaya, I.H.; Luhukay, D.; Syahchari, D.H. Digital education revolution: Analysis of ChatGPT’s influence on student learning in Jakarta. In Proceedings of the 2024 IEEE International Conference on Technology, Informatics, Management, Engineering and Environment (TIME-E), Bali, Indonesia, 7–9 August 2024; Volume 5, pp. 69–73.

- Almulla, M. Investigating influencing factors of learning satisfaction in AI ChatGPT for research: University students’ perspective. Heliyon 2024, 10, e32220.

- Duy, N.B.P.; Phuong, T.N.M.; Chau, V.N.M.; Nhi, N.V.H.; Khuyen, V.T.M.; Giang, N.T.P. AI-assisted learning: An empirical study on student application behavior. Multidiscip. Sci. J. 2025, 7, 2025275.

- Castillo-Vergara, M.; Álvarez-Marín, A.; Villavicencio Pinto, E.; Valdez-Juárez, L.E. Technological acceptance of Industry 4.0 by students from rural areas. Electronics 2022, 11, 2109.

- Madni, S.H.H.; Ali, J.; Husnain, H.A.; Masum, M.H.; Mustafa, S.; Shuja, J.; Maray, M.; Hosseini, S. Factors influencing the adoption of IoT for e-learning in higher educational institutes in developing countries. Front. Psychol. 2022, 13, 915596.

- Yazdanpanahi, F.; Shahi, M.; Vossoughi, M.; Davaridolatabadi, N. Investigating the effective factors on the acceptance of teleorthodontic technology based on the Technology Acceptance Model 3 (TAM3). J. Dent. 2024, 25, 68.

- Faqih, K.M.; Jaradat, M.I.R.M. Assessing the moderating effect of gender differences and individualism–collectivism at individual-level on the adoption of mobile commerce technology: TAM3 perspective. J. Retail. Consum. Serv. 2015, 22, 37–52.

- Ma, S.; Lei, L. The factors influencing teacher education students’ willingness to adopt artificial intelligence technology for information-based teaching. Asia Pac. J. Educ. 2024, 44, 94–111.

- Qashou, A. Influencing factors in M-learning adoption in higher education. Educ. Inf. Technol. 2021, 26, 1755–1785.

- Li, X.; Tan, W.H.; Bin, Y.; Yang, P.; Yang, Q.; Xu, T. Analysing factors influencing undergraduates’ adoption of intelligent physical education systems using an expanded TAM. Educ. Inf. Technol. 2025, 30, 5755–5785.

- Dewi, Y.M.; Budiman, K. Analysis of user acceptance levels of the e-Rapor system users in junior high schools in Rembang District using the TAM 3 and DeLone McLean. J. Adv. Inf. Syst. Technol. 2024, 6, 256–269.

- Kavitha, K.; Joshith, V.P. Artificial intelligence powered pedagogy: Unveiling higher educators’ acceptance with extended TAM. J. Univ. Teach. Learn. Pract. 2025, 21.

- Goli, M.; Sahu, A.K.; Bag, S.; Dhamija, P. Users’ acceptance of artificial intelligence-based chatbots: An empirical study. Int. J. Technol. Hum. Interact. (IJTHI) 2023, 19, 1–18.

- Handoko, B.L.; Thomas, G.N.; Indriaty, L. Adoption and utilization of artificial intelligence to enhance student learning satisfaction. In Proceedings of the 2024 International Conference on ICT for Smart Society (ICISS), Bandung, Indonesia, 4–5 September 2024; pp. 1–6.

- Musyaffi, A.M.; Baxtishodovich, B.S.; Afriadi, B.; Hafeez, M.; Adha, M.A.; Wibowo, S.N. New challenges of learning accounting with artificial intelligence: The role of innovation and trust in technology. Eur. J. Educ. Res. 2024, 13, 183–195.

- Chiu, M.Y.; Chiu, F.Y. Using UTAUT to explore the acceptance of high school students in programming learning with robots. In Proceedings of the 2024 21st International Conference on Information Technology Based Higher Education and Training (ITHET), Paris, France, 6–8 November 2024; pp. 1–7.

- Pan, Z.; Xie, Z.; Liu, T.; Xia, T. Exploring the key factors influencing college students’ willingness to use AI coding assistant tools: An expanded technology acceptance model. Systems 2024, 12, 176.

- Ho, C.L.; Liu, X.Y.; Qiu, Y.W.; Yang, S.Y. Research on innovative applications and impacts of using generative AI for user interface design in programming courses. In Proceedings of the 2024 International Conference on Information Technology, Data Science, and Optimization, Taipei, Taiwan, 22–24 May 2024; pp. 68–72.

- Liu, J.; Li, S. Toward artificial intelligence–human paired programming: A review of the educational applications and research on artificial intelligence code-generation tools. J. Educ. Comput. Res. 2024, 62, 1385–1415.

- Ferri, L.; Maffei, M.; Spanò, R.; Zagaria, C. Uncovering risk professionals’ intentions to use artificial intelligence: Empirical evidence from the Italian setting. Manag. Decis. 2023.

- Cippelletti, E.; Fournier, É.; Jeoffrion, C.; Landry, A. Assessing cobot’s acceptability of French workers: Proposition of a model integrating the TAM3, the ELSI and the meaning of work scales. Int. J. Hum.–Comput. Interact. 2024, 41, 2763–2775.

- Abdi, A.N.M.; Omar, A.M.; Ahmed, M.H.; Ahmed, A.A. The predictors of behavioral intention to use ChatGPT for academic purposes: Evidence from higher education in Somalia. Cogent Educ. 2025, 12, 2460250.