1. Introduction

Modern forms of information warfare are complex, multilevel and dynamically developing phenomena. Traditional methods of influence are complemented and enhanced by digital technologies. The development of information and communication systems, social networks, and automated platforms enables the dissemination of information at scale and in targeted ways, influencing public opinion, political stability, and social processes.

In the context of high-speed data exchange and global interconnectedness, information attacks are becoming increasingly covert and multifaceted. They can include targeted distortion of facts, manipulation of context, dissemination of disinformation, creation of artificial agendas, and use of automated bots to amplify the effect. At the same time, traditional approaches to counteraction are often insufficient, since they cannot ensure timely detection and analysis of hidden patterns in large, noisy data arrays.

The problem under consideration is distinctly interdisciplinary in nature. Its solution requires, at a minimum, close integration of the humanities and the technical sciences. The development of information technologies leads to transformations of society [1,2] that cannot always be explained using classical sociology and/or psychology. From our point of view, which underlies the methodology of this review, any attempt to create an effective method of countering modern forms of information warfare will expose difficulties of a fundamental nature, as discussed in [3]. The cited work shows that the highly fragmented disciplinary structure of science that had developed by the early 21st century is often an obstacle to understanding modern societal processes as a whole. This conclusion applies not only to the problems associated with information warfare. Accordingly, this review considers this particular problem, among other things, in order to substantiate the concept discussed in [3]. Specifically, information warfare can be regarded as the most illustrative example supporting the thesis of the convergence of natural scientific, technical, and humanitarian knowledge. It is this thesis that underlies the methodology of this review.

An illustration of the importance of this thesis is the increasingly widespread use of generative artificial intelligence (GenAI [4]) in science and education, the nature of which has prompted numerous discussions [5,6,7]. GenAI is increasingly used to address a wide range of questions, including humanitarian ones. Even the most general ideas about the essence of information warfare allow us to assert that GenAI can, depending on the algorithms embedded in it, prioritize the interests of certain political or other groups. We emphasize that this does not require outright falsehoods: given the widespread use of such technologies, a relatively small shift in emphasis, often unnoticeable to the average user, is sufficient.

This example clearly shows that the problem of information warfare is reaching a qualitatively new level. A famous expression attributed to Bismarck states: “The Battle of Sadowa was won by a Prussian school teacher.” This statement illustrates a simple thesis: worldview is shaped by the education system. Consequently, if this system is transformed, society is transformed as well; at a minimum, these processes are interconnected.

Consequently, the future development of the computing systems that underlie AI for various purposes, particularly GenAI, cannot but influence society, at least in the long term. There is every reason to believe that the “information technology–society” system is currently at a bifurcation point [8]; that is, a wide range of scenarios remains open for its further development.

Furthermore, there is evidence that classical computing technology built on the von Neumann architecture has exhausted its development potential, which underscores the need to develop various types of neuromorphic systems [9,10]. Reviews on this topic repeatedly emphasize that the von Neumann architecture has several significant shortcomings, at least relative to current demands. One of them is the considerable energy cost of data exchange between the computing units and the memory blocks [11,12].

Developments in the field of neuromorphic materials, in turn, raise questions about the algorithmic principles underlying computing systems, including those implementing GenAI. As shown in [13], computing systems based on neuromorphic materials will be complementary to non-binary logics. This conclusion holds to an even greater extent for materials that represent a further development of neuromorphic materials [14]; in particular, attempts to create sociomorphic materials have already been reported in the literature [15].

In general, the analysis of problems related in one way or another to information warfare requires the integrated use of digital signal processing (DSP) methods, new-generation computing architectures, and mathematical models capable of effectively identifying significant features and anomalies in information flows. Of particular importance are algorithms based on modular arithmetic, as well as approaches that provide parallel real-time data processing. These technologies are used in the military and government domains, as well as to protect critical infrastructure, financial systems, and media platforms.
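The text does not say which modular-arithmetic scheme is meant. One widely used option for carry-free, parallel arithmetic in DSP hardware is the residue number system (RNS); the following minimal Python sketch assumes that scheme, and the moduli (3, 5, 7) and all function names are hypothetical choices for illustration. Each residue channel is processed independently, which is what makes the representation attractive for parallel real-time processing, and the result is recovered with the Chinese Remainder Theorem.

```python
from math import prod

# Hypothetical moduli; they must be pairwise coprime so that every integer
# in [0, M), M = 3 * 5 * 7 = 105, has a unique residue representation.
MODULI = (3, 5, 7)


def to_rns(x, moduli=MODULI):
    """Encode a non-negative integer as its residues, one per channel."""
    return tuple(x % m for m in moduli)


def rns_add(a, b, moduli=MODULI):
    # Channels are independent: no carry propagates between moduli,
    # so each one could be handled by a separate parallel unit.
    return tuple((x + y) % m for x, y, m in zip(a, b, moduli))


def rns_mul(a, b, moduli=MODULI):
    return tuple((x * y) % m for x, y, m in zip(a, b, moduli))


def from_rns(residues, moduli=MODULI):
    """Decode via the Chinese Remainder Theorem (result is taken modulo M)."""
    big_m = prod(moduli)
    total = 0
    for r, m in zip(residues, moduli):
        partial = big_m // m
        # pow(partial, -1, m) is the modular inverse (Python 3.8+).
        total += r * partial * pow(partial, -1, m)
    return total % big_m


a, b = to_rns(17), to_rns(5)
print(from_rns(rns_add(a, b)), from_rns(rns_mul(a, b)))  # 22 85
```

In such designs the reconstruction step tends to be the comparatively expensive part, so data are usually kept in residue form for as long as the computation allows.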

This review is structured as follows.

Section 2 details the review methodology (databases, search keywords and year coverage, inclusion/exclusion criteria, deduplication and screening) and reports the PRISMA flow.

Section 3 develops the theoretical foundations of information influence, clarifying core concepts, mechanisms and levels of impact.

Section 4 surveys modern forms of information warfare, including coordinated online operations, platform-mediated manipulation, deepfakes and memetic tactics.

Section 5 analyzes the impact on the sociocultural code—language, value-normative, symbolic, historical-narrative and social-institutional layers—with illustrative cases.

Section 6 synthesizes methods of countering information attacks, spanning education and prebunking, platform-level interventions and detection pipelines (network, temporal, content and multimodal), as well as governance tools.

Section 7 focuses on IoT/IIoT threat and mitigation patterns in the context of information warfare (e.g., segmentation/zero-trust, SBOM, MUD and industrial control security).

Section 8 discusses the evolution of information-processing systems relevant to counter-IO—linking digital signal processing and emerging computing architectures to scalable detection and filtering.

Section 9 considers ethical and legal aspects (e.g., transparency, researcher access and accountability requirements).

Section 10 examines higher education in the context of information warfare and the need for a paradigm shift in curricula and training.

Section 11 outlines future directions and a research agenda.

Section 12 concludes. A map of the review is presented in Table 1.

The purpose of the review is to combine modern scientific and technological approaches in the field of countering information threats, providing a systemic understanding and a comprehensive view of possible methods of protection in the context of the rapid development of the digital environment.

2. Review Methodology

As noted above, the methodology of this review is based on the thesis of the convergence of natural science, technology, and the humanities, whose significance is most evident in the problems of information warfare. Key evidence of the importance of interdisciplinary cooperation in this area is presented in Section 3, where the theoretical foundations of information influence are discussed.

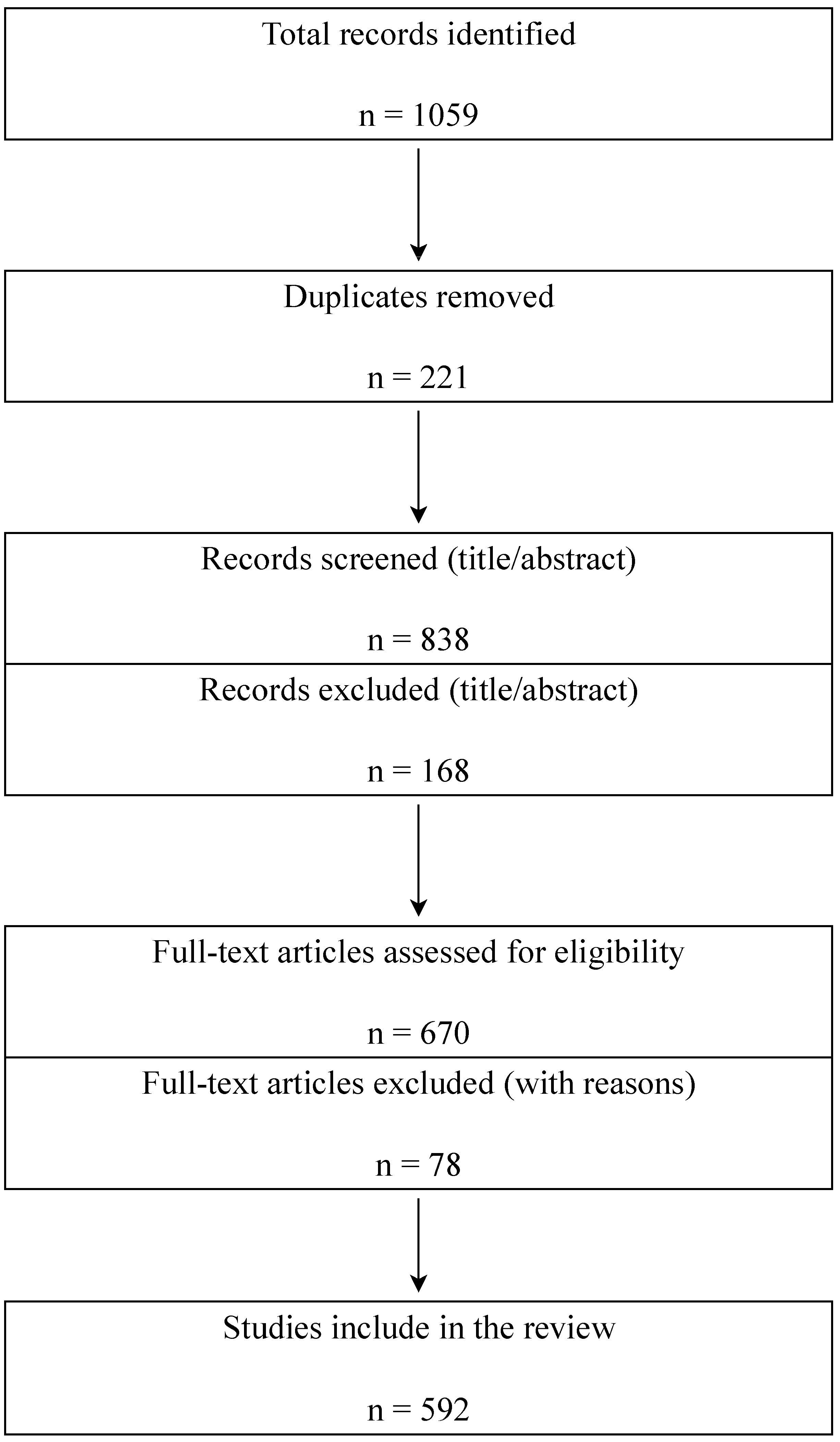

The PRISMA 2020 methodology was used to prepare this review, ensuring transparency and reproducibility of the literature selection process. The initial search identified a total of 1059 publications. After removing duplicates and sources that did not meet the formal criteria, 838 papers remained for screening. The assessment then proceeded in stages. Based on titles and abstracts, 168 publications were excluded as unrelated to the research focus or not meeting academic standards. The remaining articles underwent full-text analysis, during which 78 more sources were excluded due to insufficient methodological rigor or limited relevance. As a result, the final sample included 592 publications, which formed the basis of the analysis. The chronological scope of coverage is broad: although the main focus was on studies from recent years (2015–2025), the review also included earlier works that are fundamental to forming a theoretical basis and explaining the evolution of information impact methods. Thus, the final sample combines contemporary studies reflecting current trends and challenges with classical works that allow us to trace the development of scientific thought in retrospect.
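The stage counts reported above can be checked for internal consistency with a few lines of arithmetic. This minimal Python sketch uses only the figures stated in the text; the intermediate values (records removed before screening, articles assessed in full text) are derived here and are not reported by the source.

```python
# Record counts as reported in the text.
identified = 1059
screened = 838                # after duplicates and formally ineligible records were removed
excluded_title_abstract = 168
excluded_full_text = 78
reported_final = 592

# Derived stage sizes (computed here, not stated in the text).
removed_before_screening = identified - screened
assessed_full_text = screened - excluded_title_abstract
included = assessed_full_text - excluded_full_text

assert included == reported_final, "flow counts do not add up"
print(removed_before_screening, assessed_full_text, included)  # 221 670 592
```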

The literature search was conducted in leading international databases (Scopus, Web of Science, and Google Scholar) and in specialized academic publications. In addition, cross-references from review articles, monographs, and key publications were considered. When including sources, priority was given to publications in peer-reviewed journals and academic presses; this approach reduced the risk of bias and increased the reliability of the summarized data. To increase the completeness of the sample, combinations of keywords were used, including the terms “information warfare”, “information operations”, “propaganda”, “disinformation”, “hybrid warfare”, “sociocultural code”, “cognitive security”, “digital platforms”, and “cybersecurity”, as well as their equivalents.

Table 2 provides information on source coverage, and Figure 1 shows a PRISMA-style diagram.

3. Theoretical Foundations of Information Influence

The study of information impact requires a comprehensive approach that combines knowledge from cognitive psychology, sociology, communication theory, cybernetics, and political science. In the context of digitalization and the global interconnectedness of the media, the information environment has become not only a channel for transmitting information but also an arena for targeted manipulation of public opinion and behavioral patterns. Modern research emphasizes that the effectiveness of such impacts is determined both by individual cognitive mechanisms of perception and by the structure of the information networks through which information is disseminated. Fundamental theoretical models, from the concepts of cognitive biases and social epistemology to network and epidemiological theories, allow us to identify patterns in the formation, amplification, and replication of meanings in the collective consciousness. These same models underlie the development of information operations strategies, including military doctrines of cognitive operations, platform algorithms, and the attention economy. This section presents a systematic review of the key theoretical approaches necessary to understand the nature and mechanisms of modern information impact.

3.1. Cognitive Biases and the Psychology of Perception

Cognitive biases are systematic deviations in the perception, processing, and memorization of information that lead to persistent errors in judgment and decision making [16]. These phenomena are not random: they are associated with the characteristics of human memory, attention, and emotions, as well as with evolved mechanisms for conserving cognitive resources [17]. In the context of information influence, cognitive biases are exploited to guide the interpretation of facts, evoke emotional reactions, and reinforce desired attitudes in the collective consciousness [18]. One of the most studied is confirmation bias, the tendency to search for and interpret information so that it aligns with one's existing beliefs [19]. On digital platforms, this effect is amplified by content personalization algorithms that form “information bubbles” (filter bubbles), in which users mainly see information that confirms their worldview [20,21]. This not only reduces the likelihood of critical analysis but also makes the audience more receptive to targeted narratives.

The illusory truth effect is an increase in the perceived credibility of information through repetition [22]. Experiments show that even the refutation of an initially false message does not always reduce its subjective credibility, especially if repetitions occur in different contexts [23,24]. This phenomenon is widely exploited in propaganda campaigns, where key messages are regularly reproduced across multiple channels.

The anchoring effect demonstrates that initial numerical or verbal information exerts a disproportionate influence on subsequent evaluations [25]. For example, inflated or deflated statistics in news stories can change the perception of an issue, even when accurate information is subsequently presented [26].

Other significant cognitive biases used in information influence include:

- Halo effect: the tendency to transfer positive or negative characteristics of an object to its other properties [27].
- Primacy and recency effects: better memorization of the first and last presented information [28].
- Selective perception: the tendency to ignore data that do not meet expectations [29].
- Framing effect: a change in the interpretation of information depending on its wording or context [30].

Psychological research shows that such biases are particularly pronounced under heightened emotional arousal [31]. High emotional load (fear, anger, a sense of threat) reduces cognitive control, shifts information processing to a heuristic level, and makes a person more susceptible to simplified, binary interpretations of events [32]. This explains why emotionally charged messages spread faster and more widely on social media [33]. In addition to individual cognitive biases, information campaigns rely on social cognitive effects. For example, the false consensus effect creates the impression that a certain position is widespread and supported by the majority [34], and the groupthink effect suppresses critical discussion within a group when general agreement is assumed [35].

Modern neurocognitive studies using functional magnetic resonance imaging (fMRI) show that cognitive biases are associated with activity in brain structures involved in processing reward, social evaluation, and emotion, such as the prefrontal cortex and the amygdala [36,37]. This opens up prospects for a deeper understanding of the mechanisms of manipulation and for the development of methods of cognitive immunoprophylaxis.

Taken together, cognitive biases underpin manipulative influence, since they allow manipulators to bypass rational filters of perception and appeal directly to automatic, emotionally charged mechanisms of information processing. Understanding these distortions is key to developing effective strategies to counter modern forms of information warfare.

3.2. Communication Theories and Social Epistemology

Communication theories play a fundamental role in understanding the mechanisms of information influence, because they allow us to conceptualize both the structure and the dynamics of message dissemination across society. The evolution of communication models, from linear schemes of information transmission to multilevel network concepts, has reflected changes in the technological and media environments, as well as a shift in emphasis from data transmission to the construction of meanings and collective interpretations [38,39]. One of the early approaches that laid the foundation for the analysis of information processes was Lasswell’s linear communication model, which reduces communication to the chain “who says what, through which channel, to whom, and with what effect” [40]. Despite its schematic nature, this model is still used in structuring information influence strategies and assessing the effectiveness of media campaigns [41].

In the context of information warfare, the linear model is useful for mapping sources and target audiences but insufficient for analyzing complex networks and feedback loops. A significant development of linear approaches was the two-step flow model of communication proposed by Lazarsfeld and Katz, which identifies the role of “opinion leaders” in the transmission and interpretation of messages [42]. Opinion leaders, as highly trusted nodes in social networks, can amplify or weaken information signals, including disinformation [43]. Recent empirical research shows that in the online environment the role of opinion leaders is often played by micro-influencers or thematic communities rather than by major media figures; these “middle nodes” become key to the sustained dissemination of narratives [44]. With the transition to the digital age, interactive and transactional communication models have become widespread, emphasizing the bidirectional nature of information exchange [45]. Here, communication is viewed as a process of constant mutual influence, in which the audience not only receives but also actively shapes and redistributes content. This corresponds to the reality of social networks, where disinformation can be amplified through reposts, comments, and memetic transformations of original messages [46].

Social epistemology complements these models by focusing on how knowledge and beliefs are formed, disseminated, and legitimized in a collective context [47]. According to Goldman and Olson, social epistemology examines the institutions, technologies, and practices through which the “epistemic ecology” of society is shaped [48]. In the context of information warfare, this epistemic ecology is under attack: alternative sources of legitimacy are created, standards of evidence are eroded, and trust in traditional knowledge institutions is undermined [49].

One of the key concepts of social epistemology is epistemic trust: the willingness to accept information from certain sources as true [50]. Manipulative information campaigns often aim to erode epistemic trust in independent media, scientific institutions, and government agencies, thereby opening space for the promotion of alternative narratives [51]. Recent research shows that loss of epistemic trust correlates with increased susceptibility to conspiracy theories and pseudoscientific claims [52]. Communication theories that consider the influence of network structures are particularly relevant for the analysis of disinformation. The network approach makes it possible to view the dissemination of messages as a process that depends on the topology of connections between participants [53]. For example, studies based on graph theory show that nodes with high betweenness centrality play a critical role in the transmission of information between clusters and can be targets for information intervention [54].

An important research direction is the integration of communication theories with epidemiological models, in which information is viewed as an “infectious agent” [55]. These hybrid models make it possible to predict the dynamics of fake news spread and to assess the effectiveness of “information quarantine” or prebunking strategies [56]. At the same time, social epistemology suggests that the speed of disinformation spread depends not only on network structure but also on cultural norms, the level of media literacy, and trust in sources [57]. Framing and agenda-setting also play a significant role in information impact. According to McCombs and Shaw, the media do not so much tell people what to think as determine what they think about [58].

Framing, in turn, determines the interpretive framework of events, influencing which aspects are perceived as significant [59]. In the modern digital environment, framing is often implemented through visual and memetic forms, which enhance its emotional and cognitive effectiveness [60]. Together, communication theories and social epistemology provide powerful tools for analyzing and countering information attacks. They make it possible to identify nodes and channels of dissemination, mechanisms for legitimizing knowledge, and weak points in the epistemic structure of society. For developers of information-security strategies, the key conclusion is the need to combine technical network analysis with a cultural and cognitive understanding of the processes that shape information perception.

3.3. Network and Epidemiological Models of Spread

Understanding the mechanisms of information dissemination in the modern media space is impossible without network and epidemiological models. These approaches help formalize complex message circulation processes, account for the structural features of communication systems, and predict the dynamics of information attacks. Network models are based on graph theory, in which the information ecosystem is represented as nodes (individual users, organizations, media accounts) and edges (connections between them, such as subscriptions, reposts, or mentions) [61]. Classic network analysis indicators (degree, betweenness centrality, closeness centrality, and eigenvector centrality) help identify key agents of message dissemination [53]. For example, nodes with high betweenness centrality often act as “bridges” between clusters and can ensure the rapid transition of disinformation to new network segments [54]. One of the fundamental features of information networks is the small-world property identified by Watts and Strogatz [62]: even in large and sparse networks, only a few steps are needed to connect any two nodes. This property explains why disinformation can reach a global audience in a matter of hours, especially in the presence of viral content and memetic forms.
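To make the notion of a “bridge” node concrete, here is a minimal pure-Python sketch of Brandes’ algorithm for betweenness centrality on an unweighted, undirected graph. The toy graph (two tightly knit clusters joined through a single account "x") and the adjacency-dict input format are hypothetical; for real platform graphs an established graph library would normally be used instead.

```python
from collections import deque


def betweenness_centrality(adj):
    """Brandes' algorithm for an unweighted, undirected graph.

    adj: dict mapping each node to an iterable of its neighbours.
    Returns raw (unnormalised) scores, counting each unordered pair of endpoints once.
    """
    bc = dict.fromkeys(adj, 0.0)
    for s in adj:
        # Phase 1: BFS from s, counting shortest paths (sigma) and recording predecessors.
        order = []
        preds = {v: [] for v in adj}
        sigma = dict.fromkeys(adj, 0)
        dist = dict.fromkeys(adj, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Phase 2: accumulate pair dependencies, farthest nodes first.
        delta = dict.fromkeys(adj, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    # In an undirected graph every pair was visited from both of its endpoints.
    return {v: score / 2 for v, score in bc.items()}


# Two three-account clusters linked only through account "x".
adj = {
    "a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b", "x"],
    "x": ["c", "d"],
    "d": ["x", "e", "f"], "e": ["d", "f"], "f": ["d", "e"],
}
bc = betweenness_centrality(adj)
print(sorted(bc.items(), key=lambda kv: -kv[1]))
```

On this toy graph the bridge "x" scores 9, because all nine shortest paths between the two three-node clusters pass through it; the cluster gateways "c" and "d" score 8, and the peripheral nodes score 0.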

Another key characteristic is the scale-free (scale-invariant) degree distribution of networks, in which node degrees follow a power law [63]. Such networks are resilient to random failures but extremely vulnerable to targeted interventions on hub nodes. Research shows that on Twitter and Facebook, about 1–5% of users can be responsible for more than 80% of the spread of certain narratives [64].
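A minimal sketch of the robustness asymmetry described above, assuming a Barabási-Albert preferential-attachment graph as a stand-in for a scale-free platform network. The graph size, the attachment parameter m = 2, the random seeds, and the 5% removal budget are arbitrary illustrative choices, not values taken from the cited studies.

```python
import random


def barabasi_albert(n, m, seed=0):
    """Preferential-attachment (Barabasi-Albert) graph as a dict of adjacency sets."""
    rng = random.Random(seed)
    adj = {v: set() for v in range(n)}
    # Seed core: a complete graph on m + 1 nodes, so every node starts with degree m.
    for u in range(m + 1):
        for v in range(u):
            adj[u].add(v)
            adj[v].add(u)
    # Each node appears in 'targets' once per incident edge, so a uniform
    # draw from this list picks a node with probability proportional to its degree.
    targets = [v for v in range(m + 1) for _ in range(m)]
    for new in range(m + 1, n):
        chosen = set()
        while len(chosen) < m:
            chosen.add(rng.choice(targets))
        for v in chosen:
            adj[new].add(v)
            adj[v].add(new)
            targets.extend((new, v))
    return adj


def largest_component(adj, removed):
    """Size of the largest connected component after deleting the 'removed' nodes."""
    seen, best = set(removed), 0
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        stack, size = [start], 0
        while stack:
            v = stack.pop()
            size += 1
            for w in adj[v]:
                if w not in seen:
                    seen.add(w)
                    stack.append(w)
        best = max(best, size)
    return best


adj = barabasi_albert(n=2000, m=2, seed=42)
budget = 100  # remove 5% of nodes in both scenarios

hubs = sorted(adj, key=lambda v: len(adj[v]), reverse=True)[:budget]
random_nodes = random.Random(1).sample(sorted(adj), budget)

gc_targeted = largest_component(adj, hubs)
gc_random = largest_component(adj, random_nodes)
print(f"random removal: {gc_random}, hub removal: {gc_targeted}")
```

On graphs of this kind, deleting the highest-degree nodes typically shrinks the largest connected component far more than deleting the same number of randomly chosen nodes; the exact sizes depend on the seeds.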

Based on network representations, diffusion models have been developed that integrate social and behavioral factors. The best-known epidemiological models are of the SIR (Susceptible-Infected-Recovered) type and its modifications, such as SIS, SEIR, and SEIZ (where Z is the “zombie” state of a misinformed agent) [65]. In the context of information warfare, “infection” corresponds to the state of an individual who adopts and spreads disinformation, and “recovery” can mean either debunking or refusal to spread the message further [66].
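A minimal sketch of the mean-field SIR dynamics, integrated with a forward-Euler step and expressed in population fractions. The parameter values (beta, gamma, step size, horizon) are illustrative assumptions, not estimates from any cited study; the mapping of I to active spreaders and R to users who stopped spreading follows the text. The basic reproduction number R0 = beta / gamma separates narratives that take off (R0 > 1) from those that die out (R0 < 1).

```python
def simulate_sir(beta, gamma, s0, i0, r0=0.0, dt=0.1, steps=1000):
    """Forward-Euler integration of the mean-field SIR model (population fractions).

    dS/dt = -beta*S*I,  dI/dt = beta*S*I - gamma*I,  dR/dt = gamma*I
    S: not yet exposed, I: actively spreading the narrative,
    R: stopped spreading (debunked or lost interest).
    """
    s, i, r = s0, i0, r0
    history = [(s, i, r)]
    for _ in range(steps):
        new_spreaders = beta * s * i * dt
        new_recovered = gamma * i * dt
        s, i, r = s - new_spreaders, i + new_spreaders - new_recovered, r + new_recovered
        history.append((s, i, r))
    return history


# R0 = beta / gamma = 5: the narrative takes off.
outbreak = simulate_sir(beta=0.5, gamma=0.1, s0=0.99, i0=0.01)
# R0 = 0.5: the narrative fades without ever growing.
fizzle = simulate_sir(beta=0.05, gamma=0.1, s0=0.99, i0=0.01)

print(max(i for _, i, _ in outbreak), outbreak[-1][2])
```

Forward Euler is used only for transparency; a production model would use an adaptive ODE solver and fit beta and gamma to observed cascades.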

In the information context, the SIR model has been complemented by threshold models, in which an individual accepts information only once the number of signals received from their environment reaches a certain threshold [67]. This makes it possible to describe the phenomenon of “viral breakout”, when a narrative that has long remained on the periphery suddenly enters the mainstream.
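A minimal sketch of a deterministic threshold cascade, assuming synchronous updates and an integer threshold per node (the number of adopting neighbours required), as in Granovetter-type models. The six-node graph and the uniform threshold of 2 are hypothetical. A single seed changes nothing, while two coordinated seeds trigger a full cascade, a toy version of the “viral breakout” described above.

```python
def threshold_cascade(adj, threshold, seeds):
    """Synchronous threshold cascade.

    adj: dict node -> set of neighbours; threshold: dict node -> number of
    adopting neighbours required. Returns the adopter set after each round.
    """
    adopted = set(seeds)
    rounds = [set(adopted)]
    while True:
        # All nodes are evaluated against the adopters from the previous round.
        newly = {
            v for v in adj
            if v not in adopted
            and sum(1 for w in adj[v] if w in adopted) >= threshold[v]
        }
        if not newly:
            return rounds
        adopted |= newly
        rounds.append(set(adopted))


edges = [(0, 2), (1, 2), (0, 3), (1, 3), (2, 4), (3, 4), (2, 5), (3, 5), (4, 5)]
adj = {v: set() for v in range(6)}
for u, v in edges:
    adj[u].add(v)
    adj[v].add(u)
threshold = {v: 2 for v in adj}

print(threshold_cascade(adj, threshold, seeds={0})[-1])     # stays at the seed
print(threshold_cascade(adj, threshold, seeds={0, 1})[-1])  # reaches every node
```

Fractional thresholds (a share of neighbours rather than a count) are a common variant; swapping the count for `count / len(adj[v])` would implement it.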

Modern works demonstrate that classical epidemiological approaches need to be adapted to the specific features of digital platforms. For example, Borge-Holthoefer et al. [68] propose a SEIZ model in which state Z describes users who are resistant to refutation and continue to spread falsehoods despite access to corrective information. Such “infection resilience” is particularly characteristic of politically motivated disinformation campaigns.

An important direction is the combination of network analysis and epidemiological models with agent-based modeling (ABM) methods [69]. ABM accounts for individual differences between agents (media literacy level, political orientation, emotional perception) and makes it possible to observe how these factors affect the macrodynamics of dissemination. For example, studies on COVID-19 show that misinformation about vaccines spread faster and deeper online than science-based messages owing to its emotional richness and algorithmic amplification [70]. Particular attention is paid to role structures in the network. The work of Lerman et al. [71] shows that “super-spreaders” and “super-receivers” are different categories of users and that counteraction strategies should take both into account. Eliminating super-spreaders slows the growth of misinformation, but long-term resilience is achieved by increasing the media literacy of super-receivers [72]. Hybrid approaches also use percolation models to describe cascade processes [73]. Here, dissemination is viewed as a process of penetration through a network, in which the “permeability” of nodes depends on trust, cultural factors, and personal experience. When a critical connection density is reached, the “percolation threshold” is crossed, beyond which dissemination becomes uncontrollable.
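The text does not give a formula for the percolation threshold. One standard quantitative form, assuming an uncorrelated random network with degree distribution p_k (the configuration model) and a uniform probability T that any given edge transmits the message (both modeling assumptions not stated in the source), is:

```latex
% Moments of the degree distribution
\langle k \rangle = \sum_k k\, p_k, \qquad \langle k^2 \rangle = \sum_k k^2 p_k

% Molloy-Reed criterion: a giant connected component exists iff
\frac{\langle k^2 \rangle}{\langle k \rangle} > 2

% Bond-percolation (cascade) threshold on the transmissibility T:
T_c = \frac{\langle k \rangle}{\langle k^2 \rangle - \langle k \rangle}

% Erdos-Renyi (Poisson) graph: variance equals mean
\langle k^2 \rangle = \langle k \rangle^2 + \langle k \rangle
\;\Rightarrow\; T_c = \frac{1}{\langle k \rangle}
```

For scale-free networks with degree exponent 2 < γ ≤ 3, ⟨k²⟩ grows without bound as the network size increases, so T_c approaches zero: in the idealized infinite network even weakly transmissible content can reach a macroscopic fraction of nodes. The same heavy-tailed degree distribution is what makes such networks fragile under targeted removal of hubs.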

In terms of countermeasures, network and epidemiological models make it possible to test the effectiveness of measures ranging from removing nodes to changing content delivery algorithms [74]. However, several studies [75] emphasize that, without considering social context and cross-platform dynamics, such measures have only short-lived effects.

Recent developments include the use of dynamic multilayer (multiplex) networks, in which each layer represents a separate platform or type of interaction (e.g., public posts, private messages, offline meetings) [76]. Modeling on such structures reveals that disinformation often “migrates” between layers: blocking it on one network increases activity on another.

Network and epidemiological models of dissemination thus provide both an analytical and a predictive basis for understanding the dynamics of information attacks. Their strength lies in their ability to identify critical points, predict consequences, and evaluate the effectiveness of countermeasures. A key challenge for current research is integrating these models with cognitive and cultural factors to create more accurate and robust analytical tools.

3.4. Attention Economy and Platform Architecture

The concept of the attention economy dates back to the 1970s, when Herbert Simon pointed out that under information abundance the scarce resource is not information but human attention [77]. In the modern digital space, this idea is of central importance, as user attention has become a key asset for which social networks, news aggregators, streaming services, and online platforms compete. The digital environment has transformed attention into a commodity monetized through advertising models, targeting, and the sale of user-behavior data [78]. Relying on behavioral data, platforms build algorithms that rank and personalize content to maximize the time users spend on the platform. These algorithms work like digital “magnets”, continuously optimizing the delivery of information to retain attention and stimulate repeat visits [79]. Modern platforms use a combination of recommender systems, ranking algorithms, and content-filtering systems based on machine learning and deep neural networks [80]. These mechanisms are trained on huge amounts of user data and, as recent studies show, tend to reinforce existing preferences, forming so-called “filter bubbles” and “echo chambers” [20].

The effect of algorithmic personalization has been documented in studies on Facebook and YouTube, where recommendation systems tend to direct users toward more polarized and emotionally charged content [81]. In the case of YouTube, algorithms increase the likelihood of users being steered to conspiracy videos if they have previously interacted with similar content, even in the absence of an explicit search [82].

In the context of information warfare, platform architecture and the attention economy play a dual role. On the one hand, the highly competitive environment forces platforms to optimize algorithms for engagement metrics (clicks, likes, and reposts) without directly considering the credibility of the content [83]. On the other hand, this same mechanism creates conditions for the amplification of disinformation, since false or sensational messages tend to evoke a stronger emotional response and, accordingly, generate more interactions [84]. An empirical study shows that false news spreads faster on Twitter and reaches more users than true news, and that this effect is explained not by bots but by the behavior of real users [64]. This indicates that a platform architecture focused on engagement metrics objectively amplifies narratives that match attention patterns rather than those of high informational quality.
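A toy illustration of the point about objective functions. Real platform ranking systems are far more complex and largely undisclosed; the `Post` fields, the scores, and the linear blending rule below are all hypothetical. Ranking purely by predicted engagement places the sensational but low-credibility item first, while adding even a simple credibility term changes the order.

```python
from dataclasses import dataclass


@dataclass
class Post:
    # Hypothetical fields: predicted engagement per impression and a
    # credibility score in [0, 1] from some external assessment.
    post_id: str
    predicted_engagement: float
    credibility: float


def rank_by_engagement(posts):
    """Pure engagement optimisation: credibility plays no role in the ordering."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


def rank_with_credibility(posts, weight=0.5):
    """Hypothetical alternative: linear blend of engagement and credibility.

    weight = 0 reproduces rank_by_engagement; weight = 1 ranks by credibility only.
    """
    return sorted(
        posts,
        key=lambda p: (1 - weight) * p.predicted_engagement + weight * p.credibility,
        reverse=True,
    )


feed = [
    Post("sensational_false", predicted_engagement=0.9, credibility=0.1),
    Post("accurate_report", predicted_engagement=0.6, credibility=0.9),
    Post("routine_update", predicted_engagement=0.3, credibility=0.8),
]
print([p.post_id for p in rank_by_engagement(feed)])
print([p.post_id for p in rank_with_credibility(feed)])
```

With weight = 0.5 the order becomes accurate_report, routine_update, sensational_false; setting weight = 0 reproduces the pure engagement ranking.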

The attention economy is closely related to cognitive psychology. People tend to pay more attention to information that evokes strong emotions (fear, outrage, surprise), which in turn encourages platforms to offer such content [33]. Algorithmic systems trained on behavioral data unintentionally exploit cognitive biases, including confirmation bias and negativity bias [84].

From the perspective of military and strategic information operations doctrines, the attention economy and platform architecture create an infrastructure that enables large-scale and targeted manipulation of public opinion [85]. State and non-state actors, including online troll farms and coordinated botnets, use knowledge of algorithmic mechanisms to advance favorable narratives [86]. Recent reports by NATO StratCom [87] and the European External Action Service [88] indicate that the architecture of social platforms does not simply transmit information passively but actively shapes the context and trajectories of its dissemination, influencing the likelihood that users encounter a particular narrative.

One of the key challenges is the cross-platform migration of content. Disinformation blocked on one network can be instantly reproduced on another, and the architecture of interconnections (cross-posting, messengers, forums) helps bypass barriers [89]. Research shows that Telegram has become one of the main “recipient” platforms for content removed from Facebook and Twitter, while maintaining a high speed of dissemination [90].

These features indicate the need to develop architectural solutions that can work not within a single platform but at the ecosystem level.

Thus, the attention economy and platform architecture are fundamental elements of the information impact infrastructure. Their key feature is algorithmic optimization for engagement metrics, which, although not initially aimed at disinformation, creates a favorable environment for its exponential spread. Understanding these mechanisms is a prerequisite for developing effective countermeasures to modern forms of information warfare.

3.5. Strategic and Military Doctrines of Cognitive Operations

Cognitive operations (CO) in a military context are the deliberate manipulation of the perceptions, thinking, emotions, and decision-making processes of target audiences to achieve strategic or operational objectives. The approach emerged at the intersection of psychological operations (PSYOPS), strategic communications (STRATCOM), information operations (IO), and cyber operations, but over the past two decades it has become established in its own right as a key dimension of modern conflict [91].

The origins of CO can be traced back to Cold War military doctrines, when information and psychological warfare were viewed as an integral part of political struggle [92]. However, with the advent of digital communications and social media, the cognitive space has become an integral part of the “battlefield”. A 2018 RAND report documents a shift from traditional “information superiority” to the concept of “cognitive superiority”, in which the object of influence is no longer the communications infrastructure but the processes of perception and interpretation of information [93]. NATO doctrinal documents such as the Allied Joint Doctrine for Strategic Communications (AJP-10) define the cognitive domain as “the domain in which beliefs, values, and perceptions are formed and changed” [94]. Unlike the information domain, which describes the channels and technical means of data transmission, the cognitive domain focuses on subjective interpretations and their strategic management.

In the United States, the CO concept developed from a combination of PSYOPS, military information operations, and cyber operations. US Special Operations Command reports emphasize the need to integrate data on the cultural characteristics of target audiences into content impact algorithms [95]. The Pentagon views cognitive operations as a component of "Multi-Domain Operations" (MDO), in which psychological impact is synchronized with cyber and information attacks [96].

Russia officially uses the term "information-psychological impact" (IPI), which largely overlaps with the concept of CO. The Information Security Doctrine of the Russian Federation (2016) states that "information impact on collective consciousness" may be aimed at undermining cultural and spiritual values and destabilizing the domestic situation [97]. Analysis by the NATO StratCom COE indicates that Russia systematically uses cognitive methods as part of hybrid strategies [98].

China's "Three Warfares" concept covers psychological, legal, and media warfare, and within this framework China emphasizes the cognitive effects of information campaigns [99]. The White Paper on National Defense of the PRC (2019) states that "victory in future wars will be determined by the ability to shape the perception and interpretation of events" [100].

Cognitive operations are embedded in a broader paradigm of hybrid warfare, in which military, diplomatic, economic, and information means are combined to achieve goals without direct armed conflict [101]. In this scheme, the cognitive component acts as a "force multiplier", since successfully changing the perception of the target audience can reduce the need to use kinetic means [102].

Cognitive operations use a wide range of methods, including [103]:

- targeted distribution of content based on behavioral data;
- creation and management of "frames" (narratives) with a high emotional load;
- exploitation of cognitive biases (e.g., anchoring and confirmation bias);
- use of synthetic media (deepfakes) to enhance credibility.

A key feature of modern CO implementation is integration with platform architecture (see Section 3.4) and the use of the algorithmic affordances of social media [104]. Research shows that the combination of algorithmic personalization and psychologically validated narratives can significantly increase the effectiveness of influence [105].

CO doctrine is being developed at the level of national strategies and international alliances. The 2021 NATO report "Cognitive Warfare" [106] explicitly states that the cognitive space is a new domain of conflict alongside land, sea, air, space, and cyberspace. The document warns that cognitive warfare targets not only soldiers but also civilians, including by shaping their perception of threats and allies.

In 2020–2022, the United States conducted a series of exercises simulating operations in the cognitive domain. In these exercises, simulated social media environments were used to test the resilience of military units to information attacks [107]. Such training reflects institutional recognition that winning future conflicts requires dominance in the cognitive domain.

Despite the growing attention to CO, there is a risk of an escalating "cognitive arms race" in which states invest in increasingly sophisticated methods of manipulating perceptions [108]. This raises serious ethical questions, including the balance between national security and the human right to freedom of thought and belief [109].

With the cognitive domain becoming an arena for strategic confrontation, developing countermeasures requires an interdisciplinary approach that combines political science, cognitive psychology, computer science, and international law.

4. Modern Forms of Information Warfare

The evolution of communication technologies and digital platforms has profoundly changed the nature of information conflicts. In the past, information warfare relied primarily on traditional media and propaganda channels. Today, the key arenas of confrontation are social networks, instant messaging apps, video-hosting services, and interactive platforms. The development of personalization algorithms, tools for automated content distribution, and generative technologies has led to new, more sophisticated forms of attack, from botnets and coordinated campaigns to synthetic media and memetic weapons. These forms often operate in combination and reinforce each other. The result is hybrid operations that are hard to recognize and are aimed at manipulating public consciousness, undermining trust in institutions, and fragmenting the sociocultural space. This section examines the key manifestations of information warfare in their current technological and strategic context.

4.1. Disinformation, Fake News and False Narratives

The modern literature distinguishes between three categories:

- misinformation: errors and inaccuracies without intent to mislead;
- disinformation: deliberate falsehoods;
- malinformation: accurate information disseminated with malicious intent (e.g., doxxing).

This framework is set out in the Council of Europe report [110]. The report also shows that the boundaries between the categories are dynamic and depend on context (e.g., motives, production processes, and distribution routes). An important conclusion is that the source and process are at least as significant as the "factual result" itself, which is critical for developing countermeasures.

A philosophically precise definition of "disinformation" was proposed by Don Fallis: false (or misleading) content disseminated with the intent to mislead [111]. By emphasizing intent, this definition distinguishes disinformation from unintentional error, and it aligns well with law-enforcement practice and communications ethics. The related term "fake news" has been typologized in academic usage as satire, parody, fabrication, manipulation (including deepfake edits), advertising mimicry, and propaganda. This typology [112] shows that a single "umbrella" label conceals genres that differ in their harmfulness and distribution mechanisms. These genres therefore call for different response tools.

The review by Lazer et al. [113] in Science systematizes the empirical evidence on the role of platform algorithms, behavioral targeting, social context, and psychology. The authors emphasize that sustainable solutions go beyond simple "delete/tag" measures and include interface design, researchers' access to data, and prebunking (inoculation).

The scale of impact in electoral contexts has been documented by a series of studies: the Oxford Internet Institute's report for the US Senate on the IRA account pool [114], work on France's 2017 election [115], and an analysis of the "Brexit botnet" [116]. All of them document coordinated inauthentic behavior (CIB), the use of botnets, memetic formats, and cross-platform integration. This does not necessarily mean that each message is highly "persuasive." It does, however, demonstrate industrial-scale reach and repetition, two factors critical to the persistence of false narratives.

At the same time, individual exposure to "untrusted domains" is heterogeneous. Panel data from Guess, Nyhan, and Reifler [117] showed that a significant portion of the audience does not encounter such sources regularly. Small cohorts, by contrast, are overexposed and become "superconsumers" of such sites. For counter-policy, this means that targeted measures are needed, not just "platform-wide" regulation.
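One way to quantify this heterogeneity is a simple concentration measure: the share of all exposures that falls on the most-exposed fraction of users. The sketch below runs on hypothetical per-user exposure counts. The function names and numbers are illustrative assumptions, not data or methods from [117].

```python
from typing import Sequence

def top_share(exposures: Sequence[int], top_fraction: float) -> float:
    """Fraction of all exposures that falls on the most-exposed top_fraction of users."""
    if not exposures or not 0 < top_fraction <= 1:
        raise ValueError("need a non-empty sequence and 0 < top_fraction <= 1")
    total = sum(exposures)
    if total == 0:
        return 0.0
    ranked = sorted(exposures, reverse=True)
    n_top = max(1, round(len(ranked) * top_fraction))  # always keep at least one user
    return sum(ranked[:n_top]) / total

def unexposed_share(exposures: Sequence[int]) -> float:
    """Fraction of users with no recorded exposure at all."""
    return sum(1 for e in exposures if e == 0) / len(exposures)
```

Consider the hypothetical counts `[120, 40, 10, 5, 3, 2, 0, 0, 0, 0]`. Here the top 10% of users account for two thirds of all exposures, and 40% of users see nothing at all. A skew of this kind argues for targeted rather than uniform interventions.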

The cognitive side of the phenomenon explains the persistence of false narratives. Confirmation bias [118] inclines people to seek out and interpret information in support of their own beliefs. Research on the "illusory truth effect" [119] shows that simple repetition increases subjective credibility even when a person knows the correct answer. Reviews of the "post-truth" condition [120] highlight the limited effectiveness of after-the-fact refutations that do not change frames and context. This helps explain the success of the "firehose of falsehood" model [121], which relies on speed, volume, and variability rather than internal coherence.

Finally, in times of crisis an "infodemic" sets in. The information field is saturated with contradictory messages, and the lack of trusted reference points (e.g., institutions and experts) makes the audience receptive to simple, emotionally charged explanations. Observations by the WHO and The Lancet [122] during COVID-19 confirm that combating the infodemic requires a combination of measures [123,124,125]:

- technical filters;
- platform partnerships with fact-checking organizations;
- media literacy measures.

4.2. Botnets, Trolling, and Coordinated Inauthentic Behavior

Among the most studied technological forms of modern information influence are botnets: networks of automated or semi-automated social media accounts that imitate the activity of real users. Their key functions are to scale content distribution, to manipulate engagement metrics (likes, retweets, comments), and to create the illusion of consensus on certain topics [126].

Botnet mechanisms are varied. Technically, a botnet can rely on any of the following [127]:

- scripts that access platforms through their APIs (for example, the Twitter API before access was restricted in 2023);
- "bot farm" infrastructure with centralized control;
- distributed architectures built on infected user devices (the botnet models familiar from cybersecurity).

In parallel, "human bots" are actively used. These are low-paid operators who follow preset scripts (troll farms), a pattern especially typical of political trolling [128]. Coordinated Inauthentic Behavior (CIB) as a concept is codified in the policies of Meta and other platforms. The academic community studies it from the perspective of network analysis, anomaly detection, and digital attribution [129]. The key criterion is the presence of hidden centralized coordination aimed at misleading the audience about the true nature of the interaction.

Research by E. Ferrara and colleagues [130] shows that botnets can amplify both disinformation and legitimate political messages, which complicates any binary classification of "harm/benefit". At the same time, three signals are reliable indicators of automation: the posting rate, the rhythm of daily activity, and the simultaneous distribution of the same URLs [131].
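The last indicator, near-simultaneous sharing of identical URLs, is often operationalized as a co-sharing count between pairs of accounts. The sketch below assumes post records of the form (account, URL, timestamp in seconds). The 60-second window, the two-URL threshold, and the function name are illustrative assumptions rather than parameters from [131].

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Post = Tuple[str, str, float]  # (account_id, url, unix_timestamp_seconds)

def co_sharing_pairs(posts: Iterable[Post], window_s: float = 60.0,
                     min_shared_urls: int = 2) -> Dict[Tuple[str, str], int]:
    """Count, for each pair of accounts, the distinct URLs both posted within window_s seconds.

    Only pairs that co-shared at least min_shared_urls distinct URLs are returned.
    """
    by_url = defaultdict(list)
    for account, url, ts in posts:
        by_url[url].append((ts, account))

    shared = defaultdict(set)
    for url, events in by_url.items():
        events.sort()  # time order lets us stop scanning once the window is exceeded
        for i, (ts_i, acc_i) in enumerate(events):
            for ts_j, acc_j in events[i + 1:]:
                if ts_j - ts_i > window_s:
                    break
                if acc_i != acc_j:
                    pair = tuple(sorted((acc_i, acc_j)))
                    shared[pair].add(url)

    return {pair: len(urls) for pair, urls in shared.items()
            if len(urls) >= min_shared_urls}
```

A high count only flags a pair for closer review, because popular links are also shared organically within seconds. In practice, such pairs are combined with the other signals mentioned above (posting rate, daily rhythm) before coordination is inferred.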

A classic example of a large-scale CIB operation is the activity of the Russian "troll factory" (Internet Research Agency, IRA), identified in US Senate reports and analyzed in research [132]. On Facebook and Instagram, investigators identified hundreds of pages and thousands of accounts aimed at polarizing society and interfering in the 2016 elections.

Similar patterns have been documented in other contexts: the 2017 French elections [133], the Catalan crisis [134], and media coverage of the Hong Kong protests [135]. In each case, the campaigns are multilingual, integrated across platforms (Twitter, Facebook, YouTube, messaging apps), and use memetic content to achieve organic reach.

The CIB problem is compounded by "digital astroturfing", the imitation of grassroots support using synthetic accounts [136]. The analysis by Vosoughi, Roy, and Aral [137] shows that false information spreads faster and more deeply on Twitter than truthful information, even when bots are excluded. Bots, however, significantly accelerate the early stages of diffusion, increasing the chances that a narrative takes hold.
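Vosoughi, Roy, and Aral compare true and false stories by the shape of their retweet cascades. Their measures include size, depth, maximum breadth, and structural virality, where structural virality is the mean shortest-path distance between all pairs of nodes in the cascade tree. The sketch below computes these measures. It assumes a cascade is given as a mapping from each account to the account it retweeted, with None for the original poster. This representation and the function name are illustrative assumptions.

```python
from collections import defaultdict, deque
from typing import Dict, Optional

def cascade_metrics(parent: Dict[str, Optional[str]]) -> Dict[str, float]:
    """Size, depth, maximum breadth, and structural virality of a retweet cascade.

    `parent` maps every node to the node it was retweeted from (None for the root).
    """
    roots = [node for node, p in parent.items() if p is None]
    if len(roots) != 1:
        raise ValueError("a cascade must have exactly one root")
    if any(p is not None and p not in parent for p in parent.values()):
        raise ValueError("every parent must itself be a node of the cascade")

    children = defaultdict(list)
    neighbours = defaultdict(list)  # undirected view of the tree
    for node, p in parent.items():
        if p is not None:
            children[p].append(node)
            neighbours[node].append(p)
            neighbours[p].append(node)

    # breadth-first search from the root gives every node's depth (generation)
    depth = {roots[0]: 0}
    queue = deque([roots[0]])
    while queue:
        node = queue.popleft()
        for child in children[node]:
            depth[child] = depth[node] + 1
            queue.append(child)
    if len(depth) != len(parent):
        raise ValueError("cascade contains nodes unreachable from the root")
    level_sizes = defaultdict(int)
    for d in depth.values():
        level_sizes[d] += 1

    def distances_from(src: str) -> Dict[str, int]:
        dist = {src: 0}
        q = deque([src])
        while q:
            u = q.popleft()
            for v in neighbours[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    q.append(v)
        return dist

    n = len(parent)
    # summing over all sources counts each unordered pair twice, hence n * (n - 1)
    total = sum(sum(distances_from(u).values()) for u in parent)
    virality = total / (n * (n - 1)) if n > 1 else 0.0
    return {"size": n, "depth": max(depth.values()),
            "max_breadth": max(level_sizes.values()), "structural_virality": virality}
```

Consider two five-node cascades. In a "broadcast" cascade, four accounts retweet the original directly, giving depth 1 and structural virality 1.6. In a five-node chain, the depth is 4 and the structural virality is 2.0. The same reach can thus arise from very different diffusion patterns, which is why these measures are reported separately.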

In response, platforms are implementing automatic detection methods based on behavioral metrics, graph analysis, and machine learning on content features and metadata [138]. However, as Keller and Klinger [139] note, each new detector quickly prompts attackers to adapt. They change their posting patterns, their message frequency, and the distribution of activity across time zones.

In both academic and applied contexts, interest in the international regulation of CIB is growing. Reports by the NATO StratCom COE [140] and the European External Action Service (EEAS) [141] point to the need to synchronize cybersecurity and information standards. In the bot environment, they argue, the boundaries between "purely technical" and "purely informational" threats are blurred.

4.3. Deepfakes and Synthetic Media

Deepfakes are synthetic media (images, audio, or video recordings) created or modified using deep learning algorithms, most often based on generative adversarial networks (GANs) [142]. Their key feature is a high degree of realism even though no authentic event or statement underlies them. Deepfakes emerged as an experimental direction in computer vision in the mid-2010s and quickly became a tool for both entertainment and information attacks. Their technological basis lies in the ability of GANs and their variants (StyleGAN, CycleGAN, and related models) to model data distributions and synthesize new samples that are visually indistinguishable from real ones [143]. For audio fakes, deep-learning voice conversion models and text-to-speech systems are used [144]. With the advent of multimodal generators (e.g., DALL·E 2, Imagen, and Sora), it became possible to create falsified content in several media formats simultaneously [145].
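The adversarial principle behind GANs can be stated compactly. In the original GAN formulation (Goodfellow et al., 2014), a generator G maps random noise z ~ p_z to synthetic samples. A discriminator D estimates the probability that a sample x came from the real data distribution p_data rather than from the generator's distribution p_g. The two networks are trained on the minimax objective below. Variants such as StyleGAN and CycleGAN change the architecture and add further loss terms, so this should be read as the basic objective, not the exact loss of any specific deepfake tool.

```latex
\min_{G}\max_{D} V(D,G)
  = \mathbb{E}_{x \sim p_{\mathrm{data}}}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}}\!\left[\log\bigl(1 - D(G(z))\bigr)\right]

D^{*}_{G}(x) = \frac{p_{\mathrm{data}}(x)}{p_{\mathrm{data}}(x) + p_{g}(x)}
```

For a fixed G, the best discriminator is D*_G. The global optimum of the game is reached when p_g = p_data, where D*_G(x) = 1/2 everywhere, meaning the discriminator can no longer tell real from generated samples. This is the formal counterpart of the "visually indistinguishable" samples described above. It also suggests why detectors trained against one generator tend to degrade as generators improve.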

In the context of information warfare, deepfakes have two key application scenarios [146]:

- Discrediting political figures and public leaders: creating false videos of statements or actions that never occurred.
- Substitution of evidence: falsifying photo and video evidence used in the media and in court proceedings.

Examples of this kind have been recorded in a number of countries. At the turn of 2019, a video address by Gabonese President Ali Bongo, which critics claimed was a deepfake, circulated on Facebook and Twitter. The video contributed to a political crisis and an attempted military coup [147]. During the 2020 US elections, altered videos were used to discredit candidates [148]. Westerlund's research [149] points out that as generation algorithms develop, the risk of "invisible interference" in political processes through the mass production of synthetic materials increases.

The problem is exacerbated by the dramatic increase in the availability of generation tools. Libraries such as DeepFaceLab and FaceSwap, as well as cloud-based AI platforms, make it possible to create deepfakes without deep technical expertise [150]. At the same time, anti-detection techniques are evolving, such as adding noise-like artifacts or using adaptive frame generation to bypass detection algorithms [151].

In terms of countermeasures, academic and corporate labs are developing methods for the automatic detection of deepfakes. These methods analyze facial microexpressions, blink-rate discrepancies, lip movement patterns, and compression artifacts [152]. However, Verdoliva [153] and Korshunov and Marcel [154] note that, in the context of an "arms race", the accuracy of such methods decreases as new generative models appear. Organizations such as Europol and the UN view deepfakes as a threat to information security and public trust [155]. Europol's 2022 report Facing Reality? proposes including synthetic media in the list of priority threats to cyberspace. In 2023, the European Parliament began discussing legislation on labeling AI-generated content [156].

Deepfakes and synthetic media are therefore becoming an increasingly important element of the information warfare arsenal. Their danger lies in their ability to rapidly erode trust in visual and audio evidence. This undermines the basis of public consensus and makes rapid verification during crises nearly impossible.
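The blink-rate cue mentioned among the detection methods above can be illustrated with the eye aspect ratio (EAR) of Soukupová and Čech (2016). EAR is computed per frame from six eye landmarks: p1 and p4 at the eye corners, p2 and p3 on the upper lid, and p5 and p6 on the lower lid. Early deepfake videos were observed to blink unusually rarely, so an abnormal blink rate was one of the first forensic signals. The sketch below assumes the landmarks have already been extracted by a face-landmark detector (not shown). The 0.2 threshold and the two-frame minimum are common illustrative choices, not values from [152].

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR = (|p2-p6| + |p3-p5|) / (2 |p1-p4|) for landmarks ordered p1..p6."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def count_blinks(ear_series: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks

def blinks_per_minute(ear_series: Sequence[float], fps: float,
                      threshold: float = 0.2, min_frames: int = 2) -> float:
    """Blink rate of a clip, given its per-frame EAR values and frame rate."""
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ear_series, threshold, min_frames) / minutes
```

As the arms-race argument above implies, newer generators reproduce natural blinking. Such a cue is therefore at most one weak feature among many, not a standalone detector.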

4.4. Memetic Weapons and Information Operations in Social Networks

The term "memetic weapon" traces back to the concept of the meme proposed by R. Dawkins in the book "The Selfish Gene" (1976), where the meme is described as a unit of cultural information transmitted through imitation and communication [157]. Today, memes have become not only an object of study for cultural scholars but also a tool for targeted information influence. In the context of information warfare, memetic weapons are visual, textual, or audiovisual cultural artifacts created and distributed with the aim of changing the perceptions, beliefs, or behaviors of target audiences [158].

Social networks have radically increased the potential of memetic communication thanks to the high speed of replication, the algorithmic curation of news feeds, and a low threshold for user participation [159]. Memes in the digital environment have several strategic properties [160]:

- Virality: the ability to spread exponentially in network structures.
- Brevity and density: the transmission of complex meanings in minimal form.
- Emotional intensity: the use of humor, sarcasm, or outrage to amplify the response.
- Mimicry of "folk art", which makes them difficult to attribute.
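The "exponential spread" in the first property can be made concrete with a standard idealization, the Galton–Watson branching process. In this model, each share independently triggers on average R further shares. This is a simplifying assumption, since real networks are finite and heterogeneous and feeds are algorithmically curated. It is used here only to show why small changes in R matter. Starting from one seed post, let N_n be the number of shares in generation n:

```latex
\mathbb{E}[N_n] = R^{n}, \qquad
\mathbb{E}\Big[\sum_{n \ge 0} N_n\Big] = \sum_{n \ge 0} R^{n} = \frac{1}{1 - R}
\quad \text{for } 0 \le R < 1
```

With R = 0.5 the expected cascade totals 2 shares, and with R = 0.9 it totals 10. For R ≥ 1 the sum diverges, so in this idealization the cascade is limited only by the size of the network. The emotional intensity and algorithmic amplification listed above can be read as pushing R toward this threshold.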

Within the framework of information operations, memes perform the following functions [161]:

- Cognitive contagion: introducing simplified and emotionally charged narratives that replace complex discussions.
- Discrediting the enemy: forming a negative image of the opponent through ironic or grotesque depictions.
- Signaling belonging: marking "us" and "them" in online communities.
- Triggering: activating certain reactions upon repeated contact with the meme.

Empirical studies show that memetic campaigns are often part of broader coordinated information operations. For example, Johnson et al. [162] analyzed Twitter activity during the 2016 US presidential election and identified network clusters that distributed political memes in conjunction with botnets. The authors of [163] demonstrated that memes were used to increase polarization in Europe, with platform algorithms reinforcing "echo chambers."

Memes play a special role in crisis and conflict situations. During the conflict in eastern Ukraine, for example, visual jokes and caricatures became a tool both for mobilizing supporters and for demoralizing opponents [164]. A study by Ylä-Anttila [165] shows that political memes on Facebook during campaigns can form stable emotional frames, shaping long-term perceptions of events.

The effectiveness of memetic weapons stems from cognitive mechanisms: ease of memorization, emotional coloring, conformity, and the effect of repetition [166]. The same mechanisms make counteraction difficult, because memes quickly adapt, are reformatted, and integrate into new contexts.

Countermeasures include:

- automated meme detection using computer vision and contextual analysis [167];
- proactive creation of countermemes and positive narratives [168];
- media literacy and critical thinking as long-term protection [169].
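Automated meme detection often begins with perceptual hashing. Variants of one meme template (re-captioned, re-compressed, slightly recolored) keep similar hashes, while unrelated images differ. The sketch below implements the difference hash (dHash), one well-known perceptual hash. It is a swapped-in illustration rather than the method of [167]. It assumes the image has already been converted to grayscale and downscaled to 9 × 8 pixels (for example with an imaging library, not shown). The 10-bit match threshold is an illustrative assumption. Contextual analysis of captions and surrounding posts is a separate step.

```python
from typing import List

def dhash(gray: List[List[int]]) -> int:
    """64-bit difference hash of a grayscale image given as 8 rows x 9 columns.

    Each bit records whether a pixel is brighter than its right-hand neighbour,
    so the hash depends on local brightness gradients, not absolute values.
    """
    if len(gray) != 8 or any(len(row) != 9 for row in gray):
        raise ValueError("expected an 8x9 grayscale grid; downscale the image first")
    bits = 0
    for row in gray:
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | (1 if left > right else 0)
    return bits

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

def is_variant(h1: int, h2: int, max_distance: int = 10) -> bool:
    """Treat two images as variants of one meme if their hashes differ in few bits."""
    return hamming(h1, h2) <= max_distance
```

Each bit compares neighboring pixels. As a result, a uniform brightness change leaves the hash unchanged, and a small local edit flips only a few bits.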

However, as Phillips notes [170], excessive censorship of memes can be perceived as a restriction of freedom of expression, which in itself can become an object of information manipulation.

Thus, memetic weapons are an effective but hard-to-control tool of information warfare. They are highly adaptable and able to penetrate the collective consciousness through everyday communication on social networks.

4.5. Geopolitical Cases

Analyses of the 2016 US presidential election document systemic disinformation and coordinated operations using bots, targeted advertising, and memetic formats. Howard, Woolley, and Calo (JITP, 2018) [171] describe computational propaganda and the challenges it poses to voting rights. The report by DiResta and colleagues (Tactics & Tropes) [172] and SSCI materials document IRA activities aimed at polarization and at targeting vulnerable groups. Subsequent estimates show uneven exposure, with a disproportionate contribution from small "superconsuming" cohorts. They also show that simply increasing advertising transparency without cognitive prevention has limited impact [173,174,175].

In the European Union, information attacks are considered part of hybrid threats. The East StratCom Task Force maintains a case database (EUvsDisinfo), and the Joint Communication JOIN/2020/8 sets the framework for countering COVID-19 disinformation (rapid fact-checking, cooperation with platforms, and transparency). In parallel, "digital diplomacy against propaganda" is developing (Bjola and Pamment), but a persistent dilemma is how to balance it with the protection of human rights [176,177,178].

Since 2014, information operations in Ukraine have been integrated with military actions. After 24 February 2022, multilingual campaigns, deepfakes, operations on Telegram, and synchronization with cyberattacks all increased. International studies document both state and civilian practices of digital resilience, OSINT, and counter-memetic campaigns. Current analytical reviews (Chatham House, Atlantic Council/DFRLab) and the academic literature (International Affairs) converge in their assessment: the key factors are speed, cross-platform reach, and narrative localization [179,180,181,182].

In East Asia, China's "cognitive war" against Taiwan remains the most systematic case. It combines psychological, legal, and "public opinion" warfare (the so-called "Three Warfares"). It manifests itself in electoral cycles through the fabrication of narratives, the exploitation of vulnerabilities in the media environment, and the long-term "wear and tear" of trust in institutions [183,184,185].

In parallel, the 2019–2020 Hong Kong protests became a field of intense two-way struggle over meaning. Studies document how fakes and rumors served both to delegitimize the protests and to mobilize them, increasing polarization and reinforcing networked forms of leadership [186].

In Southeast Asia, the most tragic case is Myanmar. There, algorithmically amplified extremist speech and disinformation on Facebook were woven into a violent campaign against the Rohingya. Academic work suggests moving beyond a reduction to "hate speech" toward analysis of the institutional and platform mechanisms of escalation [187,188].

The Philippines demonstrates a "production" model of online disinformation. In 2016, digital fan communities and informal "influencer" brokerage networks amplified the effect of the offline mobilization of Duterte supporters. In 2022, persistent narratives of "authoritarian nostalgia" and conspiracy theories contributed to the electoral dynamics, with the audience participating in the "co-production" of disinformation [189,190].

In South Asia, India has become a laboratory for "encrypted" information warfare. Mass political communication via WhatsApp in the 2019 election campaign created opaque channels for circulating statements, channels largely beyond the reach of platform moderation. Empirical studies show that "tiplines" (crowdsourced channels for submitting suspicious content) allow for the early detection of viral messages and the reconstruction of cross-platform flows. Open social networks, by contrast, only partially reflect what is happening in end-to-end encrypted (E2E) environments [191,192].

In the multicultural and multilingual context of Kazakhstan, resilience relies on institutional measures of cybersecurity and information policy. The basic framework is set by the Cybersecurity Concept "Cyber Shield of Kazakhstan" (Government Resolution No. 407 of 30 June 2017) and subsequent initiatives. The ITU confirms the launch and implementation of the concept. Academic publications analyze vulnerabilities and lessons from the pandemic period for information security. Practice shows that during crises the share of disinformation spread through social networks and instant messengers increases. Both technical monitoring measures and media literacy programs are therefore required [193,194,195].

Taiwan demonstrates a model of "civic digital resilience": a collaborative effort between the state, the civic tech community, and fact-checkers. Research documents persistent attacks from the Chinese side, especially during electoral periods. At the same time, academic and applied works describe institutional and civic responses, ranging from collaborative fact-checking to preventive communication and educational programs [185,196,197].

Thus, the forms of information warfare are already very diverse, and there is every reason to believe that they will continue to evolve. As shown in the next section, there are tools capable of influencing the foundation of the mentality of any state's population: its sociocultural code.

5. Impact on the Sociocultural Code: Theory and Practice

The sociocultural code is a set of values, norms, symbols, historical narratives, and collective ideas that shape the identity of a society. Similar concepts such as "mentality" and "civilizational code" have also often been used in the earlier literature. In an information war, attacks on this code become a strategic tool that can weaken internal cohesion, undermine trust in historical heritage, and transform the system of value orientations. Such influences can be direct, through the distortion of historical facts and the imposition of alternative interpretations of events. They can also be indirect, including the gradual erosion of cultural constants through mass culture, media, and educational practices. Modern information campaigns draw on advances in cognitive psychology, linguistics, and digital technologies. They are capable of deliberately modifying the symbolic space of a nation, altering both how it is perceived from outside and its internal processes of self-identification. This section covers the theoretical understanding of the sociocultural code, the analysis of the mechanisms by which it is undermined, and practical examples of both successful attacks and effective defense strategies.

In studies of information security and cultural studies, the term "sociocultural code" denotes a set of stable symbols, values, norms, and narratives that structure the way a particular community perceives the world [198]. These elements act as a kind of "matrix" of collective identity, ensuring the continuity of historical experience and certain behavioral patterns. The structure of the sociocultural code includes linguistic forms, myths and historical narratives, cultural rituals, symbols, and value orientations. Together these form the basis for social interaction and political mobilization [199].

Information warfare can be considered a set of targeted impacts on the cognitive, emotional, and behavioral spheres of society. It is increasingly focused on attacks on the sociocultural code, and not just on operational disinformation. This approach reflects the understanding that destroying or modifying cultural meanings has longer-term consequences than short-term manipulation of facts [200]. The impact on the cultural code can be direct, for example, substituting historical interpretations or discrediting national symbols. It can also be indirect, through the gradual introduction of alternative value models and changes in the familiar linguistic field [201].

The mechanisms of identity fragmentation used in information operations are often built on setting groups within a single society against each other. Segmenting the cultural field along ethnic, religious, regional, or political lines weakens the common identity and increases the potential for conflict [202]. This process actively exploits emotional triggers associated with traumatic historical events or social injustices. These triggers allow the operators of information attacks to activate "latent" lines of cleavage [203].

One of the key areas of cultural demobilization is the undermining of trust in national history and memory. The systematic dissemination of revisionist interpretations and selective coverage of historical facts create a feeling that the past is subject to manipulation and cannot serve as a source of collective meaning [204]. Similar strategies are observed, for example, in a number of post-Soviet countries. There, media content deliberately downplayed the role of national figures or, conversely, focused on collaborationist episodes, creating a sense of "historical guilt" [205].

The cognitive and symbolic aspects of the transformation of the cultural code manifest themselves in shifts in the meanings of established symbols and concepts. This process is often accompanied by a semiotic "reversal" of symbols, in which positive cultural markers are redefined as negative, and vice versa. Visual images traditionally associated with national pride or spiritual values can be reinterpreted in a satirical or derogatory way, which reduces their mobilization potential [206]. Similar processes occur in language: keywords and phrases acquire new connotations that can transform public discourse without any obvious change in the facts [207].

The protection of cultural constants in the context of information warfare presupposes a set of measures that include both institutional and public initiatives. Key elements include [208]:

- supporting and developing the national language;
- preserving and popularizing historical memory;
- forming positive narratives that reflect the values and traditions of society.

Educational programs that promote critical perception of information and strengthen cultural identity among young people play an important role [209]. At the same time, modern approaches require a synthesis of humanistic and technological solutions. These range from digital archives and interactive museum exhibits to automated systems for monitoring and analyzing information attacks on the cultural space [210].

Practice shows that an attack on the sociocultural code can be as dangerous as physical intervention or economic pressure. Changing basic cultural guidelines can radically transform the political behavior of the population, change the perception of the legitimacy of power, and even influence international alliances. Therefore, in a globalized information space, the protection of cultural constants should be treated as a national-security priority [211].

5.1. The Concept and Structure of the Sociocultural Code

In the humanities and social sciences, the concept of a "sociocultural code" denotes a set of symbolic systems, norms, values, customs, and semiotic structures. These ensure the reproduction of a society's cultural identity and the integration of its members into a common system of meanings [212]. This code acts as a kind of "matrix" of collective thinking and behavior, determining how individuals interpret the surrounding reality, react to external events, and interact with each other.

In cultural studies and semiotics, a sociocultural code is interpreted as a multilevel semiotic system that includes linguistic, visual, behavioral, and institutional elements [213]. Yu. M. Lotman, in his theory of the semiosphere, described culture as a "mechanism that creates and transmits texts," in which codes are the rules for generating and interpreting these texts [214]. From the anthropological perspective (Clifford Geertz, Pierre Bourdieu), the cultural code is considered a set of "perceptual patterns" and "fields of practice" that structure social interaction [215,216].

Modern studies (Hofstede; Schwartz) clarify that the sociocultural code includes both explicit components (language, official symbols, laws) and latent ones (deep values, myths, collective memory) [217,218]. This makes it a complex and stable object that is nevertheless vulnerable to systemic influences.

According to Hofstede and Schwartz, the sociocultural code can be considered an integration of several interconnected subsystems:

Language code—the system of natural language, including vocabulary, syntax, idioms, and speech practices. Language not only conveys information but may also structure thinking (the Sapir-Whorf hypothesis) [219].

Value-normative code—a set of moral and ethical principles, norms of behavior, and social expectations that define what is acceptable and unacceptable [220].

Symbolic code—national and cultural symbols (flag, coat of arms, rituals, architecture) that serve as markers of identity [221].

Historical-narrative code—collective ideas about the past, historical myths, heroic figures, and traumatic events that shape national memory [222].

Social-institutional code—the structure of social institutions (family, education, religion, state) that consolidates and transmits cultural norms [223].

Each of these subsystems has its own mechanisms of resistance to change, yet each can be modified by targeted informational influence.

In the context of information warfare, the sociocultural code is both a strategic resource and a vulnerability. Changes in its individual elements can transform collective identity, political loyalty, and even readiness for mobilization [224]. For example, the substitution of a historical narrative or the redefinition of symbolic meanings can provoke a split in society or weaken its resistance to external pressure [225].

An analysis of conflicts of the last decade (Ukraine, Syria, Hong Kong) shows that the sociocultural code is often targeted through media campaigns, educational programs, cultural products, and social networks [226,227]. These channels make it possible to combine soft forms of influence (soft power) with elements of cognitive operations (see Section 3.5), creating the effect of "long-term penetration" into the cultural fabric of society.

One of the key characteristics of the sociocultural code is its dynamic stability. Research shows that codes can adapt to external challenges while maintaining their core values [228]. However, under a massive, multichannel attack, especially one using digital technologies and algorithmic targeting, this resilience declines [229]. The platform architecture of social media, which operates on the principles of the "attention economy" (see Section 3.4), facilitates the consolidation of new meanings through repetition and emotional reinforcement [230]. Thus, understanding the structure and functions of the sociocultural code is a prerequisite for developing strategies to protect it in modern information warfare.

Note that the above interpretations of the sociocultural code are primarily descriptive. In [231,232], a neural network theory of the noosphere was proposed. It reveals the mechanisms by which the sociocultural code is formed, at a level that makes it possible to interpret the code's evolution. According to Vernadsky, the noosphere is the shell of the Earth that arises from the collective activity of Homo sapiens [233].

This interpretation of the sociocultural code rests on the following considerations. It is generally accepted that when people communicate, they exchange information. This, however, is a rough approximation: in reality, any communication between people ultimately comes down to the exchange of signals between the neurons that make up their brains. A collective neural network thus arises, which at the global level can be identified with the noosphere as understood by V.I. Vernadsky. Rigorous mathematical models show that such a global network differs qualitatively from the mere totality of its individual fragments.

It is worth emphasizing that recent theories, grounded in arguments from physics, similarly treat the Universe by analogy with a neural network [234]. The conclusions of the cited report rest on results obtained in the general theory of evolution [235,236]. Vanchurin's concept [234] is consistent with conclusions reached in other fields of knowledge. There it was shown that complex systems of very diverse nature can be modeled as neural networks, and that this network structure determines their behavior [237,238,239].

The neural network interpretation of the noosphere allows us to assert that, alongside the personal level, there is also a suprapersonal level of information processing [240,241]. Indeed, as shown by a rigorous mathematical model in [242], among other works, the ability of a neural network to store and process information depends nonlinearly on the number of its elements. Current practice is consistent with this conclusion. Otherwise, there would be little point in building ever larger artificial neural networks, as is actually being done [243,244]. Consequently, if interpersonal communications generate a global (albeit fragmented) neural network, its properties cannot be reduced to those of its individual elements, i.e., the relatively independent fragments of the network localized within individual brains. A similar conclusion has long been formulated in philosophical literature: "social consciousness cannot be reduced to the consciousness of individuals." The existence of the suprapersonal level also helps reveal the nature of the collective unconscious, whose existence had previously been supported only empirically [245,246].
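The scaling argument can be made concrete with a minimal sketch. It assumes, purely for illustration, the classic estimate for a fully connected Hopfield network with Hebbian learning. Such a network of N neurons can recall roughly 0.138N random binary patterns. This model is not necessarily the one analyzed in [242]. Because each pattern spans N bits, the stored information grows roughly as N², so splitting the same neurons into k mutually isolated fragments reduces total storage by a factor of about k. The function names and the fragmentation scenario are hypothetical.

```python
"""Toy illustration of superlinear scaling in an associative memory (assumed Hopfield model)."""

# Assumption: classic Hopfield-network estimate for random binary patterns with
# Hebbian learning; used only for illustration, not necessarily the model of [242].
CRITICAL_LOAD = 0.138  # approximate maximum ratio (stored patterns / neurons)


def pattern_capacity(n_neurons: int) -> float:
    """Approximate number of random patterns a fully connected network can recall."""
    return CRITICAL_LOAD * n_neurons


def stored_bits(n_neurons: int) -> float:
    """Each pattern spans n_neurons bits, so stored information grows roughly as n**2."""
    return pattern_capacity(n_neurons) * n_neurons


def fragmented_bits(n_neurons: int, n_fragments: int) -> float:
    """Hypothetical case: the same neurons split into equal, mutually isolated fragments."""
    fragment_size = n_neurons // n_fragments
    return n_fragments * stored_bits(fragment_size)


if __name__ == "__main__":
    n = 10_000
    for k in (1, 10, 100):
        # Isolated fragments have no connections between them, hence less total storage.
        print(f"{k:>3} isolated fragment(s): ~{fragmented_bits(n, k):,.0f} bits")
```

Under this assumed model, a single connected network stores about ten times as much as ten isolated fragments with the same total number of neurons. This is one simple sense in which the properties of the whole cannot be reduced to those of its parts.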

From the perspective of the neural network interpretation of the noosphere, the collective unconscious is formed by objects that develop precisely at the suprapersonal level of information processing. Such information objects can be of very different kinds, and a significant share of them is evidently associated with the category of fashion. This can be traced most clearly through the conclusions of Baudrillard's well-known monograph [247]. That work convincingly showed that the value of many goods on the market consists of two components. One is associated with the satisfaction of current human needs (including physiological ones). The other is symbolic and therefore informational in nature. An obvious example: clothes should protect a person from the cold, but branded clothes also exist, and their main purpose is to demonstrate the social status of the owner. The same applies to goods in many other categories (branded watches, cars, etc.).

As shown in [248], mature scientific theories, natural languages, and similar systems can also be regarded as suprapersonal information objects. A sociocultural code is formed by a similar mechanism. It is a set of suprapersonal information objects that determine the characteristics of the collective behavior of the population of a particular country or ethnic group.

5.2. Information Warfare as an Attack on Cultural and Historical Meanings

Cultural and historical meanings form the foundation of collective identity, ensuring continuity between the past, present, and future of society. In the humanities, cultural meanings are understood as a set of symbolic contents encoded in language, art, and religious and social rituals, as well as in collective narratives that form images of the desirable and the unacceptable [249]. Historical meanings, in turn, are interpretations of key events of the past, anchored in the memory of society through official chronicles, educational programs, commemorative dates, and material artifacts [250]. In its modern understanding, information warfare treats these meanings not merely as the context in which the struggle is waged but as targets of influence. This is because a change in the interpretation of the past or a redefinition of cultural markers can transform the value orientations of the population and thereby change its political and social behavior [251]. In political communication and conflict studies, scholars argue that the struggle for meaning is a struggle for power over the perception of reality [252]. M. Foucault pointed out that power operates through control over discourse, which determines what is considered truth and what is consigned to oblivion [253]. In the context of information warfare, this means that strategic influence targets not so much facts as their interpretation and symbolic framing.

Modern doctrines of cognitive operations (see Section 3.5) treat cultural and historical meanings as key points of application of effort, since they underlie "frames," the cognitive structures through which people comprehend information [254]. Altering frames can radically change social attitudes without direct coercion, which makes this approach extremely attractive to strategic actors.

One of the most common methods of attack is rewriting history, in which an alternative version of key events is promoted through textbooks, documentaries, popular media, and Internet resources [255]. This may involve both selectively emphasizing certain aspects and completely excluding inconvenient facts from public discourse.

Another method is the redefinition of symbols. Flags, monuments, and national heroes can be reinterpreted, from the glorification of previously marginalized figures to the demonization of historical leaders [256]. This strategy is often accompanied by visual campaigns on social media, memetic content, and hashtag actions aimed at mass user engagement [257].

Discursive inversion is also used, in which key concepts of national identity (e.g., "freedom," "independence," "tradition") are filled with opposite or distorted content [258].

A study by Ross shows how a new historical narrative was formed in post-revolutionary France through educational reforms and popular culture [259]. Among more recent examples, analysis of the Ukrainian crisis of 2014–2022 demonstrates that both sides of the conflict actively use the media to reinforce their own interpretations of events and discredit those of the opposing side [260]. In the Baltic countries, programs to reinterpret the Soviet period have been implemented over the past three decades, accompanied by the dismantling of monuments and the renaming of streets [261]. In Syria and Iraq, the actions of terrorist organizations such as ISIS have included the targeted destruction of cultural artifacts, aimed at breaking cultural continuity and undermining the identity of local communities [262].

The digitalization of the information space has radically increased the scale and speed of attacks on cultural and historical meanings. Social media and video-hosting platforms allow instant distribution of content in which cultural symbols are reinterpreted through visual and auditory forms [263]. Algorithmic recommendation mechanisms amplify the impact by creating a selective reality for users, in which certain versions of the past become dominant [264].
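The amplification loop described above can be illustrated with a deliberately simple toy model. It does not describe any real platform's algorithm. Two competing interpretations of an event start with nearly equal shares of attention. A hypothetical ranking rule gives each interpretation a share of exposure proportional to its current share raised to a power `alpha`, and that exposure becomes the next round's share. The rule, the parameter `alpha`, and the starting values are assumptions. With `alpha > 1` (superlinear amplification), the slightly larger interpretation crowds out the other. With `alpha = 1` the shares stay fixed, and with `alpha < 1` they equalize.

```python
"""Toy mean-field model of engagement-driven ranking (hypothetical, for illustration only)."""


def rank_step(shares: list[float], alpha: float) -> list[float]:
    """One feedback round: exposure is proportional to current share ** alpha."""
    weighted = [s ** alpha for s in shares]
    total = sum(weighted)
    # Normalize so that exposure shares again sum to 1.
    return [w / total for w in weighted]


def simulate(shares: list[float], alpha: float, rounds: int) -> list[float]:
    """Apply the assumed ranking rule repeatedly; exposure becomes next round's share."""
    for _ in range(rounds):
        shares = rank_step(shares, alpha)
    return shares


if __name__ == "__main__":
    start = [0.52, 0.48]  # two competing narratives with almost equal initial attention
    for alpha in (0.5, 1.0, 1.5):
        final = simulate(start, alpha, rounds=20)
        print(f"alpha={alpha}: " + ", ".join(f"{s:.3f}" for s in final))
```

The sketch is only qualitative. In this model, any superlinear feedback lets a small initial advantage make one version of the past dominant. How strong such feedback actually is on real platforms is an empirical question that the model does not address.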

In addition, an analysis of algorithmic campaigns on Twitter and Facebook (2016–2020) shows that a significant portion of coordinated operations include culturally loaded messages, ranging from nationalist slogans to posts about controversial historical dates [265].

Effective protection of cultural and historical meanings requires integrated measures across educational, cultural, and information policies. These include transparency of historical sources, multidisciplinary review of educational materials, the development of digital media literacy, and the involvement of society in discussions of cultural heritage [266]. Of particular importance is the creation of positive narratives that strengthen identity rather than merely respond to external attacks [267].

Thus, information warfare in the 21st century is increasingly taking place on the battlefield of cultural and historical meanings. Success in this struggle depends not only on control over information channels, but also on the ability to protect and develop the system of meanings that underlies the collective “we”.

5.3. Mechanisms of Identity Fragmentation and Cultural Demobilization

Identity fragmentation in the context of information warfare is a process of blurring, splitting, and redefining collective ideas about belonging to a particular social, cultural, or national group. This process goes beyond the diversity of cultural forms that arises naturally in a globalized world. It involves targeted informational and communicative efforts aimed at undermining the shared value orientations, symbols, and narratives that ensure the unity of society [268].

Cultural demobilization is a closely related phenomenon in which a community loses its willingness to protect and reproduce its cultural codes and becomes passive in maintaining and transmitting them. As Castells notes, modern communication networks create opportunities for the atomization of groups and individuals, which in a political context reduces society's ability to consolidate around common goals [269].