Benchmarking the Responsiveness of Open-Source Text-to-Speech Systems

Abstract

1. Introduction

2. Related Works

2.1. Text-to-Speech (TTS)

- Intelligibility: measured using the word error rate (WER) of transcriptions produced by speech-to-text (STT) models.

- Prosody: evaluated via pitch variation and rhythm consistency.

- Speaker Identity: measures how closely the generated voice matches natural human speech by comparing voice features (e.g., tone and timbre) between synthetic and real recordings.

- Environmental Artifacts: measures unwanted noise or distortions in the generated audio, with higher artifact levels indicating lower audio quality.

- General Distribution Similarity: uses self-supervised embeddings to compare TTS outputs with real speech data.

2.2. End-to-End Systems

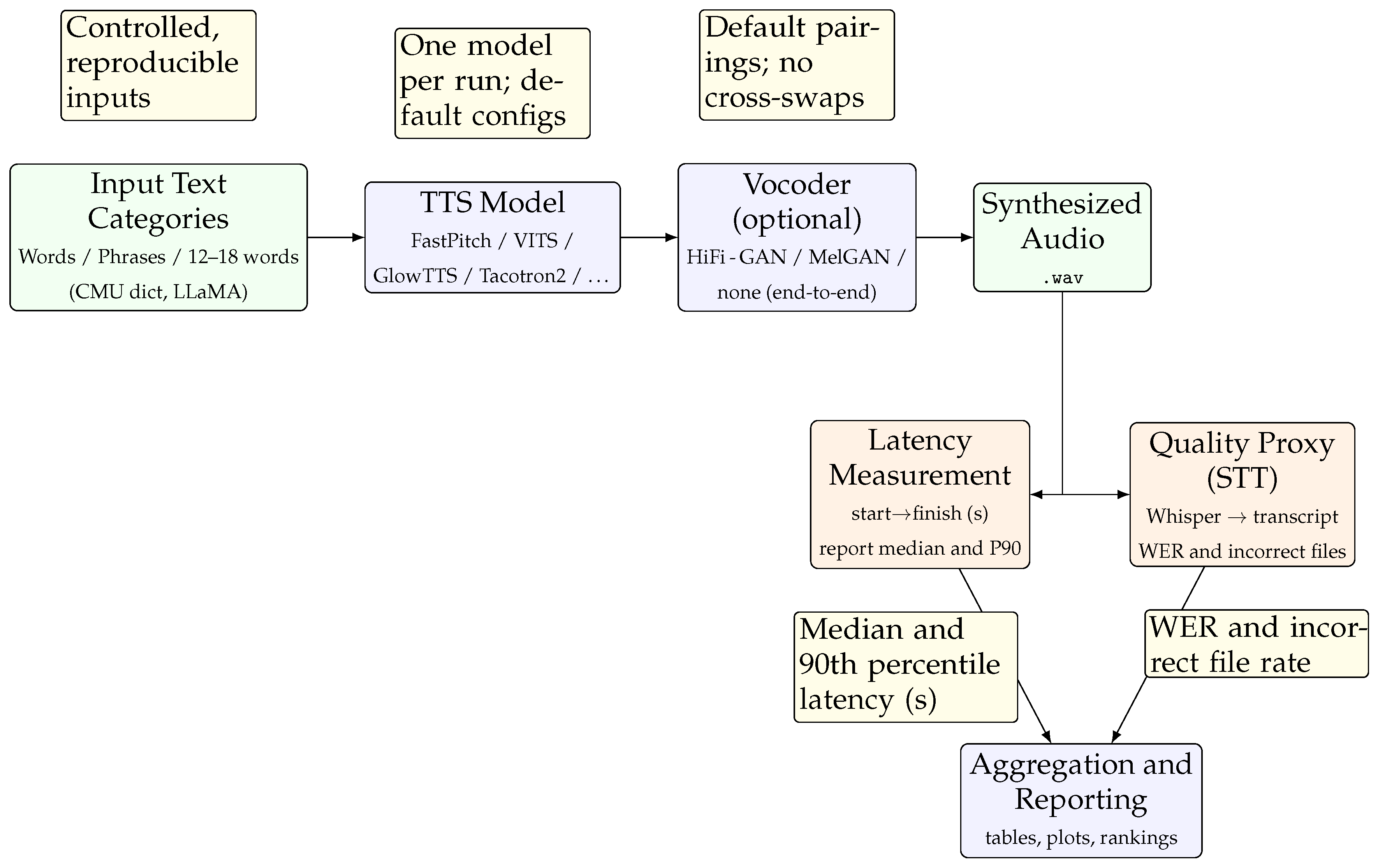

3. Benchmarking Methodology

- Latency: This measures the time from when the model begins processing the text input to when the generated audio file is fully produced. This study reports the median latency, which summarizes typical synthesis speed across the dataset while minimizing the effect of outliers. Lower median latency directly indicates faster audio generation, which is critical for real-time voice assistant performance. For a single input,

  $$\text{Latency} = t_{\text{end}} - t_{\text{start}},$$

  where

  - $t_{\text{start}}$ is the timestamp when the text input is passed to the TTS model;
  - $t_{\text{end}}$ is the timestamp when the audio file generation is completed.

- Tail Latency: This captures near-worst-case performance by measuring the 90th percentile (P90) latency across all samples. It reflects the occasional long delays that users encounter in voice assistant interactions; keeping this value low is essential for maintaining smooth and responsive user experiences. It is defined as

  $$\text{Tail Latency} = L_{P90},$$

  where

  - $L_{P90}$ is the 90th percentile latency across the dataset.

- Percentage of Incorrect Audio Files: The proportion of generated audio files whose transcriptions, after being converted back to text via STT and normalized as described above, do not exactly match the original input text. In other words, this metric is based on strict string equality between the normalized input and output texts.

- Overall WER: This is a cumulative measure of the word error rate (WER) across all transcriptions. The WER is a common metric for evaluating transcription accuracy and is calculated for a single sample as

  $$\text{WER} = \frac{S + D + I}{N},$$

  where

  - $S$ is the number of substitutions (incorrectly transcribed words);
  - $D$ is the number of deletions (missing words);
  - $I$ is the number of insertions (extra words added);
  - $N$ is the total number of words in the reference transcript.
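The per-input quantities defined in the list above (latency, its median and 90th percentile across a dataset, and the single-sample WER) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the benchmark's own code: `synthesize` is a hypothetical blocking callable that returns only after the audio file has been written; the percentile interpolates linearly between sorted values (NumPy's default rule, which may differ from the rule the authors used); and S, D and I come from a standard word-level minimum-edit-distance alignment of text that is assumed to be already normalized.

```python
import statistics
import time
from typing import Callable


def measure_latency(synthesize: Callable[[str], None], text: str) -> float:
    """Latency = t_end - t_start (seconds) for a single text input."""
    t_start = time.perf_counter()  # text input passed to the TTS model
    synthesize(text)               # hypothetical: blocks until the audio file is written
    t_end = time.perf_counter()    # audio file generation completed
    return t_end - t_start


def percentile(values: list[float], q: float) -> float:
    """q-th percentile, interpolating linearly between sorted values."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def summarize_latencies(latencies: list[float]) -> dict[str, float]:
    """Median latency and tail latency (P90) across a dataset."""
    return {"median": statistics.median(latencies), "p90": percentile(latencies, 90)}


def wer_counts(reference: str, hypothesis: str) -> tuple[int, int, int, int]:
    """Return (S, D, I, N) from a minimum-edit word alignment.

    Both strings are assumed to be normalized already; words are split on whitespace.
    """
    ref, hyp = reference.split(), hypothesis.split()
    # best[i][j] = (total edits, S, D, I) for aligning ref[:i] with hyp[:j]
    best = [[(0, 0, 0, 0)] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(1, len(ref) + 1):
        best[i][0] = (i, 0, i, 0)  # every reference word deleted
    for j in range(1, len(hyp) + 1):
        best[0][j] = (j, 0, 0, j)  # every hypothesis word inserted
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            e, s, d, ins = best[i - 1][j - 1]
            if ref[i - 1] == hyp[j - 1]:
                diag = (e, s, d, ins)          # match, no cost
            else:
                diag = (e + 1, s + 1, d, ins)  # substitution
            e, s, d, ins = best[i - 1][j]
            up = (e + 1, s, d + 1, ins)        # deletion
            e, s, d, ins = best[i][j - 1]
            left = (e + 1, s, d, ins + 1)      # insertion
            # fewest edits wins; ties go to fewer substitutions, then deletions
            best[i][j] = min(diag, up, left)
    _, s, d, ins = best[len(ref)][len(hyp)]
    return s, d, ins, len(ref)


def sample_wer(reference: str, hypothesis: str) -> float:
    """WER = (S + D + I) / N for one sample."""
    s, d, ins, n = wer_counts(reference, hypothesis)
    return (s + d + ins) / n
```

For example, `wer_counts("the cat sat on the mat", "the cat sit on mat")` aligns *sat* to *sit* as one substitution and drops one *the*, giving S = 1, D = 1, I = 0, N = 6 and a WER of 2/6 ≈ 0.33. When several alignments share the same total number of edits, this sketch prefers the one with the fewest substitutions, so its split into S, D and I can differ from other tools even though the WER itself does not.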

For multiple transcriptions, the overall WER, as used by Whisper [27], aggregates the WER across all samples by summing the total number of errors (substitutions, deletions, and insertions) across all transcriptions and dividing by the total number of words in all reference transcripts:

$$\text{Overall WER} = \frac{\sum_{i} (S_i + D_i + I_i)}{\sum_{i} N_i},$$

where $i$ indexes the individual samples in the dataset. This method provides a cumulative error rate that reflects the overall performance of the transcription system across the dataset, rather than an average of individual WER values.

- Median WER (Mismatched Files): In addition to the summation-based overall WER following Whisper's methodology, we also compute the median WER over only those files with mismatched transcriptions, providing further insight into the variability of transcription accuracy.

- GPU memory usage (MB): the peak memory allocated per inference, as reported by PyTorch (version 2.1.0 for FastPitch and VITS; 2.8.0 for Microsoft Windows Speech). We report the median of these per-input peak values, as the median is a more robust measure than the mean when outliers occur.

- GPU utilization (%) and power draw (W): sampled via nvidia-smi at 0.1-s intervals. For each input, we compute the average utilization and power, and across the dataset we report the mean.

- CPU utilization (%): collected using the psutil library, sampled at the same interval. Per-input averages are calculated, and the mean across inputs is reported.
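The dataset-level aggregation rules described in this section (percentage of incorrect files, summation-based overall WER, median WER over mismatched files, median of per-input peak GPU memory, and the mean of per-input utilization averages) can be sketched in Python as follows. The `InputResult` record and its field names are hypothetical and chosen for illustration; only GPU utilization is shown, on the assumption that power draw and CPU utilization are aggregated in the same way.

```python
import math
import statistics
from dataclasses import dataclass, field


@dataclass
class InputResult:
    """Hypothetical per-input record; field names are illustrative only."""
    errors: int             # S_i + D_i + I_i
    ref_words: int          # N_i
    exact_match: bool       # normalized transcript == normalized input text
    peak_gpu_mem_mb: float  # peak allocation during this inference
    gpu_util_samples: list[float] = field(default_factory=list)  # one reading per 0.1 s


def summarize(results: list[InputResult]) -> dict[str, float]:
    mismatched = [r for r in results if not r.exact_match]
    return {
        # share of audio files whose transcript does not exactly match the input
        "pct_incorrect": 100 * len(mismatched) / len(results),
        # summation-based overall WER: total errors / total reference words
        "overall_wer_pct": 100 * sum(r.errors for r in results)
        / sum(r.ref_words for r in results),
        # median per-sample WER, restricted to mismatched files
        "median_wer_mismatched_pct": (
            100 * statistics.median(r.errors / r.ref_words for r in mismatched)
            if mismatched else math.nan
        ),
        # median over inputs of the per-inference peak GPU memory
        "median_peak_gpu_mem_mb": statistics.median(r.peak_gpu_mem_mb for r in results),
        # average the samples within each input, then take the mean across inputs
        "mean_gpu_util_pct": statistics.mean(
            statistics.mean(r.gpu_util_samples) for r in results
        ),
    }
```

In a real harness, the utilization samples might come from polling `psutil.cpu_percent(interval=None)` or querying `nvidia-smi --query-gpu=utilization.gpu,power.draw --format=csv,noheader,nounits` every 0.1 s, and the peak memory from calling `torch.cuda.reset_peak_memory_stats()` before each inference and `torch.cuda.max_memory_allocated()` after it. These are plausible choices rather than the exact calls confirmed for this study.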

4. Experiments—Benchmarked Text Inputs

4.1. Evaluation Categories

4.2. TTS Models

5. Results

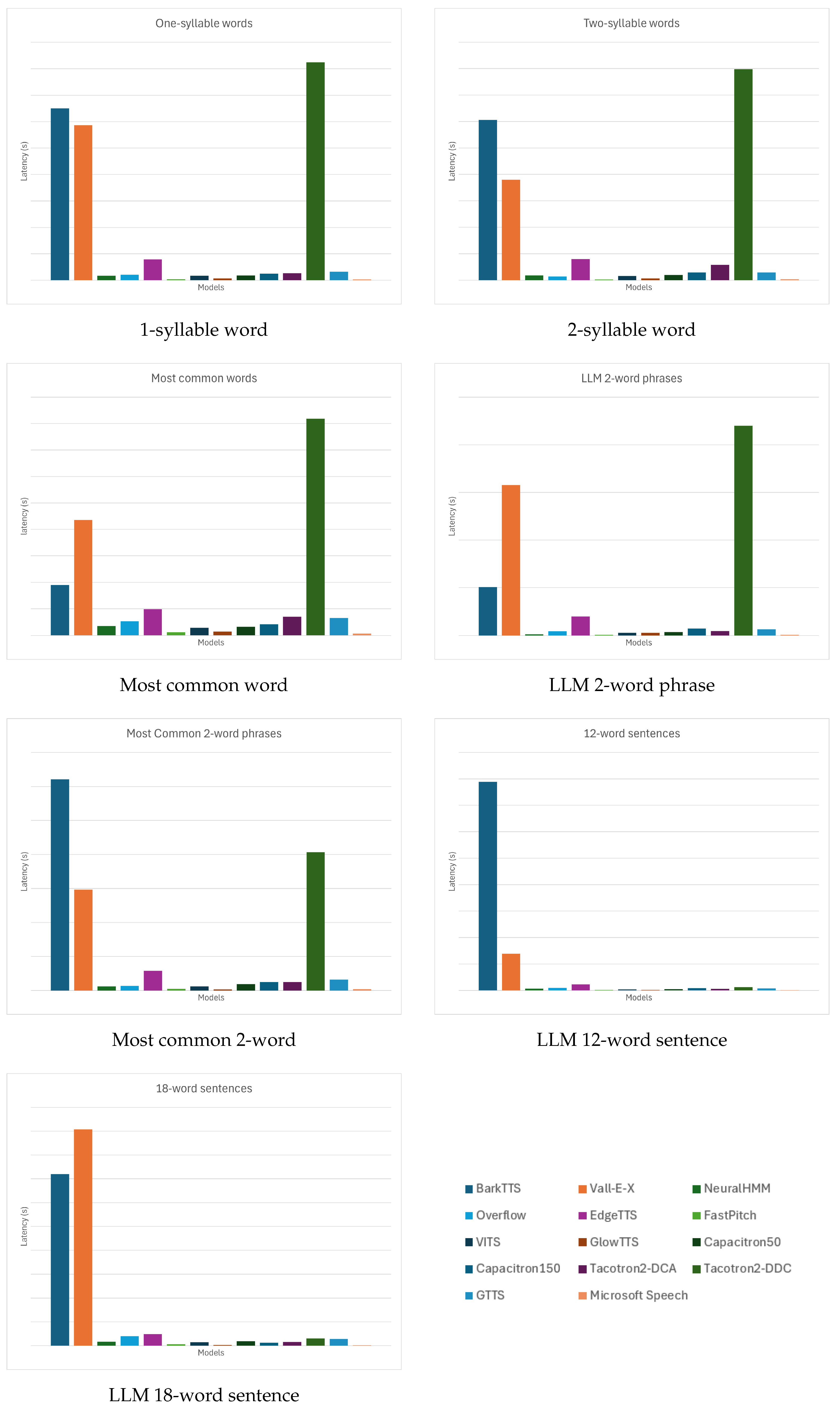

5.1. Responsiveness

5.2. Audio Quality

5.3. Secondary Metric: System Resource Utilization

6. Discussions and Future Works

6.1. High-Performing Models: Responsiveness, Quality Balance, and Deployment Considerations

6.2. Unusual Patterns in Performance Metrics

6.3. Cloud-Based TTS Services: GTTS vs. EdgeTTS

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Hoy, M. Alexa, Siri, Cortana, and More: An Introduction to Voice Assistants. Med. Ref. Serv. Q. 2018, 37, 81–88.

2. Sainath, T.N.; Parada, C. Convolutional Neural Networks for Small-Footprint Keyword Spotting. In Proceedings of the 16th Annual Conference of the International Speech Communication Association (INTERSPEECH), Dresden, Germany, 6–10 September 2015; pp. 1478–1482.

3. Singh, D.; Kaur, G.; Bansal, A. How Voice Assistants Are Taking Over Our Lives—A Review. J. Emerg. Technol. Innov. Res. 2019, 6, 355–357.

4. Défossez, A.; Mazaré, L.; Orsini, M.; Royer, A.; Pérez, P.; Jégou, H.; Grave, E.; Zeghidour, N. Moshi: A speech-text foundation model for real-time dialogue. arXiv 2024, arXiv:2410.00037.

5. Srivastava, A.; Rastogi, A.; Rao, A.; Shoeb, A.A.M.; Abid, A.; Fisch, A.; Brown, A.R.; Santoro, A.; Gupta, A.; Garriga-Alonso, A.; et al. Beyond the imitation game: Quantifying and extrapolating the capabilities of language models. arXiv 2022, arXiv:2206.04615.

6. Hendrycks, D.; Burns, C.; Basart, S.; Zou, A.; Mazeika, M.; Song, D.; Steinhardt, J. Measuring massive multitask language understanding. arXiv 2020, arXiv:2009.03300.

7. Chen, M.; Tworek, J.; Jun, H.; Yuan, Q.; Pinto, H.P.D.O.; Kaplan, J.; Edwards, H.; Burda, Y.; Joseph, N.; Brockman, G.; et al. Evaluating large language models trained on code. arXiv 2021, arXiv:2107.03374.

8. Reddi, V.J.; Cheng, C.; Kanter, D.; Mattson, P.; Schmuelling, G.; Wu, C.J.; Anderson, B.; Breughe, M.; Charlebois, M.; Chou, W.; et al. Mlperf inference benchmark. In Proceedings of the 2020 ACM/IEEE 47th Annual International Symposium on Computer Architecture (ISCA), Virtual, 30 May–3 June 2020; pp. 446–459.

9. Spangher, L.; Li, T.; Arnold, W.F.; Masiewicki, N.; Dotiwalla, X.; Parusmathi, R.; Grabowski, P.; Ie, E.; Gruhl, D. Project MPG: Towards a generalized performance benchmark for LLM capabilities. arXiv 2024, arXiv:2410.22368.

10. Banerjee, D.; Singh, P.; Avadhanam, A.; Srivastava, S. Benchmarking LLM powered chatbots: Methods and metrics. arXiv 2023, arXiv:2308.04624.

11. Bai, G.; Liu, J.; Bu, X.; He, Y.; Liu, J.; Zhou, Z.; Lin, Z.; Su, W.; Ge, T.; Zheng, B.; et al. Mt-bench-101: A fine-grained benchmark for evaluating large language models in multi-turn dialogues. arXiv 2024, arXiv:2402.14762.

12. Malode, V.M. Benchmarking Public Large Language Model. Ph.D. Thesis, Technische Hochschule Ingolstadt, Ingolstadt, Germany, 2024.

13. Jacovi, A.; Wang, A.; Alberti, C.; Tao, C.; Lipovetz, J.; Olszewska, K.; Haas, L.; Liu, M.; Keating, N.; Bloniarz, A.; et al. The FACTS Grounding Leaderboard: Benchmarking LLMs’ Ability to Ground Responses to Long-Form Input. arXiv 2025, arXiv:2501.03200.

14. Wang, B.; Zou, X.; Lin, G.; Sun, S.; Liu, Z.; Zhang, W.; Liu, Z.; Aw, A.; Chen, N.F. Audiobench: A universal benchmark for audio large language models. arXiv 2024, arXiv:2406.16020.

15. Gandhi, S.; Von Platen, P.; Rush, A.M. Esb: A benchmark for multi-domain end-to-end speech recognition. arXiv 2022, arXiv:2210.13352.

16. Fang, Y.; Sun, H.; Liu, J.; Zhang, T.; Zhou, Z.; Chen, W.; Xing, X.; Xu, X. S2SBench: A Benchmark for Quantifying Intelligence Degradation in Speech-to-Speech Large Language Models. arXiv 2025, arXiv:2505.14438.

17. Chen, Y.; Yue, X.; Zhang, C.; Gao, X.; Tan, R.T.; Li, H. Voicebench: Benchmarking llm-based voice assistants. arXiv 2024, arXiv:2410.17196.

18. Alberts, L.; Ellis, B.; Lupu, A.; Foerster, J. CURATe: Benchmarking Personalised Alignment of Conversational AI Assistants. arXiv 2024, arXiv:2410.21159.

19. Fnu, N.; Bansal, A. Understanding the architecture of vision transformer and its variants: A review. In Proceedings of the 2024 1st International Conference on Innovative Engineering Sciences and Technological Research (ICIESTR), Muscat, Oman, 14–15 May 2024; pp. 1–6.

20. Viswanathan, M.; Viswanathan, M. Measuring speech quality for text-to-speech systems: Development and assessment of a modified mean opinion score (MOS) scale. Comput. Speech Lang. 2005, 19, 55–83.

21. mrfakename; Srivastav, V.; Fourrier, C.; Pouget, L.; Lacombe, Y.; main; Gandhi, S. Text to Speech Arena. 2024. Available online: https://huggingface.co/spaces/TTS-AGI/TTS-Arena (accessed on 1 August 2025).

22. mrfakename; Srivastav, V.; Fourrier, C.; Pouget, L.; Lacombe, Y.; main; Gandhi, S.; Passos, A.; Cuenca, P. TTS Arena 2.0: Benchmarking Text-to-Speech Models in the Wild. 2025. Available online: https://huggingface.co/spaces/TTS-AGI/TTS-Arena-V2 (accessed on 1 August 2025).

23. Picovoice. Picovoice TTS Latency Benchmark. 2024. Available online: https://github.com/Picovoice/tts-latency-benchmark (accessed on 1 August 2025).

24. Artificial Analysis. Text-to-Speech Benchmarking Methodology. 2024. Available online: https://artificialanalysis.ai/text-to-speech (accessed on 1 August 2025).

25. Labelbox. Evaluating Leading Text-to-Speech Models. 2024. Available online: https://labelbox.com/guides/evaluating-leading-text-to-speech-models/ (accessed on 1 August 2025).

26. Minixhofer, C.; Klejch, O.; Bell, P. TTSDS-Text-to-Speech Distribution Score. In Proceedings of the 2024 IEEE Spoken Language Technology Workshop (SLT), Macau, China, 2–5 December 2024; pp. 766–773.

27. Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust speech recognition via large-scale weak supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 28492–28518.

28. Griffies, S.M.; Perrie, W.A.; Hull, G. Elements of style for writing scientific journal articles. In Publishing Connect; Elsevier: Amsterdam, The Netherlands, 2013; pp. 20–50.

29. Wallwork, A. English for Writing Research Papers; Springer: Berlin/Heidelberg, Germany, 2016.

30. Carnegie Mellon University Speech Group. The Carnegie Mellon Pronouncing Dictionary (CMUdict). 1993–2014. Available online: http://www.speech.cs.cmu.edu/cgi-bin/cmudict (accessed on 23 July 2025).

31. Day, D.L. CMU Pronouncing Dictionary Python Package. 2014. Available online: https://pypi.org/project/cmudict/ (accessed on 23 July 2025).

32. Davies, M. Word Frequency Data from the Corpus of Contemporary American English (COCA). 2008. Available online: https://www.wordfrequency.info (accessed on 23 July 2025).

33. Davies, M. The iWeb Corpus: 14 Billion Words of English from the Web. 2018. Available online: https://www.english-corpora.org/iweb/ (accessed on 23 July 2025).

34. Suno-AI. Bark: A Transformer-Based Text-to-Audio Model. 2023. Available online: https://github.com/suno-ai/bark (accessed on 10 March 2025).

35. Défossez, A.; Copet, J.; Synnaeve, G.; Adi, Y. High fidelity neural audio compression. arXiv 2022, arXiv:2210.13438.

36. Zhang, Z.; Zhou, L.; Wang, C.; Chen, S.; Wu, Y.; Liu, S.; Chen, Z.; Liu, Y.; Wang, H.; Li, J.; et al. Speak foreign languages with your own voice: Cross-lingual neural codec language modeling. arXiv 2023, arXiv:2303.03926.

37. Mehta, S.; Székely, É.; Beskow, J.; Henter, G.E. Neural HMMs are all you need (for high-quality attention-free TTS). In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 7457–7461.

38. Su, J.; Jin, Z.; Finkelstein, A. HiFi-GAN-2: Studio-quality speech enhancement via generative adversarial networks conditioned on acoustic features. In Proceedings of the 2021 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 17–20 October 2021; pp. 166–170.

39. Ito, K.; Johnson, L. The LJ Speech Dataset. 2017. Available online: https://keithito.com/LJ-Speech-Dataset/ (accessed on 1 August 2025).

40. Mehta, S.; Kirkland, A.; Lameris, H.; Beskow, J.; Székely, É.; Henter, G.E. OverFlow: Putting flows on top of neural transducers for better TTS. arXiv 2022, arXiv:2211.06892.

41. rany2. Edge-tts: Microsoft Edge Text-to-Speech Library. 2021. Available online: https://pypi.org/project/edge-tts/ (accessed on 6 August 2025).

42. Łańcucki, A. Fastpitch: Parallel text-to-speech with pitch prediction. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6588–6592.

43. Kim, J.; Kong, J.; Son, J. Conditional variational autoencoder with adversarial learning for end-to-end text-to-speech. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 5530–5540.

44. Kim, J.; Kim, S.; Kong, J.; Yoon, S. Glow-tts: A generative flow for text-to-speech via monotonic alignment search. Adv. Neural Inf. Process. Syst. 2020, 33, 8067–8077.

45. Yang, G.; Yang, S.; Liu, K.; Fang, P.; Chen, W.; Xie, L. Multi-band melgan: Faster waveform generation for high-quality text-to-speech. In Proceedings of the 2021 IEEE Spoken Language Technology Workshop (SLT), Online, 19–22 January 2021; pp. 492–498.

46. Battenberg, E.; Mariooryad, S.; Stanton, D.; Skerry-Ryan, R.; Shannon, M.; Kao, D.; Bagby, T. Effective use of variational embedding capacity in expressive end-to-end speech synthesis. arXiv 2019, arXiv:1906.03402.

47. King, S.; Karaiskos, V. The Blizzard Challenge 2013. In Proceedings of the Blizzard Challenge Workshop, Barcelona, Spain, 3 September 2013.

48. Shen, J.; Pang, R.; Weiss, R.J.; Schuster, M.; Jaitly, N.; Yang, Z.; Chen, Z.; Zhang, Y.; Wang, Y.; Skerry-Ryan, R.; et al. Natural TTS Synthesis by Conditioning Wavenet on Mel Spectrogram Predictions. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 4779–4783.

49. Durette, P.N. gTTS: Documentation. 2014. Available online: https://gtts.readthedocs.io/ (accessed on 10 March 2025).

50. Microsoft Corporation. Speech API 5.4 Documentation. 2009. Available online: https://learn.microsoft.com/en-us/previous-versions/windows/desktop/ee125663(v=vs.85) (accessed on 10 March 2025).

51. NVIDIA. How to Deploy Real-Time Text-to-Speech Applications on GPUs Using TensorRT. 2023. Available online: https://developer.nvidia.com/blog/how-to-deploy-real-time-text-to-speech-applications-on-gpus-using-tensorrt/ (accessed on 1 August 2025).

52. Milvus AI. What Are the Challenges of Deploying TTS on Embedded Systems? 2023. Available online: https://blog.milvus.io/ai-quick-reference/what-are-the-challenges-of-deploying-tts-on-embedded-systems (accessed on 1 August 2025).

53. Stern, M.; Shazeer, N.; Uszkoreit, J. Blockwise parallel decoding for deep autoregressive models. Adv. Neural Inf. Process. Syst. 2018, 31, 1–10.

| Input Type | # Words | Justification | Source | # Data Points |
|---|---|---|---|---|
| Single Words | | | | |
| 1-syllable words | 1 | Single words containing exactly one syllable, filtered and deduplicated. | CMU Dictionary [30,31] | 5352 |
| 2-syllable words | 1 | Single words containing exactly two syllables, filtered and deduplicated. | CMU Dictionary [30,31] | 13,549 |
| Most common in dialogues | 1 | Frequently spoken standalone words (questions, answers, imperatives, etc.). | COCA [32] | 501 |
| 2-Word Phrases | | | | |
| LLM-generated | 2 | Random 2-word phrases used as standalone expressions, deduplicated. | LLaMA 3 8B (LLM-generated) | 5659 |
| Most common | 2 | Most frequent spoken 2-word N-grams based on raw frequency. | iWeb Corpus [33] | 504 |
| Sentences with Specific Lengths | | | | |
| 12-word sentences | 12 | Minimum recommended sentence length for benchmarking. | LLaMA 3 8B (LLM-generated) | 4994 |
| 18-word sentences | 18 | Maximum recommended sentence length for benchmarking. | LLaMA 3 8B (LLM-generated) | 5506 |

| Model | Architecture/Approach | Setup (Vocoder/Dataset) | Year | Stars/Forks | Key Features |
|---|---|---|---|---|---|
| BarkTTS [34] | Transformer text-to-audio, semantic token-based | EnCodec [35] | 2023 | 38.5k/4.6k | Multilingual, includes nonverbal audio and music |
| VALL-E X [36] | Neural codec language model | EnCodec [35] | 2023 | 7.9k/790 | Zero-shot voice cloning, cross-lingual synthesis |
| Neural HMM [37] | Probabilistic seq2seq with HMM alignment | HiFi-GAN 2 [38]/LJSpeech [39] | 2022 | 41.6k/5.4k | Stable synthesis, adjustable speaking rate, low data need |
| Overflow [40] | Neural HMM + normalizing flows | HiFi-GAN 2 [38]/LJSpeech [39] | 2022 | 41.6k/5.4k | Expressive, low WER, efficient training |
| Edge-TTS [41] | Cloud API | Cloud backend | 2021 | N/A | Lightweight, easy integration, multilingual |
| FastPitch [42] | Parallel non-autoregressive, pitch-conditioned | HiFi-GAN 2 [38]/LJSpeech [39] | 2021 | 41.6k/5.4k | Low latency, controllable prosody, real-time capable |
| VITS [43] | End-to-end VAE + adversarial learning | None/LJSpeech [39] | 2021 | 41.6k/5.4k | Unified pipeline, high naturalness, expressive prosody |
| Glow-TTS [44] | Flow-based, monotonic alignment | Multiband MelGAN [45]/LJSpeech [39] | 2020 | 41.6k/5.4k | Fast inference, robust alignment, pitch/rhythm control |
| Capacitron [46] | Tacotron 2 + VAE for prosody | HiFi-GAN 2 [38]/Blizzard 2013 [47] | 2019 | 41.6k/5.4k | Fine-grained prosody modeling, expressive synthesis |
| Tacotron 2 (DCA/DDC) [48] | Two-stage seq2seq, attention-based | HiFi-GAN 2 [38], Multiband MelGAN [45]/LJSpeech [39] | 2018 | 41.6k/5.4k | High naturalness, improved alignment with DDC/DCA |
| Google TTS (gTTS) [49] | Cloud API (Google Translate) | Cloud backend | 2014 | N/A | Lightweight, easy integration, multilingual |
| Microsoft Speech API 5.4 (Microsoft Speech) [50] | Concatenative/parametric synthesis | Built-in voices/Windows | 2009 | N/A | Commercial baseline, real-time, multilingual support |

| Model | 1-Syllable Word (Median) | 1-Syllable Word (P90) | 2-Syllable Word (Median) | 2-Syllable Word (P90) | Most Common Word (Median) | Most Common Word (P90) | LLM 2-Word Phrase (Median) | LLM 2-Word Phrase (P90) | Most Common 2-Word (Median) | Most Common 2-Word (P90) | LLM 12-Word Sentence (Median) | LLM 12-Word Sentence (P90) | LLM 18-Word Sentence (Median) | LLM 18-Word Sentence (P90) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BarkTTS [34] | 5.73 | 13.01 | 4.41 | 12.11 | 1.49 | 1.90 | 2.01 | 5.05 | 7.45 | 12.42 | 31.05 | 78.86 | 14.75 | 36.00 |
| Vall-E-X [36] | 2.20 | 11.72 | 1.75 | 5.59 | 2.63 | 4.36 | 7.53 | 15.78 | 1.73 | 5.93 | 10.09 | 13.90 | 17.79 | 45.38 |
| NeuralHMM [37] | 0.27 | 0.33 | 0.28 | 0.35 | 0.24 | 0.34 | 0.06 | 0.12 | 0.20 | 0.24 | 0.45 | 0.70 | 0.64 | 0.85 |
| Overflow [40] | 0.33 | 0.41 | 0.23 | 0.28 | 0.34 | 0.53 | 0.37 | 0.45 | 0.22 | 0.27 | 0.68 | 0.88 | 1.56 | 1.99 |
| EdgeTTS [41] | 1.11 | 1.58 | 1.29 | 1.59 | 0.83 | 0.98 | 1.19 | 1.98 | 0.87 | 1.16 | 1.64 | 2.26 | 1.78 | 2.41 |
| Fastpitch [42] | 0.06 | 0.09 | 0.04 | 0.05 | 0.05 | 0.12 | 0.04 | 0.07 | 0.04 | 0.10 | 0.06 | 0.17 | 0.13 | 0.30 |
| VITS [43] | 0.29 | 0.33 | 0.22 | 0.31 | 0.19 | 0.28 | 0.25 | 0.29 | 0.20 | 0.23 | 0.28 | 0.42 | 0.51 | 0.73 |
| GlowTTS [44] | 0.10 | 0.14 | 0.09 | 0.13 | 0.09 | 0.14 | 0.23 | 0.29 | 0.05 | 0.07 | 0.08 | 0.13 | 0.09 | 0.15 |
| Capacitron50 [46] | 0.23 | 0.35 | 0.24 | 0.39 | 0.20 | 0.32 | 0.29 | 0.37 | 0.19 | 0.37 | 0.35 | 0.48 | 0.66 | 0.94 |
| Capacitron150 [46] | 0.25 | 0.51 | 0.28 | 0.58 | 0.22 | 0.42 | 0.33 | 0.69 | 0.22 | 0.50 | 0.64 | 0.86 | 0.39 | 0.62 |
| Tacotron2-DCA [48] | 0.10 | 0.53 | 0.18 | 1.16 | 0.16 | 0.70 | 0.09 | 0.46 | 0.18 | 0.50 | 0.44 | 0.62 | 0.53 | 0.77 |
| Tacotron2-DDC [48] | 0.17 | 16.49 | 0.26 | 15.95 | 0.37 | 8.19 | 0.45 | 21.99 | 0.16 | 8.14 | 0.91 | 1.24 | 0.93 | 1.52 |
| GTTS [49] | 0.43 | 0.65 | 0.45 | 0.58 | 0.58 | 0.65 | 0.42 | 0.62 | 0.58 | 0.64 | 0.68 | 0.78 | 0.93 | 1.39 |
| Microsoft Speech [50] | 0.06 | 0.07 | 0.06 | 0.08 | 0.06 | 0.07 | 0.07 | 0.08 | 0.06 | 0.07 | 0.07 | 0.08 | 0.07 | 0.08 |

| Model | 12-Word: % Incorrect | 12-Word: Overall WER (%) | 12-Word: Median WER (%) | 18-Word: % Incorrect | 18-Word: Overall WER (%) | 18-Word: Median WER (%) |
|---|---|---|---|---|---|---|
| BarkTTS [34] | 26.72 | 5.28 | 8.33 | 32.30 | 4.20 | 5.56 |
| Vall-E-X [36] | 26.36 | 4.04 | 8.33 | 32.67 | 3.75 | 5.56 |
| NeuralHMM [37] | 51.44 | 8.48 | 16.67 | 62.78 | 8.50 | 11.11 |
| Overflow [40] | 12.06 | 1.38 | 8.33 | 16.32 | 1.28 | 5.56 |
| EdgeTTS [41] | 3.02 | 0.41 | 16.67 | 4.02 | 0.38 | 11.11 |
| Fastpitch [42] | 7.15 | 0.82 | 8.33 | 9.43 | 0.77 | 5.56 |
| VITS [43] | 5.11 | 0.65 | 8.33 | 7.11 | 0.65 | 5.56 |
| GlowTTS [44] | 14.92 | 1.80 | 8.33 | 20.03 | 1.69 | 5.56 |
| Capacitron 50 [46] | 17.01 | 3.90 | 16.67 | 23.87 | 4.77 | 11.11 |
| Capacitron 150 [46] | 28.19 | 7.94 | 16.67 | 23.64 | 4.89 | 11.11 |
| Tacotron 2-DCA [48] | 16.01 | 2.01 | 8.33 | 20.40 | 1.99 | 5.56 |
| Tacotron 2-DDC [48] | 12.56 | 2.68 | 8.33 | 19.68 | 2.84 | 5.56 |
| GTTS [49] | 2.84 | 0.40 | 16.67 | 4.25 | 0.39 | 10.82 |
| Microsoft Speech [50] | 2.86 | 0.39 | 16.67 | 4.09 | 0.39 | 10.53 |
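As a worked check on the Median WER columns in the table above: a mismatched file normally contains at least one word error, and its per-sample WER is $k/N$ with $k = S + D + I$. Assuming normalization leaves each reference at exactly 12 or 18 words, the smallest possible values are

$$
\frac{1}{12} \approx 8.33\%,\quad \frac{2}{12} \approx 16.67\% \qquad\text{and}\qquad \frac{1}{18} \approx 5.56\%,\quad \frac{2}{18} \approx 11.11\%,
$$

which are exactly the values that dominate those columns; in other words, the median mismatched file has only one or two word errors. The few entries outside this set (10.82 and 10.53) suggest that some references change length after normalization, or that the median falls between two such values.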

| Model | Input | Median GPU Mem (MB) | Mean GPU Util (%) | Mean GPU Power (W) | Mean CPU Util (%) | Median CPU Mem (MB) |
|---|---|---|---|---|---|---|
| FastPitch | Words | 1272.75 | 21.93 | 13.04 | 0.63 | 2174.81 |
| | 2-word | 1155.02 | 23.14 | 10.43 | 0.94 | 2229.18 |
| | 12-word | 1273.49 | 28.84 | 10.92 | 0.00 | 2280.49 |
| | 18-word | 1276.32 | 23.03 | 11.83 | 0.00 | 2374.91 |
| VITS | Words | 657.22 | 30.65 | 9.37 | 7.74 | 1652.76 |
| | 2-word | 657.27 | 27.02 | 10.69 | 8.92 | 1648.10 |
| | 12-word | 661.09 | 17.06 | 12.48 | 0.00 | 1905.41 |
| | 18-word | 661.15 | 25.90 | 11.94 | 0.00 | 1851.96 |
| Microsoft Speech | Words | N/A | 14.58 | 6.30 | 0.00 | 98.06 |
| | 2-word | N/A | 14.31 | 5.75 | 0.00 | 484.85 |
| | 12-word | N/A | 19.92 | 6.21 | 0.00 | 105.81 |
| | 18-word | N/A | 12.29 | 6.70 | 0.00 | 105.45 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dinh, H.P.T.; Patamia, R.A.; Liu, M.; Cosgun, A. Benchmarking the Responsiveness of Open-Source Text-to-Speech Systems. Computers 2025, 14, 406. https://doi.org/10.3390/computers14100406
