Robustly Effective Approaches on Motor Imagery-Based Brain Computer Interfaces

Abstract

1. Introduction

1.1. Acquisition Methods

1.2. Communication Approaches

1.3. Process

1.4. Feature Domains

- Discriminative features;

- Statistical features;

- Data-driven adaptive features.
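Of the three domains listed, statistical features are the simplest to illustrate. The following minimal NumPy sketch computes four per-channel moments of a single trial. The function name `statistical_features` and the particular choice of moments are illustrative assumptions, not the feature sets used by the reviewed works.

```python
import numpy as np


def statistical_features(epoch):
    """Per-channel statistical features for one EEG trial (illustrative sketch).

    epoch: array of shape (n_channels, n_samples).
    Returns an array of shape (n_channels, 4) holding the mean, population
    variance, skewness and excess kurtosis of each channel.
    A constant channel (zero variance) yields NaN for skewness and kurtosis.
    """
    x = np.asarray(epoch, dtype=float)
    mean = x.mean(axis=1)
    centered = x - mean[:, None]
    var = (centered ** 2).mean(axis=1)                     # population variance
    std = np.sqrt(var)
    skew = (centered ** 3).mean(axis=1) / std ** 3
    kurt = (centered ** 4).mean(axis=1) / var ** 2 - 3.0   # excess kurtosis
    return np.column_stack([mean, var, skew, kurt])


# Hypothetical example: 3 channels, 1 s of synthetic data at 250 Hz.
rng = np.random.default_rng(0)
trial = rng.standard_normal((3, 250))
print(statistical_features(trial).shape)  # (3, 4)
```

Each row can then be flattened into a feature vector for a conventional classifier such as an SVM or kNN.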

2. Review’s Approach

2.1. Structure of the Work

2.2. Research of Scientific Works

Research questions:

- What are the most commonly used feature extraction methods in motor imagery BCIs?

- Which methods are robust?

- Which approaches combine high robustness with high accuracy?

Inclusion criteria:

- The paper describes a feature extraction method in the MI-BCI field;

- The method described in the paper aims to extract robust brain activity features;

- The paper presents numerical results for the evaluation metrics.

Exclusion criteria:

- The paper describes toolboxes;

- The paper is not related to the BCI field;

- The paper does not describe a feature extraction method;

- The proceedings were not accessible.

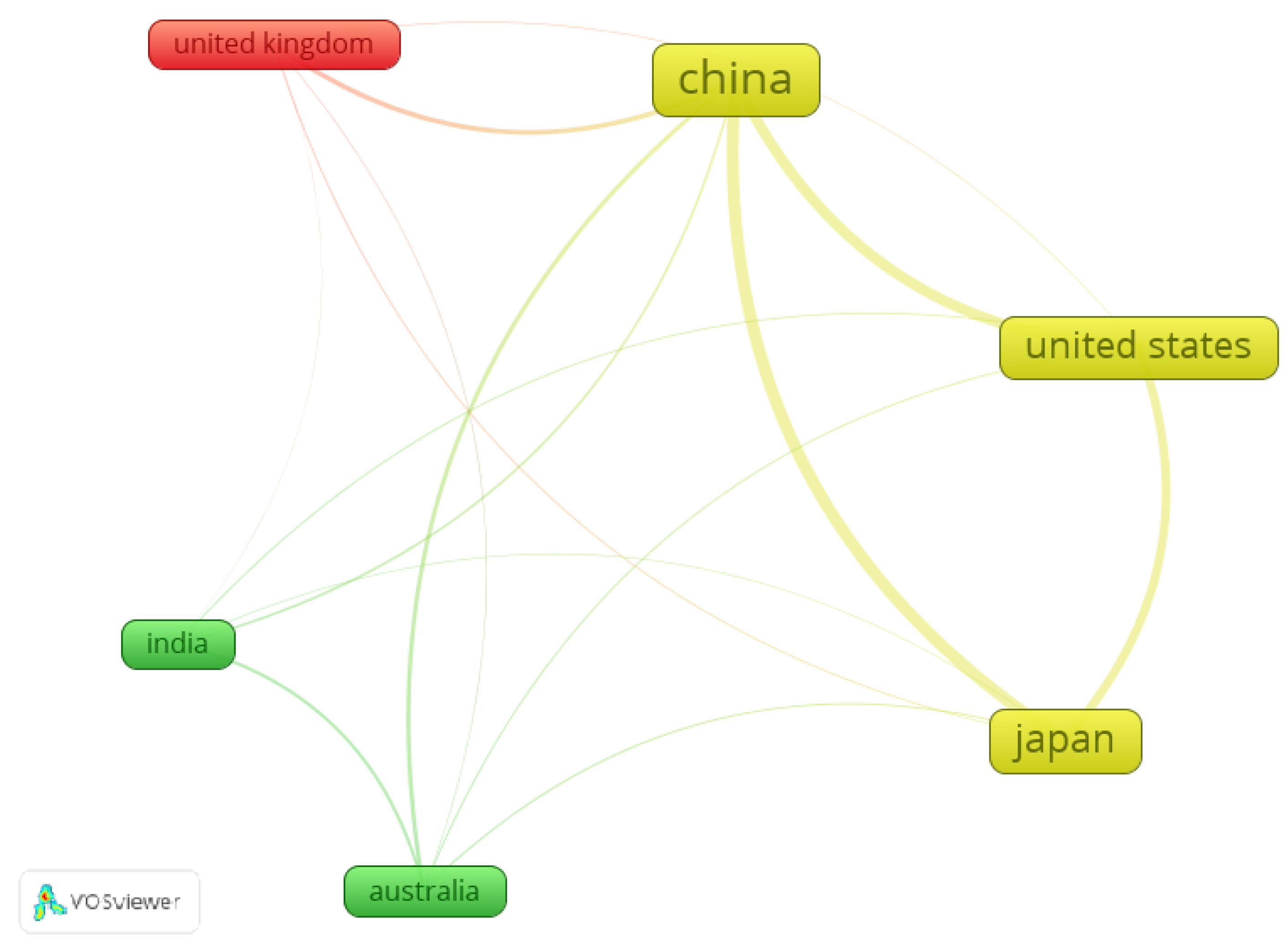

2.3. Research Early Statistics

3. Related Work

4. Efficient and Robust Approaches

4.1. Feature Extraction Methods

4.2. Feature Domains Distribution

4.3. Efficient Approaches

4.3.1. Accuracy

4.3.2. High Robustness

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAR | Adaptive Autoregressive |
| AICA-WT | Automatic Independent Component Analysis with Wavelet Transform |
| AR | Autoregressive |
| BCI | Brain Computer Interface |
| CNN | Convolutional Neural Network |
| CSP | Common Spatial Patterns |
| CWT | Continuous Wavelet Transform |
| DCNN | Deep Convolutional Neural Network |
| dFC | Dynamic Functional Connectivity |
| DWT | Discrete Wavelet Transform |
| ECoG | Electrocorticography |
| EEG | Electroencephalography |
| EMD | Empirical Mode Decomposition |
| EOG | Electrooculography |
| ERD | Event-Related Desynchronization |
| ERP | Event-Related Potentials |
| ERS | Event-Related Synchronization |
| EWT | Empirical Wavelet Transform |
| FAWT | Flexible Analytic Wavelet Transform |
| FB-TRCSP | Filter Bank Tikhonov Regularization Common Spatial Patterns |
| FBCSP | Filter Bank Common Spatial Patterns |
| FDCSP | Frequency Domain Common Spatial Patterns |
| FDF | Frequency Domain Features |
| FFNN | Feedforward Neural Network |
| FFT | Fast Fourier Transform |
| HHT | Hilbert–Huang Transform |
| kNN | k-Nearest Neighbors |
| LCD | Local Characteristic-Scale Decomposition |
| Log-BP | Logarithmic Band Power |
| MEG | Magnetoencephalography |
| MEWT | Multivariate Empirical Wavelet Transform |
| MFCC | Mel-Frequency Cepstral Coefficients |
| MI | Motor Imagery |
| PSD | Power Spectral Density |
| RF | Random Forest |
| RFB | Reactive Frequency Band |
| SDI | Successive Decomposition Index |
| SGRM | Sparse Group Representation Model |
| SRDA | Spectral Regression Discriminant Analysis |
| SSAEP | Steady-State Auditory Evoked Potentials |
| SSSEP | Steady-State Somatosensory Evoked Potentials |
| SSVEP | Steady-State Visual Evoked Potentials |
| STFT | Short-Time Fourier Transform |
| SVM | Support Vector Machine |
| TDF | Time Domain Features |
| TFDF | Time-Frequency Domain Features |
| TFP | Transfer Function Perturbation |
| WDPSD | Weighted Difference of Power Spectral Density |
| WT | Wavelet Transform |

References

- Kaushal, G.; Singh, A.; Jain, V. Better approach for denoising EEG signals. In Proceedings of the 2016 5th International Conference on Wireless Networks and Embedded Systems (WECON), Rajpura, India, 14–16 October 2016; pp. 1–3.

- Freitas, D.R.; Inocêncio, A.V.; Lins, L.T.; Santos, E.A.; Benedetti, M.A. A real-time embedded system design for ERD/ERS measurement on EEG-based brain-computer interfaces. In Proceedings of the XXVI Brazilian Congress on Biomedical Engineering, Armação de Buzios, Brazil, 21–25 October 2018; pp. 25–33.

- Abhang, P.A.; Gawali, B.W.; Mehrotra, S.C. Technological basics of EEG recording and operation of apparatus. In Introduction to EEG- and Speech-Based Emotion Recognition; Academic Press: Cambridge, MA, USA, 2016; pp. 19–50.

- Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain computer interfaces, a review. Sensors 2012, 12, 1211–1279.

- Chaudhary, U.; Birbaumer, N.; Ramos-Murguialday, A. Brain–computer interfaces for communication and rehabilitation. Nat. Rev. Neurol. 2016, 12, 513–525.

- Rashid, N.; Iqbal, J.; Javed, A.; Tiwana, M.I.; Khan, U.S. Design of embedded system for multivariate classification of finger and thumb movements using EEG signals for control of upper limb prosthesis. BioMed Res. Int. 2018, 2018, 2695106.

- Ding, S.; Yuan, Z.; An, P.; Xue, G.; Sun, W.; Zhao, J. Cascaded convolutional neural network with attention mechanism for mobile EEG-based driver drowsiness detection system. In Proceedings of the 2019 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), San Diego, CA, USA, 18–21 November 2019; pp. 1457–1464.

- Sawan, M.; Salam, M.T.; Le Lan, J.; Kassab, A.; Gélinas, S.; Vannasing, P.; Lesage, F.; Lassonde, M.; Nguyen, D.K. Wireless recording systems: From noninvasive EEG-NIRS to invasive EEG devices. IEEE Trans. Biomed. Circuits Syst. 2013, 7, 186–195.

- Dan, J.; Vandendriessche, B.; Paesschen, W.V.; Weckhuysen, D.; Bertrand, A. Computationally-efficient algorithm for real-time absence seizure detection in wearable electroencephalography. Int. J. Neural Syst. 2020, 30, 2050035.

- Nijholt, A.; Tan, D.; Allison, B.; del R. Milan, J.; Graimann, B. Brain-computer interfaces for HCI and games. In Proceedings of the CHI ’08: CHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008; pp. 3925–3928.

- Kovari, A.; Katona, J.; Heldal, I.; Helgesen, C.; Costescu, C.; Rosan, A.; Hathazi, A.; Thill, S.; Demeter, R. Examination of gaze fixations recorded during the trail making test. In Proceedings of the 2019 10th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Naples, Italy, 23–25 October 2019; pp. 319–324.

- Kovari, A.; Katona, J.; Costescu, C. Quantitative analysis of relationship between visual attention and eye-hand coordination. Acta Polytech. Hung. 2020, 17, 77–95.

- Kovari, A.; Katona, J.; Costescu, C. Evaluation of eye-movement metrics in a software debugging task using GP3 eye tracker. Acta Polytech. Hung. 2020, 17, 57–76.

- Kovari, A. Study of Algorithmic Problem-Solving and Executive Function. Acta Polytech. Hung. 2020, 17, 241–256.

- Katona, J.; Ujbanyi, T.; Sziladi, G.; Kovari, A. Examine the effect of different web-based media on human brain waves. In Proceedings of the 2017 8th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Debrecen, Hungary, 11–14 September 2017; pp. 000407–000412.

- Katona, J.; Ujbanyi, T.; Sziladi, G.; Kovari, A. Electroencephalogram-based brain-computer interface for internet of robotic things. In Cognitive Infocommunications, Theory and Applications; Springer: Cham, Switzerland, 2019; pp. 253–275.

- Panoulas, K.J.; Hadjileontiadis, L.J.; Panas, S.M. Brain-computer interface (BCI): Types, processing perspectives and applications. In Multimedia Services in Intelligent Environments; Springer: Cham, Switzerland, 2010; pp. 299–321.

- Wolpaw, J.R.; Birbaumer, N.; Heetderks, W.J.; McFarland, D.J.; Peckham, P.H.; Schalk, G.; Donchin, E.; Quatrano, L.A.; Robinson, C.J.; Vaughan, T.M.; et al. Brain-computer interface technology: A review of the first international meeting. IEEE Trans. Rehabil. Eng. 2000, 8, 164–173.

- Tangermann, M.; Krauledat, M.; Grzeska, K.; Sagebaum, M.; Blankertz, B.; Vidaurre, C.; Müller, K.R. Playing pinball with non-invasive BCI. In Proceedings of the Advances in Neural Information Processing Systems 21 (NIPS 2008), Vancouver, BC, Canada, 8–11 December 2008; pp. 1641–1648.

- Allison, B.Z.; McFarland, D.J.; Schalk, G.; Zheng, S.D.; Jackson, M.M.; Wolpaw, J.R. Towards an independent brain–computer interface using steady state visual evoked potentials. Clin. Neurophysiol. 2008, 119, 399–408.

- Friman, O.; Luth, T.; Volosyak, I.; Graser, A. Spelling with steady-state visual evoked potentials. In Proceedings of the 2007 3rd International IEEE/EMBS Conference on Neural Engineering, Kohala Coast, HI, USA, 2–5 May 2007; pp. 354–357.

- Kotlewska, I.; Wójcik, M.; Nowicka, M.; Marczak, K.; Nowicka, A. Present and past selves: A steady-state visual evoked potentials approach to self-face processing. Sci. Rep. 2017, 7, 16438.

- George, O.; Smith, R.; Madiraju, P.; Yahyasoltani, N.; Ahamed, S.I. Motor Imagery: A review of existing techniques, challenges and potentials. In Proceedings of the 2021 IEEE 45th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 12–16 July 2021; pp. 1893–1899.

- Bansal, D.; Mahajan, R. Chapter 2: EEG-based Brain-Computer Interfacing (BCI). In EEG-Based Brain-Computer Interfaces: Cognitive Analysis and Control; Academic Press: Cambridge, MA, USA, 2019; pp. 21–71.

- Fernandez-Fraga, S.; Aceves-Fernandez, M.; Pedraza-Ortega, J. EEG data collection using visual evoked, steady state visual evoked and motor image task, designed to Brain Computer Interfaces (BCI) development. Data Brief 2019, 25, 103871.

- Lotze, M.; Halsband, U. Motor imagery. J. Physiol. 2006, 99, 386–395.

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A comprehensive review of EEG-based brain–computer interface paradigms. J. Neural Eng. 2019, 16, 011001.

- Pfurtscheller, G.; Neuper, C. Motor imagery activates primary sensorimotor area in humans. Neurosci. Lett. 1997, 239, 65–68.

- Shi, T.; Ren, L.; Cui, W. Feature extraction of brain–computer interface electroencephalogram based on motor imagery. IEEE Sensors J. 2019, 20, 11787–11794.

- Aggarwal, S.; Chugh, N. Signal processing techniques for motor imagery brain computer interface: A review. Array 2019, 1, 100003.

- Hammon, P.S.; de Sa, V.R. Preprocessing and meta-classification for brain-computer interfaces. IEEE Trans. Biomed. Eng. 2007, 54, 518–525.

- Lotte, F. A tutorial on EEG signal-processing techniques for mental-state recognition in brain–computer interfaces. In Guide to Brain-Computer Music Interfacing; Springer: London, UK, 2014; pp. 133–161.

- Pfurtscheller, G.; Neuper, C. Motor imagery and direct brain-computer communication. Proc. IEEE 2001, 89, 1123–1134.

- Alimardani, M.; Nishio, S.; Ishiguro, H. Chapter 5: Brain-computer interface and motor imagery training: The role of visual feedback and embodiment. In Evolving BCI Therapy-Engaging Brain State Dynamics; IntechOpen: London, UK, 2018; Volume 2, p. 64.

- Fleury, M.; Lioi, G.; Barillot, C.; Lécuyer, A. A survey on the use of haptic feedback for brain-computer interfaces and neurofeedback. Front. Neurosci. 2020, 14, 528.

- Ren, W.; Han, M.; Wang, J.; Wang, D.; Li, T. Efficient feature extraction framework for EEG signals classification. In Proceedings of the 2016 Seventh International Conference on Intelligent Control and Information Processing (ICICIP), Siem Reap, Cambodia, 1–4 December 2016; pp. 167–172.

- Pahuja, S.; Veer, K. Recent Approaches on Classification and Feature Extraction of EEG Signal: A Review. Robotica 2021, 77–101.

- Boonyakitanont, P.; Lek-Uthai, A.; Chomtho, K.; Songsiri, J. A review of feature extraction and performance evaluation in epileptic seizure detection using EEG. Biomed. Signal Process. Control. 2020, 57, 101702.

- Kanimozhi, M.; Roselin, R. Statistical Feature Extraction and Classification using Machine Learning Techniques in Brain-Computer Interface. Int. J. Innov. Technol. Explor. Eng. 2020, 9, 1754–1758.

- Wang, P.; Jiang, A.; Liu, X.; Shang, J.; Zhang, L. LSTM-based EEG classification in motor imagery tasks. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2086–2095.

- Al-Saegh, A.; Dawwd, S.A.; Abdul-Jabbar, J.M. Deep learning for motor imagery EEG-based classification: A review. Biomed. Signal Process. Control. 2021, 63, 102172.

- Iftikhar, M.; Khan, S.A.; Hassan, A. A survey of deep learning and traditional approaches for EEG signal processing and classification. In Proceedings of the 2018 IEEE 9th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 1–3 November 2018; pp. 395–400.

- Pawar, D.; Dhage, S. Feature Extraction Methods for Electroencephalography based Brain-Computer Interface: A Review. IAENG Int. J. Comput. Sci. 2020, 47, 501–515.

- Zhang, R.; Xiao, X.; Liu, Z.; Jiang, W.; Li, J.; Cao, Y.; Ren, J.; Jiang, D.; Cui, L. A new motor imagery EEG classification method FB-TRCSP+RF based on CSP and random forest. IEEE Access 2018, 6, 44944–44950.

- Ai, Q.; Chen, A.; Chen, K.; Liu, Q.; Zhou, T.; Xin, S.; Ji, Z. Feature extraction of four-class motor imagery EEG signals based on functional brain network. J. Neural Eng. 2019, 16, 026032.

- Razzak, I.; Hameed, I.A.; Xu, G. Robust sparse representation and multiclass support matrix machines for the classification of motor imagery EEG signals. IEEE J. Transl. Eng. Health Med. 2019, 7, 2168–2372.

- Jiao, Y.; Zhang, Y.; Chen, X.; Yin, E.; Jin, J.; Wang, X.; Cichocki, A. Sparse group representation model for motor imagery EEG classification. IEEE J. Biomed. Health Inform. 2018, 23, 631–641.

- Tayeb, Z.; Fedjaev, J.; Ghaboosi, N.; Richter, C.; Everding, L.; Qu, X.; Wu, Y.; Cheng, G.; Conradt, J. Validating deep neural networks for online decoding of motor imagery movements from EEG signals. Sensors 2019, 19, 210.

- Thomas, K.P.; Robinson, N.; Vinod, A.P. Utilizing subject-specific discriminative EEG features for classification of motor imagery directions. In Proceedings of the 2019 IEEE 10th International Conference on Awareness Science and Technology (iCAST), Morioka, Japan, 23–25 October 2019; pp. 1–5.

- Zhang, Y.; Nam, C.S.; Zhou, G.; Jin, J.; Wang, X.; Cichocki, A. Temporally constrained sparse group spatial patterns for motor imagery BCI. IEEE Trans. Cybern. 2018, 49, 3322–3332.

- Khakpour, M. The Improvement of a Brain Computer Interface Based on EEG Signals. Front. Biomed. Technol. 2020, 7, 259–265.

- Liu, Q.; Zheng, W.; Chen, K.; Ma, L.; Ai, Q. Online detection of class-imbalanced error-related potentials evoked by motor imagery. J. Neural Eng. 2021, 18, 046032.

- Azab, A.M.; Mihaylova, L.; Ahmadi, H.; Arvaneh, M. Robust common spatial patterns estimation using dynamic time warping to improve BCI systems. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3897–3901.

- Tang, X.; Wang, T.; Du, Y.; Dai, Y. Motor imagery EEG recognition with KNN-based smooth auto-encoder. Artif. Intell. Med. 2019, 101, 101747.

- Miao, Y.; Yin, F.; Zuo, C.; Wang, X.; Jin, J. Improved RCSP and AdaBoost-based classification for motor-imagery BCI. In Proceedings of the 2019 IEEE International Conference on Computational Intelligence and Virtual Environments for Measurement Systems and Applications (CIVEMSA), Tianjin, China, 14–16 June 2019; pp. 1–5.

- Wang, J.; Feng, Z.; Lu, N. Feature extraction by common spatial pattern in frequency domain for motor imagery tasks classification. In Proceedings of the 2017 29th Chinese Control and Decision Conference (CCDC), Chongqing, China, 28–30 May 2017; pp. 5883–5888.

- Gu, J.; Wei, M.; Guo, Y.; Wang, H. Common Spatial Pattern with L21-Norm. Neural Process. Lett. 2021, 53, 3619–3638.

- Hossain, I.; Hettiarachchi, I. Calibration time reduction for motor imagery-based BCI using batch mode active learning. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Hossain, I.; Khosravi, A.; Hettiarachchi, I.; Nahavandi, S. Batch mode query by committee for motor imagery-based BCI. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 27, 13–21.

- Peterson, V.; Wyser, D.; Lambercy, O.; Spies, R.; Gassert, R. A penalized time-frequency band feature selection and classification procedure for improved motor intention decoding in multichannel EEG. J. Neural Eng. 2019, 16, 016019.

- Jiang, Q.; Zhang, Y.; Ge, G.; Xie, Z. An adaptive CSP and clustering classification for online motor imagery EEG. IEEE Access 2020, 8, 156117–156128.

- Samuel, O.W.; Asogbon, M.G.; Geng, Y.; Pirbhulal, S.; Mzurikwao, D.; Chen, S.; Fang, P.; Li, G. Determining the optimal window parameters for accurate and reliable decoding of multiple classes of upper limb motor imagery tasks. In Proceedings of the 2018 IEEE International Conference on Cyborg and Bionic Systems (CBS), Shenzhen, China, 25–27 October 2018; pp. 422–425.

- Islam, M.N.; Sulaiman, N.; Rashid, M.; Bari, B.S.; Hasan, M.J.; Mustafa, M.; Jadin, M.S. Empirical mode decomposition coupled with fast Fourier transform based feature extraction method for motor imagery tasks classification. In Proceedings of the 2020 IEEE 10th International Conference on System Engineering and Technology (ICSET), Shah Alam, Malaysia, 9 November 2020; pp. 256–261.

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Aziz, M.Z. Identification of motor and mental imagery EEG in two and multiclass subject-dependent tasks using successive decomposition index. Sensors 2020, 20, 5283.

- Jana, G.C.; Shukla, S.; Srivastava, D.; Agrawal, A. Performance estimation and analysis over the supervised learning approaches for motor imagery EEG signals classification. In Intelligent Computing and Applications; Springer: Cham, Switzerland, 2021; pp. 125–141.

- Kim, C.; Sun, J.; Liu, D.; Wang, Q.; Paek, S. An effective feature extraction method by power spectral density of EEG signal for 2-class motor imagery-based BCI. Med. Biol. Eng. Comput. 2018, 56, 1645–1658.

- Ortiz-Echeverri, C.; Paredes, O.; Salazar-Colores, J.S.; Rodríguez-Reséndiz, J.; Romo-Vázquez, R. A Comparative Study of Time and Frequency Features for EEG Classification. In Proceedings of the VIII Latin American Conference on Biomedical Engineering and XLII National Conference on Biomedical Engineering, Cancún, México, 2–5 October 2019; pp. 91–97.

- Chu, Y.; Zhao, X.; Zou, Y.; Xu, W.; Han, J.; Zhao, Y. A decoding scheme for incomplete motor imagery EEG with deep belief network. Front. Neurosci. 2018, 12, 680.

- Meziani, A.; Djouani, K.; Medkour, T.; Chibani, A. A Lasso quantile periodogram based feature extraction for EEG-based motor imagery. J. Neurosci. Methods 2019, 328, 108434.

- Chaudhary, S.; Taran, S.; Bajaj, V.; Siuly, S. A flexible analytic wavelet transform based approach for motor-imagery tasks classification in BCI applications. Comput. Methods Programs Biomed. 2020, 187, 105325.

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Aziz, M.Z.; Siuly, S.; Ding, W. A matrix determinant feature extraction approach for decoding motor and mental imagery EEG in subject specific tasks. IEEE Trans. Cogn. Dev. Syst. 2020, 1.

- Al-Qazzaz, N.K.; Alyasseri, Z.A.A.; Abdulkareem, K.H.; Ali, N.S.; Al-Mhiqani, M.N.; Guger, C. EEG feature fusion for motor imagery: A new robust framework towards stroke patients rehabilitation. Comput. Biol. Med. 2021, 137, 104799.

- Zhang, C.; Kim, Y.K.; Eskandarian, A. EEG-inception: An accurate and robust end-to-end neural network for EEG-based motor imagery classification. J. Neural Eng. 2021, 18, 046014.

- Amin, S.U.; Alsulaiman, M.; Muhammad, G.; Bencherif, M.A.; Hossain, M.S. Multilevel weighted feature fusion using convolutional neural networks for EEG motor imagery classification. IEEE Access 2019, 7, 18940–18950.

- Tang, X.; Zhang, N.; Zhou, J.; Liu, Q. Hidden-layer visible deep stacking network optimized by PSO for motor imagery EEG recognition. Neurocomputing 2017, 234, 1–10.

- Samanta, K.; Chatterjee, S.; Bose, R. Cross-subject motor imagery tasks EEG signal classification employing multiplex weighted visibility graph and deep feature extraction. IEEE Sensors Lett. 2019, 4, 7000104.

- Jagadish, B.; Rajalakshmi, P. A novel feature extraction framework for four class motor imagery classification using log determinant regularized Riemannian manifold. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 6754–6757.

- Miao, M.; Wang, A.; Zeng, H. Designing robust spatial filter for motor imagery electroencephalography signal classification in brain-computer interface systems. In Fuzzy Systems and Data Mining III: Proceedings of FSDM 2017 (Frontiers in Artificial Intelligence and Applications); IOS Press: Amsterdam, The Netherlands, 2017; pp. 189–196.

- Wang, P.; He, J.; Lan, W.; Yang, H.; Leng, Y.; Wang, R.; Iramina, K.; Ge, S. A hybrid EEG-fNIRS brain-computer interface based on dynamic functional connectivity and long short-term memory. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021; Volume 5, pp. 2214–2219.

- Seraj, E.; Karimzadeh, F. Improved detection rate in motor imagery based BCI systems using combination of robust analytic phase and envelope features. In Proceedings of the 2017 Iranian Conference on Electrical Engineering (ICEE), Tehran, Iran, 2–4 May 2017; pp. 24–28.

- Bhattacharyya, S.; Mukul, M.K. Reactive frequency band-based real-time motor imagery classification. Int. J. Intell. Syst. Technol. Appl. 2018, 17, 136–152.

- Trigui, O.; Zouch, W.; Messaoud, M.B. Hilbert-Huang transform and Welch’s method for motor imagery based brain computer interface. Int. J. Cogn. Inform. Nat. Intell. 2017, 11, 47–68.

- Vega-Escobar, L.; Castro-Ospina, A.; Duque-Muñoz, L. Feature extraction schemes for BCI systems. In Proceedings of the 2015 20th Symposium on Signal Processing, Images and Computer Vision (STSIVA), Bogota, Colombia, 2–4 September 2015; pp. 1–6.

- Hong, J.; Qin, X.; Li, J.; Niu, J.; Wang, W. Signal processing algorithms for motor imagery brain-computer interface: State of the art. J. Intell. Fuzzy Syst. 2018, 35, 6405–6419.

- Lotte, F.; Bougrain, L.; Cichocki, A.; Clerc, M.; Congedo, M.; Rakotomamonjy, A.; Yger, F. A review of classification algorithms for EEG-based brain–computer interfaces: A 10 year update. J. Neural Eng. 2018, 15, 031005.

- Koles, Z.J. The quantitative extraction and topographic mapping of the abnormal components in the clinical EEG. Electroencephalogr. Clin. Neurophysiol. 1991, 79, 440–447.

- Reuderink, B.; Poel, M. Robustness of the Common Spatial Patterns Algorithm in the BCI-Pipeline. Technical Report, 2008. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.216.1456&rep=rep1&type=pdf (accessed on 23 March 2022).

- Ramoser, H.; Muller-Gerking, J.; Pfurtscheller, G. Optimal spatial filtering of single trial EEG during imagined hand movement. IEEE Trans. Rehabil. Eng. 2000, 8, 441–446.

- Mitsuhashi, T. Impact of feature extraction to accuracy of machine learning based hotspot detection. In Proceedings of the International Society for Optics and Photonics, Monterey, CA, USA, 11–14 September 2017; Volume 10451, p. 104510C.

- Chaudhary, S.; Taran, S.; Bajaj, V.; Sengur, A. Convolutional neural network based approach towards motor imagery tasks EEG signals classification. IEEE Sens. J. 2019, 19, 4494–4500.

- Vieira, S.M.; Kaymak, U.; Sousa, J.M. Cohen’s kappa coefficient as a performance measure for feature selection. In Proceedings of the International Conference on Fuzzy Systems, Barcelona, Spain, 18–23 July 2010; pp. 1–8.

- Grosse-Wentrup, M.; Liefhold, C.; Gramann, K.; Buss, M. Beamforming in noninvasive brain–computer interfaces. IEEE Trans. Biomed. Eng. 2009, 56, 1209–1219.

- Huang, G.; Liu, G.; Meng, J.; Zhang, D.; Zhu, X. Model based generalization analysis of common spatial pattern in brain computer interfaces. Cogn. Neurodynamics 2010, 4, 217–223.

- Raghu, S.; Sriraam, N.; Rao, S.V.; Hegde, A.S.; Kubben, P.L. Automated detection of epileptic seizures using successive decomposition index and support vector machine classifier in long-term EEG. Neural Comput. Appl. 2020, 32, 8965–8984.

| Brainwave | Frequency, Amplitude |
|---|---|
| Delta (δ) | ≤4 Hz, 100 μV |
| Theta (θ) | 4–8 Hz, <100 μV |
| Alpha (α) | 8–13 Hz, <50 μV |
| Beta (β) | 13–30 Hz, <30 μV |
| Gamma (γ) | ≥30 Hz, ≤10 μV |
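PSD-based features, the second most common family among the reviewed works, typically integrate the spectrum over bands like those in the table above. The sketch below estimates a Welch PSD with plain NumPy (Hann window, 50% overlap, averaged periodograms) and sums it within each band. It is a simplified stand-in for a library routine such as `scipy.signal.welch`; the 0.5 Hz lower delta edge, the 45 Hz upper gamma edge, the 256-sample segment length and the helper names are assumptions for illustration.

```python
import numpy as np

# Band edges in Hz. The 0.5 Hz delta floor and 45 Hz gamma ceiling are
# assumptions: the table only gives <=4 Hz and >=30 Hz for those bands.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def welch_psd(x, fs, nperseg=256):
    """One-sided Welch PSD: Hann-windowed segments, 50% overlap, averaged.

    Assumes an even nperseg so that the last rfft bin is the Nyquist bin.
    """
    x = np.asarray(x, dtype=float)
    if len(x) < nperseg:
        raise ValueError("signal shorter than one segment")
    step = nperseg // 2                       # 50% overlap
    window = np.hanning(nperseg)
    scale = fs * np.sum(window ** 2)          # density scaling (units^2/Hz)
    spectra = []
    for start in range(0, len(x) - nperseg + 1, step):
        seg = x[start:start + nperseg]
        seg = (seg - seg.mean()) * window     # remove segment mean, then taper
        spectra.append(np.abs(np.fft.rfft(seg)) ** 2 / scale)
    psd = np.mean(spectra, axis=0)
    psd[1:-1] *= 2                            # fold in negative frequencies
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, psd


def band_powers(x, fs):
    """Approximate absolute power per band (PSD summed times bin width)."""
    freqs, psd = welch_psd(x, fs)
    df = freqs[1] - freqs[0]
    return {name: float(psd[(freqs >= lo) & (freqs < hi)].sum() * df)
            for name, (lo, hi) in BANDS.items()}


# Hypothetical check: a 10 Hz sine sampled at 250 Hz for 4 s.
fs = 250
t = np.arange(1000) / fs
bp = band_powers(np.sin(2 * np.pi * 10 * t), fs)
print(max(bp, key=bp.get))  # alpha
```

In MI-BCIs the same computation is usually applied per channel over the sensorimotor electrodes, and the logarithm of the band power (Log-BP) is often taken before classification.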

| Methods | Citations | Percentages |
|---|---|---|
| CSP-based | [40,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61] | 44.26% |
| PSD methods | [52,62,63,64,65,66,67,68,69] | 21.42% |
| Wavelet Transform-based | [48,53,54,64,70,71,72] | 16.65% |
| Deep learning | [73,74,75,76] | 9.52% |
| Riemannian | [58,59,77] | 7.14% |
| Boltzmann | [68,75] | 4.76% |
| LCD | [45,78] | 4.76% |
| dFC | [79] | 2.38% |
| MFCC | [67] | 2.38% |
| Log-BP | [48] | 2.38% |
| TFP | [80] | 2.38% |
| RFB | [81] | 2.38% |
| HHT | [82] | 2.38% |
| EMD | [63] | 2.38% |
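CSP-based methods dominate the table above. For reference, the classic two-class CSP [86,88] can be sketched in NumPy as a whitening of the composite covariance followed by a single eigendecomposition, with normalized log-variance of the filtered signals as features. The helper names, the single filter pair and the synthetic data are assumptions; trials are assumed to be band-pass filtered and approximately zero-mean. The reviewed variants (filter-bank, regularized, L21-norm or adaptive CSP, among others) modify this baseline in different ways.

```python
import numpy as np


def mean_normalized_cov(trials):
    """Average trace-normalized spatial covariance of zero-mean trials."""
    covs = []
    for x in trials:                        # x: (n_channels, n_samples)
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)


def csp_filters(trials_a, trials_b, n_pairs=1):
    """Two-class CSP spatial filters (rows of the returned matrix).

    trials_*: arrays of shape (n_trials, n_channels, n_samples).
    The first n_pairs rows maximize class-B variance relative to class A,
    the last n_pairs rows maximize class-A variance relative to class B.
    """
    ca = mean_normalized_cov(trials_a)
    cb = mean_normalized_cov(trials_b)
    # Whiten the composite covariance Ca + Cb.
    evals, evecs = np.linalg.eigh(ca + cb)
    whitening = np.diag(1.0 / np.sqrt(evals)) @ evecs.T
    # In the whitened space the class covariances share eigenvectors and
    # their eigenvalues sum to one, so decomposing one of them suffices.
    lam, b = np.linalg.eigh(whitening @ ca @ whitening.T)  # ascending order
    w = b.T @ whitening
    n = len(lam)
    picks = np.r_[np.arange(n_pairs), np.arange(n - n_pairs, n)]
    return w[picks]


def log_variance_features(trials, w):
    """Normalized log-variance of CSP-filtered trials."""
    feats = []
    for x in trials:
        var = np.var(w @ x, axis=1)
        feats.append(np.log(var / var.sum()))
    return np.array(feats)


# Hypothetical data: class A is strong on channel 0, class B on channel 1.
rng = np.random.default_rng(1)
a = rng.standard_normal((20, 3, 200)) * np.array([3.0, 1.0, 1.0])[:, None]
b = rng.standard_normal((20, 3, 200)) * np.array([1.0, 3.0, 1.0])[:, None]
w = csp_filters(a, b)
fa, fb = log_variance_features(a, w), log_variance_features(b, w)
```

The resulting two-dimensional features separate the classes along opposite columns, which is why a linear classifier (e.g., LDA or SVM) is commonly paired with CSP.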

| Feature Extraction | Classifier | Kappa | Avg. Accuracy | Dataset | Work |
|---|---|---|---|---|---|
| EMD-FFT | SVM | 0.9244 | 95.89% | BCI III Dataset 1 | [63] |
| RJSPCA | SVM-based | 0.916 | 78% | BCI III Dataset 1 | [46] |
| MEWT | FFNN | 0.95 | 99.55% | BCI III IVa | [71] |
| CWT | DCNN | 0.9869 | 99.35% | BCI III IVa | [90] |
| STFT | DCNN | 0.9798 | 98.7% | BCI III IVa | [90] |
| SDI | FFNN | 0.9693 | 97.46% | BCI III IVa | [64] |
| SDI | SVM | 0.915 | 93.05% | BCI III IVa | [64] |
| FAWT | Subspace kNN | 0.917 | 95.97% | BCI III IVa | [70] |
| CSP | SGRM | 0.54 | 77.7% | BCI III IVa | [47] |
| MEWT | FFNN | 0.95 | 99.52% | BCI III IVb | [71] |
| SDI | FFNN | 0.978 | 97.5% | BCI III V | [64] |
| MEWT | FFNN | 0.894 | 91.8% | BCI III V | [71] |
| CNN | LSTM | 0.55 | 77.44% | BCI IV 2a | [73] |
| CSP/LCD | SRDA | 0.73 | 79.7% | BCI IV 2a | [45] |
| CSP | SVM | 0.92 | 96.4% | BCI IV 2b | [51] |
| CSP | Bootstrap | 0.92 | 96.5% | BCI IV 2b | [51] |
| CNN | LSTM | 0.544 | 65.88% | BCI IV 2b | [73] |
| CSP | SGRM | 0.57 | 78.2% | BCI IV 2b | [47] |
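The Kappa column reports Cohen's kappa, which corrects the observed agreement between predictions and true labels for the agreement expected by chance: κ = (p_o - p_e) / (1 - p_e). A minimal pure-Python sketch computing it from a confusion matrix follows; the function name and the two-class example counts are hypothetical.

```python
def cohen_kappa(confusion):
    """Cohen's kappa from a square confusion matrix (rows: true, cols: predicted)."""
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    p_o = sum(confusion[i][i] for i in range(k)) / n               # observed agreement
    row_tot = [sum(confusion[i]) for i in range(k)]                # true-class counts
    col_tot = [sum(confusion[i][j] for i in range(k)) for j in range(k)]  # predicted counts
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2  # chance agreement
    return (p_o - p_e) / (1 - p_e)


# Hypothetical 2-class result: 85 of 100 trials correct.
print(round(cohen_kappa([[45, 5], [10, 40]]), 3))  # 0.7
```

Here accuracy is 85% but kappa is only 0.7, because half of the agreement would be expected from guessing on two balanced classes.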

| Dataset | Channels | Classes | Subjects |
|---|---|---|---|
| BCI III Dataset 1 | 64 ECoG | 2 | Continuous EEG |
| BCI III IVa | 118 EEG | 2 | 5 |
| BCI III IVb | 118 EEG | 2 | Continuous EEG |
| BCI III V | 32 EEG | 3 | Continuous EEG |
| BCI IV 2a | 22 EEG/3 EOG | 4 | 9 |
| BCI IV 2b | 3 bipolar EEG/3 EOG | 2 | 9 |

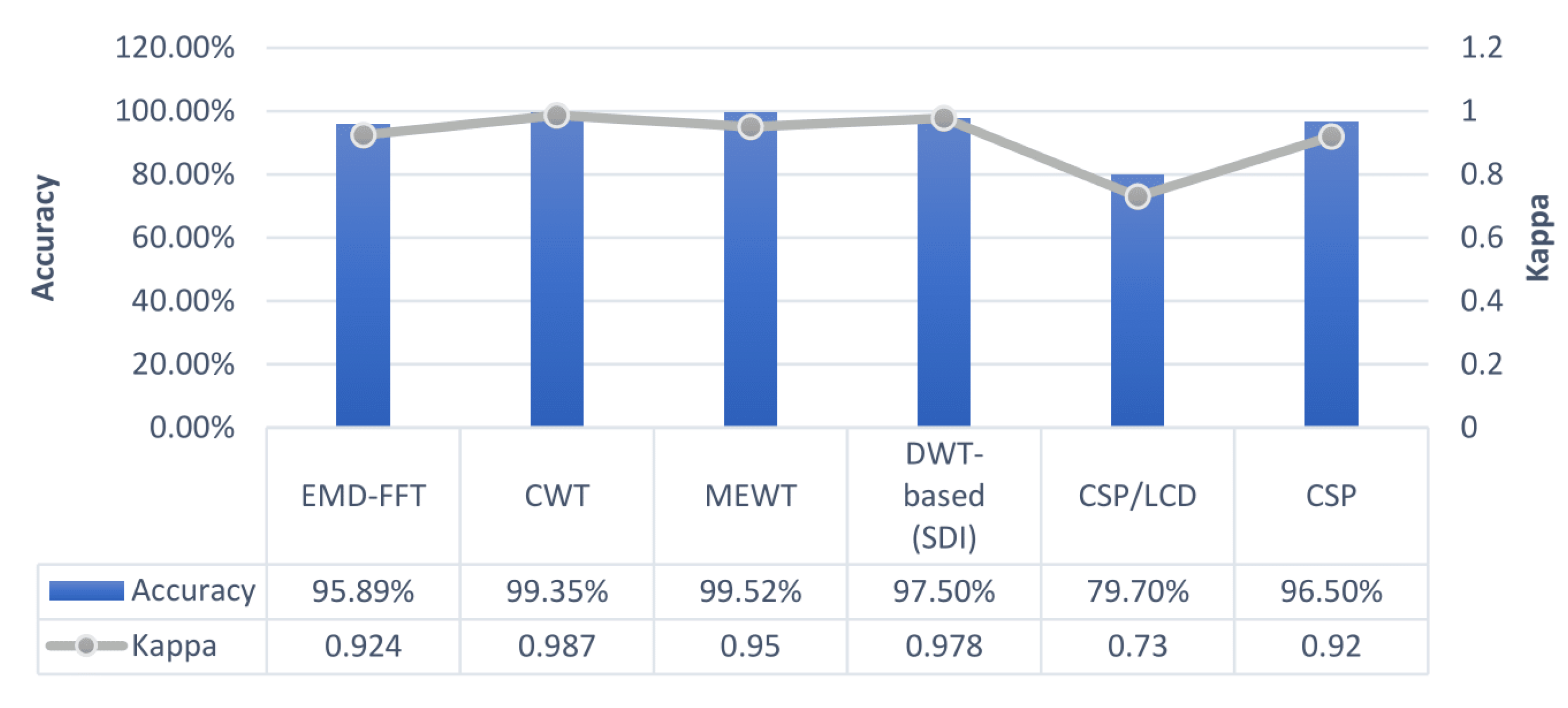

| Domain | Classes | Avg. Accuracy | Kappa | Dataset | Work |
|---|---|---|---|---|---|
| Frequency domain | 2 | 95.89% | 0.9244 | BCI III Dataset 1 | [63] |
| Time-Frequency domain | 2 | 99.35% | 0.987 | BCI III IVa | [90] |
| Time-Frequency domain | 2 | 99.52% | 0.95 | BCI III IVb | [71] |
| Multi-domain | 3 | 97.5% | 0.978 | BCI III V | [64] |
| Spatial domain | 4 | 79.7% | 0.73 | BCI IV 2a | [45] |
| Spatial domain | 2 | 96.5% | 0.92 | BCI IV 2b | [51] |
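Because the rows above mix two-, three- and four-class problems, accuracy alone is hard to compare across them; kappa normalizes for the number of classes. When the true classes are balanced, the chance agreement p_e equals 1/N whatever the classifier predicts, so κ = (acc - 1/N) / (1 - 1/N). The sketch below encodes that relation; the function name is hypothetical. For example, the four-class result of [45] (79.7%) implies (0.797 - 0.25) / 0.75 ≈ 0.729, close to the reported 0.73. Other rows need not match exactly, since the relation only holds for a single pooled accuracy on balanced classes.

```python
def kappa_from_accuracy(accuracy, n_classes):
    """Kappa implied by an accuracy when the true classes are balanced.

    With balanced true labels, chance agreement is 1/n_classes regardless
    of the prediction distribution, so kappa = (acc - 1/N) / (1 - 1/N).
    """
    chance = 1.0 / n_classes
    return (accuracy - chance) / (1.0 - chance)


print(round(kappa_from_accuracy(0.797, 4), 3))  # 0.729
```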

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moumgiakmas, S.S.; Papakostas, G.A. Robustly Effective Approaches on Motor Imagery-Based Brain Computer Interfaces. Computers 2022, 11, 61. https://doi.org/10.3390/computers11050061
