1. Introduction

Storytelling is a widely used way for people across the world to engage emotionally, communicate, and project elements of their culture and personality. People benefit from their own stories, mentally and emotionally, and, after all these years, we are still learning to tell stories better [1]. Narratologists agree that, to constitute a narrative, a text must tell a story, exist in a world, be situated in time, include intelligent agents, and have some form of causal chain of events; it also usually seeks to convey something meaningful to an audience [2].

Besides humans, museums can also be considered “natural storytellers” [3]. Museums aim to make their exhibits appealing and engaging to an increasing variety of audiences while also nurturing their role in conservation, interpretation, education, and outreach [4]. The use of multimodal storytelling mechanisms, in which digital information is presented through multiple communication media (multimedia), is considered a supplement to physical/traditional heritage preservation, activating users’ involvement and collaboration in integrated digital environments. Thus, digital storytelling is one of the resources museums have at hand for enriching their offer to visitors and to society at large. Through narratives, museums can find new ways to enhance and represent their exhibits’ stories to visitors, attracting their attention and increasing their interest through active engagement.

Digital Storytelling (DS) derives its engaging power by integrating images, music, narrative, and voice together, thereby giving deep dimension and vivid color to characters, situations, experiences, and insights. Consequently, technologies such as Augmented Reality (AR) can influence the way museums present their narratives and display their cultural heritage information to their visitors. AR can be seen as a form of mediation using interaction and customization that supports a form of narratives where visitors can engage or even create their own narrative scenarios in their cultural tour.

Meanwhile, designing user profiles as ‘fictional’ characters, based on real data and research and created for a digital storytelling application, is considered a consistent and representative way of defining actual users and their goals. Personalized cultural heritage (CH) applications require that the system collects data about the users and the environment and processes them in order to tailor the user experience. Context-awareness addresses this requirement by enhancing the interaction between human and machine and adding perception of the environment, which eventually leads to intelligence [5]. The context in the cultural space domain includes many features such as location, profiles, user movement and behavior, and environmental data [6].

In this work, the authors present the Cultural Heritage Augmented and Tangible Storytelling (CHATS) project, a framework that combines Augmented Reality and Tangible Interactive Narratives. This project is based on a famous painting named “Children’s Concert” by G. Jacovides and on a previous project in which 3D models representing the painting’s characters and objects were created [7,8]. It is a hybrid architecture that combines state-of-the-art technologies along with tangible artifacts and shows usability and expandability to other areas and applications at a relatively low cost. In addition, CHATS takes into consideration the special needs of visually impaired people and aims for an immersive cultural experience even without the need for images.

The paper is organized as follows: Section 2 covers related work in digital storytelling applications for cultural heritage, in which DS applications are reviewed and classified. Section 3 presents CHATS: its architecture, its hardware infrastructure, and all the modules that work together for a DS application over a tangible interface. In Section 4, CHATS is implemented over a painting and the project is tested and evaluated. The work is concluded in Section 5.

2. Related Work

There have been various applications that apply digital storytelling techniques, almost exclusively addressing the problem of delivering appropriate storytelling content to the visitor. The rationale for applying such techniques is that cultural heritage applications have a huge amount of information to present, which must be filtered in order to enable the individual user to easily access it.

For the purposes of this paper, we reviewed applications described in a variety of sources. Specifically, we identified 26 digital storytelling applications from the last decade only and included them in our collection. The main criterion for choosing those applications was the use of interactive narratives about cultural heritage in combination with other technologies. Table 1 lists the digital technologies used by each cultural application; the applications are described below.

In CHESS [10], researchers designed and tested personalized audio narratives about specific exhibits in the Acropolis archeological museum in Athens. Visitors were assigned a predefined profile according to their age and had access to AR content through a mobile app, representing the artifacts in 3D. Some members of this research team continued with EMOTIVE [29], which was tested in different museums; in addition to the above, they created an authoring tool for narratives and a mobile app with which to present those narratives. Personalization in CULTURA [12] works differently, according to visitors’ interests and their level of engagement with the cultural artifacts. In works such as Lost State College [14] and iGuide [15], personalized content derives from the visitors themselves, who upload their own media.

Gossip at Palace [19] attempts to attract teenagers as a primary target group using gamification elements. In contrast, SVEVO [25] targets adults and seniors to engage them more in a cultural visit. In some works, such as TolkArt [24], SPIRIT [22], and Střelák [20], personalized AR guide content comes as a result of location awareness and user interactions. Cicero [30] and MyWay [27] are recommender systems that take advantage of DS to promote cultural heritage. In most of the works [10,15,16,17,18,20,21,22,23,25,29], AR apps (for smartphones and tablets), AR smart glasses, or VR headsets are used as technologies to immerse users in 3D environments and engage them in a more participatory interaction with objects.

To summarize, all the above applications make use of interactive stories, and most of these stories have personalization elements. This means that, by some method, they either create user profiles or use ready-made, predesigned user profiles to deliver personalized content. Many of them exploit augmented and virtual reality technologies to dive into virtual environments and offer a more intense experience. The same technologies offer the ability for gamification and offer serious games to communicate cultural information in a more entertaining way, especially to younger ages. Additionally, in most applications, there is context awareness, and applications provide content that is related to the user’s position and movement.

On the other hand, fewer applications offer a three-dimensional representation of cultural heritage objects and monuments and, in even fewer, there is a tangible interface for users to interact with. It is a common finding that cultural heritage research has shifted over the years to mobile applications and the use of screens and has moved away from tangible interfaces. CHATS attempts to bridge the gap that has been created and to exploit the full potential a tangible interface can offer for cultural heritage. In addition, it uses 3D representations of artifacts, includes gamification aspects, and opens up horizons for people with visual impairments at a low cost.

3. CHATS—Cultural Heritage Augmented and Tangible Storytelling

3.1. Architecture

In CHATS, there is a tangible 3D printed and handcrafted representative diorama for each exhibit—an object of interest in a collection. The system can be installed in any gallery, library, archive, or museum (GLAM); thus, objects of interest could be paintings, museum objects, books, artworks, etc.

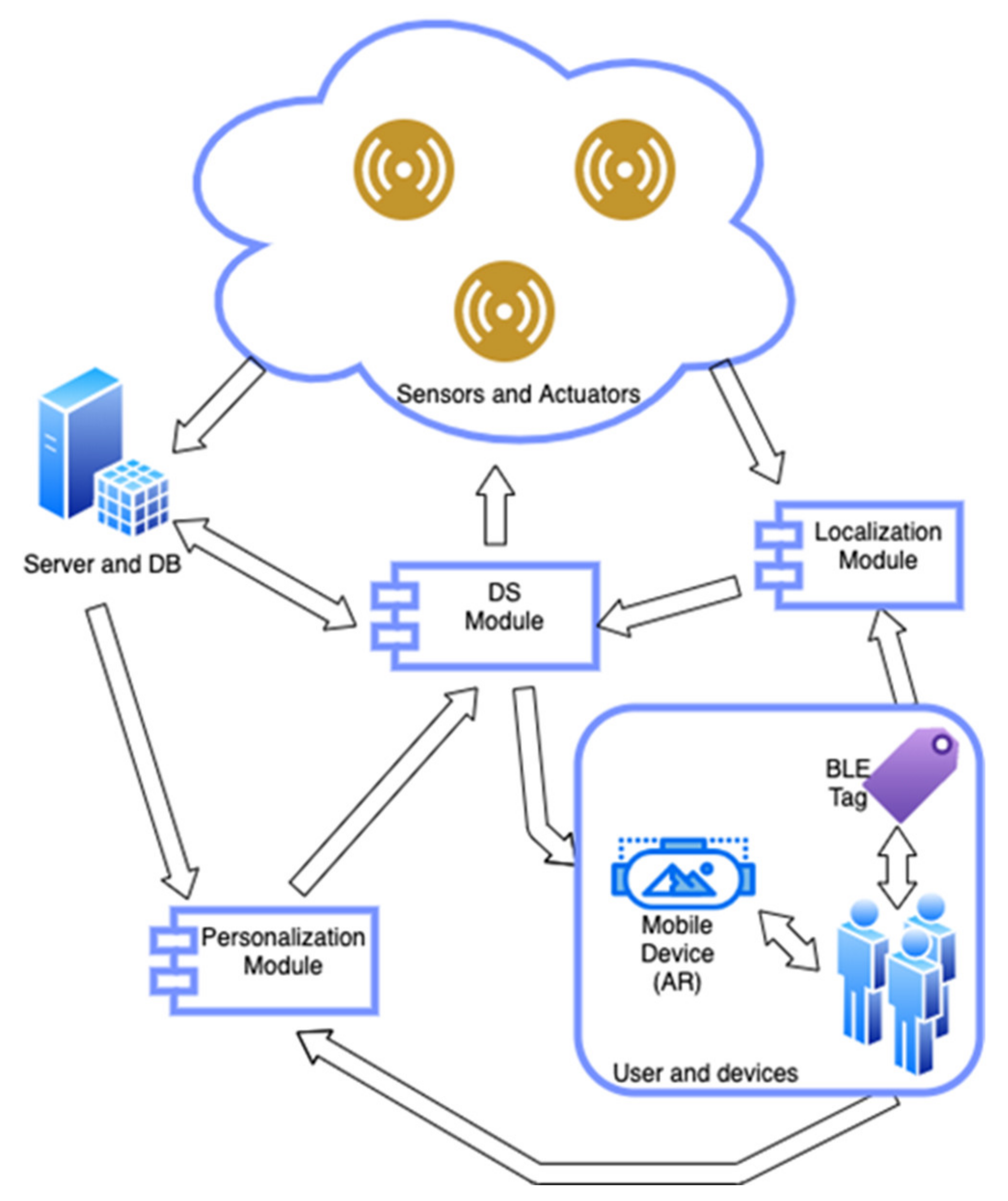

The basis of CHATS architecture is the network of sensors and actuators, for an entire collection (an actual Internet of Things network), that interacts with the visitors and sends data to a server. The server could be remote or local. The number and type of sensors could be different for each exhibit, according to the desired level of interaction (detail, sensitivity, accuracy, modes of interaction). Sensors can sense the proximity of visitors and interactions such as touching objects, moving hands near objects, making noises, etc. Actuators offer automated interactivity, allowing events such as playing sounds or projecting images, triggered by user actions or context-aware procedures. Sensors are rather ubiquitous and not visible by users.

Nevertheless, the first interaction between visitors and exhibits is the sense of proximity via Bluetooth low energy (BLE) technology. All visitors carry a tag that is given to them when entering a cultural site, and the Bluetooth transceiver, also hidden in each construction, can pair automatically with the tags. Localization is executed in the Localization Module, which is responsible for the acquisition of localization data, i.e., data from the BLE infrastructure (on users and artifacts) and data that capture user activity. The output of the localization module is sent to the DS module, triggering the necessary actions.

In any case, the sensors are always active and stream data to be processed primarily by an Arduino controller (one for each artifact). Useful data (data of actual user interaction) are sent to the server for the second level of processing while redundant data are ignored. The sensor network data flow, in association with localization and personalization data, is combined to dynamically select one of the several story trajectories to be heard by the visitors.

The Personalization Module is responsible for executing the personalization procedure. Acquiring data from either stored profiled data in the database (DB) or directly from the user (e.g., through a questionnaire), the personalization module identifies the persona of the user, associates them with a profile that is relevant for the application, and outputs the result to the DS module in order to be exploited for a more personalized user experience.

The DS module is placed at the center of the CHATS architecture, receiving the output of all other modules and delivering the appropriate content to the user. The typical output device of the DS module is the user’s mobile device, but delivery is not restricted to traditional content delivery methods. Where smart glasses are available, AR content is reproduced according to the narrative. AR content, along with binaural audio for the narratives, enhances the visitors’ experience. Finally, the DS module may also trigger actuators, for example to play appropriate music when the narrative requires it.

The complete architecture is illustrated in Figure 1. The three modules integrated into the CHATS architecture are further described in the following subsections.

3.2. DS Module

Narratives in CHATS have the form of audio. Technologies of binaural audio in combination with augmented reality projections, using smart glasses, were chosen for the immersion of visitors into the painting’s environment and for a richer user experience (UX). Nevertheless, the tangible interface can also be used without the need for smart glasses.

The binaural recording technique has been known and studied for more than a century. The term is often treated as synonymous with stereo recording, but the two techniques differ conceptually and produce different audio results. Binaural recording uses specialized microphones, usually mounted in a dummy head whose ears hold the actual microphones. Binaural audio creates the effect of immersion when it is reproduced through a headset: the listener feels as if they were sitting in the exact location where the sound was originally created.

Narratives, in general, can be linear, following a specific trajectory, but interactive narratives are mostly branching narratives, which means they can follow several paths and that the user has some level of control over the story outcome. In CHATS, audio narratives are branching, according to user input and profile. This technique requires plenty of recordings to have more branching options and recordings should follow a general direction, or at least a specific format, so that a story’s meaning always exists.

Agency (a term mostly used in games) is the actual level of control that players feel while in the game world [2,35]. Multiplayer Interactive Narrative Experiences (MINEs) are interactive authored narratives in which multiple players experience distinct narratives (multiplayer differentiability) and their actions influence the storylines of both themselves and others (inter-player agency) [36]. The aim of CHATS is for the visitor-user to perceive the maximum possible level of agency, because this also leads to a higher level of engagement [37]. MINEs are also supported. A narrative trajectory can be defined by the simultaneous interaction of multiple visitors, but there is no differentiability, as only one narrative can occur at a time.

The DS module initiates the moment a visitor approaches an artifact (trigger distance can be calibrated into software, can vary in different hardware implementations, and can be affected by obstacles, such as other visitors, metal objects, and walls). At that time, sensors inside the tangible representation of the artifact start producing useful data that can initiate audio reproduction. This is the first level of interaction with CHATS—a rather involuntary interaction—and can be affected by the presence of multiple users at range. Meanwhile, users with smart glasses can utilize them to display digital information about their artifact of interest, viewing 3D models, images, and text that complete and enhance users’ knowledge and perception. This contextual information about each artifact can be also part of its narrative, further explaining the story behind its existence. The combination of the physical artifacts with the virtual information available through smart glasses and the awareness of its narrative can trigger curiosity and stimulate the interest of users to physically manipulate their object of interest.

The second step is the voluntary interaction, in which visitors are invited (by audio and hopefully by curiosity and self-interest) to touch and feel the 3D printed diorama. The 3D printed objects offer a tangible experience to the visitor, which is added to the digital experience. In this way, users with smart glasses can also interact with the virtual information displayed through AR techniques. By manipulating the physical object, users have access to different types of information based on the angle from which the artifact is viewed. Each part of the artifact can be used as an “image marker” for the AR software to display different virtual data according to the user’s viewing perspective. Users can then interact with the AR content by manipulating the artifact.

3.3. Personalization Module

The personalization module carries out the steps needed to initialize the application: it identifies the user persona from data received from the users and adapts the initial customization of the DS application accordingly.

When a visitor uses a system for the first time, the system will most likely fail to effectively recommend content to the user. This problem is commonly known as cold start and is a common issue in recommendation systems. Many solutions and methods have been proposed to address the cold start issue. To construct an individual user’s profile, information may be collected explicitly, through direct user intervention, or implicitly, through agents that monitor user activity [38].

Therefore, the basic function of the CHATS personalization module is to define the user’s requirements and characteristics by building their profile. Most similar studies have used a questionnaire to categorize users according to their answers [9,39,40,41]. Presenting a short questionnaire with simple but targeted questions about the user’s profile and interests at the start of the CHATS application is a common and effective way to gather the necessary profile information in comparable personalization settings.

Therefore, the first section of the module contains six questions that inquire about the demographic information of the participants (gender, age range, and level of education), as well as their DS-related background information (frequency of DS platforms interaction, experience with different DS platforms or devices, preferred DS genres). This information would enable us to determine the heterogeneity of the sample, as well as investigate the effect of personal and contextual factors such as age, education, and prior experiences. The questionnaire is digitally filled by the visitor upon registration at the start of the visit (also when the BLE tagging is performed).

The next step is the acquisition of profiled data from various sources (mobile devices, database repository), which allows the associated user persona to be refined. Overall, the user profiling module gives the application a starting point, eliminating the cold-start issue mentioned before [42].

Finally, this cycle of persona identification will be continuously performed during the whole DS integration of the user, eventually storing the identified persona for future use and providing a dynamic personalization module.

3.4. Localization and Context-Awareness Module

The proposed digital storytelling platform supports context-aware functionality, allowing for the automated identification of visitor proximity and interaction with the artifacts. Although context-awareness includes a wide range of context types such as location, time, social context, and activity, it is often sufficient to track visitor movement with respect to artifacts or other visitors. This approach has been applied in the proposed platform, which captures visitor location to initiate the next step of the narrative. A visitor’s location is expressed in three ways: (a) proximity with an artifact, (b) proximity with other visitors, and (c) tangible interaction with an artifact. Thus, apart from locative context, social context is also exploited, distinguishing between lone visitors and groups of visitors.

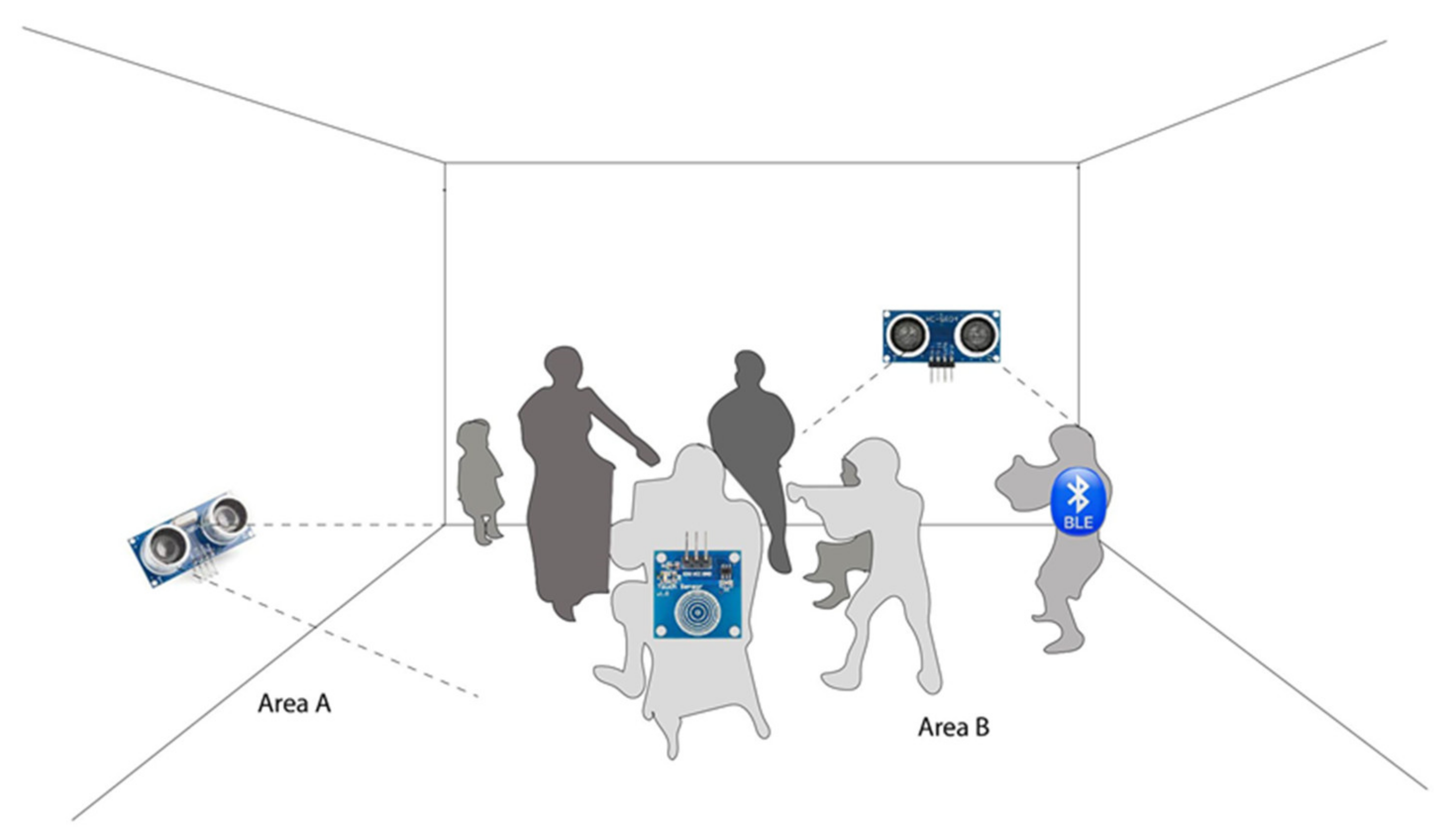

The infrastructure which is responsible for the realization of context-awareness includes BLE modules and capacitive touch sensors that are installed on Arduino microcontrollers and BLE beacons carried by visitors. The localization procedure is based on the following two independent sensing processes:

Firstly, the BLE module installed on the Arduino board is placed close to the related artifact and is continuously searching for BLE devices. Visitors are moving in the area, carrying BLE beacons (in the form of badges given to them) that advertise their presence. When the BLE module detects a BLE beacon, it retrieves the registered visitor mapped to this beacon and calculates his/her distance from the related artifact. The distance is calculated using the Received Signal Strength Indicator (RSSI). When the calculated distance is reduced below a predetermined threshold, the registered visitor is considered to be approaching the artifact, an event that is sent to the server. Accordingly, if the distance of a registered visitor becomes greater than the threshold, the visitor is considered to be moving away from the artifact, which is again sent to the server.
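The threshold logic described above can be sketched in a few lines. This is a minimal illustration and not the code running on the CHATS boards: it assumes the widely used log-distance path-loss model for converting RSSI to distance, and the calibration constants (`TX_POWER_DBM`, `PATH_LOSS_EXPONENT`, `THRESHOLD_M`) as well as the names `rssi_to_distance` and `ProximityTracker` are hypothetical.

```python
from dataclasses import dataclass, field

# Assumed calibration values; none of them are taken from the paper.
TX_POWER_DBM = -59        # expected RSSI at a distance of 1 m
PATH_LOSS_EXPONENT = 2.0  # 2.0 models free space; indoor values are usually higher
THRESHOLD_M = 1.5         # trigger distance around the artifact


def rssi_to_distance(rssi_dbm, tx_power_dbm=TX_POWER_DBM, n=PATH_LOSS_EXPONENT):
    """Estimate distance in metres with the log-distance path-loss model."""
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10 * n))


@dataclass
class ProximityTracker:
    """Tracks which visitors are currently within the threshold of one artifact."""
    threshold_m: float = THRESHOLD_M
    near: set = field(default_factory=set)

    def update(self, visitor_id, rssi_dbm):
        """Return 'approach', 'leave', or None for a single RSSI reading."""
        distance = rssi_to_distance(rssi_dbm)
        if distance < self.threshold_m and visitor_id not in self.near:
            self.near.add(visitor_id)
            return "approach"   # crossed the threshold inwards
        if distance > self.threshold_m and visitor_id in self.near:
            self.near.discard(visitor_id)
            return "leave"      # crossed the threshold outwards
        return None             # no state change, nothing to send to the server
```

Only the threshold crossings produce events, which matches the idea that an approach or a departure is reported once rather than on every reading. In practice RSSI is noisy, so a real deployment would probably smooth the readings or use two thresholds (hysteresis) before reporting a change.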

Secondly, the capacitive touch sensor installed on the Arduino board is also placed in a critical position on the artifact. When the visitor touches that area and triggers the sensor, the Arduino microcontroller sends that event to the server.

At the same time, the server, which is continuously executed, receives events from the various Arduino boards. Performing logical functions, it can identify whether a visitor has approached an artifact (first level of proximity) or has touched it, thus initiating the second level of proximity and interaction. Furthermore, proximity between visitors is indirectly calculated based on simultaneous proximity to an artifact. Thus, social context, i.e., grouping the visitors together, is acquired only in relation to an artifact.

It should be noted that the second layer of close proximity triggered by the capacitive touch sensor does not provide identification of the visitor, thus relying on BLE identification and mapping to a registered visitor to know who is currently interacting with a certain artifact. Overall, the described procedure disseminates the following contextual information to the DS module: who is approaching the artifact, which level of proximity has been reached, and who else is accompanying him/her.
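As an illustration of how the server could derive this contextual information, the sketch below keeps, per artifact, the set of visitors currently in BLE range and attributes anonymous touch events to that set. The class name `ContextServer`, the event names, and the shape of the returned dictionary are all assumptions made for the example; the paper does not specify the server's data structures.

```python
from collections import defaultdict


class ContextServer:
    """Hypothetical aggregator for events sent by the Arduino boards."""

    def __init__(self):
        # artifact id -> ids of visitors currently within BLE range
        self.nearby = defaultdict(set)

    def on_event(self, artifact_id, kind, visitor_id=None):
        """Process one event and return the context passed to the DS module.

        kind is 'approach' or 'leave' (BLE, carries a visitor id) or
        'touch' (capacitive sensor, carries no visitor id).
        """
        present = self.nearby[artifact_id]
        if kind == "approach":
            present.add(visitor_id)
        elif kind == "leave":
            present.discard(visitor_id)

        # Touch is the second level of proximity; otherwise level 1 if
        # anyone is in range, and level 0 if the area is empty.
        if kind == "touch":
            level = 2
        else:
            level = 1 if present else 0

        return {
            "artifact": artifact_id,
            "visitors": sorted(present),  # who is approaching or interacting
            "level": level,               # which level of proximity was reached
            "group": len(present) > 1,    # social context relative to this artifact
        }
```

Because the touch sensor cannot identify anyone, the touch is attributed to every visitor whose beacon is in range at that moment, which is the same indirect identification the text describes.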

4. Case Study

CHATS was tested over a painting named “Children’s Concert”. It was created around 1900 by a Greek artist called George Jacovides and can be found in the Greek National Gallery in Athens (Figure 2). The painting was awarded a prize (a gold medal) in the 1900 Paris Exposition. In the painting, there are seven characters in total. Children are playing music for an infant and its mother. The scene is set in a bright room with some furniture such as a table, a chair, and benches.

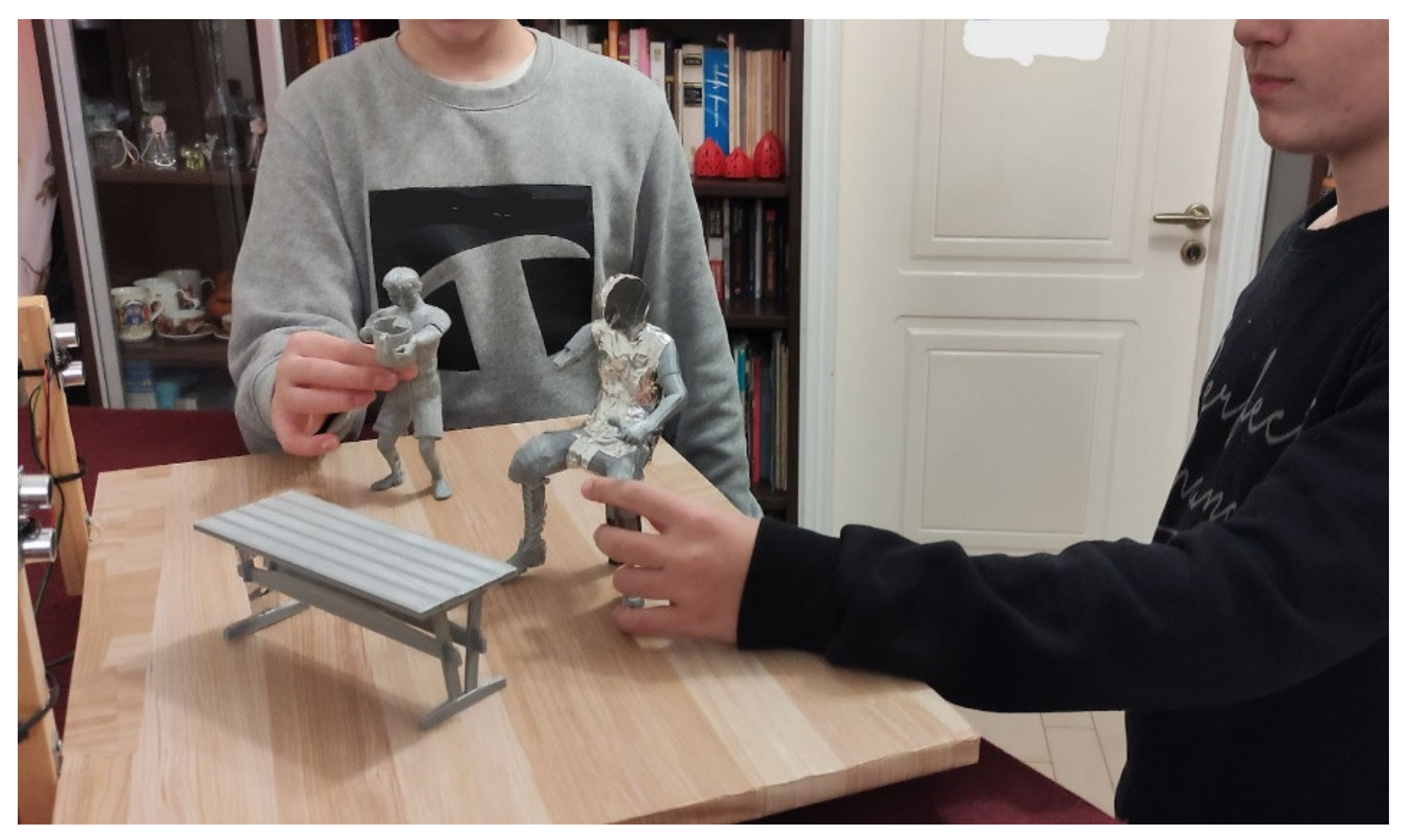

4.1. Tangible Interface Description and Characteristics

The 3D representation of the painting was part of another project, called ARTISTS [7,8]. Three-dimensional printing was applied to materialize some characters. The purpose of these prints was to create a tangible representation of the painting to be used in various instances:

For gallery visitors with vision disabilities;

For children, adding gamification features into gallery visits;

For any visitor wishing for a richer cultural user experience.

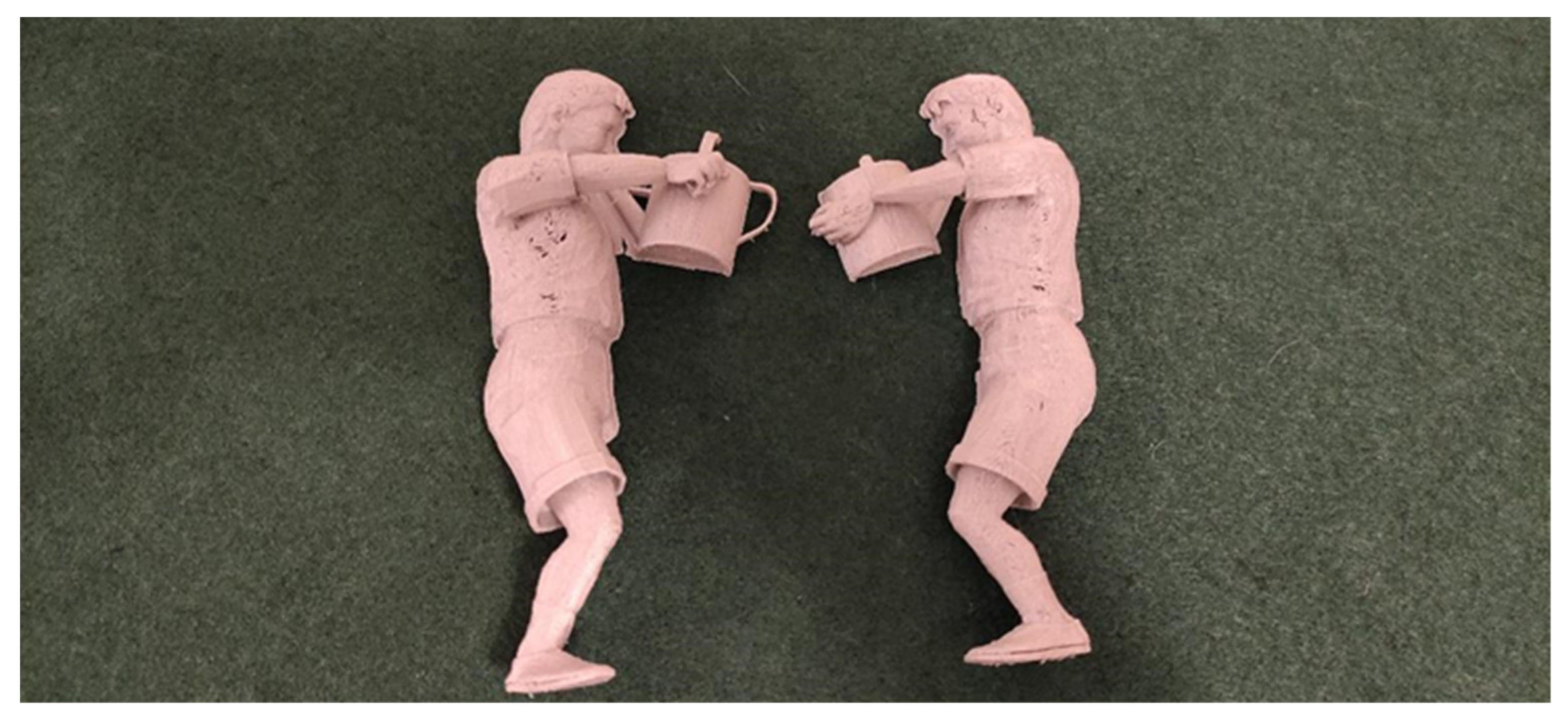

The printed representation model (diorama) works as a user interface, offering multiple interactions. The models’ fidelity was not a project target, as the case study mostly focuses on the interaction and the diorama represents a demo application for experimentation purposes.

Character 3D models were drawn in Cinema 4D and were then reprocessed so that 3D printing would be possible and efficient. After an initial trial-and-error period, it was decided that it would be more convenient for the models to be hollow inside and split in half. The empty space could fit electronics, less printing material would be used, and the printing process would be faster. A second round of processing and reprinting then started and resulted in half-model parts, plus additional elements of the painting scene, such as a table (Figure 3).

The material used for our printing was polylactic acid (PLA), a common material for homemade 3D prints, and the printer was an Ultimaker 3. The best result for our rather complicated models was achieved using an AA 0.4 print core at 180 °C, printing at a layer height of 0.2 mm with 40% infill.

All these parts were assembled and glued together into a diorama but, prior to this, the sensors had to be positioned inside the models. Arduino was chosen as the sensing and communication interface because of its versatility, availability, low cost, and open architecture. An ultrasonic distance sensor, a capacitive touch sensor, and a Bluetooth Low Energy (BLE) adapter, all connected to a Mega 2560 board, compose the electronics infrastructure; the code was implemented using the Arduino SDK and the Processing programming language.

Model characters were placed in fixed positions, in such a way that they both represent the drawn characters and are accessible by hand. Entering area A or area B, as shown in Figure 4, triggers interaction. The same happens when touching the child who is sitting on the chair and holding the drum. This character is positioned closest to the visitors and is referred to as “the drummer”. There is also a character that adds a humorous feeling to the artwork: the boy trying to play music by blowing air into a watering can. This character hosts our BLE receiver and is referred to as “the watering can” (Figure 5).

Distance sensors are quite accurate and cover the whole painting area. Proximity to a visitor, before entering by hand in the painting area, can be sensed using the BLE transceiver when paired with the beacon (BLE tag) carried by visitors. The initial pairing triggers the first audio message from the painting, which welcomes and attracts visitors.

Arduino’s sensors are installed into and around the models and, with the aid of BLE beacons, the proximity of visitors can be sensed. Touching a model or entering the area around models can also be sensed. Sensors trigger narratives about the painting in the form of sound. These narratives have a personalized form as they change according to the number of people involved and their behavior. Some form of profiling, matching with a predesigned persona, could also be processed earlier by the personalization module, however this function is not yet fully implemented. Narratives are based on actual historic data about the painting and the era, enriched with fictional features.

Those narratives are binaural recordings. The selection of binaural audio was part of the plan to impress the user and enhance the sense of presence in the virtual space. To keep the files small and fast to stream, narratives are short (about 10 s each) and are stored in an SQL Server database, accessible via HTTP. There are about ten recordings for each of the two 3D printed characters on the demo diorama, ten more recorded files not associated with any character, plus ten more recordings (audio files) which are triggered when a group of people is sensed in proximity to the construction (Table 2).

An algorithm decides which recording is going to be streamed, according to user interaction with the characters. Each new session starts with the welcome sound, and then three usage scenarios are considered: (a) the user does not touch the objects; (b) the user uses one hand and touches a character of the painting; (c) the user puts both hands in the constructed painting space. These scenarios change when a group of people (two or more) interacts with the painting. In that case, two different usage scenarios are considered: (a) users are approaching and passing by; (b) someone starts touching. In more complex situations, e.g., when a session starts with a single user and then someone else joins, the cycle flow must finish before a new session begins (Figure 6).
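The scenario selection described above can be expressed as a small decision function. The sketch below is only an illustration of the five cases listed in the text; the function name `select_scenario`, its parameters, and the scenario labels are hypothetical, and the actual flow in Figure 6 may contain further conditions.

```python
def select_scenario(visitor_count, hands_in_area, touching):
    """Map the sensed situation to one of the usage scenarios.

    visitor_count: number of visitors in BLE range of the painting
    hands_in_area: number of hands detected inside the painting space (0, 1 or 2)
    touching:      True if the capacitive touch sensor is triggered
    """
    if visitor_count <= 0:
        return None  # nobody in range, no session

    if visitor_count >= 2:
        # Group scenarios: (a) approaching and passing by, (b) someone touches.
        return "group_touching" if touching else "group_passing"

    # Single-visitor scenarios (a), (b) and (c).
    if hands_in_area >= 2:
        return "single_both_hands"
    if touching:
        return "single_one_hand_touch"
    return "single_no_touch"
```

To respect the rule that a running cycle must finish before a new session begins, a caller would evaluate this function once when the session starts and keep the single/group decision fixed until the cycle ends.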

For each narrative in the above flowchart, there is a leveling approach (Figure 7) where each recording belongs to a specific level in such a way that, when a sound is completed (the green rectangles) and the narrative continues to the next level, there is always a logical continuity. It is essential for the narratives to have a meaning and a structure, that is, to have a beginning, a middle, and an end, and the use of levels is a technique to accomplish that. Users can even be led from level 1 of one narrative to level 2 of another narrative without losing the coherence of the story. To make the above possible, special attention had to be paid when authoring the parts of each level, so that they could be related and stitched to the previous and next level.
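One way to picture the leveling approach is a catalogue of recordings keyed by narrative and level, where the level only ever advances while the narrative may change between steps. The sketch below assumes three levels (beginning, middle, end); the catalogue contents, the recording identifiers, and the function `next_recording` are hypothetical and serve only to illustrate the idea.

```python
import random

# Hypothetical catalogue: (narrative, level) -> candidate recording ids.
RECORDINGS = {
    ("drummer", 1): ["drummer_begin_a", "drummer_begin_b"],
    ("drummer", 2): ["drummer_middle_a"],
    ("drummer", 3): ["drummer_end_a"],
    ("watering_can", 1): ["can_begin_a"],
    ("watering_can", 2): ["can_middle_a", "can_middle_b"],
    ("watering_can", 3): ["can_end_a"],
}
LAST_LEVEL = 3  # assumed: beginning, middle, end


def next_recording(narrative, completed_level, rng=random):
    """Pick a recording for the level that follows completed_level.

    The narrative may differ from the one just heard; because the level
    always advances by one, the story keeps its beginning-middle-end shape.
    Returns (level, recording_id), or None when the story has ended.
    """
    level = completed_level + 1
    if level > LAST_LEVEL:
        return None
    options = RECORDINGS.get((narrative, level), [])
    if not options:
        return None
    return level, rng.choice(options)
```

For example, a visitor who has just heard a level 1 recording of the drummer and then touches the watering can would be served by `next_recording("watering_can", 1)`, which yields a level 2 recording of the other narrative.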

To make all the above feasible, the Arduino microcontroller is equipped with a Wi-Fi module (NINA) and uses the WiFiNINA library to create a web server that responds to HTTP calls. The same library was essential for the communication and data exchange between the microcontroller and the AR portable device. Augmented Reality (AR) techniques are then used to digitally visualize data from the narratives. Users can watch the characters “come to life” and narrate their stories through AR smart glasses, and they can also listen to binaural sounds from the surroundings of the painting. Moreover, data from the Arduino sensors about the user’s movements and position dynamically change the digital information available through the smart glasses while also improving the physical interaction with the system. AR techniques enhance the cultural user experience by immersing users in the digitally reconstructed painting and letting them engage with its 3D models, thus combining digital storytelling with 3D virtual immersive learning environments [43].

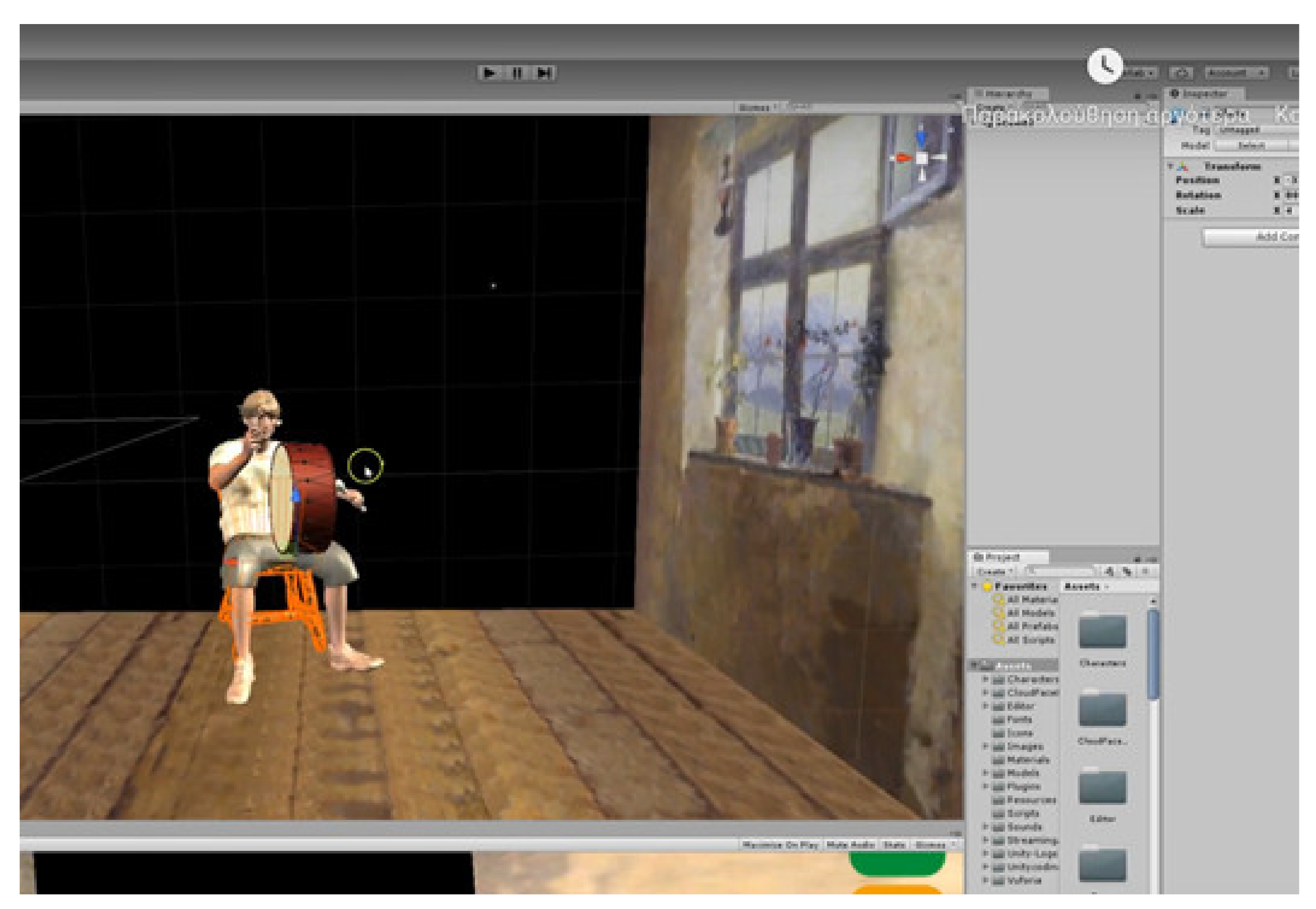

The AR demo application (Figure 8) was built in Unity with Vuforia as the AR engine, but some other software tools were also used. Animations were all imported from Adobe’s Mixamo, while the painting’s environment was crafted using Adobe Photoshop.

The sounds used for the application were either recorded using a 3Dio FS binaural microphone or imported from common related web portals. The smart glasses used for the experiment were Microsoft’s HoloLens 2 (Figure 9).

Vuforia allows for the visual recognition of the painting representation and, while using the AR device, when the user focuses at a certain angle, activates the projection of the augmented content on the diorama. The smart glasses perform well, but improvements should be made to the application to align the augmented visual content more accurately over the tangible objects.

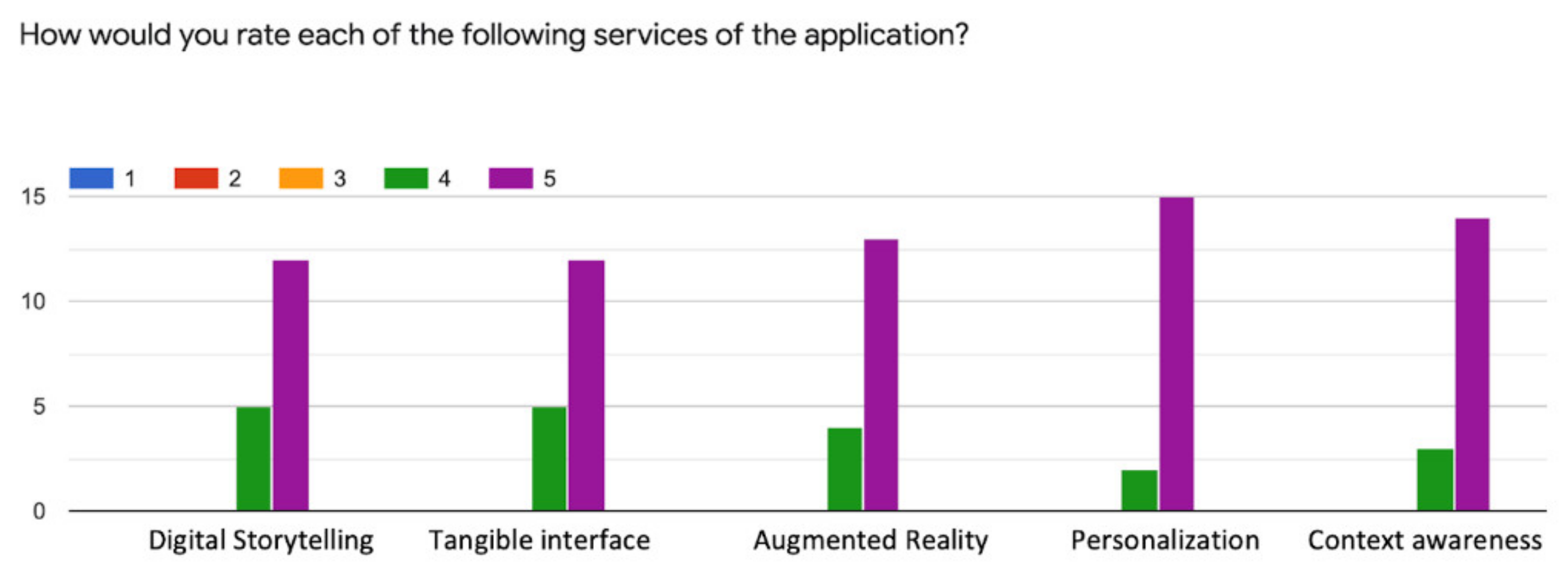

4.2. Evaluation

One of the most basic stages in the implementation of an application is its evaluation by the users themselves. Conclusions about the user experience are very important, and a proper reading of the results helps developers optimize the application. A questionnaire, interviews, and user observations, as stated earlier, are the most widely known methods of evaluation. In this section, we present the results of testing the usability and effectiveness of the CHATS application and evaluate the feedback received from the test users, based on our interviews, questionnaire, and user observation procedures [42].

Forty-two users with no previous experience in DS tangible applications were recruited. When recruiting, we opted for a balanced sample across age and gender and tried to match the age demographics with those of our personas, which meant that we were looking for participants fitting into five different age ranges: young children (but not younger than 10), middle-school-age children, young adults, adults, and people in middle or late adulthood (Figure 10). Although we were not able to achieve a perfect balance, we managed to collect a full set of data for all 42 participants, who spent approximately two hours each experiencing the CHATS prototypes and participating in the evaluation [44].

Participants were invited to complete a series of tasks. Different sets of storytelling experiences were designed specifically for different visitor profiles based on the personalization module described earlier.

To overcome the challenges, our study design sought to strike a balance between different methods, including the use of observation, interviews, questionnaires, as well as the automatic recording of system logs. Specifically, the CHATS system evaluation employed the mixed methods described below (Figure 11, Figure 12 and Figure 13):

A general, pre-experience demographic questionnaire, administered verbally in the form of an interview;

Video and audio recording, together with note-taking, to observe visitors’ behavior throughout their interaction with the CHATS system;

Two semi-structured, post-experience interviews per visitor/group, conducted immediately after the end of the visit. The interviews were delivered in a conversational tone in order to draw out visitors’ impressions of what they had experienced.

After the experience, the participants discussed it with our experts, who concluded the following:

Most of the participants agreed that it was a pleasant educational experience and that they learned new things about the Jacovides painting and tangible Interactive Narratives. In the post-experience interviews, all users found the stories interesting and entertaining;

On a scale of 1 to 5, the users rated the CHATS application 4 on how strongly it attracted them to continue using it after 2 min;

Most of the participants would appreciate the CHATS application as a massively multiplayer online experience accessed through social data login;

Some players found the tasks very easy and suggested having a longer version, adding tasks not strictly related to educational content, and including rewards;

Most visitors found the visual assets presented on AR glasses fascinating, reporting that media assets aided their understanding;

Similar to other evaluations concerning mobile devices in cultural heritage, an important observation was that the majority of users, especially younger ones, were fully absorbed by the imagery shown on the AR glasses and spent more time looking at the screen than observing the exhibits;

In some cases, visitors felt that the visuals augmented the experience by bringing forth exhibit details that were not otherwise visible or related to informational content and artifacts;

Some visitors liked to be guided by the storytelling experience, but others would have liked to break away from it and focus on an unrelated exhibit that caught their attention;

Regarding usability, both the observations and visitors’ responses showed that, overall, the interface was regarded as straightforward and easy to use, even by visitors not experienced with touch screen devices, smartphones, or AR glasses.
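The 1-to-5 rating reported above is given as a single value, and the text does not state how it was aggregated. As a minimal sketch of how such ratings could be summarized, the snippet below computes the median, mean, and distribution. The function name is hypothetical and the scores are invented placeholders for illustration only; they are not the study's data.

```python
from collections import Counter
from statistics import median


def summarize_likert(scores, low=1, high=5):
    """Summarize Likert-scale ratings: count, median, mean, and distribution."""
    if not scores:
        raise ValueError("no scores given")
    for score in scores:
        if not low <= score <= high:
            raise ValueError(f"score {score} outside {low}-{high}")
    counts = Counter(scores)
    return {
        "n": len(scores),
        "median": median(scores),
        "mean": round(sum(scores) / len(scores), 2),
        # Include every scale point, even those nobody selected.
        "distribution": {v: counts.get(v, 0) for v in range(low, high + 1)},
    }


# Placeholder ratings, NOT taken from the CHATS evaluation.
example_scores = [4, 5, 4, 3, 4, 5, 4]
print(summarize_likert(example_scores))
```

For ordinal data of this kind, the median and the full distribution are often more informative than the mean alone, which is why the sketch reports all three.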

5. Conclusions

In this research work, an architecture for dynamic digital storytelling on tangible objects, named CHATS, was proposed. CHATS combines storytelling techniques such as narratives, augmented reality visualization, and binaural audio in a dynamic environment that is personalized and context-aware. The system identifies user proximity and interaction using appropriate sensors and delivers an enhanced user experience based on user behavior.

The proposed architecture was evaluated in a use case including 3D printed objects that represented figures of a painting. The tangible replication of painted characters allowed for a vivid representation of the scene depicted in the painting, offering new and exciting ways of digital storytelling associated with the scene. The evaluation showed that the involved users experienced a more immersive story compared to more traditional digital storytelling approaches. The evaluation of the experiences deployed for the CHATS system provided insights into real users’ interactions in a visiting context, a setting that is challenging to study. The studies have revealed both favorable and less favorable aspects of the deployed system. Overall, visitors had a very positive response to the experience, indicating that less conventional approaches to engaging with cultural content, such as storytelling, may contribute greatly to more compelling visiting experiences.

DS experts are keen to invest effort in providing different visitors with the right information at the right time and with the most effective type of interaction. CHATS developed a platform where personalization technology helps DS experts to tailor aspects of a digitally enhanced visiting experience, the interaction modalities through which the content is disclosed, and the pace of the visit, both for individuals and for groups. We believe that the direct involvement of cultural heritage professionals in the co-design of CHATS technology, as well as the extensive evaluation with visitors in field studies, was instrumental in shaping a holistic approach to personalization that fully exploits the new opportunities offered by tangible and embodied interaction.

CHATS can be further extended to allow for more advanced personalization and context-aware procedures that capture more behavioral patterns of the visitor, such as the tracking of complex movement beyond proximity and the identification of visitor focus on specific tangible objects. Moreover, additional methods of digital storytelling, apart from prerecorded audio narratives and AR visualization, can be integrated into the architecture. Future storytelling research focuses on computational DS techniques for emergent narratives. These challenges will be addressed in future work, and the evaluation will be conducted in a larger-scale environment.
