Intelligent Robotic Welding Based on a Computer Vision Technology Approach

Abstract

1. Introduction

2. Background and Summary

- A single-line laser, which can detect all weld types except T-joints and uses the SVM method. It suits all image-processing algorithms but does not capture enough detail about the welds.

- An active vision sensor, which detects I and Y grooves, tube sheets, and spot welds, and which improves weld identification (speed, accuracy, and electrode resistance are measured). It is suitable for line tracking, regional center extraction, and direct guiding, and it shares the features of a single-line laser. Active vision sensors include:

- A cross-line laser, which is used for horizontal and vertical weld lines, apertures, and T-joints. It detects weld variation values and supports weld line width tracking, aperture tracking, and weld seam tracking using a spatial–temporal Markov model, intensity mapping, and a piecewise fitting marking method. Its features suit T-joints and cross-seam shapes.

- A multi-line laser, which is used for butt, lap, and complex curved seams. Its weld seam tracking is based on a kernelized correlation filter, and its features are weld seam tracking and complicated algorithms.

- A grid-line laser, which is used for large V-grooves and surface welds and is applied to multi-layer and 3D weld construction.

- A dot-matrix laser, which reconstructs 3D weld surfaces in real time based on the slope field of the reflected laser and offers wide weld coverage and situation-specific welding.

- A circular laser, which is used for all welds except T-joints and is suitable for seam tracking and 3D image processing.

- A welding layer measure, which is used for the welding layer and has a complex vision system.

- Passive vision sensors include:

- (a) Weld pool detection, which is used for seam tracking with neural network vision and is suitable for real-time edge detection, but it is affected by the process parameters.

- (b) Swing arc extraction, which controls penetration in swing-arc welding with narrow gaps. It is also used for deviation detection using extraction algorithms and local recognition, and it is suitable for considerable groove depths, though it requires an infrared camera.

3. The System Description

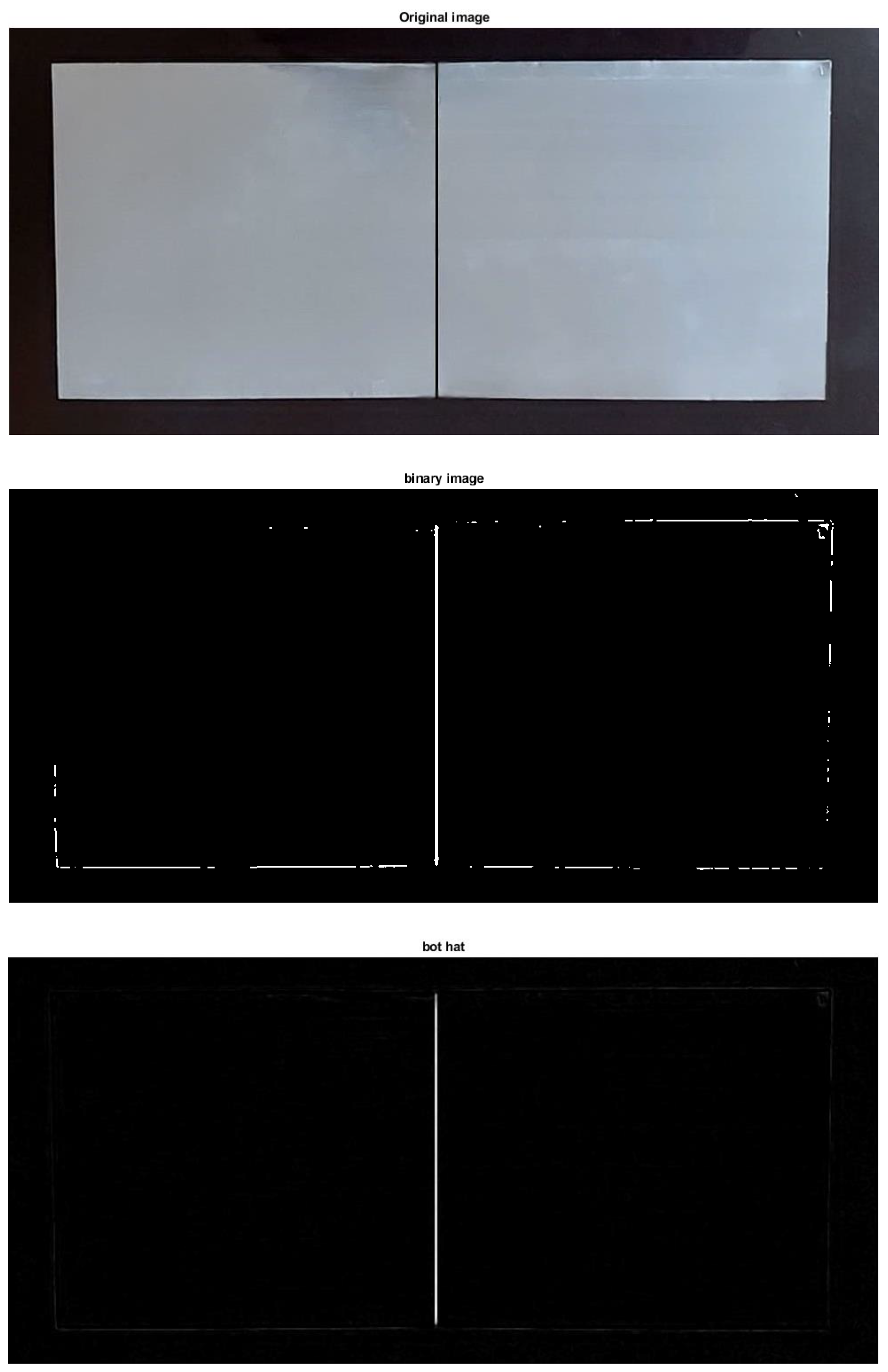

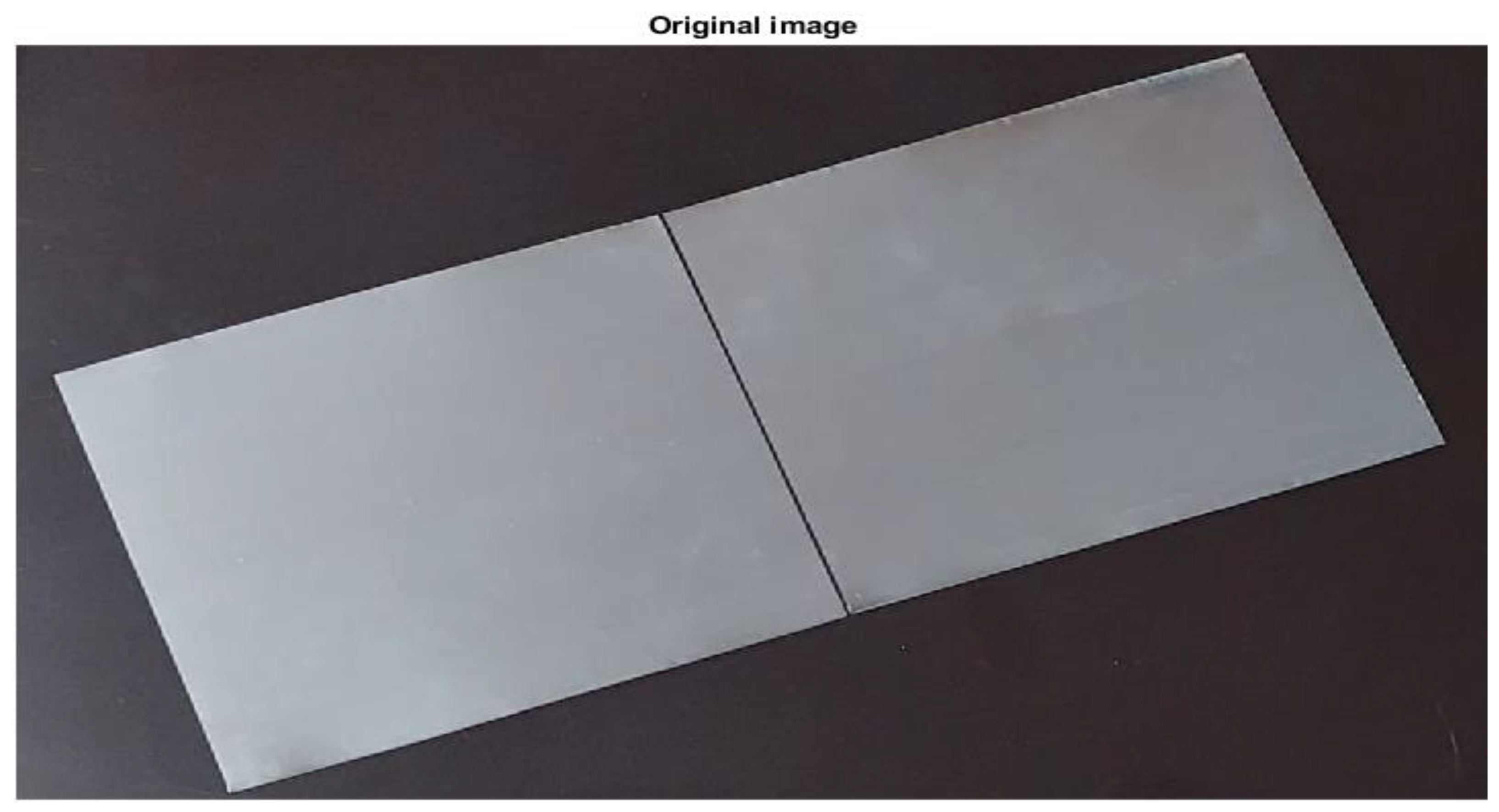

4. Recognition of the Target Line

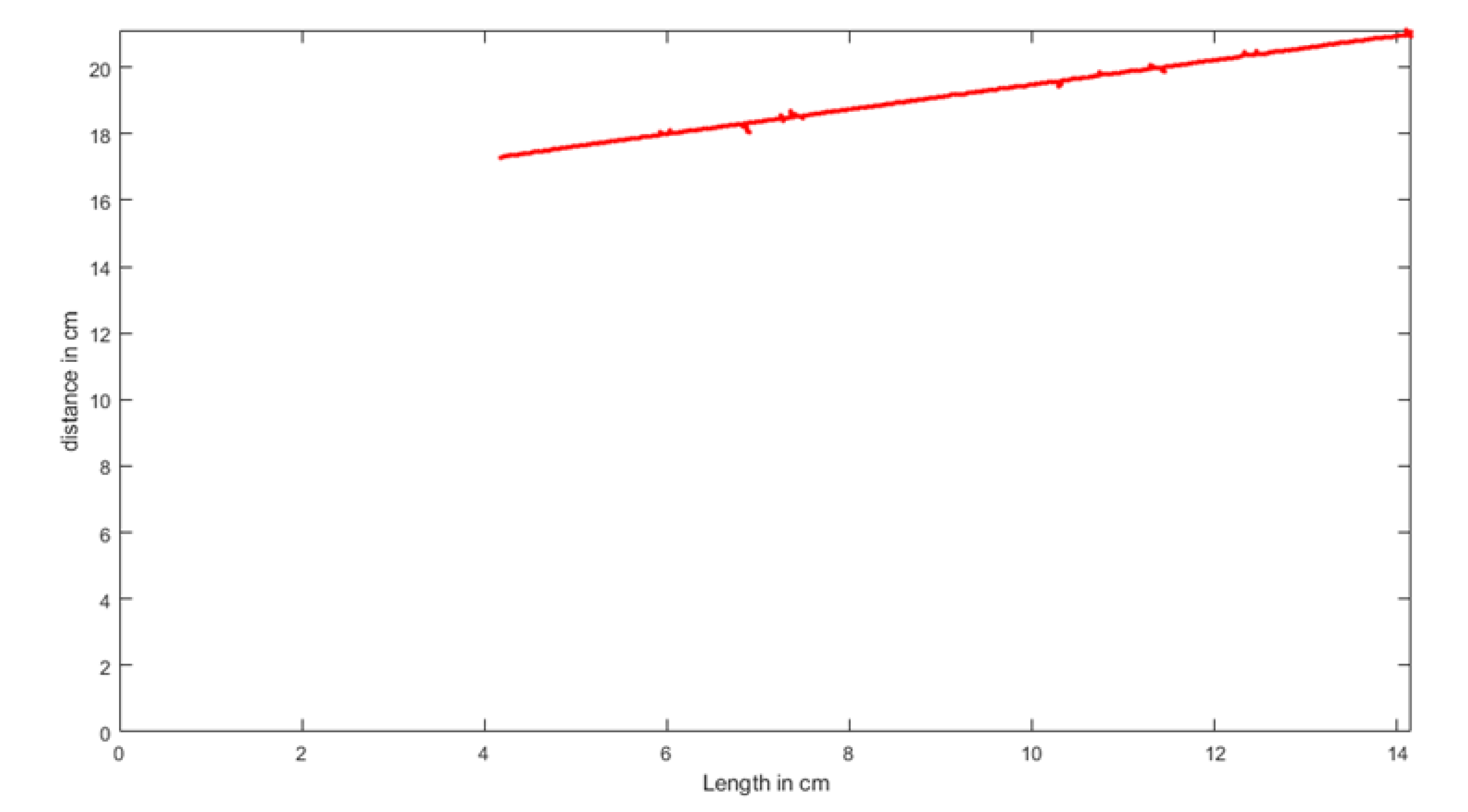

- The calibration object is rectangular in shape, and its length and width are measured and represented by dx and dy, respectively.

- The camera and the calibration object are placed level and parallel to each other on a flat floor. The calibration object should be placed precisely at the center of the camera’s field of view.

- The distance between the calibration object and the camera is measured and denoted by dz.

- A picture is taken to verify that the installation is correct by ensuring that the edges of the calibration object are aligned with the rows and columns of the image.

- The length and width of the calibration object (dx and dy) are then measured in pixels in the image.
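The calibration steps above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: it assumes an ideal pinhole camera viewing the calibration object head-on with no lens distortion, and the names `PixelCalibration`, `calibrate`, and `pixel_offset_to_mm` are hypothetical. It takes the physical size of the object (dx, dy), the camera distance (dz), and the size of the object in pixels, and derives a millimetre-per-pixel scale and an approximate focal length in pixels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PixelCalibration:
    """Scale factors from one image of a rectangular calibration object."""
    mm_per_px_x: float  # millimetres per pixel along image columns
    mm_per_px_y: float  # millimetres per pixel along image rows
    focal_px_x: float   # pinhole focal length in pixels, estimated from dx
    focal_px_y: float   # pinhole focal length in pixels, estimated from dy


def calibrate(dx_mm, dy_mm, dz_mm, dx_px, dy_px):
    """Derive scale factors from the measured calibration quantities.

    Assumes the object plane is parallel to the image plane and the
    object edges are aligned with the image rows and columns.
    """
    if min(dx_mm, dy_mm, dz_mm, dx_px, dy_px) <= 0:
        raise ValueError("all calibration measurements must be positive")
    return PixelCalibration(
        mm_per_px_x=dx_mm / dx_px,
        mm_per_px_y=dy_mm / dy_px,
        # Pinhole relation: size_px = focal_px * size_mm / distance_mm
        focal_px_x=dx_px * dz_mm / dx_mm,
        focal_px_y=dy_px * dz_mm / dy_mm,
    )


def pixel_offset_to_mm(cal, du_px, dv_px):
    """Convert an image offset (pixels) to a metric offset in the object plane."""
    return du_px * cal.mm_per_px_x, dv_px * cal.mm_per_px_y


# Made-up example values: a 100 mm x 50 mm object, 500 mm from the camera,
# that spans 400 x 200 pixels in the image.
cal = calibrate(dx_mm=100.0, dy_mm=50.0, dz_mm=500.0, dx_px=400.0, dy_px=200.0)
offset_mm = pixel_offset_to_mm(cal, du_px=40.0, dv_px=-20.0)
```

The scale factors hold only at the calibrated distance dz. If the camera height changes, the object must be re-imaged or the scale recomputed from the focal length estimate.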

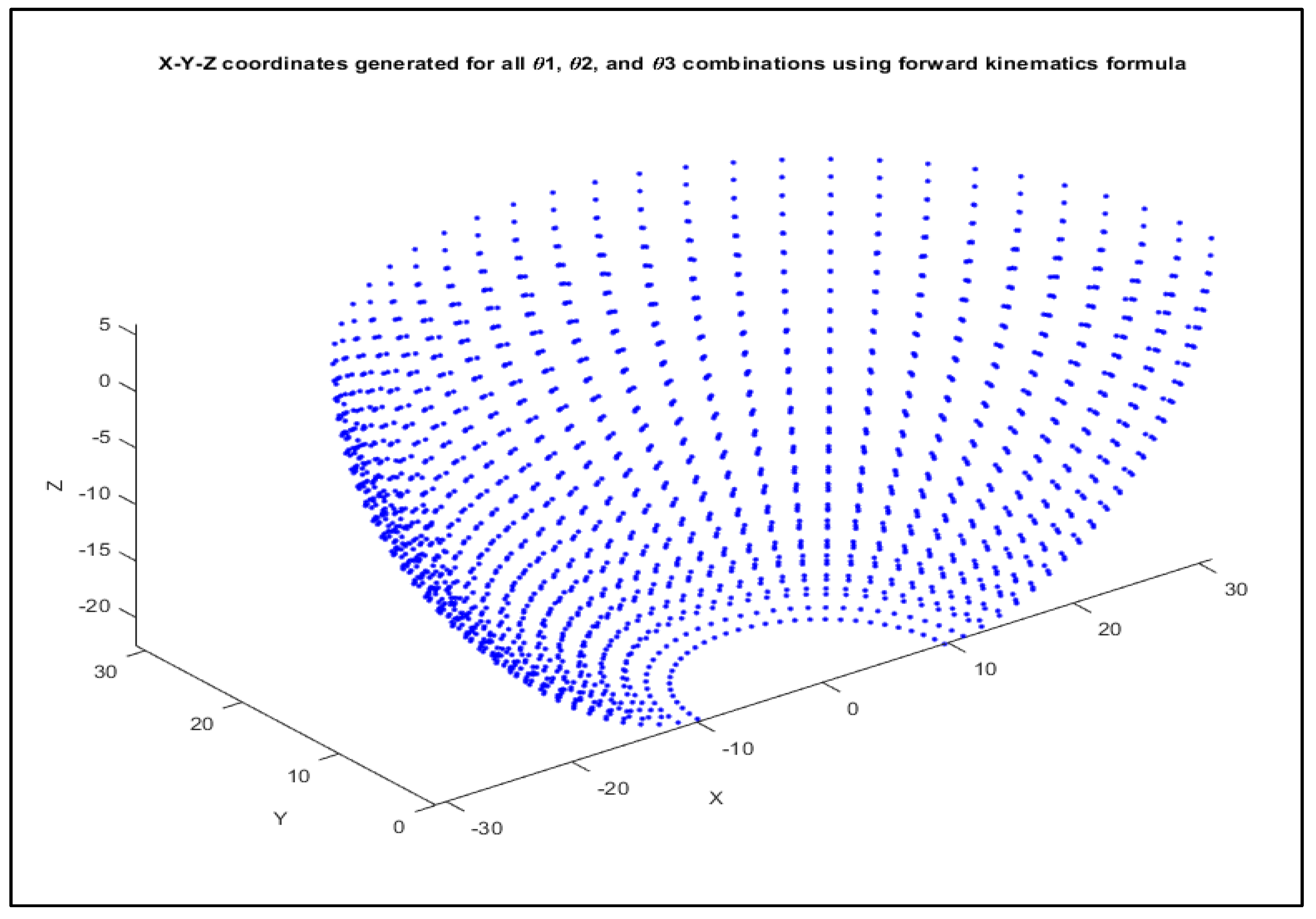

5. Robotic Forward and Inverse Kinematics

6. The Robot Forward and Inverse Kinematics Using ANFIS

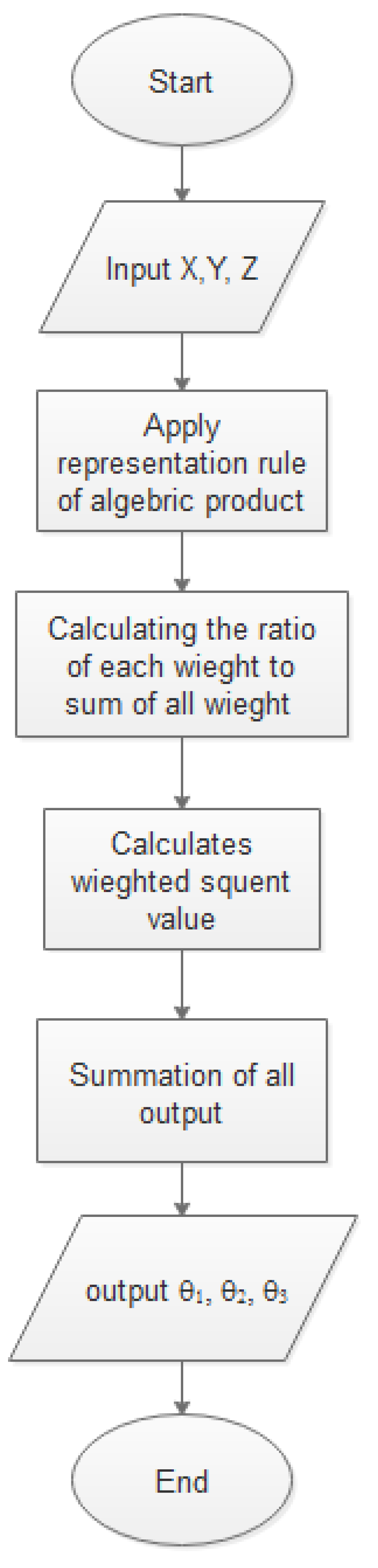

7. The ANFIS Simulation for Robotic Arm Kinematics

8. Results and Discussions

9. Conclusions and Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

AL-Karkhi, N.K.; Abbood, W.T.; Khalid, E.A.; Jameel Al-Tamimi, A.N.; Kudhair, A.A.; Abdullah, O.I. Intelligent Robotic Welding Based on a Computer Vision Technology Approach. Computers 2022, 11, 155. https://doi.org/10.3390/computers11110155
