Simple Summary

This study explores whether treatment planning models developed in one country can be effectively used in another, helping to improve and share expertise in cancer radiotherapy. The researchers focused on right whole breast radiation treatments, creating multiple models from a large national group and testing them on patients from a different international group. The analysis examined how accurately the models predicted radiation doses to key organs, such as the lungs, and whether these predictions aligned with actual clinical data. The findings showed that most models worked well across different patient groups, with good accuracy in predicting lung doses. This suggests that knowledge-based planning models can be reliably shared between institutions, potentially saving time, improving treatment quality, and reducing variability in patient care. Such model sharing could strengthen collaboration between centers and accelerate advancements in radiotherapy planning worldwide.

Abstract

Background: Knowledge-based (KB) planning is a promising approach to model prior planning experience and optimize radiotherapy. To enable the sharing of models across institutions, their transferability must be evaluated. This study aimed to validate KB prediction models developed by a national consortium using data from another multi-institutional consortium in a different country. Methods: Ten right whole breast tangential field (RWB-TF) models were built within the national consortium. A cohort of 20 patients from the external consortium was used for testing. Transferability was defined when the ipsilateral (IPSI) lung first principal component (PC1) was within the 10th–90th percentile of the training set. Predicted dose–volume parameters were compared with clinical dose–volume histograms (cDVHs). Results: Planning target volume (PTV) coverage strategies were comparable between the two consortia, even though significant volume differences were observed for the PTV and contralateral breast (p = 0.002 and p = 0.02, respectively). For the IPSI lung, the standard deviation of predicted mean dose/V20 Gy was 1.13 Gy/2.9% in the external consortium versus 0.55 Gy/1.6% in the training consortium. Differences between the cDVH and the predicted IPSI lung mean dose and the volume receiving more than 20 Gy (V20 Gy) were <2 Gy and <5% in 88.7% and 92.3% of cases, respectively. PC1 values fell within the 10th–90th percentile for ≥90% of patients in 6/10 models and 65–85% for the remaining 4. Conclusions: This study demonstrates the feasibility of applying RWB-TF KB models beyond the consortium in which they were developed, supporting broader clinical implementation. This retrospective study was supported by AIRC (Associazione Italiana per la Ricerca sul Cancro) and registered on ClinicalTrials.gov (NCT06317948, 12 March 2024).

1. Introduction

Breast cancer remains the most common malignancy in women worldwide and a leading cause of cancer mortality [1]. Contemporary management combines surgery with adjuvant radiotherapy (RT) to reduce local recurrence and improve survival [2]. However, ‘manual’ tangential field (TF) planning is time-consuming and prone to inter-planner and inter-institution variability, with a potential downstream impact on normal tissue doses and plan quality. Automated approaches—including protocol-based, multicriteria, and knowledge-based (KB) planning—offer standardization and efficiency, but their reliability across countries and institutions remains a critical, under-studied question that our work addresses [3].

The standard treatment for breast cancer typically involves radiation therapy (RT) administered as adjuvant therapy following surgery, often combined with chemotherapy and/or hormonal therapy [4,5]. Currently, whole breast irradiation is a well-established therapeutic approach, with tangential fields (TF) being the predominant and most widely used method, primarily with manual optimization. Manual TF planning and optimization introduce clinically relevant variability in target coverage and heart/lung doses, depend heavily on individual expertise and time pressure, and create throughput bottlenecks that limit standardization and can delay the start of treatment. To avoid this labor-intensive manual process, which also relies on the expertise of the planner, various automated planning approaches have been proposed, including protocol-based, multicriteria optimization, and knowledge-based (KB) optimization [6]. Among the available automation paradigms, protocol-based/template methods promote consistency but are inherently site-specific and less adaptive to patient geometry. Multi-criteria optimization (MCO) can expose Pareto-optimal trade-offs, but it continues to rely heavily on user interaction, preserving inter-planner variability. Deep-learning-based planning solutions show promise but currently require large, harmonized training datasets and are less widely deployed across centers worldwide. In contrast, KB optimization is a data-driven automated planning method that uses prior knowledge and experience to predict an “optimally” achievable dose for a new patient from a similar population.
This approach offers several advantages, such as reducing or eliminating inter-planner variability [7], reducing planning time [8], minimizing suboptimal plans [9], avoiding dose distributions that deviate far from previous clinical experience, permitting unbiased plan quality comparison [10,11,12], and aiding in training and education [13]. However, there are also drawbacks, including the ‘garbage in, garbage out’ risk if the plans included in the training datasets are not of the highest quality, the time required for generation/validation to translate the KB method into effective and automatic plan solutions, and the need for updates in case of protocol modifications [14,15,16,17]. Within single institutions, various publications have demonstrated overall plan quality and efficiency improvements [7,18,19,20,21]. When applied on a multi-institutional scale, KB planning can achieve several notable outcomes: it can serve as a strong rationale for in silico plan comparison and QA, it is powerful for QA in clinical trials, it can assist in patient treatment modality selection (e.g., particle vs. photon), and it allows the sharing of KB models, especially with the commercialization of KB tools. However, challenges still exist in large-scale KB model implementation [22], including inter-institutional protocol variability (dose fractionation, technique, etc.), variability in the definition and contouring of the clinical target volume (CTV), planning target volume (PTV), and organs at risk (OARs) between institutes, the lack of a well-established strategy/workflow for KB methods, and the interchangeability of KB methods between different centers.

It is well established that, while plan quality may vary considerably between planners of the same institution, much larger variations can be expected among different clinical institutions [23,24,25,26,27,28]. A major limitation of KB approaches is that inter-institutional variability is not considered in the training phase, which makes applying a KB model outside the generating center challenging. Recent publications (Supplementary Material S1) have demonstrated the feasibility of sharing models across institutions. However, in order to share models, it is first necessary to assess the degree of plan transferability between different institutes; this is the primary aim of this study, which consists of an inter-consortia validation of DVH prediction models.

Multi-institutional KB plan prediction models were previously generated to assess differences in plan performance within a national consortium for right whole breast (RWB) irradiation using TF. The current investigation therefore aimed to quantify the resulting KB plan predictions in a separate multi-institutional consortium in another country to assess their “geographical” transferability. Because patient anatomy may vary between countries and PTV/OAR segmentation may vary between consortia, assessing the models’ transferability is crucial. To our knowledge, this is the first KB model validation for whole breast irradiation carried out across national boundaries.

2. Materials and Methods

2.1. KB Model Definition, Model Set Criteria, and Intra-Consortium Validation

This retrospective study was conducted under the MIKAPOCo (Multi-Institutional Knowledge-based Approach for Planning Optimization for the Community) project. The study was supported by a grant from AIRC (Associazione Italiana per la Ricerca sul Cancro) and approved by the Ethics Committee of the host institution (IRCCS Ospedale San Raffaele, protocol number IG23150). The project has been registered on ClinicalTrials.gov (trial registration number: NCT06317948; date of registration: 13 March 2024). The aim of MIKAPOCo was to build consistent KB model libraries and incorporate inter-institutional variability into plan prediction, focusing on the whole breast (WB) irradiation scenario. MIKAPOCo developed ten distinct KB models following standardized criteria, specifically for patients treated with RWB-TF using manually optimized wedges or field-in-field (FiF) techniques [28]. Each of the ten centers built its own KB model using the Model Configuration tool of RapidPlan (RP, Varian Medical Systems, Inc., Palo Alto, CA, USA) following common criteria. RapidPlan configures a model using existing clinical plans, combining principal component analysis (PCA) and regression techniques. Using the modeled data, the tool generates a dose–volume histogram (DVH) prediction for the optimization of a new patient case. Different Eclipse software versions (from V13.5 to V16.1) were used based on the availability at each institute. The models were trained and validated using a common methodology, requiring a minimum number of patients (>70), adherence to national guidelines for contouring (CTV/PTV/OARs), consistency in techniques (wedged or FiF), outlier elimination criteria, and evaluation of the goodness of the regression, which were jointly discussed and defined, as reported in Tudda et al. [26] (also in Supplementary Material S2). As reported in Tudda et al. [28], each model was tested on a cohort of 20 patients, randomly selected from ten MIKAPOCo institutes (two per center), to evaluate the models’ variability and transferability. To enhance robustness, we intentionally replicated the methodology of Tudda et al. [28], treating their results as the starting point and proof of concept, thereby enabling international, inter-consortium validation of knowledge-based plan prediction modeling for whole breast radiotherapy.

2.2. Comparison of the Intra- and Inter-Consortium Models’ Prediction Variability and Transferability

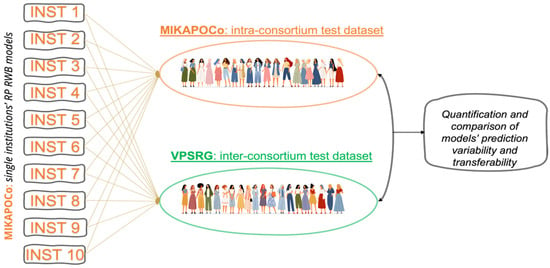

An external model validation test was performed using 20 patients from another national consortium, the Victorian Public Sector RapidPlan Group (VPSRG). Within this consortium, two independent centers each contributed 10 patients for validation. The assessment included evaluating dose–volume model predictions and the transferability of the models between consortia. Each of the ten models from MIKAPOCo was tested on the 20 patients provided by VPSRG (Figure 1).

Figure 1.

Ten KB models generated within the national consortium MIKAPOCo were evaluated and quantified in terms of variability and transferability on an intra-consortium dataset (MIKAPOCo) and an inter-consortium dataset (VPSRG).

The VPSRG patients’ cohort was initially planned and treated with TF using manually optimized wedges or FiF techniques, using electronic tissue compensation (eComp) and skin flash. Plans’ clinical goals are reported in Supplementary Material S3. For each patient, 10 RP estimations were generated by setting energy and beam arrangements consistent with MIKAPOCo’s recommendations, based on a dedicated protocol (Supplementary Material S4). The predicted DVHs generated by each of the 10 MIKAPOCo models for all OARs, including heart, contralateral breast, ipsilateral (IPSI) lung, and contralateral lung, were compared, providing the first quantification of inter-consortia variability in plan performances. All models were run using the most commonly employed fractionation regimen of 40 Gy in 15 fractions. For each OAR, prediction bands were exported by running a dedicated script.

PTVs were compared between the two consortia (the CTV–PTV margin used by both consortia was 5 mm, applied isotropically). The percentage of VPSRG PTVs falling inside the MIKAPOCo models in terms of joint target volume (a parameter provided by the RapidPlan software (V16.1), defined as the volume of all matched target structures in cm³) was also analyzed to quantitatively evaluate differences in contouring and dataset variations due to anatomical differences. Dose–volume parameters were analyzed using PTV metrics (V95%, V105%, D1%, and D99%) to assess major variations in the planning approach.
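As a reminder of what the PTV metrics above measure, the following minimal sketch computes Vx-type and Dx%-type quantities from a list of voxel doses. It assumes equally sized voxels and absolute dose thresholds in Gy; the function names `volume_at_least` and `dose_to_hottest` are hypothetical and are not part of RapidPlan or Eclipse, and the toy dose values are illustrative only.

```python
from math import ceil
from typing import Sequence


def volume_at_least(doses_gy: Sequence[float], threshold_gy: float) -> float:
    """Percentage of voxels receiving at least threshold_gy (a Vx-type metric)."""
    if not doses_gy:
        raise ValueError("doses_gy must not be empty")
    return 100.0 * sum(d >= threshold_gy for d in doses_gy) / len(doses_gy)


def dose_to_hottest(doses_gy: Sequence[float], percent: float) -> float:
    """Minimum dose inside the hottest `percent` of the volume (a Dx%-type metric)."""
    if not doses_gy:
        raise ValueError("doses_gy must not be empty")
    ordered = sorted(doses_gy, reverse=True)
    # Number of voxels that make up the hottest `percent` of the structure.
    k = max(1, ceil(percent / 100.0 * len(ordered)))
    return ordered[k - 1]


# Toy voxel doses (Gy) for a 40 Gy prescription; not patient data.
toy_ptv = [38.0, 39.0, 40.0, 41.0, 42.0]
v95 = volume_at_least(toy_ptv, 38.0)   # threshold = 95% of 40 Gy
v105 = volume_at_least(toy_ptv, 42.0)  # threshold = 105% of 40 Gy
d1 = dose_to_hottest(toy_ptv, 1.0)
d99 = dose_to_hottest(toy_ptv, 99.0)
```

Thresholds are passed in Gy rather than as a fraction of the prescription so that the comparison does not depend on floating-point rounding of the percentage.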

To determine if model predictions were influenced by overlap in geometry and anatomical features with the original training cohort, the validity of the fit for each patient’s geometrical features in the VPSRG test set was examined. Additionally, the estimated statistics for each selected OAR (ipsilateral lung, heart, contralateral breast, and contralateral lung) were analyzed using RapidPlan’s Model Configuration tools. For inter-consortia transferability, ipsilateral lung DVH predictions were categorized, considering the V20%–V80% range, as optimal (cDVH within the predicted DVH band), suboptimal (cDVH below the band), improved (cDVH above the band), or failed (predicted DVH band strongly disagrees with cDVH). Examples are provided in Supplementary Material S5.
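The four-way categorization can be illustrated with a short sketch. The text does not give a numerical rule, so the majority vote over sampled DVH points and the `fail_margin` threshold used below are assumptions made purely for illustration; the function name `categorize_prediction` is hypothetical. Category labels follow the definitions above.

```python
from typing import Sequence


def categorize_prediction(
    clinical: Sequence[float],
    lower: Sequence[float],
    upper: Sequence[float],
    fail_margin: float = 15.0,  # hypothetical threshold, in % volume
) -> str:
    """Label one predicted DVH band against the clinical DVH.

    All three sequences hold volume (%) sampled at the same dose points.
    """
    if not clinical or not (len(clinical) == len(lower) == len(upper)):
        raise ValueError("inputs must be non-empty and of equal length")

    below = sum(c < lo for c, lo in zip(clinical, lower))
    above = sum(c > up for c, up in zip(clinical, upper))
    inside = len(clinical) - below - above

    # Largest distance of the clinical curve from the band (0 when inside).
    worst = max(max(lo - c, c - up, 0.0) for c, lo, up in zip(clinical, lower, upper))
    if worst > fail_margin:
        return "failed"
    if inside >= below and inside >= above:
        return "optimal"
    # Labels follow the text: cDVH below the band -> "suboptimal",
    # cDVH above the band -> "improved".
    return "suboptimal" if below > above else "improved"
```

In practice the sampled points would be restricted to the portion of the curve considered in the analysis, and the failure rule would be replaced by whatever criterion the reviewers applied.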

The predicted ipsilateral lung mean dose and V20 Gy were then calculated to quantify the variability of plan predictions between consortia. Additionally, for the VPSRG test dataset, the clinical DVH (cDVH) was compared with the predicted DVH. For each patient (i = 1, …, 20) and each model (j = 1, …, 10), the mean predicted DVH values (the quantities averaged in Equation (1)) were extracted as the mean values between the upper and lower predicted DVH bands, as described in Tudda et al. [26], and compared with the cDVH. The corresponding inter-institute standard deviations (SD) were assessed as described in Equation (2).

The overall average DVH (including inter-institute variability) over all 20 patients was estimated. Subsequently, the overall mean standard deviation values for each corresponding mean DVH (DVHint), representing inter-institute variability, were calculated as follows:

Therefore, Equations (1) and (2) describe the overall average and mean standard deviation DVH over the different models, while Equations (3) and (4) describe these values over the patient cohort. For interpretability, we defined Δ = cDVH − predicted. Positive Δ indicates that clinical dose/volume exceeds the prediction. We report the proportions within ±5% (Δ V20 Gy) and ±2 Gy (Δ mean dose).
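Written out from the verbal definitions above, the four quantities can be sketched as follows. The symbols, the index ranges N_m = 10 models and N_p = 20 patients, and the N_m − 1 normalization of the standard deviation are assumptions chosen for illustration, not the original notation.

```latex
% DVH_{i,j}: mid-band predicted DVH value for patient i and model j (assumed symbol)
\begin{align}
\overline{\mathrm{DVH}}_{i} &= \frac{1}{N_m}\sum_{j=1}^{N_m} \mathrm{DVH}_{i,j} \tag{1}\\
\mathrm{SD}_{i} &= \sqrt{\frac{1}{N_m-1}\sum_{j=1}^{N_m}\left(\mathrm{DVH}_{i,j}-\overline{\mathrm{DVH}}_{i}\right)^{2}} \tag{2}\\
\mathrm{DVH}_{int} &= \frac{1}{N_p}\sum_{i=1}^{N_p} \overline{\mathrm{DVH}}_{i} \tag{3}\\
\mathrm{SD}_{int} &= \frac{1}{N_p}\sum_{i=1}^{N_p} \mathrm{SD}_{i} \tag{4}
\end{align}
```

Under this reading, Equations (1) and (2) operate across models for a fixed patient, and Equations (3) and (4) average those per-patient values over the cohort.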

Once the target coverage approach and the predicted mean doses (and their standard deviations) had been compared and found to be in agreement, model transferability was quantified by counting the number of cases in which the ipsilateral lung PC1 fell within the 10th and 90th percentiles of the training set. Inter-institute transferability was assessed using the methodology from Tudda et al. [28] and compared with results from an intra-validation cohort of 20 patients, randomly selected from ten MIKAPOCo institutes (two per center). In Tudda et al. [28], each institution’s model was tested on 18 out of 20 patients, excluding those from the model’s originating center. No formal statistical power calculation was performed because the primary endpoint, across both intra- and inter-consortium cohorts, was the estimation of model transferability/feasibility metrics (Δ distributions, PC1-band inclusion) across ten site-specific models, not patient-level hypothesis testing.
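The PC1-band count described above can be sketched in a few lines. This is a minimal illustration, assuming the PC1 scores of the training and test patients are already available as plain lists; the function names `percentile_band` and `fraction_inside` are hypothetical, and the percentile interpolation rule (Python's "inclusive" method) is an assumption that may differ from the one used by RapidPlan.

```python
from statistics import quantiles
from typing import Sequence, Tuple


def percentile_band(
    training_pc1: Sequence[float], low: int = 10, high: int = 90
) -> Tuple[float, float]:
    """Return the (low, high) percentile limits of the training PC1 scores."""
    # quantiles(n=100) yields 99 cut points; index k-1 is the k-th percentile.
    cuts = quantiles(training_pc1, n=100, method="inclusive")
    return cuts[low - 1], cuts[high - 1]


def fraction_inside(test_pc1: Sequence[float], band: Tuple[float, float]) -> float:
    """Fraction of test patients whose PC1 lies within the band (inclusive)."""
    if not test_pc1:
        raise ValueError("test_pc1 must not be empty")
    lo, hi = band
    return sum(lo <= v <= hi for v in test_pc1) / len(test_pc1)


# Toy scores, not study data: a model would count as transferable for this
# cohort when the inside fraction reaches the chosen cut-off (e.g. 0.90).
band = percentile_band(list(range(101)))
inside = fraction_inside([5, 10, 50, 90, 95], band)
```

A one-sided variant (values at or below the 90th percentile only) can be obtained by replacing the lower limit with negative infinity.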

2.3. Terminology and Analysis Context

To aid clarity and reproducibility, we provide concise definitions of core terms—MIKAPOCo, VPSRG, model prediction, variability, and transferability—and specify the analytic perspective used to distinguish intra- versus inter-consortium contexts throughout this study.

MIKAPOCo (source consortium). Multi-institutional cohort from which the 10 site-specific KB models used in this study were trained/derived. Unless otherwise stated, “intra-consortium” refers to analyses where a MIKAPOCo-derived model is applied to MIKAPOCo patients (same consortium as model origin).

VPSRG (external consortium). Independent multi-center cohort used as the external application/validation set. In this manuscript, applying a MIKAPOCo-derived model to VPSRG patients is termed inter-consortium (different consortium from model origin).

Model prediction. The dose–volume prediction produced by a knowledge-based model for a given patient’s anatomy/beam geometry, reported as DVH quantities for OARs and targets. Importantly, this study evaluates prediction (not re-optimization or delivery).

Variability.

- Patient-level clinical variability: Spread of delivered clinical DVH metrics within a cohort (anatomy, geometry, and practice effects; summarized by mean value and SD).

- Prediction residual variability: Dispersion of Δ = clinical − predicted; reported within accuracy bands (±2 Gy mean dose; ±5% V20 Gy) and tail checks.

- Model-to-model variability: For each patient, spread of predictions across the 10 site-specific models, aggregated to quantify dependence on model provenance/training distribution.

Transferability. The extent to which a model maintains accuracy and consistency when applied outside of its training distribution. We assess intra-consortium transferability (model to same-consortium patients) and inter-consortium transferability (model to other-consortium patients). All “intra/inter” labels are defined relative to the model’s training origin (“source model to target cohort”).
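The residual and model-to-model notions of variability defined in this section reduce to simple arithmetic, sketched below. The function names are hypothetical, the inputs are assumed to be plain lists of mean doses (Gy) or V20 Gy values (%), and the sample standard deviation is an assumed choice of spread measure.

```python
from statistics import stdev
from typing import List, Sequence


def residuals(clinical: Sequence[float], predicted: Sequence[float]) -> List[float]:
    """Delta = clinical - predicted for each (patient, model) pair."""
    if len(clinical) != len(predicted):
        raise ValueError("clinical and predicted must have the same length")
    return [c - p for c, p in zip(clinical, predicted)]


def fraction_within(deltas: Sequence[float], tolerance: float) -> float:
    """Share of residuals with |Delta| <= tolerance (e.g. 2 Gy or 5%)."""
    if not deltas:
        raise ValueError("deltas must not be empty")
    return sum(abs(d) <= tolerance for d in deltas) / len(deltas)


def model_to_model_sd(predictions_by_model: Sequence[float]) -> float:
    """Sample SD of one patient's predictions across the site-specific models."""
    return stdev(predictions_by_model)


# Toy mean doses in Gy, not study data.
deltas = residuals([6.0, 4.5, 9.0, 5.0], [5.0, 5.0, 6.5, 5.5])
share_within_2gy = fraction_within(deltas, 2.0)
```

A positive residual means the clinical value exceeds the prediction, matching the sign convention used throughout.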

3. Results

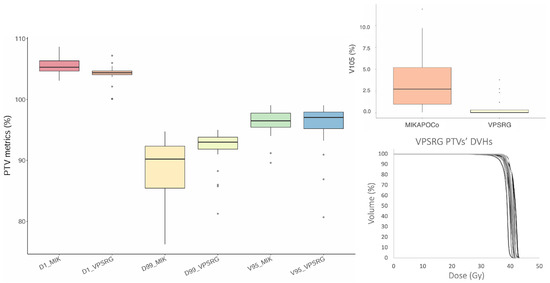

The VPSRG and MIKAPOCo test datasets (20 test patients each) were compared in terms of PTV metrics, as depicted in Figure 2. With regard to the PTV, the clinical VPSRG dataset exhibited homogeneity in terms of V95%, D1%, V105%, and D99%, except for some outliers (due to clinical requirements).

Figure 2.

Box plots of V95%, V105% (top right panel), D1%, and D99% for the PTVs of the MIKAPOCo test dataset (MIK, 20 patients) and the VPSRG test dataset. The bottom right panel shows the twenty PTV DVHs of the VPSRG test dataset.

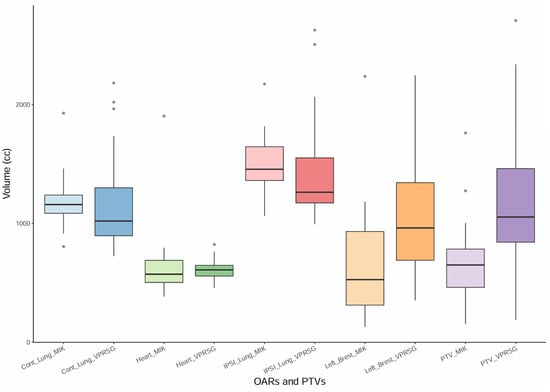

PTV and OAR volumes were also compared between the twenty VPSRG test patients and the twenty MIKAPOCo test patients (Figure 3). Volume differences were evaluated using the Mann–Whitney U test (significant if p < 0.05). Similar volumes (mean ± SD) were observed for the heart in the MIKAPOCo and VPSRG test datasets (656.3 ± 348.1 cc vs. 609.50 ± 93.53 cc, p = 0.5). The same held for the contralateral lung (MIKAPOCo = 1199.5 ± 244.9 cc vs. VPSRG = 1179.06 ± 442.22 cc, p = 0.1) and for the IPSI lung (MIKAPOCo = 1501.1 ± 262.9 cc vs. VPSRG = 1449.0 ± 470.8 cc, p = 0.1). On the other hand, the PTV and left breast volumes differed significantly: PTV MIKAPOCo = 677.5 ± 363.7 cc vs. VPSRG = 1151.9 ± 605.9 cc (p = 0.002) and left breast MIKAPOCo = 685.46 ± 543.4 cc vs. VPSRG = 1043.9 ± 503.0 cc (p = 0.02).

Figure 3.

Box plot of the PTVs and OARs (contralateral lung, heart, ipsilateral lung, and left breast) volumes for the twenty VPSRG’s patients test datasets and the twenty MIKAPOCo’s patients test datasets, as used in Tudda et al. [26].

To better quantify possible differences in contouring and/or volumes, we computed the percentage of VPSRG PTVs falling inside the MIKAPOCo models in terms of joint target volume; on average, this was 51%. Across the individual MIKAPOCo models, the minimum and maximum percentages were 30% (model from Institute 8) and 70% (model from Institute 5), respectively.

The ipsilateral lung DVH predictions from MIKAPOCo’s KB models were categorized and quantified, yielding 76% optimal predictions, 15% improved predictions, 6% suboptimal predictions, and 3% of failed predictions (see Supplementary Material S5). Cases defined as failed predictions were then excluded from the remaining analysis.

Table 1 reports the predicted mean doses for each OAR (heart, contralateral breast, ipsilateral, and contralateral lung) along with their SD for both national consortia’s test datasets. Regarding the prediction of ipsilateral lung V20 Gy, SDint was 2.9% for the VPSRG test datasets, compared to 1.6% for the MIKAPOCo test datasets.

Table 1.

Mean values (averaged among the models) of the predicted mean doses and their standard deviations, expressing the inter-consortium variability of the prediction, on the 20 test patients from VPSRG. These values are compared with those obtained in Tudda et al. [28], showing the intra-consortium variability.

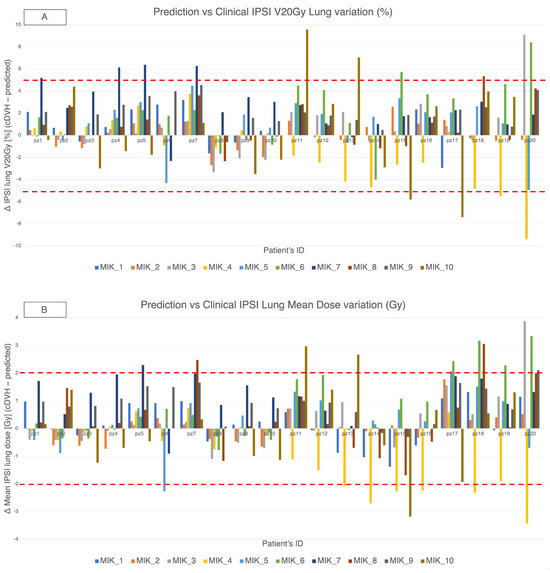

Across the VPSRG test set and the ten MIKAPOCo models, differences (Δ = cDVH − predicted) in ipsilateral lung V20 Gy and mean dose are summarized in Figure 4A and Figure 4B, respectively. Overall, 92.3% of Δ V20 Gy values were within ±5% and 88.7% of Δ mean dose values were within ±2 Gy. For transparency, we report 95% binomial confidence intervals for these proportions (pooled count basis): 88.7% corresponds to approximately 83–93%, and 92.3% to approximately 88–95%. These intervals are illustrative and likely too narrow, because pooling ignores within-patient and within-model clustering.
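For readers who wish to reproduce intervals of this kind, the sketch below computes a Wilson score interval for a pooled proportion. The choice of the Wilson method is an assumption, since the text does not state which binomial interval was used, and the counts in the usage lines (172 and 179 out of 194 pooled predictions) are hypothetical values chosen only because they are consistent with the reported percentages.

```python
from math import sqrt
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 for ~95%)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("require n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1.0 + z * z / n
    centre = (p + z * z / (2.0 * n)) / denom
    half = z * sqrt(p * (1.0 - p) / n + z * z / (4.0 * n * n)) / denom
    return centre - half, centre + half


# Hypothetical pooled counts (assumed, not taken from the study tables).
mean_dose_ci = wilson_interval(172, 194)  # proportion of about 88.7%
v20_ci = wilson_interval(179, 194)        # proportion of about 92.3%
```

Because the same patient appears under all ten models, a cluster-aware method (for example, resampling whole patients) would be needed for intervals intended for inference.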

Figure 4.

MIKAPOCo per-model differences (Δ = cDVH − predicted) for ipsilateral lung across 20 VPSRG patients. Top rows, (A) Δ V20 Gy (%); bottom rows, (B) Δ mean dose (Gy). Positive values indicate clinical > predicted. Dashed lines mark ±5% and ±2 Gy thresholds.

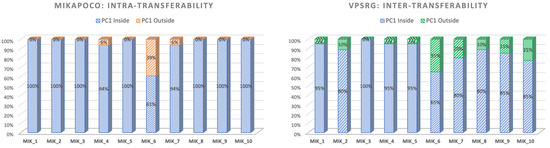

In the intra-consortium validation (Figure 5, left), MIKAPOCo demonstrated high inter-institute interchangeability, with ipsilateral lung PC1 values outside the 90th percentile in at most 10% of test patients for 9 out of 10 models. In the inter-consortium validation cohort from VPSRG (Figure 5, right), PC1 values for each MIKAPOCo model were outside the 90th percentile in at most 10% of the test patients for 6 out of 10 models. The Institute 6 model exhibited poor transferability in both the intra-consortium validation within MIKAPOCo (7 out of 18 patients outside) and the inter-consortium validation on VPSRG (7 out of 20 patients outside). Additionally, worse transferability was observed for the models of Institutes 10 and 7 in the international validation on VPSRG patients (5 and 4 out of 20 patients outside, respectively). VPSRG shows a significantly higher PC1-outside fraction than MIKAPOCo across models (Wilcoxon signed-rank test, p = 0.0207).

Figure 5.

Values of ipsilateral lung PC1 for each consortium within the internal validation cohort for MIKAPOCo (left) and the external validation dataset for VPSRG (right). “Inside” refers to values less than or equal to the 90th percentile of the corresponding training set for all patients.

4. Discussion

National KB consortia face challenges in harmonizing plan libraries and managing inter-institution variability in treatment planning because model-building methods are currently not standardized. International comparisons could establish common modeling and cross-validation standards, enabling robust, population-wide assessments that account for contouring and anatomical differences.

The present study constitutes the first assessment of international consortia KB prediction models on WB irradiation and quantifies their transferability. Beyond the known benefits of KB models for “in silico” plan comparisons [29,30], quality assurance, and clinical trials/audits [31,32,33,34,35,36], this assessment offers national consortia or single institutions a valuable benchmark to adopt automated planning without requiring extensive expertise, promoting broader use across diverse clinical settings. KB models developed by cross-institutional groups, leveraging large-scale data, have the potential to enhance the homogeneity of plan quality [37,38,39] and can be valuable in ensuring compliance with predefined planning criteria within the context of clinical trials [25,26,40]. Recently, a similar study by Jain et al. [41] evaluated and quantified the universal applicability of two cervical cancer KBP models developed in two different institutions (Asia and Europe) based on the EMBRACE-II protocol, using the respective patients’ plans. These two KB models were exchanged between three institutions with different geo-ethnic populations and validated on reference manual plans, demonstrating cross-continental applicability of KBP for cervical cancer. In contrast, our study focuses on right whole breast tangential fields, evaluates ten models assembled within a national consortium, and assesses the transferability of DVH predictions using a PC1-based within-band criterion rather than full plan re-optimization. Methodologically, the endpoint of Jain et al. is the quality of generated plans, whereas ours is prediction fidelity and model portability; accordingly, the comparative emphasis is different (plan performance vs. prediction agreement).
Despite these differences in disease site, technique, model provenance, and evaluation endpoints, both studies converge on the same insight: well-curated KB models can generalize across populations and institutions.

Regarding the volume comparison, only the PTV and left breast volumes show statistically significant differences between the consortia test datasets. The larger spread of the MIKAPOCo left breast volumes, compared with the MIKAPOCo PTVs, could be due to inter-observer variation in the delineation of the left breast. This is not the case for the VPSRG test dataset. Nevertheless, in terms of PTV coverage, the VPSRG cohort shows values of V95%, V105%, D1%, and D99% similar to those of most institutions of the MIKAPOCo training dataset (Figure 2). A comparison of the obtained results with those described by Tudda et al. [28] reveals good agreement, particularly in terms of the mean values (averaged among the models) of the predicted OAR mean doses and their standard deviations, which express the inter-institute variability on the test cohorts provided by the consortia. For instance, as shown in Table 1, the ipsilateral lung mean dose (VPSRG: 5.57 Gy vs. MIKAPOCo: 5.39 Gy) and the heart mean dose (VPSRG: 0.59 Gy vs. MIKAPOCo: 0.39 Gy) demonstrate this alignment. However, the larger SD observed in the VPSRG test dataset highlights a higher inter-consortium variability in the prediction. This is evident not only for the ipsilateral lung (VPSRG SD: 1.13 Gy vs. MIKAPOCo SD: 0.55 Gy) but also for the heart (VPSRG SD: 0.31 Gy vs. MIKAPOCo SD: 0.17 Gy). The larger SD in VPSRG indicates increased variability across predictions, underscoring the importance of assessing and understanding inter-consortium variability when applying KB models. It is worth noting that volume and OAR dose do not always correlate, and the prediction remains reliable and satisfactory even when volume differences occur between the test and training datasets. This can be attributed to the model’s reliance on principal component analysis and probably reflects the consistent positioning of the PTVs in relation to the OARs.

This result is also confirmed when examining the ipsilateral lung PC1 to verify the transferability of the KB prediction models. Consistent with the findings of Tudda et al. [28], the lowest model transferability in this study is associated with the Institute 6 model, with PC1 outside the 90th percentile in 39% of the patients. Similarly, in the VPSRG dataset test, PC1 values were outside the 90th percentile in 35% of the cases. Indeed, the Institute 6 model was built from plans with no overlap between the PTV and the ipsilateral lung, leading to high PTV coverage and improved lung sparing [29]. While the Institute 6 model showed reduced transferability in both dataset tests, larger differences between the consortia in ipsilateral lung PC1 transferability were observed for the models from Institute 7 (20%), Institute 9 (15%), and Institute 10 (25%), as shown in Figure 5. Outlier inspection for the latter three institutes did not reveal any relevant clinical-predicted discrepancy comparable to that of Institute 6 (no overlap between PTV and ipsilateral lung), nor other plausible causes such as larger PTV/left breast volumes or lower inclusion within training bounds. Model-specific patterns suggest that training set geometry is probably the main contributor to lower transferability. Further evaluation of the datasets and, possibly, curating training sets for anatomical diversity may improve generalizability.

Ipsilateral lung PC1 values outside the 90th percentile in more than 10% of patients were observed for only four out of ten models, which we still consider good transferability; this residual difference could be due to the difference in volumes.

KB prediction model performance was further quantified by grouping ipsilateral lung DVHs into four categories according to their consistency with the clinical plans. As depicted in the last figure in Supplementary Material S5, only 2% of cases exhibited failure (4 out of 200 DVH predictions). In 76% of cases, optimal prediction was achieved, indicating that the predicted ipsilateral lung DVH was within the expected range, aligning with the cDVH. This result underscores the excellent prediction performance of the models studied in this work. Moreover, in 15% of cases, the ipsilateral lung DVH prediction by MIKAPOCo’s KB models improved upon the cDVH, demonstrating potential for OAR sparing. Conversely, in only 7% of cases, the prediction was suboptimal compared to the cDVH, suggesting instances where the KB prediction model did not perform optimally. These results translated into a relatively small reduction (0.4–1.5 Gy) in the predicted mean lung dose compared with the clinical one for 8 out of 10 models, prompting VPSRG to consider using them in their planning practice. Outlier errors were uncommon but informative: larger residuals clustered in patients whose ipsilateral lung geometry lay outside the models’ training envelope (PC1 out of band) and in cases with atypical PTV–lung relationships relative to the originating cohort. Models trained without PTV–lung overlap (e.g., Institute 6) transferred worst to typical overlap anatomies, underscoring the role of geometry mismatch. Anatomical scale differences in the external cohort (larger PTV/left breast volumes) further widened the dispersion of Δ (cDVH − predicted). Despite these factors, the vast majority of predictions still met practical accuracy bands (88.7% of mean-dose Δ within ±2 Gy; 92.3% of V20 Gy Δ within ±5%), supporting overall portability.
Pragmatic mitigations include training set enrichment at geometric extremes (overlap and size), and development of a unified, diversity-curated model to reduce out-of-envelope failures.

Moreover, possible implementation of the models in clinical practice deserves careful validation. For instance, many aspects could impact differences between predicted and calculated dose [19,42], such as machine configuration, dose algorithm, delivery technique, optimization template, and so on. We already studied and established this aspect through previous single-center investigations [43]. Although such investigation is beyond the scope of the present study, the deliverability of the predicted dose and its impact on the models’ transferability should be confirmed in a large-scale implementation and it will be the focus of a future study.

Although this study is the first to assess national consortia KB models for RWB irradiation using TF, several limitations should guide future research. First, the VPSRG external cohort comprised 20 patients, which is adequate for assessing feasibility and transferability but limits the precision of patient-level inference. Expanding the cohort to other Australian regions and additional hospitals could therefore provide more insight into geographical and methodological dependencies between consortia, enhancing the robustness and generalizability of the findings. Future studies could also use a unified MIKAPOCo model, collaboratively created by all MIKAPOCo institutions [43]. This standardized model would serve as a benchmark, offering better insight into KB model performance across different institutions and treatment scenarios. Second, the observed inter-consortium variability likely reflects differences in contouring practice, planning templates, and patient anatomy; while our PC1-based criteria are robust, they may not capture all clinically relevant nuances. Third, predictions were evaluated against clinical DVHs rather than full re-optimizations, by design, to isolate model prediction performance. Consequently, the deliverability and pretreatment (end-to-end) QA of automatically generated plans were not assessed in this retrospective analysis and warrant prospective evaluation. An ongoing prospective study using a pooled, generalized MIKAPOCo model [43] and a pre-specified on-machine feasibility pilot will address this question.
Building on these results, a practical framework could mitigate inter-institute differences through concrete, actionable strategies: (i) standardized contouring, supported by a shared atlas and a one-page checklist, with quarterly 5-case inter-observer audits and predefined pass/fail thresholds; (ii) centralized QA and monitoring, validated on a 10-case common benchmark using harmonized tangential templates; (iii) independent MU checks and EPID/array QA for pilot plans; (iv) tracking of prediction residuals (Δ) with simple statistical process control to detect site drift; and (v) collaborative model refinement, maintaining a pooled base model with lightweight site adapters updated via a 10-case calibration set. As a last consideration, PTV/left breast volume differences could not be fully attributed because anthropometric covariates were not collected; prospective harmonization and covariate capture are planned for the next study.
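The residual-tracking idea mentioned above can be sketched with a very simple control-chart rule. The source does not specify which statistical process control method is intended, so the following Python example assumes Shewhart-style limits (baseline mean ± 3 sample standard deviations); the function names, the choice of k = 3, and the numbers are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def control_limits(baseline: Sequence[float], k: float = 3.0) -> Tuple[float, float, float]:
    """Return (lower, center, upper) limits from baseline residuals.

    Assumption: limits are mean +/- k sample standard deviations, a common
    Shewhart-style choice; the paper does not prescribe a specific rule.
    """
    center = mean(baseline)
    spread = stdev(baseline)
    return center - k * spread, center, center + k * spread


def out_of_control(residuals: Sequence[float],
                   limits: Tuple[float, float, float]) -> List[int]:
    """Indices of new residuals that fall outside the control limits."""
    lower, _, upper = limits
    return [i for i, r in enumerate(residuals) if r < lower or r > upper]


# Hypothetical mean-dose residuals in Gy (not study data).
baseline_delta = [-1.0, 0.0, 1.0]
new_delta = [0.5, -2.9, 3.5, 1.0]

limits = control_limits(baseline_delta)
flagged = out_of_control(new_delta, limits)
```

In practice, the baseline could be a site's calibration set, and a flagged index would simply prompt a review of that plan rather than an automatic rejection.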

The international inter-consortia experience highlights the potential of transfer learning in KB planning prediction models. By reusing models across domains, this approach accelerates robust model creation, benefiting institutions and consortia implementing automated planning in new or diverse clinical settings. This is particularly relevant for low- and middle-income countries with rising cancer cases and limited resources. Transfer learning improves model development, generalizability, and adaptability, while reducing the need for extensive data collection and addressing ethical challenges in data transfer, supporting efficient radiation therapy planning.

5. Conclusions

In this study, inter-consortia collaboration proved to be not only feasible but also effective. For RWB-TF plans, ipsilateral lung transferability was demonstrated in the large majority of test cases and models, together with good dose and statistical prediction performance. The results of this work strongly support the use of national RWB-TF models for predicting RWB-TF plans outside the national consortium in which they were originally generated. These findings support international model sharing to promote plan quality consistency, reduce planning time, and enhance QA. Future work should prospectively evaluate deliverability on the treatment machines, expand validation cohorts across additional regions and techniques, and investigate standardized, unified consortium models to further mitigate inter-institution variability.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/cancers17213576/s1: S1. Literature review of national or multi-institutional KB model experiences [44,45,46,47]. S2. Procedures for building KB models and estimates of goodness of regression. S3. Planning and clinical goals for VPSRG’s test set cohort. S4. Operative instructions for the external validation data set. S5. Categorization of IPSI lung predicted DVHs.

Author Contributions

Conceptualization: L.P., P.G., R.C., A.T., V.P. and C.F.; Methodology: L.P., P.G., R.C., A.T., V.P. and C.F.; Software: L.P., R.C. and A.T.; Validation: L.P., P.G., R.C., A.T. and V.P.; Formal analysis: L.P., R.C. and A.T.; Investigation: L.P., P.G., R.C., A.T., G.B., M.B., E.C., C.M., V.L., E.M., C.O., G.R.G., G.M., T.R., A.S., K.M., V.P. and C.F.; Resources: Data curation: L.P., P.G., R.C., A.T., G.B., M.B., E.C., C.M., V.L., E.M., C.O., G.R.G., G.M., T.R., A.S., K.M. and V.P.; Writing—original draft preparation: L.P., P.G., R.C. and V.P.; Writing—review and editing: A.T., G.B., M.B., E.C., C.M., V.L., E.M., C.O., G.R.G., G.M., T.R., A.S., K.M., V.P. and C.F.; Visualization: L.P., R.C. and A.T.; Supervision: V.P. and C.F.; Project administration: L.P., P.G., R.C., V.P. and C.F.; Funding acquisition: C.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by AIRC (Associazione Italiana per la Ricerca sul Cancro) grant (IG23150).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (or Ethics Committee) of IRCCS Ospedale San Raffaele (protocol code IG23150; date of approval 8 June 2021). It was also approved by the Ethics Committee of the Alfred Hospital (Project n: 546/22) and by the Peter MacCallum Cancer Centre Human Research Ethics Committee (ethics approval 17_95R).

Informed Consent Statement

Patient consent was waived due to the retrospective observational nature of the study. It was not possible to request individual consent for data processing from every patient, as some were deceased, out of follow-up, or lost to follow-up. Data security and confidentiality were ensured at every stage of the study, from collection to transmission of information to the designated personnel for processing and analysis. The data collected concerned exclusively dose distributions, planning CT images, and the associated contours of structures delineated for radiotherapy planning purposes.

Data Availability Statement

Research data are stored in an institutional repository and will be shared upon request to the corresponding author.

Acknowledgments

The study was supported by an AIRC (Associazione Italiana per la Ricerca sul Cancro) grant (IG23150). We thank M. Acerbi and E. Lanzi (Varian Medical Systems, Inc.) for their technical support.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| KB | Knowledge-based |
| RWB-TF | Right Whole Breast Tangential Field |
| IPSI | Ipsilateral (in this context: ipsilateral lung) |
| PC1 | First Principal Component |
| cDVH | Clinical Dose–Volume Histogram |
| DVH | Dose–Volume Histogram |
| PTV | Planning Target Volume |
| CTV | Clinical Target Volume |
| OARs | Organs At Risk |
| RT | Radiotherapy |
| FiF | Field-in-Field |
| RP | RapidPlan |
| PCA | Principal Component Analysis |
| SD | Standard Deviation |
| V20 Gy | Volume of an organ receiving at least 20 Gy |
| WB | Whole Breast |
| VPSRG | Victorian Public Sector RapidPlan Group |
| MIKAPOCo | Multi-Institutional Knowledge-based Approach for Planning Optimization for the Community |
| AIRC | Associazione Italiana per la Ricerca sul Cancro |

References

- Breast Cancer Burden in EU-27. Available online: https://ecis.jrc.ec.europa.eu/sites/default/files/2023-12/Breast_cancer_en-Dec_2020.pdf (accessed on 27 October 2025).

- Early Breast Cancer Trialists’ Collaborative Group. Effect of radiotherapy after breast-conserving surgery on 10-year recurrence and 15-year breast cancer death: Meta-analysis of individual patient data for 10,801 women in 17 randomised trials. Lancet 2011, 378, 1707–1716.

- Moore, K.L. Automated Radiotherapy Treatment Planning. Semin. Radiat. Oncol. 2019, 29, 209–218.

- Meattini, I.; Becherini, C.; Boersma, L.; Kaidar-Person, O.; Marta, G.N.; Montero, A.; Offersen, B.V.; Aznar, M.C.; Belka, C.; Brunt, A.M.; et al. European Society for Radiotherapy and Oncology Advisory Committee in Radiation Oncology Practice consensus recommendations on patient selection and dose and fractionation for external beam radiotherapy in early breast cancer. Lancet Oncol. 2022, 23, e21–e31.

- Krug, D.; Baumann, R.; Combs, S.E.; Duma, M.N.; Dunst, J.; Feyer, P.; Fietkau, R.; Haase, W.; Harms, W.; Hehr, T.; et al. Moderate hypofractionation remains the standard of care for whole-breast radiotherapy in breast cancer: Considerations regarding FAST and FAST-Forward. Strahlenther. Onkol. 2021, 197, 269–280.

- Hussein, M.; Heijmen, B.J.M.; Verellen, D.; Nisbet, A. Automation in intensity modulated radiotherapy treatment planning—A review of recent innovations. Br. J. Radiol. 2018, 91, 20180270.

- Scaggion, A.; Fusella, M.; Roggio, A.; Bacco, S.; Pivato, N.; Rossato, M.A.; Peña, L.M.A.; Paiusco, M. Reducing inter- and intra-planner variability in radiotherapy plan output with a commercial knowledge-based planning solution. Phys. Med. 2018, 53, 86–93.

- Apaza Blanco, O.A.; Almada, M.J.; Garcia Andino, A.A.; Zunino, S.; Venencia, D. Knowledge-Based Volumetric Modulated Arc Therapy Treatment Planning for Breast Cancer. J. Med. Phys. 2021, 46, 334–340.

- Cao, W.; Gronberg, M.; Olanrewaju, A.; Whitaker, T.; Hoffman, K.; Cardenas, C.; Garden, A.; Skinner, H.; Beadle, B.; Court, L. Knowledge-based planning for the radiation therapy treatment plan quality assurance for patients with head and neck cancer. J. Appl. Clin. Med. Phys. 2022, 23, e13614.

- Harms, J.; Pogue, J.A.; Cardenas, C.E.; Stanley, D.N.; Cardan, R.; Popple, R. Automated evaluation for rapid implementation of knowledge-based radiotherapy planning models. J. Appl. Clin. Med. Phys. 2023, 24, e14152.

- Hernandez, V.; Hansen, C.R.; Widesott, L.; Bäck, A.; Canters, R.; Fusella, M.; Götstedt, J.; Jurado-Bruggeman, D.; Mukumoto, N.; Kaplan, L.P. What is plan quality in radiotherapy? The importance of evaluating dose metrics, complexity, and robustness of treatment plans. Radiother. Oncol. 2020, 153, 26–33.

- Kaplan, L.P.; Placidi, L.; Bäck, A.; Canters, R.; Hussein, M.; Vaniqui, A.; Fusella, M.; Piotrowski, T.; Hernandez, V.; Jornet, N.; et al. Plan quality assessment in clinical practice: Results of the 2020 ESTRO survey on plan complexity and robustness. Radiother. Oncol. 2022, 173, 254–261.

- Fogliata, A.; Cozzi, L.; Reggiori, G.; Stravato, A.; Lobefalo, F.; Franzese, C.; Franceschini, D.; Tomatis, S.; Scorsetti, M. RapidPlan knowledge based planning: Iterative learning process and model ability to steer planning strategies. Radiat. Oncol. 2019, 14, 187.

- Kaderka, R.; Hild, S.J.; Bry, V.N.; Cornell, M.; Ray, X.J.; Murphy, J.D.; Atwood, T.F.; Moore, K.L. Wide-Scale Clinical Implementation of Knowledge-Based Planning: An Investigation of Workforce Efficiency, Need for Post-automation Refinement, and Data-Driven Model Maintenance. Int. J. Radiat. Oncol. Biol. Phys. 2021, 111, 705–715.

- Scaggion, A.; Fusella, M.; Cavinato, S.; Dusi, F.; El Khouzai, B.; Germani, A.; Pivato, N.; Rossato, M.A.; Roggio, A.; Scott, A.; et al. Updating a clinical Knowledge-Based Planning prediction model for prostate radiotherapy. Phys. Med. 2023, 107, 102542.

- Guo, H.; Kunkyab, T.; Lei, Y.; Rosenzweig, K.; Samstein, R.; Chao, M.; Liu, T.; Xia, J.; Zhang, J. PlanAct: An eclipse scripting API-based module embedding clinical optimization strategies for automated planning in locally advanced non-small cell lung cancer. J. Appl. Clin. Med. Phys. 2025, 26, e70304.

- Tol, J.P.; Doornaert, P.; Witte, B.I.; Dahele, M.; Slotman, B.J.; Verbakel, W.F.A.R. A longitudinal evaluation of improvements in radiotherapy treatment plan quality for head and neck cancer patients. Radiother. Oncol. 2016, 119, 337–343.

- Rago, M.; Placidi, L.; Polsoni, M.; Rambaldi, G.; Cusumano, D.; Greco, F.; Indovina, L.; Menna, S.; Placidi, E.; Stimato, G.; et al. Evaluation of a generalized knowledge-based planning performance for VMAT irradiation of breast and locoregional lymph nodes-Internal mammary and/or supraclavicular regions. PLoS ONE 2021, 16, e0245305.

- Castriconi, R.; Esposito, P.G.; Tudda, A.; Mangili, P.; Broggi, S.; Fodor, A.; Deantoni, C.L.; Longobardi, B.; Pasetti, M.; Perna, L.; et al. Replacing Manual Planning of Whole Breast Irradiation with Knowledge-Based Automatic Optimization by Virtual Tangential-Fields Arc Therapy. Front. Oncol. 2021, 11, 712423.

- Sinha, S.; Kumar, A.; Maheshwari, G.; Mohanty, S.; Joshi, K.; Shinde, P.; Gupta, D.; Kale, S.; Phurailatpam, R.; Swain, M.; et al. Development and Validation of Single-Optimization Knowledge-Based Volumetric Modulated Arc Therapy Model Plan in Nasopharyngeal Carcinomas. Adv. Radiat. Oncol. 2024, 9, 101311.

- Kaderka, R.; Dogan, N.; Jin, W.; Bossart, E. Effects of model size and composition on quality of head-and-neck knowledge-based plans. J. Appl. Clin. Med. Phys. 2024, 25, e14168.

- Pallotta, S.; Marrazzo, L.; Calusi, S.; Castriconi, R.; Fiorino, C.; Loi, G.; Fiandra, C. Implementation of automatic plan optimization in Italy: Status and perspectives. Phys. Med. 2021, 92, 86–94.

- Berry, S.L.; Ma, R.; Boczkowski, A.; Jackson, A.; Zhang, P.; Hunt, M. Evaluating inter-campus plan consistency using a knowledge based planning model. Radiother. Oncol. 2016, 120, 349–355.

- Schubert, C.; Waletzko, O.; Weiss, C.; Voelzke, D.; Toperim, S.; Roeser, A.; Puccini, S.; Piroth, M.; Mehrens, C.; Kueter, J.-D.; et al. Intercenter validation of a knowledge based model for automated planning of volumetric modulated arc therapy for prostate cancer. The experience of the German RapidPlan Consortium. PLoS ONE 2017, 12, e0178034.

- Ueda, Y.; Fukunaga, J.I.; Kamima, T.; Shimizu, Y.; Kubo, K.; Doi, H.; Monzen, H. Standardization of knowledge-based volumetric modulated arc therapy planning with a multi-institution model (broad model) to improve prostate cancer treatment quality. Phys. Eng. Sci. Med. 2023, 46, 1091–1100.

- Kamima, T.; Ueda, Y.; Fukunaga, J.-I.; Shimizu, Y.; Tamura, M.; Ishikawa, K.; Monzen, H. Multi-institutional evaluation of knowledge-based planning performance of volumetric modulated arc therapy (VMAT) for head and neck cancer. Phys. Med. 2019, 64, 174–181.

- Panettieri, V.; Ball, D.; Chapman, A.; Cristofaro, N.; Gawthrop, J.; Griffin, P.; Herath, S.; Hoyle, S.; Jukes, L.; Kron, T.; et al. Development of a multicentre automated model to reduce planning variability in radiotherapy of prostate cancer. Phys. Imaging Radiat. Oncol. 2019, 11, 34–40.

- Tudda, A.; Castriconi, R.; Benecchi, G.; Cagni, E.; Cicchetti, A.; Dusi, F.; Esposito, P.G.; Guernieri, M.; Ianiro, A.; Landoni, V.; et al. Knowledge-based multi-institution plan prediction of whole breast irradiation with tangential fields. Radiother. Oncol. 2022, 175, 10–16.

- Zhao, T.; Beckert, R.; Hilliard, J.; Laugeman, E.; Hao, Y.; Hunerkoch, K.; Miller, K.; Brunt, L.; Hong, D.; Schiff, J.; et al. An In Silico study of a One-Day One-Machine Workflow for Definitive Radiotherapy Cases on a Novel Simulation and Treatment Platform. Int. J. Radiat. Oncol. Biol. Phys. 2023, 117 (Suppl. S2), e749.

- Villaggi, E.; Hernandez, V.; Fusella, M.; Moretti, E.; Russo, S.; Vaccara, E.M.L.; Nardiello, B.; Esposito, M.; Saez, J.; Cilla, S.; et al. Plan quality improvement by DVH sharing and planner’s experience: Results of a SBRT multicentric planning study on prostate. Phys. Med. 2019, 62, 73–82.

- Matrosic, C.K.; Dess, K.; Boike, T.; Dominello, M.; Dryden, D.; Fraser, C.; Grubb, M.; Hayman, J.; Jarema, D.; Marsh, R.; et al. Knowledge-Based Quality Assurance and Model Maintenance in Lung Cancer Radiation Therapy in a Statewide Quality Consortium of Academic and Community Practice Centers. Pract. Radiat. Oncol. 2023, 13, e200–e208.

- Pogue, J.A.; Cardenas, C.E.; Harms, J.; Soike, M.H.; Kole, A.J.; Schneider, C.S.; Veale, C.; Popple, R.; Belliveau, J.-G.; McDonald, A.M.; et al. Benchmarking Automated Machine Learning-Enhanced Planning with Ethos Against Manual and Knowledge-Based Planning for Locally Advanced Lung Cancer. Adv. Radiat. Oncol. 2023, 8, 101292.

- Habraken, S.J.M.; Sharfo, A.W.M.; Buijsen, J.; Verbakel, W.F.A.R.; Haasbeek, C.J.A.; Öllers, M.C.; Westerveld, H.; van Wieringen, N.; Reerink, N.; Seravalli, E.; et al. The TRENDY multi-center randomized trial on hepatocellular carcinoma—Trial QA including automated treatment planning and benchmark-case results. Radiother. Oncol. 2017, 125, 507–513.

- Martin, J.M.; Sidhom, M.; Banyer, P.; Porter, S.; Moore, A.J.; Cook, O.M. Implementation of Knowledge-Based Planning as a Quality Assurance Feedback Tool for a Multicentre Prostate Clinical Trial (TROG 18.01 NINJA). Int. J. Radiat. Oncol. Biol. Phys. 2025, 123 (Suppl. S1), S200–S201.

- van Gysen, K.; Kneebone, A.; Le, A.; Wu, K.; Haworth, A.; Bromley, R.; Hruby, G.; O’Toole, J.; Booth, J.; Brown, C.; et al. Evaluating the utility of knowledge-based planning for clinical trials using the TROG 08.03 post prostatectomy radiation therapy planning data. Phys. Imaging Radiat. Oncol. 2022, 22, 91–97.

- Moore, K.L.; Schmidt, R.; Moiseenko, V.; Olsen, L.A.; Tan, J.; Xiao, Y.; Galvin, J.; Pugh, S.; Seider, M.J.; Dicker, A.P.; et al. Quantifying Unnecessary Normal Tissue Complication Risks due to Suboptimal Planning: A Secondary Study of RTOG 0126. Int. J. Radiat. Oncol. Biol. Phys. 2015, 92, 228–235.

- Good, D.; Lo, J.; Lee, W.R.; Wu, Q.J.; Yin, F.-F.; Das, S.K. A knowledge-based approach to improving and homogenizing intensity modulated radiation therapy planning quality among treatment centers: An example application to prostate cancer planning. Int. J. Radiat. Oncol. Biol. Phys. 2013, 87, 176–181.

- Frizzelle, M.; Pediaditaki, A.; Thomas, C.; South, C.; Vanderstraeten, R.; Wiessler, W.; Adams, E.; Jagadeesan, S.; Lalli, N. Using multi-centre data to train and validate a knowledge-based model for planning radiotherapy of the head and neck. Phys. Imaging Radiat. Oncol. 2022, 21, 18–23.

- Li, N.; Carmona, R.; Sirak, I.; Kasaova, L.; Followill, D.; Michalski, J.; Bosch, W.; Straube, W.; Mell, L.K.; Moore, K.L. Highly Efficient Training, Refinement, and Validation of a Knowledge-based Planning Quality-Control System for Radiation Therapy Clinical Trials. Int. J. Radiat. Oncol. Biol. Phys. 2017, 97, 164–172.

- Younge, K.C.; Marsh, R.B.; Owen, D.; Geng, H.; Xiao, Y.; Spratt, D.E.; Foy, J.; Suresh, K.; Wu, Q.J.; Yin, F.-F.; et al. Improving Quality and Consistency in NRG Oncology Radiation Therapy Oncology Group 0631 for Spine Radiosurgery via Knowledge-Based Planning. Int. J. Radiat. Oncol. Biol. Phys. 2018, 100, 1067–1074.

- Jain, J.; Serban, M.; Assenholt, M.S.; Hande, V.; Swamida, J.; Seppenwoolde, Y.; Alfieri, J.; Tanderup, K.; Chopra, S. Can knowledge-based planning models validated on ethnically diverse patients lead to global standardisation of external beam radiation therapy for locally advanced cervix cancer? Radiother. Oncol. 2025, 204, 110694.

- Hussein, M.; South, C.P.; Barry, M.A.; Adams, E.J.; Jordan, T.J.; Stewart, A.J.; Nisbet, A. Clinical validation and benchmarking of knowledge-based IMRT and VMAT treatment planning in pelvic anatomy. Radiother. Oncol. 2016, 120, 473–479.

- Tudda, A.; Castriconi, R.; Placidi, L.; Benecchi, G.; Buono, R.C.; Cagni, E.; Cicchetti, A.; Landoni, V.; Malatesta, T.; Mazzilli, A.; et al. Multi-institutional Knowledge-Based (KB) plan prediction benchmark models for whole breast irradiation. Phys. Med. 2025, 130, 104889.

- Ueda, Y.; Fukunaga, J.-I.; Kamima, T.; Adachi, Y.; Nakamatsu, K.; Monzen, H. Evaluation of multiple institutions’ models for knowledge-based planning of volumetric modulated arc therapy (VMAT) for prostate cancer. Radiat. Oncol. 2018, 13, 46.

- Kavanaugh, J.A.; Holler, S.; DeWees, T.A.; Robinson, C.G.; Bradley, J.D.; Iyengar, P.; Higgins, K.A.; Mutic, S.; Olsen, L.A. Multi-Institutional Validation of a Knowledge-Based Planning Model for Patients Enrolled in RTOG 0617: Implications for Plan Quality Controls in Cooperative Group Trials. Pract. Radiat. Oncol. 2019, 9, e218–e227.

- Castriconi, R.; Tudda, A.; Placidi, L.; Benecchi, G.; Cagni, E.; Dusi, F.; Ianiro, A.; Landoni, V.; Malatesta, T.; Mazzilli, A.; et al. Inter-institutional variability of knowledge-based plan prediction of left whole breast irradiation. Phys. Med. 2024, 120, 103331.

- Esposito, P.G.; Castriconi, R.; Mangili, P.; Fodor, A.; Pasetti, M.; Di Muzio, N.G.; Del Vecchio, A.; Fiorino, C. Virtual Tangential-fields Arc Therapy (ViTAT) for whole breast irradiation: Technique optimization and validation. Phys. Med. 2020, 77, 160–168.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).