Meeting Cancer Detection Benchmarks in MRI/Ultrasound Fusion Biopsy for Prostate Cancer: Insights from a Retrospective Analysis of Experienced Urologists

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Population and Design

2.2. MRI and Biopsy Protocol

2.3. Histopathological Analysis

2.4. Data Collection and Study Objectives

2.5. Statistical Analysis

3. Results

3.1. Descriptive Results

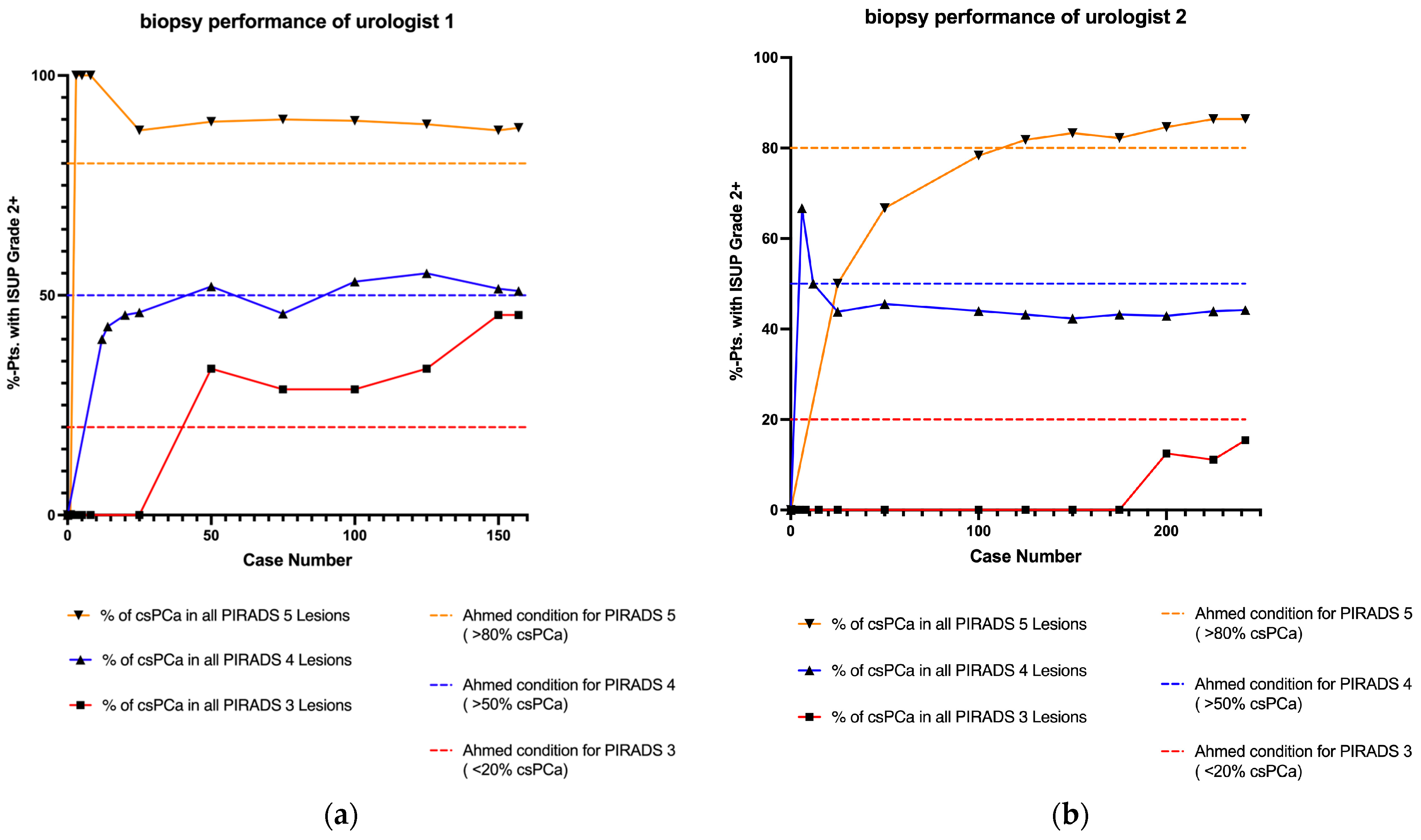

3.2. Learning Curve Analysis

3.3. Follow Up Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

References

1. Cornford, P.; Bergh, R.C.v.D.; Briers, E.; Broeck, T.V.D.; Brunckhorst, O.; Darraugh, J.; Eberli, D.; De Meerleer, G.; De Santis, M.; Farolfi, A.; et al. EAU-EANM-ESTRO-ESUR-ISUP-SIOG Guidelines on Prostate Cancer—2024 Update. Part I: Screening, Diagnosis, and Local Treatment with Curative Intent. Eur. Urol. 2024, 86, 148–163.

2. Kasivisvanathan, V.; Rannikko, A.S.; Borghi, M.; Panebianco, V.; Mynderse, L.A.; Vaarala, M.H.; Briganti, A.; Budäus, L.; Hellawell, G.; Hindley, R.G.; et al. MRI-Targeted or Standard Biopsy for Prostate-Cancer Diagnosis. N. Engl. J. Med. 2018, 378, 1767–1777.

3. Klotz, L.; Chin, J.; Black, P.C.; Finelli, A.; Anidjar, M.; Bladou, F.; Mercado, A.; Levental, M.; Ghai, S.; Chang, S.D.; et al. Comparison of Multiparametric Magnetic Resonance Imaging–Targeted Biopsy with Systematic Transrectal Ultrasonography Biopsy for Biopsy-Naive Men at Risk for Prostate Cancer: A Phase 3 Randomized Clinical Trial. JAMA Oncol. 2021, 7, 534–542.

4. Ahmed, H.U.; El-Shater Bosaily, A.; Brown, L.C.; Gabe, R.; Kaplan, R.; Parmar, M.K.; Collaco-Moraes, Y.; Ward, K.; Hindley, R.G.; Freeman, A.; et al. Diagnostic accuracy of multi-parametric MRI and TRUS biopsy in prostate cancer (PROMIS): A paired validating confirmatory study. Lancet 2017, 389, 815–822.

5. Gereta, S.; Hung, M.; Alexanderani, M.K.; Robinson, B.D.; Hu, J.C. Evaluating the Learning Curve for In-office Freehand Cognitive Fusion Transperineal Prostate Biopsy. Urology 2023, 181, 31–37.

6. Halstuch, D.; Baniel, J.; Lifshitz, D.; Sela, S.; Ber, Y.; Margel, D. Characterizing the learning curve of MRI-US fusion prostate biopsies. Prostate Cancer Prostatic Dis. 2019, 22, 546–551.

7. Lenfant, L.; Beitone, C.; Troccaz, J.; Rouprêt, M.; Seisen, T.; Voros, S.; Mozer, P.C. Learning curve for fusion magnetic resonance imaging targeted prostate biopsy and three-dimensional transrectal ultrasonography segmentation. BJU Int. 2024, 133, 709–716.

8. Xu, L.; Ye, N.Y.; Lee, A.; Chopra, J.; Naslund, M.; Wong-You-Cheong, J.; Wnorowski, A.; Siddiqui, M.M. Learning curve for magnetic resonance imaging/ultrasound fusion prostate biopsy in detecting prostate cancer using cumulative sum analysis. Curr. Urol. 2022, 17, 159–164.

9. Barentsz, J.O.; Richenberg, J.; Clements, R.; Choyke, P.; Verma, S.; Villeirs, G.; Rouviere, O.; Logager, V.; Fütterer, J.J. ESUR prostate MR guidelines 2012. Eur. Radiol. 2012, 22, 746–757.

10. Padhani, A.R.; Weinreb, J.; Rosenkrantz, A.B.; Villeirs, G.; Turkbey, B.; Barentsz, J. Prostate Imaging-Reporting and Data System Steering Committee: PI-RADS v2 Status Update and Future Directions. Eur. Urol. 2019, 75, 385–396.

11. Sigle, A.; Jilg, C.A.; Kuru, T.H.; Binder, N.; Michaelis, J.; Grabbert, M.; Schultze-Seemann, W.; Miernik, A.; Gratzke, C.; Benndorf, M.; et al. Evaluation of the Ginsburg Scheme: Where Is Significant Prostate Cancer Missed? Cancers 2021, 13, 2502.

12. Moore, C.M.; Kasivisvanathan, V.; Eggener, S.; Emberton, M.; Fütterer, J.J.; Gill, I.S.; Grubb, R.L., III; Hadaschik, B.; Klotz, L.; Margolis, D.J.; et al. Standards of Reporting for MRI-targeted Biopsy Studies (START) of the Prostate: Recommendations from an International Working Group. Eur. Urol. 2013, 64, 544–552.

13. Epstein, J.I.; Egevad, L.; Amin, M.B.; Delahunt, B.; Srigley, J.R.; Humphrey, P.A. The 2014 International Society of Urological Pathology (ISUP) Consensus Conference on Gleason Grading of Prostatic Carcinoma: Definition of Grading Patterns and Proposal for a New Grading System. Am. J. Surg. Pathol. 2016, 40, 244–252.

14. Mager, R.; Brandt, M.P.; Borgmann, H.; Gust, K.M.; Haferkamp, A.; Kurosch, M. From novice to expert: Analyzing the learning curve for MRI-transrectal ultrasonography fusion-guided transrectal prostate biopsy. Int. Urol. Nephrol. 2017, 49, 1537–1544.

15. Hsieh, P.-F.; Li, P.-I.; Lin, W.-C.; Chang, H.; Chang, C.-H.; Wu, H.-C.; Chang, Y.-H.; Wang, Y.-D.; Huang, W.-C.; Huang, C.-P. Learning Curve of Transperineal MRI/US Fusion Prostate Biopsy: 4-Year Experience. Life 2023, 13, 638.

16. Meng, X.; Rosenkrantz, A.B.; Huang, R.; Deng, F.-M.; Wysock, J.S.; Bjurlin, M.A.; Huang, W.C.; Lepor, H.; Taneja, S.S. The Institutional Learning Curve of Magnetic Resonance Imaging-Ultrasound Fusion Targeted Prostate Biopsy: Temporal Improvements in Cancer Detection in 4 Years. J. Urol. 2018, 200, 1022–1029.

17. Calio, B.; Sidana, A.; Sugano, D.; Gaur, S.; Jain, A.; Maruf, M.; Xu, S.; Yan, P.; Kruecker, J.; Merino, M.; et al. Changes in prostate cancer detection rate of MRI-TRUS fusion vs systematic biopsy over time: Evidence of a learning curve. Prostate Cancer Prostatic Dis. 2017, 20, 436–441.

18. Cata, E.D.; Van Praet, C.; Andras, I.; Kadula, P.; Ognean, R.; Buzoianu, M.; Leucuta, D.; Caraiani, C.; Tamas-Szora, A.; Decaestecker, K.; et al. Analyzing the learning curves of a novice and an experienced urologist for transrectal magnetic resonance imaging-ultrasound fusion prostate biopsy. Transl. Androl. Urol. 2021, 10, 1956–1965.

19. Urkmez, A.; Ward, J.F.; Choi, H.; Troncoso, P.; Inguillo, I.; Gregg, J.R.; Altok, M.; Demirel, H.C.; Qiao, W.; Kang, H.C. Temporal learning curve of a multidisciplinary team for magnetic resonance imaging/transrectal ultrasonography fusion prostate biopsy. BJU Int. 2021, 127, 524–527.

20. Görtz, M.; Nyarangi-Dix, J.N.; Pursche, L.; Schütz, V.; Reimold, P.; Schwab, C.; Stenzinger, A.; Sültmann, H.; Duensing, S.; Schlemmer, H.-P.; et al. Impact of Surgeon’s Experience in Rigid versus Elastic MRI/TRUS-Fusion Biopsy to Detect Significant Prostate Cancer Using Targeted and Systematic Cores. Cancers 2022, 14, 886.

21. Checcucci, E.; Piramide, F.; Amparore, D.; De Cillis, S.; Granato, S.; Sica, M.; Verri, P.; Volpi, G.; Piana, A.; Garrou, D.; et al. Beyond the Learning Curve of Prostate MRI/TRUS Target Fusion Biopsy after More than 1000 Procedures. Urology 2021, 155, 39–45.

22. Berg, S.; Hanske, J.; von Landenberg, N.; Noldus, J.; Brock, M. Institutional Adoption and Apprenticeship of Fusion Targeted Prostate Biopsy: Does Experience Affect the Cancer Detection Rate? Urol. Int. 2020, 104, 476–482.

23. Himmelsbach, R.; Hackländer, A.; Weishaar, M.; Morlock, J.; Schoeb, D.; Jilg, C.; Gratzke, C.; Grabbert, M.; Sigle, A. Retrospective analysis of the learning curve in perineal robot-assisted prostate biopsy. Prostate 2024, 84, 1165–1172.

24. Calleris, G.; Marquis, A.; Zhuang, J.; Beltrami, M.; Zhao, X.; Kan, Y.; Oderda, M.; Huang, H.; Faletti, R.; Zhang, Q.; et al. Impact of operator expertise on transperineal free-hand mpMRI-fusion-targeted biopsies under local anaesthesia for prostate cancer diagnosis: A multicenter prospective learning curve. World J. Urol. 2023, 41, 3867–3876.

25. Yang, Y.; He, X.; Zeng, Y.; Lu, Q.; Li, Y. The learning curve and experience of a novel multi-modal image fusion targeted transperineal prostate biopsy technique using electromagnetic needle tracking under local anesthesia. Front. Oncol. 2024, 14, 1361093.

26. Taha, F.; Larre, S.; Branchu, B.; Kumble, A.; Saffarini, M.; Ramos-Pascual, S.; Surg, R. Surgeon seniority and experience have no effect on CaP detection rates using MRI/TRUS fusion-guided targeted biopsies. Urol. Oncol. Semin. Orig. Investig. 2024, 42, 67.e1–67.e7.

27. Ramacciotti, L.S.; Kaneko, M.; Strauss, D.; Hershenhouse, J.S.; Rodler, S.; Cai, J.; Liang, G.; Aron, M.; Duddalwar, V.; Cacciamani, G.E.; et al. The learning curve for transperineal MRI/TRUS fusion prostate biopsy: A prospective evaluation of a stepwise approach. Urol. Oncol. Semin. Orig. Investig. 2024, 43, 64.e1–64.e10.

28. Alargkof, V.; Engesser, C.; Breit, H.C.; Winkel, D.J.; Seifert, H.; Trotsenko, P.; Wetterauer, C. The learning curve for robotic-assisted transperineal MRI/US fusion-guided prostate biopsy. Sci. Rep. 2024, 14, 5638.

29. Kasabwala, K.; Patel, N.; Cricco-Lizza, E.; Shimpi, A.A.; Weng, S.; Buchmann, R.M.; Motanagh, S.; Wu, Y.; Banerjee, S.; Khani, F.; et al. The Learning Curve for Magnetic Resonance Imaging/Ultrasound Fusion-guided Prostate Biopsy. Eur. Urol. Oncol. 2019, 2, 135–140.

30. Truong, M.; Weinberg, E.; Hollenberg, G.; Borch, M.; Park, J.H.; Gantz, J.; Feng, C.; Frye, T.; Ghazi, A.; Wu, G.; et al. Institutional Learning Curve Associated with Implementation of a Magnetic Resonance/Transrectal Ultrasound Fusion Biopsy Program Using PI-RADS™ Version 2: Factors that Influence Success. Urol. Pr. 2018, 5, 69–75.

31. Giganti, F.; Moore, C.M. A critical comparison of techniques for MRI-targeted biopsy of the prostate. Transl. Androl. Urol. 2017, 6, 432–443.

32. Stabile, A.; Dell’Oglio, P.; Gandaglia, G.; Fossati, N.; Brembilla, G.; Cristel, G.; Dehò, F.; Scattoni, V.; Maga, T.; Losa, A.; et al. Not All Multiparametric Magnetic Resonance Imaging–targeted Biopsies Are Equal: The Impact of the Type of Approach and Operator Expertise on the Detection of Clinically Significant Prostate Cancer. Eur. Urol. Oncol. 2018, 1, 120–128.

33. Kornienko, K.; Reuter, M.; Maxeiner, A.; Günzel, K.; Kittner, B.; Reimann, M.; Hofbauer, S.L.; Wiemer, L.E.; Heckmann, R.; Asbach, P.; et al. Follow-up of men with a PI-RADS 4/5 lesion after negative MRI/Ultrasound fusion biopsy. Sci. Rep. 2022, 12, 13603.

34. Arsov, C.; Becker, N.; Rabenalt, R.; Hiester, A.; Quentin, M.; Dietzel, F.; Antoch, G.; Gabbert, H.E.; Albers, P.; Schimmöller, L. The use of targeted MR-guided prostate biopsy reduces the risk of Gleason upgrading on radical prostatectomy. J. Cancer Res. Clin. Oncol. 2015, 141, 2061–2068.

35. Lee, H.; Hwang, S.I.; Lee, H.J.; Byun, S.-S.; Lee, S.E.; Hong, S.K. Diagnostic performance of diffusion-weighted imaging for prostate cancer: Peripheral zone versus transition zone. PLoS ONE 2018, 13, e0199636.

36. Wegelin, O.; Exterkate, L.; van der Leest, M.; Kummer, J.A.; Vreuls, W.; de Bruin, P.C.; Bosch, J.; Barentsz, J.O.; Somford, D.M.; van Melick, H.H. The FUTURE Trial: A Multicenter Randomised Controlled Trial on Target Biopsy Techniques Based on Magnetic Resonance Imaging in the Diagnosis of Prostate Cancer in Patients with Prior Negative Biopsies. Eur. Urol. 2019, 75, 582–590.

37. Filson, C.P.; Natarajan, S.; Margolis, D.J.; Huang, J.; Lieu, P.; Dorey, F.J.; Reiter, R.E.; Marks, L.S. Prostate cancer detection with magnetic resonance-ultrasound fusion biopsy: The role of systematic and targeted biopsies. Cancer 2016, 122, 884–892.

38. Drost, F.-J.H.; Osses, D.F.; Nieboer, D.; Steyerberg, E.W.; Bangma, C.H.; Roobol, M.J.; Schoots, I.G. Prostate MRI, with or without MRI-targeted biopsy, and systematic biopsy for detecting prostate cancer. Cochrane Database Syst. Rev. 2019, 2019, CD012663.

39. Ahdoot, M.; Wilbur, A.R.; Reese, S.E.; Lebastchi, A.H.; Mehralivand, S.; Gomella, P.T.; Bloom, J.; Gurram, S.; Siddiqui, M.; Pinsky, P.; et al. MRI-Targeted, Systematic, and Combined Biopsy for Prostate Cancer Diagnosis. N. Engl. J. Med. 2020, 382, 917–928.

40. Rouvière, O.; Puech, P.; Renard-Penna, R.; Claudon, M.; Roy, C.; Mège-Lechevallier, F.; Decaussin-Petrucci, M.; Dubreuil-Chambardel, M.; Magaud, L.; Remontet, L.; et al. Use of prostate systematic and targeted biopsy on the basis of multiparametric MRI in biopsy-naive patients (MRI-FIRST): A prospective, multicentre, paired diagnostic study. Lancet Oncol. 2019, 20, 100–109.

41. Leitlinienprogramm Onkologie. S3-Leitlinie Prostatakarzinom, Langversion 7.0; Leitlinienprogramm Onkologie: Berlin, Germany, 2024.

42. Kaneko, M.; Medina, L.G.; Lenon, M.S.L.; Hemal, S.; Sayegh, A.S.; Jadvar, D.S.; Ramacciotti, L.S.; Paralkar, D.; Cacciamani, G.E.; Lebastchi, A.H.; et al. Transperineal vs transrectal magnetic resonance and ultrasound image fusion prostate biopsy: A pair-matched comparison. Sci. Rep. 2023, 13, 13457.

43. Oderda, M.; Diamand, R.; Zahr, R.A.; Anract, J.; Assenmacher, G.; Delongchamps, N.B.; Bui, A.P.; Benamran, D.; Calleris, G.; Dariane, C.; et al. Transrectal versus transperineal prostate fusion biopsy: A pair-matched analysis to evaluate accuracy and complications. World J. Urol. 2024, 42, 535.

44. Mian, B.M.; Feustel, P.J.; Aziz, A.; Kaufman, R.P.; Bernstein, A.; Avulova, S.; Fisher, H.A. Complications Following Transrectal and Transperineal Prostate Biopsy: Results of the ProBE-PC Randomized Clinical Trial. J. Urol. 2024, 211, 205–213.

| Parameter | U1 (n = 157) | U2 (n = 242) | p |
|---|---|---|---|
| Median age, years (IQR) | 71 (65–76) | 68 (63–73.3) | <0.001 |
| Median PSA, ng/mL (IQR) | 7.0 (5.3–10.8) | 7.8 (5.6–11.1) | 0.134 |
| Median prostate volume, cm³ (IQR) | 45.3 (35.0–64.4) | 51.9 (35.0–73.8) | 0.225 |
| Proportion of PCa, n (%) | 121 (77.1) | 173 (71.5) | 0.216 |
| Proportion of csPCa, n (%) | 95 (60.5) | 131 (54.1) | 0.209 |
| Proportion of PCa solely detected by SB, n (%) | 22 (14.0) | 38 (15.7) | 0.726 |
| Proportion of csPCa solely detected by SB, n (%) | 15 (9.6) | 17 (7.0) | 0.550 |
| Median FU of pts. with negative biopsy and PI-RADS 4/5, months (IQR) | 45 (18–51) | 23 (15–31) | <0.001 |
| Number of pts. with PI-RADS 4/5 and negative biopsy, n (%) | 30 (19.1) | 62 (25.6) | 0.425 |

| Detection by PI-RADS category | Transrectal biopsies, n (%) | Transperineal biopsies, n (%) | p |
|---|---|---|---|
| PCa in PI-RADS 3 | 8 (50%) | 3 (37.5%) | 0.679 |
| csPCa in PI-RADS 3 | 6 (37.5%) | 1 (12.5%) | 0.352 |
| PCa in PI-RADS 4 | 131 (67.2%) | 52 (72.2%) | 0.461 |
| csPCa in PI-RADS 4 | 90 (46.2%) | 35 (48.6%) | 0.783 |
| PCa in PI-RADS 5 | 73 (90.1%) | 27 (100%) | 0.197 |
| csPCa in PI-RADS 5 | 68 (84%) | 26 (96.3%) | 0.182 |

| Detection by PI-RADS category | Biopsies with internal MRI, n (%) | Biopsies with external MRI, n (%) | p |
|---|---|---|---|
| PCa in PI-RADS 3 | 6 (60%) | 5 (35.7%) | 0.408 |
| csPCa in PI-RADS 3 | 4 (40%) | 3 (21.4%) | 0.393 |
| PCa in PI-RADS 4 | 95 (69.3%) | 88 (67.7%) | 0.793 |
| csPCa in PI-RADS 4 | 61 (44.5%) | 64 (49.2%) | 0.463 |
| PCa in PI-RADS 5 | 56 (88.9%) | 44 (97.8%) | 0.136 |
| csPCa in PI-RADS 5 | 54 (85.7%) | 40 (88.9%) | 0.774 |

| No. of UMFB | PI-RADS | Urologist 1 (n = 157): biopsies, n | Urologist 1: GS ≥ 7a, n (%) | Urologist 2 (n = 242): biopsies, n | Urologist 2: GS ≥ 7a, n (%) | p |
|---|---|---|---|---|---|---|
| 25 | 3 | 4 | 0 (0%) | 1 | 0 (0%) | 0.999 |
| 25 | 4 | 13 | 6 (46.1%) | 16 | 7 (43.8%) | 0.999 |
| 25 | 5 | 8 | 7 (87.5%) | 8 | 4 (50%) | 0.282 |
| 50 | 3 | 6 | 2 (33.3%) | 2 | 0 (0%) | 0.999 |
| 50 | 4 | 25 | 13 (52%) | 33 | 15 (45.5%) | 0.791 |
| 50 | 5 | 19 | 17 (89.5%) | 15 | 10 (66.7%) | 0.199 |
| 100 | 3 | 7 | 2 (28.6%) | 2 | 0 (0%) | 0.999 |
| 100 | 4 | 64 | 34 (53.1%) | 75 | 33 (44%) | 0.310 |
| 100 | 5 | 29 | 26 (89.7%) | 23 | 18 (78.3%) | 0.441 |
| 150 | 3 | 11 | 5 (45.5%) | 4 | 0 (0%) | 0.231 |
| 150 | 4 | 99 | 51 (51.5%) | 104 | 44 (42.3%) | 0.207 |
| 150 | 5 | 40 | 35 (87.5%) | 42 | 35 (83.3%) | 0.757 |
| Final count | 3 | 11 | 5 (45.5%) | 13 | 2 (15.4%) | 0.182 |
| Final count | 4 | 104 | 53 (51%) | 163 | 72 (44.2%) | 0.315 |
| Final count | 5 | 42 | 37 (88.1%) | 66 | 57 (86.4%) | 0.999 |
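
The between-urologist p-values in the table above can be checked from the counts alone. The sketch below is an illustration, not the authors' code: it assumes a two-sided Fisher's exact test on each 2×2 table (GS ≥ 7a yes/no by urologist), an assumption that happens to reproduce the reported 0.282 and 0.231. The helper name `fisher_exact_two_sided` is hypothetical, and only the Python standard library is used.

```python
from math import comb


def fisher_exact_two_sided(a: int, n1: int, b: int, n2: int) -> float:
    """Two-sided Fisher's exact test: a events of n1 vs b events of n2.

    Assumption: the p-value sums all tables (with fixed margins) that are
    no more likely than the observed one.
    """
    events = a + b
    total = n1 + n2

    def weight(k: int) -> int:
        # Unnormalised hypergeometric weight of k events in group 1.
        return comb(n1, k) * comb(n2, events - k)

    k_min = max(0, events - n2)
    k_max = min(n1, events)
    observed = weight(a)
    # Integer arithmetic avoids floating-point trouble when weights tie.
    extreme = sum(
        weight(k) for k in range(k_min, k_max + 1) if weight(k) <= observed
    )
    return extreme / comb(total, events)


# Counts taken from the table: first 25 biopsies, PI-RADS 5 (7/8 vs 4/8),
# and first 150 biopsies, PI-RADS 3 (5/11 vs 0/4).
p_25_pirads5 = fisher_exact_two_sided(7, 8, 4, 8)
p_150_pirads3 = fisher_exact_two_sided(5, 11, 0, 4)
print(f"{p_25_pirads5:.3f}", f"{p_150_pirads3:.3f}")
```

With these inputs the printed values agree with the tabulated p-values for the same rows. Entries listed as 0.999 presumably correspond to p-values of 1 that were capped for reporting; that reading is an assumption.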

| No. of UMFB | PI-RADS (Ahmed cutoff) | Urologist 1, p | Urologist 2, p |
|---|---|---|---|
| 25 | PI-RADS 3 (<20%) | 0.999 | 0.999 |
| 25 | PI-RADS 4 (>50%) | 0.999 | 0.804 |
| 25 | PI-RADS 5 (>80%) | 0.999 | 0.056 |
| 50 | PI-RADS 3 (<20%) | 0.345 | 0.999 |
| 50 | PI-RADS 4 (>50%) | 0.999 | 0.728 |
| 50 | PI-RADS 5 (>80%) | 0.400 | 0.199 |
| 100 | PI-RADS 3 (<20%) | 0.633 | 0.999 |
| 100 | PI-RADS 4 (>50%) | 0.708 | 0.356 |
| 100 | PI-RADS 5 (>80%) | 0.249 | 0.796 |
| 150 | PI-RADS 3 (<20%) | 0.050 | 0.999 |
| 150 | PI-RADS 4 (>50%) | 0.841 | 0.141 |
| 150 | PI-RADS 5 (>80%) | 0.322 | 0.702 |
| Final count | PI-RADS 3 (<20%) | 0.050 | 0.999 |
| Final count | PI-RADS 4 (>50%) | 0.922 | 0.158 |
| Final count | PI-RADS 5 (>80%) | 0.247 | 0.221 |
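
The benchmark comparison in the table above can be illustrated in the same way. The following sketch assumes a two-sided exact binomial test of each urologist's csPCa count against the boundary value of the Ahmed cutoff (0.2, 0.5, or 0.8); the excerpt only names a "binomial analysis", so the exact variant is an assumption, and `binomial_test_two_sided` is a hypothetical helper. Exact fractions are used so that equally likely outcomes are compared without rounding error.

```python
from fractions import Fraction
from math import comb


def binomial_test_two_sided(k: int, n: int, p0: Fraction) -> float:
    """Two-sided exact binomial test of k successes in n trials against p0.

    Assumption: the p-value sums the probabilities of all outcomes that are
    no more likely under p0 than the observed count.
    """

    def pmf(i: int) -> Fraction:
        # Exact binomial probability of i successes under p0.
        return comb(n, i) * p0**i * (1 - p0) ** (n - i)

    observed = pmf(k)
    return float(sum(pmf(i) for i in range(n + 1) if pmf(i) <= observed))


# Counts from the per-urologist detection table, cutoffs from this table.
checks = {
    "U1, 50 UMFB, PI-RADS 5 (17/19 vs 80%)": (17, 19, Fraction(4, 5)),
    "U1, 150 UMFB, PI-RADS 3 (5/11 vs 20%)": (5, 11, Fraction(1, 5)),
    "U2, 25 UMFB, PI-RADS 5 (4/8 vs 80%)": (4, 8, Fraction(4, 5)),
    "U2, 25 UMFB, PI-RADS 4 (7/16 vs 50%)": (7, 16, Fraction(1, 2)),
}
for label, (k, n, p0) in checks.items():
    print(label, f"{binomial_test_two_sided(k, n, p0):.3f}")
```

For these four rows the sketch returns 0.400, 0.050, 0.056, and 0.804, matching the tabulated values; a non-significant result means the observed detection rate is statistically compatible with the benchmark at that sample size.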

| First Author (Year) | Study Design, Methods, and Results |
|---|---|
| Yang et al. [25] (2024) | Design: single-center (UC), prospective (P), transperineal (TP); Patients: n = 92; Urologist: one experienced urologist, trained on a TP simulator prior to the study. Statistical analysis: CUSUM analysis for operating time with learning curve fitting, CDR, procedure duration, VAS, and VNS. Endpoints: CDR, procedure duration, and pain scores. Results: Two distinct phases identified: an initial learning phase (first 12 cases) followed by proficiency. No learning curve observed for csPCa CDR; statistically significant reduction in procedure duration and pain. Level: per urologist. |
| Taha et al. [26] (2024) | Design: single-center (UC), retrospective (R), transrectal (TR); Patients: n = 403; Urologists: seven with varying experience levels (seniors ≥ 50 biopsies; juniors with no experience). Statistical analysis: linear regression and two-sample tests. Endpoints: CDR across prostate regions and junior vs senior urologists. Results: Consistent CDR across all experience levels; trend for positive correlation between experience and csPCa. No learning curve for csPCa CDR. Level: per urologist. |
| Ramacciotti et al. [27] (2024) | Design: single-center (UC), prospective (P), transperineal (TP); Patients: n = 370; Urologist: one highly experienced urologist. Statistical analysis: Wilcoxon rank sum, chi-square, logistic and linear regression, inflection point analysis. Endpoints: csPCa CDR, procedure time, complication rates. Results: Learning curve for procedure time, plateau at 156 cases; consistently low complication rates. No learning curve for csPCa CDR. Level: per urologist. |
| Lenfant et al. [7] (2024) | Design: single-center (UC), prospective (P), transrectal (TR); Patients: n = 1721; Urologists: 14 with varying experience levels; novices supervised by lead operator. Statistical analysis: CUSUM. Endpoints: TRUS segmentation time, csPCa detection rate, pain score. Results: plateau for segmentation (40 cases), pain score (20–100 cases), and satisfactory csPCa CDR achieved after 25–48 cases. Level: per urologist. |
| Himmelsbach et al. [23] (2024) | Design: single-center (UC), retrospective (R), transperineal (TP); Patients: n = 1716; Urologists: 20 with varying experience (low, intermediate, high). Statistical analysis: chi-square, Wilcoxon–Mann–Whitney U, regression analysis. Endpoints: CDR for csPCa in TB, procedure duration. Results: Significant decrease in procedure duration with experience. No learning curve for csPCa CDR. Level: institutional. |
| Alargkof et al. [28] (2024) | Design: single-center (UC), prospective (P), transperineal (TP); Patients: n = 91; Urologists: novice, chief resident, expert. Statistical analysis: Fisher’s exact test, t-test, logistic regression. Endpoints: CDR, procedure time, EPA scores, NASA task load index. Results: EPA scores plateau after 22 cases. No significant differences in CDR for PI-RADS 4 lesions for expert. No difference in CDR between surgeons. Level: per urologist. |
| Xu et al. [8] (2023) | Design: single-center (UC), retrospective (R), route unspecified; Patients: n = 107; Urologists: two board-certified urologists. Statistical analysis: CUSUM, t-test. Endpoints: CDR in PI-RADS 3, 4, and 5. Results: plateau for csPCa CDR observed after 52 biopsies; significant improvements in TB CDR. Level: institutional. |
| Hsieh et al. [15] (2023) | Design: single-center (UC), prospective (P), transperineal (TP); Patients: n = 206; Urologist: one experienced urologist. Statistical analysis: Cochran–Armitage, McNemar test, logistic regression. Endpoints: temporal changes in csPCa CDR. Results: csPCa CDR improvements with time for TB; specific gains for lesions ≤ 1 cm and anterior lobe. Level: team-based. |
| Gereta et al. [5] (2023) | Design: single-center (UC), retrospective (R), transperineal (TP); Patients: n = 110; Urologist: one urologist inexperienced in UMFB. Statistical analysis: Kruskal–Wallis, chi-square, Fisher exact test, regression analysis. Endpoints: csPCa CDR, complication rates, biopsy core quality. Results: no learning curve for csPCa CDR; improvement in procedure time. Level: per urologist. |
| Calleris et al. [24] (2023) | Design: multi-center (MC), prospective (P), transperineal (TP); Patients: n = 1014; Urologists: 30 experienced (but with varying experience in perineal UMFB under local anesthesia). Statistical analysis: CUSUM, Kruskal–Wallis, chi-square, Jonckheere–Terpstra, linear regression. Endpoints: biopsy duration, csCDR-T, complications, pain, urinary function. Results: plateau for procedure time at 50 biopsies; no significant trend for csCDR-T. Level: per urologist and institutional. |
| Görtz et al. [20] (2022) | Design: single-center (UC), prospective (P), transperineal (TP); Patients: n = 939; Urologists: 17 grouped by experience level. Statistical analysis: chi-square, Altman, Tango. Endpoints: RTB vs ETB csPCa CDR. Results: learning curve for csPCa CDR after 100 biopsies; better results in RTB vs ETB for low-experience group. Level: by experience level. |
| Urkmez et al. [19] (2021) | Design: single-center (UC), prospective (P), route unspecified; Patients: n = 1446; Urologist: one inexperienced urologist. Statistical analysis: Wilcoxon rank sum, Fisher’s exact, LOWESS. Endpoints: csCDR for Likert ≥ 3. Results: plateau reached at 500 cases; csPCa CDR significantly improved. Level: team-based. |
| Checcucci et al. [21] (2021) | Design: single-center (UC), prospective (P), transperineal (TP) and transrectal (TR); Patients: n = 1005; Urologists: two, inexperienced, but having undergone FGB training. Statistical analysis: ANOVA, linear and logistic regression. Endpoints: csPCa and PCa CDRs. Results: no influence of experience for csCDR, but stable CDRs for small lesions achieved after 100 procedures. Level: institutional. |
| Cata et al. [18] (2021) | Design: single-center (UC), prospective (P), transrectal (TR); Patients: n = 400; Urologists: two with different levels of experience. Statistical analysis: chi-square, Kruskal–Wallis, multivariate regression. Endpoints: csPCa CDR for novice vs experienced. Results: improvement in csPCa CDR for experienced urologist only (after 52 cases). Level: per urologist. |
| Berg et al. [22] (2020) | Design: single-center (UC), prospective (P), transrectal (TR); Patients: n = 183; Urologists: experienced vs novice. Statistical analysis: logistic regression, IP weighting. Endpoints: CDR. Results: experience associated with higher odds of detecting PCa; no difference between expert and novice for CDR. Level: per urologist. |
| Kasabwala et al. [29] (2019) | Design: single-center (UC), retrospective (R), route unspecified; Patients: n = 173; Urologist: experienced in TR but not in UMFB. Statistical analysis: polynomial and linear regression, change point analysis. Endpoints: biopsy accuracy, specimen quality. Results: significant learning curve for biopsy accuracy up to 98 cases; no learning curve for csPCa CDR. Level: per urologist. |
| Truong et al. [30] (2018) | Design: single-center (UC), retrospective (R), route unspecified; Patients: n = 113; Urologists: five experienced in UMFB. Statistical analysis: logistic regression, ROC. Endpoints: csCDR for PI-RADS 3–5. Results: plateau in csPCa CDR for high PI-RADS lesions. Level: institutional. |
| Meng et al. [16] (2018) | Design: single-center (UC), prospective (P), route unspecified; Patients: n = 1595; Urologists: four with varying experience. Statistical analysis: ANOVA, Kruskal–Wallis, trend test. Endpoints: csPCa CDR. Results: significant improvement in csPCa CDR over time. Level: institutional. |
| Mager et al. [14] (2017) | Design: single-center (UC), retrospective (R), transrectal (TR); Patients: n = 123; Urologists: one experienced, one novice. Statistical analysis: Wilcoxon–Mann–Whitney U, Kruskal–Wallis. Endpoints: detection quotient, procedure efficacy. Results: significant learning curve for TB detection quotient. Level: per urologist. |
| Calio et al. [17] (2017) | Design: single-center (UC), prospective (P), route unspecified; Patients: n = 1528; Urologists: cohort-based experience. Statistical analysis: McNemar, logistic regression. Endpoints: csPCa CDR. Results: increase in csPCa CDR, decrease in clinically insignificant PCa (cisPCa). Level: cohort. |
| Present study | Design: single-center (UC), retrospective (R), access route not specified; Patients: n = 399; Urologists: two board-certified urologists experienced in prostate biopsies, yet new to UMFB. Statistical analysis: chi-square, Wilcoxon–Mann–Whitney U, binomial analysis. Endpoints: csCDR across PI-RADS categories 3, 4, and 5. Results: achieved optimal csPCa detection rates immediately following UMFB implementation. Level: per urologist. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Utzat, F.; Herrmann, S.; May, M.; Moersler, J.; Wolff, I.; Lermer, J.; Gregor, M.; Fodor, K.; Groß, V.; Kravchuk, A.; Elgeti, T.; Degener, S.; Gilfrich, C. Meeting Cancer Detection Benchmarks in MRI/Ultrasound Fusion Biopsy for Prostate Cancer: Insights from a Retrospective Analysis of Experienced Urologists. Cancers 2025, 17, 277. https://doi.org/10.3390/cancers17020277