Simple Summary

In vivo dose monitoring during treatment is crucial for the successful clinical implementation of boron neutron capture therapy, but it is not yet feasible. This study investigates the potential of Compton imaging in this setting by means of Monte Carlo simulation. Deep convolutional neural networks are applied to reduce image degradations in few-iteration reconstructions obtained with the standard maximum-likelihood expectation-maximization algorithm, avoiding the long computation time of many-iteration reconstructions and enabling prompt dose reconstruction during treatment. The standard U-Net architecture and two variants based on the deep convolutional framelets framework are examined for this task, showing promising results in terms of reconstruction accuracy and processing time.

Abstract

Background: Boron neutron capture therapy (BNCT) is an innovative binary form of radiation therapy with high selectivity towards cancer tissue. It is based on the neutron capture reaction 10B(n,α)7Li and consists of exposing patients to neutron beams after the administration of a boron compound that accumulates preferentially in cancer cells. The high linear energy transfer products of the ensuing reaction deposit their energy at the cell level, sparing normal tissue. Although progress in accelerator-based BNCT has led to renewed interest in this cancer treatment modality, in vivo dose monitoring during treatment is not yet feasible, and several approaches are under investigation. While Compton imaging presents various advantages over other imaging methods, it typically requires long reconstruction times, comparable with the BNCT treatment duration. Methods: This study aims to develop deep neural network models to estimate the dose distribution by using a simulated dataset of BNCT Compton camera images. The models are designed to avoid the long iteration time associated with the maximum-likelihood expectation-maximization (MLEM) algorithm, enabling prompt dose reconstruction during treatment. The U-Net architecture and two variants based on the deep convolutional framelets framework have been used for noise and artifact reduction in few-iteration reconstructed images. Results: This approach has led to promising results in terms of reconstruction accuracy and processing time, with the processing time reduced by a factor of about 6 with respect to classical iterative algorithms. Conclusions: This can be considered a good reconstruction time performance, considering typical BNCT treatment times. Further enhancements may be achieved by optimizing the reconstruction of input images with different deep learning techniques.

1. Introduction

1.1. Boron Neutron Capture Therapy

Boron neutron capture therapy (BNCT) is a binary tumor-selective form of radiation therapy based on the very high affinity of the nuclide boron-10 for neutron capture, resulting in the prompt compound nuclear reaction 10B(n,α)7Li, and on the preferential accumulation of boron-containing pharmaceuticals in cancer cells rather than normal cells.

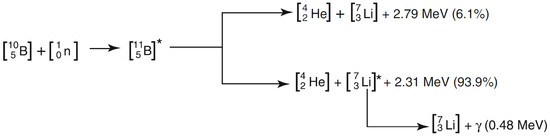

Neutron capture therapy (NCT) was first proposed shortly after the discovery of the neutron by Chadwick in 1932 and the description of the boron neutron capture reaction by Taylor and Goldhaber in 1935 [1]. The reaction is illustrated in Figure 1: a 10B nucleus absorbs a slow or thermal neutron (with energy below about 0.5 eV), forming for a brief time a highly excited 11B compound nucleus [2,3], which subsequently decays, predominantly disintegrating into an energetic alpha particle emitted back to back with a recoiling 7Li ion. The two particles have a range in tissue of about 9 µm and 5 µm, respectively, and a high linear energy transfer (LET) of 150 keV/µm and 175 keV/µm, respectively. The Q value of the reaction is about 2.79 MeV [4]. In about 94% of the cases, the lithium nucleus is in an excited state and immediately emits a 0.478 MeV gamma photon, leaving a combined average kinetic energy of about 2.31 MeV to the two nuclei.
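The energy balance of the dominant branch can be written out as a quick consistency check. The following is only a sketch: it assumes the commonly quoted values $Q \approx 2.79$ MeV and $E_\gamma \approx 0.478$ MeV, a capture essentially at rest, and a non-relativistic two-body breakup in which the two fragments carry equal and opposite momenta. The symbols $E_\alpha$ and $E_{\mathrm{Li}}$ (fragment kinetic energies) are introduced here for illustration.

```latex
\begin{align*}
E_\alpha + E_{\mathrm{Li}} &\approx Q - E_\gamma
  \approx 2.79\ \mathrm{MeV} - 0.478\ \mathrm{MeV} \approx 2.31\ \mathrm{MeV},\\[4pt]
\frac{E_\alpha}{E_{\mathrm{Li}}} &\approx \frac{m_{\mathrm{Li}}}{m_\alpha} \approx \frac{7}{4}
  \quad\Longrightarrow\quad
  E_\alpha \approx \tfrac{7}{11}\,(2.31\ \mathrm{MeV}) \approx 1.47\ \mathrm{MeV},
  \qquad
  E_{\mathrm{Li}} \approx \tfrac{4}{11}\,(2.31\ \mathrm{MeV}) \approx 0.84\ \mathrm{MeV}.
\end{align*}
```

Under these assumptions the lighter alpha particle takes the larger share of the kinetic energy, which is in line with its longer range in tissue.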

Figure 1.

Boron neutron capture reaction [5].

The selectivity of this kind of therapy towards cancer tissue is a consequence of the small combined range of 7Li and the alpha particle of 12–14 µm, which is comparable with cellular dimensions. By concentrating a sufficient number of boron atoms within tumor cells, the exposure to thermal neutrons may result in their death with low normal tissue complications, because of the higher radiation dose imparted to cancer cells relative to adjacent normal cells. After the administration of the boron compound to the patient, a proton or deuteron beam emitted from an accelerator is focused on a neutron-emitting target. A suitable neutron beam is obtained with a beam shaping assembly (BSA) and is directed into a treatment room in which the patient is precisely positioned. In BNCT, the full dose is typically administered in one or two applications of 30–90 min [6], while in traditional photon treatments the dose is generally split into multiple fractions administered over a period of 3–7 weeks. Therapeutic efficacy requires an absolute boron concentration higher than 20 ppm and high tumor to normal tissue (T/N) and tumor to blood (T/B) concentration ratios; the recommended thresholds are given in [6]. If $C_T$, $C_N$, and $C_B$ are the boron concentrations in tumor tissue, normal tissue, and blood, the concentration ratios are also denoted by $C_T/C_N$ and $C_T/C_B$, respectively.

The dosimetry for BNCT is much more complex than for conventional photon radiation therapy. While in standard radiotherapy X-rays mainly produce electrons that release all their kinetic energy by ionization, in BNCT there are four main radiation components contributing to the absorbed dose in tissue [6]. The boron dose $D_B$, which is the therapeutic dose, is related to the energy of about 2.3 MeV deposited locally by the emitted alpha particle and the recoiling 7Li ion in the boron neutron capture reaction 10B(n,α)7Li.

It can be shown [6] that the boron dose at a given point is proportional to the local boron concentration. The real-time in vivo measurement of boron concentration, necessary for dose determination, is one of the main challenges of BNCT. At present, there is no real-time in vivo method to measure boron concentration during BNCT treatment, although several approaches are under investigation, the most promising being positron-emission tomography, magnetic resonance imaging, and prompt gamma analysis, each exhibiting distinct advantages and limitations [6]. Prompt gamma analysis with single photon emission computed tomography (SPECT) or Compton imaging systems aims to reconstruct the boron distribution in vivo in real time using the prompt 478 keV gamma rays emitted by the excited lithium nucleus in the boron neutron capture reaction.

1.2. Compton Imaging

A Compton camera is a gamma-ray detector that uses the kinematics of Compton scattering to reconstruct the original radiation source distribution.

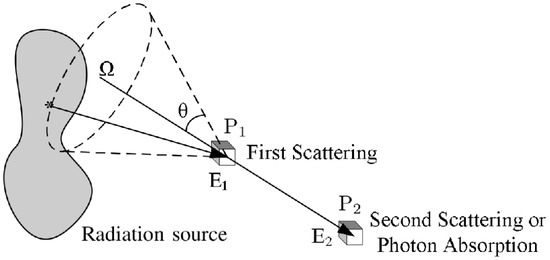

The basic scheme of a Compton camera is shown in Figure 2: the incoming gamma ray undergoes Compton scattering from an electron in the position-sensitive first detector (scatterer) and is then absorbed by the second position-sensitive detector (absorber). The Compton scattering and absorption events are together called a Compton event.

Figure 2.

Schematic diagram of a general Compton camera.

The measured data are the coordinates $\mathbf{x}_1$ and $\mathbf{x}_2$ of the first and second points of interaction, the energy $E_1$ deposited by the recoil electron in the first detector, and the energy $E_2$ deposited in the second detector. The energy of the incoming gamma ray is equal to the sum of the deposited energies: $E_0 = E_1 + E_2$. Assuming that the electron before scattering has no momentum in the laboratory frame, it follows from energy–momentum conservation that the energy $E'$ of the scattered photon as a function of the scattering angle $\theta$ is:

$$E' = E_0 - E_1 = \frac{E_0}{1 + \dfrac{E_0}{m_e c^2}\,(1 - \cos\theta)} \tag{1}$$

where $m_e c^2 \approx 511$ keV is the electron rest energy. Inverting Equation (1) gives $\cos\theta = 1 - m_e c^2 \left( \frac{1}{E_0 - E_1} - \frac{1}{E_0} \right)$, which allows the estimation of the Compton scattering angle from the energy deposited in the first detector and the gamma-ray energy. For each Compton event, the position of the source is confined to the surface of a cone, called the Compton cone, with its vertex at the interaction point in the scatterer, its axis passing through the interaction point in the absorber, and its half-angle obtained from the energy measurements. The original gamma source distribution can be estimated from the superposition of the Compton cones.
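The cone half-angle computation described above can be sketched in a few lines. This is a minimal illustration rather than the code used in the study: the function names, the default gamma-ray energy of 478 keV, and the rounded electron rest energy are assumptions made for the example.

```python
import math

ELECTRON_REST_ENERGY_KEV = 510.999  # m_e c^2 in keV (rounded)


def scattered_energy(theta, e0_kev=478.0):
    """Energy (keV) of a photon of energy e0_kev after Compton scattering by theta (rad)."""
    return e0_kev / (1.0 + (e0_kev / ELECTRON_REST_ENERGY_KEV) * (1.0 - math.cos(theta)))


def compton_half_angle(e1_kev, e0_kev=478.0):
    """Half-angle (rad) of the Compton cone, given the energy e1_kev deposited in the
    scatterer and the incident gamma-ray energy e0_kev."""
    e_scattered = e0_kev - e1_kev
    if e1_kev <= 0.0 or e_scattered <= 0.0:
        raise ValueError("deposited energy must lie strictly between 0 and e0")
    cos_theta = 1.0 - ELECTRON_REST_ENERGY_KEV * (1.0 / e_scattered - 1.0 / e0_kev)
    if not -1.0 <= cos_theta <= 1.0:
        # Beyond the Compton edge: the event cannot be a single Compton scatter.
        raise ValueError("energies are not compatible with Compton kinematics")
    return math.acos(cos_theta)


# Round trip: scatter by 60 degrees, then recover the angle from the deposited energy.
theta_true = math.radians(60.0)
e1 = 478.0 - scattered_energy(theta_true)
theta_est = compton_half_angle(e1)
```

Events whose deposited energy exceeds the Compton edge raise an error here; in a real pipeline they would more likely be discarded silently during event selection.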

While SPECT imaging is characterized by quite low sensitivity, due to the presence of the collimator, which determines a relation of inverse proportionality between the sensitivity and the spatial resolution squared [7], Compton cameras, which apply electronic collimation instead of physical collimation, are characterized by higher sensitivity. Moreover, the sensitivity depends on the size, type, and geometry of the two detectors and is therefore independent of the angular (and spatial) resolution, which instead depends on the noise and spatial resolution characteristics of the detector. Another important characteristic of Compton imaging compared with SPECT is that the angular (and spatial) resolution improves with increasing gamma-ray energy. For a gamma ray of energy $E_0$ depositing an energy $E_1$ in the scatterer with uncertainty $dE_1$, it follows from Equation (1) that the uncertainty on the scattering angle $\theta$ is:

$$d\theta = \frac{m_e c^2}{\sin\theta\,(E_0 - E_1)^2}\, dE_1 \tag{2}$$

On the other hand, in SPECT, the sensitivity decreases for higher energies, since the septal thickness must be increased to reduce gamma-ray penetration. Furthermore, Compton imaging could in principle allow the rejection of most of the background events due to 10B present in the shielding walls of most BNCT facilities, by filtering out all Compton cones having zero intersection with the reconstruction region.

1.3. Compton Image Reconstruction

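A general imaging system is associated with a linear integral operator $\mathcal{H}$ such that the continuous source distribution $f(\mathbf{x})$ and the expected value $\bar{g}(\mathbf{y})$ of the projection measurements are related by:

$$\bar{g}(\mathbf{y}) = (\mathcal{H} f)(\mathbf{y}) = \int_{\Omega_X} h(\mathbf{y} \mid \mathbf{x})\, f(\mathbf{x})\, d\mathbf{x} \tag{3}$$

or more precisely $g = \mathcal{H} f + n$, with $n$ a noise term, if noise is taken into account. The kernel $h(\mathbf{y} \mid \mathbf{x})$, representing the conditional probability of detecting an event at location $\mathbf{y}$ given that it was emitted at location $\mathbf{x}$ in the source, is also called the space-variant (projection) point spread function [8,9], since it amounts to the response of the system to a point source, that is, to a Dirac delta distribution. In the case of Compton imaging, the source coordinate $\mathbf{x}$ in Equation (3) represents a 3D location, while the detector coordinate $\mathbf{y}$ is a vector containing the Compton event detection locations and the energies deposited in the two detectors.

Since the projection operator $\mathcal{H}$ is a compact operator and in particular a Hilbert–Schmidt operator (the supports $\Omega_X$ and $\Omega_Y$ are finite and the kernel $h(\mathbf{y} \mid \mathbf{x})$ is bounded for all $\mathbf{x} \in \Omega_X$ and $\mathbf{y} \in \Omega_Y$, thus $\int_{\Omega_Y}\!\int_{\Omega_X} |h(\mathbf{y} \mid \mathbf{x})|^2\, d\mathbf{x}\, d\mathbf{y} < \infty$), it can be approximated with arbitrary accuracy, in the Hilbert–Schmidt norm, by a finite rank operator [10,11]. If $\mathbf{f} \in \mathbb{R}^B$ is the discrete approximation of the source distribution (pixel values) and $\bar{\mathbf{g}} \in \mathbb{R}^D$ is the expected number of events in each of the D detector channels, the discrete version of Equation (3) is obtained:

$$\bar{\mathbf{g}} = \mathbf{H}\,\mathbf{f}, \qquad \bar{g}_d = \sum_{b=1}^{B} h_{db}\, f_b \tag{4}$$

where $\mathbf{H}$ is a $D \times B$ matrix called the system matrix.

The aim of image reconstruction is to determine an estimate $\hat{\mathbf{f}}$ of $\mathbf{f}$ given the noisy measurements $\mathbf{g}$ and the operator $\mathbf{H}$.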

Traditionally, the analytic approach has been employed to solve the inverse problem by neglecting any explicit randomness and any deterministic blurring and attenuation mechanisms, and by trying to invert Equation (3) exactly with a sufficiently simple projection operator. On the other hand, modern reconstruction techniques employ more general linear models that can take into account the blurring and attenuation mechanisms (deterministic degradations) and generally also incorporate probabilistic models of noise (random degradations [12,13,14]) without requiring restrictive system geometries [11]. These models typically do not admit an explicit solution, or an analytic solution can be difficult to compute, so in most cases they are dealt with by iterative algorithms, in which the reconstructed image is progressively refined in repeated calculations. In this way, greater accuracy can be obtained, although longer computation times are required.

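The most commonly employed algorithms for Compton image reconstruction are MLEM and maximum a posteriori (MAP) estimation. The maximum likelihood (ML) criterion is the most widely used technique in statistical inference for deriving estimators. In this criterion, it is presumed that the observation vector $\mathbf{g}$ is determined by an unknown deterministic parameter $\mathbf{f}$, which in this case is the gamma source distribution to be reconstructed, following the conditional probability $p(\mathbf{g} \mid \mathbf{f})$. The maximum likelihood estimate of $\mathbf{f}$ is the reconstructed image $\hat{\mathbf{f}}$ maximizing the likelihood function for the measured data $\mathbf{g}$:

$$\hat{\mathbf{f}} = \arg\max_{\mathbf{f}}\; p(\mathbf{g} \mid \mathbf{f}) \tag{5}$$

The maximum likelihood expectation maximization (MLEM) algorithm is an iterative procedure whose output tends to the ML solution of the problem. In the case of inversion problems with Poisson noise, it consists of the following iteration step [11], where $h_{db}$ is the probability that a gamma ray emitted from voxel $b$ is detected in bin $d$, $g_d$ is the number of counts measured in bin $d$, and $\hat{f}_b^{(n)}$ is the estimate of voxel $b$ at iteration $n$:

$$\hat{f}_b^{(n+1)} = \frac{\hat{f}_b^{(n)}}{\sum_{d=1}^{D} h_{db}} \sum_{d=1}^{D} \frac{h_{db}\, g_d}{\sum_{b'=1}^{B} h_{db'}\, \hat{f}_{b'}^{(n)}} \tag{6}$$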

The two main shortcomings of MLEM reconstruction are the slow convergence (typically 30–50 iterations are required to get usable images) and high noise.
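The multiplicative structure of the binned-mode MLEM step can be illustrated on a toy problem. The sketch below is not the reconstruction code of the study: the function name `mlem`, the 3-bin by 2-voxel system matrix, and the noiseless counts are all hypothetical and chosen only to keep the example small.

```python
def mlem(system_matrix, counts, n_iter=50):
    """Binned-mode MLEM for Poisson data (toy, pure-Python version).

    system_matrix[d][b]: probability that an emission from voxel b is detected in bin d.
    counts[d]: number of events measured in bin d.
    """
    n_bins, n_vox = len(system_matrix), len(system_matrix[0])
    # Sensitivity of each voxel: sum of its detection probabilities over all bins.
    sens = [sum(system_matrix[d][b] for d in range(n_bins)) for b in range(n_vox)]
    f = [1.0] * n_vox  # uniform, strictly positive initial estimate
    for _ in range(n_iter):
        # Forward projection of the current estimate.
        proj = [sum(system_matrix[d][b] * f[b] for b in range(n_vox)) for d in range(n_bins)]
        # Back-projection of the measured/expected ratio, then multiplicative update.
        f = [
            f[b] / sens[b]
            * sum(system_matrix[d][b] * counts[d] / proj[d] for d in range(n_bins) if proj[d] > 0)
            for b in range(n_vox)
        ]
    return f


# Hypothetical toy system: 3 detector bins, 2 voxels, noiseless data from f = [100, 50].
H = [[0.5, 0.1], [0.2, 0.3], [0.1, 0.4]]
true_f = [100.0, 50.0]
g = [sum(h * x for h, x in zip(row, true_f)) for row in H]

one_step = mlem(H, g, n_iter=1)
estimate = mlem(H, g, n_iter=200)
```

On consistent, noiseless data with a well-conditioned matrix the estimate approaches the true values quickly, and every iterate preserves the total number of expected counts. With real Poisson data and a large, ill-conditioned system the convergence is much slower and noise grows with the iteration number, which is the behavior discussed above.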

The iterative step in Equation (6), also known as binned-mode MLEM, could be applied to reconstruct the image. However, in the case of a Compton camera, the number D of possible detector elements can be exceedingly high: in a practical Compton camera, D is the product of the number of first-detector elements, the (equal) number of second-detector elements, and the number of energy channels [11]. Since a typical Compton camera dataset contains far fewer events than D, most of the possible detector bins will contain zero counts. An alternative approach, the so-called list-mode MLEM [15,16,17,18], can be adopted in order to reduce the computational cost. In this approach, the number of detector bins D is assumed to be very large, so that most of them contain zero counts, while the occupied bins contain only one count. This is basically equivalent to considering infinitesimal bins. If N is the number of detected events and $\mathcal{D} = \{d_1, \dots, d_N\}$ denotes the set of all occupied bins, the observation vector becomes:

$$g_d = \begin{cases} 1 & \text{if } d \in \mathcal{D} \\ 0 & \text{otherwise} \end{cases} \tag{7}$$

The list-mode iteration is then given by:

$$\hat{f}_b^{(n+1)} = \frac{\hat{f}_b^{(n)}}{s_b} \sum_{i=1}^{N} \frac{h_{d_i b}}{\sum_{b'=1}^{B} h_{d_i b'}\, \hat{f}_{b'}^{(n)}} \tag{8}$$

where $s_b = \sum_{d=1}^{D} h_{db}$ is the sum over all detector bins (and not only over the occupied ones) of the probabilities $h_{db}$ of a gamma ray emitted from voxel b being detected in detector bin d. The quantity $s_b$ therefore represents the probability that a gamma ray emitted from b is detected and is called the sensitivity. As a first approximation, $s_b$ can be considered equal to the solid angle subtended by the scatter detector at voxel b divided by $4\pi$, which becomes essentially uniform over all voxels if the detector is small [16]. The expressions of the system matrix and of the sensitivity are derived in [16]. Using Equation (8), the sum ranges only over the N detected events rather than over all detector elements, and the computational cost is thus generally reduced.
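The list-mode update can be sketched in the same toy setting. In this illustration each detected event carries its own row of system-matrix elements, and the sensitivity is passed in separately because it sums over all bins, occupied or not. The function name, the toy rows, and the sensitivity values are hypothetical; computing realistic rows from cone geometry, as done in [16], is outside the scope of this sketch.

```python
def list_mode_mlem(event_rows, sensitivity, n_iter=50):
    """List-mode MLEM (toy, pure-Python version).

    event_rows[i][b]: system-matrix element for detected event i and voxel b.
    sensitivity[b]: probability that an emission from voxel b is detected at all.
    """
    n_vox = len(sensitivity)
    f = [1.0] * n_vox  # uniform, strictly positive initial estimate
    for _ in range(n_iter):
        update = [0.0] * n_vox
        for row in event_rows:
            # Expected contribution of the current estimate to this event.
            denom = sum(h * fb for h, fb in zip(row, f))
            if denom <= 0.0:
                continue
            for b, h in enumerate(row):
                update[b] += h / denom
        f = [fb * u / s for fb, u, s in zip(f, update, sensitivity)]
    return f


# Hypothetical data: 120 events falling into three distinct "bins" with two voxels.
rows = [[0.5, 0.1]] * 55 + [[0.2, 0.3]] * 35 + [[0.1, 0.4]] * 30
sens = [0.8, 0.8]  # column sums of the underlying toy system matrix
estimate_lm = list_mode_mlem(rows, sens, n_iter=200)
```

The loop runs over events rather than over all possible detector bins, so its cost per iteration scales with the number of detected events times the number of voxels touched by each cone.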

1.4. Research Outline and Discussion

In order to investigate the potential of Compton imaging with CZT detectors for BNCT, a Geant4 simulation of a simplified detector in a BNCT setting has been implemented. The data from the simulation have been used to reconstruct the gamma source distribution with the list-mode MLEM algorithm.

Deep neural network models have then been examined for reconstructing the dose distribution from the simulated dataset of BNCT Compton camera images while avoiding the long iteration time associated with the MLEM algorithm.

The U-Net architecture and two variants based on the deep convolutional framelets approach have been used for noise and artifact reduction in few-iteration reconstructed images, leading to promising results in terms of reconstruction accuracy and processing time.

Deep convolutional framelet denoising has already demonstrated significant success in enhancing low-dose computed tomography (CT) images, achieving the second-place award in the 2016 AAPM Low-Dose CT Grand Challenge [19,20,21] and providing a basis for its application in other imaging modalities. While in low-dose CT the denoising algorithm primarily operates on two-dimensional image slices, extending this technique to Compton imaging requires modifications to handle increased data dimensionality efficiently, as this imaging modality involves three-dimensional images.

Recent studies have explored various approaches to integrate deep learning techniques into Compton image reconstruction algorithms to reduce computation time and maintain or improve image quality [22]. Deep convolutional framelets represent a significant improvement compared to traditional methods such as generic convolutional neural networks and standard U-Nets [23,24], enabling better multi-scale feature extraction and fine detail preservation. Another study employed a GAN with a standard U-Net generator for Compton image reconstruction in a BNCT setting using the ground-truth boron distributions as labels [25]. The alternative approach adopted in the present study may also be embedded in such generative adversarial framework by substituting the standard U-Net with framelet-based U-Nets as the generator, potentially improving the GAN performance.

2. Deep Learning Models

The past few years have witnessed impressive advancements in the application of deep learning to biomedical image reconstruction. While classical algorithms generally perform well, they often require long processing times. The use of deep learning techniques can lead to orders-of-magnitude faster reconstructions, in some cases even with better image quality than classical iterative methods [26,27,28].

There exist various approaches to applying deep learning to the inverse problem. The taxonomy described in [27], based on a first distinction between supervised and unsupervised techniques and a second distinction considering what is known about the forward model $\mathbf{H}$ and when, consists of sixteen major categories.

The present study focuses on the case of supervised image degradation reduction with U-Nets in the deep convolutional framelets framework, with the forward operator $\mathbf{H}$ fully known, using a matched dataset of measurements $\mathbf{g}$, the Compton event data produced in a Geant4 simulation, and ground-truth images $\mathbf{f}$, corresponding to the output of the 60th iteration of the list-mode MLEM algorithm [15,16] applied to the Compton event data (reconstruction time 24–36 min). The objective in the supervised setting is to obtain a reconstruction network $R_\theta$ mapping measurements $\mathbf{g}$ to images $\hat{\mathbf{f}}$, where $\theta$ is a vector of parameters to be learned.

Image Degradation Reduction: U-Nets and Deep Convolutional Framelets

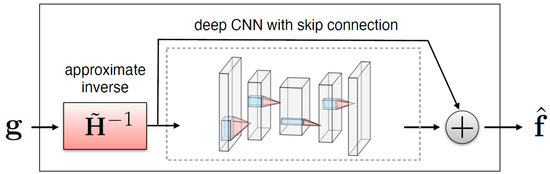

A simple method for embedding the forward operator $\mathbf{H}$ into the network architecture is to apply an approximate inverse operator $\tilde{\mathbf{H}}^{-1}$ (a matrix such that $\tilde{\mathbf{H}}^{-1}\mathbf{H}\mathbf{f} \approx \mathbf{f}$ for every image $\mathbf{f}$ of interest) to first map the measurements to the image domain and then train a neural network to remove degradations (noise and artifacts) from the resulting images [27], as illustrated in Figure 3.

Figure 3.

Illustration of a CNN with skip connection to remove noise and artifacts from an initial reconstruction obtained by applying an approximate inverse of the forward operator to the measurements [27].

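The expression of the reconstruction network is therefore:

$$R_\theta(\mathbf{g}) = N_\theta\!\left(\tilde{\mathbf{H}}^{-1}\mathbf{g}\right) + \tilde{\mathbf{H}}^{-1}\mathbf{g}$$

where $\tilde{\mathbf{H}}^{-1}\mathbf{g}$ is the initial reconstruction obtained by applying an approximate inverse of the forward operator to the measurements $\mathbf{g}$, and $N_\theta$ is a trainable neural network depending on parameters $\theta$. Networks with more complicated hierarchical skip connections are also commonly used. In this study, the use of the standard U-Net architecture and of two variants satisfying the so-called frame condition, the dual-frame U-Net and the tight-frame U-Net, first proposed in [19], is examined.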

The deep convolutional framelets framework, introduced by Ye in [29], provides a theoretical understanding of convolutional encoder–decoder architectures, such as U-Nets, by adopting the frame-theoretic viewpoint [30], according to which the forward pass of a CNN can be regarded as a decomposition in terms of a frame that is related to pooling operations and convolution operations with learned filters [31,32]. In the theory of convolutional framelets, the feature maps in the CNNs are interpreted as convolutional framelet coefficients, the user-defined pooling and unpooling layers correspond to the convolutional framelet expansion global bases, and encoder and decoder convolutional filters correspond to the local bases.

Deep convolutional framelets are characterized by an inherent shrinking behavior [29], which determines the degradation reduction capabilities allowing the solution of inverse problems. Optimal local bases are learnt from the training data such that they give the best degradation shrinkage behavior.

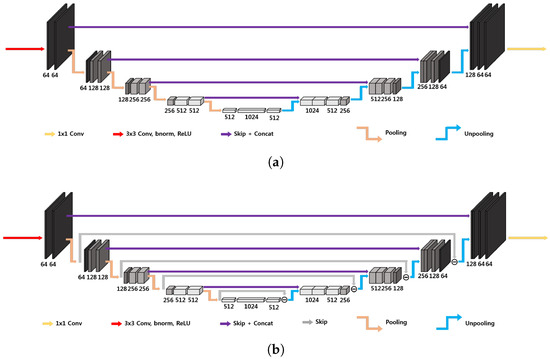

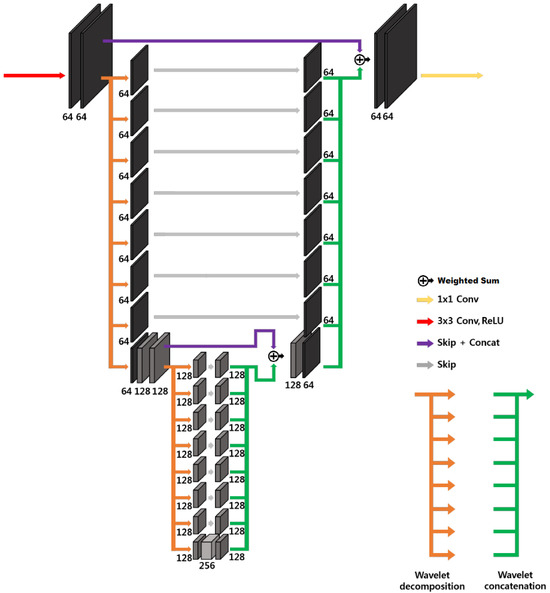

The three-dimensional U-Net architecture, illustrated in Figure 4a, initially proposed for biomedical image segmentation, is widely used for inverse problems [33]. The network is characterized by an encoder–decoder structure organized recursively into several levels, with the next level applied to the low-resolution signal of the previous layer [19]. The encoder part consists of convolutional layers, average pooling layers, denoted by $\Phi^\top$, batch normalization, and ReLUs, while the decoder consists of average unpooling layers, denoted by $\Phi$, and convolutions. There are also skip connections through channel concatenation, which allow retaining the high-frequency content of the input signal. The pooling and unpooling layers determine an exponentially large receptive field. As outlined in [19], the extended average pooling and unpooling layers ($\Phi_{\mathrm{ext}}^\top$ and $\Phi_{\mathrm{ext}} = [\, I \;\; \Phi \,]$, respectively) do not satisfy the frame condition, which leads to an overemphasis of the low-frequency components of images due to the duplication of the low-frequency branch [29], resulting in artifacts.

Figure 4.

Simplified 3D architecture of (a) standard U-Net and (b) dual-frame U-Net [19]. These are 4D representations, where the plane perpendicular to the page corresponds to three-dimensional space.

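A possible improvement is represented by the three-dimensional dual-frame U-Net, employing the dual frame of the extended unpooling operator $\Phi_{\mathrm{ext}} = [\, I \;\; \Phi \,]$, given by [19]:

$$\tilde{\Phi}_{\mathrm{ext}} = \left(\Phi_{\mathrm{ext}} \Phi_{\mathrm{ext}}^\top\right)^{-1} \Phi_{\mathrm{ext}} = \left(I + \Phi\Phi^\top\right)^{-1} [\, I \;\; \Phi \,] = \left[\, I - \Phi\Phi^\top/2 \;\;\; \Phi/2 \,\right]$$

corresponding to the architecture in Figure 4b. In this way the frame condition is satisfied, but there is noise amplification linked to the condition number of $I + \Phi\Phi^\top$, which is equal to 2 [19].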
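The closed form of the dual frame and the value of the condition number can be checked with a short calculation. The sketch below assumes that $\Phi$ denotes the average unpooling operator with the normalization $\Phi^\top\Phi = I$, that the extended operator is $\Phi_{\mathrm{ext}} = [\, I \;\; \Phi \,]$, and it uses the matrix inversion (Woodbury) identity; these assumptions follow the framework of [19] as understood here and should be checked against that reference.

```latex
\begin{align*}
\Phi_{\mathrm{ext}}\Phi_{\mathrm{ext}}^\top
  &= [\, I \;\; \Phi \,]\begin{bmatrix} I \\ \Phi^\top \end{bmatrix}
   = I + \Phi\Phi^\top,\\[4pt]
\left(I + \Phi\Phi^\top\right)^{-1}
  &= I - \Phi\left(I + \Phi^\top\Phi\right)^{-1}\Phi^\top
   = I - \tfrac{1}{2}\,\Phi\Phi^\top,\\[4pt]
\left(I - \tfrac{1}{2}\,\Phi\Phi^\top\right)[\, I \;\; \Phi \,]
  &= \left[\, I - \tfrac{1}{2}\,\Phi\Phi^\top \;\;\; \Phi - \tfrac{1}{2}\,\Phi\Phi^\top\Phi \,\right]
   = \left[\, I - \tfrac{1}{2}\,\Phi\Phi^\top \;\;\; \tfrac{1}{2}\,\Phi \,\right].
\end{align*}
```

Since $\Phi^\top\Phi = I$ makes $\Phi\Phi^\top$ an orthogonal projector with eigenvalues 0 and 1, the matrix $I + \Phi\Phi^\top$ has eigenvalues 1 and 2, which gives the condition number of 2 under these assumptions.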

The use of tight filter-bank frames or wavelets allows improving the performance of the U-Net by satisfying the frame condition with minimum noise amplification. In this case, the non-local basis is composed of a tight filter bank:

$$\Phi = [\, T_1 \;\cdots\; T_L \,], \qquad \Phi\Phi^\top = \sum_{k=1}^{L} T_k T_k^\top = c\, I, \quad c > 0$$

where $T_k$ denotes the k-th subband operator. The simplest tight filter-bank frame is the Haar wavelet transform [34,35]. In n dimensions, it is composed of $2^n$ filters. The three-dimensional tight-frame U-Net architecture is in principle essentially analogous to the one-dimensional and two-dimensional ones proposed in [19]. The main difficulty is that operations on three-dimensional images are computationally more expensive, and the number of filters in the filter bank rises to 8. In order to reduce the computational cost, the large-output signal concatenation and multi-channel convolution have been replaced by a simple weighted sum of the signals with learnable weights, as shown in Figure 5, which represents a two-level 3D modified tight-frame architecture. Notice that this substitution drastically reduces the number of parameters to be learned, reducing the risk of overfitting.
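The tight-frame property of the 3D Haar filter bank can be checked numerically on a single 2×2×2 block. The sketch below is purely illustrative: it builds the eight separable Haar filters from the 1D low-pass and high-pass pairs and verifies perfect reconstruction. The function names and the dictionary-based block layout are choices made for this example, not part of the networks described here.

```python
import itertools
import math

LOW = (1 / math.sqrt(2), 1 / math.sqrt(2))    # 1D Haar low-pass (averaging) filter
HIGH = (1 / math.sqrt(2), -1 / math.sqrt(2))  # 1D Haar high-pass (difference) filter
IDX = list(itertools.product(range(2), repeat=3))  # voxel indices of a 2x2x2 block


def haar_filters_3d():
    """Return the 8 separable 3D Haar filters, each as a dict {(i, j, k): weight}."""
    filters = []
    for fx, fy, fz in itertools.product((LOW, HIGH), repeat=3):
        filters.append({(i, j, k): fx[i] * fy[j] * fz[k] for (i, j, k) in IDX})
    return filters


def analysis(block, filters):
    """Decompose a 2x2x2 block into its 8 subband coefficients."""
    return [sum(filt[p] * block[p] for p in IDX) for filt in filters]


def synthesis(coeffs, filters):
    """Rebuild a 2x2x2 block from its subband coefficients."""
    return {p: sum(c * filt[p] for c, filt in zip(coeffs, filters)) for p in IDX}


filters = haar_filters_3d()
block = {p: float(n) for n, p in enumerate(IDX, start=1)}  # voxel values 1..8
coeffs = analysis(block, filters)
reconstructed = synthesis(coeffs, filters)
# Keeping only the all-low-pass subband mimics what average pooling/unpooling retains.
low_only = synthesis([coeffs[0]] + [0.0] * 7, filters)
```

Using all eight subbands recovers the block and preserves its energy, whereas the low-pass branch alone returns the block mean in every voxel. The seven high-pass subbands carry the detail that plain average pooling discards.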

Figure 5.

Modified 3D tight-frame U-Net. This is a 4D representation, where the plane perpendicular to the page corresponds to three-dimensional space.

3. Methods

3.1. Monte Carlo Simulation

Since Compton imaging requires detectors characterized by both high spatial and energy resolutions [11,36], high-resolution 3D CZT drift strip detectors, which currently offer the best performance [37,38], were considered in this study.

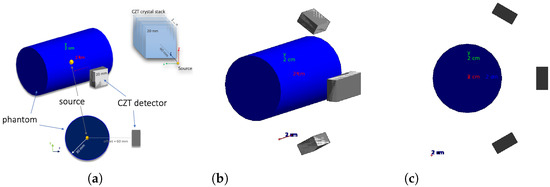

A Geant4 simulation of the CZT detector modules in a BNCT setting was implemented in order to assess the accuracy of the source reconstruction and to optimize the detector geometry. For the simulation, the G4EmStandardPhysics and G4DecayPhysics classes were used, and a range cut (specified in mm) was set. A small animal (or an irradiated body part of a human patient with comparable dimensions) was simulated with a cylinder of radius 30 mm and height 100 mm, declared with the standard soft-tissue material G4_TISSUE_SOFT_ICRP. A single 3D CZT sensor module is composed of four 3D CZT drift strip detectors described in [37], each of which is ideally assumed to be a CZT parallelepiped with a 20 mm side in the simulation, stacked along the y direction (see Figure 6a). Besides the configuration with a single sensor module with its plane parallel to the cylinder axis, placed at a distance of 60 mm from the cylinder center and equidistant from the cylinder bases, illustrated in Figure 6a, other configurations with different numbers of modules in different positions were considered.

Figure 6.

(a) Single-module geometry, (b) four-module geometry, and (c) four-module geometry YZ view.

A good configuration in terms of source reconstruction quality and cost was found to be the four-module configuration in Figure 6b,c.

In the four-module configuration, two modules are obtained by rotating the original single module of the previous configuration about the cylinder axis, and the other two modules are obtained by translating the original module along the cylinder axis, so as to obtain a larger effective module given by the union of the two. An idealized case has been considered with respect to the real BNCT energy spectrum [38]: all gamma rays are generated with the same energy of 478 keV, that is, the energy of the gamma rays emitted in the boron neutron capture reaction. The gamma emission is set to be isotropic.

Since different tumor region geometries are needed for the training phase of deep learning algorithms, 20 different tumor region geometries were created. For 17 of these, four concentration ratios were considered: 3:1, 4:1, 5:1, and the limiting case of zero boron concentration in normal tissue. For the other three, only a single concentration ratio was considered, for a total of 17 × 4 + 3 = 71 different gamma source distributions. Moreover, for each of these, 4 different rototranslations of the tumor region were created, obtaining 71 × (1 + 4) = 355 different gamma source distributions.

In order to select the events of interest, a filter was applied to consider only events with total energy deposition in the range of 0.470–0.485 MeV and with interaction points in the detector crystals. The simulation returns for each event the gamma source position, Compton scattering interaction position, photoabsorption interaction position, Compton recoil electron deposited energy, and the photoabsorption deposited energy.
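The energy-window selection can be sketched as follows. This is a hedged illustration, not the analysis code of the study: the `ComptonEvent` record and its field names are hypothetical, only the 0.470–0.485 MeV window is taken from the text, and the geometric requirement that both interaction points lie in the detector crystals is assumed to have been applied upstream.

```python
from dataclasses import dataclass


@dataclass
class ComptonEvent:
    """Hypothetical per-event record mirroring the quantities returned by the simulation."""
    scatter_pos: tuple     # Compton scattering interaction position (mm)
    absorb_pos: tuple      # photoabsorption interaction position (mm)
    e_scatter_mev: float   # energy deposited by the Compton recoil electron
    e_absorb_mev: float    # energy deposited in the photoabsorption


def in_energy_window(event, e_min_mev=0.470, e_max_mev=0.485):
    """True if the total deposited energy is compatible with a fully absorbed 478 keV photon."""
    total = event.e_scatter_mev + event.e_absorb_mev
    return e_min_mev <= total <= e_max_mev


def select_events(events):
    """Keep only the events whose total energy deposition falls inside the window."""
    return [ev for ev in events if in_energy_window(ev)]


events = [
    ComptonEvent((0.0, 0.0, 60.0), (5.0, 2.0, 62.0), 0.100, 0.378),  # fully absorbed
    ComptonEvent((1.0, 0.0, 60.0), (4.0, 1.0, 63.0), 0.100, 0.200),  # photon escaped
]
selected = select_events(events)
```

Rejecting events outside the window removes photons that escaped after partial energy deposition, for which the sum of the two deposited energies no longer equals the incident energy and the cone half-angle would be wrong.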

For each possible gamma source distribution, 300 million events were generated. About 1 million events were detected by the apparatus in each run.

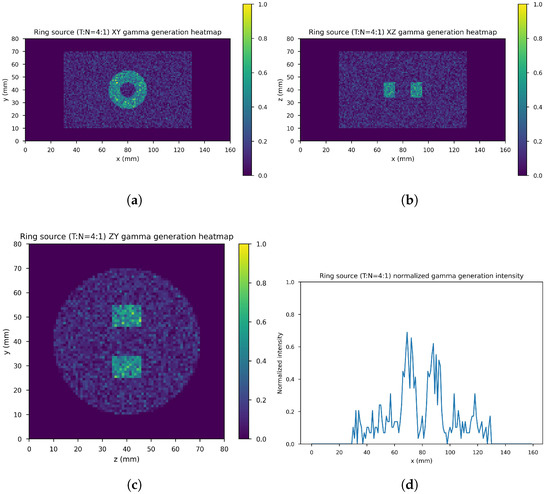

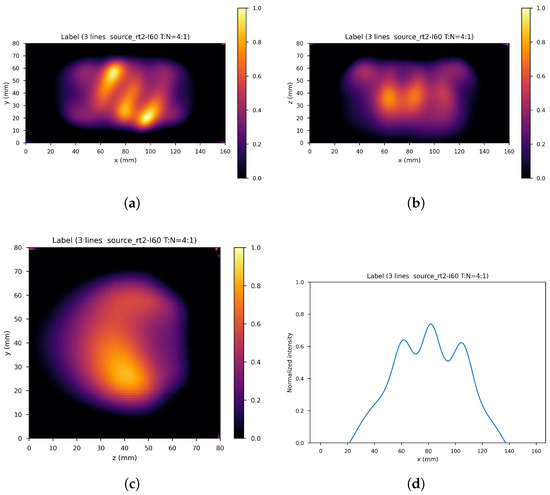

Figure 7 shows the ring gamma source distribution with a concentration ratio of 4:1 in the parallelepipedal region considered (160 mm along its first dimension). Figure 7a is the XY gamma generation heatmap at a fixed value of z, Figure 7b is the XZ gamma generation heatmap at a fixed value of y, Figure 7c is the YZ gamma generation heatmap at a fixed value of x, and Figure 7d is the normalized intensity as a function of x along a fixed line.

Figure 7.

Ring source, 4:1. (a) XY gamma generation heatmap, (b) XZ gamma generation heatmap, (c) YZ gamma generation heatmap, and (d) normalized intensity as a function of x.

3.2. U-Nets: Dataset, Network Architectures, Training, and Evaluation

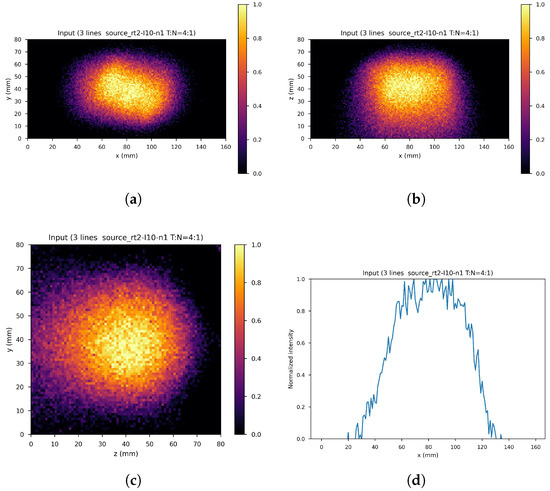

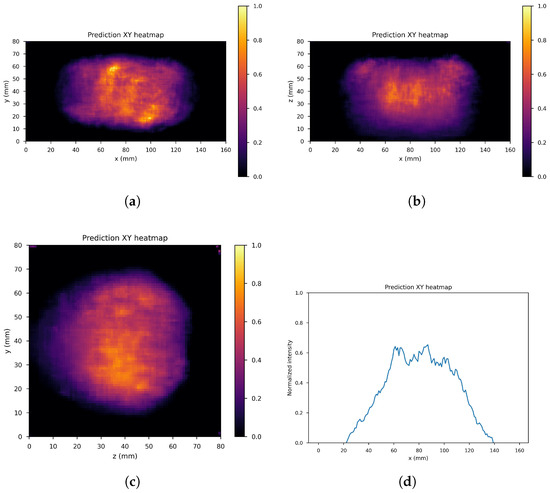

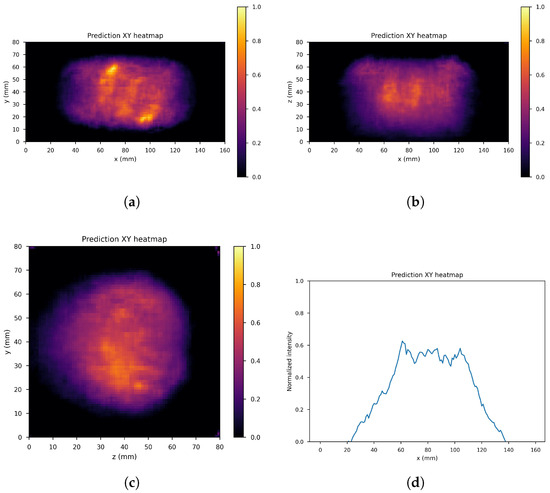

The dataset consists of the output of the 10th iteration of the list-mode MLEM algorithm (input images) and the output of the 60th iteration (label images) for the 71 simulated gamma source distributions and for the data-augmentation distributions obtained by applying four different rototranslations, for a total of 355 samples with the corresponding labels. In order to further increase the size of the dataset, 41 noisy images were created from each sample image by adding one of four different levels of Gaussian white noise, with standard deviations defined relative to the difference $\Delta$ between the maximum and minimum values of the input image, leaving the corresponding label unchanged. This results in a global dataset of 355 × 42 = 14,910 input images with corresponding labels. These were distributed in a proportion of roughly 70:10:20 among the training set (11,130 images), validation set (1260 images), and test set (2520 images), maintaining class balance among the sets and assigning different rototranslations to different sets, so that each set contains almost entirely new gamma source distributions with respect to the others. Figure 8 shows an example of an input image, with noise level 1 and rototranslation 2. The corresponding label is displayed in Figure 9.
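The dataset bookkeeping and the noise augmentation can be sketched as below. The counts come from the text, while the function names, the flat-list image layout, and in particular the relative noise level `rel_sigma=0.02` are hypothetical placeholders used only to make the example run.

```python
import random


def dataset_counts(n_distributions=71, n_rototranslations=4, n_noisy_per_sample=41):
    """Return (number of samples, number of input images) for the augmentation scheme."""
    samples = n_distributions * (1 + n_rototranslations)  # originals + rototranslated copies
    inputs = samples * (1 + n_noisy_per_sample)           # clean image + noisy copies
    return samples, inputs


def add_white_noise(image, rel_sigma, rng):
    """Add Gaussian white noise with standard deviation rel_sigma * (max - min).

    image: flat list of voxel values; rel_sigma: hypothetical fraction of the dynamic range.
    """
    delta = max(image) - min(image)
    return [v + rng.gauss(0.0, rel_sigma * delta) for v in image]


n_samples, n_inputs = dataset_counts()
rng = random.Random(0)  # fixed seed so the example is repeatable
clean = [0.0, 0.2, 1.0, 0.4]
noisy = add_white_noise(clean, rel_sigma=0.02, rng=rng)  # 0.02 is an arbitrary choice
unchanged = add_white_noise(clean, rel_sigma=0.0, rng=rng)
```

Scaling the noise by the dynamic range of each image keeps the relative perturbation comparable across samples with different intensities, while the label is left untouched so the network learns to map every noisy variant to the same target.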

Figure 8.

An example of network input. (a) XY gamma generation heatmap, (b) XZ gamma generation heatmap, (c) YZ gamma generation heatmap, and (d) normalized intensity as a function of x.

Figure 9.

Corresponding label. (a) XY gamma generation heatmap, (b) XZ gamma generation heatmap, (c) YZ gamma generation heatmap, and (d) normalized intensity as a function of x.

The 3D standard U-Net, 3D dual-frame U-Net, and 3D tight-frame U-Net are described in Section 2 and represented in Figure 4 and Figure 5 (they are 4D representations, where the plane perpendicular to the page corresponds to three-dimensional space). All the U-Nets include convolutional layers with filters and rectified linear units (ReLU). The first two networks employ average pooling and unpooling layers. The tight-frame U-Net uses Haar wavelet decomposition with eight filters, the corresponding unpooling operation with eight synthesis filters [34,35], and the weighted addition of nine input tensors with learnable weights. All networks include skip connections. Every input image and label is normalized to a fixed interval.

The networks were trained using the Adam algorithm [39] with a fixed learning rate. The loss function was the normalized mean square error (NMSE), defined below. The batch size was set equal to 1 owing to the large size of the three-dimensional images. Data were lazy-loaded into main memory and then asynchronously transferred to the GPU. The networks were implemented using PyTorch (an optimized tensor library for deep learning using GPUs and CPUs, based on an automatic differentiation system [40]). An A100 PCIe 40 GB GPU with Ampere architecture and eight AMD EPYC 7742 64-Core CPUs (2.25 GHz) were used. All three networks require about 7–8 days for training. The number of training epochs (with epochs numbered from 0) and the best epoch with its training and validation average NMSEs are reported in Table 1. The best epoch model was used for validation.

Table 1.

Number of training epochs and best epoch with its training and validation NMSEs for each network.

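For quantitative evaluation, three different metrics were used. The first is the normalized mean square error (NMSE), defined as

$$\mathrm{NMSE} = \frac{\sum_{i=1}^{M}\sum_{j=1}^{N}\sum_{k=1}^{O} \left[\hat{f}(i,j,k) - f^*(i,j,k)\right]^2}{\sum_{i=1}^{M}\sum_{j=1}^{N}\sum_{k=1}^{O} \left[f^*(i,j,k)\right]^2}$$

where M, N, and O are the numbers of pixels in the $x$, $y$, and $z$ directions, and $\hat{f}$ and $f^*$ denote the reconstructed images and labels, respectively. The second is the peak signal-to-noise ratio (PSNR), defined by

$$\mathrm{PSNR} = 10 \log_{10}\!\left(\frac{\mathrm{MAX}_{f^*}^2}{\mathrm{MSE}}\right)$$

where MSE denotes the mean square error $\frac{1}{MNO}\sum_{i,j,k} \left[\hat{f}(i,j,k) - f^*(i,j,k)\right]^2$, with $\mathrm{MAX}_{f^*}$ the maximum value of the label image. The third is the structural similarity index measure (SSIM) [41], defined as

$$\mathrm{SSIM} = \frac{\left(2\mu_{\hat{f}}\,\mu_{f^*} + c_1\right)\left(2\sigma_{\hat{f} f^*} + c_2\right)}{\left(\mu_{\hat{f}}^2 + \mu_{f^*}^2 + c_1\right)\left(\sigma_{\hat{f}}^2 + \sigma_{f^*}^2 + c_2\right)}$$

where $\mu_{f}$ is the average of $f$, $\sigma_{f}^2$ is the variance of $f$, $\sigma_{\hat{f} f^*}$ is the covariance of $\hat{f}$ and $f^*$, and $c_1$ and $c_2$ are small constants that stabilize the division. While the first two metrics quantify the difference in the values of the corresponding pixels of the reference and reconstructed images, the structural similarity index quantifies the similarity based on luminance, contrast, and structural information, similarly to the human visual perception system. Low values of NMSE and high values of PSNR indicate similarity between images. The structural similarity index takes values in $[-1, 1]$, where values close to one indicate similarity, zero indicates no similarity, and values close to $-1$ indicate anti-correlation.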
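The three metrics can be sketched on flattened images as follows. This is a simplified illustration under stated assumptions: images are plain Python lists, SSIM is computed once over the whole image rather than over local windows as is usual in practice, and the constants $c_1 = (k_1 L)^2$ and $c_2 = (k_2 L)^2$ use the conventional defaults $k_1 = 0.01$ and $k_2 = 0.03$ with dynamic range $L$, which may differ from the settings used in the study.

```python
import math


def nmse(pred, label):
    """Normalized mean square error between a reconstruction and its label."""
    return sum((p - l) ** 2 for p, l in zip(pred, label)) / sum(l ** 2 for l in label)


def psnr(pred, label):
    """Peak signal-to-noise ratio in dB, using the label maximum as the peak value."""
    mse = sum((p - l) ** 2 for p, l in zip(pred, label)) / len(label)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max(label) ** 2 / mse)


def ssim_global(pred, label, dynamic_range=1.0, k1=0.01, k2=0.03):
    """Single-window (global) SSIM; a simplification of the usual windowed version."""
    n = len(label)
    mu_p, mu_l = sum(pred) / n, sum(label) / n
    var_p = sum((p - mu_p) ** 2 for p in pred) / n
    var_l = sum((l - mu_l) ** 2 for l in label) / n
    cov = sum((p - mu_p) * (l - mu_l) for p, l in zip(pred, label)) / n
    c1, c2 = (k1 * dynamic_range) ** 2, (k2 * dynamic_range) ** 2
    return ((2 * mu_p * mu_l + c1) * (2 * cov + c2)) / (
        (mu_p ** 2 + mu_l ** 2 + c1) * (var_p + var_l + c2)
    )


label = [0.0, 0.5, 1.0, 0.5]
pred = [0.1, 0.5, 1.0, 0.5]          # one voxel off by 0.1
inverted = [1.0 - v for v in label]  # anti-correlated with the label
scores = (nmse(pred, label), psnr(pred, label), ssim_global(pred, label))
```

An identical image gives NMSE 0, infinite PSNR, and SSIM 1, while the inverted image yields a negative global SSIM, matching the interpretation of the value ranges given above.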

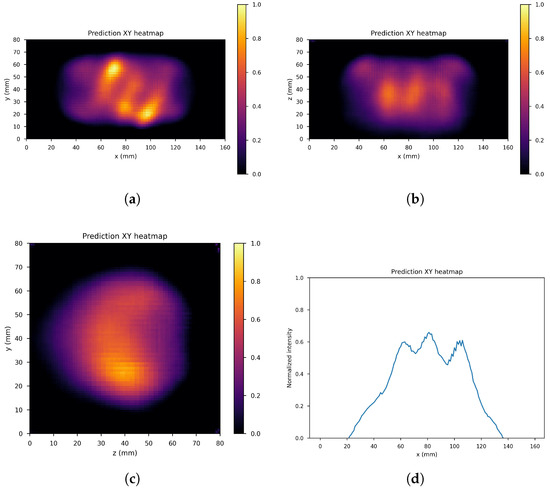

4. Results

Figure 10, Figure 11 and Figure 12 show the predicted reconstructions of the three models given the input in Figure 8. It can be observed that while the standard U-Net and the dual-frame U-Net are more affected by degradations, the tight-frame U-Net produces a prediction very similar to the label image in Figure 9, even if only two levels are considered in the architecture (Figure 5). These observations are confirmed by the similarity metrics reported in Table 2.

Figure 10.

U-Net predictions: (a) XY gamma generation heatmap, (b) XZ gamma generation heatmap, (c) YZ gamma generation heatmap, and (d) normalized intensity as a function of x.

Figure 11.

Dual-frame U-Net predictions: (a) XY gamma generation heatmap, (b) XZ gamma generation heatmap, (c) YZ gamma generation heatmap, and (d) normalized intensity as a function of x.

Figure 12.

Tight-frame U-Net predictions: (a) XY gamma generation heatmap, (b) XZ gamma generation heatmap, (c) YZ gamma generation heatmap, and (d) normalized intensity as a function of x.

Table 2.

Average NMSE, PSNR, and SSIM on the test set for the trained U-Net, dual-frame U-Net, and tight-frame U-Net modules.

The performance of the standard U-Net and the dual-frame U-Net is essentially comparable, with the latter performing slightly better in terms of NMSE and PSNR but slightly worse in terms of SSIM. The performance of the two networks and the presence of degradations can be explained by considering that the standard U-Net does not satisfy the frame condition, and the dual-frame U-Net, while satisfying the frame condition, tends to amplify noise. The tight-frame architecture showed a considerable improvement in performance considering all three metrics, and in particular in the SSIM, which quantifies structural information.

In terms of processing time, U-Net regression generally takes less than a second, so the overall reconstruction time is dominated by the time needed to obtain the input image, which is of the order of 4–6 min. Considering a BNCT treatment duration of 30–90 min, this is a significant improvement over classical iterative methods: processing time is reduced by a factor of about 6 with respect to the 24–36 min needed for 60 iterations of list-mode MLEM. This makes the approach a valid step towards real-time dose monitoring during BNCT treatment.
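The factor of about 6 can be checked with a short calculation from the timings quoted above. The sketch assumes that the endpoints of the two ranges correspond to each other (fastest with fastest, slowest with slowest) and takes one second as an upper bound for the U-Net inference time; both are assumptions made for the example.

```python
# Timings in minutes, taken from the text.
input_recon_min = (4.0, 6.0)    # few-iteration MLEM input image
full_mlem_min = (24.0, 36.0)    # 60 list-mode MLEM iterations
treatment_min = (30.0, 90.0)    # BNCT treatment duration
unet_inference_min = 1.0 / 60.0  # assumed upper bound: "less than a second"


def speedup(full, fast, inference):
    """Ratio of the full iterative time to the network-assisted time."""
    return full / (fast + inference)


low = speedup(full_mlem_min[0], input_recon_min[0], unet_inference_min)
high = speedup(full_mlem_min[1], input_recon_min[1], unet_inference_min)

# Fraction of the treatment spent on one reconstruction (best and worst case).
best_fraction = input_recon_min[0] / treatment_min[1]
worst_fraction = input_recon_min[1] / treatment_min[0]

print(f"speed-up between {low:.2f} and {high:.2f}")
print(f"reconstruction takes {best_fraction:.0%} to {worst_fraction:.0%} of a treatment")
```

Under these assumptions the inference time is negligible, so the speed-up is set almost entirely by the ratio of the two MLEM reconstruction times.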

Notice that although the source geometries created in the simulation are not biologically realistic, the same procedure can be applied to distributions obtained in real medical practice. Moreover, transfer learning techniques [42] could be employed to take advantage of the training already performed with simulated datasets.

5. Conclusions

Boron neutron capture therapy (BNCT) represents a promising form of cancer therapy because of its high selectivity towards cancer tissue. However, at present, there are no viable imaging methods capable of monitoring the dose in vivo during treatment. Compared to other imaging techniques under investigation, Compton imaging offers various advantages, but the main difficulty in this type of approach is the complexity of Compton image reconstruction, which is associated with long reconstruction times, comparable with BNCT treatment duration. This calls for the development of new reconstruction techniques with lower computational cost.

In order to investigate the potentialities of Compton imaging with CZT detectors for BNCT, a Geant4 simulation of a simplified detector in a BNCT setting has been implemented, considering several tumor region geometries in order to produce a large enough dataset for the training phase of deep neural network algorithms.

In order to reduce reconstruction time, the U-Net architecture and two variants based on the deep convolutional framelets framework, the dual-frame U-Net and the tight-frame U-Net, were applied to reduce degradation in few-iteration reconstructed images. Encouraging results were obtained both in terms of visual inspection and in terms of the three metrics used to evaluate the similarity with the reference images (NMSE, PSNR and SSIM), especially with the use of tight-frame U-Nets. The lower performance of the standard U-Net architecture and of the dual-frame variant was attributed to the fact that the former does not satisfy the frame condition, while the latter tends to amplify noise. The processing time was reduced on average by a factor of about 6 with respect to classical iterative algorithms, with most of it amounting to the starting image reconstruction time of about 4–6 min. This can be considered a good reconstruction time performance, considering typical BNCT treatment times.

In principle, it would be possible to further improve reconstruction accuracy and reduce processing time by improving quality and time performance in the reconstruction of the input image provided to the U-Net, for example, by employing unrolled optimization algorithms.

Author Contributions

Conceptualization, A.D., D.R.L. and G.M.I.P.; methodology, A.D. and D.R.L.; software, A.D. and D.R.L.; validation, A.D.; formal analysis, A.D.; investigation, A.D., D.R.L., G.I., N.A., N.F. and G.M.I.P.; resources, D.R.L.; data curation, A.D.; writing—original draft preparation, A.D.; writing—review and editing, A.D., D.R.L. and G.M.I.P.; visualization, A.D.; supervision, A.D., D.R.L. and G.M.I.P.; project administration, A.D., D.R.L. and G.M.I.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data can be shared upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. IAEA. Current Status of Neutron Capture Therapy; International Atomic Energy Agency: Vienna, Austria, 2001.

2. Obertelli, A.; Sagawa, H. (Eds.) Modern Nuclear Physics: From Fundamentals to Frontiers; Springer: Berlin/Heidelberg, Germany, 2021.

3. Isao, T.; Hiroshi, T.; Toshitaka, K. (Eds.) Handbook of Nuclear Physics; Springer: Berlin/Heidelberg, Germany, 2023.

4. Podgorsak, E.B. (Ed.) Radiation Physics for Medical Physicists; Springer: Berlin/Heidelberg, Germany, 2016.

5. Sauerwein, W.; Wittig, A.; Moss, R.; Nakagawa, Y. (Eds.) Neutron Capture Therapy: Principles and Applications; Springer: Berlin/Heidelberg, Germany, 2015.

6. IAEA. Advances in Boron Neutron Capture Therapy; International Atomic Energy Agency: Vienna, Austria, 2023.

7. Nillius, P.; Danielsson, M. Theoretical bounds and optimal configurations for multi-pinhole spect. In Proceedings of the 2008 IEEE Nuclear Science Symposium Conference Record, Dresden, Germany, 19–25 October 2008; IEEE: Piscataway, NJ, USA, 2008.

8. Bertero, M.; Boccacci, P.; Mol, C.D. Introduction to Inverse Problems in Imaging, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2021.

9. Chen, G.; Wei, Y.; Xue, Y. The generalized condition numbers of bounded linear operators in banach spaces. J. Aust. Math. Soc. 2004, 76, 281–290.

10. van Neerven, J. Functional Analysis; corrected edition; Cambridge University Press: Cambridge, UK, 2024.

11. Wernick, M.N.; Aarsvold, J.N. (Eds.) Emission Tomography: The Fundamentals of PET and SPECT; Elsevier Academic Press: Amsterdam, The Netherlands, 2004.

12. Jain, A.K. Fundamentals of Digital Image Processing; Pearson: London, UK, 1988.

13. Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Pearson: London, UK, 2017.

14. Kobayashi, H.; Mark, B.L.; Turin, W. Probability, Random Processes, and Statistical Analysis: Applications to Communications, Signal Processing, Queueing Theory and Mathematical Finance; Cambridge University Press: Cambridge, UK, 2012.

15. Lozano, I.V.; Dedes, G.; Peterson, S.; Mackin, D.; Zoglauer, A.; Beddar, S.; Avery, S.; Polf, J.; Parodi, K. Comparison of reconstructed prompt gamma emissions using maximum likelihood estimation and origin ensemble algorithms for a compton camera system tailored to proton range monitoring. Z. Für Med. Phys. 2023, 33, 124–134.

16. Maxim, V.; Lojacono, X.; Hilaire, E.; Krimmer, J.; Testa, E.; Dauvergne, D.; Magnin, I.; Prost, R. Probabilistic models and numerical calculation of system matrix and sensitivity in list-mode mlem 3d reconstruction of compton camera images. Phys. Med. Biol. 2015, 61, 243.

17. Wilderman, S.J.; Clinthorne, N.H.; Fessler, J.A.; Rogers, W.L. List-mode maximum likelihood reconstruction of compton scatter camera images in nuclear medicine. In Proceedings of the 1998 IEEE Nuclear Science Symposium Conference Record. 1998 IEEE Nuclear Science Symposium and Medical Imaging Conference (Cat. No.98CH36255), Toronto, ON, Canada, 8–14 November 1998; IEEE: Piscataway, NJ, USA, 1998; Volume 3, pp. 1716–1720.

18. Parra, L.C. Reconstruction of cone-beam projections from compton scattered data. IEEE Trans. Nucl. Sci. 2000, 47, 1543–1550.

19. Han, Y.; Ye, J.C. Framing u-net via deep convolutional framelets: Application to sparse-view ct. IEEE Trans. Med Imaging 2018, 37, 1418–1429.

20. Kang, E.; Chang, W.; Jaejun, Y.; Ye, J.C. Deep Convolutional Framelet Denosing for Low-Dose CT via Wavelet Residual Network. IEEE Trans. Med Imaging 2018, 37, 1358–1369.

21. Sherwani, M.K.; Gopalakrishnan, S. A systematic literature review: Deep learning techniques for synthetic medical image generation and their applications in radiotherapy. Front. Radiol. 2024, 4, 1385742.

22. Kim, S.M.; Lee, J.S. A comprehensive review on Compton camera image reconstruction: From principles to AI innovations. Biomed. Eng. Lett. 2024, 14, 1175–1193.

23. Daniel, G.; Gutierrez, Y.; Limousin, O. Application of a deep learning algorithm to Compton imaging of radioactive point sources with a single planar CdTe pixelated detector. Nucl. Eng. Technol. 2022, 54, 1747–1753.

24. Yao, Z.; Shi, C.; Tian, F.; Xiao, Y.; Geng, C.; Tang, X. Technical note: Rapid and high-resolution deep learning–based radiopharmaceutical imaging with 3D-CZT Compton camera and sparse projection data. Med. Phys. 2022, 49, 7336–7346.

25. Hou, Z.; Geng, C.; Shi, X.T.C.; Tian, F.; Zhao, S.; Qi, J.; Shu, D.; Gong, C. Boron concentration prediction from Compton camera image for boron neutron capture therapy based on generative adversarial network. Appl. Radiat. Isot. 2022, 186, 110302.

26. Yedder, H.B.; Cardoen, B.; Hamarneh, G. Deep learning for biomedical image reconstruction: A survey. Artif. Intell. Rev. 2020, 54, 215–251.

27. Ongie, G.; Jalal, A.; Metzler, C.A.; Baraniuk, R.G.; Dimakis, A.G.; Willett, R. Deep learning techniques for inverse problems in imaging. IEEE J. Sel. Areas Inf. Theory 2020, 1, 39–56.

28. Ye, J.C.; Eldar, Y.C.; Unser, M. (Eds.) Deep Learning for Biomedical Image Reconstruction; Cambridge University Press: Cambridge, UK, 2023.

29. Ye, J.C.; Han, Y.; Cha, E. Deep convolutional framelets: A general deep learning framework for inverse problems. SIAM J. Imaging Sci. 2018, 11, 991–1048.

30. Casazza, P.G.; Kutyniok, G. (Eds.) Finite Frames: Theory and Applications; Birkhäuser: Boston, MA, USA, 2013.

31. Grohs, P.; Kutyniok, G. (Eds.) Mathematical Aspects of Deep Learning; Cambridge University Press: Cambridge, UK, 2022.

32. Ye, J.C. Geometry of Deep Learning: A Signal Processing Perspective; Springer: Berlin/Heidelberg, Germany, 2023.

33. Jin, K.; Mccann, M.; Froustey, E.; Unser, M. Deep convolutional neural network for inverse problems in imaging. IEEE Trans. Image Process. 2017, 26, 4509–4522.

34. Mallat, S. A Wavelet Tour of Signal Processing: The Sparse Way, 3rd ed.; Elsevier Academic Press: Amsterdam, The Netherlands, 2009.

35. Damelin, S.B.; Miller, W., Jr. The Mathematics of Signal Processing; Cambridge University Press: Cambridge, UK, 2012.

36. Tashima, H.; Yamaya, T. Compton imaging for medical applications. Radiol. Phys. Technol. 2022, 15, 187–205.

37. Abbene, L.; Gerardi, G.; Principato, F.; Buttacavoli, A.; Altieri, S.; Protti, N.; Tomarchio, E.; Sordo, S.D.; Auricchio, N.; Bettelli, M.; et al. Recent advances in the development of high-resolution 3D cadmium–zinc–telluride drift strip detectors. J. Synchrotron. Radiat. 2020, 27, 1564–1576.

38. Abbene, L.; Principato, F.; Buttacavoli, A.; Gerardi, G.; Bettelli, M.; Zappettini, A.; Altieri, S.; Auricchio, N.; Caroli, E.; Zanettini, S.; et al. Potentialities of high-resolution 3-d czt drift strip detectors for prompt gamma-ray measurements in bnct. Sensors 2022, 22, 1502.

39. Sayed, A.H. Inference and Learning From Data, Vol.I-III; Cambridge University Press: Cambridge, UK, 2022.

40. Baydin, A.; Pearlmutter, B.; Radul, A.A.; Siskind, J. Automatic differentiation in machine learning: A survey. J. Mach. Learn. Res. 2018, 18, 1–43.

41. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

42. Murphy, K.P. Probabilistic Machine Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).