The Application of Large Language Models in Gastroenterology: A Review of the Literature

Abstract

Simple Summary

1. Introduction

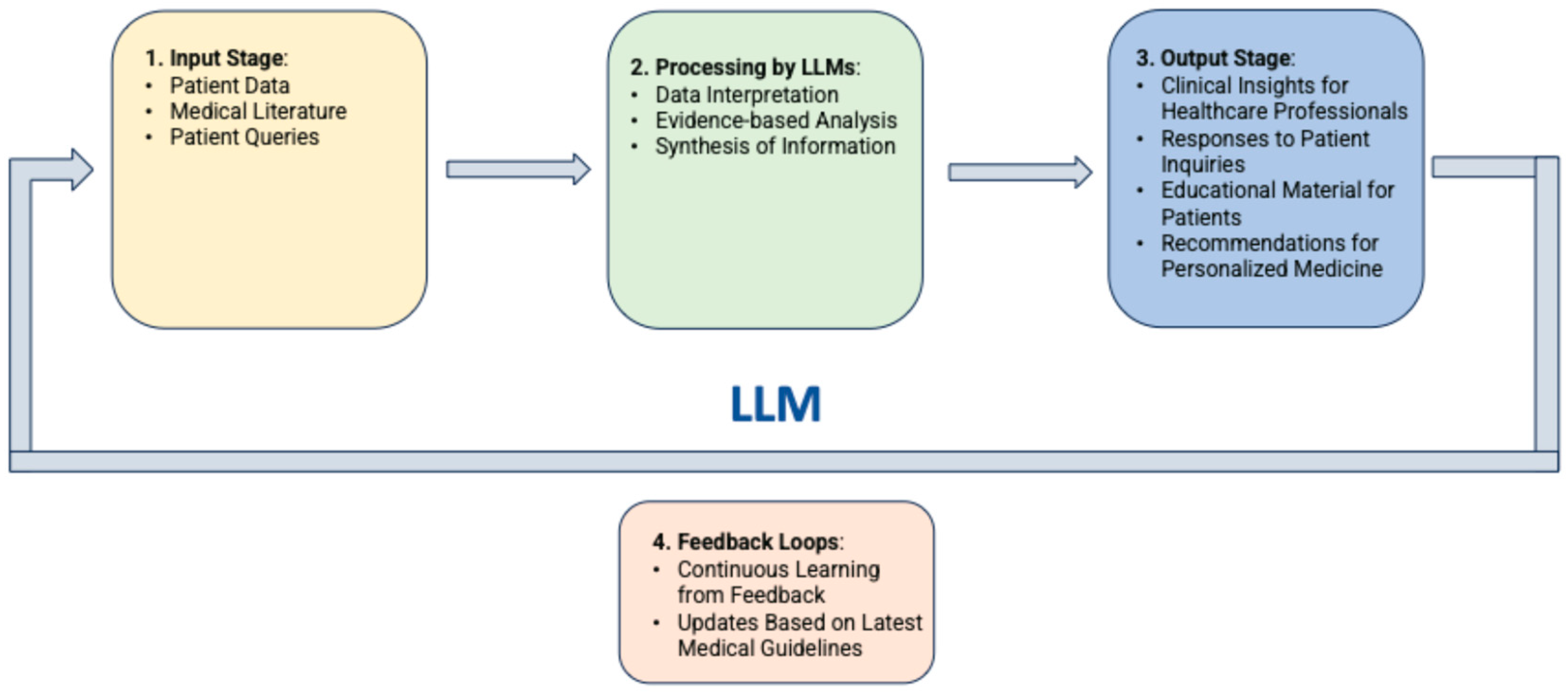

2. Definition and Key Characteristics of LLMs

2.1. Fundamental Concepts

- Scalability: LLMs can scale up to billions of parameters, improving performance with increased size [4];

- Pre-training and Fine-tuning: LLMs are trained on extensive text datasets and can be further fine-tuned for particular tasks or fields to enhance their effectiveness [5];

- Transfer Learning: Knowledge acquired during pre-training can be transferred to new tasks with little additional training data, hence increasing efficiency and adaptability [6].
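The scalability point can be made concrete with the empirical power law reported by Kaplan et al. [4]. When data and compute are not limiting, test loss falls as a power of the number of non-embedding parameters N: L(N) = (N_c / N)^alpha_N. The short Python sketch below evaluates that law. The constants (alpha_N ≈ 0.076, N_c ≈ 8.8 × 10^13) are the approximate fitted values from that paper and are used here for illustration only. The function name `predicted_loss` is hypothetical.

```python
# Illustrative sketch of the parameter-count scaling law of Kaplan et al. [4]:
#   L(N) = (N_c / N) ** alpha_N
# ASSUMPTION: constants are the approximate fitted values reported in that paper;
# they describe the original training setup, not medical LLMs specifically.

ALPHA_N = 0.076   # fitted exponent (approximate)
N_C = 8.8e13      # fitted scale constant, in non-embedding parameters (approximate)


def predicted_loss(n_params: float) -> float:
    """Predicted test cross-entropy loss (nats/token) for n_params non-embedding parameters."""
    if n_params <= 0:
        raise ValueError("n_params must be positive")
    return (N_C / n_params) ** ALPHA_N


if __name__ == "__main__":
    # Loss keeps falling as models grow, but each tenfold step buys a similar relative gain.
    for n in (1e8, 1e9, 1e10, 1e11):
        print(f"{n:.0e} parameters -> predicted loss {predicted_loss(n):.3f}")
```

Under this law, each tenfold increase in parameters multiplies the predicted loss by 10^(-0.076), roughly 0.84. Performance therefore keeps improving with scale, but the gains diminish relative to the parameters added.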

2.2. Historical Development and Advancements

2.3. Commonly Used LLMs in Medical and Non-Medical Settings

- MedPaLM—Developed by Google and specifically designed for medical applications.

- ClinicalBERT—An adaptation of BERT fine-tuned for clinical and biomedical texts.

- BioGPT—Microsoft’s specialized language model for the biomedical domain, designed to process and understand medical literature.

- GatorTron—Developed by the University of Florida and NVIDIA, this model is tailored for processing clinical data.

- GPT 3.5—An earlier version of OpenAI’s language models, known for its performance in natural language tasks and applications.

- GPT 4—Developed by OpenAI, this is one of the latest models in the GPT family, known for its versatility and broad applicability.

- Claude—Created by Anthropic, it is designed to be helpful, harmless, and honest for communication and interaction tasks.

- LLaMA (Large Language Model Meta AI)—Created by Meta (formerly Facebook), LLaMA focuses on efficiency and effectiveness across various general-purpose tasks.

- PaLM (Pathways Language Model)—Google’s large model designed for diverse NLP tasks, showcasing advanced capabilities in language understanding and generation.

3. Application of LLMs in Gastroenterology

3.1. Ability of LLMs to Answer Patients’ Questions

3.1.1. General Gastroenterology

3.1.2. Colonoscopy and Colorectal Cancer

3.1.3. Pancreatic Diseases

3.1.4. Liver Diseases

3.1.5. Inflammatory Bowel Diseases

3.1.6. Helicobacter pylori

4. Efficacy of LLMs for Clinical Guidance

4.1. Questions from Clinicians

4.2. Colonoscopy Schedule

4.3. Inflammatory Bowel Disease—Decision-Making

5. Other Applications of LLMs in Gastroenterology

5.1. Research Questions

5.2. Scientific Writing

5.3. Medical Education

6. Current Challenges and Future Perspectives

7. Conclusions

- Large language models (LLMs) are emerging as powerful tools in the field of medicine, including Gastroenterology.

- Several studies have evaluated the accuracy of ChatGPT in answering patients’ questions about specific areas of Gastroenterology and have generally reported good performance.

- Some studies have also assessed the role of ChatGPT in assisting physicians with clinical decision-making, with promising results.

- Other areas of interest, currently under investigation, include the use of LLMs in research, scientific writing, and medical education in Gastroenterology.

- Current concerns about the use of LLMs include their reliability, reproducibility of outputs, data protection, ethical issues, and the need for regulatory systems.

- To date, there are no guidelines on the application of LLMs in Gastroenterology, and their routine use is not recommended outside research settings.

Author Contributions

Funding

Conflicts of Interest

Acronyms and Abbreviations

| Term | Abbreviation |
|---|---|
| Large language models | LLMs |
| Chat Generative Pretrained Transformer (OpenAI) | ChatGPT |
| Artificial intelligence | AI |
| Natural language processing | NLP |
| Bidirectional Encoder Representations from Transformers | BERT |
| Colorectal cancer | CRC |
| Inflammatory bowel disease | IBD |
| Irritable bowel syndrome | IBS |
| Nonalcoholic fatty liver disease | NAFLD |
| Metabolic dysfunction-associated steatotic liver disease | MASLD |
| Hepatocellular carcinoma | HCC |
| Liver transplantation | LT |
| Crohn’s disease | CD |
| Ulcerative colitis | UC |
| Patient educational materials | PEMs |
| Acute pancreatitis | AP |
| Gastroesophageal reflux disease | GERD |
| US Multi-Society Task Force | USMSTF |
| Hepatitis C virus | HCV |
| Emergency department | ED |

References

1. OpenAI. ChatGPT (Mar 14 Version). 2023. Available online: https://chat.openai.com (accessed on 5 February 2024).

2. Vaswani, A.; Shazeer, N.M.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017; pp. 5998–6008.

3. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. arXiv 2020, arXiv:2005.14165.

4. Kaplan, J.; McCandlish, S.; Henighan, T.; Brown, T.B.; Chess, B.; Child, R.; Gray, S.; Radford, A.; Wu, J.; Amodei, D. Scaling laws for neural language models. arXiv 2020, arXiv:2001.08361.

5. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

6. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

7. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

8. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

9. Lahat, A.; Shachar, E.; Avidan, B.; Glicksberg, B.; Klang, E. Evaluating the Utility of a Large Language Model in Answering Common Patients’ Gastrointestinal Health-Related Questions: Are We There Yet? Diagnostics 2023, 13, 1950.

10. Kerbage, A.; Kassab, J.; El Dahdah, J.; Burke, C.A.; Achkar, J.-P.; Rouphael, C. Accuracy of ChatGPT in Common Gastrointestinal Diseases: Impact for Patients and Providers. Clin. Gastroenterol. Hepatol. 2024, 22, 1323–1325.e3.

11. Lee, T.-C.; Staller, K.; Botoman, V.; Pathipati, M.P.; Varma, S.; Kuo, B. ChatGPT Answers Common Patient Questions about Colonoscopy. Gastroenterology 2023, 165, 509–511.e7.

12. Tariq, R.; Malik, S.; Khanna, S. Evolving Landscape of Large Language Models: An Evaluation of ChatGPT and Bard in Answering Patient Queries on Colonoscopy. Gastroenterology 2024, 166, 220–221.

13. Emile, S.H.; Horesh, N.; Freund, M.; Pellino, G.; Oliveira, L.; Wignakumar, A.; Wexner, S.D. How appropriate are answers of online chat-based artificial intelligence (ChatGPT) to common questions on colon cancer? Surgery 2023, 174, 1273–1275.

14. Maida, M.; Ramai, D.; Mori, Y.; Dinis-Ribeiro, M.; Facciorusso, A.; Hassan, C. The role of generative language systems in increasing patient awareness of colon cancer screening. Endoscopy 2024.

15. Atarere, J.; Naqvi, H.; Haas, C.; Adewunmi, C.; Bandaru, S.; Allamneni, R.; Ugonabo, O.; Egbo, O.; Umoren, M.; Kanth, P. Applicability of Online Chat-Based Artificial Intelligence Models to Colorectal Cancer Screening. Dig. Dis. Sci. 2024, 69, 791–797.

16. Moazzam, Z.; Cloyd, J.; Lima, H.A.; Pawlik, T.M. Quality of ChatGPT Responses to Questions Related to Pancreatic Cancer and its Surgical Care. Ann. Surg. Oncol. 2023, 30, 6284–6286.

17. Pugliese, N.; Wong, V.W.-S.; Schattenberg, J.M.; Romero-Gomez, M.; Sebastiani, G.; NAFLD Expert Chatbot Working Group; Aghemo, A.; Castera, L.; Hassan, C.; Manousou, P.; et al. Accuracy, Reliability, and Comprehensibility of ChatGPT-Generated Medical Responses for Patients with Nonalcoholic Fatty Liver Disease. Clin. Gastroenterol. Hepatol. 2024, 22, 886–889.e5.

18. Yeo, Y.H.; Samaan, J.S.; Ng, W.H.; Ting, P.-S.; Trivedi, H.; Vipani, A.; Ayoub, W.; Yang, J.D.; Liran, O.; Spiegel, B.; et al. Assessing the performance of ChatGPT in answering questions regarding cirrhosis and hepatocellular carcinoma. Clin. Mol. Hepatol. 2023, 29, 721–732.

19. Cao, J.J.; Kwon, D.H.; Ghaziani, T.T.; Kwo, P.; Tse, G.; Kesselman, A.; Kamaya, A.; Tse, J.R. Accuracy of Information Provided by ChatGPT Regarding Liver Cancer Surveillance and Diagnosis. Am. J. Roentgenol. 2023, 221, 556–559.

20. Endo, Y.; Sasaki, K.; Moazzam, Z.; Lima, H.A.; Schenk, A.; Limkemann, A.; Washburn, K.; Pawlik, T.M. Quality of ChatGPT Responses to Questions Related to Liver Transplantation. J. Gastrointest. Surg. 2023, 27, 1716–1719.

21. Cankurtaran, R.E.; Polat, Y.H.; Aydemir, N.G.; Umay, E.; Yurekli, O.T. Reliability and Usefulness of ChatGPT for Inflammatory Bowel Diseases: An Analysis for Patients and Healthcare Professionals. Cureus 2023, 15, e46736.

22. Naqvi, H.A.; Delungahawatta, T.; Atarere, J.O.; Bandaru, S.K.; Barrow, J.B.; Mattar, M.C. Evaluation of online chat-based artificial intelligence responses about inflammatory bowel disease and diet. Eur. J. Gastroenterol. Hepatol. 2024, 36, 1109–1112.

23. Lai, Y.; Liao, F.; Zhao, J.; Zhu, C.; Hu, Y.; Li, Z. Exploring the capacities of ChatGPT: A comprehensive evaluation of its accuracy and repeatability in addressing Helicobacter pylori-related queries. Helicobacter 2024, 29, e13078.

24. Zeng, S.; Kong, Q.; Wu, X.; Ma, T.; Wang, L.; Xu, L.; Kou, G.; Zhang, M.; Yang, X.; Zuo, X.; et al. Artificial Intelligence-Generated Patient Education Materials for Helicobacter pylori Infection: A Comparative Analysis. Helicobacter 2024, 29, e13115.

25. Du, R.-C.; Liu, X.; Lai, Y.-K.; Hu, Y.-X.; Deng, H.; Zhou, H.-Q.; Lu, N.-H.; Zhu, Y.; Hu, Y. Exploring the performance of ChatGPT on acute pancreatitis-related questions. J. Transl. Med. 2024, 22, 527.

26. Klein, A.; Khamaysi, I.; Gorelik, Y.; Ghersin, I.; Arraf, T.; Ben-Ishay, O. Using a customized GPT to provide guideline-based recommendations for management of pancreatic cystic lesions. Endosc. Int. Open 2024, 12, E600–E603.

27. Henson, J.B.; Brown, J.R.G.; Lee, J.P.; Patel, A.; Leiman, D.A. Evaluation of the Potential Utility of an Artificial Intelligence Chatbot in Gastroesophageal Reflux Disease Management. Am. J. Gastroenterol. 2023, 118, 2276–2279.

28. Gorelik, Y.; Ghersin, I.; Maza, I.; Klein, A. Harnessing language models for streamlined postcolonoscopy patient management: A novel approach. Gastrointest. Endosc. 2023, 98, 639–641.e4.

29. Chang, P.W.; Amini, M.M.; Davis, R.O.; Nguyen, D.D.; Dodge, J.L.; Lee, H.; Sheibani, S.; Phan, J.; Buxbaum, J.L.; Sahakian, A.B. ChatGPT4 outperforms endoscopists for determination of post-colonoscopy re-screening and surveillance recommendations. Clin. Gastroenterol. Hepatol. 2024, 22, 1917–1925.e17.

30. Lim, D.Y.Z.; Bin Tan, Y.; Koh, J.T.E.; Tung, J.Y.M.; Sng, G.G.R.; Tan, D.M.Y.; Tan, C. ChatGPT on guidelines: Providing contextual knowledge to GPT allows it to provide advice on appropriate colonoscopy intervals. J. Gastroenterol. Hepatol. 2024, 39, 81–106.

31. Kresevic, S.; Giuffrè, M.; Ajcevic, M.; Accardo, A.; Crocè, L.S.; Shung, D.L. Optimization of hepatological clinical guidelines interpretation by large language models: A retrieval augmented generation-based framework. NPJ Digit. Med. 2024, 7, 102.

32. Levartovsky, A.; Ben-Horin, S.; Kopylov, U.; Klang, E.; Barash, Y. Towards AI-Augmented Clinical Decision-Making: An Examination of ChatGPT’s Utility in Acute Ulcerative Colitis Presentations. Am. J. Gastroenterol. 2023, 118, 2283–2289.

33. Lahat, A.; Shachar, E.; Avidan, B.; Shatz, Z.; Glicksberg, B.S.; Klang, E. Evaluating the use of large language model in identifying top research questions in gastroenterology. Sci. Rep. 2023, 13, 4164.

34. Van Noorden, R.; Perkel, J.M. AI and science: What 1,600 researchers think. Nature 2023, 621, 672–675.

35. Sharma, H.; Ruikar, M. Artificial intelligence at the pen’s edge: Exploring the ethical quagmires in using artificial intelligence models like ChatGPT for assisted writing in biomedical research. Perspect. Clin. Res. 2023, 15, 108–115.

36. Basgier, C.; Sharma, S. Should scientists delegate their writing to ChatGPT? Nature 2023, 624, 523.

37. Gravina, A.G.; Pellegrino, R.; Palladino, G.; Imperio, G.; Ventura, A.; Federico, A. Charting new AI education in gastroenterology: Cross-sectional evaluation of ChatGPT and perplexity AI in medical residency exam. Dig. Liver Dis. 2024, 56, 1304–1311.

38. Hutson, M. Forget ChatGPT: Why researchers now run small AIs on their laptops. Nature 2024, 633, 728–729.

| Author | Year | Tool | Setting | Objectives | Main Findings |
|---|---|---|---|---|---|
| Lahat [9] | 2023 | ChatGPT (Version not reported) | Patients’ common gastrointestinal health-related questions | To evaluate the performance of ChatGPT in answering patients’ questions, using a representative sample of 110 real-life questions. | For questions about treatments, the mean accuracy, clarity, and efficacy scores (1 to 5) were 3.9 ± 0.8, 3.9 ± 0.9, and 3.3 ± 0.9, respectively. For questions about symptoms, the mean accuracy, clarity, and efficacy scores were 3.4 ± 0.8, 3.7 ± 0.7, and 3.2 ± 0.7, respectively. For questions about diagnostic tests, the mean accuracy, clarity, and efficacy scores were 3.7 ± 1.7, 3.7 ± 1.8, and 3.5 ± 1.7, respectively. |
| Kerbage [10] | 2024 | ChatGPT 4.0 | Colonoscopy and CRC screening, IBS and IBD | To evaluate the accuracy of ChatGPT 4 in addressing frequently asked patient questions on irritable bowel syndrome (IBS), inflammatory bowel disease (IBD), and colonoscopy and colorectal cancer (CRC) screening. | Overall, 84% of the answers were generally accurate, with complete agreement among reviewers. In detail, 48% of the answers were completely accurate, 42% were partially inaccurate, and 10% were accurate but missing some information. Unsuitable references were found in 53% of answers related to IBS, 15% of answers related to IBD, and 27% of answers related to colonoscopy and CRC prevention. |
| Lee [11] | 2023 | ChatGPT 3.5 | Colonoscopy | To examine the quality of ChatGPT-generated answers to common questions about colonoscopy and to assess the text similarity among all answers. | The LLM answers were written at higher reading grade levels than hospital webpages, exceeding the eighth-grade threshold recommended for readability. The LLM answers were also found to be scientifically adequate and satisfactory overall. Moreover, ChatGPT responses had low text similarity (0–16%) with answers found on hospital websites. |
| Tariq [12] | 2024 | ChatGPT 3.5, ChatGPT 4.0, Bard | Colonoscopy | To compare the performance of 3 LLMs (ChatGPT 3.5, ChatGPT 4, and Bard) in answering common patient inquiries related to colonoscopy. | Overall, ChatGPT 4 showed the best performance, with 91.4% of its answers deemed fully accurate, 8.6% correct but partial, and none incorrect. In comparison, only 6.4% and 14.9% of the responses from ChatGPT 3.5 and Google’s Bard, respectively, were rated as entirely correct. No responses from ChatGPT 4 or ChatGPT 3.5 were considered unreliable, whereas two responses from Bard were. |
| Emile, S.H. [13] | 2023 | ChatGPT 3.5 | Colorectal cancer | To evaluate ChatGPT’s appropriateness in responding to common questions related to CRC. | Thirty-eight questions regarding CRC were posed to ChatGPT, and the three experts rated 78.9%, 81.6%, and 97.4% of the answers as appropriate, respectively. At least two of the three experts deemed the answers appropriate for 86.8% of the questions (95% confidence interval 71.9% to 95.6%). The inter-rater reliability was 79.8% (95% confidence interval 71.3% to 86.8%). Of the 20 questions related to the 2022 ASCRS practice parameters for colon cancer, 19 answers were in agreement, a concordance rate of 95%. |
| Maida, M. [14] | 2024 | ChatGPT 4.0 | Colorectal cancer screening | To evaluate ChatGPT’s appropriateness in responding to common questions related to CRC screening and its diagnostic and therapeutic implications. | Fifteen queries about CRC screening were posed to ChatGPT 4, and the answers were rated by 20 gastroenterology experts and 20 non-experts for accuracy, completeness, and comprehensibility. Additionally, 100 patients assessed the answers for completeness, comprehensibility, and trustworthiness. According to the expert ratings, the mean accuracy score was 4.8 ± 1.1 on a scale of 1 to 6. The mean completeness score was 2.1 ± 0.7, and the mean comprehensibility score was 2.8 ± 0.4 on a scale of 1 to 3. Compared with non-experts, experts gave significantly lower scores for accuracy (4.8 ± 1.1 vs. 5.6 ± 0.7, p < 0.001) and completeness (2.1 ± 0.7 vs. 2.7 ± 0.4, p < 0.001). Comprehensibility scores were similar between expert and non-expert groups (2.7 ± 0.4 vs. 2.8 ± 0.3, p = 0.546). Patients rated the responses as complete, comprehensible, and trustworthy in 97% to 100% of cases. |
| Atarere, J. [15] | 2024 | ChatGPT, YouChat, BingChat (Version not reported) | Colorectal cancer | To evaluate the appropriateness of ChatGPT, YouChat, and BingChat responses for educating the public about CRC screening. | ChatGPT and YouChat™ provided more reliably appropriate responses to questions on colorectal cancer screening than BingChat. For some questions, more than one AI model provided unreliable responses. The study also emphasized the need for more in-depth evaluation of AI models in patient–physician communication and education regarding colorectal cancer screening. |
| Moazzam, Z. [16] | 2023 | ChatGPT (Version not reported) | Pancreatic cancer | To characterize the quality of ChatGPT’s responses to questions pertaining to pancreatic cancer and its surgical care. | Thirty questions covering general information about pancreatic cancer, as well as its preoperative, intraoperative, and postoperative phases, were posed to ChatGPT. Twenty hepatopancreaticobiliary surgical oncology experts evaluated each response. The majority of responses (80%) were rated as “very good” or “excellent”. Of all quality ratings, 35.2% were “very good”, 24.5% “excellent”, and only 4.8% “poor”. Overall, 60% of experts considered ChatGPT a reliable information source, and only 10% indicated that ChatGPT’s answers were not comparable to those of experienced surgeons. |
| Pugliese [17] | 2023 | ChatGPT 3.5 | Nonalcoholic fatty liver disease (NAFLD) | To evaluate the accuracy, completeness, and comprehensibility of ChatGPT’s responses to NAFLD-related questions. | ChatGPT’s responses to 15 questions about NAFLD were highly comprehensible, with a mean score of 2.87 ± 0.14 on a Likert scale of 1 to 3. Seven questions received the highest score of 3, indicating that they were easy to understand. The mean Kendall’s coefficient of concordance across all questions was 0.822, reflecting strong agreement among the key opinion leaders (KOLs). A nonphysician also assessed comprehensibility, rating 13 questions as 3 and finding 2 questions somewhat difficult to comprehend. |
| Yeo, Y.H. [18] | 2023 | ChatGPT 3.5 | Liver cirrhosis and hepatocellular carcinoma | To assess the accuracy and reproducibility of ChatGPT in answering questions regarding liver cirrhosis and hepatocellular carcinoma. | Two transplant hepatologists independently evaluated ChatGPT’s responses to 164 questions, with a third reviewer resolving discrepancies. ChatGPT showed extensive knowledge of cirrhosis (79.1% correct) and hepatocellular carcinoma (HCC) (74.0% correct). However, only 47.3% of the responses on cirrhosis and 41.1% on HCC were deemed comprehensive. On quality metrics, the model correctly answered 76.9% of the questions but failed to specify decision-making cut-offs and treatment durations adequately. ChatGPT also showed a lack of awareness of variations between guidelines, particularly regarding HCC screening criteria. |
| Cao, J.J. [19] | 2023 | ChatGPT 3.5 | Liver cancer surveillance and diagnosis | To evaluate the performance of ChatGPT in answering patients’ questions related to liver cancer screening, surveillance, and diagnosis. | Of 60 answers, 29 (48%) were deemed accurate. Mean scores tended to be higher for questions on general HCC risk factors and preventive measures. A total of 15 of 60 (25%) answers were considered inaccurate, mostly regarding LI-RADS categories. ChatGPT often provided inaccurate information on liver cancer surveillance and diagnosis, and it frequently gave contradictory, falsely reassuring, or outright incorrect responses to questions about specific LI-RADS categories. This could potentially influence management decisions and patient outcomes. |
| Endo, Y. [20] | 2023 | ChatGPT (Version not reported) | Liver transplantation | To evaluate the performance of ChatGPT in answering patients’ questions related to liver transplantation. | Of the 493 ratings of ChatGPT answers, most were “very good” (46.0%) or “excellent” (30.2%), while only a small portion (7.5%) were “poor” or “fair”. Moreover, 70.6% of the experts indicated that ChatGPT’s answers were comparable to responses provided by practicing liver transplant clinicians and considered it a reliable source of information. |
| Cankurtaran, R.E. [21] | 2023 | ChatGPT 4.0 | Inflammatory bowel diseases | To evaluate the performance of ChatGPT as a reliable and useful resource for both patients and healthcare professionals in the context of inflammatory bowel disease (IBD). | Twenty questions were created: ten on Crohn’s disease (CD) and ten on ulcerative colitis (UC). The patient questions were derived from trends observed in Google searches using CD- and UC-related keywords, and questions for healthcare professionals were created by a team of four gastroenterologists. These questions covered disease classification, diagnosis, activity level, negative prognostic indicators, and potential complications. The reliability and usefulness ratings were 4.70 ± 1.26 and 4.75 ± 1.06 for CD questions, and 4.40 ± 1.21 and 4.55 ± 1.31 for UC questions. |
| Naqvi, H.A. [22] | 2024 | ChatGPT 3.5, BingChat, YouChat | Inflammatory bowel diseases | To evaluate the role of LLMs in patient education on the dietary management of inflammatory bowel disease (IBD). | Six questions on key concepts in the dietary management of IBD were presented to three large language models (LLMs): ChatGPT, BingChat, and YouChat. Two physicians evaluated all responses for appropriateness and reliability against dietary information provided by the Crohn’s and Colitis Foundation. Overall, ChatGPT provided more reliable responses on the dietary management of IBD than BingChat and YouChat. For some questions, multiple LLMs gave unreliable answers, but all of them advised seeking expert counsel. Agreement between raters was 88.9%. |
| Lai [23] | 2023 | ChatGPT 3.5 | H. pylori | To assess the potential of ChatGPT in responding to common queries related to H. pylori. | Two reviewers assessed the responses in categories including basic knowledge, treatment, diagnosis, and prevention. Although one reviewer scored basic knowledge and treatment higher than the other, there were no significant differences overall, and interobserver reliability was excellent. The mean score for all responses was 3.57 ± 0.13, with prevention-related questions receiving the highest score (3.75 ± 0.25). Basic knowledge and diagnosis questions both scored 3.5 ± 0.29. Overall, ChatGPT performed well in providing information on H. pylori management. |
| Zeng [24] | 2024 | Bing Copilot, Claude 3 Opus, Gemini Pro, ChatGPT 4, and ERNIE Bot 4.0 | H. pylori | To assess the quality of patient educational materials (PEMs) generated by LLMs and to compare them with physician-sourced materials. | LLM-generated English PEMs were accurate and easy to understand but fell short in completeness. PEMs created by physicians had the highest accuracy scores, whereas LLM-generated English PEMs had a range of scores. Both types had similar levels of completeness. LLM-generated Chinese PEMs were less accurate and complete than the English versions, although patients perceived them as more complete than gastroenterologists did. Although all PEMs were understandable, they exceeded the recommended sixth-grade reading level. LLMs show potential for patient education but need greater completeness and better adaptation to different languages and contexts. |
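Several of these studies report proportions with 95% confidence intervals. For example, Emile et al. [13] found that at least two of three experts judged the answers appropriate for 86.8% of 38 questions, which corresponds to 33 of 38, with a reported interval of 71.9% to 95.6%. The Python sketch below computes an exact (Clopper-Pearson) binomial interval for such a proportion, using only the standard library. This is an assumption: the study may have used a different interval method. The helper names (`binom_cdf`, `clopper_pearson`) are hypothetical.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _bisect(f, lo: float, hi: float, iters: int = 100) -> float:
    """Root of a monotone function f on [lo, hi], assuming f(lo) and f(hi) differ in sign."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if (f(lo) < 0) == (f(mid) < 0):
            lo = mid  # sign unchanged: the root lies in the upper half
        else:
            hi = mid  # sign changed: the root lies in the lower half
    return (lo + hi) / 2


def clopper_pearson(k: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact (Clopper-Pearson) two-sided 1 - alpha interval for k successes in n trials."""
    # Lower bound: the p at which P(X >= k) equals alpha / 2.
    lower = 0.0 if k == 0 else _bisect(lambda p: (1 - binom_cdf(k - 1, n, p)) - alpha / 2, 0.0, 1.0)
    # Upper bound: the p at which P(X <= k) equals alpha / 2.
    upper = 1.0 if k == n else _bisect(lambda p: binom_cdf(k, n, p) - alpha / 2, 0.0, 1.0)
    return lower, upper


# Emile et al. [13]: 33 of 38 questions judged appropriate by at least two of three experts.
low, high = clopper_pearson(33, 38)
print(f"{33 / 38:.1%} (95% CI {low:.1%} to {high:.1%})")
```

With these inputs, the interval should land close to the reported 71.9% to 95.6%. This is consistent with, but does not confirm, the use of an exact method in that study. With only 38 questions, the interval spans more than 20 percentage points, which is worth keeping in mind when comparing accuracy figures across small studies.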

| Author | Year | Tool | Setting | Objectives | Main Findings |
|---|---|---|---|---|---|
| Du [25] | 2024 | ChatGPT 3.5, ChatGPT 4.0 | Acute pancreatitis | To evaluate and compare the capabilities of ChatGPT 3.5 and ChatGPT 4.0 in answering test questions about AP, using both subjective and objective metrics. | ChatGPT 4.0 achieved a higher accuracy rate than ChatGPT 3.5 for subjective questions (94% vs. 80%) and for objective questions (78.1% vs. 68.5%). The concordance rates of ChatGPT 4.0 and ChatGPT 3.5 were 83.6% and 80.8%, respectively. The study highlighted the improved performance of ChatGPT 4.0 over its earlier version in answering queries related to AP. |
| Klein, A. [26] | 2024 | ChatGPT customized version | Pancreatic cysts | To assess the effectiveness of a custom GPT in providing integrated guideline-based recommendations for managing pancreatic cysts. | Sixty clinical scenarios on pancreatic cyst management were evaluated by a custom GPT and by experts. The custom GPT agreed with the experts’ opinion in 87% of the cases, and there was no significant difference in accuracy between the custom GPT and the experts. |
| Henson, J.B. [27] | 2023 | ChatGPT 3.5 | Gastroesophageal reflux disease (GERD) | To assess ChatGPT’s performance in responding to questions regarding GERD diagnosis and treatment. | ChatGPT provided largely appropriate responses in 91.3% of cases, and the majority of responses (78.3%) included at least some specific guidance. Moreover, patients found this tool useful in 100% of cases. |
| Gorelik, Y. [28] | 2023 | ChatGPT 4.0 | Screening and surveillance intervals for colonoscopy | To evaluate ChatGPT’s effectiveness in improving post-colonoscopy management by offering recommendations based on current guidelines. | Across the 20 scenarios, 90% of the responses adhered to the guidelines. In total, 85% of the responses were deemed correct by both endoscopists and aligned with the guidelines. There was strong agreement between the two endoscopists and the reference guidelines (Fleiss’ kappa coefficient = 0.84, p < 0.01). In 95% of the scenarios, the free-text clinical notes yielded interpretations and recommendations similar to those from the structured endoscopic responses. |
| Chang, P.W. [29] | 2024 | ChatGPT 4.0 | Screening and surveillance intervals for colonoscopy | To compare the accuracy, concordance, and reliability of ChatGPT 4 colonoscopy recommendations for colorectal cancer rescreening and surveillance with contemporary guidelines and real-world gastroenterology practice. | Data from 505 colonoscopy patients were anonymized and entered into ChatGPT 4. The system provided successful follow-up recommendations in 99.2% of cases. ChatGPT 4’s recommendations agreed with the USMSTF panel in 85.7% of cases, more closely than real-world gastroenterology practice did (p < 0.001). Among the cases where recommendations differed between ChatGPT 4 and the USMSTF panel (14.3% of cases), 5.1% suggested later screenings, while 8.7% recommended earlier ones. The inter-rater reliability between ChatGPT 4 and the USMSTF panel was strong, with a Fleiss κ of 0.786 (95% CI, 0.734–0.838; p < 0.001). |
| Lim, D.Y.Z. [30] | 2024 | ChatGPT 4.0 | Screening and surveillance intervals for colonoscopy | To compare a GPT model contextualized with recent guidelines against standard GPT in offering guidance on the recommended screening and surveillance intervals for colonoscopy. | The contextualized GPT 4 model outperformed the standard GPT 4 across all domains. It did not miss any high-risk features and hallucinated additional high-risk features in only two cases. It provided the correct colonoscopy interval in the majority of cases and cited the guidelines appropriately in almost all cases. |
| Kresevic, S. [31] | 2024 | ChatGPT 4.0 | Hepatitis C | To evaluate the performance of a novel LLM framework integrating clinical guidelines with retrieval augmented generation (RAG), prompt engineering, and text reformatting strategies for the augmented interpretation of HCV guidelines. | Reformatting guidelines into a consistent structure, converting tables to text-based lists, and using prompt engineering significantly improved the LLM’s accuracy in answering HCV management questions, from 43% to 99% (p < 0.001). The novel framework outperformed the baseline GPT 4 Turbo model across different question types, including text-based, table-based, and clinical scenarios. |
| Cankurtaran, R.E. [21] | 2023 | ChatGPT 4.0 | Inflammatory bowel diseases | To evaluate the efficacy of ChatGPT as a reliable and useful resource for both patients and healthcare professionals in the context of inflammatory bowel disease (IBD). | Twenty questions were created: ten on Crohn’s disease (CD) and ten on ulcerative colitis (UC). The patient questions were derived from trends observed in Google searches using CD- and UC-related keywords, and questions for healthcare professionals were created by a team of four gastroenterologists. Answers to questions from professional sources received the highest ratings for reliability and usefulness, with scores of 5.00 ± 1.21 and 5.15 ± 1.08, respectively. Moreover, the reliability scores of answers to professionals’ questions were significantly higher than those of answers to patients’ questions (p = 0.032). |
| Levartovsky, A. [32] | 2023 | ChatGPT 4.0 | Inflammatory bowel diseases (ulcerative colitis) | To assess the potential of ChatGPT as a decision-making aid for acute ulcerative colitis in emergency department (ED) settings by evaluating disease severity and hospitalization needs. | The evaluation included 20 cases of acute UC presenting to the ED, collected over a 2-year period. ChatGPT was asked to assess disease severity for each case according to the Truelove and Witts criteria. Its assessments matched those of expert gastroenterologists in 80% of the 20 cases (correlation coefficient for absolute agreement = 0.839). |
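Gorelik et al. [28] and Chang et al. [29] summarize agreement using Fleiss’ kappa (0.84 and 0.786, respectively). Fleiss’ kappa corrects the observed agreement among several raters for the agreement expected by chance. The Python sketch below implements the standard formula for items that are each rated by the same number of raters. The input layout, the function name `fleiss_kappa`, and the toy ratings are hypothetical and do not reproduce either study’s data.

```python
def fleiss_kappa(counts: list[list[int]]) -> float:
    """Fleiss' kappa for items that are each rated by the same number of raters.

    counts[i][j] = number of raters who put item i into category j.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("need at least 2 raters, and the same number for every item")
    n_categories = len(counts[0])

    # Share of all ratings that fell into each category (p_j).
    p_j = [sum(row[j] for row in counts) / (n_items * n_raters) for j in range(n_categories)]
    # Observed pairwise agreement among raters for each item (P_i).
    p_i = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1)) for row in counts]

    p_bar = sum(p_i) / n_items      # mean observed agreement
    p_e = sum(p * p for p in p_j)   # agreement expected by chance
    if p_e == 1:
        raise ValueError("kappa is undefined when every rating falls in one category")
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical toy data: 4 items, 3 raters, categories = (guideline-concordant, discordant).
ratings = [[3, 0], [3, 0], [2, 1], [0, 3]]
print(round(fleiss_kappa(ratings), 3))
```

In the toy data, raters disagree on only one of four items, yet kappa is noticeably lower than the raw agreement. This is because most ratings fall in one category, which raises the chance-agreement term. It is one reason why percentage agreement and kappa values reported in these studies should not be compared directly.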

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Maida, M.; Celsa, C.; Lau, L.H.S.; Ligresti, D.; Baraldo, S.; Ramai, D.; Di Maria, G.; Cannemi, M.; Facciorusso, A.; Cammà, C. The Application of Large Language Models in Gastroenterology: A Review of the Literature. Cancers 2024, 16, 3328. https://doi.org/10.3390/cancers16193328
