Simple Summary

The appearance of microcalcifications in mammogram images is an essential predictor for radiologists to detect early-stage breast cancer. This study aims to demonstrate the strength of persistent homology (PH) in noise filtering and feature extraction integrated with machine learning models in classifying microcalcifications into benign and malignant cases. The methods are implemented on two public mammography datasets: the Mammographic Image Analysis Society (MIAS) and Digital Database for Screening Mammography (DDSM). This study discovered that PH-based machine learning techniques can improve classification accuracy, which could benefit radiologists and clinicians in early diagnosis.

Abstract

Microcalcifications in mammogram images are primary indicators for detecting the early stages of breast cancer. However, dense tissues and noise in the images make it challenging to classify the microcalcifications. Currently, preprocessing procedures such as noise removal techniques are applied directly on the images, which may produce a blurry effect and loss of image details. Further, most of the features used in classification models focus on local information of the images and are often burdened with details, resulting in data complexity. This research proposed a filtering and feature extraction technique using persistent homology (PH), a powerful mathematical tool used to study the structure of complex datasets and patterns. The filtering process is not performed directly on the image matrix but through the diagrams arising from PH. These diagrams will enable us to distinguish prominent characteristics of the image from noise. The filtered diagrams are then vectorised using PH features. Supervised machine learning models are trained on the MIAS and DDSM datasets to evaluate the extracted features’ efficacy in discriminating between benign and malignant classes and to obtain the optimal filtering level. This study reveals that appropriate PH filtering levels and features can improve classification accuracy in early cancer detection.

1. Introduction

Female breast cancers continue to record increases in the numbers of new cases and are reported as the most common incidence and mortality cancer worldwide, surpassing other types of cancer [1]. According to the World Health Organization (WHO), more than 2.3 million women were diagnosed with breast cancer globally in 2020, and 685,000 died from the disease [2]. This means that, on average, a woman is diagnosed with breast cancer every 14 s. Preventive measures, including imaging screening, can potentially detect cancer at an early stage, which in turn increases the patient’s survival rate [3]. Among the numerous screening methods currently available for the detection of breast cancer, mammography has become the main and most effective imaging method. Apart from that, it has increased the rate of early-stage cancer detection [4,5].

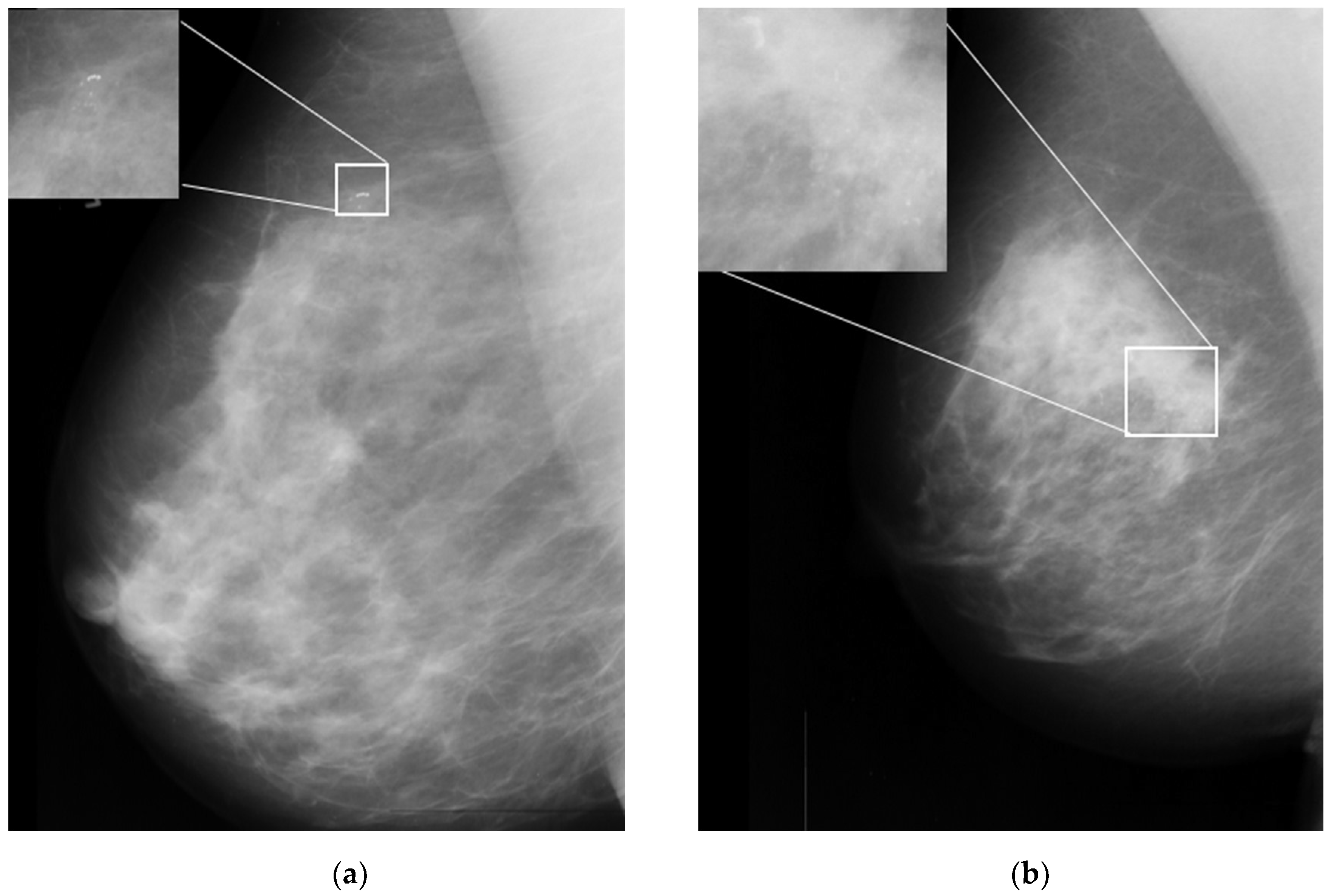

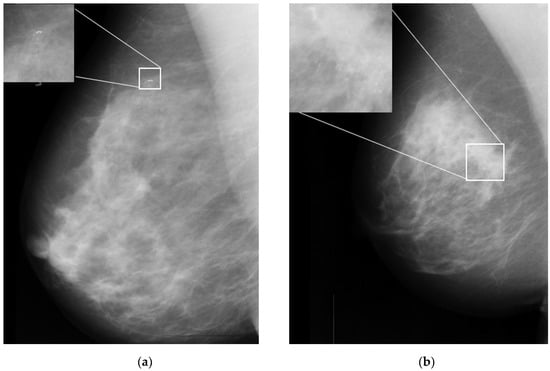

One of the important signs in cancer detection on mammographic images is the presence of microcalcifications, which appear as small bright spots within an inhomogeneous background [6]. Figure 1a,b illustrates an example of benign and malignant microcalcifications on mammogram images taken from the MIAS database [7]. Note that the morphology of this microcalcification is a crucial predictor of its pathological nature. Large, round, and oval calcifications of uniform size exhibit benign (non-cancerous) characteristics. In contrast, smaller and non-uniform calcifications exhibit characteristics of malignant growth [8,9]. In clinical practice, it is difficult and time-consuming for radiologists to interpret and evaluate microcalcifications accurately. This is true especially when the microcalcifications appear in low contrast and are obscured by the background tissue of the images [10]. Here, human errors based on subjective evaluations may lead to unnecessary biopsy procedures, which can cause harm and anxiety for patients [11].

Figure 1.

Sample microcalcification on mammogram images in the MIAS dataset [7]. (a) Benign microcalcifications and (b) malignant microcalcifications.

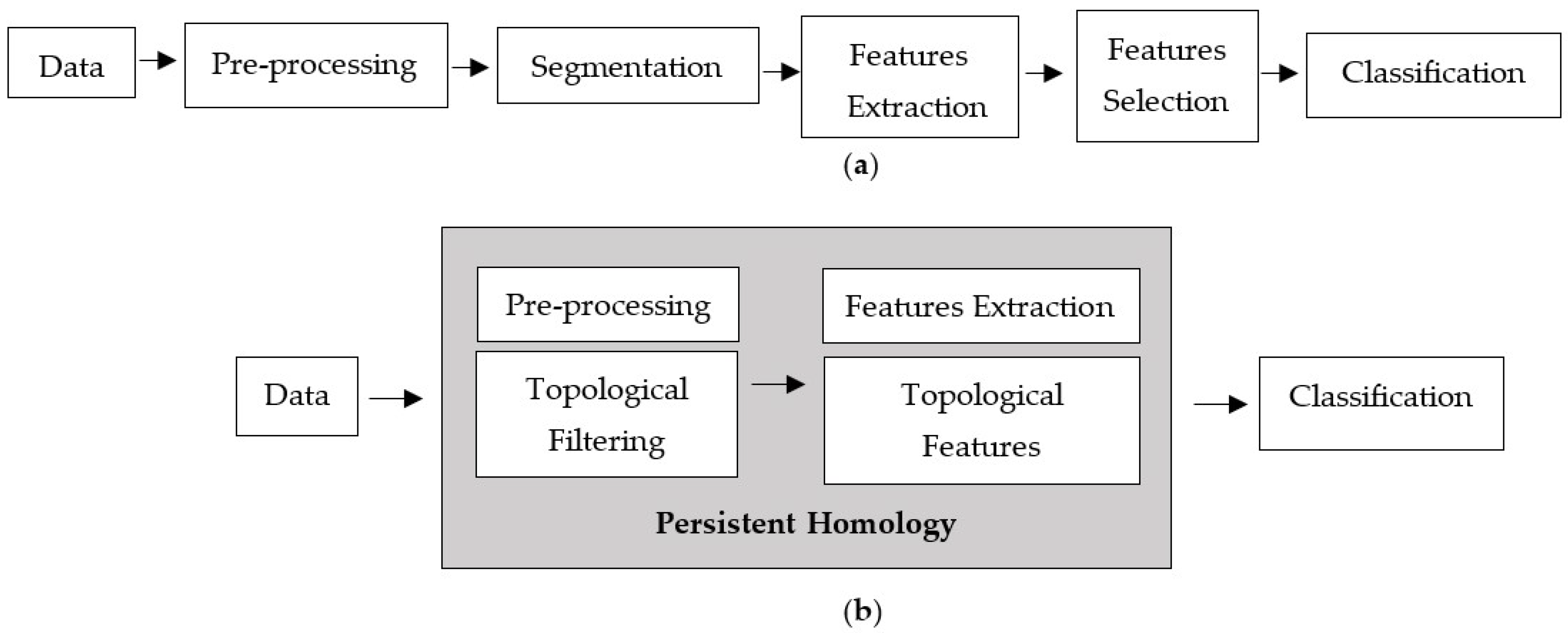

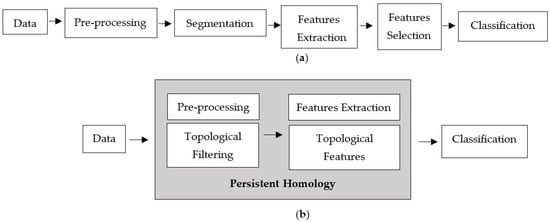

In order to improve the accuracy of assessing microcalcifications, numerous studies have been conducted to develop computational approaches that could potentially aid radiologists in distinguishing benign and malignant microcalcifications [3]. The standard computer-aided detection (CAD) processes consist of image preprocessing, segmentation, feature extraction, feature selection, and classification model, as depicted in Figure 2a. Each phase involves a different technique, and the performance relies heavily on the preceding phase. Preprocessing is the initial phase of the image processing pipeline. Filtering is commonly applied as a preprocessing technique for removing noises and other artefacts in the image. Noise emerges in mammograms when the image’s brightness varies in areas representing the same tissues owing to non-uniform photon distribution [3]. It produces a grainy appearance, reducing the visibility of some features within the image, especially microcalcifications in dense breast tissue. Because noise, edge, and texture are high-frequency components, distinguishing them is challenging [12].

Figure 2.

Computer-aided detection processes. (a) Standard processes and (b) proposed techniques.

Various filtering techniques have been used in the literature to reduce noise in mammogram images. Each method has its benefits and drawbacks. For example, the Wiener filter is considered a linear filter that can improve the images by reducing random noise but may produce a blurry effect and incomplete noise filtration [13]. Non-linear filters, such as the median filter, can overcome the limitations of linear filters thanks to several benefits, such as being straightforward and offering a sensible noise removal performance, but they could distort fine edges even at low noise densities [14]. The comparative reviews from [12] agreed that different types of noise require different filtering techniques.

Another important phase that influences classification performance is feature extraction [11]. Feature extraction methodologies analyse images to extract the most prevalent features and are employed as inputs to machine learning classifiers to distinguish between benign and malignant classes. Such features included intensity, statistical, shape, and textural features [5]. The grey-level cooccurrence matrix (GLCM), which calculates the occurrence of various grey levels in a region of interest (ROI), is a well-known texture feature and is utilised extensively in the literature [5,15,16,17]. Nevertheless, all of these features focus on local information of the images and are often burdened with details, resulting in data complexity [18].

This study proposes a new classification approach based on persistent homology (PH) that can extract informative features from the images, which consists of filtering and feature extraction processes, as shown in Figure 2b.

PH, the topological data analysis (TDA) core tool, has recently been widely used as a multi-scale representation of topological features. It can extract topological summaries from data that capture the birth and death of connected components, loops, and voids through a filtration process [19]. Apart from that, persistence diagrams (PD) are one of the topological descriptors produced by PH [20]. They comprise a collection of points in the half-plane above the diagonal with coordinates (birth and death) of topological features, helpful in distinguishing robust and noisy topological properties [21]. Other than that, the geometric measurement of the associated topological properties directly correlates with the lifespan (differences between death and birth). A long lifespan is considered a prominent feature represented by points far from the diagonal in the diagram. In contrast, short lifespans, represented by points close to the diagonal, are interpreted as noise [22].

There has been minimal effort to explore the potential use of PD as a filtering approach, especially in mammogram images. A noteworthy study by [23] filtered out 20% of points close to the diagonal in PD, but they focused on interpreting quality assessment in the eye fundus image. The PD of a microcalcification image contains thousands of points with many short lifespans (noise), necessitating an additional filtering procedure. Thus, a novel method for filtering noise in a persistent diagram based on the maximum lifespan of the image is proposed.

In PH, the topological features generated from PD can be vectorised and integrated into machine learning models. Various vectorisation approaches have been proposed, with promising results in various fields. For example, the persistent image (PI) feature proposed by [24] has been utilised in hepatic tumour classification with considerable accuracy [25,26]. Meanwhile, the persistent entropy (PE) and p-norm features were applied for dark soliton detection [27] and persistent landscapes (PL) in the quantitative analysis of fluorescence microscopy images [28]. Furthermore, the authors of [29] employed Betti numbers for evaluating tumour heterogeneity in image feature extraction. Nevertheless, a recent study by [30] stated that keeping track of the lifespan is more informative than the progression of Betti numbers. The researchers used the mean, the standard deviation of lifespan for each cycle, and PE for 0- and 1-dimensional features to embed in machine learning techniques in detecting the correct Gleason score of prostate cancer, reporting an accuracy above 95%.

PH-based machine learning is a promising technique with many potential applications across different fields [31]. However, one of the challenges mentioned in the published literature is PH-based feature representation [19]. In other words, selecting suitable topological features is crucial because the suitability of features depends on the data type and the problem at hand. This study explored the potential use of the PH method for noise filtering and feature extraction processes. To the best of our knowledge, this is the first work using PH to tackle the challenge of filtering noise and selecting suitable topological features to improve the classification performance of microcalcifications in mammogram images. The purpose of this paper can be summarised as follows: (i) to propose multi-level noise filtering of 1-dimensional homology group PD based on maximum lifespan, (ii) to obtain the vectorised topological features from the filtered PD using the PI and PE, (iii) to compare the performance of the filter and non-filter PD including the performance of an individual feature and concatenated features using several machine learning models, as well (iv) to suggest the optimal filtration level for the MIAS and DDSM datasets. As this work aims to highlight the importance of a topological approach to classify the microcalcifications in a machine learning setting, prior knowledge of machine learning is assumed. Therefore, it will not be recalled in this section or elsewhere in the paper.

2. Materials and Methods

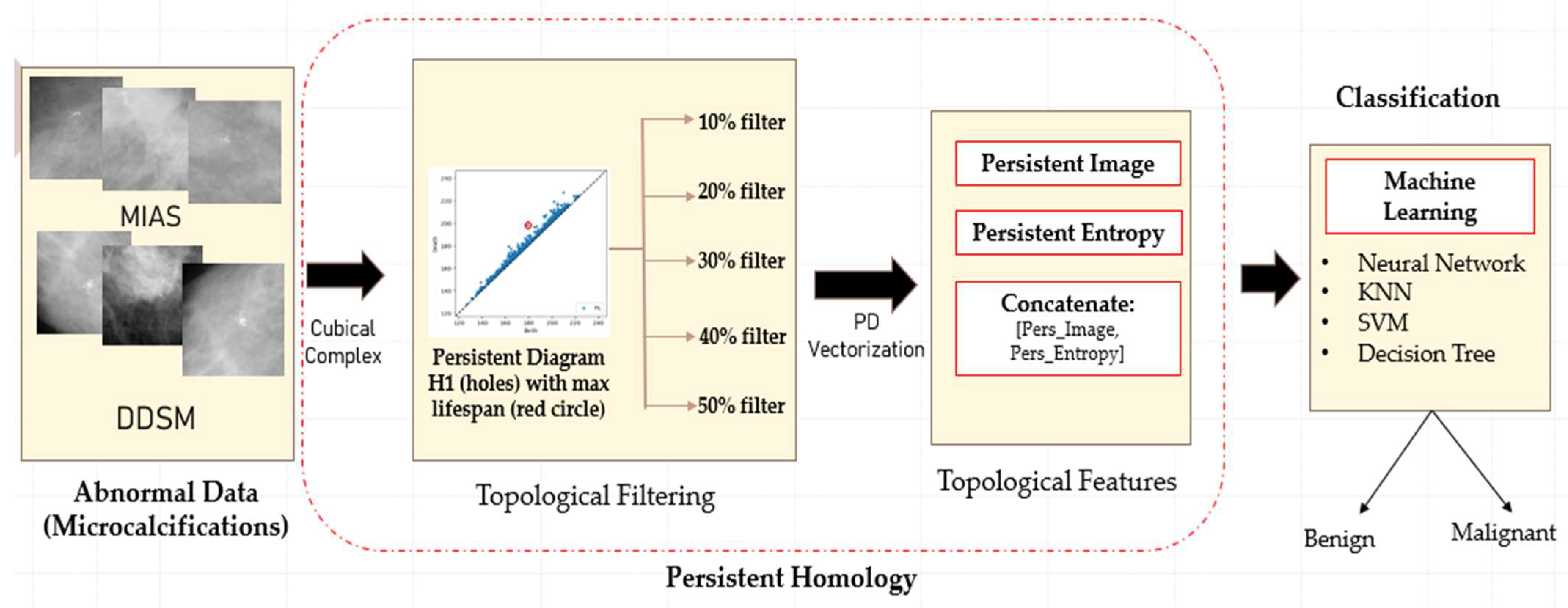

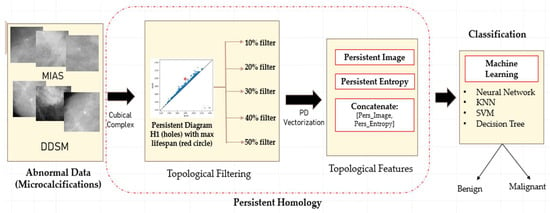

The proposed classification framework consists of four main steps: data acquisition, topological filtering, topological features vectorisation, and classification using machine learning classifiers, as illustrated in Figure 3.

Figure 3.

Illustration of the proposed classification framework using persistent homology.

2.1. Dataset

The data utilised in this study consist of two publicly available datasets. The first dataset was taken from the MIAS dataset [7], containing 26 image patches with a size of 200 × 200 pixels. These patches were cropped manually as the centre and radius of microcalcification clusters are provided. There were thirteen malignant and benign cases, each with three types of background tissue: fatty, fatty glandular, and dense glandular. The second dataset was taken from the digital database for screening mammography (DDSM) dataset [32], consisting of 140 image patches randomly selected with seventy cases for each of malignant and benign cases. The size of the images is set to 300 × 300 pixels. Subsequently, the diagnostics status of each patch is either benign or malignant, annotated by radiologists based on biopsy results. Despite the large number of mammograms in these two datasets, not all have microcalcifications. Therefore, the number of microcalcification patches is substantially lower than the total number of images in the datasets.

2.2. Persistent Homology

PH is a primary technique in TDA based on the concept that topological features detected over varying scales are more likely to represent intrinsic features [33]. Other than that, PH studies geometric patterns by looking at the evolution of -dimensional holes, such as 0-dimensional holes (H0) representing connected components, 1-dimensional holes (H1) representing loops, and 2-dimensional holes (H2) representing voids.

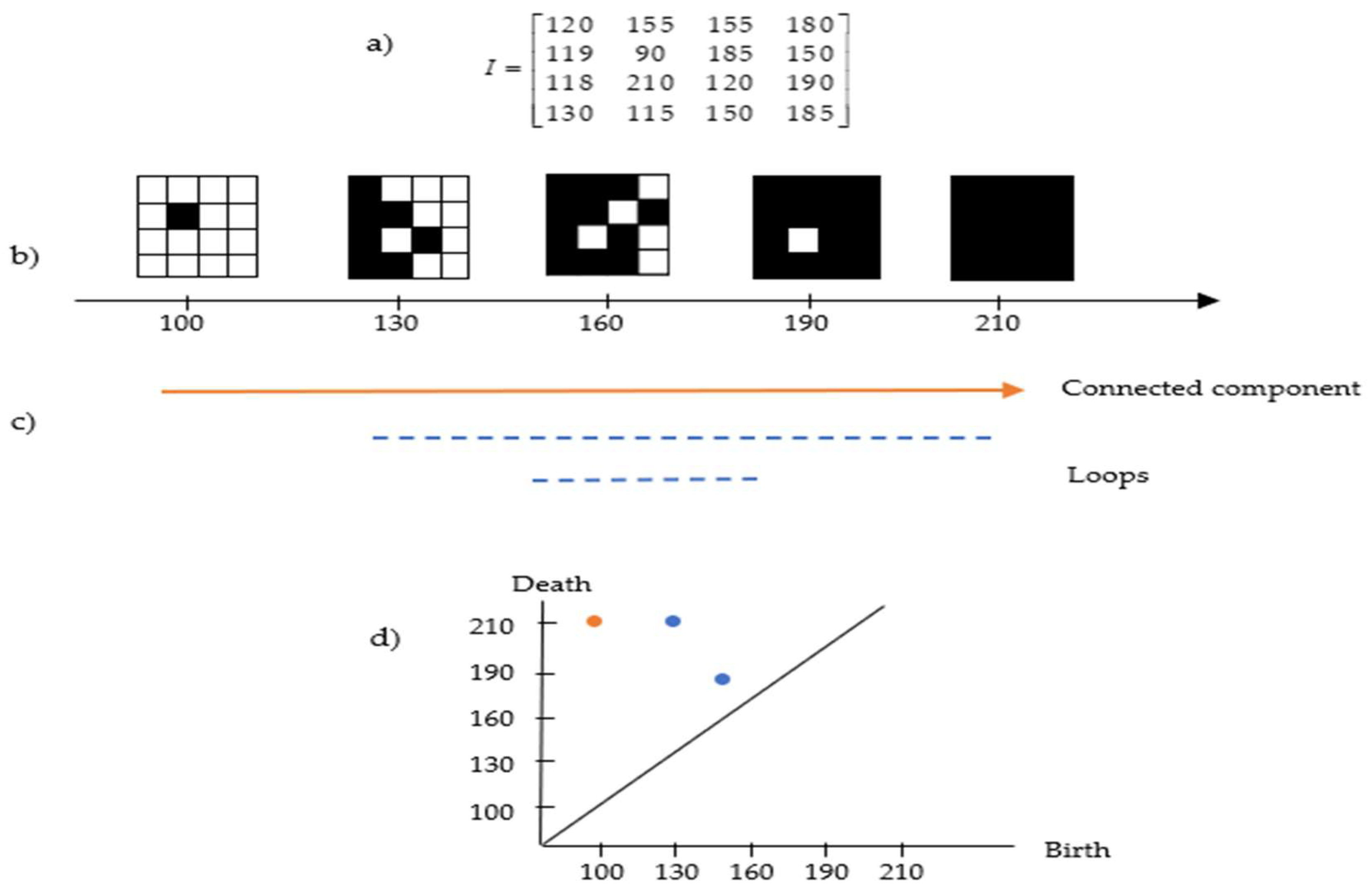

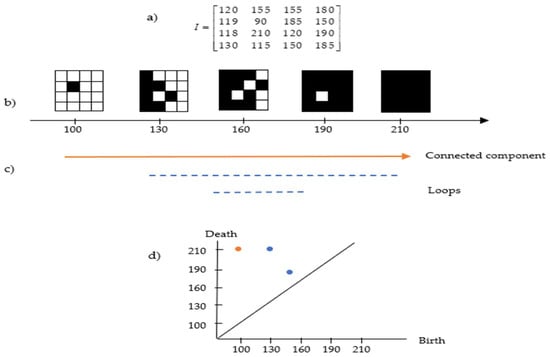

Images can be interpreted as geometric shapes known as cubical complexes. In 2D greyscale images, the cubical complexes are topological spaces consisting of a combination of 0-cube (vertices), 1-cube (edges), and 2-cube (squares). It can be efficiently constructed by assigning a vertex to each pixel, then combining vertices corresponding to nearby pixels by an edge and filling process in the resulting squares [34]. Let be a greyscale image of size with grey intensities (we use an 8-bit greyscale ). The pixel intensity value of on the intervals is , where 0 represents black colour and 255 represents white colour, with shades of grey for the values in between. Once these images are interpreted as 2-cubical simplices, greyscale filtration with 256 sublevels can be constructed as a nested sequence called sublevel set filtration [25] (see the example in Figure 4).

Figure 4.

Example of the cubical complex in a greyscale image. (a) Image pixel values; (b) the filtered cubical complex; (c) the lifespan of connected components and loops based on (b); and (d) the persistent diagram based on (c).

Figure 4a,b present an example of cubical complex filtration in a matrix representing the pixel value of the image. The lifespan can be seen in Figure 4c, where the connected component appears at the intensity of 100 and continues to exist because all subsequent pixels are always connected to the preceding ones. At filtration 120, a loop exists (full white pixels inside components made of black pixels) and deaths exist at filtration 210. Apart from that, another loop exists at filtration 150 and deaths shortly after at filtration 185. This information can be transformed in the persistent diagram representation as in Figure 4d, which will be discussed in the following section.

2.2.1. Interpreting the Persistent Diagrams

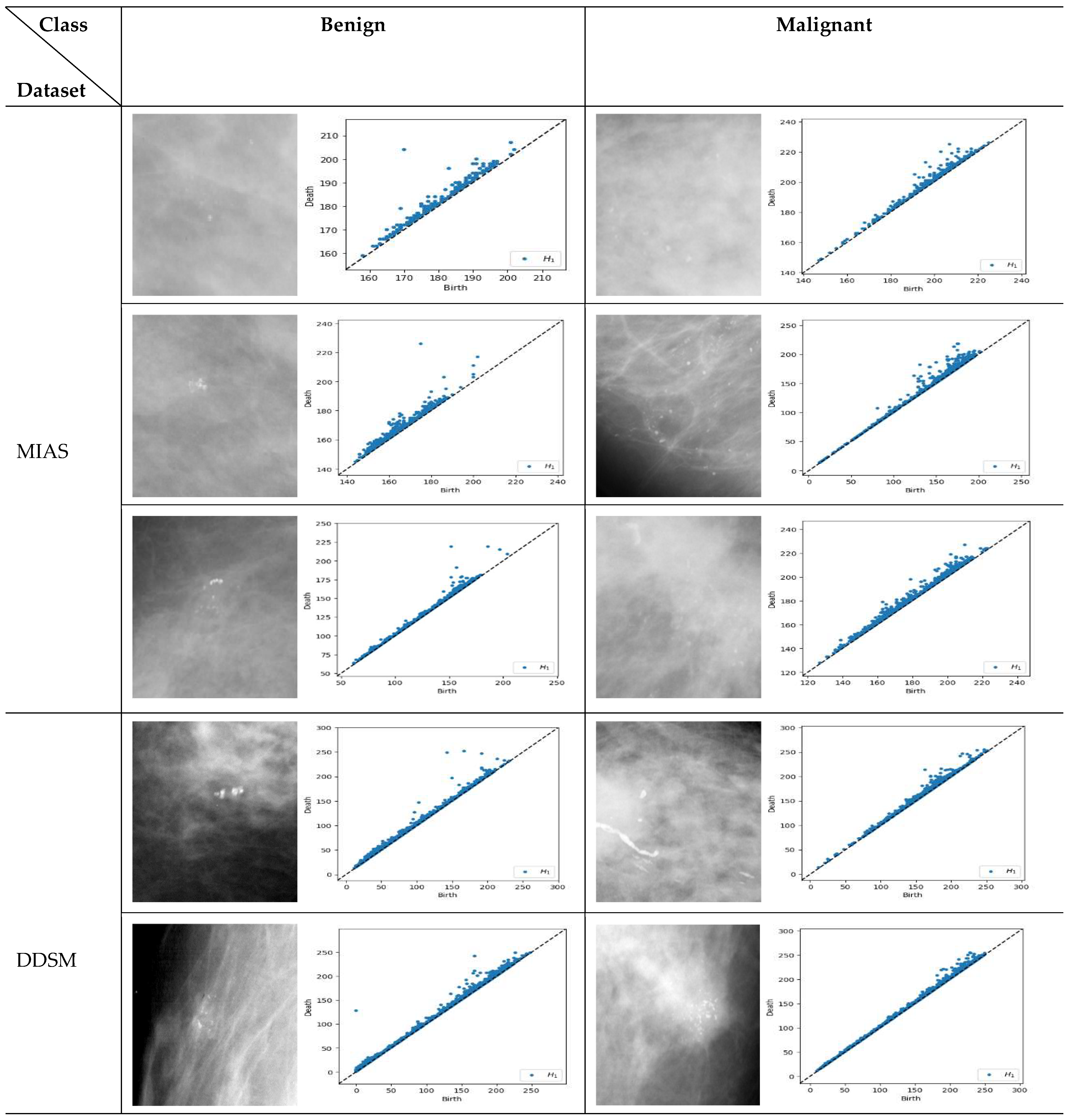

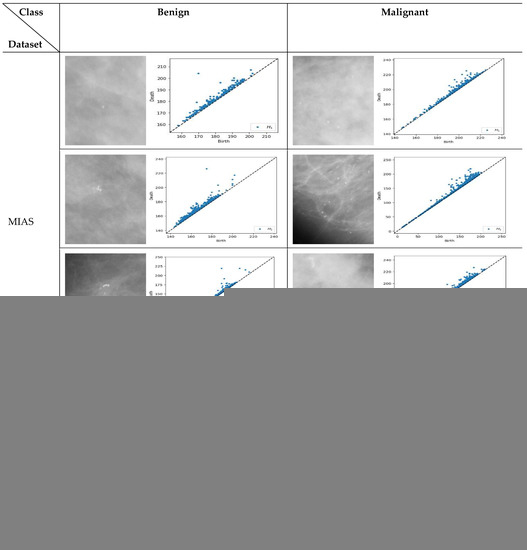

PD represents a pattern-generating field and provides a (visual) summary of the set of points and d > b, where the births (b) are marked on the x-axis and deaths (d) on the y-axis. The lifespan d-b > 0 indicates the prominence of features. Long-lived features are those far from the diagonal line. They are usually regarded as significant features and represent the robustness of holes against noise, whereas short lifespans are represented by points close to the diagonal and are interpreted as noise [25,35]. All points of the different homological dimensions are present in PD, such as 0-dimensional holes denoted as H0 (connected complete black pixels) and 1-dimensional holes denoted as H1 or loops (complete white pixels inside components made of black pixels). However, some dimensions may be irrelevant to the study [31]. As microcalcifications appear as white spots, this study employs only H1. Figure 5 illustrates samples of PD for H1 obtained from benign and malignant microcalcifications in different datasets.

Figure 5.

Persistent diagram of H1 (loops) for benign and malignant microcalcifications in the MIAS [7] and DDSM datasets [32]. For the MIAS dataset, the first row is a sample of dense glandular tissue, the second is a sample of fatty tissue, and the third is fatty glandular tissue.

Similar structure of the images will have comparable pattern in PDs [36]. For example, based on Figure 5, the benign microcalcifications indicate a similar pattern for different types of density and datasets where topological features associated with long-lived lifespan are observed. Conversely, in malignant microcalcifications, the PD illustrates short-lived features where the points are tightly packed and close to the diagonal. Furthermore, it is known that the points close to the diagonal line are usually regarded as less “useful” and linked with noise [37,38]. Thus, multi-level filtering of PDs offers a rigorous solution to the problem of distinguishing between anomalies and noise in these representations. The proposed procedures are described in the following steps:

- Step 1:

- Obtain PDs for 1-dimensional holes (H1);

- Step 2:

- Calculate the lifespan for each point in the PD;

- Step 3:

- Find the maximum lifespan;

- Step 4:

- Filter.

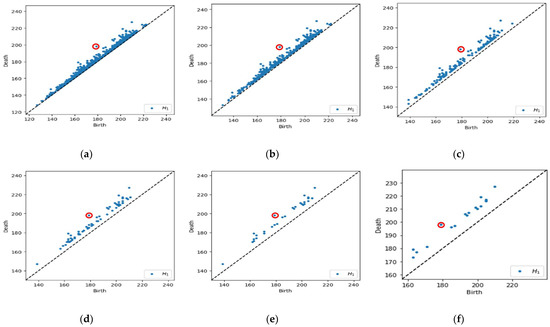

We choose the filter interval range from 10% to 50% with 10% increments to facilitate interpretation when determining which level yields the best classification results for each dataset. The number of points in the PD can be reported as Betti numbers, denoted as B1 for H1. Hence, the filtering process will reduce the number of B1 values. Figure 6 demonstrates a schematic representation of a multi-level filtering PD in a sample of a malignant image.

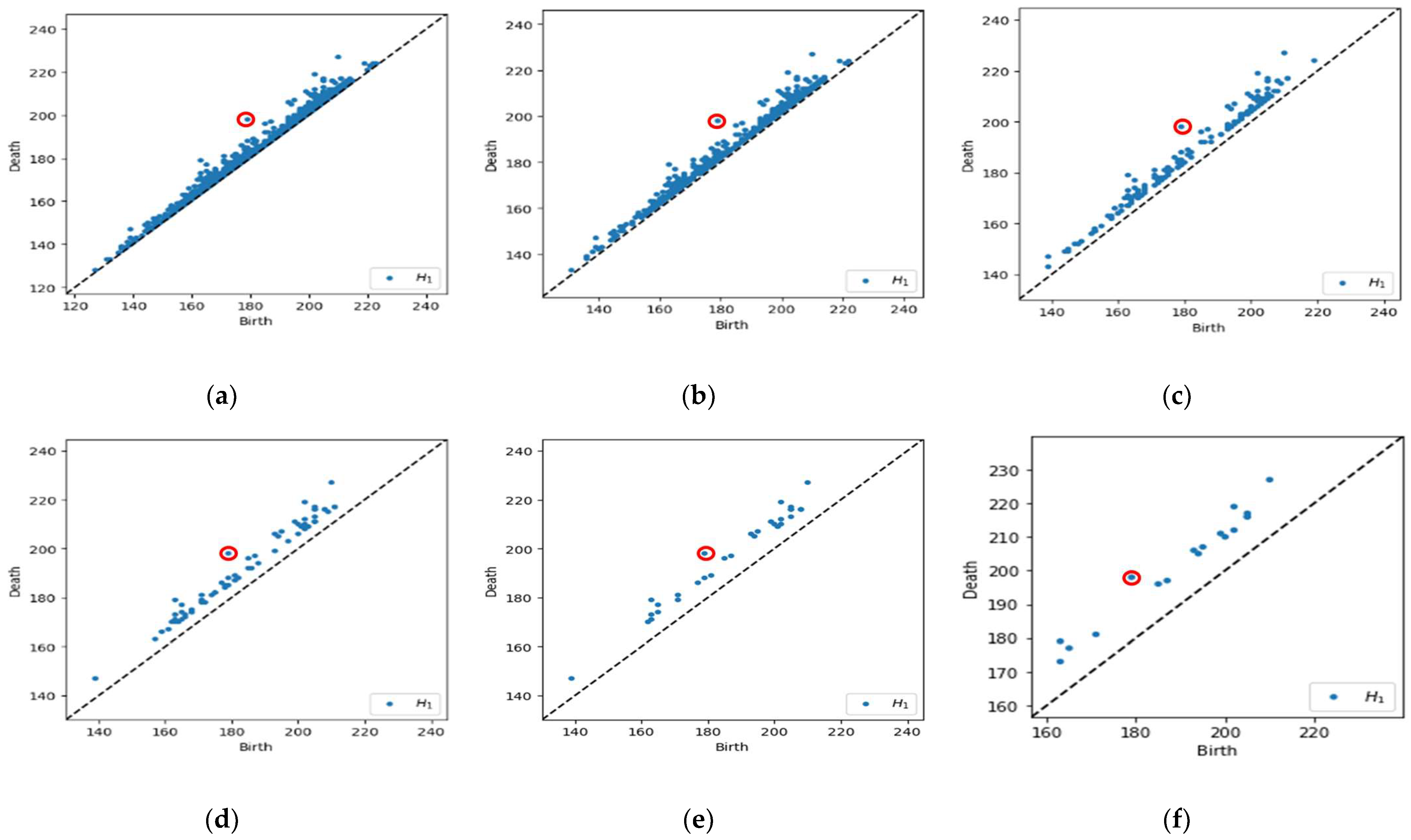

Figure 6.

Example of a multi-level filtering PD with Betti number (B1). (a) Original PD with max lifespan (red circle), B1 = 1341; (b) 10% of data filtered out, B1 = 510; (c) 20% of data filtered out, B1 = 162; (d) 30% of data filtered out, B1 = 68; (e) 40% of data filtered out, B1 = 32; and (f) 50% of data filtered out, B1 = 18.

Referring to Figure 6a, we calculate the lifespan for each point in the PD and obtain the maximum lifespan. In Figure 6b–f, the points are filtered out based on a lifespan greater than 10% to 50% of the maximum lifespan, which implies the number of B1 reduced from 1341 points initially to 510, 162, 68, 32, and 18, respectively. Subsequently, the original and filtered PD is then used to obtain the feature vector representations, which can be easily incorporated as input in machine learning models.

2.2.2. Vectorised Topological Features

Vectorising topological features in PH converts the topological features obtained from a PD into a vector representation that can be employed for machine learning models. This study employs two topological features so that the distribution of the persistence feature (lifespan) can be characterised as either benign or malignant. The vectorised topological features used are described as follows.

- Persistent Entropy (PE)

PE measures the disorder in the distribution of lifespan (persistence). Let be a persistent diagram where and are the birth and death points, respectively, of the topological structure in pixel image I and lifespan . The entropy of PD is defined as follows [23,39]:

where is the sum of the lifespan in the PD. Every PD will produce one entropy value. Based on [27], an entropy value of 0 represents a single feature in the image, while N features consist of .

- Persistent Image (PI)

PI is one of the prevalent vectorisation methods adopted to convert the topological information in PDs into a finite-dimensional vector representation. It was introduced by Adams et al. [24]. The PD, which consists of the persistence point at birth (b)/death (d) coordinates, is rotated by to construct the PI. Consequently, the rotated PD denoted as R can be discretised into the persistent surface () in coordinates, defined as

where is a gaussian smoothing function given by

and are non-negative weighting functions [40]. Finally, the PI can be obtained by integrating the persistence surface function over each pixel [24].

2.3. Machine Learning Classifier

Once the topological features are vectorised, the dataset can be implemented in the following machine learning classifiers:

- Neural network (NN);

- Support vector machine (SVM);

- K-nearest neighbour (KNN);

- Decision tree (DT).

The machine learning models are applied for comparison purposes to evaluate the performance of individual and concatenated features and to determine the optimum filtering level for each dataset.

2.4. Performance Evaluation

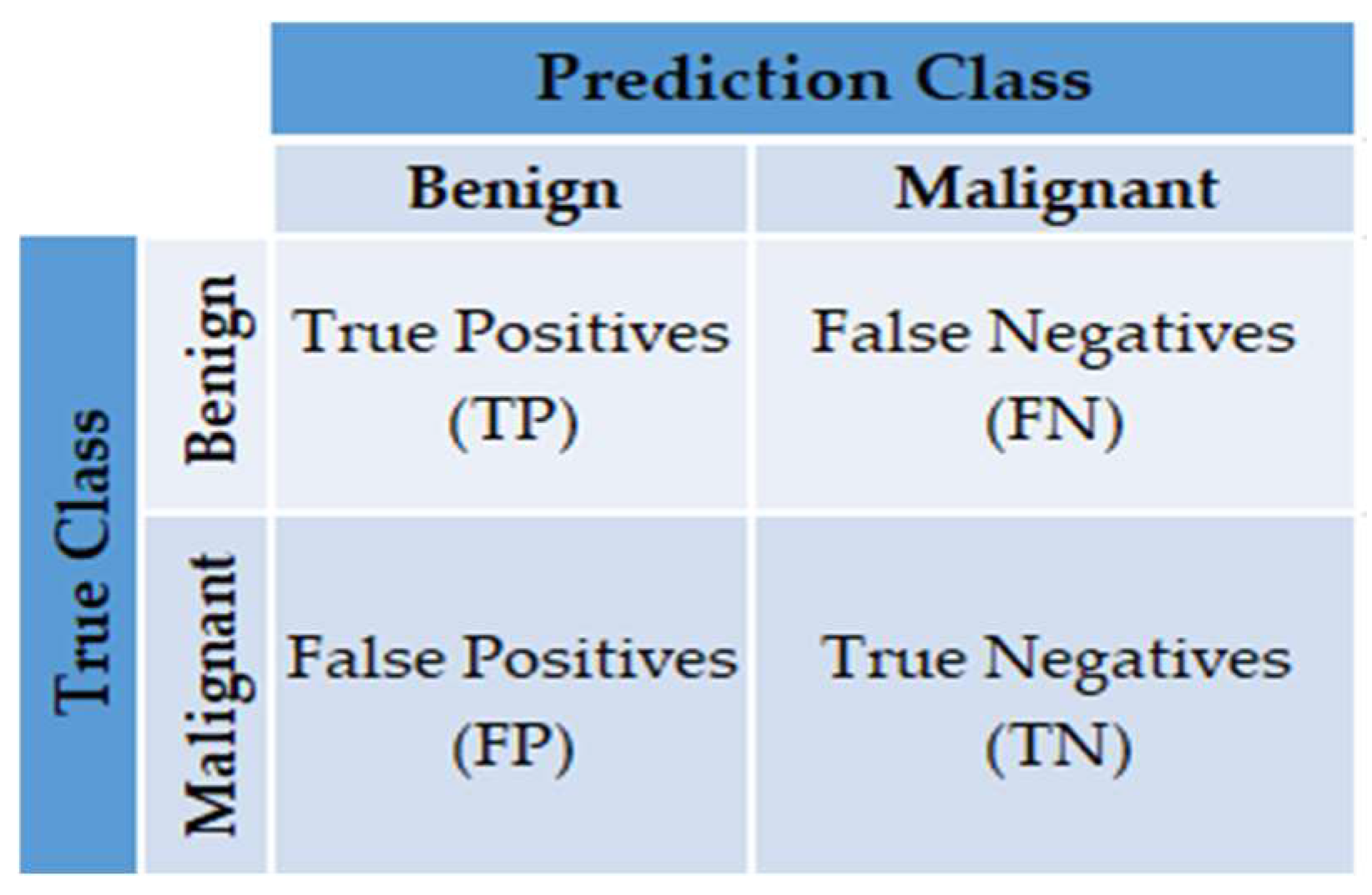

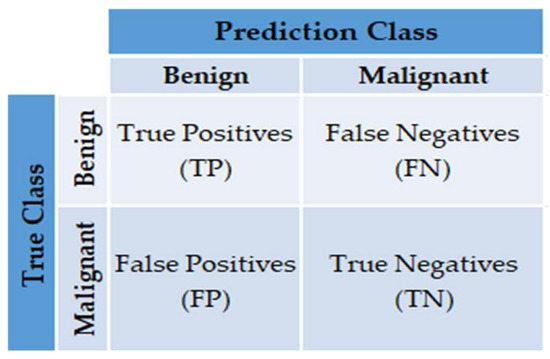

Many different evaluation metrics can be used to measure how well the method and classifier work. This study uses the confusion matrix, accuracy, and area under the receiver operating characteristic curve (AUC). The metrics used to evaluate the results are described below [41]:

- Confusion matrix: Provide a matrix as output that describes the method’s performance consisting of the total number of correct and incorrect predictions. The matrix is shown in Figure 7.

Figure 7. The confusion matrix.

Figure 7. The confusion matrix. - Classification Accuracy (CA): The percentage of microcalcifications correctly classified to the total number of observations. It can be measured as follows:

- Area under the Curve (AUC): The AUC can be measured by calculating the area under the receiver operating characteristic (ROC) curve. The ROC curve is a plot of the true positive rate (TPR), called sensitivity or recall, versus the false positive rate (FPR). TPR is defined in this context as the number of correctly diagnosed malignant cases divided by the total number of malignant cases. In contrast, FPR is defined as the number of benign cases wrongly classified as malignant divided by the total number of benign instances. TPR and FPR can be calculated using Equations (4) and (5), respectively:

2.5. Implementation Details

The cubical complex filtration and H1 of PD are generated in all experiments using an open-source program, Cubical Ripser (https://github.com/shizuo-kaji/CubicalRipser_3dim/, accessed on 2 February 2022) [42]. The vectorised topological features consisting of PI and PE features can be obtained using Scikit-TDA library written in Python (https://persim.scikit-tda.org/, accessed on 19 May 2022). The PI parameters, such as pixel size, are set to 1, meaning that one vectorises the PI value for every PD. Other parameters, such as the weighting function, , are based on the persistence values [24], and the smoothing parameter in the Gaussian function is set to default.

MATLAB R2021b classification learner is implemented with a fivefold cross-validation approach for machine learning models. The input data for all models are vectorised topological features, i.e., PI and PE values for each image. Each experimental test uses 11th Gen Intel(R) Core(TM) i7-11800H, CPU 2.30 GHz, 16 GB memory, and NVIDIA Geforce RTX 3050Ti for the graphics card. The hyperparameters used for each model are described as follows:

- Neural network (NN): classifier type = medium, the number of fully connected layers = 1, the first layer size = 25, and the activation function = ReLu.

- Support vector machine (SVM): kernel type = linear, kernel scale = automatic, and box constraint level = 1

- K-nearest neighbour (KNN): classifier type = fine, number of neighbours = 1, distance metric = Euclidean, and distance weight = equal.

- Decision tree (DT): classifier type = fine tree, the maximum number of splits = 100, and split criterion = Gini’s diversity index.

3. Results

This section presents the results based on the proposed method discussed in Section 2 applied to two public datasets, MIAS and DDSM. Furthermore, this section discusses the usefulness of the PH approach in discriminating the topological features between two classes, benign and malignant. The performances of single and concatenate features are also examined using four machine learning classifiers by comparing the CA and AUC. Finally, the optimal topological filter for each dataset is also presented.

3.1. Topological Filtering

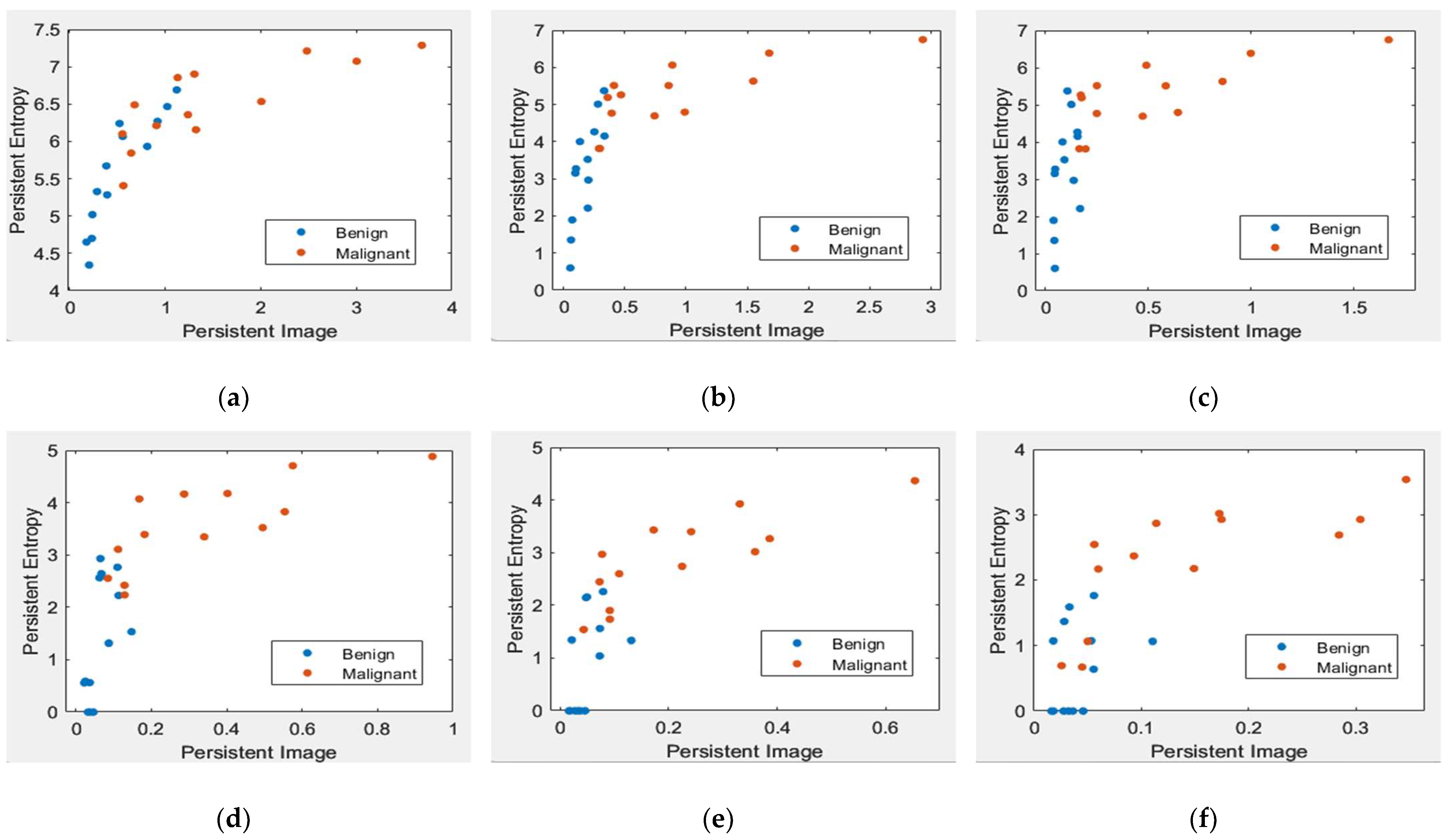

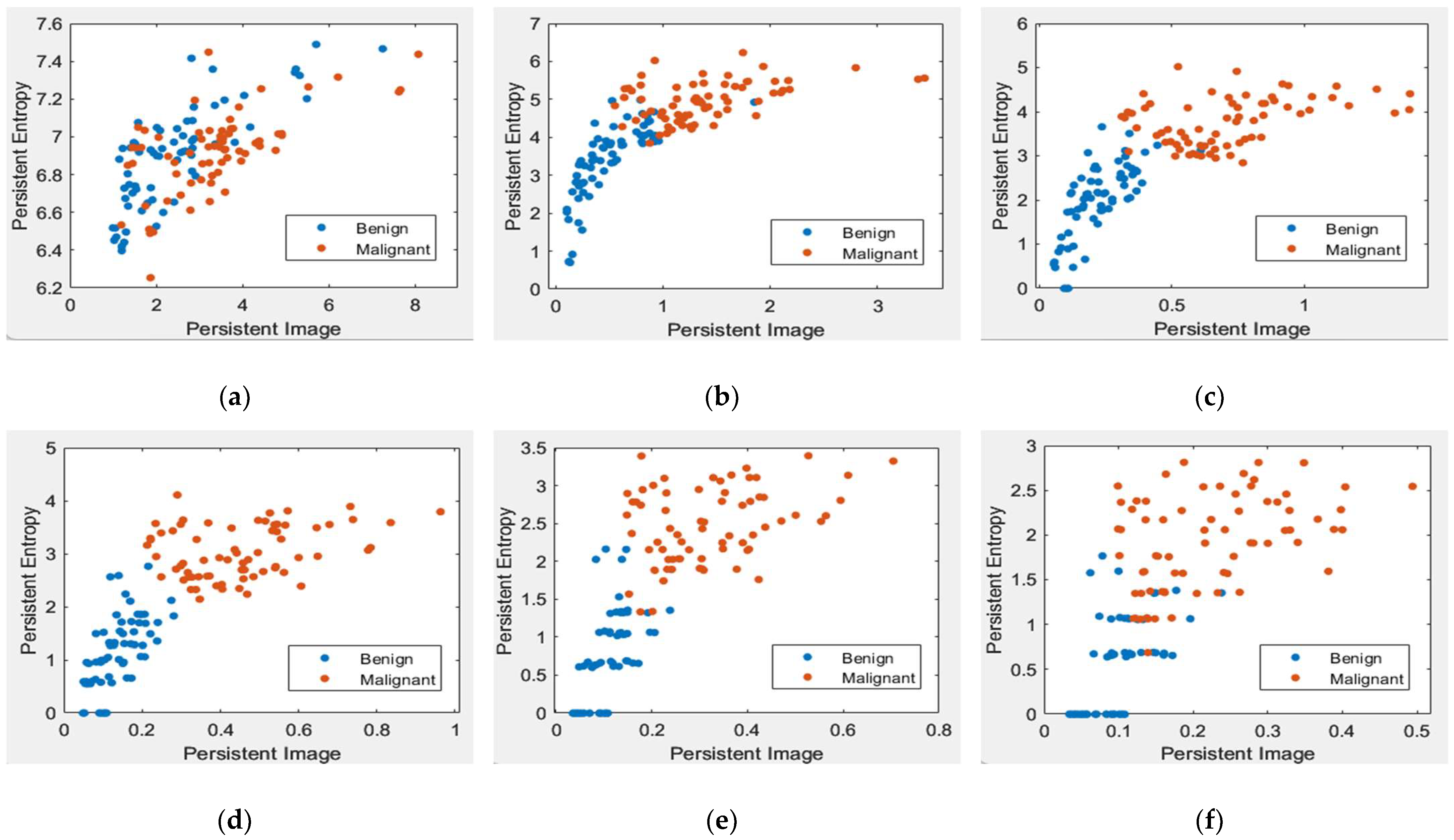

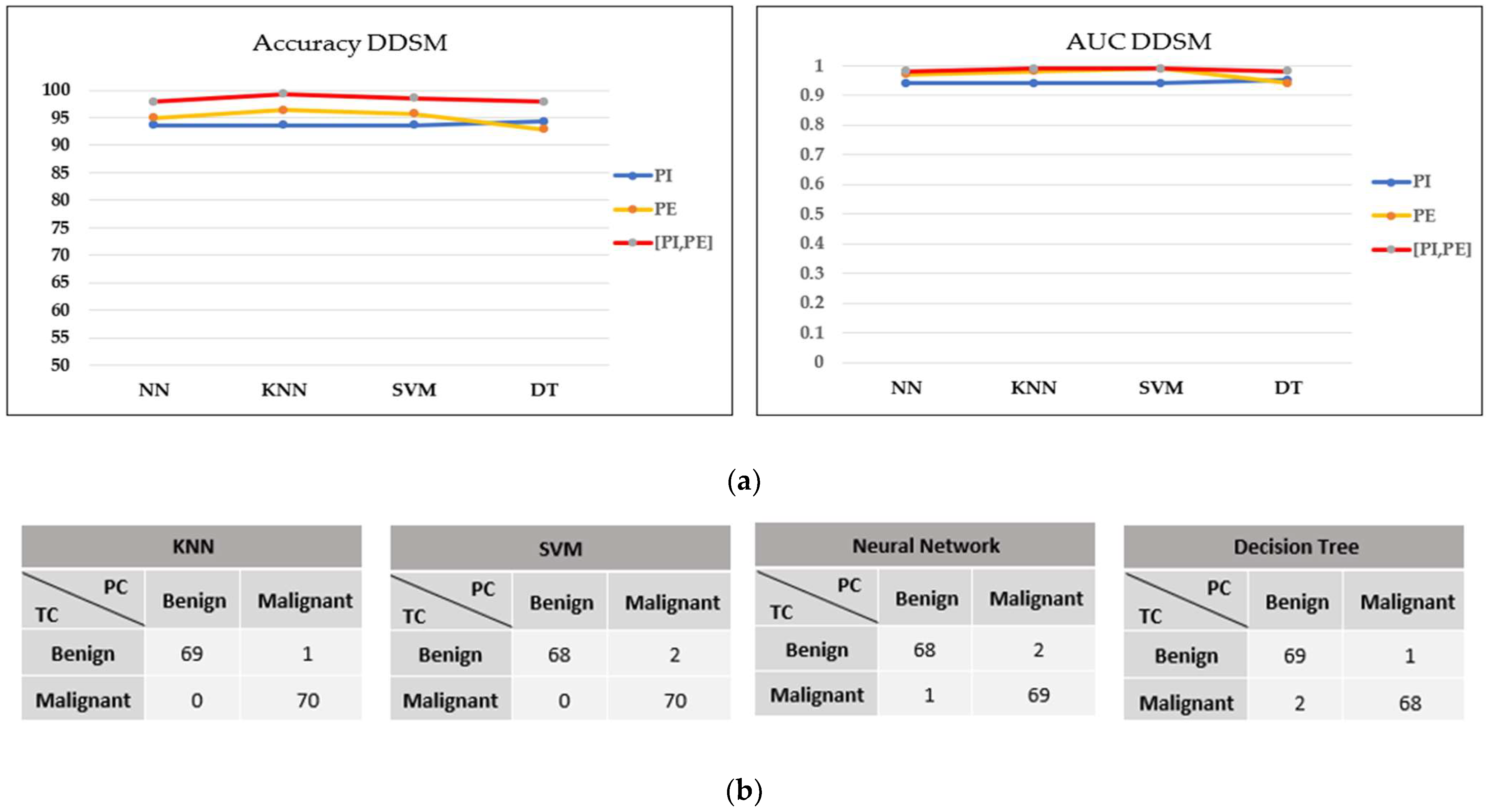

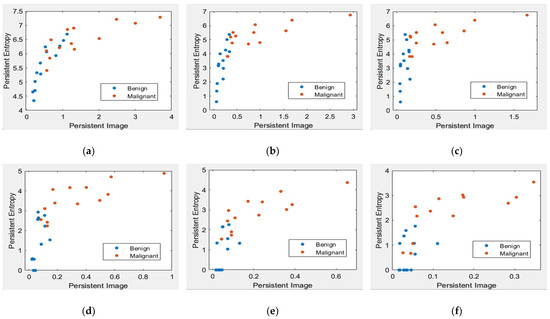

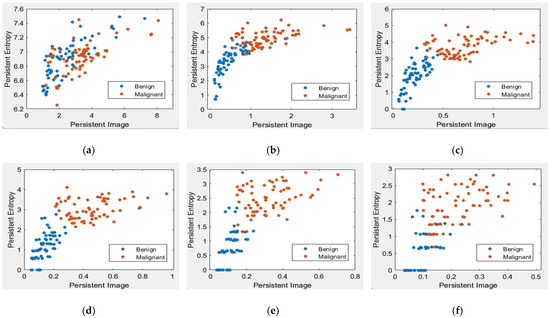

Figure 8 and Figure 9 illustrate the scatter plot of PE versus PI feature values with different levels of topological filtering in the MIAS and DDSM datasets, respectively.

Figure 8.

Scatter plot of PE versus a PI with different levels of filters in the MIAS dataset [7]. (a) Non-filter; (b) filter 10% of max lifespan; (c) filter 20% of max lifespan; (d) filter 30% of max lifespan; (e) filter 40% of max lifespan; and (f) filter 50% of max lifespan.

Figure 9.

Scatter plot of persistent entropy versus a persistent image with different levels of filters in the DDSM dataset [32]. (a) Non-filter; (b) filter 10% of max lifespan; (c) filter 20% of max lifespan; (d) filter 30% of max lifespan; (e) filter 40% of max lifespan; and (f) filter 50% of max lifespan.

Figure 8 and Figure 9 demonstrate scatter plots where each point corresponds to the value of PE versus PI. The number of points represents 26 images in the MIAS dataset and 140 in the DDSM dataset. Note that non-filtered PD in Figure 8a and Figure 9a refers to the original images containing noise. Noise can cause spurious features to appear in the image, leading to incorrect topological inferences. This is because noise can introduce false persistence of features that are not truly persistent and may also obscure the persistence of real features. Thus, the separation between two classes, benign and malignant, is more difficult to distinguish by the value of PE and PI features. Removing the short lifespan or noise through PD filtration at 10 to 30% provides a positive impact, where the benign class tends to have smaller values of PE and PI than the malignant class, as in Figure 8 and Figure 9b–d. However, high PD filters (40% and 50%) cause the PE and PI values to overlap, as in Figure 8 and Figure 9e–f.

3.2. Classification Performance

The optimum filtering level was selected by comparing the individual’s performance and concatenating topological features using four machine learning classifiers, namely, the NN, KNN, SVM, and DT models. Table 1 and Table 2 present the performance of the persistence image feature, while Table 3 and Table 4 present the PE feature in the MIAS and DDSM datasets, respectively.

Table 1.

Classification performance of the PI feature in the MIAS dataset. CA = accuracy and Std = standard deviation.

Table 2.

Classification performance of the PI feature in the DDSM dataset.

Table 3.

Classification performance of the PE feature in the MIAS dataset.

Table 4.

Classification performance of the PE feature in the DDSM dataset.

It can be observed that the classification performance from all classifiers is improved when the filtering process is applied. Table 1 and Table 3 present that the optimal filtering level for persistent images and entropy features in the MIAS dataset is at a filter of 20%. Here, NN, DT, and KNN attain an accuracy of 92.3% for both topological features with an AUC value of 0.9 and above. For the DDSM dataset in Table 2 and Table 4, filter 30% delivered remarkable results, with all classifiers achieving above 92.9% accuracy and up to 0.99 AUC. Note that the performance in the DDSM dataset is more consistent in all classifiers because it utilised more images (140 images) than the MIAS dataset, which only used 26 images.

The classification performance can be significantly improved by concatenating features (multi-vector). In the MIAS dataset, the performance is improved from 92.3% to 96.2% accuracy and, in the DDSM dataset, it is increased to 99.3%, as shown in Table 5 and Table 6.

Table 5.

Classification performance of concatenating features in the MIAS dataset.

Table 6.

Classification performance of concatenating features in the DDSM dataset.

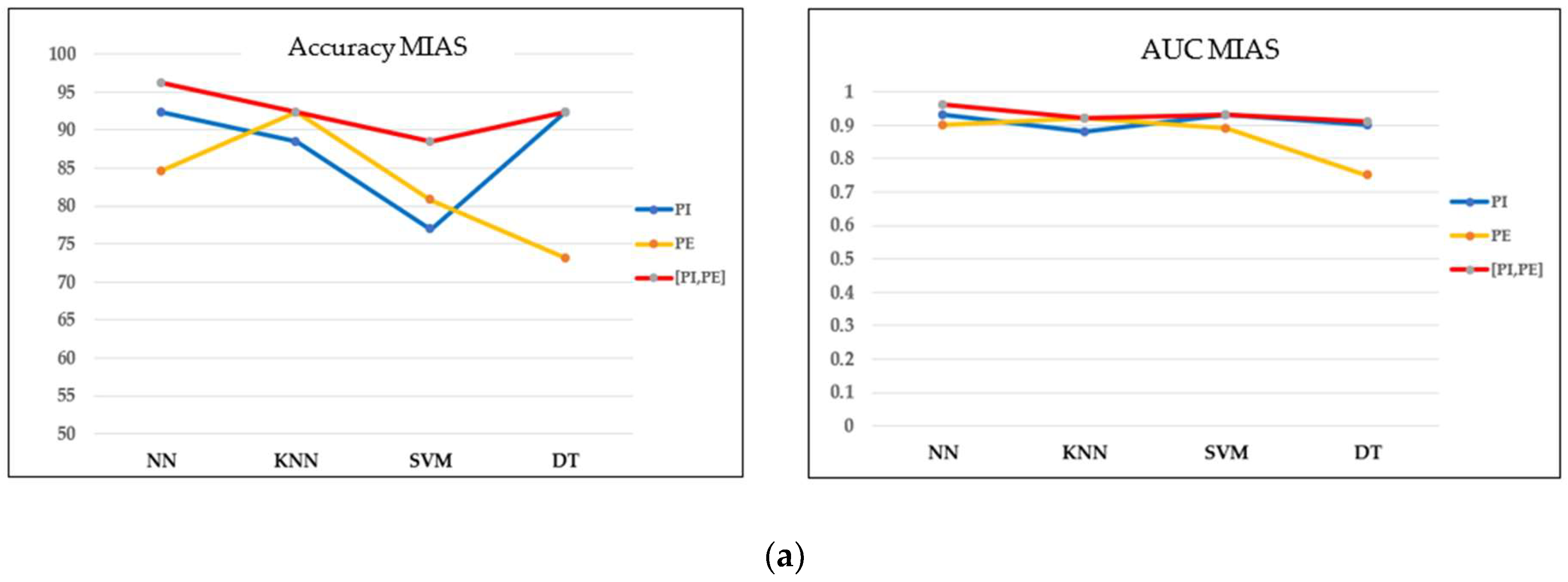

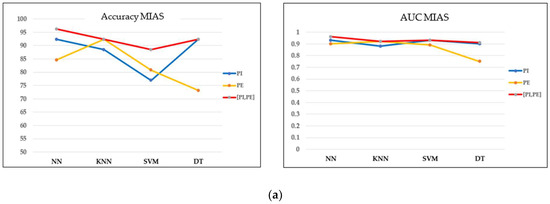

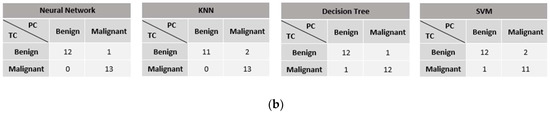

3.3. Performance of Machine Learning Models

Based on the optimal filtering level in both datasets, the performances of four classifiers were evaluated and compared in terms of accuracy, the AUC, and the confusion matrix. Referring to the MIAS dataset in Figure 10a,b, most models perform well on concatenating features with greater than 0.9 AUC and a maximum of three misclassified cases in the confusion matrix. Moreover, the accuracy obtained is greater than 90%, except for the SVM model. As this dataset contains three different types of densities, particularly when the breast density is high, the microcalcifications could be obscured by the dense tissues, making it more challenging to classify them. These findings align with the results reported by [43], where the SVM accuracy drops because of the greyscale image’s brightness. On the other hand, NN indicated the best performance model for this dataset, with the highest accuracy and AUC and only one false negative (FN) case in the confusion matrix.

Figure 10.

Performance comparison of machine learning classifiers based on the optimal filter (20%) in the MIAS dataset. TC = true class and PC = predicted class. (a) Accuracy and AUC of classifiers. (b) Confusion matrix of concatenating features.

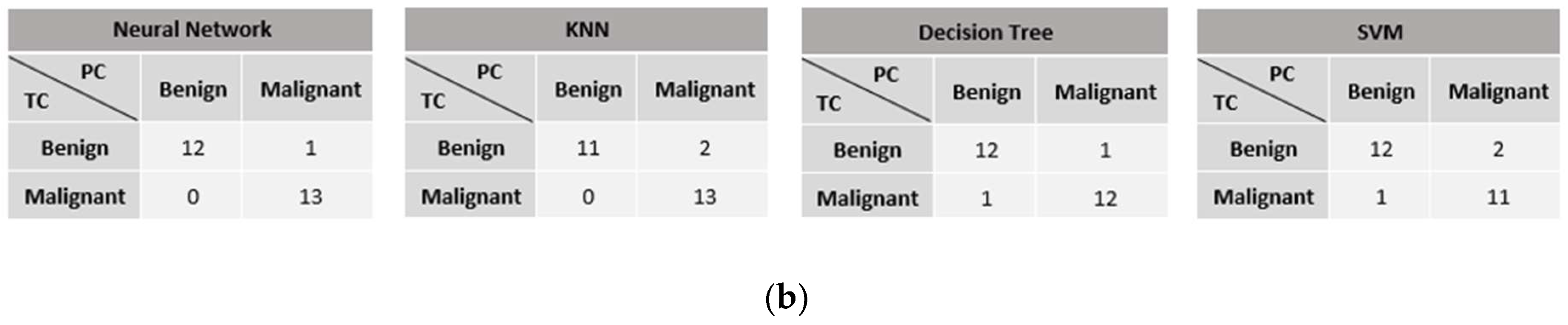

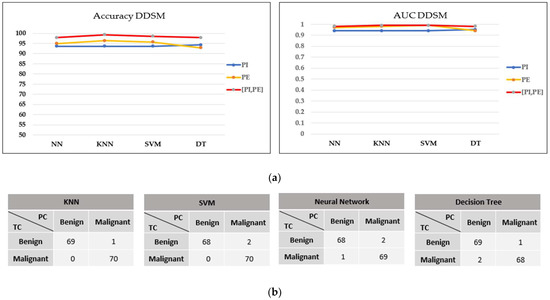

In contrast, all models in the DDSM dataset exhibit outstanding classification performance on concatenating features, with greater than 95% accuracy, greater than 0.97 AUC, and a maximum of three misclassified cases in the confusion matrix, as shown in Figure 11a,b. The KNN model performed the best in this dataset, with the highest accuracy and only one FN result.

Figure 11.

Performance comparison of machine learning classifiers based on the optimal filter (30%) in the DDSM dataset. (a) Accuracy and AUC of classifiers. (b) Confusion matrix of concatenating features.

4. Discussion and Future Work

The literature commonly describes PHs robust against image noise [25,31]. This study demonstrates that if the PD is taken directly without any filtering on the diagram, machine learning models cannot successfully classify the vectorised topological features of microcalcification. Other than that, experimental results indicate that the performance was improved by implementing the optimal filtering level for each dataset.

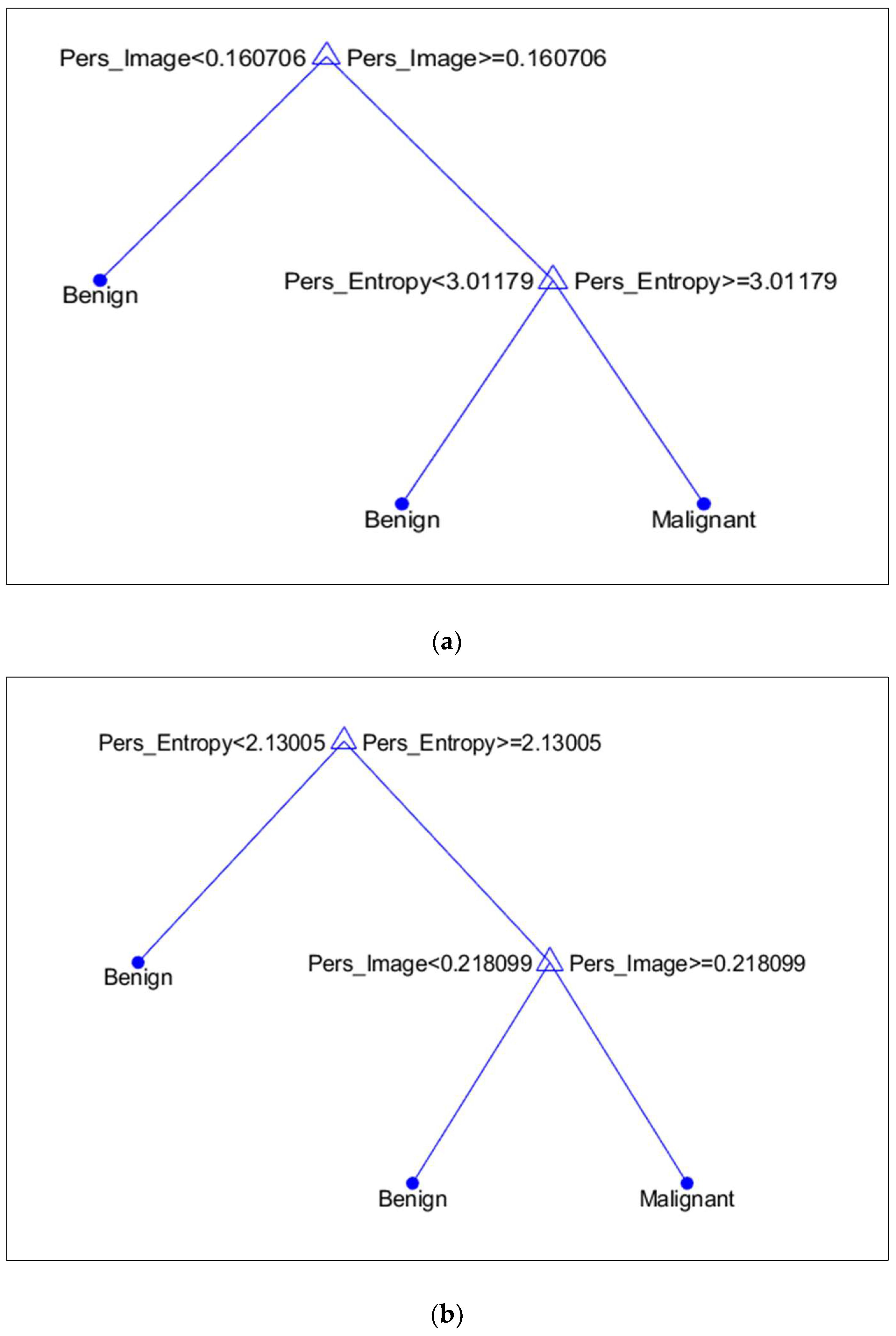

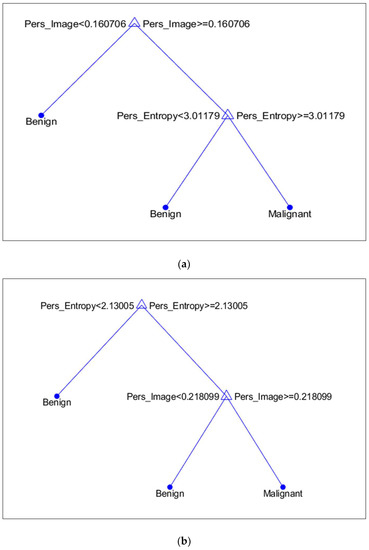

It is discovered that, in terms of topological features, PI is more prominent in the MIAS dataset, whereas PE in the DDSM dataset. Figure 12a,b illustrates the discriminant values of every feature based on the DT model. Compared to malignant microcalcifications, benign microcalcifications will have a lower value of PI and PE.

Figure 12.

Discriminant feature values based on the Decision Tree model. (a) MIAS Dataset; (b) DDSM Dataset.

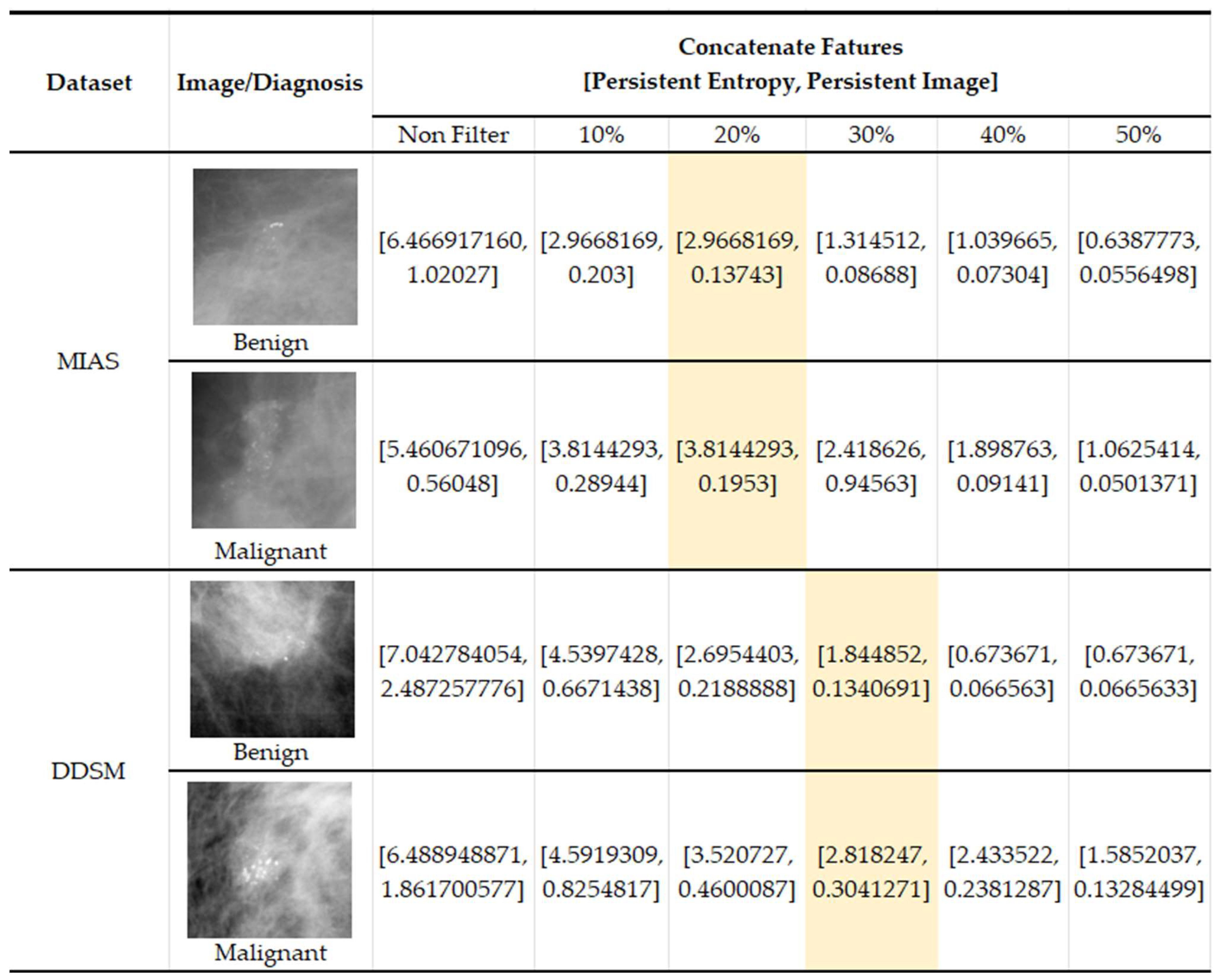

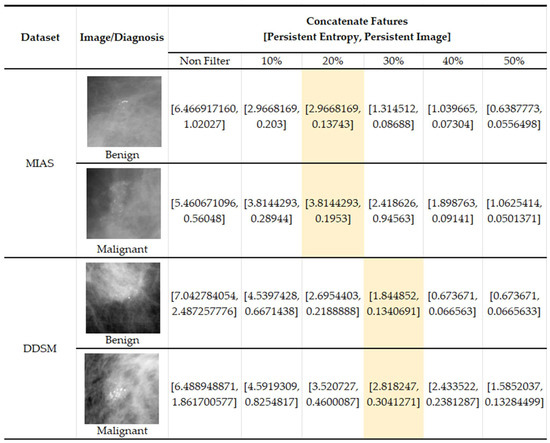

In Figure 13, we present some examples of the images from both datasets where benign images have higher values (PI and PE) in non-filter topological features than malignant features. This results in misclassification between the two classes and impacts the classification performance. Because benign microcalcifications present a long lifespan in the PD (refer to Figure 5), the significant topological features can be distinguished after filtering in the PD.

Figure 13.

Examples of images with topological feature values in the MIAS [7] and DDSM datasets [32].

PH offers some unique advantages. One of the advantages is its ability to analyse data at multiple scales, which can be particularly useful for medical images with features of different sizes and scales. By analysing the data at multiple scales, PH can capture information about features that other methods may miss. Besides, it represents a lower computational burden to the system because the classification operation is not on the image matrix but on a compact vector from the input data [23]. This study uses two topological features for each image: PE and PI features. The complexity of a CAD system increases rapidly with the number of features used [44]. Furthermore, the proposed filtering procedure does not influence the image quality, because the process only operates on the PD, as opposed to some preprocessing methods, such as linear filtering, which can cause degradation of edges and image details, giving the images a blurred effect [13].

Comparative Analysis

Several existing state-of-the-art non-PH models used to conduct experiments with the same MIAS and DDSM datasets were chosen for performance comparison, as shown in Table 7. Based on this table, two types of images were applied for both datasets: greyscale and binary images. Greyscale indicates that the classification process is performed straight from the original image from the dataset, consisting of 256 pixel values. Meanwhile, the classification of binary images indicates that the original images undergo a segmentation process to produce a black-and-white image, where pixel values of 0 (black) are considered as the background region and pixel values of 1 (white) are considered as segmented regions of microcalcifications. However, some microcalcifications, usually malignant cases, are not clearly visible and are closely connected to background tissue, making it impossible for segmentation algorithms to obtain complete segmentation for these calcifications [45]. For that reason, the proposed methods are tested for the greyscale images so that the whole topological structure of the image can be considered.

Table 7.

Comparative analysis with other methods.

Various features have been studied in the literature to classify benign and malignant microcalcifications. Research by Fadil et al. [15] used 2D discrete wavelet transform for contrast enhancement of the microcalcifications and extracted eight textural features on the GLCM, achieving 95% accuracy and 0.92 AUC using the random forest (RF) classifier. On the other hand, Suhail et al. [46] present a way to obtain a single feature value by applying a scalable linear fisher discriminant analysis (LDA) approach, achieving up to 96% accuracy with 0.95 AUC using SVM. Mahmood et al. [16] employed machine learning integrated with the radiomic approach to classifying the textural and statistical features, attaining 98% accuracy and 0.90 AUC. Apart from that, Gowri et al. [17] also used textural features with fractal analysis and obtained 96.3% accuracy. Melekoodappattu et al. [5] proposed a hybrid extreme machine learning classifier consisting of the extreme learning machine (ELM) with the fruitfly optimization algorithm (ELM-FOA) along with glowworm swarm optimization (GSO). The preprocessing stage was conducted using the Wiener filter and enhanced using contrast-limited adaptive histogram equalisation (CLAHE). Here, 44 features are extracted using the speed up robust feature (SURF), Gabor filter, and GLCM, and achieved 99.15% accuracy.

In addition, topological features have also been studied by [47,48] for modelling and classification of microcalcifications, with promising results. To the best of our knowledge, our proposed method is the first work using a persistent diagram as a filtering approach as well as PH features to tackle the challenge of discriminating between benign and malignant microcalcifications. This method achieves 96.2% accuracy with 0.96 AUC for the MIAS dataset and 99.3% accuracy with 0.99 AUC for the DDSM dataset. This is comparable to other state-of-the-art non-PH approaches developed to solve the same problem.

Although the performance is satisfactory, this study has several limitations. First, this study used a limited number of images. Hence, additional testing must be conducted on mammographic images collected from hospitals or population-screening projects. Increasing the number of images would permit a more in-depth assessment and can prevent bias in the data. Second, external validation of model performance was not conducted. Doing so could have further demonstrated its generalisability. Third, the performance of the model is not compared to deep learning approaches owing to the small amount of data. For future studies, the persistent homology features can be extended to deep learning architectures and potentially achieve even better performance and robustness of image classification algorithms, particularly in the context of medical imaging, where complex structures and patterns are often present. Lastly, the choice of preprocessing steps, such as image enhancement or denoising, can also affect the resulting persistent homology features and introduce bias into the model. It is important to carefully consider the appropriate preprocessing steps for the specific dataset and to evaluate the impact of these steps on model performance.

5. Conclusions

In conclusion, this study presents an approach to classifying microcalcifications in mammogram images using PH and machine learning models. This study demonstrates that machine learning models successfully classify microcalcifications using appropriate PH filtering levels and features. In addition, filtering PD with concatenate features improves the classification accuracy of microcalcifications.

Integrating PH-based machine learning into clinical practice for breast cancer diagnosis can offer several potential benefits for patient care. The topological features extracted by PH can provide additional information that may not be captured by traditional image analysis methods, leading to a more accurate diagnosis. This, in turn, may facilitate early detection of breast cancer, ultimately reducing the number of unnecessary biopsies for patients. Additionally, accurate machine learning models can enable faster and more effective analysis of mammogram images, easing the workload of radiologists and other healthcare providers.

Author Contributions

Conceptualization, A.A.M., M.A.A., F.A.R. and M.S.M.N.; methodology, A.A.M., M.A.A., M.S.M.N. and F.A.R.; software, A.A.M.; validation, A.A.M., M.A.A., M.S.M.N., F.A.R. and R.M.; formal analysis, A.A.M.; data curation, A.A.M. and R.M.; writing—original draft preparation, A.A.M.; writing—review and editing, A.A.M., M.A.A., M.S.M.N., F.A.R. and N.F.S.Z.; visualization, A.A.M., M.A.A. and N.F.S.Z.; supervision, M.A.A., M.S.M.N. and F.A.R.; funding acquisition, M.A.A. and F.A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Universiti Kebangsaan Malaysia (UKM) through internal grant (GUP-2021-046), Tabung Agihan Penyelidikan (TAP-K020061) and Dana Pecutan Penerbitan (PP-FST-2023).

Institutional Review Board Statement

There are no ethical implications on the public datasets.

Informed Consent Statement

Patient consent was waived owing to the implementation in public datasets.

Data Availability Statement

The public datasets are available in the publicly accessible repository; refer to [7] for the MIAS and [32] DDSM datasets.

Acknowledgments

We gratefully acknowledge Universiti Kebangsaan Malaysia for internal grant (GUP-2021-046), Tabung Agihan Pendapatan (TAP-K020061) and Dana Pecutan Penerbitan (PP-FST-2023); without which the research would not have been possible. A.A.M. is thankful to the Ministry of Higher Education (MOHE) of Malaysia and Universiti Teknologi MARA (UiTM) for the full scholarship (SLAB). The authors would like to thank the anonymous referees for their expert advice and suggestions, which helped to improve this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ferlay, J.; Colombet, M.; Soerjomataram, I.; Parkin, D.M.; Piñeros, M.; Znaor, A.; Bray, F. Cancer Statistics for the Year 2020: An Overview. Int. J. Cancer 2021, 149, 778–789. [Google Scholar] [CrossRef] [PubMed]

- Vy, V.P.T.; Yao, M.M.-S.; Le, N.Q.K.; Chan, W.P. Machine Learning Algorithm for Distinguishing Ductal Carcinoma in Situ from Invasive Breast Cancer. Cancers 2022, 14, 2437. [Google Scholar] [CrossRef] [PubMed]

- Ramadan, S.Z. Methods Used in Computer-Aided Diagnosis for Breast Cancer Detection Using Mammograms: A Review. J. Healthc. Eng. 2020, 2020, 9162464. [Google Scholar] [CrossRef]

- Htay, M.N.N.; Donnelly, M.; Schliemann, D.; Loh, S.Y.; Dahlui, M.; Somasundaram, S.; Tamin, N.S.B.I.; Su, T.T. Breast Cancer Screening in Malaysia: A Policy Review. Asian Pac. J. Cancer Prev. 2021, 22, 1685. [Google Scholar] [CrossRef] [PubMed]

- Melekoodappattu, J.G.; Subbian, P.S.; Queen, M.P.F. Detection and Classification of Breast Cancer from Digital Mammograms Using Hybrid Extreme Learning Machine Classifier. Int. J. Imaging Syst. Technol. 2021, 31, 909–920. [Google Scholar] [CrossRef]

- Oliver, A.; Torrent, A.; Lladó, X.; Tortajada, M.; Tortajada, L.; Sentís, M.; Freixenet, J.; Zwiggelaar, R. Automatic Microcalcification and Cluster Detection for Digital and Digitised Mammograms. Knowl. Based Syst. 2012, 28, 68–75. [Google Scholar] [CrossRef]

- Suckling, J. The Mammographic Image Analysis Society Digital Mammogram Database. Exerpta Med. Int. Congr. 1994, 1069, 375–386. [Google Scholar]

- Azam, A.S.B.; Malek, A.A.; Ramlee, A.S.; Suhaimi, N.D.S.M.; Mohamed, N. Segmentation of Breast Microcalcification Using Hybrid Method of Canny Algorithm with Otsu Thresholding and 2D Wavelet Transform. In Proceedings of the 2020 10th IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Penang, Malaysia, 21–22 August 2020; pp. 91–96. [Google Scholar]

- Dabass, J.; Arora, S.; Vig, R.; Hanmandlu, M. Segmentation Techniques for Breast Cancer Imaging Modalities-A Review. In Proceedings of the 2019 9th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 10–11 January 2019; pp. 658–663. [Google Scholar]

- Banumathy, D.; Khalaf, O.I.; Romero, C.A.T.; Raja, P.V.; Sharma, D.K. Breast Calcifications and Histopathological Analysis on Tumour Detection by CNN. Comput. Syst. Sci. Eng. 2023, 44, 595–612. [Google Scholar] [CrossRef]

- Roty, S.; Wiratkapun, C.; Tanawongsuwan, R.; Phongsuphap, S. Analysis of Microcalcification Features for Pathological Classification of Mammograms. In Proceedings of the 2017 10th Biomedical Engineering International Conference (BMEiCON), Hokkaido, Japan, 31 August–2 September 2017; pp. 1–5. [Google Scholar]

- Fan, L.; Zhang, F.; Fan, H.; Zhang, C. Brief Review of Image Denoising Techniques. Vis. Comput. Ind. Biomed. Art 2019, 2, 7. [Google Scholar] [CrossRef]

- Krishnan, A. An Overview of Mammogram Noise and Denoising Techniques. Int. J. Eng. Res. Gen. Sci. 2016, 4, 557–563. [Google Scholar]

- Patil, R.S.; Biradar, N. Automated Mammogram Breast Cancer Detection Using the Optimized Combination of Convolutional and Recurrent Neural Network. Evol. Intell. 2020, 14, 1459–1474. [Google Scholar] [CrossRef]

- Fadil, R.; Jackson, A.; El Majd, B.A.; El Ghazi, H.; Kaabouch, N. Classification of Microcalcifications in Mammograms Using 2D Discrete Wavelet Transform and Random Forest. In Proceedings of the 2020 IEEE International Conference on Electro Information Technology (EIT), Chicago, IL, USA, 31 July–1 August 2020; pp. 353–359. [Google Scholar]

- Mahmood, T.; Li, J.; Pei, Y.; Akhtar, F.; Imran, A.; Yaqub, M. An Automatic Detection and Localization of Mammographic Microcalcifications ROI with Multi-Scale Features Using the Radiomics Analysis Approach. Cancers 2021, 13, 5916. [Google Scholar] [CrossRef] [PubMed]

- Gowri, V.; Valluvan, K.R.; Vijaya Chamundeeswari, V. Automated Detection and Classification of Microcalcification Clusters with Enhanced Preprocessing and Fractal Analysis. Asian Pac. J. Cancer Prev. 2018, 19, 3093–3098. [Google Scholar] [CrossRef] [PubMed]

- Pun, C.S.; Lee, S.X.; Xia, K. Persistent-Homology-Based Machine Learning: A Survey and a Comparative Study. Artif. Intell. Rev. 2022, 55, 5169–5213. [Google Scholar] [CrossRef]

- Choe, S.; Ramanna, S. Cubical Homology-Based Machine Learning: An Application in Image Classification. Axioms 2022, 11, 112. [Google Scholar] [CrossRef]

- Asaad, A.; Ali, D.; Majeed, T.; Rashid, R. Persistent Homology for Breast Tumor Classification Using Mammogram Scans. Mathematics 2022, 10, 4039. [Google Scholar] [CrossRef]

- Kusano, G.; Fukumizu, K.; Hiraoka, Y. Kernel Method for Persistence Diagrams via Kernel Embedding and Weight Factor. J. Mach. Learn. Res. 2018, 18, 1–41. [Google Scholar]

- Moroni, D.; Pascali, M.A. Learning Topology: Bridging Computational Topology and Machine Learning. Pattern Recognit. Image Anal. 2021, 31, 443–453. [Google Scholar] [CrossRef]

- Avilés-Rodríguez, G.J.; Nieto-Hipólito, J.I.; Cosío-León, M.D.L.Á.; Romo-Cárdenas, G.S.; Sánchez-López, J.D.D.; Radilla-Chávez, P.; Vázquez-Briseño, M. Topological Data Analysis for Eye Fundus Image Quality Assessment. Diagnostics 2021, 11, 1322. [Google Scholar] [CrossRef]

- Adams, H.; Emerson, T.; Kirby, M.; Neville, R.; Peterson, C.; Shipman, P.; Chepushtanova, S.; Hanson, E.; Motta, F.; Ziegelmeier, L. Persistence Images: A Stable Vector Representation of Persistent Homology. J. Mach. Learn. Res. 2017, 18, 1–35. [Google Scholar]

- Teramoto, T.; Shinohara, T.; Takiyama, A. Computer-Aided Classification of Hepatocellular Ballooning in Liver Biopsies from Patients with NASH Using Persistent Homology. Comput. Methods Programs Biomed. 2020, 195, 105614. [Google Scholar] [CrossRef] [PubMed]

- Oyama, A.; Hiraoka, Y.; Obayashi, I.; Saikawa, Y.; Furui, S.; Shiraishi, K.; Kumagai, S.; Hayashi, T.; Kotoku, J. Hepatic Tumor Classification Using Texture and Topology Analysis of Non-Contrast-Enhanced Three-Dimensional T1-Weighted MR Images with a Radiomics Approach. Sci. Rep. 2019, 9, 8764. [Google Scholar] [CrossRef] [PubMed]

- Leykam, D.; Rondón, I.; Angelakis, D.G. Dark Soliton Detection Using Persistent Homology. Chaos Interdiscip. J. Nonlinear Sci. 2022, 32, 73133. [Google Scholar] [CrossRef]

- Edwards, P.; Skruber, K.; Milićević, N.; Heidings, J.B.; Read, T.A.; Bubenik, P.; Vitriol, E.A. TDAExplore: Quantitative Analysis of Fluorescence Microscopy Images through Topology-Based Machine Learning. Patterns 2021, 2, 100367. [Google Scholar] [CrossRef]

- Nishio, M.; Nishio, M.; Jimbo, N.; Nakane, K. Homology-Based Image Processing for Automatic Classification of Histopathological Images of Lung Tissue. Cancers 2021, 13, 1192. [Google Scholar] [CrossRef]

- Rammal, A.; Assaf, R.; Goupil, A.; Kacim, M.; Vrabie, V. Machine Learning Techniques on Homological Persistence Features for Prostate Cancer Diagnosis. BMC Bioinform. 2022, 23, 476. [Google Scholar] [CrossRef]

- Conti, F.; Moroni, D.; Pascali, M.A. A Topological Machine Learning Pipeline for Classification. Mathematics 2022, 10, 3086. [Google Scholar] [CrossRef]

- Heath, M.; Bowyer, K.; Kopans, D.; Moore, R.; Kegelmeyer, P. The Digital Database for Screening Mammography. In Proceedings of the Fifth International Workshop on Digital Mammography, Toronto, ON, Canada, 20–23 July 2000; pp. 212–218. [Google Scholar]

- Beksi, W.J.; Papanikolopoulos, N. 3D Region Segmentation Using Topological Persistence. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejon, Korea, 9–14 October 2016; pp. 1079–1084. [Google Scholar]

- Otter, N.; Porter, M.A.; Tillmann, U.; Grindrod, P.; Harrington, H.A. A Roadmap for the Computation of Persistent Homology. EPJ Data Sci. 2017, 6, 1–38. [Google Scholar] [CrossRef]

- Kramár, M.; Levanger, R.; Tithof, J.; Suri, B.; Xu, M.; Paul, M.; Schatz, M.F.; Mischaikow, K. Analysis of Kolmogorov Flow and Rayleigh–Bénard Convection Using Persistent Homology. Physica D 2016, 334, 82–98. [Google Scholar] [CrossRef]

- Garin, A.; Tauzin, G. A Topological “reading” Lesson: Classification of MNIST Using TDA. In Proceedings of the 2019 18th IEEE International Conference on Machine Learning and Applications, ICMLA 2019, Boca Raton, FL, USA, 16–19 December 2019; pp. 1551–1556. [Google Scholar]

- Pun, C.S.; Xia, K.; Lee, S.X. Persistent-Homology-Based Machine Learning and Its Applications—A Survey. arXiv 2018, arXiv:1811.00252. [Google Scholar] [CrossRef]

- Chazal, F.; Michel, B. An Introduction to Topological Data Analysis: Fundamental and Practical Aspects for Data Scientists. Front. Artif. Intell. 2021, 4, 1–28. [Google Scholar] [CrossRef] [PubMed]

- Atienza, N.; Gonzalez-Díaz, R.; Soriano-Trigueros, M. On the Stability of Persistent Entropy and New Summary Functions for Topological Data Analysis. Pattern Recognit. 2020, 107, 107509. [Google Scholar] [CrossRef]

- Moon, C.; Li, Q.; Xiao, G. Using Persistent Homology Topological Features to Characterize Medical Images: Case Studies on Lung and Brain Cancers. arXiv 2020, arXiv:2012.12102. [Google Scholar]

- Jiao, Y.; Du, P. Performance Measures in Evaluating Machine Learning Based Bioinformatics Predictors for Classifications. Quant. Biol. 2016, 4, 320–330. [Google Scholar] [CrossRef]

- Kaji, S.; Sudo, T.; Ahara, K. Cubical Ripser: Software for Computing Persistent Homology of Image and Volume Data. arXiv 2020, arXiv:2005.12692. [Google Scholar]

- Turkes, R.; Nys, J.; Verdonck, T.; Latre, S. Noise Robustness of Persistent Homology on Greyscale Images, across Filtrations and Signatures. PLoS ONE 2021, 16, e0257215. [Google Scholar] [CrossRef]

- Sakka, E.; Prentza, A.; Koutsouris, D. Classification Algorithms for Microcalcifications in Mammograms (Review). Oncol. Rep. 2006, 15, 1049–1055. [Google Scholar] [CrossRef]

- Temmermans, F.; Jansen, B.; Willekens, I.; Van de Casteele, E.; Deklerck, R.; Schelkens, P.; De Mey, J. Classification of Microcalcifications Using Micro-CT. In Proceedings of the Applications of Digital Image Processing XXXVI, San Diego, CA, USA, 26–29 August 2013; Tescher, A.G., Ed.; SPIE: Bellingham, WA, USA, 2013; Volume 8856. [Google Scholar]

- Suhail, Z.; Denton, E.R.E.; Zwiggelaar, R. Classification of Micro-Calcification in Mammograms Using Scalable Linear Fisher Discriminant Analysis. Med. Biol. Eng. Comput. 2018, 56, 1475–1485. [Google Scholar] [CrossRef]

- Chen, Z.; Strange, H.; Oliver, A.; Denton, E.R.E.; Boggis, C.; Zwiggelaar, R. Topological Modeling and Classification of Mammographic Microcalcification Clusters. IEEE Trans. Biomed. Eng. 2015, 62, 1203–1214. [Google Scholar] [CrossRef]

- Strange, H.; Chen, Z.; Denton, E.R.E.; Zwiggelaar, R. Modelling Mammographic Microcalcification Clusters Using Persistent Mereotopology. Pattern Recognit. Lett. 2014, 47, 157–163. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).