Simple Summary

Three-dimensional models of tumor vascular networks are of significant importance for in vitro and in silico investigations of, for example, the efficiency of anti-cancer drugs in an early stage of clinical transition and can be potentially used for the development of in vitro systems as 3D-printable vascular networks to facilitate personalized medicine and randomized controlled clinical trials. In this work, histologic slices of a human pancreatic tumor are used as examples to establish an algorithm-based method that enables the reconstruction of a 3D vascular network model. The advantages of this method are high resolution and accuracy concerning the characteristics of the vascular network (e.g., density, trajectory of vessels).

Abstract

For reliable in silico or in vitro investigations in, for example, biosensing and drug delivery applications, accurate models of tumor vascular networks down to the capillary size are essential. Compared to images acquired with conventional medical imaging techniques, digitalized histological tumor slices have a higher resolution, enabling the delineation of capillaries. Volume rendering procedures can then be used to generate a 3D model. However, the preparation of such slices leads to misalignments in relative slice orientation between consecutive slices. Thus, image registration algorithms are necessary to re-align the slices. Here, we present an algorithm for the registration and reconstruction of a vascular network from histologic slices applied to 169 tumor slices. The registration includes two steps. First, consecutive images are incrementally pre-aligned using feature- and area-based transformations. Second, using the previous transformations, parallel registration for all images is enabled. Combining intensity- and color-based thresholds along with heuristic analysis, vascular structures are segmented. A 3D interpolation technique is used for volume rendering. This results in a 3D vascular network with approximately 400–450 vessels with diameters down to 25–30 µm. A delineation of vessel structures with close distance was limited in areas of high structural density. Improvement can be achieved by using images with higher resolution and or machine learning techniques.

1. Introduction

Three-dimensional models of vascular networks have the potential to improve the accuracy of computational and experimental investigations in many biomedical fields. Some of these are biosensing, organ-on-a-chip (OOAC), tissue engineering and scaffolding, and development of anti-cancer drugs (ACDs) in cancer therapy. In biosensing, vasculature models are necessary for evaluation of the sensors’ efficiency both in silico [1] and in vitro [2]. However, accurate vasculature models would increase the reliability of these results. Similarly, for OOACs, microfluidic systems are built to simulate the physiology of human organs [3]. Current OOACs allow for the use of 3D structures [4] for which, in general, vascularization is still needed and part of ongoing research [5]. For the development of ACDs, accurate tumor models are needed to evaluate their effectiveness in early-stage development [6]. The ACDs’ development also includes approaches such as magnetic drug targeting to realize more efficient drug delivery [7,8]. This approach can be combined with other tumor therapies to increase their effectiveness [9,10,11,12,13].

Animal models deliver the necessary tissue function but also have limitations (i.e., the comparatively small number of specimens that can be investigated and high variability in the experimental conditions, e.g., due to biodynamic signatures) [14]. In addition, it is recommended to avoid animal experiments whenever possible [15]; however, reliable alternatives exist only for macroscopic structures [16] that do not mimic human tissue function. In tissue engineering, 3D models of vascular networks are already used for the design of scaffolds [17]. The models are usually based on artificially generated data [18] or on medical imaging data (e.g., from micro-computed tomography (µCT)) [19]. The artificially generated models include approaches that allow reverse-engineering; however, they still need rigorous experimental validation. Methods such as CT, µCT, and two-photon microscopy allow imaging of vasculature without destroying the tissue, and spatial encoding is available. As these methods are well established, the model acquisition is relatively fast. Furthermore, these methods allow imaging in vivo. Explantation of the tumor is not necessary. However, the resolution of CT and µCT images is too low (CT: approx. 1 mm, µCT: approx. 5 µm) to sufficiently delineate capillaries [19,20,21]. For two-photon microscopy, the resolution is around 100 nm [22], but the tissue penetration depth is comparatively low (approx. 1 mm). This method was also used for the investigation of in vivo drug delivery [23].

In clinical practice, reliable treatment planning is of paramount importance. Hence, to further clinical translation of the above-mentioned approaches, accurate in silico investigation based on realistic vascular network models plays a key role. In this work, histologic slices of a human pancreatic tumor are used as examples to establish a new approach of building a virtual 3D vascular network model. The advantages of this method compared to the ones mentioned above are high resolution, accuracy concerning the characteristics of the vascular network (e.g., density, trajectory of vessels), and a potential use for in vitro systems as 3D-printable vascular networks to facilitate personalized medicine and randomized controlled clinical trials. Such 3D networks can enable precise simulations of blood flow dynamics, aiding in the development of targeted treatments and predicting patient-specific responses.

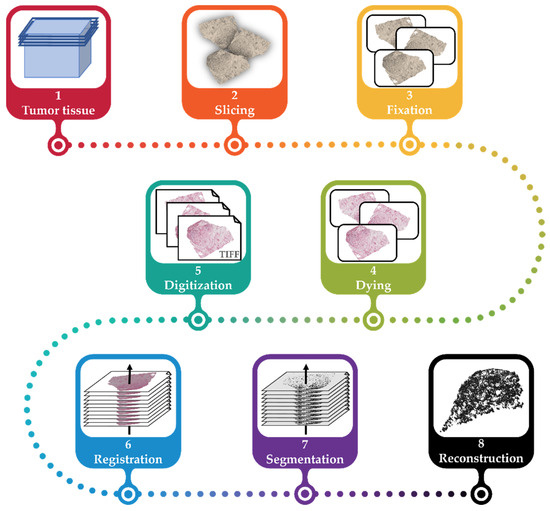

This workflow for the generation of a vascular network model of a tumor using histological images presented in this work consists of several steps: slicing a tumor, fixation of slices on slides, staining the structures of interest, digitization of slides, registration of the digitalized slides (i.e., alignment to the same coordinate system), image segmentation of the structures of interest (i.e., vessels), and reconstruction of the registered and segmented images to a 3D model (cf. Figure 1).

Figure 1.

The general workflow for the reconstruction of the vascular network. The tumor tissue is explanted and embedded in paraffin wax (1), sliced into 2.5 µm slices (2), fixated in 3.5% formaldehyde, and placed on slides (3). The tissue on each slide is then immunohistochemically stained (4) and then digitized (5). The resulting images are registered (6). Using segmentation, vascular structures are extracted (7) and reconstructed for the vascular model of the initial tumor (8).

The major challenges for the generation of a 3D model from histologic images compared to state-of-the-art procedures are the registration of high-resolution and highly repetitive features, segmentation robust to artifacts from sample preparation, and implementation that allows for upscaling of the number of images used. To overcome these challenges, this work uses a combination of area- and feature-based registration, and segmentation including intensity- and color-based thresholds and heuristic analysis. Here, we present as an example the registration and reconstruction of a vascular network from histologic slices applied to 169 tumor slices. The algorithms are discussed, and results are compared to state-of-the-art registration (see Table 2), segmentation, and reconstruction approaches in Section 3.

2. Materials and Methods

The general workflow for the generation of the 3D vascular network is shown in Figure 1. Steps (1) to (5) are described in Section 2.1, step (6) is described in Section 2.2 and Section 2.3, step (7) in Section 2.4, and step (8) in Section 2.5.

2.1. Sample Preparation

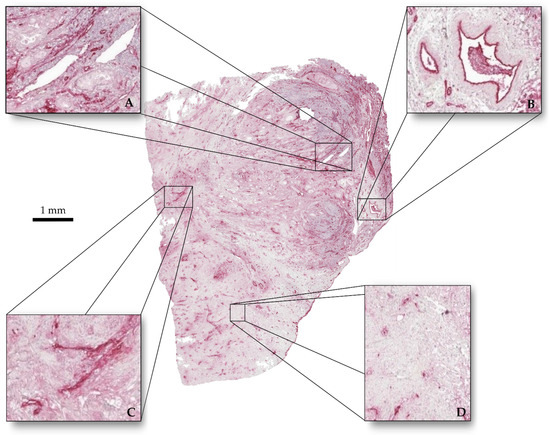

After informed consent of the patient and with permission of the local IRB (EK206/09), a piece of a human pancreatic ductal adenocarcinoma was used after resection. It was fixed in a 3.5% formaldehyde solution (Otto-Fischar GmbH, Saarbrücken, Germany). The tumor was embedded in paraffin wax and cut in 169 slices of 2.5 µm thickness each using a microtome (HM 340 E, Thermo Fisher Scientific Inc., Waltham, MA, USA). Slices were manually placed on slides (Starforst, Engelbrecht Medizin- und Labortechnik, Edermünde, Germany) and dried overnight at 56 °C. The slices were immunohistochemically stained (Figure 2) with the antibody “von Willebrandt-factor” (DakoCytomation, Glostrup, Denmark) using the ZytoChem Plus AP Polymer System (Mouse/Rabbit) (Zytomed Systems, Berlin, Germany) and Hematoxylin (Zytomed Systems, Berlin, Germany). Then, the slides were covered with Vitro-Clud (R. Langenbrinck GmbH, Emmendingen, Germany).

Figure 2.

Tumor slice immunohistochemically stained. (A) Vessels with clear outlines and homogeneous interiors. (B) Vessels with clear outline and perturbed interiors. (C) Vessels with perturbed outlines and no interiors. (D) Stained cell nuclei.

The slides were digitalized using a microscope (Axio Imager.Z2, Carl Zeiss Microscopy Deutschland GmbH, Jena, Germany). The slide images were converted and cropped to a size of 13020 × 10422 px², resulting in images with a pixel size of 0.5 µm.

2.2. Preprocessing

Prior to the registration, the images were preprocessed to achieve better registration results. First, the background of the images (i.e., the area of the image not showing any tissue) was replaced with white. This is necessary, as the registration algorithm (see Section 2.3) requires a white background. Additionally, a white margin (12.5% of the image size) was added to each image. This made the image registration more robust against losing image information through rotation (cf. Section 2.3 on registration). Second, the imregtform function of Matlab (The MathWorks, Inc., Natick, MA, USA) was used to perform a so-called similarity transformation between consecutive images. This similarity transformation included rotation, translation, reflection, and scaling of the images. However, it did not include elastic transformation, which was performed in a later step (see Section 2.3).

One image preprocessing took only few seconds and could be computed in parallel.

All computations were performed with computing resources granted by RWTH Aachen University. The RWTH Aachen University High-Performance Cluster provides access to computational nodes using 2 Intel Xeon Platinum 8160 Processors “SkyLake” with 2.1 GHz, 24 cores each. Each node hast 48 cores and 192 GB of main memory.

2.3. Registration

Because of the slides’ preparation (see chapter 2.1), a misalignment of the images was inevitable. Therefore, an image registration was required, which transfers the images into the same coordinate system. For the registration, the open-source software package Fiji [24] was used.

The registration algorithm presented here is based on the works of Wang et al. [25,26], which was available as a plug-in for Fiji (version 1.45) called ImproveCWR. We updated the bUnwarpJ version 3 [27,28] within this plugin in order to process images with larger file sizes. We also adjusted the ImproveCWR plugin to be able to save the transformation parameters of each registration.

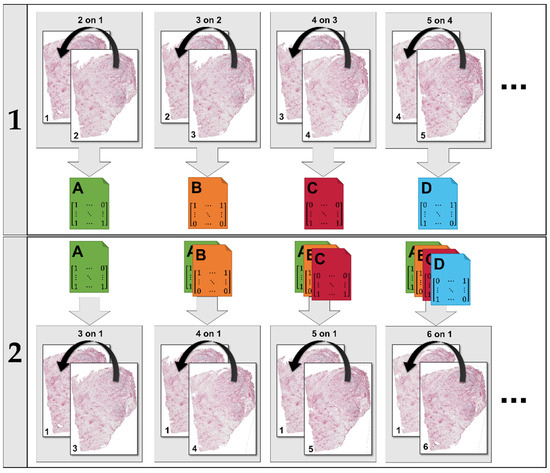

As registering one image after the other would have been very time consuming when working with large numbers of images, we chose a two-step process that parallelized the registration, as described in Figure 3.

Figure 3.

The registration proceeds in two steps. In the first step, each image is aligned to the previous one (i.e., 2 to 1, 3 to 2, etc.). Each of these registrations yield a transformation that can be saved to file (A, B, etc.). In the second step, each image is aligned to the first one in the set using concatenations of the transformations saved in the first step. All calculations within a step are performed in parallel.

The adjusted ImproveCWR registration algorithm was validated using the training datasets of the ANHIR challenge [29,30]. This provides 230 histological image pairs with annotated image features (i.e., SIFT features). Using these registered images, the relative target registration error (rTRE) for every annotated image feature l in every image pair (i,j) was calculated as follows:

with feature position x, the transformed feature position , the diagonal of the image dj, and the Euclidian norm . Then, the median value of all features in a pair of images and the average of all medians were calculated.

The robustness Ri is the number of improved features compared to the total number of features in the set Li in the image i. To calculate Ri, the relative initial registration error (rIRE) was determined as follows:

Using rTRE and rIRE, Ri can be calculated:

The average Ri over all images was calculated.

As the dataset of the ANHIR challenge includes a variety of tissues, we created a subset of images including only images that are similar to the tissue used in this work and performed a separate validation as described above (Equations (1)–(3)). We calculated the average Ri and average median rTRE for the subset of 90 image pairs and the complete set of 230 image pairs.

2.4. Segmentation

The images were converted to grayscale as the segmentation algorithm proposed in this section only relies on the pixel intensities. The brightness for each image was homogenized using the contrast-limited adaptive histogram equalization (CLAHE) algorithm [31]. Then, a median filter was used to reduce noise (from the cell nuclei).

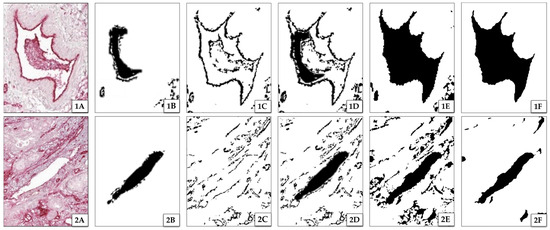

Starting from here, the segmentation was performed as described in Figure 4, consisting of five steps: (i) delineation of vessel interiors, (ii) delineation of vessel contours, (iii) removal of unwanted structures, (iv) fusing contour and interior information, and (v) filling of remaining gaps.

Figure 4.

Results of segmentation steps for two image excerpts 1 and 2. (A) shows excerpts with one vascular structure each. (1A) has a well-defined contour with a perturbed interior, whereas (2A) has a clear interior with a perturbed contour. (B) shows the results of extracted interiors of vessels, and (C) shows the results of their extracted contours. (D) is a fusion of (B) and (C), indicating significant differences in possibly detected interiors and contours (white spaces between interior and contours). (E) shows the result of the segmentation after dilating and filling the detected vessels to their contours (see step (v)). Images (F) show the final segmentation results with applied smoothing.

- (i)

- The first step of the segmentation process was the delineation of vessel interiors (see Figure 4B, black area). These are prominent due to their bright and plain white appearance. To isolate these areas, a threshold with a cut-off value of 82.35% color intensity is used. This threshold was determined using Otsu’s method [32]. Because of the preprocessing, one cut-off value was sufficient for all images. Figure 4(1B) shows that the lumen was only partially delineated due to presence of other structures, such as cells or debris, within the vessel. However, these missed parts of the lumen will be gained by reconstruction (see below step (iii)).

- (ii)

- The second step was the delineation of the contours of the vessels in the preprocessed image (see Figure 4C). This includes the contour of vessel interiors (delineated in step one) and of collapsed vessels that do not have an interior. Because of the staining, the latter are darker compared to the surrounding tissue. Hence, the intensity of the vessel contours varies throughout the image. As the segmentation is based on thresholding, the image was rasterized and a local cut-off value was calculated for every rasterized part as follows:

- (iii)

- In the third step, unwanted structures (such as cell nuclei, megakaryocytes, or damaged tissue) were removed from the images that resulted after processing according the two previous steps. This was performed as follows:

- Pixels that were not related to vessels (bright or dark spots in the image) were removed by application of a sliding window algorithm. This algorithm removes every structure that completely fits into a region of the image with a size of pixels, where is an experimental value. If is too big, vessel structures are deleted. If is too small, the unrelated pixels might not be removed effectively. The size of depends on the and the magnification used.

- Structures in the image of damaged tissue (induced by the manual preparation of the slides) are removed by using the Moore–Neighbor tracing algorithm [33] with Jacob’s stopping criterion [34]. Usually, after segmentation, vessel interiors (delineated in step (i), Figure 4B) show continuous contours of intensely stained tissue (delineated in step (ii), Figure 4C). These contours are dilated by five pixels. Only vessel interiors that have at least 45% of a corresponding contour length are kept.

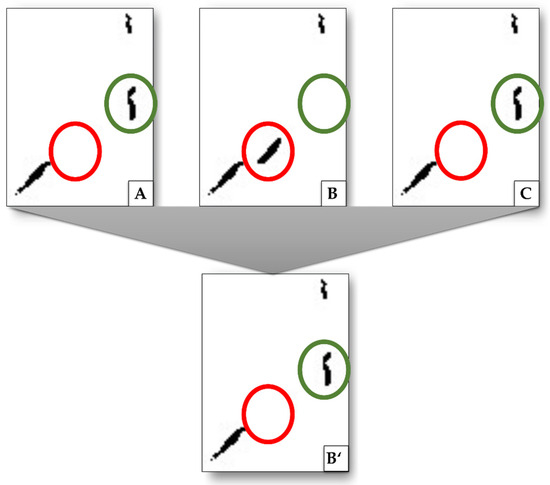

- Further removal of damaged tissue was performed by comparing vessel interiors of three consecutive images using the Moore–Neighbor tracing algorithm. Repeating structures in the images were kept, the other ones were discarded (Figure 5, red circles). Further, interiors not found in step (i) were added when they appeared in two of three images (Figure 5, green circles). To increase accuracy, these removal and addition procedures can be extended to a higher number of consecutive images that are analyzed. For both removed and added structures, a small offset up to 10 pixels of the position of the structure between two consecutive images is allowed to compensate for errors in the registration.

Figure 5. Exemplary procedure to remove structures that are related to damage artifacts and not to vessels as well as to add missing structures by interpolation. Images (A–C) show excerpts from consecutive images, (B’) is the result after (B) is compared to its next neighbors. Highlighted in green is a structure detected in image (A) and (C), but not in (B). By interpolation, this structure is added to image (B’). Highlighted in red is a structure that only appears in (B) but not in (A) or (C). This is interpreted as damage artifacts and is removed (does not appear in (B’)).

Figure 5. Exemplary procedure to remove structures that are related to damage artifacts and not to vessels as well as to add missing structures by interpolation. Images (A–C) show excerpts from consecutive images, (B’) is the result after (B) is compared to its next neighbors. Highlighted in green is a structure detected in image (A) and (C), but not in (B). By interpolation, this structure is added to image (B’). Highlighted in red is a structure that only appears in (B) but not in (A) or (C). This is interpreted as damage artifacts and is removed (does not appear in (B’)).

- (iv)

- The fourth step of the segmentation process was the fusion of images with the information on vessel contours with those of the vessel interiors (see Figure 4D). The interiors are a bit smaller than the contours, due to different cut-off values (cf. steps (i) and (ii)) in the respective segmentations.

- (v)

- The fifth step was the closing of open ends of contours to form closed contours and filling of the gaps between these closed contours and vessel interiors (see Figure 4E). The algorithm uses binary dilation [35] with a disk of radius 5 px on every pixel. If any other pixel is within the radius, the gap is closed. Filled structures were discarded if the filled area was larger than the area of the biggest vessel in the respective image. This procedure was repeated, and the maximum gap-size was doubled for each iteration. If a filling was discarded for the first time, the disc size of the last iteration was increased by 5 px for every following iteration when the filling was discarded for the second time.

For further filling of remaining gaps, contours were dilated, then filled and eroded afterwards. To prevent clustering in areas of high vessel density, the image was rasterized, and the dilation was adapted to the current raster’s vessel density.

Further refinement was performed in the 3D reconstruction.

2.5. 3D Reconstruction

Three-dimensional reconstruction was performed by interpolation of the 2D segmented images. For interpolation, every unset voxel was examined. If there were 15 voxels set in its 3 × 3 × 10 voxel neighborhood, the examined voxel was also set. Looking 10 voxels in z-direction enables the bridging of larger gaps resulting from missing image information. To close discontinued vessels resulting from inaccuracies in registration or segmentation, for every voxel its 3D neighborhood of voxels was checked again. This neighborhood is defined as a 3 × 3 × 3 voxel neighborhood around the examined voxel in the original stack. If there were less than 10 voxels identified as vessel in the original voxel neighborhood, the voxel was discarded. Both the size of the neighborhood and the value of 10 voxels are empirical values.

To achieve a smoother and in this way more natural look of vessel structures, a Gaussian filter in 3D was applied. This was followed by thresholding to receive binary images again. The smoothing also led to a more robust algorithm as remaining discontinuities in a vessel’s trajectory were eliminated.

The reconstructed 3D vessel network was qualitatively evaluated by an experienced radiologist.

3. Results and Discussion

In this work, we developed an algorithm to reconstruct a 3D vascular network from histological slices. The algorithm consists of a two-step registration based on the ImproveCWR algorithm by Wang et al. [25,26] (see Section 2.3), a five-step segmentation (see Section 2.4), and a 3D reconstruction (see Section 2.5).

The computing time for the registration, segmentation, and reconstruction of 169 consecutive slices of an example pancreatic ductal adenocarcinoma is shown in Table 1.

Table 1.

Computation time of the different tasks to reconstruct a 3D vascular network from histologic slices.

During the registration, one computational node was allocated, which then used all 48 cores, and 187 GB of main memory was allocated. For the segmentation, five computational nodes were allocated, using in total 158 cores and 750 GB of main memory. For the reconstruction, one computational node was allocated using one core and 4 GB of main memory. The total computation time of 197 min shows the benefits of the parallelization of the registration steps. If the registration were to be computed linearly on a single CPU, the runtime could be approximated to last over 66 days for the 169 slices. The total of 197 min is therefore within a reasonable time frame in a research context for the development of reliable in vitro models. This would be, however, not appropriate for, as an example, tissue analysis in a clinical setting, in which decisions are time critical.

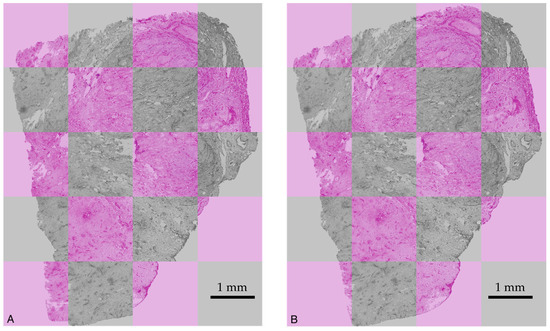

Figure 6 shows unregistered and registered images, demonstrating the performance of the registration algorithm.

Figure 6.

Two superimposed images of slices displayed in grey and in pink, respectively: (A) without registration and (B) after registration. In (A), a clear mismatch between the outlines of the slices displayed in grey and in pink is observed. In (B), the outlines of the slices coincide.

Due to manual handling of the slices for sample preparation and digitization, image artifacts were created. Therefore, registration of the digitalized slides was necessary. Table 2 summarizes registration methods reported in the literature and their applicability for high-resolution histologic slices of tumors. For registration, physical markers can be used, which are applied to the tissue before slicing [36]. This technique has the disadvantage of damaging the tissue, which can no longer be evaluated at the position of the markers. To overcome this problem, Schwier et al. [37] introduced a method in which vessels are used as markers to register the slices as they are re-occurring features on every slice. Another approach is the scale-invariant feature transform (SIFT) by Lowe [38], which extracts distinctive areas, called features, from unregistered images that can be matched to one another. This SIFT algorithm and the similar SURF algorithm [39] are used in a couple of other registration approaches [40,41,42,43,44,45,46,47]. However, feature extraction alone in histologic images of a tumor is not sufficient because of the highly repetitive patterns that are not sufficiently distinctive. Kugler et al. [48,49] also applied the extraction of features technique using normalized cross-correlation and smoothening procedures. Rigid image registration algorithms [50,51] are frequently used, enabling the rotation, displacement, and scaling of digitalized slices. However, these algorithms are not sufficient for the registration of typical histological slices, which show further damages gained during preparation (deformations, fissures, etc.). Consequently, most existing registration methods show shortcomings in registering high-resolution images of tumor slices as they cannot handle extensive damage of tissue such as shearing [40,51,52], ruptures [53], or highly repetitive patterns [38,39,41,45,47,54,55]. Although the images of the example tumor investigated in this work consist of highly repetitive patterns, which limits exclusively feature-based approaches such as SIFT and SURF [38,39,40,41,44,47,55,56], the algorithm used is suitable to register whole slide images. This is due to the combination of area- and feature-based registration. Furthermore, it does not rely on previously segmented images [54,57].

Table 2.

Registration methods reported in the literature, application field, and limitations of the method.

Table 2.

Registration methods reported in the literature, application field, and limitations of the method.

| Focused Methods | Reconstruction-Related Application | Limitations |

|---|---|---|

| Fiducial markers for point matching | ||

| Rigid registration [36] | Protocol for inducing fiducial markers | Markers locally destroy tissue and vessels |

| Affine registration [58] | 3D reconstruction specifically for muscle fibers | |

| Feature extraction and matching methods | ||

| Finding scale-invariant feature transformation SIFT/SURF: local feature descriptors [38,39,40,41,44] | Detection and matching of distinctive features invariant to the transformation | Unsuitable for highly repetitive patterns of histologic samples |

| Object tracing using SIFT [47] | Registration for images with high deformation, artifacts, and missing tissue, incorporating quality assurance | |

| Point matching improving RANSAC [56] | Faster and more robust point matching of features | |

| Non-rigid feature matching [55] | Matching and handling large deformations | |

| Rigid registration methods | ||

| Sparse-feature-based registration [42] | Handling registration of objects with different orders of magnitudes in structure size | Rigid registration is not sufficient for samples deformed by shearing |

| Rigid registration [51] | Co-registering re-stained histological images | |

| Trajectory tracing [49] | Registration and reconstruction of 2D histological images with different staining | Mainly captures vessels along z-axis |

| Non-rigid registration methods | ||

| Rigid and elastic registration [57] | Automatic sectioning, segmentation, registration, 3D reconstruction | Registration of already segmented images |

| Intensity-based registration, curvature flow [54] | Smoothed 3D reconstruction of distinct object boundaries | |

| Feature- and area-based registration [25] | Different image types including highly repetitive histological images | Computationally expensive transformations |

| B-spline [59] | 3D reconstruction preserving tissue microstructures | Not capable of handling large distortions |

| Affine registration, mutual information (MI), matrix exponential neural network (MINE) [53] | Unsupervised registration for mono- and multi-modal images | |

| Registering multi-resolution scales for ROIs [45] | Registration of histological images dealing with major artifacts | No whole-section registration |

| Intensity-based stochastic gradient descent method (SGDM), region-based convolutional neural networks [60] | Registration, segmentation, and reconstruction of multi-modal histological images, focused on nerval specimen | Unsuitable for highly repetitive patterns of histologic samples |

| Non-automatic methods | ||

| Rigid registration [50] | Manual rigid registration | Unfeasible for big data |

| Manual segmentation, rigid registration, mesh generation [52] | Direct 3D mesh generation from images | |

The results of the validation (see Section 2.3) are shown in Table 3. The quality of the presented method ranks in the middle of the earlier published methods for the complete set of images of the ANHIR challenge and for images similar to those presented in this work at the very front [30].

Table 3.

Results of the registration validation. The registration error (rTRE) and the average robustness Ri are calculated as described in Section 2.3 and compared to results from literature.

It can be concluded that the results referring to the investigation of the complete set of images are in the middle of the bulk of those published in the ANHIR challenge [30] or compared to the current leaderboard [61]. However, for the image subset, the results rank within the top ranks published. Parallelization of different steps in the image registration (s. Figure 3) resulted in time-efficient computation (cf. Table 1), making the algorithm suitable for the investigation of a large number of images.

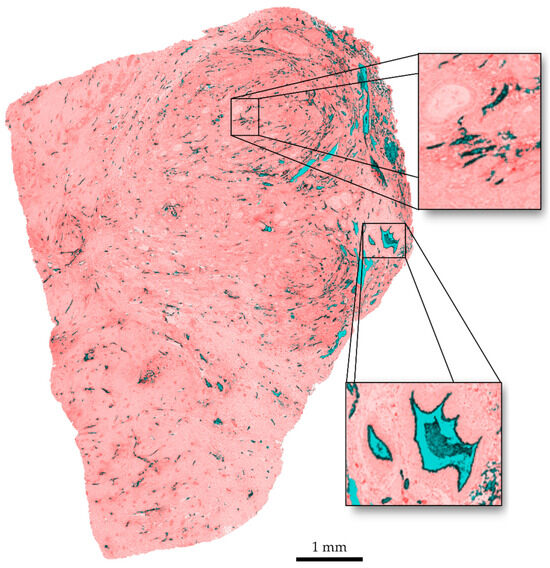

Regarding the visual quality of the segmentation, the results are presented as an overlay of the original slice and the segmented areas in Figure 7. It can be observed that clustered vessels occur in areas with a high structure density. The delineation of individual structures with a very small space of a few pixels between them gets lost in the dilation step. This is a limitation of the algorithm. A delineation of single vessels within these clusters could be achieved for images with a higher resolution. However, this leads to an increase in computation time.

Figure 7.

Overlay of original image and segmentation. Vessel interiors are marked in blue, vessel contours in black, discarded tissue in red.

To develop a 3D vascular network from registered images of slices, vessels need to be segmented from the surrounding tissue. With regard to segmentation, for some of the published works, commercial software was used that is not available anymore [50,62] Other approaches were specifically developed for certain tissue types, such as fascicles [60], and are not applicable to histologic images of tumor tissue. Most segmentation algorithms are designed to delineate cancerous tissue regions from non-cancerous surroundings [63,64,65,66]. These algorithms identify cancerous regions by analysis of misshaped structures, color variations in staining, or different textures within the image. Recent approaches to segment histologic images use standard computer vision techniques such as color-based segmentation with thresholding [67,68] or Markov random fields [69], pattern recognition [65], or clustering such as k-means or mean-shift [70,71,72]. Histological images usually show very high resolutions at large image sizes due to their high level of detail, which makes the above-mentioned approaches very expensive in terms of computing time [70]. With the developments in computational power, the utilization of machine learning in medical image analysis has experienced a huge increase. Many approaches to identify cancerous regions have been developed and have greatly improved the field of medical image analysis [73,74,75,76,77,78,79]. However, identifying cancerous regions is a completely different task compared to delineating small structures such as capillaries in a tumor. The main differences refer to the level of detail of highly repetitive small features that need to be delineated.

Reyes-Aldasoro et al. [62] developed an algorithm for automated segmentation of vascular structures from histologic images. A region-growing algorithm detects vascular structures that are then—using characteristics of continuous vessels—joined, separated, or closed to align with a certain definition of a realistic pathway for vessels. Schwier et al. [80] used the software MeVisLab [81] to reconstruct a whole vessel tree from murine liver sections. The reported procedure combines color thresholds and object-based analysis to include spatial information and assumed characteristics of vessel trajectories such as continuity. A broad segmentation approach is Trainable Weka Segmentation [82]. It uses machine learning to distinguish between different areas and must manually be trained by a user. As the method presented by Schwier et al. [80] shows promising results for the segmentation of consecutive tissue slices, we tested this method with our images and compared it to the method described above. The results are described in the Supplementary Materials S1, Table S1.

Another approach for segmenting vascular structures is vesselness functions [83,84,85]. These functions enhance the appearance of blood vessels in medical images [86,87] and use a vesselness measure to obtain probability masks that state for each pixel if it is likely to belong to a vessel or not. One of the primary challenges here would be the heterogeneity in staining intensities and colors within the histological dataset. Vesselness functions are typically designed to detect structures with distinct intensity or contrast characteristics [83,88], which are likely to be obscured by the irregular and heterogeneous staining. As a result, these functions would exhibit inconsistent and unreliable responses, making the accurate identification of vessels difficult. Additionally, the arbitrary shapes of vessels within the tumor slices are challenging. Vesselness functions rely on detecting tubular or vessel-like shapes [87,88]. In the present data, vessels often exhibited irregular geometries, which do not conform to typical vessel shapes. Consequently, the vesselness functions are likely to fail to effectively capture these diverse structures.

The comparison of the resulting segmentation showed that for the images used in this work, our segmentation is more robust to distortions such as ruptures and tissue located in vessels (see Supplementary Materials S1, Table S1).

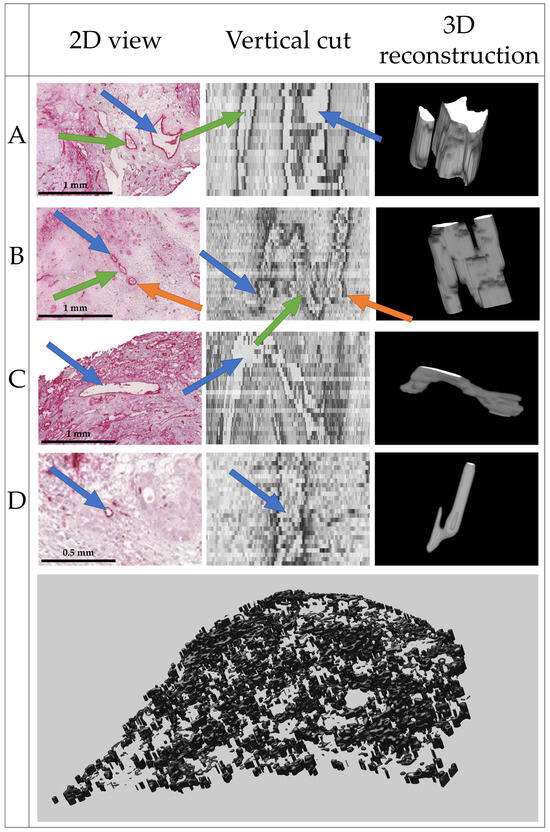

The complete reconstruction of the vascular network from 169 histologic slices is shown in Figure 8. Single vessels and bifurcations are clearly visible.

Figure 8.

Final 3D reconstruction of the 169 slices with close-up views on four distinct vessels, where (A) shows two parallel vessels; (B) shows two bifurcations, one join and one split of two vessels each; (C) shows a vessel perpendicular to the image plane; and (D) shows a very small vessel. Within one row, arrows of the same color point to the same structures.

As no ground truth is available, the quality of the segmentation and 3D reconstruction was evaluated visually by an experienced radiologist (see Acknowledgements). Based on the results shown in Figure 8, the radiologist estimated a realistic delineation of vessels parallel and perpendicular to the image plane of the histologic slices. Some artifacts were present, but the general structure of the vessels was well represented if the vessels were visible in the histopathological source images.

A validation of the continuity of the vessels was not possible with the available dataset. As the histologic slices have a thickness of 2.5 µm, the reconstruction of the 169 slices represents a vessel network with a thickness of 422.5 µm. For an assessment of vessel continuity, a larger dataset is necessary. Therefore, the evaluation of vessel continuity is subject to future research.

Reconstruction of 3D structures based on histological images has been performed before for different medical applications: Feuerstein et al. [89] used Markov random fields with deformable stack registration. Fonyad et al. [90] reconstructed a coronary model of mice using the Voloom software (former µCore by microDimensions GmbH, Munich, Germany). Onozato et al. [91] reconstructed adenocarcinomas of the lung using a former version of the CaseViewer software (3DHistech Ltd., Budapest, Hungary). Another predecessor of CaseViewer was used by Wu et al. [92] to reconstruct colorectal biopsies. Tovbis et al. [60] additionally segmented the fascicles in the reconstructed tissue. The approach presented here focuses on the reconstruction of capillaries on a much smaller scale than previous works.

4. Conclusions

With this work, we developed an algorithm that enables the reconstruction of a 3D vascular network of a human tumor based on histologic images. The algorithm consists of a two-step registration approach, feature- and area-based registration, and is suitable for the investigation of a large number of high-resolution images. It was validated with the ANHIR dataset and competes with the top ranks for similar tissue types as used in this work. It further uses a five-step segmentation, which separately delineates vessel interiors and contours, and fuses this information with that from consecutive images, to extract the vessels from the surrounding tissue. The reconstruction algorithm refines the registration and segmentation results to achieve a natural-looking model of a vascular network. The resulting 3D model was qualitatively evaluated by an experienced radiologist and considered as promising.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/cancers15225352/s1, Chapter S1: Comparison to alternative segmentation algorithm, Table S1: Comparison of the segmentation algorithms. Reference [80] is cited in the Supplementary Materials.

Author Contributions

Conceptualization, M.C.L. and I.S.; methodology, M.C.L.; software, L.G.; validation, M.C.L. and L.G.; formal analysis, M.C.L., L.G. and I.S.; resources, A.A.R., I.S. and T.S.-R.; data curation, M.C.L. and I.S.; writing—original draft preparation, M.C.L.; writing—review and editing, M.C.L., L.G. and I.S.; visualization, M.C.L. and L.G.; supervision, I.S.; project administration, I.S.; funding acquisition, I.S. All authors have read and agreed to the published version of the manuscript.

Funding

Funded by the Federal Ministry of Education and Research (BMBF) and the Ministry of Culture and Science of the German State of North Rhine-Westphalia (MKW) under the Excellence Strategy of the Federal Government and the Länder.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of the RWTH Aachen University Hospital (EK206/09, 05.01.2010).

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available in a pseudonymized manner upon request due to ethical restrictions (patient confidentially). Therefore, the data are not available publicly.

Acknowledgments

We thank Daniel Truhn from the Department of Radiology of the University Hospital RWTH Aachen for his evaluation in assessing the segmentation and registration results. Simulations were performed with computing resources granted by RWTH Aachen University under project rwth0481.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Chen, Y.; Ali, M.; Shi, S.; Cheang, U.K. Biosensing-by-Learning Direct Targeting Strategy for Enhanced Tumor Sensitization. IEEE Trans. Nanobioscience 2019, 18, 498–509. [Google Scholar] [CrossRef] [PubMed]

- Haun, J.B.; Yoon, T.-J.; Lee, H.; Weissleder, R. Magnetic nanoparticle biosensors. Wiley Interdiscip. Rev. Nanomed. Nanobiotechnol. 2010, 2, 291–304. [Google Scholar] [CrossRef] [PubMed]

- Bhatia, S.N.; Ingber, D.E. Microfluidic organs-on-chips. Nat. Biotechnol. 2014, 32, 760–772. [Google Scholar] [CrossRef] [PubMed]

- Koyilot, M.C.; Natarajan, P.; Hunt, C.R.; Sivarajkumar, S.; Roy, R.; Joglekar, S.; Pandita, S.; Tong, C.W.; Marakkar, S.; Subramanian, L.; et al. Breakthroughs and Applications of Organ-on-a-Chip Technology. Cells 2022, 11, 1828. [Google Scholar] [CrossRef] [PubMed]

- Bongio, M.; Lopa, S.; Gilardi, M.; Bersini, S.; Moretti, M. A 3D vascularized bone remodeling model combining osteoblasts and osteoclasts in a CaP nanoparticle-enriched matrix. Nanomedicine 2016, 11, 1073–1091. [Google Scholar] [CrossRef]

- Unger, C.; Kramer, N.; Walzl, A.; Scherzer, M.; Hengstschläger, M.; Dolznig, H. Modeling human carcinomas: Physiologically relevant 3D models to improve anti-cancer drug development. Adv. Drug Deliv. Rev. 2014, 79–80, 50–67. [Google Scholar] [CrossRef]

- Abd Elrahman, A.A.; Mansour, F.R. Targeted magnetic iron oxide nanoparticles: Preparation, functionalization and biomedical application: Preparation, functionalization and biomedical application. J. Drug Deliv. Sci. Technol. 2019, 52, 702–712. [Google Scholar] [CrossRef]

- Dadfar, S.M.; Roemhild, K.; Drude, N.I.; von Stillfried, S.; Knüchel, R.; Kiessling, F.; Lammers, T. Iron oxide nanoparticles: Diagnostic, therapeutic and theranostic applications. Adv. Drug Deliv. Rev. 2019, 138, 302–325. [Google Scholar] [CrossRef] [PubMed]

- Chawla, A.; Ferrone, C.R. Neoadjuvant Therapy for Resectable Pancreatic Cancer: An Evolving Paradigm Shift. Front. Oncol. 2019, 9, 1085. [Google Scholar] [CrossRef]

- Roeth, A.A.; Slabu, I.; Baumann, M.; Alizai, P.H.; Schmeding, M.; Guentherodt, G.; Schmitz-Rode, T.; Neumann, U.P. Establishment of a biophysical model to optimize endoscopic targeting of magnetic nanoparticles for cancer treatment. Int. J. Nanomed. 2017, 12, 5933–5940. [Google Scholar] [CrossRef]

- Lindemann, M.C.; Luttke, T.; Nottrodt, N.; Schmitz-Rode, T.; Slabu, I. FEM based simulation of magnetic drug targeting in a multibranched vessel model. Comput. Methods Programs Biomed. 2021, 210, 106354. [Google Scholar] [CrossRef]

- Singh, M.; Ma, R.; Zhu, L. Quantitative evaluation of effects of coupled temperature elevation, thermal damage, and enlarged porosity on nanoparticle migration in tumors during magnetic nanoparticle hyperthermia. Int. Commun. Heat Mass Transf. 2021, 126, 105393. [Google Scholar] [CrossRef]

- Singh, M.; Singh, T.; Soni, S. Pre-operative Assessment of Ablation Margins for Variable Blood Perfusion Metrics in a Magnetic Resonance Imaging Based Complex Breast Tumour Anatomy: Simulation Paradigms in Thermal Therapies. Comput. Methods Programs Biomed. 2021, 198, 105781. [Google Scholar] [CrossRef] [PubMed]

- Van der Meer, A.D.; van den Berg, A. Organs-on-chips: Breaking the in vitro impasse. Integr. Biol. 2012, 4, 461–470. [Google Scholar] [CrossRef] [PubMed]

- Russell, W.; Burch, R.L. The Principles of Humane Experimental Technique; Methuen Publishing: London, UK, 1959. [Google Scholar]

- Roeth, A.A.; Garretson, I.; Beltz, M.; Herbold, T.; Schulze-Hagen, M.; Quaisser, S.; Georgens, A.; Reith, D.; Slabu, I.; Klink, C.D.; et al. 3D-Printed Replica and Porcine Explants for Pre-Clinical Optimization of Endoscopic Tumor Treatment by Magnetic Targeting. Cancers 2021, 13, 5496. [Google Scholar] [CrossRef] [PubMed]

- Wang, P.; Sun, Y.; Shi, X.; Shen, H.; Ning, H.; Liu, H. 3D printing of tissue engineering scaffolds: A focus on vascular regeneration. Bio-Des. Manuf. 2021, 4, 344–378. [Google Scholar] [CrossRef] [PubMed]

- Szafron, J.M.; Ramachandra, A.B.; Breuer, C.K.; Marsden, A.L.; Humphrey, J.D. Optimization of Tissue-Engineered Vascular Graft Design Using Computational Modeling. Tissue Eng. Part C Methods 2019, 25, 561–570. [Google Scholar] [CrossRef] [PubMed]

- Tu, S.; Hu, F.; Cai, W.; Xiao, L.; Zhang, L.; Zheng, H.; Jiang, Q.; Chen, L. Visualizing polymeric bioresorbable scaffolds with three-dimensional image reconstruction using contrast-enhanced micro-computed tomography. Int. J. Cardiovasc. Imaging 2017, 33, 731–737. [Google Scholar] [CrossRef] [PubMed]

- Ghaghada, K.B.; Sato, A.F.; Starosolski, Z.A.; Berg, J.; Vail, D.M. Computed Tomography Imaging of Solid Tumors Using a Liposomal-Iodine Contrast Agent in Companion Dogs with Naturally Occurring Cancer. PLoS ONE 2016, 11, e0152718. [Google Scholar] [CrossRef] [PubMed]

- Ghani, M.U.; Zhou, Z.; Ren, L.; Li, Y.; Zheng, B.; Yang, K.; Liu, H. Investigation of spatial resolution characteristics of an in vivo micro computed tomography system. Nucl. Instrum. Methods Phys. Res. A 2016, 807, 129–136. [Google Scholar] [CrossRef]

- McDonald, D.M.; Choyke, P.L. Imaging of angiogenesis: From microscope to clinic. Nat. Med. 2003, 9, 713–725. [Google Scholar] [CrossRef]

- Pena, A.-M.; Chen, X.; Pence, I.J.; Bornschlögl, T.; Jeong, S.; Grégoire, S.; Luengo, G.S.; Hallegot, P.; Obeidy, P.; Feizpour, A.; et al. Imaging and quantifying drug delivery in skin—Part 2: Fluorescence andvibrational spectroscopic imaging methods. Adv. Drug Deliv. Rev. 2020, 153, 147–168. [Google Scholar] [CrossRef] [PubMed]

- Schindelin, J.; Arganda-Carreras, I.; Frise, E.; Kaynig, V.; Longair, M.; Pietzsch, T.; Preibisch, S.; Rueden, C.; Saalfeld, S.; Schmid, B.; et al. Fiji: An open-source platform for biological-image analysis. Nat. Methods 2012, 9, 676–682. [Google Scholar] [CrossRef]

- Wang, C.-W.; Chen, H.-C. Improved image alignment method in application to X-ray images and biological images. Bioinformatics 2013, 29, 1879–1887. [Google Scholar] [CrossRef] [PubMed]

- Wang, C.-W.; Ka, S.-M.; Chen, A. Robust image registration of biological microscopic images. Sci. Rep. 2014, 4, 6050. [Google Scholar] [CrossRef] [PubMed]

- Arganda-Carreras, I.; Sorzano, C.O.S.; Marabini, R.; Carazo, J.M.; Ortiz-De-Solorzano, C.; Kybic, J. Consistent and Elastic Registration of Histological Sections Using Vector-Spline Regularization. In Computer Vision Approaches to Medical Image Analysis; Hutchison, D., Kanade, T., Kittler, J., Kleinberg, J.M., Mattern, F., Mitchell, J.C., Naor, M., Nierstrasz, O., Pandu Rangan, C., et al., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 85–95. ISBN 978-3-540-46257-6. [Google Scholar]

- Sorzano, C.O.S.; Thévenaz, P.; Unser, M. Elastic registration of biological images using vector-spline regularization. IEEE Trans. Biomed. Eng. 2005, 52, 652–663. [Google Scholar] [CrossRef] [PubMed]

- Borovec, J.; Munoz-Barrutia, A.; Kybic, J. Benchmarking of Image Registration Methods for Differently Stained Histological Slides. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3368–3372, ISBN 978-1-4799-7061-2. [Google Scholar]

- Borovec, J.; Kybic, J.; Arganda-Carreras, I.; Sorokin, D.V.; Bueno, G.; Khvostikov, A.V.; Bakas, S.; Chang, E.I.-C.; Heldmann, S.; Kartasalo, K.; et al. ANHIR: Automatic Non-Rigid Histological Image Registration Challenge. IEEE Trans. Med. Imaging 2020, 39, 3042–3052. [Google Scholar] [CrossRef]

- Zuiderveld, K. Contrast limited adaptive histogram equilization. In Graphics Gems IV; Heckbert, P.S., Ed.; Academic Press: San Diego, CA, USA, 1994; pp. 474–485. ISBN 0123361559. [Google Scholar]

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst., Man Cybern. 1979, 9, 62–66. [Google Scholar] [CrossRef]

- Gonzalez, R.C.; Woods, R.E.; Eddins, S.L. Digital Image Processing Using MATLAB; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2004; ISBN 0-13-008519-7. [Google Scholar]

- Reddy, P.R.; Amarnadh, V.; Bhaskar, M. Evaluation of Stopping Criterion in Contour Tracing Algorithms. Int. J. Comput. Sci. Inf. Technol. 2012, 3, 3888–3894. [Google Scholar]

- Haralick, R.M.; Shapiro, L.G. Computer and Robot Vision, 2; Addison-Wesley: Reading, MA, USA, 1993; ISBN 0201569434. [Google Scholar]

- Hughes, C.; Rouvière, O.; Mege-Lechevallier, F.; Souchon, R.; Prost, R. Robust alignment of prostate histology slices with quantified accuracy. IEEE Trans. Biomed. Eng. 2013, 60, 281–291. [Google Scholar] [CrossRef]

- Schwier, M.; Böhler, T.; Hahn, H.K.; Dahmen, U.; Dirsch, O. Registration of histological whole slide images guided by vessel structures. J. Pathol. Inform. 2013, 4, S10. [Google Scholar] [CrossRef] [PubMed]

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Bay, H.; Ess, A.; Tuytelaars, T.; van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359. [Google Scholar] [CrossRef]

- Kupfer, B.; Netanyahu, N.S.; Shimshoni, I. An Efficient SIFT-Based Mode-Seeking Algorithm for Sub-Pixel Registration of Remotely Sensed Images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 379–383. [Google Scholar] [CrossRef]

- Liu, Q.; Zhao, G.; Deng, J.; Xue, Q.; Hou, W.; He, Y. Image Registration Algorithm for Sequence Pathology Slices of Pulmonary Nodule. In Proceedings of the 2019 8th International Symposium on Next Generation Electronics (ISNE), Zhengzhou, China, 9–10 October 2019; pp. 1–3, ISBN 978-1-7281-2062-1. [Google Scholar]

- Lobachev, O.; Ulrich, C.; Steiniger, B.S.; Wilhelmi, V.; Stachniss, V.; Guthe, M. Feature-based multi-resolution registration of immunostained serial sections. Med. Image Anal. 2017, 35, 288–302. [Google Scholar] [CrossRef]

- Saalfeld, S.; Fetter, R.; Cardona, A.; Tomancak, P. Elastic volume reconstruction from series of ultra-thin microscopy sections. Nat. Methods 2012, 9, 717–720. [Google Scholar] [CrossRef] [PubMed]

- Hermann, J.; Brehmer, K.; Jankowski, V.; Lellig, M.; Hohl, M.; Mahfoud, F.; Speer, T.; Schunk, S.J.; Tschernig, T.; Thiele, H.; et al. Registration of Image Modalities for Analyses of Tissue Samples Using 3D Image Modelling. Proteom. Clin. Appl. 2020, 15, e1900143. [Google Scholar] [CrossRef] [PubMed]

- Paknezhad, M.; Loh, S.Y.M.; Choudhury, Y.; Koh, V.K.C.; Yong, T.T.K.; Tan, H.S.; Kanesvaran, R.; Tan, P.H.; Peng, J.Y.S.; Yu, W.; et al. Regional registration of whole slide image stacks containing major histological artifacts. BMC Bioinform. 2020, 21, 558. [Google Scholar] [CrossRef]

- Zhang, J.; Li, Z.; Yu, Q. Point-Based Registration for Multi-stained Histology Images. In Proceedings of the 2020 IEEE 5th International Conference on Image, Vision and Computing (ICIVC), Beijing, China, 10–12 July 2020; pp. 92–96, ISBN 978-1-7281-6661-2. [Google Scholar]

- Deng, R.; Yang, H.; Jha, A.; Lu, Y.; Chu, P.; Fogo, A.B.; Huo, Y. Map3D: Registration-Based Multi-Object Tracking on 3D Serial Whole Slide Images. IEEE Trans. Med. Imaging 2021, 40, 1924–1933. [Google Scholar] [CrossRef] [PubMed]

- Kugler, M.; Goto, Y.; Kawamura, N.; Kobayashi, H.; Yokota, T.; Iwamoto, C.; Ohuchida, K.; Hashizume, M.; Hontani, H. Accurate 3D Reconstruction of a Whole Pancreatic Cancer Tumor from Pathology Images with Different Stains. In Computational Pathology and Ophthalmic Medical Image Analysis: First International Workshop, COMPAY 2018, and 5th International Workshop, OMIA 2018, held in Conjunction with MICCAI 2018, Granada, Spain, 16–20 September 2018: Proceedings; Stoyanov, D., Taylor, Z., Ciompi, F., Xu, Y., Martel, A., Maier-Hein, L., Rajpoot, N., van der Laak, J., Veta, M., McKenna, S., et al., Eds.; Springer: Cham, Switzerland, 2018; pp. 35–43. ISBN 9783030009496. [Google Scholar]

- Kugler, M.; Goto, Y.; Tamura, Y.; Kawamura, N.; Kobayashi, H.; Yokota, T.; Iwamoto, C.; Ohuchida, K.; Hashizume, M.; Shimizu, A.; et al. Robust 3D image reconstruction of pancreatic cancer tumors from histopathological images with different stains and its quantitative performance evaluation. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 2047–2055. [Google Scholar] [CrossRef]

- Paish, E.C.; Green, A.R.; Rakha, E.A.; Macmillan, R.D.; Maddison, J.R.; Ellis, I.O. Three-dimensional reconstruction of sentinel lymph nodes with metastatic breast cancer indicates three distinct patterns of tumour growth. J. Clin. Pathol. 2009, 62, 617–623. [Google Scholar] [CrossRef] [PubMed]

- Jiang, J.; Larson, N.B.; Prodduturi, N.; Flotte, T.J.; Hart, S.N. Robust hierarchical density estimation and regression for re-stained histological whole slide image co-registration. PLoS ONE 2019, 14, e0220074. [Google Scholar] [CrossRef] [PubMed]

- Niemann, A.; Weigand, S.; Hoffmann, T.; Skalej, M.; Tulamo, R.; Preim, B.; Saalfeld, S. Interactive exploration of a 3D intracranial aneurysm wall model extracted from histologic slices. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 99–107. [Google Scholar] [CrossRef] [PubMed]

- Nan, A.; Tennant, M.; Rubin, U.; Ray, N. DRMIME: Differentiable Mutual Information and Matrix Exponential for Multi-Resolution Image Registration. In Proceedings of the Third Conference on Medical Imaging with Deep Learning, Montreal, QC, Canada, 6–8 July 2020. [Google Scholar]

- Cifor, A.; Bai, L.; Pitiot, A. Smoothness-guided 3-D reconstruction of 2-D histological images. Neuroimage 2011, 56, 197–211. [Google Scholar] [CrossRef] [PubMed]

- Cai, N.; Chen, H.; Li, Y.; Peng, Y.; Li, J.; Li, X. Reducing non-realistic deformations in registration using precise and reliable landmark correspondences. Comput. Biol. Med. 2019, 115, 103515. [Google Scholar] [CrossRef] [PubMed]

- Wu, Y.; Ma, W.; Gong, M.; Su, L.; Jiao, L. A Novel Point-Matching Algorithm Based on Fast Sample Consensus for Image Registration. IEEE Geosci. Remote Sens. Lett. 2015, 12, 43–47. [Google Scholar] [CrossRef]

- Arganda-Carreras, I.; Fernández-González, R.; Muñoz-Barrutia, A.; Ortiz-De-Solorzano, C. 3D reconstruction of histological sections: Application to mammary gland tissue. Microsc. Res. Tech. 2010, 73, 1019–1029. [Google Scholar] [CrossRef] [PubMed]

- Kuravi, R.; Leichsenring, K.; Böl, M.; Ehret, A.E. 3D finite element models from serial section histology of skeletal muscle tissue —The role of micro-architecture on mechanical behaviour. J. Mech. Behav. Biomed. Mater. 2020, 113, 104109. [Google Scholar] [CrossRef]

- Liu, J.; Wu, X.; Xu, C.; Ma, M.; Zhao, J.; Li, M.; Yu, Q.; Hao, X.; Wang, G.; Wei, B.; et al. A Novel Method for Observing Tumor Margin in Hepatoblastoma Based on Microstructure 3D Reconstruction. Fetal Pediatr. Pathol. 2020, 41, 371–380. [Google Scholar] [CrossRef] [PubMed]

- Tovbis, D.; Agur, A.; Mogk, J.P.M.; Zariffa, J. Automatic three-dimensional reconstruction of fascicles in peripheral nerves from histological images. PLoS ONE 2020, 15, e0233028. [Google Scholar] [CrossRef]

- Borovec, J.; Kybic, J.; Barrutia, A.M. Leaderboard—Grand Challenge. Available online: https://anhir.grand-challenge.org/evaluation/challenge/leaderboard/ (accessed on 16 May 2022).

- Reyes-Aldasoro, C.C.; Williams, L.J.; Akerman, S.; Kanthou, C.; Tozer, G.M. An automatic algorithm for the segmentation and morphological analysis of microvessels in immunostained histological tumour sections. J. Microsc. 2011, 242, 262–278. [Google Scholar] [CrossRef]

- Li, C.; Chen, H.; Li, X.; Xu, N.; Hu, Z.; Xue, D.; Qi, S.; Ma, H.; Zhang, L.; Sun, H. A review for cervical histopathology image analysis using machine vision approaches. Artif. Intell. Rev. 2020, 53, 4821–4862. [Google Scholar] [CrossRef]

- Azevedo Tosta, T.A.; Neves, L.A.; do Nascimento, M.Z. Segmentation methods of H&E-stained histological images of lymphoma: A review. Inform. Med. Unlocked 2017, 9, 35–43. [Google Scholar] [CrossRef]

- Tabesh, A.; Teverovskiy, M.; Pang, H.-Y.; Kumar, V.P.; Verbel, D.; Kotsianti, A.; Saidi, O. Multifeature prostate cancer diagnosis and Gleason grading of histological images. IEEE Trans. Med. Imaging 2007, 26, 1366–1378. [Google Scholar] [CrossRef] [PubMed]

- Gurcan, M.N.; Boucheron, L.E.; Can, A.; Madabhushi, A.; Rajpoot, N.M.; Yener, B. Histopathological image analysis: A review. IEEE Rev. Biomed. Eng. 2009, 2, 147–171. [Google Scholar] [CrossRef] [PubMed]

- Goddard, J.C.; Sutton, C.D.; Furness, P.N.; Kockelbergh, R.C.; O’Byrne, K.J. A computer image analysis system for microvessel density measurement in solid tumours. Angiogenesis 2002, 5, 15–20. [Google Scholar] [CrossRef] [PubMed]

- Steiniger, B.; Bette, M.; Schwarzbach, H. The open microcirculation in human spleens: A three-dimensional approach. J. Histochem. Cytochem. 2011, 59, 639–648. [Google Scholar] [CrossRef] [PubMed]

- Meas-Yedid, V.; Tilie, S.; Olivo-Marin, J.-C. Color image segmentation based on Markov random field clustering for histological image analysis. In Proceedings of the 16th International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; pp. 796–799. [Google Scholar]

- Wu, G.; Zhao, X.; Luo, S.; Shi, H. Histological image segmentation using fast mean shift clustering method. Biomed. Eng. Online 2015, 14, 24. [Google Scholar] [CrossRef]

- Yang, L.; Meer, P.; Foran, D.J. Unsupervised segmentation based on robust estimation and color active contour models. IEEE Trans. Inf. Technol. Biomed. 2005, 9, 475–486. [Google Scholar] [CrossRef] [PubMed]

- He, L.; Long, L.R.; Antani, S.; Thoma, G. Distribution fitting-based pixel labeling for histology image segmentation. Med. Imaging 2011 Comput.-Aided Diagn. 2011, 7963, 79633D. [Google Scholar] [CrossRef]

- Xu, Y.; Zhu, J.-Y.; Chang, E.I.-C.; Lai, M.; Tu, Z. Weakly supervised histopathology cancer image segmentation and classification. Med. Image Anal. 2014, 18, 591–604. [Google Scholar] [CrossRef]

- Rodríguez, R. Blood Vessel Segmentation via Neural Network in Histological Images. J. Intell. Robot. Syst. 2003, 36, 451–465. [Google Scholar] [CrossRef]

- Kothari, S.; Phan, J.H.; Moffitt, R.A.; Stokes, T.H.; Hassberger, S.E.; Chaudry, Q.; Young, A.N.; Wang, M.D. Automatic batch-invariant color segmentation of histological cancer images. Proc. IEEE Int. Symp. Biomed. Imaging 2011, 2011, 657–660. [Google Scholar] [CrossRef] [PubMed]

- Jia, Z.; Huang, X.; Chang, E.I.-C.; Xu, Y. Constrained Deep Weak Supervision for Histopathology Image Segmentation. IEEE Trans. Med. Imaging 2017, 36, 2376–2388. [Google Scholar] [CrossRef] [PubMed]

- Xu, G.; Song, Z.; Sun, Z.; Ku, C.; Yang, Z.; Liu, C.; Wang, S.; Ma, J.; Xu, W. CAMEL: A Weakly Supervised Learning Framework for Histopathology Image Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar] [CrossRef]

- Sun, C.; Li, C.; Zhang, J.; Rahaman, M.M.; Ai, S.; Chen, H.; Kulwa, F.; Li, Y.; Li, X.; Jiang, T. Gastric histopathology image segmentation using a hierarchical conditional random field. Biocybern. Biomed. Eng. 2020, 40, 1535–1555. [Google Scholar] [CrossRef]

- Janssens, T.; Antanas, L.; Derde, S.; Vanhorebeek, I.; van den Berghe, G.; Güiza Grandas, F. Charisma: An integrated approach to automatic H&E-stained skeletal muscle cell segmentation using supervised learning and novel robust clump splitting. Med. Image Anal. 2013, 17, 1206–1219. [Google Scholar] [CrossRef] [PubMed][Green Version]

- Schwier, M.; Hahn, H.K.; Dahmen, U.; Dirsch, O. Reconstruction of vessel structures from serial whole slide sections of murine liver samples. In Proceedings of the SPIE Medical Imaging, Lake Buena Vista, FL, USA, 9–14 February 2013; Gurcan, M.N., Madabhushi, A., Eds.; SPIE: Washington, DC, USA, 2013; p. 86760D. [Google Scholar] [CrossRef]

- Ritter, F.; Boskamp, T.; Homeyer, A.; Laue, H.; Schwier, M.; Link, F.; Peitgen, H.-O. Medical image analysis. IEEE Pulse 2011, 2, 60–70. [Google Scholar] [CrossRef]

- Arganda-Carreras, I.; Kaynig, V.; Rueden, C.; Eliceiri, K.W.; Schindelin, J.; Cardona, A.; Sebastian Seung, H. Trainable Weka Segmentation: A machine learning tool for microscopy pixel classification. Bioinformatics 2017, 33, 2424–2426. [Google Scholar] [CrossRef]

- Drechsler, K.; Oyarzun Laura, C. Comparison of vesselness functions for multiscale analysis of the liver vasculature. In Proceedings of the 2010 10th IEEE International Conference on Information Technology and Applications in Biomedicine (ITAB 2010), Corfu, Greece, 3–5 November 2010; pp. 1–5, ISBN 978-1-4244-6559-0. [Google Scholar]

- Tetteh, G.; Efremov, V.; Forkert, N.D.; Schneider, M.; Kirschke, J.; Weber, B.; Zimmer, C.; Piraud, M.; Menze, B.H. DeepVesselNet: Vessel Segmentation, Centerline Prediction, and Bifurcation Detection in 3-D Angiographic Volumes. Front. Neurosci. 2020, 14, 592352. [Google Scholar] [CrossRef] [PubMed]

- Woźniak, T.; Strzelecki, M.; Majos, A.; Stefańczyk, L. 3D vascular tree segmentation using a multiscale vesselness function and a level set approach. Biocybern. Biomed. Eng. 2017, 37, 66–77. [Google Scholar] [CrossRef]

- Survarachakan, S.; Pelanis, E.; Khan, Z.A.; Kumar, R.P.; Edwin, B.; Lindseth, F. Effects of Enhancement on Deep Learning Based Hepatic Vessel Segmentation. Electronics 2021, 10, 1165. [Google Scholar] [CrossRef]

- Frangi, A.F.; Niessen, W.J.; Vincken, K.L.; Viergever, M.A. Multiscale vessel enhancement filtering. In Medical Image Computing and Computer-Assisted Interventation—MICCAI’98; Wells, W.M., Colchester, A., Delp, S., Eds.; Springer Nature: Berlin/Heidelberg, Germany, 1998; pp. 130–137. ISBN 978-3-540-65136-9. [Google Scholar]

- Lamy, J.; Merveille, O.; Kerautret, B.; Passat, N.; Vacavant, A. Vesselness Filters: A Survey with Benchmarks Applied to Liver Imaging. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 3528–3535, ISBN 978-1-7281-8808-9. [Google Scholar]

- Feuerstein, M.; Heibel, H.; Gardiazabal, J.; Navab, N.; Groher, M. Reconstruction of 3-D histology images by simultaneous deformable registration. Med. Image Comput. Comput. Assist. Interv. 2011, 14, 582–589. [Google Scholar] [CrossRef] [PubMed]

- Fónyad, L.; Shinoda, K.; Farkash, E.A.; Groher, M.; Sebastian, D.P.; Szász, A.M.; Colvin, R.B.; Yagi, Y. 3-dimensional digital reconstruction of the murine coronary system for the evaluation of chronic allograft vasculopathy. Diagn. Pathol. 2015, 10, 16. [Google Scholar] [CrossRef] [PubMed]

- Onozato, M.L.; Klepeis, V.E.; Yagi, Y.; Mino-Kenudson, M. A role of three-dimensional (3D) reconstruction in the classification of lung adenocarcinoma. Stud. Health Technol. Inform. 2012, 179, 250–256. [Google Scholar] [CrossRef] [PubMed]

- Wu, M.L.; Varga, V.S.; Kamaras, V.; Ficsor, L.; Tagscherer, A.; Tulassay, Z.; Molnar, B. Three-dimensional virtual microscopy of colorectal biopsies. Arch. Pathol. Lab. Med. 2005, 129, 507–510. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).