Enhancing the Accuracy of Lymph-Node-Metastasis Prediction in Gynecologic Malignancies Using Multimodal Federated Learning: Integrating CT, MRI, and PET/CT

Simple Summary

Abstract

1. Introduction

2. Related Works

3. Dataset

3.1. Study Participants and Criteria

3.2. Clinical and Laboratory Data

3.3. Data Partitioning

4. Materials and Methods

4.1. Overall Model Architecture

4.2. Text Data Model

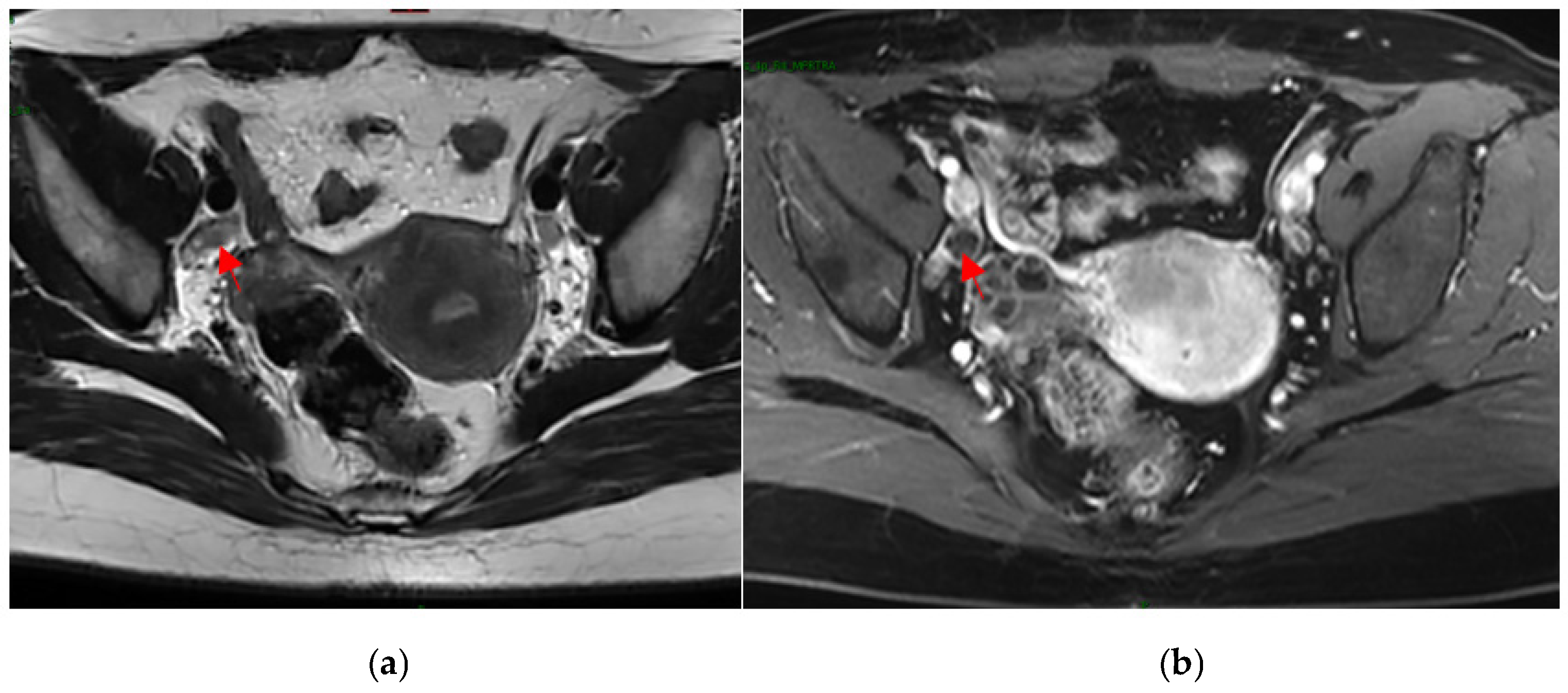

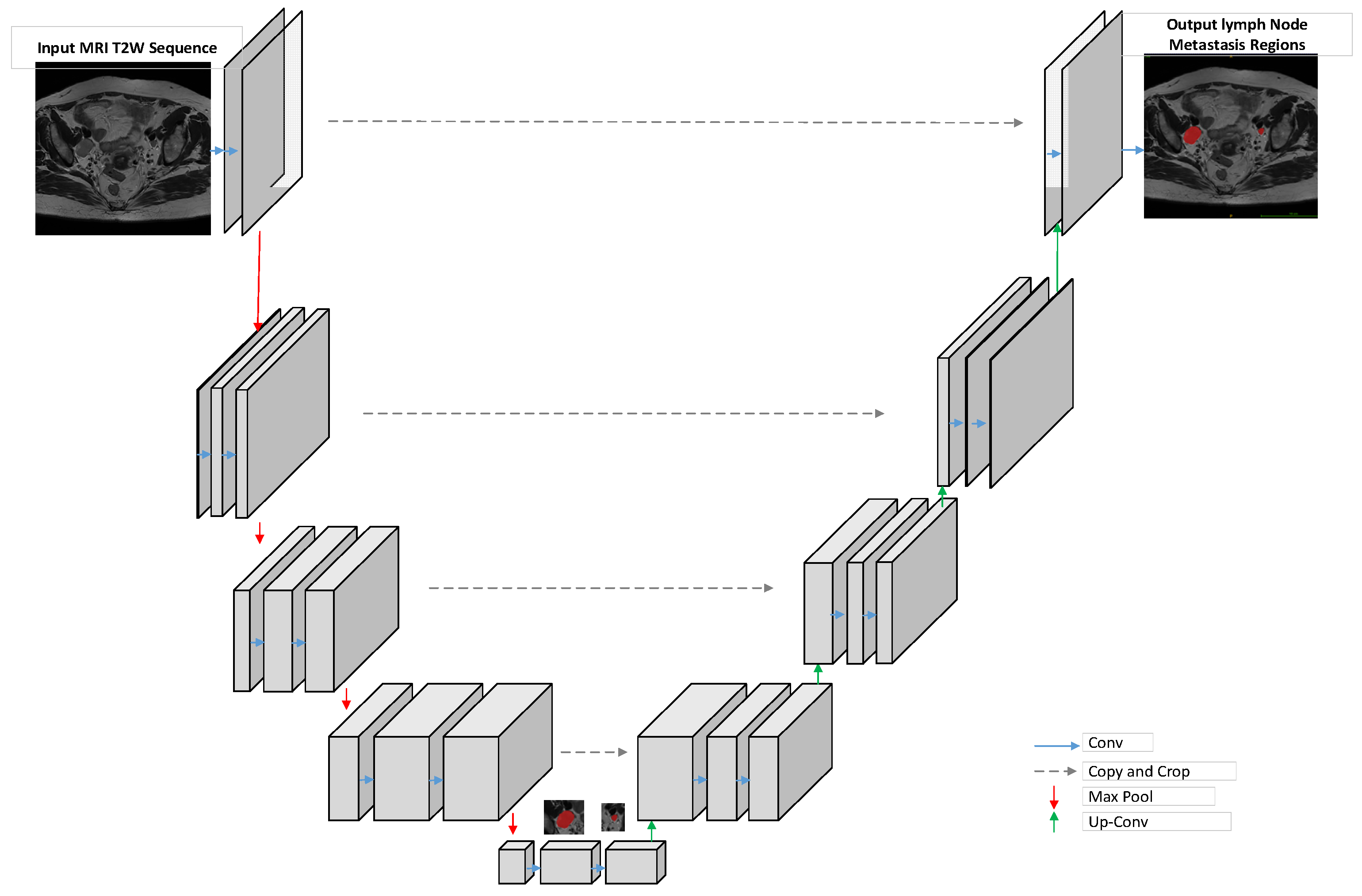

4.3. MRI Image-Processing Model

4.4. Multimodal Fusion

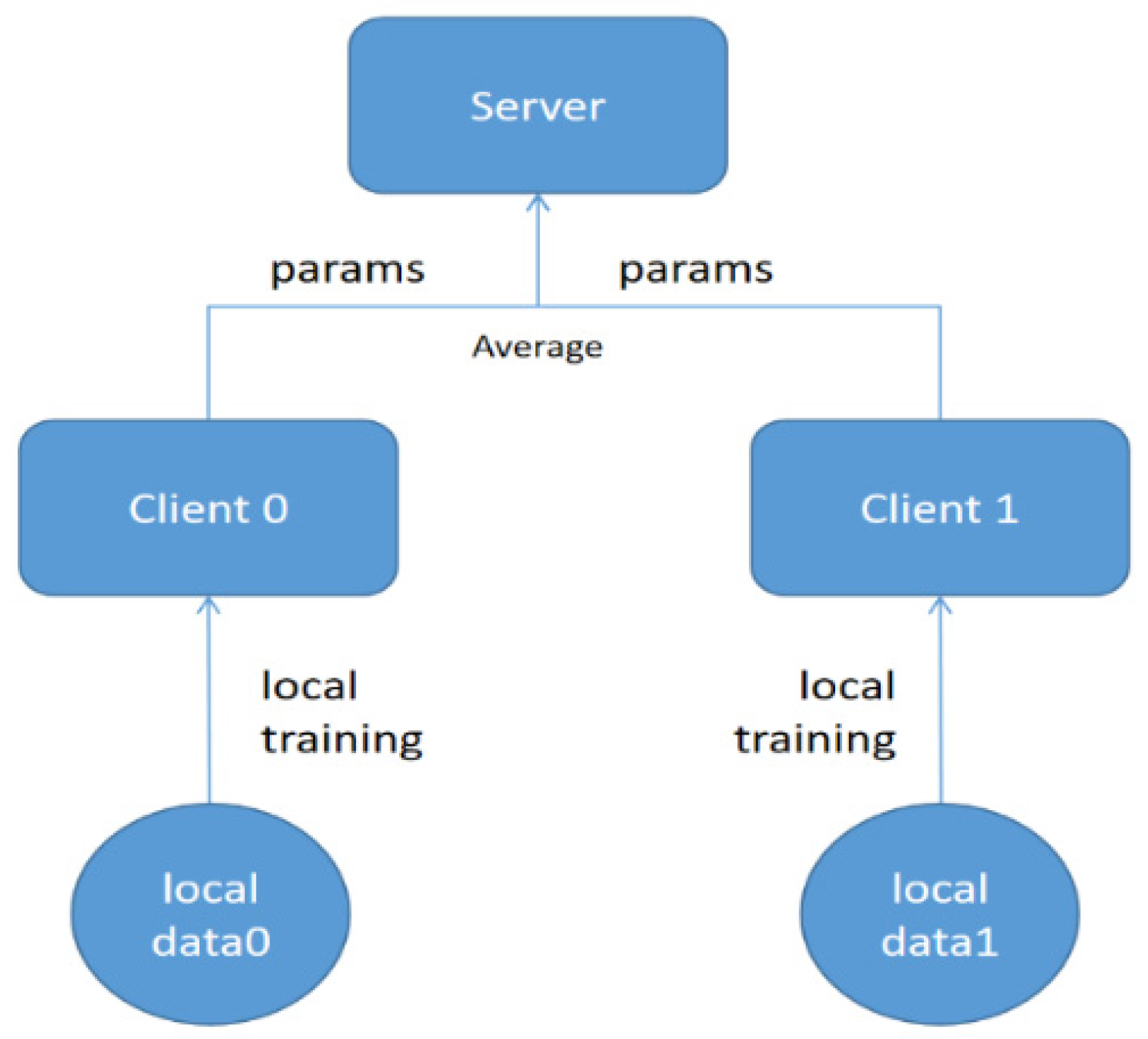

4.5. Federated-Learning Training

4.6. Evaluation Metrics

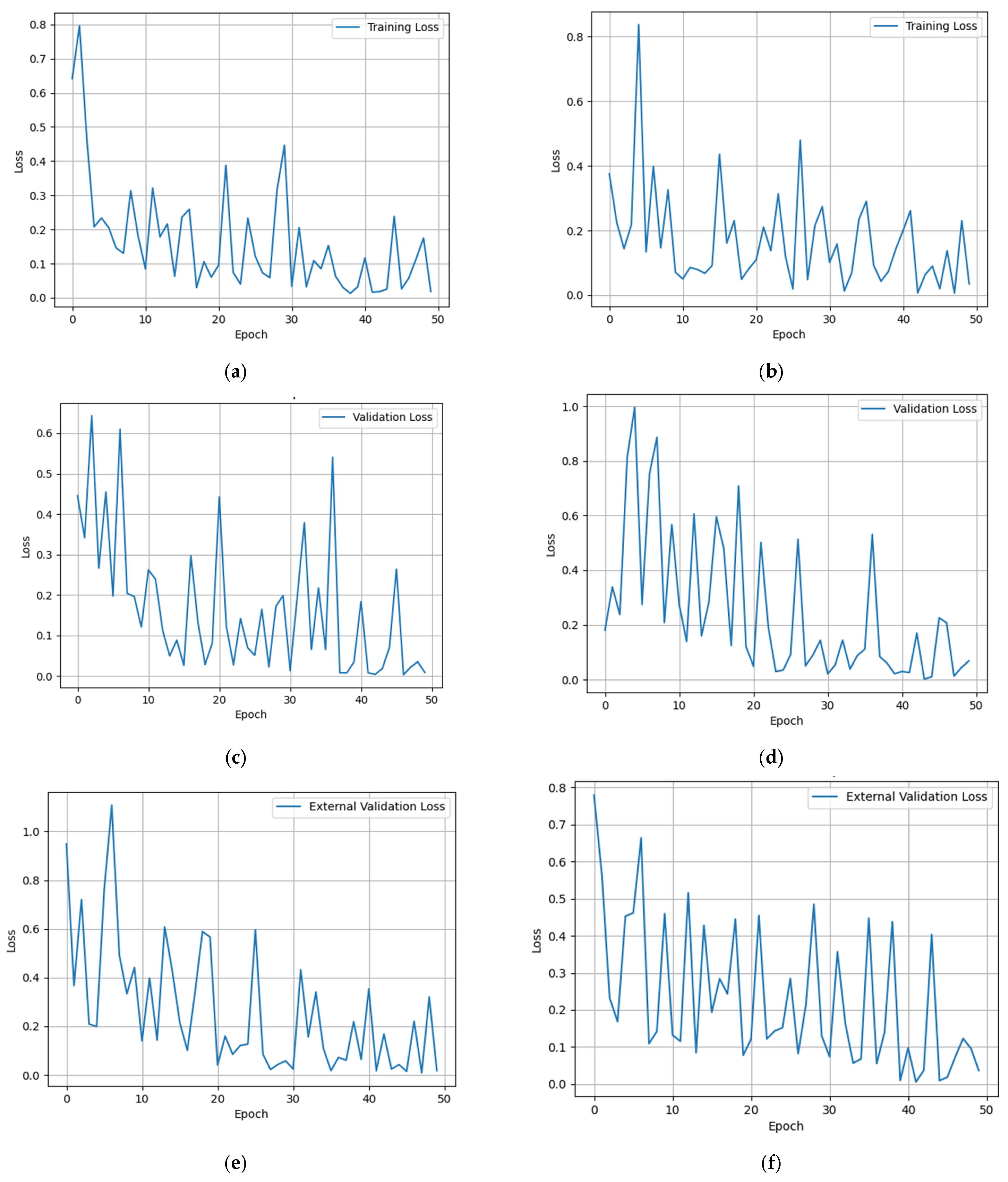

5. Results

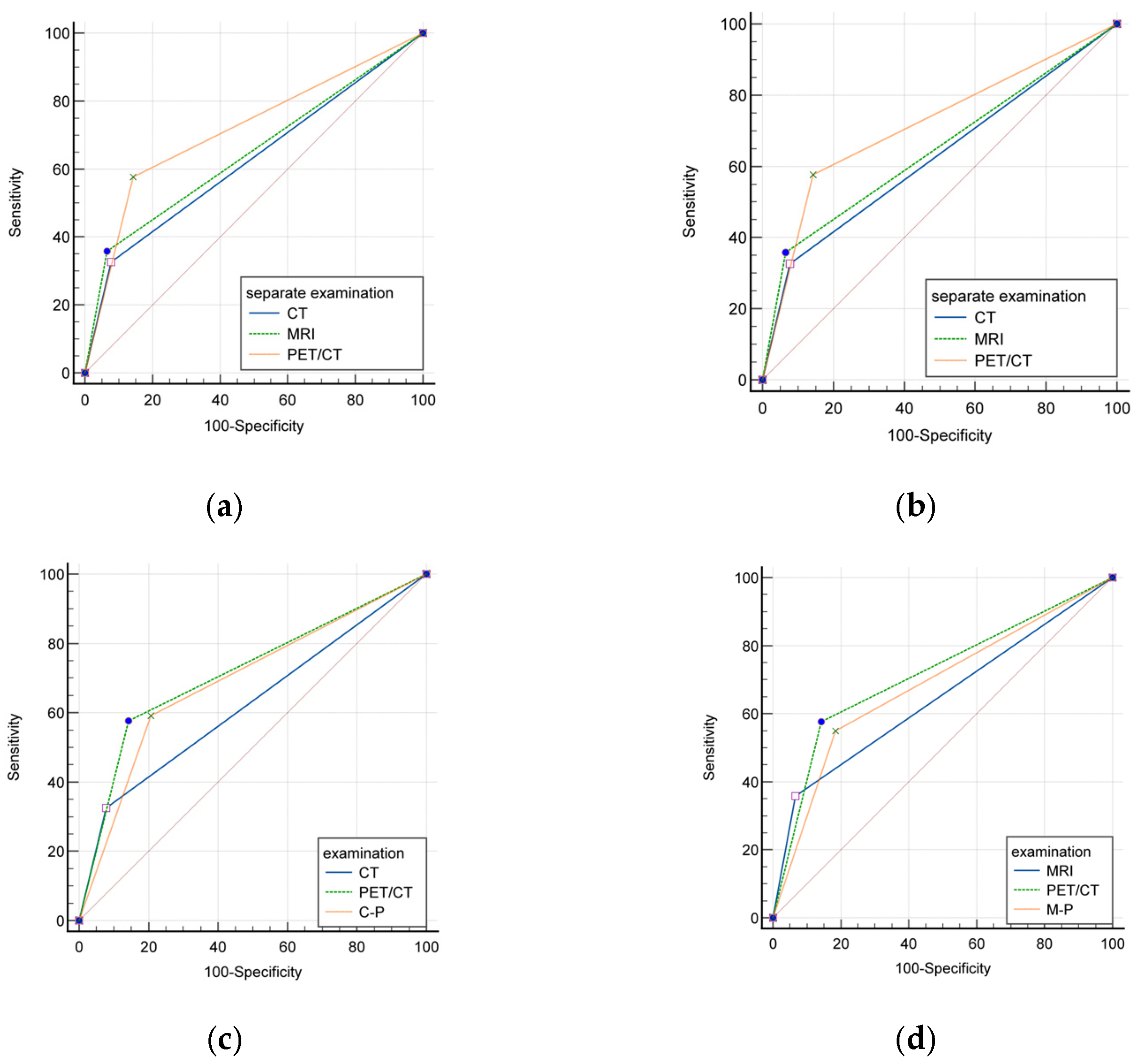

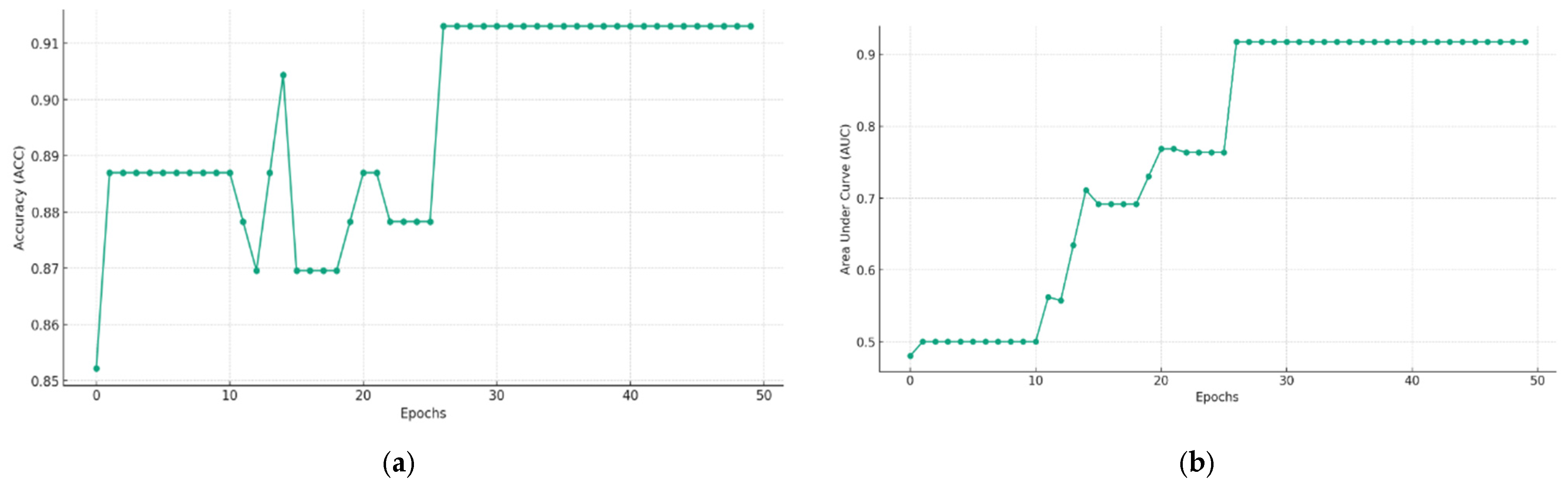

5.1. Evaluating the Efficacy of Individual and Combined Imaging Modalities: CT, MRI, and PET/CT

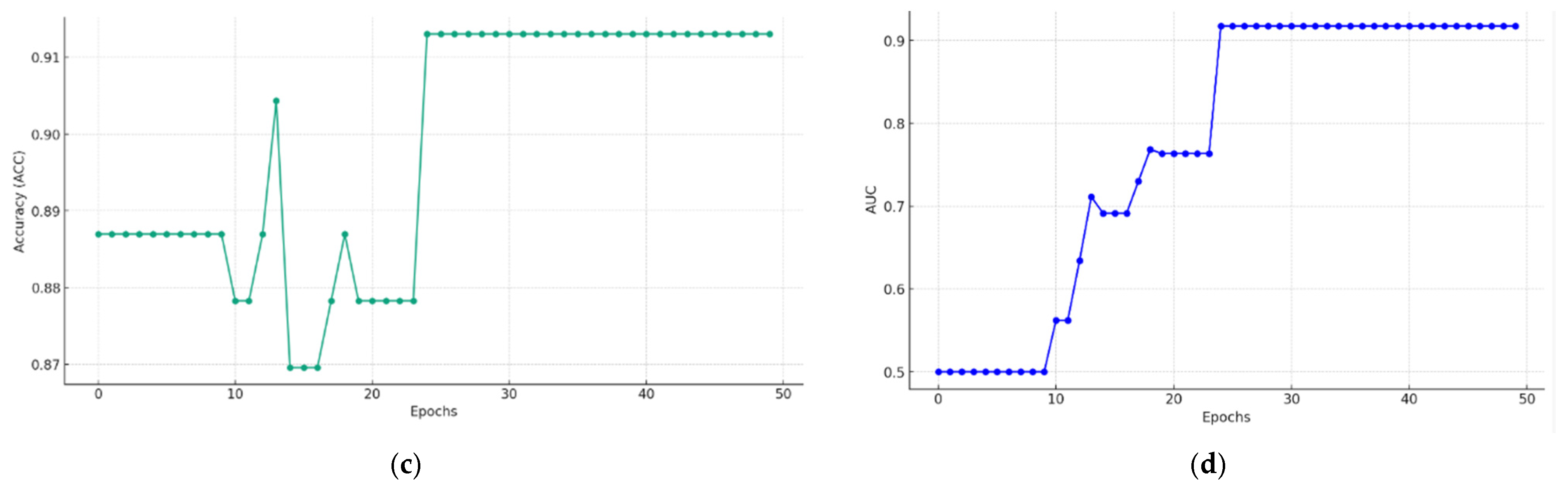

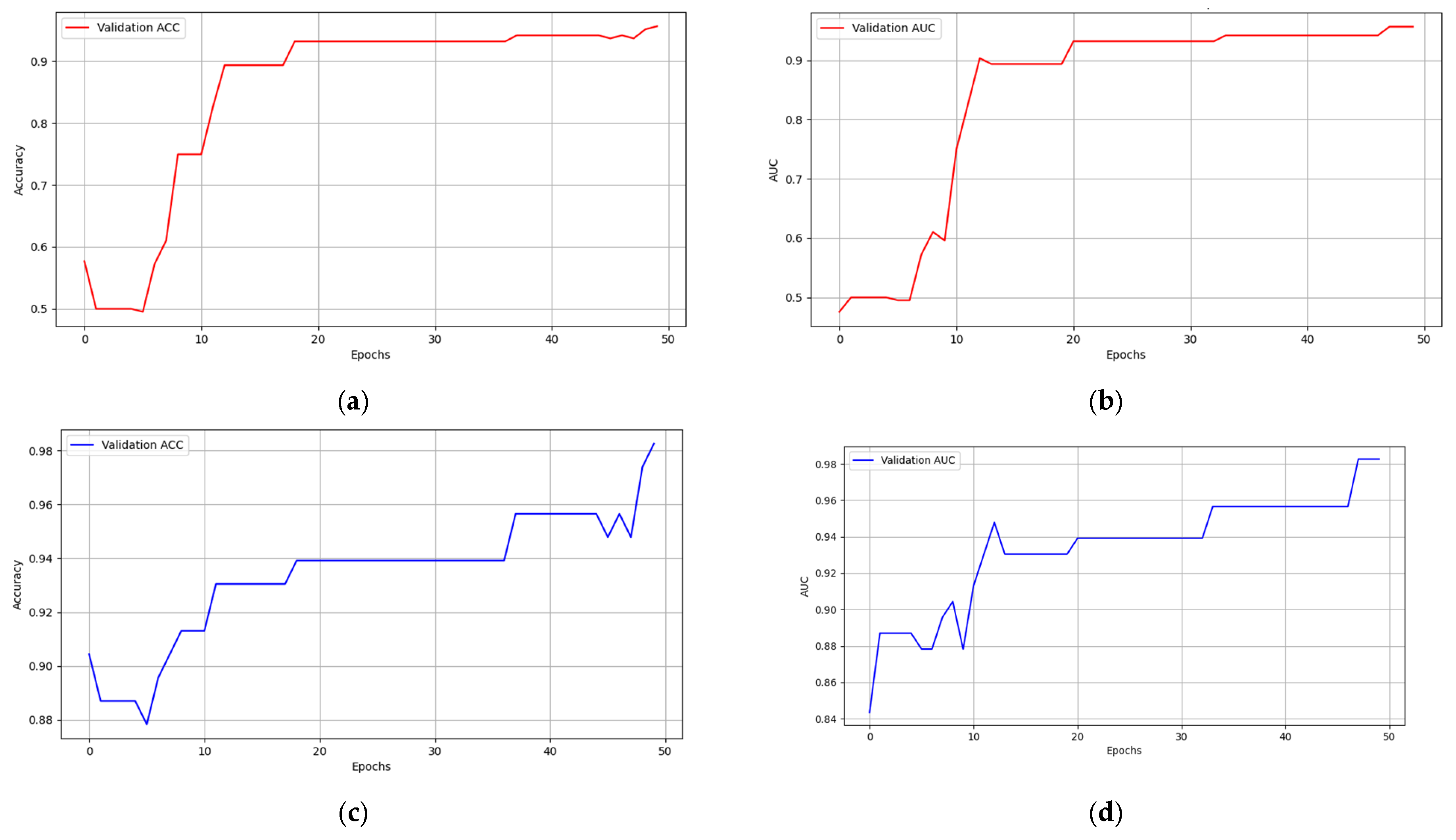

5.2. Multimodal Federated-Learning Framework Evaluation

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Characteristic | N (%) |
|---|---|
| Median age (range) | 49 (19–78) |
| Cervical carcinoma | 423 |
| Endometrial carcinoma | 144 |
| Lymph node metastasis | |
| Cervical carcinoma | |
| No | 334 (78.96) |
| Yes | 89 (21.04) |
| Endometrial carcinoma | |
| No | 130 (90.28) |
| Yes | 14 (9.72) |
| FIGO 2009 stage | |
| Cervical carcinoma | |
| IA1 | 8 (1.89) |
| IA2 | 18 (4.26) |
| IB1 | 219 (51.77) |
| IB2 | 33 (7.80) |
| IIA1 | 61 (14.42) |
| IIA2 | 84 (19.86) |
| Endometrial carcinoma | |
| IA | 102 (70.83) |
| IB | 18 (12.50) |
| II | 6 (4.17) |
| IIIA | 4 (2.78) |
| IIIC1 | 9 (6.25) |
| IIIC2 | 5 (3.47) |
| LVSI | |
| Cervical carcinoma | |
| No | 310 (73.29) |
| Yes | 113 (26.71) |
| Endometrial carcinoma | |
| No | 123 (85.42) |
| Yes | 21 (14.58) |
| Stromal invasion | |
| Cervical carcinoma | |
| <1/3 | 171 (40.43) |
| 1/3–2/3 | 86 (20.33) |
| >2/3 | 166 (39.24) |
| Endometrial carcinoma | |
| <1/2 | 117 (81.25) |
| >1/2 | 27 (18.75) |
| Histology | |
| Cervical carcinoma | |
| Squamous cell carcinoma | 321 (75.89) |
| Adenocarcinoma | 81 (19.15) |
| Adenosquamous cell carcinoma | 8 (1.89) |
| Neuroendocrine carcinoma | 9 (2.13) |
| Clear cell carcinoma | 1 (0.24) |
| Rhabdomyosarcoma | 1 (0.24) |
| Carcinosarcoma | 1 (0.24) |
| Genital wart-like carcinoma | 1 (0.24) |
| Endometrial carcinoma | |
| Endometrioid carcinoma | 131 (90.97) |
| Clear cell carcinoma | 6 (4.17) |
| Serous carcinoma | 4 (2.78) |
| Carcinosarcoma | 3 (2.08) |
| Grade | |
| Cervical carcinoma | |
| 1 | 10 (2.36) |
| 2 | 197 (46.57) |
| 3 | 70 (16.55) |
| Non-keratinizing SCC | 26 (6.15) |
| Keratinizing SCC | 5 (1.18) |
| Not reported | 91 (21.75) |
| Endometrial carcinoma | |
| 1 | 33 (22.92) |
| 2 | 64 (44.44) |
| 3 | 26 (18.06) |
| Not reported | 8 (5.56) |

| Field | Meaning |
|---|---|
| Hospital ID | Records the unique identifier of the patient within the hospital |
| Diagnosis result | Indicates the patient’s disease diagnosis, including cervical and endometrial malignant tumors |
| Preoperative CT | Records the results of CT evaluation conducted before surgery |
| MRI | Records the results of MRI evaluation conducted before surgery |
| PET/CT LNM | Indicates the LNM in the pelvic and abdominal cavity evaluated by PET/CT |
| PET results | Records the results of PET evaluation conducted before surgery |
| CT LNM | Indicates the LNM in the pelvic and abdominal cavity evaluated by CT |
| CT results | Records the results of CT evaluation conducted before surgery |

| Client | Positive Samples | Negative Samples | Training Set | Testing Set | Validation Set |
|---|---|---|---|---|---|
| Client 0 | 111 | 115 | 181 (8:2) | 45 (8:2) | 341 (Client 1) |
| Client 1 | 226 | 115 | 273 (8:2) | 68 (8:2) | 226 (Client 0) |
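
The training and testing sizes in the client table are consistent with a plain 8:2 proportional split of each client's samples. The sketch below only reproduces those sizes; the helper name `split_sizes` and the use of rounding are assumptions for illustration, and the table does not say whether the actual split was random, stratified, or done some other way.

```python
def split_sizes(n_samples: int, train_fraction: float = 0.8) -> tuple[int, int]:
    """Return (train, test) sizes for a proportional split.

    Hypothetical helper: rounding to the nearest integer is an assumption
    chosen because it matches the sizes listed in the table.
    """
    n_train = round(n_samples * train_fraction)
    return n_train, n_samples - n_train


# (positive, negative) sample counts per client, taken from the table.
clients = {"Client 0": (111, 115), "Client 1": (226, 115)}

sizes = {}
for name, (positives, negatives) in clients.items():
    total = positives + negatives
    sizes[name] = split_sizes(total)
    print(f"{name}: total={total}, train={sizes[name][0]}, test={sizes[name][1]}")
```

Each client's full sample set is then listed as the validation set of the other client (341 samples from Client 1 for Client 0, and 226 samples from Client 0 for Client 1).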

| Imaging modality | Imaging result | Pathology: positive for LNM | Pathology: negative for LNM | Total |
|---|---|---|---|---|
| CT | Positive for LNM | 31 | 27 | 439 |
| | Negative for LNM | 64 | 317 | |
| MRI | Positive for LNM | 28 | 24 | 440 |
| | Negative for LNM | 50 | 338 | |
| PET/CT | Positive for LNM | 45 | 45 | 393 |
| | Negative for LNM | 33 | 270 | |
| CT + MRI | Positive for LNM | 27 | 25 | 336 |
| | Negative for LNM | 45 | 239 | |
| CT + PET/CT | Positive for LNM | 42 | 49 | 308 |
| | Negative for LNM | 29 | 188 | |
| MRI + PET/CT | Positive for LNM | 33 | 43 | 292 |
| | Negative for LNM | 27 | 189 | |
| CT + MRI + PET/CT | Positive for LNM | 31 | 40 | 230 |
| | Negative for LNM | 24 | 135 | |
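
Each block of counts above is a 2x2 confusion matrix with pathology as the reference standard, so the usual diagnostic metrics follow directly from it. The sketch below applies the standard definitions to the CT counts; the names `Confusion` and `diagnostic_metrics` are illustrative and do not come from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """2x2 counts with pathology as the reference standard."""
    tp: int  # imaging positive, pathology positive
    fp: int  # imaging positive, pathology negative
    fn: int  # imaging negative, pathology positive
    tn: int  # imaging negative, pathology negative


def diagnostic_metrics(c: Confusion) -> dict[str, float]:
    """Standard definitions of the five metrics, returned as fractions."""
    total = c.tp + c.fp + c.fn + c.tn
    return {
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
        "ppv": c.tp / (c.tp + c.fp),
        "npv": c.tn / (c.tn + c.fn),
        "accuracy": (c.tp + c.tn) / total,
    }


# CT counts from the table: 31 / 27 on the positive row, 64 / 317 on the negative row.
ct = Confusion(tp=31, fp=27, fn=64, tn=317)
ct_metrics = diagnostic_metrics(ct)
for name, value in ct_metrics.items():
    print(f"{name}: {value:.2%}")
```

For CT this gives a sensitivity of 32.63%, specificity of 92.15%, PPV of 53.45%, NPV of 83.20%, and accuracy of 79.27%.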

| | Group | Sensitivity | Specificity | PPV | NPV | Accuracy | AUC |
|---|---|---|---|---|---|---|---|
| Efficiency | CT | 32.63% | 92.15% | 53.45% | 83.20% | 79.27% | 0.624 (0.555–0.693) |
| | MRI | 35.90% | 93.37% | 53.85% | 87.11% | 83.18% | 0.646 (0.571–0.721) |
| | PET/CT | 57.69% | 85.71% | 50.00% | 89.11% | 80.15% | 0.717 (0.647–0.787) |
| | C-M | 37.50% | 90.53% | 51.92% | 84.15% | 79.17% | 0.640 (0.561–0.719) |
| | C-P | 59.15% | 79.32% | 46.15% | 86.64% | 74.68% | 0.692 (0.618–0.767) |
| | M-P | 55.00% | 81.47% | 43.42% | 87.10% | 76.03% | 0.682 (0.601–0.764) |
| | C-M-P | 56.36% | 77.14% | 43.06% | 85.44% | 72.17% | 0.668 (0.582–0.753) |
| p value | CT vs. MRI | 0.652 | 0.532 | 0.967 | 0.127 | 0.138 | 0.5528 |
| | CT vs. PET/CT | 0.001 * | 0.008 * | 0.682 | 0.028 * | 0.752 | 0.0172 * |
| | MRI vs. PET/CT | 0.006 * | 0.001 * | 0.659 | 0.423 | 0.258 | 0.0846 |
| | C-M vs. C-P | 0.01 * | <0.001 * | 0.507 | 0.438 | 0.176 | 0.2359 |
| | C-M vs. M-P | 0.044 * | 0.003 * | 0.344 | 0.291 | 0.346 | 0.3595 |
| | C-M vs. C-M-P | 0.034 * | <0.001 * | 0.365 | 0.834 | 0.055 | 0.5679 |
| | C-P vs. M-P | 0.632 | 0.559 | 0.724 | 0.789 | 0.701 | 0.8318 |
| | C-P vs. C-M-P | 0.753 | 0.595 | 0.752 | 0.634 | 0.515 | 0.6139 |
| | M-P vs. C-M-P | 0.883 | 0.284 | 0.977 | 0.469 | 0.317 | 0.7719 |
| | CT vs. C-P | 0.001 * | <0.001 * | 0.385 | 0.265 | 0.139 | 0.0942 |
| | PET/CT vs. C-P | 0.856 | 0.048 * | 0.605 | 0.391 | 0.084 | 0.5746 |
| | MRI vs. M-P | 0.025 * | <0.001 * | 0.246 | 0.891 | 0.017 * | 0.4212 |

C-M: CT + MRI; C-P: CT + PET/CT; M-P: MRI + PET/CT; C-M-P: CT + MRI + PET/CT.
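
The AUC point estimates in this table agree, to three decimals, with the single-operating-point identity AUC = (sensitivity + specificity) / 2, which is what the ROC curve of a binary positive/negative reading reduces to. The article does not state how the AUC was computed, so the sketch below is only a consistency check against the confusion-matrix counts reported earlier; `single_point_auc` is a hypothetical helper name.

```python
# (tp, fp, fn, tn) per group, taken from the confusion-matrix table.
COUNTS = {
    "CT": (31, 27, 64, 317),
    "MRI": (28, 24, 50, 338),
    "PET/CT": (45, 45, 33, 270),
    "C-M": (27, 25, 45, 239),
    "C-P": (42, 49, 29, 188),
    "M-P": (33, 43, 27, 189),
    "C-M-P": (31, 40, 24, 135),
}

# AUC point estimates as printed in the efficiency table.
REPORTED_AUC = {
    "CT": 0.624, "MRI": 0.646, "PET/CT": 0.717, "C-M": 0.640,
    "C-P": 0.692, "M-P": 0.682, "C-M-P": 0.668,
}


def single_point_auc(tp: int, fp: int, fn: int, tn: int) -> float:
    """Area under a ROC curve that has a single operating point.

    The curve runs (0, 0) -> (1 - specificity, sensitivity) -> (1, 1),
    and the area under it equals the mean of sensitivity and specificity.
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2


computed = {group: single_point_auc(*counts) for group, counts in COUNTS.items()}
for group, auc in computed.items():
    print(f"{group}: computed={auc:.3f}, reported={REPORTED_AUC[group]:.3f}")
```

Because this quantity is the balanced accuracy, a modality with high specificity but low sensitivity (CT, MRI) can score a lower AUC than PET/CT even when its raw accuracy is similar or higher.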

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, Z.; Ma, L.; Ding, Y.; Zhao, X.; Shi, X.; Lu, H.; Liu, K. Enhancing the Accuracy of Lymph-Node-Metastasis Prediction in Gynecologic Malignancies Using Multimodal Federated Learning: Integrating CT, MRI, and PET/CT. Cancers 2023, 15, 5281. https://doi.org/10.3390/cancers15215281
