Simple Summary

Manual detection and delineation of brain metastases are time consuming and variable. Studies have therefore attempted to automate this process using imaging and artificial intelligence. To the best of our knowledge, no study has conducted a systematic review and meta-analysis on brain metastasis detection using only deep learning and MRI. A systematic review of this topic, a quality assessment of the studies, and a meta-analysis are therefore required to determine the strength of the current evidence. The purpose of this study was to perform a systematic review and meta-analysis of the performance of deep learning models that use MRI to detect brain metastases in cancer patients.

Abstract

Since manual detection of brain metastases (BMs) is time consuming, studies have attempted to automate this process using deep learning. The purpose of this study was to conduct a systematic review and meta-analysis of the performance of deep learning models that use magnetic resonance imaging (MRI) to detect BMs in cancer patients. A systematic search of MEDLINE, EMBASE, and Web of Science was conducted until 30 September 2022. Inclusion criteria were: patients with BMs; deep learning using MRI images was applied to detect the BMs; sufficient data were present on detection performance; original research articles. Exclusion criteria were: reviews, letters, guidelines, editorials, or errata; case reports or series with fewer than 20 patients; studies with overlapping cohorts; insufficient data on detection performance; machine learning rather than deep learning was used to detect BMs; articles not written in English. The Quality Assessment of Diagnostic Accuracy Studies-2 and the Checklist for Artificial Intelligence in Medical Imaging were used to assess study quality. Finally, 25 eligible studies were identified, 24 of which were included in the quantitative analysis. The pooled proportion of both patient-wise and lesion-wise detectability was 89%. Pooled analysis of false positive rates could not be performed because of differences in reporting. Deep learning algorithms effectively detect BMs, but articles should adhere more strictly to the reporting checklists.

1. Introduction

Brain metastases (BMs) are observed in nearly 20% of adult cancer patients [1]. They are the most common type of intracranial neoplasm in adults [2]. Although the brain parenchyma is the most common intracranial metastatic site, BMs frequently occur in conjunction with metastases to other sites such as the cranium, dura, or leptomeninges [3]. Accurate diagnosis of BMs is therefore critical.

Contrast-enhanced magnetic resonance imaging (MRI) is the preferred imaging examination for diagnosing BMs, as it is more sensitive than either nonenhanced MRI or computed tomography (CT) in detecting lesions [4,5,6,7]. Although whole-brain radiotherapy (WBRT), which can cause cognitive decline, has historically been the mainstay of radiotherapy for BMs, stereotactic radiosurgery (SRS) has now become the standard of care in many clinical situations. In 2014, a multi-institutional prospective study demonstrated that SRS without WBRT in patients with five to ten BMs was non-inferior to SRS without WBRT in patients with two to four BMs [8]. SRS therefore plays a more prominent role today, and other treatment approaches, such as hippocampal-avoidance WBRT and newer systemic agents, are being developed [9]. In current standard practice, each BM must be correctly detected and manually delineated for SRS treatment planning, a process that is time consuming and subject to considerable inter- and intra-observer variability [10].

Because manual detection and delineation of BMs are time consuming and variable, research has sought to automate this process using imaging studies and artificial intelligence [11]. Deep learning, a type of representation learning, has emerged as the state-of-the-art machine learning approach [12]. Because it learns the relationship between input and output without a separate feature extraction or feature engineering step, deep learning has accelerated the development of computer-aided detection in various fields [13]. Furthermore, with advances in network architectures and increases in the quantity and quality of imaging data in recent years, the performance of deep learning-based approaches has improved greatly [14]. A meta-analysis of computer-aided detection of BMs showed a pooled proportion of detectability of 90% (95% confidence interval [CI], 85–93%) [11]. However, that meta-analysis pooled machine learning and deep learning studies: only seven of its twelve included studies implemented deep learning.

To the best of our knowledge, no study in the literature has performed a systematic review and meta-analysis of BM detection using exclusively deep learning and MRI. It is therefore necessary to conduct a systematic review of this topic, assess the quality of the studies, and perform a meta-analysis to evaluate the strength of the current evidence. This study aimed to conduct a systematic review and meta-analysis of the performance of deep learning models that use MRI to detect BMs in cancer patients.

2. Materials and Methods

This study followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Diagnostic Test Accuracy Studies (PRISMA-DTA) [15]. The PRISMA-DTA for Abstracts and PRISMA-DTA checklists are available online in Supplemental Tables S1 and S2, respectively. The study was not registered. Institutional Review Board approval was not required because the analysis involved only anonymized data and all included studies had received local ethics approval.

2.1. Literature Search

A systematic search of articles was performed by two independent readers (B.B.O. and M.K., with more than 2 years and 4 years of experience in conducting systematic reviews and meta-analyses, respectively) from inception until September 30, 2022. The following search terms were used to search MEDLINE: (“deep learning” OR “deep architecture” OR “artificial neural network” OR “convolutional neural network” OR “convolutional network” OR CNN OR “recurrent neural network” OR RNN OR Auto-Encoder OR Autoencoder OR “Deep belief network” OR DBN OR “Restricted Boltzmann machine” RBM OR “Long Short Term Memory” OR “Long Short-Term Memory” OR LSTM OR “Gated Recurrent Units” OR GRU) AND ((“brain metastasis”) OR (“brain metastases”) OR (“metastatic brain tumor”) OR (“intra-axial metastatic tumor”) OR (“cerebral metastasis”) OR (“cerebral metastases”)). The search strategy was then adapted for EMBASE and Web of Science before searching these databases.

2.2. Study Selection

All search results were exported to the Rayyan online platform [16]. After removing duplicates, two authors (B.B.O. and M.K.) independently screened titles and abstracts on the Rayyan platform and reviewed the full texts of potentially relevant articles. A senior author (M.W., 25 years of experience in neuroradiology) reviewed the results of each search and analysis step, and any disagreement was resolved through discussion with the senior author.

Articles were included if they met all of the following criteria: (I) inclusion of patients with BMs; (II) deep learning using MRI images was applied to detect the BMs; (III) sufficient data were present on the detection performance of the deep learning algorithms; (IV) original research articles.

Articles were excluded if they met any of the following criteria: (I) reviews, letters, guidelines, editorials, or errata; (II) case reports or series with fewer than 20 patients; (III) studies with overlapping cohorts; (IV) insufficient data on the detection performance of the deep learning algorithms; (V) machine learning, not deep learning, was used to detect BMs; (VI) articles not written in English. In the case of overlapping cohorts, the article with the largest sample size was included; if the articles had the same number of patients, the most recent one was preferred.
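The tie-breaking rule for overlapping cohorts can be expressed as a small selection function. This is a minimal sketch, not the authors' tooling; the `Article` record, its fields (`title`, `n_patients`, `year`), and the example entries are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Article:
    # Hypothetical record for one article in a group of overlapping cohorts
    title: str
    n_patients: int
    year: int

def select_from_overlapping(group):
    """Keep the article with the largest sample size; on a tie, keep the most recent."""
    if not group:
        raise ValueError("empty group of overlapping articles")
    # max() compares the key tuples element by element: sample size first, then year
    return max(group, key=lambda a: (a.n_patients, a.year))

# Usage with made-up records
group = [
    Article("Cohort A, first report", 120, 2019),
    Article("Cohort A, extended", 180, 2021),
    Article("Cohort A, reanalysis", 180, 2022),
]
print(select_from_overlapping(group).title)  # Cohort A, reanalysis
```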

2.3. Data Extraction

The following variables were collected by the two authors (B.B.O. and M.K.): (I) study characteristics (first author, publication year, study design, number of patients in each dataset, sex of patients in each dataset, number of metastatic lesions in each dataset, mean or median size of lesions, reference standard for metastasis detection, validation method, and primary tumors); (II) deep learning details and statistics (detectability, false positive rate, deep learning algorithm, and data augmentation); (III) MRI and scanner characteristics; and (IV) inclusion and exclusion criteria of each study.

2.4. Quality Assessment

Two authors (B.B.O. and M.K.) independently performed the quality assessments using the Checklist for Artificial Intelligence in Medical Imaging (CLAIM) and Quality Assessment of Diagnostic Accuracy Studies-2 (QUADAS-2) [17,18]. The CLAIM is a recently introduced 42-item checklist for evaluating artificial intelligence studies in medical imaging. Each item was scored 0 (not satisfied) or 1 (satisfied), all items were weighted equally, and the CLAIM score of each study was calculated by summing its item scores. QUADAS-2 was used to assess four domains: (I) patient selection, (II) index test, (III) reference standard, and (IV) flow and timing. Any disagreements during the quality assessment were resolved with the assistance of the senior author.
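The CLAIM scoring rule amounts to a validated sum of binary item scores. A minimal sketch follows; the `claim_score` helper and the example item vector are hypothetical illustrations, not part of the CLAIM publication.

```python
CLAIM_ITEMS = 42  # number of items in the CLAIM checklist

def claim_score(item_scores):
    """Sum equally weighted 0/1 item scores into a total CLAIM score (0 to 42)."""
    if len(item_scores) != CLAIM_ITEMS:
        raise ValueError(f"expected {CLAIM_ITEMS} item scores, got {len(item_scores)}")
    if any(s not in (0, 1) for s in item_scores):
        raise ValueError("each item must be scored 0 or 1")
    return sum(item_scores)

# Hypothetical study that satisfies the first 30 items only
example = [1] * 30 + [0] * 12
print(claim_score(example))  # 30
```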

2.5. Meta-Analysis

The primary goal was to assess the detectability (sensitivity) of deep learning algorithms in detecting BMs. For the pooled proportion analysis of detectability across all studies included in the meta-analysis, the reported sensitivity of each study was multiplied by its total number of patients to estimate the number of detected events; in other words, each reported sensitivity was converted to a patient-wise sensitivity. A second pooled proportion analysis of detectability was performed for studies reporting lesion-wise sensitivity. The detectability of each study in this group was calculated by dividing the number of correctly identified metastases in the test set (using the reported true positive values or values calculated from the reported sensitivities) by the total number of metastases in the test set. The statistical analyses described below were performed for both pooled analyses.
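The two event definitions can be sketched as follows. This is an illustration under assumptions: the function names are hypothetical, and rounding fractional event counts to the nearest integer is an assumption, since the text does not say how non-integer products were handled.

```python
def patient_wise_events(reported_sensitivity, n_patients):
    """Estimated detected events = reported sensitivity x number of patients.

    Rounding to the nearest integer is an assumption of this sketch.
    """
    return round(reported_sensitivity * n_patients)

def lesion_wise_detectability(n_lesions_test, true_positives=None, sensitivity=None):
    """Detected metastases / all metastases in the test set.

    Uses the reported true positives when available; otherwise back-calculates
    them from the reported sensitivity (again rounding, as an assumption).
    Returns (true positives, detectability).
    """
    if true_positives is None:
        if sensitivity is None:
            raise ValueError("need true_positives or sensitivity")
        true_positives = round(sensitivity * n_lesions_test)
    return true_positives, true_positives / n_lesions_test

# Hypothetical studies
print(patient_wise_events(0.89, 100))                    # 89
print(lesion_wise_detectability(250, sensitivity=0.92))  # (230, 0.92)
```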

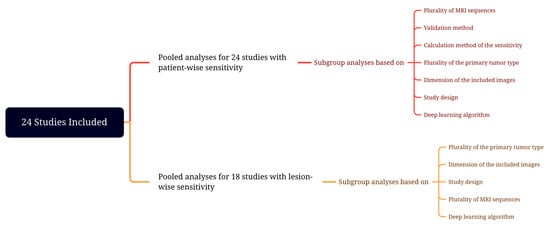

The pooled proportion of detectability with 95% CIs was estimated with a random-effects model. The random-effects model, using the inverse variance method, was chosen because it captures uncertainty due to heterogeneity among studies [19,20]. The Freeman–Tukey double arcsine transformation was applied to stabilize the variances before pooling. Forest plots were created to provide a visual representation of the results. Heterogeneity among the included studies was evaluated using the Q-test, with p < 0.05 indicating the presence of heterogeneity, and the I2 statistic. I2 values were interpreted as follows: heterogeneity that might not be important (0–25%), low heterogeneity (26–50%), moderate heterogeneity (51–75%), and high heterogeneity (76–100%) [21]. Since heterogeneity might indicate subgroup effects, we also explored heterogeneity in the pooled results using subgroup analyses [22]. Subgroup analyses were conducted according to the validation method (split training-test sets versus cross-validation), the number of MRI sequences used (single sequence versus multiple sequences), the calculation method of the reported sensitivity (lesion-wise versus other [patient-wise, voxel-wise]), the number of primary tumor types (single versus multiple), the dimension of the included images (2D versus 3D versus both), study design (single-center versus multi-center), and the deep learning algorithm (DeepMedic versus U-Net versus others) (Figure 1). Meta-regression analyses were performed to determine whether training sample size was associated with sensitivity and whether it explained heterogeneity. Publication bias occurs when the findings of a study influence its likelihood of publication; funnel plot asymmetry was tested for publication bias with the Egger method [23].
R version 4.2.1 was used for all statistical analyses, and the metaprop function from the meta package was used to perform the meta-analysis and generate pooled estimates [24]. An alpha level of 0.05 was considered statistically significant.

Figure 1.

Meta-analysis design.
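The pooling steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reproduction of the metaprop implementation: the DerSimonian–Laird estimator for the between-study variance (tau²), Miller's back-transformation using the harmonic mean sample size, the helper names, and the example counts are all choices of this sketch, and the R package may use a different tau² estimator by default.

```python
import math

def ft_transform(x, n):
    """Freeman-Tukey double arcsine of x events out of n, with its approximate variance."""
    t = math.asin(math.sqrt(x / (n + 1))) + math.asin(math.sqrt((x + 1) / (n + 1)))
    return t, 1.0 / (n + 0.5)

def ft_back(t, n):
    """Miller's inverse of the double arcsine; n is a (harmonic mean) sample size."""
    t_min = math.asin(math.sqrt(1 / (n + 1)))                # transform of 0 events
    t_max = math.asin(math.sqrt(n / (n + 1))) + math.pi / 2  # transform of n events
    if t <= t_min:
        return 0.0
    if t >= t_max:
        return 1.0
    s = math.sin(t)
    inner = 1 - (s + (s - 1 / s) / n) ** 2
    return 0.5 * (1 - math.copysign(1.0, math.cos(t)) * math.sqrt(max(inner, 0.0)))

def pool_random_effects(events, totals, z=1.959964):
    """Inverse-variance random-effects pooling of proportions on the double arcsine scale.

    tau^2 uses the DerSimonian-Laird moment estimator (an assumption of this sketch).
    Returns the pooled proportion, its 95% CI, Cochran's Q, I^2 (%), and tau^2.
    """
    if len(events) < 2:
        raise ValueError("need at least two studies")
    ts, vs = zip(*(ft_transform(x, n) for x, n in zip(events, totals)))
    w = [1 / v for v in vs]                                # fixed-effect weights
    t_fixed = sum(wi * ti for wi, ti in zip(w, ts)) / sum(w)
    q = sum(wi * (ti - t_fixed) ** 2 for wi, ti in zip(w, ts))
    df = len(ts) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)
    w_re = [1 / (v + tau2) for v in vs]                    # random-effects weights
    t_re = sum(wi * ti for wi, ti in zip(w_re, ts)) / sum(w_re)
    se_re = math.sqrt(1 / sum(w_re))
    n_harm = len(totals) / sum(1 / n for n in totals)      # harmonic mean for back-transform
    i2 = 100 * (q - df) / q if q > df else 0.0
    return {
        "pooled": ft_back(t_re, n_harm),
        "ci": (ft_back(t_re - z * se_re, n_harm), ft_back(t_re + z * se_re, n_harm)),
        "Q": q, "I2": i2, "tau2": tau2,
    }

def classify_i2(i2):
    """Map I^2 (%) to the categories used in this review."""
    if i2 <= 25:
        return "might not be important"
    if i2 <= 50:
        return "low"
    if i2 <= 75:
        return "moderate"
    return "high"

# Three hypothetical studies, each with 100 patients
res = pool_random_effects([58, 98, 89], [100, 100, 100])
print(round(res["pooled"], 3), round(res["I2"]), classify_i2(res["I2"]))
```

Pooling on the transformed scale and back-transforming only the final estimate and CI limits keeps the variance approximately independent of the proportion, which is the reason for applying the transformation before pooling.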

3. Results

3.1. Literature Search

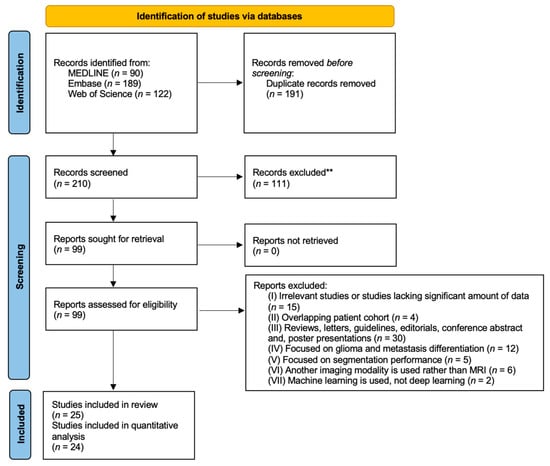

The study selection process is shown in the PRISMA flowchart (Figure 2). The literature search yielded 401 records: 90 from MEDLINE, 189 from EMBASE, and 122 from Web of Science. After removing 191 duplicates, the remaining 210 records were screened by title and abstract on the Rayyan platform, and 111 were excluded. The full texts of the remaining 99 articles were retrieved and reviewed.

Figure 2.

Study selection process.

Seventy-four articles were excluded for the following reasons: irrelevant studies or studies lacking sufficient data (n = 15); overlapping patient cohorts (n = 4); reviews, letters, guidelines, editorials, conference abstracts, and poster presentations (n = 30); focus on differentiating glioma from metastasis (n = 12); focus on segmentation performance (n = 5); use of an imaging modality other than MRI (n = 6); and use of machine learning rather than deep learning (n = 2).

Finally, 25 eligible studies were identified [14,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48]. Due to a lack of sensitivity reporting, one study was included in the review but not in the quantitative analysis.
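The counts in the selection process can be cross-checked with simple arithmetic. The sketch below only re-adds the numbers reported in this section; the dictionary labels are shortened paraphrases of the exclusion reasons.

```python
# Records retrieved from each database (numbers reported in this section)
retrieved = {"MEDLINE": 90, "EMBASE": 189, "Web of Science": 122}
duplicates = 191
excluded_title_abstract = 111
full_text_exclusions = {
    "irrelevant or insufficient data": 15,
    "overlapping cohort": 4,
    "reviews, letters, abstracts, posters, etc.": 30,
    "glioma vs. metastasis differentiation": 12,
    "segmentation performance only": 5,
    "non-MRI modality": 6,
    "machine learning, not deep learning": 2,
}

total = sum(retrieved.values())                              # 401
screened = total - duplicates                                # 210
full_text = screened - excluded_title_abstract               # 99
included = full_text - sum(full_text_exclusions.values())    # 25
quantitative = included - 1  # one study did not report sensitivity
print(total, screened, full_text, included, quantitative)    # 401 210 99 25 24
```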

3.2. Quality Assessment

Table 1 summarizes the quality assessment of the included studies using the CLAIM. The mean CLAIM score of the 25 included studies was 26.56 (standard deviation [SD], 3.19; range, 18.00–31.00). The mean subsection scores were 1.52 (SD = 0.71) for title/abstract, 2.00 (SD = 0.00) for introduction, 18.16 (SD = 2.25) for methods, 2.00 (SD = 0.82) for results, 1.96 (SD = 0.20) for discussion, and 0.92 (SD = 0.28) for other information.

Table 1.

The Checklist for Artificial Intelligence in Medical Imaging scores.

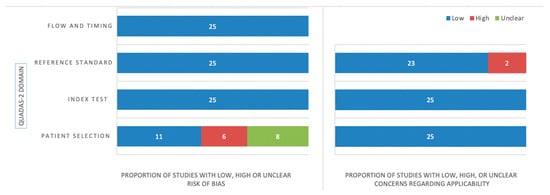

Figure 3 summarizes the quality assessment of the included studies using the QUADAS-2 tool. In the patient selection domain, eight studies had an unclear risk of bias because they did not specify the inclusion criteria for patient enrollment or did not report the excluded patients or exclusion criteria [26,30,33,34,35,41,45,47]. Six studies had a high risk of bias because patients were excluded based on lesion size or number of lesions [25,29,31,32,37,38]. The inclusion and exclusion criteria of the studies are available online in Supplementary Table S3. All included studies were judged to have a low risk of bias in the index test domain because the algorithm was blinded to the reference standard. All studies were also judged to have a low risk of bias in the reference standard domain because lesions were annotated on contrast-enhanced MRI manually, or semi-manually with manual correction, which is the method of choice for assessing and delineating brain metastases [5]. No risk of bias was found in the flow and timing domain. There were no applicability concerns regarding patient selection or the index test. Two studies had high applicability concerns regarding the reference standard because they used 1.0 Tesla (T) scanners [36,40]; in current practice, scanners with field strengths below 1.5 T are not recommended for BM detection [49].

Figure 3.

A summary of the Quality Assessment of Diagnostic Accuracy Studies-2 results.

3.3. Characteristics of Included Studies

The patient and study characteristics are shown in Table 2. All studies were retrospective. Five studies were multi-center [14,32,36,43,44], and the others were single-center. Four studies used cross-validation [30,31,38,43], and the others used split training-test sets to evaluate their models. The reference-standard delineation of brain metastases was performed semi-automatically in one study [25] and manually in the others. The combined training and validation sets, the test sets, and the other sets included 6840, 1419, and 643 patients, respectively, for a total of 8902 patients across the 25 studies. The number of metastatic lesions in the training sets was reported in 20 studies, totaling 31,530, and the number in the test sets was reported in 18 studies, totaling 5565. The total reported number of metastatic lesions was 40,654. Twenty-two studies included multiple primary tumor types, and three included only one: two articles included only malignant melanoma patients, and one included only non-small cell lung cancer (NSCLC) patients [30,36,40]. Table 2 also displays the mean or median lesion volumes or longest lesion diameters.

Table 2.

The patient and study characteristics.

A summary of the scanning details of the studies is shown in Table 3. Eleven studies included both 3.0T and 1.5T scanners [25,26,28,29,32,34,41,42,44,47,48]; two included only 1.5T scanners [27,30]; five included only 3.0T scanners [37,38,39,43,46]; and two included 1.0T, 1.5T, and 3.0T scanners [36,40]. Five studies did not report the field strength of their scanners [14,31,33,35,45]. Ten studies used multiple MRI sequences in their models [14,26,27,32,36,38,39,40,41,42], whereas the remaining fifteen used only one. Two studies used 2D images [30,33], seven used both 2D and 3D images [14,26,27,36,38,40,41], and the remaining sixteen used only 3D images. All studies included at least one contrast-enhanced sequence. The most common sequence, used in twenty studies, was 3D contrast-enhanced T1-weighted imaging (WI). The slice thickness of the images ranged from 0.43 mm to 7.22 mm (Table 3).

Table 3.

Scanning characteristics.

3.4. Deep Learning Algorithms

Table 4 summarizes the detectability statistics, false positive rates, and deep learning algorithm details. U-Net was used in ten studies [14,26,28,29,30,39,41,42,45,46] and DeepMedic in five [27,35,36,37,40]. Two studies implemented a single-shot detector (SSD) [25,48]. Each of the following algorithms was used in a single study: You Only Look Once v3, fully convolutional network, convolutional neural network (CNN), feature pyramid network, faster region-based CNN, input-level dropout model, Modified V-Net 3D, CropNet and noisy student. Data augmentation was implemented in 19 studies and not in the remaining 6 [32,37,41,43,46,48]. False positive rates were reported per scan, per patient, per slice, per case, or per lesion, and two studies did not report them. Detailed information can be found in Table 4.

Table 4.

Algorithms and statistics.

3.5. Assessment of Detectability Performance

In the pooled detectability analysis, we included the internal test set results or the cross-validation results. If a study used more than one algorithm, we included the best-performing one. Likewise, if results were reported for more than one input level, we included the one that yielded the best outcome.

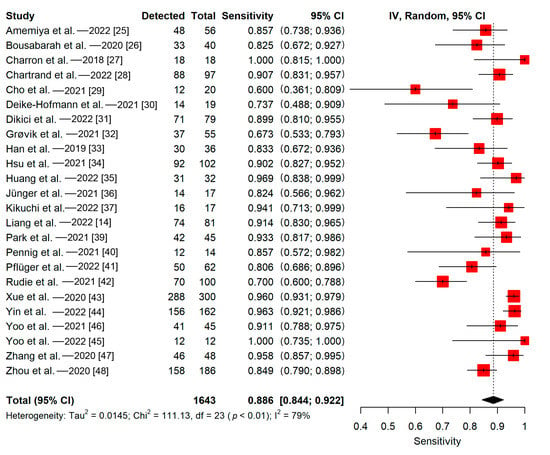

3.5.1. Patient-Wise

Twenty studies evaluated and reported their detectability lesion-wise, whereas the remaining four assessed and reported their detectability voxel-wise or patient-wise. The detectability of the 24 included studies ranged from 58% to 98%. The pooled proportion of detectability (patient-wise) of deep learning algorithms in all 24 included studies was 89% (95% CI, 84–92%) (Figure 4). The meta-regression did not find a significant impact of the training sample size on the sensitivity (p = 0.12). The Q-test indicated that heterogeneity was present across the studies (Q = 111.13, p < 0.01), and the Higgins I2 statistic showed the presence of high heterogeneity in detectability (I2 = 79%).

Figure 4.

Forest plot of deep learning algorithms’ patient-wise detectability.
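The meta-regression on training sample size can be sketched as a weighted least-squares fit on the transformed scale. This is a simplified, hypothetical illustration: the `meta_regression` helper and the example data are invented, the residual between-study variance `tau2` is supplied by the caller rather than estimated, and the Wald z-test shown here may differ from the test reported by the R tooling used in this review.

```python
import math

def meta_regression(y, v, x, tau2):
    """Weighted least-squares meta-regression of effect sizes y on one moderator x.

    y: per-study effects on the transformed scale, v: within-study variances,
    x: moderator values (e.g. training sample size), tau2: residual
    between-study variance, supplied by the caller in this sketch.
    Returns (slope, standard error of slope, two-sided Wald p-value).
    """
    w = [1 / (vi + tau2) for vi in v]              # random-effects weights
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    se = math.sqrt(1 / sxx)                        # weights treated as known
    z = slope / se
    p = math.erfc(abs(z) / math.sqrt(2))           # two-sided normal p-value
    return slope, se, p

# Hypothetical example: double-arcsine sensitivities vs. training-set size
y = [2.31, 2.52, 2.44, 2.60, 2.38]
v = [0.010, 0.004, 0.006, 0.002, 0.008]
train_n = [80, 250, 150, 600, 120]
print(meta_regression(y, v, train_n, tau2=0.02))
```

A slope p-value above 0.05, as reported in this review, means the data do not show an association between training size and sensitivity; it does not show that no association exists.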

There was no statistically significant difference in performance between groups in any subgroup analysis (p-values ranged from 0.08 to 0.69). Heterogeneity was moderate or high in the subgroups defined by the number of MRI sequences (single MRI sequence: Q = 50.86, p < 0.01, I2 = 72%; multiple MRI sequences: Q = 33.09, p < 0.01, I2 = 76%), validation method (split training-test: Q = 83.18, p < 0.01, I2 = 76%; cross-validation: Q = 11.63, p < 0.01, I2 = 83%), study design (single-center: Q = 52.65, p < 0.01, I2 = 66%; multi-center: Q = 36.83, p < 0.01, I2 = 89%), and calculation method of the reported sensitivity (lesion-wise: Q = 68.78, p < 0.01, I2 = 72%; others: Q = 37.60, p < 0.01, I2 = 92%). In the subgroup analysis based on the number of primary tumor types, heterogeneity was high in studies with multiple primary tumor types (Q = 105.92, p < 0.01, I2 = 81%), whereas there was no evidence of heterogeneity in studies with a single primary tumor type (Q = 0.66, p = 0.72, I2 = 0%). In the subgroup analysis based on image dimension, heterogeneity was high in studies with 3D images (Q = 94.2, p < 0.01, I2 = 84%), whereas the Q-test did not detect significant heterogeneity in studies with a mixture of 2D and 3D images (Q = 9.64, p = 0.09, I2 = 48%) or in studies with 2D images (Q = 0.72, p = 0.39, I2 = 0%). In the meta-regression, the residual heterogeneity not explained by training sample size remained significant (p < 0.0001), indicating that training sample size did not account for the heterogeneity.
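The between-subgroup comparison can be illustrated with a Q_between statistic: the pooled estimates of the subgroups are compared with their own inverse-variance weighted mean, and the result is referred to a chi-square distribution with (number of subgroups - 1) degrees of freedom. This is a minimal sketch that assumes each subgroup has already been pooled on the transformed scale; the helper names and input values are hypothetical, and the closed-form chi-square tail (valid for integer degrees of freedom) is used only to avoid external libraries.

```python
import math

def chi2_sf(x, df):
    """Upper-tail chi-square probability for integer df, via closed-form expressions."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # odd df: erfc(sqrt(x/2)) plus a finite series
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for i in range(1, (df + 1) // 2):
        total += term
        term *= x / (2 * i + 1)
    return total

def subgroup_test(estimates, std_errors):
    """Q_between test for differences between pooled subgroup estimates."""
    w = [1 / se ** 2 for se in std_errors]
    mean = sum(wi * ei for wi, ei in zip(w, estimates)) / sum(w)
    q_between = sum(wi * (ei - mean) ** 2 for wi, ei in zip(w, estimates))
    df = len(estimates) - 1
    return q_between, df, chi2_sf(q_between, df)

# Hypothetical pooled estimates (double arcsine scale) for three algorithm subgroups
print(subgroup_test([2.45, 2.38, 2.52], [0.06, 0.09, 0.08]))
```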

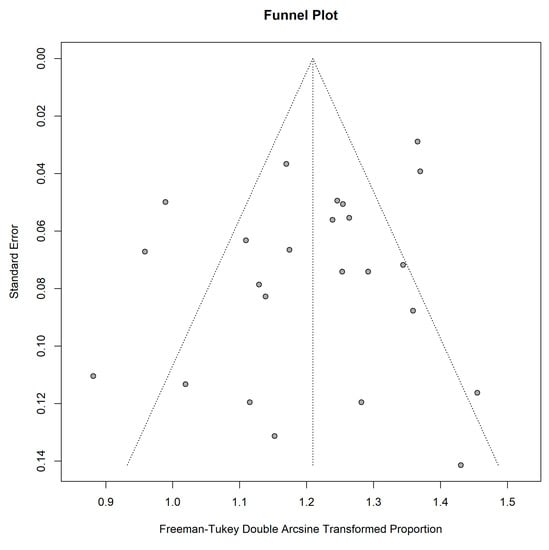

The funnel plot was asymmetrical, suggesting possible publication bias among the included studies (Figure 5), and not all studies fell within the pseudo-95% confidence limits [50]. However, the Egger test did not indicate significant publication bias (regression intercept = 1.32, p = 0.15).

Figure 5.

Funnel plot for studies included in the pooled analysis for patient-wise detectability.
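Egger's test regresses each study's standardized effect (effect divided by its standard error) on its precision (one divided by the standard error); an intercept far from zero indicates funnel plot asymmetry. A minimal sketch with hypothetical inputs follows. It returns the t statistic and its degrees of freedom rather than a p-value; the two-sided p-value comes from a t distribution with k - 2 degrees of freedom (for example, with SciPy, `2 * scipy.stats.t.sf(abs(t), df)`).

```python
import math

def egger_test(y, se):
    """Egger's regression test for funnel-plot asymmetry.

    Fits y/se = a + b * (1/se) by ordinary least squares. An intercept a
    far from zero suggests small-study effects such as publication bias.
    Returns (a, standard error of a, t statistic, degrees of freedom).
    """
    k = len(y)
    if k < 3:
        raise ValueError("Egger's test needs at least three studies")
    x = [1 / s for s in se]                    # precision
    z = [yi / s for yi, s in zip(y, se)]       # standardized effect
    x_bar, z_bar = sum(x) / k, sum(z) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z)) / sxx
    a = z_bar - b * x_bar
    rss = sum((zi - a - b * xi) ** 2 for xi, zi in zip(x, z))
    se_a = math.sqrt(rss / (k - 2) * (1 / k + x_bar ** 2 / sxx))
    t = a / se_a if se_a > 0 else math.copysign(math.inf, a)
    return a, se_a, t, k - 2

# Hypothetical transformed sensitivities and their standard errors
y = [2.35, 2.50, 2.41, 2.62, 2.70, 2.48]
se = [0.05, 0.07, 0.09, 0.12, 0.15, 0.10]
print(egger_test(y, se))
```

Because the test has little power with few studies, a non-significant intercept, as reported here, does not rule out the asymmetry seen on visual inspection.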

3.5.2. Lesion-Wise

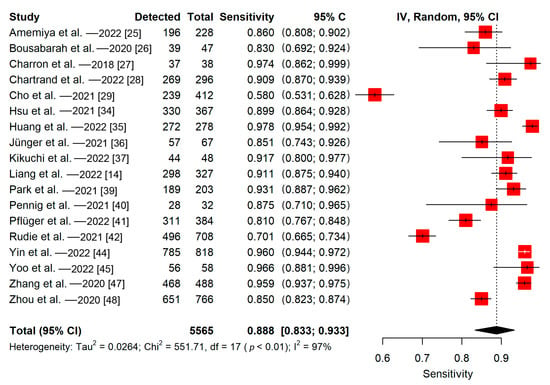

Of the 20 studies that evaluated and reported detectability lesion-wise, 18 reported the number of metastases in their test sets. All studies in this group used split training-test sets to evaluate their models. The detectability of these 18 studies ranged from 58% to 98%. The pooled proportion of lesion-wise detectability of deep learning algorithms was 89% (95% CI, 83–93%) (Figure 6). The meta-regression did not find a significant effect of training sample size on sensitivity (p = 0.86). The Q-test indicated heterogeneity across the studies (Q = 551.71, p < 0.01), and the Higgins I2 statistic indicated high heterogeneity in detectability (I2 = 97%).

Figure 6.

Forest plot of deep learning algorithms’ lesion-wise detectability.

There was no statistically significant difference in performance between groups in any subgroup analysis (p-values ranged from 0.26 to 0.68). Heterogeneity was high in the subgroups defined by the number of MRI sequences (single MRI sequence: Q = 370.72, p < 0.01, I2 = 98%; multiple MRI sequences: Q = 112.75, p < 0.01, I2 = 94%), study design (single-center: Q = 439.53, p < 0.01, I2 = 97%; multi-center: Q = 17.01, p < 0.01, I2 = 88%), and image dimension (3D images: Q = 529.39, p < 0.01, I2 = 98%; both 2D and 3D images: Q = 21.47, p < 0.01, I2 = 77%). In the subgroup analysis based on the number of primary tumor types, heterogeneity was high in studies with multiple primary tumor types (Q = 551.3, p < 0.01, I2 = 97%), whereas there was no evidence of heterogeneity in studies with a single primary tumor type (Q = 0.06, p = 0.81, I2 = 0%). Two subgroup analyses could not be performed in this group: by validation method (all studies used split training-test sets) and by detection level (all studies reported lesion-wise sensitivity). In the meta-regression, the residual heterogeneity not explained by training sample size remained significant (p < 0.0001), indicating that training sample size did not account for the heterogeneity.

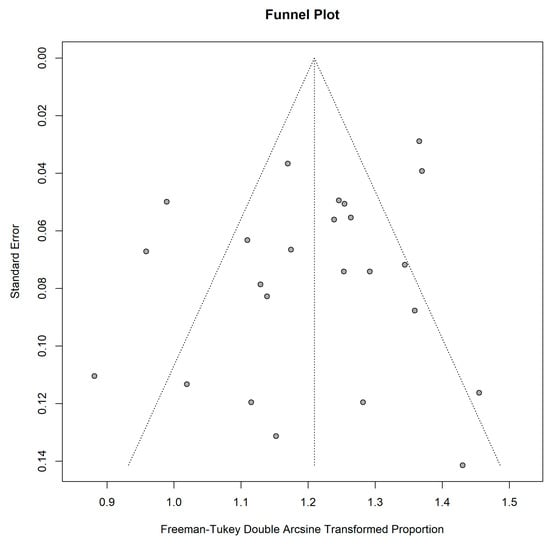

The funnel plot for this group was also asymmetrical, suggesting possible publication bias (Figure 7), and only some studies fell within the pseudo-95% confidence limits [50]. However, the Egger test did not indicate significant publication bias (regression intercept = 1.17, p = 0.66).

Figure 7.

Funnel plot for studies included in the pooled analysis for lesion-wise detectability.

4. Discussion

BMs are ten times more common than primary malignant brain tumors, and patients with a history of BM should be followed up with imaging every three months and whenever clinically indicated [51,52]. There is therefore a large demand for radiologists to detect and follow up these lesions. However, radiologists face several challenges in detecting BMs accurately, including heavy workloads, difficulty distinguishing BMs from noise and blood vessels, small lesion size, substantial variation in lesion shape and size among patients, weak signal intensities, and multiple lesions in different locations within a patient [11]. As a result of these challenges, research on deep learning methods for detecting BMs has recently increased. Because the recent meta-analysis by Cho et al. included just 12 studies, only 7 of which were deep learning studies, we conducted a systematic review and meta-analysis to assess the strength of the current evidence [11]. Their study also found a statistically significant difference in false positives between the deep learning and machine learning groups, which motivates a separate analysis of each group. Our analysis showed that deep learning models using MR images perform well in detecting BMs, with a pooled patient-wise sensitivity of 89% (95% CI, 84–92%) and a range of 58% to 98% across studies.

Lesion size is an important determinant of how successfully deep learning algorithms detect BMs [53]. For instance, in a study by Rudie et al., detection sensitivity was only 14.6% for BMs smaller than 3 mm and 84.3% for BMs larger than 3 mm [42]. In another study, detection sensitivity was 71% lower for BMs < 3 mm than for all BMs [48]. Various approaches to this problem have been proposed. Amemiya et al. reinforced their SSD model for small lesion detection with feature fusion and increased its sensitivity for lesions smaller than 3 mm from 35.4% to 45.8% [25]. Bousabarah et al. showed that the sensitivity of their models for small BMs ranged from 0.40 to 0.51 [26]. They then trained another model on a subsample containing only small BMs (smaller than 0.4 mL), which increased the sensitivity for small BMs to 0.62–0.68; notably, this model's sensitivity for BMs of all sizes was 0.43–0.53. Similarly, Dikici et al. trained on a sample of small BMs and obtained comparable results [54]. Although training deep learning models on small BMs may improve their sensitivity for small lesions, this approach raises concerns when applied to a heterogeneous population of BMs. Furthermore, since a meta-analysis demonstrated that black blood images successfully detect small lesions [55], Park et al. added black blood images to gradient echo sequence images in their model, increasing sensitivity for BMs < 3 mm from 23.5% to 82.4% [39]. Kottlors et al. also showed that smaller BMs (<5 mm) could be better detected by deep learning models using black blood images [38]. Yin et al. achieved a sensitivity of 89% for BMs < 3 mm and 95.8% for BMs of all sizes using only contrast-enhanced T1WI [44].
We believe this success was partly due to the large number of metastases in their cohort (11,514 BMs) and the high proportion of small BMs (<5 mm, 58.6%). More models that are trained on data representative of real-world practice and perform well on both small and large lesions are therefore required. Moreover, surgical or SRS planning scans with higher spatial resolution can be more sensitive for detecting metastases than conventional scans, revealing previously undetected lesions [56]. Using MRI acquisitions with higher spatial resolution might therefore improve the performance of deep learning models, particularly for smaller lesions. We also analyzed the effect of training sample size on detectability but observed no statistically significant effect, although studies in the literature demonstrate that the performance of deep learning models typically increases with sample size [57,58]. One possible explanation is that some studies with a small number of patients include a large number of lesions. For instance, Charron et al. included 164 patients with 374 metastatic lesions [27], whereas Deike-Hofmann et al. included 43 patients with 494 metastatic lesions [30]. However, the effect of training sample size (number of lesions) on detectability was not statistically significant in the lesion-wise group either, indicating that further research is needed.
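Because sensitivity depends strongly on lesion size, lesion-wise sensitivity is often stratified by diameter, as in the studies discussed above. A minimal sketch follows; the `(diameter_mm, detected)` input format, the helper name, and the example lesions are hypothetical, and the 3 mm threshold simply mirrors the cut-off used in several of these studies.

```python
def sensitivity_by_size(lesions, threshold_mm=3.0):
    """Lesion-wise sensitivity below and at/above a diameter threshold.

    lesions: iterable of (diameter_mm, detected) pairs, one per
    reference-standard metastasis in a test set (hypothetical format).
    Returns {"small": ..., "large": ...}; NaN if a stratum is empty.
    """
    counts = {"small": [0, 0], "large": [0, 0]}   # [detected, total]
    for diameter_mm, detected in lesions:
        key = "small" if diameter_mm < threshold_mm else "large"
        counts[key][0] += 1 if detected else 0
        counts[key][1] += 1
    return {key: (hit / total if total else float("nan"))
            for key, (hit, total) in counts.items()}

# Hypothetical test set with five metastases
lesions = [(2.0, False), (2.5, True), (4.0, True), (6.0, True), (10.0, False)]
print(sensitivity_by_size(lesions))  # {'small': 0.5, 'large': 0.6666666666666666}
```

A test set dominated by small lesions will therefore report a lower overall sensitivity for the same model, which is one reason pooled sensitivities are hard to compare across cohorts.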

Different MRI sequences have distinct characteristics and depict tissues in the human body differently. Our review showed that ten studies used multiple MRI sequences in their models, whereas the remaining fifteen used only one. Park et al. used two MRI sequences both separately and in combination in their model [39]. Gradient echo sequence and black blood images yielded sensitivities of 76.8% and 92.6%, respectively, and their combination yielded 93.1%. The difference between the gradient-echo-only model and the combined model was statistically significant, whereas there was no difference between the black blood model and the combined model. The same pattern was evident for lesions smaller than 3 mm, for which the gradient echo sequence model, black blood model, and combined model had sensitivities of 23.5%, 82.4%, and 82.4%, respectively. Charron et al. likewise showed that their model combining different MRI sequences outperformed models using single sequences [27]. Contrary to these findings, our subgroup analyses showed no statistically significant difference between models using single or multiple MRI sequences. Further research is therefore needed to determine which MRI sequences and sequence combinations are optimal for automatically detecting BMs with deep learning. In addition, there was no statistically significant difference in the performance of models that used only 2D images, only 3D images, or both; however, this comparison is limited by the small number of studies that used only 2D images or both 2D and 3D images.

Most BMs arise from lung cancer, breast cancer, melanoma, and renal cell carcinoma [2]. Our review showed that two articles included only patients with malignant melanoma and one included only patients with NSCLC, whereas the others included patients with BMs from any primary tumor. The appearance of BMs on MRI may vary between primary tumors, particularly in NSCLC [36,59]. Jünger et al. therefore suggested designing different deep learning models for BMs originating from different primary tumors to obtain satisfactory detection performance [36]. Although this may improve the detection of BMs originating from a particular primary tumor, the primary tumor is unknown in 5–40% of patients presenting with symptoms of BMs [60], and it cannot be identified in up to 15% of patients with BMs [61]. Generalized deep learning models might therefore be required to detect BMs from unknown primary tumors. Our subgroup analyses comparing studies with a single primary tumor type and studies with multiple primary tumor types showed no statistically significant difference in performance; however, this comparison is limited by the small number of studies with a single primary tumor type. This remains an open research area, and further studies are needed.

Besides sensitivity, false positives were the other key measure reported by the included studies. An important cause of false positives was the similarity between BMs and blood vessels, as both may appear as a small focus of hyperintensity on contrast-enhanced T1WI [46]. Several studies showed that most, or at least some, of their false positives were located in or near vascular structures [25,32,37,39,44,46,47]. Several solutions to this problem have been proposed in the literature. Grøvik et al. hypothesized that adding other MRI sequences, such as diffusion-weighted imaging, may help reduce false positives [32]. Black blood imaging, which suppresses signal from blood vessels and is increasingly applied in clinical practice, may also help [25,38,39,44]. Notably, Park et al. demonstrated that false positives were significantly higher when black blood imaging was used alone than when black blood and gradient echo images were used together [39]. Deike-Hofmann et al. demonstrated that including a pre-diagnosis scan in their model greatly reduced false positives, whereas including additional sequences, by contrast, decreased specificity [30]. They hypothesized that, like human readers, the model learned that lesions changing over time are more likely to be BMs, whereas stable structures such as blood vessels are less likely to be. Finally, skull stripping significantly reduced false positives, particularly extra-axial ones [14,25,42]. We were unable to conduct a pooled analysis of false positive rates because the included studies reported them in different units.
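The following Python sketch illustrates why false positive rates reported in different units cannot simply be pooled: the same hypothetical false positive count yields three different rates depending on whether the denominator is patients, scans, or reference lesions. Converting between units would require the corresponding patient, scan, and lesion counts for each study. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FalsePositiveSummary:
    """Hypothetical test-set counts for one study (not data from any included study)."""
    false_positives: int
    patients: int
    scans: int         # a patient may contribute several scans (e.g., follow-up MRI)
    true_lesions: int  # reference-standard metastases

    def per_patient(self) -> float:
        return self.false_positives / self.patients

    def per_scan(self) -> float:
        return self.false_positives / self.scans

    def per_lesion(self) -> float:
        return self.false_positives / self.true_lesions


study = FalsePositiveSummary(false_positives=120, patients=60, scans=80, true_lesions=300)
print(study.per_patient(), study.per_scan(), study.per_lesion())  # 2.0 1.5 0.4
```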

Various deep learning algorithms were applied to MR images to detect BMs. Our review revealed that the most commonly used algorithm for BM detection was U-Net, in various versions [62]. U-Net is an architecture for semantic segmentation, that is, pixel-wise classification. It consists of a contracting encoder, which extracts features at progressively coarser resolutions, and an expanding decoder, which upsamples these features and combines them with higher-resolution encoder features through skip connections to produce the label map. The second most commonly used algorithm was DeepMedic (Biomedical Image Analysis Group, Department of Computing, Imperial College London, London, UK) [63], which is built around a 3D deep convolutional neural network (CNN) and a 3D conditional random field. Both algorithms are easily accessible, which likely explains why they are the most widely used. They are likely to be used more in the future, and there is room for research into combining these models for BM detection [64]. We also compared performance across the different deep learning algorithms and found no statistically significant difference in either the patient-wise or the lesion-wise sensitivity analyses (p = 0.25 and p = 0.26, respectively).
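To make the encoder–decoder description concrete, the NumPy sketch below traces only the data flow of a one-level U-Net-style network on a single 2D slice. It uses max pooling for the contracting path, nearest-neighbour upsampling for the expanding path, and a skip connection implemented as channel concatenation. The learned convolutions of a real U-Net are replaced by a fixed random 1×1 channel mixing (a hypothetical stand-in, `conv1x1`), so this illustrates shapes and connectivity, not a trainable or clinically meaningful model.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv1x1(x, out_channels):
    """Stand-in for a learned convolution: random 1x1 channel mixing + ReLU.
    x has shape (channels, height, width)."""
    weights = rng.standard_normal((out_channels, x.shape[0]))
    return np.maximum(np.einsum("oc,chw->ohw", weights, x), 0.0)


def max_pool2(x):
    """2x2 max pooling (contracting path): halves height and width."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample2(x):
    """Nearest-neighbour 2x upsampling (expanding path)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def tiny_unet(image, n_classes=2):
    """One-level U-Net-style forward pass; image shape (channels, H, W), H and W even."""
    enc = conv1x1(image, 8)                       # encoder features, full resolution
    bottleneck = conv1x1(max_pool2(enc), 16)      # coarser, more abstract features
    dec = upsample2(bottleneck)                   # back to full resolution
    merged = np.concatenate([enc, dec], axis=0)   # skip connection
    head = rng.standard_normal((n_classes, merged.shape[0]))
    logits = np.einsum("oc,chw->ohw", head, merged)
    return logits.argmax(axis=0)                  # per-pixel label map


slice_ = rng.standard_normal((1, 64, 64))  # stands in for one contrast-enhanced T1 slice
label_map = tiny_unet(slice_)
print(label_map.shape)  # (64, 64)
```

The skip connection is what distinguishes U-Net from a plain encoder–decoder: fine spatial detail from the encoder is reinjected before the final per-pixel classification, which matters for small lesions.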

The performance of deep learning models should be compared with that of radiologists in detecting BMs. Kikuchi et al. compared their model with twelve radiologists: seven board-certified radiologists and five residents [37]. Their model exhibited higher sensitivity (91.7%) than the radiologists (88.7 ± 3.7%). However, the article did not compare the model's performance with that of board-certified radiologists and residents separately, and grouping residents with board-certified radiologists may explain the radiologists' lower overall sensitivity. Rudie et al. compared two initial manual annotations with the deep learning model [42]. Contrary to the findings of Kikuchi et al., radiologists had significantly higher detection sensitivity than the deep learning model for metastases smaller than 3 mm (63.2% versus 14.7%) and for metastases between 3 and 6 mm (90.8% versus 76.7%). Liang et al. found that their deep learning model detected 2% of BMs that had been missed during manual annotation, even though the manual annotations were checked by two investigators and reevaluated by a senior radiation oncologist [14]. In addition, Yin et al. showed that readers' mean sensitivity increased by 21% with the assistance of the deep learning model [44]. Another meta-analysis, comparing the performance of deep learning models with that of healthcare professionals in detecting diseases from medical images, found their diagnostic performance to be equivalent [65]. We believe these results are promising: although fully automated models cannot yet be implemented in clinical practice, they may serve as robust assistants for radiologists. We could not conduct a pooled analysis comparing deep learning models and radiologists because few studies reported radiologists' sensitivity. The literature is lacking in this regard, and more studies comparing the two groups are needed, especially for small, difficult-to-detect lesions.
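Size-stratified, lesion-wise sensitivity of the kind reported by Rudie et al. can be computed as sketched below in Python. The lesion list is hypothetical, and the bin edges (3 mm and 6 mm, with each bin including its lower edge) are an assumption about how such bins might be defined rather than the study's exact protocol.

```python
from collections import defaultdict

# Hypothetical reference-standard lesions: (diameter in mm, detected by the model?)
lesions = [(2.1, False), (2.8, True), (4.0, True), (5.5, False), (5.9, True),
           (8.0, True), (12.3, True), (2.5, False)]


def size_bin(diameter_mm):
    """Assumed bins: <3 mm, 3-6 mm (lower edge inclusive), and >=6 mm."""
    if diameter_mm < 3:
        return "<3 mm"
    if diameter_mm < 6:
        return "3-6 mm"
    return ">=6 mm"


def sensitivity_by_size(lesions):
    """Lesion-wise sensitivity (detected reference lesions / all reference lesions) per bin."""
    counts = defaultdict(lambda: [0, 0])  # bin -> [detected, total]
    for diameter, detected in lesions:
        tally = counts[size_bin(diameter)]
        tally[0] += int(detected)
        tally[1] += 1
    return {b: detected / total for b, (detected, total) in counts.items()}


print(sensitivity_by_size(lesions))
```

Reporting sensitivity per size bin, rather than only overall, exposes the weakness on small lesions that an aggregate figure can hide.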

Our study has limitations. The main limitation was the lack of a pooled analysis of false positive rates, because the included studies did not report false positives in a uniform unit: they were reported per patient, per scan, or per lesion. Second, subgroup analyses by MRI scanner and slice thickness were not possible because none of the included studies reported results separately for different scanners or slice thicknesses. Third, heterogeneity was high in our analyses, although this is commonly observed in meta-analyses of imaging-based deep learning studies [13,66,67,68,69]. In addition, the subgroups with no evidence of heterogeneity included very few studies, and the Q test is known to have inadequate power to detect true heterogeneity when a meta-analysis includes a small number of studies [70]. Therefore, the subgroup variables are unlikely to explain the observed heterogeneity. Finally, although not a limitation of our study per se, our quality assessment showed that reporting was poor in some of the included studies.
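The heterogeneity statistics discussed above can be sketched in Python. The function below computes Cochran's Q and Higgins' I² from per-study estimates (here, logit-transformed detection proportions) and their variances, assuming fixed-effect inverse-variance weights. The helper name `logit_and_variance` and the study counts are hypothetical.

```python
import math


def cochran_q_i2(estimates, variances):
    """Cochran's Q and Higgins' I^2 from study estimates and within-study variances.
    I^2 = max(0, (Q - df) / Q) is the share of total variability attributed to
    between-study heterogeneity rather than chance."""
    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, df, i2


def logit_and_variance(detected, total):
    """Logit of a detection proportion and its approximate variance.
    Assumes 0 < detected < total (no continuity correction)."""
    p = detected / total
    return math.log(p / (1 - p)), 1 / detected + 1 / (total - detected)


# Hypothetical per-study (detected, total) counts
studies = [(95, 100), (80, 100), (170, 200), (60, 80)]
estimates, variances = zip(*(logit_and_variance(d, n) for d, n in studies))
q, df, i2 = cochran_q_i2(estimates, variances)
print(f"Q = {q:.2f} on {df} df, I^2 = {i2:.0%}")
```

Q is compared with a chi-square distribution with k - 1 degrees of freedom (for example via `scipy.stats.chi2.sf(q, df)`). When k is small this test has little power, which is why a non-significant Q in a small subgroup does not establish homogeneity.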

5. Conclusions

Our study revealed that deep learning algorithms effectively detect BMs, with a pooled sensitivity of 89%. Future deep learning studies should adhere more strictly to the CLAIM and QUADAS-2 checklists. Uniform reporting standards that clearly describe the results of deep learning models for BM detection are needed. Since all the included studies were conducted retrospectively, large-scale prospective studies are needed.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/cancers15020334/s1, Table S1: PRISMA-DTA Abstract Checklist; Table S2: PRISMA-DTA Checklist; Table S3: Inclusion and exclusion criteria.

Author Contributions

Conceptualization, B.B.O., M.M.C., M.K. and M.W.; methodology, B.B.O. and M.K.; software, B.B.O. and M.K.; validation, M.M.C., C.F. and M.W.; formal analysis, B.B.O., C.F. and M.K.; investigation, B.B.O., C.F. and M.K.; resources, T.M.B., J.L. and M.W.; data curation, B.B.O.; writing—original draft preparation, B.B.O., M.M.C. and M.K.; writing—review and editing, C.F., T.M.B., J.L. and M.W.; visualization, B.B.O. and M.K.; supervision, M.M.C., T.M.B., J.L. and M.W.; project administration, M.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lin, X.; DeAngelis, L.M. Treatment of Brain Metastases. J. Clin. Oncol. 2015, 33, 3475–3484.

- Sacks, P.; Rahman, M. Epidemiology of Brain Metastases. Neurosurg. Clin. N. Am. 2020, 31, 481–488.

- Nayak, L.; Lee, E.Q.; Wen, P.Y. Epidemiology of Brain Metastases. Curr. Oncol. Rep. 2012, 14, 48–54.

- Suh, J.H.; Kotecha, R.; Chao, S.T.; Ahluwalia, M.S.; Sahgal, A.; Chang, E.L. Current Approaches to the Management of Brain Metastases. Nat. Rev. Clin. Oncol. 2020, 17, 279–299.

- Soffietti, R.; Abacioglu, U.; Baumert, B.; Combs, S.E.; Kinhult, S.; Kros, J.M.; Marosi, C.; Metellus, P.; Radbruch, A.; Villa Freixa, S.S.; et al. Diagnosis and Treatment of Brain Metastases from Solid Tumors: Guidelines from the European Association of Neuro-Oncology (EANO). Neuro-Oncol. 2017, 19, 162–174.

- Sze, G.; Milano, E.; Johnson, C.; Heier, L. Detection of Brain Metastases: Comparison of Contrast-Enhanced MR with Unenhanced MR and Enhanced CT. AJNR Am. J. Neuroradiol. 1990, 11, 785–791.

- Davis, P.C.; Hudgins, P.A.; Peterman, S.B.; Hoffman, J.C. Diagnosis of Cerebral Metastases: Double-Dose Delayed CT vs. Contrast-Enhanced MR Imaging. AJNR Am. J. Neuroradiol. 1991, 12, 293–300.

- Yamamoto, M.; Serizawa, T.; Shuto, T.; Akabane, A.; Higuchi, Y.; Kawagishi, J.; Yamanaka, K.; Sato, Y.; Jokura, H.; Yomo, S.; et al. Stereotactic Radiosurgery for Patients with Multiple Brain Metastases (JLGK0901): A Multi-Institutional Prospective Observational Study. Lancet Oncol. 2014, 15, 387–395.

- Gondi, V.; Bauman, G.; Bradfield, L.; Burri, S.H.; Cabrera, A.R.; Cunningham, D.A.; Eaton, B.R.; Hattangadi-Gluth, J.A.; Kim, M.M.; Kotecha, R.; et al. Radiation Therapy for Brain Metastases: An ASTRO Clinical Practice Guideline. Pract. Radiat. Oncol. 2022, 12, 265–282.

- Growcott, S.; Dembrey, T.; Patel, R.; Eaton, D.; Cameron, A. Inter-Observer Variability in Target Volume Delineations of Benign and Metastatic Brain Tumours for Stereotactic Radiosurgery: Results of a National Quality Assurance Programme. Clin. Oncol. 2020, 32, 13–25.

- Cho, S.J.; Sunwoo, L.; Baik, S.H.; Bae, Y.J.; Choi, B.S.; Kim, J.H. Brain Metastasis Detection Using Machine Learning: A Systematic Review and Meta-Analysis. Neuro-Oncol. 2021, 23, 214–225.

- Chan, H.-P.; Samala, R.K.; Hadjiiski, L.M.; Zhou, C. Deep Learning in Medical Image Analysis. Adv. Exp. Med. Biol. 2020, 1213, 3–21.

- Karabacak, M.; Ozkara, B.B.; Mordag, S.; Bisdas, S. Deep Learning for Prediction of Isocitrate Dehydrogenase Mutation in Gliomas: A Critical Approach, Systematic Review and Meta-Analysis of the Diagnostic Test Performance Using a Bayesian Approach. Quant. Imaging Med. Surg. 2022, 12, 4033–4046.

- Liang, Y.; Lee, K.; Bovi, J.A.; Palmer, J.D.; Brown, P.D.; Gondi, V.; Tome, W.A.; Benzinger, T.L.S.; Mehta, M.P.; Li, X.A. Deep Learning-Based Automatic Detection of Brain Metastases in Heterogenous Multi-Institutional Magnetic Resonance Imaging Sets: An Exploratory Analysis of NRG-CC001. Int. J. Radiat. Oncol. Biol. Phys. 2022, 114, 529–536.

- McInnes, M.D.F.; Moher, D.; Thombs, B.D.; McGrath, T.A.; Bossuyt, P.M.; the PRISMA-DTA Group; Clifford, T.; Cohen, J.F.; Deeks, J.J.; Gatsonis, C.; et al. Preferred Reporting Items for a Systematic Review and Meta-Analysis of Diagnostic Test Accuracy Studies: The PRISMA-DTA Statement. JAMA 2018, 319, 388–396.

- Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan—A Web and Mobile App for Systematic Reviews. Syst. Rev. 2016, 5, 210.

- Mongan, J.; Moy, L.; Kahn, C.E. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): A Guide for Authors and Reviewers. Radiol. Artif. Intell. 2020, 2, e200029.

- Whiting, P.F.; Rutjes, A.W.S.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.G.; Sterne, J.A.C.; Bossuyt, P.M.M.; QUADAS-2 Group. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Ann. Intern. Med. 2011, 155, 529–536.

- Dettori, J.R.; Norvell, D.C.; Chapman, J.R. Fixed-Effect vs Random-Effects Models for Meta-Analysis: 3 Points to Consider. Global Spine J. 2022, 12, 1624–1626.

- Borenstein, M.; Hedges, L.V.; Higgins, J.P.T.; Rothstein, H.R. A Basic Introduction to Fixed-Effect and Random-Effects Models for Meta-Analysis. Res. Synth. Methods 2010, 1, 97–111.

- Higgins, J.P.T.; Thompson, S.G.; Deeks, J.J.; Altman, D.G. Measuring Inconsistency in Meta-Analyses. BMJ 2003, 327, 557–560.

- Groenwold, R.H.H.; Rovers, M.M.; Lubsen, J.; van der Heijden, G.J. Subgroup Effects despite Homogeneous Heterogeneity Test Results. BMC Med. Res. Methodol. 2010, 10, 43.

- Egger, M.; Davey Smith, G.; Schneider, M.; Minder, C. Bias in Meta-Analysis Detected by a Simple, Graphical Test. BMJ 1997, 315, 629–634.

- Balduzzi, S.; Rücker, G.; Schwarzer, G. How to Perform a Meta-Analysis with R: A Practical Tutorial. Evid. Based Ment. Health 2019, 22, 153–160.

- Amemiya, S.; Takao, H.; Kato, S.; Yamashita, H.; Sakamoto, N.; Abe, O. Feature-Fusion Improves MRI Single-Shot Deep Learning Detection of Small Brain Metastases. J. Neuroimaging 2022, 32, 111–119.

- Bousabarah, K.; Ruge, M.; Brand, J.-S.; Hoevels, M.; Rues, D.; Borggrefe, J.; Grose Hokamp, N.; Visser-Vandewalle, V.; Maintz, D.; Treuer, H.; et al. Deep Convolutional Neural Networks for Automated Segmentation of Brain Metastases Trained on Clinical Data. Radiat. Oncol. 2020, 15, 87.

- Charron, O.; Lallement, A.; Jarnet, D.; Noblet, V.; Clavier, J.B.; Meyer, P. Automatic Detection and Segmentation of Brain Metastases on Multimodal MR Images with a Deep Convolutional Neural Network. Comput. Biol. Med. 2018, 95, 43–54.

- Chartrand, G.; Emiliani, R.D.; Pawlowski, S.A.; Markel, D.A.; Bahig, H.; Cengarle-Samak, A.; Rajakesari, S.; Lavoie, J.; Ducharme, S.; Roberge, D. Automated Detection of Brain Metastases on T1-Weighted MRI Using a Convolutional Neural Network: Impact of Volume Aware Loss and Sampling Strategy. J. Magn. Reson. Imaging 2022, 56, 1885–1898.

- Cho, J.; Kim, Y.J.; Sunwoo, L.; Lee, G.P.; Nguyen, T.Q.; Cho, S.J.; Baik, S.H.; Bae, Y.J.; Choi, B.S.; Jung, C.; et al. Deep Learning-Based Computer-Aided Detection System for Automated Treatment Response Assessment of Brain Metastases on 3D MRI. Front. Oncol. 2021, 11, 739639.

- Deike-Hofmann, K.; Dancs, D.; Paech, D.; Schlemmer, H.P.; Maier-Hein, K.; Baumer, P.; Radbruch, A.; Gotz, M. Pre-Examinations Improve Automated Metastases Detection on Cranial MRI. Investig. Radiol. 2021, 56, 320–327.

- Dikici, E.; Nguyen, X.V.; Bigelow, M.; Ryu, J.L.; Prevedello, L.M. Advancing Brain Metastases Detection in T1-Weighted Contrast-Enhanced 3D MRI Using Noisy Student-Based Training. Diagnostics 2022, 12, 2023.

- Grøvik, E.; Yi, D.; Iv, M.; Tong, E.; Nilsen, L.B.; Latysheva, A.; Saxhaug, C.; Jacobsen, K.D.; Hell, A.; Emblem, K.E.; et al. Handling Missing MRI Sequences in Deep Learning Segmentation of Brain Metastases: A Multicenter Study. NPJ Digit. Med. 2021, 4, 33.

- Han, C.; Murao, K.; Noguchi, T.; Kawata, Y.; Uchiyama, F.; Rundo, L.; Nakayama, H.; Satoh, S. Learning More with Less: Conditional PGGAN-Based Data Augmentation for Brain Metastases Detection Using Highly-Rough Annotation on MR Images. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management (CIKM), Beijing, China, 3–7 November 2019; pp. 119–127.

- Hsu, D.G.; Ballangrud, A.; Shamseddine, A.; Deasy, J.O.; Veeraraghavan, H.; Cervino, L.; Beal, K.; Aristophanous, M. Automatic Segmentation of Brain Metastases Using T1 Magnetic Resonance and Computed Tomography Images. Phys. Med. Biol. 2021, 66, 175014.

- Huang, Y.X.; Bert, C.; Sommer, P.; Frey, B.; Gaipl, U.; Distel, L.V.; Weissmann, T.; Uder, M.; Schmidt, M.A.; Dorfler, A.; et al. Deep Learning for Brain Metastasis Detection and Segmentation in Longitudinal MRI Data. Med. Phys. 2022, 49, 5773–5786.

- Jünger, S.T.; Hoyer, U.C.I.; Schaufler, D.; Laukamp, K.R.; Goertz, L.; Thiele, F.; Grunz, J.-P.; Schlamann, M.; Perkuhn, M.; Kabbasch, C.; et al. Fully Automated MR Detection and Segmentation of Brain Metastases in Non-Small Cell Lung Cancer Using Deep Learning. J. Magn. Reson. Imaging 2021, 54, 1608–1622.

- Kikuchi, Y.; Togao, O.; Kikuchi, K.; Momosaka, D.; Obara, M.; Van Cauteren, M.; Fischer, A.; Ishigami, K.; Hiwatashi, A. A Deep Convolutional Neural Network-Based Automatic Detection of Brain Metastases with and without Blood Vessel Suppression. Eur. Radiol. 2022, 32, 2998–3005.

- Kottlors, J.; Geissen, S.; Jendreizik, H.; Grose Hokamp, N.; Fervers, P.; Pennig, L.; Laukamp, K.; Kabbasch, C.; Maintz, D.; Schlamann, M.; et al. Contrast-Enhanced Black Blood MRI Sequence Is Superior to Conventional T1 Sequence in Automated Detection of Brain Metastases by Convolutional Neural Networks. Diagnostics 2021, 11, 1016.

- Park, Y.W.; Jun, Y.; Lee, Y.; Han, K.; An, C.; Ahn, S.S.; Hwang, D.; Lee, S.K. Robust Performance of Deep Learning for Automatic Detection and Segmentation of Brain Metastases Using Three-Dimensional Black-Blood and Three-Dimensional Gradient Echo Imaging. Eur. Radiol. 2021, 31, 6686–6695.

- Pennig, L.; Shahzad, R.; Caldeira, L.; Lennartz, S.; Thiele, F.; Goertz, L.; Zopfs, D.; Meisner, A.K.; Furtjes, G.; Perkuhn, M.; et al. Automated Detection and Segmentation of Brain Metastases in Malignant Melanoma: Evaluation of a Dedicated Deep Learning Model. AJNR Am. J. Neuroradiol. 2021, 42, 655–662.

- Pfluger, I.; Wald, T.; Isensee, F.; Schell, M.; Meredig, H.; Schlamp, K.; Bernhardt, D.; Brugnara, G.; Heusel, C.P.; Debus, J.; et al. Automated Detection and Quantification of Brain Metastases on Clinical MRI Data Using Artificial Neural Networks. Neuro-Oncol. Adv. 2022, 4, vdac138.

- Rudie, J.D.; Weiss, D.A.; Colby, J.B.; Rauschecker, A.M.; Laguna, B.; Braunstein, S.; Sugrue, L.P.; Hess, C.P.; Villanueva-Meyer, J.E. Three-Dimensional U-Net Convolutional Neural Network for Detection and Segmentation of Intracranial Metastases. Radiol. Artif. Intell. 2021, 3, e200204.

- Xue, J.; Wang, B.; Ming, Y.; Jiang, Z.; Wang, C.; Liu, X.; Chen, L.; Qu, J.; Xu, S.; Tang, X.; et al. Deep Learning-Based Detection and Segmentation-Assisted Management of Brain Metastases. Neuro-Oncol. 2020, 22, 505–514.

- Yin, S.H.; Luo, X.; Yang, Y.D.; Shao, Y.; Ma, L.D.; Lin, C.P.; Yang, Q.X.; Wang, D.L.; Luo, Y.W.; Mai, Z.J.; et al. Development and Validation of a Deep-Learning Model for Detecting Brain Metastases on 3D Post-Contrast MRI: A Multi-Center Multi-Reader Evaluation Study. Neuro-Oncol. 2022, 24, 1559–1570.

- Yoo, S.K.; Kim, T.H.; Kim, H.J.; Yoon, H.I.; Kim, J.S. Deep Learning-Based Automatic Detection and Segmentation of Brain Metastases for Stereotactic Ablative Radiotherapy Using Black-Blood Magnetic Resonance Imaging. Int. J. Radiat. Oncol. Biol. Phys. 2022, 114, e558.

- Yoo, Y.J.; Ceccaldi, P.; Liu, S.Q.; Re, T.J.; Cao, Y.; Balter, J.M.; Gibson, E. Evaluating Deep Learning Methods in Detecting and Segmenting Different Sizes of Brain Metastases on 3D Post-Contrast T1-Weighted Images. J. Med. Imaging 2021, 8, 037001.

- Zhang, M.; Young, G.S.; Chen, H.; Li, J.; Qin, L.; McFaline-Figueroa, J.R.; Reardon, D.A.; Cao, X.H.; Wu, X.; Xu, X.Y. Deep-Learning Detection of Cancer Metastases to the Brain on MRI. J. Magn. Reson. Imaging 2020, 52, 1227–1236.

- Zhou, Z.J.; Sanders, J.W.; Johnson, J.M.; Gule-Monroe, M.; Chen, M.; Briere, T.M.; Wang, Y.; Son, J.B.; Pagel, M.D.; Ma, J.; et al. MetNet: Computer-Aided Segmentation of Brain Metastases in Post-Contrast T1-Weighted Magnetic Resonance Imaging. Radiother. Oncol. 2020, 153, 189–196.

- Ellingson, B.M.; Bendszus, M.; Boxerman, J.; Barboriak, D.; Erickson, B.J.; Smits, M.; Nelson, S.J.; Gerstner, E.; Alexander, B.; Goldmacher, G.; et al. Consensus Recommendations for a Standardized Brain Tumor Imaging Protocol in Clinical Trials. Neuro-Oncol. 2015, 17, 1188–1198.

- Cochrane Handbook for Systematic Reviews of Interventions. Available online: https://training.cochrane.org/handbook (accessed on 17 October 2022).

- Ostrom, Q.T.; Wright, C.H.; Barnholtz-Sloan, J.S. Brain Metastases: Epidemiology. Handb. Clin. Neurol. 2018, 149, 27–42.

- Le Rhun, E.; Guckenberger, M.; Smits, M.; Dummer, R.; Bachelot, T.; Sahm, F.; Galldiks, N.; de Azambuja, E.; Berghoff, A.S.; Metellus, P.; et al. EANO-ESMO Clinical Practice Guidelines for Diagnosis, Treatment and Follow-up of Patients with Brain Metastasis from Solid Tumours. Ann. Oncol. 2021, 32, 1332–1347.

- Zhou, Z.; Sanders, J.W.; Johnson, J.M.; Gule-Monroe, M.K.; Chen, M.M.; Briere, T.M.; Wang, Y.; Son, J.B.; Pagel, M.D.; Li, J.; et al. Computer-Aided Detection of Brain Metastases in T1-Weighted MRI for Stereotactic Radiosurgery Using Deep Learning Single-Shot Detectors. Radiology 2020, 295, 407–415.

- Dikici, E.; Ryu, J.L.; Demirer, M.; Bigelow, M.; White, R.D.; Slone, W.; Erdal, B.S.; Prevedello, L.M. Automated Brain Metastases Detection Framework for T1-Weighted Contrast-Enhanced 3D MRI. IEEE J. Biomed. Health Inform. 2020, 24, 2883–2893.

- Suh, C.H.; Jung, S.C.; Kim, K.W.; Pyo, J. The Detectability of Brain Metastases Using Contrast-Enhanced Spin-Echo or Gradient-Echo Images: A Systematic Review and Meta-Analysis. J. Neurooncol. 2016, 129, 363–371.

- Zakaria, R.; Das, K.; Bhojak, M.; Radon, M.; Walker, C.; Jenkinson, M.D. The Role of Magnetic Resonance Imaging in the Management of Brain Metastases: Diagnosis to Prognosis. Cancer Imaging 2014, 14, 8.

- Cho, J.; Lee, K.; Shin, E.; Choy, G.; Do, S. How Much Data Is Needed to Train a Medical Image Deep Learning System to Achieve Necessary High Accuracy? arXiv 2015, arXiv:1511.06348.

- Fang, Y.; Wang, J.; Ou, X.; Ying, H.; Hu, C.; Zhang, Z.; Hu, W. The Impact of Training Sample Size on Deep Learning-Based Organ Auto-Segmentation for Head-and-Neck Patients. Phys. Med. Biol. 2021, 66, 185012.

- Jena, A.; Taneja, S.; Talwar, V.; Sharma, J.B. Magnetic Resonance (MR) Patterns of Brain Metastasis in Lung Cancer Patients: Correlation of Imaging Findings with Symptom. J. Thorac. Oncol. 2008, 3, 140–144.

- Vuong, D.A.; Rades, D.; Vo, S.Q.; Busse, R. Extracranial Metastatic Patterns on Occurrence of Brain Metastases. J. Neurooncol. 2011, 105, 83–90.

- Balestrino, R.; Rudà, R.; Soffietti, R. Brain Metastasis from Unknown Primary Tumour: Moving from Old Retrospective Studies to Clinical Trials on Targeted Agents. Cancers 2020, 12, 3350.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Kamnitsas, K.; Ledig, C.; Newcombe, V.F.J.; Simpson, J.P.; Kane, A.D.; Menon, D.K.; Rueckert, D.; Glocker, B. Efficient Multi-Scale 3D CNN with Fully Connected CRF for Accurate Brain Lesion Segmentation. Med. Image Anal. 2017, 36, 61–78.

- Nie, D.; Wang, L.; Adeli, E.; Lao, C.; Lin, W.; Shen, D. 3-D Fully Convolutional Networks for Multimodal Isointense Infant Brain Image Segmentation. IEEE Trans. Cybern. 2019, 49, 1123–1136.

- Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A Comparison of Deep Learning Performance against Health-Care Professionals in Detecting Diseases from Medical Imaging: A Systematic Review and Meta-Analysis. Lancet Digit. Health 2019, 1, e271–e297.

- Aggarwal, R.; Sounderajah, V.; Martin, G.; Ting, D.S.W.; Karthikesalingam, A.; King, D.; Ashrafian, H.; Darzi, A. Diagnostic Accuracy of Deep Learning in Medical Imaging: A Systematic Review and Meta-Analysis. NPJ Digit. Med. 2021, 4, 65.

- Bedrikovetski, S.; Dudi-Venkata, N.N.; Kroon, H.M.; Seow, W.; Vather, R.; Carneiro, G.; Moore, J.W.; Sammour, T. Artificial Intelligence for Pre-Operative Lymph Node Staging in Colorectal Cancer: A Systematic Review and Meta-Analysis. BMC Cancer 2021, 21, 1058.

- Decharatanachart, P.; Chaiteerakij, R.; Tiyarattanachai, T.; Treeprasertsuk, S. Application of Artificial Intelligence in Chronic Liver Diseases: A Systematic Review and Meta-Analysis. BMC Gastroenterol. 2021, 21, 10.

- Kim, H.Y.; Cho, S.J.; Sunwoo, L.; Baik, S.H.; Bae, Y.J.; Choi, B.S.; Jung, C.; Kim, J.H. Classification of True Progression after Radiotherapy of Brain Metastasis on MRI Using Artificial Intelligence: A Systematic Review and Meta-Analysis. Neuro-Oncol. Adv. 2021, 3, vdab080.

- Huedo-Medina, T.B.; Sánchez-Meca, J.; Marín-Martínez, F.; Botella, J. Assessing Heterogeneity in Meta-Analysis: Q Statistic or I² Index? Psychol. Methods 2006, 11, 193–206.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).