2. Sampling Theory and Experimental Design

Sampling methods specify how to obtain a statistical sample, which is a set of objects from a population that one wishes to learn about, that reliably represents the population [

6,

7]. Inferences about the population are based on sample statistics, which are computed from the sample’s data using well-defined formulas or algorithms. A sample mean, correlation, or effect of a covariate on an outcome variable is used to estimate corresponding population parameters, also known as estimands, which are conceptual objects that are almost never known. This requires a number of implicit or explicit assumptions, such as the appropriateness of the statistical models for the type of data being analyzed, and the absence of unknown biases, data recording errors, or selective analysis reporting [

9,

10,

11,

12]. For simplicity, we will assume throughout this review that these statistical assumptions are correct unless otherwise stated.

Denote a population parameter by θ an observable random variable by Y, and let P(Y|θ) denote an assumed probability distribution describing how Y varies in the population. A representative sample Y = {Y1, …, Yn} may be used to compute a statistical estimator of θ, and P(Y|θ) may be used to determine the estimator’s probability distribution. For example, a sample mean may be used to estimate a population mean. The distribution of the sample mean may be approximated by a normal distribution (bell-shaped curve) if the sample size is sufficiently large, and a 95% confidence interval (CI) around the observed sample mean may be computed to quantify uncertainty by giving us an idea of how closely we can estimate θ from the sample. Another example is that, given (X,Y) data on a numerical outcome variable Y and a covariate X, a regression model P(Y|X, α, β) with linear conditional mean E(Y|X) = α + βX may be assumed to characterize how Y varies with X. If Y is a binary (0/1) indicator of response, then a logistic regression model log{Pr(Y = 1|X)/Pr(Y = 0|X)} = α + βX can be used. In each case, the parameters θ = (α, β) may be estimated from a sample of (X,Y) pairs to make inferences about the population from which the sample was taken, provided that the sample accurately represents the population. For example, a simple random sample of size n must be obtained in such a way that all possible sets of n objects from the population are equally likely to be the sample.

In contrast with sampling theory, experimental design involves statistical methods to plan a set of procedures, such as an RCT, to compare the effect of interventions, such as treatments, on outcomes of interest. Experimental design was largely pioneered by the English statistician, biologist, and geneticist Sir Ronald Fisher, who also invented RCTs, initially to maximize crop yield in agricultural experiments in the 1920s and 1930s. RCTs were popularized in medical research by the English epidemiologist and statistician Austin Bradford Hill in the 1940s and 1950s [

13,

14,

15,

16]. We will argue that, under appropriate assumptions, if one’s goal is to compare treatments as a basis for medical decision-making, then data from studies based on experimental designs that include randomization can be very useful.

3. Bayesian and Frequentist Inference

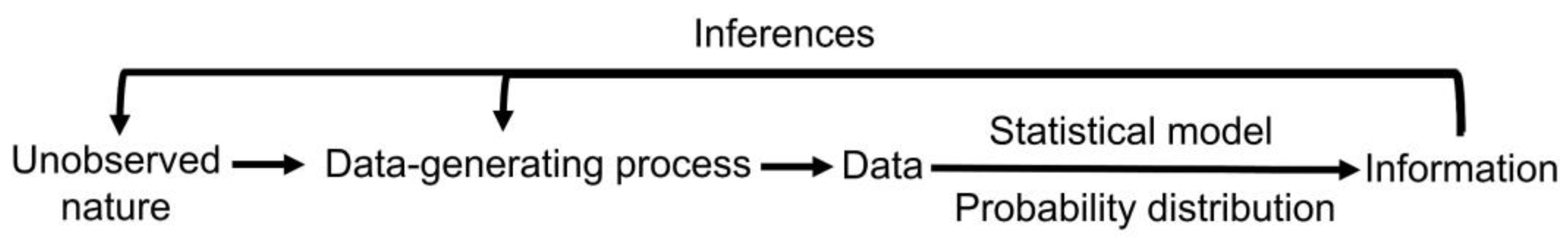

The results of medical studies can be analyzed using either frequentist or Bayesian statistical methods (

Figure 1) [

17]. These are two different statistical philosophies for constructing a probability model for observable variables and parameters and making inferences. A frequentist considers parameters θ to be unknown and fixed. A Bayesian considers parameters to be unknown and random and therefore specifies a

prior probability distribution P(θ) to describe one’s degree of belief or uncertainty about θ before observing data. Specifying a prior distribution based on pre-existing contextual knowledge may be a nontrivial task [

18]. Prior distributions can be classified as either “noninformative” or “informative” [

19,

20,

21]. Noninformative priors are also known variously as “objective”, “flat”, “weak”, “default”, or “reference” priors, and they yield posterior estimators that may be close to frequentist estimators. For example, credible intervals (CrIs) under a Bayesian model may be numerically similar to CIs under a frequentist model, although the interpretation of CrIs is different from that of CIs. Informative priors, sometimes known as “subjective” priors, take advantage of historical data or the investigator’s subject matter knowledge. “Weakly informative” priors encode information on a general class of problems without taking full advantage of contextual subject matter knowledge [

20,

21]. Bayesian analysis is performed by combining the prior information concerning θ [i.e., P(θ)] and the sample information {Y

1, …, Yn} into the posterior distribution P(θ|Y

1, …, Yn) using Bayes’ theorem. The posterior distribution reflects our updated knowledge about θ owing to the information contained in the sample {Y

1, …, Yn}, and quantifies our final beliefs about θ. Bayesian inferences thus are based on the posterior. For example, if L is the 2.5th percentile and U is the 97.5th percentile of the posterior, then [L, U] is a

95% posterior CrI for θ i.e., θ is in the interval [L, U] with a probability of 0.95 based on the posterior, written as Pr(L < θ < U|data) = 0.95.

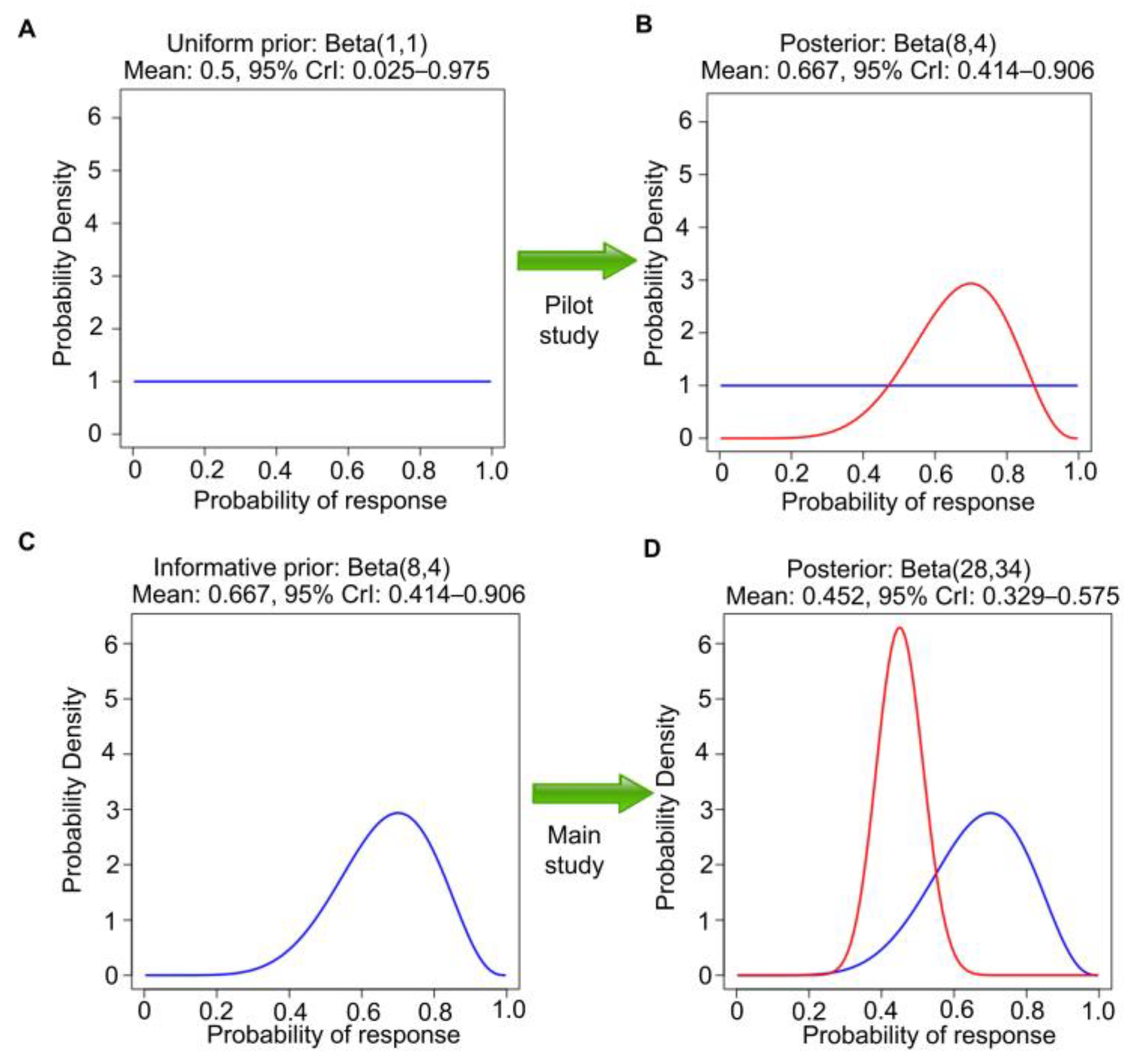

As a medical example of Bayesian inference, suppose that one is interested in the response probability that a new investigational therapy produces in chemotherapy-refractory renal medullary carcinoma (RMC). RMC is a rare, highly aggressive, molecularly homogeneous kidney cancer that lacks any effective treatment options [

22,

23,

24,

25]. To calculate a posterior distribution for Bayesian inferences, we can use the web application “Bayesian Update for a Beta-Binomial Distribution” (

https://biostatistics.mdanderson.org/shinyapps/BU1BB/, accessed on 18 September 2023). This Bayesian model is useful for data consisting of a random number of responses, R, out of n independently sampled subjects, with the focus on θ = Pr(response), 0 < θ < 1. Let Y

1, …, Y

n denote n patients’ binary response indicators, with Y

i = 1 if a response is observed from the ith patient and Y

i = 0 otherwise. We then have R = Y

1 + … + Y

n. Assuming conditional independence of n observations given θ, R follows a binomial distribution with parameters n and θ. A beta(a,b) distribution over the unit interval (0, 1) is a very tractable prior for θ. The beta(a,b) prior has mean a/(a + b) and effective sample size (ESS) = a + b, which quantifies the informativeness of the prior. The beta prior is commonly used because it is

conjugate for the binomial likelihood; the posterior of θ given observed R and n is also a beta distribution, but with updated parameters, beta(a + R, b + n − R). In the RMC example, we define response as complete response (CR) or partial response (PR) on imaging at 3 months and assume beta(1,1) prior distribution, also known as Laplace’s prior. Beta(1,1) is the uniform distribution over the interval of (0, 1). That is, under beta(1,1), all values in the unit interval between 0 and 1 are equiprobable, and they can be viewed as noninformative (

Figure 2A). It also has ESS = 2 and thus encodes little prior knowledge about θ. Because this cancer is rare and there is no comparator treatment, it is not feasible to conduct a randomized study. Suppose that a single-arm pilot study with n = 10 patients is conducted to establish feasibility and R = 7 responses are observed. This dataset allows us to update the uniform prior to the beta (1 + 7, 1 + 3) = beta(8,4) posterior, which has ESS = 12, posterior mean 8/12 = 0.67, and 95% posterior CrI 0.39–0.89 (

Figure 2B). The Bayesian posterior estimator 0.67

shrinks the empirical estimate 7/10 = 0.70 toward the prior mean 0.50, which is characteristic of Bayesian estimation. Frequentist methods, such as those used in Least Absolute Shrinkage and Selection Operator (LASSO) or ridge regression, also achieve shrinkage by including penalty terms, a concept known as penalization [

26]. In general, shrinkage and penalization improve the estimation of unknown parameters and enhance prediction accuracy.

In general, Bayes’ Law may be applied repeatedly to a sequence of samples obtained over time, with the posterior at each stage used as the prior for the next. As a second step in the example, the beta(8,4) posterior can be used as the prior for analyzing a later single-arm study of this therapy in 50 new patients with chemotherapy-refractory RMC (

Figure 2C). We assume that the second study also concerns the same θ = Pr(response). Suppose that 20 responses are observed in the second study. Then, the new beta(8,4) prior is further updated to a beta(8 + 20, 4 + 30) = beta(28,34) posterior. This has a mean 28/(28 + 34) = 0.45, with a narrower 95% CrI 0.33–0.58, reflecting the much larger ESS = 62 (

Figure 2D). Let patient response indicators be denoted by Y

1, …, Y

10 for the pilot study and Y

11, …, Y

60 for the second study. Assume also that the subjects of the second study are sampled randomly from the same population as those of the first pilot study, a strong assumption that will be further explored in later sections. Furthermore, assume that Y

1, …, Y

60 are conditionally independent given θ. These assumptions allow the two Bayesian posterior computations described above to be executed in one step by treating Y

1, …, Y

10, Y

11, …, Y

60 as a single sample, assuming the first beta(1,1) prior, and directly obtaining the beta(28,34) posterior for θ in one step. If, instead, the second study were executed without observing the pilot study results, then it would be appropriate to use a uniform beta(1,1) prior, so 20 responses in 50 patients would lead to beta(1 + 20, 1 + 30) = beta(21,31) posterior. This has mean 21/(21 + 31) = 0.40 and 95% CrI 0.27–0.54 (

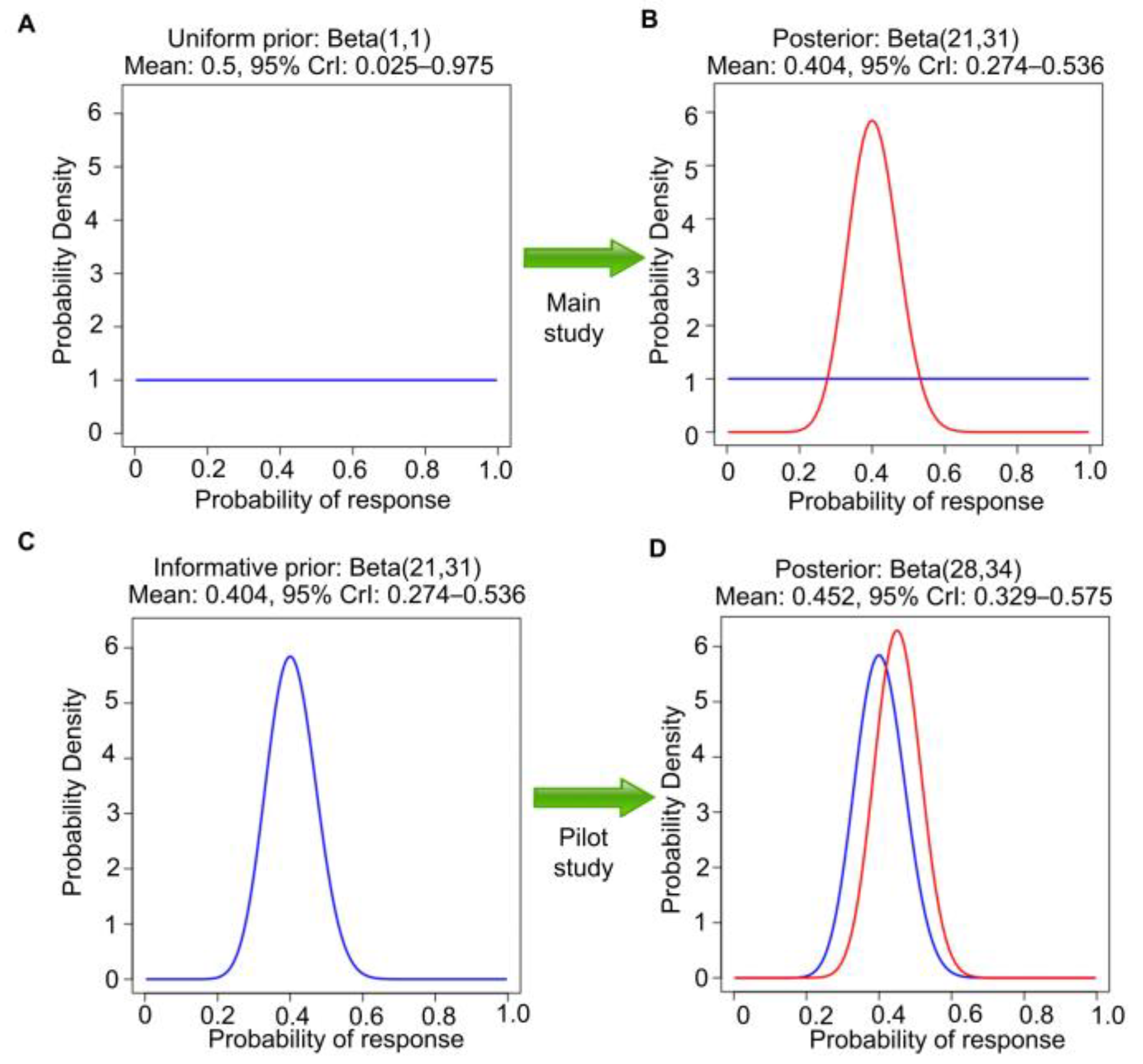

Figure 3A,B). This is different from the posterior in

Figure 2D because the two analyses begin with different priors, a beta(1,1) prior to seeing the pilot study results versus a beta(8,4) prior using the observed pilot study data. However, if the data from the pilot study are revealed afterward (

Figure 3C,D), then the final posterior distribution will be the same as in

Figure 2D. This is an example of the general fact that, if data are generated from the same distribution over time, then repeated application of Bayes’ Law is

coherent in that it gives the same posterior that would be obtained if the sequence of datasets were observed in one study.

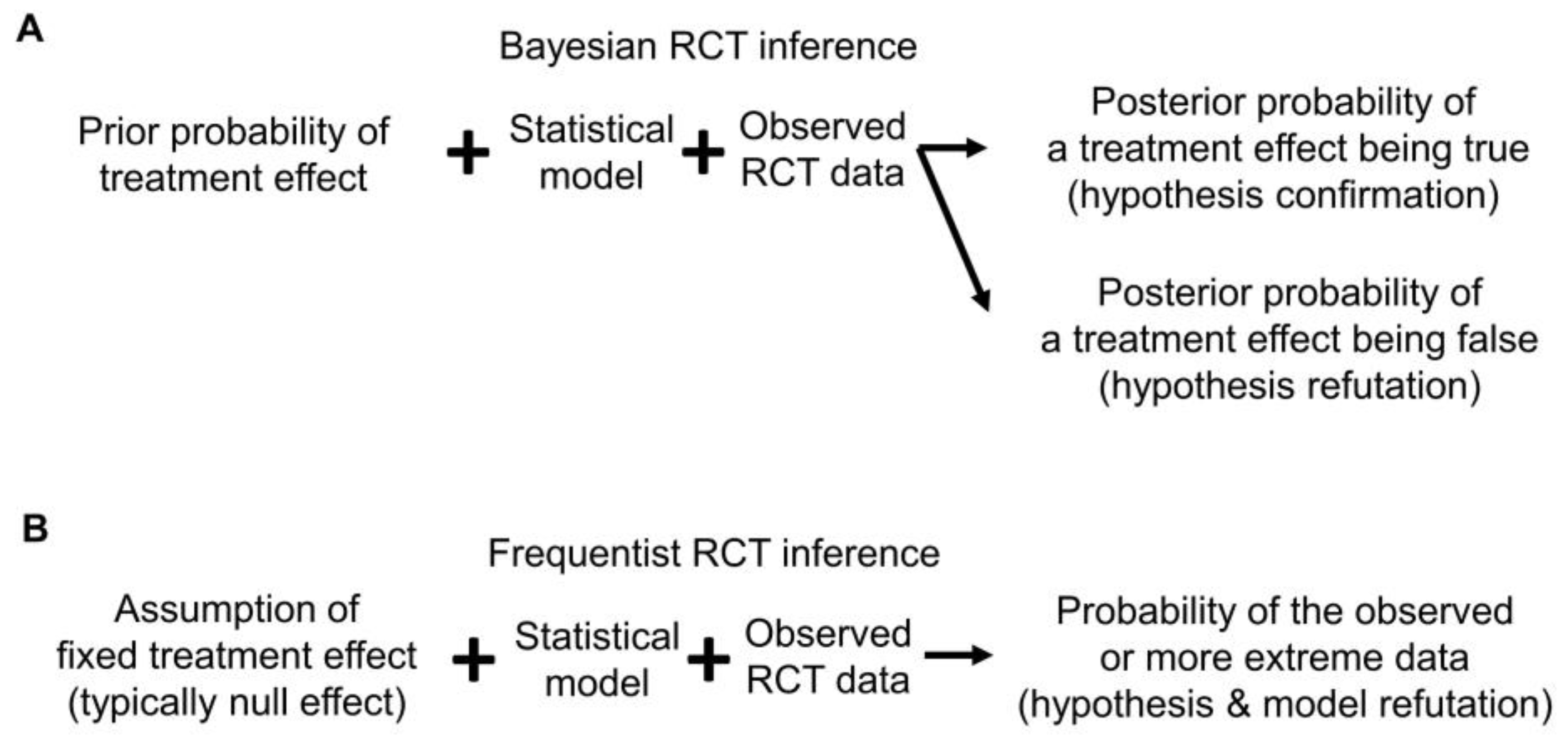

4. Confirmations and Refutations

Bayesian posterior estimates may be used as evidence to either confirm or refute a prior belief or hypothesis. The former may be called “confirmationist” reasoning, which evaluates the evidence supporting a belief or hypothesis regarding specific values of a parameter (

Figure 4A) [

27,

28]. For example, say that analysis of an RCT using the Bayesian survival regression model previously described in [

29,

30,

31] yields posterior mean HR = 0.71 with 95% CrI 0.57–0.87 for overall survival (OS) time comparing a new treatment E to a control C. This may be interpreted as strong confirmational statistical evidence supporting the prior assertion that E is superior to C, formally that HR < 1. In contrast, “refutational” logic seeks evidence against a belief or hypothesis regarding a parameter value [

32,

33,

34]. Using refutational logic, if the hypothesis is that E is inferior to C, formally that HR > 1, then a very small posterior probability Pr(HR > 1|data) can be interpreted as strong evidence against the belief that E is inferior to C (

Figure 4A). Because Bayesian reasoning is probabilistic, it is different from logical conclusive verifications or refutations, such as exculpatory evidence that a suspect of a crime has an alibi, implying that it is certain the suspect could not have committed the crime [

27,

28]. The philosopher of science Karl Popper highlighted the asymmetry between confirmationist and refutational reasoning because evidence can only support (confirm) a theory in relation to other competing theories, whereas evidence can refute a theory even if we lack a readily available alternative explanation [

33,

34]. Therefore, refutational approaches require fewer assumptions than confirmationist ones.

Since frequentists assume that a parameter is fixed and unknown, for example in a test of the frequentist hypothesis H

0: HR = 1.0 of no treatment difference, no probability distribution is assumed for the parameter HR. A frequentist test compares the observed value T

obs of a test statistic T to the distribution of T that would result from an infinite number of repetitions of the experiment that generates the data, assuming that H

0 is true. If T

obs are very unlikely to be observed based on the distribution of T under H

0, this serves as refutational evidence against H

0 (

Figure 4B). This can be quantified by a

p-value, which is defined as 2 × Pr(T > |T

obs) for a two-sided test, under specific model assumptions [

35,

36,

37].

While

p-values are used as refutational evidence against a null hypothesis, they are often misunderstood by researchers [

35,

38]. A pervasive problem is that “statistical significance” is not the same thing as practical significance, which depends on the context of the study. Furthermore, the arbitrary

p-value cutoff of 0.05 is often used to dichotomize evidence as “significant” or “non-significant”. This is a very crude way to describe the strength of evidence for refuting H

0 [

39]. A practical solution for this problem is to quantify the level of surprise provided by a

p-value as refutational evidence against a given hypothesis in terms of bits of information, which are easy to interpret. This can be executed by transforming a

p-value into an S-value [

12,

40], defined as S = −log

2(

p). Bearing in mind that a

p-value is a statistic because it is computed from data, if H

0 is true then a

p-value is uniformly distributed between 0 and 1. This implies that under H

0, a

p-value has a mean 1/2 and, for example, the probability that

p < 0.05 is 0.05. The rationale for computing S is that the probability of observing all tails in S flips of a fair coin equals (1/2)

S, so

p = (1/2)

S gives S as a simple, intuitive way to quantify how surprising a

p-value should be [

12,

41,

42,

43,

44]. S represents the number of coin flips, typically rounded to the nearest integer. Suppose that an HR of 0.71 is observed and a

p-value of 0.0016 is obtained against the null hypothesis of HR = 1.0. Since −log

2(0.0016) = 9.3, rounding this to the nearest integer gives S = 9 bits of refutational information against the null hypothesis of HR = 1. This may be interpreted as the degree of surprise that we would have after observing all tails in 9 consecutive flips of a coin that we believe is fair. A larger S indicates greater surprise, which is stronger evidence to refute the belief that the coin is fair, which corresponds to the belief that H

0 is true. Thus, the surprise provided by an S-value is refutational for H

0. In this case, S = 9 quantifies the degree of surprise that should result from observing a

p-value of 0.0016 if H

0 is true. We provide a simple calculator (

Supplementary File S1) that can be used by clinicians to convert

p-values to S-values. Of note, S-values quantify refutational information against the fairness of the coin tosses, which includes but is not limited to the possibility that the coin itself is biased towards tails. An unbiased coin can be tossed unfairly to result in all tails in S flips. For simplicity herein, our assertion that the coin is “fair” encompasses all these scenarios.

Since S is rounded to the nearest integer,

p-values 0.048, 0.052, and 0.06 all supply approximately 4 bits of refutational information, equivalent to obtaining 4 tails in 4 tosses of a presumed fair coin. A

p-value of 0.25 supplies only 2 bits of refutational information, half the amount of information yielded by

p = 0.06. While

p = 0.05 is conventionally considered “significant” in medical research, it corresponds to only 4 bits of refutational information. This may explain, in part, why so many nominally significant medical research results are not borne out by subsequent studies. For comparison, in particle physics, a common requirement is 22 bits of refutational information (

p ≤ 2.87 × 10

−7), which corresponds to obtaining all tails in 22 tosses of a fair coin [

45].

Converting

p-values to bits of refutational information can also be very helpful for interpreting RCT results that have large

p-values. For example, the phase 3 RCT CALGB 90202 in men with castration-sensitive prostate cancer and bone metastases reported an HR of 0.97 (95% CI 0–1.17,

p = 0.39) for the primary endpoint, time to first skeletal-related event (SRE), using zoledronic acid versus placebo [

46]. It is a common mistake, sometimes made even by trained statisticians [

38], to infer that a large

p-value confirms H

0, which is wrong because a null hypothesis can almost never be confirmed. In the example, this misinterpretation would say that there was no meaningful difference between zoledronic acid versus control. The correct interpretation is that there was no strong evidence against the claim of no difference between zoledronic acid versus control in time to the first SRE. Using the S-value,

p = 0.39 supplies approximately 1 bit of information against the null hypothesis of no difference, which is equivalent to asserting that a coin is fair after tossing it only once. This is why a very large

p-value, by itself, provides very little information [

35].

Conversion to bits of information can also help to interpret a frequentist 95% CI, which may seem counterintuitive due to its perplexing definition, which says that if the experiment generating the data were repeated infinitely many times, about 95% of the experiments would give an (L, U) CI pair containing the true value, assuming the statistical model assumptions are correct [

47]. For example, an estimated HR of 0.71 with a 95% CI of 0.57–0.87 is obtained for HR in a hypothetical RCT study. A frequentist 95% CI, corresponding to a

p-value threshold of 1 − 0.95 = 0.05, gives an interval of HR values for which there are no more than approximately 4 bits of refutational information, since S = −log

2(0.05) ≈ 4, assuming that the statistical model assumptions are correct. Thus, the data from the RCT suggest that HR values within the interval bound 0.57 and 0.87 are at most as surprising as seeing 4 tails in 4 fair coin tosses. Values lying outside this range have more than 4 bits of refutational information against them, and the point estimate HR of 0.71 is the value with the least refutational information against it. Similarly, frequentist 99% CIs correspond to a

p-value threshold of 1 − 0.99 = 0.01 and thus contain values against the null with at most −log

2(0.01) ≈ 7 bits of information, which is the same or less surprising than seeing 7 tails in 7 tosses of a fair coin. A number of recent reviews provide additional guidance on converting statistical outputs into intuitive information measures [

11,

12,

36,

37,

40,

48].

Any statistical inferences depend on the probability model assumed for the analysis. This is important to keep in mind because an assumed model may be wrong. The Cox model assumes that the HR is constant over time, also known as the proportional hazards (PH) assumption. Unless otherwise stated, all the RCT examples we will use herein assume a standard PH model for their primary endpoint analyses. If the data-generating process is different from this assumption, for example, if the risk of death increases over time at different rates for two treatments being compared, then there is not one HR, but rather different HR values over time. For example, it might be the case that the empirical HR is close to 2.0 for the first six months of follow-up, but then is close to 0.50 thereafter. Consequently, inferences focusing on one HR parameter as a between-treatment effect can be very misleading, because that parameter does not exist. To avoid making this type of mistake, the adequacy of the fit of the assumed model to the data and the plausibility of the model for inferential purposes should be assessed. Whereas Bayesian inference focuses more on the coherent updating of beliefs based on observed data (

Figure 2 and

Figure 3), frequentist inference places more emphasis on

calibration, i.e., that events assigned a given probability occur with that frequency in the long run. Furthermore, as reviewed in detail elsewhere [

12,

49], frequentist outputs, such as

p-values, provide refutational evidence against all model assumptions, not only a hypothesis or parameter value (

Figure 4B). Accordingly, frequentist outputs can be used directly to determine whether the distribution of the observed data is compatible with the distribution of the data under the assumed model. Thus, a small

p-value implies that either H

0 is false or that the assumed model does not fit the data well. For simplicity, hereafter we will follow the common convention used in medical RCTs of assuming that the model is adequate, and thus that a small

p-value yields refutational evidence only against the tested hypothesis, which typically is the null hypothesis of no treatment difference.

5. Inferences and Decisions

Although the term “evidence” does not have a single formal definition in the statistical literature, various information summaries are routinely used to quantify the strength of evidence [

10,

12,

35,

50]. These include frequentist parameter point estimates, CIs, and

p-values. Estimation is the process of computing a statistic, such as a point estimate, interval estimate (such as frequentist CIs and Bayesian CrIs), or distributional estimates (such as Bayesian posterior distributions or frequentist confidence distributions), which aim to provide plausible values of the unknown parameter based on the data [

12,

17,

51,

52]. Statistical inference is a larger, more comprehensive process that involves using data not only to estimate parameters but also to make predictions and draw conclusions about a larger population based on a sample from that population (

Figure 1). Causal inferences focus on estimating the effects of interventions [

2,

53]. An example of a frequentist causal inference can be obtained from the KEYNOTE-564 phase 3 RCT of adjuvant pembrolizumab versus placebo in clear cell renal cell carcinoma (ccRCC). The primary endpoint analyses for this RCT were based on the standard PH regression model often used in survival analyses of RCTs in oncology [

1,

2,

54,

55]. After a median follow-up of 24.1 months, the estimated HR for the primary endpoint, disease-free survival (DFS) time, was 0.68 with 95% CI 0.53–0.87 and

p = 0.002 [

54]. This corresponds to 9 bits of refutational information against the assumed model and null hypothesis that adjuvant pembrolizumab has the same mean DFS as placebo. Any HR values in the 95% CI 0.53–0.87 are less surprising than seeing 4 tails in 4 fair coin tosses, while values outside the CI have higher refutational information against them.

The same data from KEYNOTE-564 can be analyzed to compare DFS times of adjuvant pembrolizumab versus placebo in ccRCC using a Bayesian framework. While noninformative priors that give numerical posterior estimates similar to frequentist estimates in the absence of multiple looks at the data [

56] can be considered, informative priors may be used to incorporate prior information [

21,

57]. For example, a prior distribution may be formulated to account for the exaggeration effect, also known as the “winner’s curse”, often seen in reported phase 3 RCTs due to publication bias [

29,

30,

31]. Phase 3 RCTs with negative results are less likely to be accepted for publication by the editors of medical journals [

29], so the estimated effect sizes in published phase 3 RCTs are biased upward and thus are likely to overstate actual treatment differences [

29,

58]. This exaggeration effect due to the biased publication of studies with positive results is an example of a general phenomenon known as

regression toward the mean, wherein, after observing an effect estimate X in a first study, upon replication of the experiment, the estimate Y from a second study is likely to be closer to the population mean [

29]. Three recent studies [

29,

30,

31] empirically analyzed the results of 23,551 medical RCTs available in the Cochrane Database of Systematic Reviews (CDSR), which provided an empirical basis for constructing an informative prior distribution that accounts for the anticipated exaggeration effect in published phase 3 RCTs [

30]. If published pivotal phase 3 RCTs, such as KEYNOTE-564, are of sufficient quality to meet the criteria for inclusion in the CDSR, it is plausible to use the proposed prior, which may be called the “winner’s curse prior”, to account for the anticipated exaggeration effect [

29,

31].

Recalling that the frequentist estimate of the HR for DFS time is 0.68 for the KEYNOTE-564 trial, the winner’s curse prior and model, described in detail elsewhere [

29,

30,

31], gives a posterior mean HR of 0.76 with 95% CrI 0.59–0.96 (

Figure 5), a substantial shrinkage of the frequentist estimate toward 1. This says that, under this prior and assumed statistical model, Pr(0.59 < HR < 0.96|data) = 0.95 (confirmationist inference), and Pr(HR > 1.0|data) = 0.008 (refutationist inference). A free web application is available (

https://vanzwet.shinyapps.io/shrinkrct/, accessed on 18 September 2023) for clinicians to perform such Bayesian conversions of reported RCT data.

Decisions may rely on statistical inferences, but they are not the same thing. A decision may be made by combining information from statistical inferences with subjective cost-benefit trade-offs to guide actions [

10,

59,

60]. Such trade-offs may be expressed as type I and II error probabilities in frequentist tests of hypotheses, or by a utility function [

1,

59,

60]. If an RCT were repeated infinitely many times, its type I error upper limit α quantifies the proportion of times that one would be willing to incorrectly reject a true null hypothesis of no difference between treatment and control. The type II error upper limit β is the proportion of times that one would incorrectly conclude that a false null hypothesis is correct when a particular alternative hypothesis is true. For example, in KEYNOTE-564, it was decided to set α = 0.025 for a test of the primary endpoint, DFS time. Because the estimated

p-value was below this threshold, it was concluded that the result was “statistically significant” [

54]. This approach is typically used to inform regulatory decisions by agencies such as the United States Food and Drug Administration (FDA) and the European Medicines Agency (EMA). Academic journals, by design, focus on publishing inferences, but they must make their decisions on which RCTs to publish based on various cost-benefit trade-offs that can include maintaining the journal’s reputation, as well as information on type I and type II error control [

61]. Whereas estimations and corresponding inferences are quantitative and typically on a continuous scale, decisions usually are dichotomous, e.g., whether a statistical test is “significant” or “nonsignificant”, whether or not to approve a therapy, or whether to accept or reject an article in a journal.

To illustrate the difference between inferences and decisions, we compare the results of the METEOR and COSMIC-313 phase 3 RCTs, which used a similar design and the same decision-theoretic trade-offs of type I error probability α = 0.05 and type II error probability β = 0.10 to guide tests of hypotheses for the primary endpoint of progression-free survival (PFS) time [

62,

63,

64]. METEOR compared salvage therapies cabozantinib versus everolimus as a control in 375 patients with advanced ccRCC and reported an estimated HR of 0.58 (95% CI 0.45–0.75,

p < 0.001) for the PFS endpoint [

62]. COSMIC-313 compared the combination of cabozantinib + nivolumab + ipilimumab versus placebo + nivolumab + ipilimumab control as first-line therapies in 550 patients with advanced ccRCC. To date, this trial has an estimated HR of 0.73 (95% CI 0.57–0.94,

p = 0.013) for the PFS endpoint [

64]. Both results were declared “statistically significant” by the trial design because their

p-values were lower than the conventional threshold of 0.05. They both supplied more than 4 bits of refutational information against the null hypothesis. However, METEOR yielded a far stronger PFS signal than COSMIC-313, since its reported

p-value of <0.001 corresponds to at least 10 bits of refutational information against the null hypothesis, HR = 1, that cabozantinib has the same mean PFS outcome as everolimus. METEOR did not provide the exact

p-value, but using established approaches [

65], and the calculator provided in

Supplementary File S1, we can back-compute the

p-value using the reported 95% CIs to be approximately 3.5 × 10

−5, which corresponds to 15 bits of refutational information against the null hypothesis. On the other hand, the

p-value of 0.013 reported by COSMIC-313 supplied only 6 bits of refutational information against the null hypothesis that the triplet combination of cabozantinib + nivolumab + ipilimumab yields the same average PFS as the control arm. Therefore, although both trials were considered to show a “positive” PFS signal using the same

p-value cutoff of 0.05 based on prespecified decision-theoretic criteria, METEOR yielded more than twice the refutational information against its null hypothesis compared with COSMIC-313.

Similar conclusions may be obtained if we examine the two trials using a Bayesian approach to generate posterior probabilities and CrIs. We assume that both METEOR and COSMIC-313 meet the criteria to be included in the CDSR and accordingly use the winner’s curse prior to reducing exaggeration effects [

30]. For METEOR, the posterior mean HR = 0.65 with 95% CrI 0.49–0.83 and posterior probability Pr(HR > 1.0|data) = 0.00027, strongly favoring the cabozantinib arm over the everolimus control arm. Conversely, for COSMIC-313, the posterior mean HR = 0.81 with 95% CrI 0.63–1.01 and Pr(HR > 1.0|data) = 0.031 the control arm yielded better PFS than the triplet combination. Thus, when viewed through either a frequentist or Bayesian lens, the signal of METEOR is far stronger than that of COSMIC-313, despite both RCTs being reported as positive for their PFS endpoint. This illustrates the general fact that estimation yields far more information than a dichotomous “significant” versus “nonsignificant” conclusion from a test of hypotheses. Ultimately, decisions of which therapies to use in the clinic should incorporate each patient’s goals and values; account for trade-offs related to additional endpoints, such as OS, adverse events, quality of life, and financial and logistical costs; and account for individual patient characteristics [

1].

9. Random Sampling and Random Allocation

Random sampling and random allocation, also known as randomization, are both random procedures in which the experimenter introduces randomness to achieve a scientific goal. This is different from the randomness that an observable variable Y appears to have due to the uncertainty about what value it will take. The use of random procedures as an integral part of frequentist statistical inference to generate aleatory uncertainty estimates was pioneered by Ronald Fisher during the first half of the 20th century [

75,

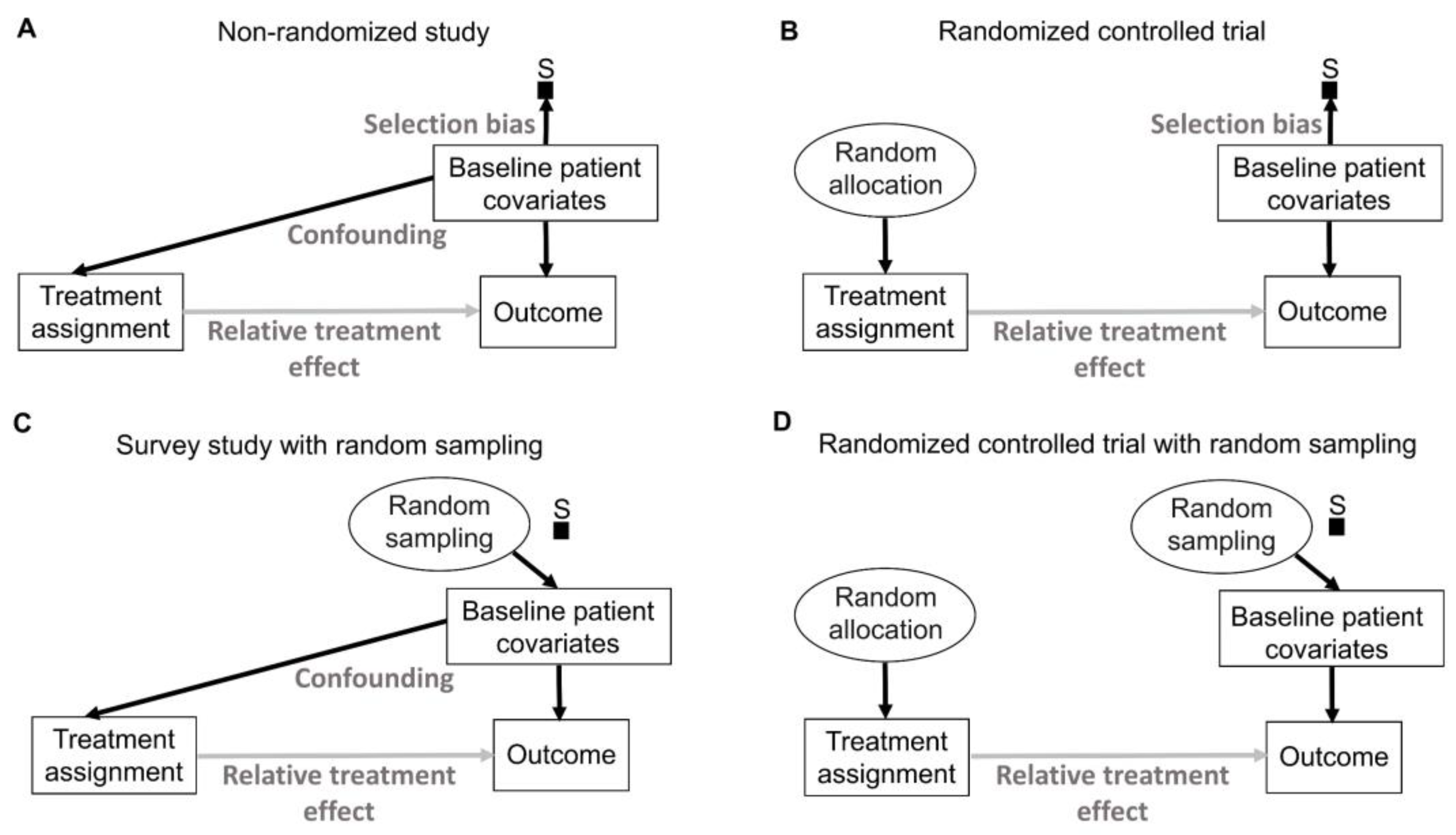

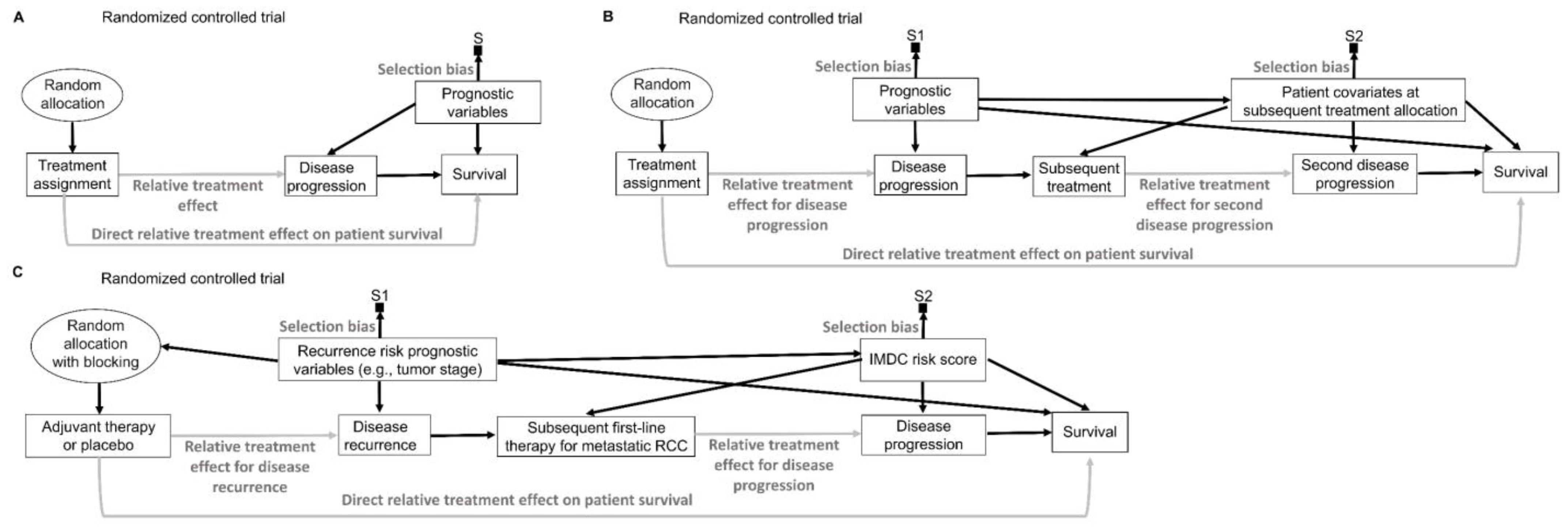

76]. His insight can be represented explicitly by causal diagrams, as shown in

Figure 6. We refer readers to comprehensive overviews for details on causal diagrams, which are used to represent assumptions about the processes that generate the observed data [

2,

77,

78,

79].

Figure 6 uses a type of causal-directed acyclic graph (DAG) known as a selection diagram, which includes a

selection node, S, that represents selection bias when sampling from a patient population [

2,

80,

81,

82,

83]. Selection DAGs can help to distinguish between the effects of random allocation (

Figure 6B) and random sampling (

Figure 6C). In RCTs, the focus is on the comparative causal effect, also known as the relative treatment effect, of a new treatment under investigation versus a standard control treatment. Each patient can only be assigned to one treatment, denoted by “Treatment assignment” in

Figure 6. In nonrandomized studies, because physicians use patient covariates such as age, disease burden, or possibly biomarkers to choose a treatment assignment, this effect is denoted by the solid arrow from “Baseline patient covariates” to “Treatment assignment” in

Figure 6A. The solid arrow from “Baseline patient covariates” to “Outcome” in

Figure 6A denotes that these covariates may also influence the outcome and thus are

confounders that can create a false estimated association between treatment assignment and outcome [

2,

77,

78,

79]. Random treatment allocation removes the causal arrow from any covariate, whether it is observed or not, to treatment and thus removes confounding (

Figure 6B). Whether a study is randomized or observational, baseline patient covariates may directly influence the outcome, e.g., OS time, thus acting as prognostic factors. Because RCTs typically test the null hypothesis of no influence between treatment assignment and outcome,

Figure 6 denotes this putative causal effect with a gray arrow.

If a trial is designed to balance treatment assignments within subsets determined by known patient covariates, but it does not randomize, then other known or unknown covariates still may influence both the treatment assignment and the outcome, as shown in

Figure 6A. Fisher’s insight was that all confounding effects can be removed and the uncertainty of the relative between-treatment effect can be estimated reliably by allowing only a random allocation procedure to influence treatment assignments, as shown in

Figure 6B. Random procedures are denoted by circles in

Figure 6.

Figure 6B represents the data-generating process in RCTs defined by random treatment allocation. In the traditional frequentist approach, random allocation licenses the use of measures of uncertainty such as SEs and CIs for comparative causal estimates, such as the relative treatment effect shown in

Figure 6B. Measures of relative, also known as comparative, treatment effects for survival outcomes include estimands such as HRs, differences in median or mean survival, or risk reduction at specified milestone time points (

Table 1) [

1].

A random sampling method aims to remove selection bias by obtaining a representative random subset of a patient population (

Figure 6C), whereas random allocation uses a method, such as flipping a coin, to randomly assign treatments to patients in a sample (

Figure 6B). We distinguish here “selection bias”, attributable to selective inclusion in the data pool due to sampling biases, from “confounding by indication” due to selective choice of treatment, which can be addressed by random treatment assignment [

84,

85,

86].

The conventional statistical paradigm relies on the assumption that a sample accurately represents the population. For example, a simple random sample (SRS) of size 200 is obtained in such a way that all possible sets of 200 objects from the population are equally likely to comprise the sample. As a simple toy example, the 6 possible subsets of size 2 from a population of 4 objects {a, b, c, d} are {a, b}, {a, c}, {a, d}, {b, c}, {b, d} and {c, d}, so an SRS of size 2 is one of these 6 pairs, each with a probability of 1/6. Random sampling is used in sampling theory, whereas random allocation is used in the design of experiments such as RCTs [

6,

7,

8]. They are connected by the causal principle that random procedures yield specific physical independencies; random allocation removes all other arrows towards treatment assignment (

Figure 6B), while random sampling removes the arrow toward the selection node, S (

Figure 6C) [

44]. Accordingly, random allocation connects inferential statistics with causal parameters expressed as comparative estimands, such as between-treatment effects measured by ratios or differences in parameters (

Table 1). Conversely, random sampling allows us to apply statistical inferences based on the sample to the entire population [

87].

There exist some scenarios outside of medicine whereby both random sampling and random allocation are feasible (

Figure 6D). One such example in the social sciences is to randomly sample a voter list from a population of interest and then randomly assign each voter to receive or not receive voter turnout encouragement mail. However, random sampling from the patient population for whom an approved treatment will be medically indicated is impossible in a clinical trial. A trial includes only subjects who meet enrollment criteria and enroll in the trial. Thus, they comprise a

convenience sample, as denoted by the arrow toward the selection node, S, in

Figure 6A,B, subject to a protocol’s entry criteria as well as other considerations such as access to the trial and willingness to consent to trial entry. Furthermore, patients are accrued sequentially over time in a trial. Due to newly diagnosed patients entering and treating patients leaving a population as they are cured or die, as well as changes in available treatments, any patient population itself constantly changes over time. Consequently, a trial’s sample is very unlikely to be a random sample that represents any definable future patient population. Even when inferences based on a trial’s data are reasonable, they may not be valid for a population because they only represent the trial’s convenience sample and not a well-defined patient population that will exist after the trial’s completion. Despite these caveats, data from an RCT can be very useful as a guide for future medical decision-making if a treatment difference is

transportable from an RCT to a population-based on causal considerations, as extensively reviewed elsewhere [

2].

10. Comparative and Group-Specific Inferences

Because each treatment group in most RCTs is a subsample of a convenience sample, one cannot reliably estimate valid SEs, CIs,

p-values, or other measures of uncertainty for the outcomes within each treatment group, individual patient, or other subgroup. For such outcomes, only measures of variability such as the SD and IQR are useful [

87,

88,

89]. However, this fact often goes unrecognized in contemporary RCTs, and within-arm statistics are reported that are of little inferential use for an identifiable patient population because they do not represent the population. For example, KEYNOTE-564 appropriately reported treatment comparisons in terms of the point estimate of HR, CIs, and a

p-value for the DFS endpoint, but also provided the 95% CI for the proportion of patients who remained alive and recurrence-free at 24 months within each of the pembrolizumab and control groups [

54]. Similarly, the CheckMate-214 phase 3 RCT of the new immunotherapy regimen nivolumab + ipilimumab versus the control therapy sunitinib in patients with metastatic ccRCC reported the 95% CI for the median OS outcomes of each treatment group. Nevertheless, it is important to note that these treatment-specific estimates cannot be reliably used to infer the treatment-specific OS outcomes of patient populations [

90,

91].

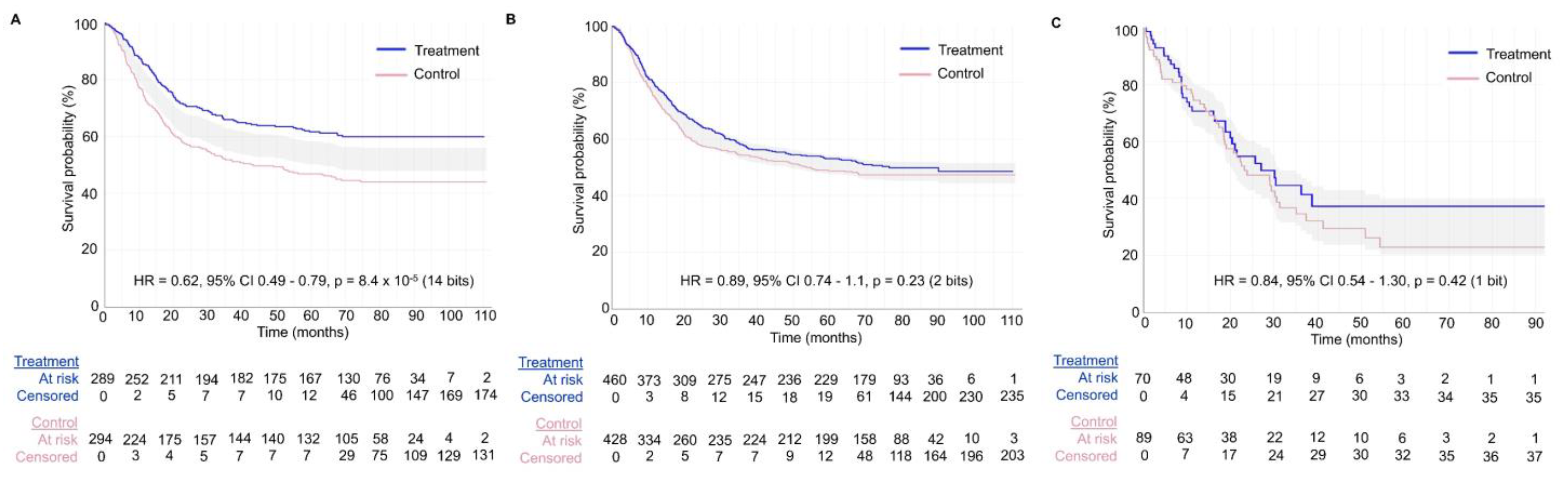

Figure 7 shows the value of generating survival plots that properly focus on comparative inferences between treatment groups in RCTs. The survival plots were generated using the survplotp function from the rms package in R version 4.1.2 [

92]. This function allows users to produce such plots in an interactive format that provides a real-time display of information, such as the number of patients censored and the number at risk per group at any time within the plot [

93]. Furthermore, it allows one to visualize the time points where the

p-value for the differences between groups is <0.05, denoted by the shaded gray area not crossing the survival curves. This shaded gray area represents the cumulative event curve difference. When it intersects with the survival curves, the

p-value is >0.05. Narrow-shaded gray areas indicate less uncertainty, whereas wide-shaded gray areas represent high uncertainty in estimating the differences between groups.

Figure 7A shows an example of an RCT with a consistent signal of an average survival difference between the treatment and control groups throughout the study, as also evidenced by the corresponding HR estimate of 0.62 with 95% CI 0.49–0.79 and

p-value of 8.4 × 10

−5, yielding 14 bits of refutational information against the null hypothesis.

Figure 7B,C shows two RCTs with large

p-values for the relative treatment effect measured by the HR estimate. However, the RCT in

Figure 7B shows a consistent signal of no meaningful effect size difference between the treatment and control groups, as can be determined by looking at the consistently narrow cumulative event curve difference represented by the shaded gray area. The RCTs in

Figure 7A,B yielded informative signals as evidenced by the narrow cumulative event curve difference. Conversely,

Figure 7C shows the results of an uninformative RCT. This low signal is evident by the wide cumulative event curve difference throughout the survival plot and is consistent with the wide 95% CIs of 0.54–1.30 for the HR estimate. Therefore, no inferences can be made at any time point for the survival curves presented in

Figure 7C. Readers inspecting the noisy data in

Figure 7C may mistakenly conclude that there exists a signal of a survival difference favoring the treatment over the control group at the tail end of the curve from approximately 40 months onward. However, the wide cumulative event curve difference shows that the estimated curves from that time point onward are based almost exclusively on noise. This important visual information would be missed in survival plots that do not show the comparative uncertainty estimates for the differences between the RCT groups. In general, any Kaplan–Meier estimate becomes progressively less precise over time as the numbers at risk decrease, and at the tail end of the curve, it provides a much less reliable estimate due to the low numbers of patients followed at those time points. Indeed, only 21/159 = 13.2% of patients in the RCT shown in

Figure 7C were in the risk set at 40 months. It has been proposed, accordingly, to refrain from presenting survival plots after the time point where only around 10% to 20% of patients remain at risk of the failure event [

94]. A key point is that if we are to make decisions regarding a test hypothesis, such as the null hypothesis, then the binary decision to either reject or accept is inadequate because it cannot distinguish between the two very different scenarios shown in

Figure 7B,C. Instead, we can more appropriately use the trinary of “reject” (

Figure 7A), “accept” (

Figure 7B), or “inconclusive” (

Figure 7C).

The number needed to treat (NNT), defined as the reciprocal of the estimated risk difference at a specified milestone time point, is a controversial comparative statistic originally proposed by clinicians to quantify differences between treatment groups in RCTs [

95]. However, NNT is highly problematic statistically because values from the same RCT can vary widely for each milestone time point and follow-up time. Therefore, no single NNT can be used to comprehensively describe the results of an RCT. Additionally, NNTs are typically presented as point estimates without uncertainty measures such as CIs, thus creating the false impression that they represent fixed single numerical summaries [

96,

97,

98,

99]. Standard metrics, such as one-year risk reduction and its corresponding CI, are typically more reliable and interpretable summaries of RCTs. When NNTs are presented, their uncertainty intervals and the assumptions behind estimating this measure should be noted.

11. Blocking and Stratification

Due to the play of chance, random sampling and random allocation both generate random imbalances in the distributions of patient characteristics between treatment arms. These imbalances are a natural consequence of random procedures, and uncertainty measures such as CIs account for such imbalances [

8]. Complete randomization cannot perfectly balance baseline covariates between the treatment groups enrolled in an RCT [

8,

89,

100,

101]. The convention of summarizing covariate distributions by treatment arm and testing for between-arm differences, often presented in

Table 1 of RCT reports, reflects nothing more than sample variability in baseline covariates between the groups, and has been called the “

Table 1 fallacy” [

63,

102].

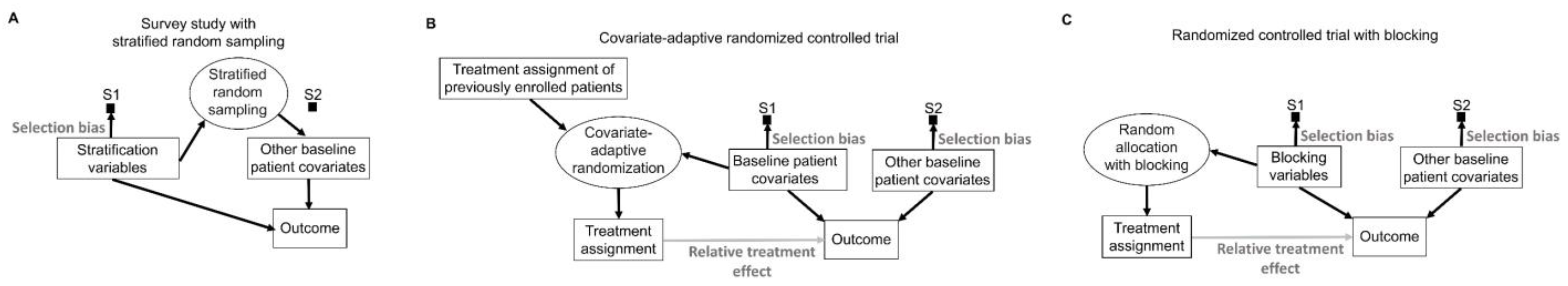

To mitigate potential covariate imbalances in survey studies used in sampling theory, the target population of patients can be partitioned into subgroups known as “strata”, based on specific covariates such as age, sex, race, or ethnicity, known as “stratification variables” [

17]. By design, this sampling procedure induces a known selection bias for the stratification variables, which are sampled according to a specifically selected proportion, typically the population proportion, without deviations due to randomness [

17,

85,

103]. The final sample then is formed by randomly sampling patients from each stratum, thus ensuring that there is no systematic selection bias for the non-stratified covariates (

Figure 8A). For example, if a patient population has a 60% poor prognosis and 40% good prognosis, then a stratified sample of size 200 would consist of a random sample of size 120 from the poor prognosis subpopulation and a random sample of size 80 from the good prognosis subpopulation. There are numerous ways to obtain such representative samples, depending on the structure of the population of interest, particularly for unconscious units, such as sampling to ensure the quality of drug products in the market.

In experimental studies such as RCTs, covariates can be used adaptively during a trial to allocate treatment in a way that minimizes imbalances (

Figure 8B) [

104]. “Minimization” is the most commonly used of these covariate-adaptive randomization schemes [

105]. Treatment allocation in such trials is largely nonrandom because it is directly influenced by the characteristics of earlier patients, along with the baseline covariates of the newly enrolled patients (

Figure 8B) [

101,

106,

107]. Thus, it is critical to choose appropriate statistical methods to validly analyze trials that use covariate-adaptive randomization methods [

107]. Permutation tests can be used as the primary statistical analysis of the comparative treatment effect in RCTs that use covariate-adaptive randomization instead of conventional random treatment allocation [

108]. While the balance achieved by covariate-adaptive randomization can potentially increase power compared with conventional RCTs, knowledge of the characteristics of earlier patients can allow trialists and other stakeholders to predict the next allocation, which increases the vulnerability of the trial to potential manipulation [

101,

108].

To avoid problems caused by the adaptive use of covariates to achieve balance during an RCT, imbalances can be prevented by a procedure known as “blocking” that deliberately restricts random allocation so that each treatment group is balanced with respect to prespecified “blocking variables” (

Figure 8C). For example, in the KEYNOTE-564 phase 3 RCT, the primary outcome of disease recurrence or death was less likely in patients with stage M0 disease, defined as no history of radiologically visible metastasis, compared with patients who previously had such metastasis, classified as stage M1 with no evidence of disease (M1 NED) [

54]. Therefore, to balance this variable between the group of patients randomized to adjuvant pembrolizumab and those randomized to placebo control, blocking was performed according to metastatic status (M0 vs. M1 NED). Within the subpopulation of patients with M0 disease, it was deemed that Eastern Cooperative Oncology Group (ECOG) performance status score and geographic location (United States vs. outside the United States) were baseline covariates that could meaningfully influence the survival endpoints. Accordingly, randomization was further blocked within the M0 subpopulation to balance the ECOG performance status and geographic location of patients randomized to adjuvant pembrolizumab or placebo control [

54].

Medical RCTs often interchangeably use terms such as “blocking” and “stratification” [

109]. However, stratification is a procedure used during sampling in a survey, whereas blocking is used during treatment allocation in an experiment. Conceptually, there is an isomorphism between random sampling, random allocation, and their respective theories and methods in the sense that they are logically equivalent, and thus one can be translated into the other [

36,

87,

110]. Thus, random sampling can be viewed as a random allocation of patients to be included or excluded from a sample. Similarly, random allocation can be viewed as random sampling from the set of patients enrolled in either treatment group. To facilitate conceptual clarity, it may be preferable to keep the terminologies of sampling theory and experimental design distinct, given that each focuses on different physical operations and study designs (

Table 2). Medical RCTs that do use the term “stratification” typically allude to the procedure whereby the prognostic variables (blocking variables) of interest are used to define “strata”, followed by blocking to achieve balance within each stratum [

8,

109].

Including blocking for metastatic status, ECOG performance status, and geographic location in the design of the KEYNOTE-564 RCT ensures that these variables will be balanced between the adjuvant pembrolizumab and placebo control groups. However, it is more efficient to also inform the statistical analysis model that one is interested in “apples-to-apples” comparisons between patients balanced for these blocking variables. To achieve this, the statistical model should adjust for these blocking variables [

89,

101,

111]. This yields “adjusted” HRs that prioritize the comparison of patients randomized to adjuvant pembrolizumab with those randomized to placebo that had the same metastatic status, performance status, and geographic location [

112]. Indeed, the statistical analysis models of KEYNOTE-564 adjusted for these blocking variables [

54]. Of note, while blocked randomization and adjustment can prevent random imbalances of the blocking variables, they do not guarantee the balance of unblocked variables [

113].

12. Forward and Reverse Causal Inference

Suppose that a patient with uncontrolled hypertension starts taking an investigational therapy, and two weeks later her blood pressure is measured and found to be within the normal range. This observed outcome under the investigational treatment is referred to as the “factual” outcome [

114]. One may imagine the two-week outcome that would have been observed if the patient had received standard therapy instead. This may be called the “counterfactual” outcome since it was not observed [

9,

115,

116]. Before a patient’s treatment is chosen and administered, the factual and counterfactual outcomes are called “potential” outcomes because both are possible, but they only become factual and counterfactual once a treatment is given. This structure provides a basis for a “reverse” causal inference task that aims to answer the question, “Did the intervention cause the observed outcome for this particular patient?” [

9,

115,

117]. One may want to transport this knowledge to make predictions about the potential outcomes of using the investigational or standard therapy on other patients belonging to the same or different populations [

2,

118]. Such predictions require more assumptions than the reverse causal inference of RCTs, including causal assumptions about the transportability of the previously estimated relative treatment effects [

2,

80,

81,

119]. These “forward” causal inference models study the effects of causes to answer the question, “What would be the outcome of the intervention?”

To think about the forward causal effect of treatment X on an outcome Y, such as an indicator of response or survival time, one may perform the following thought experiment. To compare two treatments, denoted by 0 and 1, make two physical copies of a patient, treat one with X = 0 and the other with X = 1, and observe the two

potential outcomes, Y(0) and Y(1). The difference Y(1) − Y(0) is called the

causal effect of X on Y for the patient. Since this experiment is impossible, one cannot observe both potential outcomes. This is the central problem of causal inference [

115]. Under reasonable assumptions, however, it can be proven that, if one randomizes actual patients between X = 0 and X = 1, producing sample means as estimators of the population mean treatment effects μ

0 and μ

1, then the difference between the sample means is an unbiased estimator of the between-treatment effect Δ = μ

1 − μ

0 for the population to which the sample corresponds. For example, if Y indicates response, then the sample average treatment effect is the difference between the two treatments’ estimated response probabilities, and the difference between the sample response probabilities follows a probability distribution with mean Δ; that is, it is unbiased. Assume that h

1 is the hazard function (event rate) for the treatment group and h

0 is the hazard function for the control group as previously described [

1,

2]. For HR = h

1/h

0, the relative treatment effect may be written as Δ = log(HR) = log(h

1) − log(h

0), and the sample log(HR) provides an unbiased estimator of Δ. The key assumptions to ensure this is that (1) whichever treatment, X = 0 or 1, is given to a patient, the observed outcome must equal the potential outcome, Y = Y(X); (2) given any patient covariates, treatment choice is conditionally independent of the future potential outcomes (that is, one cannot see into the future); and (3) both treatments must be possible for the patient. In terms of a DAG, (

Figure 6B) [

87], randomization removes any arrows from observed or unknown variables to treatment X, so the causal effect of X on Y cannot be confounded with the effects of any other variables. In particular, randomization removes the treatment decision from the physician or the patient, who would otherwise use the patient’s covariates or preferences to choose treatments (

Figure 6A). An additional statistical tool is the central limit theorem (CLT), which says that, for a sufficiently large sample size, the distribution of the sample estimator is approximately normal with mean Δ and specified variance. This may be used to test hypotheses and compute uncertainty measures such as confidence intervals and

p-values [

120].

13. Generalizability and Transportability of Causal Effects

The term “generalizability” refers to the extension of inferences from an RCT to a patient population that coincides with, or is a subset of, a trial-eligible patient population [

121,

122,

123]. The practical question is how a practicing physician may use inferences based on trial data to make treatment decisions for patients whom the physician sees in a clinic. Generalizability is the primary focus of sampling theory, as discussed above, and random sampling allows one to make inferences about broader populations. However, random sampling often is not practically feasible in trials, and clinical trial samples therefore are not representative. Thus, other sampling mechanisms have been proposed to facilitate generalizability, including the purposive selection of representative patients, pragmatic trials, and stratified sampling based on patient covariates [

121].

The primary focus of experimental design is internal validity, which provides a scientific basis for making causal inferences about the effects of experimental interventions by controlling for bias and random variation [

8,

112,

121,

124]. The tight internal control exercised by experimental designs, such as RCTs, may make it difficult to use sampling theory to identify a population that the enrolled patient sample represents. The approach typically used to move causal inferences from an RCT to a population of patients, such as those seen in clinical practice, is called “transportability” [

2,

80,

81,

125]. Transportability relies on the assumption that the patients enrolled in a RCT and the target populations of interest share key biological or other causal mechanisms that influence the treatment effect. The key transportability assumption is that, while the individual treatment effects μ

0 and μ

1, such as mean survival times, may differ between the sample’s actual population and the target population, the between-treatment effect Δ = μ

1 − μ

0 is the same for the two populations. Transportability from experimental subjects to future patients seen in the clinic who share relevant mechanistic causal properties is a standard scientific assumption [

2,

80,

81,

82]. For example, inferences from an RCT comparing therapies that target human epidermal growth factor receptor 2 (HER2) signaling in breast cancer may be transported if a patient seen in the clinic has breast cancer driven by HER2 signaling, despite the fact that the clinic population is otherwise completely separate in space and time from the sample enrolled in the RCT [

2,

82].

External validity is the ability to extend inferences from a sample to a population, and thus it encompasses both generalizability and transportability [

8,

112,

121,

124]. In studies based on sampling theory, such as health surveys, external validity is mainly based on generalizability, i.e., whether the sample in the study is representative of the broader population of interest. In experimental studies, such as RCTs, external validity is predominantly based on transportability, i.e., whether the RCT investigated causal mechanisms that are shared with the populations of interest. Internal validity, however, is the more fundamental consideration in both sampling theory and experimental design. External validity is meaningless for studies without internal validity.

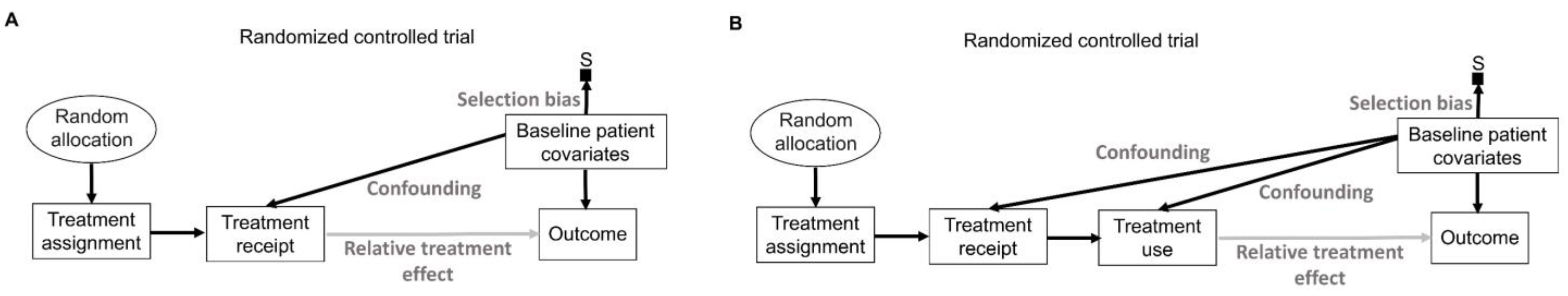

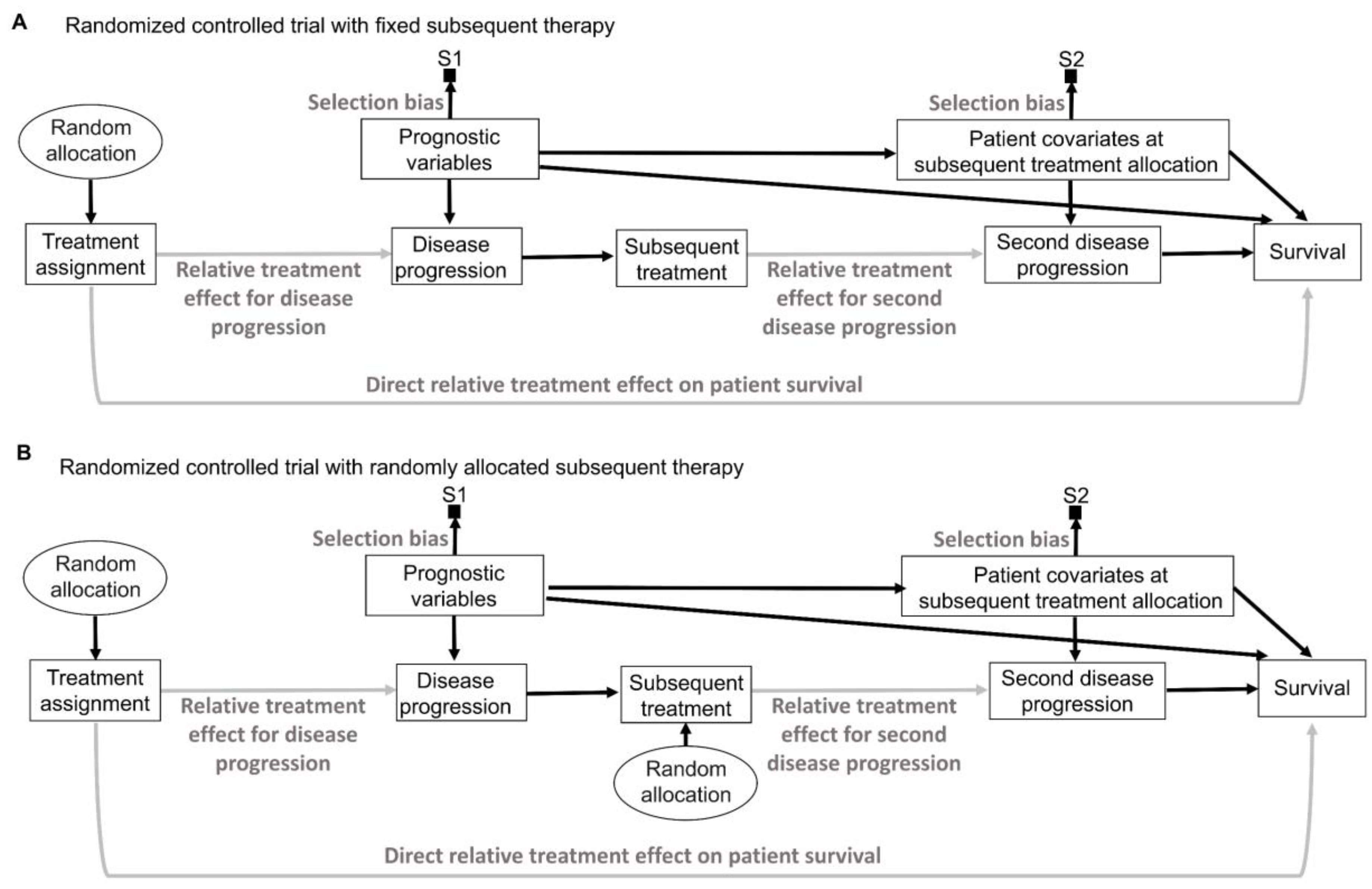

16. Intention to Treat and Per Protocol

While inanimate units, such as plots of land in agricultural experiments, will always follow the allocated intervention in an experiment, experimental design and analysis of RCTs in medicine are more complex because patients may not always follow the randomly assigned treatment (

Figure 9A) [

141,

142,

143]. “Intention-to-treat” (ITT) analyses estimate the relative treatment effect for patients based on their treatment assignment, regardless of whether they actually received the assigned therapy. Uncertainty measures for the relative treatment effect generated by ITT analyses of RCT data are justifiable by the random allocation procedure (

Figure 9A). However, because the actual treatment received is the source of biological efficacy, clinicians are typically interested in predicting the potential outcomes if their patient actually receives a particular therapy. Corresponding causal RCT parameters for such inferences are derived from “per-protocol” (PP) analyses that estimate the relative treatment effect for the therapies that patients actually received [

143]. However, as shown in

Figure 9A, random treatment assignment removes all systematic confounding influences on the assigned treatment but does not prevent the potential influence of patient covariates on whether the treatment was actually received. This means that PP analysis models should account for possible confounding biases to reliably estimate the relative treatment effect of the treatment received on the outcome of interest. This can be facilitated by recognizing that “Treatment assignment” in

Figure 9A is an instrumental variable for the relative treatment effect of the treatment received on the outcome. Instrumental variable methodologies developed in econometrics and epidemiology can be used to account for the systematic confounding influence of the treatments received in RCTs [

142]. A complementary strategy is to enforce RCT internal validity by carefully designing, implementing, and monitoring the trial so that the treatment received corresponds to the treatment assignment as much as possible and is not influenced by patient covariates.

In a recent RCT, patients were randomly allocated to receive an invitation to undergo a single screening colonoscopy or to receive no invitation or screening [

144]. The ITT analysis, termed “intention-to-screen” by the study, found that the risk of colorectal cancer at 10 years was reduced in the invited group compared with the group randomly allocated to no invitation (the usual-care group) with risk ratio (RR) = 0.82, 95% CI 0.70–0.93, and

p ≈ 0.006, corresponding to 7 bits of refutational information against the null hypothesis of no difference in colorectal cancer risk at 10 years. However, only 42% of invited patients actually underwent colonoscopy [

144]. Thus, the estimate yielded by the ITT analysis is more relevant for forward causal inferences related to implementing a health policy of screening colonoscopy invitation. On the other hand, the estimate of higher interest to clinicians and patients is how much an actual screening colonoscopy can modify the risk of colorectal cancer at 10 years. This was provided by the adjusted PP analysis, which reported RR = 0.69, 95% CI 0.55–0.83, and

p ≈ 0.0005, corresponding to 11 bits of refutational information against the null hypothesis. The caveat is that, although the PP analysis is more relevant to direct patient care, its estimates rely on additional assumptions, reviewed elsewhere [

143,

145], and are less physically justifiable from the random allocation than those from the ITT analysis. For simplicity, we have assumed here that what the authors used in their ITT analysis fully corresponded to the random allocation. However, some patients allocated to each group actually were excluded, died, or were diagnosed with colorectal cancer before being included in the study and were thus excluded from the ITT analysis [

144].

An additional distinction, often used in RCTs of medical devices, separates the PP analysis of those who received the treatment from the “as-treated” (AT) analysis of those who actually used their assigned treatment (

Figure 9B). In these scenarios, the AT relative treatment effect estimate is the most relevant for clinical inferences but again requires careful modeling of potential systematic confounders (

Figure 9B). In the colonoscopy RCT [

144], ITT would analyze patients as per their assigned screening intervention regardless of whether the assigned screening invitation was actually sent to the patients, PP would analyze patients based on whether or not they received the assigned invitation, regardless of whether they actually underwent colonoscopy, and AT would analyze patients based on whether they actually underwent colonoscopy, regardless of whether they were originally randomly assigned to the colonoscopy or whether they received an invitation to undergo colonoscopy.

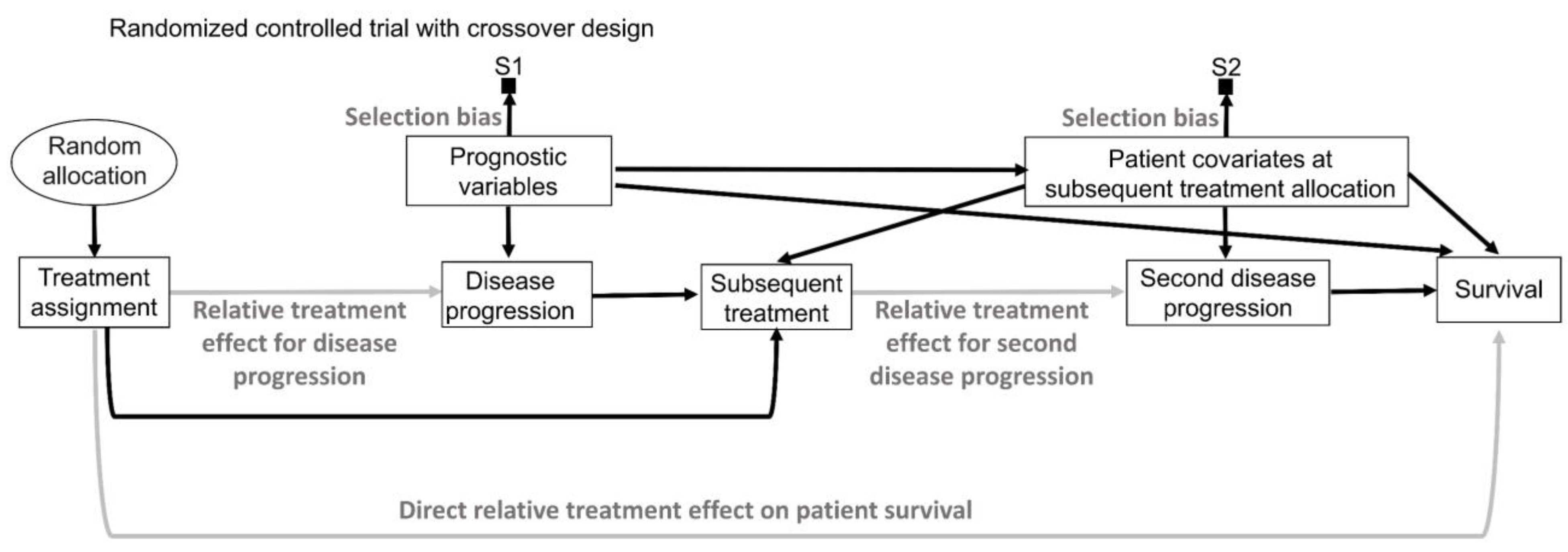

17. Prognostic and Predictive Effects

In addition to the effect of the assigned treatment on the outcomes observed in RCTs, baseline patient covariates, also known as moderator variables, may affect the magnitude and direction of the treatment effect [

2,

146]. These moderator effects can be distinguished based on the two underlying data-generating processes represented in

Figure 10. The first type (

Figure 10A) has been described in the literature using various terms such as “risk magnification”, “risk modeling”, “effect measure modification”, “additive effect”, “main effect”, “heterogeneity of effect”, or “prognostic effect” [

1,

2,

112,

146,

147,

148]. The second type (

Figure 10B) has been described as “biologic interaction”, “effect modeling”, “treatment interaction”, “multiplicative effect”, “biological treatment effect modification”, or “predictive effect” [

1,

2,

112,

146,

147,

148]. For simplicity, we will adopt the terms “prognostic” and “predictive”, often used in medical RCTs, to distinguish between the two moderator effect types.

In an RCT, prognostic variables may directly affect the outcome of interest but do not interact with any treatment. Consequently, they do not affect the comparative treatment effect parameter, such as an HR, which remains stable across patients (

Figure 10A). In contrast, a variable that is predictive for a particular treatment changes the relative treatment effect in RCTs by acting on pathways that mediate the effect of the assigned treatment on the outcome (

Figure 10B). Thus, the HR for survival in an RCT may differ between subgroups of patients harboring distinct values of a predictive biomarker. Predictive biomarkers often have direct prognostic effects as well. For example, patients with breast cancer harboring amplifications of the

HER2 gene, found in 25% to 30% of breast cancers [

149,

150], have different prognoses than patients without such

HER2 amplifications [

151], regardless of what treatment is given. The targeted agent trastuzumab was developed to specifically target the oncogenic HER2 signaling that drives the growth of

HER2-amplified breast cancers [

152]. Therefore, in an RCT comparing the use of trastuzumab versus placebo,

HER2 amplification status acts as both a prognostic biomarker that directly influences patient survival and a predictive biomarker that influences the relative treatment effect for trastuzumab (

Figure 10C). Patients without

HER2 amplification in their tumors would be expected to derive no benefit from trastuzumab [

2,

152].

Nuisance variables are defined as variables that are not of primary interest in a study but still must be accounted for because they may influence the heterogeneity of the outcome of interest. Prognostic variables may act as nuisance variables in RCTs [

8,

99]. Variables expected to have the strongest prognostic effects on the outcome of interest should be used as blocking variables in RCTs (

Figure 8C). The primary endpoint analyses of randomized block designs will model the prognostic effects but rarely the predictive effects of blocking variables [

8,

89]. This is because the predictive effects of patient covariates on the relative treatment effect require large enough replicates (hence, large sample sizes) to be estimated reliably in RCTs [

8,

57,

131]. For this reason, predictive biomarkers typically are first identified in exploratory analyses and characterized in preclinical laboratory studies, with subsequent biologically informed RCTs specifically enriching for patients with these biomarkers [

2,

82,

153,

154]. Modern RCT designs may also attempt to adaptively enrich such biomarkers during trial conduct based on interim analyses of treatment response and survival times [

154,

155].

Due to their powerful direct effects on patient outcomes, prognostic biomarkers should always be considered when making patient-specific clinical inferences and decisions [

1,

148]. On the other hand, identifying predictive effects during an RCT carries the risk of misleading inferences and thus should be performed very rigorously [

156]. For example, an exploratory analysis of the COSMIC-313 RCT investigated whether the International Metastatic Renal Cell Carcinoma Database Consortium (IMDC) risk score [

157] can be used as a predictive covariate for the relative treatment effect of the cabozantinib + nivolumab + ipilimumab triplet therapy versus placebo + nivolumab + ipilimumab control [

64]. Similar to the example shown in

Figure 7A, the Kaplan–Meier survival curves for the IMDC intermediate-risk subgroup showed a clear signal of relative treatment effect difference for PFS favoring the triplet therapy over the control based on a total of 182 PFS events. The HR for PFS was 0.63 with 95% CI 0.47–0.85 and

p ≈ 0.002, corresponding to approximately 9 bits of information against the null hypothesis. However, in the IMDC poor-risk subgroup there were only a total of 67 PFS events, yielding very noisy survival curves, similar to the example shown in

Figure 7C. The HR estimate for PFS in the IMDC poor-risk subgroup was 1.04 with 95% CI 0.65–1.69 and

p ≈ 0.88, corresponding to 0 bits of information against the null hypothesis. Thus, no inferences can be made regarding the predictive effect of the IMDC intermediate- versus poor-risk subgroups in COSMIC-313 because only the intermediate-risk subgroup yielded precise estimates, whereas the poor-risk subgroup estimates were unreliable due to their imprecision. However, because the survival curves did not present the wide uncertainty intervals for the comparative difference between treatment groups as was executed in

Figure 7C, it was incorrectly concluded that the RCT showed no difference in relative treatment effect for the IMDC poor-risk subgroup and thus that the triplet therapy should be favored only in the IMDC intermediate-risk subgroup. Such mistaken inferences from noisy results are very frequent when looking at outcomes within each risk subgroup [

156]. To obtain clinically actionable signals, it is preferable instead to look for either prognostic or predictive effects in the full dataset of all patients enrolled in the RCT. Indeed, if we assume IMDC risk to be a prognostic biomarker in the full dataset, as indicated by the fact that COSMIC-313 used it as a blocking variable in its primary endpoint analysis, then patients with IMDC poor-risk disease will derive more absolute PFS benefit in terms of risk reduction at milestone time points than patients with IMDC intermediate-risk disease [

1,

63]. This is an example in which ignoring prognostic effects while hunting for predictive biomarkers, a type of data dredging, can lead to erroneous clinical inferences and decisions.

Predictive effects are analyzed by including treatment–covariate interaction terms in the statistical regression model used to analyze the RCT dataset [

2,

19]. However, uncertainty measures such as

p-values and CIs for these interaction effects are only physically justifiable in RCTs where both random sampling and random allocation are performed (

Figure 6D). The vast majority of RCTs perform only random allocation, and therefore the uncertainty measures of treatment–covariate interaction terms are not linked to a physical randomization process, since patients were not randomized to their predictive covariates (

Figure 6B). For this reason, modeling these interactions to look for predictive effects in RCT datasets is typically considered exploratory at best, and some journal guidelines specifically recommend against the presentation of

p-values for predictive effects due to the substantial risk of misinterpretation [

158]. However, the same journals also allow the presentation of a cruder visual tool called “forest plots” to perform graphical subgroup comparisons for predictive effects in RCTs [

1,

156,

159]. Forest plots rely on the use of CIs for patient subgroups determined by their presumably predictive covariates (

Figure 11). Neither these inferences nor

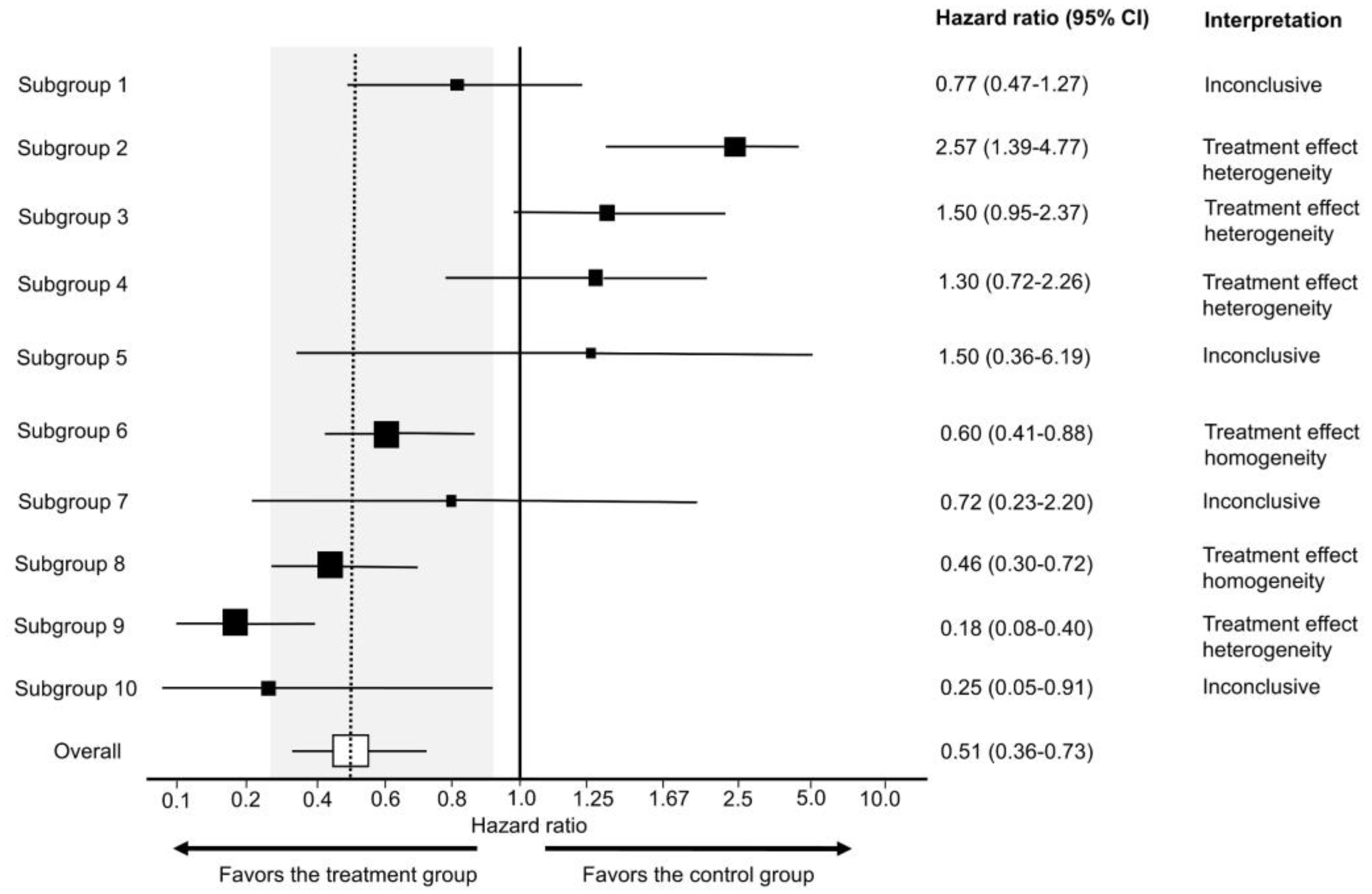

p-values for interaction are physically justifiable due to the lack of random sampling in typical RCT designs. The use of forest plots in this way can easily lead to spurious inferences and may be considered data dredging.

Even when the Cis used in forest plots for subgroup comparisons are valid, the majority of these graphs do not provide clinicians with meaningful indications of patient heterogeneity in practical terms. Empirically, most subgroup analyses of RCTs for predictive effects using forest plots presented at the 2020 and 2021 Annual Meetings of the American Society of Clinical Oncology (ASCO) were found to be inconclusive, yielding no informative signals to either refute (treatment effect heterogeneity) or support (treatment effect homogeneity) the assumption that relative treatment effect parameters such as HRs are stable across subgroups [

156]. All of these forest plots were based on results from a frequentist model, and only 24.2% included one or more subgroups suggestive of treatment effect heterogeneity [

156]. Because clinicians often seek to determine evidence of treatment effect homogeneity from forest plots, a practical approach has been developed to estimate an “indifference zone” of no clinically meaningful difference for the relative treatment effect estimate between the overall RCT cohort and each subgroup visualized by a forest plot [

156]. The assumptions and formulas to estimate the indifference zone are detailed by Hahn et al. [

156], and a simple spreadsheet (

Supplementary File S2) that can be used by clinicians to make these estimations is provided here. The indifference zone shown in

Figure 11 uses the 80% to 125% bioequivalence limits commonly used by the World Health Organization and the FDA, and they correspond to the clinically non-inferior HR effect size interval of 0.80 to 1.25 typically used in RCTs [

160]. Even after using this approach to maximize the information yielded by forest plots, 57.2% of subgroup comparisons presented in forest plots at the 2020 and 2021 annual ASCO meetings were inconclusive, 41.4% showed evidence of treatment effect homogeneity, and only 1.6% were suggestive of treatment effect heterogeneity [

156].

Given these limitations of forest plots, analyses for identifying treatment effect heterogeneity should focus instead on prespecified biologically and clinically plausible predictive biomarkers. Moreover, forest plots often arbitrarily dichotomize subgroups, e.g., into patients aged younger or older than 65 years. Such arbitrary cutoffs misleadingly assume that all patients younger than 65 have the same expected outcome. Rather than arbitrarily categorizing covariates into subgroups, it is more reasonable to preserve all information from continuous variables and fully model treatment-covariate interaction while properly adjusting for other prognostic or predictive effects that can influence outcome heterogeneity [

1,

3,

112,

161]. For example, age-specific treatment inferences and decisions were identified via a utility-based decision analysis based on robust Bayesian nonparametric modeling of the data from the CALGB 40503 phase 3 RCT comparing letrozole alone versus letrozole + bevacizumab in hormone receptor-positive advanced breast cancer [

3,

162].

If forest plots of RCT subgroups are presented, then cautious interpretation should be promoted by journals, professional organizations, and regulatory bodies.

Figure 11 provides teaching examples of how to interpret different subgroup patterns in forest plots. An example of how and why forest plots should be interpreted cautiously is provided by analyses of the POUT phase 3 RCT, which tested whether adjuvant chemotherapy improved outcomes compared with surveillance in patients with upper tract urothelial carcinoma (UTUC) [

163]. The results for the primary endpoint of DFS showed an estimated HR of 0.45 favoring adjuvant chemotherapy with 95% CI 0.30–0.68 and

p = 0.0001, corresponding to 13 bits of refutational information against the null hypothesis of no DFS difference between the two treatment groups. The study’s forest plot illustrating estimated differences in the HR for DFS among the blocking variables and tumor stage was correctly interpreted as inconclusive for any evidence of treatment effect heterogeneity [

163]. However, a common mistake when interpreting forest plots is to conclude that the relative treatment effect estimate is not significant for subgroups with CIs that cross the vertical line corresponding to the null effect, i.e., 1.0 for ratios such as HRs, ORs, and RRs [

1,

39,

156,

164]. Such examples and their proper interpretation are shown in Subgroups 1, 4, 5, and 7 in