Feasibility of Continual Deep Learning-Based Segmentation for Personalized Adaptive Radiation Therapy in Head and Neck Area

Abstract

Simple Summary


1. Introduction

2. Materials and Methods

2.1. Patients

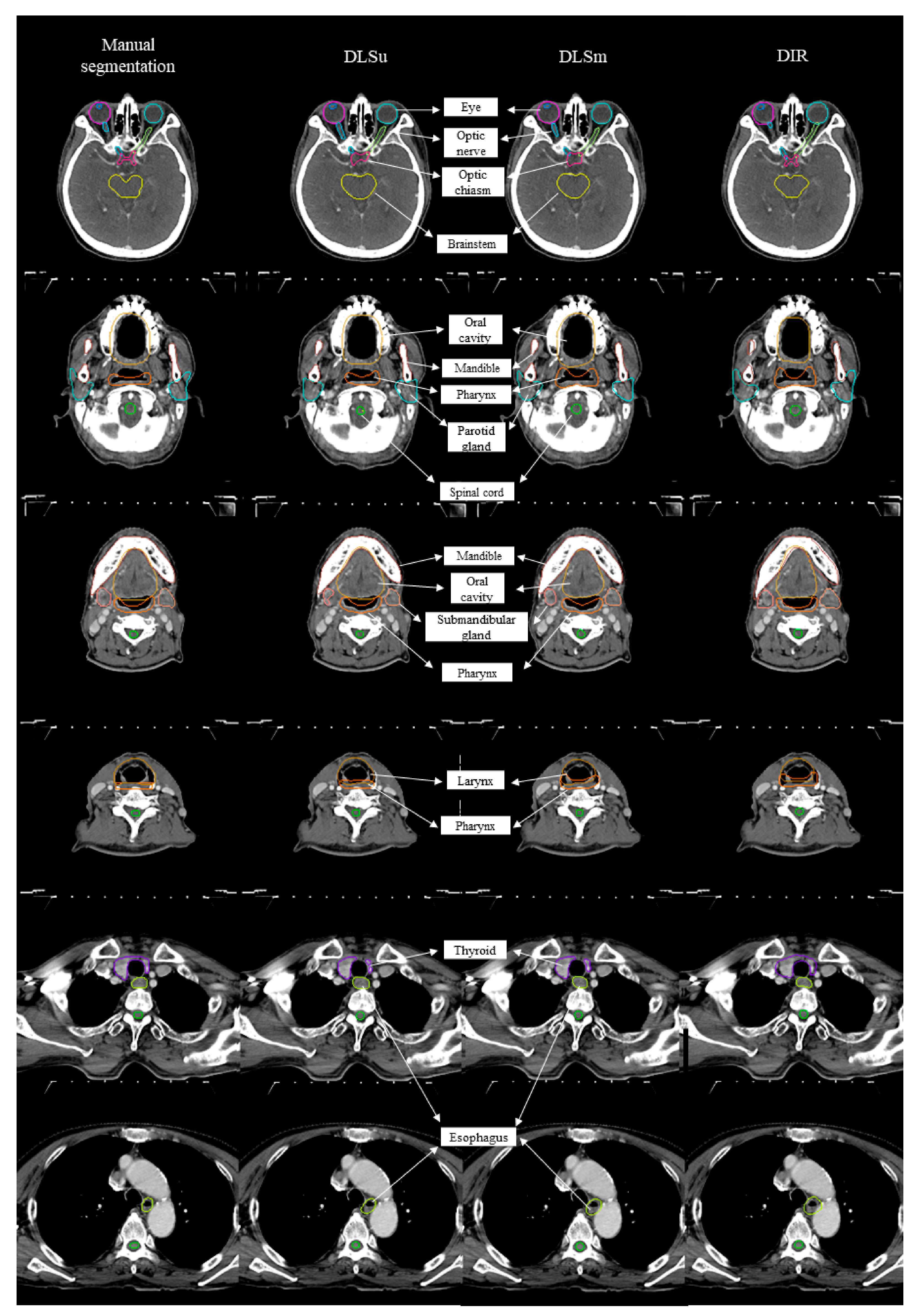

2.2. Manual Segmentation

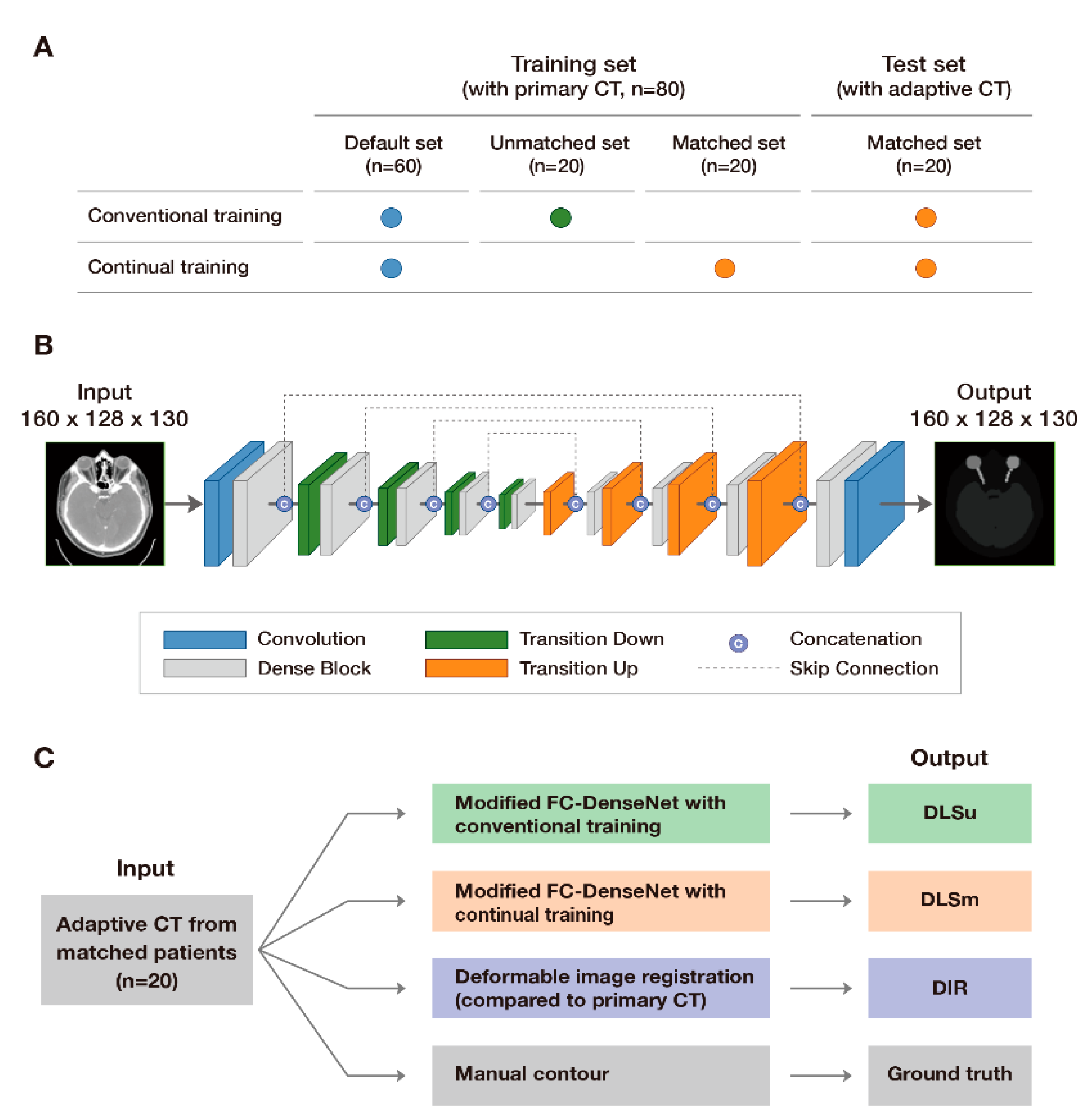

2.3. Deep Learning-Based Segmentation: Training Set and Preprocessing

2.4. Deep Learning-Based Segmentation: Two-Step Segmentation and Network Architecture

2.5. Deformable Image Registration

2.6. Quantitative Evaluation

- (1) Similarity metrics: The volumetric DSC measures the spatial overlap between two binary volumes, the computer-generated contour C and the manual contour M [21]:

  $$\mathrm{DSC} = \frac{2\,|C \cap M|}{|C| + |M|}$$

- (2) Classic measurements: False-positive DSC (FPD) and false-negative DSC (FND) quantify the voxels that were falsely segmented (in C but not in M) and falsely missed (in M but not in C), respectively [22]:

  $$\mathrm{FPD} = \frac{2\,|C \cap \overline{M}|}{|C| + |M|}, \qquad \mathrm{FND} = \frac{2\,|\overline{C} \cap M|}{|C| + |M|}$$

- (3) Distance measurements: For both the 95th percentile Hausdorff distance (HD) [23] and the mean surface distance (MSD), each surface voxel of volume C is assigned the Euclidean distance, in millimeters, to the nearest surface voxel of volume M. HD is the 95th percentile of these surface distances, and MSD is the mean of their absolute values.
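The metrics listed above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the study's evaluation code. The function names are hypothetical. FPD and FND are assumed to share the DSC denominator |C| + |M|. Surface voxels are assumed to be foreground voxels with at least one 6-connected background neighbour. The voxel spacing in mm must be supplied by the caller, because CT voxels are usually anisotropic. With `symmetric=False` only the C-to-M distances described above are used. The default `symmetric=True` pools both directions, which is a common but assumed variant. Nearest-surface distances are computed by brute force, which is adequate for small masks; for full CT volumes a distance transform such as `scipy.ndimage.distance_transform_edt` would be the usual choice.

```python
import numpy as np


def overlap_metrics(c: np.ndarray, m: np.ndarray) -> dict:
    """DSC, FPD and FND between computer (C) and manual (M) binary masks.

    Assumed definitions, all normalised by |C| + |M|:
      DSC = 2|C & M|  / (|C| + |M|)
      FPD = 2|C & ~M| / (|C| + |M|)   voxels segmented in C but not in M
      FND = 2|~C & M| / (|C| + |M|)   voxels of M missed by C
    """
    c, m = c.astype(bool), m.astype(bool)
    denom = c.sum() + m.sum()
    if denom == 0:
        raise ValueError("both masks are empty")
    return {
        "DSC": 2.0 * np.sum(c & m) / denom,
        "FPD": 2.0 * np.sum(c & ~m) / denom,
        "FND": 2.0 * np.sum(~c & m) / denom,
    }


def surface_points_mm(mask: np.ndarray, spacing) -> np.ndarray:
    """Coordinates (mm) of surface voxels: foreground voxels with at least one
    6-connected background neighbour (outside the array counts as background)."""
    mask = mask.astype(bool)
    padded = np.pad(mask, 1, constant_values=False)
    interior = padded.copy()
    for axis in range(mask.ndim):
        for shift in (-1, 1):
            # A voxel stays "interior" only if its neighbour along this axis is foreground.
            interior &= np.roll(padded, shift, axis=axis)
    core = tuple(slice(1, -1) for _ in range(mask.ndim))
    surface = mask & ~interior[core]
    return np.argwhere(surface) * np.asarray(spacing, dtype=float)


def directed_surface_distances(a, b, spacing) -> np.ndarray:
    """Distance (mm) from every surface voxel of `a` to the nearest surface voxel of `b`."""
    pa, pb = surface_points_mm(a, spacing), surface_points_mm(b, spacing)
    if len(pa) == 0 or len(pb) == 0:
        raise ValueError("empty mask")
    # Brute-force pairwise distances: O(|A| * |B|) memory, fine for small masks only.
    pairwise = np.linalg.norm(pa[:, None, :] - pb[None, :, :], axis=-1)
    return pairwise.min(axis=1)


def distance_metrics(c, m, spacing=(1.0, 1.0, 1.0), symmetric=True) -> dict:
    """95th percentile Hausdorff distance (HD95) and mean surface distance (MSD), in mm.

    symmetric=False uses only C -> M distances; symmetric=True (an assumption)
    pools the C -> M and M -> C distances before taking the percentile and mean.
    """
    d = directed_surface_distances(c, m, spacing)
    if symmetric:
        d = np.concatenate([d, directed_surface_distances(m, c, spacing)])
    return {"HD95": float(np.percentile(d, 95)), "MSD": float(d.mean())}


# Toy example: two 10-voxel cubes offset by 2 voxels along the first axis.
manual = np.zeros((20, 20, 20), dtype=bool)
manual[5:15, 5:15, 5:15] = True
auto = np.zeros_like(manual)
auto[7:17, 5:15, 5:15] = True

ov = overlap_metrics(auto, manual)
dist = distance_metrics(auto, manual, spacing=(1.0, 1.0, 1.0))
print(ov, dist)
```

In the toy example, 800 of the 1000 voxels in each cube overlap, so DSC = 2 × 800 / 2000 = 0.8 and FPD = FND = 0.2. No surface voxel lies more than 2 mm from the other surface, and HD95 evaluates to 2 mm.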

2.7. Subjective Evaluation

- (1) Discrimination: for a single contour drawn from M or C (DLSu, DLSm, or DIR), it was judged whether the contour had been delineated by a human or by a computer.

- (2) Pairwise comparison: M vs. DLSm, DLSm vs. DLSu, and DLSu vs. DIR.

- (3) Quality assurance: a single contour from M or C (DLSu, DLSm, or DIR) was reviewed, and a major error was defined as a subjectively assessed discrepancy affecting more than 10% of the contour.

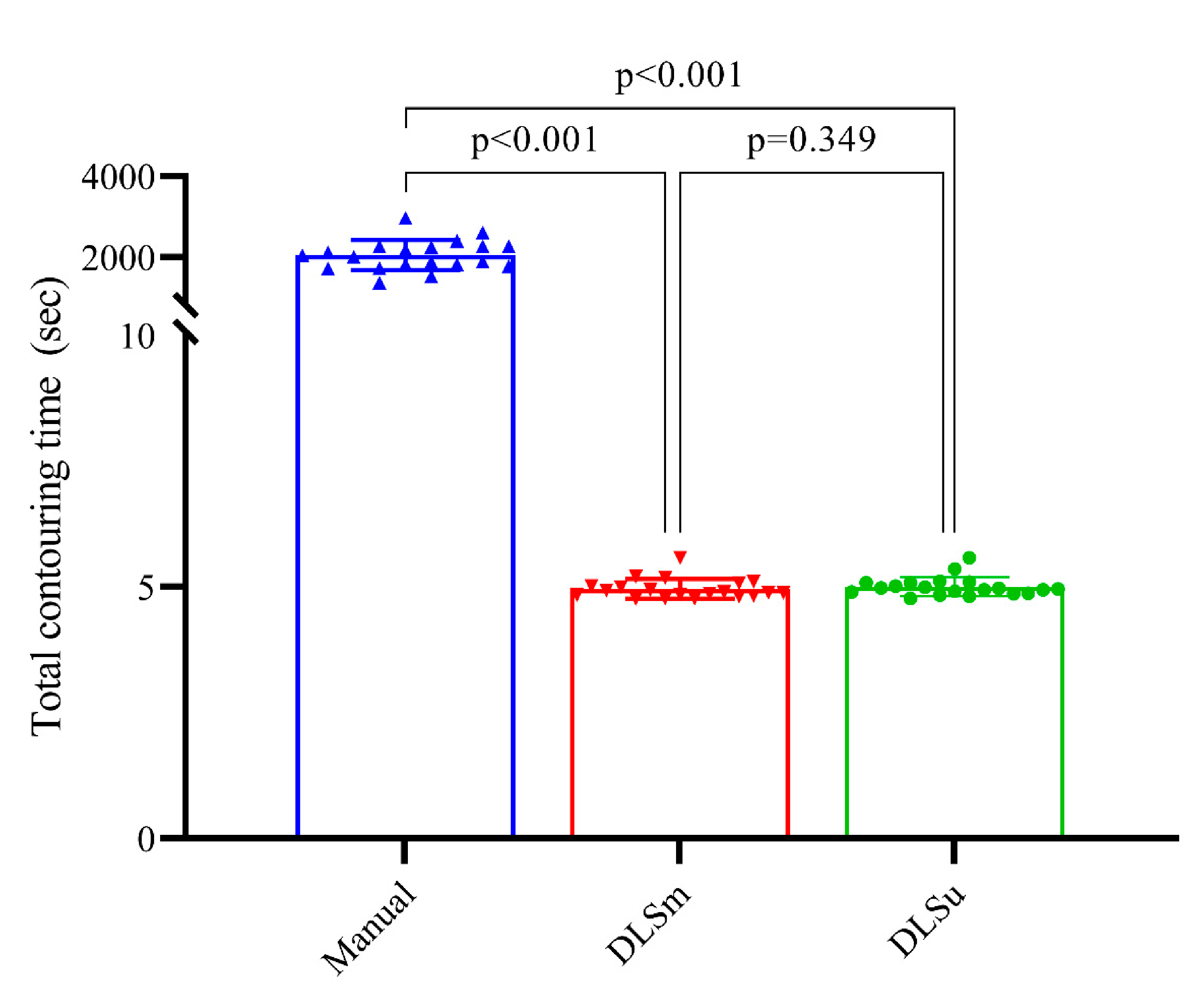

2.8. Contouring Time

2.9. Statistical Analysis

3. Results

3.1. Baseline Information

3.2. Quantitative Evaluation

3.2.1. Overall Performance

3.2.2. Central Organs

3.2.3. Bony Structures

3.2.4. Glandular Structures

3.2.5. Optic Apparatus

3.3. Time

3.4. Subjective Evaluation

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Glide-Hurst, C.K.; Lee, P.; Yock, A.D.; Olsen, J.R.; Cao, M.; Siddiqui, F.; Parker, W.; Doemer, A.; Rong, Y.; Kishan, A.U.; et al. Adaptive radiation therapy (ART) strategies and technical considerations: A state of the ART review from NRG Oncology. Int. J. Radiat. Oncol. Biol. Phys. 2020.

2. Brouwer, C.L.; Steenbakkers, R.J.; Bourhis, J.; Budach, W.; Grau, C.; Gregoire, V.; van Herk, M.; Lee, A.; Maingon, P.; Nutting, C.; et al. CT-based delineation of organs at risk in the head and neck region: DAHANCA, EORTC, GORTEC, HKNPCSG, NCIC CTG, NCRI, NRG Oncology and TROG consensus guidelines. Radiother. Oncol. 2015, 117, 83–90.

3. Gregoire, V.; Ang, K.; Budach, W.; Grau, C.; Hamoir, M.; Langendijk, J.A.; Lee, A.; Le, Q.T.; Maingon, P.; Nutting, C.; et al. Delineation of the neck node levels for head and neck tumors: A 2013 update. DAHANCA, EORTC, HKNPCSG, NCIC CTG, NCRI, RTOG, TROG consensus guidelines. Radiother. Oncol. 2014, 110, 172–181.

4. Hong, T.S.; Tome, W.A.; Harari, P.M. Heterogeneity in head and neck IMRT target design and clinical practice. Radiother. Oncol. 2012, 103, 92–98.

5. O’Daniel, J.C.; Rosenthal, D.I.; Barker, J.L.; Ahamad, A.; Asper, J.A.; Blanco, A.I.; de Crevoisier, R.; Holsinger, F.C.; Schwartz, D.I.; Ang, K.K.; et al. Inter-observer contouring variations of head-and-neck anatomy. Int. J. Radiat. Oncol. Biol. Phys. 2005, 63, S370.

6. Vinod, S.K.; Jameson, M.G.; Min, M.; Holloway, L.C. Uncertainties in volume delineation in radiation oncology: A systematic review and recommendations for future studies. Radiother. Oncol. 2016, 121, 169–179.

7. Feng, M.; Demiroz, C.; Vineberg, K.A.; Eisbruch, A.; Balter, J.M. Normal tissue anatomy for oropharyngeal cancer: Contouring variability and its impact on optimization. Int. J. Radiat. Oncol. Biol. Phys. 2012, 84, e245–e249.

8. Brouwer, C.L.; Steenbakkers, R.J.; van den Heuvel, E.; Duppen, J.C.; Navran, A.; Bijl, H.P.; Chouvalova, O.; Burlage, F.R.; Meertens, H.; Langendijk, J.A.; et al. 3D variation in delineation of head and neck organs at risk. Radiat. Oncol. 2012, 7, 32.

9. Peng, Y.L.; Chen, L.; Shen, G.Z.; Li, Y.N.; Yao, J.J.; Xiao, W.W.; Yang, L.; Zhou, S.; Li, J.X.; Cheng, W.Q.; et al. Interobserver variations in the delineation of target volumes and organs at risk and their impact on dose distribution in intensity-modulated radiation therapy for nasopharyngeal carcinoma. Oral Oncol. 2018, 82, 1–7.

10. Budach, W.; Bolke, E.; Fietkau, R.; Buchali, A.; Wendt, T.G.; Popp, W.; Matuschek, C.; Sack, H. Evaluation of time, attendance of medical staff, and resources during radiotherapy for head and neck cancer patients: The DEGRO-QUIRO trial. Strahlenther. Onkol. 2011, 187, 449–460.

11. Vorwerk, H.; Zink, K.; Schiller, R.; Budach, V.; Bohmer, D.; Kampfer, S.; Popp, W.; Sack, H.; Engenhart-Cabillic, R. Protection of quality and innovation in radiation oncology: The prospective multicenter trial the German Society of Radiation Oncology (DEGRO-QUIRO study). Evaluation of time, attendance of medical staff, and resources during radiotherapy with IMRT. Strahlenther. Onkol. 2014, 190, 433–443.

12. Kosmin, M.; Ledsam, J.; Romera-Paredes, B.; Mendes, R.; Moinuddin, S.; de Souza, D.; Gunn, L.; Kelly, C.; Hughes, C.O.; Karthikesalingam, A.; et al. Rapid advances in auto-segmentation of organs at risk and target volumes in head and neck cancer. Radiother. Oncol. 2019, 135, 130–140.

13. Van Dijk, L.V.; Van den Bosch, L.; Aljabar, P.; Peressutti, D.; Both, S.; Steenbakkers, R.J.H.M.; Langendijk, J.A.; Gooding, M.J.; Brouwer, C.L. Improving automatic delineation for head and neck organs at risk by deep learning contouring. Radiother. Oncol. 2020, 142, 115–123.

14. Lee, H.; Lee, E.; Kim, N.; Kim, J.H.; Park, K.; Lee, H.; Chun, J.; Shin, J.I.; Chang, J.S.; Kim, J.S. Clinical evaluation of commercial atlas-based auto-segmentation in the head and neck region. Front. Oncol. 2019, 9, 239.

15. Cui, S.; Tseng, H.H.; Pakela, J.; Ten Haken, R.K.; El Naqa, I. Introduction to machine and deep learning for medical physicists. Med. Phys. 2020, 47, e127–e147.

16. Seo, H.; Badiei Khuzani, M.; Vasudevan, V.; Huang, C.; Ren, H.; Xiao, R.; Jia, X.; Xing, L. Machine learning techniques for biomedical image segmentation: An overview of technical aspects and introduction to state-of-art applications. Med. Phys. 2020, 47, e148–e167.

17. Jégou, S.; Drozdzal, M.; Vazquez, D.; Romero, A.; Bengio, Y. The One Hundred Layers Tiramisu: Fully Convolutional Densenets for Semantic Segmentation. Available online: https://arxiv.org/abs/1611.09326v3 (accessed on 12 December 2020).

18. Li, X.; Yu, L.; Chang, D.; Ma, Z.; Cao, J. Dual cross-entropy loss for small-sample fine-grained vehicle classification. IEEE Trans. Veh. Technol. 2019, 68, 4204–4212.

19. Nelson, A.S.; Duchateau, M.; Piper, J.W.; Verellen, D.; Ridder, M.D. SU-E-J-198: Evaluation of a free-form intensity-based deformable registration method using the POPI model. Med. Phys. 2014, 41, 202.

20. Piper, J. SU-FF-I-68: Evaluation of an intensity-based free-form deformable registration algorithm. Med. Phys. 2007, 34, 2353–2354.

21. Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

22. Babalola, K.O.; Patenaude, B.; Aljabar, P.; Schnabel, J.; Kennedy, D.; Crum, W.; Smith, S.; Cootes, T.; Jenkinson, M.; Rueckert, D. An evaluation of four automatic methods of segmenting the subcortical structures in the brain. Neuroimage 2009, 47, 1435–1447.

23. Huttenlocher, D.P.; Klanderman, G.A.; Rucklidge, W.J. Comparing images using the Hausdorff distance. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 850–863.

24. Gooding, M.J.; Smith, A.J.; Tariq, M.; Aljabar, P.; Peressutti, D.; van der Stoep, J.; Reymen, B.; Emans, D.; Hattu, D.; van Loon, J.; et al. Comparative evaluation of autocontouring in clinical practice: A practical method using the Turing test. Med. Phys. 2018, 45, 5105–5115.

25. Rose-Ped, A.M.; Bellm, L.A.; Epstein, J.B.; Trotti, A.; Gwede, C.; Fuchs, H.J. Complications of radiation therapy for head and neck cancers. The patient’s perspective. Cancer Nurs. 2002, 25, 461–467, quiz 468–469.

26. Jin, X.; Hu, W.; Shang, H.; Han, C.; Yi, J.; Zhou, Y.; Xie, C. CBCT-based volumetric and dosimetric variation evaluation of volumetric modulated arc radiotherapy in the treatment of nasopharyngeal cancer patients. Radiat. Oncol. 2013, 8, 279.

27. Ho, K.F.; Marchant, T.; Moore, C.; Webster, G.; Rowbottom, C.; Penington, H.; Lee, L.; Yap, B.; Sykes, A.; Slevin, N. Monitoring dosimetric impact of weight loss with kilovoltage (kV) cone beam CT (CBCT) during parotid-sparing IMRT and concurrent chemotherapy. Int. J. Radiat. Oncol. Biol. Phys. 2012, 82, e375–e382.

28. Lee, C.; Langen, K.M.; Lu, W.; Haimerl, J.; Schnarr, E.; Ruchala, K.J.; Olivera, G.H.; Meeks, S.L.; Kupelian, P.A.; Shellenberger, T.D.; et al. Assessment of parotid gland dose changes during head and neck cancer radiotherapy using daily megavoltage computed tomography and deformable image registration. Int. J. Radiat. Oncol. Biol. Phys. 2008, 71, 1563–1571.

29. Morgan, H.E.; Sher, D.J. Adaptive radiotherapy for head and neck cancer. Cancers Head Neck 2020, 5, 1.

30. Heukelom, J.; Fuller, C.D. Head and neck cancer adaptive radiation therapy (ART): Conceptual considerations for the informed clinician. Semin. Radiat. Oncol. 2019, 29, 258–273.

31. Wang, Z.H.; Yan, C.; Zhang, Z.Y.; Zhang, C.P.; Hu, H.S.; Kirwan, J.; Mendenhall, W.M. Radiation-induced volume changes in parotid and submandibular glands in patients with head and neck cancer receiving postoperative radiotherapy: A longitudinal study. Laryngoscope 2009, 119, 1966–1974.

32. Yao, W.R.; Xu, S.P.; Liu, B.; Cao, X.T.; Ren, G.; Du, L.; Zhou, F.G.; Feng, L.C.; Qu, B.L.; Xie, C.B.; et al. Replanning criteria and timing definition for parotid protection-based adaptive radiation therapy in nasopharyngeal carcinoma. Biomed. Res. Int. 2015, 2015, 476383.

33. Fiorentino, A.; Caivano, R.; Metallo, V.; Chiumento, C.; Cozzolino, M.; Califano, G.; Clemente, S.; Pedicini, P.; Fusco, V. Parotid gland volumetric changes during intensity-modulated radiotherapy in head and neck cancer. Br. J. Radiol. 2012, 85, 1415–1419.

34. Liang, S.; Tang, F.; Huang, X.; Yang, K.; Zhong, T.; Hu, R.; Liu, S.; Yuan, X.; Zhang, Y. Deep-learning-based detection and segmentation of organs at risk in nasopharyngeal carcinoma computed tomographic images for radiotherapy planning. Eur. Radiol. 2019, 29, 1961–1967.

35. Fang, Y.; Wang, J.; Chen, S.; Shen, S.; Zhang, Z.; Hu, W. Impact of dataset size on deep learning-based auto segmentation for head and neck cancer. Int. J. Radiat. Oncol. Biol. Phys. 2019, 105, E129–E130.

36. Nikolov, S.; Blackwell, S.; Mendes, R.; Fauw, J.D.; Meyer, C.; Hughes, C.; Askham, H.; Romera-Paredes, B. Deep Learning to Achieve Clinically Applicable Segmentation of Head and Neck Anatomy for Radiotherapy. Available online: https://arxiv.org/abs/1809.04430v1 (accessed on 12 December 2020).

37. Fritscher, K.; Raudaschl, P.; Zaffino, P.; Spadea, M.F.; Sharp, G.C.; Schubert, R. Deep neural networks for fast segmentation of 3D medical images. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016; Springer: Cham, Switzerland, 2016.

38. Ibragimov, B.; Xing, L. Segmentation of organs-at-risks in head and neck CT images using convolutional neural networks. Med. Phys. 2017, 44, 547–557.

39. Močnik, D.; Ibragimov, B.; Xing, L.; Strojan, P.; Likar, B.; Pernuš, F.; Vrtovec, T. Segmentation of parotid glands from registered CT and MR images. Phys. Med. 2018, 52, 33–41.

40. Ren, X.; Xiang, L.; Nie, D.; Shao, Y.; Zhang, H.; Shen, D.; Wang, Q. Interleaved 3D-CNNs for joint segmentation of small-volume structures in head and neck CT images. Med. Phys. 2018, 45, 2063–2075.

41. Zhu, W.; Huang, Y.; Zeng, L.; Chen, X.; Liu, Y.; Qian, Z.; Du, N.; Fan, W.; Xie, X. AnatomyNet: Deep Learning for Fast and Fully Automated Whole-Volume Segmentation of Head and Neck Anatomy. Available online: https://arxiv.org/abs/1808.05238 (accessed on 12 December 2020).

42. Tong, N.; Gou, S.; Yang, S.; Ruan, D.; Sheng, K. Fully automatic multi-organ segmentation for head and neck cancer radiotherapy using shape representation model constrained fully convolutional neural networks. Med. Phys. 2018, 45, 4558–4567.

43. Van Rooij, W.; Dahele, M.; Ribeiro Brandao, H.; Delaney, A.R.; Slotman, B.J.; Verbakel, W.F. Deep learning-based delineation of head and neck organs at risk: Geometric and dosimetric evaluation. Int. J. Radiat. Oncol. Biol. Phys. 2019, 104, 677–684.

44. Rhee, D.J.; Cardenas, C.E.; Elhalawani, H.; McCarroll, R.; Zhang, L.; Yang, J.; Garden, A.S.; Peterson, C.B.; Beadle, B.M.; Court, L.E. Automatic detection of contouring errors using convolutional neural networks. Med. Phys. 2019, 46, 5086–5097.

45. Wong, J.; Fong, A.; McVicar, N.; Smith, S.; Giambattista, J.; Wells, D.; Kolbeck, C.; Giambattista, J.; Gondara, L.; Alexander, A. Comparing deep learning-based auto-segmentation of organs at risk and clinical target volumes to expert inter-observer variability in radiotherapy planning. Radiother. Oncol. 2020, 144, 152–158.

46. Zhensong, W.; Lifang, W.; Li, W.; Yaozong, G.; Wufan, C.; Dinggang, S. Hierarchical vertex regression-based segmentation of head and neck CT images for radiotherapy planning. IEEE Trans. Image Process. 2018, 27, 923–937.

47. Oktay, O.; Nanavati, J.; Schwaighofer, A.; Carter, D.; Bristow, M.; Tanno, R.; Jena, R.; Barnett, G.; Noble, D.; Rimmer, Y.; et al. Evaluation of deep learning to augment image-guided radiotherapy for head and neck and prostate cancers. JAMA Netw. Open 2020, 3, e2027426.

48. Mencarelli, A.; van Kranen, S.R.; Hamming-Vrieze, O.; van Beek, S.; Nico Rasch, C.R.; van Herk, M.; Sonke, J.J. Deformable image registration for adaptive radiation therapy of head and neck cancer: Accuracy and precision in the presence of tumor changes. Int. J. Radiat. Oncol. Biol. Phys. 2014, 90, 680–687.

49. Mencarelli, A.; van Beek, S.; van Kranen, S.; Rasch, C.; van Herk, M.; Sonke, J.J. Validation of deformable registration in head and neck cancer using analysis of variance. Med. Phys. 2012, 39, 6879–6884.

50. Zhao, Y.; Rhee, D.J.; Cardenas, C.; Court, L.E.; Yang, J. Training deep-learning segmentation models from severely limited data. Med. Phys. 2021.

51. Choi, M.S.; Choi, B.S.; Chung, S.Y.; Kim, N.; Chun, J.; Kim, Y.B.; Chang, J.S.; Kim, J.S. Clinical evaluation of atlas- and deep learning-based automatic segmentation of multiple organs and clinical target volumes for breast cancer. Radiother. Oncol. 2020, 153, 139–145.

| Structure (mean ± SD) | DSC, DLSu | DSC, DLSm | DSC, DIR | FPD, DLSu | FPD, DLSm | FPD, DIR | FND, DLSu | FND, DLSm | FND, DIR |
|---|---|---|---|---|---|---|---|---|---|
| All | 0.80 ** ± 0.02 | 0.83 * ± 0.02 | 0.70 † ± 0.05 | 0.19 ** ± 0.03 | 0.19 ± 0.03 | 0.33 † ± 0.07 | 0.20 ** ± 0.04 | 0.18 * ± 0.03 | 0.28 † ± 0.05 |
| Central organs | | | | | | | | | |
| Brainstem | 0.87 ± 0.02 | 0.87 ± 0.03 | 0.87 ± 0.05 | 0.18 ± 0.07 | 0.19 ± 0.09 | 0.15 † ± 0.10 | 0.07 ** ± 0.03 | 0.07 ± 0.04 | 0.12 † ± 0.06 |
| Spinal cord | 0.82 ** ± 0.04 | 0.82 ± 0.04 | 0.67 † ± 0.16 | 0.15 ** ± 0.10 | 0.17 ± 0.10 | 0.33 † ± 0.21 | 0.21 ± 0.12 | 0.18 ± 0.11 | 0.33 † ± 0.19 |
| Esophagus | 0.80 ± 0.07 | 0.82 ± 0.04 | 0.74 ± 0.10 | 0.20 ± 0.09 | 0.22 ± 0.07 | 0.28 ± 0.14 | 0.20 ± 0.14 | 0.13 * ± 0.06 | 0.25 † ± 0.10 |
| Oral cavity | 0.91 ± 0.02 | 0.91 ± 0.02 | 0.88 † ± 0.04 | 0.11 ± 0.06 | 0.09 * ± 0.05 | 0.12 ± 0.08 | 0.07 ± 0.04 | 0.08 ± 0.04 | 0.11 ± 0.07 |
| Pharynx | 0.82 ** ± 0.03 | 0.82 ± 0.03 | 0.73 † ± 0.11 | 0.20 ** ± 0.08 | 0.28 * ± 0.09 | 0.29 ± 0.13 | 0.15 ** ± 0.07 | 0.08 * ± 0.05 | 0.26 † ± 0.14 |
| Larynx | 0.85 ** ± 0.05 | 0.85 ± 0.04 | 0.77 † ± 0.09 | 0.20 ± 0.12 | 0.19 ± 0.13 | 0.26 ± 0.17 | 0.09 ** ± 0.10 | 0.11 ± 0.10 | 0.20 † ± 0.12 |
| Bony structures | | | | | | | | | |
| Mandible | 0.95 ** ± 0.01 | 0.95 ± 0.01 | 0.85 † ± 0.09 | 0.03 ** ± 0.02 | 0.05 * ± 0.02 | 0.15 † ± 0.10 | 0.07 ** ± 0.03 | 0.05 * ± 0.03 | 0.15 † ± 0.09 |
| R_cochlea | 0.76 ± 0.07 | 0.76 ± 0.08 | 0.68 ± 0.15 | 0.32 ± 0.11 | 0.21 * ± 0.09 | 0.34 † ± 0.19 | 0.17 ± 0.12 | 0.26 ± 0.15 | 0.29 ± 0.20 |
| L_cochlea | 0.73 ± 0.07 | 0.76 ± 0.07 | 0.71 ± 0.14 | 0.32 ± 0.16 | 0.25 ± 0.13 | 0.31 ± 0.15 | 0.22 ± 0.13 | 0.24 ± 0.16 | 0.28 ± 0.22 |
| R_TMJ | 0.72 ± 0.07 | 0.70 ± 0.08 | 0.65 ± 0.14 | 0.25 ± 0.10 | 0.25 ± 0.13 | 0.31 ± 0.20 | 0.30 ± 0.17 | 0.35 ± 0.18 | 0.39 ± 0.19 |
| L_TMJ | 0.74 ± 0.07 | 0.75 ± 0.05 | 0.71 ± 0.11 | 0.27 ± 0.13 | 0.21 ± 0.11 | 0.24 ± 0.15 | 0.26 ± 0.10 | 0.29 ± 0.14 | 0.34 ± 0.16 |
| Glandular structures | | | | | | | | | |
| R_parotidG | 0.85 ** ± 0.04 | 0.87 * ± 0.03 | 0.76 † ± 0.08 | 0.17 ** ± 0.08 | 0.13 ± 0.06 | 0.34 † ± 0.13 | 0.14 ± 0.08 | 0.13 ± 0.06 | 0.14 ± 0.08 |
| L_parotidG | 0.84 ** ± 0.04 | 0.86 * ± 0.02 | 0.77 † ± 0.07 | 0.18 ** ± 0.07 | 0.12 * ± 0.05 | 0.32 † ± 0.13 | 0.13 ± 0.06 | 0.15 ± 0.06 | 0.15 ± 0.08 |
| R_SMG | 0.81 ** ± 0.10 | 0.88 * ± 0.04 | 0.71 † ± 0.09 | 0.06 ** ± 0.03 | 0.10 * ± 0.04 | 0.40 † ± 0.13 | 0.32 ** ± 0.21 | 0.15 * ± 0.08 | 0.19 ± 0.11 |
| L_SMG | 0.83 ** ± 0.06 | 0.86 * ± 0.04 | 0.71 † ± 0.11 | 0.07 ** ± 0.04 | 0.10 * ± 0.05 | 0.39 † ± 0.14 | 0.28 ± 0.12 | 0.17 * ± 0.08 | 0.19 ± 0.14 |
| Thyroid | 0.88 ** ± 0.08 | 0.88 ± 0.04 | 0.70 † ± 0.14 | 0.10 ** ± 0.04 | 0.10 ± 0.05 | 0.33 † ± 0.15 | 0.15 ** ± 0.16 | 0.14 ± 0.08 | 0.27 † ± 0.17 |
| Optic apparatus | | | | | | | | | |
| R_eye | 0.91 ** ± 0.02 | 0.92 ± 0.02 | 0.84 † ± 0.06 | 0.12 ± 0.06 | 0.09 * ± 0.06 | 0.16 † ± 0.07 | 0.05 ** ± 0.03 | 0.07 * ± 0.04 | 0.16 † ± 0.09 |
| L_eye | 0.91 ** ± 0.02 | 0.90 ± 0.02 | 0.83 † ± 0.07 | 0.09 ** ± 0.07 | 0.13 * ± 0.08 | 0.18 ± 0.09 | 0.09 ** ± 0.06 | 0.06 * ± 0.05 | 0.16 † ± 0.11 |
| R_lens | 0.78 ** ± 0.08 | 0.79 ± 0.09 | 0.52 † ± 0.22 | 0.32 ** ± 0.17 | 0.27 ± 0.16 | 0.54 † ± 0.32 | 0.11 ** ± 0.10 | 0.15 ± 0.10 | 0.42 † ± 0.22 |
| L_lens | 0.76 ** ± 0.13 | 0.78 ± 0.09 | 0.45 † ± 0.24 | 0.22 ** ± 0.20 | 0.28 ± 0.19 | 0.63 † ± 0.33 | 0.26 ** ± 0.27 | 0.16 ± 0.14 | 0.47 † ± 0.25 |
| R_optic nerve | 0.72 ** ± 0.07 | 0.70 ± 0.07 | 0.58 † ± 0.14 | 0.22 ** ± 0.10 | 0.16 * ± 0.09 | 0.36 † ± 0.18 | 0.34 ** ± 0.13 | 0.44 * ± 0.11 | 0.49 ± 0.17 |
| L_optic nerve | 0.70 ** ± 0.07 | 0.72 ± 0.07 | 0.57 † ± 0.15 | 0.17 ** ± 0.07 | 0.16 ± 0.07 | 0.36 † ± 0.17 | 0.43 ± 0.13 | 0.40 ± 0.15 | 0.49 ± 0.19 |
| Optic chiasm | 0.53 ** ± 0.16 | 0.52 ± 0.17 | 0.35 † ± 0.21 | 0.48 ** ± 0.21 | 0.64 * ± 0.20 | 0.78 ± 0.25 | 0.46 ± 0.24 | 0.31 * ± 0.21 | 0.51 † ± 0.28 |

| Structure (mean ± SD) | HD, DLSu (mm) | HD, DLSm (mm) | HD, DIR (mm) | MSD, DLSu (mm) | MSD, DLSm (mm) | MSD, DIR (mm) |
|---|---|---|---|---|---|---|
| All | 3.04 ** ± 0.36 | 2.79 * ± 0.22 | 4.19 † ± 0.74 | 1.05 ** ± 0.10 | 0.98 * ± 0.07 | 1.61 † ± 0.31 |
| Central organs | | | | | | |
| Brainstem | 2.96 ± 0.34 | 3.13 ± 0.43 | 3.25 ± 0.86 | 1.20 ** ± 0.22 | 1.29 ± 0.28 | 1.26 † ± 0.43 |
| Spinal cord | 2.09 ** ± 0.48 | 2.10 ± 0.50 | 3.97 † ± 2.15 | 0.84 ** ± 0.24 | 0.82 ± 0.21 | 1.56 † ± 0.77 |
| Esophagus | 3.66 ± 2.15 | 3.04 ± 0.88 | 4.20 ± 1.44 | 1.28 ** ± 0.53 | 1.12 ± 0.23 | 1.62 † ± 0.58 |
| Oral cavity | 4.60 ± 1.42 | 4.28 ± 0.94 | 5.75 ± 2.71 | 1.70 ± 0.42 | 1.59 ± 0.30 | 2.15 † ± 0.93 |
| Pharynx | 3.53 ** ± 0.84 | 3.53 ± 0.52 | 5.18 † ± 2.05 | 1.39 ** ± 0.26 | 1.44 ± 0.20 | 2.01 † ± 0.80 |
| Larynx | 4.19 ** ± 1.48 | 4.26 ± 1.34 | 6.54 † ± 2.39 | 1.61 ** ± 0.54 | 1.66 ± 0.52 | 2.54 † ± 1.06 |
| Bony structures | | | | | | |
| Mandible | 1.28 ** ± 0.27 | 1.27 ± 0.37 | 3.55 † ± 2.64 | 0.48 ± 0.12 | 0.47 ± 0.09 | 1.31 ± 0.87 |
| R_cochlea | 2.36 ± 0.60 | 2.26 ± 0.52 | 2.70 ± 0.89 | 0.74 ± 0.22 | 0.70 ± 0.22 | 0.97 ± 0.43 |
| L_cochlea | 2.61 ± 0.53 | 2.40 ± 0.67 | 2.47 ± 0.66 | 0.83 ± 0.19 | 0.73 ± 0.19 | 0.88 ± 0.39 |
| R_TMJ | 3.56 ± 1.27 | 4.13 ± 1.53 | 4.39 ± 1.99 | 1.22 ± 0.44 | 1.36 ± 0.44 | 1.55 ± 0.71 |
| L_TMJ | 3.29 ± 0.86 | 3.36 ± 1.14 | 3.61 ± 1.34 | 1.17 ± 0.31 | 1.14 ± 0.30 | 1.29 ± 0.54 |
| Glandular structures | | | | | | |
| R_parotidG | 3.91 ± 1.09 | 3.16 * ± 0.41 | 5.36 ± 2.27 | 1.41 ** ± 0.33 | 1.18 * ± 0.18 | 2.25 † ± 0.97 |
| L_parotidG | 3.78 ± 0.66 | 3.32 * ± 0.61 | 5.08 ± 2.02 | 1.43 ** ± 0.22 | 1.25 * ± 0.16 | 2.17 † ± 0.83 |
| R_SMG | 4.01 ± 2.18 | 2.45 * ± 0.78 | 5.03 ± 1.80 | 1.30 ** ± 0.64 | 0.84 * ± 0.22 | 2.09 † ± 0.73 |
| L_SMG | 3.60 ** ± 1.15 | 2.72 * ± 0.82 | 4.99 † ± 1.75 | 1.20 ** ± 0.38 | 0.96 * ± 0.29 | 2.08 † ± 0.83 |
| Thyroid | 2.56 ** ± 2.57 | 2.28 ± 0.89 | 4.83 † ± 1.90 | 0.84 ** ± 0.58 | 0.76 ± 0.17 | 1.88 † ± 0.79 |
| Optic apparatus | | | | | | |
| R_eye | 2.05 ** ± 0.40 | 1.94 ± 0.38 | 3.11 † ± 0.73 | 0.72 ** ± 0.14 | 0.68 ± 0.14 | 1.25 † ± 0.45 |
| L_eye | 2.12 ** ± 0.42 | 2.13 ± 0.53 | 3.53 † ± 1.17 | 0.75 ± 0.13 | 0.78 ± 0.19 | 1.36 † ± 0.58 |
| R_lens | 1.90 ** ± 0.90 | 1.71 ± 0.84 | 3.41 † ± 1.47 | 0.59 ** ± 0.22 | 0.56 ± 0.23 | 1.40 † ± 0.75 |
| L_lens | 1.85 ** ± 0.93 | 1.94 ± 0.99 | 4.15 † ± 2.01 | 0.63 ** ± 0.32 | 0.59 ± 0.22 | 1.75 † ± 1.05 |
| R_optic nerve | 2.74 ± 1.30 | 2.57 ± 0.86 | 3.43 ± 1.02 | 0.74 ** ± 0.25 | 0.74 ± 0.17 | 1.07 † ± 0.37 |
| L_optic nerve | 3.58 ± 3.09 | 2.44 ± 0.74 | 3.57 ± 1.09 | 0.91 ** ± 0.50 | 0.71 ± 0.20 | 1.11 † ± 0.39 |
| Optic chiasm | 3.64 ± 0.95 | 3.67 ± 0.93 | 4.25 ± 1.46 | 1.18 ** ± 0.38 | 1.24 ± 0.40 | 1.57 † ± 0.50 |

| Study | Brain Stem | Spinal Cord | Esophagus | Pharynx | Larynx | Mandible | Cochlea |

|---|---|---|---|---|---|---|---|

| Current, DLSu | 0.87 | 0.82 | 0.80 | 0.82 | 0.85 | 0.95 | 0.75 |

| Current, DLSm | 0.87 | 0.82 | 0.82 | 0.82 | 0.85 | 0.95 | 0.76 |

| Fritscher et al. [37] | |||||||

| Ibragimov et al. [38] | 0.87 | ||||||

| Mocnik et al. [39] | |||||||

| Ren X et al. [40] | |||||||

| Zhu et al. [41] | 0.87 | 0.93 | |||||

| Nikolov et al. [36] | 0.84 | 0.88 | 0.94 | 0.70 | |||

| Tong et al. [42] | 0.87 | 0.94 | |||||

| van Rooij et al. [43] | 0.64 | 0.60 | 0.71 | 0.78 | |||

| Rhee et al. [44] | 0.86 | 0.83 | 0.81 | 0.87 | 0.66 | ||

| Liang et al. [34] | 0.90 | 0.88 | 0.87 | 0.91 | 0.82 | ||

| van Dijk et al. [13] | 0.84 | 0.87 | 0.55 | 0.68 | 0.71 | 0.94 | |

| Wong et al. [45] | 0.80–0.83 | 0.79 | |||||

| Zhensong et al. [46] | 0.90 | 0.94 | |||||

| Oktay et al. [47] | 0.79–0.90 | 0.82–0.93 | 0.94–0.99 | ||||

| Study | ParotidG | SMG | Thyroid | Eye | Lens | Optic nerve | Optic chiasm |
|---|---|---|---|---|---|---|---|

| Current, DLSu | 0.85 | 0.82 | 0.88 | 0.91 | 0.77 | 0.71 | 0.53 |

| Current, DLSm | 0.87 | 0.87 | 0.88 | 0.91 | 0.79 | 0.71 | 0.52 |

| Fritscher et al. [37] | 0.81 | 0.65 | 0.51 | ||||

| Ibragimov et al. [38] | 0.78 | 0.71 | 0.88 | 0.64 | 0.37 | ||

| Mocnik et al. [39] | 0.79 | ||||||

| Ren X et al. [40] | 0.71 | 0.58 | |||||

| Zhu et al. [41] | 0.87 | 0.81 | 0.71 | 0.53 | |||

| Nikolov et al. [36] | 0.86 | 0.77 | 0.95 | 0.80 | 0.70 | ||

| Tong et al. [42] | 0.83 | 0.78 | 0.67 | 0.58 | |||

| van Rooij et al. [43] | 0.83 | 0.82 | |||||

| Rhee et al. [44] | 0.83 | 0.89 | 0.72 | 0.69 | 0.41 | ||

| Liang et al. [34] | 0.85 | 0.84 | 0.69 | ||||

| van Dijk et al. [13] | 0.84 | 0.78 | 0.83 | ||||

| Wong et al. [45] | 0.80 | 0.81–0.82 | 0.85–0.88 | 0.43–0.47 | 0.32–0.38 | ||

| Zhensong et al. [46] | 0.83 | ||||||

| Oktay et al. [47] | 0.83–0.93 | 0.75–0.92 | 0.92–0.97 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, N.; Chun, J.; Chang, J.S.; Lee, C.G.; Keum, K.C.; Kim, J.S. Feasibility of Continual Deep Learning-Based Segmentation for Personalized Adaptive Radiation Therapy in Head and Neck Area. Cancers 2021, 13, 702. https://doi.org/10.3390/cancers13040702
