Artificial Intelligence-Based Segmentation of Residual Tumor in Histopathology of Pancreatic Cancer after Neoadjuvant Treatment

Simple Summary

Abstract

1. Background

2. Materials and Methods

2.1. Data Acquisition

2.2. Data Handling

2.3. Machine Learning

3. Results

3.1. Dataset

3.2. AI-Based Histopathological Classification of Pancreatic Tissue

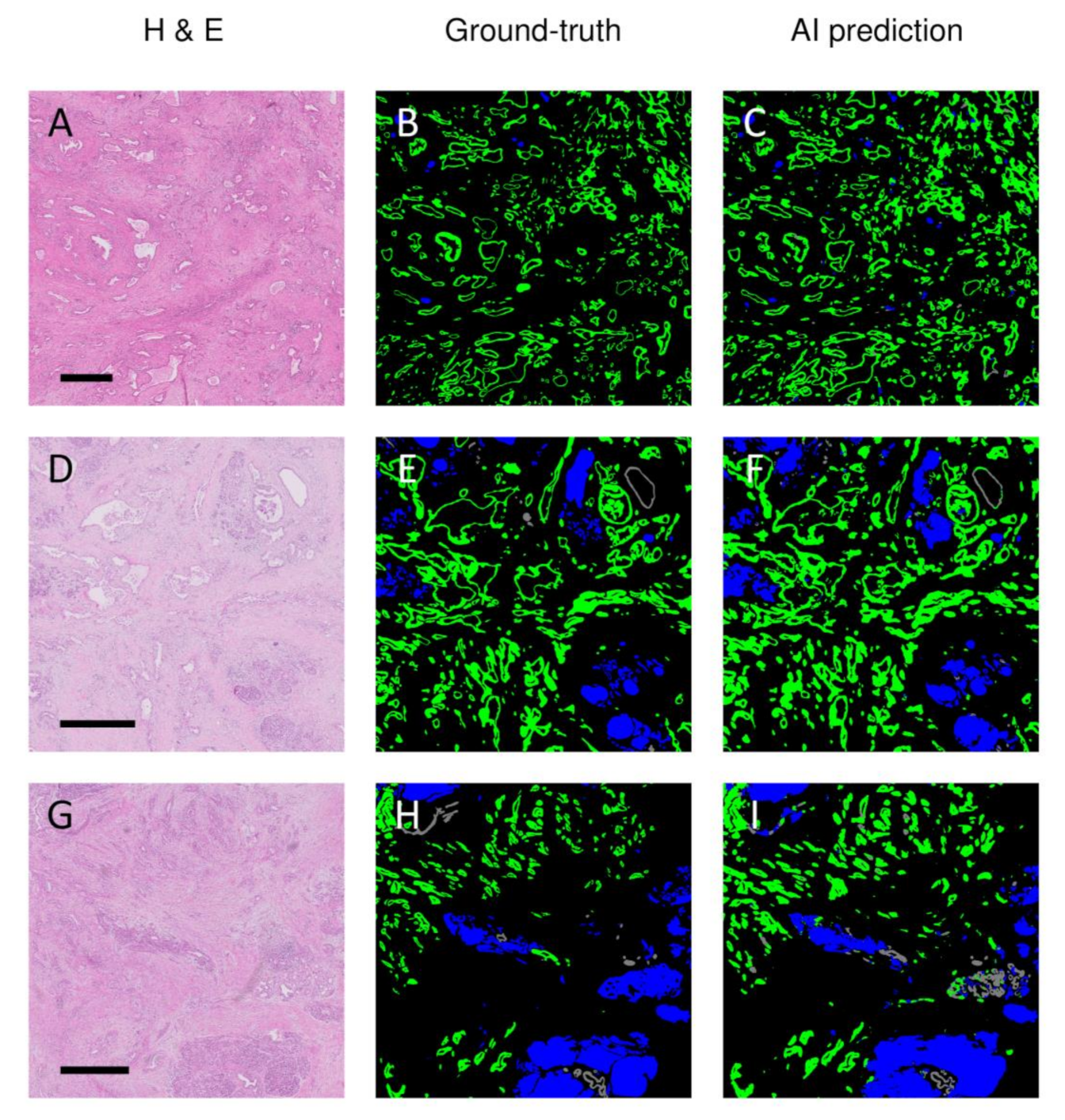

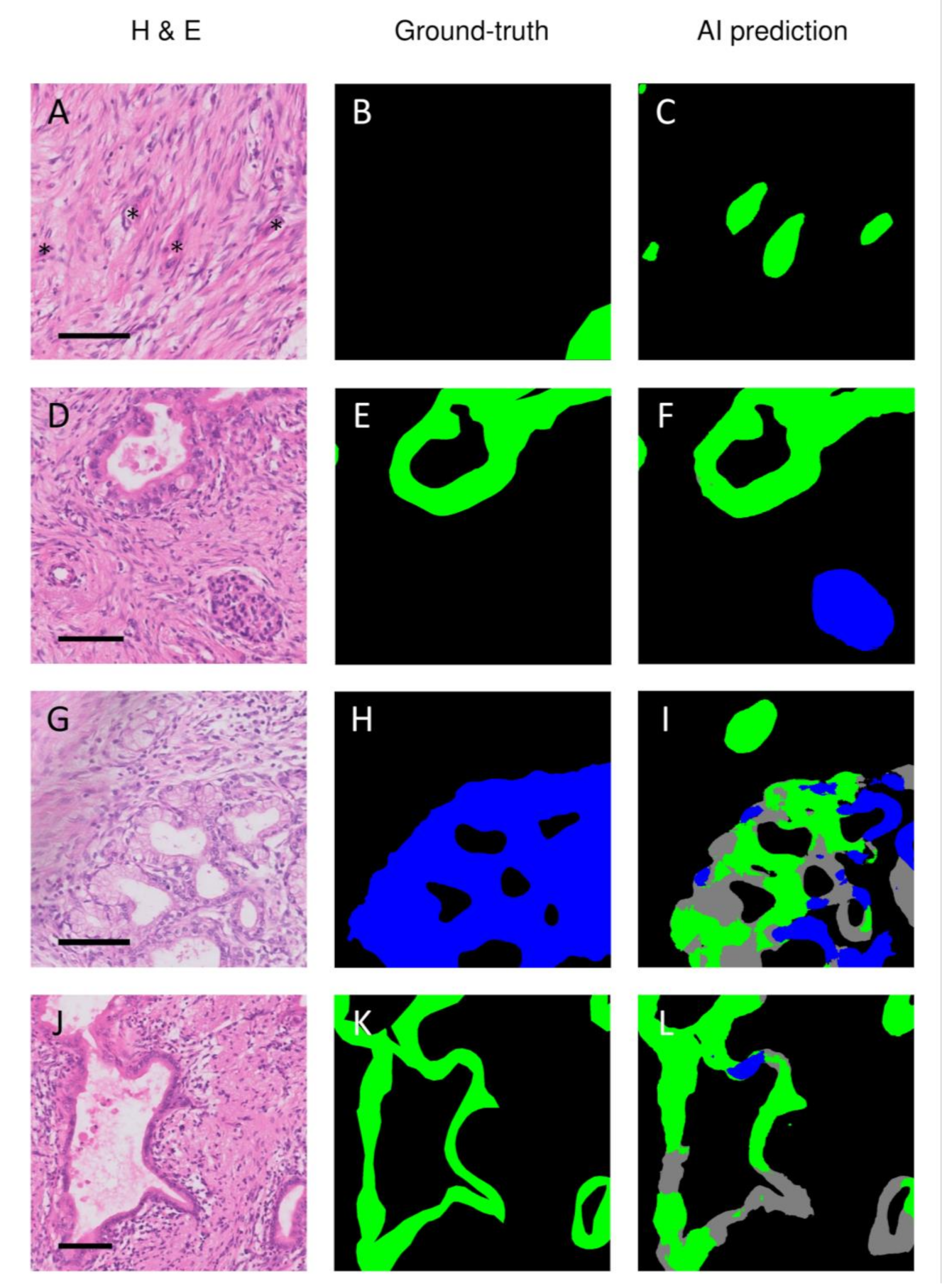

3.3. Discrepancies between Ground-Truth and AI-Based Predictions

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Characteristic | Number (n) | Percentage (%) |
|---|---|---|
| Tumor Location | | |
| Head | 54 | 84.4 |
| Body | 6 | 9.4 |
| Tail | 4 | 6.3 |
| Neoadjuvant Therapy | | |
| FOLFIRINOX × 8 | 20 | 31.3 |
| FOLFIRINOX × 4 | 20 | 31.3 |
| Gemcitabine × 3 + RTx × 1 | 19 | 29.7 |
| Gem-nab-paclitaxel | 2 | 3.1 |
| FOLFIRINOX × 6 | 1 | 1.6 |
| FOLFIRINOX × 2 | 1 | 1.6 |
| FOLFIRINOX × 1 | 1 | 1.6 |
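
The percentages in the characteristics table follow directly from the counts, with each group summing to 64 patients. The sketch below recomputes them as a consistency check. The function name `percentages` and the dictionary names are illustrative, not taken from the article, and half-up rounding is an assumption chosen because it reproduces the tabulated values (Python's built-in `round` rounds 6.25 to 6.2, whereas the table reports 6.3).

```python
from decimal import Decimal, ROUND_HALF_UP

# Counts copied from the characteristics table above.
TUMOR_LOCATION = {"Head": 54, "Body": 6, "Tail": 4}
NEOADJUVANT_THERAPY = {
    "FOLFIRINOX × 8": 20,
    "FOLFIRINOX × 4": 20,
    "Gemcitabine × 3 + RTx × 1": 19,
    "Gem-nab-paclitaxel": 2,
    "FOLFIRINOX × 6": 1,
    "FOLFIRINOX × 2": 1,
    "FOLFIRINOX × 1": 1,
}


def percentages(counts):
    """Return each count as a percentage of the group total.

    Values are rounded half-up to one decimal place (an assumption that
    matches the table; the article does not state its rounding rule).
    """
    total = Decimal(sum(counts.values()))
    one_decimal = Decimal("0.1")
    return {
        label: float(
            (Decimal(n * 100) / total).quantize(one_decimal, rounding=ROUND_HALF_UP)
        )
        for label, n in counts.items()
    }


if __name__ == "__main__":
    for group in (TUMOR_LOCATION, NEOADJUVANT_THERAPY):
        for label, pct in percentages(group).items():
            print(f"{label}: {pct}%")
```

Exact decimal arithmetic is used here rather than floats so that values sitting exactly on a rounding boundary, such as 4/64 = 6.25%, are rounded predictably.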

| Encoder | Tumor (F1, 95% CI) | Normal Ducts (F1, 95% CI) | NTET (F1, 95% CI) | Mean (F1) |
|---|---|---|---|---|
| DenseNet161 | 0.86 ± 0.09 | 0.74 ± 0.12 | 0.85 ± 0.07 | 0.82 |
| DenseNet201 | 0.85 ± 0.09 | 0.77 ± 0.13 | 0.85 ± 0.08 | 0.82 |
| EfficientNet-b1 | 0.78 ± 0.15 | 0 | 0.77 ± 0.13 | 0.51 |
| EfficientNet-b4 | 0.77 ± 0.14 | 0 | 0.61 ± 0.73 | 0.46 |
| EfficientNet-b7 | 0.81 ± 0.12 | 0 | 0.82 ± 0.12 | 0.54 |
| ResNet152 | 0.88 ± 0.06 | 0.77 ± 0.14 | 0.73 ± 0.15 | 0.79 |
| None (‘standard’ U-Net) | 0.83 ± 0.10 | 0.69 ± 0.23 | 0.83 ± 0.15 | 0.78 |
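
The Mean (F1) column in the encoder table is consistent with an unweighted (macro) average of the three per-class scores. The sketch below shows that averaging, together with the standard F1 definition from true-positive, false-positive, and false-negative counts. It is an illustration only: the function names are hypothetical, and treating the counts as pixel-wise is an assumption, since the evaluation code is not reproduced here.

```python
def f1_from_counts(tp, fp, fn):
    """Standard F1 score: 2*TP / (2*TP + FP + FN).

    Returns 0.0 when the class is neither predicted nor present, a
    common convention (assumed here, not stated in the article).
    """
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


def macro_f1(per_class_scores):
    """Unweighted mean of per-class F1 scores."""
    return sum(per_class_scores) / len(per_class_scores)


# Per-class F1 (tumor, normal ducts, NTET) copied from the table above.
PER_CLASS_F1 = {
    "DenseNet161": (0.86, 0.74, 0.85),
    "DenseNet201": (0.85, 0.77, 0.85),
    "EfficientNet-b4": (0.77, 0.0, 0.61),
    "EfficientNet-b7": (0.81, 0.0, 0.82),
    "ResNet152": (0.88, 0.77, 0.73),
    "None (standard U-Net)": (0.83, 0.69, 0.83),
}

if __name__ == "__main__":
    for encoder, scores in PER_CLASS_F1.items():
        print(f"{encoder}: mean F1 = {macro_f1(scores):.2f}")
```

Because the macro average weights every class equally, an F1 of 0 on normal ducts pulls the EfficientNet means well below those of the other encoders even though their tumor scores are comparable. Means recomputed from the rounded table entries may differ from the published column in the last digit.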

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Janssen, B.V.; Theijse, R.; van Roessel, S.; de Ruiter, R.; Berkel, A.; Huiskens, J.; Busch, O.R.; Wilmink, J.W.; Kazemier, G.; Valkema, P.; Farina, A.; Verheij, J.; de Boer, O.J.; Besselink, M.G. Artificial Intelligence-Based Segmentation of Residual Tumor in Histopathology of Pancreatic Cancer after Neoadjuvant Treatment. Cancers 2021, 13, 5089. https://doi.org/10.3390/cancers13205089