Multi-Level Scale Attention Fusion Network for Adhesive Spots Segmentation in Microlens Packaging

Abstract

1. Introduction

- For the first time, we construct LLAS, a high-quality adhesive spots dataset with pixel-level annotations for high-performance microlens packaging, intended to support quality inspection of micro-optical components.

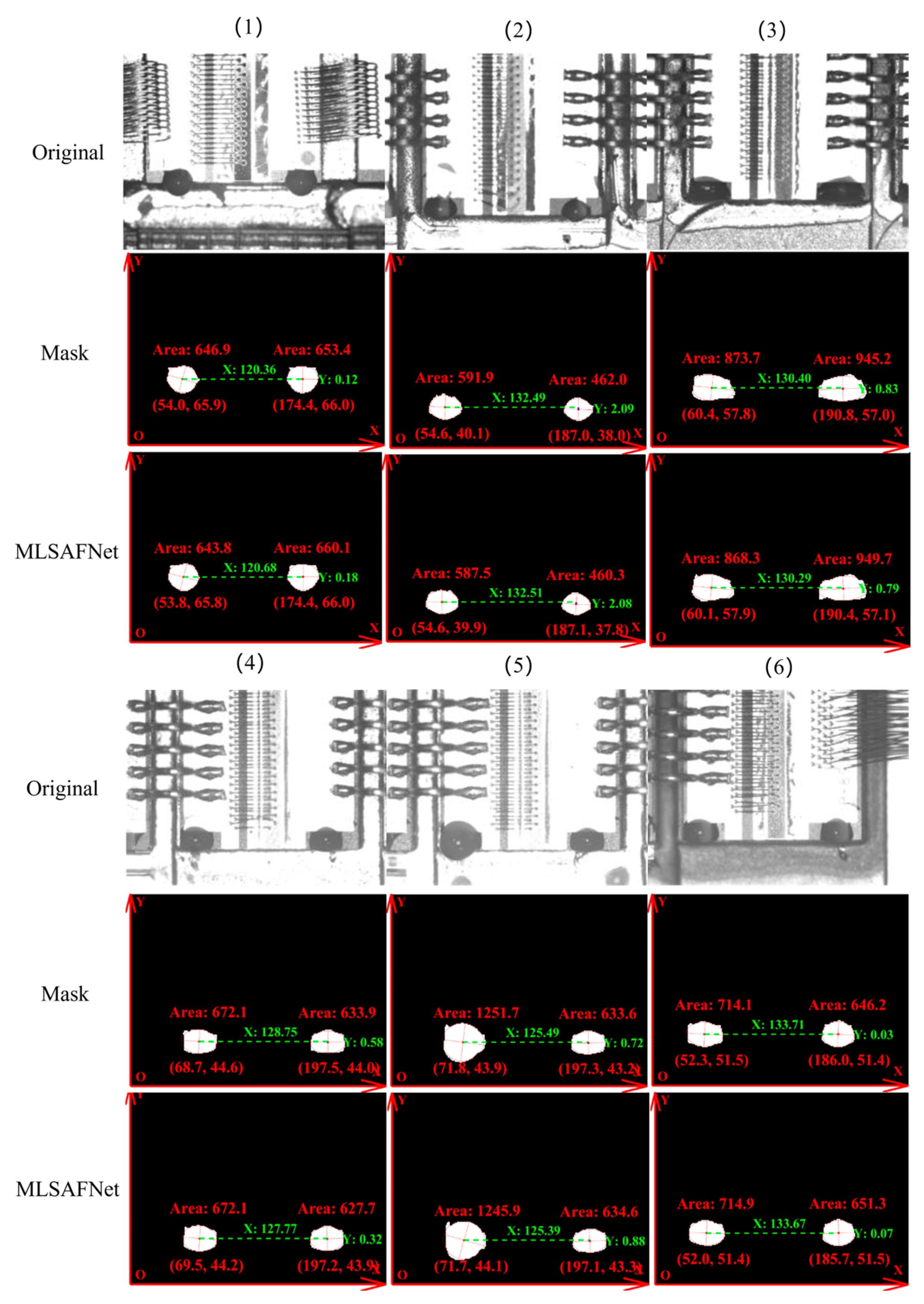

- To address the random shapes, multi-scale areas, and complex backgrounds of adhesive spots, we propose MLSAFNet, which improves feature fusion capability and detection robustness. The embedded Multi-Level Attention Module (MLAM) and Multi-Scale Channel-Guided Module (MSCGM) strengthen information interaction and feature enhancement across levels and scales, thereby improving the detection rate of adhesive spots.

- Compared with current state-of-the-art target detection algorithms (UIU, LSPM, DNA, MTU, and MRF3Net), MLSAFNet achieves the best results, with 91.15% mIoU, 95.31% Dice, and 89.15% F1 at 3.55 s per 100 images, and delivers high-precision segmentation of on-site adhesive spot images collected under complex conditions.

2. Methodology

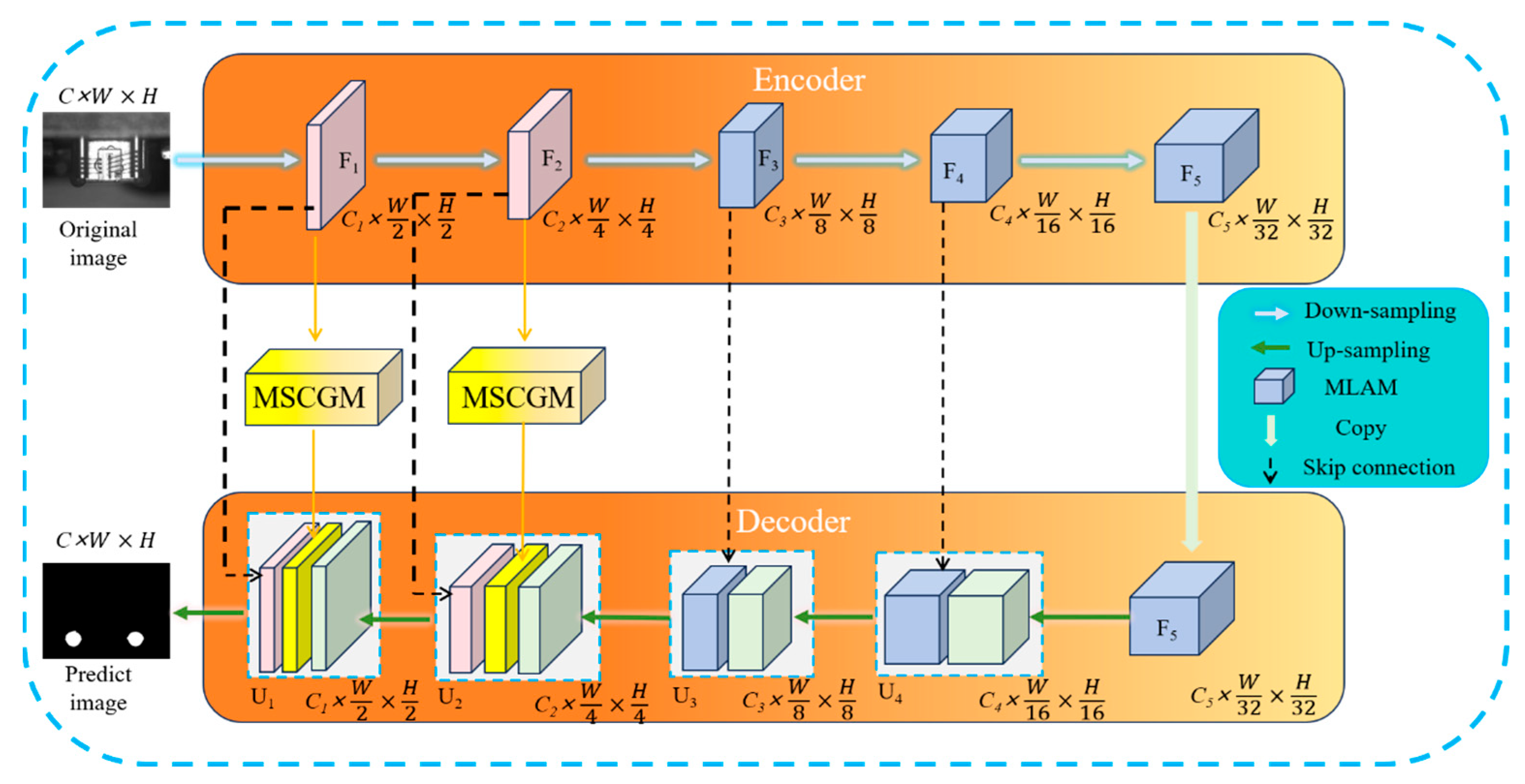

2.1. Overall Model Structure

2.2. Multi-Scale Channel-Guided Module (MSCGM)

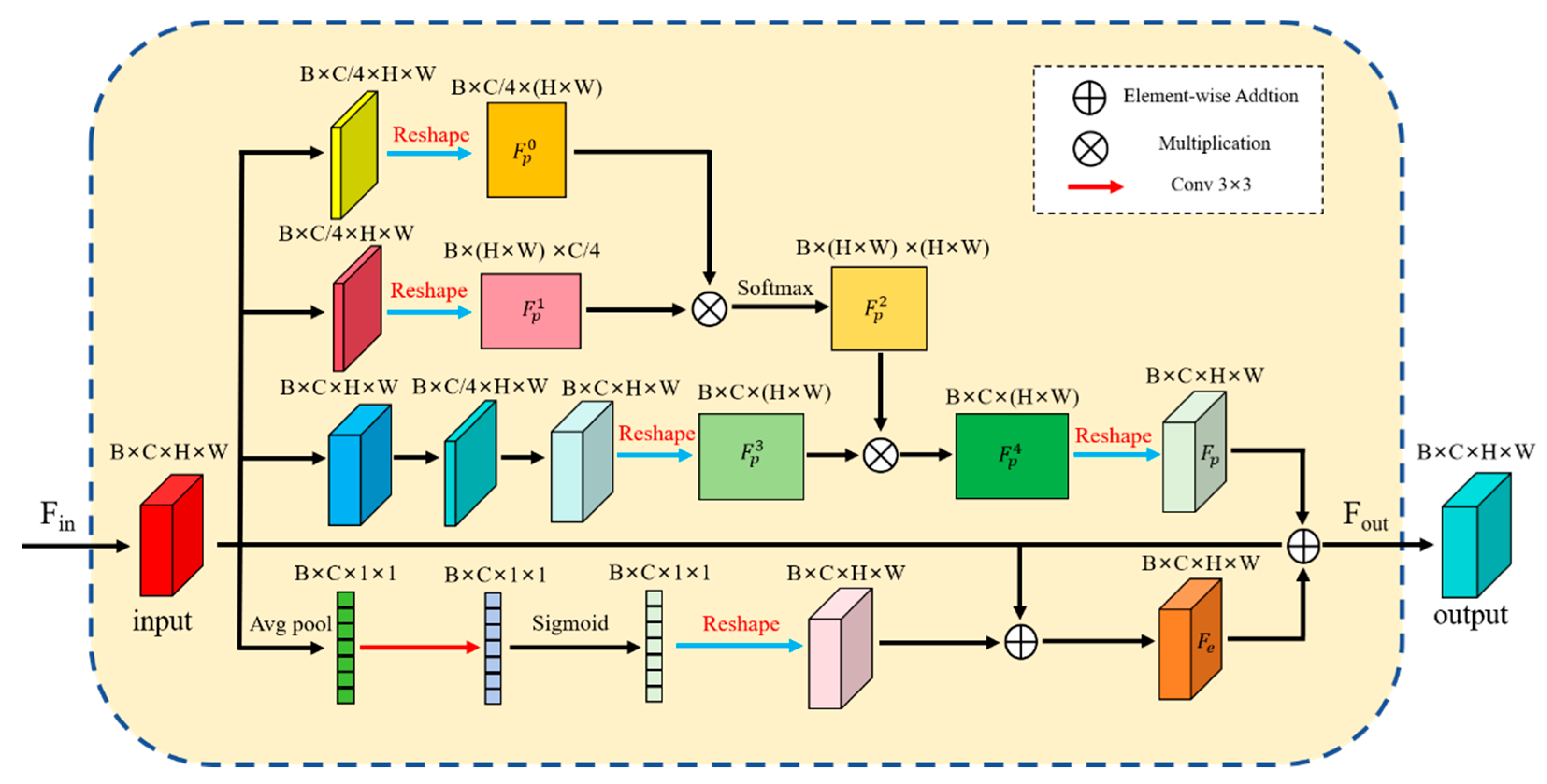

2.3. Multi-Level Attention Module (MLAM)

- (1) The input feature map is fed to both the upper and the lower sub-module, each of which processes it independently; the computations are given in Equations (9)–(12).

- (2) The outputs of the two sub-modules are fused by element-wise matrix addition, as shown in Equation (13).
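The two-step data flow above can be illustrated with a minimal pure-Python sketch. Because Equations (9)–(12) are not reproduced in this section, both branch bodies are hypothetical stand-ins: `upper_branch` applies a CBAM-style channel gate and `lower_branch` a spatial gate, each built from average pooling and a sigmoid, with no learnable weights. Only the overall pattern follows the text: one input feeds two sub-modules, and their same-shaped outputs are summed element-wise as in Equation (13).

```python
import math
from typing import List

# A feature map indexed as [channel][row][col].
FeatureMap = List[List[List[float]]]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def upper_branch(x: FeatureMap) -> FeatureMap:
    """Hypothetical channel-attention sub-module (stand-in for Eqs. (9)-(12)):
    global average pooling per channel -> sigmoid gate -> rescale that channel."""
    out: FeatureMap = []
    for channel in x:
        n = sum(len(row) for row in channel)
        gate = sigmoid(sum(sum(row) for row in channel) / n)
        out.append([[v * gate for v in row] for row in channel])
    return out


def lower_branch(x: FeatureMap) -> FeatureMap:
    """Hypothetical spatial-attention sub-module: average over channels at each
    pixel -> sigmoid gate -> rescale every channel at that pixel."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    gate = [[sigmoid(sum(x[k][i][j] for k in range(c)) / c) for j in range(w)]
            for i in range(h)]
    return [[[x[k][i][j] * gate[i][j] for j in range(w)] for i in range(h)]
            for k in range(c)]


def mlam_fuse(x: FeatureMap) -> FeatureMap:
    """Step (1): send the same input to both sub-modules.
    Step (2): add their outputs element-wise (the matrix addition of Eq. (13))."""
    up, low = upper_branch(x), lower_branch(x)
    return [[[a + b for a, b in zip(row_u, row_l)]
             for row_u, row_l in zip(ch_u, ch_l)]
            for ch_u, ch_l in zip(up, low)]


if __name__ == "__main__":
    fmap = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]
    fused = mlam_fuse(fmap)
    print(len(fused), len(fused[0]), len(fused[0][0]))  # shape is preserved
```

Element-wise addition requires both branches to return maps of exactly the input shape, which is why each stand-in branch only rescales values rather than pooling the output; a trained module would add learnable convolutions inside each branch.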

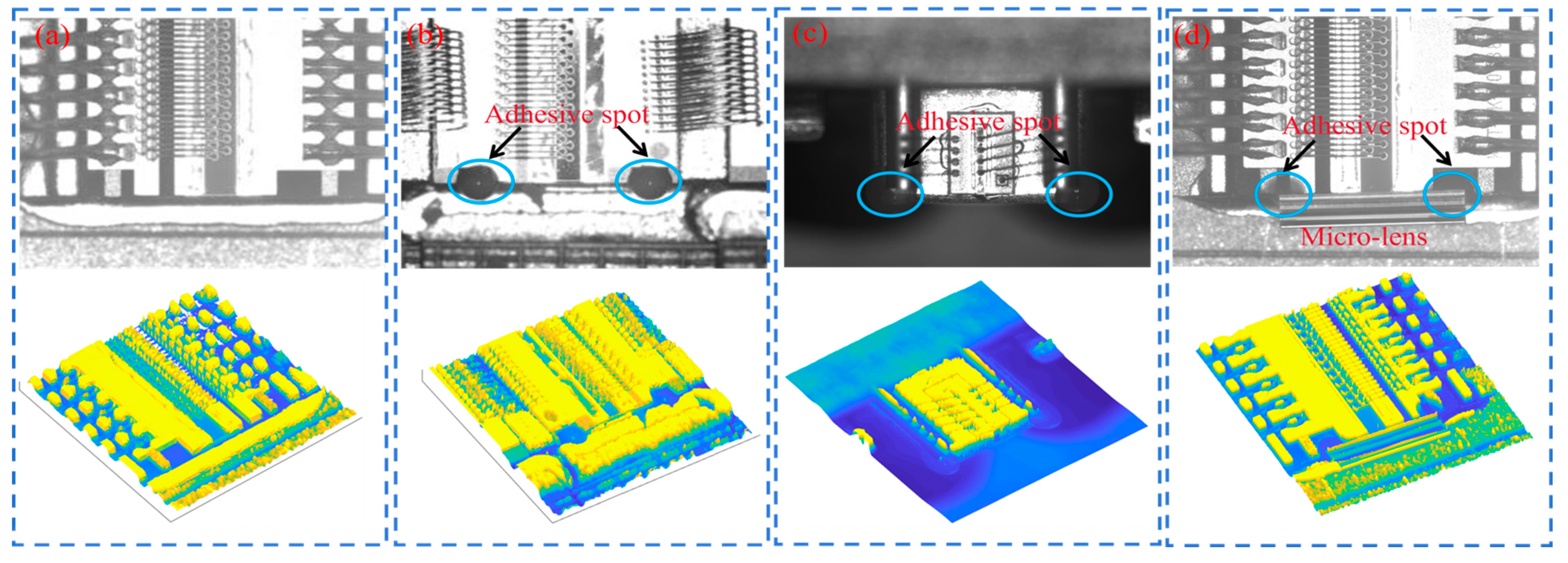

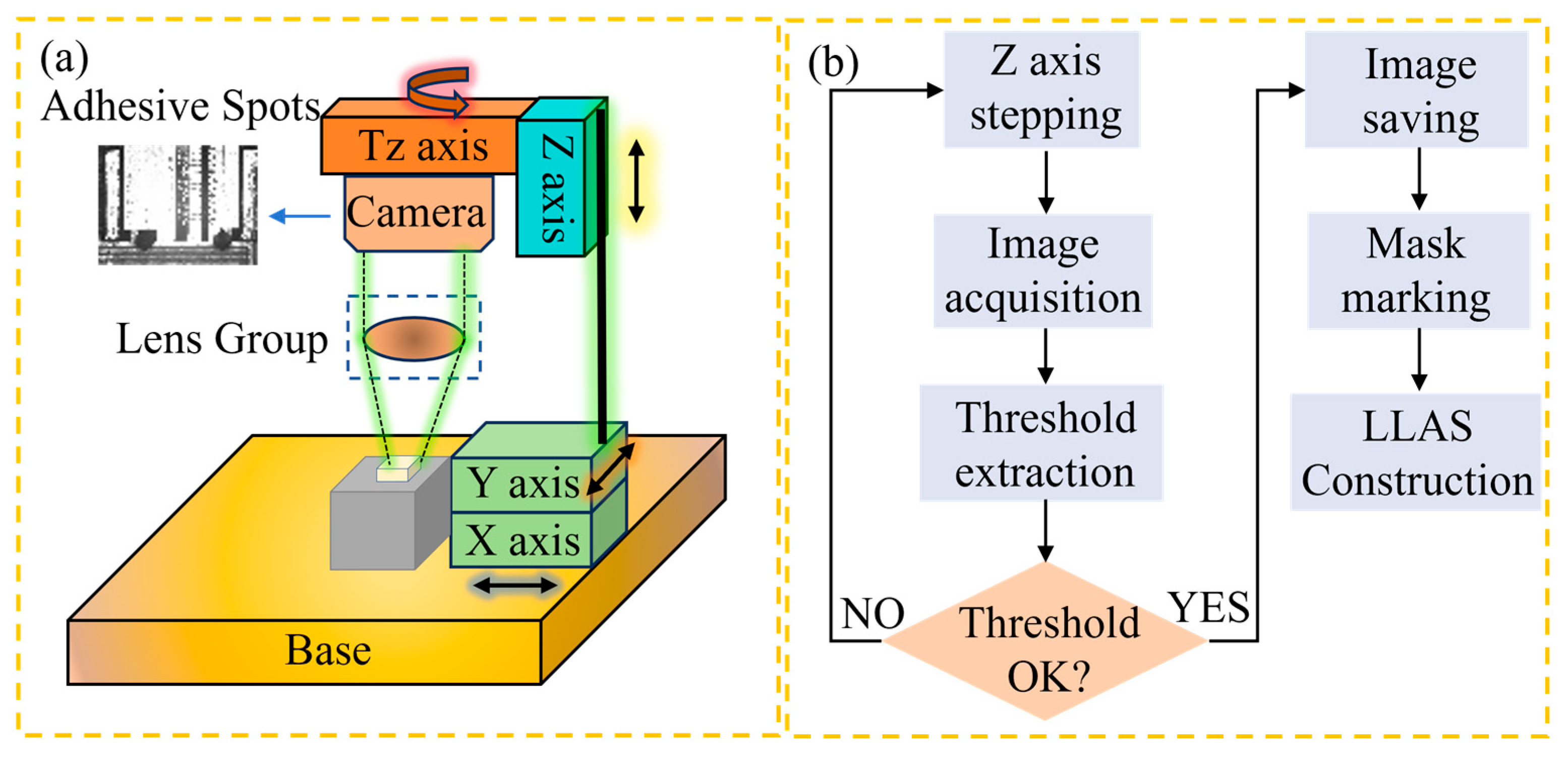

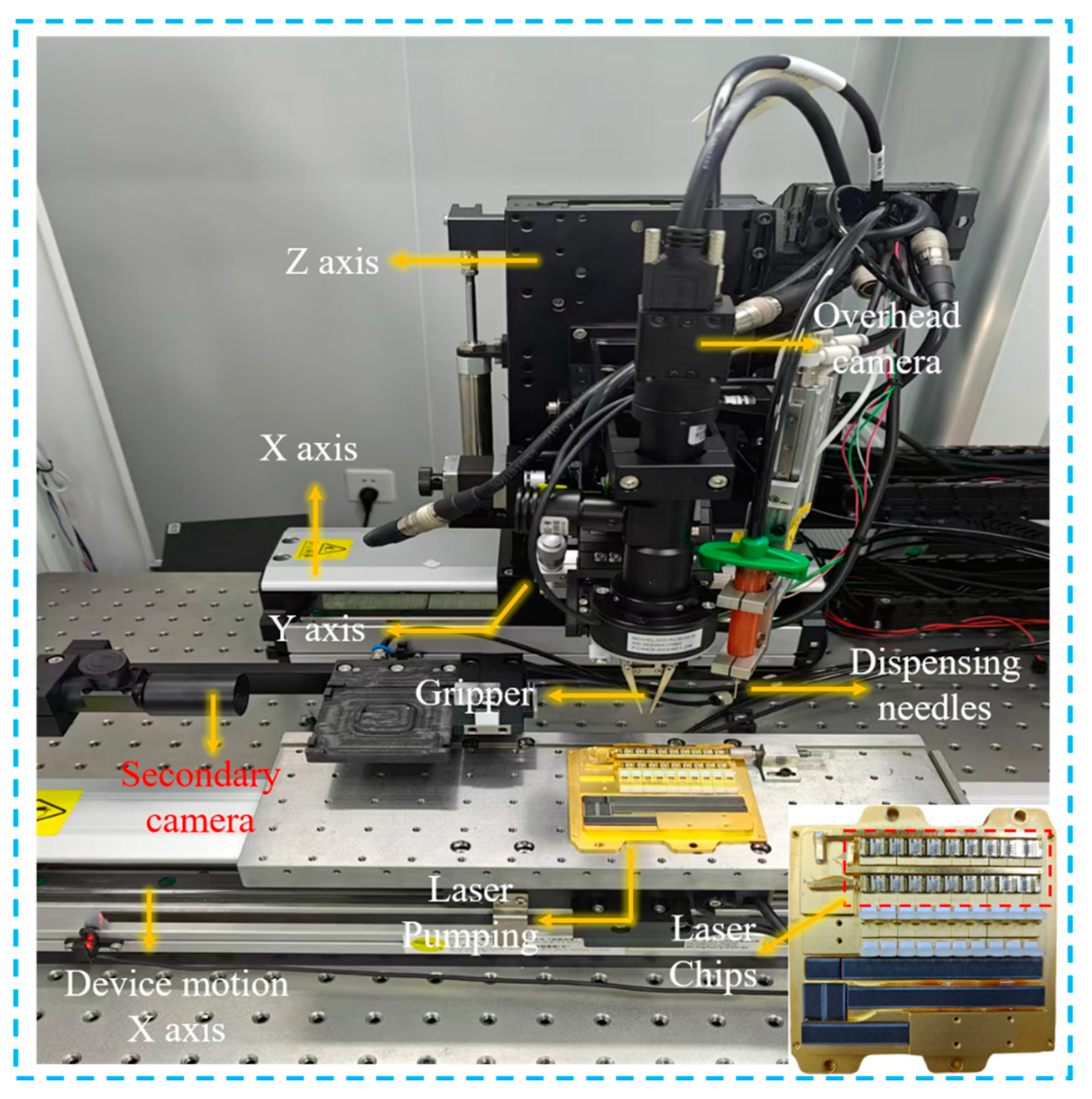

2.4. Construction of the Laser Lens Adhesive Spots (LLAS) Dataset

3. Experiments and Results

3.1. Experimental Conditions

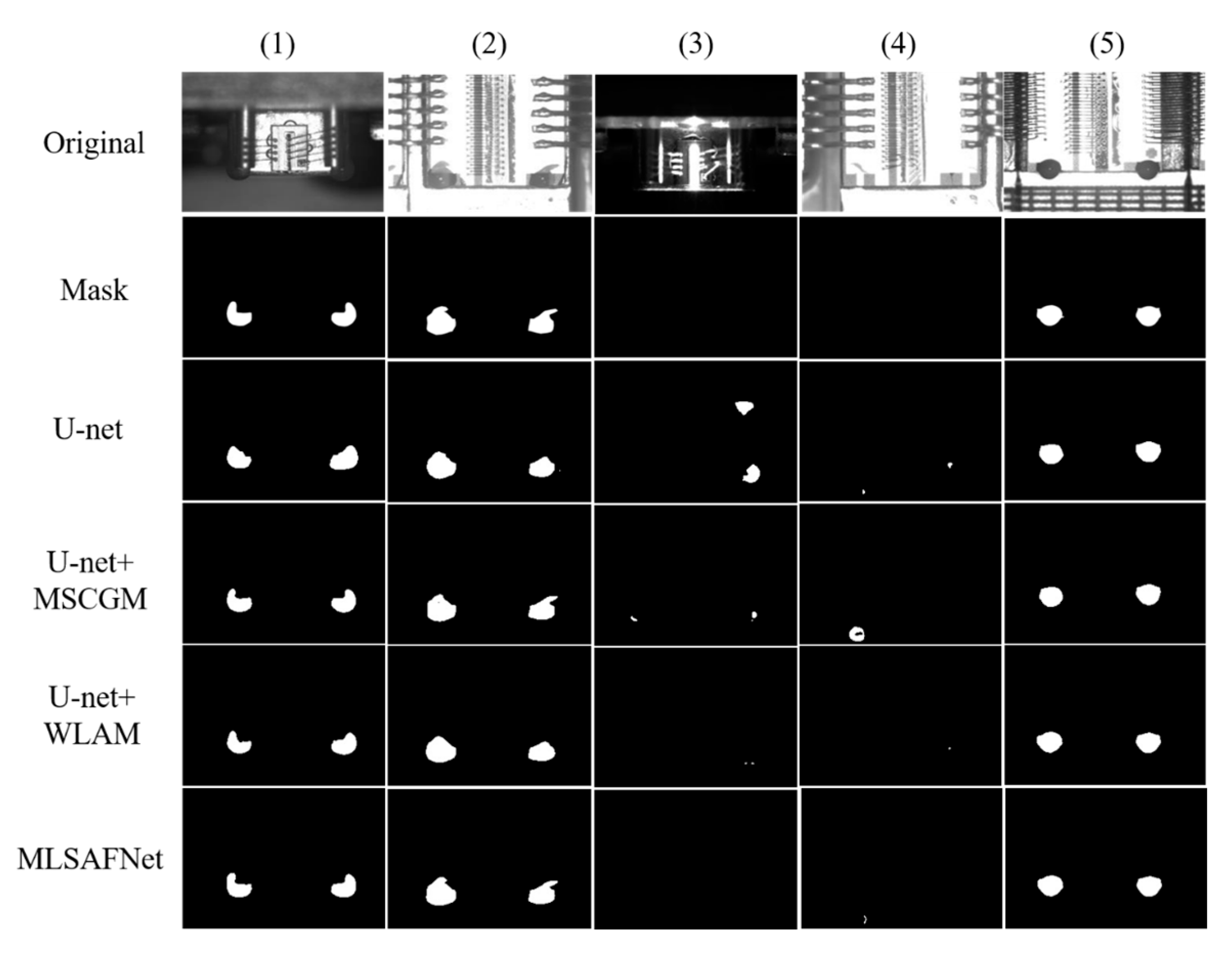

3.2. Ablation Experiments

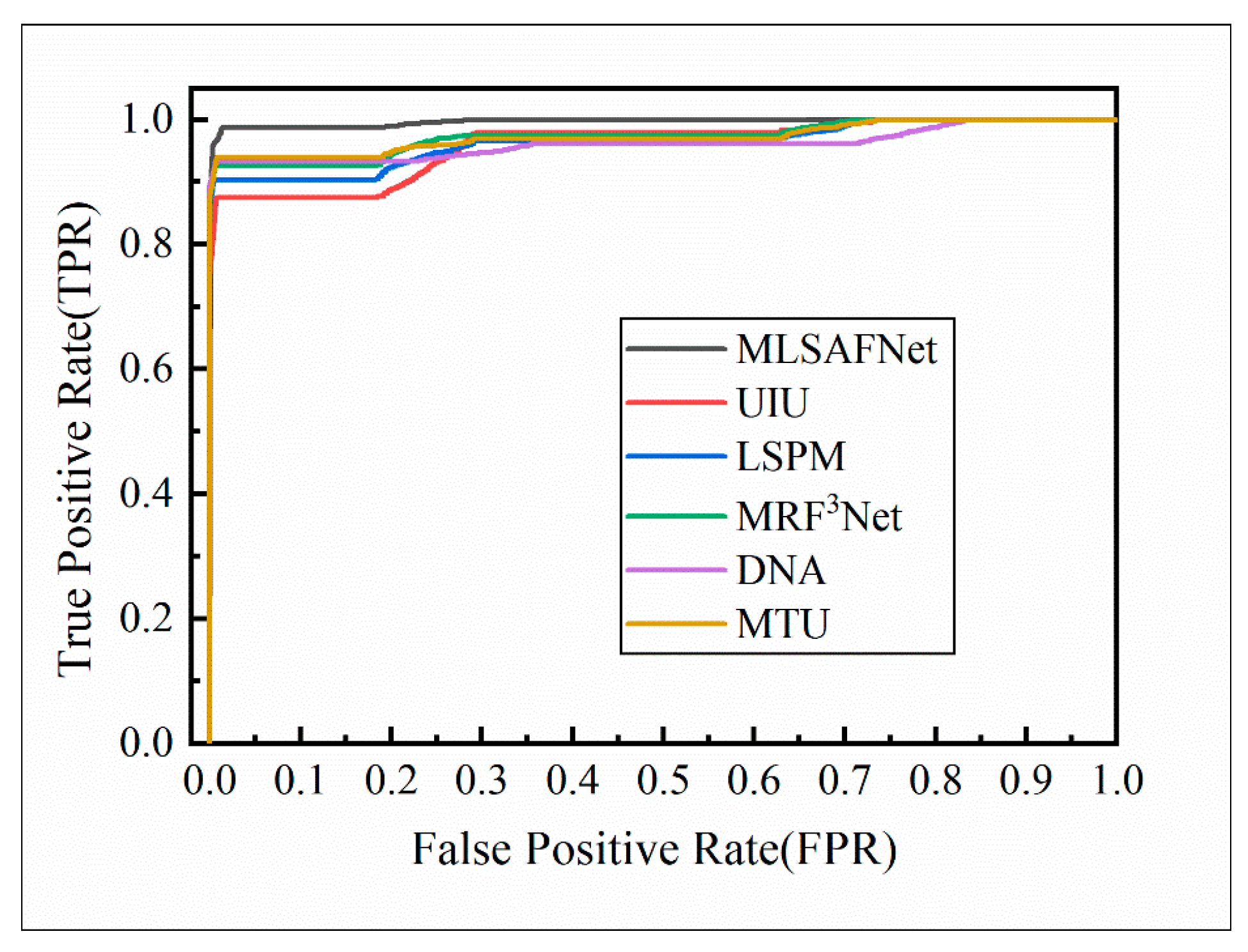

3.3. Comparison Experiments

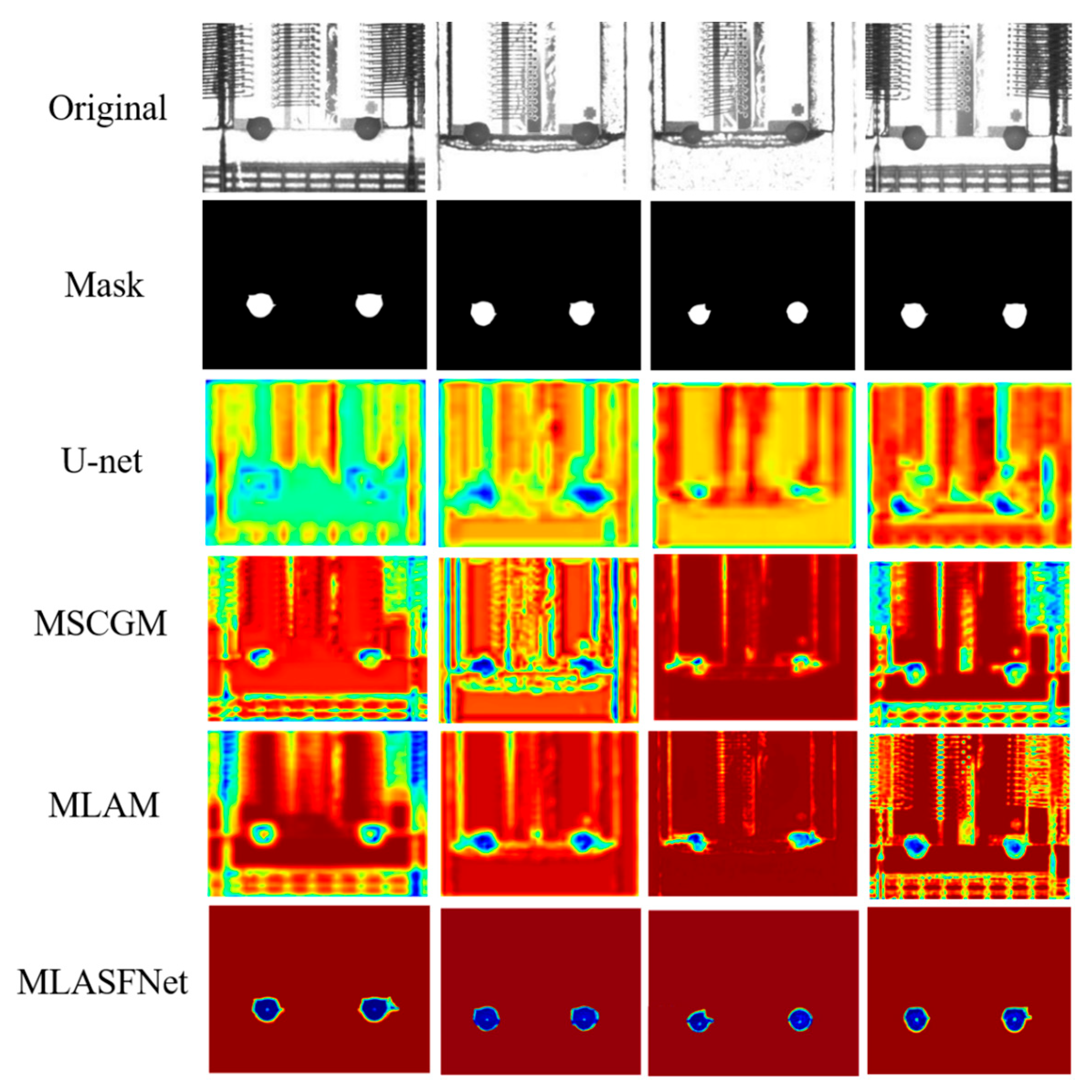

3.4. Adhesive Spots Feature Extraction Experiment

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Industrial camera | a2A2590-60umBAS | | |
|---|---|---|---|
| Resolution | 2592 × 1944 | Frame rate | 60 fps |
| Pixel size | 2 μm × 2 μm | Signal-to-noise ratio | 38.7 dB |
| Telecentric lens | MVL-MY-2-110C-MP | | |
| Working distance | 110 mm | Magnification | 2.0× |
| Image size | Φ11 mm | Telecentricity | 0.1° |
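The camera and lens parameters allow a quick estimate of the imaging geometry. The sketch below ignores lens distortion and assumes that "Image size Φ11 mm" denotes the lens's maximum image circle; the helper names are illustrative. With a 2 μm pixel pitch and 2.0× magnification, each pixel covers about 1 μm on the sample, the 2592 × 1944 sensor images roughly 2.592 mm × 1.944 mm, and the 6.48 mm sensor diagonal lies within the 11 mm image circle.

```python
import math

# Values taken from the camera and lens table.
RES_X, RES_Y = 2592, 1944   # sensor resolution (pixels)
PIXEL_UM = 2.0              # sensor pixel pitch (um)
MAGNIFICATION = 2.0         # telecentric lens magnification
IMAGE_CIRCLE_MM = 11.0      # assumed meaning of "Image size Φ11 mm"


def object_pixel_um(pixel_um: float, mag: float) -> float:
    """Object-space size covered by one pixel: sensor pitch divided by magnification."""
    return pixel_um / mag


def sensor_size_mm(res: int, pixel_um: float) -> float:
    """Physical sensor extent along one axis."""
    return res * pixel_um / 1000.0


def field_of_view_mm(res: int, pixel_um: float, mag: float) -> float:
    """Object-space field of view along one axis (ignores lens distortion)."""
    return sensor_size_mm(res, pixel_um) / mag


sensor_diag_mm = math.hypot(sensor_size_mm(RES_X, PIXEL_UM),
                            sensor_size_mm(RES_Y, PIXEL_UM))

print(f"object pixel: {object_pixel_um(PIXEL_UM, MAGNIFICATION):.1f} um")
print(f"field of view: {field_of_view_mm(RES_X, PIXEL_UM, MAGNIFICATION):.3f} x "
      f"{field_of_view_mm(RES_Y, PIXEL_UM, MAGNIFICATION):.3f} mm")
print(f"sensor diagonal: {sensor_diag_mm:.2f} mm, "
      f"within image circle: {sensor_diag_mm <= IMAGE_CIRCLE_MM}")
```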

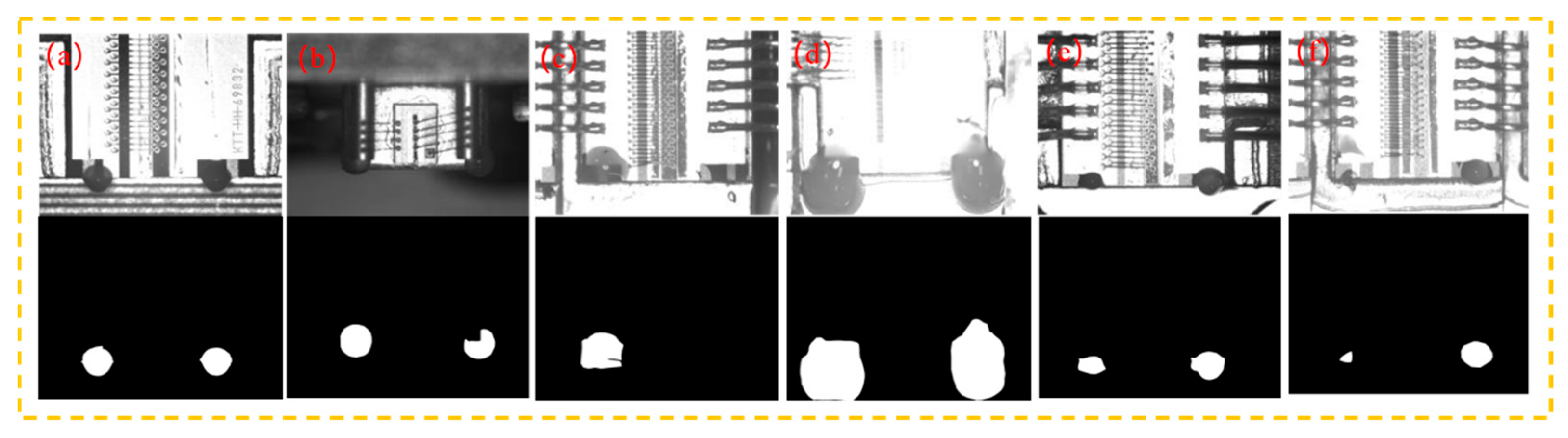

| Seq. | Image Size (Pixels) | Adhesive Spot Area Characterization |
|---|---|---|
| a | 256 × 192 | Strong background; standard adhesive spots |
| b | 256 × 192 | Complex background; weak target |
| c | 256 × 192 | Smooth background; irregular single target |
| d | 256 × 192 | Highlight background; irregular large target |
| e | 256 × 192 | Complex background; irregular tiny target |
| f | 256 × 192 | Smooth background; tiny target |

| U-net | MSCGM | MLAM | mIoU (%) | Dice (%) | F1 (%) |
|---|---|---|---|---|---|
| √ | × | × | 87.24 | 93.10 | 87.31 |
| √ | √ | × | 88.29 | 93.70 | 88.00 |
| √ | × | √ | 89.74 | 94.53 | 88.56 |
| √ | √ | √ | 91.15 | 95.31 | 89.15 |
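The tables report mIoU, Dice, and F1, but this section does not state how they are computed. As a hedged reference, the sketch below uses the common pixel-level definitions for a binary adhesive-spot mask, IoU = TP / (TP + FP + FN) and Dice = 2TP / (2TP + FP + FN), and averages IoU over images to obtain mIoU; the authors may instead average over the foreground and background classes. For a single binary mask, pixel-level F1 reduces to the same formula as Dice, so the separate F1 column presumably relies on an averaging or matching scheme not described here.

```python
from typing import List, Sequence, Tuple

# Binary mask as rows of 0/1 values (1 = adhesive spot, 0 = background).
Mask = Sequence[Sequence[int]]


def confusion_counts(pred: Mask, gt: Mask) -> Tuple[int, int, int]:
    """Return (TP, FP, FN) pixel counts for the foreground class."""
    tp = fp = fn = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                tp += 1
            elif p:
                fp += 1
            elif g:
                fn += 1
    return tp, fp, fn


def iou(pred: Mask, gt: Mask) -> float:
    tp, fp, fn = confusion_counts(pred, gt)
    denom = tp + fp + fn
    return 1.0 if denom == 0 else tp / denom  # both masks empty -> perfect match


def dice(pred: Mask, gt: Mask) -> float:
    tp, fp, fn = confusion_counts(pred, gt)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


def mean_iou(preds: List[Mask], gts: List[Mask]) -> float:
    """Average foreground IoU over a set of images (one possible mIoU convention)."""
    scores = [iou(p, g) for p, g in zip(preds, gts)]
    return sum(scores) / len(scores)


pred = [[1, 1], [0, 0]]
gt = [[1, 0], [0, 0]]
print(iou(pred, gt), dice(pred, gt))  # TP=1, FP=1, FN=0 -> 0.5 and 0.666...
```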

| Method | mIoU (%) | Dice (%) | F1 (%) | Time (s/100 images) |
|---|---|---|---|---|
| UIU | 75.94 | 86.21 | 78.92 | 7.92 |
| LSPM | 77.74 | 87.34 | 83.00 | 64.06 |
| DNA | 84.33 | 91.30 | 85.48 | 11.13 |
| MTU | 90.08 | 94.73 | 88.16 | 3.72 |
| MRF3Net | 85.33 | 84.41 | 83.78 | 9.61 |
| MLSAFNet | 91.15 | 95.31 | 89.15 | 3.55 |
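The time column is easier to relate to the camera's 60 fps acquisition rate once it is converted to per-image latency and throughput. The conversion below uses only the table values and assumes the time is spread uniformly over the 100 images; the absolute numbers depend on the authors' test hardware, which this section does not list. By this conversion, MLSAFNet's 3.55 s per 100 images corresponds to about 35.5 ms per image, or roughly 28 images per second.

```python
# Inference times from the comparison table, in seconds per 100 images.
TIMES_S_PER_100 = {
    "UIU": 7.92,
    "LSPM": 64.06,
    "DNA": 11.13,
    "MTU": 3.72,
    "MRF3Net": 9.61,
    "MLSAFNet": 3.55,
}


def ms_per_image(seconds_per_100: float) -> float:
    """Average latency per image in milliseconds."""
    return seconds_per_100 / 100.0 * 1000.0


def images_per_second(seconds_per_100: float) -> float:
    """Average throughput in images per second."""
    return 100.0 / seconds_per_100


# Print methods from fastest to slowest.
for name, t in sorted(TIMES_S_PER_100.items(), key=lambda kv: kv[1]):
    print(f"{name:9s} {ms_per_image(t):7.1f} ms/image "
          f"{images_per_second(t):6.1f} images/s")
```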

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yan, Y.; Chen, S.; Duan, L.; Luo, D.; Zhang, F.; Zhong, S. Multi-Level Scale Attention Fusion Network for Adhesive Spots Segmentation in Microlens Packaging. Micromachines 2025, 16, 1043. https://doi.org/10.3390/mi16091043
