Abstract

Brain-inspired models in artificial intelligence (AI) originated from foundational insights in neuroscience. In recent years, this relationship has been developing into a mutually reinforcing feedback loop, and AI now contributes substantially to our understanding of the brain. In particular, when combined with wireless optogenetics, AI enables experiments free of the physical constraints imposed by tethered hardware. AI-driven real-time analysis also facilitates closed-loop control, allowing experiments to be configured across a diverse range of scenarios. A deeper understanding of neural circuits gained in this way may, in turn, contribute to future advances in AI. This work examines the synergy between AI and miniaturized neural technology, particularly wireless optogenetic systems designed for closed-loop neural control. We highlight how AI is transforming neuroscience experiments, from decoding complex neural signals and quantifying behavior to enabling closed-loop interventions and high-throughput phenotyping in freely moving subjects. Notably, AI-integrated wireless implants can monitor and modulate biological processes with unprecedented precision. We then describe how insights from AI-integrated neuroscience experiments could loop back to inspire more efficient and robust machine intelligence. Finally, we discuss future directions for this positive feedback loop, arguing that the coevolution of the two fields, grounded in technologies such as wireless optogenetics and guided by reciprocal insight, will accelerate progress in both while raising new challenges and opportunities for interdisciplinary collaboration.

1. Introduction

Modern AI owes many of its foundational ideas to neuroscience. The very notion of an artificial “neural network” arose from abstracting how biological neurons connect and learn. Early pioneers such as McCulloch, Pitts, and Rosenblatt were directly inspired by brain anatomy and function [1,2,3]. For instance, Hebbian learning, the neuroscientific principle often summarized as “cells that fire together wire together,” shaped the first artificial neural networks [3,4]. These early networks of weighted connections were attempts to mimic how a brain might learn to recognize patterns [5,6]. Over subsequent decades, neuroscientific principles continued to guide AI. The brain’s layered visual processing hierarchy inspired the design of convolutional neural networks (CNNs) for image recognition, and its capacity to hold information over time (working memory) motivated recurrent neural networks (RNNs), which feed outputs back as inputs to handle sequences [7,8,9]. Even the trial-and-error learning that animals use to seek rewards laid the groundwork for reinforcement learning algorithms in AI. In short, many milestones in AI, from deep learning architectures to learning rules, trace their lineage to insights about how real neural circuits compute and adapt.
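As a minimal illustration of the Hebbian idea mentioned above, the sketch below applies the textbook rule Δw_i = η·x_i·y: a connection strengthens when presynaptic activity x_i and postsynaptic activity y coincide. The single linear output neuron, the learning rate, and the function name `hebbian_step` are illustrative assumptions, not a reconstruction of any specific historical model.

```python
def hebbian_step(weights, x, learning_rate=0.1):
    """One step of the plain Hebbian rule: dw_i = learning_rate * x_i * y.

    Assumes a single linear output neuron whose activity is y = sum_i w_i * x_i.
    """
    y = sum(w * xi for w, xi in zip(weights, x))  # postsynaptic activity
    return [w + learning_rate * xi * y for w, xi in zip(weights, x)]


# Inputs 0 and 1 are repeatedly co-active; input 2 stays silent.
weights = [0.5, 0.5, 0.5]
pattern = [1.0, 1.0, 0.0]
for _ in range(3):
    weights = hebbian_step(weights, pattern)
print([round(w, 3) for w in weights])
```

Only the weights of co-active inputs grow; the silent input's weight is unchanged. Note that the plain rule lets weights grow without bound, which is why normalized variants (such as Oja's rule) are usually preferred in practice.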

Yet, the influence was not one-way. As AI and computer science advanced, they provided formal models and tools that helped neuroscientists frame hypotheses about the brain [10,11,12,13]. The earliest artificial networks were simplistic compared to biological brains, but they demonstrated that brain-inspired computation could yield intelligent behavior [14]. This symbiosis has only deepened with time: neuroscientists draw on AI models to interpret cognition, while AI researchers turn to neural processing for inspiration on making machines more brain-like [15]. The stage was set for a reciprocal relationship, one that has evolved into a virtuous cycle of innovation in recent years.

2. AI Empowering Neuroscience, Wireless Optogenetics, and Beyond

Today, AI is propelling neuroscience into a new era of data-driven discovery and precise intervention. In particular, the convergence of AI with wireless optogenetics, which frees animals from tethering cables, is enabling experiments that were previously impractical [16,17,18,19,20,21,22,23,24]. By coupling intelligent algorithms with miniaturized, implantable devices, researchers can decode neural activity [25], analyze behavior [26,27], and even trigger stimuli in real time [28], all in freely moving animals. This opens the possibility of truly closed-loop neuroscience experiments, in which experimental conditions adjust autonomously based on feedback [29]. Wireless optogenetic platforms represent a class of miniaturized, implantable neurotechnologies that enable high-precision, untethered stimulation and recording of neural circuits (Figure 1) [20].

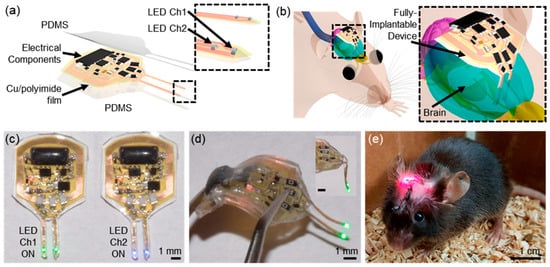

Figure 1.

System overview. (a) Schematic illustration of a soft, fully implantable dual-channel optogenetic device. (b) Illustration of the device location relative to the brain. (c) Demonstration of dual-channel wireless operation. (d) Photograph of the device with its body bent. (e) Image of a mouse with the device implanted [20].

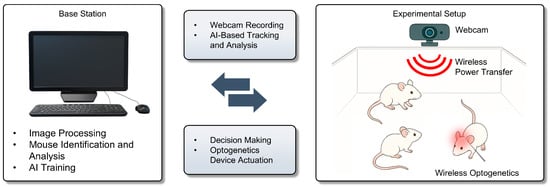

One notable example is the development of AI-assisted wireless implants for photodynamic therapy and neuromodulation. An implantable, multichannel optoelectronic device was recently developed that is easy to implant and delivers controlled light for tumor treatment. Distinctively, this system integrates a deep neural network (the pose-estimation tool DeepLabCut) with a Monte Carlo simulation of light propagation [30,31]. In this experimental context, the primary role of AI is to identify and track freely moving mice in real time. Although wireless optogenetic devices have removed physical constraints on animal behavior, they have historically faced a key technical limitation in multi-animal experiments: the difficulty of accurately tracking and monitoring multiple subjects simultaneously. Integrating AI-based tracking, particularly deep learning-driven pose estimation, has addressed this challenge, for the first time enabling scalable, closed-loop optogenetic experiments in freely behaving animals (Figure 2). With this approach, researchers controlled implants in multiple mice simultaneously, demonstrating that AI can coordinate complex experiments at scale [32,33]. These results show how AI models paired with low-power wireless implants can enable scalable, autonomous neural interfacing under real-world constraints. Although developed for oncology, the underlying approach, AI-guided wireless optical modulation, is directly transferable to neuroscience experiments (for example, controlling neural circuits with multiple colors of light in many animals at once). It exemplifies how AI enables precise, closed-loop optogenetic interventions.
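To make the tracking-to-stimulation step concrete, the sketch below turns per-animal pose estimates for one video frame into per-implant on/off decisions. It assumes a simple, hypothetical trigger rule (light on while an animal's body centroid is inside a rectangular arena zone); the cited work may use a different rule, and the names `Zone`, `centroid`, and `stimulation_commands`, the animal IDs, and the coordinates are all illustrative. The input format is a generic per-animal keypoint list, not the output API of DeepLabCut, and the wireless transmission of each command is hardware-specific and not shown.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    """Hypothetical axis-aligned rectangular region of the arena, in pixels."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def centroid(keypoints):
    """Mean (x, y) of one animal's keypoints, ignoring points missing in this frame (None)."""
    valid = [p for p in keypoints if p is not None]
    if not valid:
        return None
    return (sum(p[0] for p in valid) / len(valid),
            sum(p[1] for p in valid) / len(valid))


def stimulation_commands(poses_by_animal, zone):
    """Map each animal ID to True (light on) if its centroid lies inside the zone.

    poses_by_animal: {animal_id: [(x, y) or None, ...]} for a single video frame.
    Animals that are not detected in this frame are switched off.
    """
    commands = {}
    for animal_id, keypoints in poses_by_animal.items():
        c = centroid(keypoints)
        commands[animal_id] = c is not None and zone.contains(*c)
    return commands


frame = {
    "mouse_1": [(110.0, 95.0), (120.0, 100.0), None],  # inside the zone
    "mouse_2": [(400.0, 300.0), (410.0, 305.0)],       # outside the zone
    "mouse_3": [None, None],                           # not detected
}
zone = Zone(100.0, 80.0, 200.0, 160.0)
print(stimulation_commands(frame, zone))
```

Switching undetected animals off is a conservative design choice: a lost track never leaves a light source running unattended.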
Such a system monitors the animals continuously and adjusts optical stimulation immediately as conditions change. In parallel, AI is transforming our ability to decode neural data and behavior [25,34]. Advances in machine learning make it possible to sift through the massive streams of neural signals and video recordings that modern experiments generate [35,36]. For instance, deep learning models can decode a mouse’s brain activity to predict its next action or, conversely, analyze video of an animal’s behavior to infer its internal neural states [25,37,38,39]. Tools such as DeepLabCut (used in the example above) automate the tracking of an animal’s posture and movements with human-level accuracy, providing quantitative readouts of behavior that can be correlated with neural recordings [30,31]. Such AI-driven behavior analysis is crucial for high-throughput phenotyping, that is, assessing how neural circuit manipulations alter behavior across many individuals. Instead of a human observer scoring videos, a neural network can score dozens of behaviors simultaneously and consistently, without the subjectivity of manual annotation.

In terms of power, wireless optogenetic implants typically operate below 10 mW during active stimulation phases, resulting in negligible tissue heating (an increase of <1 °C, confirmed via in vivo thermal imaging). Compared with traditional tethered optical fibers driven by external lasers (>100 mW at the source), wireless systems substantially reduce the overall power load while maintaining sufficient light delivery for effective neuromodulation.
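Because optogenetic stimulation is usually delivered as pulse trains, the time-averaged power of an implant is lower than its on-phase power by the duty cycle: P_avg = P_on × f × τ, where f is the pulse frequency and τ the pulse width. The sketch below performs this arithmetic; the 10 mW on-phase level echoes the figure quoted above, while the 20 Hz, 10 ms pulse protocol is an assumed example, not a parameter reported for the cited devices.

```python
def duty_cycle(frequency_hz: float, pulse_width_s: float) -> float:
    """Fraction of time the light source is on for a periodic pulse train."""
    d = frequency_hz * pulse_width_s
    if not 0.0 <= d <= 1.0:
        raise ValueError("pulse width must not exceed the pulse period")
    return d


def average_power_mw(on_power_mw: float, frequency_hz: float, pulse_width_s: float) -> float:
    """Time-averaged power: P_avg = P_on * duty cycle."""
    return on_power_mw * duty_cycle(frequency_hz, pulse_width_s)


# Assumed protocol: 20 Hz pulses, 10 ms wide, at a 10 mW on-phase level.
print(average_power_mw(10.0, 20.0, 0.010))
```

Under these assumptions the light source is on 20% of the time, so the average draw is about 2 mW, which illustrates why pulsed wireless stimulation keeps heating well below that of continuous high-power sources.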

Figure 2.

Closed-loop wireless optogenetic system driven by real-time behavior tracking and AI-based decision making.

Wireless optogenetic platforms augmented by AI are opening especially rich opportunities. Researchers have engineered soft, fully implantable optogenetic devices that deliver light inside specific organs or brain regions without tethering the animal. These miniaturized neural interfaces eliminate the need for cables while enabling precise, high-resolution control of brain activity. When combined with AI, these devices become smart systems: they can record physiological signals, run algorithms (on the device or, more commonly, on an external computer) that detect specific patterns, and trigger light stimulation accordingly, with power and control delivered wirelessly. In this setting, machine learning can be used not only to track multiple subjects in a single cage but also to control animals housed individually across multiple cages. Furthermore, organ-specific wireless devices have shown that optogenetics can stimulate not only the brain but also the gut, highlighting that removing tethers enables the targeting of peripheral as well as central nervous systems. This suggests broader potential for expanding our understanding of neural networks beyond the brain [40,41].

Closed-loop control represents a powerful new frontier for AI in neuroscience. In these systems, neural activity or behavior is monitored in real time, and stimulation is dynamically adjusted based on AI-driven pattern recognition [29]. For example, this approach has been used to suppress seizures in rodent models by triggering targeted optical stimulation when specific neural signatures are detected. More broadly, AI enables the adaptive, real-time tuning of stimulation to maintain desired brain states, revealing circuit dynamics with far greater precision than manual methods. As a result, AI increasingly features in experiments not only as an observer but also as an active modulator of neural function [42,43,44].
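The detect-then-stimulate loop described above can be sketched as a streaming detector. As a stand-in for a learned classifier, the sketch below uses the line-length feature (the sum of absolute sample-to-sample differences over a sliding window), a common hand-crafted marker of fast, large-amplitude activity, together with a threshold and a refractory period so that one event does not retrigger stimulation on every sample. The class name `ClosedLoopTrigger`, the window size, threshold, refractory length, and the synthetic signal are all hypothetical; this is not the detector used in the cited studies.

```python
from collections import deque


def line_length(samples):
    """Sum of absolute sample-to-sample differences over a window."""
    values = list(samples)
    return sum(abs(b - a) for a, b in zip(values, values[1:]))


class ClosedLoopTrigger:
    """Signals stimulation when the windowed line length exceeds a threshold.

    window_size, threshold and refractory_samples are hypothetical tuning
    parameters; a trained classifier could replace the threshold test.
    """

    def __init__(self, window_size, threshold, refractory_samples):
        self.window = deque(maxlen=window_size)
        self.threshold = threshold
        self.refractory_samples = refractory_samples
        self.cooldown = 0

    def update(self, sample):
        """Process one new sample; return True if stimulation should start now."""
        self.window.append(sample)
        if self.cooldown > 0:  # still in the refractory period after a trigger
            self.cooldown -= 1
            return False
        if len(self.window) < self.window.maxlen:  # not enough history yet
            return False
        if line_length(self.window) > self.threshold:
            self.cooldown = self.refractory_samples
            return True
        return False


# Synthetic signal: low-amplitude baseline, a brief large-amplitude burst, baseline again.
signal = [0.1, -0.1] * 10 + [2.0, -2.0] * 5 + [0.1, -0.1] * 10
trigger = ClosedLoopTrigger(window_size=5, threshold=4.0, refractory_samples=20)
events = [t for t, x in enumerate(signal) if trigger.update(x)]
print(events)
```

The burst produces a single trigger shortly after onset; the refractory period suppresses a second trigger as the burst leaves the window.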

In sum, AI has moved from passive analysis to active experimentation. By decoding behavior and guiding interventions through wireless optogenetic implants, AI enables high-throughput, closed-loop studies that deepen our understanding of both the brain and peripheral systems. Several technical constraints nevertheless remain. In current closed-loop systems, real-time video is processed externally by deep learning tools such as DeepLabCut, and the resulting behavioral classifications are transmitted wirelessly to the implants. The typical end-to-end latency is approximately 100–300 ms, limited primarily by video frame acquisition and RF communication delays. Because implants operate under tight power budgets (<10 mW), on-device computation remains challenging; real-time inference is therefore typically performed externally, while the implants respond autonomously to the decoded triggers.
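A rough latency budget helps interpret the 100–300 ms figure. In the sketch below, only the frame period follows directly from the camera frame rate (1000/fps ms per frame); the number of frames in flight, the inference time, the RF link delay, and the actuation time are illustrative assumptions, not measurements from the cited systems, and `latency_budget_ms` is a hypothetical helper.

```python
def latency_budget_ms(fps, inference_ms, rf_ms, actuation_ms, frames_in_flight=1):
    """Return per-stage latency estimates (ms) and their sum for one tracking-to-light loop."""
    stages = {
        "acquisition": frames_in_flight * 1000.0 / fps,  # waiting for and reading out frames
        "inference": inference_ms,                        # external pose estimation and classification
        "rf_link": rf_ms,                                 # wireless command to the implant
        "actuation": actuation_ms,                        # LED driver switching
    }
    stages["total"] = sum(stages.values())
    return stages


# Assumed values: 30 fps camera with two frames in flight, 40 ms inference,
# 50 ms RF link, 1 ms actuation.
budget = latency_budget_ms(fps=30, inference_ms=40, rf_ms=50, actuation_ms=1, frames_in_flight=2)
for stage, ms in budget.items():
    print(f"{stage:12s} {ms:6.1f} ms")
```

With these assumed values the total falls inside the quoted range, and the breakdown shows why frame acquisition and the RF link, rather than LED switching, dominate the loop.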

3. Discoveries Looping Back, Neuroscience Inspiring Better AI

While artificial intelligence has advanced rapidly in recent years, several persistent limitations remain. Machine learning models typically rely on large-scale, labeled datasets and struggle with generalization under data-sparse conditions [45,46]. They often suffer from catastrophic forgetting when exposed to sequential learning tasks [47,48,49], and in generative systems such as large language models, they frequently produce factually incorrect but syntactically plausible outputs—a phenomenon known as hallucination [50]. Furthermore, these models are computationally intensive, requiring significant energy and hardware resources [51,52,53], in stark contrast to the brain’s compact, low-power operation. These shortcomings, however, present an opportunity; rather than treating neuroscience as merely inspirational, we can begin to use it as a source of empirical solutions. Advances in wireless optogenetics and AI-enabled tracking now allow for the real-time, closed-loop interrogation of neural circuits in freely behaving animals. These systems offer a platform for reverse-engineering biological intelligence under experimentally controlled conditions.

In other words, unresolved issues in current AI models, such as catastrophic forgetting, may be addressed through a deeper understanding of neural circuits. Unlike today’s AI models, which rely on relatively simple connectivity, biological brain circuits are built from complex loops and regulatory mechanisms, including GABAergic inhibitory circuits [54,55]. By combining wireless optogenetic manipulation with sequential learning tasks, researchers can examine how the brain preserves previously learned information while encoding new inputs. Such experiments may uncover regulatory mechanisms, such as circuit-level gating or synaptic stabilization, that could be translated into architectural solutions for continual learning in artificial systems. Similarly, to address hallucination in AI, optogenetic manipulation of prediction-error circuits (e.g., the anterior cingulate cortex or orbitofrontal cortex) during ambiguous sensory decision-making tasks could reveal how the brain evaluates internal certainty and suppresses false inferences [56,57]. Incorporating such biological error-correction mechanisms could inform the design of AI systems capable of assessing the reliability of their own outputs, potentially leading to more trustworthy generative models. The data-inefficiency problem can be explored through one-shot or few-shot learning paradigms in rodents, in which wireless optogenetic systems are used to monitor and manipulate neuromodulatory inputs (such as dopamine or acetylcholine) during rapid associative learning. Observing how neuromodulators shape synaptic plasticity after minimal exposure may point toward biologically informed meta-learning strategies in AI. Finally, the brain’s energy-efficient computation, achieved through event-driven, sparse, and local processing, can be dissected through spatiotemporally patterned optogenetic activation of distributed networks. These experiments may identify core principles behind low-power neural coding, informing neuromorphic hardware design or the development of sparse, asynchronous AI architectures.
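The synaptic-stabilization idea already has one well-known machine-learning counterpart, elastic weight consolidation (EWC) [47], which penalizes changes to parameters that were important for an earlier task A while a new task B is learned: L(θ) = L_B(θ) + Σ_i (λ/2) F_i (θ_i − θ*_A,i)², where F_i is a diagonal Fisher-information estimate of each parameter’s importance and θ*_A are the parameters after task A. The plain-Python sketch below computes only this penalty and the combined loss; the parameter values, Fisher estimates, and λ are made-up illustrations, and the sketch is an example of the kind of mechanism such experiments might refine, not a model of any biological circuit.

```python
def ewc_penalty(params, anchor_params, fisher_diag, lam):
    """EWC penalty: sum_i (lam / 2) * F_i * (theta_i - theta*_A,i) ** 2."""
    if not (len(params) == len(anchor_params) == len(fisher_diag)):
        raise ValueError("params, anchor_params and fisher_diag must have equal length")
    return sum(0.5 * lam * f * (p - a) ** 2
               for p, a, f in zip(params, anchor_params, fisher_diag))


def ewc_loss(task_b_loss, params, anchor_params, fisher_diag, lam):
    """Total loss while learning task B, anchored to the parameters learned on task A."""
    return task_b_loss + ewc_penalty(params, anchor_params, fisher_diag, lam)


# Illustrative numbers: parameter 0 mattered for task A (high Fisher value),
# parameter 1 did not, so moving parameter 0 by the same amount costs far more.
theta_a = [1.0, -0.5]
fisher = [10.0, 0.1]
print(ewc_penalty([1.5, -0.5], theta_a, fisher, lam=1.0))  # shift the important parameter
print(ewc_penalty([1.0, 0.0], theta_a, fisher, lam=1.0))   # shift the unimportant parameter
```

The same 0.5 shift costs 100 times more for the high-importance parameter, which is how EWC lets a network stay plastic in unimportant weights while protecting those that encode earlier knowledge.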

In sum, AI-powered neuroscience experiments are increasingly capable of uncovering not just how the brain functions but also how it solves the problems that modern AI still struggles with. This convergence enables us to shift from biologically inspired design to biologically validated design—where mechanisms discovered in vivo directly shape future machine learning algorithms. In doing so, we may begin to close the loop between brain and machine in the most literal sense.

4. Discussion and Future Outlook

The notion of biologically validated AI remains speculative and exploratory. Rather than aiming for exact translation, future research may focus on identifying abstract principles, such as hierarchical gating or local learning, from neuroscience that show functional benefits when implemented in AI. Discrepancies between neural and model behavior should not be seen as failures but as feedback points for refining both biological theory and computational modeling.

Nonetheless, the coevolution of neuroscience and artificial intelligence is no longer a theoretical idea—it is a demonstrable and accelerating process. From neural networks inspired by early models of the brain to today’s closed-loop wireless optogenetic systems empowered by AI, the relationship has matured into a bidirectional engine of innovation. This perspective has illustrated how AI now acts as both a lens and a lever: it not only helps us observe the brain in unprecedented ways but also actively manipulates neural circuits to probe their function in real time. Importantly, this interplay is beginning to inform the next generation of AI design. The limitations of current machine learning systems, such as catastrophic forgetting, inefficiency in sparse data settings, and hallucination, are not merely technical challenges but conceptual gaps that biological systems have evolved to solve. Through precise, AI-guided experimental neuroscience, we can uncover the regulatory, structural, and dynamical strategies the brain uses to achieve robustness, adaptability, and efficiency. Looking ahead, we propose a shift in mindset: from biologically inspired AI to biologically validated AI. Rather than taking metaphorical cues from the brain, future AI architectures may be grounded in mechanisms directly observed and tested in vivo. As AI continues to empower neuroscience, and neuroscience continues to inform AI, we are witnessing the emergence of a true feedback loop between mind and machine. This loop is not yet complete, but it is closing. With each cycle of innovation, we move closer to systems that not only compute but also reason, that do not just classify but understand. The promise of this convergence is not just smarter machines or better models, but a more profound, mechanistic grasp of intelligence itself, across both silicon and synapse. 
We look forward to seeing this mutually reinforcing relationship continue to evolve, fostering meaningful advances across both domains.

Funding

This work was supported by the Hongik University New Faculty Research Support Fund.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

I would like to express my gratitude to Hongik University.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Yashchenko, V. From McCulloch to GPT-4: Stages of development of artificial intelligence. Artif. Intell. 2024, 1, 31–44.

- Chakraverty, S.; Sahoo, D.M.; Mahato, N.R. McCulloch–Pitts neural network model. In Concepts of Soft Computing: Fuzzy and ANN with Programming; Springer: Singapore, 2019; pp. 167–173.

- Rosenblatt, F. The perceptron: A probabilistic model for information storage and organization in the brain. Psychol. Rev. 1958, 65, 386.

- Kuriscak, E.; Marsalek, P.; Stroffek, J.; Toth, P.G. Biological context of Hebb learning in artificial neural networks, a review. Neurocomputing 2015, 152, 27–35.

- Blundell, C.; Cornebise, J.; Kavukcuoglu, K.; Wierstra, D. Weight uncertainty in neural network. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015; PMLR: New York, NY, USA, 2015; pp. 1613–1622.

- Salimans, T.; Kingma, D.P. Weight normalization: A simple reparameterization to accelerate training of deep neural networks. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 5–10 December 2016; Volume 29.

- O’shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- Medsker, L.R.; Jain, L. Recurrent neural networks. Des. Appl. 2001, 5, 2.

- Rogalla, M.M.; Seibert, A.; Sleeboom, J.M.; Hildebrandt, K.J. Differential optogenetic activation of the auditory midbrain in freely moving behaving mice. Front. Syst. Neurosci. 2023, 17, 1222176.

- Li, J.; Zaikin, A.; Zhang, X.; Chen, S. Editorial: Closed-loop iterations between neuroscience and artificial intelligence. Front. Syst. Neurosci. 2022, 16, 1002095.

- Orzan, F.; Iancu, Ş.D.; Dioşan, L.; Bálint, Z. Textural analysis and artificial intelligence as decision support tools in the diagnosis of multiple sclerosis—A systematic review. Front. Neurosci. 2025, 18, 1457420.

- Song, S.; Li, T.; Lin, W.; Liu, R.; Zhang, Y. Application of artificial intelligence in Alzheimer’s disease: A bibliometric analysis. Front. Neurosci. 2025, 19, 1511350.

- Haenlein, M.; Kaplan, A. A brief history of artificial intelligence: On the past, present, and future of artificial intelligence. Calif. Manage. Rev. 2019, 61, 5–14.

- Adap, V.R.K. NEURO-AI CONVERGENCE: BRIDGING THE GAP BETWEEN NEUROSCIENCE AND ARTIFICIAL INTELLIGENCE. Int. J. Comput. Eng. Technol. 2024, 15, 938–946.

- Kim, W.S.; Liu, J.; Li, Q.; Hong, S.; Qi, K.; Cherukuri, R.; Yoon, B.-J.; Moscarello, J.; Choe, Y.; Maren, S. AI-Driven Battery-Free Dual-Channel Wireless Optogenetics for High-Throughput Automation of Behavioral Analysis. Cell Press Sneak Peek 2022.

- Balasubramaniam, S.; Wirdatmadja, S.A.; Barros, M.T.; Koucheryavy, Y.; Stachowiak, M.; Jornet, J.M. Wireless communications for optogenetics-based brain stimulation: Present technology and future challenges. IEEE Commun. Mag. 2018, 56, 218–224.

- Shin, G.; Gomez, A.M.; Al-Hasani, R.; Jeong, Y.R.; Kim, J.; Xie, Z.; Banks, A.; Lee, S.M.; Han, S.Y.; Yoo, C.J.; et al. Flexible Near-Field Wireless Optoelectronics as Subdermal Implants for Broad Applications in Optogenetics. Neuron 2017, 93, 509–521.e3.

- Yang, Y.; Wu, M.; Vázquez-Guardado, A.; Wegener, A.J.; Grajales-Reyes, J.G.; Deng, Y.; Wang, T.; Avila, R.; Moreno, J.A.; Minkowicz, S.; et al. Wireless multilateral devices for optogenetic studies of individual and social behaviors. Nat. Neurosci. 2021, 24, 1035–1045.

- Kim, W.S.; Jeong, M.; Hong, S.; Lim, B.; Park, S.I. Fully implantable low-power high frequency range optoelectronic devices for dual-channel modulation in the brain. Sensors 2020, 20, 3639.

- Xu, S.; Momin, M.; Ahmed, S.; Hossain, A.; Veeramuthu, L.; Pandiyan, A.; Kuo, C.-C.; Zhou, T. Illuminating the Brain: Advances and Perspectives in Optoelectronics for Neural Activity Monitoring and Modulation. Adv. Mater. 2023, 35, 2303267.

- Pandiyan, A.; Vengudusamy, R.; Veeramuthu, L.; Muthuraman, A.; Wang, Y.-C.; Lee, H.; Zhou, T.; Kao, C.R.; Kuo, C.-C. Synergistic effects of size-confined mxene nanosheets in self-powered sustainable smart textiles for environmental remediation. Nano Energy 2025, 133, 110426.

- Veeramuthu, L.; Weng, R.-J.; Chiang, W.-H.; Pandiyan, A.; Liu, F.-J.; Liang, F.-C.; Kumar, G.R.; Hsu, H.-Y.; Chen, Y.-C.; Lin, W.-Y.; et al. Bio-inspired sustainable electrospun quantum nanostructures for high quality factor enabled face masks and self-powered intelligent theranostics. Chem. Eng. J. 2024, 502, 157752.

- Pandiyan, A.; Veeramuthu, L.; Yan, Z.-L.; Lin, Y.-C.; Tsai, C.-H.; Chang, S.-T.; Chiang, W.-H.; Xu, S.; Zhou, T.; Kuo, C.-C. A comprehensive review on perovskite and its functional composites in smart textiles: Progress, challenges, opportunities, and future directions. Prog. Mater. Sci. 2023, 140, 101206.

- Glaser, J.I.; Benjamin, A.S.; Chowdhury, R.H.; Perich, M.G.; Miller, L.E.; Kording, K.P. Machine learning for neural decoding. eNeuro 2020, 7.

- Dos Santos Melicio, B.C.; Xiang, L.; Dillon, E.; Soorya, L.; Chetouani, M.; Sarkany, A.; Kun, P.; Fenech, K.; Lorincz, A. Composite AI for behavior analysis in social interactions. In Proceedings of the Companion Publication of the 25th International Conference on Multimodal Interaction, Paris, France, 9–13 October 2023; pp. 389–397.

- Samayamantri, L.S.; Singhal, S.; Krishnamurthy, O.; Regin, R. AI-Driven Multimodal Approaches to Human Behavior Analysis. In Advancing Intelligent Networks Through Distributed Optimization; IGI Global: Hershey, PA, USA, 2024; pp. 485–506.

- Di Tecco, A.; Pistolesi, F.; Lazzerini, B. Elicitation of anxiety without time pressure and its detection using physiological signals and artificial intelligence: A proof of concept. IEEE Access 2024, 12, 22376–22393.

- Grosenick, L.; Marshel, J.H.; Deisseroth, K. Closed-loop and activity-guided optogenetic control. Neuron 2015, 86, 106–139.

- Mathis, A.; Mamidanna, P.; Cury, K.M.; Abe, T.; Murthy, V.N.; Mathis, M.W.; Bethge, M. DeepLabCut: Markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 2018, 21, 1281–1289.

- Nath, T.; Mathis, A.; Chen, A.C.; Patel, A.; Bethge, M.; Mathis, M.W. Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nat. Protoc. 2019, 14, 2152–2176.

- Kim, W.S.; Khot, M.I.; Woo, H.-M.; Hong, S.; Baek, D.-H.; Maisey, T.; Daniels, B.; Coletta, P.L.; Yoon, B.-J.; Jayne, D.G.; et al. AI-enabled, implantable, multichannel wireless telemetry for photodynamic therapy. Nat. Commun. 2022, 13, 2178.

- Kim, W.S.; Woo, H.-M.; Khot, M.I.; Hong, S.; Jayne, D.G.; Yoon, B.-J.; Park, S.I. AI-Enabled High-Throughput Wireless Telemetry for Effective Photodynamic Therapy. In Proceedings of the 2021 55th Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 31 October–3 November 2021; IEEE: New York, NY, USA, 2021; pp. 811–815.

- Fazzari, E.; Carrara, F.; Falchi, F.; Stefanini, C.; Romano, D. Using AI to decode the behavioral responses of an insect to chemical stimuli: Towards machine-animal computational technologies. Int. J. Mach. Learn. Cybern. 2024, 15, 1985–1994.

- Camastra, F.; Vinciarelli, A. Machine Learning for Audio, Image and Video Analysis: Theory and Applications; Springer: London, UK, 2015; ISBN 144716735X.

- Zhang, Y.; Kwong, S.; Wang, S. Machine learning based video coding optimizations: A survey. Inf. Sci. 2020, 506, 395–423.

- Gharagozloo, M.; Amrani, A.; Wittingstall, K.; Hamilton-Wright, A.; Gris, D. Machine learning in modeling of mouse behavior. Front. Neurosci. 2021, 15, 700253.

- Livezey, J.A.; Glaser, J.I. Deep learning approaches for neural decoding across architectures and recording modalities. Brief. Bioinform. 2021, 22, 1577–1591.

- Wen, H.; Shi, J.; Zhang, Y.; Lu, K.-H.; Cao, J.; Liu, Z. Neural Encoding and Decoding with Deep Learning for Dynamic Natural Vision. Cereb. Cortex 2018, 28, 4136–4160.

- Kim, W.S.; Hong, S.; Gamero, M.; Jeevakumar, V.; Smithhart, C.M.; Price, T.J.; Palmiter, R.D.; Campos, C.; Park, S.I. Organ-specific, multimodal, wireless optoelectronics for high-throughput phenotyping of peripheral neural pathways. Nat. Commun. 2021, 12, 157.

- Hong, S.; Kim, W.S.; Han, Y.; Cherukuri, R.; Jung, H.; Campos, C.; Wu, Q.; Park, S.I. Optogenetic Targeting of Mouse Vagal Afferents Using an Organ-specific, Scalable, Wireless Optoelectronic Device. Bio-protocol 2022, 12, e4341.

- Bohm, M.R.; Stone, R.B. Form Follows Form: Fine tuning artificial intelligence methods. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Montreal, QC, Canada, 15–18 August 2010; Volume 44113, pp. 519–528.

- Schöning, J.; Pfisterer, H.-J. Safe and trustful AI for closed-loop control systems. Electronics 2023, 12, 3489.

- Nimri, R.; Phillip, M.; Kovatchev, B. Closed-Loop and Artificial Intelligence–Based Decision Support Systems. Diabetes Technol. Ther. 2023, 25, S-70–S-89.

- Kuo, F.Y.; Sloan, I.H. Lifting the curse of dimensionality. Not. AMS 2005, 52, 1320–1328.

- Verleysen, M.; François, D. The curse of dimensionality in data mining and time series prediction. In Proceedings of the 8th International Work-Conference on Artificial Neural Networks, IWANN 2005, Vilanova i la Geltrú, Barcelona, Spain, 8–10 June 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 758–770.

- Kirkpatrick, J.; Pascanu, R.; Rabinowitz, N.; Veness, J.; Desjardins, G.; Rusu, A.A.; Milan, K.; Quan, J.; Ramalho, T.; Grabska-Barwinska, A. Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. USA 2017, 114, 3521–3526.

- Ramasesh, V.V.; Lewkowycz, A.; Dyer, E. Effect of scale on catastrophic forgetting in neural networks. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 3–7 May 2021.

- Kemker, R.; McClure, M.; Abitino, A.; Hayes, T.; Kanan, C. Measuring catastrophic forgetting in neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Zhang, Y.; Li, Y.; Cui, L.; Cai, D.; Liu, L.; Fu, T.; Huang, X.; Zhao, E.; Zhang, Y.; Chen, Y. Siren’s song in the AI ocean: A survey on hallucination in large language models. arXiv 2023, arXiv:2309.01219.

- Kolbert, E. The obscene energy demands of AI. The New Yorker, 9 March 2024.

- Jin, D.; Ocone, R.; Jiao, K.; Xuan, J. Energy and AI. Energy AI 2020, 1, 100002.

- Nøland, J.K.; Hjelmeland, M.; Korpås, M. Will Energy-Hungry AI create a baseload power demand boom? IEEE Access 2024, 12, 110353–110360.

- Banuelos, C.; Wołoszynowska-Fraser, M.U. GABAergic networks in the prefrontal cortex and working memory. J. Neurosci. 2017, 37, 3989–3991.

- Topolnik, L.; Tamboli, S. The role of inhibitory circuits in hippocampal memory processing. Nat. Rev. Neurosci. 2022, 23, 476–492.

- Alexander, W.H.; Brown, J.W. The role of the anterior cingulate cortex in prediction error and signaling surprise. Top. Cogn. Sci. 2019, 11, 119–135.

- Orr, C.; Hester, R. Error-related anterior cingulate cortex activity and the prediction of conscious error awareness. Front. Hum. Neurosci. 2012, 6, 177.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).