Abstract

As the next generation of in-vehicle intelligent platforms, the augmented reality heads-up display (AR-HUD) offers a large capacity for information interaction, provides drivers with auxiliary driving information, avoids the distraction caused by looking down while driving, and greatly improves driving safety. However, AR-HUD systems still face great challenges in realizing multi-plane full-color display, and they cannot yet truly integrate virtual information with real road conditions. To overcome these problems, many new devices and materials have been applied to AR-HUDs, and many novel systems have been developed. This study first reviews some key metrics of HUDs, then investigates the structures of various picture generation units (PGUs), and finally focuses on the development status of AR-HUDs, analyzing the advantages and disadvantages of existing technologies and pointing out future research directions for AR-HUDs.

1. Introduction to Heads-Up Displays (HUDs)

Traffic crashes rank as the sixth leading cause of disability-adjusted life-years lost globally and stand out as the sole non-disease factor among the top 15 contributors, according to a report by the World Health Organization (WHO) [1]. The WHO’s findings from 2018 revealed that approximately 1.3 million people die annually in road traffic accidents [2]. Moreover, some 94% of these accidents are attributed mainly to human error [3,4]. Specifically, people behind the wheel are exposed to various factors that may lead to distraction, such as navigation aids and instrument panels. The road environment is unpredictable, and when a driver’s eyes switch back and forth between the vehicle information inside the car and the distant real-time road conditions outside, accidents can easily occur [5]. As a promising solution, a heads-up display (HUD) is an interaction-based in-vehicle display technology that projects driving information onto the physical scene beyond the windshield and improves driving safety [6,7]. The driving information includes speed, fuel consumption, navigation data, driver assistance information, warning messages, etc. In addition, an HUD can be combined with autonomous driving to provide a better human–machine interaction experience.

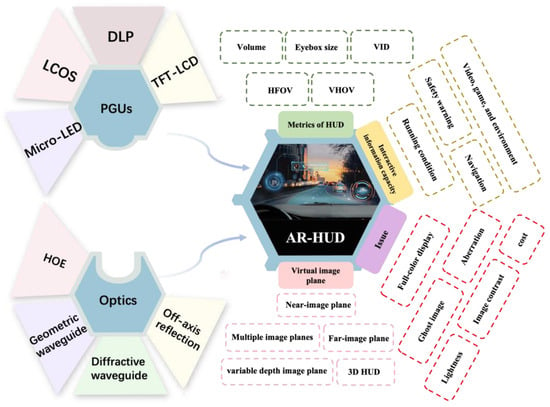

Since the primitive concept was applied for aiming and shooting in 1960, the field of HUDs has made significant advancements [8], especially with the emergence of augmented reality heads-up displays (AR-HUDs) in recent years, whose large virtual image distance (VID) enables virtual images to be superimposed on the traffic environment [9]. This avoids frequent changes in the accommodation of the human eye between the physical world and the displayed information [10]. In terms of optical hardware, an HUD is composed of a picture generation unit (PGU) and the HUD optics. The former utilizes projectors, such as a thin-film transistor–liquid crystal display (TFT-LCD), digital light processing (DLP), liquid crystal on silicon (LCOS), or micro-LEDs, to generate images. The latter is an optical system that projects a virtual image beyond the windshield. Its functions include folding the light path, magnifying the image, forming an eyebox, and compensating for the aberration introduced by the windshield (Figure 1).

Figure 1.

Overview of AR-HUDs.

This review focuses on the research advances in HUD optical systems. Following the introduction, we first introduce the key metrics in HUDs and the technical progression of commercial HUDs. Next, we introduce the PGUs that are generally used in all kinds of HUDs. We further categorize the optics of HUDs according to the features of the virtual image plane. The fundamentals, display performance, and challenges are discussed. Finally, we will explore the major obstacles of AR-HUDs and highlight the potential future directions.

2. Metrics of HUDs

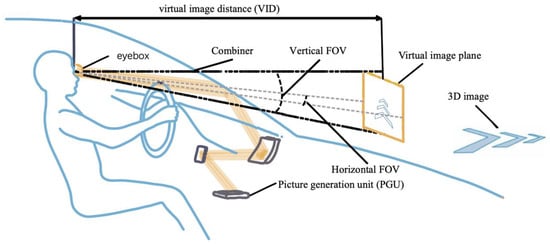

An AR-HUD is a product that combines many fields, such as optical displays, LIDAR detection [11], data processing [12], etc. Although there are numerous parameters for evaluating such systems, this study focuses only on the optical architecture and introduces the metrics of optical-system-related parameters, including the field of view (FOV), virtual image distance, eyebox, and volume of the system [13,14,15] (Figure 2).

Figure 2.

Key metrics of HUDs.

The FOV refers to the observable angle range of a virtual image. In an ideal HUD, the virtual image should cover a minimum of two lanes (the vehicle’s driving lane and half a lane on each side) for effective traffic illustration. Considering a lane width of approximately 3.5 m, the minimum horizontal FOV of an HUD should be 20° [16]. Unfortunately, the horizontal FOV of a typical AR-HUD is only 10°.
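The 20° figure can be related to simple geometry. The sketch below is an illustration only: it takes the 3.5 m width quoted above as the width of one lane, assumes the two-lane coverage is centered on the driver's line of sight, and treats the viewing distances as hypothetical values that do not come from the cited work.

```python
import math

def horizontal_fov_deg(covered_width_m: float, distance_m: float) -> float:
    """Full horizontal angle subtended by a flat width centered on the line of sight."""
    return math.degrees(2.0 * math.atan(covered_width_m / (2.0 * distance_m)))

lane_width_m = 3.5                     # width quoted in the text, taken as one lane
covered_width_m = 2.0 * lane_width_m   # own lane plus half a lane on each side

# Assumed viewing distances (illustrative, not from the source).
for distance_m in (10.0, 20.0, 30.0):
    fov = horizontal_fov_deg(covered_width_m, distance_m)
    print(f"coverage of {covered_width_m:.1f} m at {distance_m:.0f} m needs {fov:.1f} deg")
```

Under these assumptions, covering two lanes at a distance of about 20 m calls for a horizontal FOV just under 20°, and shorter distances demand an even wider FOV.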

The VID represents the distance between the virtual image and the human eye. To effectively integrate navigation information with the road scene, it is recommended that the VID should exceed 10 m, ideally surpassing 20 m [17]. Increasing the virtual image distance not only enlarges the projection size but also enhances interactivity by enabling a greater amount of information to be presented to the driver.
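One way to see why a long VID helps is to express distances as accommodation demand in diopters (the reciprocal of the distance in meters). The sketch below is a minimal illustration; the 50 m road-object distance and the list of VIDs are assumed values chosen for the example.

```python
def accommodation_demand_diopters(distance_m: float) -> float:
    """Accommodation demand of the eye for an object at the given distance."""
    return 1.0 / distance_m

def refocus_step_diopters(vid_m: float, road_object_m: float) -> float:
    """Change in accommodation when the eye switches between the virtual image and a road object."""
    return abs(accommodation_demand_diopters(vid_m) - accommodation_demand_diopters(road_object_m))

road_object_m = 50.0  # assumed distance of the real object being watched
for vid_m in (1.0, 2.5, 10.0, 20.0):  # assumed VIDs for illustration
    step = refocus_step_diopters(vid_m, road_object_m)
    print(f"VID {vid_m:5.1f} m -> refocus step {step:.2f} D")
```

The refocus step shrinks quickly as the VID grows, which is in line with the recommendation above that the VID should exceed 10 m.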

The interocular distance between human eyes is approximately 65 mm. To ensure the inclusion of both eyes within the eyebox, it is common practice to set an eyebox size larger than 90 × 60 mm² [18]. In order to prevent the loss of driving information during vehicle swaying, it is advantageous to have a larger eyebox. In addition, a large eyebox allows drivers to slightly adjust their driving position, thereby significantly enhancing the overall driving experience.

In order to meet the application requirements, the size of the whole HUD system should be as small as possible, and a compact system is a goal pursued by many researchers. The aforementioned performance metrics are not independent; they constrain one another and must be traded off. For example, the system volume usually increases as the FOV increases. The objective for researchers lies in striking a balance among these diverse indicators.

HUDs have undergone three rounds of technological advancement: combiner HUDs (C-HUDs), windshield HUDs (W-HUDs), and AR-HUDs [19,20,21,22]. C-HUDs are based on a separate optical panel placed above the dashboard, so the HUD’s optical system can be designed and optimized independently of the profile of the windshield, with a lower cost and system complexity. Due to the width limit of the standalone panel, the display distance is small (~1 m) [23]. More importantly, since the display is located inside the vehicle, drivers need to switch their focus away from the outside world. Because of these drawbacks, W-HUDs and AR-HUDs have been developed. In both, the windshield is used as a combiner to display the projected information. As a result, the FOV and VID are greatly improved compared with those of C-HUDs. AR-HUDs have a larger FOV and VID than those of W-HUDs, and they can integrate navigation information, warning information, entertainment information, etc. with the real road, realizing human–machine interaction and greatly improving driving safety. They have therefore become the main development direction for future in-vehicle heads-up display systems. Table 1 summarizes the critical parameters of the three HUD types at different stages of development.

Table 1.

HUD parameters at different stages of development.

3. Picture Generation Unit for HUDs

Currently, commercial HUDs mainly employ four kinds of projection technologies: LCD-TFT [26], DLP [27], LCOS [28], and micro-LEDs. Among them, LCOS can be categorized into two types: the amplitude type and the phase type [29]. In the following, we begin with the operating principles of these picture generation units and then analyze the characteristics of the different types.

The principle of the LCD-TFT is based on the fact that a liquid crystal under an applied voltage can modulate the polarization direction of incoming linearly polarized light. To be specific, when light passes through the liquid crystal layer, it splits into two beams. Because the two beams propagate at different speeds, they accumulate a phase difference, so when they are recombined, the vibration direction of the resulting light changes [30]. As shown in Figure 3a, when light passes through the lower polarizer, it becomes linearly polarized with a vibration direction consistent with that of the polarizer, and the liquid crystal has a specific twist when no voltage is applied [31]. Consequently, as light traverses the liquid crystal layer, its vibration direction is gradually rotated until it reaches the upper polarizer, where it has been rotated by 90° and aligns with the upper polarizer, producing a bright state. Upon applying voltage, electric-field-induced orientation eliminates this twist within the liquid crystal layer. Linearly polarized light is then no longer rotated within this layer, so it cannot pass through the upper polarizer, and the pixel appears dark. By combining the liquid crystal with a color filter, an LCD-TFT can realize full-color display, and it is the most common transmissive projector.

Figure 3.

Schematics of typical projectors. (a) Transmission LCD-TFT in bright (R and G) and dark (B) states. (b) Reflective DLP technology for field sequential color (RGB) operation. (c) Reflective LCOS for field sequential color (RGB) operation. (d) RGB micro-LED.

Unlike the LCD-TFT, DLP technology utilizes a digital micromirror device (DMD) to steer incident light. A DMD is a complex optical switching device consisting of 1.3 million hinged micromirrors, one for each pixel in a rectangular array. Each hinged micromirror can reflect incident light in two opposite directions corresponding to the on and off states. With pulse width modulation, a DMD can therefore generate multiple gray levels. As shown in Figure 3b, DLP generates full-color images through field sequential color operation. Because this principle does not require the incident light to be polarized, DLP achieves a higher optical efficiency than the LCD-TFT and LCOS.
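The way a binary mirror produces gray levels through pulse width modulation can be sketched with binary-weighted bit planes. This is a simplified illustration, not the timing scheme of any particular DLP controller: the function names, the 8-bit depth, and the 5000 µs color-field time are assumptions, and real controllers typically split and interleave bit planes.

```python
def bit_plane_schedule(gray_level: int, bit_depth: int = 8, field_time_us: float = 5000.0):
    """Return (mirror_on, duration_us) per bit plane, most significant bit first."""
    if not 0 <= gray_level < 2 ** bit_depth:
        raise ValueError("gray_level out of range for the given bit depth")
    lsb_time_us = field_time_us / (2 ** bit_depth - 1)  # duration of the least significant bit plane
    schedule = []
    for bit in reversed(range(bit_depth)):
        mirror_on = bool((gray_level >> bit) & 1)        # mirror state during this bit plane
        schedule.append((mirror_on, lsb_time_us * 2 ** bit))
    return schedule

def duty_cycle(schedule) -> float:
    """Fraction of the field time during which the mirror is in the on state."""
    on_time = sum(duration for mirror_on, duration in schedule if mirror_on)
    total_time = sum(duration for _, duration in schedule)
    return on_time / total_time

for level in (0, 64, 128, 255):
    print(level, round(duty_cycle(bit_plane_schedule(level)), 4))
```

The perceived brightness follows the duty cycle, so an 8-bit value maps to 256 time-averaged intensity levels even though each mirror only ever takes one of two positions.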

LCOS also employs the electro-optic effect of liquid crystals to modulate the incident linearly polarized light (Figure 3c). However, unlike the LCD-TFT, LCOS utilizes a complementary metal-oxide semiconductor (CMOS) chip as the lower substrate. This substrate acts as a reflector and allows the LCOS transistors and lines to be integrated within the CMOS chip beneath the reflective surface. This integration optimizes surface area utilization and results in a higher aperture ratio. As a result, LCOS achieves higher resolution and brightness and mitigates pixelation artifacts. In addition, LCOS can also be used as a phase modulation device in the field of holography [32]. By loading holograms onto a phase-type LCOS, it becomes possible to reconstruct images with depth information under coherent light illumination. As a result, an LCOS with phase modulation may be used in an AR-HUD to achieve 3D effects in the near future.

Recently, inorganic-material-based light-emitting diodes (LEDs) with sizes of less than 50 μm (also called micro-LEDs) have attracted increasing attention for display technologies [33]. Each pixel can be addressed and driven to emit light individually, as shown in Figure 3d. Therefore, micro-LEDs distinguish themselves through their low power consumption, high contrast ratio, and high resolution. During their manufacturing, the epitaxial growth of InGaN blue and AlInGaP red micro-LEDs requires different substrates. Subsequently, these micro-LED chips need to be transferred onto a silicon backplane with specific pixel spacing. However, the large-scale transfer technology involved in this process remains a challenge [34,35]. Using only blue micro-LEDs with extremely high pixel density to excite quantum dots (QDs) in a color conversion array is a feasible way to realize full-color display while easing manufacturing [36,37]. However, the stability of the QD color conversion layer becomes an issue when it is exposed to heat, light, or humidity [38]. Although micro-LEDs still demand substantial development efforts, it is believed that they have great potential as chip technology advances [39].

Table 2 summarizes the critical parameters of the aforementioned PGUs. Both the LCD-TFT and amplitude-type LCOS technologies utilize the electro-optic effect of liquid crystals to modulate the polarization state of incident light in combination with a polarizer for the purpose of adjusting light intensity. As a result, the light emitted from both technologies is linearly polarized. Although the need for polarized light dramatically reduces the light efficiency at the device level, the optical efficiency might be comparable with that of a DLP-based HUD at the system level because of the polarization-sensitive reflectance of the windshield. High image contrast is desirable in HUD systems to eliminate the “darkness window”. DLP generally maintains a better image contrast. It is hard for the LCD-TFT and LCOS technologies to enhance the contrast ratio due to the persistent backlighting. To address this issue, local dimming techniques can be employed. On the other hand, the high cost of DLP technology poses a significant barrier to its widespread application.

Table 2.

Critical parameters of PGUs.
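The polarization-sensitive reflectance of the windshield mentioned above can be illustrated with the Fresnel equations for a single air-glass interface. This is a minimal sketch under stated assumptions: a refractive index of 1.52, an uncoated surface, and a 60° incidence angle are illustrative values, and a real laminated windshield with coatings will differ.

```python
import math

def fresnel_reflectance(incidence_deg: float, n_glass: float = 1.52, n_air: float = 1.0):
    """Return (R_s, R_p), the power reflectance of one air-to-glass interface."""
    theta_i = math.radians(incidence_deg)
    sin_t = n_air * math.sin(theta_i) / n_glass      # Snell's law
    cos_i = math.cos(theta_i)
    cos_t = math.sqrt(1.0 - sin_t ** 2)
    r_s = (n_air * cos_i - n_glass * cos_t) / (n_air * cos_i + n_glass * cos_t)
    r_p = (n_glass * cos_i - n_air * cos_t) / (n_glass * cos_i + n_air * cos_t)
    return r_s ** 2, r_p ** 2

# Assumed windshield incidence angle for illustration.
r_s, r_p = fresnel_reflectance(60.0)
print(f"R_s = {r_s:.3f}, R_p = {r_p:.4f}")

# Brewster angle, where p-polarized reflection vanishes for this interface.
brewster_deg = math.degrees(math.atan(1.52))
print(f"Brewster angle = {brewster_deg:.1f} deg")
```

At steep incidence the s-polarized reflectance is far higher than the p-polarized one, so a linearly polarized PGU whose output is oriented as s-polarization loses little at the windshield, whereas unpolarized light is reflected at roughly the average of the two values.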

4. Optics for HUDs

In HUD systems, PGUs with different characteristics need to be combined with purposely designed optics to achieve satisfactory display effects. For AR-HUDs, the distance of the virtual image plane is a vital parameter for evaluating the display effect, and it directly determines whether the image can be integrated with the real scene. To be specific, a system with only one image plane needs to push the VID to around 10 m from the observer so that the virtual image can fuse well with the real driving environment. Furthermore, an HUD with a multi-image plane or even a 3D image plane can provide more auxiliary information at different depth planes based on objects at different distances on the road. In the following, we classify AR-HUDs according to their different virtual image planes and introduce the relevant state-of-the-art research progress.

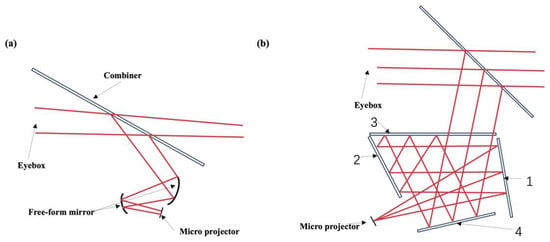

4.1. Virtual Image Distance within 10 m

HUDs based on off-axis reflection have been widely commercialized thanks to the mature manufacturing process of geometric optics. Nevertheless, their image planes tend to be close (typically below 8 m), and they have a large volume. A common design for AR-HUD systems is the free-space-based off-axis two-mirror system. The system, as shown in Figure 4a, utilizes a first fixed free-form surface as an optical path folder to reduce the system’s volume. The second free-form surface reflects the enlarged image onto the windshield, allowing the driver to see a magnified image in the distance. The advantage of using free-form mirrors in optical AR-HUD systems is that they are free of chromatic aberration [40] and can help eliminate off-axis aberrations [41]. Free-form surfaces not only correct image aberrations but also enable the system to be relatively compact in size (10 L+).

Figure 4.

Schematics of a free-space HUD system. (a) Schematics of an off-axis reflective HUD with dual free-form mirrors. (b) Schematics of a four-mirror off-axis reflective HUD.

However, the optical structure described above presents a trade-off among the FOV, VID, eyebox size, and system volume. A larger FOV and farther VID require the free-form surfaces to be enlarged, which poses a challenge in maintaining a compact system volume. In 2020, an off-axis four-mirror system was proposed to meet the requirement of an adjustable exit pupil height while maintaining compactness [42]. This system had an eyebox size of 106 × 66 mm², an FOV of 6° × 3°, and a VID of 5 m. As shown in Figure 4b, the four-mirror system consists of four reflection surfaces (labeled as 1, 2, 3, and 4) with a combiner. The light emitted from the PGU undergoes multiple reflections and eventually reaches the combiner, which reflects the light toward the exit of the system. The combiner allows observers to perceive both virtual images and reality simultaneously. Spherical surface 1 can be replaced with a free-form surface, and spherical surfaces 2 and 4 can be replaced with aspherical surfaces to balance non-symmetrical aberrations.

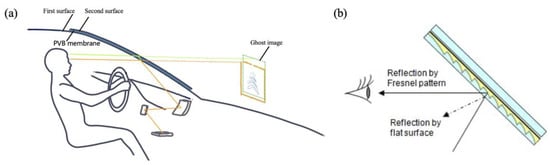

While free-space-based optics offer cost-effectiveness and simplicity, the system becomes bulkier and more difficult to integrate compactly as the desired FOV, VID, and eyebox size for AR-HUDs increase [43]. Because the image distance is limited (usually smaller than 7 m), the windshield produces a ghost image that cannot be ignored. Specifically, when a beam of light is incident on the first and second surfaces of the windshield at an oblique angle, the two reflected light rays do not completely coincide, and they reach the human eye in a staggered form (Figure 5a) [44,45,46]. To reduce ghost images, the polyvinyl butyral (PVB) film between the two glass layers of the windshield is modified from the traditional uniform thickness into a wedge that is thick at the top and thin at the bottom. After the light refracted through the first surface of the glass reaches the second surface, the reflection height and angle change, making the two virtual images formed by the reflections at the first and second surfaces coincide and thus reducing the double image [47]. Moreover, utilizing a partially transparent Fresnel reflector as the combiner could also eliminate ghost images with the Fresnel pattern [48], since it can prevent a ghost image from reaching the eyes of drivers (Figure 5b).

Figure 5.

Issue of ghost images in an optical combiner. (a) Causes of ghost images. (b) A diagram of the elimination of ghost images with the Fresnel pattern [48]. Copyright of IEEE.

In contrast, waveguide optics with ultra-thin flat optical waveguides and a far-image plane significantly reduce system volume. In addition, when the virtual image distance is far enough, the impact of ghost images is almost negligible. This makes waveguide optics an important direction for the future development of AR-HUDs with great market value and prospects [49].
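The dependence of the ghost image on the VID can be estimated with a flat-plate model of the windshield. The sketch below is a rough small-angle approximation, not a windshield design calculation: the glass is treated as a plane-parallel plate, and the 5 mm thickness, refractive index of 1.52, and 60° incidence angle are assumed values.

```python
import math

def beam_separation_mm(thickness_mm: float, incidence_deg: float, n_glass: float = 1.52) -> float:
    """Perpendicular offset between rays reflected at the front and back surfaces of a flat plate."""
    theta_i = math.radians(incidence_deg)
    theta_t = math.asin(math.sin(theta_i) / n_glass)   # refraction angle inside the glass
    return 2.0 * thickness_mm * math.tan(theta_t) * math.cos(theta_i)

def ghost_angle_arcmin(vid_m: float, thickness_mm: float = 5.0, incidence_deg: float = 60.0) -> float:
    """Approximate angular separation between main and ghost image seen at the given VID."""
    separation_m = beam_separation_mm(thickness_mm, incidence_deg) / 1000.0
    return math.degrees(separation_m / vid_m) * 60.0    # small-angle approximation

for vid_m in (2.5, 5.0, 10.0, 20.0):  # assumed VIDs for illustration
    print(f"VID {vid_m:4.1f} m -> ghost offset {ghost_angle_arcmin(vid_m):.2f} arcmin")
```

In this model the angular offset falls inversely with the VID, from several arcminutes at a few meters to roughly one arcminute at 10 m, which is consistent with ghost images becoming hard to notice once the image plane is far enough away.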

4.2. VID Larger Than 10 m

Indeed, a truly future-proof AR-HUD must possess a large FOV (>10° × 5°), an extended VID (>10 m), and a compact form factor. While existing free-space optical solutions can already realize part of the AR-HUD functionality, the attempt to further enhance the system parameters would result in a significantly large volume. Consequently, waveguide schemes are desirable because of their minimal impact on thickness with varying FOVs and the ability to create an image at an infinite distance (>15 m), thereby aiming to achieve a superior AR-HUD.

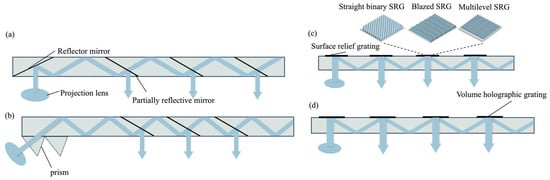

In the waveguide scheme, the light emitted from the projector is coupled into the waveguide medium and propagates to the out-coupling region through total internal reflection (TIR). Subsequently, multiple out-coupling events occur in the out-coupling region, leading to an enlarged effective eyebox size. This approach enables a greater utilization of the waveguide area while maintaining a smaller system volume (2V+). Geometric waveguides and diffraction waveguides can be distinguished based on the coupling methods.
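Two quantities govern this propagation: the critical angle for TIR and the distance a ray advances between successive hits on the same surface, which sets the spacing of the replicated exit pupils. The sketch below assumes an air-clad slab; the refractive index, the 2 mm thickness, and the propagation angles are illustrative values that do not come from the cited works.

```python
import math

def critical_angle_deg(n_waveguide: float, n_outside: float = 1.0) -> float:
    """Smallest internal angle (from the surface normal) that is totally internally reflected."""
    return math.degrees(math.asin(n_outside / n_waveguide))

def replication_step_mm(thickness_mm: float, propagation_deg: float) -> float:
    """Lateral distance between successive reflections on the same waveguide surface."""
    return 2.0 * thickness_mm * math.tan(math.radians(propagation_deg))

n_waveguide = 1.52     # assumed glass index
thickness_mm = 2.0     # assumed waveguide thickness
print(f"critical angle: {critical_angle_deg(n_waveguide):.1f} deg")
for angle_deg in (45.0, 55.0, 65.0):  # assumed internal propagation angles
    step = replication_step_mm(thickness_mm, angle_deg)
    print(f"{angle_deg:.0f} deg -> pupil copies every {step:.1f} mm")
```

Rays steeper than the critical angle stay guided, and shallower propagation (larger angle from the normal) spreads the out-coupled copies farther apart, which is one reason the out-coupler efficiency has to be tuned along the waveguide to keep the eyebox uniformly filled.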

Geometric waveguides employ partially reflective mirrors as the simplest elements for coupling in and out. As depicted in Figure 6a, a fully reflective mirror serves as the coupled-in structure, reflecting light from the projector into the waveguide [50]. Cascaded embedded partially reflective mirrors (PRMs) function as out-couplers, extending the exit pupil [51]. Figure 6b shows that a prism array can also serve as either an in-coupler or out-coupler in a waveguide. However, due to the multiple reflections of rays on the PRMs, issues such as stray light [52], ghosting, and low beam energy uniformity may arise [53,54].

Figure 6.

Schematics of geometric and diffractive waveguides. (a) A geometric waveguide with mirrors coupled in and partially reflective mirrors coupled out. (b) A geometric waveguide with a prism coupled in and partially reflective mirrors coupled out. (c) A diffraction waveguide coupled in and out with SRGs (mainly including straight binary grating, blazed grating, and multilevel grating). (d) A diffraction waveguide coupled in and out with VHGs.

Diffractive couplers modulate the light field through diffraction. Among the various diffractive structures, gratings, such as surface-relief gratings (SRGs) and volume holographic gratings (VHGs), are most commonly employed.

As shown in Figure 6c, one-dimensional SRGs modulate the phase distribution of incident light through a periodically undulating surface structure to diffract light into or out of the waveguides. When a surface-relief grating modulates the beam, its transmission strictly follows the grating (diffraction) equation, and the diffraction direction is determined by the wavelength and incidence angle of the incident light, the grating period, and the refractive index of the material. One-dimensional gratings can be categorized into rectangular, trapezoidal, blazed, and inclined gratings based on their undulating shape. To reduce zeroth-order diffraction and increase optical efficiency, blazed gratings [55] and multilevel structures [56] are commonly employed in SRGs. By adjusting the diffraction efficiency of the gratings in different regions, the system can control how much beam energy is coupled out of the waveguide at each position, allowing a uniform distribution of beam energy within the expanded eyebox. However, due to the relatively low diffraction efficiency of SRGs, a PGU with high brightness is necessary, which increases the system’s energy consumption.
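The dependence of the diffraction direction on wavelength, incidence angle, grating period, and refractive index can be written as n_out·sin(θ_m) = n_in·sin(θ_i) + m·λ/Λ. The sketch below evaluates this relation for an in-coupling grating; the sign convention, the 450 nm period, the 532 nm wavelength, and the index of 1.52 are assumptions chosen for illustration.

```python
import math
from typing import Optional

def diffracted_angle_deg(wavelength_nm: float, period_nm: float, incidence_deg: float = 0.0,
                         order: int = 1, n_in: float = 1.0, n_out: float = 1.52) -> Optional[float]:
    """Angle of the given diffraction order inside the output medium, or None if evanescent."""
    sine = (n_in * math.sin(math.radians(incidence_deg)) + order * wavelength_nm / period_nm) / n_out
    if abs(sine) > 1.0:
        return None  # this order does not propagate in the output medium
    return math.degrees(math.asin(sine))

# Assumed example: green light at normal incidence on a 450 nm period in-coupler.
angle = diffracted_angle_deg(532.0, 450.0)
critical = math.degrees(math.asin(1.0 / 1.52))   # TIR threshold for an air-clad waveguide
print(f"first-order angle in the waveguide: {angle:.1f} deg (critical angle {critical:.1f} deg)")
print("guided by TIR:", angle is not None and angle > critical)
```

With these assumed values the first order travels at about 51° inside the glass, beyond the critical angle, so it is trapped by TIR; a period that is too short for the wavelength makes the order evanescent and the function returns None.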

VHGs are composed of holographic optical elements (HOEs) created by illuminating a photosensitive film with two beams of coherent waves [57]. They transfer the intensity information of the interfering lights into transmittance modulation. VHGs offer advantages such as high resolution, low cost, low scattering, and simplicity in fabrication (Figure 6d). In 2019, C. M. Bigler et al. proposed a holographic waveguide HUD system with two-dimensional pupil expansion using VHGs [58]. The work achieved an FOV of 24° × 12.6°, expansion of the horizontal exit pupil by 1.9 times, and expansion of the vertical exit pupil by 1.6 times at an observation distance of 114 mm.

Polarization volume gratings (PVGs) are also extensively studied in the waveguides of augmented reality glasses, serving as holographic optical elements that record the interference between right-handed circularly polarized (RCP) and left-handed circularly polarized (LCP) beams [59,60,61]. PVGs offer the advantage of achieving high diffractive efficiency through the utilization of Bragg structures. However, the successful integration of PVGs into HUDs necessitates addressing the challenge of ensuring consistent performance in large-scale production. Failure to address this issue could lead to variations in image quality, color fidelity, or other visual artifacts, compromising the overall user experience and effectiveness of the HUD.

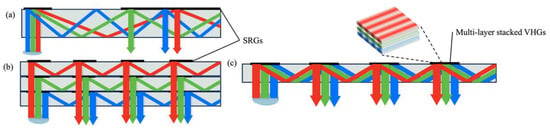

There is a dispersion problem in the realization of full-color display for diffractive waveguides because of the wavelength selectivity (Figure 7a). For SRGs, the most commonly used solution is to separately process beams of different bands by using multi-layer waveguides [62] (Figure 7b). However, a multi-layer waveguide will undoubtedly increase the volume and weight of a system. For VHGs, a method to avoid the issue caused by multiple waveguides is to stack multi-layer VHGs together (Figure 7c). An alternative solution is to use spatial multiplexing [63], since its most unique property is that several volume gratings are recorded in a single material. Moreover, multilayered plasmonic metasurfaces are adopted as achromatic diffractive grating couplers [64] with the features of an ultra-thin (<500 nm) form factor and high transparency (~90%). Three layers of such metasurface gratings are stacked together to couple a full-color image into a single waveguide.

Figure 7.

Issue of diffraction dispersion in a diffractive waveguide. (a) Schematics of diffractive dispersion. (b) Schematics of a multilayer SRG waveguide structure. (c) Schematics of a multilayer stacked VHG structure.
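The dispersion problem can be made concrete by asking which in-air incidence angles each color can use with one shared grating. The sketch below applies the first-order grating equation and keeps only angles whose diffracted ray lies between the TIR critical angle and an upper propagation limit. The wavelengths, the 450 nm period, the index of 1.52 (taken as constant across colors), and the 75° limit are all assumed values.

```python
import math

def guided_incidence_range_deg(wavelength_nm: float, period_nm: float,
                               n_waveguide: float = 1.52, max_internal_deg: float = 75.0):
    """In-air incidence range (low, high) whose first order is guided, or None if empty."""
    shift = wavelength_nm / period_nm
    low = 1.0 - shift                                                   # n*sin(critical angle) = 1 for air cladding
    high = n_waveguide * math.sin(math.radians(max_internal_deg)) - shift
    low, high = max(low, -1.0), min(high, 1.0)
    if low > high:
        return None
    return math.degrees(math.asin(low)), math.degrees(math.asin(high))

period_nm = 450.0  # assumed shared grating period
wavelengths_nm = {"blue": 460.0, "green": 532.0, "red": 635.0}  # assumed RGB wavelengths

# All three ranges are non-empty for these assumed values.
ranges = {name: guided_incidence_range_deg(wl, period_nm) for name, wl in wavelengths_nm.items()}
for name, (low, high) in ranges.items():
    print(f"{name:5s}: {low:6.1f} to {high:5.1f} deg")

common_low = max(low for low, _ in ranges.values())
common_high = min(high for _, high in ranges.values())
print(f"shared by all colors: {common_low:.1f} to {common_high:.1f} deg")
```

Each color alone supports an incidence range of roughly 27°, but the ranges are shifted against each other so strongly that only a few degrees are shared, which illustrates why separate waveguides, stacked gratings, or multiplexed gratings are used for full-color operation.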

In conclusion, the advantages of geometric waveguides include a large field of view, high light efficiency, and low color dispersion [65], but there are still difficulties in their large-scale processing and manufacturing. When machining geometric optical waveguides, engineers cut glass at the specified angle, polish the cut surfaces, coat them with reflective film, and then glue several pieces of glass together. To ensure the final image quality, the parallelism tolerance between different PRMs in the waveguide structure is critical, leading to a relatively low yield.

Diffractive waveguides can be processed through nano-imprinting or two-beam interference. These two processing schemes are scalable, so the mass production of large-size diffractive waveguides is feasible. As a result, waveguide-based AR-HUDs are expected to be one of the most promising solutions for achieving a small volume and large FOV [66].

4.3. Multi-Image Distance

The availability of multiple virtual image depth planes, or of a 3D depth of field, is another important optical metric for HUDs. Intuitively, at least two virtual depth planes are required: a near-depth plane at 2–5 m to present driving status information, such as speed, fuel level, etc., and a virtual plane at 10 m or more to present navigation information. Separating virtual information in this way improves the effectiveness and smoothness of information presentation. Moreover, the natural scenery outside a vehicle has different depths. An ideal HUD should be able to provide virtual information that matches the depth and distance of natural objects. However, the current HUD provides only one virtual image plane [67,68,69], which limits the fusion of virtuality with reality [70]. Therefore, multiple virtual image planes, depth-variable virtual image planes, and real 3D virtual scenery have become the main research directions for next-generation heads-up displays.

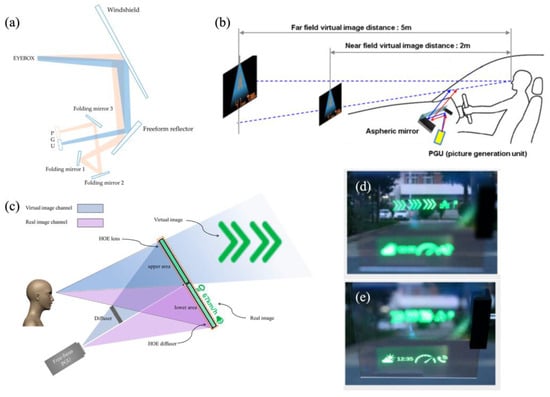

Two image planes can be achieved by employing two PGUs in a dual optical route separation design at the expense of an enlarged system volume [71]. Recently, an HUD with dual-layer display functionality that used a coupling architecture of near-field and far-field dual optical routes was proposed [72]. The near-field image plane was set to 2 m, and the far one was set to a distance of 8–24 m. The FOVs were 6° × 2° and 10° × 3°, respectively. As shown in Figure 8a, three folding mirrors were employed to fold the far-field optical path and connect it with the near-field optical path. The single-PGU approach for linking near-field and far-field dual optical routes can reduce the system volume, but it was achieved at the expense of reduced spatial resolution of the virtual image. In addition, the focus-free characteristic of a laser source can also be used to implement two images on different screens with a single PGU. A multi-depth heads-up display system that uses a single-laser-scanning PGU was proposed by Seo et al. [73]. The system can project an image to distances of 2 m and 5 m (Figure 8b). The projection distance and magnification of the virtual image are determined by the surface profile of the aspheric mirror and the throw distance.

The abovementioned multi-depth images are generated with different object distances in the optical path. Alternatively, diffractive optical elements can also provide multiple depths. For example, using a combination of a hololens and holographic grating, two virtual images can be realized near and far [74]. The combination of hololens and hololeveling devices provided a multi-plane AR-HUD with dual modes of real–virtual images (Figure 8c) [75]. As shown in Figure 8d,e, the distance of the virtual image is 5 m, while the field-of-view angles for the real and virtual images are 10° × 4° and 8° × 4°, respectively.

Figure 8.

Research progress for multiple-image-plane HUD systems based on a single PGU. (a) Schematic diagram of a multiplane HUD system based on LCD-TFT [72], copyright of Jiang. (b) Schematic diagram of a multiplane HUD system based on LBS [73], copyright of Seo. (c) Schematic diagram of a multiplane HUD system based on HOE. (d,e) Dual image planes on a road [75], copyright of Optica.

4.4. Variable Image Distance

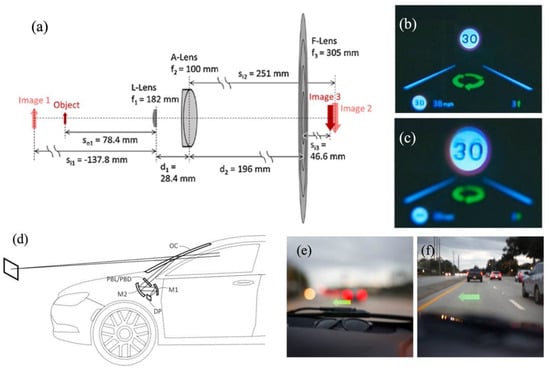

Aside from multi-depth HUD systems, the fusion of virtuality and reality can be improved by projecting images to variable depths [76,77]. In 2020, Kun et al. presented an HUD with a dynamic depth-variable viewing effect based on a liquid lens [78] (Figure 9a). The system comprises a liquid lens (L-Lens), an achromatic lens (A-Lens), and a Fresnel lens (F-Lens). The VID varies from 1.52 m to 1.95 m when the optical power of the L-Lens changes from −4.5 to 5.5 diopters (Figure 9b,c); however, the VIDs of both planes are below 2 m. In addition, there is currently limited research on the aberrations of the tunable lens, and improvements are needed to enhance the optical performance [79,80,81]. Furthermore, the tuning range is limited, and further investigation into focusing hysteresis is necessary [82]. The Pancharatnam–Berry optical element (PBOE) exhibits exceptional phase modulation capabilities for polarized light. Recently, Tao et al. proposed an HUD with Pancharatnam–Berry lenses (PBLs) to effectively switch the depth of virtual images [83]. As shown in Figure 9d, the PBL was set between the mirror and the combiner to alter the virtual image depth by adjusting the input polarization state to accommodate diverse driving scenarios.

Figure 9.

Research progress for variable-depth-image-plane HUD systems. (a) Optical architectures achieving the intended image depth and size variations where the L-Lens focal length is set to 182 mm. (b) Images captured from the integrated HUD system with the camera focused on the speed-limit sign as it moves from 1.95 to 1.52 m in the distance, which corresponds to the L-Lens powers of 5.5 and (c) −4.5 diopters, respectively [78], copyright of Elsevier. (d) Schematics of the optics in an off-axis reflective HUD. (e,f) Photograph captured through an HUD focusing at (left) short and (right) long virtual image distances enabled by passively driven Pancharatnam-Berry lenses (PBLs) [83], copyright of John Wiley and Sons.
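The focal length and optical power quoted for the liquid lens are two expressions of the same setting, since optical power in diopters is the reciprocal of the focal length in meters. The short sketch below only checks that relationship; the function names are hypothetical.

```python
def optical_power_diopters(focal_length_mm: float) -> float:
    """Optical power of a thin lens in diopters from its focal length in millimeters."""
    return 1000.0 / focal_length_mm

def focal_length_mm(power_diopters: float) -> float:
    """Focal length in millimeters for a given optical power in diopters."""
    return 1000.0 / power_diopters

print(f"182 mm   -> {optical_power_diopters(182.0):.2f} D")
print(f"5.5 D    -> {focal_length_mm(5.5):.0f} mm")
print(f"-4.5 D   -> {focal_length_mm(-4.5):.0f} mm")
```

A focal length of 182 mm corresponds to about 5.5 diopters, matching the upper end of the tuning range, while the negative end of the range corresponds to a diverging lens.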

By utilizing dynamically adjustable spatial light modulators (SLMs), the position of the projected images can be modified without any mechanical movement. Mu et al. integrated a phase-type LCOS to continuously adjust the depths of holographic images within the range of 3 m to 30 m [84]. The display image size, resolution, and field of view are constrained by the capabilities of state-of-the-art SLMs.

To sum up, compared to the traditional multi-optical off-axis reflection scheme, HUDs based on novel photonic devices (such as the PBOE and SLM) replace the system complexity with material and manufacturing intricacies of a single optical component. However, during the transition from cutting-edge research to industrial production, factors such as material stability and optical device manufacturing costs still necessitate meticulous consideration.

4.5. Three-Dimensional HUDs

Two-dimensional displays with multiple depths or a variable depth rely on algorithms that postprocess a few 2D images, for example through coordinate conversion, to achieve 3D visual depth, but they remain limited in reconstructing continuous depth. Therefore, 3D-HUDs, which combine heads-up displays with 3D displays, represent an inevitable trend in the development of heads-up displays. A 3D-HUD promptly updates the vehicle status and projects information reflecting the vehicle’s driving condition to different depths of field, helping drivers react quickly to dynamic information from both virtuality and reality.

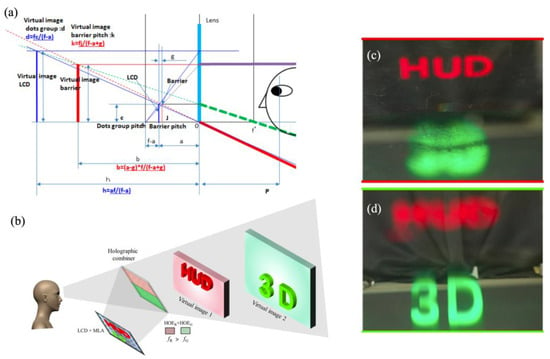

Matsumoto et al. proposed a 3D AR-HUD system based on a parallax barrier and eye tracking [85]. As depicted in Figure 10a, a minimum crosstalk of 2.08% and a 3D depth range of 1–20 m were achieved at the optimum viewing distance (OVD). To expand the FOV and provide horizontal parallax, Kalinina et al. presented a 3D-HUD that combines a pupil-replicating waveguide with two lens arrays [86]. An eye-tracking system, a PGU, and a spatial mask were synchronized to generate two eyeboxes for binocular vision through time multiplexing. The viewing angle was expanded from 12.5° × 7° to 20° × 7° by the telescopic architecture.
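To illustrate why a parallax-barrier display has an optimum viewing distance, the following sketch evaluates the textbook two-view barrier geometry in air: the gap between the pixel plane and the barrier, and the slit period, both follow from similar triangles. This is a simplified model that ignores the magnifying optics of the HUD and refraction in the gap, and all numerical values are hypothetical rather than taken from [85].

```python
def barrier_gap_mm(pixel_pitch_mm: float, eye_separation_mm: float,
                   viewing_distance_mm: float) -> float:
    """Gap between pixel plane and barrier so adjacent pixels reach different eyes.

    Similar triangles through one slit:
    pixel_pitch / gap = eye_separation / viewing_distance,
    with the viewing distance measured from the barrier.
    """
    return pixel_pitch_mm * viewing_distance_mm / eye_separation_mm


def barrier_pitch_mm(pixel_pitch_mm: float, eye_separation_mm: float) -> float:
    """Slit period, slightly under two pixel pitches so views converge at the OVD."""
    return (2.0 * pixel_pitch_mm * eye_separation_mm
            / (eye_separation_mm + pixel_pitch_mm))


if __name__ == "__main__":
    pitch, eyes, distance = 0.1, 65.0, 650.0  # hypothetical values in mm
    print("gap (mm):", barrier_gap_mm(pitch, eyes, distance))
    print("barrier pitch (mm):", barrier_pitch_mm(pitch, eyes))
```

Because the gap is fixed once the panel is built, the views separate cleanly only near the design distance, which is one motivation for combining the barrier with eye tracking.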

Figure 10.

Research progress for 3D HUD systems. (a) Schematics of a 3D AR-HUD system based on a parallax barrier and eye tracking [85], copyright of John Wiley and Sons. (b) Schematics of a 3D AR-HUD system integrating a microlens-array-based 3D display module with a holographic combiner. (c,d) Results when the camera is focused on the depth plane corresponding to red and green [87], copyright of Optica.

HOEs are crucial photonic devices in augmented reality displays and transparent displays, effectively manipulating light fields as lenses, gratings, or diffusers with high spectral and angular selectivity. At the same time, HOE-based combiners are some of the most promising photonic devices for achieving a full-windscreen HUD. Most recently, Lv et al. proposed a 3D heads-up display that integrates a microlens-array-based 3D display module with a holographic combiner [87] (Figure 10b). By selectively recording varying optical powers at different locations of the HOE combiner, the holographic combiner achieves image amplification and off-axis diffraction. As shown in Figure 10c,d, a depth range from 250 mm to 850 mm, corresponding to green and red, was achieved in their experiment.

In general, the aforementioned 3D-HUD schemes integrate existing 3D display technology (such as 3D displays based on parallax barriers, lenticular lens arrays, spatial–temporal multiplexing, vector light fields, etc. [88,89,90,91,92,93,94,95]) with an eye-tracking algorithm to generate separate eyeboxes for both eyes in order to minimize crosstalk. However, whether the vergence–accommodation conflict causes discomfort for drivers at high vehicle speeds is still under investigation.
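The size of the vergence–accommodation conflict is commonly expressed in diopters as the difference between the reciprocal of the distance at which the eyes focus (the virtual image plane) and the reciprocal of the distance at which they converge (the rendered 3D depth). The sketch below evaluates this for the 1–20 m depth range mentioned above; the 2.5 m image-plane distance is a hypothetical value, and no comfort threshold is assumed.

```python
def vac_mismatch_diopters(image_plane_m: float, content_depth_m: float) -> float:
    """Absolute vergence-accommodation mismatch in diopters.

    image_plane_m: distance at which the eye must focus (virtual image plane).
    content_depth_m: distance at which the two eyes converge (stereo depth).
    """
    if image_plane_m <= 0 or content_depth_m <= 0:
        raise ValueError("distances must be positive")
    return abs(1.0 / image_plane_m - 1.0 / content_depth_m)


if __name__ == "__main__":
    image_plane = 2.5  # hypothetical focal-plane distance in metres
    for depth in (1.0, 2.5, 5.0, 20.0):
        mismatch = vac_mismatch_diopters(image_plane, depth)
        print(f"content at {depth:>4} m -> mismatch {mismatch:.2f} D")
```

Because the mismatch is measured in reciprocal meters, it grows much faster for content rendered close to the driver than for content rendered far beyond the image plane.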

A holographic 3D display [96,97] reconstructs a light field by dynamically loading holograms onto an SLM, so a holographic projection scheme can be integrated into an HUD [98,99]. In the field of computer-generated holography (CGH) algorithms, CNN-assisted CGH has made tremendous progress during the last few years [100,101]. However, limitations exist from the perspective of hardware implementation. For example, speckle noise caused by the interference and diffraction of light degrades the reconstruction quality. One solution involves utilizing multiple SLMs to separately modulate the phase and amplitude, although this approach increases the system complexity [102]. The spatial-multiplexing method [103,104,105] is widely used to achieve full-color holography and effectively utilize the space–bandwidth product (SBP) of the SLM. Alternatively, time-multiplexing techniques [106] can reconstruct full-color images using one SLM by exploiting the persistence of vision of the human eye. Nevertheless, holographic displays for 3D HUDs still face limitations, such as a small SBP, speckle noise, the need to eliminate zeroth-order light, and the difficulty of video-rate CGH computation.
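The hardware limits mentioned above can be made concrete with a few standard relations: the full diffraction angle of a pixelated SLM is 2·arcsin(λ/(2p)) for wavelength λ and pixel pitch p, the SBP of an SLM is commonly taken as its pixel count, and color-sequential time multiplexing multiplies the required SLM refresh rate by the number of color fields. The following sketch evaluates these relations; the wavelength, pixel pitches, resolution, and video rate are hypothetical values, not parameters of any system cited here.

```python
import math


def full_diffraction_angle_deg(wavelength_nm: float, pixel_pitch_um: float) -> float:
    """Full diffraction angle 2*asin(lambda / (2*p)) of a pixelated SLM, in degrees."""
    ratio = (wavelength_nm * 1e-9) / (2.0 * pixel_pitch_um * 1e-6)
    if ratio > 1.0:
        raise ValueError("pixel pitch is below half the wavelength")
    return math.degrees(2.0 * math.asin(ratio))


def space_bandwidth_product(pixels_x: int, pixels_y: int) -> int:
    """SBP approximated as the total number of addressable SLM pixels."""
    return pixels_x * pixels_y


def field_sequential_slm_rate_hz(video_rate_hz: float, n_colors: int = 3) -> float:
    """SLM refresh rate needed to show every color field once per video frame."""
    return video_rate_hz * n_colors


if __name__ == "__main__":
    for pitch_um in (8.0, 3.74):  # hypothetical pixel pitches
        angle = full_diffraction_angle_deg(532.0, pitch_um)
        print(f"pitch {pitch_um} um -> full diffraction angle {angle:.2f} deg")
    print("SBP:", space_bandwidth_product(3840, 2160))
    print("SLM rate for 60 Hz RGB:", field_sequential_slm_rate_hz(60.0), "Hz")
```

Even at a pitch of a few micrometers the diffraction angle stays within several degrees, which is why a small SBP translates into a trade-off between image size and viewing angle.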

5. Conclusions and Outlook

In this review, we began with the investigation of PGU modules and compared the disparities among various schemes. Building upon this foundation, we further introduced the performance of diverse single-plane AR-HUD systems while addressing critical challenges pertaining to distortion, dispersion, and poor image contrast ratios. Furthermore, we reviewed cutting-edge advancements in multi-plane AR-HUDs, AR-HUDs with variable VIDs, and 3D-HUDs. A summary of the optical performance of HUD systems is given in Table 3. Although extensive efforts have been made to improve the VID and FOV, there is still much room for improvement. We expect a full-windshield 3D-HUD, as the hardware entrance to the automotive metaverse, to break through current technical bottlenecks.

Table 3.

Critical parameters of AR-HUDs.

Aside from the VID, FOV, and system volume, which we extensively examined in this review, other critical requirements of HUDs include high brightness (greater than 10,000 nits) and a high contrast ratio. However, the reflectivity of a windshield is generally only about 25%. The most intuitive way to increase brightness is to increase the irradiance from the PGU, but this inevitably raises thermal management and energy consumption problems. Another possible solution is to change the spectral reflectance of the windshield with multi-layer coatings, although this places stringent requirements on coating uniformity. Complex structures based on dielectric resonant gratings can also control the reflectivity at specific wavelengths, but their angular tolerances are too small to be compatible with HUDs [108]. Nanoparticle-array-embedded combiners have been proposed based on bipolar localized surface plasmon resonance (LSPR) [109]; high reflectivity is obtained at specific wavelengths while maintaining clear transparency. The study also suggested that a correlated disordered arrangement attenuates diffraction effects, leaving the background landscape visually unchanged. Aside from brightness, a high ambient contrast ratio is desirable in AR systems for the readability of virtual content. Shin-Tson Wu’s group used an LC film with electrically controlled transmittance to regulate the ambient brightness and combined it with a DBEF to boost the display brightness [110].
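The brightness argument can be checked with simple arithmetic: if only about 25% of the light is reflected by the windshield, the PGU-side luminance must be roughly four times the target, before any other optical losses. The sketch below also evaluates a commonly used definition of the ambient contrast ratio for see-through displays, ACR = (L_on + T·L_amb)/(L_off + T·L_amb), where T is the transmittance in front of the background. The ambient luminance and transmittance values are hypothetical, and losses other than the windshield reflection are ignored.

```python
def required_pgu_luminance_nits(target_nits: float,
                                windshield_reflectivity: float) -> float:
    """Luminance needed before the windshield to reach the target after reflection."""
    if not 0.0 < windshield_reflectivity <= 1.0:
        raise ValueError("reflectivity must be in (0, 1]")
    return target_nits / windshield_reflectivity


def ambient_contrast_ratio(on_nits: float, off_nits: float,
                           ambient_nits: float, transmittance: float) -> float:
    """ACR = (L_on + T * L_amb) / (L_off + T * L_amb) for a see-through display."""
    background = transmittance * ambient_nits
    if off_nits + background == 0.0:
        raise ValueError("ACR is undefined for a perfectly dark off state and background")
    return (on_nits + background) / (off_nits + background)


if __name__ == "__main__":
    # 10,000 nits and 25% reflectivity are the figures quoted in the text.
    print(required_pgu_luminance_nits(10_000.0, 0.25))
    # Hypothetical bright background of 5000 nits seen through two transmittances.
    for t in (0.8, 0.4):
        print(t, ambient_contrast_ratio(10_000.0, 0.0, 5_000.0, t))
```

Lowering the transmittance in front of the background raises the ACR without increasing the display luminance, which is the motivation for a tunable-transmittance film.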

A comprehensive evaluation of AR-HUDs must encompass not only the optical architecture but also real-time 3D imaging and the information processing algorithms. In practice, the utilization of LiDAR sensors has become imperative for AR-HUDs to accurately perceive real-time road conditions while driving. It is worth mentioning that inappropriate or overly complex virtual images can divert a driver’s attention. Therefore, more research should be conducted to rationalize the displayed information, make interfaces clearer and more concise, and develop more user-friendly and intuitive human–machine interfaces in order to reduce attention load. Furthermore, close attention should be given to user-centric personalized experiences and robust data privacy protection measures.

Funding

This research was funded by the National Key Research and Development Program of China (2021YFB2802200), the National Natural Science Foundation of China (NSFC) (62375194 and 62075145), the Jiangsu Provincial Key Research and Development Program (BE2021010), the Leading Technology of Jiangsu Basic Research Plan (BK20192003), and the project of the Priority Academic Program Development (PAPD) of Jiangsu Higher Education Institutions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Available online: https://www.who.int/data/gho/data/themes/mortality-and-global-health-estimates/global-health-estimates-leading-causes-of-dalys (accessed on 1 January 2020).

- Available online: https://www.who.int/news-room/fact-sheets/detail/road-traffic-injuries (accessed on 1 January 2019).

- Kuhnert, F.; Sturmer, C.; Koster, A. Five Trends Transforming the Automotive Industry; PricewaterhouseCoopers GmbH: Berlin, Germany, 2018.

- Wang, Z.; Wu, Y.; Niu, Q. Multi-Sensor Fusion in Automated Driving: A Survey. IEEE Access 2020, 8, 2847–2868.

- Klauer, S.G.; Dingus, T.A.; Le, T.V. The Impact of Driver Inattention on Near-Crash/Crash Risk: An Analysis Using the 100-Car Naturalistic Driving Study Data; U.S. Department of Transportation: Washington, DC, USA, 2006.

- Lee, V.K.; Champagne, C.R.; Francescutti, L.H. Fatal distraction: Cell phone use while driving. Can. Fam. Physician 2013, 59, 723–725.

- Shen, C.Y.; Cheng, Y.; Huang, S.H.; Huang, Y.P. Presented at SID Symposium Digest of Technical Papers. In Book 3: Display Systems Posters: AR/VR/MR; SID: San Jose, CA, USA, 2019.

- Crawford, J.; Neal, A. A review of the perceptual and cognitive issues associated with the use of head-up displays in commercial aviation. Int. J. Aviat. Psychol. 2006, 16, 1–19.

- Bast, H.; Delling, D.; Goldberg, A. Route planning in transportation networks. In Algorithm Engineering: Selected Results and Surveys; Springer: Berlin/Heidelberg, Germany, 2016; pp. 19–80.

- Skrypchuk, L.; Mouzakitis, A.; Langdon, P.M.; Clarkson, P.J. The effect of age and gender on task performance in the automobile. In Breaking Down Barriers: Usability, Accessibility and Inclusive Design; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 17–27.

- Štrumbelj, E.; Kononenko, I. Explaining Prediction Models and Individual Predictions with Feature Contributions. Knowl. Inf. Syst. 2014, 41, 647–665.

- LaValle, S.M. Planning Algorithms; Cambridge University Press: Cambridge, UK, 2006.

- Skirnewskaja, J.; Wilkinson, T.D. Automotive Holographic Head-Up Displays. Adv. Mater. 2022, 34, 2110463.

- Cai, J.H. Research on Diffraction Head-Up Display Technology; Nanjing University of Technology: Nanjing, China, 2013.

- Chen, X.W.; Cao, Y.; Xue, J.L. Optimal design of optical module of the head-up display system with two free-form surfaces. Prog. Laser Optoelectron. 2022, 59, 1722004.

- Blankenbach, K. Requirements and System Aspects of AR-Head-Up Displays. IEEE Consum. Electron. Mag. 2019, 8, 62–67.

- Ou, G.H. Design and Research of Optical System for Vehicle Augmented Reality Head-Up Display; University of Chinese Academy of Sciences: Beijing, China, 2019.

- Draper, C.T.; Bigler, C.M.; Mann, M.S.; Sarma, K.; Blanche, P.-A. Holographic Waveguide Head-up Display with 2-D Pupil Expansion and Longitudinal Image Magnification. Appl. Opt. 2019, 58, A251.

- Cao, Y. Optimal Design of Optical Module of Vehicular Head-Up Display System; Xi’an University of Technology: Xi’an, China, 2022.

- Singh, I.; Kumar, A.; Singh, H.S.; Nijhawan, O.P. Optical Design and Performance Evaluation of a Dual-Beam Combiner Head-up Display. Opt. Eng. 1996, 35, 813–818.

- Park, H.S.; Park, M.W.; Won, K.H.; Kim, K.; Jung, S.K. In-Vehicle AR-HUD System to Provide Driving-Safety Information. ETRI J. 2013, 35, 1038–1047.

- Qin, Z.; Lin, F.-C.; Huang, Y.-P.; Shieh, H.-P.D. Maximal Acceptable Ghost Images for Designing a Legible Windshield-Type Vehicle Head-Up Display. IEEE Photonics J. 2017, 9, 1–12.

- Available online: https://www.adayome.com/product/57.html (accessed on 1 January 2020).

- Available online: https://www.adayome.com/product/58.html (accessed on 1 January 2020).

- Available online: https://www.adayome.com/product/59.html (accessed on 1 January 2020).

- Shim, G.W.; Hong, W.; Cha, J.; Park, J.H.; Lee, K.J.; Choi, S. TFT Channel Materials for Display Applications: From Amorphous Silicon to Transition Metal Dichalcogenides. Adv. Mater. 2020, 32, 1907166.

- Li, Y.; Mao, Q.; Yin, J.; Wang, Y.; Fu, J.; Huang, Y. Theoretical Prediction and Experimental Validation of the Digital Light Processing (DLP) Working Curve for Photocurable Materials. Addit. Manuf. 2021, 37, 101716.

- Zhang, Z.; You, Z.; Chu, D. Fundamentals of Phase-Only Liquid Crystal on Silicon (LCOS) Devices. Light Sci. Appl. 2014, 3, e213.

- Wakunami, K.; Hsieh, P.-Y.; Oi, R.; Senoh, T.; Sasaki, H.; Ichihashi, Y.; Okui, M.; Huang, Y.-P.; Yamamoto, K. Projection-Type See-through Holographic Three-Dimensional Display. Nat. Commun. 2016, 7, 12954.

- Stephen, M.J.; Straley, J.P. Physics of Liquid Crystals. Rev. Mod. Phys. 1974, 46, 617–704.

- Oh, J.-H.; Kwak, S.-Y.; Yang, S.-C.; Bae, B.-S. Highly Condensed Fluorinated Methacrylate Hybrid Material for Transparent Low-k Passivation Layer in LCD-TFT. ACS Appl. Mater. Interfaces 2010, 2, 913–918.

- Gabor, D. A New Microscopic Principle. Nature 1948, 161, 777–778.

- Anwar, A.R.; Sajjad, M.T.; Johar, M.A.; Hernández-Gutiérrez, C.A.; Usman, M.; Łepkowski, S.P. Recent Progress in Micro-LED-Based Display Technologies. Laser Photonics Rev. 2022, 16, 2100427.

- Li, L.; Tang, G.; Shi, Z.; Ding, H.; Liu, C.; Cheng, D.; Zhang, Q.; Yin, L.; Yao, Z.; Duan, L.; et al. Transfer-Printed, Tandem Microscale Light-Emitting Diodes for Full-Color Displays. Proc. Natl. Acad. Sci. USA 2021, 118, e2023436118.

- Mun, S.; Kang, C.; Min, J.; Choi, S.; Jeong, W.; Kim, G.; Lee, J.; Kim, K.; Ko, H.C.; Lee, D. Highly Efficient Full-Color Inorganic LEDs on a Single Wafer by Using Multiple Adhesive Bonding. Adv. Mater. Interfaces 2021, 8, 2100300.

- Hyun, B.-R.; Sher, C.-W.; Chang, Y.-W.; Lin, Y.; Liu, Z.; Kuo, H.-C. Dual Role of Quantum Dots as Color Conversion Layer and Suppression of Input Light for Full-Color Micro-LED Displays. J. Phys. Chem. Lett. 2021, 12, 6946–6954.

- Luo, J.-W.; Wang, Y.-S.; Hu, T.-C.; Tsai, S.-Y.; Tsai, Y.-T.; Wang, H.-C.; Chen, F.-H.; Lee, Y.-C.; Tsai, T.-L.; Chung, R.-J.; et al. Microfluidic Synthesis of CsPbBr3/Cs4PbBr6 Nanocrystals for Inkjet Printing of Mini-LEDs. Chem. Eng. J. 2021, 426, 130849.

- Triana, M.A.; Hsiang, E.-L.; Zhang, C.; Dong, Y.; Wu, S.-T. Luminescent Nanomaterials for Energy-Efficient Display and Healthcare. ACS Energy Lett. 2022, 7, 1001–1020.

- Wu, Y.; Ma, J.; Su, P.; Zhang, L.; Xia, B. Full-Color Realization of Micro-LED Displays. Nanomaterials 2020, 10, 2482.

- Wei, S.; Fan, Z.; Zhu, Z.; Ma, D. Design of a Head-up Display Based on Freeform Reflective Systems for Automotive Applications. Appl. Opt. 2019, 58, 1675–1681.

- Shih, C.-Y.; Tseng, C.-C. Dual-Eyebox Head-up Display. In Proceedings of the 2018 3rd IEEE International Conference on Intelligent Transportation Engineering (ICITE), Singapore, 3–5 September 2018; IEEE: Singapore, 2018; pp. 105–109.

- Zhou, M.; Cheng, D.; Wang, Q.; Chen, H.; Wang, Y. Design of an Off-Axis Four-Mirror System for Automotive Head-up Display. In Proceedings of the AOPC 2020: Display Technology; Photonic MEMS, THz MEMS, and Metamaterials, and AI in Optics and Photonics, Beijing, China, 5 November 2020; Wang, Q., Luo, H., Xie, H., Lee, C., Cao, L., Yang, B., Cheng, J., Xu, Z., Wang, Y., Wang, Y., et al., Eds.; SPIE: Beijing, China, 2020; p. 6.

- Chand, T.; Debnath, S.K.; Rayagond, S.K.; Karar, V. Design of Refractive Head-up Display System Using Rotational Symmetric Aspheric Optics. Optik 2017, 131, 515–519.

- Born, M.; Wolf, E. Principles of Optics; Pergamon Press INC: New York, NY, USA, 1959.

- Gao, L. Introduction of Head Up Display (HUD). China Terminol. 2014, 16, 19–21.

- Huang, X.Z. Research on Optical Module Technology of Vehicle Head-Up Display System; Institute of Optoelectronic Technology, Chinese Academy of Sciences: Chengdu, China, 2019.

- Lee, J.-H.; Yanusik, I.; Choi, Y.; Kang, B.; Hwang, C.; Malinovskaya, E.; Park, J.; Nam, D.; Lee, C.; Kim, C.; et al. Optical Design of Automotive Augmented Reality 3D Head-up Display with Light-Field Rendering. In Proceedings of the Advances in Display Technologies XI; Lee, J.-H., Wang, Q.-H., Yoon, T.-H., Eds.; SPIE: Online Only, USA, 5 March 2021; p. 16.

- Okumura, H.; Hotta, A.; Sasaki, T.; Horiuchi, K.; Okada, N. Wide Field of View Optical Combiner for Augmented Reality Head-up Displays. In Proceedings of the 2018 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 12–14 January 2018; IEEE: Las Vegas, NV, USA, 2018; pp. 1–4.

- Homan, M. The Use of Optical Waveguides in Head up Display (HUD) Applications. In Proceedings of the Display Technologies and Applications for Defense, Security, and Avionics VII, Baltimore, MD, USA, 29 April–3 May 2013; Desjardins, D.D., Sarma, K.R., Eds.; SPIE: Bellingham, WA, USA, 2013; Volume 8736, p. 87360E.

- Amitai, Y. P-21: Extremely Compact High-Performance HMDs Based on Substrate-Guided Optical Element. SID Symp. Dig. Tech. Pap. 2004, 35, 310–313.

- Amitai, Y. P-27: A Two-Dimensional Aperture Expander for Ultra-Compact, High-Performance Head-Worn Displays. SID Symp. Dig. Tech. Pap. 2005, 36, 360.

- Gu, L.; Cheng, D.; Wang, Q.; Hou, Q.; Wang, Y. Design of a Two-Dimensional Stray-Light-Free Geometrical Waveguide Head-up Display. Appl. Opt. 2018, 57, 9246–9256.

- Gu, L.; Cheng, D.; Wang, Q.; Hou, Q.; Wang, S.; Yang, T.; Wang, Y. Design of a Uniform-Illumination Two-Dimensional Waveguide Head-up Display with Thin Plate Compensator. Opt. Express 2019, 27, 12692.

- Chen, C.-Y.; Yang, T.-T.; Sun, W.-S. Optics System Design Applying a Micro-Prism Array of a Single Lens Stereo Image Pair. Opt. Express 2008, 16, 15495–15505.

- Aoyagi, T.; Aoyagi, Y.; Namba, S. High-Efficiency Blazed Grating Couplers. Appl. Phys. Lett. 1976, 29, 303–304.

- Xu, J.; Yang, S.; Wu, L.; Xu, L.; Li, Y.; Liao, R.; Qu, M.; Quan, X.; Cheng, X. Design and Fabrication of a High-Performance Binary Blazed Grating Coupler for Perfectly Perpendicular Coupling. Opt. Express 2021, 29, 42999–43010.

- Xiong, J.; Yin, K.; Li, K.; Wu, S.-T. Holographic Optical Elements for Augmented Reality: Principles, Present Status, and Future Perspectives. Adv. Photonics Res. 2021, 2, 2000049.

- Draper, C.T.; Blanche, P.-A. Examining Aberrations Due to Depth of Field in Holographic Pupil Replication Waveguide Systems. Appl. Opt. 2021, 60, 1653–1659.

- Lee, Y.-H.; Yin, K.; Wu, S.-T. Reflective Polarization Volume Gratings for High Efficiency Waveguide-Coupling Augmented Reality Displays. Opt. Express 2017, 25, 27008–27014.

- Sakhno, O.; Gritsai, Y.; Sahm, H.; Stumpe, J. Fabrication and Performance of Efficient Thin Circular Polarization Gratings with Bragg Properties Using Bulk Photo-Alignment of a Liquid Crystalline Polymer. Appl. Phys. B 2018, 124, 52.

- Yaroshchuk, O.; Reznikov, Y. Photoalignment of Liquid Crystals: Basics and Current Trends. J. Mater. Chem. 2012, 22, 286–300.

- Mukawa, H.; Akutsu, K.; Matsumura, I.; Nakano, S.; Yoshida, T.; Kuwahara, M.; Aiki, K. A Full-color Eyewear Display Using Planar Waveguides with Reflection Volume Holograms. J. Soc. Inf. Disp. 2009, 17, 185–193.

- Guo, J.; Tu, Y.; Yang, L.; Wang, L.; Wang, B. Design of a Multiplexing Grating for Color Holographic Waveguide. Opt. Eng. 2015, 54, 125105.

- Ditcovski, R.; Avayu, O.; Ellenbogen, T. Full-Color Optical Combiner Based on Multilayered Metasurface Design. In Proceedings of the Advances in Display Technologies IX, San Francisco, CA, USA, 1 March 2019; Wang, Q.-H., Yoon, T.-H., Lee, J.-H., Eds.; SPIE: San Francisco, CA, USA, 1 March 2019; p. 27.

- Cheng, D.; Wang, Q.; Liu, Y.; Chen, H.; Ni, D.; Wang, X.; Yao, C.; Hou, Q.; Hou, W.; Luo, G.; et al. Design and Manufacture AR Head-Mounted Displays: A Review and Outlook. Light Adv. Manuf. 2021, 2, 336.

- Han, J.; Liu, J.; Yao, X.; Wang, Y. Portable Waveguide Display System with a Large Field of View by Integrating Freeform Elements and Volume Holograms. Opt. Express 2015, 23, 3534–3549.

- Gabbard, J.L.; Fitch, G.M.; Kim, H. Behind the Glass: Driver Challenges and Opportunities for AR Automotive Applications. Proc. IEEE 2014, 102, 124–136.

- Tufano, D.R. Automotive HUDs: The Overlooked Safety Issues. Human Factors 1997, 39, 303–311.

- Ng-Thow-Hing, V.; Bark, K.; Beckwith, L. User-centered perspectives for automotive augmented reality. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality, Adelaide, SA, Australia, 1–4 October 2013; pp. 13–22.

- Ward, N.J.; Parkes, A. Head-up Displays and Their Automotive Application: An Overview of Human Factors Issues Affecting Safety. Accid. Anal. Prev. 1994, 26, 703–717.

- Continental’s 2017 Augmented Reality-Head Up Display Prototype. Available online: https://continental-head-up-display.com (accessed on 1 January 2018).

- Jiang, Q.; Guo, Z. AR-HUD Optical System Design and Its Multiple Configurations Analysis. Photonics 2023, 10, 954.

- Seo, J.H.; Yoon, C.Y.; Oh, J.H.; Kang, S.B.; Yang, C.; Lee, M.R.; Han, Y.H. 59-4: A Study on Multi-depth Head-Up Display. SID Symp. Dig. Tech. Pap. 2017, 48, 883–885.

- Coni, P.; Bardon, J.-L.; Damamme, N.; Coe-Sullivan, S.; Dimov, F.I. 56-1: A Multiplane Holographic HUD Using Light Selectivity of Bragg Grating. SID Symp. Dig. Tech. Pap. 2019, 50, 775–778.

- Lv, Z.; Xu, Y.; Yang, Y.; Liu, J. Multiplane Holographic Augmented Reality Head-up Display with a Real–Virtual Dual Mode and Large Eyebox. Appl. Opt. 2022, 61, 9962–9971.

- Ukkusuri, S.; Gkritza, K.; Qian, X.; Sadri, A.M. Best Practices for Maximizing Driver Attention to Work Zone Warning Signs; Purdue University: West Lafayette, IN, USA, 2017.

- Zhan, T.; Yin, K.; Xiong, J.; He, Z.; Wu, S.-T. Augmented Reality and Virtual Reality Displays: Perspectives and Challenges. iScience 2020, 23, 101397.

- Li, K.; Geng, Y.; Yöntem, A.Ö.; Chu, D.; Meijering, V.; Dias, E.; Skrypchuk, L. Head-up Display with Dynamic Depth-Variable Viewing Effect. Optik 2020, 221, 165319.

- Werber, A.; Zappe, H. Tunable Microfluidic Microlenses. Appl. Opt. 2005, 44, 3238–3245.

- Dong, L.; Agarwal, A.K.; Beebe, D.J.; Jiang, H. Adaptive Liquid Microlenses Activated by Stimuli-Responsive Hydrogels. Nature 2006, 442, 551–554.

- Waibel, P.; Mader, D.; Liebetraut, P.; Zappe, H.; Seifert, A. Chromatic Aberration Control for Tunable All-Silicone Membrane Microlenses. Opt. Express 2011, 19, 18584–18592.

- Shian, S.; Diebold, R.M.; Clarke, D.R. High-Speed, Compact, Adaptive Lenses Using in-Line Transparent Dielectric Elastomer Actuator Membranes. In Proceedings of the Electroactive Polymer Actuators and Devices (EAPAD), San Diego, CA, USA, 11–14 March 2013; Bar-Cohen, Y., Ed.; SPIE: Bellingham, WA, USA, 2013; Volume 8687, p. 86872D.

- Zhan, T.; Lee, Y.; Xiong, J.; Tan, G.; Yin, K.; Yang, J.; Liu, S.; Wu, S. High-efficiency Switchable Optical Elements for Advanced Head-up Displays. J. Soc. Inf. Disp. 2019, 27, 223–231.

- Mu, C.-T.; Lin, W.-T.; Chen, C.-H. Zoomable Head-up Display with the Integration of Holographic and Geometrical Imaging. Opt. Express 2020, 28, 35716–35723.

- Matsumoto, T.; Kusafuka, K.; Hamagishi, G.; Takahashi, H. P-87: Glassless 3D Head Up Display Using Parallax Barrier with Eye Tracking Image Processing. SID Symp. Dig. Tech. Pap. 2018, 49, 1511–1514.

- Kalinina, A.; Yanusik, I.; Dubinin, G.; Morozov, A.; Lee, J.-H. Full-Color AR 3D Head-up Display with Extended Field of View Based on a Waveguide with Pupil Replication. In Proceedings of the Advances in Display Technologies XII, San Francisco, CA, USA, 4 March 2022; Lee, J.-H., Wang, Q.-H., Yoon, T.-H., Eds.; SPIE: San Francisco, CA, USA, 2022; p. 17.

- Lv, Z.; Li, J.; Yang, Y.; Liu, J. 3D Head-up Display with a Multiple Extended Depth of Field Based on Integral Imaging and Holographic Optical Elements. Opt. Express 2023, 31, 964.

- Zhou, F.; Zhou, F.; Chen, Y.; Hua, J.; Qiao, W.; Chen, L. Vector Light Field Display Based on an Intertwined Flat Lens with Large Depth of Focus. Optica 2022, 9, 288.

- Shi, J.; Hua, J.; Zhou, F.; Yang, M.; Qiao, W. Augmented Reality Vector Light Field Display with Large Viewing Distance Based on Pixelated Multilevel Blazed Gratings. Photonics 2021, 8, 337.

- Hua, J.; Hua, E.; Zhou, F.; Shi, J.; Wang, C.; Duan, H.; Hu, Y.; Qiao, W.; Chen, L. Foveated Glasses-Free 3D Display with Ultrawide Field of View via a Large-Scale 2D-Metagrating Complex. Light Sci. Appl. 2021, 10, 213.

- Hahn, J.; Kim, H.; Lim, Y.; Park, G.; Lee, B. Wide Viewing Angle Dynamic Holographic Stereogram with a Curved Array of Spatial Light Modulators. Opt. Express 2008, 16, 12372–12386.

- Ting, C.-H.; Chang, Y.-C.; Chen, C.-H.; Huang, Y.-P.; Tsai, H.-W. Multi-User 3D Film on a Time-Multiplexed Side-Emission Backlight System. Appl. Opt. 2016, 55, 7922–7928.

- Fattal, D.; Peng, Z.; Tran, T.; Vo, S.; Fiorentino, M.; Brug, J.; Beausoleil, R.G. A Multi-Directional Backlight for a Wide-Angle, Glasses-Free Three-Dimensional Display. Nature 2013, 495, 348–351.

- Li, J.; Tu, H.-Y.; Yeh, W.-C.; Gui, J.; Cheng, C.-J. Holographic Three-Dimensional Display and Hologram Calculation Based on Liquid Crystal on Silicon Device. Appl. Opt. 2014, 53, G222–G231.

- Fan, Z.; Weng, Y.; Chen, G.; Liao, H. 3D Interactive Surgical Visualization System Using Mobile Spatial Information Acquisition and Autostereoscopic Display. J. Biomed. Inform. 2017, 71, 154–164.

- Paturzo, M.; Memmolo, P.; Finizio, A.; Näsänen, R.; Naughton, T.J.; Ferraro, P. Synthesis and Display of Dynamic Holographic 3D Scenes with Real-World Objects. Opt. Express 2010, 18, 8806–8815.

- Takaki, Y. Super Multi-View and Holographic Displays Using MEMS Devices. Displays 2015, 37, 19–24.

- Xue, G.; Liu, J.; Li, X.; Jia, J.; Zhang, Z.; Hu, B.; Wang, Y. Multiplexing Encoding Method for Full-Color Dynamic 3D Holographic Display. Opt. Express 2014, 22, 18473–18482.

- Teich, M.; Schuster, T.; Leister, N.; Zozgornik, S.; Fugal, J.; Wagner, T.; Zschau, E.; Häussler, R.; Stolle, H. Real-Time, Large-Depth Holographic 3D Head-up Display: Selected Aspects. Appl. Opt. 2022, 61, B156–B163.

- Shi, L.; Li, B.; Kim, C.; Kellnhofer, P.; Matusik, W. Towards Real-Time Photorealistic 3D Holography with Deep Neural Networks. Nature 2021, 591, 234–239.

- Chang, C.; Zhu, D.; Li, J.; Wang, D.; Xia, J.; Zhang, X. Three-Dimensional Computer Holography Enabled from a Single 2D Image. Opt. Lett. 2022, 47, 2202–2205.

- Gao, Q.; Liu, J.; Han, J.; Li, X. Monocular 3D See-through Head-Mounted Display via Complex Amplitude Modulation. Opt. Express 2016, 24, 17372–17383.

- Shiraki, A.; Takada, N.; Niwa, M.; Ichihashi, Y.; Shimobaba, T.; Masuda, N.; Ito, T. Simplified Electroholographic Color Reconstruction System Using Graphics Processing Unit and Liquid Crystal Display Projector. Opt. Express 2009, 17, 16038–16045.

- Makowski, M.; Ducin, I.; Sypek, M.; Siemion, A.; Siemion, A.; Suszek, J.; Kolodziejczyk, A. Color Image Projection Based on Fourier Holograms. Opt. Lett. 2010, 35, 1227–1229.

- Makowski, M.; Ducin, I.; Kakarenko, K.; Suszek, J.; Sypek, M.; Kolodziejczyk, A. Simple Holographic Projection in Color. Opt. Express 2012, 20, 25130–25136.

- Oikawa, M.; Shimobaba, T.; Yoda, T.; Nakayama, H.; Shiraki, A.; Masuda, N.; Ito, T. Time-Division Color Electroholography Using One-Chip RGB LED and Synchronizing Controller. Opt. Express 2011, 19, 12008–12013.

- Bigler, C.M.; Mann, M.S.; Blanche, P.A. Holographic waveguide HUD with in-line pupil expansion and 2D FOV expansion. Appl. Opt. 2019, 58, G326–G331.

- Fehrembach, A.-L.; Popov, E.; Tayeb, G.; Maystre, D. Narrow-Band Filtering with Whispering Modes in Gratings Made of Fibers. Opt. Express 2007, 15, 15734–15740.

- Bertin, H.; Brûlé, Y.; Magno, G.; Lopez, T.; Gogol, P.; Pradere, L.; Gralak, B.; Barat, D.; Demésy, G.; Dagens, B. Correlated Disordered Plasmonic Nanostructures Arrays for Augmented Reality. ACS Photonics 2018, 5, 2661–2668.

- Zhu, R.; Chen, H.; Kosa, T.; Coutino, P.; Tan, G.; Wu, S.-T. High-Ambient-Contrast Augmented Reality with a Tunable Transmittance Liquid Crystal Film and a Functional Reflective Polarizer. J. Soc. Inf. Disp. 2016, 24, 229–233.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).