PreOBP_ML: Machine Learning Algorithms for Prediction of Optical Biosensor Parameters

Abstract

1. Introduction

- To investigate the attributes of photonic crystal fiber (PCF) sensors using several machine learning (ML) algorithms.

- To refine the models so as to improve prediction accuracy and minimize the error rate.

- To estimate sensor outputs faster than direct numerical simulation.

2. Parameter Estimation Methods

2.1. Machine Learning and Optimization

2.2. Least Squares Regression (LSR) Method

2.3. LASSO Method

2.4. Elastic-Net (ENet) Method

2.5. Bayesian Ridge Regression (BRR) Method
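
The four estimators named in Sections 2.2 to 2.5 can be set side by side in a few lines. The following is a minimal sketch, not the article's implementation: it assumes scikit-learn as the library, uses a synthetic linear dataset in place of the sensor data, and picks illustrative hyperparameters (`alpha`, `l1_ratio`) that are not taken from the article.

```python
import numpy as np
from sklearn.linear_model import BayesianRidge, ElasticNet, Lasso, LinearRegression

# Synthetic stand-in for the sensor dataset (assumption): 200 samples,
# 3 input features, linear target with a small amount of Gaussian noise.
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(200, 3))
true_coef = np.array([2.0, -1.0, 0.5])
y = X @ true_coef + 0.3 + rng.normal(0.0, 0.01, size=200)

# Hyperparameters are illustrative only (assumption).
models = {
    "Least Squares": LinearRegression(),                   # no penalty
    "LASSO": Lasso(alpha=1e-3),                            # L1 penalty
    "Elastic-Net": ElasticNet(alpha=1e-3, l1_ratio=0.5),   # mixed L1/L2 penalty
    "Bayesian Ridge": BayesianRidge(),                     # L2 penalty with priors on its strength
}

# Fit every model on the same data and compare the recovered coefficients.
fitted = {name: model.fit(X, y) for name, model in models.items()}
for name, model in fitted.items():
    print(f"{name:15s} coef={np.round(model.coef_, 3)} intercept={model.intercept_:.3f}")
```

On clean, well-conditioned data like this the four fits should land close to one another; the penalized variants differ mainly in how strongly they shrink the coefficients.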

3. Optical Sensor Numerical Models

3.1. Optical Sensor

3.2. Effective Refractive Index (ERI)

3.3. Optical Power Profiles (OPP)

3.4. Optical Power Fraction (OPF)

3.5. Optical Effective Area (OEA)

3.6. Optical Loss Profiles (OLP)

3.7. Optical Sensing Profile (OSP)

3.8. Limitations and Proposed Solutions

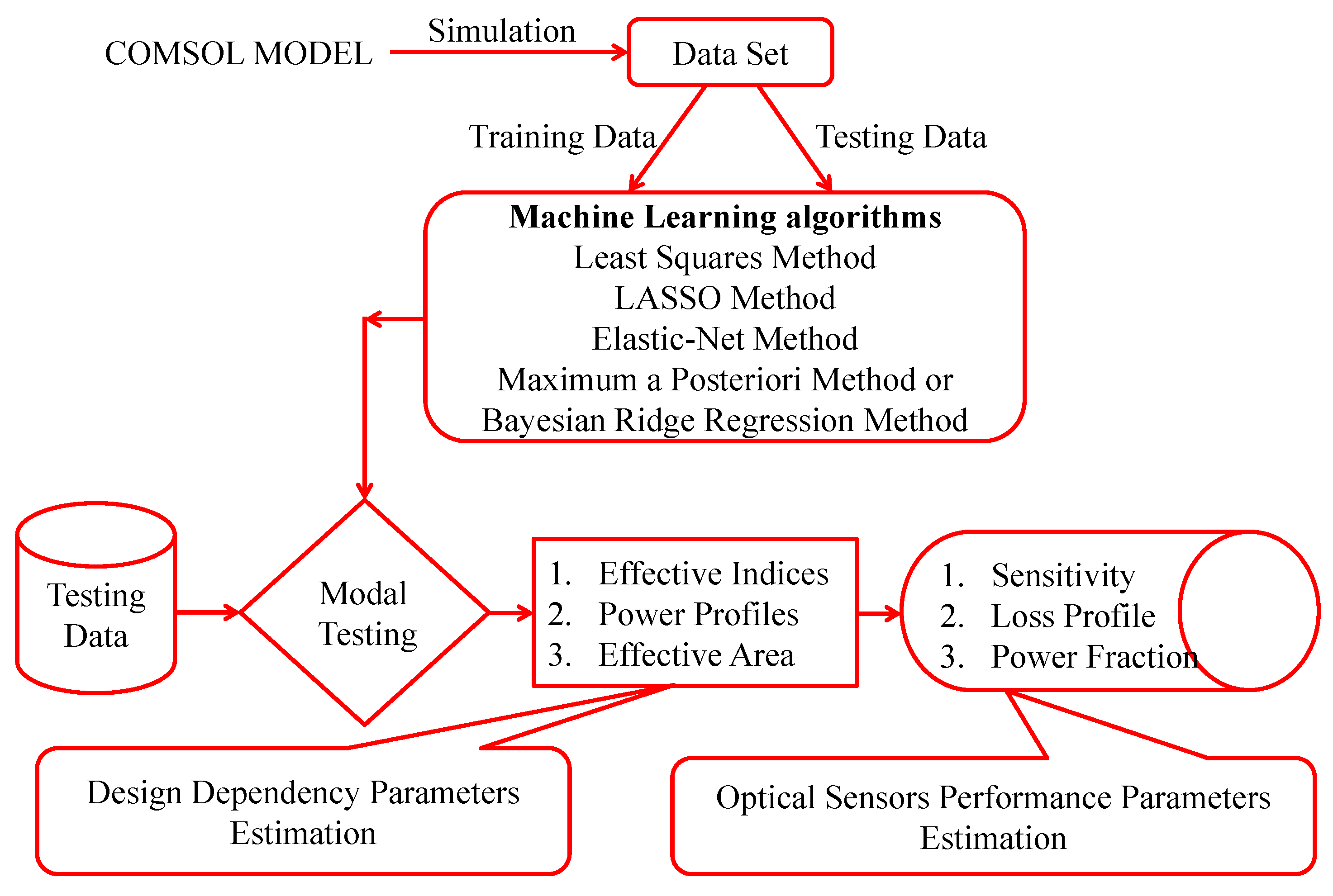

4. Methodology

4.1. Design and Dataset Collection

4.2. Dataset Distribution

4.3. Training, Testing and Evaluation
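
A train/test/evaluate loop of the kind this section names can be sketched as follows. This is an illustration under stated assumptions: the 80/20 split, the synthetic data, and the choice of scikit-learn are all hypothetical. MSE and MAE are the error measures named in the results tables; the R² score is added here as an assumption.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the simulated sensor data (assumption).
rng = np.random.default_rng(42)
X = rng.uniform(0.0, 1.0, size=(500, 2))
y = 1.5 * X[:, 0] - 0.7 * X[:, 1] + rng.normal(0.0, 0.02, size=500)

# Hold out 20% of the samples for testing (the ratio is an assumption).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Train on the training split only, then predict the held-out targets.
model = LinearRegression().fit(X_train, y_train)
y_pred = model.predict(X_test)

# Evaluate on data the model has not seen.
scores = {
    "R2": r2_score(y_test, y_pred),
    "MSE": mean_squared_error(y_test, y_pred),
    "MAE": mean_absolute_error(y_test, y_pred),
}
for metric, value in scores.items():
    print(f"{metric}: {value:.6f}")
```

Swapping `LinearRegression()` for any other regressor leaves the rest of the loop unchanged, which makes it straightforward to compare methods on identical splits.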

5. Result Analysis and Discussion

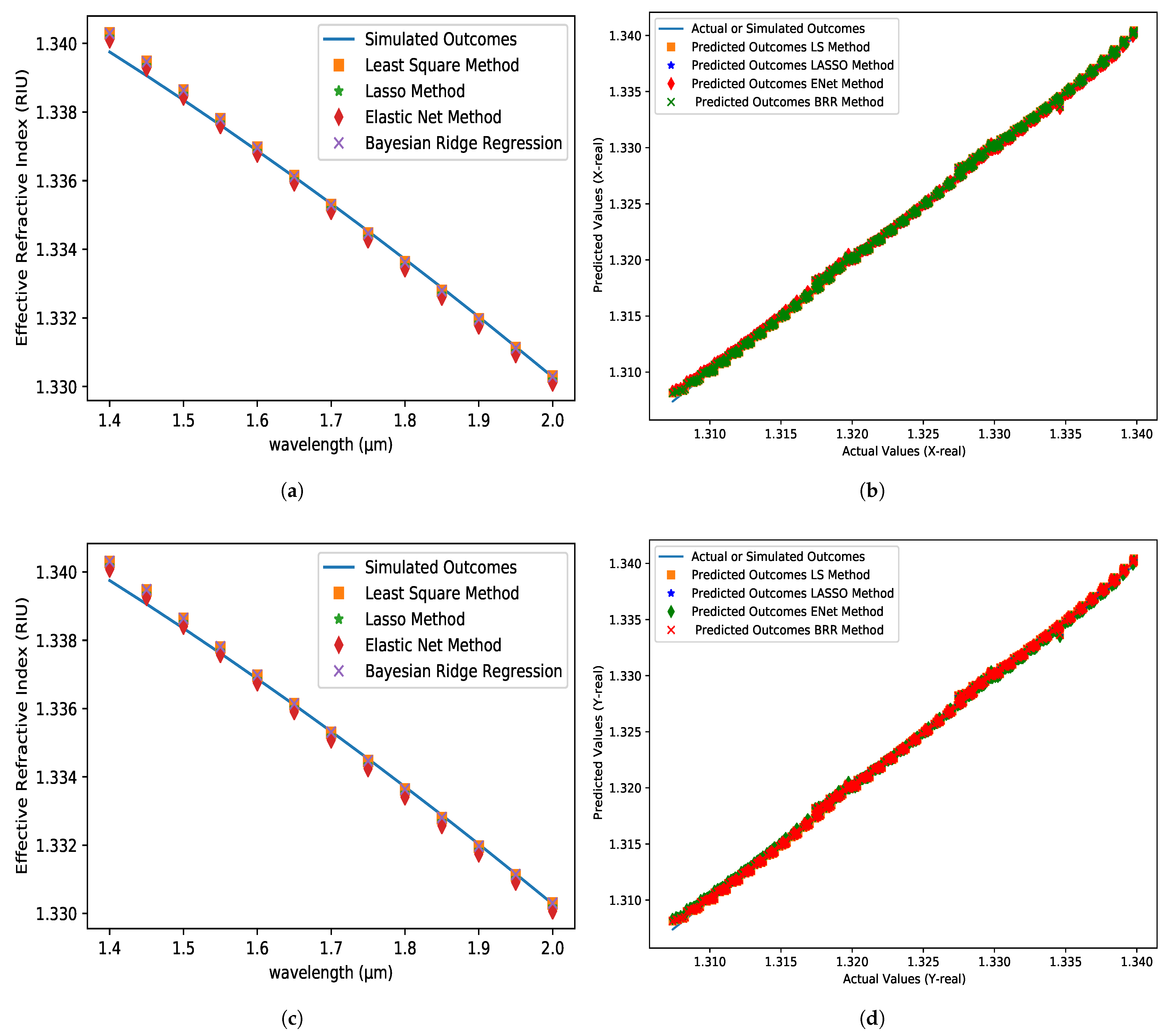

5.1. ERI for X-Axis and Y-Axis

5.2. Effective Mode Area (EMA)

5.3. Total Power and Core Power

5.4. Core Power Fraction (CPF)

5.5. Confinement Loss Profile (CLP)

5.6. Optical Sensitivity Profile (OSP)

5.7. OSP Evaluation for Different Dataset Volumes

5.8. OSP Evaluation for Different Outlier Volumes
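
One way to probe robustness to outliers is to corrupt a growing share of the training targets and score each refit on clean held-out data. The sketch below is a hypothetical setup, not the article's protocol: the outlier fractions, the outlier magnitude, the synthetic data, and the helper name `r2_with_outliers` are all assumptions.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score

rng = np.random.default_rng(7)

def make_data(n):
    """Synthetic linear data standing in for the sensor dataset (assumption)."""
    X = rng.uniform(0.0, 1.0, size=(n, 2))
    y = 1.5 * X[:, 0] - 0.7 * X[:, 1] + rng.normal(0.0, 0.02, size=n)
    return X, y

X_train, y_train = make_data(400)
X_test, y_test = make_data(100)  # the test set is kept clean

def r2_with_outliers(fraction):
    """Corrupt `fraction` of the training targets, refit, score on clean test data."""
    y_noisy = y_train.copy()
    n_out = int(round(fraction * len(y_noisy)))
    idx = rng.choice(len(y_noisy), size=n_out, replace=False)
    # Outliers are modelled as large additive Gaussian errors (assumption).
    y_noisy[idx] += rng.normal(0.0, 3.0, size=n_out)
    model = LinearRegression().fit(X_train, y_noisy)
    return r2_score(y_test, model.predict(X_test))

# Outlier fractions are illustrative, not the article's dataset definitions.
results = {frac: r2_with_outliers(frac) for frac in (0.0, 0.1, 0.2)}
for frac, r2 in results.items():
    print(f"outlier fraction {frac:.0%}: R2 = {r2:.4f}")
```

The same function can be reused with a penalized regressor in place of `LinearRegression()` to compare how each method degrades.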

5.9. Overall Performance Evaluation

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Applied Methods | X-Axis | | | Y-Axis | | |
|---|---|---|---|---|---|---|
| Least Squares | 0.9994 | 3.9729 | 0.0001 | 0.9994 | 3.9721 | 0.0001 |
| LASSO | 0.9993 | 4.5486 | 0.0001 | 0.9993 | 4.5581 | 0.0001 |
| Elastic-Net | 0.9991 | 6.1648 | 0.0001 | 0.9992 | 5.8462 | 0.0001 |
| B. Ridge Regression | 0.9994 | 4.0301 | 0.0001 | 0.9994 | 4.5095 | 0.0001 |

| Applied Methods | X-Axis | | | Y-Axis | | |
|---|---|---|---|---|---|---|
| Least Squares | 0.9436 | 5.7626 | 6.0969 | 0.9435 | 5.7626 | 6.1013 |
| LASSO | 0.9421 | 5.9223 | 6.1785 | 0.9420 | 5.9274 | 6.1804 |
| Elastic-Net | 0.9318 | 6.0952 | 6.2524 | 0.9318 | 6.0964 | 6.2516 |
| B. Ridge Regression | 0.9319 | 8.3652 | 7.0367 | 0.9319 | 8.3645 | 7.0382 |

| Applied Methods | | | |
|---|---|---|---|
| Least Squares Method | 0.9994 | 3.90 | 1.50 |
| LASSO Method | 0.9993 | 4.50 | 1.60 |
| Elastic-Net Method | 0.9990 | 6.20 | 1.90 |
| Bayesian Ridge Regression Method | 0.9994 | 4.00 | 1.90 |

| Applied Methods | Dataset | | | |
|---|---|---|---|---|
| Least Squares Method | Dataset-1 | 0.9994 | 3.97 | 1.51 |
| " | Dataset-2 | 0.9993 | 3.86 | 1.50 |
| " | Dataset-3 | 0.9995 | 3.66 | 1.56 |
| LASSO Method | Dataset-1 | 0.9993 | 4.55 | 1.58 |
| " | Dataset-2 | 0.9994 | 4.41 | 1.66 |
| " | Dataset-3 | 0.9994 | 4.63 | 1.61 |
| Elastic-Net Method | Dataset-1 | 0.9991 | 6.16 | 1.91 |
| " | Dataset-2 | 0.9991 | 6.30 | 2.01 |
| " | Dataset-3 | 0.9993 | 5.82 | 1.82 |
| Bayesian Ridge Regression Method | Dataset-1 | 0.9994 | 4.03 | 1.52 |
| " | Dataset-2 | 0.9994 | 3.95 | 1.56 |
| " | Dataset-3 | 0.9994 | 4.57 | 1.60 |

| Applied Methods | Dataset | | MSE | MAE |
|---|---|---|---|---|
| Least Squares Method | Dataset-3 | 0.9995 | | |
| " | Dataset-4 | 0.9897 | | |
| " | Dataset-5 | 0.9657 | | |
| LASSO Method | Dataset-3 | 0.9994 | | |
| " | Dataset-4 | 0.9893 | | |
| " | Dataset-5 | 0.9655 | | |
| Elastic-Net Method | Dataset-3 | 0.9993 | | |
| " | Dataset-4 | 0.9890 | | |
| " | Dataset-5 | 0.9393 | | |
| Bayesian Ridge Regression Method | Dataset-3 | 0.9994 | | |
| " | Dataset-4 | 0.9854 | | |
| " | Dataset-5 | 0.9395 | | |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ahmed, K.; Bui, F.M.; Wu, F.-X. PreOBP_ML: Machine Learning Algorithms for Prediction of Optical Biosensor Parameters. Micromachines 2023, 14, 1174. https://doi.org/10.3390/mi14061174