Hemisphere Tabulation Method: An Ingenious Approach for Pose Evaluation of Instruments Using the Electromagnetic-Based Stereo Imaging Method

Abstract

1. Introduction

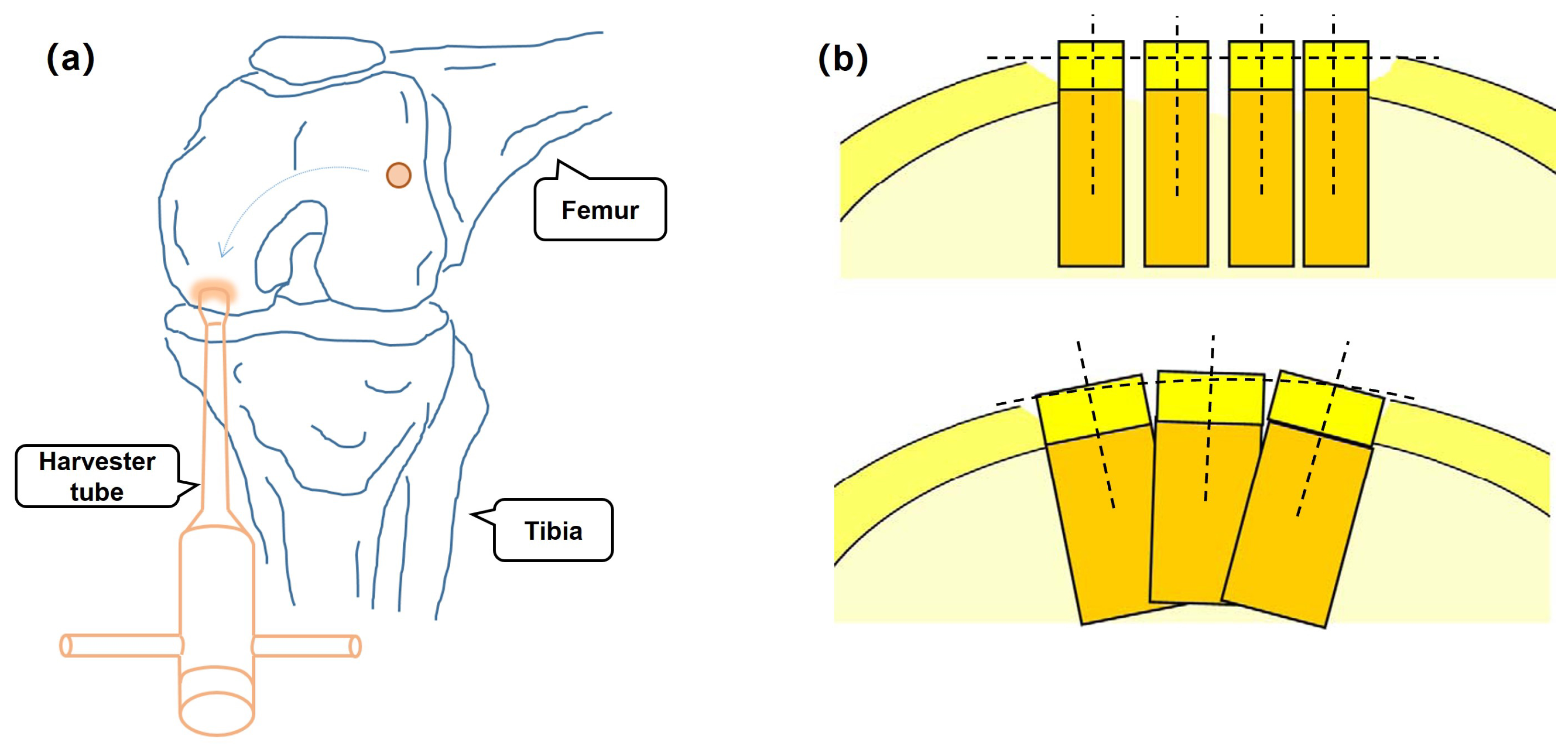

1.1. Background

1.2. Related Work

1.3. Motivation

- A novel hemisphere tabulation method (HTM) for pose evaluation is introduced; it differs from previous work and assesses pose more accurately.

- Experiments on a hemispheroid model are conducted with a shape measurement prototype to demonstrate the effectiveness of the proposed HTM.

- HTM provides an optics-based solution for analyzing instrument pose in OAT surgery and can serve as a baseline for accuracy comparison.

2. Methods

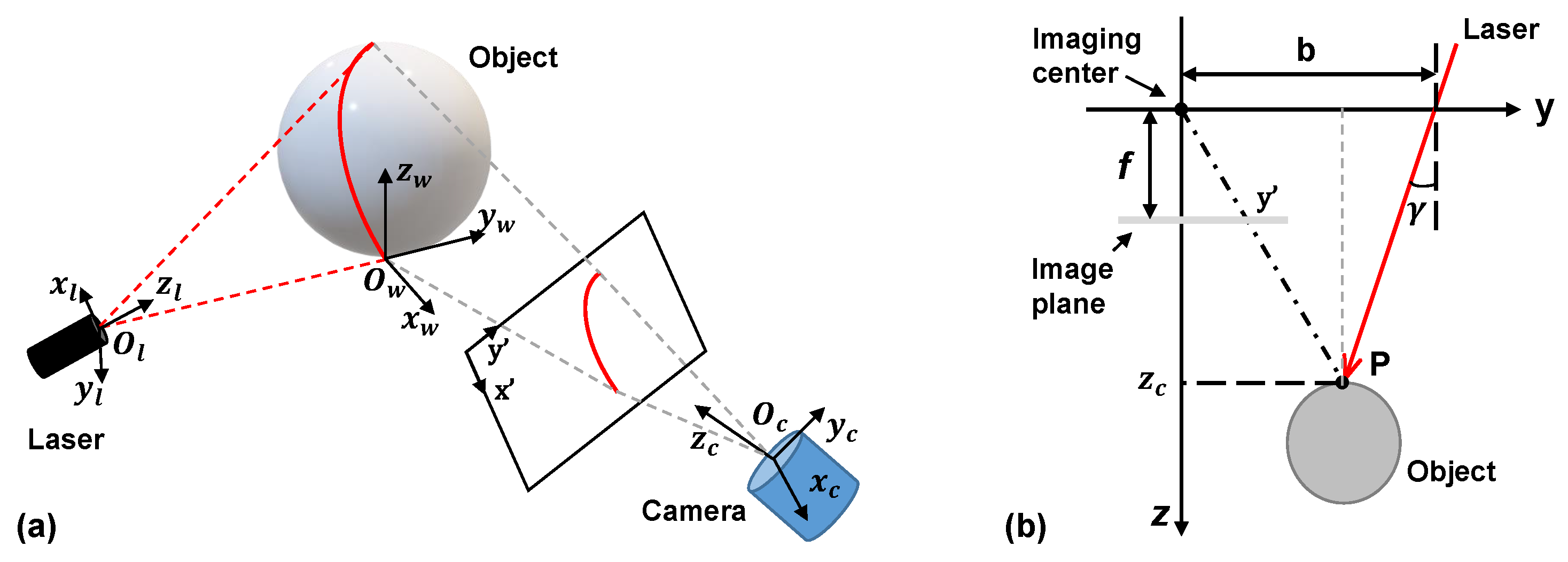

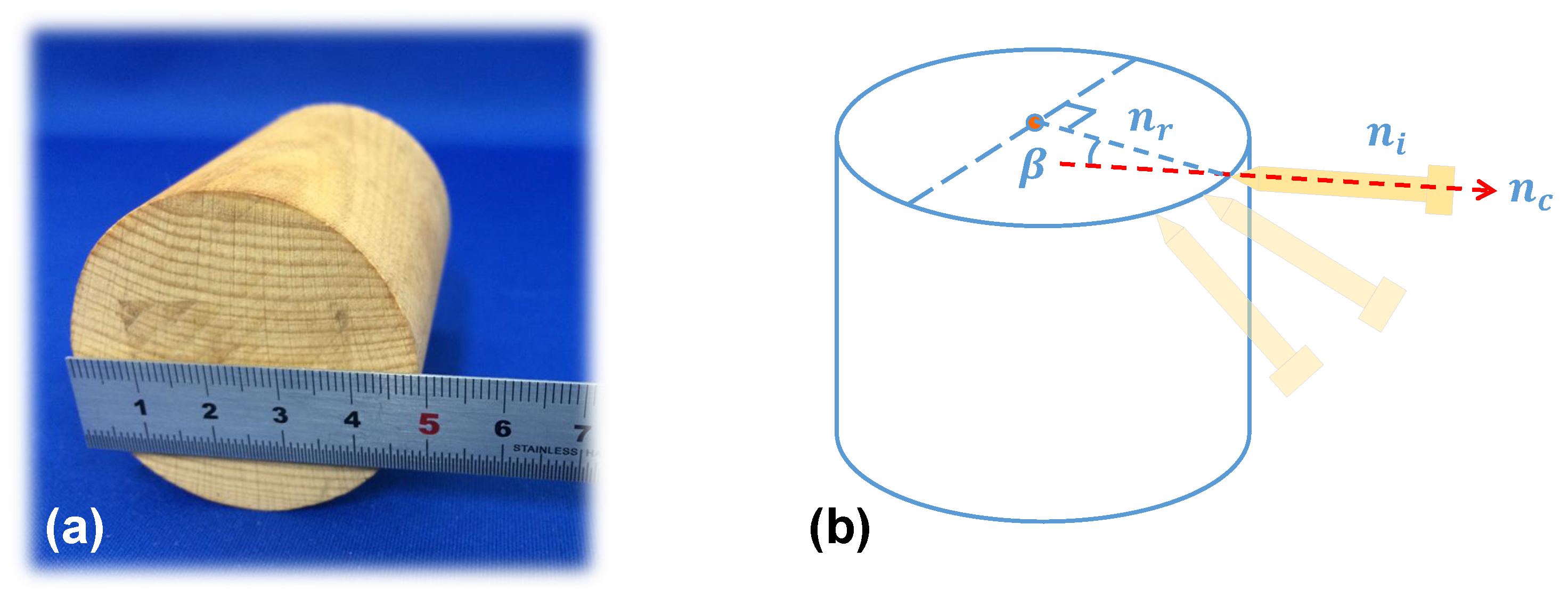

2.1. Principle of Imaging

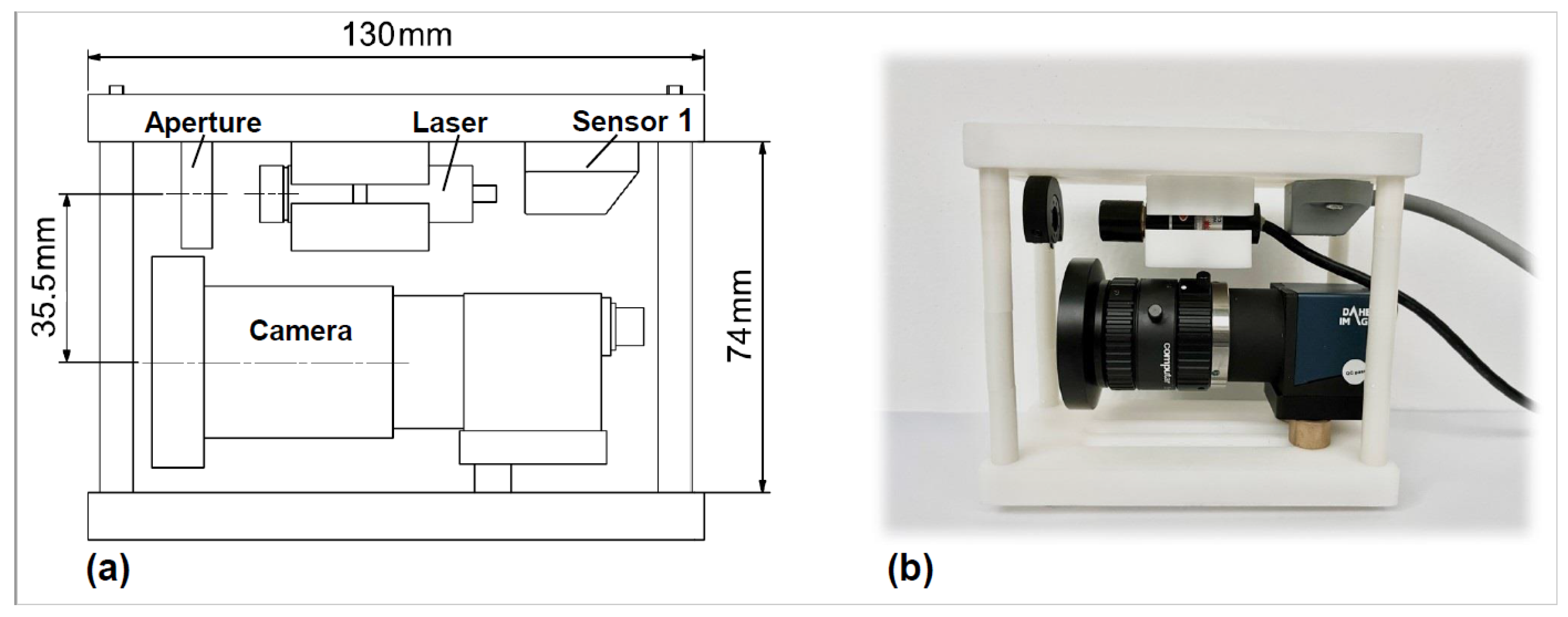

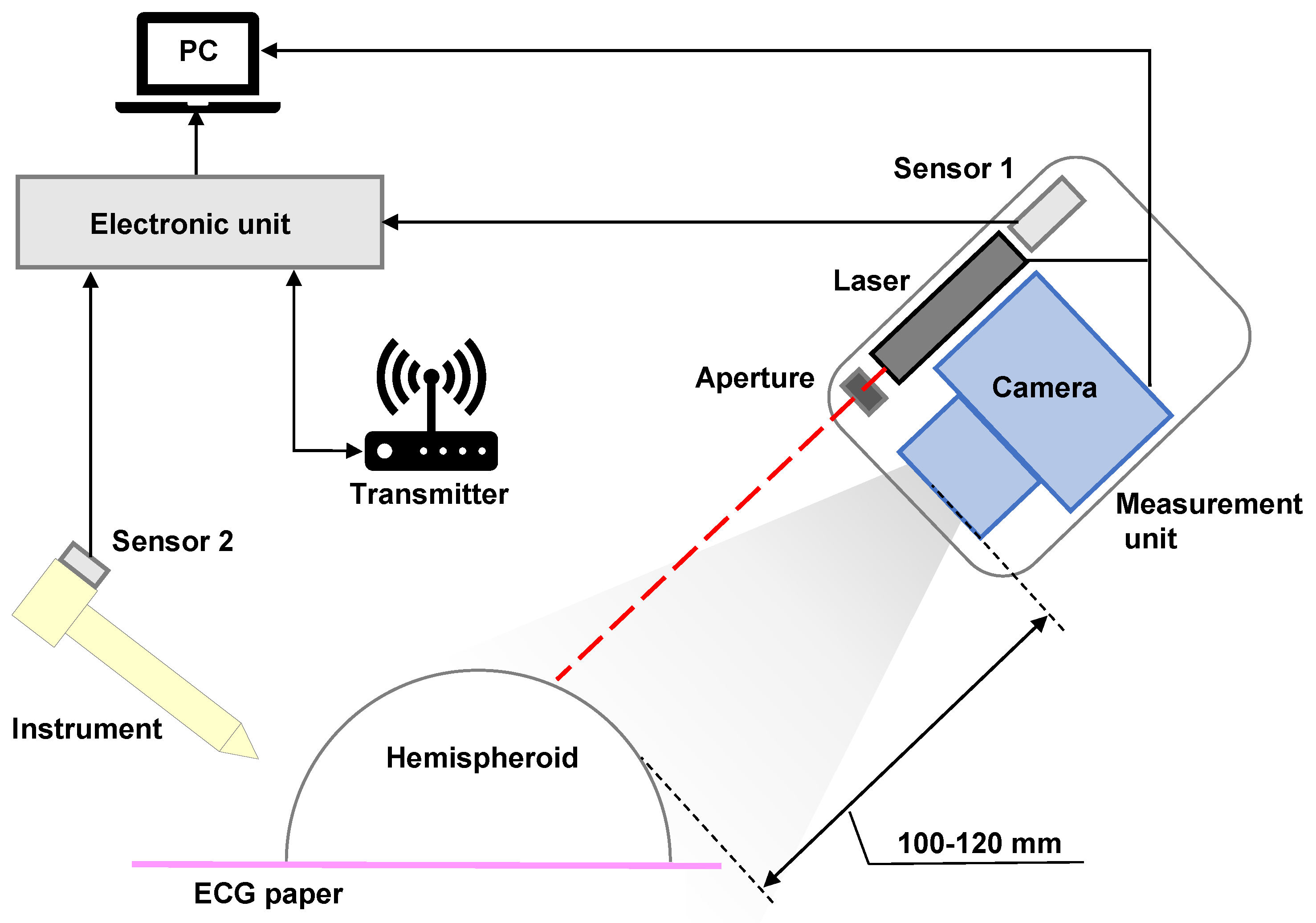

2.2. Development of a 3D Shape Measurement Unit Using the EBSIM

2.3. Previous Pose Evaluation

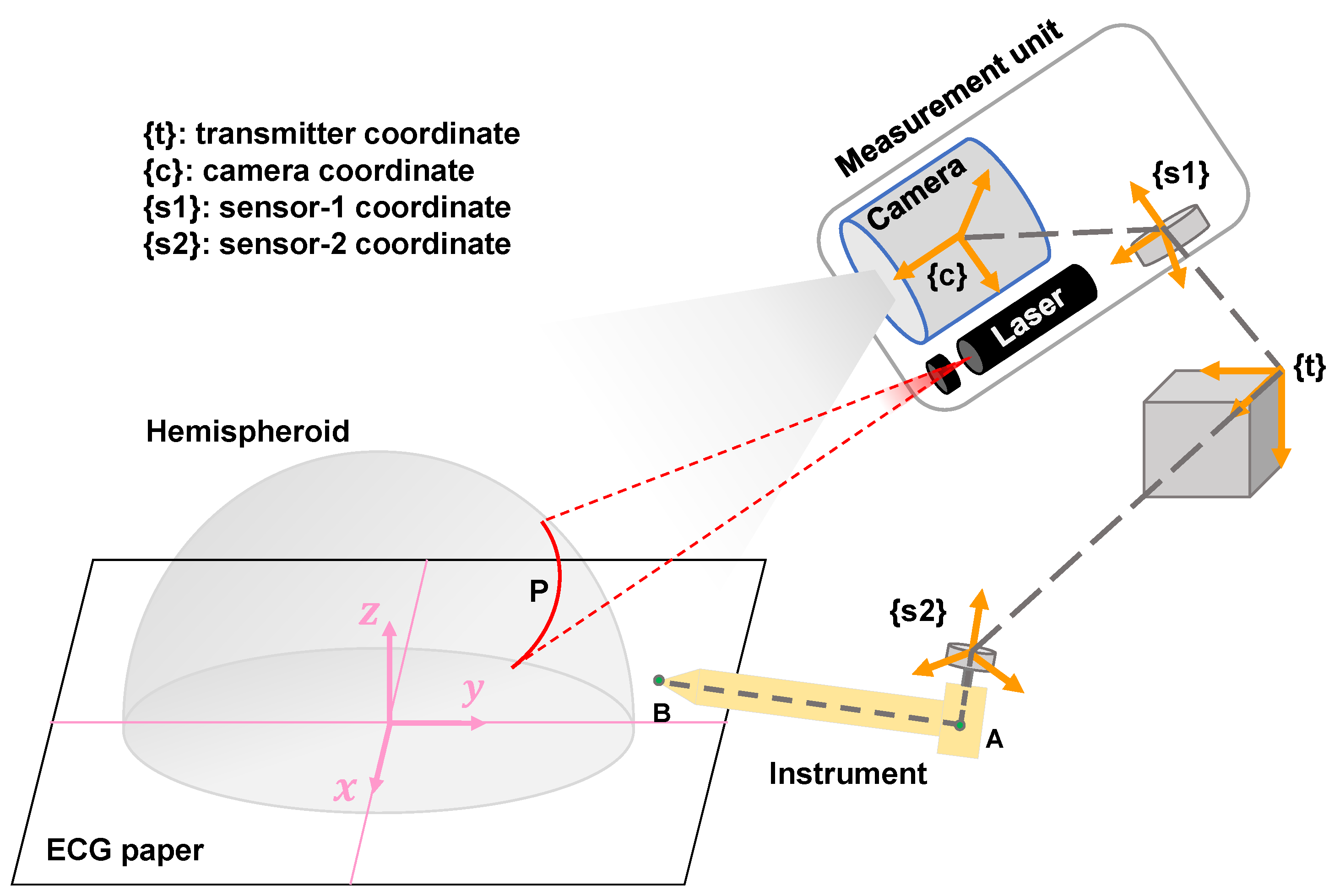

2.4. The Proposed Hemisphere Tabulation Method (HTM)

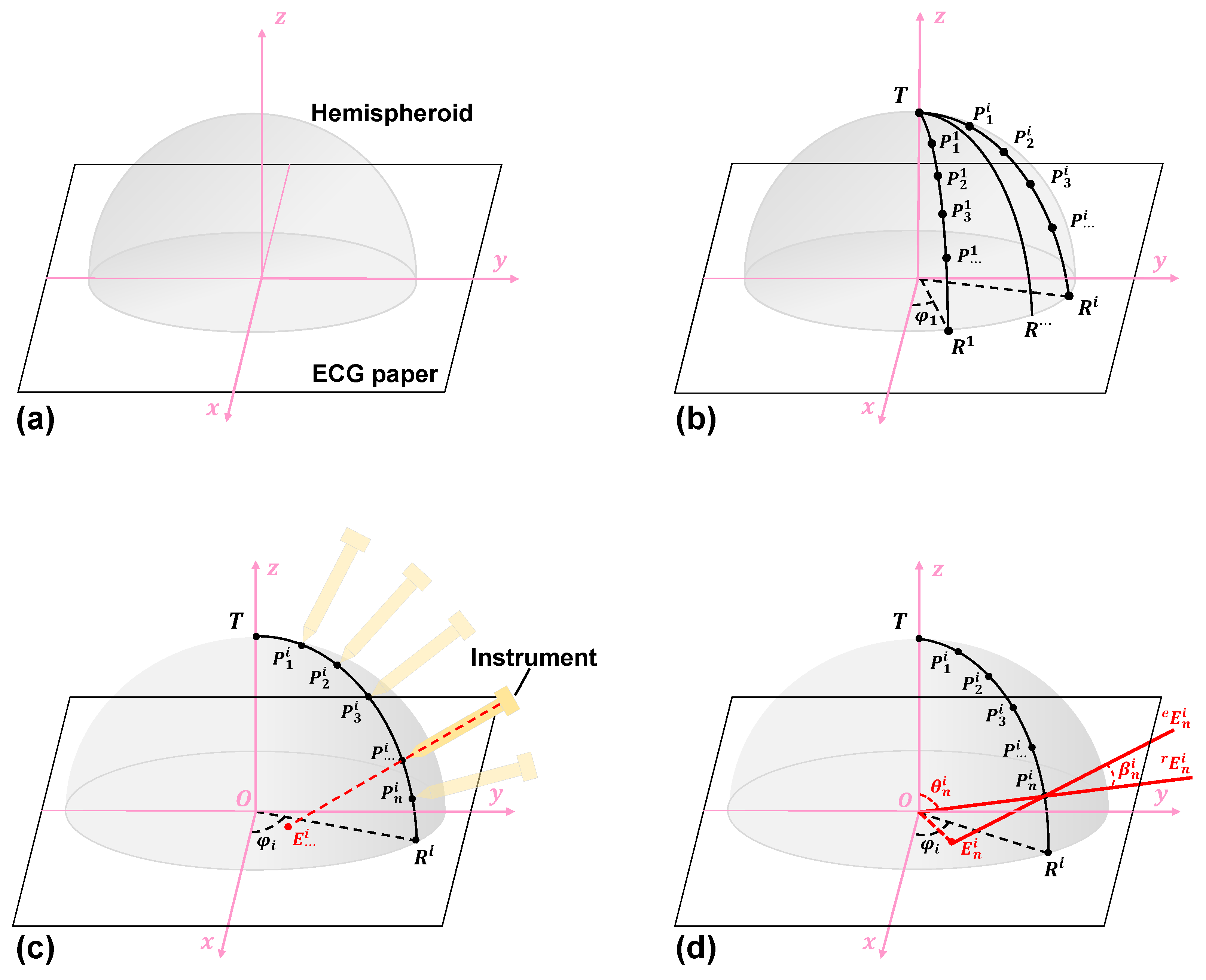

2.4.1. Step 1: Materials and Coordinate Definition

2.4.2. Step 2: Interest Point Marking

2.4.3. Step 3: 3D Shape Measurement

2.4.4. Step 4: Pose Measurement and Calculation

3. Experiments

3.1. Implementation Environment

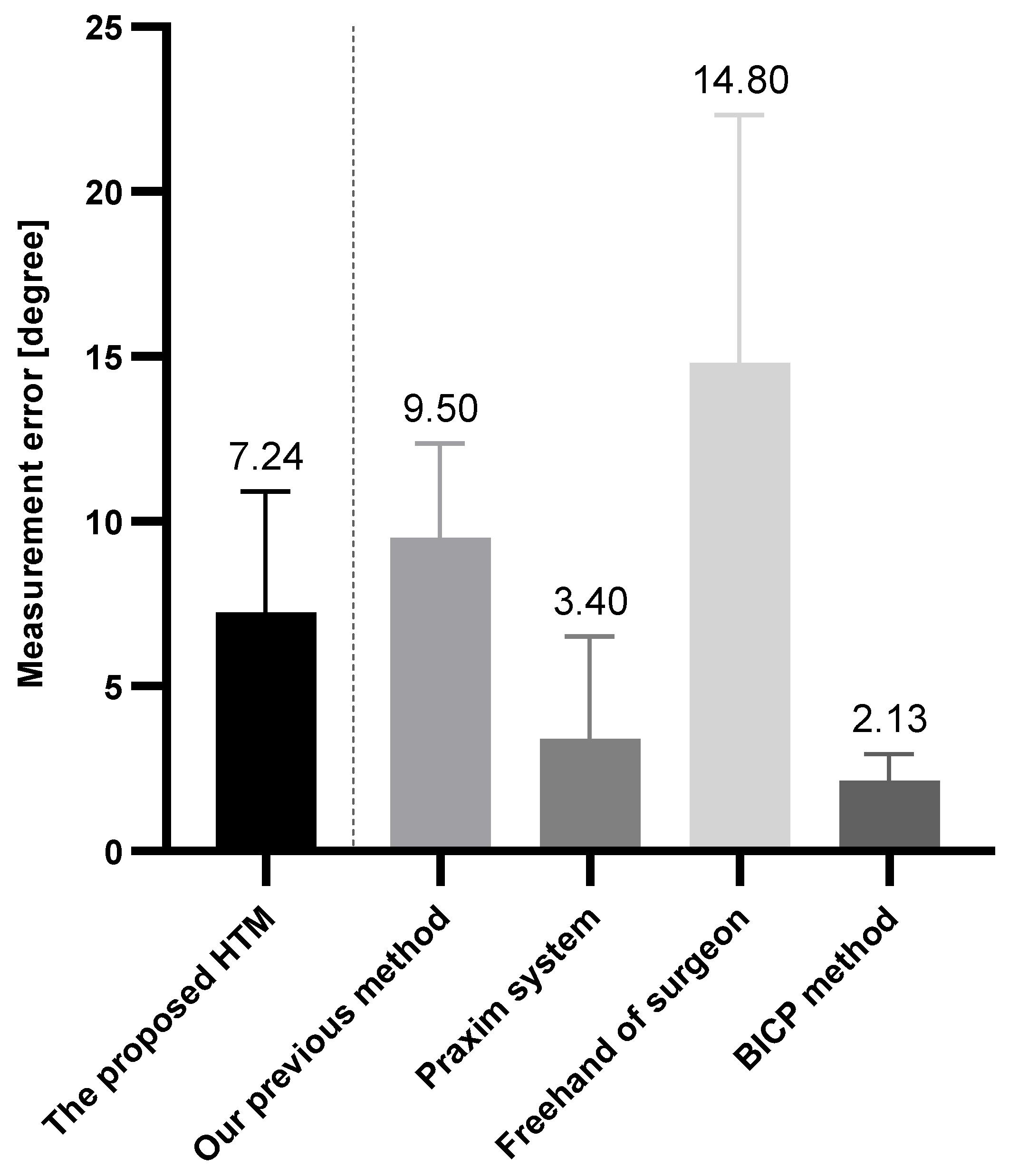

3.2. Results and Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Camera parameter | Value | Lens parameter | Value |
|---|---|---|---|
| Product model | MER-133-54U3M/C | Product model | Computar H0514-MP2 |
| Sensor | Onsemi AR0135 CMOS | Focal length | 5 mm |
| Scan mode | Global shutter | Focus control | Manual |
| Frame rate | 54 frames per second | Focus range | 10 cm–90 cm |
| Resolution | 1280 (horizontal) × 960 (vertical) pixels | Angle of view | 65.5 deg (horizontal) × 51.4 deg (vertical) |
| Dimensions | 29 mm × 29 mm × 29 mm | Dimensions | 44.5 mm × 45.5 mm |
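As a rough cross-check of the lens entries in the table, the listed angle of view and focal length can be related through an ideal pinhole model, in which an image-plane extent d satisfies d = 2 f tan(θ/2). The sketch below is illustrative only: the function name `image_extent_mm` is hypothetical, lens distortion is ignored, and the only inputs are the 5 mm focal length and the 65.5 deg × 51.4 deg angle of view from the table.

```python
import math


def image_extent_mm(focal_length_mm: float, angle_of_view_deg: float) -> float:
    """Image-plane extent (mm) spanned by a full angle of view.

    Assumes an ideal pinhole model with no lens distortion.
    """
    half_angle_rad = math.radians(angle_of_view_deg) / 2.0
    return 2.0 * focal_length_mm * math.tan(half_angle_rad)


# Values taken from the lens columns of the table.
FOCAL_LENGTH_MM = 5.0
HORIZONTAL_AOV_DEG = 65.5
VERTICAL_AOV_DEG = 51.4

width_mm = image_extent_mm(FOCAL_LENGTH_MM, HORIZONTAL_AOV_DEG)
height_mm = image_extent_mm(FOCAL_LENGTH_MM, VERTICAL_AOV_DEG)

print(f"Implied image area: {width_mm:.2f} mm x {height_mm:.2f} mm")
```

Under these assumptions, the listed angle of view corresponds to an image area of roughly 6.4 mm × 4.8 mm. Whether the camera sensor covers that whole area depends on its pixel pitch, which the table does not state, so the field of view actually captured may be narrower than the lens figure.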

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Long, Z.; Chi, Y.; Yang, D.; Jiang, Z.; Bai, L. Hemisphere Tabulation Method: An Ingenious Approach for Pose Evaluation of Instruments Using the Electromagnetic-Based Stereo Imaging Method. Micromachines 2023, 14, 446. https://doi.org/10.3390/mi14020446
