Machine Learning Approaches for Predicting Fatty Acid Classes in Popular US Snacks Using NHANES Data

Abstract

1. Introduction

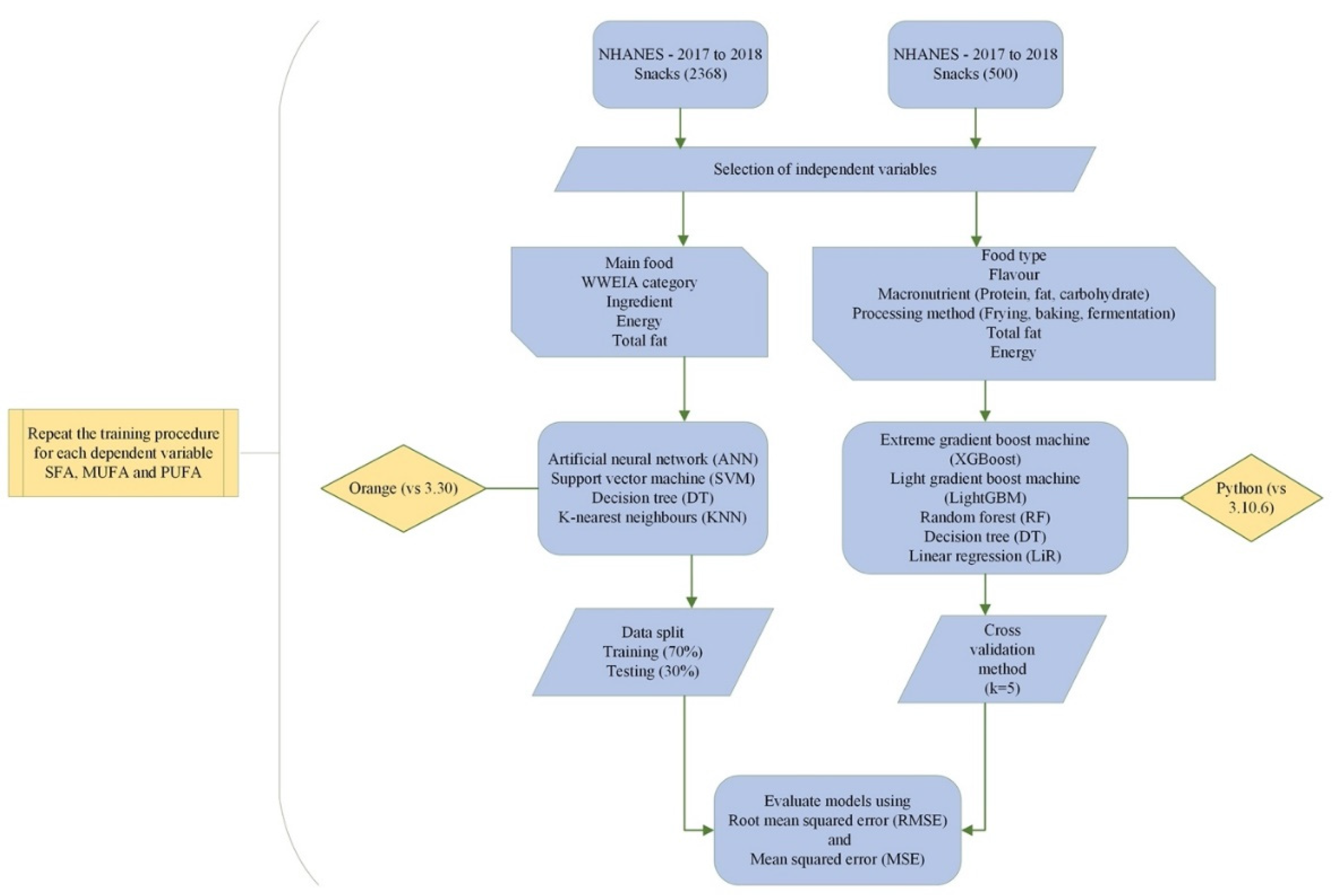

2. Data Source

3. Modeling

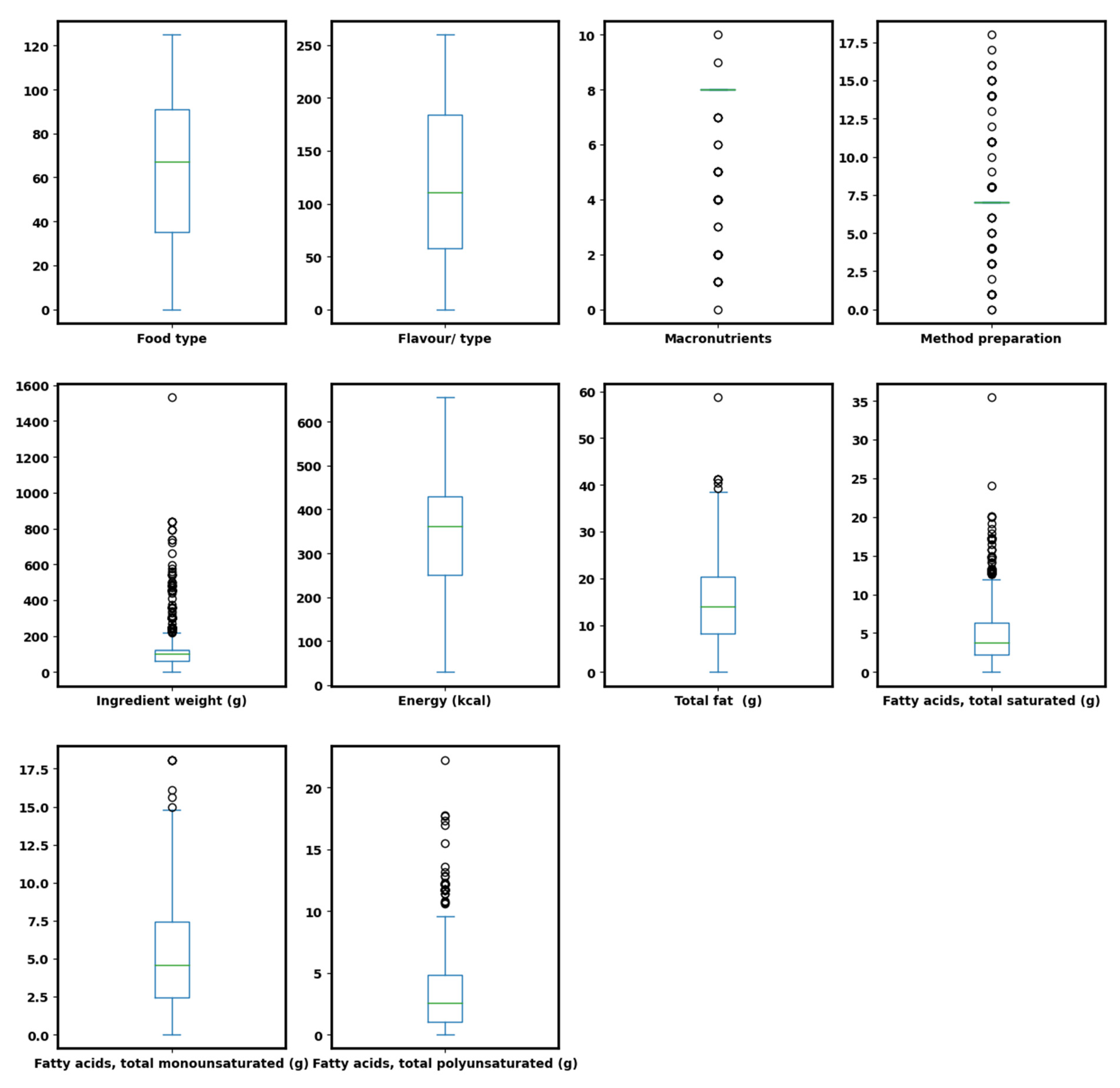

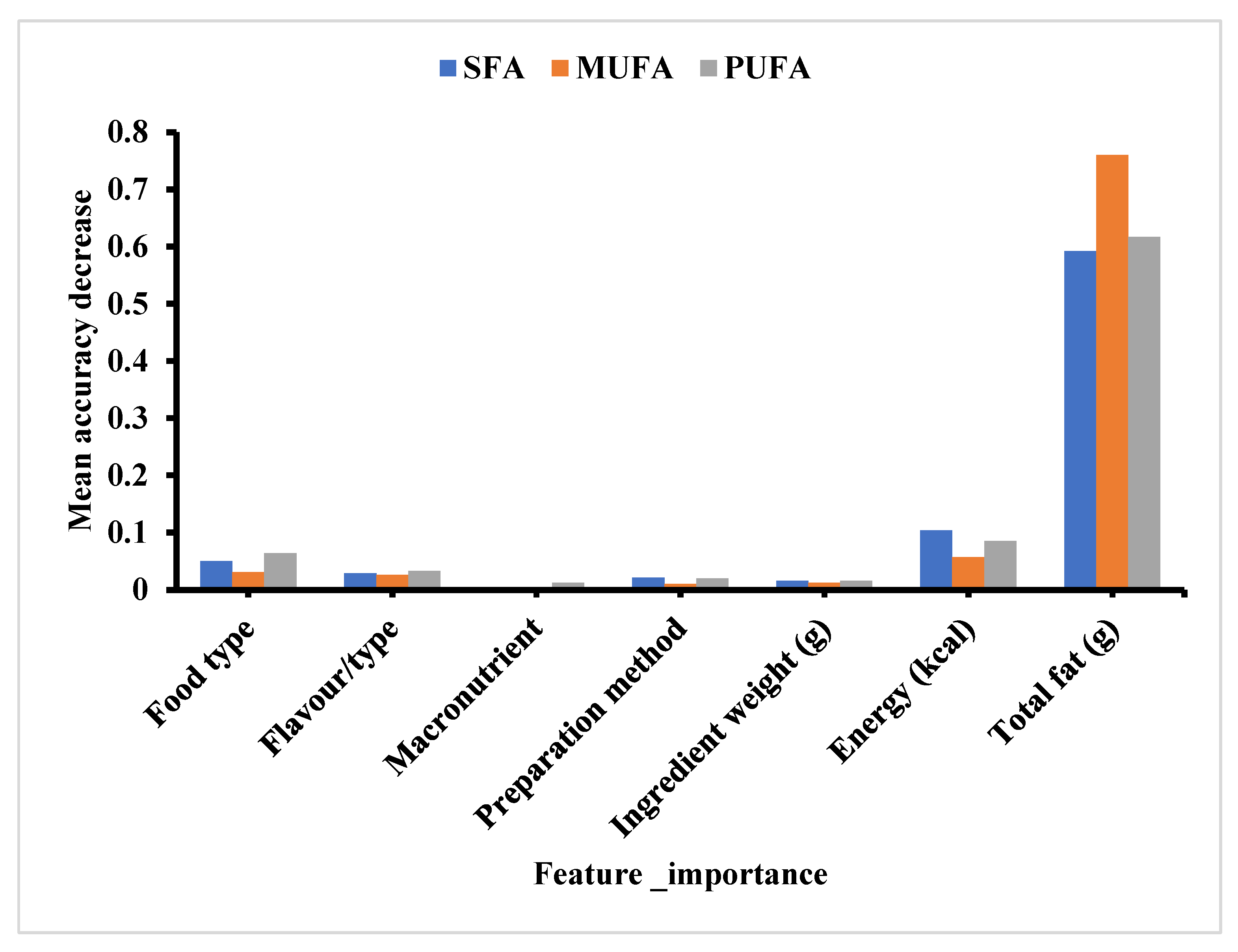

4. Results and Discussion

5. Study Limitation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | Parameter | MSE (SFA) | MSE (MUFA) | MSE (PUFA) | RMSE (SFA) | RMSE (MUFA) | RMSE (PUFA) | MAE (SFA) | MAE (MUFA) | MAE (PUFA) | R² (SFA) | R² (MUFA) | R² (PUFA) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KNN | Training | 0.616 | 0.229 | 0.505 | 0.785 | 0.479 | 0.711 | 0.190 | 0.135 | 0.175 | 0.966 | 0.982 | 0.959 |
| KNN | Testing | 0.500 | 0.239 | 0.357 | 0.707 | 0.489 | 0.612 | 0.194 | 0.139 | 0.179 | 0.974 | 0.983 | 0.966 |
| DT | Training | 1.203 | 0.512 | 0.795 | 1.097 | 0.715 | 0.892 | 0.376 | 0.257 | 0.298 | 0.934 | 0.960 | 0.935 |
| DT | Testing | 1.137 | 0.715 | 0.544 | 1.172 | 0.846 | 0.738 | 0.417 | 0.301 | 0.290 | 0.927 | 0.948 | 0.940 |
| SVM | Training | 20.760 | 10.173 | 18.764 | 4.556 | 3.190 | 4.332 | 3.889 | 2.579 | 3.880 | −0.142 | 0.212 | −0.540 |
| SVM | Testing | 21.023 | 9.986 | 18.125 | 4.585 | 3.160 | 4.257 | 3.925 | 2.528 | 3.835 | −0.114 | 0.276 | −0.660 |
| ANN | Training | 9.083 | 3.511 | 5.859 | 3.014 | 1.874 | 2.421 | 2.058 | 0.212 | 1.620 | 0.500 | 0.728 | 0.519 |
| ANN | Testing | 9.227 | 3.757 | 5.280 | 3.038 | 1.938 | 2.298 | 1.993 | 1.888 | 1.536 | 0.511 | 0.728 | 0.516 |
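The error metrics tabulated above (MSE, RMSE, MAE and R²) follow standard definitions. The block below is a minimal sketch of those definitions, not the authors' code: the function name `regression_metrics` and the sample values are hypothetical and are not taken from the study data. It also shows why R² can be negative, as in the SVM rows: this happens whenever a model's squared error exceeds that of always predicting the mean of the observed values.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE and R2 for paired observed/predicted values."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")

    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)           # residual sum of squares
    mse = ss_res / n
    mae = sum(abs(e) for e in errors) / n

    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    if ss_tot == 0:
        raise ValueError("R2 is undefined when all observed values are equal")
    r2 = 1.0 - ss_res / ss_tot  # below zero if worse than predicting the mean

    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "R2": r2}


# Illustrative values only (grams of a fatty acid class per item); not study data.
observed = [2.0, 4.0, 6.0, 8.0]
predicted = [2.5, 3.5, 6.5, 7.5]
metrics = regression_metrics(observed, predicted)

# A constant prediction far from the mean yields a negative R2.
poor_fit = regression_metrics([1.0, 2.0, 3.0], [3.0, 3.0, 3.0])
```

Because RMSE is the square root of MSE, the two columns carry the same information for a single evaluation; they can differ from an exact square-root relationship when each is averaged separately over several folds or runs.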

| Parameter | Energy (kcal) | Total Fat (g) | SFA (g) | MUFA (g) | PUFA (g) |
|---|---|---|---|---|---|
| Count | 499 | 499 | 499 | 499 | 499 |
| Mean | 343.909 | 14.829 | 5.062 | 5.100 | 3.601 |
| Std | 113.318 | 8.927 | 4.367 | 3.430 | 3.495 |
| Minimum | 30.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| 25% (1st quartile) | 251.500 | 8.210 | 2.277 | 1.431 | 1.040 |
| 50% (2nd quartile) | 362.000 | 13.920 | 3.804 | 4.570 | 2.581 |
| 75% (3rd quartile) | 429.000 | 20.370 | 6.375 | 7.449 | 4.800 |
| Maximum | 656.000 | 58.780 | 34.470 | 18.077 | 22.228 |
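A summary with the same rows as the table above (count, mean, standard deviation, minimum, quartiles, maximum) can be produced with Python's standard library. This is a sketch under stated assumptions: the source does not say how the statistics were computed, so the sample standard deviation and linearly interpolated quartiles used here are assumptions, and the helper name `describe` and the input values are hypothetical.

```python
import statistics


def describe(values):
    """Summarise one numeric column with the rows used in the table above."""
    # method="inclusive" interpolates linearly between observed data points
    # (assumed convention; the source does not state which one was used).
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "Count": len(values),
        "Mean": statistics.fmean(values),
        "Std": statistics.stdev(values),  # sample standard deviation (n - 1)
        "Minimum": min(values),
        "25%": q1,
        "50%": q2,
        "75%": q3,
        "Maximum": max(values),
    }


# Illustrative values only (grams per item); not the NHANES snack data.
sfa_grams = [0.0, 1.0, 2.0, 3.0, 4.0]
summary = describe(sfa_grams)
```

Applied to each of the five columns in turn, such a helper would yield one summary per nutrient.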

| Model | MSE (SFA) | MSE (MUFA) | MSE (PUFA) | RMSE (SFA) | RMSE (MUFA) | RMSE (PUFA) | MAE (SFA) | MAE (MUFA) | MAE (PUFA) | R² (SFA) | R² (MUFA) | R² (PUFA) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| XGR | 6.710 | 3.432 | 3.762 | 2.663 | 1.818 | 2.146 | 1.629 | 0.985 | 1.340 | 0.724 | 0.722 | 0.695 |
| DT | 10.735 | 5.120 | 7.075 | 3.303 | 2.194 | 2.680 | 1.594 | 1.124 | 1.259 | 0.418 | 0.514 | 0.390 |
| LightGBM | 7.440 | 3.401 | 4.434 | 2.668 | 1.837 | 2.155 | 1.582 | 1.153 | 1.303 | 0.722 | 0.742 | 0.635 |
| LiR | 9.086 | 3.689 | 5.820 | 3.010 | 1.919 | 2.409 | 2.031 | 1.354 | 1.684 | 0.510 | 0.662 | 0.503 |
| RF | 6.788 | 2.934 | 4.322 | 2.601 | 1.743 | 2.064 | 1.489 | 0.930 | 1.222 | 0.732 | 0.751 | 0.686 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tachie, C.Y.E.; Obiri-Ananey, D.; Tawiah, N.A.; Attoh-Okine, N.; Aryee, A.N.A. Machine Learning Approaches for Predicting Fatty Acid Classes in Popular US Snacks Using NHANES Data. Nutrients 2023, 15, 3310. https://doi.org/10.3390/nu15153310
