Hyperspectral Image Classification Based on Semi-Supervised Rotation Forest

Abstract

1. Introduction

2. Materials and Methodology

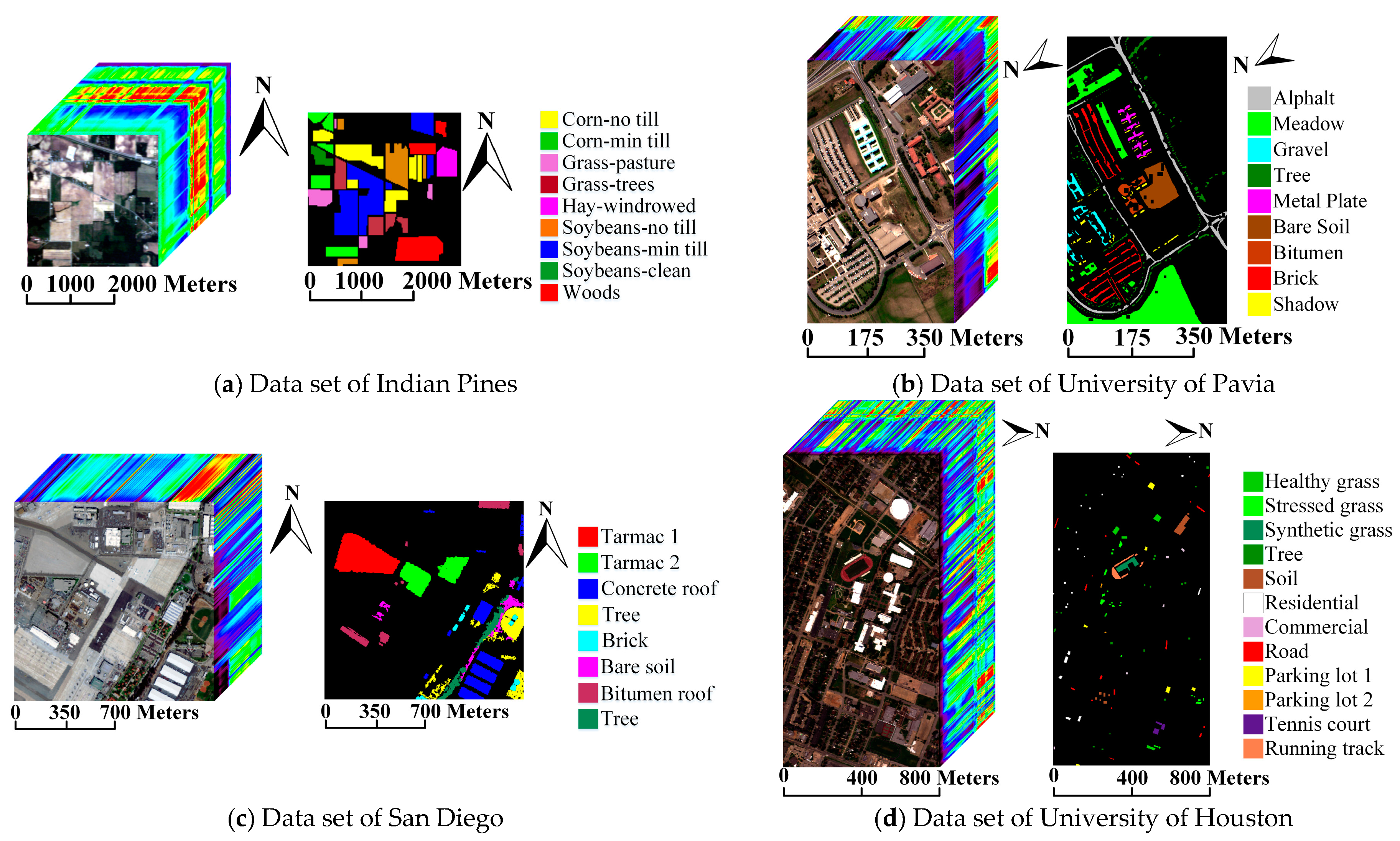

2.1. Study Data Sets

- (1) The first data set is the well-known scene acquired in 1992 by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over the Indian Pines region in Northwestern Indiana. It has 145 × 145 pixels and 200 spectral bands with a spatial resolution of 20 m. Nine classes, including different categories of crops, are labeled in the ground truth image.

- (2) The second data set was collected over the University of Pavia, Italy, by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor. After removing the noisy bands, it consists of 103 spectral bands of 610 × 340 pixels with a spatial resolution of 1.3 m. The ground truth image contains nine classes [37,38].

- (3) The third data set is a low-altitude AVIRIS hyperspectral (HS) image of a portion of the North Island of the U.S. Naval Air Station in San Diego, CA, USA. After removing the noisy bands, it consists of 126 bands of 400 × 400 pixels with a spatial resolution of 3.5 m. The ground truth image contains eight classes [39].

- (4) The last data set was provided by the 2013 Institute of Electrical and Electronics Engineers (IEEE) Geoscience and Remote Sensing Society (GRSS) Data Fusion Contest (DFC). It was acquired by the Compact Airborne Spectrographic Imager (CASI) sensor over the University of Houston campus and the neighboring urban area, and consists of 144 bands with a spatial resolution of 2.5 m. A 640 × 320 subset is used, which contains 12 classes in the corresponding ground truth image. Figure 1 shows the experimental data sets.

2.2. Weighted Semi-Supervised Local Discriminant Analysis

2.2.1. Local Fisher Discriminant Analysis (LFDA)

2.2.2. Neighborhood Preserving Embedding (NPE)

2.2.3. Weighted SLDA

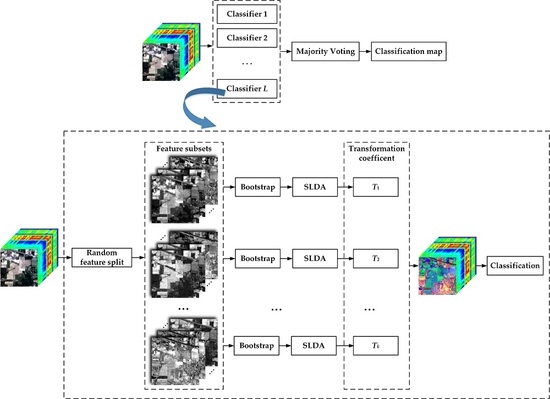

2.3. Proposed Semi-Supervised Rotation Forest

- The original feature set is divided randomly into disjoint subsets with each subset containing features;

- Use the bootstrap approach to select a subset of the training samples for each feature subset (typically 75% of the total training samples);

- Run PCA on each feature subset and store the transformation coefficients;

- Reorder the coefficients to match the order of the original features, and rotate the samples using the obtained coefficients (i.e., feature extraction);

- Train a decision tree (DT) on the rotated training samples and use it to classify the rotated testing samples;

- The process is repeated times to obtain multiple classifiers, followed by a majority voting rule to integrate the classification results.
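The generic RoF steps listed above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes NumPy and scikit-learn are available and uses scikit-learn's `DecisionTreeClassifier` as the DT. The function names `fit_rotation_forest`, `predict_rotation_forest`, and `pca_axes`, as well as the default number of subsets and trees, are hypothetical. For brevity it also omits the random class-subset selection used by the original Rotation Forest and draws the 75% bootstrap sample from all classes.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def pca_axes(X):
    """All principal axes of X as the columns of a square orthogonal matrix."""
    Xc = X - X.mean(axis=0)
    # With full_matrices=True, vt is (m x m) even if the sample has fewer rows than columns.
    _, _, vt = np.linalg.svd(Xc, full_matrices=True)
    return vt.T


def fit_rotation_forest(X, y, n_subsets=4, n_trees=10, sample_frac=0.75, seed=0):
    """Hypothetical RoF trainer: PCA rotations per feature subset + one DT per rotation."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    ensemble = []
    for _ in range(n_trees):
        # Step 1: split the features randomly into disjoint (non-empty) subsets.
        subsets = np.array_split(rng.permutation(n_features), min(n_subsets, n_features))
        R = np.zeros((n_features, n_features))
        for idx in subsets:
            # Step 2: bootstrap sample of about 75% of the training samples.
            rows = rng.choice(n_samples, size=max(2, int(sample_frac * n_samples)), replace=True)
            # Steps 3-4: PCA on this feature subset; writing the block at (idx, idx)
            # puts the coefficients back in the original feature order.
            R[np.ix_(idx, idx)] = pca_axes(X[np.ix_(rows, idx)])
        # Step 5: train a DT on the rotated training samples.
        tree = DecisionTreeClassifier(random_state=int(rng.integers(2**31 - 1)))
        ensemble.append((R, tree.fit(X @ R, y)))
    return ensemble


def predict_rotation_forest(ensemble, X):
    """Majority vote of all rotated DTs; ties go to the smallest label."""
    # Each tree classifies the testing samples rotated by its own matrix.
    votes = np.stack([tree.predict(X @ R) for R, tree in ensemble])
    # Step 6: count the votes for every label and keep the most frequent one per sample.
    labels = np.unique(votes)
    counts = np.stack([(votes == c).sum(axis=0) for c in labels])
    return labels[counts.argmax(axis=0)]
```

Calling `predict_rotation_forest(fit_rotation_forest(X_train, y_train), X_test)` returns one label per test sample. Writing each PCA block directly at the `(idx, idx)` positions of `R` is one simple way to realise the "reorder the coefficients" step: `X @ R` then applies every block to the right columns, and `R` stays orthogonal.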

Algorithm 1: Procedures of SSRoF

- Input: training samples, testing samples, unlabeled samples, ensemble classifiers, number of feature subsets, ensemble size.
- Output: class labels of the testing samples.
- For
    - Step 1: Randomly split the features into subsets;
    - For
        - Step 2: Randomly select a subset of samples (typically 75%) from the training samples and the unlabeled samples, respectively, using the bootstrap approach;
        - Step 3: Perform the weighted SLDA algorithm on the selected training and unlabeled samples to obtain the pairs of between-class and within-class scatter matrices in Equation (17);
        - For
            - Step 4: Obtain the eigenvector matrix by solving Equation (17);
        - End for
    - End for
    - For
        - Step 5: Construct the transformation matrix by merging the eigenvector matrices, and rearrange its columns to match the order of the original features;
        - Step 6: Build a DT sub-classifier using the rotated training samples;
        - Step 7: Classify the testing samples using the sub-classifier;
    - End for
- End for
- Step 8: Use a majority voting rule over the sub-classifiers to compute the confidence of each class and assign a class label to each testing sample.
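Steps 4 and 5 of Algorithm 1 can be illustrated with a short NumPy sketch, under stated assumptions. Equation (17) is not reproduced in this section, so the sketch assumes it is a generalized eigenproblem S_b v = λ S_w v, as in LFDA. It solves that problem by Cholesky whitening and then merges the per-subset eigenvector matrices into one transformation matrix whose rows follow the original feature order. Because the weighted SLDA scatter pair is not given here, the hypothetical helper `fisher_scatter` substitutes the classical Fisher (LDA) scatter matrices computed from labeled samples only. The names `generalized_eig`, `build_transformation`, and the `ridge` parameter are likewise illustrative.

```python
import numpy as np


def generalized_eig(Sb, Sw, ridge=1e-6):
    """Solve Sb v = lam (Sw + ridge I) v; eigenpairs sorted by decreasing lam."""
    m = Sw.shape[0]
    # A small ridge keeps the within-class scatter positive definite.
    L = np.linalg.cholesky(Sw + ridge * np.eye(m))
    Linv = np.linalg.inv(L)
    # With u = L^T v the problem becomes the symmetric one (L^-1 Sb L^-T) u = lam u.
    lam, U = np.linalg.eigh(Linv @ Sb @ Linv.T)
    order = np.argsort(lam)[::-1]
    return lam[order], Linv.T @ U[:, order]


def fisher_scatter(X, y):
    """Hypothetical stand-in: classical Fisher between/within-class scatter of labeled data."""
    mu = X.mean(axis=0)
    m = X.shape[1]
    Sb, Sw = np.zeros((m, m)), np.zeros((m, m))
    for c in np.unique(y):
        Xc = X[y == c]
        d = (Xc.mean(axis=0) - mu)[:, None]
        Sb += len(Xc) * (d @ d.T)
        Dc = Xc - Xc.mean(axis=0)
        Sw += Dc.T @ Dc
    return Sb, Sw


def build_transformation(X, y, subsets):
    """Merge the per-subset eigenvector matrices into one square matrix R."""
    n_features = X.shape[1]
    R = np.zeros((n_features, n_features))
    for idx in subsets:
        Sb, Sw = fisher_scatter(X[:, idx], y)
        _, V = generalized_eig(Sb, Sw)
        # Writing the block at rows/columns idx rearranges it to the original feature order.
        R[np.ix_(idx, idx)] = V
    return R
```

In the full method, the scatter pair would instead come from the weighted SLDA step, and `X @ R` would be the rotated training set passed to the DT in Step 6.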

3. Experimental Results and Discussion

3.1. Experimental Setup

3.2. Performance Evaluation

3.3. Impact of Parameters

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Ballanti, L.; Blesius, L.; Hines, E.; Kruse, B. Tree species classification using hyperspectral imagery: A comparison of two classifiers. Remote Sens. 2016, 8, 445.

2. Sami ul Haq, Q.; Tao, L.; Yang, S. Neural network based Adaboosting approach for hyperspectral data classification. In Proceedings of the 2011 International Conference on Computer Science and Network Technology (ICCSNT), Harbin, China, 24–26 December 2011.

3. Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

4. Li, J.; Bioucas-Dias, J.; Plaza, A. Semisupervised hyperspectral image segmentation using multinomial logistic regression with active learning. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4085–4098.

5. Persello, C.; Bruzzone, L. Active and semisupervised learning for the classification of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6937–6956.

6. Villa, A.; Chanussot, J.; Benediktsson, J.A.; Jutten, C. Spectral unmixing for the classification of hyperspectral images at a finer spatial resolution. IEEE J. Sel. Top. Signal Process. 2011, 5, 521–533.

7. Golipour, M.; Ghassemian, H.; Mirzapour, F. Integrating hierarchical segmentation maps with MRF prior for classification of hyperspectral images in a Bayesian framework. IEEE Trans. Geosci. Remote Sens. 2016, 54, 805–816.

8. Licciardi, G.; Pacifici, F.; Tuia, D.; Prasad, S.; West, T.; Giacco, F.; Thiel, C.; Inglada, J.; Christophe, E.; Chanussot, J.; et al. Decision fusion for the classification of hyperspectral data: Outcome of the 2008 GRSS data fusion contest. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3857–3865.

9. Wozniak, M.; Graña, M.; Corchado, E. A survey of multiple classifier systems as hybrid systems. Inf. Fusion 2014, 16, 3–17.

10. Krawczyk, B.; Minku, L.L.; Gama, J.; Stefanowski, J.; Wozniak, M. Ensemble learning for data stream analysis: A survey. Inf. Fusion 2017, 37, 132–156.

11. Santos, A.B.; Araújo, A.A.; Menotti, D. Combining multiple classification methods for hyperspectral data interpretation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1450–1459.

12. Waske, B.; Linden, S.V.D.; Benediktsson, J.A.; Rabe, A.; Hostert, P. Sensitivity of support vector machines to random feature selection in classification of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2880–2889.

13. Wang, S.; Yao, X. Relationships between diversity of classification ensembles and single-class performance measures. IEEE Trans. Knowl. Data Eng. 2013, 25, 206–219.

14. Galar, M.; Fernandez, A.; Barrenechea, E.; Bustince, H.; Herrera, F. A review on ensembles for the class imbalance problem: Bagging-, Boosting-, and hybrid-based approaches. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2012, 42, 463–484.

15. Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

16. Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

17. Schapire, R.E. The strength of weak learnability. Mach. Learn. 1990, 5, 197–227.

18. Schapire, R.E.; Singer, Y. Improved boosting algorithms using confidence-rated predictions. Mach. Learn. 1999, 37, 297–336.

19. Ho, T.K. The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 832–844.

20. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

21. Ham, J.; Chen, Y.; Crawford, M.M.; Ghosh, J. Investigation of the random forest framework for classification of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 492–501.

22. Xia, J.; Liao, W.; Chanussot, J.; Du, P.; Song, G.; Philips, W. Improving random forest with ensemble of features and semisupervised feature extraction. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1471–1475.

23. Chan, J.C.; Paelinckx, D. Evaluation of random forest and Adaboost tree-based ensemble classification and spectral band selection for ecotope mapping using airborne hyperspectral imagery. Remote Sens. Environ. 2008, 112, 2999–3011.

24. Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

25. Merentitis, A.; Debes, C.; Heremans, R. Ensemble learning in hyperspectral image classification: Toward selecting a favorable bias-variance tradeoff. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1089–1102.

26. Gurram, P.; Kwon, H. Sparse kernel-based ensemble learning with fully optimized kernel parameters for hyperspectral classification problems. IEEE Trans. Geosci. Remote Sens. 2013, 51, 787–802.

27. Samat, A.; Du, P.; Liu, S.; Li, J.; Cheng, L. E2LMs: Ensemble extreme learning machines for hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1060–1069.

28. Guo, H.; Li, Y.; Shang, J.; Gu, M.; Huang, Y.; Gong, B. Learning from class-imbalanced data: Review of methods and applications. Expert Syst. Appl. 2017, 73, 220–239.

29. Merentitis, A.; Debes, C. Many hands make light work: On ensemble learning techniques for data fusion in remote sensing. IEEE Geosci. Remote Sens. Mag. 2015, 3, 86–99.

30. Rodriguez, J.J.; Kuncheva, L.I.; Alonso, C.J. Rotation forest: A new classifier ensemble method. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1619–1630.

31. Ayerdi, B.; Romay, M.G. Hyperspectral image analysis by spectral-spatial processing and anticipative hybrid extreme rotation forest classification. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2627–2639.

32. Xia, J.; Falco, N.; Benediktsson, J.A.; Du, P.; Chanussot, J. Hyperspectral image classification with rotation random forest via KPCA. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1601–1609.

33. Chen, J.; Xia, J.; Du, P.; Chanussot, J. Combining rotation forest and multiscale segmentation for the classification of hyperspectral data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4060–4072.

34. Rahulamathavan, Y.; Phan, R.C.-W.; Chambers, J.A.; Parish, J.D. Facial expression recognition in the encrypted domain based on local Fisher discriminant analysis. IEEE Trans. Affect. Comput. 2013, 4, 83–92.

35. Belhumeur, P.N.; Hespanha, J.P.; Kriegman, D.J. Eigenfaces vs. Fisherfaces: Recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720.

36. Lu, X.; Zhang, J.; Li, T.; Zhang, G. Synergetic classification of long-wave infrared hyperspectral and visible images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3546–3557.

37. Sun, B.; Kang, X.; Li, S.; Benediktsson, J.A. Random-walker-based collaborative learning for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 212–222.

38. Kang, X.; Li, S.; Benediktsson, J.A. Feature extraction of hyperspectral images with image fusion and recursive filtering. IEEE Trans. Geosci. Remote Sens. 2014, 52, 3742–3752.

39. Kang, X.; Zhang, X.; Li, S.; Li, K.; Li, J.; Benediktsson, J.A. Hyperspectral anomaly detection with attribute and edge-preserving filters. IEEE Trans. Geosci. Remote Sens. 2017, 1–12.

40. Sugiyama, M.; Idé, T.; Nakajima, S.; Sese, J. Semi-supervised local Fisher discriminant analysis for dimensionality reduction. Mach. Learn. 2010, 78, 35–61.

41. Sugiyama, M. Dimensionality reduction of multimodal labeled data by local Fisher discriminant analysis. J. Mach. Learn. Res. 2007, 8, 1027–1061.

42. He, X.; Niyogi, P. Locality preserving projections. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2004; pp. 153–160.

43. Martinez, A.M.; Kak, A.C. PCA versus LDA. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 228–233.

44. He, X.; Cai, D.; Yan, S.; Zhang, H. Neighborhood preserving embedding. In Proceedings of the Tenth IEEE International Conference on Computer Vision, Beijing, China, 17–20 October 2005; pp. 1208–1213.

45. Liao, W.; Pi, Y. Feature extraction for hyperspectral images based on semi-supervised local discriminant analysis. In Proceedings of the 2011 Joint Urban Remote Sensing Event (JURSE), Munich, Germany, 11–13 April 2011; pp. 401–404.

46. Bao, R.; Xia, J.; Dalla Mura, M.; Du, P.; Chanussot, J.; Ren, J. Combining morphological attribute profiles via an ensemble method for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2016, 13, 359–363.

47. Xia, J.; Dalla Mura, M.; Chanussot, J.; Du, P.; He, X. Random subspace ensembles for hyperspectral image classification with extended morphological attribute profiles. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4768–4785.

48. Xia, J.; Du, P.; He, X.; Chanussot, J. Hyperspectral remote sensing image classification based on rotation forest. IEEE Geosci. Remote Sens. Lett. 2014, 11, 239–243.

| Indian Pines | | University of Pavia | | San Diego | | University of Houston | |
|---|---|---|---|---|---|---|---|
| Class | Samples | Class | Samples | Class | Samples | Class | Samples |
| corn-no till | 1434 | asphalt | 6304 | tarmac1 | 7044 | healthy grass | 449 |
| corn-min till | 834 | meadow | 18146 | tarmac2 | 4721 | stressed grass | 454 |
| grass-pasture | 234 | gravel | 1815 | concrete roof | 5771 | synthetic grass | 505 |
| grass-trees | 497 | tree | 2912 | tree | 4851 | tree | 293 |
| hay-windrowed | 747 | metal plate | 1113 | brick | 873 | soil | 688 |
| soybeans-no till | 489 | bare soil | 4572 | bare soil | 1748 | residential | 26 |
| soybeans-min till | 968 | bitumen | 981 | bitumen roof | 2454 | commercial | 463 |
| soybeans-clean | 2468 | brick | 3364 | tree | 2135 | road | 112 |
| woods | 1294 | shadow | 795 | | | parking lot 1 | 427 |
| | | | | | | parking lot 2 | 247 |
| | | | | | | tennis court | 473 |
| | | | | | | running track | 367 |

Values are given as OA (%) / Kappa.

| Data set | Training samples | RF | SSFE-RF | RoF | RoRF-KPCA | SLDA-RoF | RoF-LFDA | RoF-NPE | SSRoF |
|---|---|---|---|---|---|---|---|---|---|
| Indian | 1% | 58.35 / 0.5018 | 66.87 / 0.5995 | 71.48 / 0.6587 | 70.54 / 0.6491 | 63.88 / 0.5660 | 66.17 / 0.5943 | 69.39 / 0.6337 | 74.38 / 0.6918 |
| | 2% | 64.55 / 0.5746 | 74.89 / 0.6971 | 75.80 / 0.7117 | 77.11 / 0.7272 | 70.22 / 0.6437 | 76.72 / 0.7214 | 76.45 / 0.7179 | 80.83 / 0.7710 |
| | 5% | 70.79 / 0.6502 | 81.04 / 0.7728 | 82.97 / 0.7971 | 82.96 / 0.7971 | 77.58 / 0.7330 | 83.01 / 0.7978 | 82.66 / 0.7936 | 86.84 / 0.8429 |
| Pavia | 1% | 79.65 / 0.7143 | 84.93 / 0.7879 | 87.13 / 0.8223 | 87.02 / 0.8205 | 81.20 / 0.7373 | 87.09 / 0.8214 | 86.67 / 0.8152 | 88.98 / 0.8484 |
| | 2% | 82.38 / 0.7538 | 87.27 / 0.8220 | 89.54 / 0.8559 | 89.39 / 0.8537 | 84.34 / 0.7840 | 90.15 / 0.8645 | 89.61 / 0.8571 | 91.60 / 0.8846 |
| | 5% | 85.82 / 0.8029 | 90.26 / 0.8648 | 92.28 / 0.8943 | 92.10 / 0.8919 | 86.82 / 0.8186 | 92.52 / 0.8978 | 91.77 / 0.8871 | 93.67 / 0.9137 |
| San Diego | 1% | 86.08 / 0.8333 | 96.07 / 0.9529 | 95.28 / 0.9435 | 94.19 / 0.9305 | 93.25 / 0.9192 | 95.20 / 0.9426 | 95.55 / 0.9467 | 95.99 / 0.9520 |
| | 2% | 90.10 / 0.8814 | 96.78 / 0.9615 | 96.40 / 0.9569 | 95.88 / 0.9507 | 94.86 / 0.9385 | 96.50 / 0.9582 | 96.56 / 0.9589 | 97.02 / 0.9644 |
| | 5% | 93.10 / 0.9175 | 97.69 / 0.9724 | 97.64 / 0.9717 | 97.09 / 0.9652 | 96.40 / 0.9569 | 97.62 / 0.9716 | 97.61 / 0.9715 | 98.02 / 0.9764 |
| Houston | 5% | 91.32 / 0.9034 | 95.97 / 0.9551 | 96.06 / 0.9561 | 96.08 / 0.9564 | 93.73 / 0.9302 | 96.06 / 0.9561 | 96.33 / 0.9591 | 97.43 / 0.9714 |
| | 10% | 94.40 / 0.9376 | 96.59 / 0.9620 | 97.08 / 0.9676 | 97.60 / 0.9733 | 94.98 / 0.9441 | 96.96 / 0.9662 | 97.33 / 0.9703 | 98.09 / 0.9787 |
| | 20% | 96.31 / 0.9590 | 98.03 / 0.9780 | 98.18 / 0.9798 | 98.42 / 0.9824 | 96.54 / 0.9615 | 98.22 / 0.9802 | 97.77 / 0.9752 | 98.60 / 0.9845 |
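The results are reported as overall accuracy (OA) and the kappa coefficient. A minimal NumPy sketch of both metrics from a confusion matrix follows, using the standard definitions: OA is the trace divided by the total count, and kappa = (p_o - p_e) / (1 - p_e), where p_e is the chance agreement computed from the row and column marginals. The function names `confusion` and `oa_and_kappa` are illustrative. The source does not state which implementation the authors used.

```python
import numpy as np


def confusion(y_true, y_pred, n_classes):
    """Rows index the reference class, columns the predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def oa_and_kappa(cm):
    """Return OA in percent and Cohen's kappa for a square confusion matrix."""
    n = cm.sum()
    p_o = np.trace(cm) / n  # observed agreement (OA as a fraction)
    p_e = (cm.sum(axis=1) @ cm.sum(axis=0)) / n**2  # agreement expected by chance
    return 100.0 * p_o, (p_o - p_e) / (1.0 - p_e)


# Worked example: 5 of 6 samples correct; marginals give p_e = 13/36, so kappa = 17/23.
cm = confusion([0, 0, 0, 1, 1, 2], [0, 0, 1, 1, 1, 2], n_classes=3)
oa, kappa = oa_and_kappa(cm)
print(f"OA = {oa:.2f}%, Kappa = {kappa:.4f}")  # OA = 83.33%, Kappa = 0.7391
```

Kappa discounts the agreement expected by chance, which is why it sits below OA/100 in every cell of the tables.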

| Data set | Training samples | OA (%) | Kappa | OA (%) | Kappa | OA (%) | Kappa | OA (%) | Kappa | OA (%) | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Indian | 1% | 71.01 | 0.6516 | 74.16 | 0.6887 | 74.69 | 0.6955 | 74.65 | 0.6944 | 74.96 | 0.6978 |
| | 2% | 77.91 | 0.7359 | 79.56 | 0.7545 | 80.03 | 0.7600 | 80.54 | 0.7660 | 80.95 | 0.7710 |
| | 5% | 83.55 | 0.8039 | 85.63 | 0.8285 | 86.62 | 0.8403 | 86.87 | 0.8432 | 86.97 | 0.8443 |
| | 10% | 86.51 | 0.8392 | 88.44 | 0.8622 | 88.87 | 0.8672 | 89.24 | 0.8716 | 89.26 | 0.8718 |
| | 20% | 88.91 | 0.8682 | 90.71 | 0.8894 | 91.25 | 0.8958 | 91.67 | 0.9008 | 91.74 | 0.9016 |
| Pavia | 1% | 87.71 | 0.8308 | 88.79 | 0.8456 | 89.13 | 0.8504 | 89.38 | 0.8538 | 89.45 | 0.8548 |
| | 2% | 89.74 | 0.8592 | 91.20 | 0.8794 | 91.35 | 0.8814 | 91.65 | 0.8856 | 91.75 | 0.8869 |
| | 5% | 92.13 | 0.8924 | 93.36 | 0.9094 | 93.70 | 0.9141 | 93.78 | 0.9151 | 93.86 | 0.9163 |
| | 10% | 93.07 | 0.9053 | 94.10 | 0.9195 | 94.46 | 0.9245 | 94.59 | 0.9263 | 94.59 | 0.9262 |
| | 20% | 94.48 | 0.9250 | 95.15 | 0.9341 | 95.31 | 0.9363 | 95.45 | 0.9382 | 95.46 | 0.9383 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lu, X.; Zhang, J.; Li, T.; Zhang, Y. Hyperspectral Image Classification Based on Semi-Supervised Rotation Forest. Remote Sens. 2017, 9, 924. https://doi.org/10.3390/rs9090924