In this section, we compare the performance of the DCC and DPCA methods at different feature dimensionalities. The top-100 retrieval precision of each class is evaluated for each method, and the top-150 retrieval precision of each class is also reported for further evaluation. For convenience, we use “ADCC#” to name the DCC frameworks: it denotes DCCs with a dimensionality of # learned using the “Alexnet” framework. The same convention gives “VDCC#” for the corresponding VGG frameworks. Note that the length of the features extracted from the original Alexnet and VGG frameworks is 4096. Similarly, we use “APCA#” to name the DPCA learning methods: it denotes image feature codes learned by the original “Alexnet” framework and then compressed to a dimensionality of # via the PCA method. The same convention gives “VPCA#” for the corresponding VGG frameworks.

4.3.1. Retrieval Performance of DCCs

In this section, the image retrieval performance of the DCC method is evaluated. The top-100 retrieval precision results for each class are shown in Table 1. In Table 1, two results are reported in bold in each row. The first is the best retrieval result for Alexnet and the corresponding DCC models; the second is the best retrieval result for VGG and the corresponding DCC models. As shown in Table 1, most of the best results for Alexnet and its DCC models are obtained using ADCC64 and ADCC32, and all the best results are obtained using our DCC models. Specifically, our DCC models greatly increase precision for all classes compared with the original Alexnet model. The river class in the ADCC64 model shows the largest increase in precision, with an improvement of nearly 20%. Moreover, nearly all our DCC models achieve clear improvements in the retrieval results for each class compared with the original Alexnet model. For VGG and its corresponding DCC models, most of the best results are achieved using VDCC64, and there is also a large improvement in the retrieval precision for each class compared with the original VGG model. Although several of the best retrieval results are not obtained using VDCC64, the precision achieved using VDCC64 is comparable with the best results. Additionally, the largest improvement is observed for the wharf class, for which the precision increases from 51.02% to 75.32%, a gain of more than 24 percentage points for the VDCC64 model over the original VGG model. Unlike the ADCC32 model, which achieves several of the best retrieval results, the VDCC32 model does not generate the best retrieval result for any class, and certain classes show decreases in retrieval precision. Nevertheless, the VDCC32 model achieves prominent improvements in the retrieval precision for 60% of the classes compared with the original VGG model, such as for the wharf and building classes, which show precision improvements of 18.66% and 14.95%, respectively.

Table 2 shows the top-150 retrieval precision results for all classes. The retrieval performance based on the P@150 values shows a trend similar to that based on the P@100 values. The greatest improvements in precision are observed for the river class using the ADCC64 model and the wharf class using the VDCC64 model. Taken together, all our DCC models generate significant improvements in the retrieval precision compared with their corresponding original frameworks.
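As an illustration of how the P@100 and P@150 values discussed above can be computed, the following is a minimal sketch. It assumes that a retrieved image counts as relevant when it shares the query's class label and that the per-class value is the mean over all queries of that class; the function names and the toy labels are hypothetical and are not taken from the evaluation code behind Table 1 and Table 2.

```python
from collections import defaultdict


def precision_at_k(query_label, ranked_labels, k):
    """Fraction of the top-k retrieved images that share the query's class.

    The denominator is always k, so a result list shorter than k is
    penalized for the missing positions.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for label in ranked_labels[:k] if label == query_label)
    return hits / k


def per_class_precision_at_k(queries, k):
    """Average P@k over all queries of each class.

    `queries` is an iterable of (query_label, ranked_labels) pairs, where
    ranked_labels lists the class labels of the retrieved images, best first.
    """
    per_class = defaultdict(list)
    for query_label, ranked_labels in queries:
        per_class[query_label].append(
            precision_at_k(query_label, ranked_labels, k))
    return {label: sum(v) / len(v) for label, v in per_class.items()}


# Toy data (made up for illustration only).
toy_queries = [
    ("river", ["river", "river", "water", "river"]),
    ("river", ["water", "river", "river", "river"]),
    ("wharf", ["wharf", "ship", "ship", "wharf"]),
]
toy_result = per_class_precision_at_k(toy_queries, k=4)
```

With k set to 100 or 150 and one ranked list per query image, the same routine would yield one value per class, which is the quantity the tables report.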

For Alexnet and its DCC models, several of the best results are obtained using ADCC32, and the corresponding results obtained using ADCC64 are similar to those of ADCC32. For the VDCC32 model, a sharp decline in precision is observed compared with the VDCC64 model. These results indicate that image features with a dimensionality of 64 may be optimal for image representation. To comprehensively assess the performance of the different models, we compare their model accuracies, where the accuracy of a CNN model is calculated as the mean of the retrieval precisions it achieves over all classes. The model accuracies based on the P@100 and P@150 values are listed in Table 3 and Table 4, respectively. The best results are reported in bold.
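The model accuracy defined above is an unweighted mean over classes, and the improvements quoted in this section are read here as absolute differences between two percentages (for example, the wharf precision rising from 51.02% to 75.32% is a gain of 24.30 points). A small sketch under those assumptions follows; the function names and the per-class values in the first example are placeholders, not entries from Table 3 or Table 4.

```python
def model_accuracy(class_precisions):
    """Unweighted mean of per-class retrieval precisions (in percent)."""
    values = list(class_precisions.values())
    if not values:
        raise ValueError("at least one class precision is required")
    return sum(values) / len(values)


def improvement_points(baseline, model):
    """Absolute difference between two percentages, in percentage points."""
    return model - baseline


# Placeholder per-class precisions (illustrative values only).
baseline_classes = {"river": 60.0, "wharf": 50.0, "forest": 94.0}
compact_classes = {"river": 80.0, "wharf": 74.0, "forest": 95.0}

baseline_acc = model_accuracy(baseline_classes)   # mean of the three values
compact_acc = model_accuracy(compact_classes)
gain = improvement_points(baseline_acc, compact_acc)

# The wharf figures quoted in the text: 51.02% rising to 75.32%.
wharf_gain = improvement_points(51.02, 75.32)
```

Because every class contributes equally to the mean, a class with few samples influences the model accuracy as much as a class with many.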

Table 3 and Table 4 show that the model accuracy clearly increases as the feature dimensionality is reduced from 4096 to 64. ADCC64 and VDCC64 achieve the best results at both the P@100 and P@150 levels, with remarkable accuracy improvements. Specifically, at the P@100 level, ADCC64 and VDCC64 show accuracy improvements of 8.81% and 8.15%, respectively, compared with the corresponding original CNN frameworks. At the P@150 level, ADCC64 and VDCC64 show accuracy improvements of 9.37% and 9.06%, respectively, compared with the baseline CNN frameworks. These findings are in accordance with the class precision results shown in Table 1 and Table 2. In Table 3 and Table 4, each row shows the accuracy generally improving as the dimensionality of the DCCs decreases from 4096 to 1024, 256 and 64. Thus, the efficacy of our proposed DCC method is demonstrated on different types of CNN frameworks. Regarding the 32-dimensional feature codes, both ADCC32 and VDCC32 show decreases in model accuracy compared with the corresponding 64-dimensional feature codes, especially VDCC32. This finding indicates that 64-dimensional feature codes are more effective for image representation than feature codes with other dimensionalities, which confirms our earlier hypothesis. Moreover, even though the 32-dimensional features are less accurate than the 64-dimensional features, the ADCC32 and VDCC32 models still achieve significantly higher accuracies than the Alexnet and VGG models, respectively.

Specifically, a comparison of feature codes of the same dimensionality from different frameworks shows that VGG and its corresponding DCC models achieve higher accuracies than Alexnet and its corresponding DCC models. This finding makes sense because VGG and its corresponding DCC models are deep CNN frameworks, while Alexnet and its corresponding DCC models are shallow CNN frameworks. Nevertheless, a comparison of the DCC models of Alexnet with the original VGG model shows that the accuracies of the DCC models are considerably higher than that of the VGG model, especially for the ADCC64 and ADCC32 models, which present accuracy improvements of 6.89% and 6.30%, respectively. These findings demonstrate that the low-dimensional feature codes learned by our DCC models have more powerful image representation abilities than the high-dimensional feature codes. High-dimensional features learned by traditional CNN frameworks therefore do not always have the best image representation abilities, whereas the lower-dimensional features learned by our DCC method can greatly improve upon the performance of the original frameworks. More importantly, these results indicate that our proposed DCC method, applied to a shallow CNN framework, can outperform an unmodified deep CNN framework. The practical advantages are clear: our proposed DCC method is easy to use, reduces the training time requirements and makes applications more convenient. Because the learned feature representation is both shorter and more powerful, this finding may also benefit many other tasks, such as image classification and object detection, in terms of both performance and efficiency.

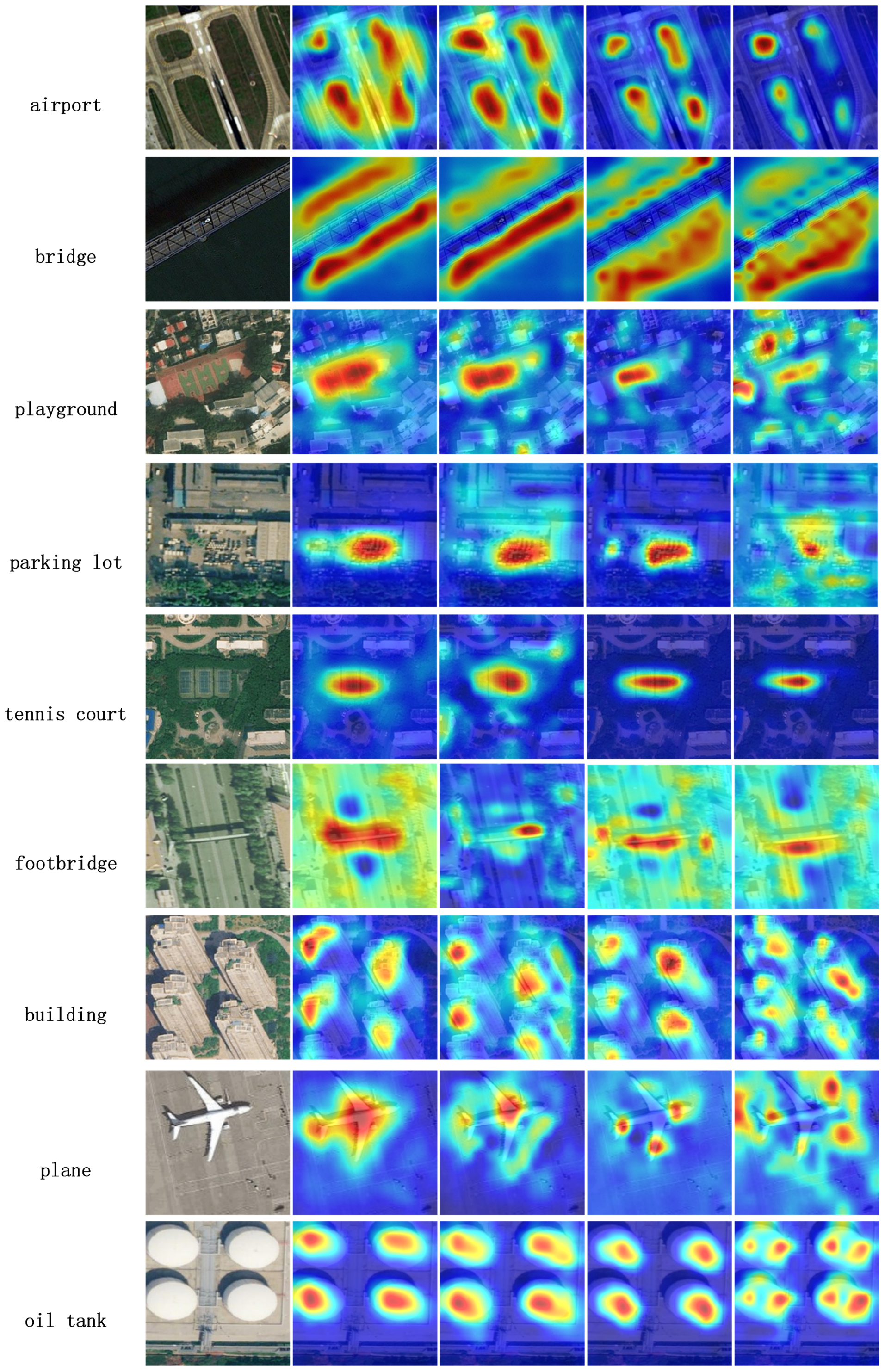

For further evaluation, we employ a confusion matrix to show the classification results for 64- and 4096-dimensional features extracted from different models, as shown in Table 5, Table 6, Table 7 and Table 8. For simplicity, let C1, C2, …, C25 represent agricultural, airport, basketball court, bridge, building, container, fishpond, footbridge, forest, greenhouse, intersection, oiltank, overpass, parking lot, plane, playground, residential, river, ship, solar power area, square, tennis court, water, wharf and workshop, respectively. Each column in Table 5, Table 6, Table 7 and Table 8 corresponds to the prediction result, while each row represents the actual class. Note that the last column and the last row correspond to the classification precision and the prediction precision, respectively. In addition, the overall accuracy is reported in bold in the bottom-right cell. As seen from Table 5 and Table 6, even though there are slight decreases in classification precision for several classes, most of the classes show improvements in classification precision when the ADCC64 model is used. In addition, the improvements are larger than the decreases; for example, river (C18), square (C21) and tennis court (C22) achieve 9.60%, 6.80% and 7.60% higher classification precision, respectively, when our DCC method is used. The prediction precision of each class shows a similar trend. The intersection class achieves a 10.70% improvement in prediction precision when the ADCC64 model is used. For the VGG and VDCC64 models (Table 7 and Table 8), most classes show precision improvements in both classification and prediction when our DCC method is used. However, the playground (C16) class suffers a 5.60% decrease in classification precision when the VDCC64 model is used. This result occurs because some playground samples are more easily classified as basketball court (C3) samples, as shown in Table 8. In addition, some tennis court (C22) samples are also wrongly classified as basketball court samples. This leads to a 3.78% decrease in the prediction precision of the basketball court class. The main reason for this phenomenon is that the backgrounds of the tennis court, playground and basketball court samples are similar to some extent. The VDCC64 model is more capable of recognizing the basketball court class, as the classification precision for the basketball court class improves by 10.80% with this model. This result implies that the VDCC64 model can learn to discriminate the basketball court class from other classes, but it also reveals that VDCC64 has some deficiencies in discriminating similar classes. Nevertheless, our DCC method achieves a higher overall classification accuracy. For the ADCC64 model, the overall classification accuracy is 86.56%, which is nearly 2.00% higher than that of the original Alexnet model (84.58%). In addition, the VDCC64 model achieves an approximately 1.50% improvement in overall classification accuracy (88.37%) compared with the original VGG model (86.88%). In general, our DCC models achieve better classification results for most classes.
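The layout described above (rows as actual classes, columns as predictions, a last column of classification precision, a last row of prediction precision and an overall accuracy in the corner) can be reproduced with a short sketch. The helper names and the three-class toy data below are assumptions made for illustration; they are not the values in Table 5, Table 6, Table 7 or Table 8.

```python
def confusion_matrix(actual, predicted, classes):
    """Counts with rows = actual class and columns = predicted class."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1
    return matrix


def summarize(matrix):
    """Return (classification precision, prediction precision, overall accuracy).

    Classification precision is computed per row (correct / samples of that
    actual class); prediction precision is computed per column
    (correct / samples predicted as that class).
    """
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    classification = [matrix[i][i] / row_totals[i] if row_totals[i] else 0.0
                      for i in range(n)]
    prediction = [matrix[i][i] / col_totals[i] if col_totals[i] else 0.0
                  for i in range(n)]
    total = sum(row_totals)
    correct = sum(matrix[i][i] for i in range(n))
    overall = correct / total if total else 0.0
    return classification, prediction, overall


# Toy data: one playground and one tennis court sample are mistaken for
# basketball court, mirroring the kind of confusion discussed in the text.
classes = ["basketball court", "playground", "tennis court"]
actual = ["basketball court"] * 4 + ["playground"] * 4 + ["tennis court"] * 4
predicted = (["basketball court"] * 4
             + ["playground"] * 3 + ["basketball court"]
             + ["tennis court", "tennis court", "basketball court", "tennis court"])

matrix = confusion_matrix(actual, predicted, classes)
classification, prediction, overall = summarize(matrix)
```

In this toy case every basketball court sample is classified correctly, so its classification precision is 1.0, yet its prediction precision falls to 4/6 because two samples from other classes are assigned to it. This is the same mechanism by which a class can gain classification precision while losing prediction precision.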

4.3.2. Retrieval Performance of the DPCA

In this section, we show the retrieval performance achieved using the DPCA scheme described in Section 3. Table 9 and Table 10 show the top-100 and top-150 retrieval precision results for each class, respectively. The results show that when we compress the original deep feature codes to dimensionalities of 1024, 256 and 64, only a few classes show improved retrieval precision. The 32-dimensional features show better retrieval performance for certain classes. The wharf class shows the greatest increase in precision when the original deep features are compressed via the PCA method. Specifically, at the P@100 level, an 8.45% precision improvement is achieved by compressing the deep features from the Alexnet model and a 17.57% precision improvement is achieved by compressing the deep features from the VGG model, whereas at the P@150 level, 8.75% and 18.33% precision improvements are achieved by compressing the deep features from the Alexnet and VGG models, respectively. However, Table 9 and Table 10 show that the improvements in retrieval performance are limited compared with the results based on the original deep features. Many of the best retrieval results are obtained using the original deep features. In other words, the retrieval performance decreases for certain classes when the feature codes are extracted via the PCA method. This finding indicates that certain important feature information can be lost while compressing the deep features to lower dimensionalities. A comparison with Table 1 and Table 2 shows that our DCC method can learn shorter feature codes and achieve much better retrieval performance.

Table 11 and Table 12 show the comprehensive retrieval accuracies of the features of different dimensionalities for the entire dataset. As shown in Table 11 and Table 12, sharp decreases in the comprehensive retrieval performance are observed when the original deep features are compressed to lower dimensionalities. Note that the loss in precision shrinks as the dimensionality is reduced further. When the deep features are compressed to a dimensionality of 32, retrieval precision improvements occur; this finding corresponds to the information presented in Table 9 and Table 10. Based on this observation, we also calculate the retrieval accuracies achieved when the deep features are compressed to a dimensionality of 16. Specifically, at the P@100 level, 16-dimensional features compressed from the deep features of the Alexnet framework achieve a retrieval precision of 69.05%, which is 0.30% lower than the precision of the original deep features. With regard to the deep features of the VGG framework, the compressed 16-dimensional features achieve a retrieval precision of 73.01%, which is 1.73% higher than the precision of the original deep features. The P@150 level generally shows the same results. Nevertheless, all these results show reduced performance compared with that of the compressed 32-dimensional features, as shown in Table 11 and Table 12. Whereas our DCC method achieves significant improvements in retrieval performance at all the lower dimensionalities, the DPCA method only achieves limited improvements for the 32- and 16-dimensional features. This phenomenon mainly occurs because the PCA method is a linear compression scheme, whereas the features of remote sensing images are expected to be non-linearly related. This finding indicates that our proposed DCC method is an efficient method for learning more powerful image feature representations with lower dimensionalities.
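To make the "linear compression" point concrete, the sketch below implements PCA as a projection onto the leading eigenvectors of the feature covariance matrix. It is a dependency-free illustration using power iteration with deflation on a tiny toy matrix; the function names are hypothetical, it is not the implementation used for the DPCA experiments, and for real 4096-dimensional features a library eigenvalue or SVD routine would be the practical choice.

```python
import math
import random


def center(X):
    """Subtract the per-dimension mean; return (centered rows, mean)."""
    n, d = len(X), len(X[0])
    mean = [sum(row[j] for row in X) / n for j in range(d)]
    return [[row[j] - mean[j] for j in range(d)] for row in X], mean


def covariance(Xc):
    """Sample covariance matrix (d x d) of already-centered rows."""
    n, d = len(Xc), len(Xc[0])
    return [[sum(r[i] * r[j] for r in Xc) / (n - 1) for j in range(d)]
            for i in range(d)]


def matvec(C, v):
    """Matrix-vector product for a list-of-lists matrix."""
    return [sum(c * x for c, x in zip(row, v)) for row in C]


def top_eigenvector(C, iters=500, seed=0):
    """Power iteration: dominant eigenvector of a symmetric PSD matrix."""
    rng = random.Random(seed)          # fixed seed keeps the sketch repeatable
    v = [rng.gauss(0.0, 1.0) for _ in C]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iters):
        w = matvec(C, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm < 1e-12:               # no variance left to explain
            break
        v = [x / norm for x in w]
    return v


def fit_pca(X, k):
    """Return (mean, components) holding the k leading principal directions."""
    Xc, mean = center(X)
    C = covariance(Xc)
    d = len(C)
    components = []
    for _ in range(k):
        v = top_eigenvector(C)
        eigenvalue = sum(a * b for a, b in zip(v, matvec(C, v)))
        components.append(v)
        # Deflation: remove the direction just found before the next pass.
        C = [[C[i][j] - eigenvalue * v[i] * v[j] for j in range(d)]
             for i in range(d)]
    return mean, components


def compress(x, mean, components):
    """Linear projection of one feature vector onto the components."""
    centered = [a - m for a, m in zip(x, mean)]
    return [sum(a * b for a, b in zip(centered, c)) for c in components]


def reconstruct(code, mean, components):
    """Map a compressed code back to the original feature space."""
    return [mean[j] + sum(z * c[j] for z, c in zip(code, components))
            for j in range(len(mean))]


# Toy features lying exactly on a line, so one component is lossless.
X = [[1.0, 2.0, 0.0], [2.0, 4.0, 0.0], [3.0, 6.0, 0.0], [4.0, 8.0, 0.0]]
mean, components = fit_pca(X, k=1)
code = compress(X[0], mean, components)
approx = reconstruct(code, mean, components)
```

The compressed code is a fixed linear map of the centered input, so any structure that cannot be captured by a hyperplane through the data is discarded. This is the limitation the text attributes to the DPCA scheme.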

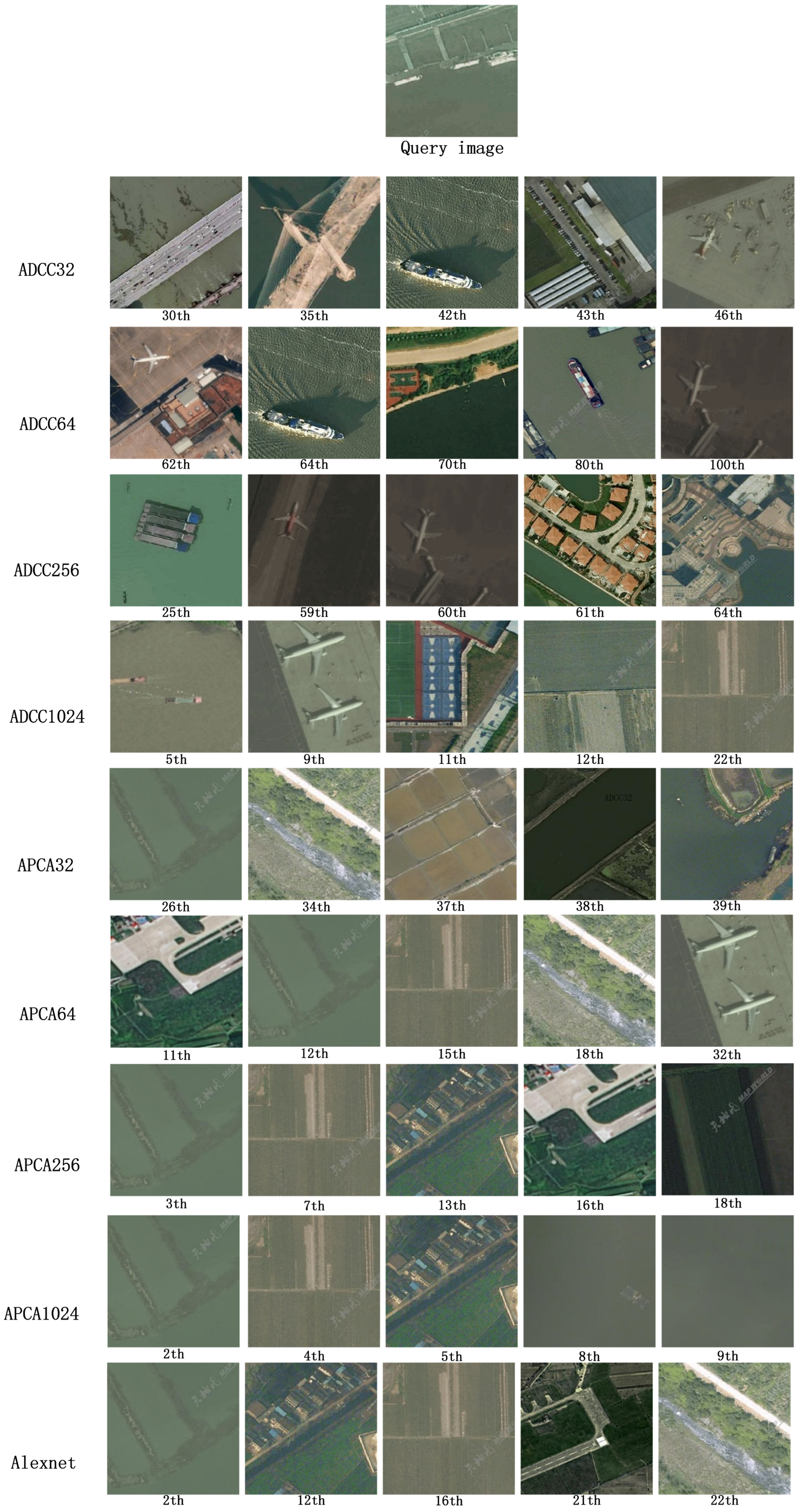

Figure 3 shows a query image of a wharf and the corresponding top-5 irrelevant retrieval images obtained using the Alexnet-based DCC and DPCA methods. The results show that, at the same position, the irrelevant images obtained using the ADCC32, ADCC64 and ADCC256 models appear at much later ranks than those obtained using the other models, which indicates that our proposed DCC method has a better retrieval performance. Specifically, ADCC64 shows the best results, which is consistent with the results shown in Table 1, Table 2, Table 3 and Table 4 and with the prior analyses.

4.3.3. Comparison

In this section, we compare the performance of the DCC and DPCA methods. The mAP results for the evaluated methods are listed in Table 13, which shows that all DCC frameworks substantially outperform the baseline CNN frameworks. Among them, the 64-dimensional features extracted by our proposed DCC method based on the Alexnet or VGG frameworks achieve the best results. Specifically, absolute mAP increases of 8.51% and 8.64% are observed for the 64-dimensional features using the Alexnet- and VGG-based DCC frameworks, respectively. For the DPCA method, only the 32-dimensional features compressed from the deep features of the VGG framework (VPCA32) achieve a better mAP than the baseline framework; no other DPCA configuration improves the retrieval performance. In contrast, the 32-dimensional features extracted by our proposed DCC method substantially outperform those of the DPCA method. Furthermore, the mAP values of all DCC frameworks are greater than those of the DPCA method, as shown in Table 13.
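For reference, a common way to compute mAP for ranked retrieval is sketched below. It assumes that each query's ranked list covers the whole database, so the number of relevant items in the list equals the number of relevant items overall, and that relevance means sharing the query's class. This section does not spell out the exact mAP definition behind Table 13, so the normalization here is an assumption and the function names are hypothetical.

```python
def average_precision(relevance):
    """Average precision of one ranked list.

    `relevance` holds one truthy/falsy flag per retrieved item, best first.
    Precision is sampled at every rank where a relevant item appears and
    averaged over the relevant items found in the list.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(relevance_lists):
    """Mean of the per-query average precisions."""
    if not relevance_lists:
        raise ValueError("at least one query is required")
    return sum(average_precision(r) for r in relevance_lists) / len(relevance_lists)


# Toy rankings (made up): relevant items at ranks 1 and 3, then at rank 2 only.
ap_example = average_precision([True, False, True, False])   # (1/1 + 2/3) / 2
map_example = mean_average_precision([[True, True], [False, True]])
```

Unlike P@100 or P@150, this measure rewards placing relevant images early anywhere in the ranking, which is why it is a useful complement to the fixed-cutoff results.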

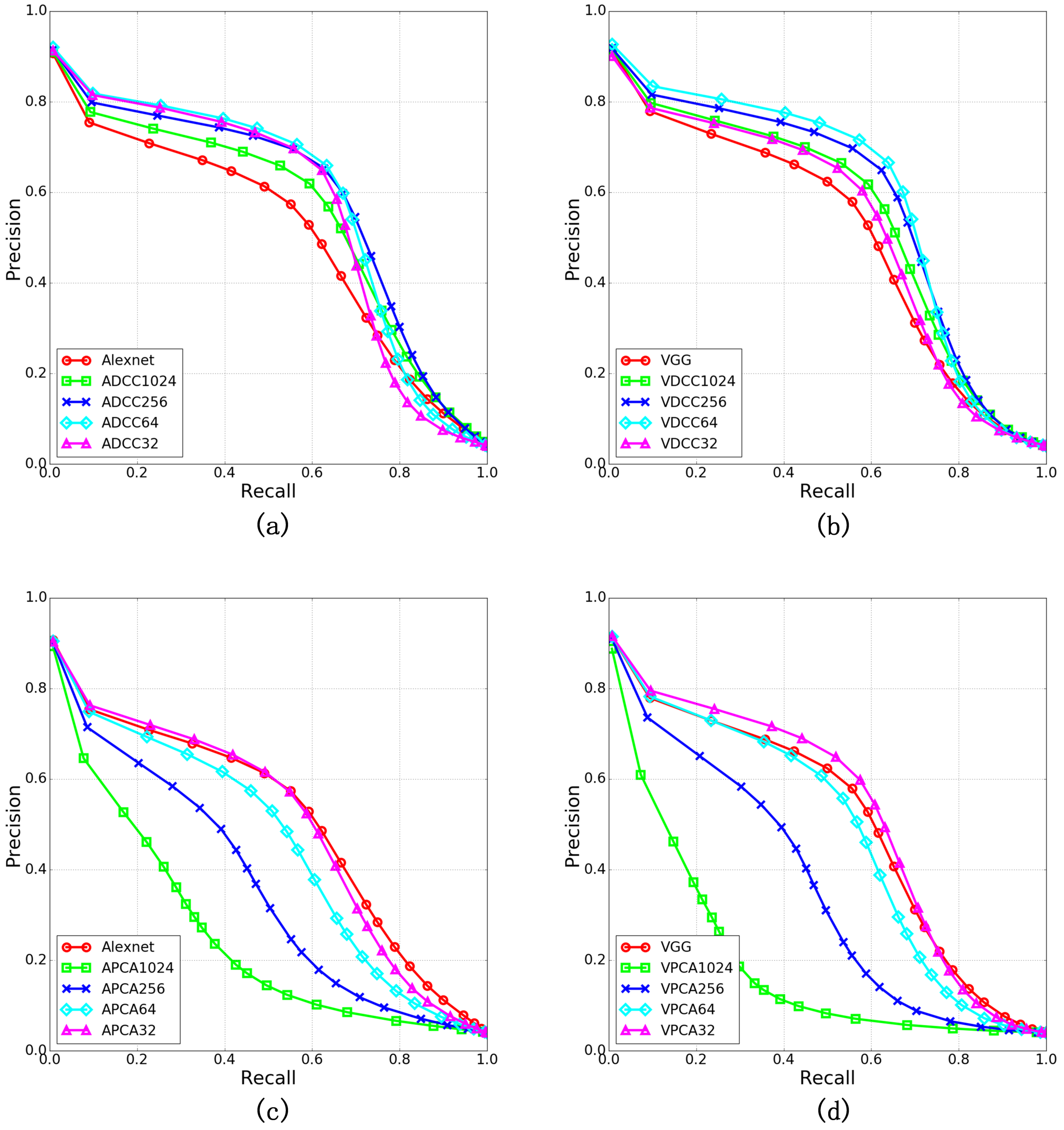

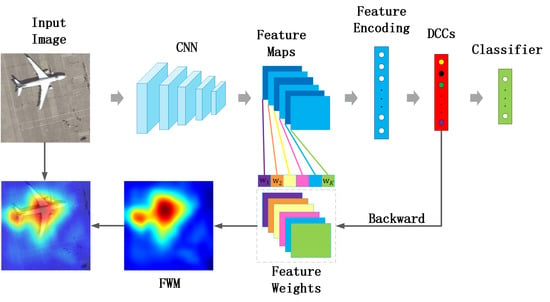

The recall-precision curves of the different DCC and DPCA methods are plotted in Figure 4. As shown, the low-dimensional features obtained using our proposed DCC method outperform those of the baseline frameworks (Figure 4a,b), which is also consistent with Table 3 and Table 13. Specifically, for the Alexnet-based frameworks, the 64-dimensional features have the best performance, with high recall and precision retrieval results (Figure 4a). Note that the 32-dimensional DCC features based on Alexnet achieve much better results at lower recall levels than the corresponding baseline features, which is very desirable for precision-oriented image retrieval. For high recall levels, the 256-dimensional features have the best performance; this dimensionality is therefore suitable for recall-oriented image retrieval. For the VGG-based frameworks, the 64-dimensional features also outperform the features of all the other dimensionalities and show comparable results at high recall levels, even when compared with the 256-dimensional features (Figure 4b). For the DPCA method, only the 32-dimensional features show results comparable with those of the original deep features (Figure 4c,d). In general, the 64-dimensional features based on our proposed DCC frameworks show the best results, and they dramatically improve the retrieval performance.
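The recall-precision curves discussed above can be traced from a single ranked list as in the following sketch. It assumes binary relevance and, unless told otherwise, takes the number of relevant items in the list as the total number of relevant items; averaging such curves over queries, as a figure of this kind would require, is left out. The function name and the toy ranking are hypothetical.

```python
def recall_precision_points(relevance, total_relevant=None):
    """Return one (recall, precision) pair per rank position.

    `relevance` holds one truthy/falsy flag per retrieved item, best first.
    `total_relevant` defaults to the number of relevant items in the list.
    """
    if total_relevant is None:
        total_relevant = sum(1 for r in relevance if r)
    if total_relevant <= 0:
        raise ValueError("the query needs at least one relevant item")
    points = []
    hits = 0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
        points.append((hits / total_relevant, hits / rank))
    return points


# Toy ranking (made up): relevant items at ranks 1, 3 and 4.
curve = recall_precision_points([True, False, True, True])
```

The left end of such a curve (low recall) reflects how clean the first few results are, which matters for precision-oriented retrieval, while the right end (high recall) reflects how deep one must go to find every relevant image, which matters for recall-oriented retrieval.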

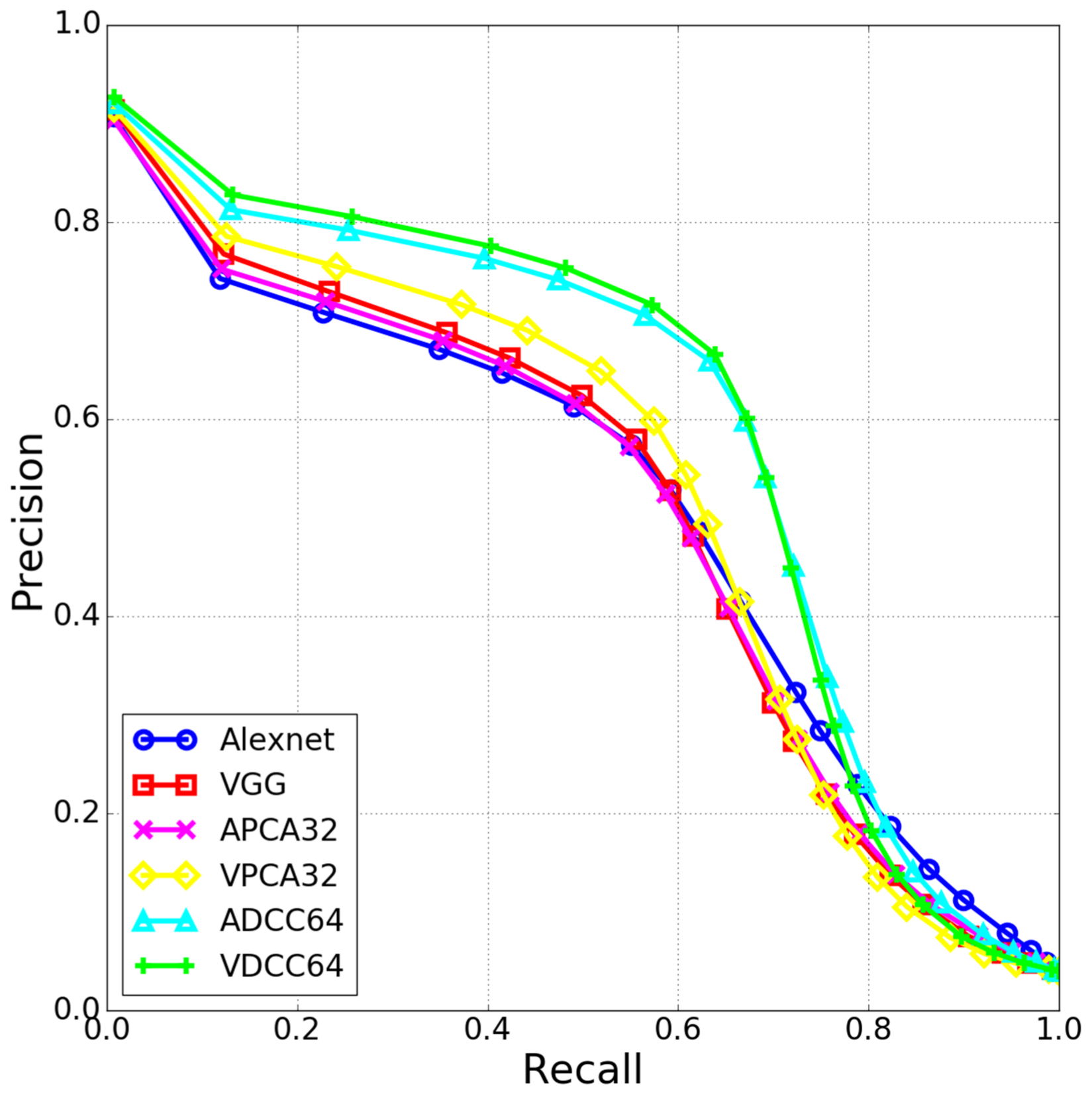

A further comparison based on the above analyses is performed by plotting the best results of each framework in Figure 5. The results reveal that the 64-dimensional features extracted by our DCC frameworks significantly outperform those of the baseline frameworks and the DPCA method, which demonstrates the effectiveness and practicability of our proposed DCC method for remote sensing image retrieval. Specifically, the 64-dimensional DCC features based on the Alexnet and VGG frameworks have generally the same performance. Note that the Alexnet-based DCC models are shallow frameworks, whereas the VGG-based models are much deeper. This comparison shows that our proposed DCC method can use a shallow CNN framework to realize a performance comparable to that achieved using a much deeper CNN framework. Furthermore, the 64-dimensional DCC features based on the Alexnet framework achieve much better retrieval results than the original VGG framework, which is also consistent with the results shown in Table 3 and Table 13. This finding reveals that our proposed DCC method can greatly improve upon the performance of a shallow CNN framework and can obtain a greater precision than that achieved using a deeper CNN framework, which shows that storage and efficiency can be improved simultaneously.