Generation of Radiometric, Phenological Normalized Image Based on Random Forest Regression for Change Detection

Abstract

1. Introduction

2. Background

2.1. Linear Radiometric Normalization

2.1.1. Mean-Standard Deviation (MS) Regression

2.1.2. Simple Regression (SR)

2.1.3. No-Change (NC) Regression

2.2. Random Forest Regression

2.3. Other Nonlinear Regression

2.3.1. Adaptive Boosting Regression

2.3.2. Stochastic Gradient Boosting Regression

3. Materials and Methods

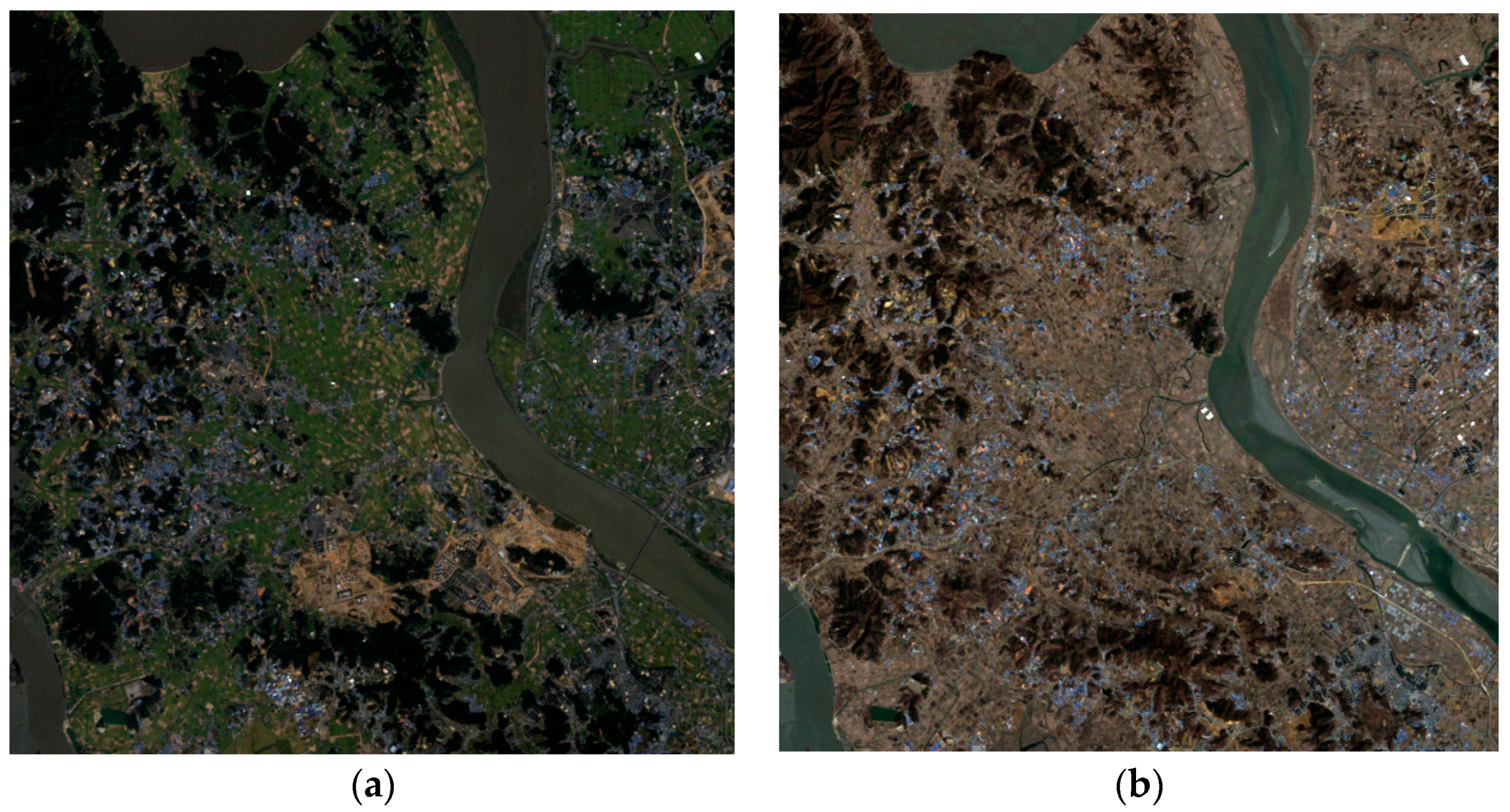

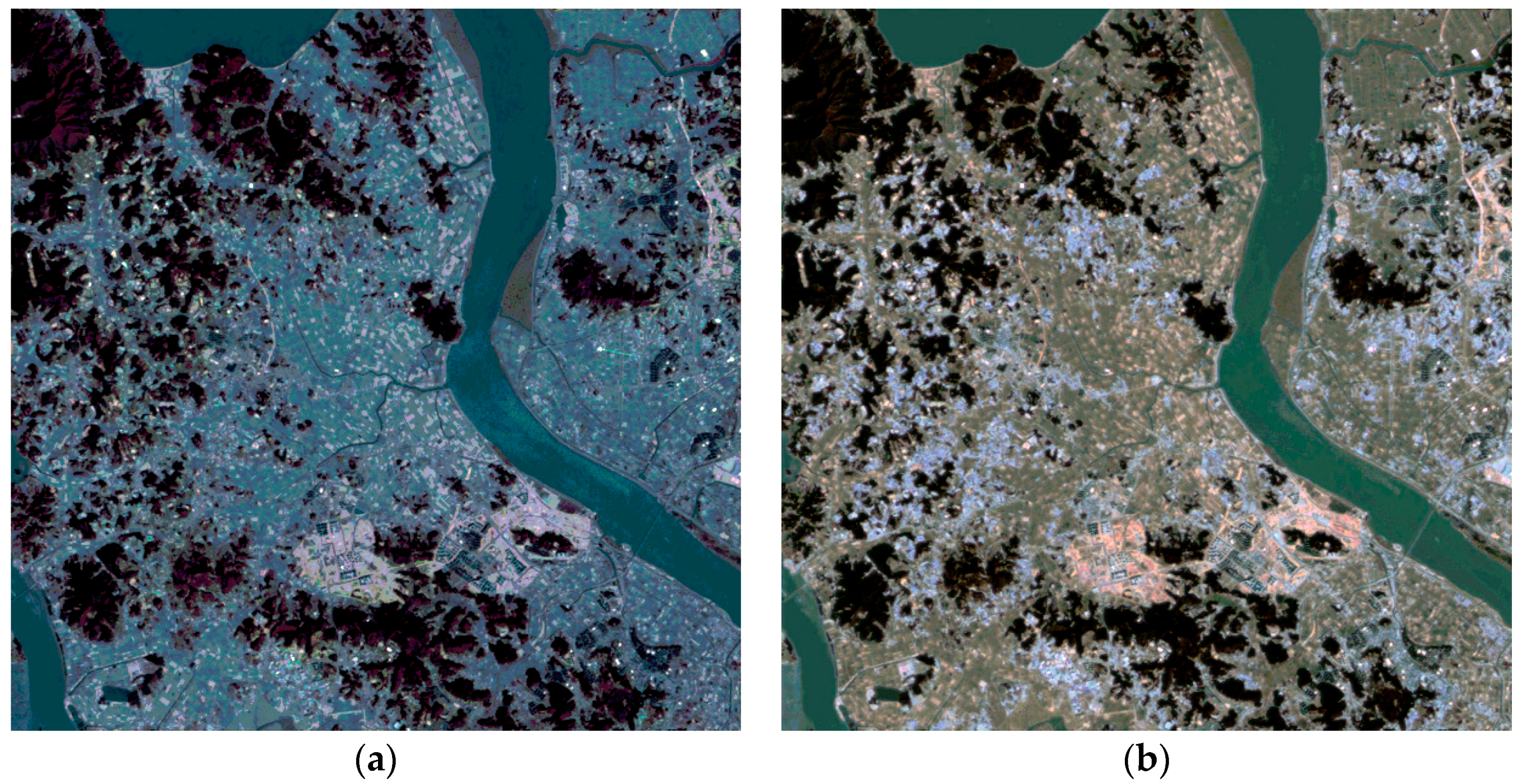

3.1. Study Sites and Data

3.2. Methods

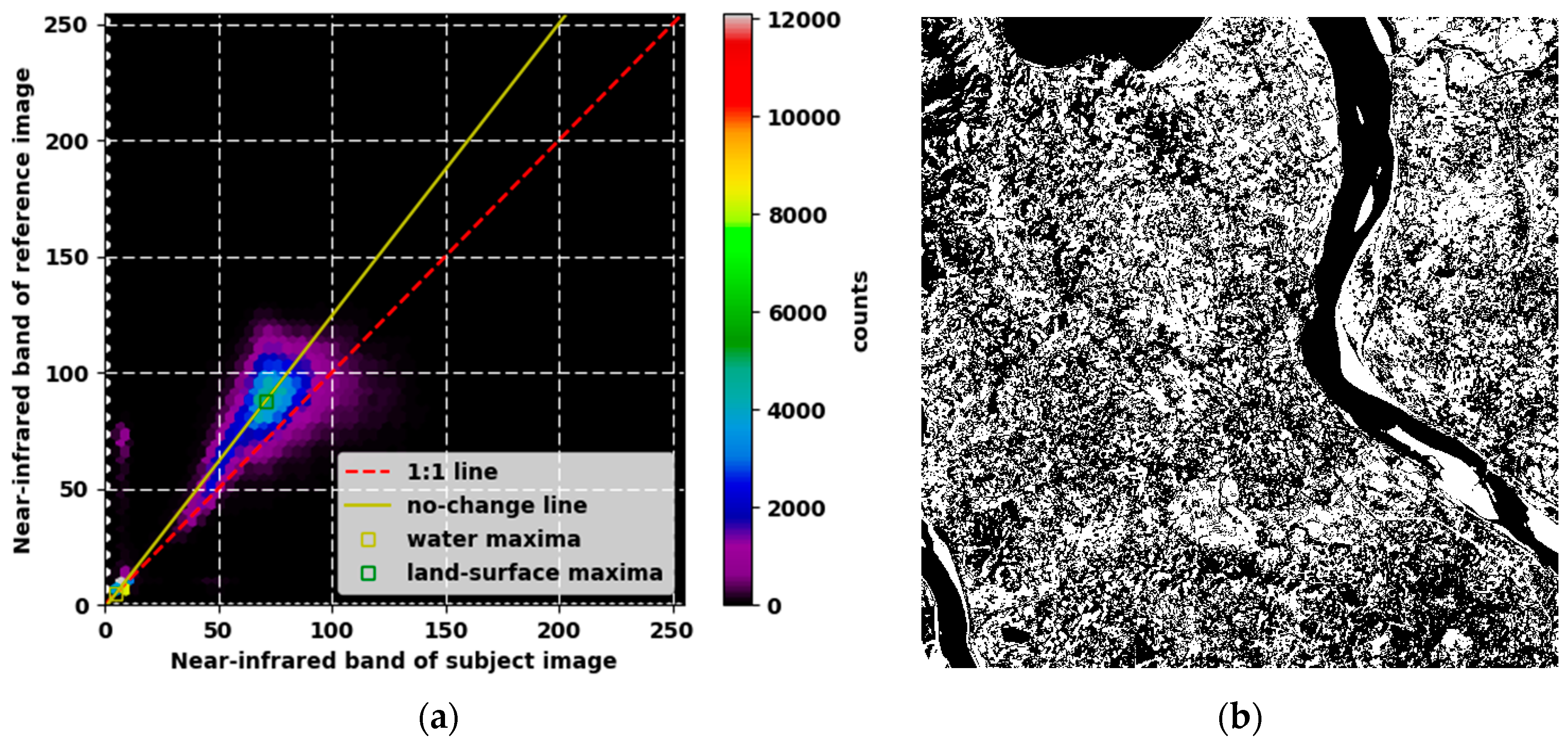

3.2.1. Automatic Detection of No-Change Pixels

3.2.2. Radiometric Normalization Using Random Forest Regression

3.2.3. Accuracy Assessment of Radiometric Normalization

3.2.4. Change Detection

4. Results

4.1. Assessment of Accuracy of the Linear Regression Method

4.2. Assessment of Accuracy of Random Forest Regression

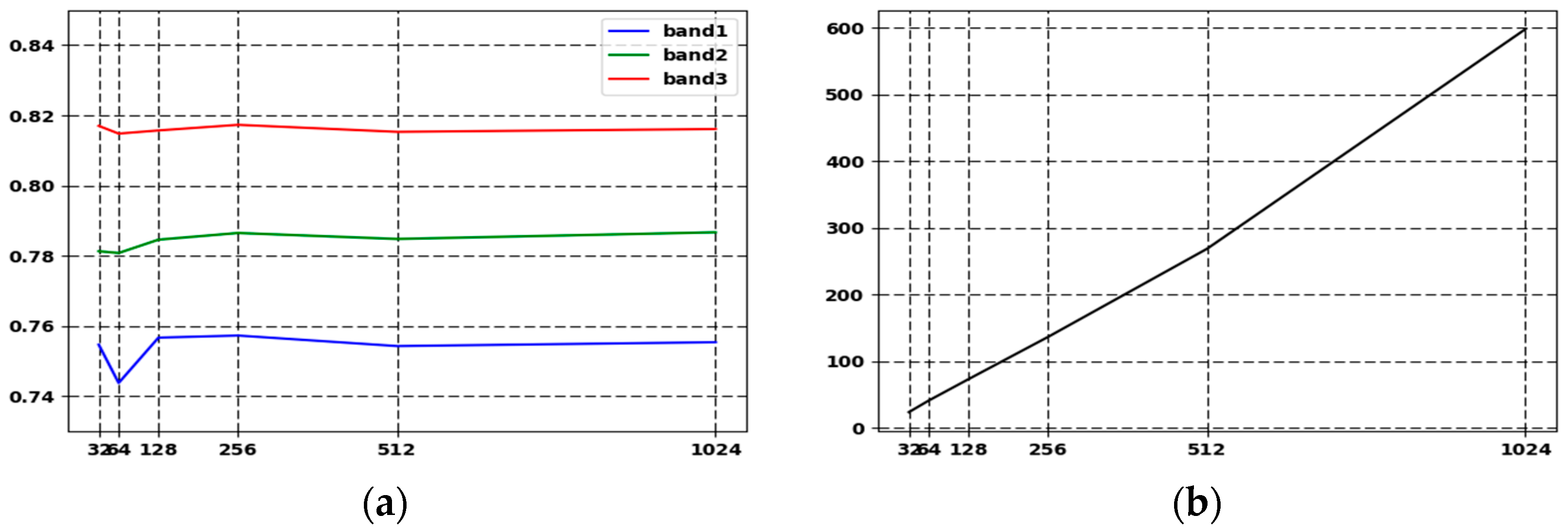

4.3. Comparison of Accuracy between Random Forest Regression and Linear Regression

4.4. Assessment of Accuracy of Other Nonlinear Regression Methods and Comparison of Accuracy with Random Forest Regression

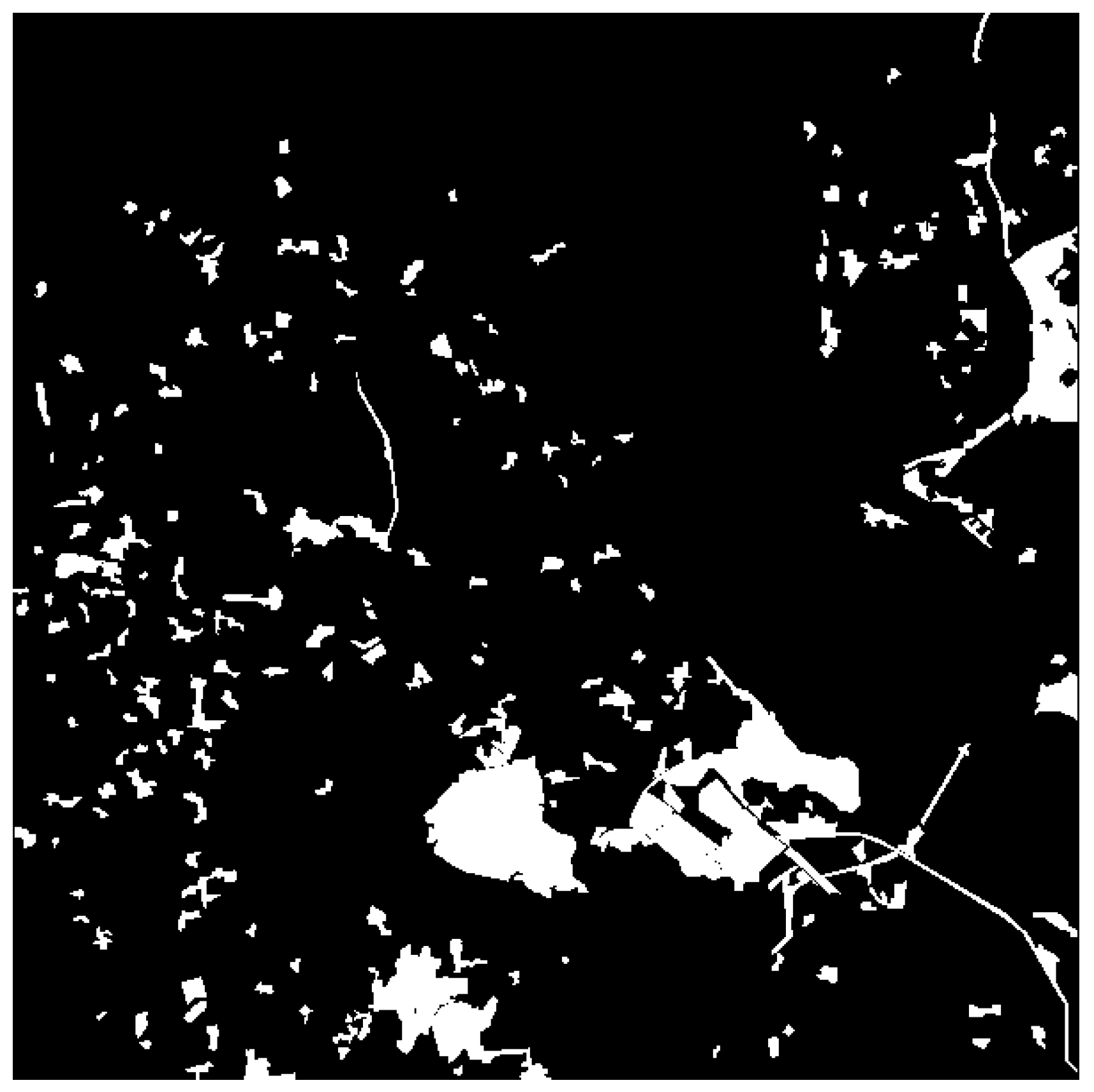

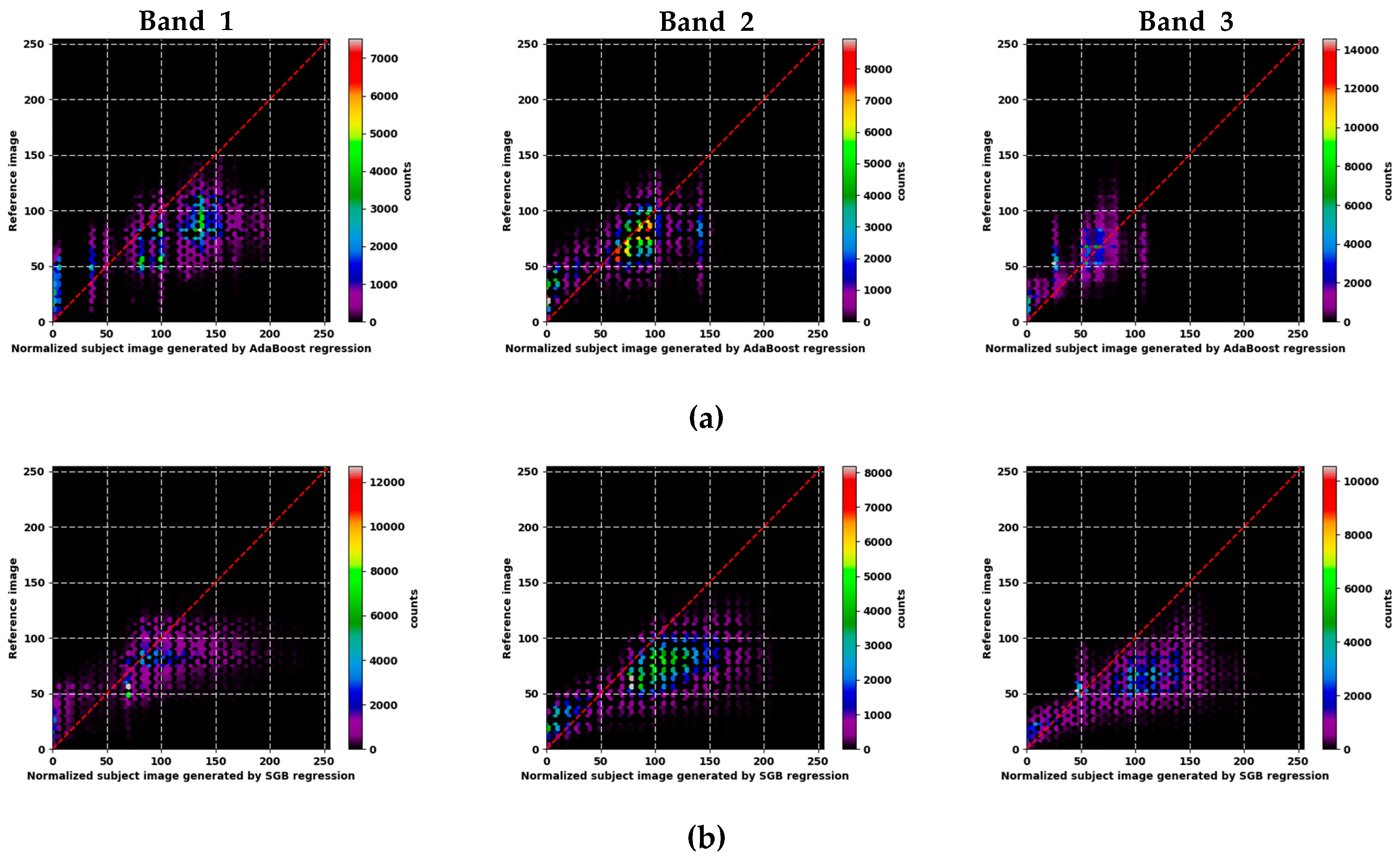

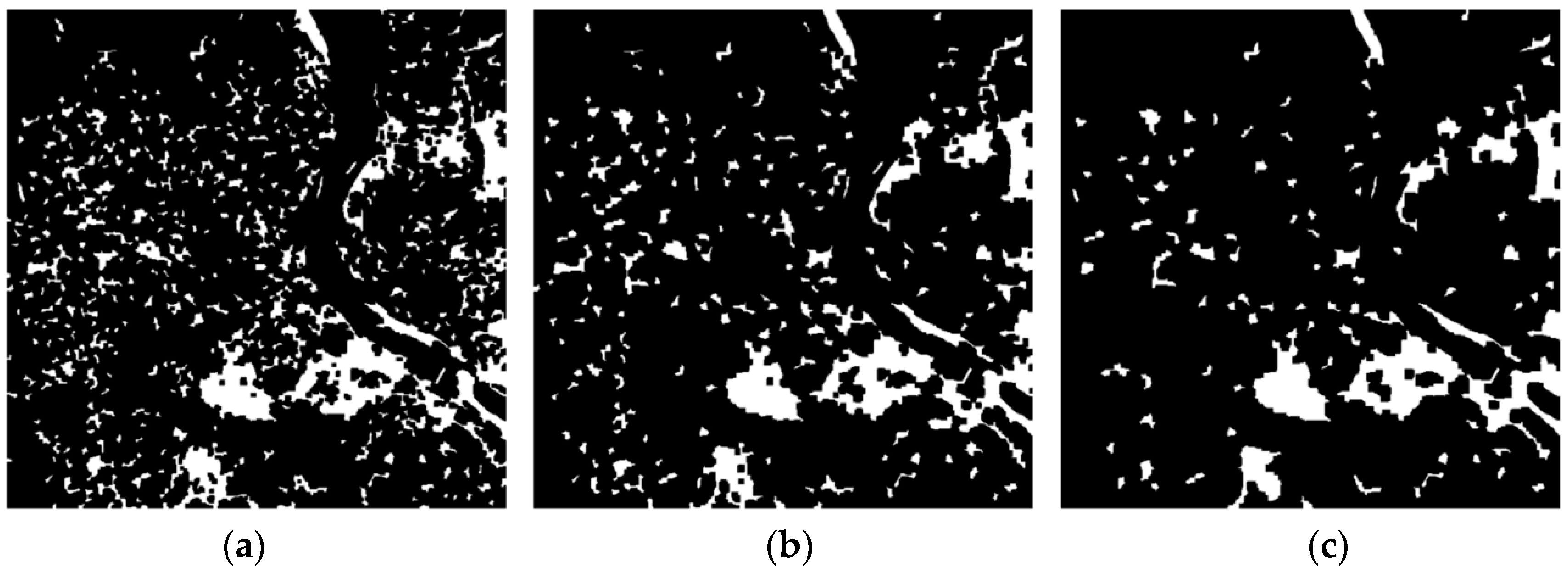

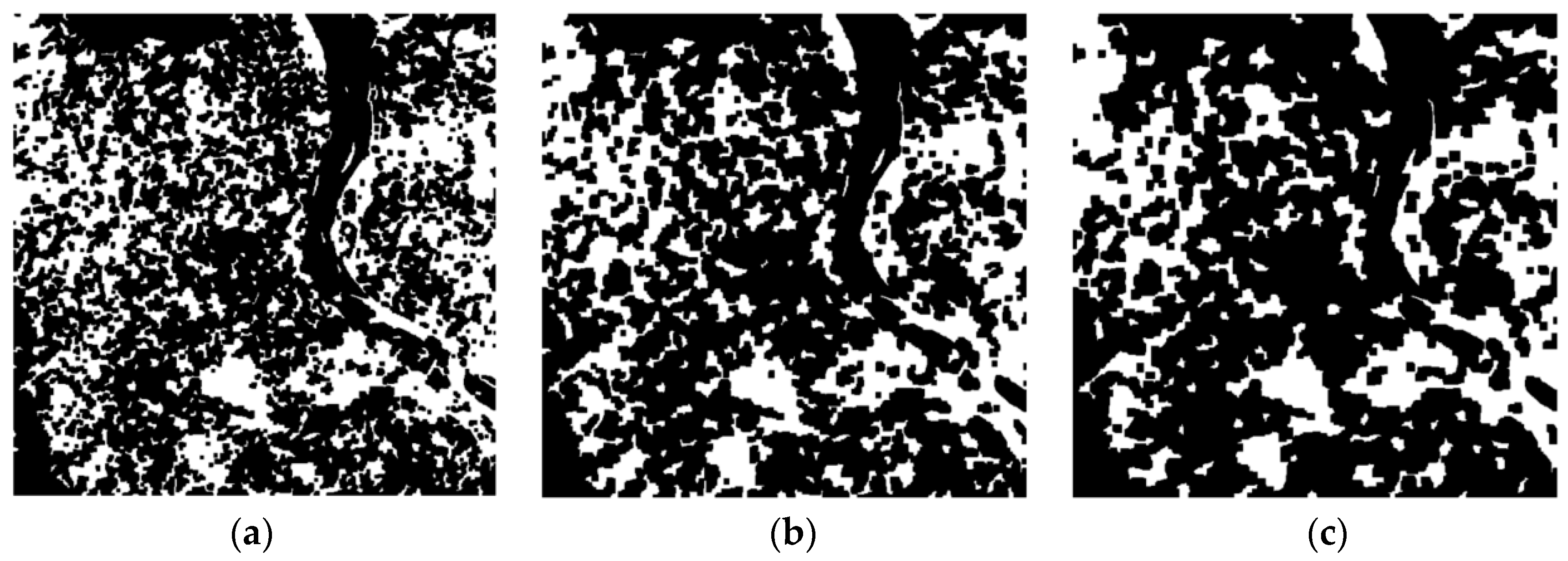

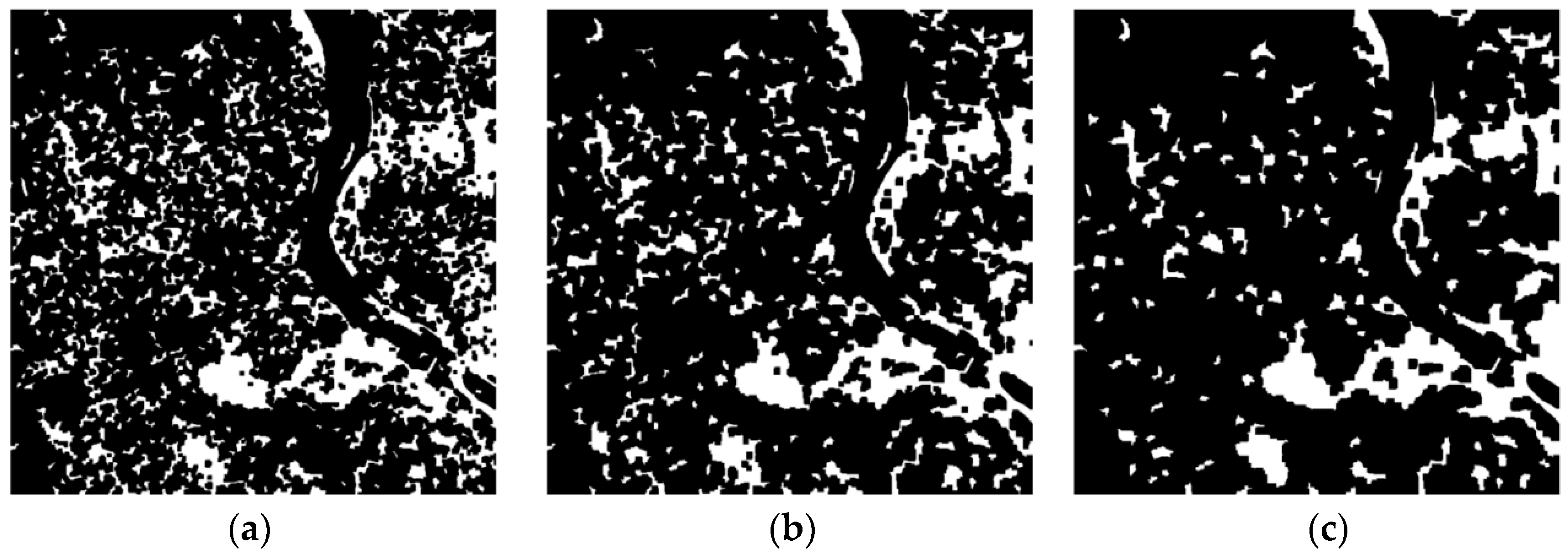

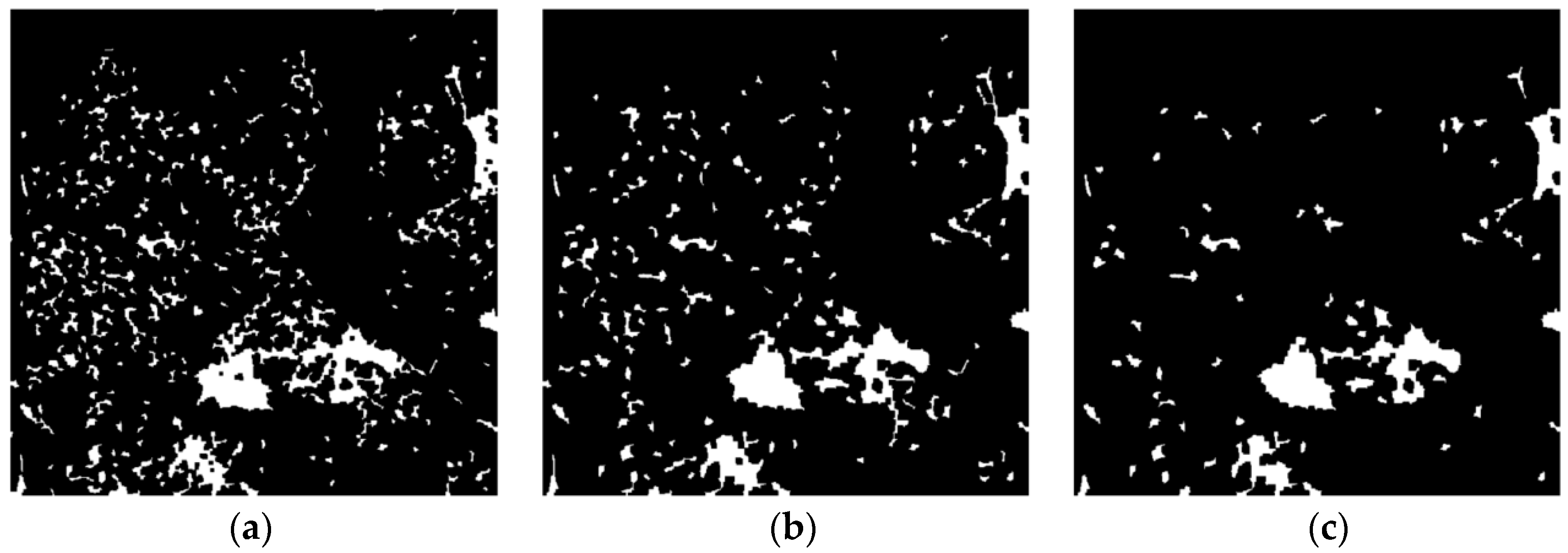

4.5. Analysis of Change Detection

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Variable | Derived from |
|---|---|
| Band 1 | Landsat 5 TM Band 1 pixel value |
| Band 2 | Landsat 5 TM Band 2 pixel value |
| Band 3 | Landsat 5 TM Band 3 pixel value |
| Band 4 | Landsat 5 TM Band 4 pixel value |
| Band 5 | Landsat 5 TM Band 5 pixel value |
| Band 7 | Landsat 5 TM Band 7 pixel value |
| GLCM (Texture) of Bands 1–3 | ASM, contrast, correlation, and entropy of 5 × 5 pixel neighborhood |
| Mean of Bands 1–3 | Mean of 5 × 5 pixel neighborhood |
| Variance of Bands 1–3 | Variance of 5 × 5 pixel neighborhood |
| Elevation | Derived from ASTER GDEM |
| Slope | Derived from ASTER GDEM using terrain function |
| Aspect | Derived from ASTER GDEM using terrain function |

| Method | Band | R² | RMSE |
|---|---|---|---|
| Raw | Band 1 | 0.2994 | 29.2566 |
| | Band 2 | 0.4219 | 29.5337 |
| | Band 3 | 0.3894 | 39.2366 |
| MS regression | Band 1 | 0.2998 | 22.9201 |
| | Band 2 | 0.4231 | 22.3732 |
| | Band 3 | 0.3915 | 27.6336 |
| SR | Band 1 | 0.2994 | 20.7462 |
| | Band 2 | 0.4219 | 20.3767 |
| | Band 3 | 0.3895 | 24.3443 |
| NC regression | Band 1 | 0.2998 | 23.0747 |
| | Band 2 | 0.4232 | 22.4726 |
| | Band 3 | 0.3915 | 28.0409 |
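
The table above scores each linearly normalized band against the reference image with R² and RMSE, and Sections 2.1.1 to 2.1.3 name the three linear models involved. As a rough illustration only, the sketch below shows one common way such gains, offsets, and scores can be computed. The function names, the toy pixel values, the hypothetical no-change indices, and the choice of squared Pearson correlation for R² are all assumptions made for this example; they are not taken from the article.

```python
from math import sqrt


def mean(values):
    return sum(values) / len(values)


def ms_regression(subject, reference):
    """Mean-standard deviation model: match the subject's mean and std to the reference."""
    mu_s, mu_r = mean(subject), mean(reference)
    sd_s = sqrt(mean([(v - mu_s) ** 2 for v in subject]))
    sd_r = sqrt(mean([(v - mu_r) ** 2 for v in reference]))
    gain = sd_r / sd_s
    return gain, mu_r - gain * mu_s


def simple_regression(subject, reference):
    """Ordinary least-squares fit of the reference on the subject."""
    mu_s, mu_r = mean(subject), mean(reference)
    cov = mean([(s - mu_s) * (r - mu_r) for s, r in zip(subject, reference)])
    var = mean([(s - mu_s) ** 2 for s in subject])
    gain = cov / var
    return gain, mu_r - gain * mu_s


def apply_linear(subject, gain, offset):
    return [gain * v + offset for v in subject]


def rmse(predicted, reference):
    return sqrt(mean([(p - r) ** 2 for p, r in zip(predicted, reference)]))


def r_squared(predicted, reference):
    """Squared Pearson correlation (an assumed definition of R²)."""
    mu_p, mu_r = mean(predicted), mean(reference)
    cov = sum((p - mu_p) * (r - mu_r) for p, r in zip(predicted, reference))
    var_p = sum((p - mu_p) ** 2 for p in predicted)
    var_r = sum((r - mu_r) ** 2 for r in reference)
    return cov * cov / (var_p * var_r)


# Toy single-band pixel values (hypothetical, exactly linear for clarity).
subject = [10.0, 20.0, 30.0, 40.0, 50.0]
reference = [25.0, 45.0, 65.0, 85.0, 105.0]
no_change = [0, 1, 3, 4]  # hypothetical indices of no-change pixels

ms_gain, ms_offset = ms_regression(subject, reference)
sr_gain, sr_offset = simple_regression(subject, reference)
# No-change regression sketched as the same least-squares fit on no-change pixels only.
nc_gain, nc_offset = simple_regression(
    [subject[i] for i in no_change], [reference[i] for i in no_change]
)

raw_rmse = rmse(subject, reference)
sr_rmse = rmse(apply_linear(subject, sr_gain, sr_offset), reference)
print(ms_gain, ms_offset, sr_gain, sr_offset, nc_gain, nc_offset)
print(raw_rmse, sr_rmse, r_squared(subject, reference))
```

In this toy case the correlation-based R² is the same before and after normalization while the RMSE drops, because a linear rescale with positive gain leaves correlation unchanged. That behavior would be consistent with R² staying nearly constant across the rows of the table while RMSE varies, although the article's exact definition of R² is not stated here.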

| Number of Trees | Band | OOB-R² | Training Time (s) |
|---|---|---|---|
| 32 | Band 1 | 0.7547 | 23.6119 |
| | Band 2 | 0.7813 | |
| | Band 3 | 0.8170 | |
| 64 | Band 1 | 0.7438 | 41.0407 |
| | Band 2 | 0.7808 | |
| | Band 3 | 0.8148 | |
| 128 | Band 1 | 0.7567 | 73.1419 |
| | Band 2 | 0.7846 | |
| | Band 3 | 0.8157 | |
| 256 | Band 1 | 0.7573 | 136.5000 |
| | Band 2 | 0.7865 | |
| | Band 3 | 0.8173 | |
| 512 | Band 1 | 0.7543 | 268.7962 |
| | Band 2 | 0.7848 | |
| | Band 3 | 0.8153 | |
| 1024 | Band 1 | 0.7554 | 598.1220 |
| | Band 2 | 0.7867 | |
| | Band 3 | 0.8161 | |
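
The OOB-R² column above is an out-of-bag score: each sample is predicted only by the ensemble members whose bootstrap sample did not contain it. The following is a toy, standard-library-only sketch of that bookkeeping. It deliberately swaps full random forest trees for one-split regression stumps on a randomly chosen feature, and it runs on synthetic data, so it illustrates the idea rather than reproducing the article's model or numbers. All names and values in it are hypothetical.

```python
import random


def avg(values):
    return sum(values) / len(values)


def fit_stump(rows, targets, feature):
    """Least-squares one-split regression stump on a single feature."""
    values = sorted({row[feature] for row in rows})
    overall = avg(targets)
    best = (float("inf"), None, overall, overall)
    for threshold in values[:-1]:  # both sides of the split stay non-empty
        left = [t for row, t in zip(rows, targets) if row[feature] <= threshold]
        right = [t for row, t in zip(rows, targets) if row[feature] > threshold]
        m_left, m_right = avg(left), avg(right)
        sse = (sum((t - m_left) ** 2 for t in left)
               + sum((t - m_right) ** 2 for t in right))
        if sse < best[0]:
            best = (sse, threshold, m_left, m_right)
    return (feature,) + best[1:]


def predict_stump(stump, row):
    feature, threshold, m_left, m_right = stump
    if threshold is None:  # feature was constant in the bootstrap sample
        return m_left
    return m_left if row[feature] <= threshold else m_right


def oob_r2(rows, targets, n_trees, seed=0):
    """Out-of-bag R² of a bagged stump ensemble (toy stand-in for a random forest)."""
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    oob_sum, oob_count = [0.0] * n, [0] * n
    for _ in range(n_trees):
        sample = [rng.randrange(n) for _ in range(n)]  # bootstrap with replacement
        in_bag = set(sample)
        feature = rng.randrange(n_features)  # random feature choice per stump
        stump = fit_stump([rows[i] for i in sample],
                          [targets[i] for i in sample], feature)
        for i in range(n):
            if i not in in_bag:  # only out-of-bag samples are scored by this stump
                oob_sum[i] += predict_stump(stump, rows[i])
                oob_count[i] += 1
    scored = [i for i in range(n) if oob_count[i] > 0]
    preds = [oob_sum[i] / oob_count[i] for i in scored]
    truth = [targets[i] for i in scored]
    mu = avg(truth)
    ss_res = sum((t - p) ** 2 for t, p in zip(truth, preds))
    ss_tot = sum((t - mu) ** 2 for t in truth)
    return 1.0 - ss_res / ss_tot


# Synthetic data: the target depends on feature 0 only; feature 1 is noise.
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random()) for _ in range(120)]
targets = [(100.0 if x0 > 0.5 else 20.0) + data_rng.gauss(0.0, 5.0)
           for x0, _ in rows]

scores = {n_trees: oob_r2(rows, targets, n_trees) for n_trees in (8, 32)}
for n_trees, score in scores.items():
    print(n_trees, round(score, 4))
```

Because the score needs no separate validation set, it is a convenient way to compare ensemble sizes, which is how the table above appears to use it.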

| Method | Band | R² | RMSE |
|---|---|---|---|
| RF regression | Band 1 | 0.9040 | 8.2260 |
| | Band 2 | 0.8982 | 8.5494 |
| | Band 3 | 0.9249 | 7.9662 |

| Method | Band | R² | RMSE |
|---|---|---|---|
| AdaBoost regression | Band 1 | 0.3902 | 10.1300 |
| | Band 2 | 0.4679 | 9.8297 |
| | Band 3 | 0.3906 | 10.1142 |
| SGB regression | Band 1 | 0.4657 | 9.8904 |
| | Band 2 | 0.4705 | 10.5055 |
| | Band 3 | 0.3856 | 10.0355 |

| Method | | Overall Accuracy (%) | User’s Accuracy, Change (%) | User’s Accuracy, No-Change (%) | Producer’s Accuracy, Change (%) | Producer’s Accuracy, No-Change (%) |
|---|---|---|---|---|---|---|
| MS | 5 × 5 | 89.49 | 43.30 | 97.23 | 72.33 | 91.11 |
| | 7 × 7 | 90.99 | 48.23 | 96.81 | 67.34 | 93.21 |
| | 9 × 9 | 91.97 | 52.79 | 96.33 | 61.56 | 94.83 |
| SR regression | 5 × 5 | 70.33 | 19.19 | 96.93 | 76.48 | 69.75 |
| | 7 × 7 | 71.33 | 19.42 | 96.72 | 74.30 | 71.05 |
| | 9 × 9 | 73.86 | 20.36 | 96.37 | 70.19 | 74.21 |
| NC regression | 5 × 5 | 82.65 | 29.59 | 97.16 | 73.97 | 83.47 |
| | 7 × 7 | 84.63 | 31.74 | 96.70 | 68.66 | 86.13 |
| | 9 × 9 | 87.04 | 35.68 | 96.30 | 63.51 | 89.25 |
| RF | 5 × 5 | 94.00 | 63.59 | 97.20 | 70.44 | 96.21 |
| | 7 × 7 | 95.30 | 74.81 | 97.05 | 74.81 | 97.84 |
| | 9 × 9 | 95.07 | 81.22 | 95.94 | 55.45 | 98.80 |
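
The accuracy columns above follow the usual two-class confusion-matrix definitions: user's accuracy divides the correctly labeled pixels of a class by everything mapped to that class, and producer's accuracy divides them by everything that belongs to that class in the reference data. A minimal sketch of that arithmetic is given here. The function name and the pixel counts are hypothetical and chosen only to make the calculation concrete; they are not the article's data.

```python
def accuracy_report(tp, fp, fn, tn):
    """Overall, user's, and producer's accuracy (%) for a change / no-change map.

    tp: mapped change,    reference change
    fp: mapped change,    reference no-change
    fn: mapped no-change, reference change
    tn: mapped no-change, reference no-change
    """
    total = tp + fp + fn + tn
    return {
        "overall": 100.0 * (tp + tn) / total,
        "user_change": 100.0 * tp / (tp + fp),
        "user_no_change": 100.0 * tn / (tn + fn),
        "producer_change": 100.0 * tp / (tp + fn),
        "producer_no_change": 100.0 * tn / (tn + fp),
    }


# Hypothetical counts for 1000 validation pixels.
report = accuracy_report(tp=70, fp=40, fn=30, tn=860)
for name, value in report.items():
    print(f"{name}: {value:.2f}")
```

With a rare change class, as in this toy example, overall accuracy can stay high even when the user's accuracy for change is low, which is why the table reports the per-class figures alongside it.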

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Seo, D.K.; Kim, Y.H.; Eo, Y.D.; Park, W.Y.; Park, H.C. Generation of Radiometric, Phenological Normalized Image Based on Random Forest Regression for Change Detection. Remote Sens. 2017, 9, 1163. https://doi.org/10.3390/rs9111163
