Multilayer Perceptron Neural Networks Model for Meteosat Second Generation SEVIRI Daytime Cloud Masking

Abstract

1. Introduction

2. Data

2.1. MSG-SEVIRI Dataset

2.2. EUMETSAT Cloud Mask

3. Methods and Algorithms

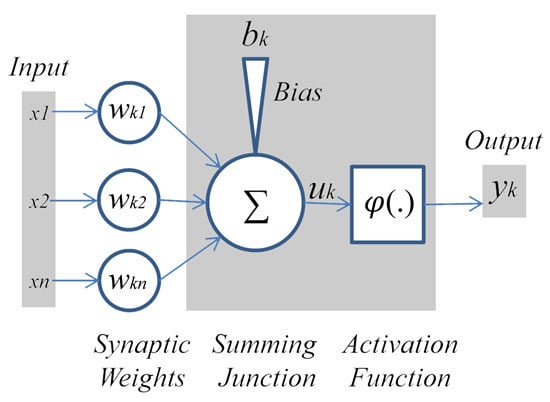

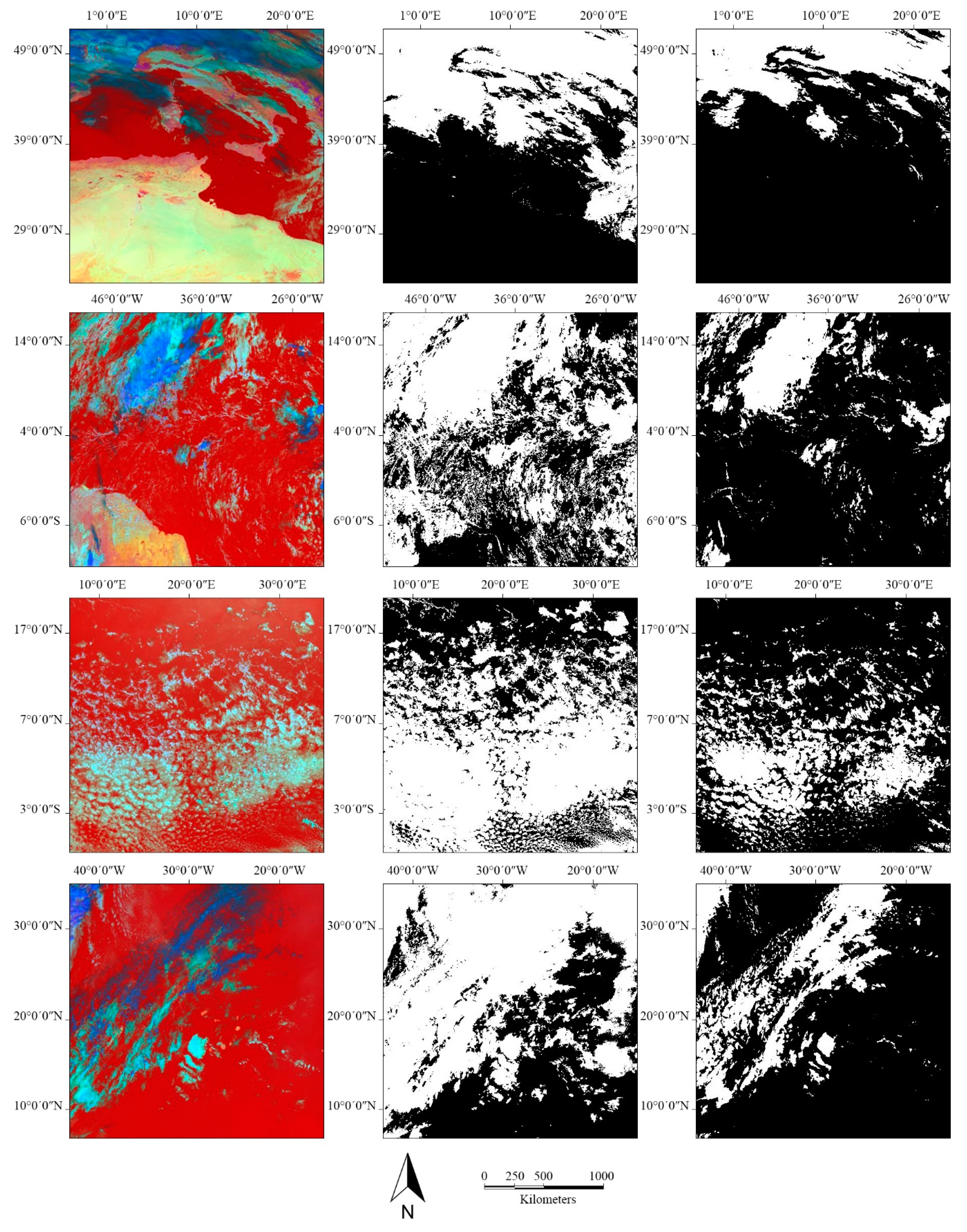

3.1. An Overview of Multilayer Perceptron Neural Network

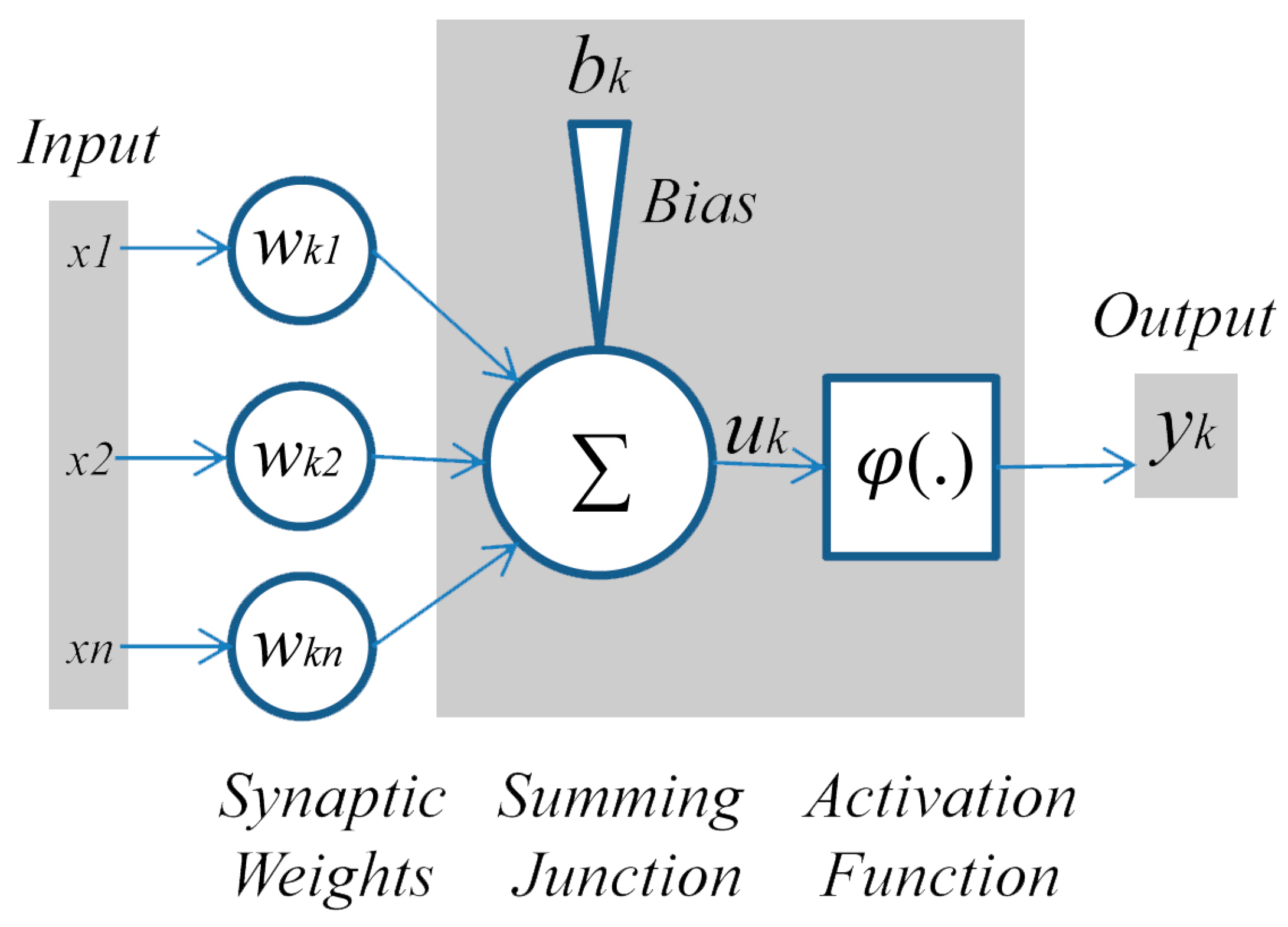

3.2. Methods

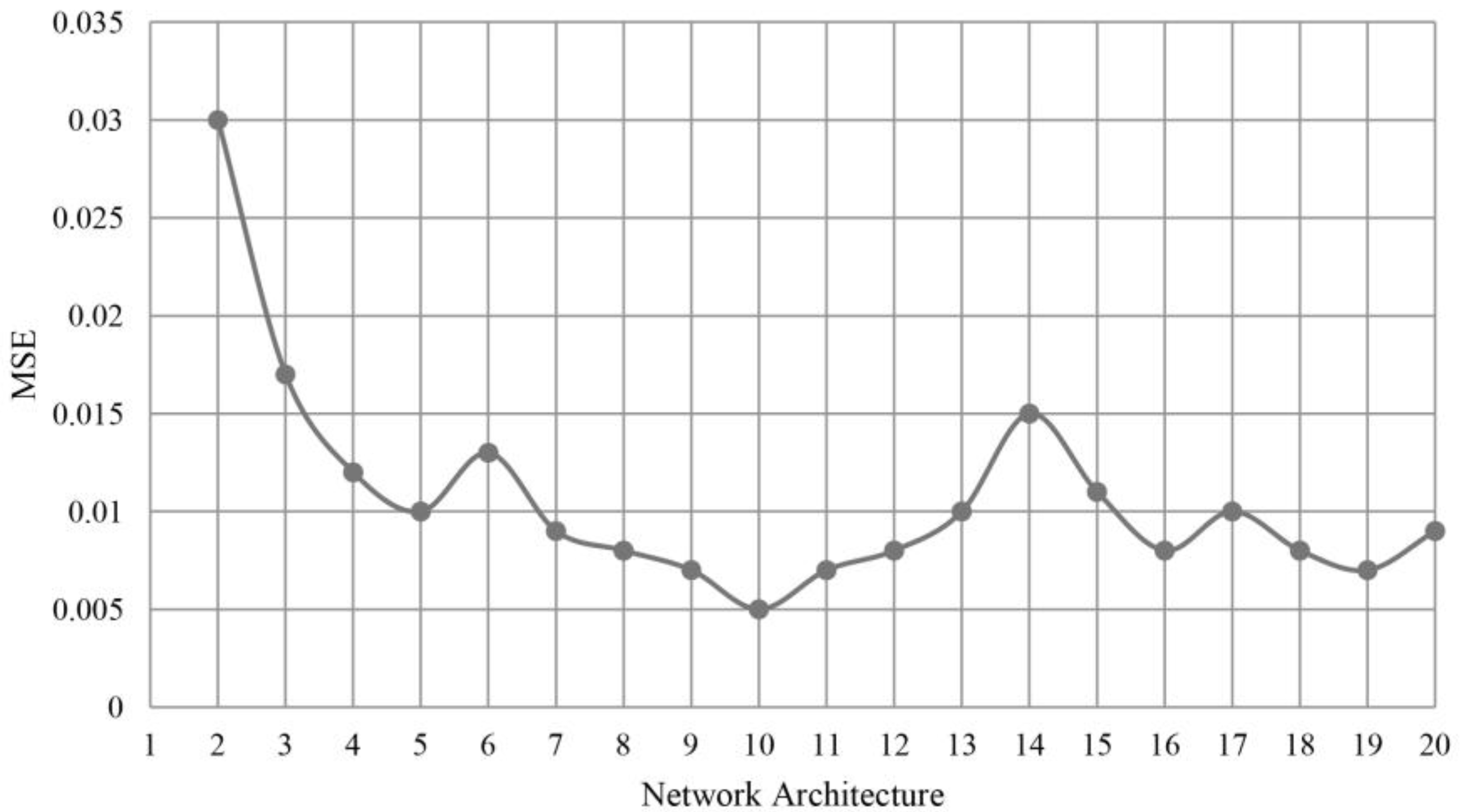

4. Discussion

| Model | Mean | Min | Max | St.Dev | MeanComm | MeanOmm |
|---|---|---|---|---|---|---|
| MLP NN | 88.96 | 85 | 91.8 | 1.68 | 3.88 | 11.04 |
| MPEF CLM | 86.10 | 82 | 89.2 | 2.47 | 7.27 | 13.90 |

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Taravat, A.; Proud, S.; Peronaci, S.; Del Frate, F.; Oppelt, N. Multilayer Perceptron Neural Networks Model for Meteosat Second Generation SEVIRI Daytime Cloud Masking. Remote Sens. 2015, 7, 1529-1539. https://doi.org/10.3390/rs70201529
