Multi-Sensor As-Built Models of Complex Industrial Architectures

Abstract

1. Introduction

1.1. Industrial Context

1.2. Contributions

- A state of the art of tools and methods for the acquisition, processing and georeferencing of as-built datasets under the specific conditions of complex indoor facilities, Subsections 2.1 and 2.2;

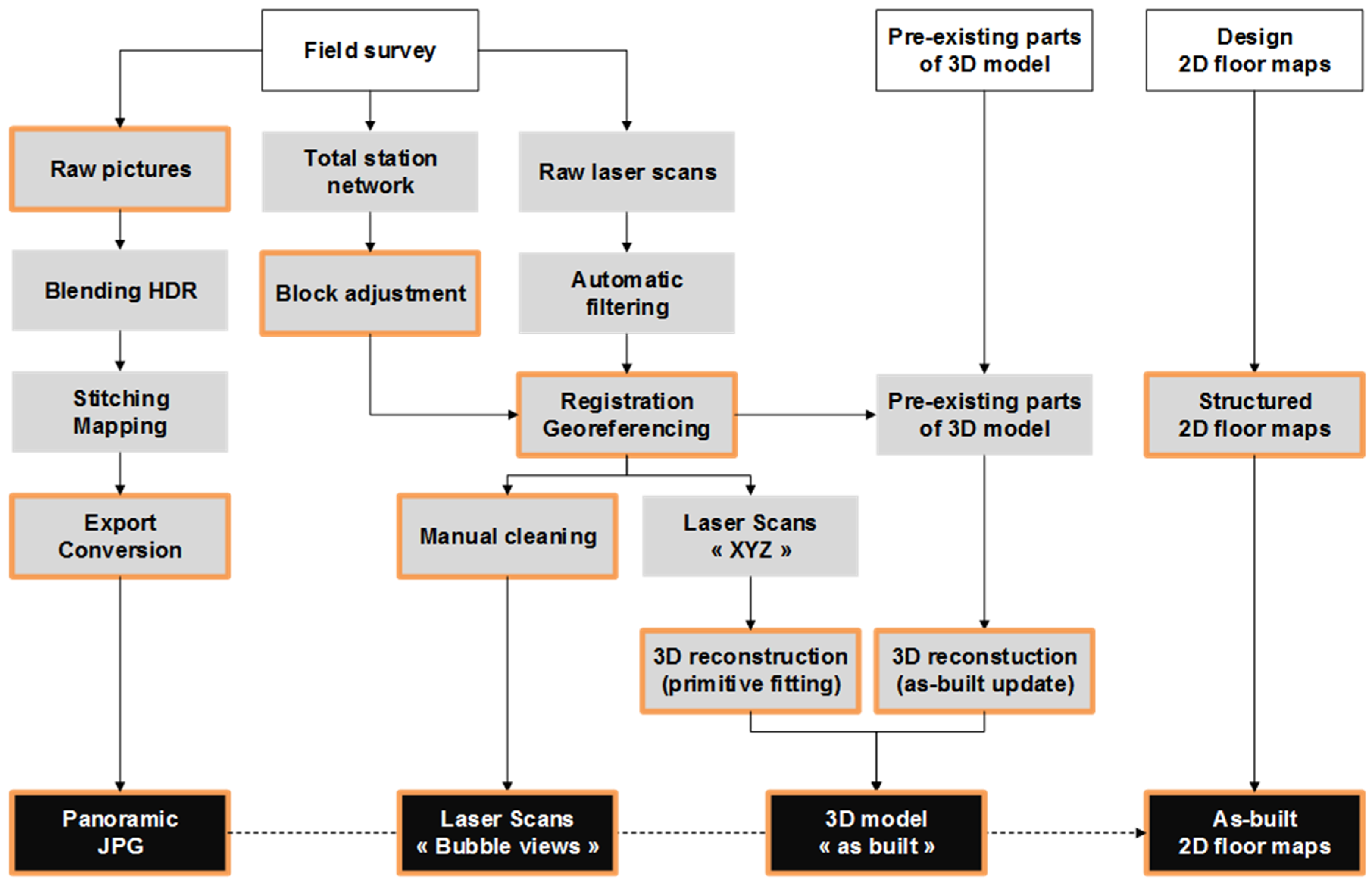

- The proposal of a global method for multi-sensor acquisition and processing to represent complex architectures, Subsection 2.3 and Figure 2;

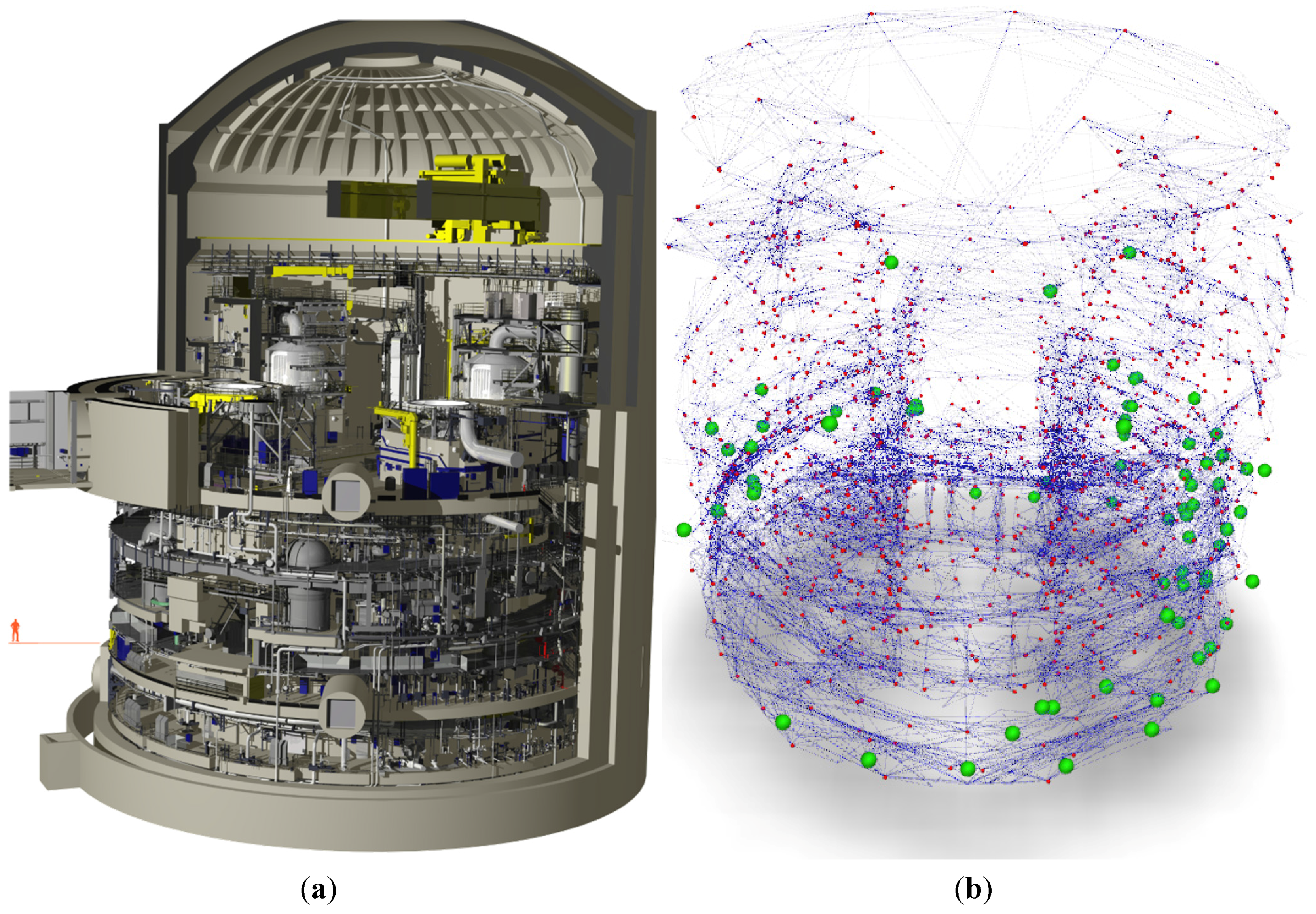

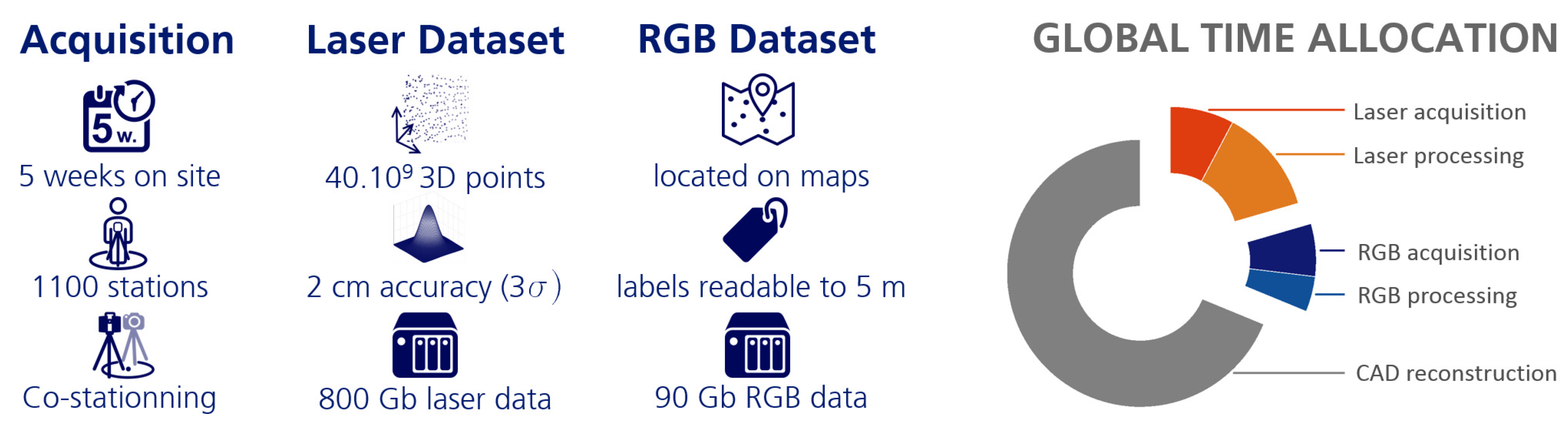

- Settings, processes and feedback from a large-scale multi-sensor scanning survey experiment in a whole 1300-megawatt nuclear reactor building (1000+ stations of both TLS and panoramic images), highlighting the role of human beings in the process, Section 3;

- A new interactive tool for pose estimation of panoramic images, Section 4;

- Recommendations and examples for developing dedicated applications for virtual tours of complex architectures that use multiple data types, in order to increase the value of the dataset and meet users’ requirements, Section 5;

- An overview of the remaining bottlenecks and challenges in view of the generalization of large, dense, multi-sensor scanning surveys, Section 6.

2. State of the Art in 3D Surveying for the Industry

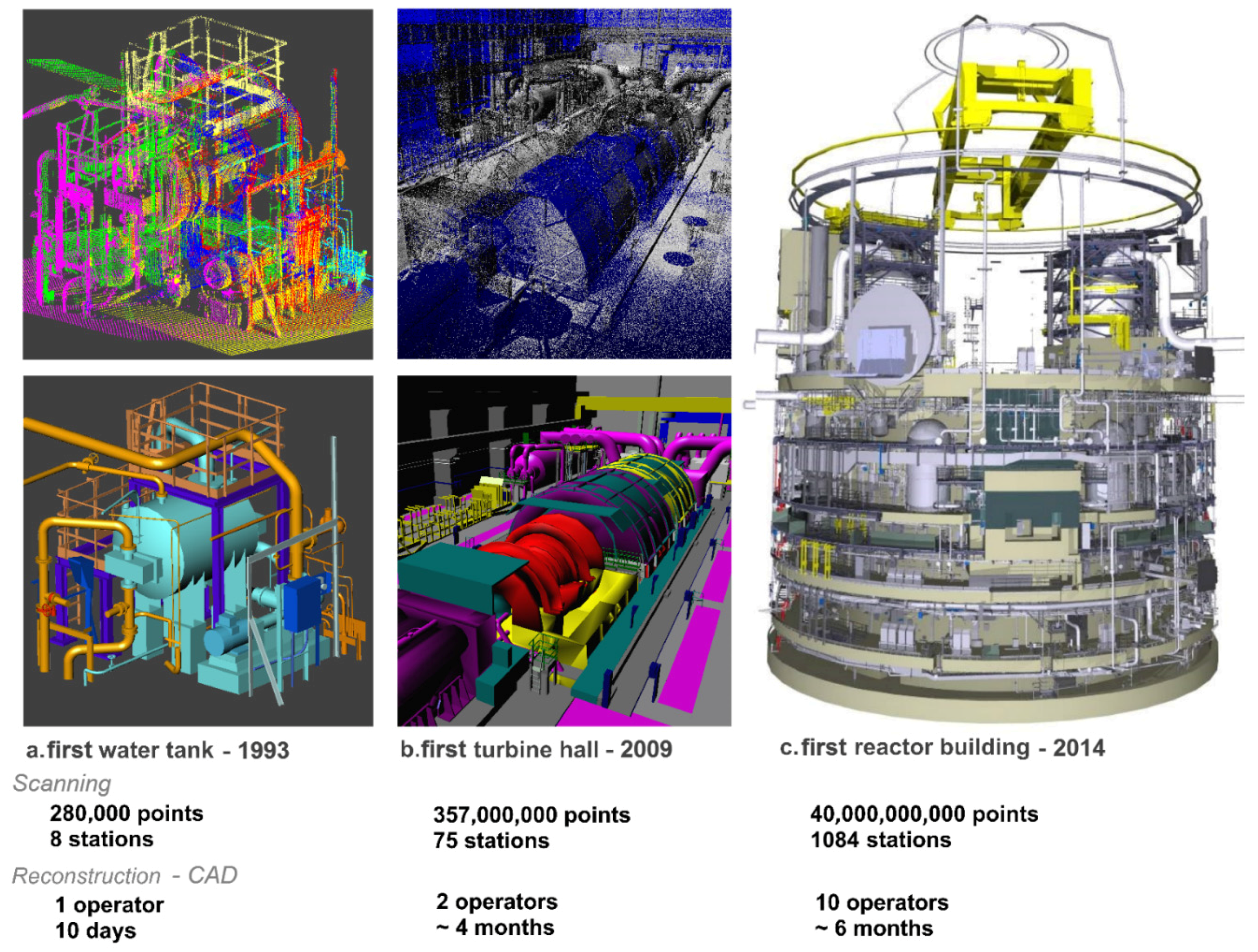

2.1. Scanning Industrial Interiors, a 20-Year-Old Challenge

- (1) Multi-sensor datasets that describe both the geometry and the appearance of a facility;

- (2) Fast and accurate sensors that cope with dark (low-albedo) or highly reflective surfaces;

- (3) Automated pipelines for conversion and formatting;

- (4) Efficient large-scale tools for registration and processing;

- (5) User-friendly diagnostic tools to meet high quality requirements on large and complex datasets;

- (6) Dedicated solutions for the intuitive exploration and use of multi-sensor datasets.

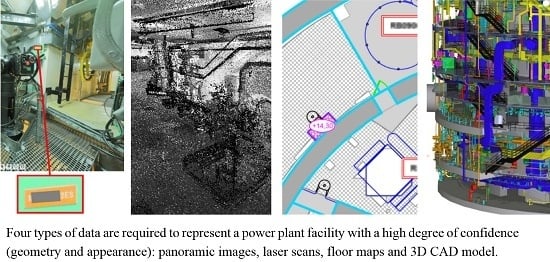

2.2. Requirement Engineering for As-Built Datasets of Complex Architectures

- (1) Panoramic images, making it possible to read every equipment tag visible up to 5 m from the main pathways in the facility, as well as to understand the overall architecture of the building;

- (2) Georeferenced terrestrial laser scans, delivering local sub-centimeter geometric accuracy on distance measurements, as well as global 3D locations with less than 2 cm of deviation from the ground truth (2.58σ tolerance);

- (3) 2D floor maps, with all elements relating to the structure of the building (walls, floors, ladders, stairs, etc.), as well as landmarks to help pedestrians navigate the plant;

- (4) 3D as-built CAD models with object categories, capturing empty areas or open walking spaces with less than 5 cm of error (2.58σ tolerance), as well as the shape of the main equipment of the facility.
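The 2.58σ tolerances above can be read, under the usual assumption of normally distributed errors, as two-sided 99% bounds. The 2 cm requirement then corresponds to a standard deviation of about 7.8 mm, and the 5 cm requirement to about 19 mm. The following sketch makes that conversion explicit; `within_tolerance` is a hypothetical helper for checking deviations measured at check points, not a procedure taken from the paper.

```python
from statistics import NormalDist

K = 2.58  # coverage factor used in the requirements (2.58 sigma)


def sigma_from_tolerance(tolerance_m, k=K):
    """Standard deviation implied by a k-sigma tolerance (metres)."""
    return tolerance_m / k


def coverage(k=K):
    """Two-sided probability that a normal error stays within +/- k sigma."""
    return 2 * NormalDist().cdf(k) - 1


def within_tolerance(deviations_m, tolerance_m):
    """Fraction of check-point deviations whose magnitude is within the tolerance."""
    ok = sum(1 for d in deviations_m if abs(d) <= tolerance_m)
    return ok / len(deviations_m)


print(round(sigma_from_tolerance(0.02) * 1000, 1))  # laser scans: sigma in mm
print(round(sigma_from_tolerance(0.05) * 1000, 1))  # CAD model: sigma in mm
print(round(coverage(), 4))                          # about 0.99
```

If errors really are normal, roughly 99% of check points should fall within the tolerance; a markedly lower fraction suggests either a larger σ than required or systematic errors.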

| Type of Data | Criteria |
|---|---|
| Panoramic images | completeness, location of stations, field of view, resolution and noise level of digital images, white balance and high dynamic range (HDR), naming, approximate poses, vertical estimation and mapping |
| Georeferenced and cleaned laser scans | completeness, location of stations, block structure of sub-networks, targets for registration, reference targets for georeferencing, field of view, resolution, signal and total station traverse survey |
| 2D floor maps | completeness, geometry, naming and formatting |
| 3D as-built CAD model | completeness, categories of objects, type of shapes, geometric accuracies by category, names and structure |
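As a rough check of the tag-reading requirement for panoramic images, the angular resolution of a panorama bounds the detail visible at a given distance. The sketch below assumes a 2:1 equirectangular projection and uses the 450-Mpixel panorama size mentioned in Section 3.3; it ignores lens blur, noise and oblique viewing, which all reduce legibility in practice.

```python
import math


def equirect_width(total_pixels):
    """Width of a 2:1 equirectangular panorama (height = width / 2): w = sqrt(2 N)."""
    return math.sqrt(2 * total_pixels)


def footprint_mm(total_pixels, distance_m):
    """Approximate size covered by one pixel on a surface facing the camera."""
    width = equirect_width(total_pixels)
    angular_res = 2 * math.pi / width  # radians per pixel along the equator
    return distance_m * angular_res * 1000


print(equirect_width(450e6))              # 30000 x 15000 pixels
print(round(footprint_mm(450e6, 5.0), 2))  # pixel footprint at 5 m, in mm
```

At 5 m, one pixel of a 450-Mpixel panorama covers about 1 mm, so a (hypothetical) tag character 10 mm high spans roughly ten pixels.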

2.3. State of the Art of Large-Scale Dense Scanning Surveys

2.3.1. Panorama Acquisition

2.3.2. 3D Point Cloud Acquisition and Registration

2.3.3. 3D CAD Reconstruction

3. Experiment of a Multi-Sensor Survey in a 1300-MW Nuclear Reactor

3.1. Goals and Context of the Experiment

3.2. A Level 2 Procedure for the Quality Control of Large Datasets

- Level 1: the data creator checks 100% of the dataset and documents it;

- Level 2: the project data manager performs spot checks both on areas of interest and randomly on the whole dataset.
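The Level 2 spot-check policy could be sketched as follows: every station located in an area of interest is checked, plus a random fraction of the other stations. The function name, the `rate` parameter and the fixed seed are assumptions for illustration; the paper does not specify a sampling rate.

```python
import random


def level2_sample(station_ids, priority_ids, rate, seed=0):
    """Pick stations for Level 2 spot checks (hypothetical helper).

    station_ids: every station of the survey.
    priority_ids: stations located in areas of interest, always checked.
    rate: fraction of the remaining stations drawn at random.
    """
    priority = set(priority_ids)
    focus = [s for s in station_ids if s in priority]
    others = [s for s in station_ids if s not in priority]
    k = min(len(others), max(1, round(rate * len(others)))) if others else 0
    # A fixed seed makes the control plan reproducible and easy to document
    rng = random.Random(seed)
    return focus + rng.sample(others, k)


stations = [f"S{i:04d}" for i in range(1000)]
checked = level2_sample(stations, stations[:20], rate=0.05)
print(len(checked))
```

With 1000 stations, 20 of them in areas of interest and `rate=0.05`, this selects the 20 priority stations plus 49 random ones.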

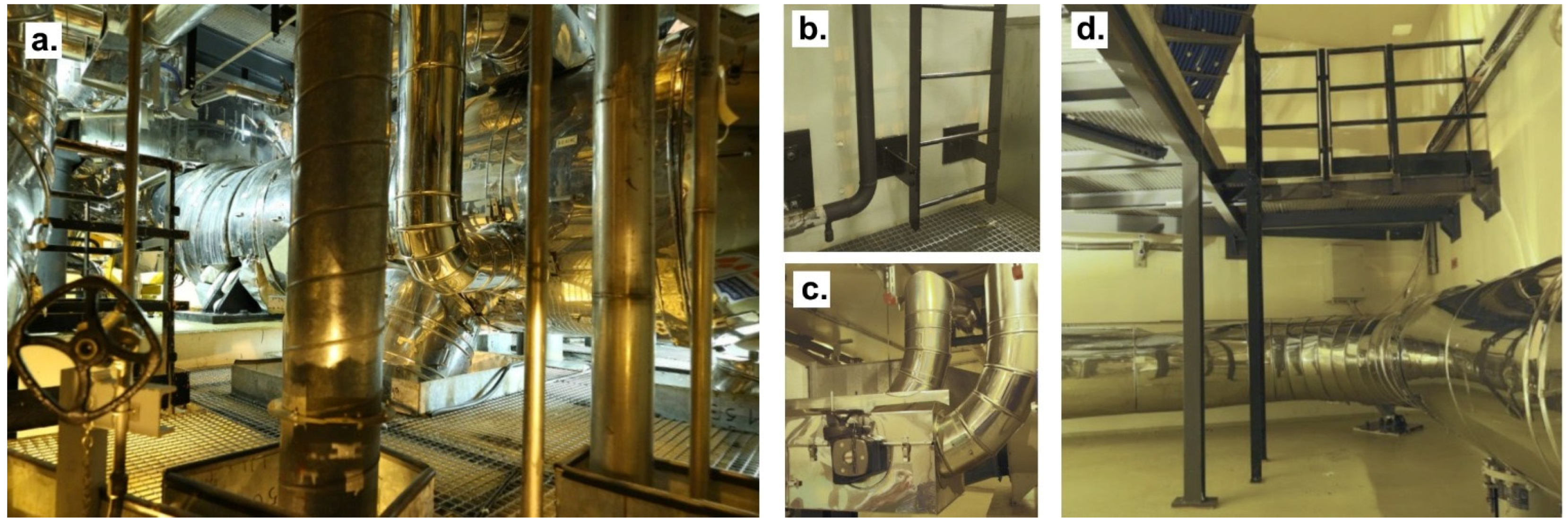

3.3. Description of the Data Acquisition

- Several tests on TLS sensors have shown that, even today, scanning dark surfaces is inefficient, and reflective surfaces produce billions of erroneous 3D points that current firmware and software cannot filter. These points are an issue for 3D reconstruction, but above all for taking measurements in so-called “bubble views” (station-based views), where no feedback on the real position of the 3D points is given. Our solution was to develop an efficient interface for the manual segmentation of these outliers (Figure 7).

- Without better algorithms for the fine referencing of TLS datasets and large 3D networks, the only way to comply with a global tolerance of 2 cm (2.58σ) over the whole dataset is to create sub-networks of fewer than 200 stations, each independently referenced to the external reference system. This constraint requires thorough on-site planning for placing and surveying targets. Recent developments have shown that better automation and quality can be expected from blunder detection by data snooping (e.g., for targets that have moved) and from the robust estimation methods well known to geodesists [36].

- When producing 450-Mpixel panoramas of indoor scenes, the field of view of each unit image is quite small, which can lead to a lack of feature points when only uniform objects, such as a painted wall, are visible in the picture. The Level 2 control procedure led to 20% of the panoramic stitches being reopened for editing;

- Another recurrent error in panoramic images concerns the estimation of the vertical from the images themselves. In congested environments, default settings can lead to errors of up to 20° in this estimate. We recommend either using leveled panoramic heads with custom settings for estimating the relative positions of unit images, or using the vertical-definition tools of the software interface;

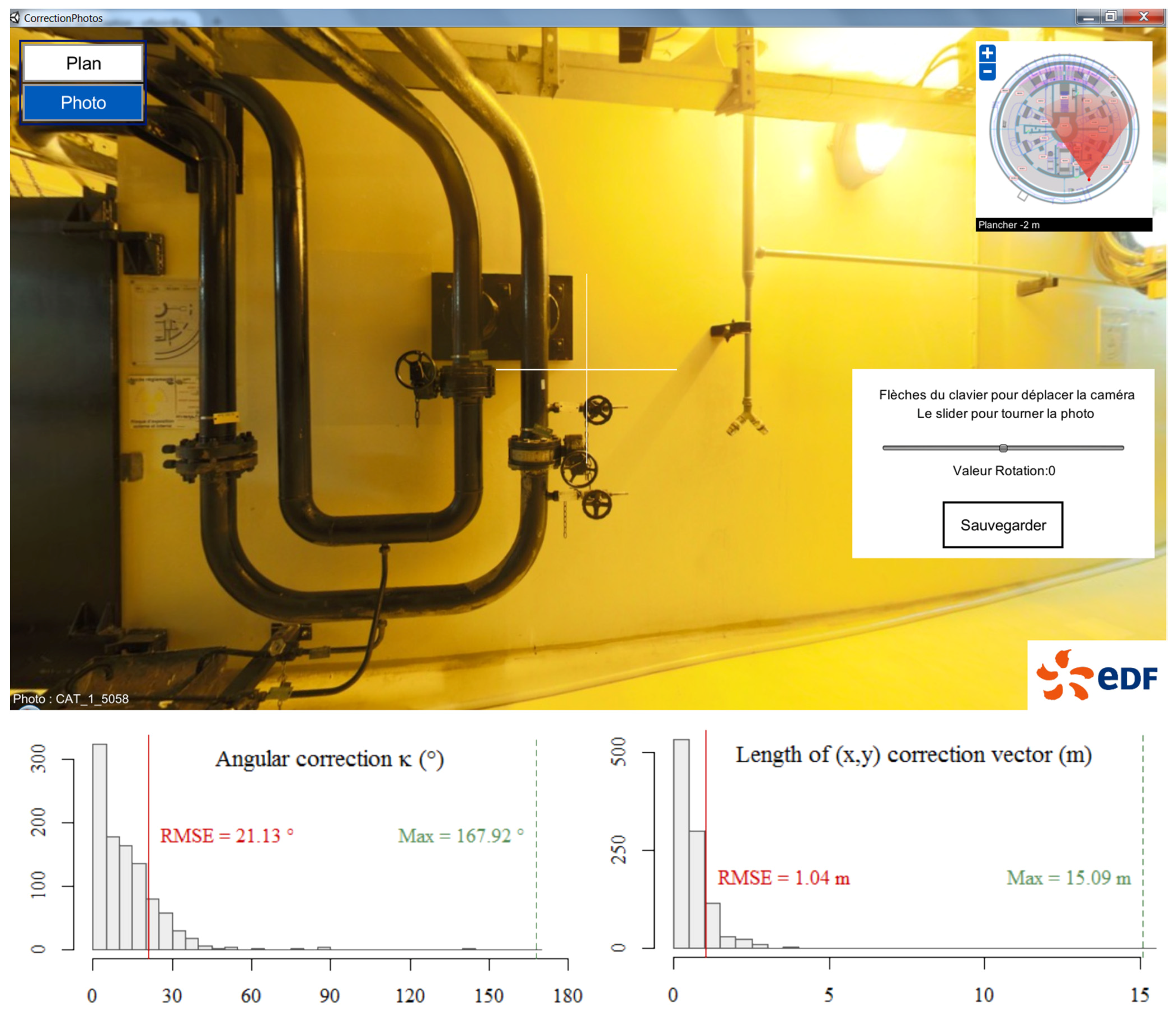

- A major issue is the registration of the panoramic images in the external reference frame (Figure 8). Fine registration of panoramic images is valuable for assisting the quality control of 3D reconstructions and offers a better user experience when browsing the dataset; we address this issue in Section 4.
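To illustrate why robust estimation helps when a target has moved, the sketch below estimates a translation between matched targets in a scan frame and in the external reference frame, using iteratively reweighted least squares (IRLS) with Huber weights. This is a deliberate simplification: a real georeferencing adjustment estimates a full rigid-body (or similarity) transform over a network, and the estimator in [36] may differ. The threshold `k` and the sample coordinates are hypothetical.

```python
import math


def robust_translation(scan_pts, ref_pts, k=0.01, iterations=20):
    """Estimate t such that ref ~= scan + t, using Huber-weighted IRLS.

    scan_pts, ref_pts: matched target coordinates (metres) in the scan frame
    and in the external reference frame. k: Huber threshold in metres.
    """
    weights = [1.0] * len(scan_pts)
    for _ in range(iterations):
        total = sum(weights)
        # Weighted least squares for a pure translation = weighted mean offset
        t = [sum(w * (r[i] - s[i]) for w, s, r in zip(weights, scan_pts, ref_pts)) / total
             for i in range(3)]
        residuals = [math.dist([s[i] + t[i] for i in range(3)], r)
                     for s, r in zip(scan_pts, ref_pts)]
        # Huber weights: full weight within k, weight k / e beyond it
        weights = [1.0 if e <= k else k / e for e in residuals]
    return t, residuals, weights


# Hypothetical example: true offset (1, 2, 3); the last target moved by 0.5 m in x
scan = [(0, 0, 0), (5, 0, 0), (0, 5, 0), (5, 5, 1), (2, 3, 2), (4, 1, 0.5)]
ref = [(x + 1.0, y + 2.0, z + 3.0) for x, y, z in scan]
ref[5] = (ref[5][0] + 0.5, ref[5][1], ref[5][2])
t, residuals, weights = robust_translation(scan, ref)
print([round(v, 4) for v in t], round(weights[5], 3))
```

With five consistent targets and one displaced by 0.5 m, an unweighted mean would bias the x offset by about 8.3 cm (0.5/6), whereas the Huber weights reduce the bias to about 2 mm and give the displaced target a weight near 0.02, which flags it for inspection.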

- (1) Redraw existing floor maps in AutoCAD, following specific drawing rules and using only two types of objects: polylines and blocks (manual);

- (2) Compare the 3D as-built model and panoramic images with the floor plans in a specific tool developed in Unity3D, and update the plans in AutoCAD accordingly (manual);

- (3) Convert the polygons of the floor maps to Scalable Vector Graphics (SVG) files (automatic) and export the blocks to XLS using EXTATTBE in AutoCAD (automatic);

- (4) In a dedicated C# tool, instantiate a class model of the objects of the building:

  - For each floor:

    - import the SVG and XLS files to instantiate a relational model of the building (floors, ladders, walls, etc.), including checks against the drawing rules;

    - using a polygon clipping algorithm from the Clipper library [38] and a tolerance threshold, compute relations between objects, such as adjacency, inclusion, etc.

  - For each pair of floors:

    - connect objects such as elevators, ladders and stairs;

    - using the Clipper library, compute vertical relationships between objects.

- (5) Export the instantiated building model to a topological graph in XML, with a description of the building model in XML Schema Definition (XSD).
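Steps (4) and (5) can be sketched in Python rather than C#, under two simplifying assumptions: every object is reduced to an axis-aligned rectangle (the actual tool clips arbitrary polygons with the Clipper library [38]), and the XML element and attribute names (`building`, `object`, `relation`) are hypothetical, since the XSD is not reproduced here. The tolerance `tol` plays the role of the threshold, and the set of connector kinds is also an assumption.

```python
import math
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from itertools import combinations

CONNECTORS = {"ladder", "stairs", "elevator", "hatch"}  # hypothetical object kinds


@dataclass(frozen=True)
class Footprint:
    """Axis-aligned footprint of a floor-map object, in metres."""
    name: str
    kind: str
    floor: int
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def within(a, b, tol):
    """True if footprint a lies inside b, allowing a drawing tolerance tol."""
    return (a.xmin >= b.xmin - tol and a.xmax <= b.xmax + tol
            and a.ymin >= b.ymin - tol and a.ymax <= b.ymax + tol)


def relation(a, b, tol=0.05):
    """Relation of a to b: inside, contains, overlap, adjacent, or None."""
    if within(a, b, tol):
        return "inside"
    if within(b, a, tol):
        return "contains"
    w = min(a.xmax, b.xmax) - max(a.xmin, b.xmin)  # overlap width (negative: gap)
    h = min(a.ymax, b.ymax) - max(a.ymin, b.ymin)  # overlap height (negative: gap)
    if w > tol and h > tol:
        return "overlap"
    gap = math.hypot(max(-w, 0.0), max(-h, 0.0))
    return "adjacent" if gap <= tol else None


def build_graph(footprints, tol=0.05):
    """Relations on each floor, plus vertical links between connectors of adjacent floors."""
    edges = []
    for a, b in combinations(footprints, 2):
        rel = relation(a, b, tol)
        if a.floor == b.floor and rel:
            edges.append((a.name, rel, b.name))
        elif (abs(a.floor - b.floor) == 1 and a.kind in CONNECTORS
              and b.kind in CONNECTORS and rel in ("inside", "contains", "overlap")):
            edges.append((a.name, "vertical", b.name))  # e.g. a ladder under a hatch
    return edges


def to_xml(footprints, edges):
    """Serialize objects and relations as a simple XML topological graph."""
    root = ET.Element("building")
    for f in footprints:
        ET.SubElement(root, "object", id=f.name, kind=f.kind, floor=str(f.floor))
    for source, rel, target in edges:
        ET.SubElement(root, "relation", type=rel, source=source, target=target)
    return ET.tostring(root, encoding="unicode")


plan = [Footprint("R1", "room", 0, 0, 0, 4, 3), Footprint("W1", "wall", 0, 4, 0, 4.2, 3),
        Footprint("P1", "equipment", 0, 1, 1, 2, 2), Footprint("L0", "ladder", 0, 3, 1, 3.5, 1.5),
        Footprint("H1", "hatch", 1, 2.9, 0.9, 3.6, 1.6)]
edges = build_graph(plan)
print(to_xml(plan, edges))
```

The tolerance matters because hand-drawn walls and rooms rarely share coordinates exactly: a wall drawn a couple of centimetres into a room is still classified as adjacent rather than overlapping.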

3.4. Summary of Tool Development during the Project

4. Example of Specific Development to Reach Quality Expectations for Referencing Panoramic Images Precisely

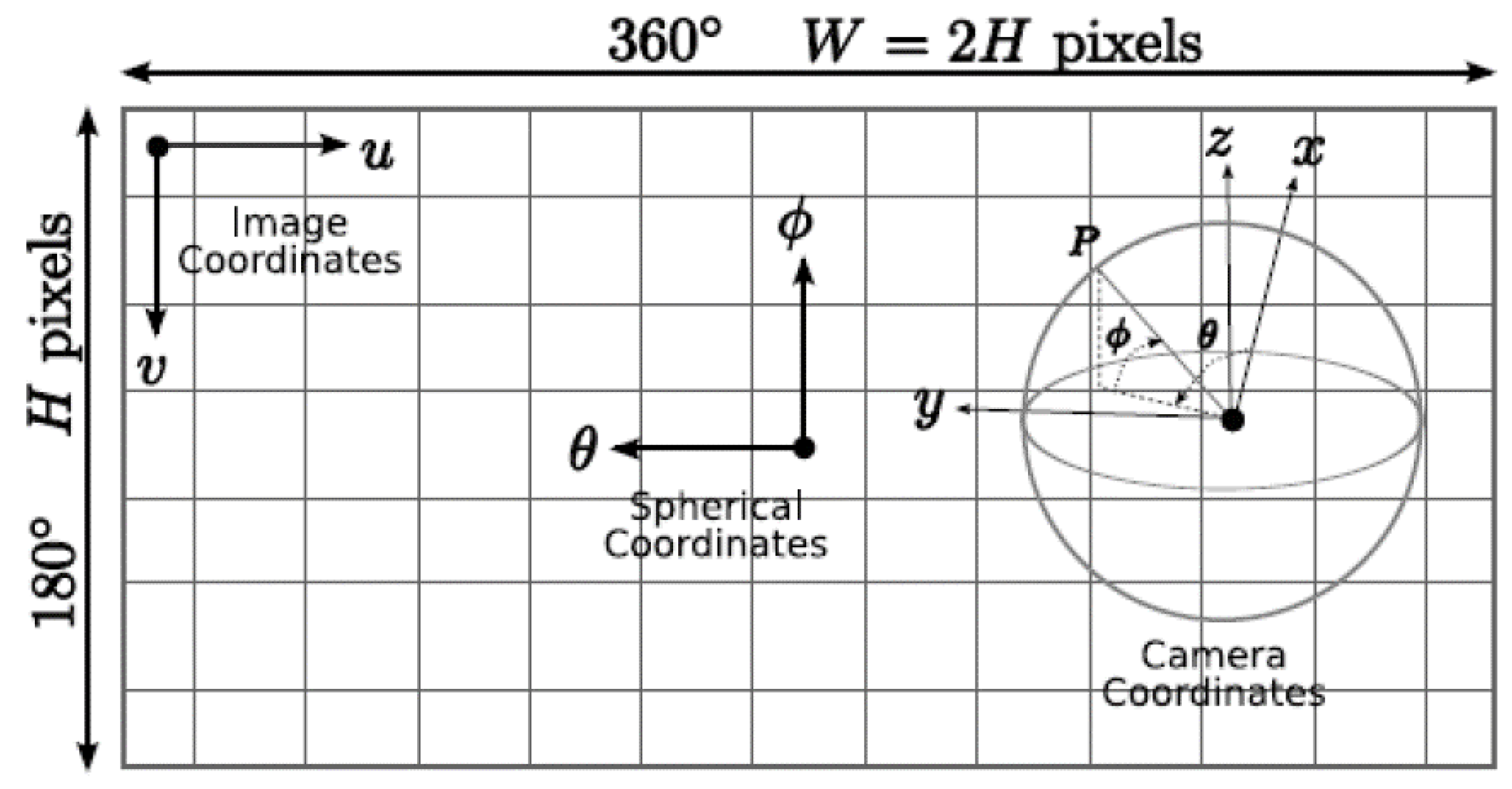

4.1. Camera Model for Panoramic Images

4.2. Pose Estimate Using Constraints between Image Pixels and 3D Points from Laser Scans

4.3. Partial Pose Estimate of Panoramic Images Using 2D Floor Maps

- (1) Move the panorama in (tx, ty) using nearby, identifiable details of the floor maps (doors, holes in floors, etc.) by estimating ratios of distances;

- (2) Orient it (κ) using distant landmarks of the floor maps;

- (3) Check at +90° and +180°, and iterate the first two steps until the estimate no longer improves.
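The reasoning behind steps (1) and (2) can be checked numerically. Assuming an equirectangular panorama whose column index grows with azimuth counterclockwise from the heading κ (a convention chosen here; real software may differ), a small translation moves nearby landmarks by many more pixels than distant ones, while a rotation shifts every column by the same amount. Distant landmarks therefore isolate κ, and nearby ones constrain (tx, ty). `landmark_column` and `column_shift` are hypothetical helpers.

```python
import math


def landmark_column(landmark_xy, pose, width):
    """Column where a floor-map landmark should appear in the panorama.

    pose = (tx, ty, kappa): position in the floor-map frame and heading
    (radians) of column 0; azimuth assumed to grow counterclockwise.
    """
    tx, ty, kappa = pose
    azimuth = math.atan2(landmark_xy[1] - ty, landmark_xy[0] - tx)
    rel = (azimuth - kappa) % (2 * math.pi)
    return rel / (2 * math.pi) * width


def column_shift(landmark_xy, pose, delta_pose, width):
    """Pixel shift of a landmark when the pose changes (not wrapped at column 0)."""
    moved = tuple(p + d for p, d in zip(pose, delta_pose))
    return landmark_column(landmark_xy, moved, width) - landmark_column(landmark_xy, pose, width)


W = 30000
pose = (0.0, 0.0, 0.0)
near, far = (0.0, 2.0), (0.0, 30.0)
near_trans = column_shift(near, pose, (0.3, 0.0, 0.0), W)
far_trans = column_shift(far, pose, (0.3, 0.0, 0.0), W)
near_rot = column_shift(near, pose, (0.0, 0.0, 0.01), W)
far_rot = column_shift(far, pose, (0.0, 0.0, 0.01), W)
print(round(near_trans), round(far_trans), round(near_rot), round(far_rot))
```

In this example, moving the panorama by 30 cm shifts a landmark 2 m away by about 711 pixels but one 30 m away by only about 48 pixels, whereas a 0.01 rad rotation shifts both by about 48 pixels.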

4.4. Full Pose Estimate of Panoramic Images Using the 3D As-Built Dataset

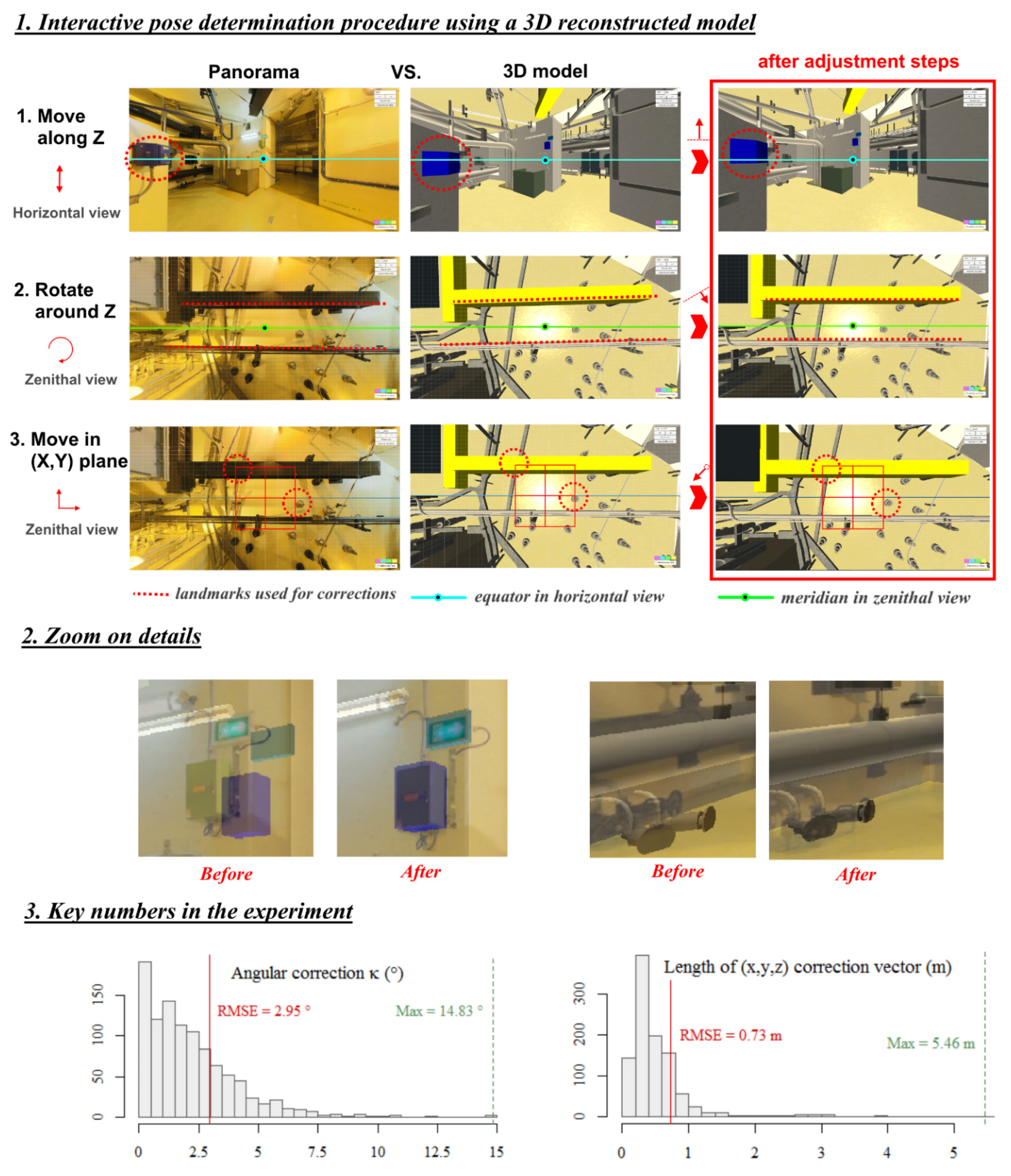

- (1) Move the panorama in height (tz) using landmarks of the 3D model that cross the equator of the panoramic image (boxes, stairs, guardrails, etc.); front view;

- (2) Align it (κ) by matching parallel objects of the 3D model (pipes, beams, etc.) with any meridian of the panoramic image; zenith view;

- (3) Move it in (tx, ty) by aligning landmarks on the ceiling of the 3D model with the zenith of the image; zenith view;

- (4) Check horizontally for any issue at +90° and +180°, and iterate the first three steps until the estimate no longer improves. An incorrect estimate of the vertical when mapping the panorama is the main source of poor pose estimates; such a panorama should be corrected.
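Steps (1) to (3) rely on where 3D landmarks land in the panorama. The minimal projection below assumes a levelled equirectangular panorama (vertical already corrected, so only tx, ty, tz and κ remain), with row 0 at the zenith and azimuth growing counterclockwise from κ. This is a generic spherical model chosen for illustration, not necessarily the camera model of Section 4.1.

```python
import math


def project(point, pose, width):
    """Pixel (u, v) of a 3D point in a levelled equirectangular panorama.

    pose = (tx, ty, tz, kappa); the image is width x width/2 pixels,
    row 0 is the zenith and row width/2 the nadir.
    """
    tx, ty, tz, kappa = pose
    dx, dy, dz = point[0] - tx, point[1] - ty, point[2] - tz
    azimuth = (math.atan2(dy, dx) - kappa) % (2 * math.pi)
    elevation = math.atan2(dz, math.hypot(dx, dy))  # +pi/2 straight up
    u = azimuth / (2 * math.pi) * width
    v = (math.pi / 2 - elevation) / math.pi * (width / 2)
    return u, v


W = 30000
pose = (0.0, 0.0, 1.5, 0.0)
print(project((3.0, 0.0, 1.5), pose, W))  # landmark at camera height: on the equator
print(project((0.0, 0.0, 4.0), pose, W))  # ceiling point straight above: at the zenith
print(project((3.0, 0.0, 1.5), (0.0, 0.0, 1.4, 0.0), W))  # same landmark, tz off by 10 cm
```

A point at camera height maps to the equator (row 7500 of a 30000 × 15000 panorama) and a point straight above maps to row 0. With tz wrong by 10 cm, a landmark 3 m away leaves the equator by about 159 rows, which is why equator-crossing landmarks constrain tz, while ceiling landmarks near the zenith are sensitive to (tx, ty).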

4.5. Overall Feedback on the Experiment and Discussion on Future Large Scanning Surveys

- specifying needs, requirements and constraints in detail: at the scale of a building, every misunderstanding or fuzzy specification may have a severe impact on costs, quality or schedule;

- documenting every step of the process and monitoring quality from the beginning, both to help fix non-conforming data and to enrich the dataset for future use;

- parallelizing tasks (acquisition and post-processing) as much as possible: as data production time increases, the number of non-conformities increases significantly.

5. Developing New Software Applications to Increase the Value of the Dataset

5.1. State of the Art of Multi-Data Visualization for Complex Architectures

- virtual tours with floor plans and panoramic RGB images [39];

- navigation through several spherical laser views;

- navigation and path calculation in 3D environments, for instance in 3D video games, or in 2.5D cartography services such as the indoor maps of Google Maps.
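Path calculation across floors reduces to a shortest-path search on a graph whose nodes are spaces and whose edges carry a traversal cost. The sketch below runs Dijkstra's algorithm on a small hypothetical two-floor graph; the node names and costs (including the extra cost given to the ladder) are invented for illustration, and a real graph could be derived from a topological building model such as the XML export described in Section 3.3.

```python
import heapq


def add_edge(graph, a, b, cost):
    """Add an undirected edge with a traversal cost (e.g. metres or seconds)."""
    graph.setdefault(a, []).append((b, cost))
    graph.setdefault(b, []).append((a, cost))


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm; returns (path, cost) or (None, inf)."""
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for neighbour, cost in graph.get(node, []):
            nd = d + cost
            if nd < dist.get(neighbour, float("inf")):
                dist[neighbour] = nd
                prev[neighbour] = node
                heapq.heappush(heap, (nd, neighbour))
    if goal not in dist:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1], dist[goal]


plant = {}
add_edge(plant, "F0:room_A", "F0:corridor", 5.0)
add_edge(plant, "F0:corridor", "F0:stairs", 10.0)
add_edge(plant, "F0:stairs", "F1:stairs", 8.0)
add_edge(plant, "F1:stairs", "F1:corridor", 10.0)
add_edge(plant, "F1:corridor", "F1:room_B", 5.0)
add_edge(plant, "F0:corridor", "F0:ladder", 3.0)
add_edge(plant, "F0:ladder", "F1:ladder", 25.0)  # penalized vertical link
add_edge(plant, "F1:ladder", "F1:corridor", 3.0)
route, cost = shortest_path(plant, "F0:room_A", "F1:room_B")
print(route, cost)
```

Weighting vertical links lets the route prefer stairs over a shorter but harder ladder; here the stairs route (38) beats the ladder route (41).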

5.2. Rules for the Development of Virtual Tours of a Complex Building

5.3. A Framework for Taking Measurements on Laser Scans for Non-Expert Users

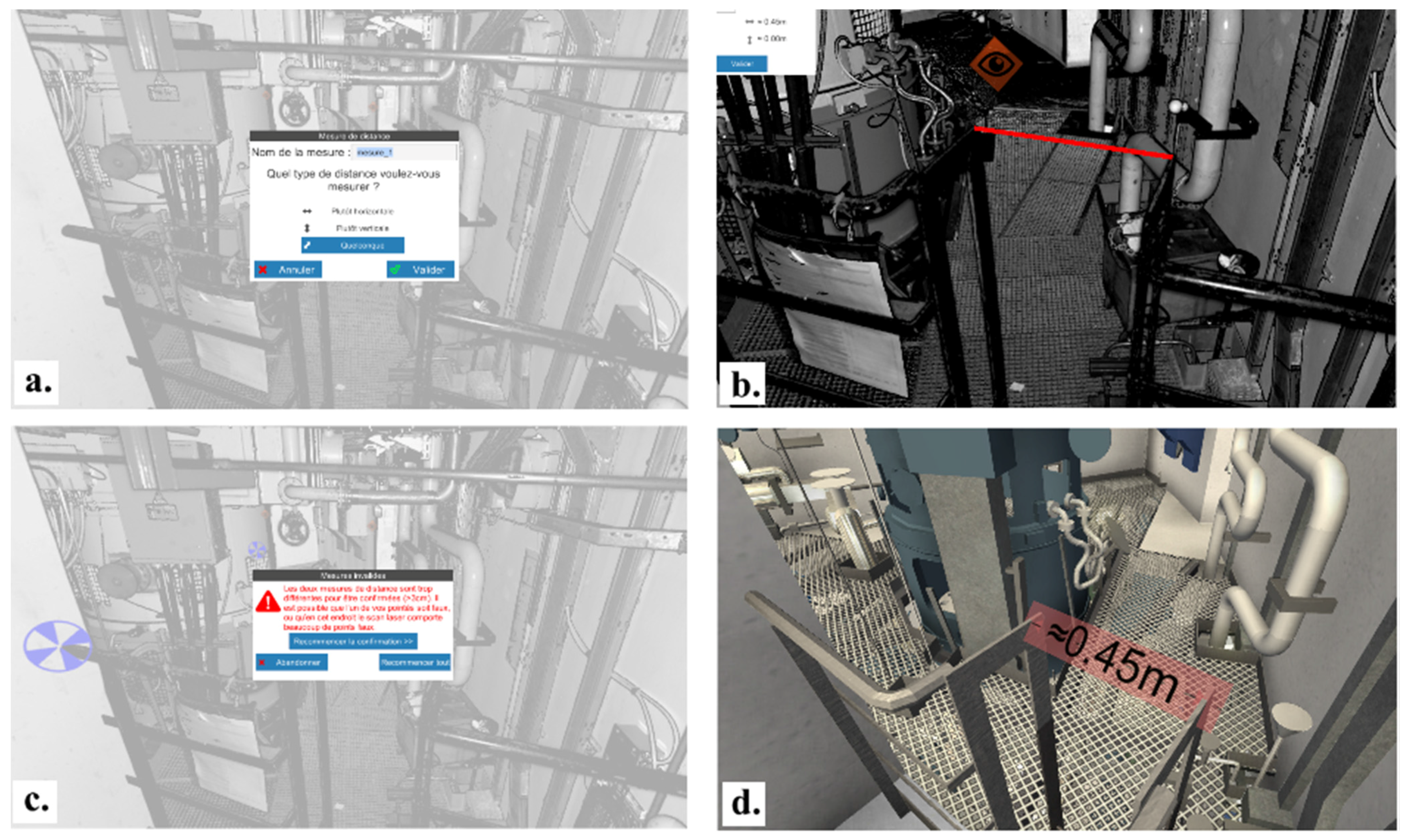

- (1) Choose a type of measurement from “almost horizontal”, “almost vertical” and “oblique”;

- (2) Pick two points in the laser scan to define the distance to be measured.

  - ➔ If the picked segment is “consistent” with the type of measurement, then:

- (3) Pick the two points in the laser scan again.

  - ➔ If the measured distance is “similar” to the previous one, then:

- (4) The measurement is displayed on the laser scan with centimeter accuracy; it can be checked against the reconstructed 3D model.
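The guided measurement protocol above can be sketched as a small validation function. The source only says that the picked segment must be “consistent” with the chosen type and that the repeated distance must be “similar”; the 10°/80° inclination limits, the 1 cm repeat tolerance and the averaging of the two picks are assumptions made here for illustration.

```python
import math

# Hypothetical thresholds: the text does not define "consistent" or "similar"
HORIZONTAL_MAX_DEG = 10.0
VERTICAL_MIN_DEG = 80.0
REPEAT_TOLERANCE_M = 0.01


def inclination_deg(p, q):
    """Angle of segment pq above the horizontal plane, in degrees (0 to 90)."""
    dx, dy, dz = (b - a for a, b in zip(p, q))
    return math.degrees(math.atan2(abs(dz), math.hypot(dx, dy)))


def is_consistent(kind, p, q):
    """Step 2 check: does the picked segment match the chosen measurement type?"""
    angle = inclination_deg(p, q)
    if kind == "almost horizontal":
        return angle <= HORIZONTAL_MAX_DEG
    if kind == "almost vertical":
        return angle >= VERTICAL_MIN_DEG
    if kind == "oblique":
        return HORIZONTAL_MAX_DEG < angle < VERTICAL_MIN_DEG
    raise ValueError(f"unknown measurement type: {kind}")


def guided_measurement(kind, first_pick, second_pick):
    """Return the distance in metres, rounded to the centimetre, or None if a check fails."""
    p1, q1 = first_pick
    if not is_consistent(kind, p1, q1):
        return None  # segment does not match the chosen type
    p2, q2 = second_pick
    d1, d2 = math.dist(p1, q1), math.dist(p2, q2)
    if abs(d1 - d2) > REPEAT_TOLERANCE_M:
        return None  # the two picks disagree
    return round((d1 + d2) / 2, 2)


print(guided_measurement("almost horizontal",
                         ((0, 0, 0), (2, 0, 0.05)),
                         ((0, 0, 0.003), (2.004, 0, 0.05))))
```

Asking for a second pick is a cheap way to catch mis-clicks on outlier points in bubble views, where the user gets no direct feedback on the depth of the picked point.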

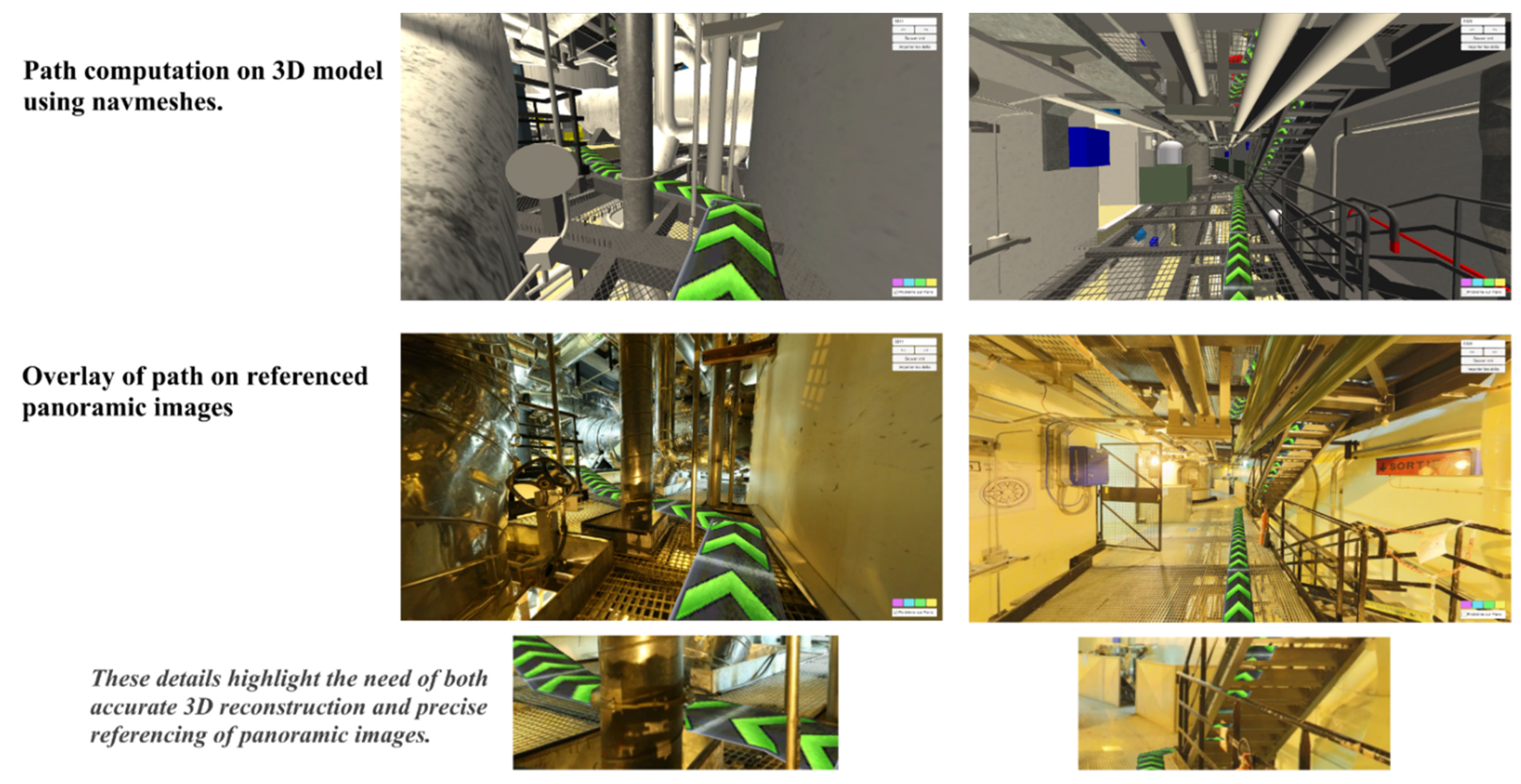

5.4. An Example of Advanced Technological Features Using a Georeferenced Dataset

6. Conclusions and Future Work

- We assessed the feasibility, and underlined the current complexity, of the tools and methods used for multi-sensor scanning of industrial environments (1000+ stations). Work remains to reduce the effort needed to produce such models.

- We have highlighted the contribution of human beings to data production (from interactive tools to quality management and control).

- We have shown how the value of such datasets can be increased by developing multi-source data visualization and navigation applications in multi-floor plants with recommendations for general principles applied to virtual tours of complex architectures.

- Improve data referencing procedures and algorithms to ensure confidence in quality levels, across all of the datasets.

- Develop new tools for data cross-validation and consistency across a multi-source dataset.

- How could we significantly increase the productivity of 3D as-built CAD (or BIM) modeling from very large point clouds (hundreds of billions of points), e.g., with savings of more than 50% of the current cost?

- Which procedures and resources should be employed to update a large multi-sensor dataset and detect errors or inconsistencies between two epochs?

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Hullo, J.-F.; Thibault, G.; Boucheny, C. Advances in multi-sensor scanning and visualization of complex plants: The utmost case of a reactor building. ISPRS Arch. Photogramm. 2015, 1, 163–169.

- Pot, J.; Thibault, G.; Levesque, P. Techniques for CAD reconstruction of “as-built” environments and application to preparing for dismantling of plants. Nucl. Eng. Des. 1997, 178, 135–143.

- Depraz, F.; Popovic, V.; Ott, B.; Wellig, P.; Leblebici, Y. Real-time object detection and tracking in omni-directional surveillance using GPU. In Proceedings of the 2015 SPIE Target and Background Signatures, Toulouse, France, 21 September 2015.

- Kopf, J.; Uyttendaele, M.; Deussen, O.; Cohen, M. Capturing and viewing gigapixel images. ACM Trans. Gr. 2007, 26, 93.

- Khoshelham, K.; Elberink, S.O. Accuracy and resolution of kinect depth data for indoor mapping applications. Sensors 2012, 12, 1437–1454.

- Chen, G.; Kua, J.; Shum, S.; Naikal, N.; Carlberg, M.; Zakhor, A. Indoor localization algorithms for a human-operated backpack system. In Proceedings of the 5th International Symposium on 3D Data Processing, Visualization and Transmission, Paris, France, 17–20 May 2010.

- Bosse, M.; Zlot, R.; Flick, P. Zebedee: Design of a spring-mounted 3-d range sensor with application to mobile mapping. IEEE Trans. Robot. 2012, 28, 1104–1119.

- Adán, A.; Quintana, B.; Vázquez, A.S.; Olivares, A.; Parra, E.; Prieto, S. Towards the automatic scanning of indoors with robots. Sensors 2015, 15, 11551–11574.

- Zlot, R.; Bosse, M. Efficient large-scale three-dimensional mobile mapping for underground mines. J. Field Robot. 2014, 31, 758–779.

- Li, Y.; Zhang, P.; Lan, H.; Yuan, Z.; Niu, X.; El-Sheimy, N. Real-time indoor navigation using smartphones. In Proceedings of IPIN 2015 Conference on Evaal Indoor Navigation Competition, Banff, AB, Canada, 13–16 October 2015.

- De la Puente, P.; Rodriguez-Losada, D. Feature based graph-SLAM in structured environments. Auton. Robot. 2014, 37, 243–260.

- Labbe, M.; Michaud, F. Online global loop closure detection for large-scale multi-session graph-based slam. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014.

- Ji, S.; Shi, Y.; Shan, J.; Shao, X.; Shi, Z.; Yuan, X.; Yang, P.; Wu, W.; Tang, H.; Shibasaki, R. Particle filtering methods for georeferencing panoramic image sequence in complex urban scenes. ISPRS J. Photogramm. 2015, 105, 1–12.

- Barras, V.; Delley, N.; Chapotte, G. Analyses aux limites des scanners laser terrestres. XYZ Rev. Assoc. Fr. Topogr. 2013, 137, 19–26.

- Voegtle, T.; Wakaluk, S. Effects on the measurements of the terrestrial laser scanner HDS 6000 (Leica) caused by different object materials. In Proceedings of the 2009 ISPRS Workshop on Laser Scanning, Paris, France, 1–2 September 2009.

- Tang, P.; Huber, D.; Akinci, B. A comparative analysis of depth-discontinuity and mixed-pixel detection algorithms. In Proceedings of the IEEE Sixth International Conference on 3-D Digital Imaging and Modeling, Montreal, QC, Canada, 21–23 August 2007.

- Hullo, J.-F.; Thibault, G.; Grussenmeyer, P.; Landes, T.; Bennequin, D. Probabilistic feature matching applied to primitive based registration of TLS data. In Proceedings of the XXII ISPRS Congress on Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Melbourne, Australia, 25 August–1 September 2012.

- Komorowski, M. A History of Storage Cost. Available online: http://www.mkomo.com/cost-per-gigabyte-update (accessed 30 October 2015).

- Wikipedia. List of Device Bit Rates. Available online: https://en.wikipedia.org/wiki/List_of_device_bit_rates (accessed 4 October 2015).

- Elseberg, J.; Borrmann, D.; Nüchter, A. One billion points in the cloud—An octree for efficient processing of 3D laser scans. ISPRS J. Photogramm. 2013, 76, 76–88.

- Scheiblauer, C. Interactions with Gigantic Point Clouds. Ph.D. Thesis, Institute of Computer Graphics and Algorithms, Vienna, Austria, 2014.

- Schütz, M. Potree, A Free Open-Source WebGL Based Point Cloud Renderer for Large Point Clouds. SCANOPY Project. Available online: https://github.com/potree/potree (accessed 30 October 2015).

- Boucheny, C. Interactive Scientific Visualization of Large Datasets: Towards a Perceptive-Based Approach. Ph.D. Thesis, Université Joseph Fourier, Grenoble, France, 2009.

- Azhar, S. Building information modeling (BIM): Trends, benefits, risks, and challenges for the AEC industry. Leadersh. Manag. Eng. 2011, 11, 241–252.

- Golparvar-Fard, M.; Peña-Mora, F.; Savarese, S. Automated progress monitoring using unordered daily construction photographs and IFC-based building information models. J. Comput. Civil. Eng. 2012, 29, 04014025.

- Jung, I.; Kim, W. Analysis of the possibility of required resources estimation for nuclear power plant decommissioning applying BIM. In Proceedings of the KNS 2014 Spring Meeting, Jeju, Korea, 28–30 May 2014.

- Volk, R.; Stengel, J.; Schultmann, F. Building Information Modeling (BIM) for existing buildings—Literature review and future needs. Automat. Constr. 2014, 38, 109–127.

- Trickett, K.G.; Chaney, J.C. PDMS: Plant Layout and Piping Design; Gulf Publishing Company: Houston, TX, USA, 1982.

- Tang, P.; Huber, D.; Akinci, B.; Lipman, R.; Lytle, A. Automatic reconstruction of as-built building information models from laser-scanned point clouds: A review of related techniques. Automat. Constr. 2010, 19, 829–843.

- Son, H.; Kim, C.; Kim, C. 3D reconstruction of as-built industrial instrumentation models from laser-scan data and a 3D CAD database based on prior knowledge. Automat. Constr. 2015, 49, 193–200.

- Kang, Z.; Li, Z. Primitive fitting based on the efficient multiBaySAC algorithm. PLoS ONE 2015, 10, e0117341.

- Whyte, J.; Bouchlaghem, N.; Thorpe, A.; McCaffer, R. From CAD to virtual reality: Modelling approaches, data exchange and interactive 3D building design tools. Automat. Constr. 2000, 10, 43–55.

- Girardeau-Montaut, D.; Roux, M.; Marc, R.; Thibault, G. Change detection on points cloud data acquired with a ground laser scanner. ISPRS Arch. Photogramm. 2005, 36, 30–35.

- Bey, A.; Chaine, R.; Marc, R.; Thibault, G.; Akkouche, S. Reconstruction of consistent 3D CAD models from point cloud data using a priori CAD models. In Proceedings of the 2011 ISPRS Workshop on Laser Scanning, Calgary, AB, Canada, 29–31 August 2011.

- Hullo, J.-F.; Thibault, G. Scaling up close-range surveys: A challenge for the generalization of as-built data in industrial applications. ISPRS Arch. Photogramm. 2014, 1, 293–299.

- Wicki, F. Robust estimator for the adjustment of geodetic networks. In Proceedings of the First International Symposium on Robust Statistics and Fuzzy Techniques in Geodesy and GIS, Zurich, Switzerland, 12–16 March 2001.

- Whiting, E. Geometric, Topological & Semantic Analysis of Multi-Building Floor Plan Data. Master’s Thesis, Massachusetts Institute of Technology, Department of Architecture, Cambridge, MA, USA, 2006.

- Vatti, B.R. A generic solution to polygon clipping. Commun. ACM 1992, 35, 56–63.

- Koehl, M.; Scheider, A.; Fritsch, E.; Fritsch, F.; Rachebi, A.; Guillemin, S. Documentation of historical building via virtual tour: the complex building of baths in Strasbourg. In Proceedings of the XXIV International CIPA Symposium on Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Strasbourg, France, 2–6 September 2013.

- Thibault, G.; Pasqualotto, A.; Vidal, M.; Droulez, J.; Berthoz, A. How does horizontal and vertical navigation influence spatial memory of multifloored environments? Atten. Percept. Psychophys. 2013, 75, 10–15.

- Dollé, L.; Droulez, J.; Bennequin, D.; Berthoz, A.; Thibault, G. How the learning path and the very structure of a multifloored environment influence human spatial memory? Adv. Cogn. Psychol. 2015, in press.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Hullo, J.-F.; Thibault, G.; Boucheny, C.; Dory, F.; Mas, A. Multi-Sensor As-Built Models of Complex Industrial Architectures. Remote Sens. 2015, 7, 16339-16362. https://doi.org/10.3390/rs71215827
