An Assembled Feature Attentive Algorithm for Automatic Detection of Waste Water Treatment Plants Based on Multiple Neural Networks

Abstract

1. Introduction

2. Related Works

2.1. Object Detection Based on Deep Learning

2.2. Semantic Segmentation Based on Deep Learning

2.3. Extraction Methods for WWTPs

3. Methodology

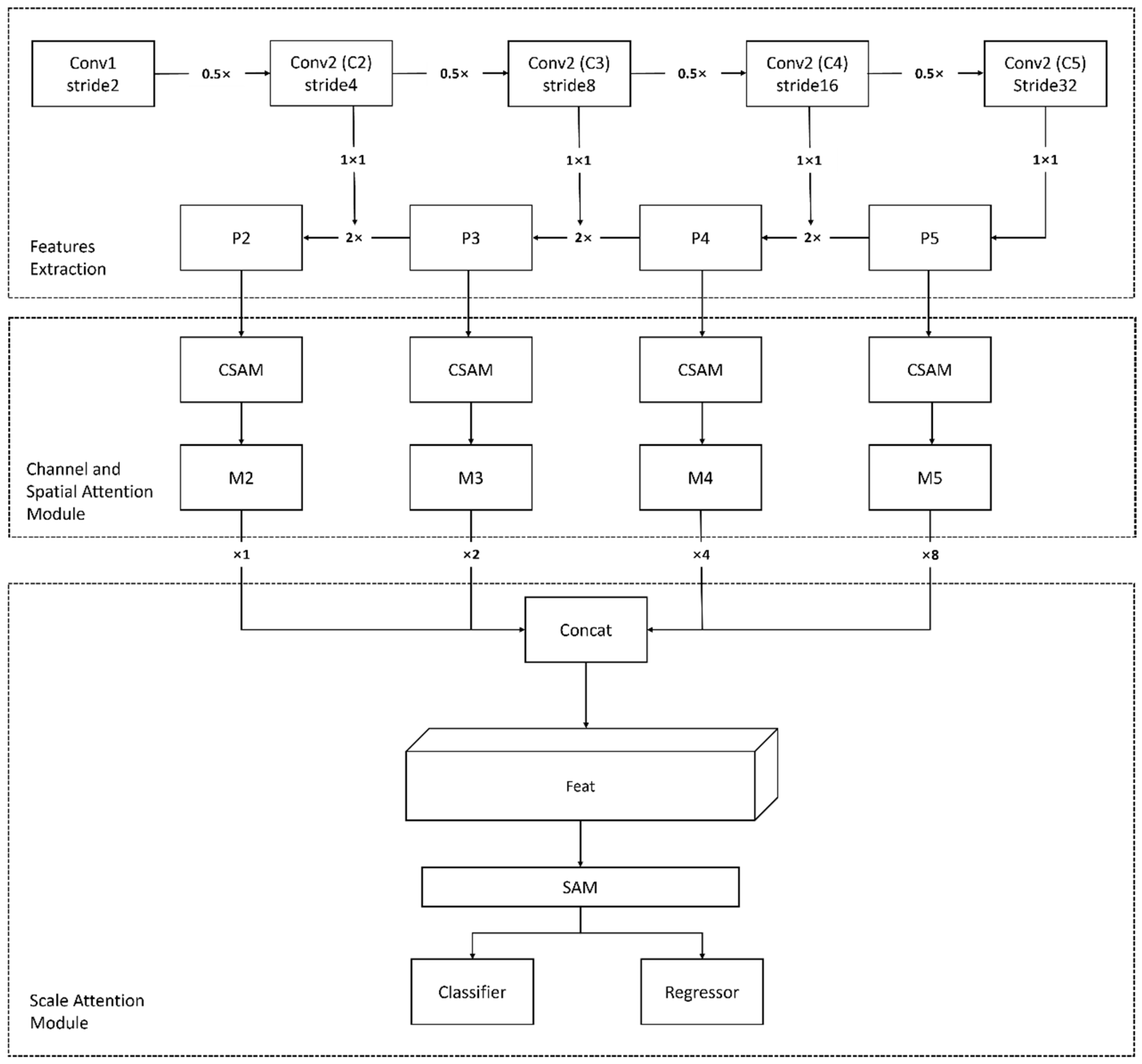

3.1. Multi-Attention Network

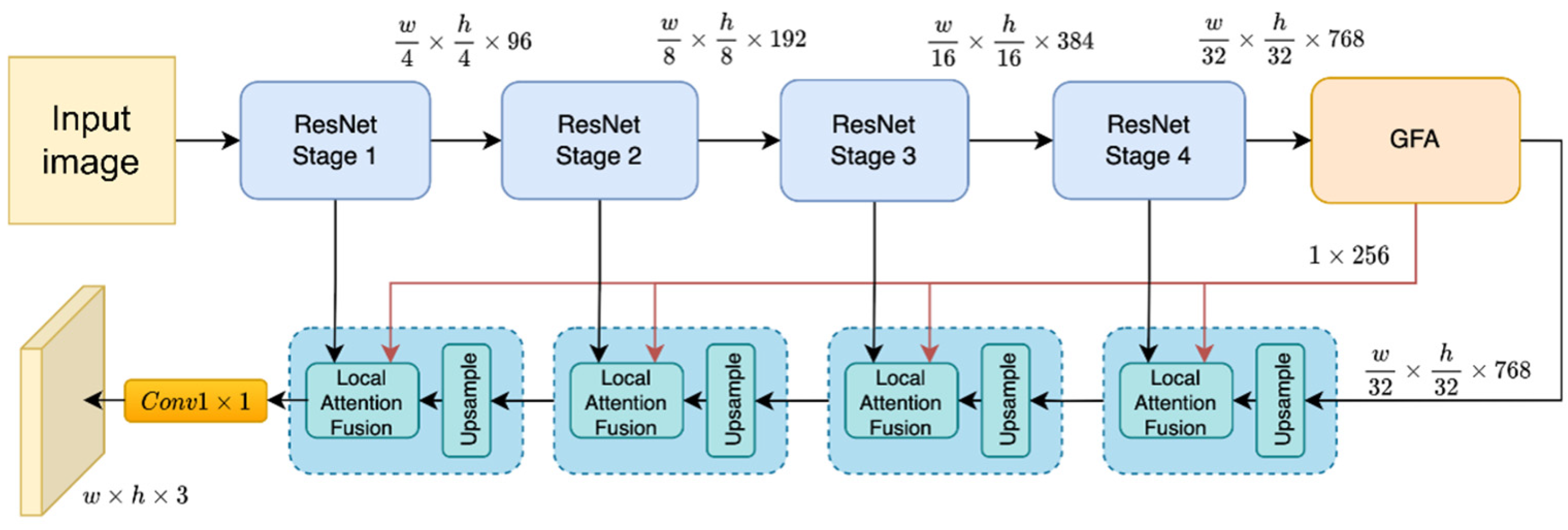

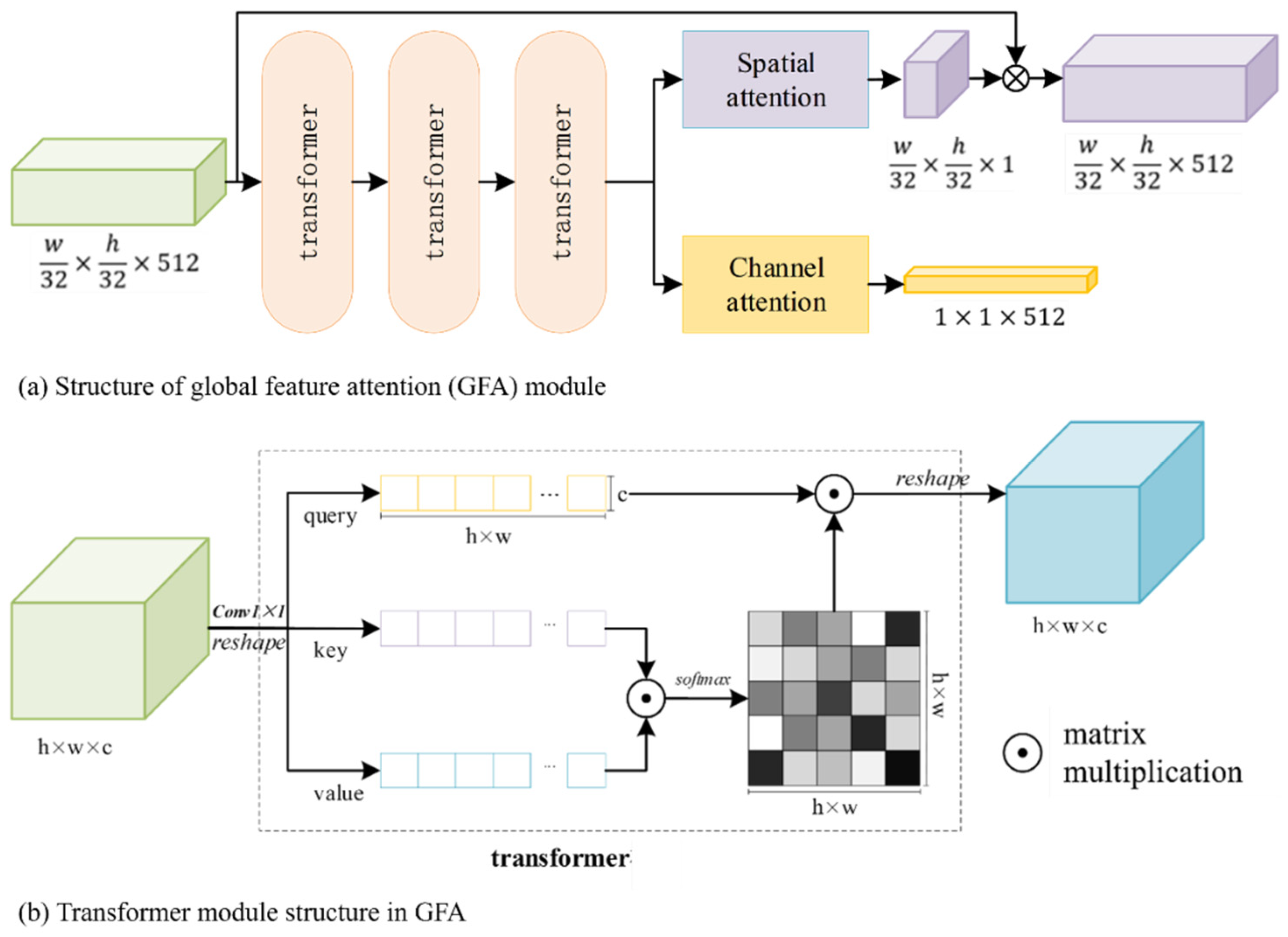

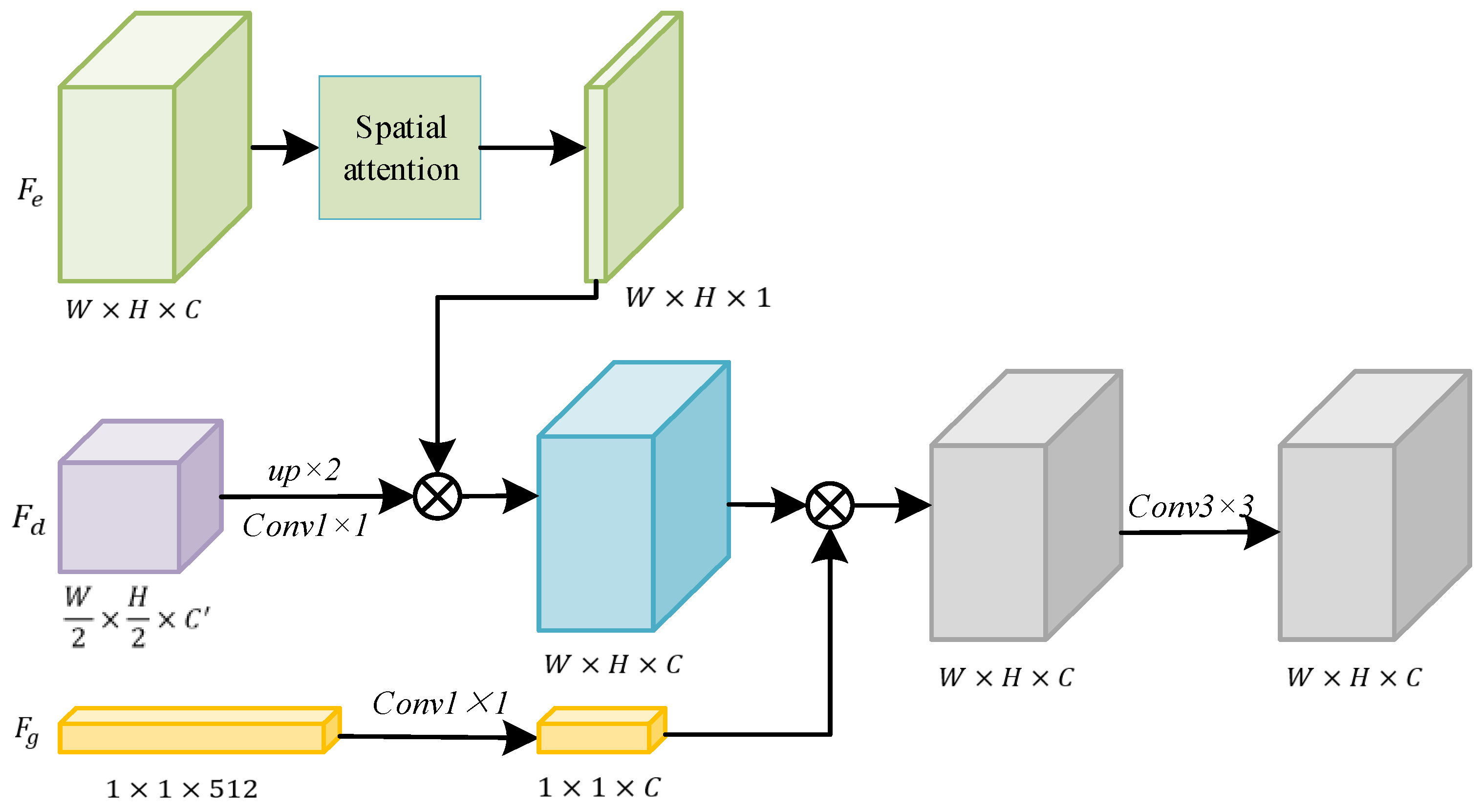

3.2. Global-Local Feature Modelling Network

4. Experiments

4.1. Study Area

4.2. Data Acquisition

- Remote Sensing Data: For this research, we used GF-2 satellite data as the primary source of remote sensing imagery, which offers high spatial, temporal, and radiometric resolution. To ensure that WWTPs are clearly identifiable in high-resolution imagery, we used 2-m resolution products from China’s domestically developed GF-2 satellite to create the WWTP sample dataset. The remote sensing data consisted of 1-m and 2-m resolution images from GF-1 (2020) and 2-m resolution images from GF-2 (2019). A total of 110 images from a single year were selected to cover the entire study area. In addition, the selected images have minimal cloud cover, which reduces cloud interference when interpreting the imagery.

- Waste Water Treatment Facility Data: Facility information was taken from the 2020 edition of “The National List of Centralized Waste Water Treatment Facilities”, published by the Ministry of Ecology and Environment and obtained from the official website of the China Urban Water Association (https://www.cuwa.org.cn/, accessed on 12 December 2023). The underlying statistics were collected in 2019. Centralized wastewater treatment plants are essential infrastructure for reducing water pollution. According to the second batch of this list released by the Ministry, which covers plants with a design capacity of 500 tons/day or more, Beijing has 176 urban WWTPs in total.

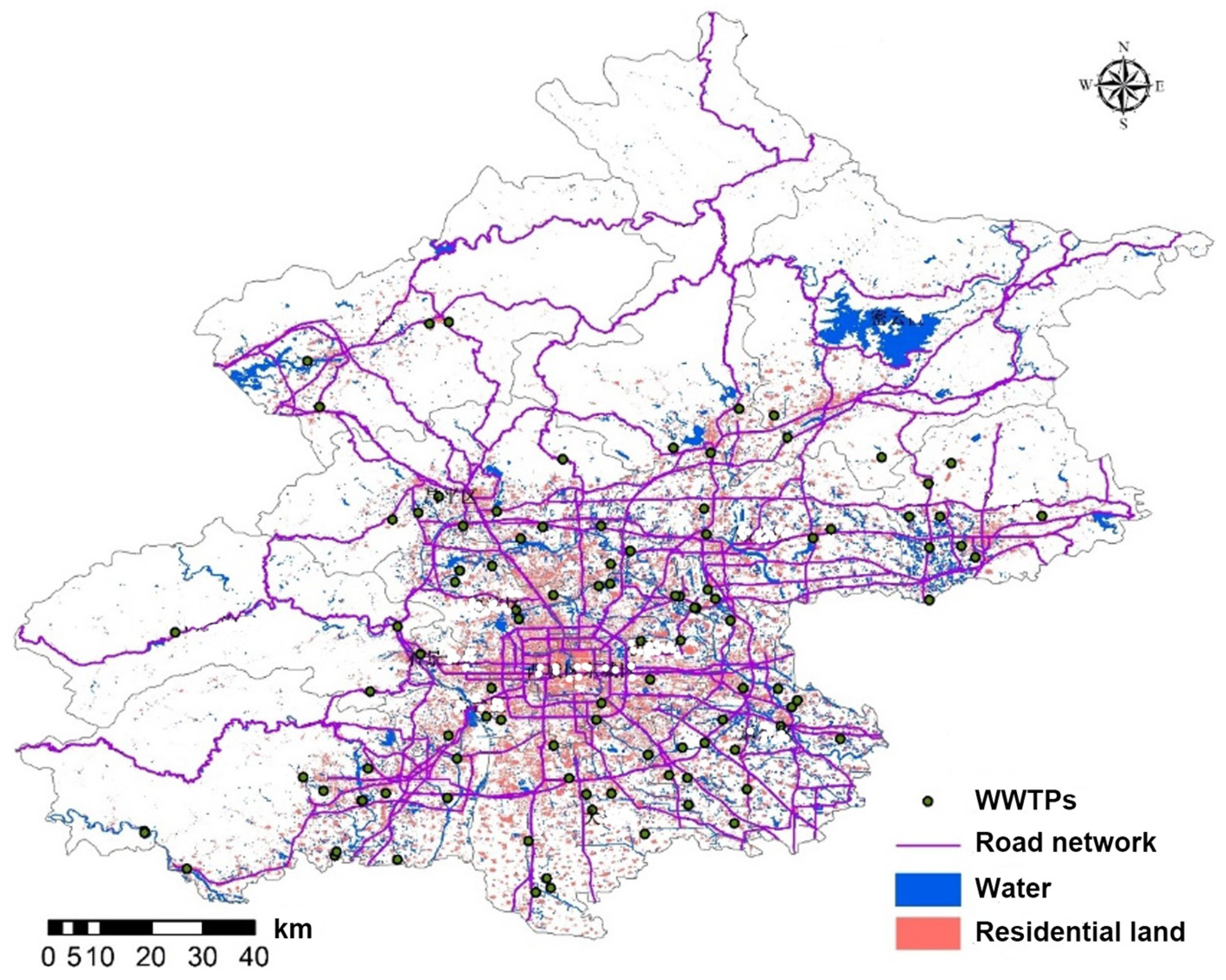

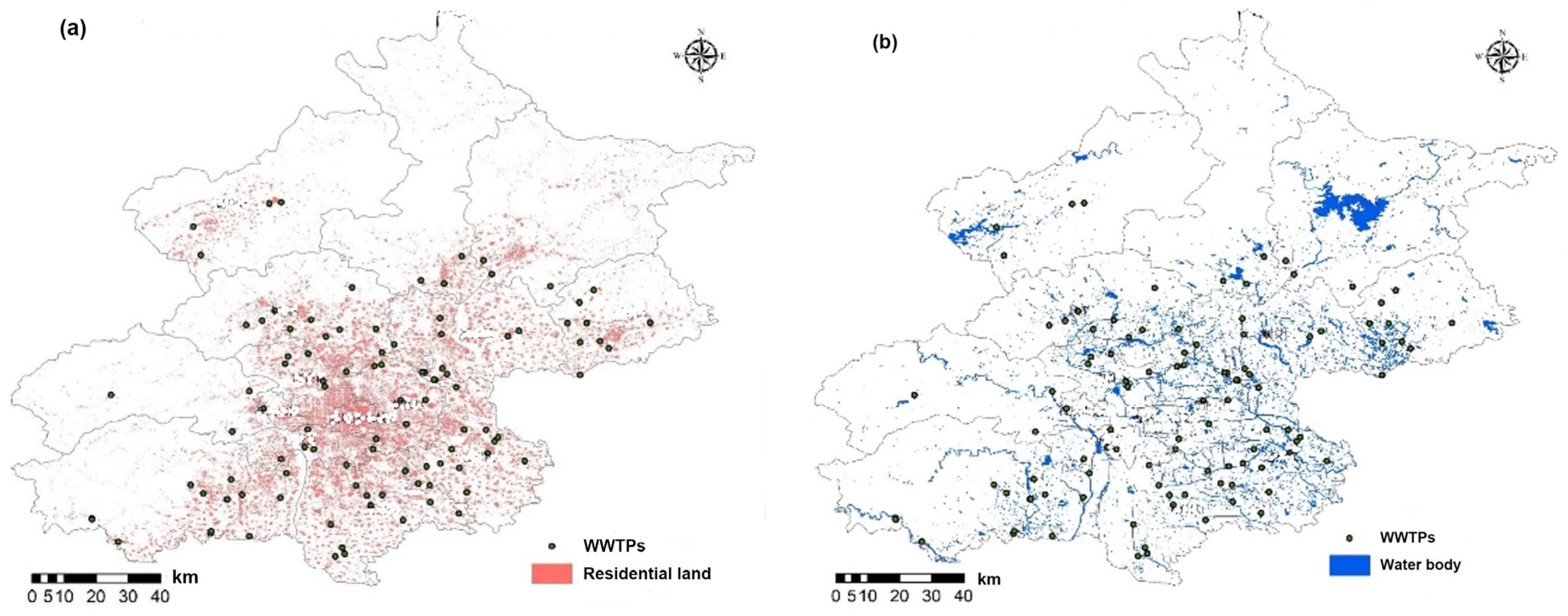

- Distribution of Residential Land and Water in Beijing: In this study, residential land and water in Beijing were extracted by combining high-resolution satellite imagery with deep learning methods.
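The district-level areas of residential land and water reported later are presumably obtained by converting the extracted masks into ground area. A minimal sketch of that conversion is shown below, assuming a binary NumPy mask and a square ground sampling distance (here the 2-m GF-2 product mentioned above); the function name `mask_area_km2` is hypothetical, and clipping to district boundaries is not modelled.

```python
import numpy as np


def mask_area_km2(mask: np.ndarray, gsd_m: float = 2.0) -> float:
    """Return the ground area (km^2) covered by nonzero pixels of a binary mask.

    Assumes every pixel covers gsd_m x gsd_m metres on the ground
    (no reprojection or terrain correction is applied).
    """
    pixel_area_m2 = gsd_m * gsd_m            # area of one pixel in m^2
    n_pixels = int(np.count_nonzero(mask))   # pixels labelled as the target class
    return n_pixels * pixel_area_m2 / 1e6    # convert m^2 to km^2


# Toy example: a 1000 x 1000 tile in which a 500 x 500 block is labelled "water".
mask = np.zeros((1000, 1000), dtype=bool)
mask[:500, :500] = True
print(mask_area_km2(mask, gsd_m=2.0))  # 250,000 px * 4 m^2 = 1.0 km^2
```

Summing this quantity over all mask pixels that fall inside a district boundary would give a district total of the kind tabulated for each district.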

4.3. Dataset Production of WWTPs

4.4. Experimental Setup and Sample Training

4.5. Results and Analysis

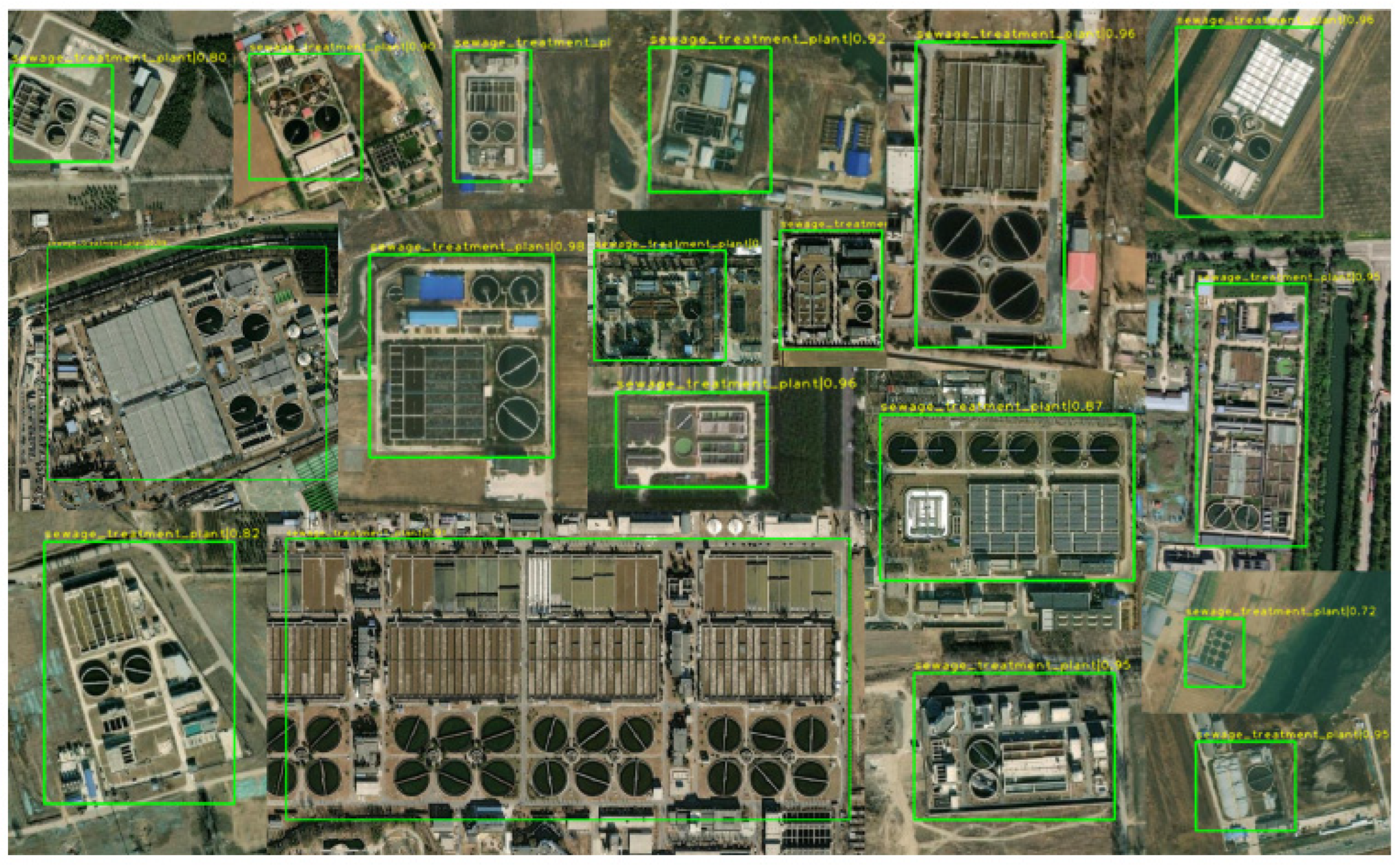

4.5.1. The Efficiency of MANet

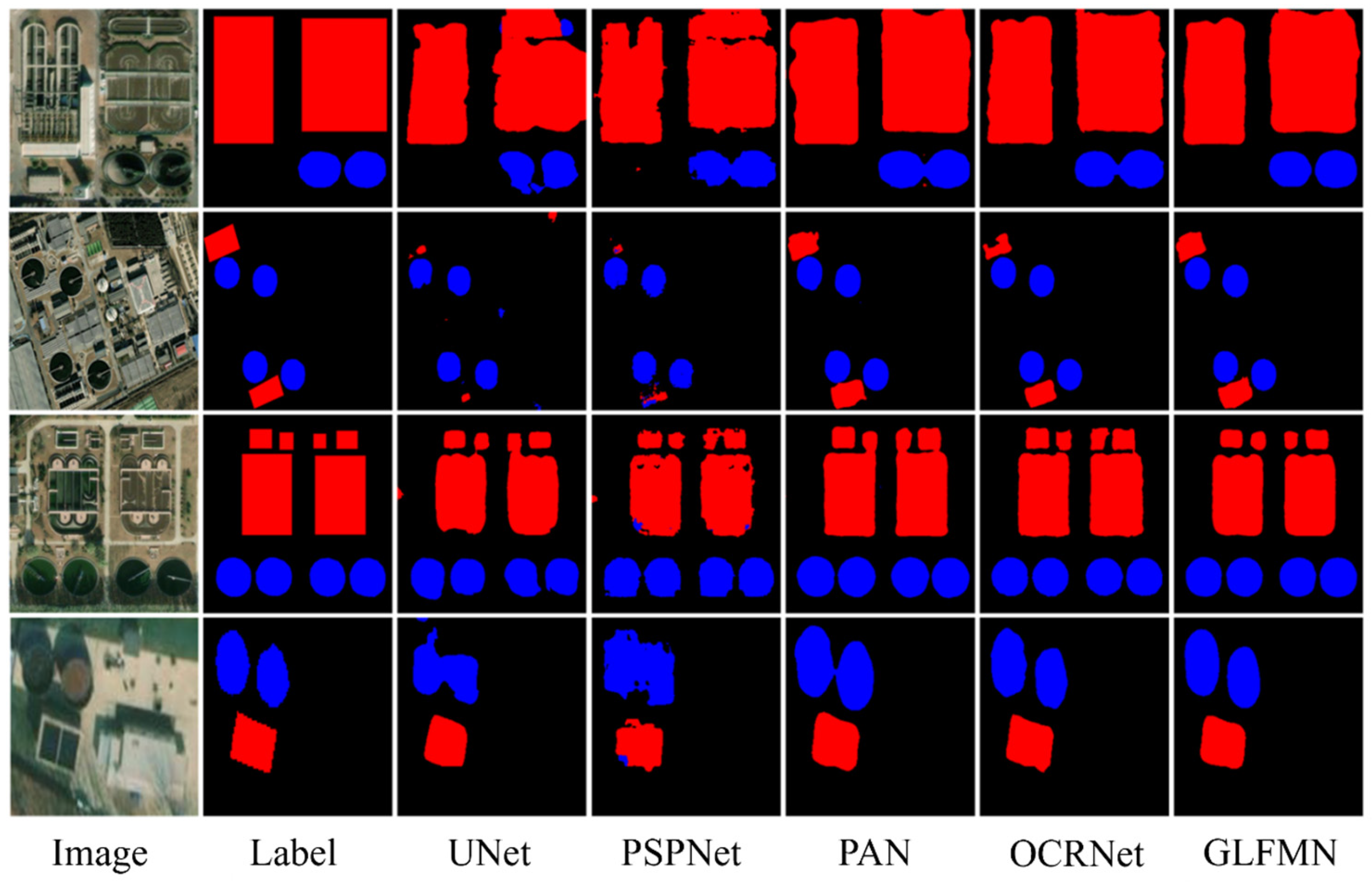

4.5.2. The Efficiency of GLFMN

4.5.3. Detection Result of WWTPs in Beijing

5. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Threshold | Actual Amount | Predicted Amount | TP | FP | FN | AP (%) | AR (%) |
|---|---|---|---|---|---|---|---|
| 0.5 | 151 | 235 | 143 | 92 | 8 | 60.9 | 94.7 |
| 0.6 | 151 | 224 | 138 | 86 | 13 | 61.6 | 91.4 |
| 0.7 | 151 | 196 | 132 | 64 | 19 | 67.3 | 87.4 |
| 0.8 | 151 | 184 | 129 | 55 | 22 | 70.1 | 85.4 |
| 0.9 | 151 | 172 | 123 | 49 | 28 | 71.5 | 81.4 |
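In every row of the detection table, TP + FP equals the predicted amount and TP + FN equals the 151 actual plants, and the AP and AR columns agree with precision, TP/(TP + FP), and recall, TP/(TP + FN), expressed in percent. The short sketch below recomputes both from the TP/FP/FN columns; the helper name `precision_recall` is illustrative and not taken from the paper.

```python
# Counts per threshold, copied from the detection table: (threshold, TP, FP, FN)
rows = [
    (0.5, 143, 92, 8),
    (0.6, 138, 86, 13),
    (0.7, 132, 64, 19),
    (0.8, 129, 55, 22),
    (0.9, 123, 49, 28),
]


def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) in percent."""
    precision = 100.0 * tp / (tp + fp)  # share of predicted WWTPs that are correct
    recall = 100.0 * tp / (tp + fn)     # share of actual WWTPs that were found
    return precision, recall


for thr, tp, fp, fn in rows:
    p, r = precision_recall(tp, fp, fn)
    print(f"{thr:.1f}: predicted={tp + fp}, actual={tp + fn}, P={p:.1f}%, R={r:.1f}%")
```

The recomputed values agree with the tabulated AP and AR to within 0.1 percentage point, which makes the trade-off explicit: raising the threshold removes false positives faster than true positives, so precision rises while recall falls.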

| Method | OA (%) | IoU (%) | F1 (%) |
|---|---|---|---|
| UNet | 85.53 | 56.41 | 71.20 |
| PSPNet | 83.02 | 53.66 | 66.22 |
| PAN | 91.93 | 72.30 | 83.77 |
| OCRNet | 90.45 | 73.47 | 84.49 |
| GLFMN | 91.97 | 75.59 | 86.12 |
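OA, IoU, and F1 are standard pixel-level segmentation metrics. This section does not state how they were aggregated (per image, per class, or over the whole test set), so the sketch below shows only the textbook definitions for a single binary prediction/ground-truth pair, with NumPy as an assumed dependency and `binary_seg_metrics` as a hypothetical helper name.

```python
import numpy as np


def binary_seg_metrics(pred: np.ndarray, gt: np.ndarray) -> dict[str, float]:
    """Overall accuracy, IoU and F1 (in percent) for the positive class."""
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    tp = np.sum(pred & gt)    # target pixels predicted as target
    fp = np.sum(pred & ~gt)   # background predicted as target
    fn = np.sum(~pred & gt)   # target pixels that were missed
    tn = np.sum(~pred & ~gt)  # background correctly left as background
    oa = (tp + tn) / (tp + fp + fn + tn)
    iou = tp / (tp + fp + fn)
    f1 = 2 * tp / (2 * tp + fp + fn)
    return {"OA": 100 * float(oa), "IoU": 100 * float(iou), "F1": 100 * float(f1)}


gt = np.array([[1, 1, 0, 0],
               [1, 1, 0, 0]])
pred = np.array([[1, 1, 1, 0],
                 [1, 0, 0, 0]])
print(binary_seg_metrics(pred, gt))  # TP=3, FP=1, FN=1, TN=3
```

Because OA also counts correctly classified background, it can stay high while IoU and F1 for the target class are much lower, which is the pattern visible for UNet and PSPNet in the table.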

| District | Area (km²) | Area of Residential Land (km²) | Area of Water (km²) | Proportion of Residential Land (%) | Proportion of Water (%) | Number of WWTPs | Area of Key Facilities (km²) | Waste Water Treatment Capability per Area (%) | Waste Water Treatment Capability per Area of Residential Land (%) | Waste Water Treatment Capability per Area of Water (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Dongcheng | 41.92 | 27.48 | 1.00 | 65.56 | 2.40 | 0 | 0.00 | 0.00 | 0.00 | 0.00 |
| Xicheng | 50.35 | 31.00 | 1.93 | 61.58 | 3.84 | 0 | 0.00 | 0.00 | 0.00 | 0.00 |
| Chaoyang | 465.19 | 153.40 | 12.11 | 32.97 | 2.60 | 5 | 0.43 | 0.09 | 0.28 | 3.52 |
| Fengtai | 305.98 | 98.72 | 8.84 | 32.26 | 2.89 | 6 | 0.13 | 0.04 | 0.13 | 1.44 |
| Shijingshan | 84.33 | 24.95 | 2.66 | 29.58 | 3.15 | 1 | 0.01 | 0.01 | 0.03 | 0.26 |
| Haidian | 428.89 | 119.51 | 9.64 | 27.86 | 2.24 | 7 | 0.23 | 0.05 | 0.19 | 2.36 |
| Mentougou | 1450.08 | 26.95 | 7.91 | 1.85 | 0.54 | 3 | 0.01 | 0.00 | 0.04 | 0.12 |
| Fangshan | 1997.90 | 161.90 | 26.59 | 8.10 | 1.33 | 20 | 0.24 | 0.01 | 0.15 | 0.90 |
| Tongzhou | 904.40 | 178.61 | 36.79 | 19.74 | 4.06 | 19 | 0.18 | 0.02 | 0.10 | 0.50 |
| Shunyi | 1009.16 | 173.64 | 21.91 | 17.23 | 2.17 | 9 | 0.20 | 0.02 | 0.12 | 0.92 |
| Changping | 1343.64 | 158.85 | 17.48 | 11.82 | 1.30 | 12 | 0.21 | 0.02 | 0.13 | 1.20 |
| Daxing | 1033.98 | 206.46 | 8.53 | 19.96 | 0.82 | 16 | 0.45 | 0.04 | 0.22 | 5.22 |
| Huairou | 2119.86 | 58.63 | 12.84 | 2.76 | 0.60 | 4 | 0.04 | 0.00 | 0.06 | 0.29 |
| Pinggu | 947.50 | 77.04 | 17.53 | 8.13 | 1.85 | 9 | 0.08 | 0.01 | 0.11 | 0.48 |
| Miyun | 2223.96 | 81.93 | 97.21 | 3.68 | 4.37 | 5 | 0.15 | 0.01 | 0.18 | 0.15 |
| Yanqing | 1997.33 | 59.38 | 60.80 | 2.97 | 3.04 | 5 | 0.05 | 0.00 | 0.08 | 0.08 |
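The proportion columns and the three “capability” columns appear to be simple area ratios: residential or water area over district area, and key-facility area over district, residential-land, and water area, all in percent (for Chaoyang, 0.43/465.19 ≈ 0.09%). This reading is inferred from the tabulated numbers rather than stated in this section. The sketch below recomputes one row; the dictionary keys and the `pct` helper are illustrative names.

```python
# One row of the district table (areas in km^2), copied from the Chaoyang entry.
district = {
    "area": 465.19,
    "residential": 153.40,
    "water": 12.11,
    "key_facilities": 0.43,
}


def pct(part: float, whole: float) -> float:
    """Return part / whole as a percentage, or 0.0 when whole is zero."""
    return 100.0 * part / whole if whole else 0.0


ratios = {
    "residential_share": pct(district["residential"], district["area"]),
    "water_share": pct(district["water"], district["area"]),
    "facility_per_area": pct(district["key_facilities"], district["area"]),
    "facility_per_residential": pct(district["key_facilities"], district["residential"]),
    "facility_per_water": pct(district["key_facilities"], district["water"]),
}
for name, value in ratios.items():
    print(f"{name}: {value:.2f}%")
```

Small differences in the last digit (for example, about 3.55% recomputed versus 3.52% tabulated for the water ratio) are expected if the published ratios were computed from unrounded areas.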

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, C.; Chen, Z.; Huang, Z.; Shuai, Y.; Wang, S.; Qi, X.; Zheng, J. An Assembled Feature Attentive Algorithm for Automatic Detection of Waste Water Treatment Plants Based on Multiple Neural Networks. Remote Sens. 2025, 17, 1645. https://doi.org/10.3390/rs17091645
