One-Dimensional Convolutional Neural Network for Automated Kimchi Cabbage Downy Mildew Detection Using Aerial Hyperspectral Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site

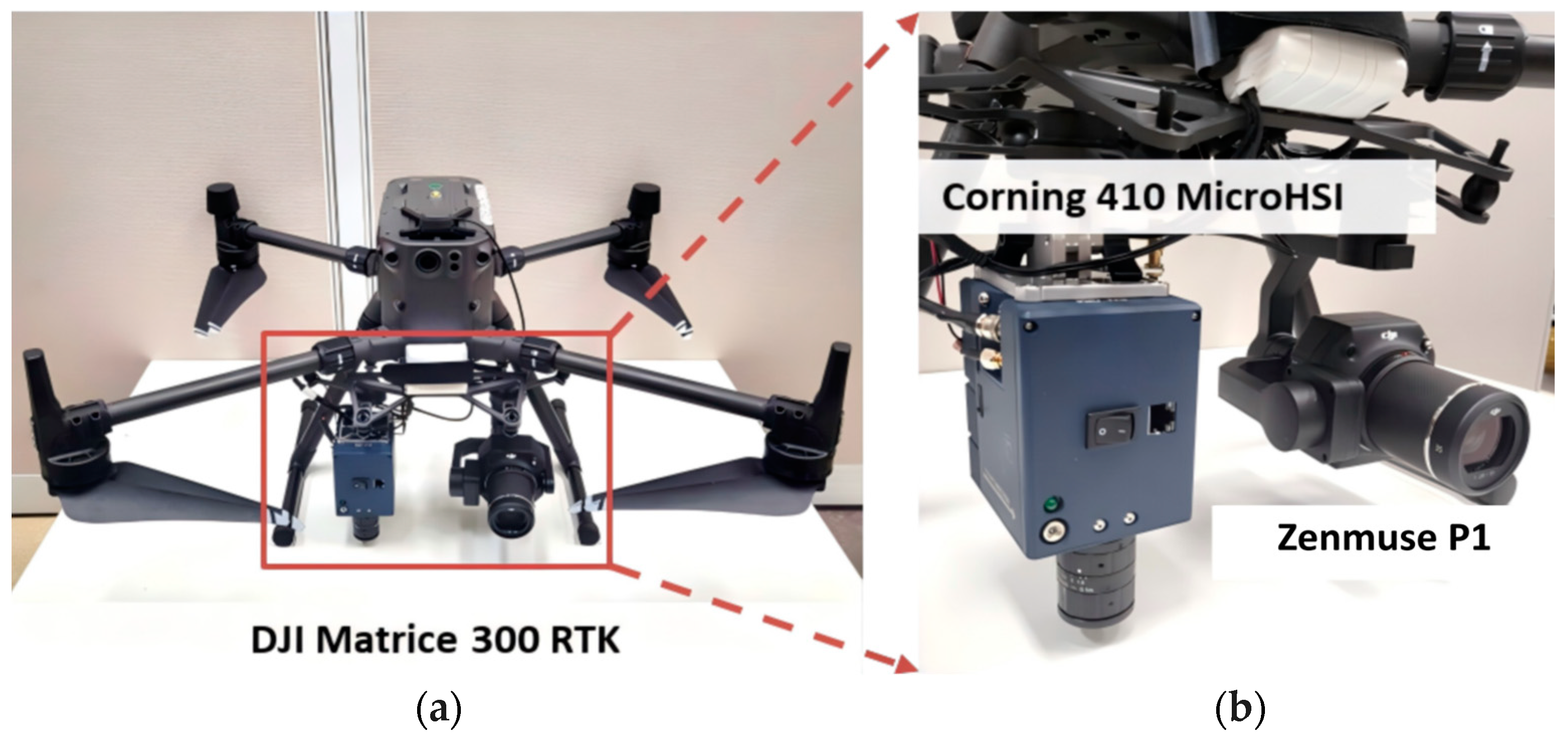

2.2. Instruments and Operations for the Flight Mission

2.3. Ground Survey

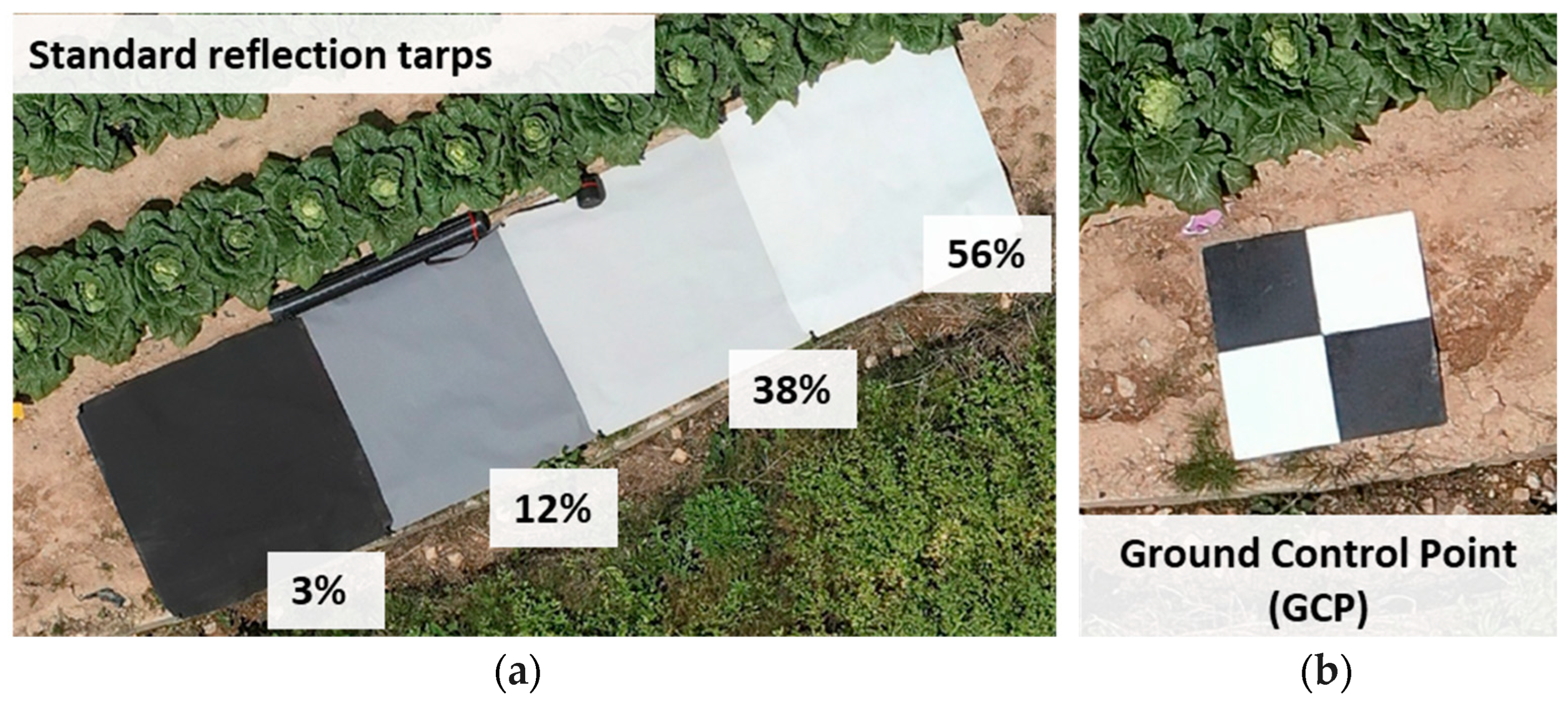

2.4. Preprocessing of the Hyperspectral Images

2.4.1. Image Rectification

2.4.2. Mosaicking

2.4.3. Georeferencing

2.4.4. Radiometric Calibration

2.5. Defining and Detecting Early Diseases

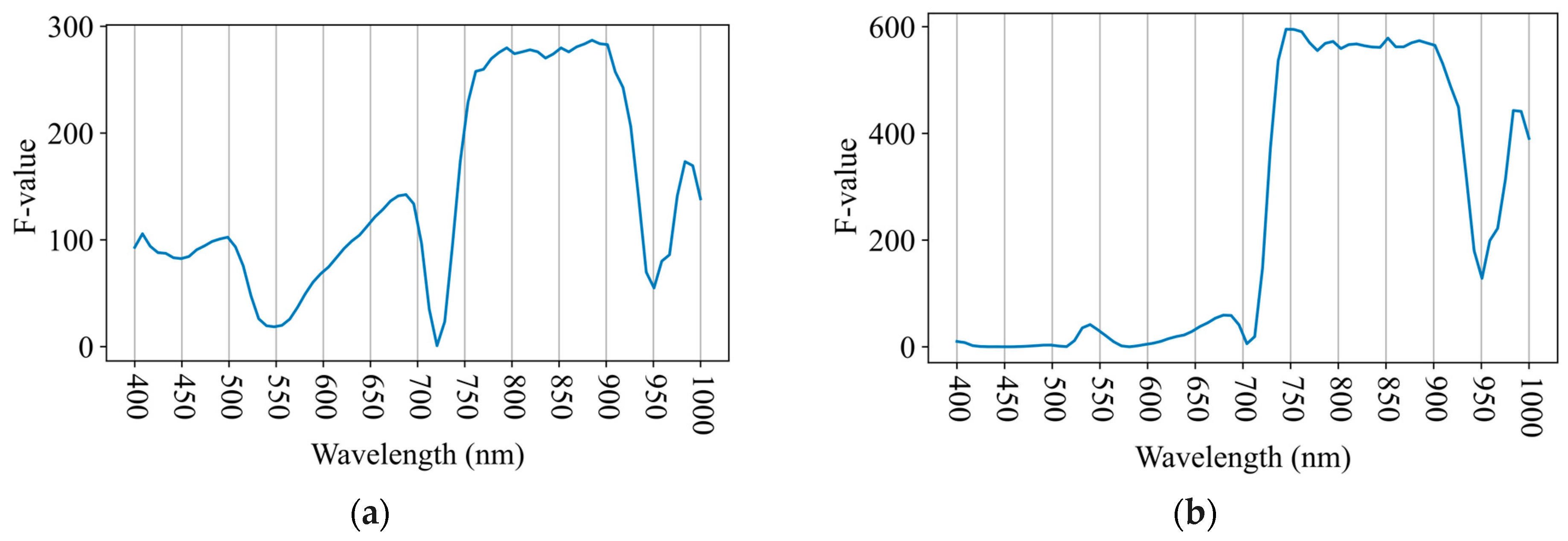

2.6. Spectrum Analysis

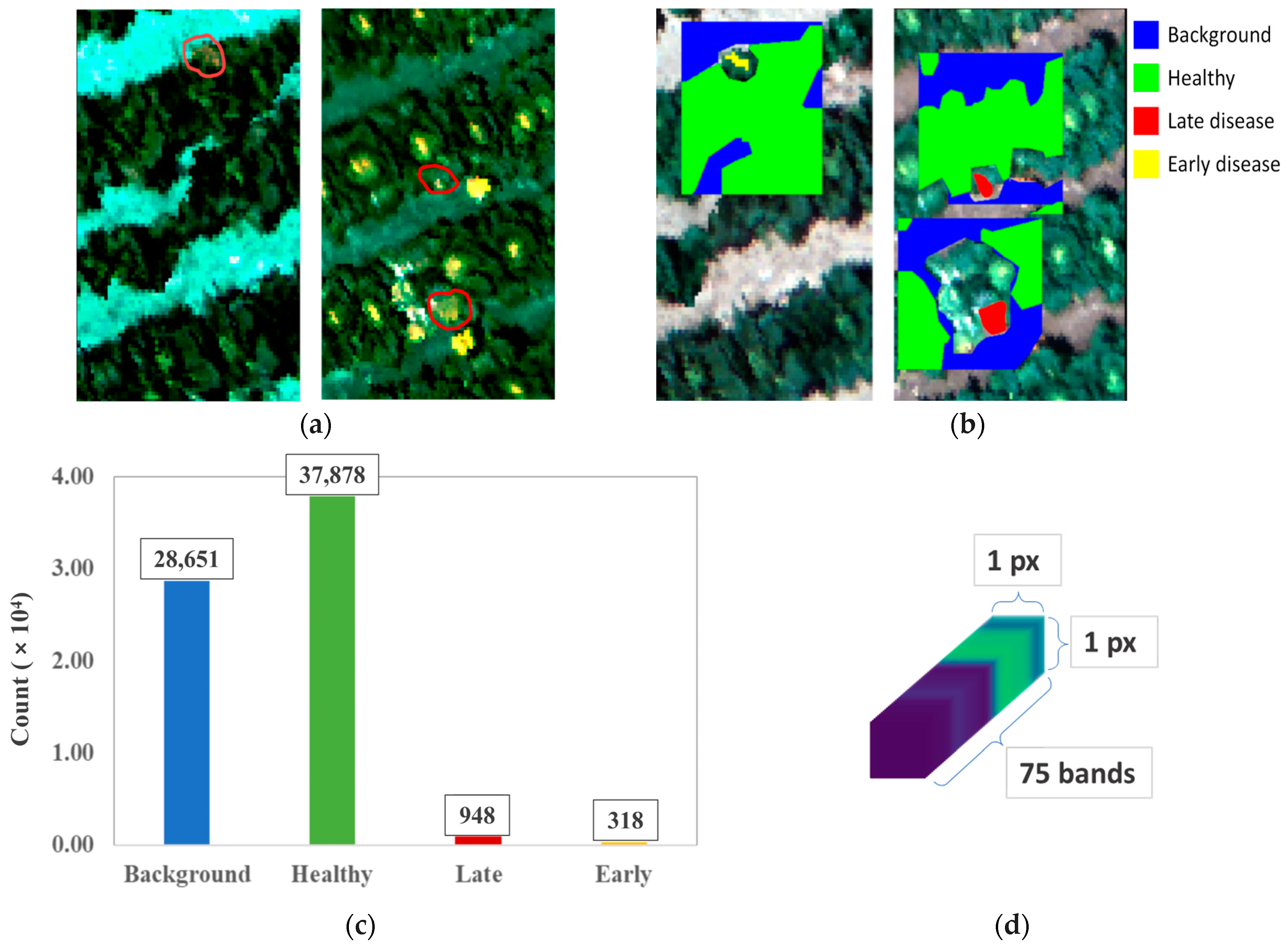

2.7. Establishing the Ground-Truth Dataset Through Labeling

2.8. 1D-CNN and ML Models

2.8.1. Random Forest

2.8.2. 1D-CNN

2.9. Experiment Design

3. Results

3.1. Spectrum Analysis Results

3.2. Training Process for Each Model

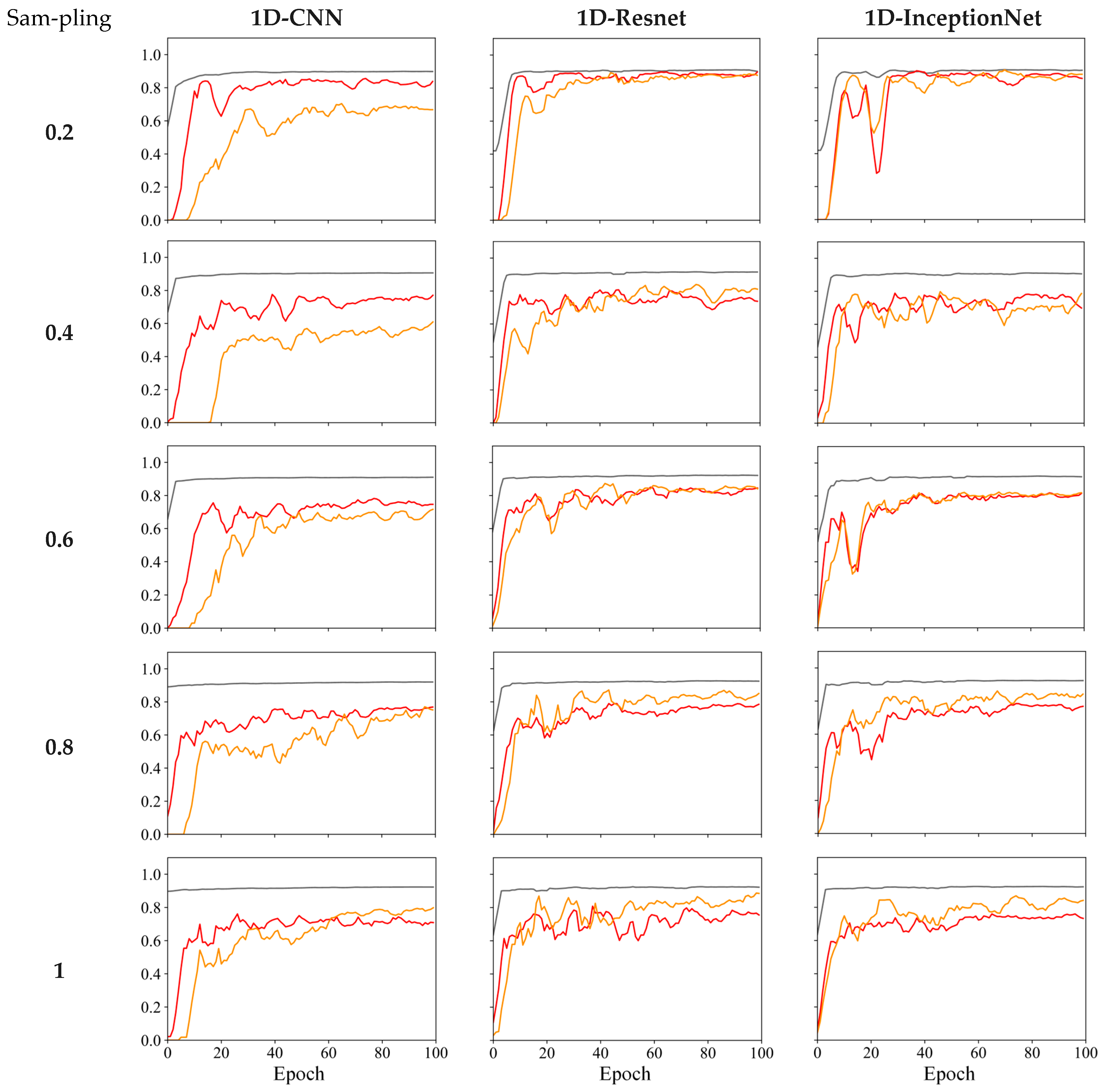

3.3. Comparing Each Model’s Performance and Generating Confusion Matrices

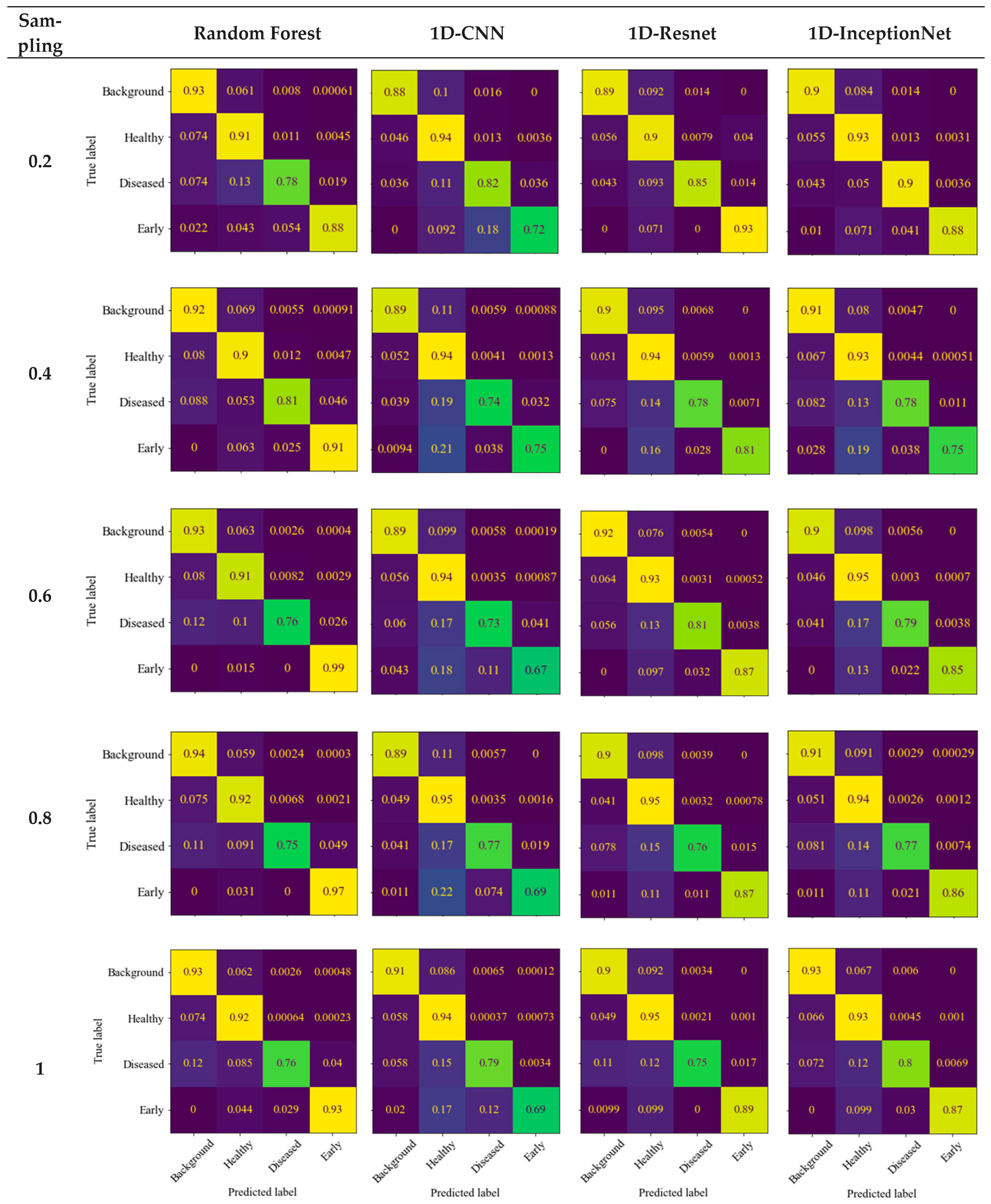

3.4. Visualization of the Prediction Results

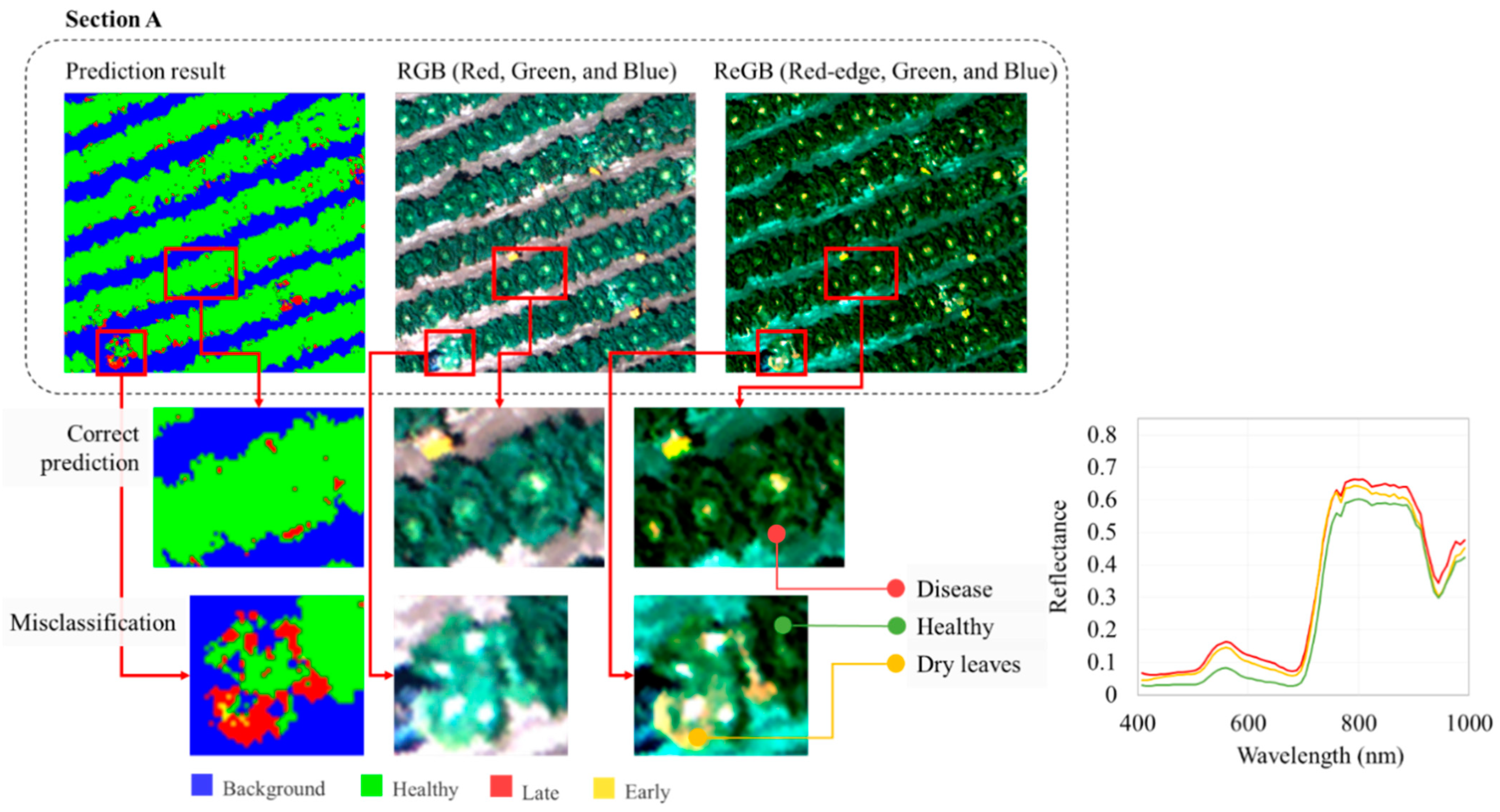

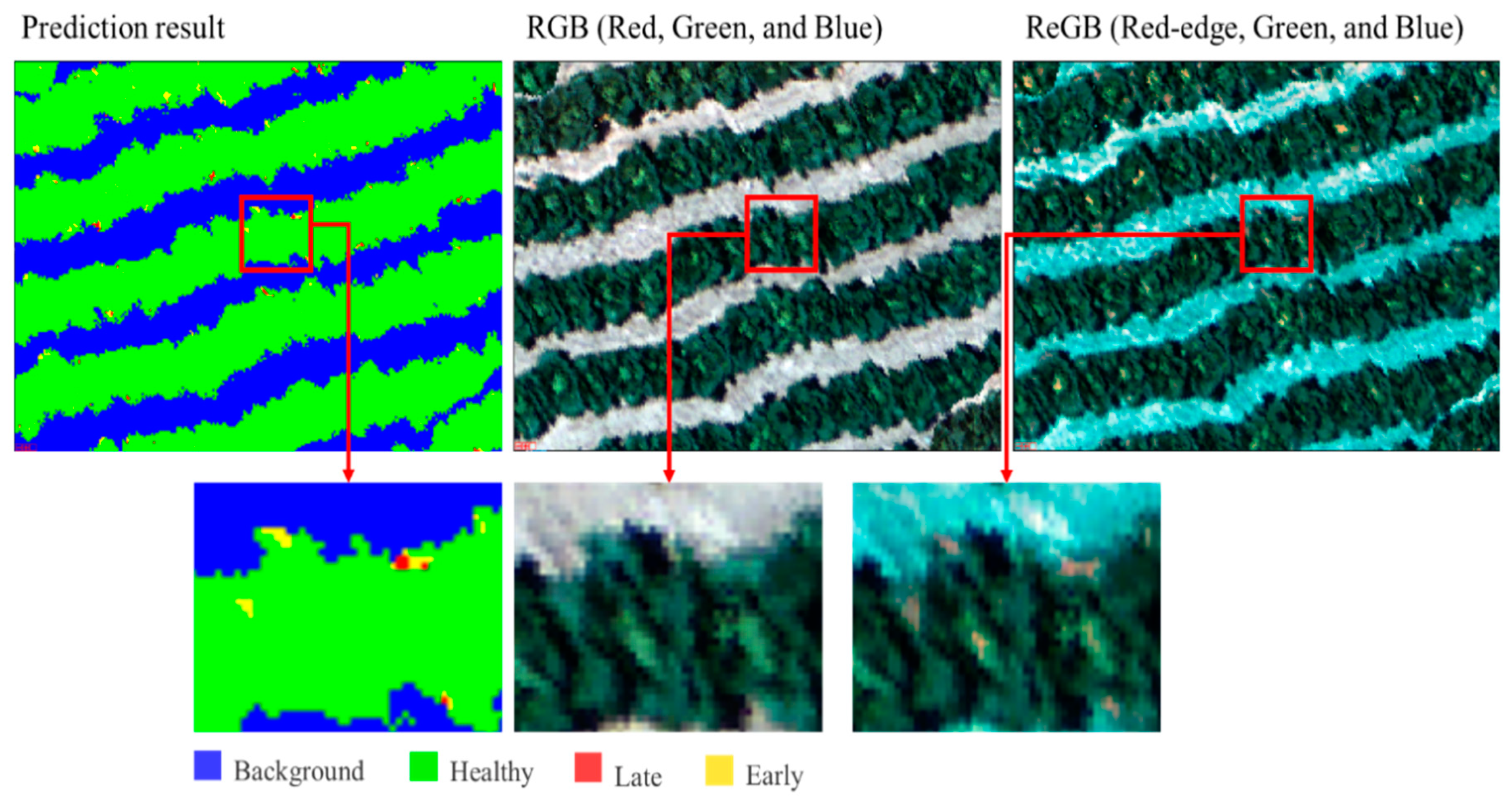

4. Discussion

4.1. Data Acquisition Results and Challenges

4.2. Kimchi Cabbage Downy Mildew Spectrum Signature

4.3. Comparison with the Previous Study

4.4. Implications

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

| Model | Dataset Sampling | Healthy Class | Late Disease Class | Early Disease Class | Overall Accuracy | F1 Score |
|---|---|---|---|---|---|---|
| RF | 0.2 | 0.913 | 0.777 | 0.882 | 0.907 | 0.876 |
| RF | 0.4 | 0.902 | 0.813 | 0.911 | 0.909 | 0.848 |
| RF | 0.6 | 0.913 | 0.758 | 0.985 | 0.916 | 0.859 |
| RF | 0.8 | 0.924 | 0.755 | 0.969 | 0.923 | 0.847 |
| RF | 1.0 | 0.924 | 0.758 | 0.926 | 0.922 | 0.831 |
| 1D-CNN | 0.2 | 0.882 | 0.826 | 0.721 | 0.901 | 0.845 |
| 1D-CNN | 0.4 | 0.893 | 0.747 | 0.752 | 0.907 | 0.849 |
| 1D-CNN | 0.6 | 0.891 | 0.731 | 0.676 | 0.919 | 0.835 |
| 1D-CNN | 0.8 | 0.896 | 0.774 | 0.697 | 0.914 | 0.832 |
| 1D-CNN | 1.0 | 0.919 | 0.798 | 0.694 | 0.921 | 0.835 |
| 1D-ResNet | 0.2 | 0.893 | 0.854 | 0.934 | 0.909 | 0.897 |
| 1D-ResNet | 0.4 | 0.906 | 0.782 | 0.812 | 0.914 | 0.875 |
| 1D-ResNet | 0.6 | 0.923 | 0.814 | 0.876 | 0.923 | 0.893 |
| 1D-ResNet | 0.8 | 0.905 | 0.763 | 0.877 | 0.924 | 0.878 |
| 1D-ResNet | 1.0 | 0.902 | 0.753 | 0.892 | 0.924 | 0.876 |
| 1D-InceptionNet | 0.2 | 0.908 | 0.905 | 0.883 | 0.914 | 0.899 |
| 1D-InceptionNet | 0.4 | 0.914 | 0.787 | 0.755 | 0.914 | 0.870 |
| 1D-InceptionNet | 0.6 | 0.901 | 0.798 | 0.852 | 0.921 | 0.885 |
| 1D-InceptionNet | 0.8 | 0.913 | 0.772 | 0.861 | 0.923 | 0.877 |
| 1D-InceptionNet | 1.0 | 0.933 | 0.805 | 0.872 | 0.925 | 0.873 |
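
The Overall Accuracy and F1 Score columns in the table above are the kind of figures usually read off a confusion matrix (Section 3.3). The sketch below shows one common way to compute them for a three-class healthy/late/early problem. It is an illustration, not the authors' evaluation code: the averaging scheme behind the reported F1 is not stated here, so macro-averaging is an assumption, and the confusion-matrix counts are invented.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[int]]  # rows = true class, columns = predicted class


def overall_accuracy(cm: Matrix) -> float:
    """Share of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / total


def per_class_f1(cm: Matrix) -> List[float]:
    """One-vs-rest F1 for each class: 2TP / (2TP + FP + FN)."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, truly another class
        fn = sum(cm[k]) - tp                       # truly k, predicted another class
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


def macro_f1(cm: Matrix) -> float:
    """Unweighted mean of per-class F1 (assumed averaging scheme)."""
    scores = per_class_f1(cm)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Invented counts for illustration only; class order: healthy, late, early.
    cm = [
        [90, 5, 5],
        [10, 30, 0],
        [5, 0, 15],
    ]
    print(f"OA = {overall_accuracy(cm):.3f}")
    print("per-class F1 =", [round(s, 3) for s in per_class_f1(cm)])
    print(f"macro F1 = {macro_f1(cm):.3f}")
```

An unweighted macro average gives a small class such as early disease the same weight as the much larger healthy class, which is why macro F1 can sit well below overall accuracy on imbalanced data; a support-weighted average would track overall accuracy more closely.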

| Parameter | Previous Study | Current Study |
|---|---|---|
| Crop | Autumn cabbage | Spring cabbage |
| Disease occurrence | High disease incidence | Low disease incidence |
| Illumination condition | Diffuse illumination (field shaded by the surrounding mountains) | Direct illumination |
| Segmentation method | Leaf level | Pixel level |
| Input data dimension | 20 × 20 × 55 (harder to train) | 1 × 1 × 75 (easier to train) |
| Dataset size | Sparse data | Extensive data |
| Models | 2D-ResNet, 3D-ResNet 1, 3D-ResNet 2 | RF, 1D-CNN, 1D-ResNet, 1D-InceptionNet |
| Robustness | Robust to noise | Prone to noise |
| Output classes | Background, healthy, diseased | Background, healthy, early, late |
| Overall accuracy | 0.876 | 0.914 |
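
The data-dimension row contrasts a 20 × 20 × 55 leaf-level patch with a 1 × 1 × 75 pixel-level spectrum. A minimal sketch of what pixel-level sampling means in practice, assuming the mosaic is held as a nested H × W × B list indexed `cube[row][col][band]` (a hypothetical layout, not the authors' data format): every pixel becomes its own 75-value sample, and each sample is far smaller than a patch. The helper names `cube_to_pixel_spectra` and `values_per_sample` are illustrative.

```python
from typing import List

Cube = List[List[List[float]]]  # hypothetical layout: cube[row][col][band]


def cube_to_pixel_spectra(cube: Cube) -> List[List[float]]:
    """Flatten an H x W x B cube, row by row, into H*W spectra of B bands each."""
    return [list(pixel) for row in cube for pixel in row]


def values_per_sample(height: int, width: int, bands: int) -> int:
    """Number of input values a model receives for one sample of this shape."""
    return height * width * bands


if __name__ == "__main__":
    # Toy 4 x 5 tile with 75 bands; reflectance values are placeholders.
    h, w, b = 4, 5, 75
    cube = [[[0.0] * b for _ in range(w)] for _ in range(h)]
    spectra = cube_to_pixel_spectra(cube)
    print(len(spectra), len(spectra[0]))  # prints: 20 75

    patch = values_per_sample(20, 20, 55)  # previous study, leaf-level patch
    pixel = values_per_sample(1, 1, 75)    # current study, single pixel
    print(patch, pixel, round(patch / pixel, 1))  # prints: 22000 75 293.3
```

Flattening in row-major order keeps each sample's position recoverable as `row * W + col`, so per-pixel predictions can be written back into a map. The trade-off is that a single spectrum carries no spatial neighborhood, which may be one reason the pixel-level approach is listed as more prone to noise; the table itself does not state the cause.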

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lyu, Y.; Kuswidiyanto, L.W.; Wang, P.; Noh, H.-H.; Jung, H.-Y.; Han, X. One-Dimensional Convolutional Neural Network for Automated Kimchi Cabbage Downy Mildew Detection Using Aerial Hyperspectral Images. Remote Sens. 2025, 17, 1626. https://doi.org/10.3390/rs17091626
