Accurate Mapping of Downed Deadwood in a Dense Deciduous Forest Using UAV-SfM Data and Deep Learning

Abstract

1. Introduction

1.1. Importance of Deadwood Mapping

1.2. Deadwood Categories and Definitions

1.3. State of the Art of Downed Deadwood Mapping

1.4. Study Objectives and Scope

- Develop a DL-based approach for classifying CWD on very high-resolution UAV imagery;

- Assess the accuracy of the results at area, length, and object levels;

- Compare the results of the accuracy assessment with an OBIA CWD detection approach;

- Derive deadwood volume for the mapping results;

- Test the generalizability of the model by applying it to data from other years.

2. Material and Methods

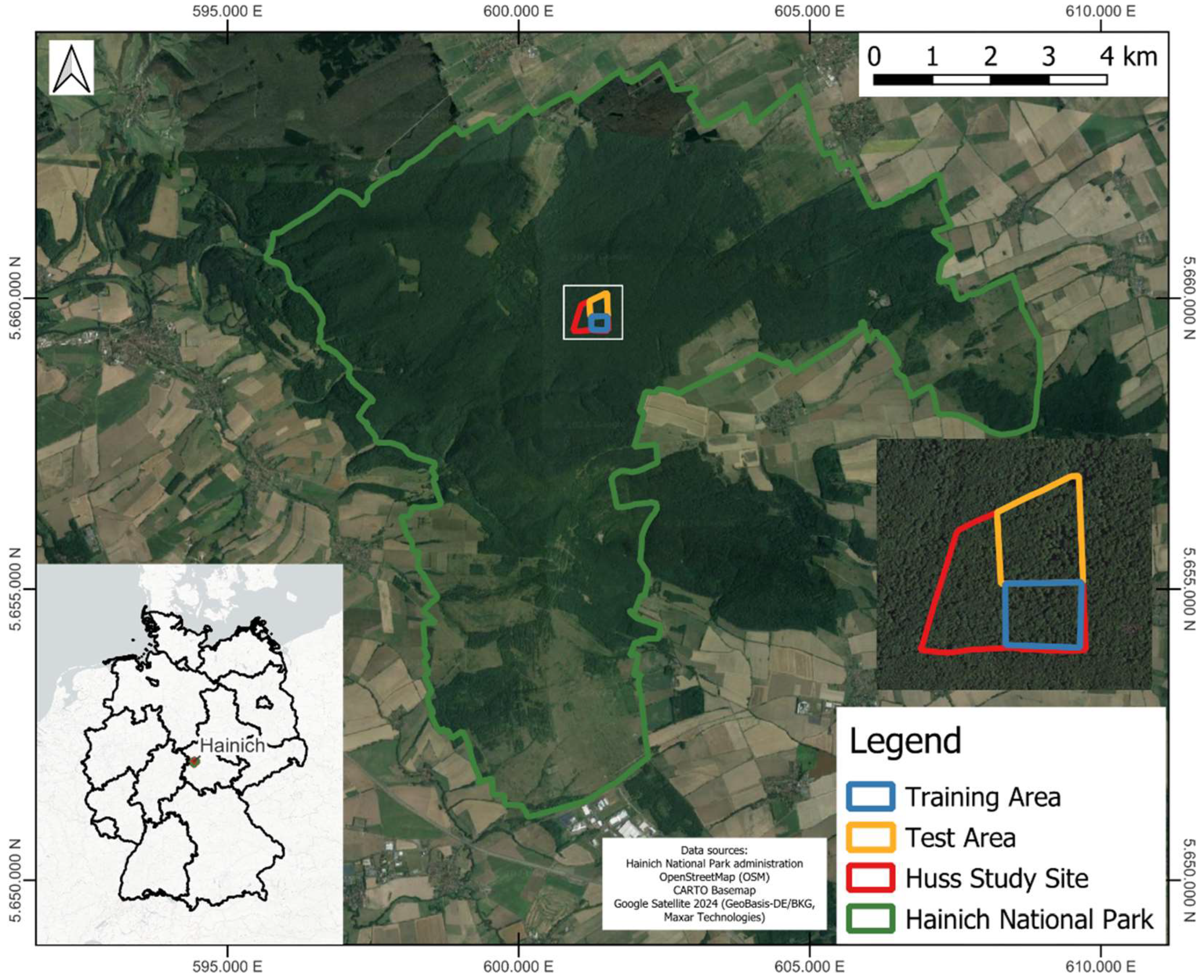

2.1. Study Site

2.2. UAV Imagery Acquisition

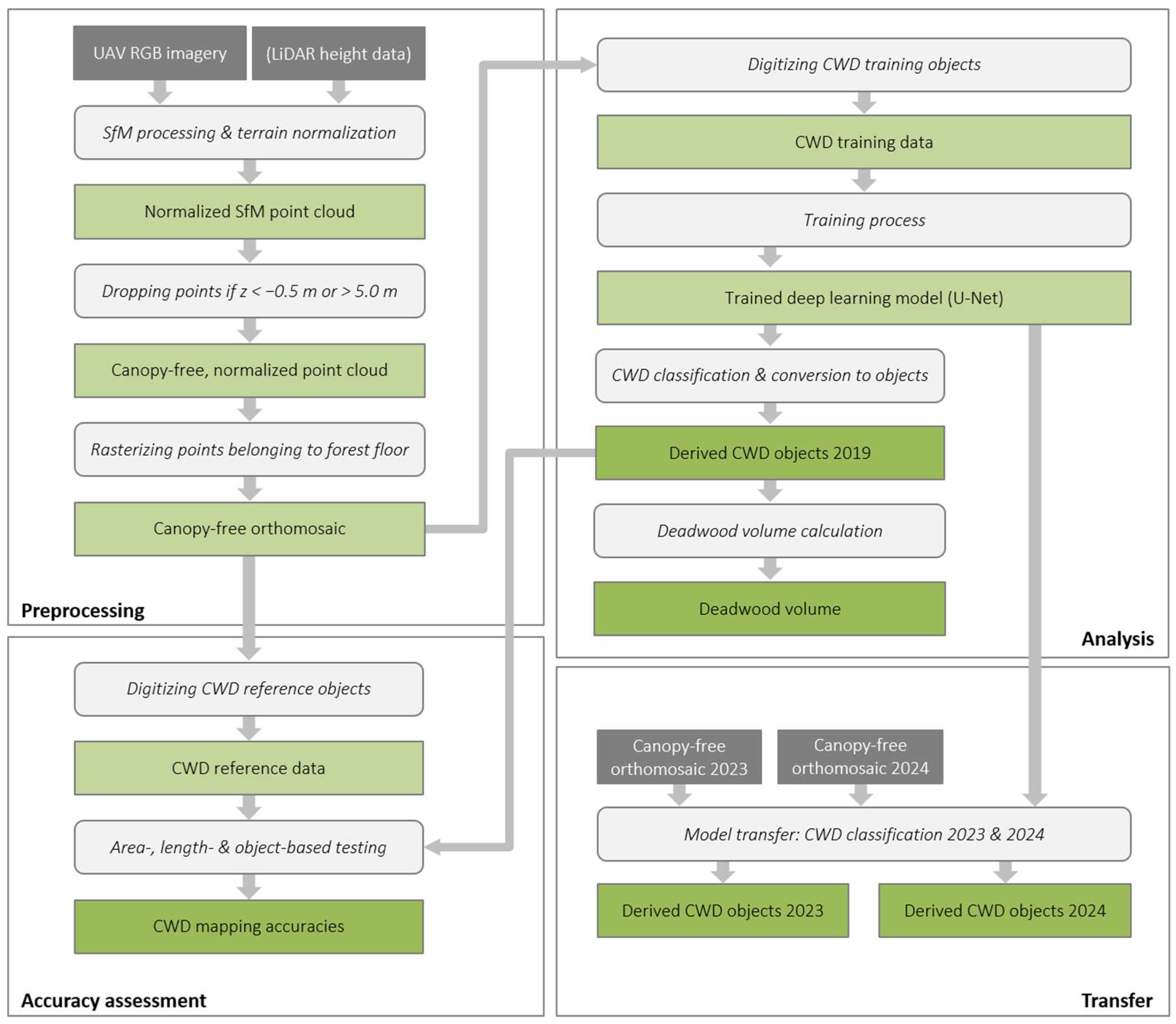

2.3. Deep Learning-Based CWD Mapping

2.3.1. Training Data Generation

2.3.2. Model Training and Classification

2.3.3. Transferability of the Trained Deep Learning Model

2.3.4. Calculation of Deadwood Volume

2.4. Comparative Analysis: Object-Based Image Analysis (OBIA) Approach

2.5. Accuracy Assessment

3. Results

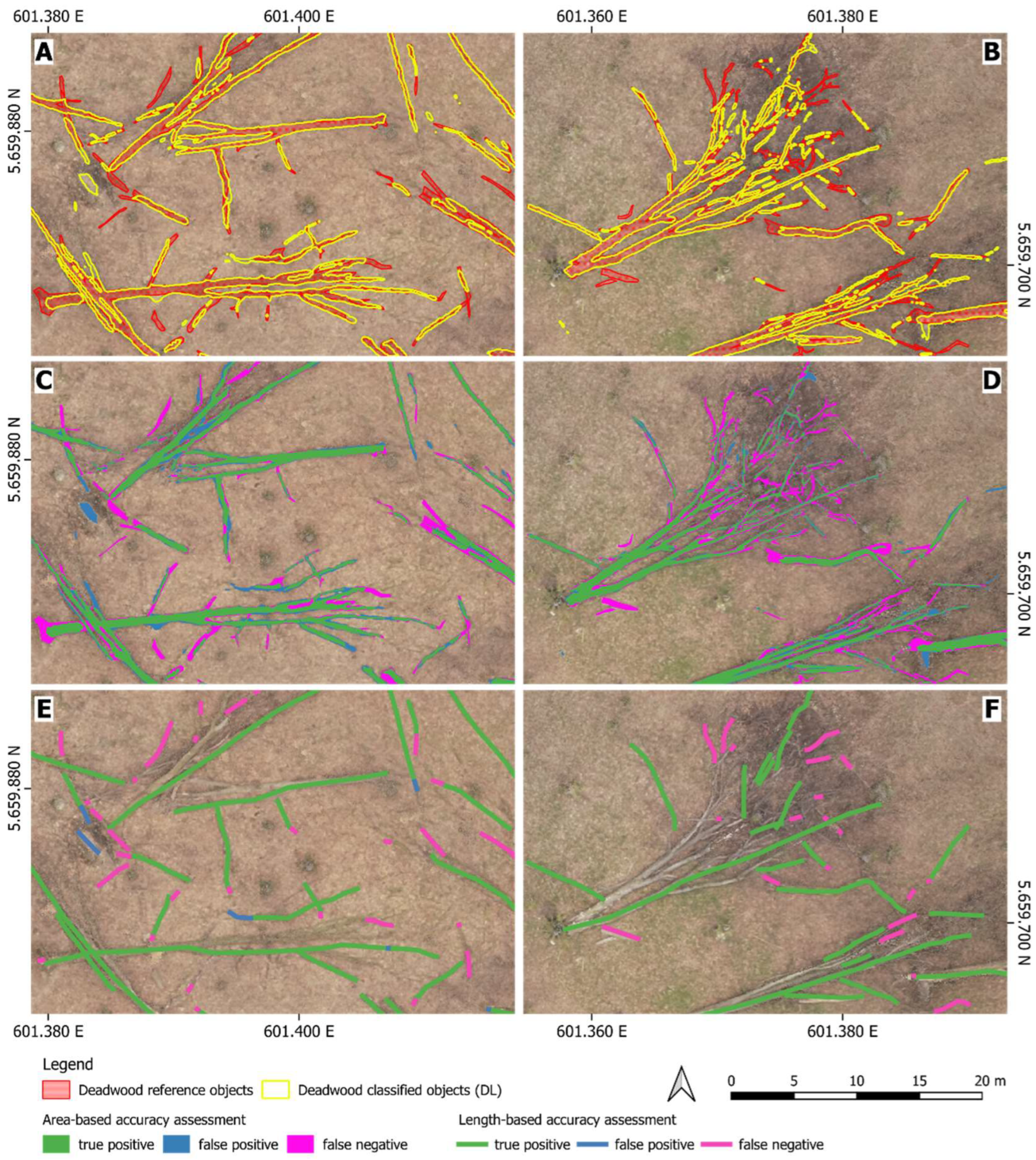

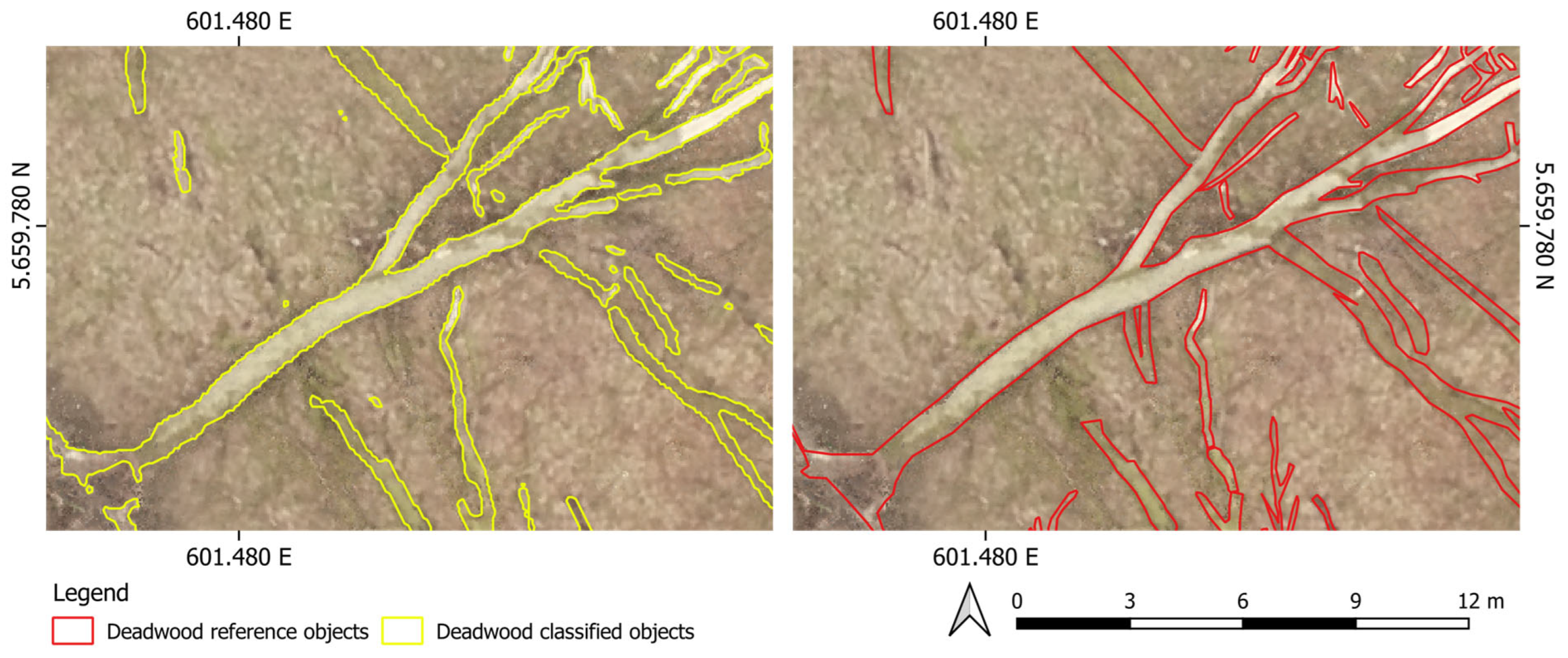

3.1. Deep Learning-Based Classification Results

3.1.1. Area-Based Accuracy Assessment

3.1.2. Length-Based Accuracy Assessment

3.1.3. Object-Based Accuracy Assessment

3.2. Impact of Deep Learning Model Parameters on CWD Detection

3.3. Comparative Analysis: Deep Learning vs. OBIA Classification Performance

3.4. Model Transferability for Deadwood Detection in 2023 and 2024

4. Discussion

4.1. Performance of the DL-Based CWD Mapping

4.2. Comparative Analysis Between the DL and OBIA Approach

4.3. Generalization of the DL Model

4.4. Use of Deadwood Detection Results for Further Forest Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Paper | Sensor, Platform | Method | Resolution | Research Area | Deadwood Type | Results |
|---|---|---|---|---|---|---|
| [69] | ALS | Regression models | 4 pts/m², DEM 1 m res. (area-based) | Koli National Park, eastern Finland | Logs and snags | nRMSE of the volume prediction: 51.6% (downed), 78.8% (standing), and 12.7% (living trees) |
| [71] | ALS | Probability proportional to size sampling and probability layers derived from LiDAR data | 0.5 pts/m² (area-based) | Central Finland, 305.8 ha | Logs and snags | Auxiliary laser data can increase CWD detection accuracies |
| [72] | ALS | Random Forest to predict dead basal area | N/A (area-based) | Bark beetle affected areas in the USA | Snags | Intensity metrics were important in predicting dead basal area |
| [70] | ALS | Regression models with discrete-return and full-waveform LiDAR, leaf-on and leaf-off combined | 5.0 pts/m² (leaf-on) and 5.2 pts/m² (leaf-off) (area-based) | New Forest National Park, southern England, 2200 ha | Logs and snags | nRMSE of the volume prediction: 16% (standing), 27% (downed) |
| [19] | ALS | Bi-temporal LiDAR data, allometric equations | >20 pts/m², Canopy Height Model 0.5 m res. | Helsinki National Park, 14.9 ha | Logs | 97.8% new downed trees, 89% species group prediction |
| [46] | ALS | Rule-based OBIA | ≥9 pts/m² | Near Last Chance in Placer County, CA, USA, 11 ha | Logs | 73% identified logs |
| [47] | ALS | DEM based on multi-temporal, full waveform LiDAR, map algebra | 22–40 pts/m² (leaf-on), 17–20 pts/m² (leaf-off) | Beech forest, Uckermark, Germany, 110 ha, and Lägern, Switzerland, 9 ha | Logs and snags | 70.5% identified logs (37.3% fully, 33.2% partially) |
| [73] | ALS | Line template-matching | 69 pts/m² | Managed hemi-boreal forest southwest of Sweden, 54 ha | Logs | 41% stem matching |
| [74] | ALS | Normalized cut algorithm | 30 pts/m² (leaf-off) | Bavarian Forest National Park, Germany | Logs | 90% of fallen stems under 30–40% overstory presence, with a precision of 80% |
| [48] | TLS | Cylindrical shape detection and merging | High point density | Bavarian Forest National Park, Germany | Logs | Downed trunks completeness up to 0.79 |
| [75] | TLS | Cylinder fitting | High point density | Evo, southern Finland | Logs | Downed trunks completeness of 33% and correctness of 76% |
| [76] | ALS, UAV LiDAR, TLS | Clustering based on geometrical (planarity) and intensity point cloud features | 171–7457 pts/m² (leaf-off) | Plantation and natural forest, USA | Logs | Average recall of 0.83 and precision between 0.40 and 0.85 |
| [77] | CIR image, airborne | Manual digitization on imagery using GIS | 23 cm res. | Spruce forests, Switzerland | Snags | 82% of intact snags, 67% of broken snags detected |
| [78] | RGB image, UAV | Classifications: Pixel-based (decision trees) and object-oriented | 6.8–21.8 cm res. | Riparian forest, France, 174 ha | Snags | Object-oriented: 80% with respect to omission errors and 65% with respect to commission errors |
| [22] | CIR image, airborne | Hybrid classification (e.g., ISODATA clustering, OBIA), regression model (area-based) | 25 cm res. | Gatineau Park, Canada | Snags, logs volume | Snags: accuracy of 94%, regression models with high errors |
| [79] | CIR image + ALS | Single-tree classification using logistic regression | 17 cm and 9.5 cm res., LiDAR: 55 pts/m² | Šumava and Bavarian Forest National Park, 924 km² | Snags | Overall accuracy of 82.8–92.6% |
| [21] | RGB image, airborne | Template matching, machine learning approaches for volume prediction | 0.2 m res. | Tuscany Region, Italy, 456 km² | Logs | R² = 0.92 with SVM regression for volume prediction of windthrown trees |
| [20] | RGB image, UAV | Manual detection | 0.5–1 m res. | Ogawa Forest Reserve in Kitaibaraki, Japan, 6 ha | Logs | Insufficient detection of small CWD, 80–90% accuracy on bigger CWD |
| [23] | RGB image, UAV | Line template matching | 2.68 cm res. | West Bohemia, Czech Republic | Logs | Kappa of 0.44 |
| [80] | RGB image, airborne | Random forest classification, filters | 0.5 m res., DEM 1 m res. | Black Forest, Germany, 600 ha | Snags | User’s accuracy of 0.74, producer’s accuracy of 0.80 after application of filters |
| [81] | CIR image, airborne | Classification using extended VGG-16 (CNN) | 20 cm res. | Province of Quebec, Canada, 32 km² | Snags (live vs. dead) | Tree health status prediction accuracy 94% |
| [82] | RGB image, UAV | Object detection using a new CNN | 5–10 cm res. | Nature reserve “Stolby” in Krasnoyarsk, Russia | Snags (damage categories) | F-Score up to 88.89% with data augmentation for deadwood classification |
| [30] | RGB image, UAV | Semantic segmentation using adapted U-Net | <2 cm res. | Hainich National Park and Black Forest, Germany, 51 ha | Snags (deadwood and tree species) | Mean F1-score of 73% classifying several tree species including deadwood |
| [83] | RGB image, UAV | Segmentation using YOLOv8x-seg | 25 cm res. | Júcar river, Spain | Wood debris in river | Accuracy strongly depends on the characteristics of the debris |
| [24] | CIR image, airborne | Semantic segmentation using optimized FCN-DenseNet | 10 cm res. | Bavarian Forest National Park, Germany | Logs and snags | Recall of 94.6% (standing) and 67.8% (downed), precision of 100% (standing) and 99.0% (downed) |
| [49] | RGB image, UAV | Classification using AlexNet and GoogLeNet (CNNs) | 8.47 cm res. | Jinjiang, Fujian Province in southeastern China, 4.25 km² | Snags (dead pine trees) | AlexNet: 90% precision, 70% recall; GoogLeNet: 98% precision, 51% recall of dead pine trees |
| [84] | RGB image, UAV | Object detection using optimized Faster R-CNN | N/A | Ji’an, Jiangxi Province, China, 1.8 km² | Snags (pine wilt diseased) | Accuracy of 89.1% |
| [25] | RGB image, UAV | Semantic segmentation using U-Net and heuristic stem reconstruction model | <2 cm res. | Coniferous forests, Germany | Logs | Rates of at least 50% stem detection between 60% and 96% |
| [26] | RGB image, UAV | Instance segmentation using Mask R-CNN | N/A | German forests | Logs and snags | 92.4% overall accuracy, 43.4% mean average precision |
| [18] | RGB image, UAV | Rule-based OBIA | 5 cm res. | Hainich National Park, 28.2 ha | Logs | 83.5% precision, 69.2% recall (length-based) |

References

1. Gardner, C.J.; Bicknell, J.E.; Baldwin-Cantello, W.; Struebig, M.J.; Davies, Z.G. Quantifying the impacts of defaunation on natural forest regeneration in a global meta-analysis. Nat. Commun. 2019, 10, 4590.
2. van Tiel, N.; Fopp, F.; Brun, P.; van den Hoogen, J.; Karger, D.N.; Casadei, C.M.; Lyu, L.; Tuia, D.; Zimmermann, N.E.; Crowther, T.W.; et al. Regional uniqueness of tree species composition and response to forest loss and climate change. Nat. Commun. 2024, 15, 4375.
3. Cook-Patton, S.C.; Leavitt, S.M.; Gibbs, D.; Harris, N.L.; Lister, K.; Anderson-Teixeira, K.J.; Briggs, R.D.; Chazdon, R.L.; Crowther, T.W.; Ellis, P.W.; et al. Mapping carbon accumulation potential from global natural forest regrowth. Nature 2020, 585, 545–550.
4. Winkel, G.; Lovrić, M.; Muys, B.; Katila, P.; Lundhede, T.; Pecurul, M.; Pettenella, D.; Pipart, N.; Plieninger, T.; Prokofieva, I.; et al. Governing Europe’s forests for multiple ecosystem services: Opportunities, challenges, and policy options. For. Policy Econ. 2022, 145, 102849.
5. Crecente-Campo, F.; Pasalodos-Tato, M.; Alberdi, I.; Hernández, L.; Ibañez, J.J.; Cañellas, I. Assessing and modelling the status and dynamics of deadwood through national forest inventory data in Spain. For. Ecol. Manag. 2016, 360, 297–310.
6. Harmon, M.E.; Franklin, J.F.; Swanson, F.J.; Sollins, P.; Gregory, S.V.; Lattin, J.D.; Anderson, N.H.; Cline, S.P.; Aumen, N.G.; Sedell, J.R.; et al. Ecology of Coarse Woody Debris in Temperate Ecosystems. Adv. Ecol. Res. 2004, 34, 59–234.
7. Ravindranath, N.H.; Ostwald, M. Carbon Inventory Methods: A Handbook for Greenhouse Gas Inventory, Carbon Mitigation and Roundwood Production Projects; Advances in Global Change Research; Springer: Berlin/Heidelberg, Germany, 2008; pp. 217–235.
8. Lassauce, A.; Paillet, Y.; Jactel, H.; Bouget, C. Deadwood as a surrogate for forest biodiversity: Meta-analysis of correlations between deadwood volume and species richness of saproxylic organisms. Ecol. Indic. 2011, 11, 1027–1039.
9. Augustynczik, A.L.D.; Gusti, M.; Di Fulvio, F.; Lauri, P.; Forsell, N.; Havlík, P. Modelling the effects of climate and management on the distribution of deadwood in European forests. J. Environ. Manag. 2024, 354, 120382.
10. Nyström, M.; Holmgren, J.; Fransson, J.E.S.; Olsson, H. Detection of windthrown trees using airborne laser scanning. Int. J. Appl. Earth Obs. Geoinf. 2014, 30, 21–29.
11. Trumbore, S.; Brando, P.; Hartmann, H. Forest health and global change. Science 2015, 349, 814–818.
12. European Parliament and Council. Regulation (EU) 2024/1991 of the European Parliament and of the Council of 24 June 2024 on Nature Restoration and Amending Regulation (EU) 2022/869. Official Journal of the European Union. 2024. Available online: https://eur-lex.europa.eu/eli/reg/2024/1991/oj/eng (accessed on 1 April 2025).
13. Larjavaara, M.; Brotons, L.; Corticeiro, S.; Espelta, J.M.; Gazzard, R.; Leverkus, A.; Lovrić, N.; Maia, P.; Sanders, T.G.M.; Svoboda, M.; et al. Deadwood and Fire Risk in Europe: Knowledge Synthesis for Policy; Publications Office of the European Union: Luxembourg, 2023; Available online: https://www.openagrar.de/receive/openagrar_mods_00090284 (accessed on 1 April 2025).
14. Schwill, S.; Schleyer, E.; Planek, J. Handbuch Waldmonitoring für Flächen des Nationalen Naturerbes. 2016. Available online: https://www.naturschutzflaechen.de/fileadmin/Medien/Downloads/NNE_Infoportal/Monitoring/Handbuch_Waldmonitoring.pdf (accessed on 1 April 2025).
15. Maltamo, M.; Kallio, E.; Bollandsås, O.M.; Næsset, E.; Gobakken, T.; Pesonen, A. Assessing Dead Wood by Airborne Laser Scanning. In Forestry Applications of Airborne Laser Scanning; Maltamo, M., Næsset, E., Vauhkonen, J., Eds.; Springer: Dordrecht, The Netherlands, 2014; pp. 375–395. ISBN 978-94-017-8663-8.
16. Marchi, N.; Pirotti, F.; Lingua, E. Airborne and terrestrial laser scanning data for the assessment of standing and lying deadwood: Current situation and new perspectives. Remote Sens. 2018, 10, 1356.
17. Pignatti, G.; Natale, F.D.; Gasparini, P.; Paletto, A. Deadwood in Italian forests according to National Forest Inventory results. For. Riv. Selvic. Ecol. For. 2009, 6, 365–375.
18. Thiel, C.; Mueller, M.M.; Epple, L.; Thau, C.; Hese, S.; Voltersen, M.; Henkel, A. UAS Imagery-Based Mapping of Coarse Wood Debris in a Natural Deciduous Forest in Central Germany (Hainich National Park). Remote Sens. 2020, 12, 3293.
19. Tanhuanpää, T.; Kankare, V.; Vastaranta, M.; Saarinen, N.; Holopainen, M. Monitoring downed coarse woody debris through appearance of canopy gaps in urban boreal forests with bitemporal ALS data. Urban For. Urban Green. 2015, 14, 835–843.
20. Inoue, T.; Nagai, S.; Yamashita, S.; Fadaei, H.; Ishii, R.; Okabe, K.; Taki, H.; Honda, Y.; Kajiwara, K.; Suzuki, R. Unmanned aerial survey of fallen trees in a deciduous broadleaved forest in eastern Japan. PLoS ONE 2014, 9, e109881.
21. Pirotti, F.; Travaglini, D.; Giannetti, F.; Kutchartt, E.; Bottalico, F.; Chirici, G. Kernel feature cross-correlation for unsupervised quantification of damage from windthrow in forests. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2016, 41, 17–22.
22. Pasher, J.; King, D.J. Mapping dead wood distribution in a temperate hardwood forest using high resolution airborne imagery. For. Ecol. Manag. 2009, 258, 1536–1548.
23. Panagiotidis, D.; Abdollahnejad, A.; Surový, P.; Kuželka, K. Detection of fallen logs from high-resolution UAV images. N. Z. J. For. 2019, 49, 1–11.
24. Jiang, S.; Yao, W.; Heurich, M. Dead wood detection based on semantic segmentation of VHR aerial CIR imagery using optimized FCN-Densenet. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2019, 42, 127–133.
25. Reder, S.; Kruse, M.; Miranda, L.; Voss, N.; Mund, J.-P. Unveiling wind-thrown trees: Detection and quantification of wind-thrown tree stems on UAV-orthomosaics based on UNet and a heuristic stem reconstruction. For. Ecol. Manag. 2025, 578, 122411.
26. Bulatov, D.; Leidinger, F. Instance segmentation of deadwood objects in combined optical and elevation data using convolutional neural networks. In Proceedings Volume 11863, Earth Resources and Environmental Remote Sensing/GIS Applications XII; Schulz, K., Ed.; SPIE: Bellingham, WA, USA, 2021; p. 37. ISBN 9781510645707.
27. Dietenberger, S.; Mueller, M.M.; Bachmann, F.; Nestler, M.; Ziemer, J.; Metz, F.; Heidenreich, M.G.; Koebsch, F.; Hese, S.; Dubois, C.; et al. Tree Stem Detection and Crown Delineation in a Structurally Diverse Deciduous Forest Combining Leaf-On and Leaf-Off UAV-SfM Data. Remote Sens. 2023, 15, 4366.
28. Mueller, M.M.; Dietenberger, S.; Nestler, M.; Hese, S.; Ziemer, J.; Bachmann, F.; Leiber, J.; Dubois, C.; Thiel, C. Novel UAV Flight Designs for Accuracy Optimization of Structure from Motion Data Products. Remote Sens. 2023, 15, 4308.
29. Biehl, R. Der Nationalpark Hainich—“Urwald mitten in Deutschland”. In Exkursionsführer zur Tagung der AG Forstliche Standorts-und Vegetationskunde vom 18. bis 21. Mai 2005 in Thüringen; Wald, T.L., Fischerei, J., Eds.; Thüringer Landesanstalt für Wald, Jagd und Fischerei: Gotha, Germany, 2005; pp. 44–47.
30. Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping forest tree species in high resolution UAV-based RGB-imagery by means of convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215.
31. Huss, J.; Butler-Manning, D. Entwicklungsdynamik eines buchendominierten “Naturwald”-Dauerbeobachtungsbestands auf Kalk im Nationalpark Hainich/Thüringen. Wald. Online 2006, 3, 67–81.
32. Henkel, A.; Hese, S.; Thiel, C. Erhöhte Buchenmortalität im Nationalpark Hainich? AFZ Wald 2022, 26–29.
33. Fritzlar, D.; Henkel, A.; Hornschuh, M.; Kleidon-Hildebrandt, A.; Kohlhepp, D.; Lehmann, R.; Lorenzen, K.; Mund, M.; Profft, I.; Siebicke, L. Exkursionsführer—Wissenschaft im Hainich. 2016. Available online: http://www.hainichtagung2016.de/downloads/HT2016_Exkursionsfuehrer_final.pdf (accessed on 3 August 2024).
34. Schellenberg, K.; Jagdhuber, T.; Zehner, M.; Hese, S.; Urban, M.; Urbazaev, M.; Hartmann, H.; Schmullius, C.; Dubois, C. Potential of Sentinel-1 SAR to Assess Damage in Drought-Affected Temperate Deciduous Broadleaf Forests. Remote Sens. 2023, 15, 1004.
35. Freeland, R.; Allred, B.; Eash, N.; Martinez, L.; de Wishart, B. Agricultural drainage tile surveying using an unmanned aircraft vehicle paired with Real-Time Kinematic positioning—A case study. Comput. Electron. Agric. 2019, 165, 104946.
36. Knohl, A.; Schulze, E.-D.; Kolle, O.; Buchmann, N. Large carbon uptake by an unmanaged 250-year-old deciduous forest in Central Germany. Agric. For. Meteorol. 2003, 118, 151–167.
37. Thiel, C.; Müller, M.M.; Berger, C.; Cremer, F.; Dubois, C.; Hese, S.; Baade, J.; Klan, F.; Pathe, C. Monitoring Selective Logging in a Pine-Dominated Forest in Central Germany with Repeated Drone Flights Utilizing a Low Cost RTK Quadcopter. Drones 2020, 4, 11.
38. Wallace, L.; Lucieer, A.; Malenovskỳ, Z.; Turner, D.; Vopěnka, P. Assessment of forest structure using two UAV techniques: A comparison of airborne laser scanning and structure from motion (SfM) point clouds. Forests 2016, 7, 62.
39. Snavely, N.; Seitz, S.M.; Szeliski, R. Modeling the World from Internet Photo Collections. Int. J. Comput. Vis. 2008, 80, 189–210.
40. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer: Cham, Switzerland, 2015; ISBN 9783319245522.
41. Wagner, F.H.; Sanchez, A.; Tarabalka, Y.; Lotte, R.G.; Ferreira, M.P.; Aidar, M.P.M.; Gloor, E.; Phillips, O.L.; Aragão, L.E.O.C. Using the U-net convolutional network to map forest types and disturbance in the Atlantic rainforest with very high resolution images. Remote Sens. Ecol. Conserv. 2019, 5, 360–375.
42. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 29th IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; IEEE: Piscataway, NJ, USA, 2016. ISBN 978-1-4673-8851-1.
43. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.
44. Lovász, V.; Halász, A.; Molnár, P.; Karsa, R.; Halmai, Á. Application of a CNN to the Boda Claystone Formation for high-level radioactive waste disposal. Sci. Rep. 2023, 13, 5491.
45. Nyberg, B.; Buckley, S.J.; Howell, J.A.; Nanson, R.A. Geometric attribute and shape characterization of modern depositional elements: A quantitative GIS method for empirical analysis. Comput. Geosci. 2015, 82, 191–204.
46. Blanchard, S.D.; Jakubowski, M.K.; Kelly, M. Object-based image analysis of downed logs in disturbed forested landscapes using lidar. Remote Sens. 2011, 3, 2420–2439.
47. Leiterer, R.; Mücke, W.; Morsdorf, F.; Hollaus, M.; Pfeifer, N.; Schaepman, M.E. Operational forest structure monitoring using airborne laser scanning. Photogramm. Fernerkund. Geoinf. 2013, 2013, 173–184.
48. Polewski, P.; Yao, W.; Heurich, M.; Krzystek, P.; Stilla, U. A voting-based statistical cylinder detection framework applied to fallen tree mapping in terrestrial laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2017, 129, 118–130.
49. Tao, H.; Li, C.; Zhao, D.; Deng, S.; Hu, H.; Xu, X.; Jing, W. Deep learning-based dead pine tree detection from unmanned aerial vehicle images. Int. J. Remote Sens. 2020, 41, 8238–8255.
50. Magnússon, R.Í.; Tietema, A.; Cornelissen, J.H.; Hefting, M.M.; Kalbitz, K. Tamm Review: Sequestration of carbon from coarse woody debris in forest soils. For. Ecol. Manag. 2016, 377, 1–15.
51. Huang, L.; Pan, W.; Zhang, Y.; Qian, L.; Gao, N.; Wu, Y. Data Augmentation for Deep Learning-Based Radio Modulation Classification. IEEE Access 2020, 8, 1498–1506.
52. Mikolajczyk, A.; Grochowski, M. Data augmentation for improving deep learning in image classification problem. In Proceedings of the 2018 International Interdisciplinary PhD Workshop (IIPhDW), Swinoujście, Poland, 9–12 May 2018; IIPhDW, Ed.; IEEE: Piscataway, NJ, USA, 2018; pp. 117–122, ISBN 978-1-5386-6143-7.
53. Moreno-Barea, F.J.; Jerez, J.M.; Franco, L. Improving classification accuracy using data augmentation on small data sets. Expert Syst. Appl. 2020, 161, 113696.
54. Chen, C.; Fan, L. Scene segmentation of remotely sensed images with data augmentation using U-net++. In Proceedings of the 2021 International Conference on Computer Engineering and Artificial Intelligence (ICCEAI), Shanghai, China, 27–29 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 201–205, ISBN 978-1-6654-3960-2.
55. He, Y.; Jia, K.; Wei, Z. Improvements in Forest Segmentation Accuracy Using a New Deep Learning Architecture and Data Augmentation Technique. Remote Sens. 2023, 15, 2412.
56. Kattenborn, T.; Eichel, J.; Fassnacht, F.E. Convolutional Neural Networks enable efficient, accurate and fine-grained segmentation of plant species and communities from high-resolution UAV imagery. Sci. Rep. 2019, 9, 17656.
57. Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.L.L.; Moritake, K.; Cabezas, M. Deep learning in forestry using uav-acquired rgb data: A practical review. Remote Sens. 2021, 13, 2837.
58. Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.
59. Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.
60. Kattenborn, T.; Mosig, C.; Pratima, K.; Frey, J.; Perez-Priego, O.; Schiefer, F.; Cheng, Y.; Potts, A.; Jehle, J.; Mälicke, M.; et al. deadtrees.earth—An open, dynamic database for accessing, contributing, analyzing, and visualizing remote sensing-based tree mortality data. In Proceedings of the EGU General Assembly, Vienna, Austria, 14–19 April 2024.
61. Troles, J.; Schmid, U.; Fan, W.; Tian, J. BAMFORESTS: Bamberg Benchmark Forest Dataset of Individual Tree Crowns in Very-High-Resolution UAV Images. Remote Sens. 2024, 16, 1935.
62. Luo, M.; Ji, S. Cross-spatiotemporal land-cover classification from VHR remote sensing images with deep learning based domain adaptation. ISPRS J. Photogramm. Remote Sens. 2022, 191, 105–128.
63. Luo, M.; Ji, S.; Wei, S. A Diverse Large-Scale Building Dataset and a Novel Plug-and-Play Domain Generalization Method for Building Extraction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4122–4138.
64. Zhu, S.; Wu, C.; Du, B.; Zhang, L. Style and content separation network for remote sensing image cross-scene generalization. ISPRS J. Photogramm. Remote Sens. 2023, 201, 1–11.
65. Corley, I.; Robinson, C.; Dodhia, R.; Ferres, J.M.L.; Najafirad, P. Revisiting pre-trained remote sensing model benchmarks: Resizing and normalization matters. arXiv 2023, arXiv:2305.13456v1.
66. Sacher, P.; Meyerhoff, J.; Mayer, M. Evidence of the association between deadwood and forest recreational site choices. For. Policy Econ. 2022, 135, 102638.
67. Shannon, V.L.; Vanguelova, E.I.; Morison, J.I.L.; Shaw, L.J.; Clark, J.M. The contribution of deadwood to soil carbon dynamics in contrasting temperate forest ecosystems. Eur. J. For. Res. 2022, 141, 241–252.
68. Lingua, E.; Marques, G.; Marchi, N.; Garbarino, M.; Marangon, D.; Taccaliti, F.; Marzano, R. Post-Fire Restoration and Deadwood Management: Microsite Dynamics and Their Impact on Natural Regeneration. Forests 2023, 14, 1820.
69. Pesonen, A.; Maltamo, M.; Eerikäinen, K.; Packalèn, P. Airborne laser scanning-based prediction of coarse woody debris volumes in a conservation area. For. Ecol. Manag. 2008, 255, 3288–3296.
70. Sumnall, M.J.; Hill, R.A.; Hinsley, S.A. Comparison of small-footprint discrete return and full waveform airborne lidar data for estimating multiple forest variables. Remote Sens. Environ. 2016, 173, 214–223.
71. Pesonen, A.; Leino, O.; Maltamo, M.; Kangas, A. Comparison of field sampling methods for assessing coarse woody debris and use of airborne laser scanning as auxiliary information. For. Ecol. Manag. 2009, 257, 1532–1541.
72. Bright, B.C.; Hudak, A.T.; McGaughey, R.; Andersen, H.-E.; Negrón, J. Predicting live and dead tree basal area of bark beetle affected forests from discrete-return lidar. Can. J. Remote Sens. 2013, 39, S99–S111.
73. Lindberg, E.; Hollaus, M.; Mücke, W.; Fransson, J.E.S.; Pfeifer, N. Detection of lying tree stems from airborne laser scanning data using a line template matching algorithm. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 2, 169–174.
74. Polewski, P.; Yao, W.; Heurich, M.; Krzystek, P.; Stilla, U. Detection of fallen trees in ALS point clouds using a Normalized Cut approach trained by simulation. ISPRS J. Photogramm. Remote Sens. 2015, 105, 252–271.
75. Yrttimaa, T.; Saarinen, N.; Luoma, V.; Tanhuanpää, T.; Kankare, V.; Liang, X.; Hyyppä, J.; Holopainen, M.; Vastaranta, M. Detecting and characterizing downed dead wood using terrestrial laser scanning. ISPRS J. Photogramm. Remote Sens. 2019, 151, 76–90.
76. dos Santos, R.C.; Shin, S.-Y.; Manish, R.; Zhou, T.; Fei, S.; Habib, A. General Approach for Forest Woody Debris Detection in Multi-Platform LiDAR Data. Remote Sens. 2025, 17, 651.
77. Bütler, R.; Schlaepfer, R. Spruce snag quantification by coupling colour infrared aerial photos and a GIS. For. Ecol. Manag. 2004, 195, 325–339.
78. Dunford, R.; Michel, K.; Gagnage, M.; Piégay, H.; Trémelo, M.-L. Potential and constraints of Unmanned Aerial Vehicle technology for the characterization of Mediterranean riparian forest. Int. J. Remote Sens. 2009, 30, 4915–4935.
79. Krzystek, P.; Serebryanyk, A.; Schnörr, C.; Červenka, J.; Heurich, M. Large-scale mapping of tree species and dead trees in Sumava National Park and Bavarian Forest National Park using lidar and multispectral imagery. Remote Sens. 2020, 12, 661.
80. Zielewska-Büttner, K.; Adler, P.; Kolbe, S.; Beck, R.; Ganter, L.M.; Koch, B.; Braunisch, V. Detection of standing deadwood from aerial imagery products: Two methods for addressing the bare ground misclassification issue. Forests 2020, 11, 801.
81. Sylvain, J.D.; Drolet, G.; Brown, N. Mapping dead forest cover using a deep convolutional neural network and digital aerial photography. ISPRS J. Photogramm. Remote Sens. 2019, 156, 14–26.
82. Safonova, A.; Tabik, S.; Alcaraz-Segura, D.; Rubtsov, A.; Maglinets, Y.; Herrera, F. Detection of Fir Trees (Abies sibirica) Damaged by the Bark Beetle in Unmanned Aerial Vehicle Images with Deep Learning. Remote Sens. 2019, 11, 643.
83. Barbero-García, I.; Guerrero-Sevilla, D.; Sánchez-Jiménez, D.; Marqués-Mateu, Á.; González-Aguilera, D. Aerial-Drone-Based Tool for Assessing Flood Risk Areas Due to Woody Debris Along River Basins. Drones 2025, 9, 191.
84. Deng, X.; Tong, Z.; Lan, Y.; Huang, Z. Detection and Location of Dead Trees with Pine Wilt Disease Based on Deep Learning and UAV Remote Sensing. AgriEngineering 2020, 2, 294–307.

|  | Parameter | 24 March 2019 | 21 March 2023 | 12 March 2024 |
|---|---|---|---|---|
|  | UAV Type | DJI Phantom 4 Pro | DJI Mavic 3 Enterprise | DJI Mavic 3 Enterprise |
| (a) | Time (UTC+1) of First Shot | 10:36 a.m. | 12:08 p.m. | 11:34 a.m. |
|  | Clouds | Overcast (8/8) | Overcast (8/8) | Overcast (8/8) |
|  | No. Images | 578 | 2446 | 3012 |
|  | Image Overlap (Front/Side) | 85%/80% | 85%/80% | 85%/80% |
|  | Flight Speed | 5.0 m/s | 6.0 m/s | 6.0 m/s |
|  | Shutter Speed | 1/360 s (Shutter Speed Priority) | 1/250–1/1000 s | 1/2500–1/500 s |
|  | Distortion Correction | Yes | No | No |
|  | Gimbal Angle | −90° (Nadir) | 1 × −90° (Nadir), 3 × −65° (Oblique) | 1 × −90° (Nadir), 4 × −65° (Oblique) |
|  | Flight Altitude Over Tower Platform | 100 m | 105 m | 105 m |
|  | ISO Sensitivity | ISO400 | ISO100–340 | ISO100–730 |
|  | Aperture | F/5.0–F/5.6 (Exposure Value: −0.3) | F/2.8 (Exposure Value: 0) | F/2.8 (Exposure Value: 0) |
| (b) | Geometric Resolution (Ground) | 4.18 cm | 4.25 cm | 4.26 cm |
|  | Detected Tie Points | 104,768 | 540,736 | 1,127,058 |
|  | Aligned Cameras | 578/578 | 2446/2446 | 3012/3012 |
|  | Average Error of Camera Position (x, y, z) | 0.22, 0.13, 0.13 cm | 2.17, 2.27, 3.46 cm | 0.32, 0.56, 3.25 cm |
|  | Effective Reprojection Error | 0.32 pix | 0.40 pix | 0.36 pix |
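
As a rough plausibility check on the flight settings listed above, the forward motion blur per exposure can be estimated from flight speed, shutter speed, and ground resolution. The following is a minimal sketch, not part of the original workflow: the function name `motion_blur_px` is hypothetical, and it assumes level flight at constant speed, a nadir view, and that blur is simply the distance travelled during the exposure divided by the reported ground resolution.

```python
def motion_blur_px(speed_m_s: float, exposure_s: float, gsd_cm: float) -> float:
    """Estimate forward motion blur in pixels (simplified, assumed model).

    Blur is taken as the ground distance covered during one exposure,
    expressed in units of the ground sampling distance (GSD).
    """
    if exposure_s <= 0 or gsd_cm <= 0:
        raise ValueError("exposure_s and gsd_cm must be positive")
    blur_cm = speed_m_s * exposure_s * 100.0  # metres -> centimetres
    return blur_cm / gsd_cm


# Values taken from the flight parameter table; for 2023 and 2024 the
# slowest listed shutter speed is used as the worst case (an assumption).
FLIGHTS = {
    "2019": motion_blur_px(5.0, 1 / 360, 4.18),
    "2023": motion_blur_px(6.0, 1 / 250, 4.25),
    "2024": motion_blur_px(6.0, 1 / 500, 4.26),
}

if __name__ == "__main__":
    for year, blur in FLIGHTS.items():
        print(f"{year}: ~{blur:.2f} px worst-case forward blur")
```

Under these assumptions, the worst-case blur stays below one pixel for all three flights (about a third of a pixel for the 2019 flight). Oblique images and wind gusts are not covered by this simplified model.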

| Approach | Assessment level | Reference | tp | fn | fp | Recall | Precision | F1-Score | rBias |
|---|---|---|---|---|---|---|---|---|---|
| DL | Area-based | 2987.06 m² | 2018.35 m² | 968.76 m² | 553.20 m² | 67.57% | 78.49% | 72.62% | −13.91% |
| OBIA | Area-based | 2987.06 m² | 1775.95 m² | 1211.10 m² | 3797.77 m² | 59.45% | 31.86% | 41.49% | 86.60% |
| DL | Length-based, min. 2 m | 7459.67 m | 5509.43 m | 1336.72 m | 153.29 m | 80.47% | 97.29% | 88.09% | −15.86% |
| OBIA | Length-based, min. 2 m | 7459.67 m | 4281.36 m | 2636.82 m | 1027.20 m | 61.89% | 80.65% | 70.03% | −21.58% |
| DL | Length-based, min. 10 m | 3529.88 m | 3156.81 m | 231.97 m | 33.16 m | 93.15% | 98.96% | 95.97% | −5.63% |
| OBIA | Length-based, min. 10 m | 3529.88 m | 2481.87 m | 261.98 m | 889.32 m | 73.62% | 90.45% | 81.17% | 17.77% |
| DL | Object-based | 1110 | 700 | 268 | 21 | 72.31% | 97.09% | 82.89% | −22.25% |
| OBIA | Object-based | 1110 | 504 | 463 | 136 | 52.12% | 78.75% | 62.73% | −29.46% |
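
The recall, precision, and F1-score columns of the accuracy table above follow from the tp, fn, and fp values by the standard definitions. The sketch below recomputes them for three rows. The class `Counts` and all function names are illustrative and not taken from the paper. The `rel_bias` formula, (tp + fp − reference) / reference, is an assumption: it reproduces the area-based rBias values but not the length-based or object-based ones, so the definition used for those levels may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Reference total plus true positives, false negatives, false positives."""
    reference: float
    tp: float
    fn: float
    fp: float


def recall(c: Counts) -> float:
    """Share of the reference that was detected: tp / (tp + fn)."""
    return c.tp / (c.tp + c.fn)


def precision(c: Counts) -> float:
    """Share of the detections that are correct: tp / (tp + fp)."""
    return c.tp / (c.tp + c.fp)


def f1_score(c: Counts) -> float:
    """Harmonic mean of precision and recall."""
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r)


def rel_bias(c: Counts) -> float:
    """Assumed definition: (mapped - reference) / reference, mapped = tp + fp."""
    return (c.tp + c.fp - c.reference) / c.reference


# Values copied from the accuracy table (areas in square metres, objects as counts).
# The OBIA row is assumed to share the reference value of the DL row.
DL_AREA = Counts(reference=2987.06, tp=2018.35, fn=968.76, fp=553.20)
OBIA_AREA = Counts(reference=2987.06, tp=1775.95, fn=1211.10, fp=3797.77)
DL_OBJECT = Counts(reference=1110, tp=700, fn=268, fp=21)

if __name__ == "__main__":
    for name, c in [("DL area", DL_AREA), ("OBIA area", OBIA_AREA), ("DL object", DL_OBJECT)]:
        print(f"{name}: recall={recall(c):.2%} precision={precision(c):.2%} "
              f"F1={f1_score(c):.2%}")
```

Rounded to two decimals, the recomputed recall, precision, and F1-score for these rows agree with the tabulated percentages.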


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dietenberger, S.; Mueller, M.M.; Stöcker, B.; Dubois, C.; Arlaud, H.; Adam, M.; Hese, S.; Meyer, H.; Thiel, C. Accurate Mapping of Downed Deadwood in a Dense Deciduous Forest Using UAV-SfM Data and Deep Learning. Remote Sens. 2025, 17, 1610. https://doi.org/10.3390/rs17091610
