Urban Sprawl Monitoring by VHR Images Using Active Contour Loss and Improved U-Net with Mix Transformer Encoders

Abstract

1. Introduction

2. Methods

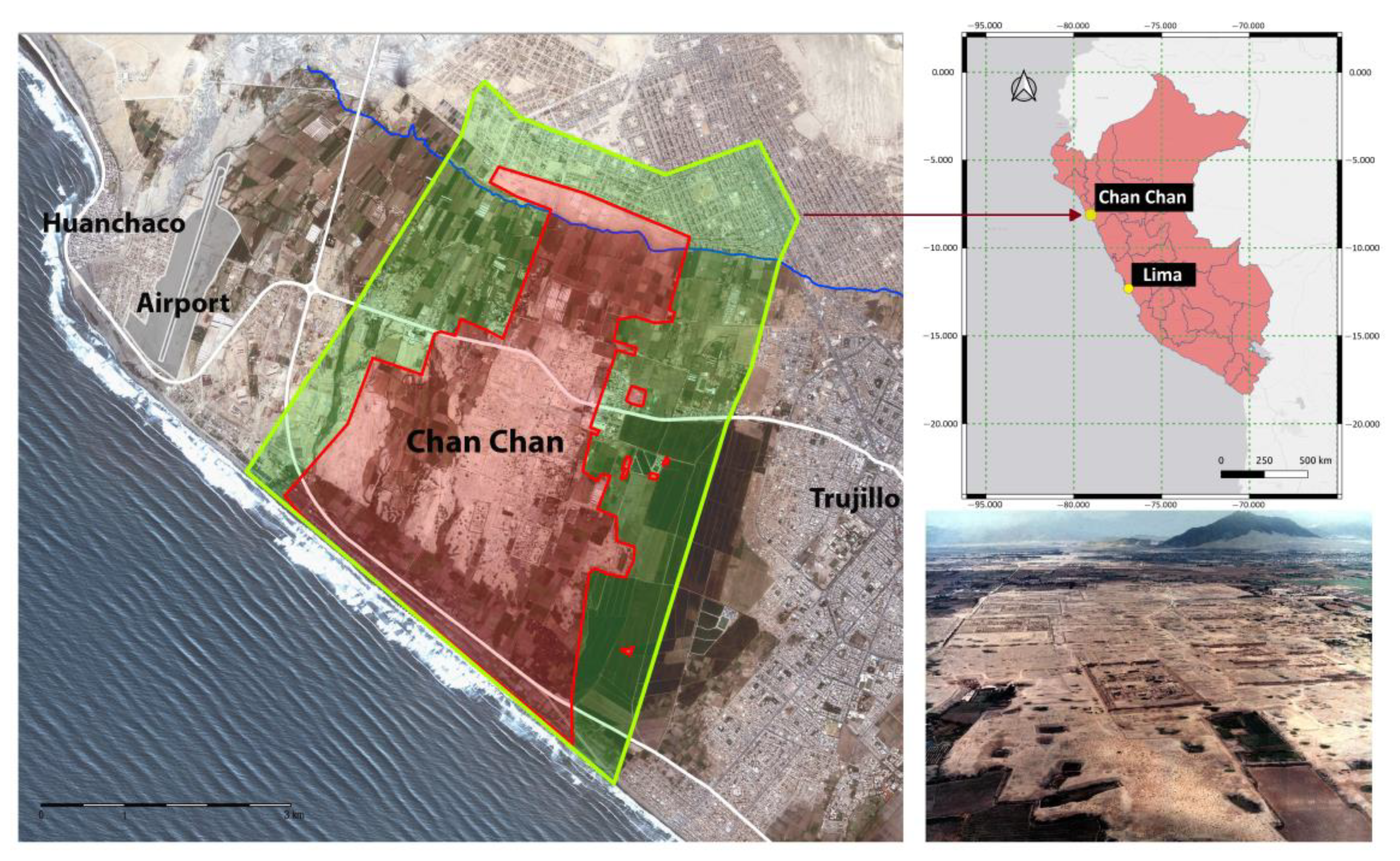

2.1. Study Area

2.2. Dataset

2.3. Network Architecture

2.4. Experimental Protocol and Setting

2.5. Data Augmentation

- Each of the following transformations was applied to the input with a probability of 50%: a horizontal flip around the y-axis (HorizontalFlip), a vertical flip around the x-axis (VerticalFlip), a transposition, and a 90-degree rotation;

- Four complementary strategies, each with an equal probability of being applied to the input data, were implemented to obtain 512 × 512 pixel images: (1) resizing to 512 × 512 pixels, (2) a random 512 × 512 pixel crop, (3) a crop of a random portion of the input measuring between 256 and 768 pixels, followed by resizing to 512 × 512 pixels, and (4) a 15° rotation, followed by a 512 × 512 pixel center crop. For images with dimensions smaller than 768 pixels, padding was applied to the edges when the dimensions were below 512 pixels, and in case (3) the random section was cropped with dimensions ranging from 256 to 512 pixels;

- Three complementary pixel-intensity variation strategies were employed with a 25% probability of application: (1) random brightness and contrast variations, (2) alterations in RGB color representations, and (3) modifications to hue and saturation values.
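The geometric part of the augmentation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names (`random_flips`, `pad_to`, `random_crop`), the use of zero padding on the bottom and right edges, and the NumPy random generator are all assumptions made for the example. Only the four 50% operations and the random-crop strategy (2) are covered; resizing, the 15° rotation, and the intensity variations are left out.

```python
import numpy as np

def random_flips(image, mask, rng, p=0.5):
    """Apply each geometric operation independently with probability p."""
    if rng.random() < p:  # horizontal flip (mirror around the y-axis)
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < p:  # vertical flip (mirror around the x-axis)
        image, mask = image[::-1], mask[::-1]
    if rng.random() < p:  # transposition (swap rows and columns)
        image, mask = image.swapaxes(0, 1), mask.swapaxes(0, 1)
    if rng.random() < p:  # 90-degree rotation in the image plane
        image, mask = np.rot90(image), np.rot90(mask)
    return image, mask

def pad_to(image, mask, size):
    """Zero-pad bottom/right edges so both sides reach `size` (assumed padding mode)."""
    h, w = mask.shape
    ph, pw = max(size - h, 0), max(size - w, 0)
    image = np.pad(image, ((0, ph), (0, pw), (0, 0)))
    mask = np.pad(mask, ((0, ph), (0, pw)))
    return image, mask

def random_crop(image, mask, rng, size=512):
    """Strategy (2): a random size x size crop, padding first if the input is smaller."""
    image, mask = pad_to(image, mask, size)
    h, w = mask.shape
    top = rng.integers(0, h - size + 1)   # upper bound is exclusive
    left = rng.integers(0, w - size + 1)
    window = (slice(top, top + size), slice(left, left + size))
    return image[window], mask[window]
```

The same random decisions are applied to the image and to its label mask, so the building annotations stay aligned with the pixels they describe.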

2.6. Loss Function

2.7. Metrics

3. Results

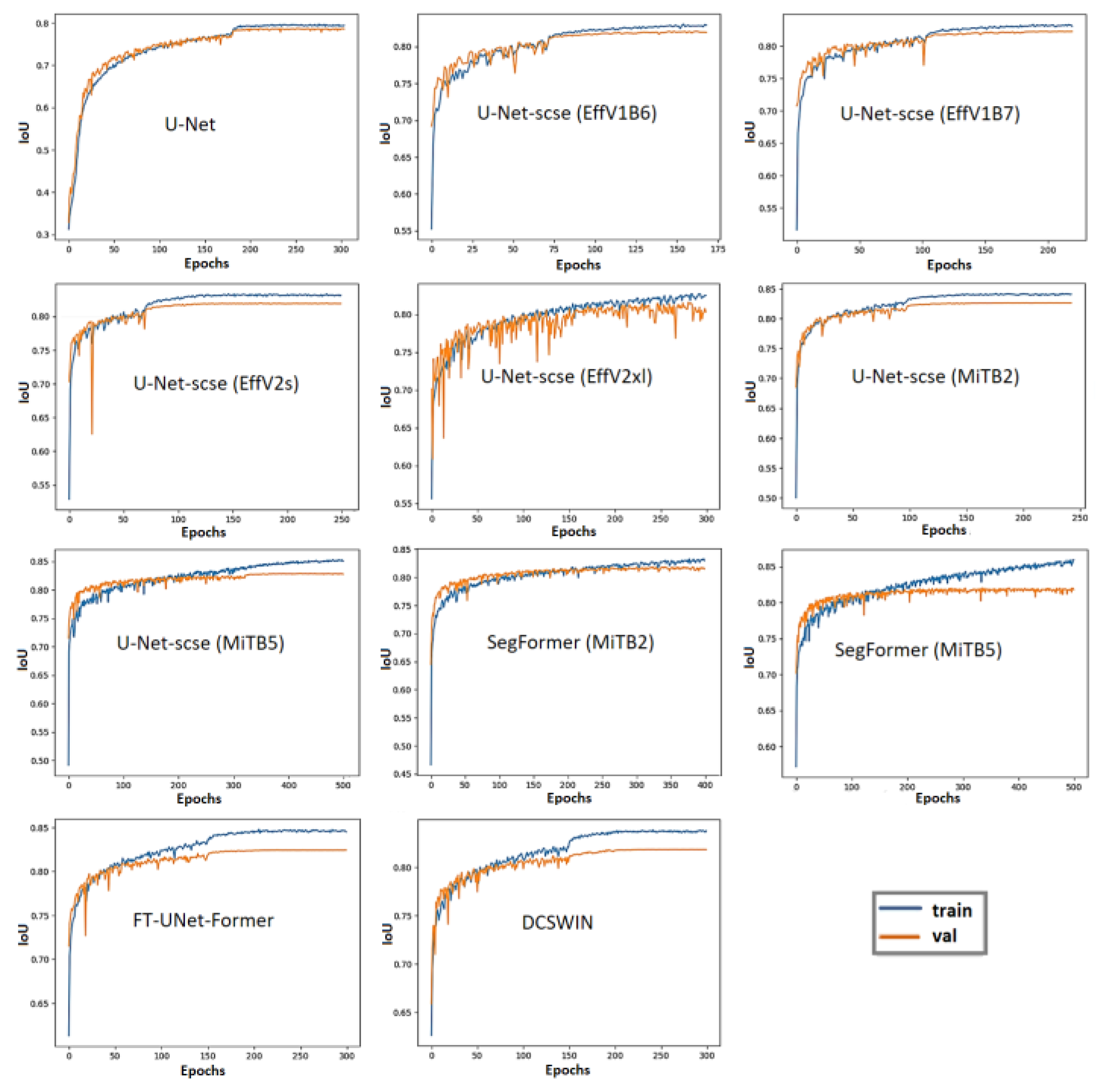

3.1. Quantitative Results

3.2. Qualitative Results in Peruvian Cities

4. Discussion

4.1. Building Semantic Segmentation in Peruvian Cities

4.2. Urban Sprawl Analysis in Chan Chan

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Network Architecture | OpenEarthMap IoU | OpenEarthMap Dice | Chan Chan IoU | Chan Chan Dice |
|---|---|---|---|---|
| U-Net | 0.7746 (0.0127) | 0.8730 (0.0081) | 0.4280 (0.0070) | 0.5994 (0.0068) |
| U-Net-scse (EffV1B0) | 0.8066 (0.0015) | 0.8929 (0.0009) | 0.5748 (0.0232) | 0.7318 (0.0189) |
| U-Net-scse (EffV1B2) | 0.8105 (0.0046) | 0.8954 (0.0028) | 0.5587 (0.0137) | 0.7168 (0.0112) |
| U-Net-scse (EffV1B4) | 0.8153 (0.0037) | 0.8982 (0.0022) | 0.5709 (0.0146) | 0.7267 (0.0118) |
| U-Net-scse (EffV1B6) | 0.8202 (0.0006) | 0.9012 (0.0004) | 0.5797 (0.0349) | 0.7334 (0.0282) |
| U-Net-scse (EffV1B7) | 0.8207 (0.0013) | 0.9015 (0.0008) | 0.5679 (0.0295) | 0.7241 (0.0295) |
| U-Net-scse (EffV2s) | 0.8173 (0.0022) | 0.8995 (0.0014) | 0.5878 (0.0076) | 0.7404 (0.0060) |
| U-Net-scse (EffV2m) | 0.8141 (0.0030) | 0.8975 (0.0018) | 0.5758 (0.0238) | 0.7306 (0.0194) |
| U-Net-scse (EffV2l) | 0.8138 (0.0036) | 0.8973 (0.0022) | 0.5776 (0.0175) | 0.7328 (0.0134) |
| U-Net-scse (EffV2xl) | 0.8077 (0.0074) | 0.8937 (0.0046) | 0.5731 (0.0240) | 0.7273 (0.0197) |
| U-Net-scse (MiTB0) | 0.8064 (0.0022) | 0.8929 (0.0013) | 0.6081 (0.0063) | 0.7563 (0.0049) |
| U-Net-scse (MiTB2) | 0.8229 (0.0023) | 0.9029 (0.0014) | 0.6162 (0.0086) | 0.7630 (0.0071) |
| U-Net-scse (MiTB5) | 0.8284 (0.0016) | 0.9062 (0.0010) | 0.6465 (0.0023) | 0.7853 (0.0017) |
| SegFormer (B0) | 0.7934 (0.0013) | 0.8848 (0.0008) | 0.5837 (0.0090) | 0.7371 (0.0072) |
| SegFormer (B2) | 0.8172 (0.0012) | 0.8994 (0.0007) | 0.6270 (0.0106) | 0.7707 (0.0081) |
| SegFormer (B5) | 0.8190 (0.0012) | 0.9005 (0.0008) | 0.6386 (0.0080) | 0.7794 (0.0060) |
| FT-UNet-Former | 0.8217 (0.0024) | 0.9021 (0.0014) | 0.6100 (0.0097) | 0.7577 (0.0075) |
| DCSWIN | 0.8154 (0.0042) | 0.8983 (0.0026) | 0.5958 (0.0265) | 0.7465 (0.0210) |
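As a reading aid for the IoU and Dice columns, the sketch below computes both scores for a pair of binary building masks. It is a minimal illustration under stated assumptions, not the evaluation code used in the study: the function name `iou_and_dice` and the convention of returning 1.0 when both masks are empty are choices made for this example. For a single pair of masks the two scores are linked by Dice = 2·IoU / (1 + IoU), and the tabulated values appear consistent with that relation (for instance, an IoU of 0.7746 gives a Dice of about 0.8730).

```python
import numpy as np

def iou_and_dice(pred, target):
    """Return (IoU, Dice) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    inter = np.logical_and(pred, target).sum()   # pixels labeled building in both
    union = np.logical_or(pred, target).sum()    # pixels labeled building in either
    total = pred.sum() + target.sum()            # |pred| + |target|
    # Assumed convention: two empty masks count as a perfect match.
    iou = inter / union if union else 1.0
    dice = 2 * inter / total if total else 1.0
    return float(iou), float(dice)
```

Because Dice is a monotonic function of IoU for a single pair of masks, both metrics rank two predictions on the same image identically; Dice simply yields the larger number whenever the overlap is partial.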

| Network Architecture | Batch Size (BS) | Total Parameters (M) | Inference Time (ms) |
|---|---|---|---|
| U-Net | 8 | 31.0 | 11.38 |
| U-Net-scse (EffV1B0) | 16 | 6.3 | 4.29 |
| U-Net-scse (EffV1B2) | 16 | 10.1 | 5.28 |
| U-Net-scse (EffV1B4) | 16 | 20.3 | 6.65 |
| U-Net-scse (EffV1B6) | 8 | 43.9 | 8.81 |
| U-Net-scse (EffV1B7) | 6 | 67.2 | 11.27 |
| U-Net-scse (EffV2s) | 16 | 22.1 | 5.71 |
| U-Net-scse (EffV2m) | 16 | 55.2 | 7.31 |
| U-Net-scse (EffV2l) | 8 | 119.9 | 9.43 |
| U-Net-scse (EffV2xl) | 5 | 209.6 | 11.45 |
| U-Net-scse (MiTB0) | 16 | 5.6 | 3.21 |
| U-Net-scse (MiTB2) | 16 | 27.6 | 5.42 |
| U-Net-scse (MiTB5) | 8 | 84.8 | 10.71 |
| SegFormer (B0) | 16 | 3.7 | 2.17 |
| SegFormer (B2) | 16 | 24.7 | 5.15 |
| SegFormer (B5) | 8 | 90.0 | 12.83 |
| FT-UNet-Former | 8 | 96.0 | 11.69 |
| DCSWIN | 8 | 118.9 | 10.83 |

| Model | OpenEarthMap IoU | OpenEarthMap Dice | Chan Chan IoU | Chan Chan Dice |
|---|---|---|---|---|
| U-Net | 0.7867 | 0.8806 | 0.4453 | 0.6162 |
| U-Net-scse (EffV1B6) | 0.8208 | 0.9016 | 0.6100 | 0.7578 |
| U-Net-scse (EffV1B7) | 0.8222 | 0.9024 | 0.5968 | 0.7475 |
| U-Net-scse (EffV2s) | 0.8194 | 0.9008 | 0.5944 | 0.7456 |
| U-Net-scse (EffV2xl) | 0.8160 | 0.8987 | 0.6038 | 0.7530 |
| U-Net-scse (MiTB2) | 0.8260 | 0.9047 | 0.6263 | 0.7702 |
| U-Net-scse (MiTB5) | 0.8288 | 0.9064 | 0.6503 | 0.7881 |
| SegFormer (MiTB2) | 0.8187 | 0.9003 | 0.6350 | 0.7768 |
| SegFormer (MiTB5) | 0.8203 | 0.9013 | 0.6468 | 0.7855 |
| FT-UNet-Former | 0.8244 | 0.9037 | 0.6150 | 0.7616 |
| DCSWIN | 0.8185 | 0.9002 | 0.6163 | 0.7626 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chicchon, M.; Colosi, F.; Malinverni, E.S.; León Trujillo, F.J. Urban Sprawl Monitoring by VHR Images Using Active Contour Loss and Improved U-Net with Mix Transformer Encoders. Remote Sens. 2025, 17, 1593. https://doi.org/10.3390/rs17091593
