RMVAD-YOLO: A Robust Multi-View Aircraft Detection Model for Imbalanced and Similar Classes

Abstract

1. Introduction

2. Related Work

2.1. Object Detection

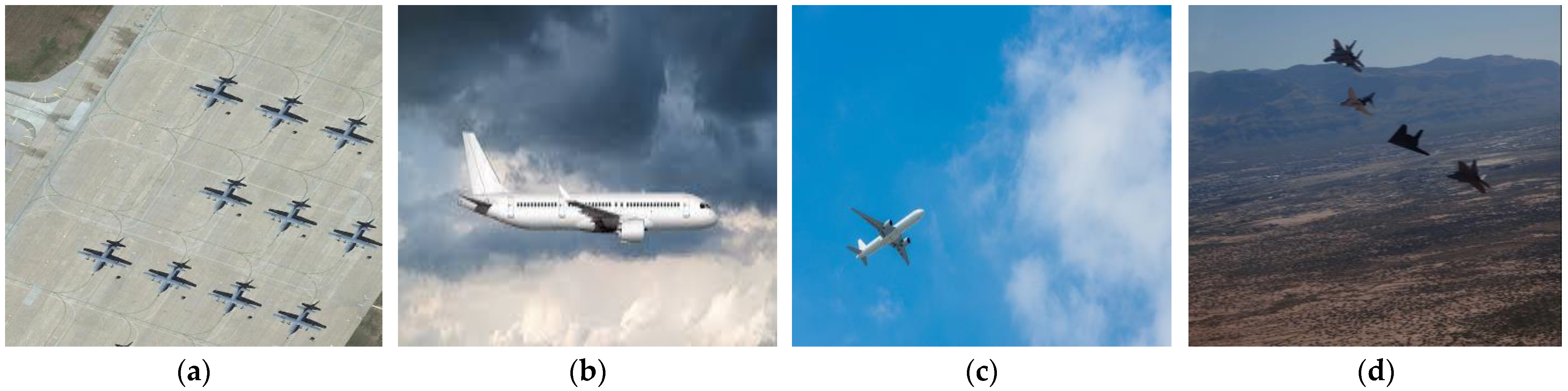

2.2. Aircraft Detection

3. Method

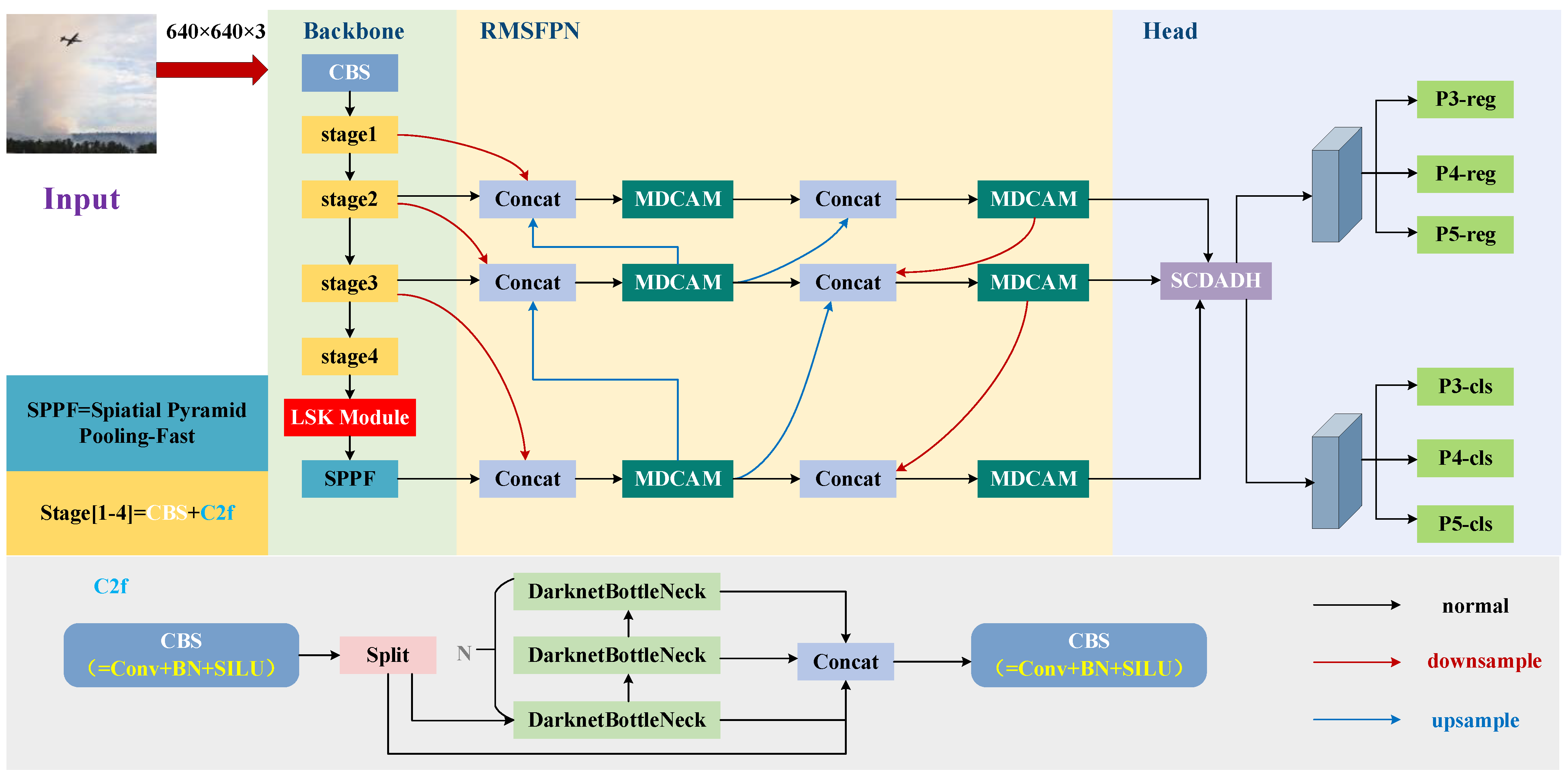

3.1. Introduction to the RMVAD-YOLO Model

3.2. Robust Multi-Link Scale Interactive Feature Pyramid Network

3.2.1. Improvements in the Structure

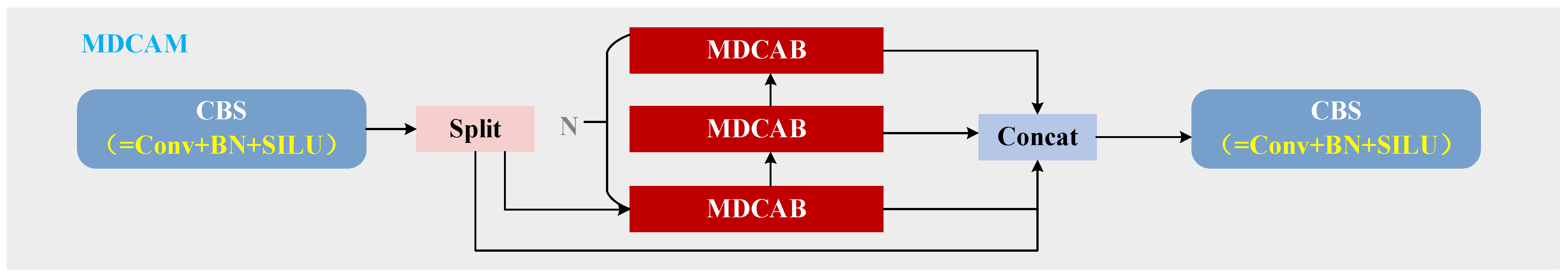

3.2.2. Multi-Scale Deep Convolutional Aggregation Module

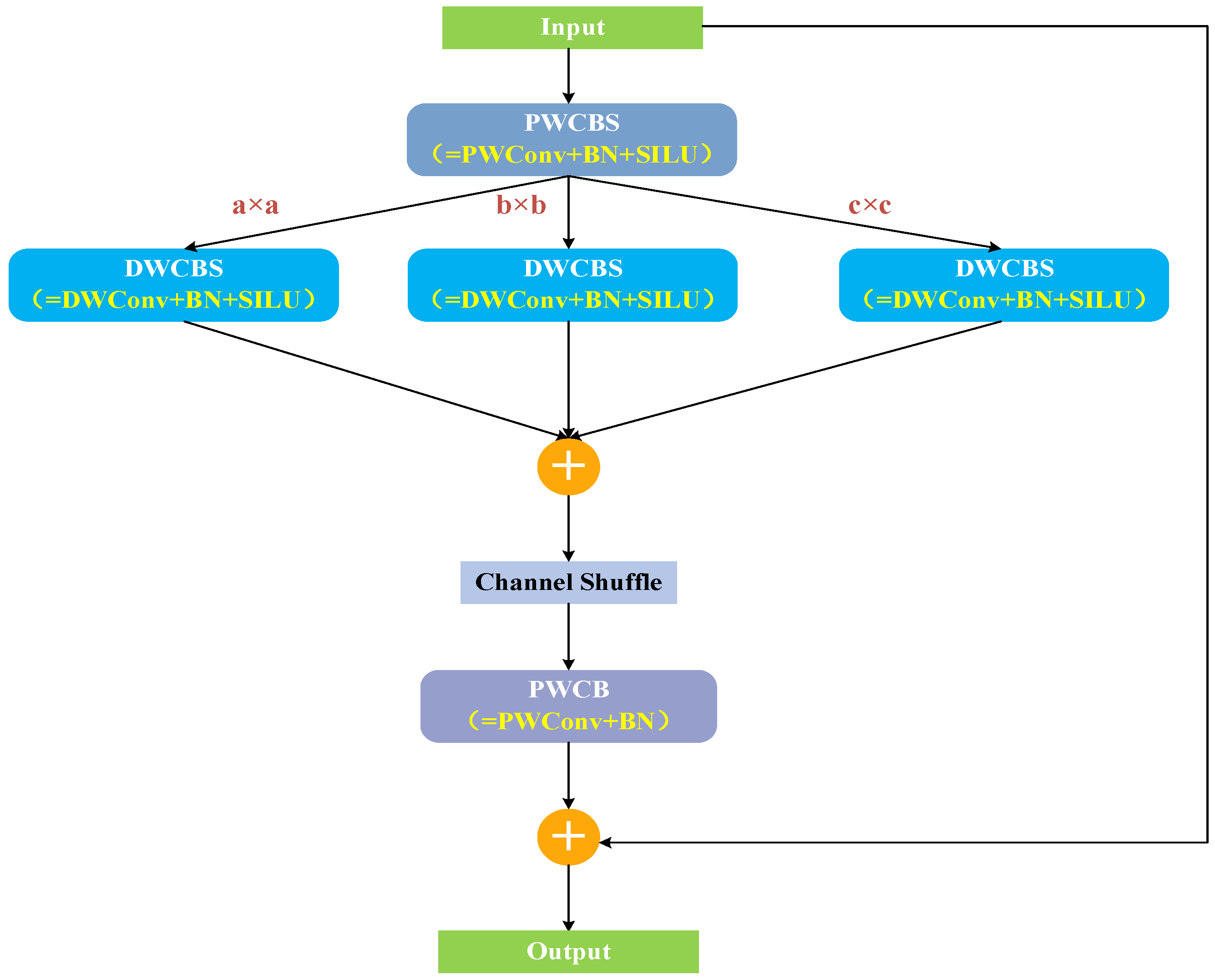

3.2.3. Neck Heterogeneous Kernel Selection Mechanism

3.3. Shared Convolutional Dynamic Alignment Detection Head

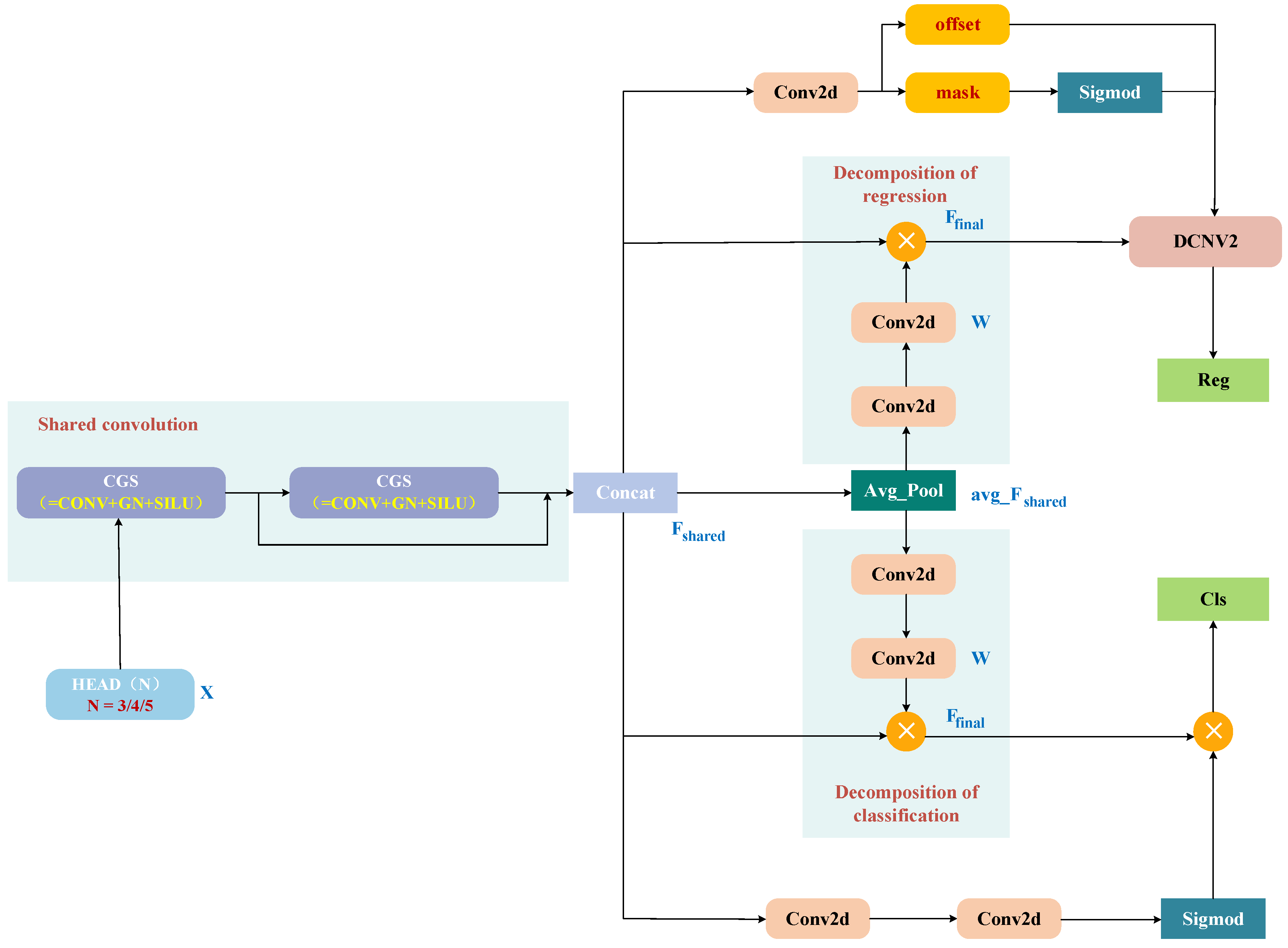

3.4. LSK Module

3.5. Loss Function Optimisation

4. Datasets and Experimental Environment

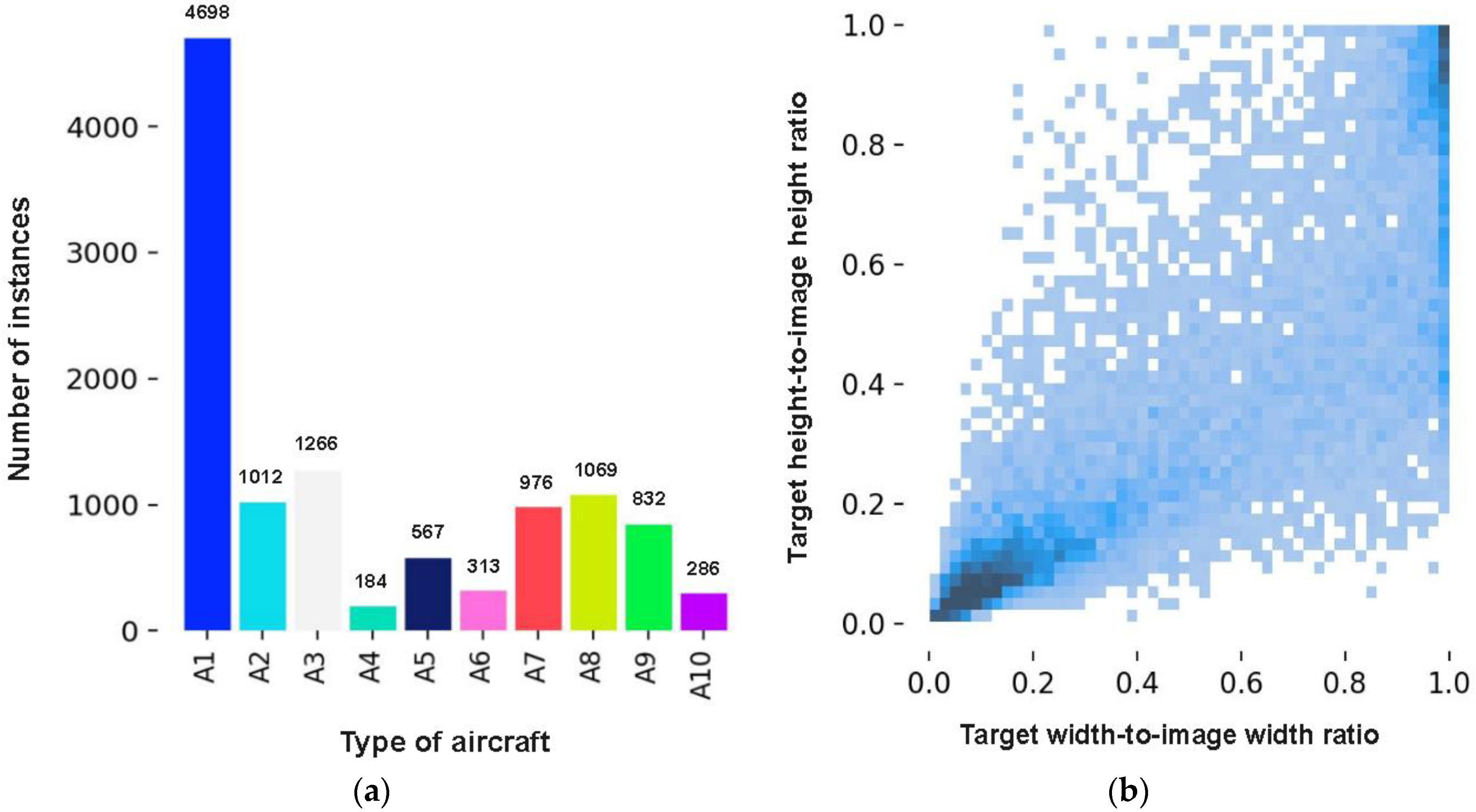

4.1. Dataset

4.2. Experimental Evaluation Indicators

4.3. Experimental Configuration

5. Experiment

5.1. Module Comparison Experiment

5.1.1. Comparison of Feature Pyramid Networks

5.1.2. Comparison of Detection Heads

5.1.3. The Optimal Way to Incorporate the LSK Module

5.1.4. Comparison of Loss Functions

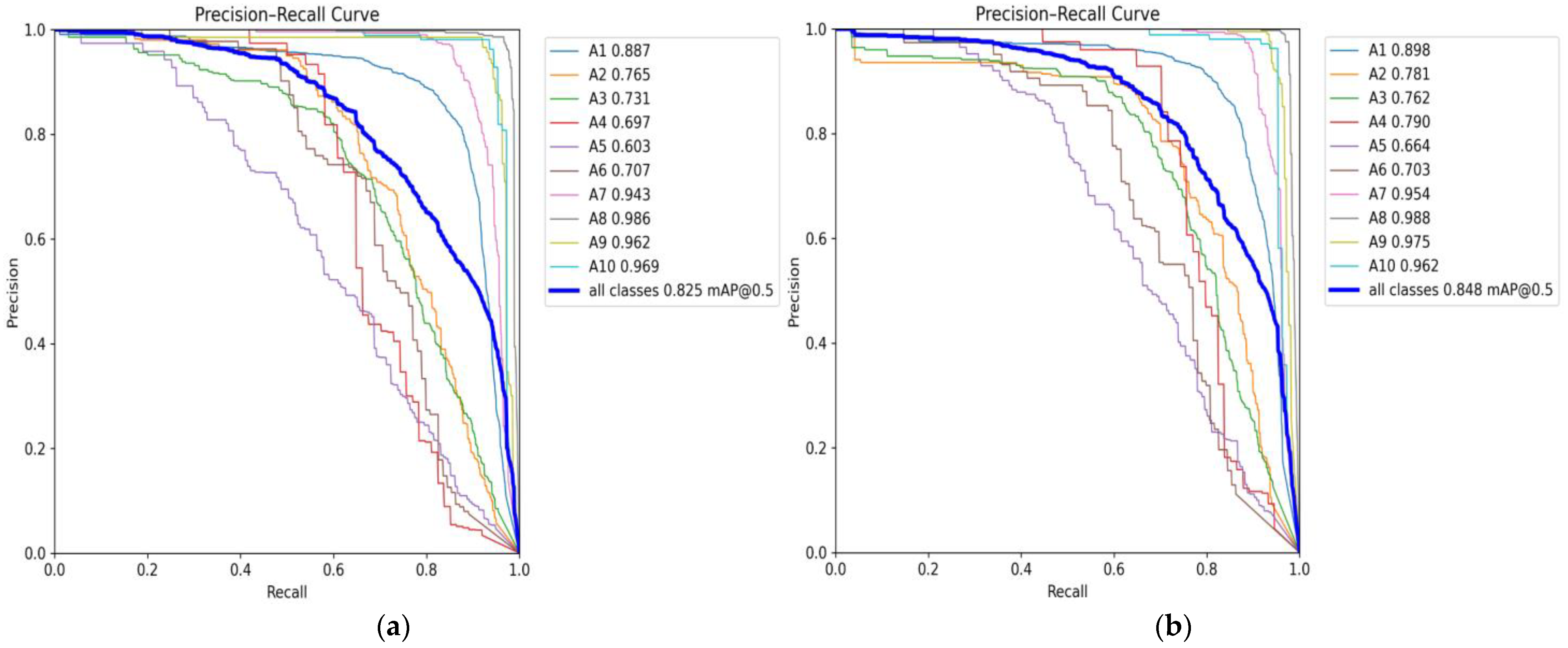

5.2. Ablation Experiment

5.3. Comparison Experiment with Other Models

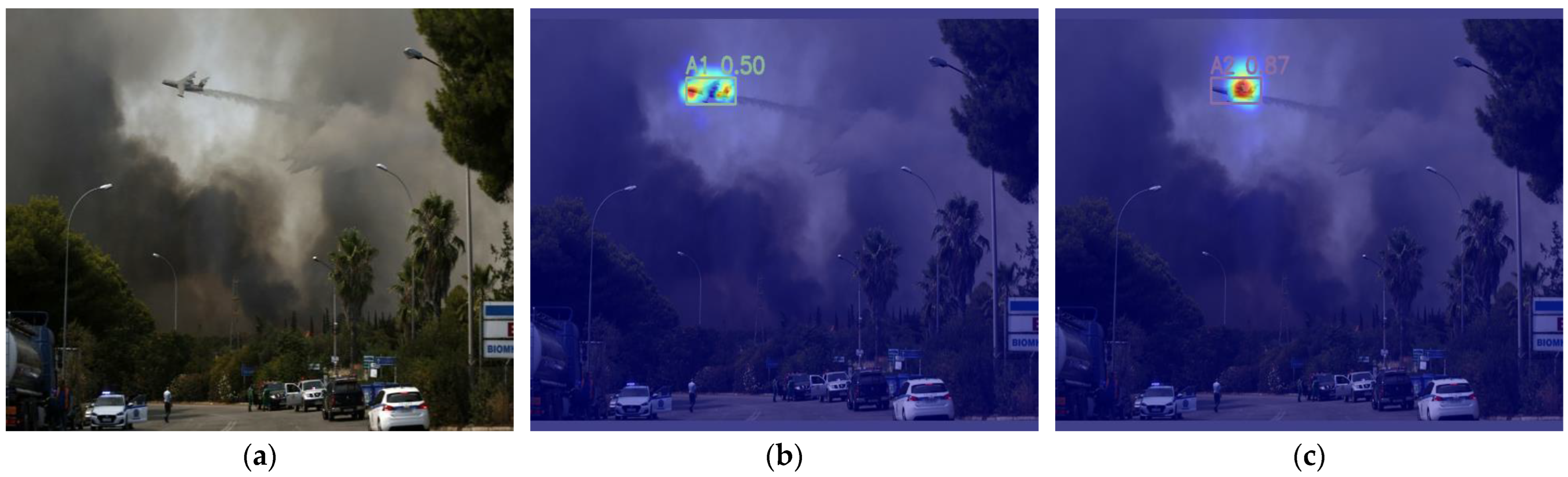

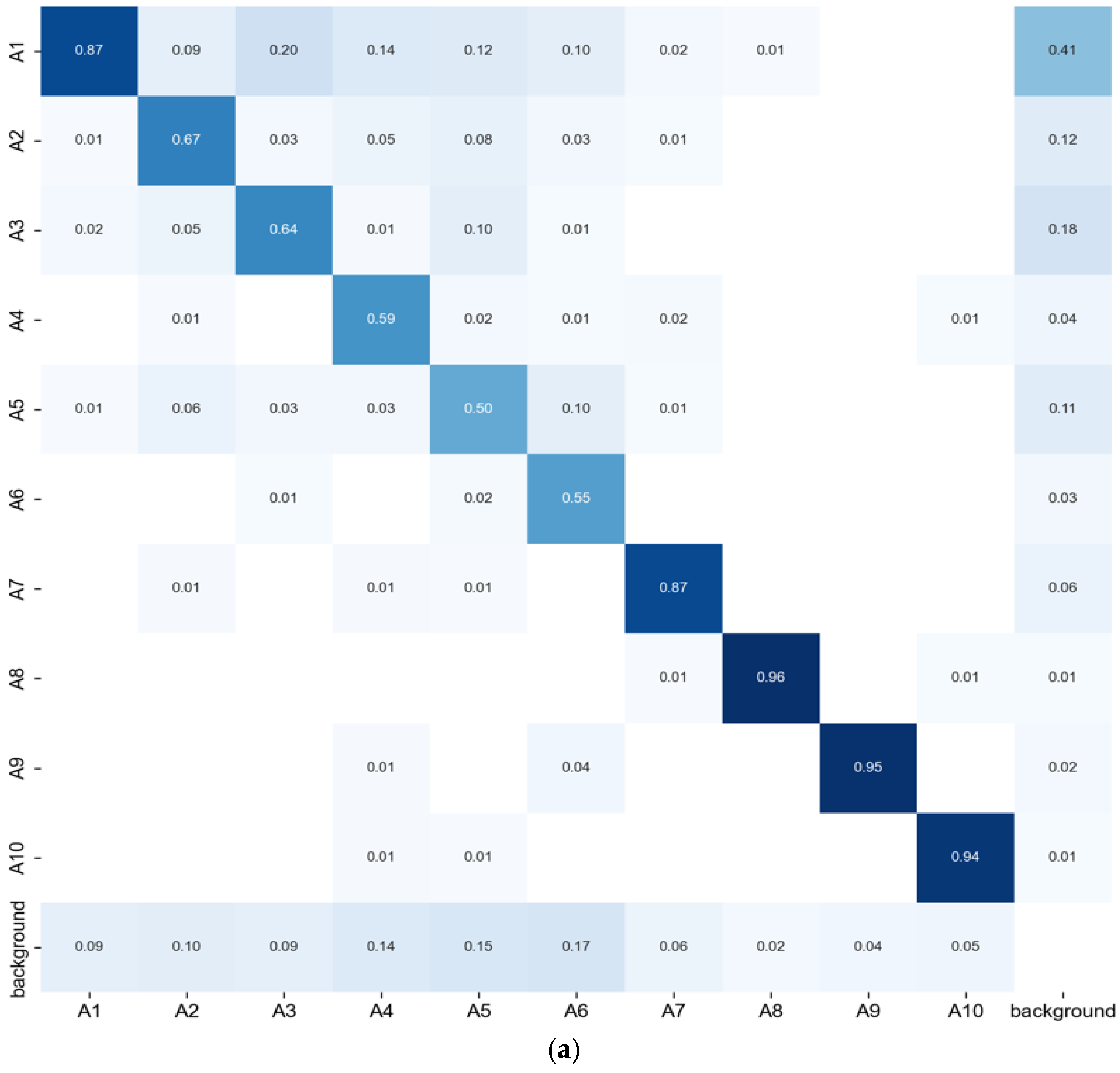

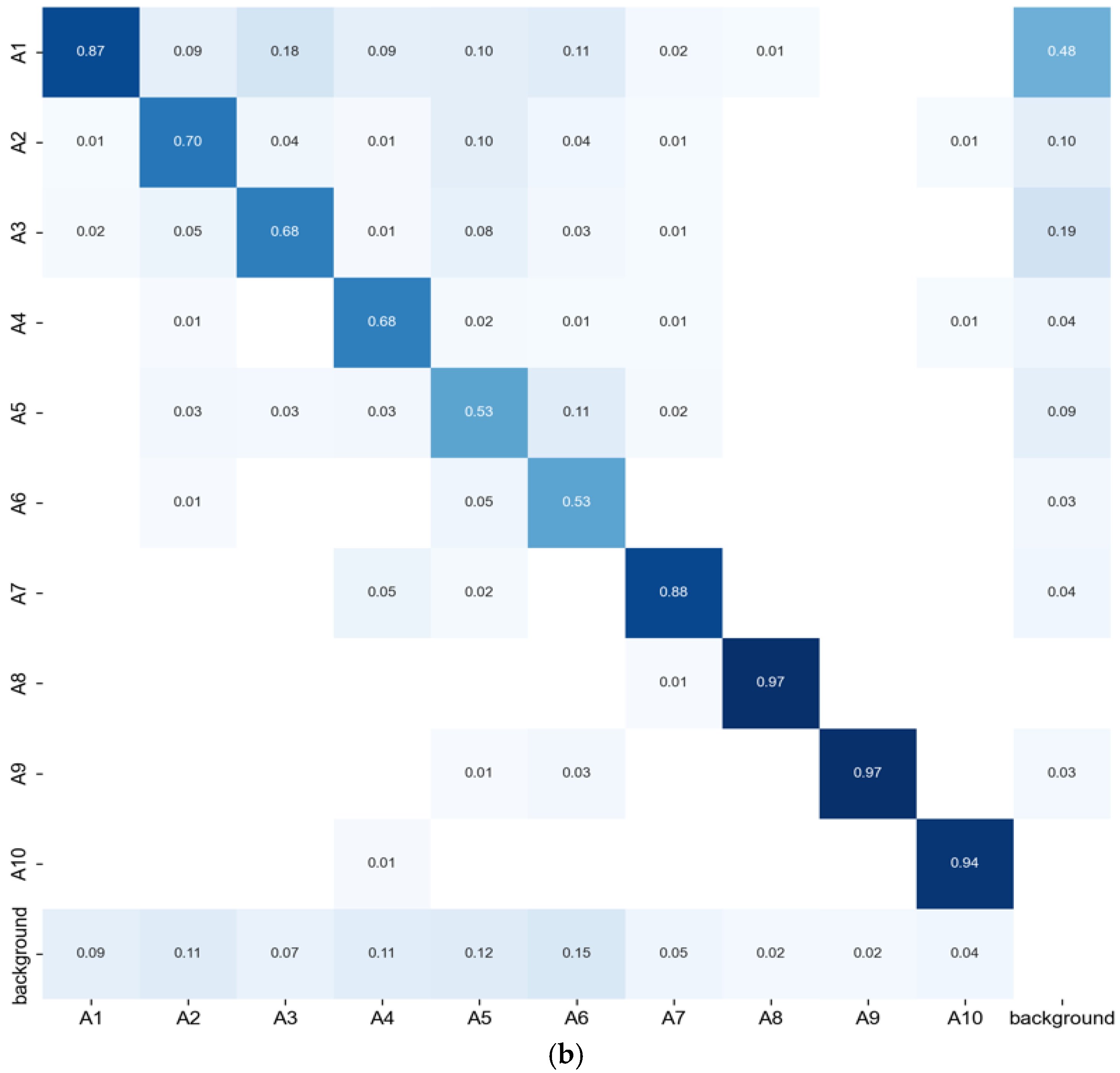

5.4. Visualization Experiment

5.5. Generalization Experiment

6. Discussion

6.1. Impact of Viewpoint Diversity on Accurate Aircraft Category Detection

6.2. Confidence Score Analysis in Occlusion Scenarios

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sandamali, G.G.N.; Su, R.; Sudheera, K.L.K.; Zhang, Y.; Zhang, Y. Two-Stage Scalable Air Traffic Flow Management Model Under Uncertainty. IEEE Trans. Intell. Transp. Syst. 2021, 22, 7328–7340.

- Shao, L.; He, J.; Lu, X.; Hei, B.; Qu, J.; Liu, W. Aircraft Skin Damage Detection and Assessment from UAV Images Using GLCM and Cloud Model. IEEE Trans. Intell. Transp. Syst. 2024, 25, 3191–3200.

- Li, B.; Hu, J.; Fang, L.; Kang, S.; Li, X. A new aircraft classification algorithm based on sum pooling feature with remote sensing image. In Proceedings of the MIPPR 2019: Pattern Recognition and Computer Vision, Wuhan, China, 2–3 November 2019; pp. 361–369.

- Zhang, F.; Du, B.; Zhang, L.; Xu, M. Weakly Supervised Learning Based on Coupled Convolutional Neural Networks for Aircraft Detection. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5553–5563.

- Shi, T.; Gong, J.; Jiang, S.; Zhi, X.; Bao, G.; Sun, Y.; Zhang, W. Complex Optical Remote-Sensing Aircraft Detection Dataset and Benchmark. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5612309.

- Qian, Y.; Pu, X.; Jia, H.; Wang, H.; Xu, F. ARNet: Prior Knowledge Reasoning Network for Aircraft Detection in Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5205214.

- Xu, X.; Chen, Z.; Zhang, X.; Wang, G. Context-Aware Content Interaction: Grasp Subtle Clues for Fine-Grained Aircraft Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5641319.

- Li, Y.; Hou, Q.; Zheng, Z.; Cheng, M.M.; Yang, J.; Li, X. Large Selective Kernel Network for Remote Sensing Object Detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 16748–16759.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023, arXiv:2301.10051.

- Zhang, H.; Zhang, S. Focaler-IoU: More Focused Intersection over Union Loss. arXiv 2024, arXiv:2401.10525.

- Ma, S.; Xu, Y. MPDIoU: A Loss for Efficient and Accurate Bounding Box Regression. arXiv 2023, arXiv:2307.07662.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Bombara, G.; Vasile, C.-I.; Penedo, F.; Yasuoka, H.; Belta, C. A Decision Tree Approach to Data Classification using Signal Temporal Logic. In Proceedings of the 19th International Conference on Hybrid Systems: Computation and Control, Vienna, Austria, 12–14 April 2016; pp. 1–10.

- Freund, Y.; Schapire, R.E. A Decision-Theoretic Generalization of On-Line Learning and an Application to Boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2015, arXiv:1506.02640.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Wang, C.-Y.; Yeh, I.H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

- Xu, C.; Duan, H. Artificial bee colony (ABC) optimized edge potential function (EPF) approach to target recognition for low-altitude aircraft. Pattern Recognit. Lett. 2010, 31, 1759–1772.

- Lin, Y.; He, H.; Yin, Z.; Chen, F. Rotation-Invariant Object Detection in Remote Sensing Images Based on Radial-Gradient Angle. IEEE Geosci. Remote Sens. Lett. 2015, 12, 746–750.

- Yu, Y.; Guan, H.; Zai, D.; Ji, Z. Rotation-and-scale-invariant airplane detection in high-resolution satellite images based on deep-Hough-forests. ISPRS J. Photogramm. Remote Sens. 2016, 112, 50–64.

- Ding, P.; Zhang, Y.; Deng, W.-J.; Jia, P.; Kuijper, A. A light and faster regional convolutional neural network for object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2018, 141, 208–218.

- Yang, J.; Zhu, Y.; Jiang, B.; Gao, L.; Xiao, L.; Zheng, Z. Aircraft detection in remote sensing images based on a deep residual network and Super-Vector coding. Remote Sens. Lett. 2018, 9, 228–236.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wu, J.; Zhao, F.; Jin, Z. LEN-YOLO: A Lightweight Remote Sensing Small Aircraft Object Detection Model for Satellite On-Orbit Detection. J. Real-Time Image Proc. 2025, 22, 25.

- Wu, J.; Zhao, F.; Yao, G.; Jin, Z. FGA-YOLO: A one-stage and high-precision detector designed for fine-grained aircraft recognition. Neurocomputing 2025, 618, 129067.

- Yu, D.; Fang, Z.; Jiang, Y. Alleviating category confusion in fine-grained visual classification. Vis. Comput. 2025, early access.

- Wan, H.; Nurmamat, P.; Chen, J.; Cao, Y.; Wang, S.; Zhang, Y.; Huang, Z. Fine-Grained Aircraft Recognition Based on Dynamic Feature Synthesis and Contrastive Learning. Remote Sens. 2025, 17, 768.

- Yang, B.; Tian, D.; Zhao, S.; Wang, W.; Luo, J.; Pu, H.; Zhou, M.; Pi, Y. Robust Aircraft Detection in Imbalanced and Similar Classes with a Multi-Perspectives Aircraft Dataset. IEEE Trans. Intell. Transp. Syst. 2024, 25, 21442–21454.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Chen, Y.; Yuan, X.; Wu, R.; Wang, J.; Hou, Q.; Cheng, M.-M. YOLO-MS: Rethinking Multi-Scale Representation Learning for Real-time Object Detection. arXiv 2023, arXiv:2308.05480.

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets v2: More Deformable, Better Results. arXiv 2018, arXiv:1811.11168.

- Yang, G.; Lei, J.; Zhu, Z.; Cheng, S.; Feng, Z.; Liang, R. AFPN: Asymptotic Feature Pyramid Network for Object Detection. arXiv 2023, arXiv:2306.15988.

- Chen, Y.; Zhang, C.; Chen, B.; Huang, Y.; Sun, Y.; Wang, C.; Fu, X.; Dai, Y.; Qin, F.; Peng, Y.; et al. Accurate leukocyte detection based on deformable-DETR and multi-level feature fusion for aiding diagnosis of blood diseases. Comput. Biol. Med. 2024, 170, 107917.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Han, K.; Wang, Y. Gold-YOLO: Efficient Object Detector via Gather-and-Distribute Mechanism. arXiv 2023, arXiv:2309.11331.

- Xu, X.; Jiang, Y.; Chen, W.; Huang, Y.; Zhang, Y.; Sun, X. DAMO-YOLO: A Report on Real-Time Object Detection Design. arXiv 2022, arXiv:2211.15444.

- Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A lightweight-design for real-time detector architectures. arXiv 2022, arXiv:2206.02424.

- Yang, Z.; Guan, Q.; Zhao, K.; Yang, J.; Xu, X.; Long, H.; Tang, Y. Multi-Branch Auxiliary Fusion YOLO with Re-parameterization Heterogeneous Convolutional for accurate object detection. arXiv 2024, arXiv:2407.04381.

- Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic Head: Unifying Object Detection Heads with Attentions. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7369–7378.

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and Tracking Meet Drones Challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7380–7399.

- Luo, J.; Liu, Z.; Wang, Y.; Tang, A.; Zuo, H.; Han, P. Efficient Small Object Detection You Only Look Once: A Small Object Detection Algorithm for Aerial Images. Sensors 2024, 24, 7067.

| MDCAM Number | Convolution Kernel Size |
|---|---|
| 1 | 5, 7, 9 |
| 2 | 3, 5, 7 |
| 3 | 1, 3, 5 |
| 4 | 1, 3, 5 |
| 5 | 3, 5, 7 |
| 6 | 5, 7, 9 |
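
The table above lists the kernel sizes used by each of the six Multi-Scale Deep Convolutional Aggregation Module (MDCAM) instances: the sets shrink toward MDCAM 3 and 4 and grow again toward MDCAM 6. The module's internal wiring is not described in this excerpt, so the following is only a minimal pure-Python sketch under stated assumptions: each kernel size becomes one parallel (assumed depthwise) branch with zero "same" padding of k // 2, the branch outputs are summed, and box-filter weights stand in for learned weights. The names `MDCAM_KERNELS`, `depthwise_conv2d_same`, and `mdcam_aggregate` are hypothetical.

```python
# Kernel sizes per MDCAM instance, copied from the table above.
MDCAM_KERNELS = {
    1: (5, 7, 9), 2: (3, 5, 7), 3: (1, 3, 5),
    4: (1, 3, 5), 5: (3, 5, 7), 6: (5, 7, 9),
}


def depthwise_conv2d_same(x, kernel):
    """Slide a k x k kernel over one channel with zero padding k // 2.

    Computes cross-correlation (what deep-learning frameworks call
    convolution); for odd k the output keeps the height and width of x.
    """
    k = len(kernel)
    pad = k // 2
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    r, c = i + u - pad, j + v - pad
                    if 0 <= r < h and 0 <= c < w:  # outside positions are zero padding
                        acc += kernel[u][v] * x[r][c]
            out[i][j] = acc
    return out


def mdcam_aggregate(x, module_id):
    """Hypothetical MDCAM fusion: one branch per kernel size, outputs summed."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for k in MDCAM_KERNELS[module_id]:
        box = [[1.0 / (k * k)] * k for _ in range(k)]  # placeholder for learned weights
        branch = depthwise_conv2d_same(x, box)
        for i in range(h):
            for j in range(w):
                out[i][j] += branch[i][j]
    return out


feature = [[1.0] * 9 for _ in range(9)]  # toy 9 x 9 single-channel map
fused = mdcam_aggregate(feature, module_id=3)
```

On a constant map, each box-filtered branch returns 1.0 wherever its window lies fully inside the map, so the centre of `fused` is 3.0 (three branches), while border pixels are smaller because part of each window falls on the zero padding.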

| Parameters | Value | Parameters | Value |
|---|---|---|---|
| Epochs | 200 | Optimizer | SGD |
| Batch size | 16 | Learning rate | 0.01 |
| Image size | 640×640 | Warmup epochs | 3 |
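
The configuration table gives the initial learning rate (0.01), the number of warm-up epochs (3), the total number of epochs (200), and the batch size (16), but not the shape of the learning-rate schedule. The sketch below assumes a linear warm-up followed by a linear decay to `lr0 * final_factor`; the `final_factor` of 0.01, the decay shape, and the dataset size passed to the hypothetical `iterations_per_epoch` helper are assumptions, not values from the paper.

```python
import math


def iterations_per_epoch(num_images, batch_size=16):
    """Optimizer steps per epoch when the last partial batch is kept."""
    return math.ceil(num_images / batch_size)


def learning_rate(iteration, iters_per_epoch, lr0=0.01, epochs=200,
                  warmup_epochs=3, final_factor=0.01):
    """Learning rate at a 0-based global iteration index.

    Assumed schedule: linear warm-up over `warmup_epochs` epochs, then linear
    decay from lr0 to lr0 * final_factor at the last training step.
    """
    warmup_iters = warmup_epochs * iters_per_epoch
    total_iters = epochs * iters_per_epoch
    if iteration < warmup_iters:
        # Ramps from lr0 / warmup_iters up to lr0 at the last warm-up step.
        return lr0 * (iteration + 1) / warmup_iters
    progress = (iteration - warmup_iters) / max(1, total_iters - warmup_iters - 1)
    return lr0 * (1.0 - (1.0 - final_factor) * progress)


steps = iterations_per_epoch(1000)  # hypothetical dataset of 1000 images
schedule = [learning_rate(t, steps) for t in range(200 * steps)]
```

Linear warm-up is a common way to keep early SGD steps from diverging while batch-normalisation statistics and the randomly initialised layers settle; the decay shape after warm-up is the part most likely to differ from the authors' setup.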

| FPN | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | Params/M | GFLOPs |
|---|---|---|---|---|---|---|
| PANet (base) | 87.5 | 73.5 | 82.5 | 69.3 | 3.01 | 8.1 |
| AFPN [47] | 84.6 | 72 | 79.7 | 67.3 | 2.60 | 8.4 |
| HSFPN [48] | 83.2 | 73.3 | 80.6 | 66.2 | 1.94 | 6.9 |
| BIFPN [49] | 83 | 75.8 | 82.1 | 68.2 | 1.99 | 7.1 |
| Gold-YOLO [50] | 82.9 | 75.9 | 82.4 | 69.2 | 5.98 | 10.3 |
| GFPN [51] | 83.2 | 75.3 | 82.5 | 69 | 3.26 | 8.3 |
| Slim-neck [52] | 83.2 | 76.8 | 82.8 | 70.5 | 2.80 | 7.3 |
| MAFPN [53] | 84.2 | 76 | 83.3 | 70 | 2.99 | 8.7 |
| RMSFPN (ours) | 85.7 (↓1.8) | 75.3 (↑1.8) | 83.5 (↑1.0) | 70.6 (↑1.3) | 2.91 (↓0.1) | 8.4 (↑0.3) |
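
The P, R, mAP@0.5, and mAP@0.5:0.95 columns follow the usual detection definitions. As a hedged reference sketch (the exact evaluation code is not given in this excerpt): precision is TP / (TP + FP), recall is TP / (TP + FN), AP for one class at one IoU threshold is the area under the precision-recall curve (here with all-point interpolation, one common convention), mAP@0.5 averages AP over classes at IoU 0.5, and mAP@0.5:0.95 additionally averages over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. Matching detections to ground truth (producing `is_tp`) is assumed to have been done already; all function names are illustrative.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from TP, FP, and FN counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class at one IoU threshold.

    scores: confidence of each detection; is_tp: whether that detection was
    matched to a not-yet-matched ground-truth box; num_gt: ground-truth count.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Add sentinels, then make precision non-increasing as recall grows.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; segments where recall does not change add zero.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


# IoU thresholds averaged by mAP@0.5:0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

ap_example = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
```

In the example, the first detection reaches recall 0.5 at precision 1.0 and the third reaches recall 1.0 at precision 2/3, so AP = 0.5 × 1.0 + 0.5 × 2/3 = 5/6.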

| Detection Head | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | Params/M | GFLOPs |
|---|---|---|---|---|---|---|
| Base | 85.7 | 75.3 | 83.5 | 70.6 | 2.91 | 8.4 |
| DyHead | 85.2 | 77.2 | 84.1 | 71.9 | 4.17 | 14 |
| SCDADH (ours) | 84.7 (↓1.0) | 77.7 (↑2.4) | 83.4 (↓0.1) | 71.1 (↑0.5) | 2.60 (↓0.31) | 8.8 (↑0.4) |
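
In the detection-head table, the model with SCDADH has 2.60 M parameters versus 2.91 M with the base head, yet 8.8 versus 8.4 GFLOPs. The head's internals are not given in this excerpt; assuming, as its name suggests, that convolution weights are shared across pyramid levels, the arithmetic below illustrates why sharing cuts head parameters by roughly the number of levels while leaving per-level compute unchanged. The channel width of 64, the two stacked 3×3 convolutions, and the 80/40/20 feature-map sizes (strides 8, 16, and 32 at 640×640 input) are illustrative assumptions, and `head_cost` is a hypothetical helper.

```python
def conv_params(c_in, c_out, k, bias=True):
    """Weights (k * k * c_in * c_out) plus one bias per output channel."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def conv_macs(h, w, c_in, c_out, k):
    """Multiply-accumulates of a stride-1 'same' convolution on an h x w map."""
    return h * w * k * k * c_in * c_out


def head_cost(channels, level_sizes, shared, convs_per_level=2, k=3):
    """Parameters and MACs of a stack of k x k convs applied at every level.

    shared=True: one conv stack is reused at all levels (weights counted once).
    shared=False: every level owns a separate copy of the stack.
    """
    stack_params = convs_per_level * conv_params(channels, channels, k)
    params = stack_params if shared else stack_params * len(level_sizes)
    # Compute is identical either way: the stack still runs on every level.
    macs = sum(convs_per_level * conv_macs(s, s, channels, channels, k)
               for s in level_sizes)
    return params, macs


LEVELS = [80, 40, 20]  # 640 / 8, 640 / 16, 640 / 32
separate = head_cost(64, LEVELS, shared=False)
shared = head_cost(64, LEVELS, shared=True)
```

Sharing weights usually also requires projecting every level to a common channel width first, and implementations often add a learnable per-level scale; neither is modelled here. The small GFLOPs increase in the table presumably comes from other parts of SCDADH that this sketch does not cover.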

| Method | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | Params/M | GFLOPs |
|---|---|---|---|---|---|---|
| Base | 84.7 | 77.7 | 83.4 | 71.1 | 2.60 | 8.8 |
| A | 85.1 | 75.1 | 81.2 | 66.4 | 2.77 | 9.4 |
| B | 85.4 | 76.4 | 83.2 | 70.7 | 2.72 | 8.9 |
| C (ours) | 86.9 (↑2.2) | 77.8 (↑0.1) | 84.2 (↑0.8) | 71.3 (↑0.2) | 2.72 (↑0.12) | 8.9 (↑0.1) |
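
The LSK module comes from LSKNet [8]. In that design, a large receptive field is built by stacking depthwise convolutions of growing kernel size and dilation, and a spatial selection step weights the branch outputs per pixel with sigmoid-activated attention maps (which LSKNet computes from channel-wise average- and max-pooled features). Which insertion point each of placements A, B, and C denotes is not described in this excerpt. The sketch below covers only two pieces: the receptive-field arithmetic for stride-1 layers, RF = 1 + sum of d × (k − 1), and the per-pixel selection given attention logits that are taken as inputs here rather than produced by a convolution. Function names are hypothetical.

```python
import math


def receptive_field(layers):
    """Receptive field of stacked stride-1 convolutions given (kernel, dilation) pairs."""
    rf = 1
    for kernel, dilation in layers:
        rf += dilation * (kernel - 1)
    return rf


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def spatial_select(small_branch, large_branch, logits_small, logits_large):
    """Per-pixel weighted sum of two branch outputs (flattened, equal-length lists).

    Each branch has its own sigmoid gate, so the two weights need not sum to 1.
    In LSKNet the fused map is further projected and multiplied element-wise
    with the module input; that step is omitted here.
    """
    return [sigmoid(a) * s + sigmoid(b) * lg
            for s, lg, a, b in zip(small_branch, large_branch, logits_small, logits_large)]


rf_two_stage = receptive_field([(5, 1), (7, 3)])  # 1 + 4 + 18 = 23
fused = spatial_select([2.0, 2.0], [4.0, 4.0], [0.0, 10.0], [0.0, -10.0])
```

With zero logits both gates are 0.5, so the first pixel becomes 0.5 × 2 + 0.5 × 4 = 3.0; with logits of +10 and −10 the second pixel is dominated by the small-kernel branch.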

| Loss Function | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | A10 |
|---|---|---|---|---|---|---|---|---|---|---|
| CIoU | 90.1 | 79.6 | 74.4 | 75.2 | 64.5 | 69.8 | 94.9 | 98.8 | 98.4 | 96.1 |
| WFMIoUv3 | 89.8 | 78.1 | 76.2 | 79.0 | 66.4 | 70.3 | 95.4 | 98.8 | 97.5 | 96.2 |
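
The table above compares CIoU with WFMIoUv3 per class. Judging by its name and the cited Wise-IoU [9], Focaler-IoU [10], and MPDIoU [11] losses, WFMIoUv3 appears to combine those three ideas, but the exact combination is not given in this excerpt. The pure-Python sketch below implements each ingredient: MPDIoU's squared corner-distance penalty normalised by w² + h² of the input image, Focaler-IoU's linear remapping of IoU from an interval [d, u] to [0, 1] (with the Focaler-MPDIoU form L_MPDIoU + IoU − IoU_focaler), and the Wise-IoU v3 non-monotonic focusing gain r = β / (δ·α^(β−δ)) with the commonly used α = 1.9 and δ = 3. Multiplying the gain onto the Focaler-MPDIoU loss, the interval d = 0 and u = 0.95, and all function names are assumptions.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def mpdiou(pred, gt, img_w, img_h):
    """IoU minus squared top-left and bottom-right corner distances over w^2 + h^2."""
    d1 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    d2 = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2
    norm = img_w ** 2 + img_h ** 2
    return box_iou(pred, gt) - d1 / norm - d2 / norm


def focaler(iou, d=0.0, u=0.95):
    """Linearly remap IoU from [d, u] to [0, 1], clamping outside that interval."""
    if iou < d:
        return 0.0
    if iou > u:
        return 1.0
    return (iou - d) / (u - d)


def wiou_v3_gain(loss_iou, mean_loss_iou, alpha=1.9, delta=3.0):
    """Focusing coefficient r = beta / (delta * alpha ** (beta - delta)).

    beta (outlier degree) is this box's IoU loss over a running mean of IoU
    losses; the mean is treated as a constant (not back-propagated).
    """
    beta = loss_iou / mean_loss_iou
    return beta / (delta * alpha ** (beta - delta))


def hypothetical_wfm_loss(pred, gt, img_w, img_h, mean_loss_iou):
    """Assumed combination: Wise-IoU v3 gain times the Focaler-MPDIoU loss."""
    iou = box_iou(pred, gt)
    focaler_mpdiou = (1.0 - mpdiou(pred, gt, img_w, img_h)) + iou - focaler(iou)
    return wiou_v3_gain(1.0 - iou, mean_loss_iou) * focaler_mpdiou
```

A perfectly aligned box gets zero loss, and a box whose IoU loss is exactly δ = 3 times the running mean gets gain 1, which is where Wise-IoU v3's gain curve is anchored.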

| Group | Base | A | B | C | D | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | Params/M | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | √ | | | | | 87.5 | 73.5 | 82.5 | 69.3 | 3.01 | 8.1 |
| 2 | √ | √ | | | | 85.7 | 75.3 | 83.5 | 70.6 | 2.91 | 8.4 |
| 3 | √ | | √ | | | 83.6 | 76.2 | 82.9 | 70 | 2.24 | 8.6 |
| 4 | √ | | | √ | | 83.1 | 77.7 | 83.7 | 70.7 | 3.12 | 8.2 |
| 5 | √ | | | | √ | 85.5 | 75.9 | 83.3 | 68.8 | 3.01 | 8.1 |
| 6 | √ | √ | √ | | | 84.7 | 77.7 | 83.4 | 71.1 | 2.60 | 8.8 |
| 7 | √ | √ | √ | √ | | 86.9 | 77.8 | 84.2 | 71.3 | 2.72 | 8.9 |
| 8 (ours) | √ | √ | √ | √ | √ | 90.1 (↑2.6) | 76 (↑2.5) | 84.8 (↑2.3) | 70.5 (↑1.2) | 2.72 (↓0.29) | 8.9 (↑0.8) |
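
The arrows in the last row of the ablation table are absolute differences from group 1, the baseline: for example, 90.1 − 87.5 = 2.6 points of precision and 2.72 − 3.01 = −0.29 M parameters. The module comparison tables use the same convention against their "Base" row. A small helper such as the hypothetical `annotate` below recomputes these labels, rounding to the table's precision so floating-point noise does not leak into the printed values.

```python
def annotate(value, baseline, digits=1):
    """Format `value` with an arrow showing its absolute change from `baseline`."""
    diff = round(value - baseline, digits)
    if diff > 0:
        arrow = "↑"
    elif diff < 0:
        arrow = "↓"
    else:
        return f"{value} (no change)"
    return f"{value} ({arrow}{abs(diff):.{digits}f})"


# Group 1 (baseline) and group 8 (full model) from the ablation table.
baseline = {"P": 87.5, "R": 73.5, "mAP50": 82.5, "mAP50_95": 69.3, "params": 3.01, "gflops": 8.1}
ours = {"P": 90.1, "R": 76, "mAP50": 84.8, "mAP50_95": 70.5, "params": 2.72, "gflops": 8.9}
precision_digits = {"params": 2}  # parameters are reported to two decimals

row = {key: annotate(ours[key], baseline[key], precision_digits.get(key, 1)) for key in ours}
```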

| Model | P/% | R/% | mAP@0.5/% | Params/M | GFLOPs |
|---|---|---|---|---|---|
| YOLOv5s | 82.6 | 76.3 | 81.2 | 7.04 | 15.8 |
| YOLOv6n | 75.6 | 71 | 80.1 | 4.63 | 11.34 |
| YOLOv8n | 87.5 | 73.5 | 82.5 | 3.01 | 8.1 |
| YOLOv9t | 80.5 | 76.2 | 82.3 | 2.62 | 10.7 |
| YOLOv10n | 84.2 | 73.3 | 80.5 | 2.70 | 8.2 |
| YOLOv11n | 83 | 74.7 | 81.9 | 2.58 | 6.3 |
| Yang et al. | 88.5 | 74.1 | 83.4 | - | - |
| RMVAD-YOLO (ours) | 90.1 | 76 | 84.8 | 2.72 | 8.9 |

| Model | P/% | R/% | mAP@0.5/% | Params/M | GFLOPs |
|---|---|---|---|---|---|
| RT-DETR-x | 90.8 | 79.8 | 82.6 | 64.59 | 222.5 |
| RT-DETR-l | 90.3 | 79.4 | 82.2 | 32.00 | 103.5 |
| RT-DETR-ResNet50 | 90.8 | 82.3 | 84.3 | 41.96 | 125.7 |
| RMVAD-YOLO (ours) | 90.1 | 76 | 84.8 | 2.72 | 8.9 |
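
The RT-DETR comparison mixes accuracy and cost, and dividing the cost columns makes the trade-off explicit. Using only the numbers in the table above, the quick check below computes how many times more parameters and GFLOPs each RT-DETR variant needs than RMVAD-YOLO, together with the mAP@0.5 gap (positive means RMVAD-YOLO scores higher). The dictionary names are illustrative.

```python
RMVAD = {"map50": 84.8, "params": 2.72, "gflops": 8.9}
RT_DETR = {
    "RT-DETR-x": {"map50": 82.6, "params": 64.59, "gflops": 222.5},
    "RT-DETR-l": {"map50": 82.2, "params": 32.00, "gflops": 103.5},
    "RT-DETR-ResNet50": {"map50": 84.3, "params": 41.96, "gflops": 125.7},
}

summary = {}
for name, m in RT_DETR.items():
    summary[name] = {
        # How many times larger the RT-DETR variant is than RMVAD-YOLO.
        "params_ratio": round(m["params"] / RMVAD["params"], 1),
        "gflops_ratio": round(m["gflops"] / RMVAD["gflops"], 1),
        # mAP@0.5 advantage of RMVAD-YOLO, in percentage points.
        "map50_gap": round(RMVAD["map50"] - m["map50"], 1),
    }
```

By these figures, RMVAD-YOLO uses roughly 12 to 24 times fewer parameters and 12 to 25 times fewer GFLOPs while scoring 0.5 to 2.6 points higher in mAP@0.5, although all three RT-DETR variants report higher precision and recall.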

| Model | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | Params/M |
|---|---|---|---|---|---|
| YOLOv8n | 37.9 | 28.8 | 26.3 | 14.5 | 3.01 |
| RMVAD-YOLO-n | 40.8 | 31 | 28.8 | 16.2 | 2.72 |
| YOLOv8s | 45 | 33.4 | 31.7 | 17.8 | 11.13 |
| RMVAD-YOLO-s | 46.3 | 35.6 | 33.8 | 19.3 | 10.61 |
| YOLOv8m | 46.2 | 36.1 | 34.1 | 19.7 | 25.85 |
| RMVAD-YOLO-m | 48.5 | 37.6 | 35.8 | 20.6 | 24.28 |
| YOLOv8l | 48.1 | 37.2 | 35.4 | 20.6 | 43.61 |
| RMVAD-YOLO-l | 50 | 39 | 37.5 | 21.8 | 42.04 |

| Model | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | Params/M | GFLOPs |
|---|---|---|---|---|---|---|
| ESOD-YOLO | - | - | 29.3 | 16.6 | 4.47 | 14.3 |
| RMVAD-YOLO | 40.8 | 31 | 28.8 | 16.2 | 2.72 | 8.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Li, K.; Zheng, X.; Bi, J.; Zhang, G.; Cui, Y.; Lei, T. RMVAD-YOLO: A Robust Multi-View Aircraft Detection Model for Imbalanced and Similar Classes. Remote Sens. 2025, 17, 1001. https://doi.org/10.3390/rs17061001
