Automated Detection of Araucaria angustifolia (Bertol.) Kuntze in Urban Areas Using Google Earth Images and YOLOv7x

Abstract

1. Introduction

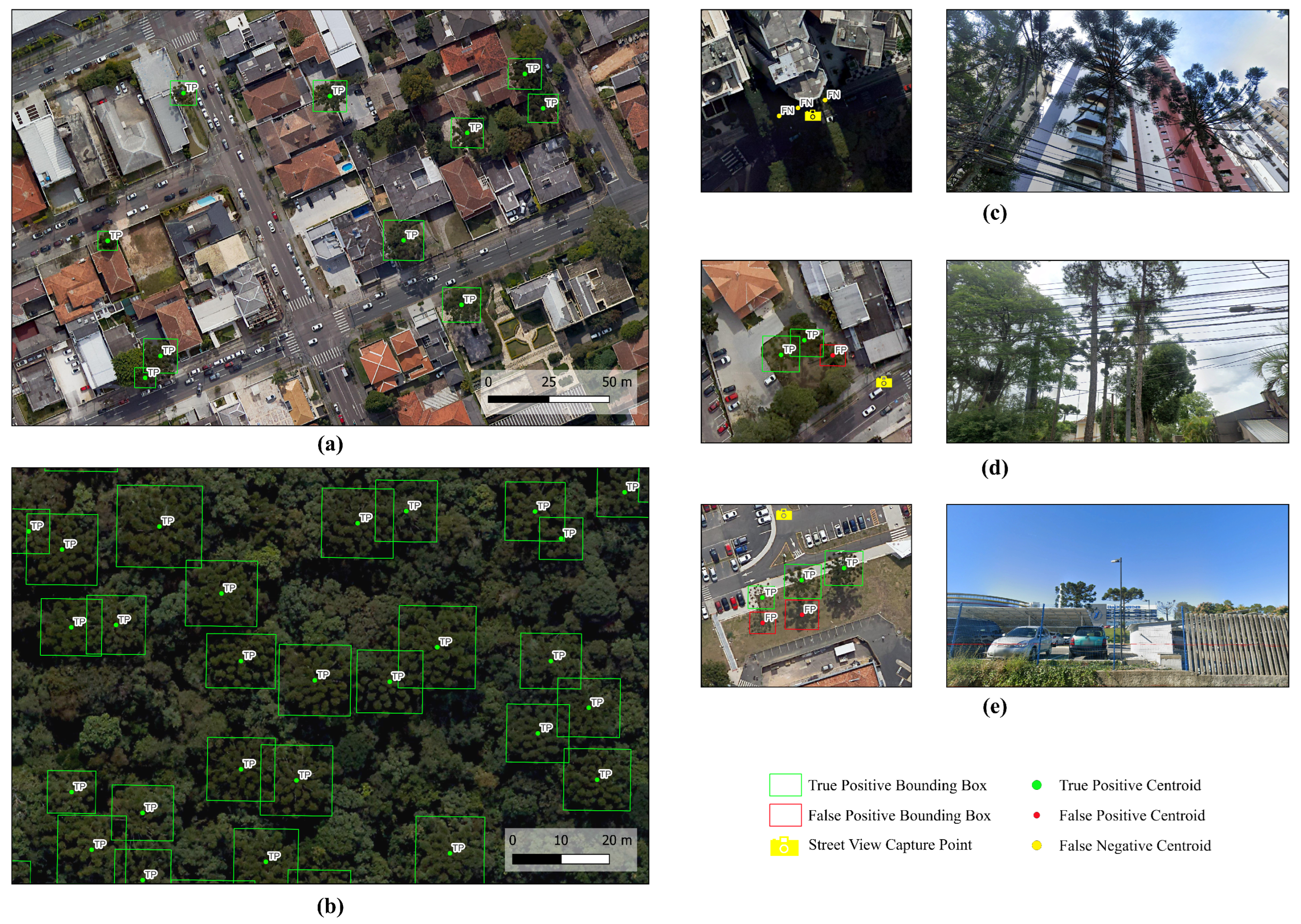

2. Materials and Methods

2.1. Study Area Location

2.2. Pre-Training

2.2.1. Acquisition of RGB Images

2.2.2. Field Data Collection and Data Labeling
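
LabelImg, listed in the references, is the cited labeling tool. When it saves annotations in its YOLO text format, each object becomes one line: a class index followed by the box center, width and height, all normalized by the image dimensions. The minimal sketch below assumes that format and converts a pixel-coordinate box into such a line. The helper name `to_yolo_line`, the class index 0 for A. angustifolia and the 640 × 640 tile size (taken from the training-settings table) are illustrative assumptions, not details confirmed in this section.

```python
def to_yolo_line(class_id: int, x_min: float, y_min: float, x_max: float, y_max: float,
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-coordinate box (corner form) into a YOLO-format label line.

    YOLO labels store: class x_center y_center width height, with every
    coordinate normalized to [0, 1] by the image width or height.
    (Hypothetical helper; the labeling format used in the study is assumed.)
    """
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # Hypothetical crown box on a 640 x 640 tile; class 0 = A. angustifolia (assumed).
    print(to_yolo_line(0, 100, 200, 180, 260, 640, 640))  # 0 0.218750 0.359375 0.125000 0.093750
```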

2.3. Training

2.3.1. Data Customization

2.3.2. Data Augmentation
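
The training-settings table lists fliplr = 0.5 and flipud = 0.0. In YOLOv7 these are probabilities, so each training image is mirrored left-right half of the time and never flipped vertically. The sketch below shows the one detail that is easy to get wrong: when the image is mirrored, the normalized x-center of every box must become 1 − x. Images are modeled as plain lists of pixel rows and the helper names are hypothetical; this is an illustration, not YOLOv7's own code.

```python
import random
from typing import Optional

# A YOLO label: (class_id, x_center, y_center, width, height), coordinates in [0, 1].
Label = tuple[int, float, float, float, float]


def hflip_labels(labels: list[Label]) -> list[Label]:
    """Mirror normalized YOLO boxes left-right; only the x-center changes."""
    return [(c, 1.0 - x, y, w, h) for c, x, y, w, h in labels]


def random_hflip(image_rows: list[list[int]], labels: list[Label], p: float = 0.5,
                 rng: Optional[random.Random] = None):
    """With probability p, flip an image (rows of pixels) and its labels together.

    p = 0.5 mirrors the fliplr value in the training-settings table; since
    flipud is 0.0 there, no vertical flip is sketched here.
    """
    rng = rng or random.Random()
    if rng.random() < p:
        return [row[::-1] for row in image_rows], hflip_labels(labels)
    return image_rows, labels
```

Flipping image and labels in the same call keeps them consistent; applying the flip to only one of the two would silently corrupt the training data.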

2.3.3. Experimentation Environment

2.4. Metrics

2.4.1. Intersection over Union
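
Intersection over Union compares a predicted box with a ground-truth box as the area of their overlap divided by the area of their union, giving 1 for identical boxes and 0 for disjoint ones. A minimal sketch for axis-aligned boxes in (x_min, y_min, x_max, y_max) form, which is an assumed coordinate convention:

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed convention


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    # Overlap width and height, clamped at zero when the boxes do not intersect.
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    # Two 10 x 10 boxes overlapping in a 5 x 5 square: 25 / (100 + 100 - 25) = 1/7.
    print(iou((0, 0, 10, 10), (5, 5, 15, 15)))
```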

2.4.2. Recall and Precision
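
Precision is the share of predicted crowns that are correct, TP / (TP + FP), and recall is the share of reference crowns that were found, TP / (TP + FN). To count TP, FP and FN, predictions must first be matched to ground-truth boxes. The sketch below uses a simplified greedy rule (highest-confidence prediction first, IoU ≥ 0.5); both the rule and the 0.5 threshold are assumptions following the common PASCAL VOC convention, and YOLOv7's evaluation script may match differently. Helper names are hypothetical.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_counts(preds: list[tuple[float, Box]], gts: list[Box], thr: float = 0.5):
    """Greedy matching (assumed rule): each prediction, in order of descending
    confidence, claims the unmatched ground truth with the highest IoU >= thr.
    Returns (TP, FP, FN)."""
    unmatched = list(range(len(gts)))
    tp = fp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best, best_iou = None, thr
        for j in unmatched:
            v = iou(box, gts[j])
            if v >= best_iou:
                best, best_iou = j, v
        if best is None:
            fp += 1          # no ground truth left with enough overlap
        else:
            tp += 1
            unmatched.remove(best)
    return tp, fp, len(unmatched)  # leftover ground truths are misses (FN)


def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

A duplicate detection of an already-matched crown counts as a false positive under this rule, which is why precision penalizes multiple boxes on the same tree.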

2.4.3. F1-Score
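
The F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R), so it stays low unless both are high. Applying it to the precision and recall reported for the two test scenarios reproduces the F1 values in the test table:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Precision and recall taken from the test-set table.
    print(round(f1_score(0.8379, 0.9432), 4))  # Isolated: 0.8874
    print(round(f1_score(0.9129, 0.9614), 4))  # Forest: 0.9365
```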

2.4.4. Average Precision
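
Average Precision summarizes the precision–recall curve obtained by sweeping the confidence threshold. The sketch below uses all-point interpolation as in the PASCAL VOC challenge (Everingham et al., in the references): precision is replaced by its running maximum from the right, and the area under the resulting step curve is summed. This is an assumed variant for illustration; YOLO-family evaluation scripts commonly use a 101-point interpolation instead, so values need not match exactly.

```python
def average_precision(recall: list[float], precision: list[float]) -> float:
    """All-point interpolated AP (PASCAL VOC 2010+ style).

    `recall` and `precision` are cumulative values for detections sorted by
    descending confidence; `recall` must be non-decreasing.
    """
    r = [0.0] + list(recall) + [1.0]
    p = [0.0] + list(precision) + [0.0]
    # Precision envelope: make p non-increasing when read from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Area of rectangles wherever recall increases (zero-width steps add nothing).
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


if __name__ == "__main__":
    # Toy run with 2 ground-truth crowns: detections are TP, FP, TP.
    print(average_precision([0.5, 0.5, 1.0], [1.0, 0.5, 2 / 3]))  # about 0.8333
```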

2.4.5. K-Fold Cross-Validation
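
In k-fold cross-validation the labeled images are split into k disjoint folds, and each fold serves once as the validation set while the remaining folds are used for training. The results table reports five folds, and the training settings list train_size = 878 and validation_size = 219; treating their sum, 1,097 images, as the pool being split is an assumption. The sketch below (hypothetical helper, fixed seed for reproducibility) shows how such a pool divides into five near-equal folds.

```python
import random


def k_fold_indices(n: int, k: int = 5, seed: int = 0) -> list[tuple[list[int], list[int]]]:
    """Shuffle n sample indices once and split them into k disjoint folds.

    Returns one (train_indices, validation_indices) pair per fold.
    """
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    # The first n % k folds get one extra sample, so fold sizes differ by at most 1.
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for s in sizes:
        folds.append(idx[start:start + s])
        start += s
    splits = []
    for i, val in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        splits.append((train, val))
    return splits


if __name__ == "__main__":
    # Assumed pool of 878 + 219 = 1,097 images.
    for i, (train, val) in enumerate(k_fold_indices(1097, 5), start=1):
        print(f"fold {i}: train={len(train)} validation={len(val)}")
```

With 1,097 images two folds hold 220 images and three hold 219, so the 878/219 split in the settings table corresponds to the latter case.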

2.4.6. Uncertainty

3. Results

3.1. Training and Validation

3.2. Test

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

References

- Dornelles, M.P.; Heiden, G.; Nic Lughadha, E.; Iganci, J. Quantifying and mapping angiosperm endemism in the Araucaria Forest. Bot. J. Linn. Soc. 2022, 199, 449–469.

- Bogoni, J.A.; Muniz-Tagliari, M.; Peroni, N.; Peres, C.A. Testing the keystone plant resource role of a flagship subtropical tree species (Araucaria angustifolia) in the Brazilian Atlantic Forest. Ecol. Indic. 2020, 118, 106778.

- IBGE. Produção da Extração Vegetal e da Silvicultura. 2022. Available online: https://www.ibge.gov.br/estatisticas/economicas/agricultura-e-pecuaria/9105-producao-da-extracao-vegetal-e-da-silvicultura.html (accessed on 10 July 2024).

- Godoy, R.C.B.d.; Negre, M.d.F.d.O.; Mendes, I.M.; Siqueira, G.L.d.A.d.; Helm, C.V. O Pinhão na Culinária; Embrapa: Brasilia, Brazil, 2013; Volume 1, 138p.

- Castrillon, R.G.; Helm, C.V.; Mathias, A.L. Araucaria angustifolia and the pinhão seed: Starch, bioactive compounds and functional activity—A bibliometric review. Ciência Rural 2023, 53, e20220048.

- Zamarchi, F.; Vieira, I.C. Determination of paracetamol using a sensor based on green synthesis of silver nanoparticles in plant extract. J. Pharm. Biomed. Anal. 2021, 196, 113912.

- Bogoni, J.A.; Peres, C.A.; Ferraz, K.M. Effects of mammal defaunation on natural ecosystem services and human well being throughout the entire Neotropical realm. Ecosyst. Serv. 2020, 45, 101173.

- Ruiz, E.C.Z.; de Abreu Neto, R.; Behling, A.; Guimarães, F.A.R.; Filho, A.F. Bioenergetic use of Araucaria angustifolia branches. SSRN Electron. J. 2020, 153, 106212.

- Dittmann, R.L.; de Souza, J.T.; Talgatti, M.; Baldin, T.; de Menezes, W.M. Stacking methods and lumber quality of Eucalyptus dunnii and Araucaria angustifolia after air drying. Sci. Agrar. Parana. 2017, 16, 260–264.

- Sanquetta, C.R.; Wojciechowski, J.; Corte, A.P.D.; Rodrigues, A.L.; Maas, G.C.B. On the use of data mining for estimating carbon storage in the trees. Carbon Balance Manag. 2013, 8, 6.

- Rosenfield, M.F.; Souza, A.F. Forest biomass variation in Southernmost Brazil: The impact of Araucaria trees. Rev. Biol. Trop. 2014, 62, 359–372.

- Roik, M.; Machado, S.d.A.; Figueiredo Filho, A.; Sanquetta, C.R.; Ruiz, E.C.Z. Aboveground biomass and organic carbon of native Araucaria angustifolia (Bertol.) Kuntze. Floresta Ambiente 2020, 27, e20180103.

- Zinn, Y.L.; Fialho, R.C.; Silva, C.A. Soil organic carbon sequestration under Araucaria angustifolia plantations but not under exotic tree species on a mountain range. Rev. Bras. Ciênc. Solo 2024, 48, e0230146.

- Scarano, F.R.; Ceotto, P. Brazilian Atlantic forest: Impact, vulnerability, and adaptation to climate change. Biodivers. Conserv. 2015, 24, 2319–2331.

- Rezende, C.; Scarano, F.; Assad, E.; Joly, C.; Metzger, J.; Strassburg, B.; Tabarelli, M.; Fonseca, G.; Mittermeier, R. From hotspot to hopespot: An opportunity for the Brazilian Atlantic Forest. Perspect. Ecol. Conserv. 2018, 16, 208–214.

- Ribeiro, M.C.; Metzger, J.P.; Martensen, A.C.; Ponzoni, F.J.; Hirota, M.M. The Brazilian Atlantic Forest: How much is left, and how is the remaining forest distributed? Implications for conservation. Biol. Conserv. 2009, 142, 1141–1153.

- Castro, M.B.; Barbosa, A.C.M.C.; Pompeu, P.V.; Eisenlohr, P.V.; de Assis Pereira, G.; Apgaua, D.M.G.; Pires-Oliveira, J.C.; Barbosa, J.P.R.A.D.; Fontes, M.A.L.; dos Santos, R.M.; et al. Will the emblematic southern conifer Araucaria angustifolia survive to climate change in Brazil? Biodivers. Conserv. 2019, 29, 591–607.

- Joly, C.A.; Metzger, J.P.; Tabarelli, M. Experiences from the Brazilian Atlantic Forest: Ecological findings and conservation initiatives. New Phytol. 2014, 204, 459–473.

- Paraná. Legislação Estadual. 1995. Available online: https://leisestaduais.com.br/pr/lei-ordinaria-n-11054-1995-parana-dispoe-sobre-a-lei-florestal-do-estado (accessed on 14 January 2023).

- Ministério do Meio Ambiente (MMA). Portaria nº 443, de 17 de Dezembro de 2014. Available online: https://jbb.ibict.br/handle/1/672 (accessed on 1 January 2023).

- Martinelli, G.; Moraes, M.A. Livro vermelho da flora do Brasil; CNCFlora, Centro Nacional de Conservação da Flora do Rio de Janeiro: Rio de Janeiro, Brazil, 2013.

- Herrera, H.A.R.; Rosot, N.C.; Rosot, M.A.D.; de Oliveira, E. Análise florística e fitossociológica do componente arbóreo da Floresta Ombrófila Mista presente na Reserva Florestal EMBRAPA/EPAGRI, Caçador, SC-Brasil. Floresta 2009, 39, 485–500.

- Ribeiro, S.B.; Longhi, S.J.; Brena, D.A.; Nascimento, A.R.T. Diversidade e classificação da comunidade arbórea da Floresta Ombrófila Mista da FLONA de São Francisco de Paula, RS. Ciênc. Florest. 2007, 17, 101–108.

- Cubas, R.; Watzlawick, L.F.; Figueiredo Filho, A. Incremento, ingresso, mortalidade em um remanescente de Floresta Ombrófila Mista em Três Barras-SC. Ciênc. Florest. 2016, 26, 889–900.

- Neto, R.M.R.; Kozera, C.; de Andrade, R.d.R.; Cecy, A.T.; Hummes, A.P.; Fritzsons, E.; Caldeira, M.V.W.; Maria de Nazaré, M.M.; de Souza, M.K.F. Caracterização florística e estrutural de um fragmento de Floresta Ombrófila Mista, em Curitiba, PR–Brasil. Floresta 2002, 32, 3–16.

- Boldarini, F.R.; Gris, D.; de Moraes Conceição, L.H.S.; Godinho, L. Phytosociological characterization of an urban fragment of interior Araucaria forest—Paraná, Brazil. Floresta 2024, 54, e-92974.

- Heidemann, A.S.; Pelissari, A.L.; Cysneiros, V.C.; Rodrigues, C.K. Avaliação da estrutura espacial em uma floresta urbana por meio da estimativa da densidade de Kernel / Assessing spatial structure in an urban forest by Kernel density estimation / Evaluación de la estructura espacial en un bosque urbano mediante la estimación de la densidad Kernel. Contrib. Las Cienc. Soc. 2024, 17, 1–24.

- WFCA-Melinda. The Evolution of Forest Inventory. 2021. Available online: https://forestbiometrics.org/references-articles/publications/the-evolution-of-forest-inventory/ (accessed on 25 September 2024).

- Puttemans, S.; Van Beeck, K.; Goedemé, T. Comparing boosted cascades to deep learning architectures for fast and robust coconut tree detection in aerial images. In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Madeira, Portugal, 27–29 January 2018; SCITEPRESS—Science and Technology Publications: Setubal, Portugal, 2018.

- Zheng, J.; Li, W.; Xia, M.; Dong, R.; Fu, H.; Yuan, S. Large-Scale oil palm tree detection from high-resolution remote sensing images using Faster-RCNN. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

- Ferreira, M.P.; Almeida, D.R.A.d.; Papa, D.d.A.; Minervino, J.B.S.; Veras, H.F.P.; Formighieri, A.; Santos, C.A.N.; Ferreira, M.A.D.; Figueiredo, E.O.; Ferreira, E.J.L. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manag. 2020, 475, 118397.

- Itakura, K.; Hosoi, F. Automatic Tree Detection from Three-Dimensional Images Reconstructed from 360° Spherical Camera Using YOLO v2. Remote Sens. 2020, 12, 988.

- Sun, Y.; Hao, Z.; Guo, Z.; Liu, Z.; Huang, J. Detection and Mapping of Chestnut Using Deep Learning from High-Resolution UAV-Based RGB Imagery. Remote Sens. 2023, 15, 4923.

- Selvaraj, M.G.; Vergara, A.; Montenegro, F.; Ruiz, H.A.; Safari, N.; Raymaekers, D.; Ocimati, W.; Ntamwira, J.; Tits, L.; Omondi, A.B.; et al. Detection of banana plants and their major diseases through aerial images and machine learning methods: A case study in DR Congo and Republic of Benin. ISPRS J. Photogramm. Remote Sens. 2020, 169, 110–124.

- Culman, M.; Delalieux, S.; Tricht, K.V. Palm tree inventory from aerial images using RetinaNet. In Proceedings of the 2020 Mediterranean and Middle-East Geoscience and Remote Sensing Symposium (M2GARSS), Tunis, Tunisia, 9–11 March 2020.

- Jintasuttisak, T.; Edirisinghe, E.; Elbattay, A. Deep neural network based date palm tree detection in drone imagery. Comput. Electron. Agric. 2022, 192, 106560.

- Dong, C.; Cai, C.; Chen, S.; Xu, H.; Yang, L.; Ji, J.; Huang, S.; Hung, I.K.; Weng, Y.; Lou, X. Crown width extraction of Metasequoia glyptostroboides using improved YOLOv7 based on UAV images. Drones 2023, 7, 336.

- Braga, J.R.G.; Peripato, V.; Dalagnol, R.; Ferreira, M.P.; Tarabalka, Y.; Aragão, L.E.O.C.; Velho, H.F.d.C.; Shiguemori, E.H.; Wagner, F.H. Tree Crown Delineation Algorithm Based on a Convolutional Neural Network. Remote Sens. 2020, 12, 1288.

- Butler, D. Virtual globes: The web-wide world. Nature 2006, 439, 776–778.

- Yu, L.; Gong, P. Google Earth as a virtual globe tool for Earth science applications at the global scale: Progress and perspectives. Int. J. Remote Sens. 2012, 33, 3966–3986.

- Hou, H.; Chen, M.; Tie, Y.; Li, W. A universal landslide detection method in optical remote sensing images based on improved YOLOX. Remote Sens. 2022, 14, 4939.

- Sepehry Amin, M.; Emami, H. True orthophoto generation using Google Earth imagery and comparison to UAV orthophoto. Sci.-Res. Q. Geogr. Data (SEPEHR) 2023, 32, 7–25.

- Sun, L.; Guo, H.; Chen, Z.; Yin, Z.; Feng, H.; Wu, S.; Siddique, K.H. Check dam extraction from remote sensing images using deep learning and geospatial analysis: A case study in the Yanhe River Basin of the Loess Plateau, China. J. Arid. Land 2023, 15, 34–51.

- Aguiar, J.T.d.; Brun, F.G.K.; Higuchi, P.; Bobrowski, R. Although it lacks connectivity, isolated urban forest fragments can deliver similar amounts of ecosystem services as in protected areas. CERNE 2023, 29, e-103193.

- Nowak, D.J.; Dwyer, J.F. Understanding the benefits and costs of urban forest ecosystems. In Urban and Community Forestry in the Northeast; Springer: Berlin/Heidelberg, Germany, 2007; pp. 25–46.

- da Silva Santos, A.; de Souza, I.; de Souza, J.M.T.; Schaffrath, V.R.; Galvão, F.; Bohn Reckziegel, R. Urban Parks in Curitiba as Biodiversity Refuges of Montane Mixed Ombrophilous Forests. Sustainability 2023, 15, 968.

- Google. Google Earth. Available online: https://www.google.com.br/earth/ (accessed on 20 January 2025).

- IBGE; Coordenação de Meio Ambiente. Áreas Urbanizadas do Brasil: 2019; Coleção Ibgeana, IBGE: Rio de Janeiro, Brazil, 2022; p. 16. 30p.

- Alvares, C.A.; Stape, J.L.; Sentelhas, P.C.; Gonçalves, J.d.M.; Sparovek, G. Köppen’s climate classification map for Brazil. Meteorol. Z. 2013, 22, 711–728.

- Instituto Nacional de Meteorologia. Instituto Nacional de Meteorologia, n.d. Available online: https://portal.inmet.gov.br/normais (accessed on 16 January 2025).

- Thang, Q.D. HCMGIS: Plugin for QGIS 3. 2021. Available online: https://plugins.qgis.org/plugins/HCMGIS/ (accessed on 20 February 2025).

- QGIS Development Team. QGIS Geographic Information System. Open Source Geospatial Foundation (OSGeo), 2024. Version 3.32. Available online: https://www.osgeo.org/ (accessed on 3 January 2024).

- Tzutalin. LabelImg. Git Code 2015. Available online: https://github.com/tzutalin/labelImg (accessed on 20 January 2023).

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Proceedings, Part V 13, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Anthony, M.; Holden, S.B. Cross-validation for binary classification by real-valued functions: Theoretical analysis. In Proceedings of the 11th Annual Conference on Computational Learning Theory, Madison, WI, USA, 24–26 July 1998; pp. 218–229.

- Antoniou, A. Data Augmentation Generative Adversarial Networks. arXiv 2017, arXiv:1711.04340.

- Perez, L.; Wang, J. The Effectiveness of Data Augmentation in Image Classification using Deep Learning. arXiv 2017, arXiv:1712.04621.

- Perez Malla, C.U.; Valdes Hernandez, M.d.C.; Rachmadi, M.F.; Komura, T. Evaluation of enhanced learning techniques for segmenting ischaemic stroke lesions in brain magnetic resonance perfusion images using a convolutional neural network scheme. Front. Neuroinform. 2019, 13, 33.

- Hao, W.; Zhili, S. Improved mosaic: Algorithms for more complex images. J. Phys. Conf. Ser. 2020, 1684, 012094.

- Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Chen, X.; Xiang, S.; Liu, C.L.; Pan, C.H. Vehicle detection in satellite images by hybrid deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1797–1801.

- Bai, T.; Wang, L.; Yin, D.; Sun, K.; Chen, Y.; Li, W.; Li, D. Deep learning for change detection in remote sensing: A review. Geo-Spat. Inf. Sci. 2023, 26, 262–288.

- Beloiu, M.; Heinzmann, L.; Rehush, N.; Gessler, A.; Griess, V.C. Individual tree-crown detection and species identification in heterogeneous forests using aerial RGB imagery and deep learning. Remote Sens. 2023, 15, 1463.

| Setting | Value | Setting | Value |
|---|---|---|---|
| batch | 8 | anchor_t | 4.0 |
| width | 640 | fl_gamma | 0.0 |
| height | 640 | hsv_h | 0.015 |
| channels | 3 | hsv_v | 0.4 |
| momentum | 0.937 | hsv_s | 0.7 |
| decay | 0.0005 | degrees | 0.0 |
| warmup_momentum | 0.8 | translate | 0.2 |
| warmup_bias_lr | 0.1 | scale | 0.5 |
| lr0 | 0.01 | shear | 0.0 |
| lrf | 0.1 | perspective | 0.0 |
| box | 0.05 | flipud | 0.0 |
| cls | 0.3 | fliplr | 0.5 |
| cls_pw | 1.0 | mosaic | 1.0 |
| obj | 0.7 | mixup | 0.0 |
| obj_pw | 1.0 | train_size | 878 |
| iou_t | 0.2 | validation_size | 219 |
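
The learning-rate entries above (lr0 = 0.01, lrf = 0.1) are, in YOLOv7's hyperparameter files, the initial rate and the final rate expressed as a fraction of lr0. As we understand YOLOv7's training script, unless a linear schedule is requested it scales lr0 by a cosine factor that falls from 1 to lrf over the run, so the rate ends near 0.01 × 0.1 = 0.001. The sketch below reproduces that curve under these assumptions. The epoch count is not given in this table, so `epochs=300` is only a placeholder, and the warmup phase (warmup_momentum, warmup_bias_lr) is deliberately left out.

```python
import math


def one_cycle(y1: float = 0.0, y2: float = 1.0, steps: int = 100):
    """Cosine ramp from y1 (at x = 0) to y2 (at x = steps)."""
    return lambda x: ((1 - math.cos(x * math.pi / steps)) / 2) * (y2 - y1) + y1


def lr_at_epoch(epoch: int, lr0: float = 0.01, lrf: float = 0.1, epochs: int = 300) -> float:
    """Post-warmup learning rate: lr0 times a cosine factor decaying from 1 to lrf.

    epochs=300 is a placeholder assumption; the study's epoch count is not listed here.
    """
    return lr0 * one_cycle(1.0, lrf, epochs)(epoch)


if __name__ == "__main__":
    for e in (0, 75, 150, 225, 300):
        print(e, round(lr_at_epoch(e), 6))
```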

| Metric | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Fold 5 | Average |
|---|---|---|---|---|---|---|
| Precision | 0.8963 | 0.9319 | 0.8951 | 0.9149 | 0.8972 | 0.9071 |
| Recall | 0.8355 | 0.8671 | 0.8610 | 0.8757 | 0.8989 | 0.8676 |
| F1-score | 0.8648 | 0.8983 | 0.8777 | 0.8949 | 0.8980 | 0.8868 |
| AP | 0.8883 | 0.9119 | 0.9041 | 0.9095 | 0.9257 | 0.9079 |
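
The Average column of the cross-validation table can be checked directly from the per-fold values, and the spread across folds gives one simple view of how stable the model is. The sketch below computes the mean and the sample standard deviation (n − 1 denominator) of each metric. The standard deviation is an illustrative choice; the study's own uncertainty measure (Section 2.4.6) is not reproduced in this extract and may differ. Recomputed means agree with the table to within 0.0001; last-digit differences (e.g. 0.8867 versus 0.8868 for F1) can arise from rounding of the per-fold entries.

```python
from statistics import mean, stdev

# Per-fold values copied from the cross-validation table (folds 1-5); AP is a fraction in [0, 1].
folds = {
    "Precision": [0.8963, 0.9319, 0.8951, 0.9149, 0.8972],
    "Recall": [0.8355, 0.8671, 0.8610, 0.8757, 0.8989],
    "F1-score": [0.8648, 0.8983, 0.8777, 0.8949, 0.8980],
    "AP": [0.8883, 0.9119, 0.9041, 0.9095, 0.9257],
}

if __name__ == "__main__":
    for name, values in folds.items():
        # Sample standard deviation across the five folds.
        print(f"{name}: mean = {mean(values):.4f}, sd = {stdev(values):.4f}")
```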

| Scenario | Precision | Recall | F1-Score |
|---|---|---|---|
| Isolated | 0.8379 | 0.9432 | 0.8874 |
| Forest | 0.9129 | 0.9614 | 0.9365 |
| Isolated + Palm Refined | 0.8543 | - | - |
| Forest + Palm Refined | 0.9136 | - | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karasinski, M.A.; Leite, R.d.S.; Guaraná, E.C.; Figueiredo, E.O.; Broadbent, E.N.; Silva, C.A.; Santos, E.K.M.d.; Sanquetta, C.R.; Dalla Corte, A.P. Automated Detection of Araucaria angustifolia (Bertol.) Kuntze in Urban Areas Using Google Earth Images and YOLOv7x. Remote Sens. 2025, 17, 809. https://doi.org/10.3390/rs17050809
