Light-Weight Synthetic Aperture Radar Image Saliency Enhancement Method Based on Sea–Land Segmentation Preference

Abstract

1. Introduction

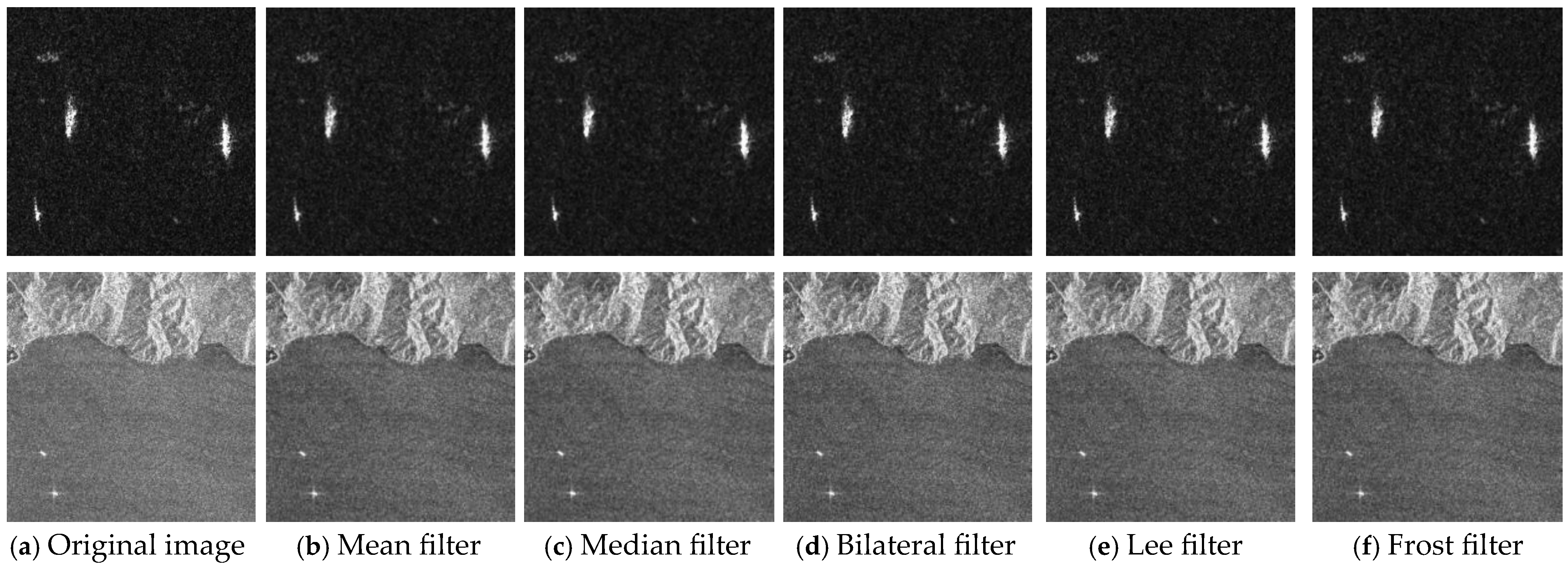

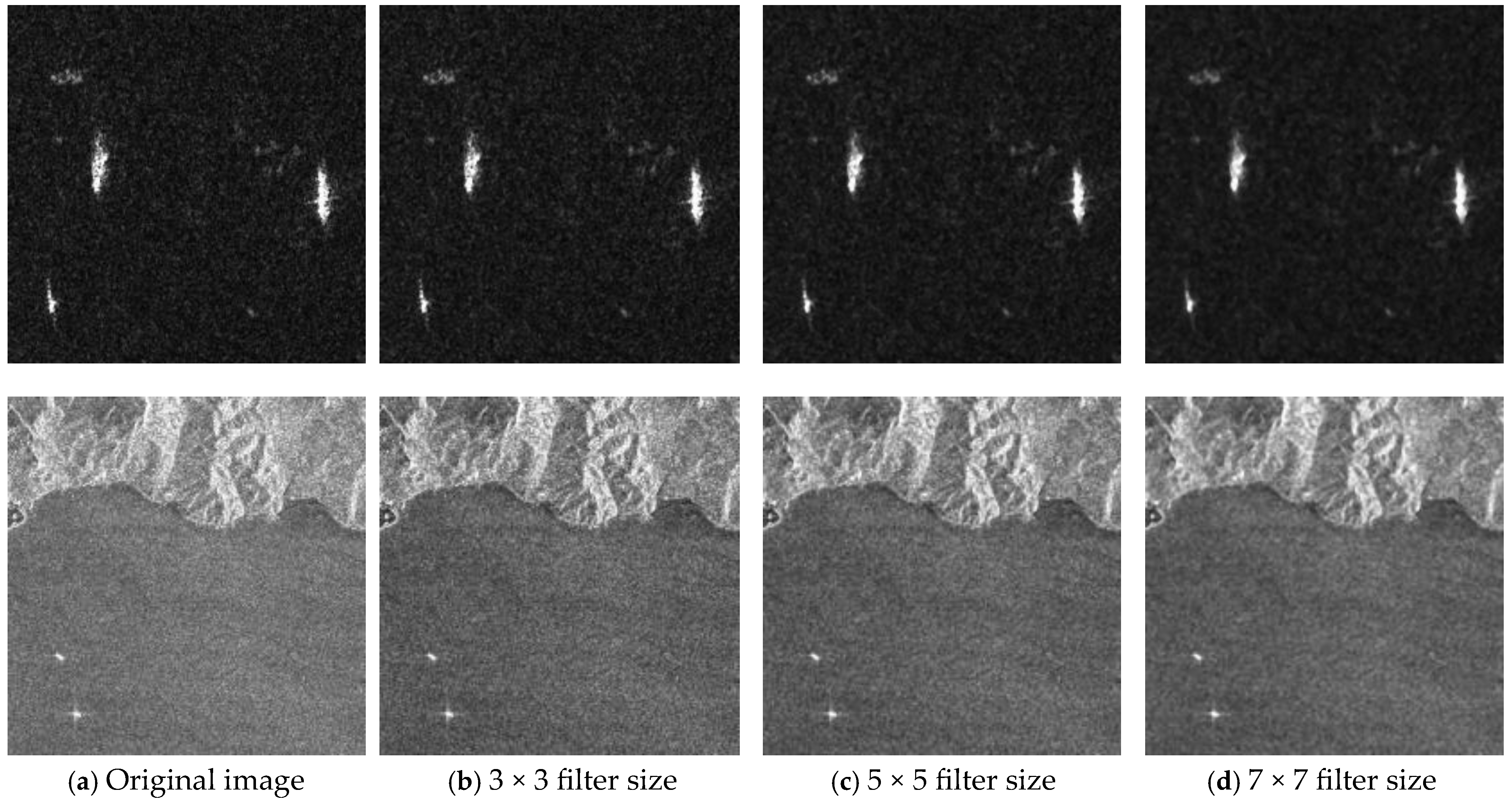

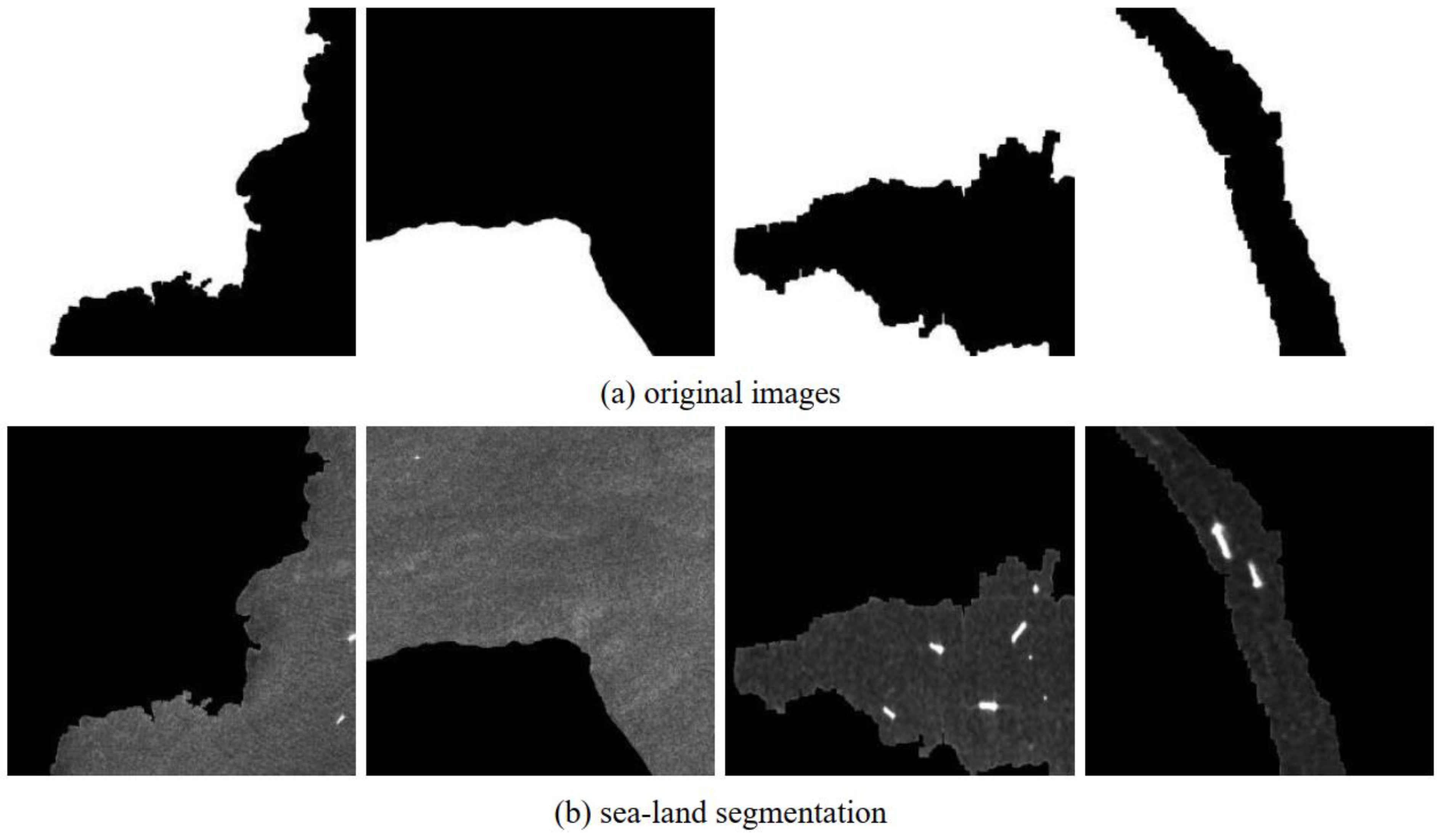

2. Image Denoising

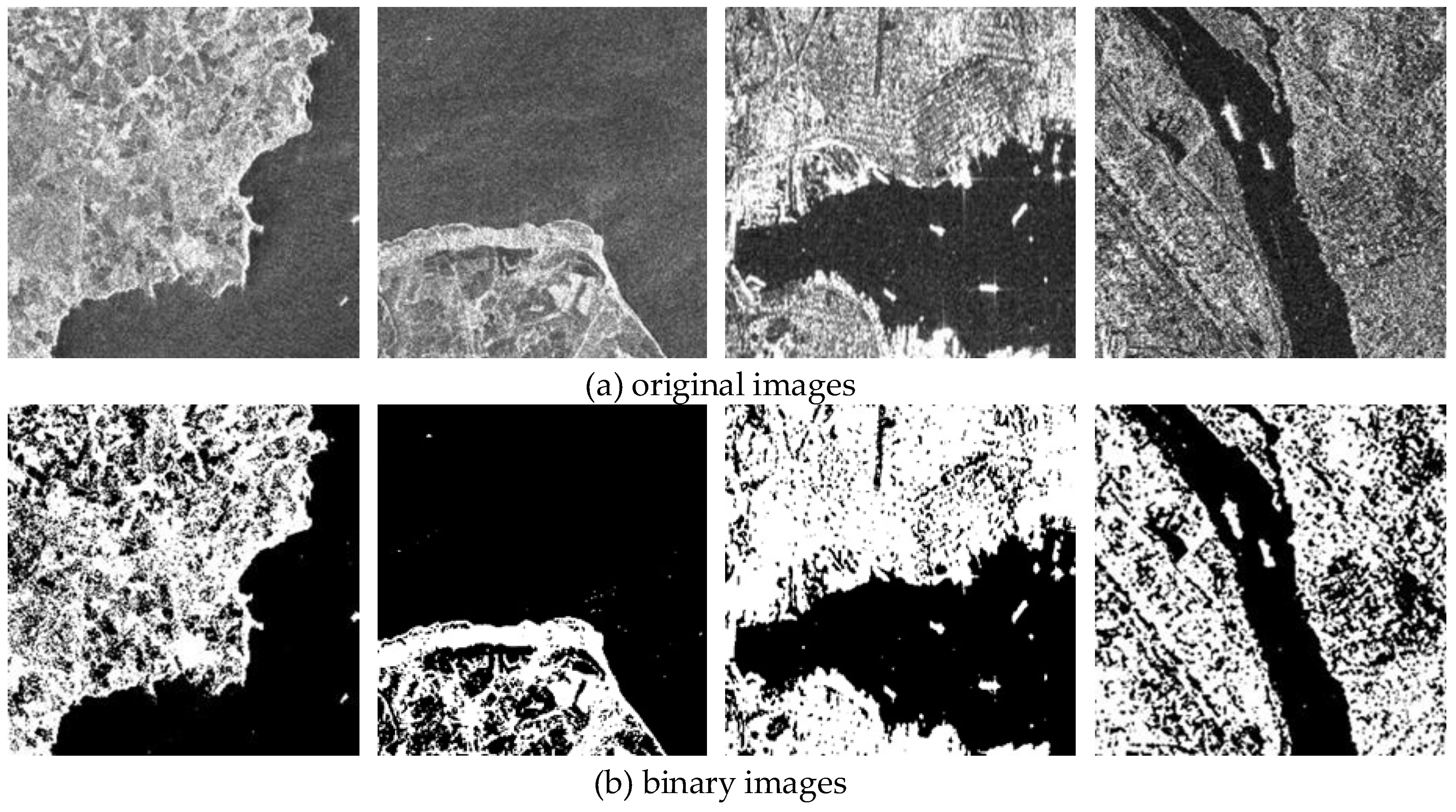

3. Sea–Land Segmentation

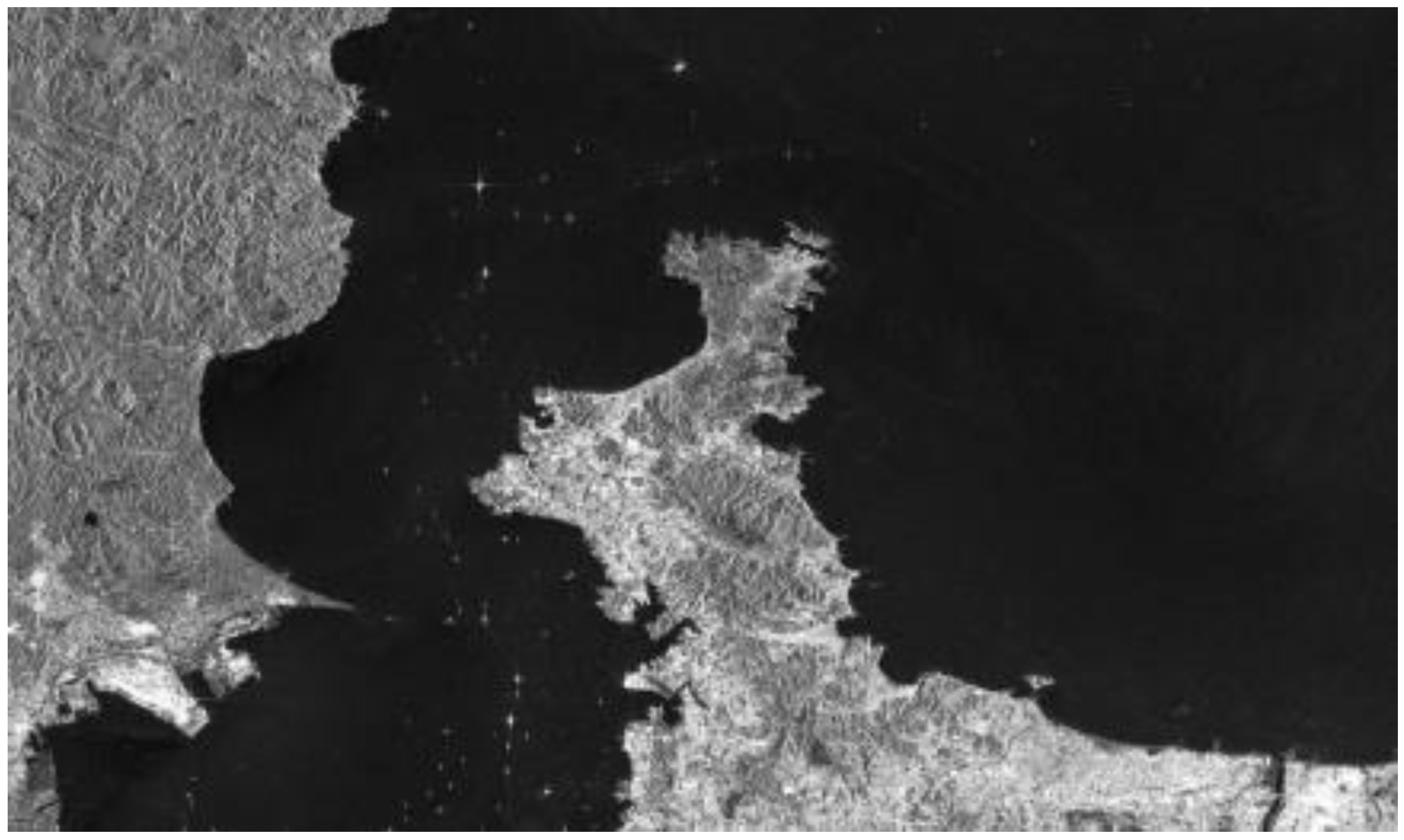

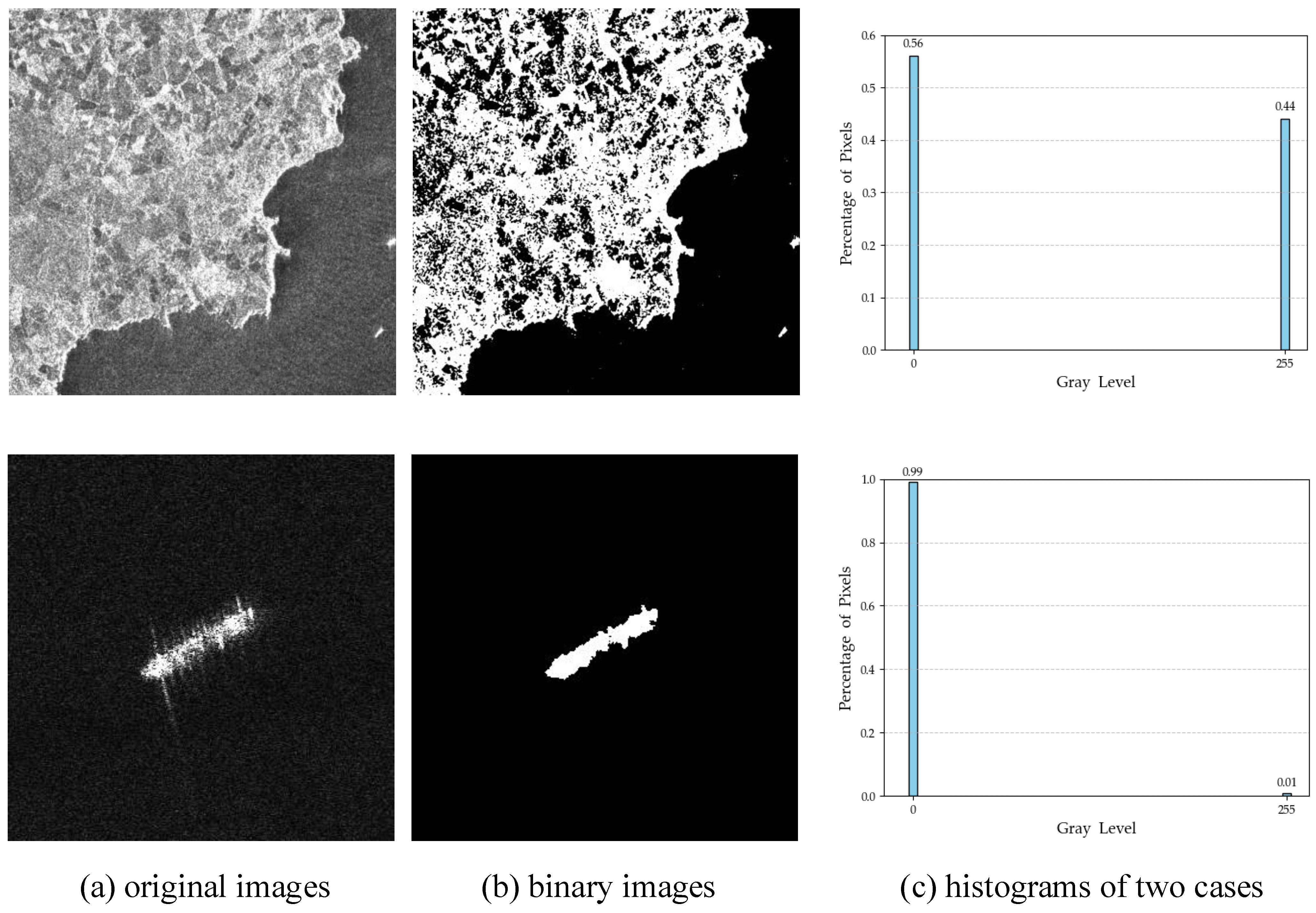

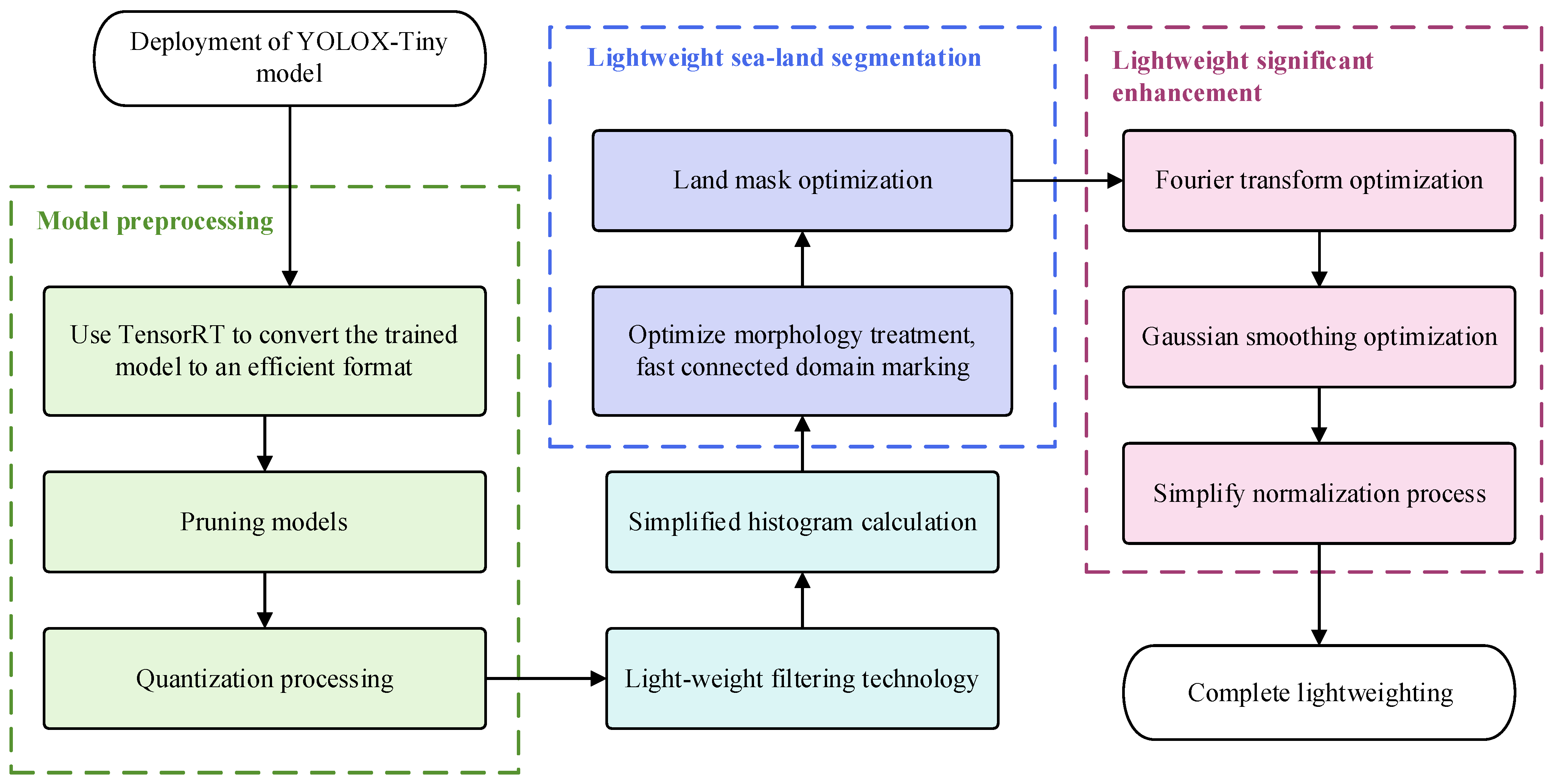

3.1. Land Recognition

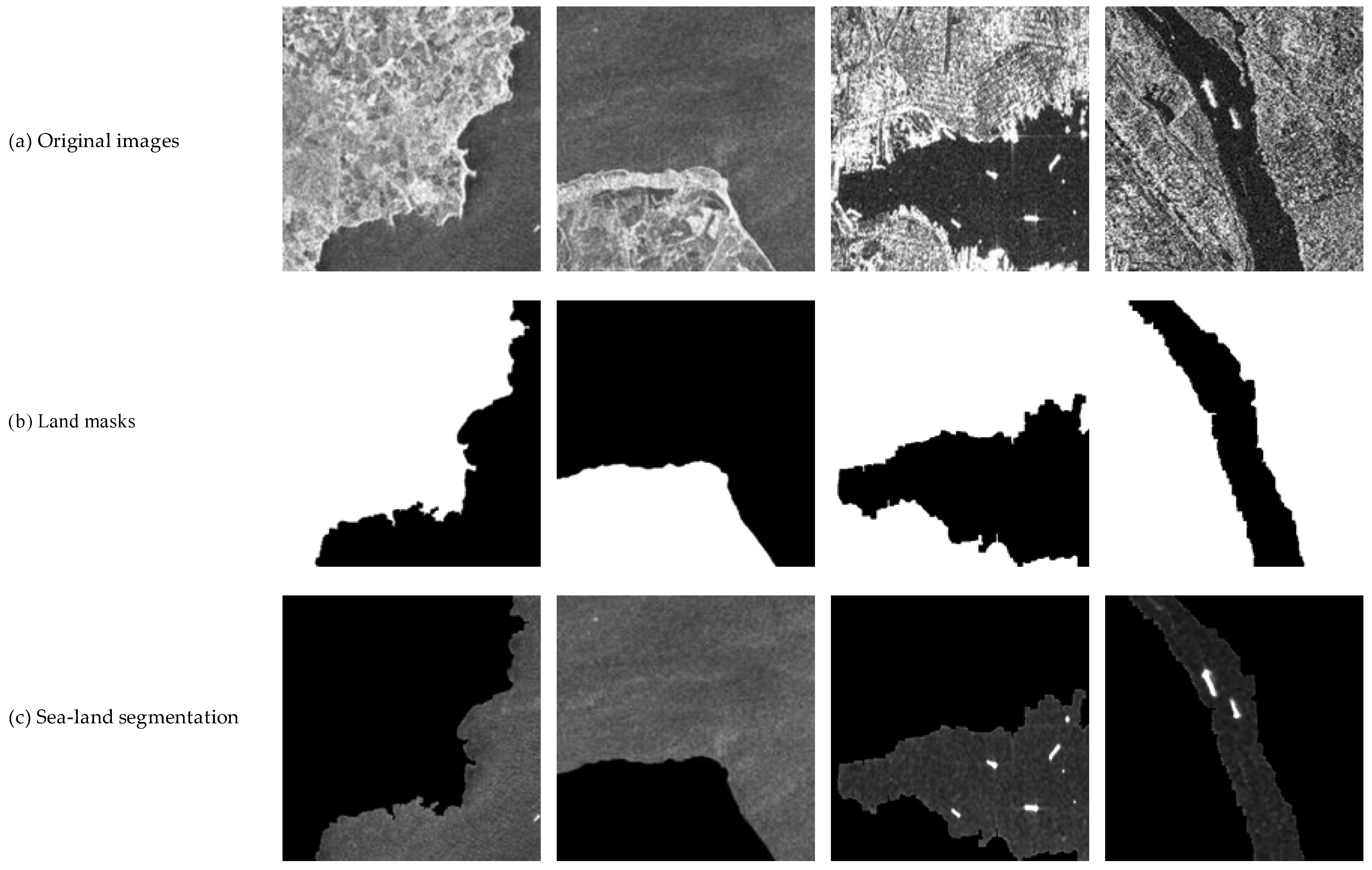

3.2. Land Mask Generation

4. SAR ISEM Based on Sea–Land Segmentation Preference

5. Light-Weight Model Deployment

6. Experiments

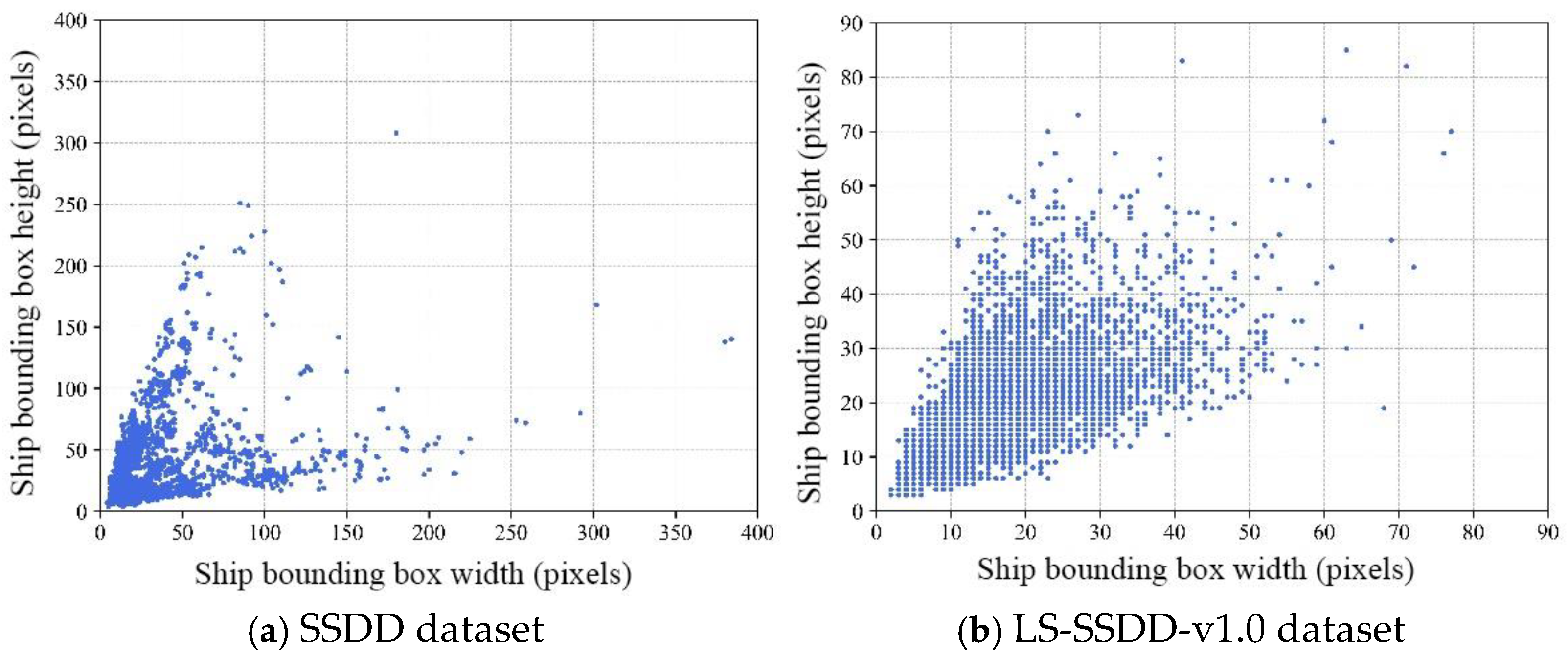

6.1. Evaluation Metrics and Datasets

6.2. Results of SAR Image Denoising

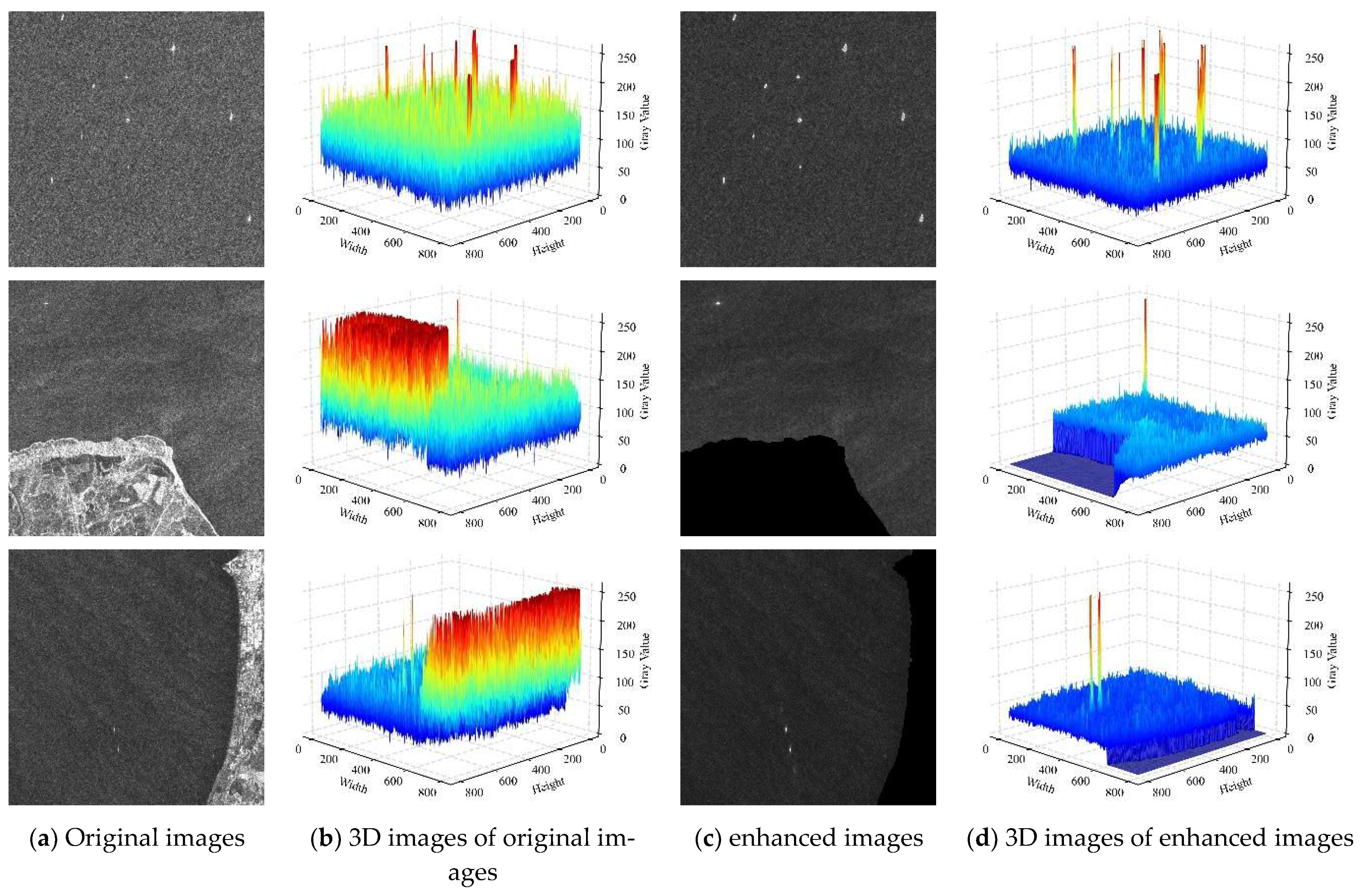

6.3. Results of Image Saliency Enhancement

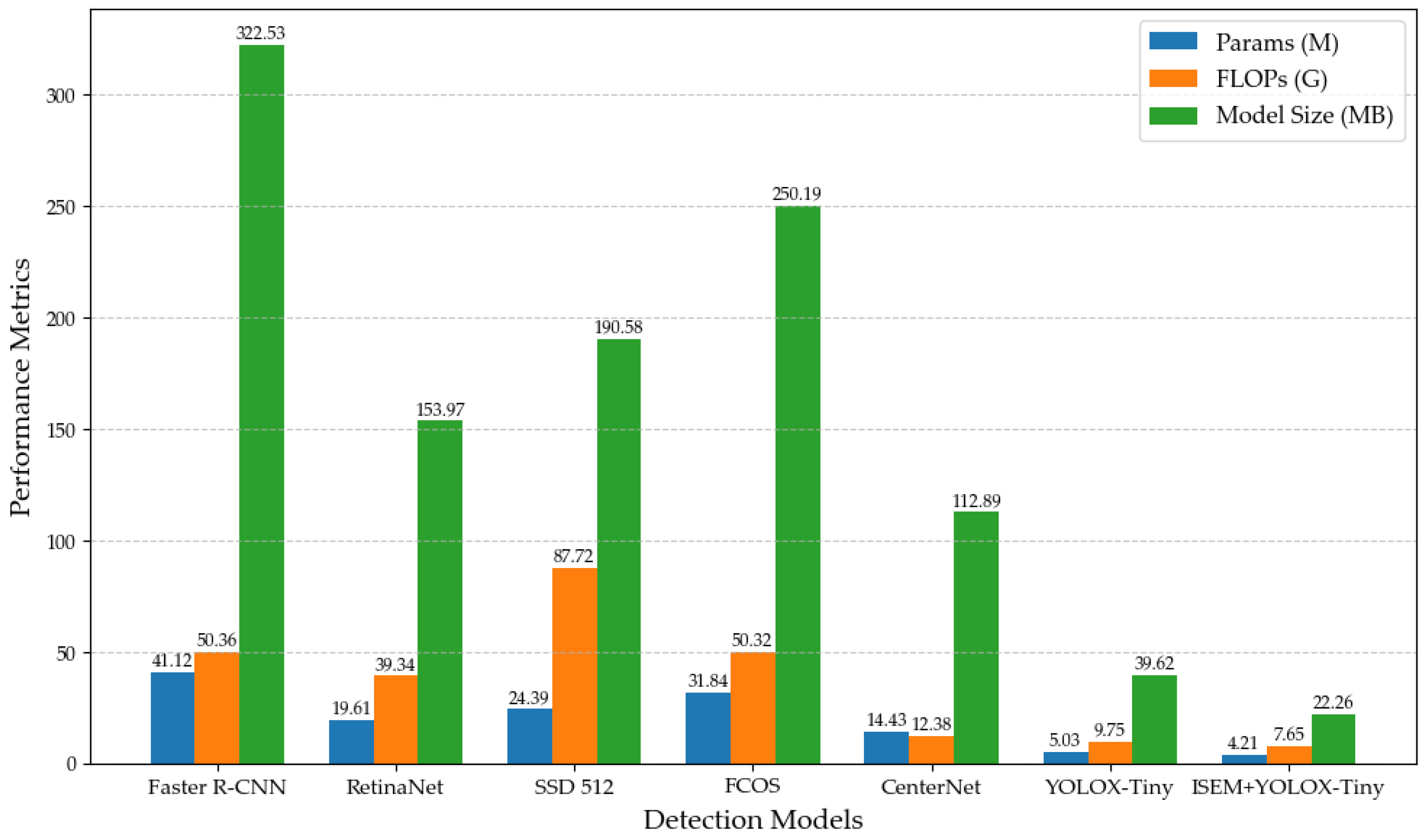

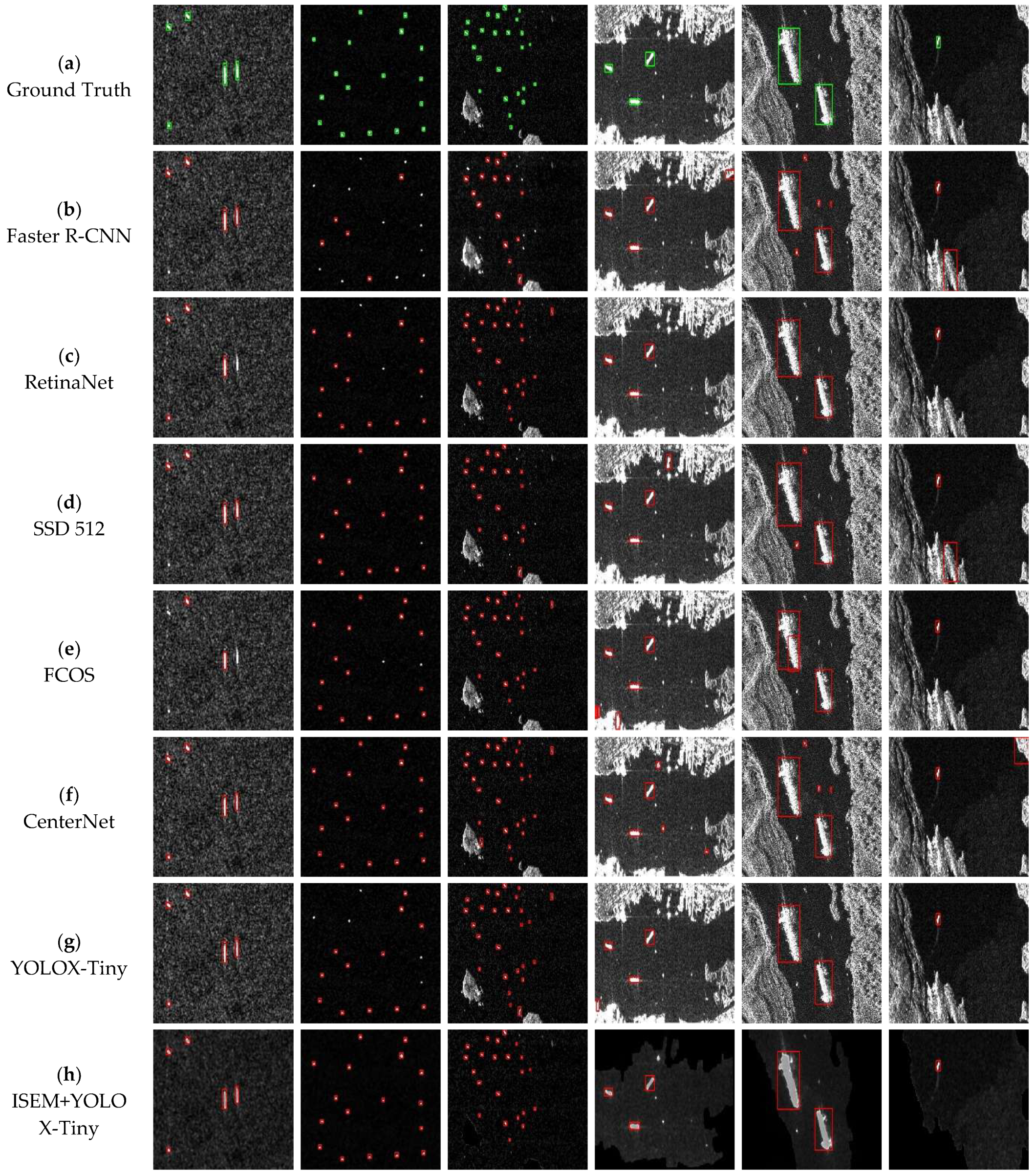

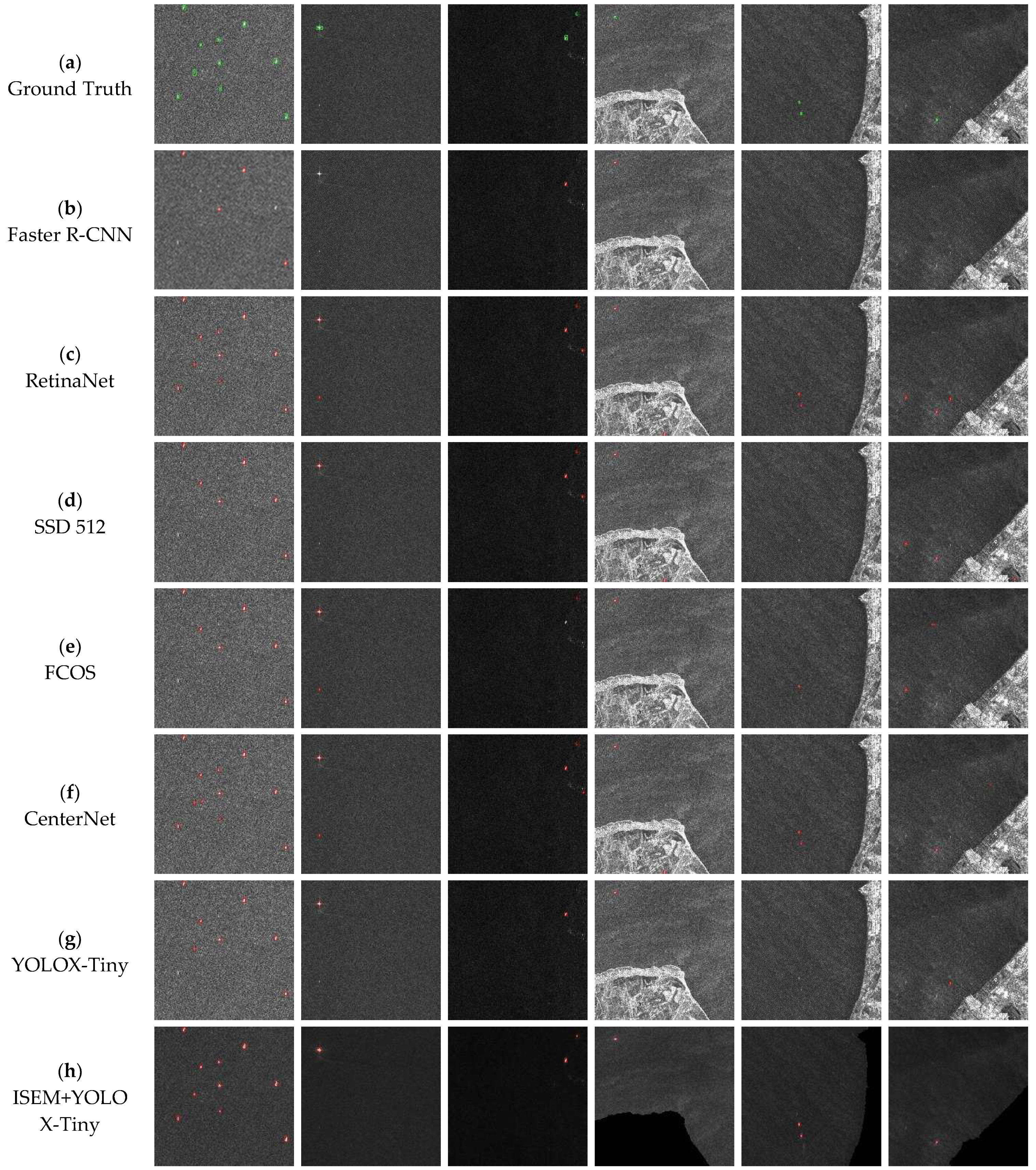

6.4. Light-Weight Ship Detection

7. Conclusions

- (1) The limitations of traditional and CNN-based methods are addressed: the low accuracy and poor generalization ability of traditional methods and the high complexity of CNN-based methods are both overcome. Light-weight design, parallel computing, hardware acceleration, efficient inference, and low power consumption are combined to achieve high efficiency in edge computing environments.

- (2) Detection accuracy and robustness are improved. Image preprocessing and saliency enhancement effectively suppress noise and sea clutter, which is key to improving the model's detection accuracy on the SSDD and LS-SSDD-v1.0 datasets with their complex backgrounds.

- (3) Computational resource consumption is reduced. The light-weight design increases the speed by about three times and reduces the model size and inference time by 70% and 50%, respectively.

- (4) Data transmission load is reduced. During spaceborne SAR deployment, only the slice images containing the key ships are transmitted instead of the entire image, which significantly reduces the data transmission bandwidth and improves the efficiency and cost-effectiveness of satellite operation.
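Item (4) states that only slices containing the detected ships are downlinked instead of the full scene. As a hedged illustration of why this saves bandwidth, the sketch below cuts a fixed-size square window around each detection box and reports the fraction of the scene's pixels that would be sent. The box format `(x_min, y_min, x_max, y_max)`, the 64-pixel slice size, and the helper names `slice_windows` and `transmitted_fraction` are assumptions for illustration, not details given in the text.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels (assumed format)


def slice_windows(boxes: List[Box], img_w: int, img_h: int, size: int = 64) -> List[Box]:
    """Square size x size windows centred on each box, shifted to stay inside the image."""
    if size > img_w or size > img_h:
        raise ValueError("slice size must not exceed the image dimensions")
    windows = []
    for x0, y0, x1, y1 in boxes:
        cx, cy = (x0 + x1) // 2, (y0 + y1) // 2
        # Clamp the top-left corner so the whole window lies within the image.
        left = min(max(cx - size // 2, 0), img_w - size)
        top = min(max(cy - size // 2, 0), img_h - size)
        windows.append((left, top, left + size, top + size))
    return windows


def transmitted_fraction(windows: List[Box], img_w: int, img_h: int) -> float:
    """Share of the full scene's pixels sent when each slice is transmitted separately."""
    sent = sum((x1 - x0) * (y1 - y0) for x0, y0, x1, y1 in windows)
    return sent / (img_w * img_h)


if __name__ == "__main__":
    # Two hypothetical ship detections in an 800 x 800 scene.
    ships = [(100, 100, 120, 110), (700, 760, 730, 800)]
    wins = slice_windows(ships, 800, 800)
    print(wins)
    print(f"{transmitted_fraction(wins, 800, 800):.2%} of the scene's pixels")
```

In this toy case two 64 × 64 slices replace an 800 × 800 scene. A ship larger than the slice would be truncated by this sketch, so a practical system would need to size slices from the boxes themselves.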

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Bao, Z.; Xing, M.D.; Wang, T. Radar Imaging Technology; Electronics Industry Press: Beijing, China, 2005.

2. Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K.P. A tutorial on synthetic aperture radar. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–43.

3. Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

4. Gao, G. Research on Automatic Acquisition Technology of SAR Image Target ROI. Ph.D. Thesis, National University of Defense Technology, Changsha, China, 2007.

5. Ding, B.; Wen, G.; Ma, C.; Yang, X. An efficient and robust framework for SAR target recognition by hierarchically fusing global and local features. IEEE Trans. Image Process. 2018, 27, 5983–5995.

6. Feng, S.; Ji, K.; Wang, F.; Zhang, L.; Ma, X.; Kuang, G. PAN: Part attention network integrating electromagnetic characteristics for interpretable SAR vehicle target recognition. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5204617.

7. Yang, D. Research on Sparse Imaging and Moving Target Detection Processing Method for Satellite. Ph.D. Thesis, Xidian University, Xi’an, China, 2015.

8. Lee, J.S.; Jurkevich, I. Speckle noise reduction in synthetic aperture radar images. IEEE Trans. Geosci. Remote Sens. 1990, 28, 38–46.

9. Liu, Y.; Zhang, L.; Wei, H. A novel ship detection method in SAR images based on saliency detection and deep learning. Remote Sens. 2020, 12, 407.

10. Xie, Y.; Wang, J.; Yu, T. Ship detection in SAR images based on saliency detection and extreme learning machine. IEEE Access 2019, 7, 35608–35615.

11. Borji, A.; Itti, L. State-of-the-art in visual attention modeling. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 185–207.

12. Liu, N.; Zhang, L.; Tang, X. Saliency detection-oriented image enhancement. IEEE Trans. Image Process. 2017, 26, 2658–2672.

13. Achanta, R.; Hemami, S.; Estrada, F.; Süsstrunk, S. Frequency-tuned salient region detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009.

14. Gu, X.; Zhang, L. A novel saliency detection method based on multi-scale deep features. IEEE Trans. Multimed. 2018, 20, 2081–2093.

15. Li, H.; Lu, H.; Zhang, L. Saliency detection via graph-based manifold ranking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013.

16. Shi, C.; Zhang, X.; Sun, J.; Wang, L. Remote Sensing Scene Image Classification Based on Dense Fusion of Multi-level Features. Remote Sens. 2021, 13, 4379.

17. Guo, R.; Sim, C.H. Retinex theory for image enhancement via recursive filtering. IEEE Trans. Image Process. 2022, 31, 4107–4120.

18. Chen, Y.; Fang, X.; Ma, K. Local contrast enhancement based on adaptive local ternary pattern. IEEE Trans. Image Process. 2023, 32, 1–14.

19. Heijmans, H.J.; Roerdink, J.B. Mathematical morphology: A modern approach to image processing based on algebra and geometry. J. Vis. Commun. Image Represent. 1998, 8, 348–349.

20. LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

21. Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318.

22. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

23. Wang, T.; Chen, W.; Luo, X. Deep retinex decomposition with dense inception residual network for low-light image enhancement. IEEE Trans. Instrum. Meas. 2022, 71, 1–12.

24. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

25. Liu, F.; Shen, J. GAN-based image super-resolution with salient region detection and attention mechanism. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5818–5830.

26. He, Y.; Yao, L.P.; Li, G.; Liu, Y.; Yang, D.; Li, W. On-orbit fusion processing and analysis of multi-source satellite information. J. Astronaut. 2021, 42, 1–10.

27. Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge computing: Vision and challenges. IEEE Internet Things J. 2016, 3, 637–646.

28. Bian, C.J. Research on In-Orbit Real-Time Detection and Compression Technology of Effective Area for Optical Remote Sensing Images. Ph.D. Thesis, Harbin Institute of Technology, Harbin, China, 2019.

29. Wang, Z.K.; Fang, Q.Y.; Han, D.P. Research progress of in-orbit intelligent processing technology for imaging satellites. J. Astronaut. 2022, 43, 259–270.

30. Redmon, J.; Divvala, S.; Girshick, R.B.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

31. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

32. Pitas, I.; Venetsanopoulos, A. Nonlinear mean filters in image processing. IEEE Trans. Acoust. Speech Signal Process. 1986, 34, 573–584.

33. Huang, T.; Yang, G.; Tang, G. A fast two-dimensional median filtering algorithm. IEEE Trans. Acoust. Speech Signal Process. 1979, 27, 13–18.

34. Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the IEEE International Conference on Computer Vision, Bombay, India, 4–7 January 1998; pp. 839–846.

35. Lee, J.-S. Digital image enhancement and noise filtering by use of local statistics. IEEE Trans. Pattern Anal. Mach. Intell. 1980, PAMI-2, 165–168.

36. Frost, V.S.; Stiles, J.A.; Shanmugan, K.S.; Holtzman, J.C. A model for radar images and its application to adaptive digital filtering of multiplicative noise. IEEE Trans. Pattern Anal. Mach. Intell. 1982, PAMI-4, 157–166.

37. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

38. Sauvola, J.; Pietikainen, M. Adaptive document image binarization. Pattern Recognit. 2000, 33, 225–236.

39. Hou, X.; Zhang, L. Saliency detection: A spectral residual approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

40. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

41. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

42. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

43. Sattar, F.; Floreby, L.; Salomonsson, G.; Lovstrom, B. Image enhancement based on a nonlinear multiscale method. IEEE Trans. Image Process. 1997, 6, 888–895.

44. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

45. Lin, T.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

46. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 21–37.

47. Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6568–6577.

48. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9626–9635.

49. Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR ship detection dataset (SSDD): Official release and comprehensive data analysis. Remote Sens. 2021, 13, 3690.

50. Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; et al. LS-SSDD-v1.0: A deep learning dataset dedicated to small ship detection from large-scale Sentinel-1 SAR images. Remote Sens. 2020, 12, 2997.

51. Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A High-Resolution SAR Images Dataset for Ship Detection and Instance Segmentation. IEEE Access 2020, 8, 120234–120254.

| Filter | Image Size | PSNR (dB) | SSIM | EPI | Time (s) |
|---|---|---|---|---|---|
| Mean filter [32] | 512 × 512 | 30.445 | 0.731 | 0.443 | 0.0037 |
| | 800 × 800 | 21.469 | 0.571 | 0.340 | 0.0073 |
| Median filter [33] | 512 × 512 | 31.535 | 0.792 | 0.487 | 0.0078 |
| | 800 × 800 | 22.499 | 0.637 | 0.372 | 0.0110 |
| Bilateral filtering [34] | 512 × 512 | 32.288 | 0.843 | 0.495 | 0.0846 |
| | 800 × 800 | 24.138 | 0.755 | 0.413 | 0.1357 |
| Lee filter [35] | 512 × 512 | 32.931 | 0.825 | 0.512 | 5.5143 |
| | 800 × 800 | 22.640 | 0.667 | 0.389 | 13.0928 |
| Frost filter [36] | 512 × 512 | 31.563 | 0.819 | 0.483 | 9.1715 |
| | 800 × 800 | 22.329 | 0.648 | 0.355 | 20.4392 |
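The PSNR column in the filter comparison above follows the standard definition PSNR = 10·log10(peak² / MSE). The sketch below is a minimal pure-Python illustration of that formula, assuming 8-bit images (peak = 255) and a clean reference image; the text does not state which reference or peak value was used for the reported figures, and the helper name `psnr` is illustrative.

```python
import math
from typing import List

Image = List[List[float]]


def psnr(reference: Image, test: Image, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio (dB) between two equally sized grayscale images."""
    if len(reference) != len(test) or any(
        len(r) != len(t) for r, t in zip(reference, test)
    ):
        raise ValueError("images must have the same shape")
    # Mean squared error accumulated over every pixel.
    sq_err, n = 0.0, 0
    for row_ref, row_test in zip(reference, test):
        for a, b in zip(row_ref, row_test):
            sq_err += (a - b) ** 2
            n += 1
    if n == 0:
        raise ValueError("images must not be empty")
    mse = sq_err / n
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(peak**2 / mse)


if __name__ == "__main__":
    clean = [[100.0, 100.0], [100.0, 100.0]]
    noisy = [[110.0, 90.0], [100.0, 100.0]]  # MSE = (10^2 + 10^2) / 4 = 50
    print(f"PSNR = {psnr(clean, noisy):.2f} dB")
```

Because of the logarithm, every 10 dB gain in PSNR corresponds to a tenfold reduction in MSE.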

| Filter Size | Image Size | PSNR (dB) | SSIM | EPI | Time (s) |
|---|---|---|---|---|---|
| | 512 × 512 | 33.318 | 0.881 | 0.626 | 0.0024 |
| | 800 × 800 | 23.413 | 0.773 | 0.521 | 0.0058 |
| | 512 × 512 | 31.535 | 0.792 | 0.487 | 0.0078 |
| | 800 × 800 | 22.499 | 0.637 | 0.372 | 0.0110 |
| | 512 × 512 | 28.140 | 0.615 | 0.296 | 0.0144 |
| | 800 × 800 | 19.812 | 0.336 | 0.241 | 0.0320 |
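The table above varies the filter window size. As a hedged illustration of what that parameter controls, the sketch below applies a k × k sliding-window filter, using the median by default and the mean as an alternative reducer, in the spirit of the median [33] and mean [32] filters compared earlier. The function name `window_filter` and the border-replication policy are assumptions, not the paper's implementation.

```python
from statistics import fmean, median
from typing import Callable, List

Image = List[List[float]]


def window_filter(
    image: Image, k: int = 3, reducer: Callable[[List[float]], float] = median
) -> Image:
    """Apply `reducer` over a k x k sliding window, replicating border pixels."""
    if k < 1 or k % 2 == 0:
        raise ValueError("k must be a positive odd integer")
    h, w = len(image), len(image[0])
    r = k // 2
    out: Image = []
    for y in range(h):
        row = []
        for x in range(w):
            # Collect the k*k neighbourhood, clamping indices at the image border.
            window = [
                image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            ]
            row.append(reducer(window))
        out.append(row)
    return out


if __name__ == "__main__":
    # 5 x 5 flat background with one bright outlier in the centre.
    img = [[10.0] * 5 for _ in range(5)]
    img[2][2] = 255.0
    print("median:", window_filter(img, 3, median)[2][2])  # outlier removed
    print("mean:  ", round(window_filter(img, 3, fmean)[2][2], 2))  # outlier smeared
```

The median discards an isolated bright pixel entirely, while the mean spreads it across its neighbours. A larger k smooths more aggressively and the work per pixel grows with k², which is consistent with the trend in falling PSNR and EPI and rising time seen in the table.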

| Anchor Box | Detection Model | P (%) | R (%) | F1 (%) | AP (%) | FPS |
|---|---|---|---|---|---|---|
| With Anchor Boxes | Faster R-CNN [44] | 85.11 | 93.13 | 87.27 | 90.77 | 8.65 |
| | RetinaNet [45] | 97.05 | 94.81 | 95.92 | 94.85 | 11.53 |
| | SSD 512 [46] | 92.30 | 94.53 | 93.40 | 95.28 | 12.28 |
| Without Anchor Boxes | FCOS [48] | 94.38 | 94.90 | 94.63 | 93.95 | 13.70 |
| | CenterNet [47] | 95.59 | 92.53 | 94.04 | 94.32 | 14.12 |
| | YOLOX-Tiny [31] | 96.51 | 95.67 | 96.09 | 96.44 | 30.31 |
| | ISEM+YOLOX-Tiny | 98.25 | 97.29 | 98.38 | 97.96 | 35.31 |
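The F1 column is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The short sketch below, with a hypothetical helper `f1_score`, recomputes it from the P and R columns for two rows of the table above.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; inputs and output share units (e.g. %)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # P and R values copied from the table above.
    rows = {
        "RetinaNet [45]": (97.05, 94.81),
        "YOLOX-Tiny [31]": (96.51, 95.67),
    }
    for name, (p, r) in rows.items():
        print(f"{name}: F1 = {f1_score(p, r):.2f}%")
```

Because a harmonic mean always lies between the smaller and the larger of its two inputs, the same helper gives a quick consistency check of every F1 entry against its P and R values.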

| Anchor Box | Detection Model | P (%) | R (%) | F1 (%) | AP (%) | FPS |
|---|---|---|---|---|---|---|
| With Anchor Boxes | Faster R-CNN [44] | 73.81 | 72.46 | 73.13 | 72.95 | 5.54 |
| | RetinaNet [45] | 81.77 | 73.26 | 77.28 | 76.15 | 7.38 |
| | SSD 512 [46] | 76.85 | 77.59 | 77.22 | 78.63 | 7.86 |
| Without Anchor Boxes | FCOS [48] | 79.52 | 77.85 | 78.68 | 77.14 | 8.77 |
| | CenterNet [47] | 80.54 | 75.93 | 78.17 | 77.90 | 9.04 |
| | YOLOX-Tiny [31] | 81.32 | 78.51 | 79.89 | 79.21 | 19.40 |
| | ISEM+YOLOX-Tiny | 83.25 | 80.77 | 82.89 | 81.38 | 25.63 |
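The AP column summarizes each detector's precision–recall curve. The text does not specify the matching IoU threshold or the interpolation scheme, so the sketch below assumes detections have already been matched to ground truth (each flagged as a true or false positive) and uses all-point interpolation in the style of PASCAL VOC. `average_precision` is a hypothetical helper name, not the evaluation code used for the table.

```python
from typing import List


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP from scored detections already matched to ground truth."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    # Rank detections by confidence, highest first.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recall: List[float] = []
    precision: List[float] = []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recall.append(tp / num_gt)
        precision.append(tp / (tp + fp))
    # Pad the curve, then make precision monotonically non-increasing (right to left).
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the interpolated step curve: sum of recall increments x precision.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


if __name__ == "__main__":
    # Three detections ranked TP, FP, TP against two ground-truth ships.
    print(round(average_precision([0.9, 0.8, 0.7], [True, False, True], 2), 4))
```

In the example the first true positive reaches recall 0.5 at precision 1, and the second reaches recall 1.0 at precision 2/3, so AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.833.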

| Metrics | Original 3 Models | Light-Weight Architecture | Improvements |
|---|---|---|---|
| Inference time | About 375 milliseconds/frame | About 150 milliseconds/frame | Reduced by about 60% |
| Average inference speed | About 2.67 frames/second | About 6.67 frames/second | Increased by about 150% |
| High-resolution image processing | Long, insufficient real-time performance | Real-time processing time greatly reduced | Significantly improved |
| Image processing | Insufficient real-time performance | Significantly shortened inference time | Significant improvement in capability |
| Power | About 10 watts | About 7 watts | Reduced by about 30% |
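The rows of the deployment table are linked by simple arithmetic: throughput in frames per second is 1000 divided by the per-frame latency in milliseconds, and each improvement is a relative change. The sketch below, using hypothetical helper names, reproduces those figures from the table's own latency and power values.

```python
def fps_from_latency(ms_per_frame: float) -> float:
    """Frames per second for a given per-frame latency in milliseconds."""
    return 1000.0 / ms_per_frame


def percent_change(before: float, after: float) -> float:
    """Signed relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


if __name__ == "__main__":
    base_ms, light_ms = 375.0, 150.0  # inference time per frame (table values)
    base_w, light_w = 10.0, 7.0  # power draw in watts (table values)
    base_fps, light_fps = fps_from_latency(base_ms), fps_from_latency(light_ms)
    print(f"FPS: {base_fps:.2f} -> {light_fps:.2f} ({percent_change(base_fps, light_fps):+.0f}%)")
    print(f"Latency change: {percent_change(base_ms, light_ms):+.0f}%")
    print(f"Power change: {percent_change(base_w, light_w):+.0f}%")
```

A 60% cut in latency corresponds to a 375/150 = 2.5× throughput gain, i.e. +150%, because relative changes in a quantity and in its reciprocal are not symmetric.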

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, H.; Yan, K.; Li, C.; Wang, L.; Li, T. Light-Weight Synthetic Aperture Radar Image Saliency Enhancement Method Based on Sea–Land Segmentation Preference. Remote Sens. 2025, 17, 795. https://doi.org/10.3390/rs17050795
