Geometric Alignment Improves Wheat NDVI Calculation from Ground-Based Multispectral Images

Abstract

1. Introduction

2. Materials and Methods

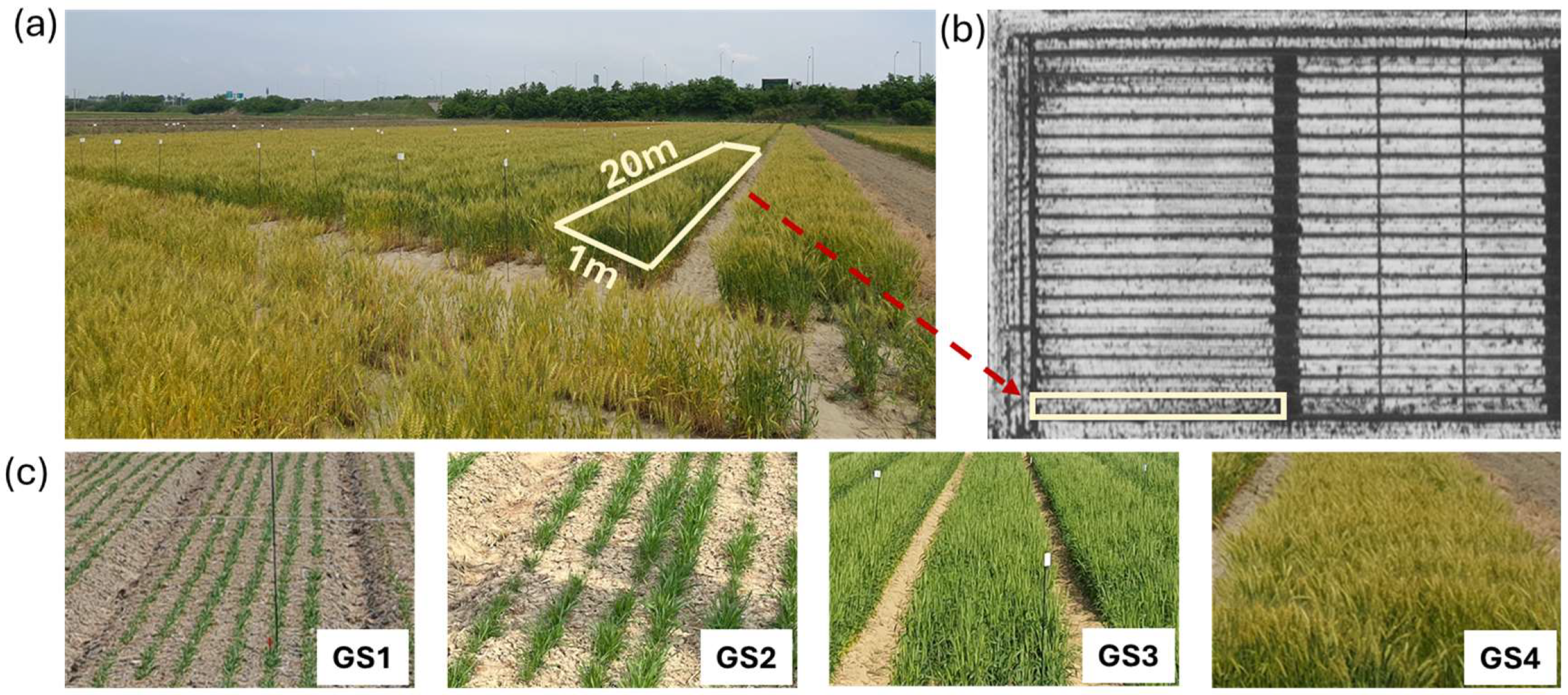

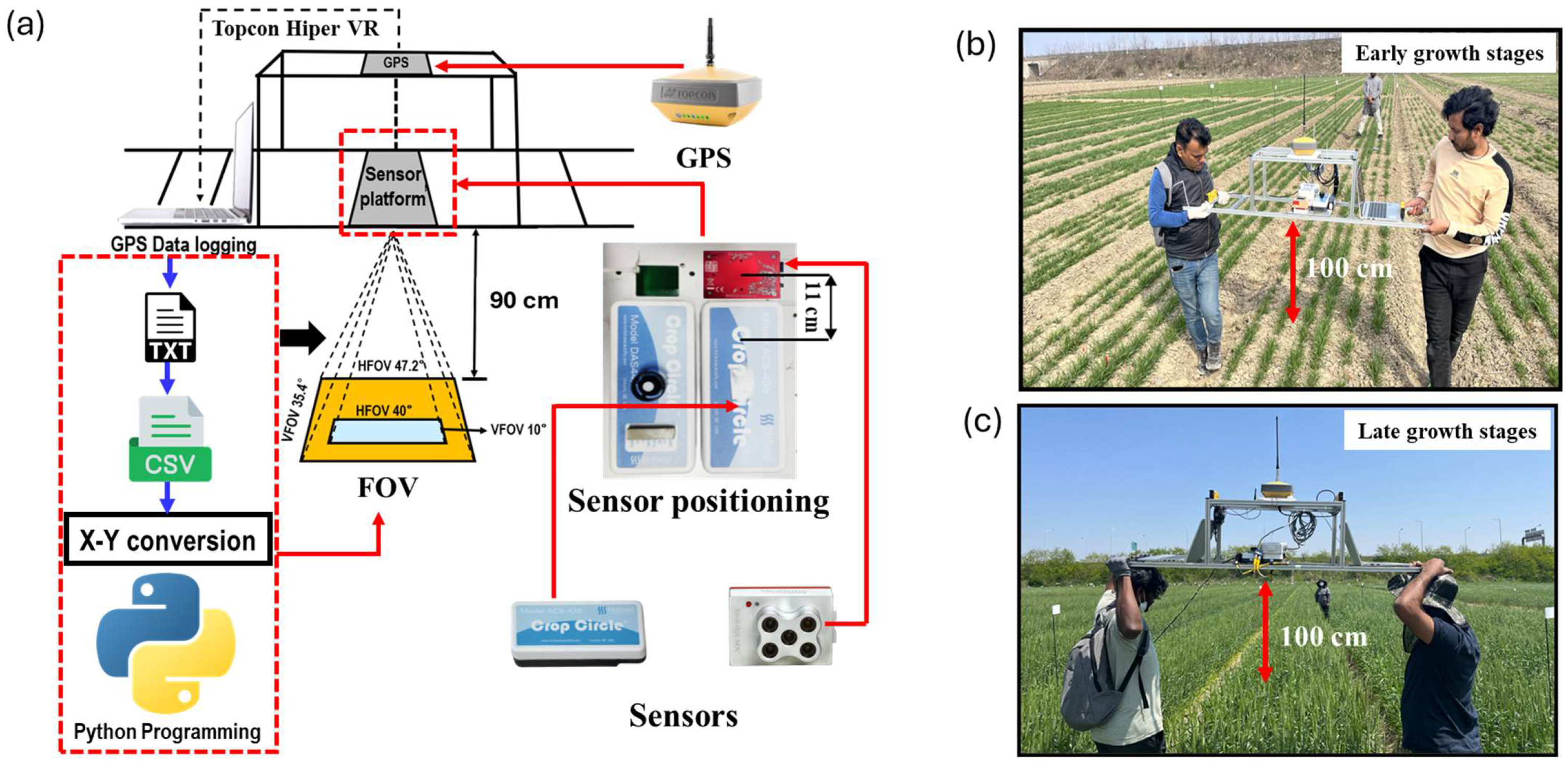

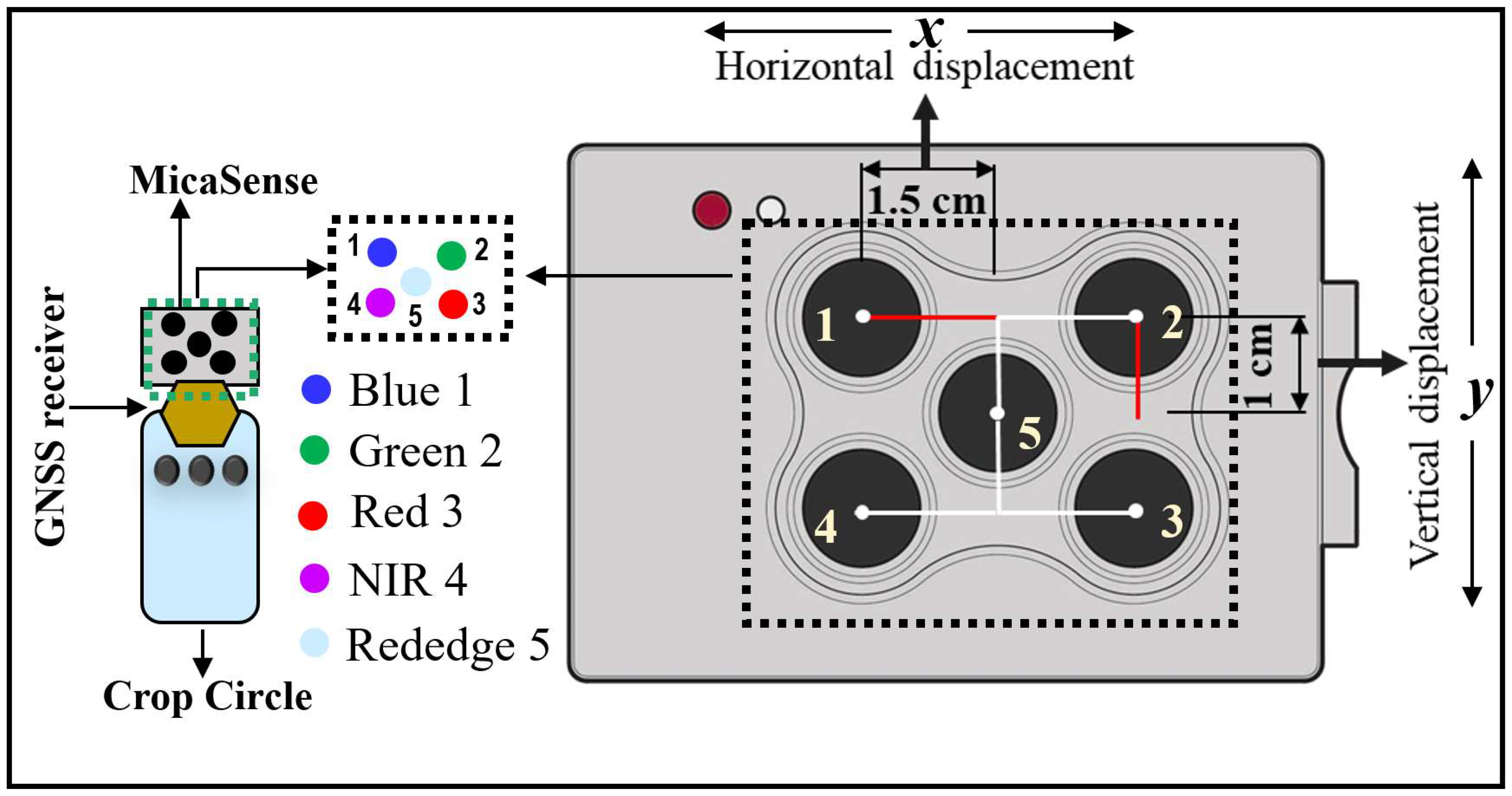

2.1. Study Area, Sensors, and Data Collection

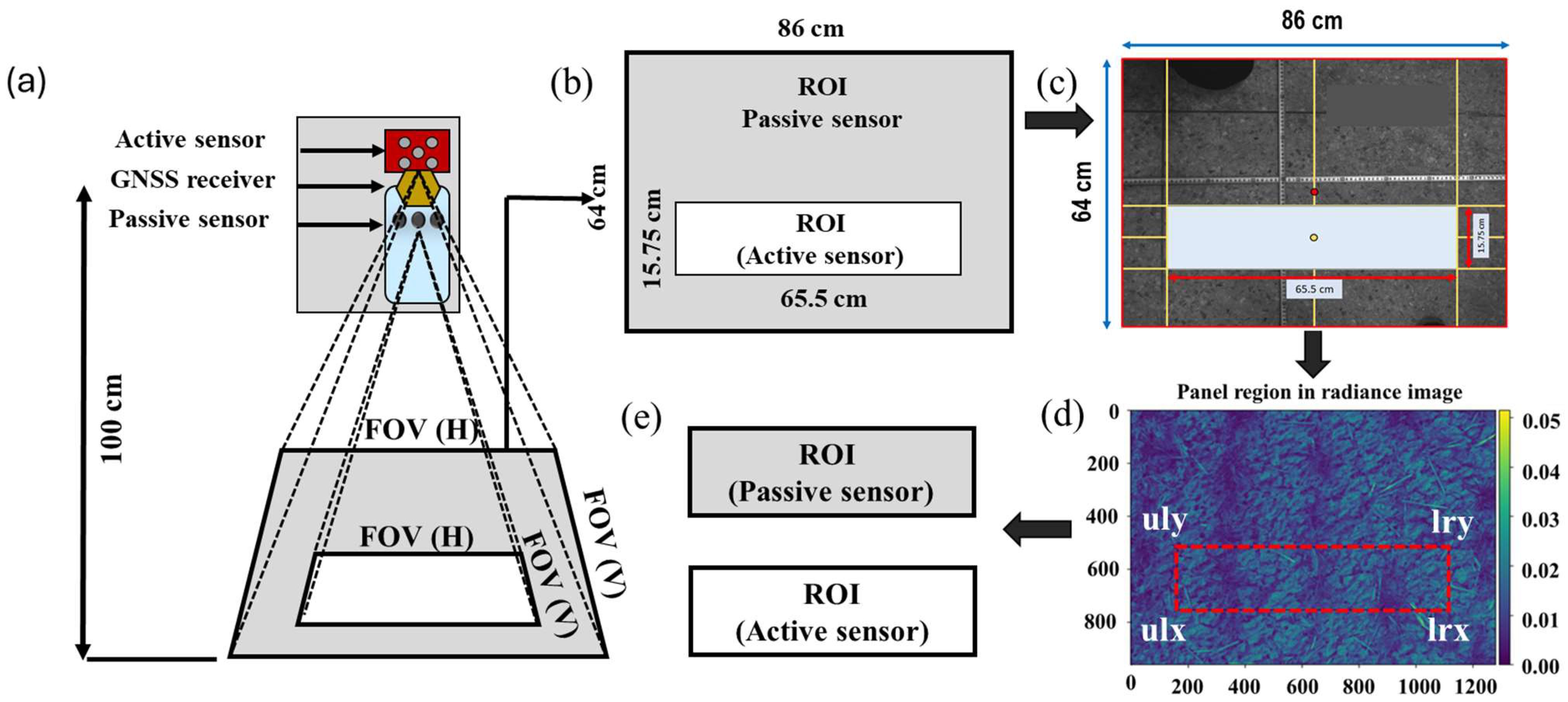

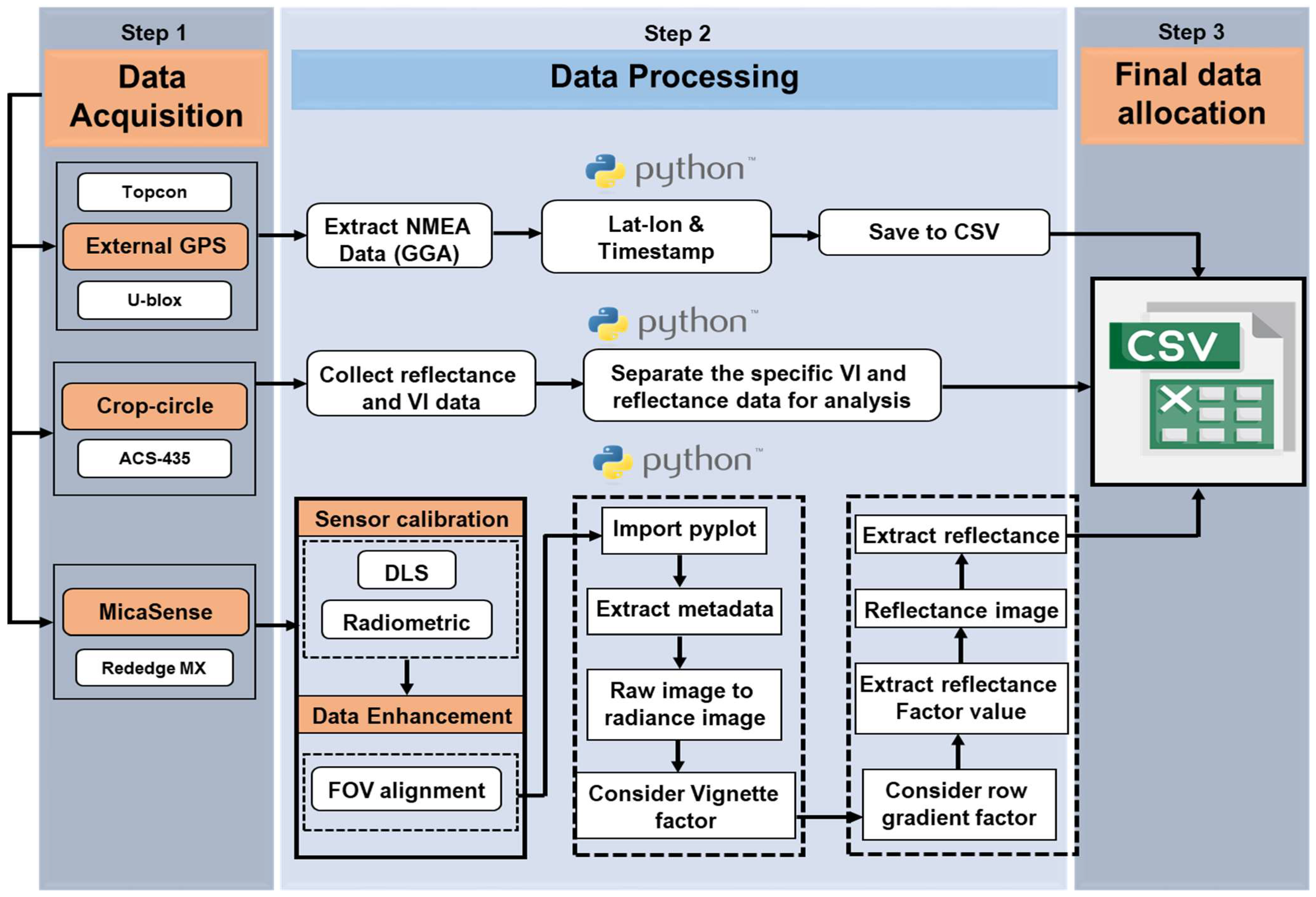

2.2. Multispectral Data Processing Procedures

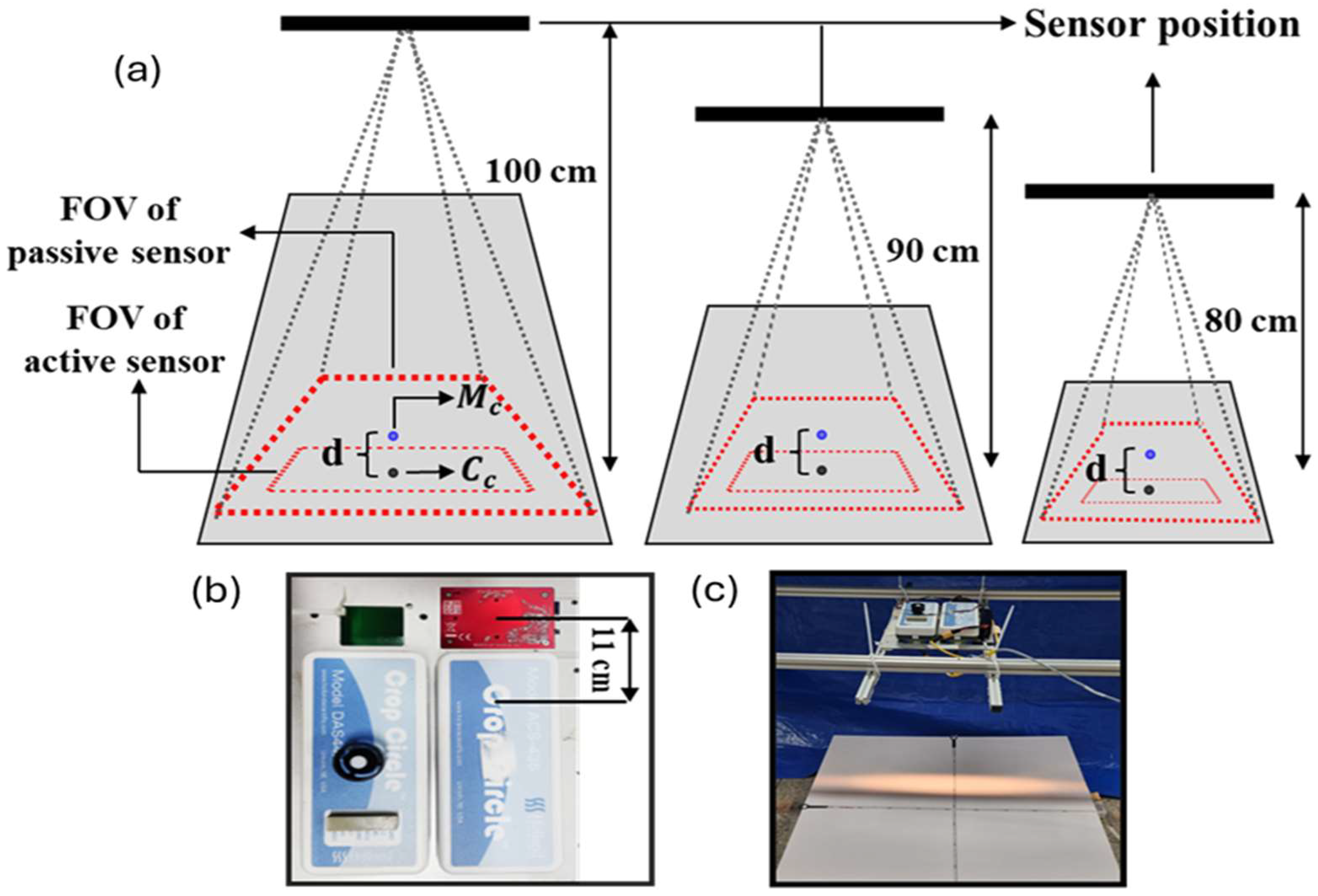

2.3. FOV Alignment Procedure

3. Results

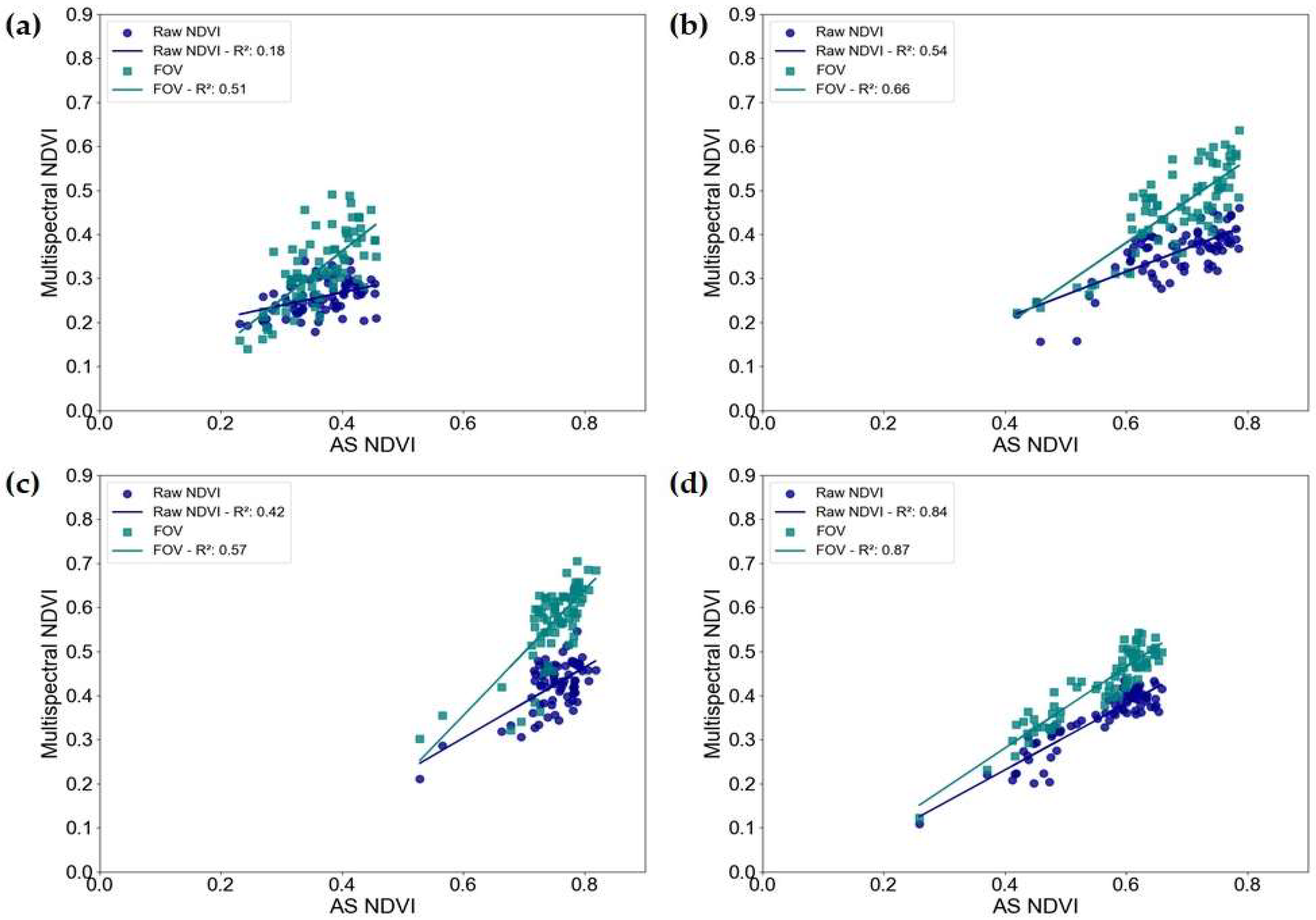

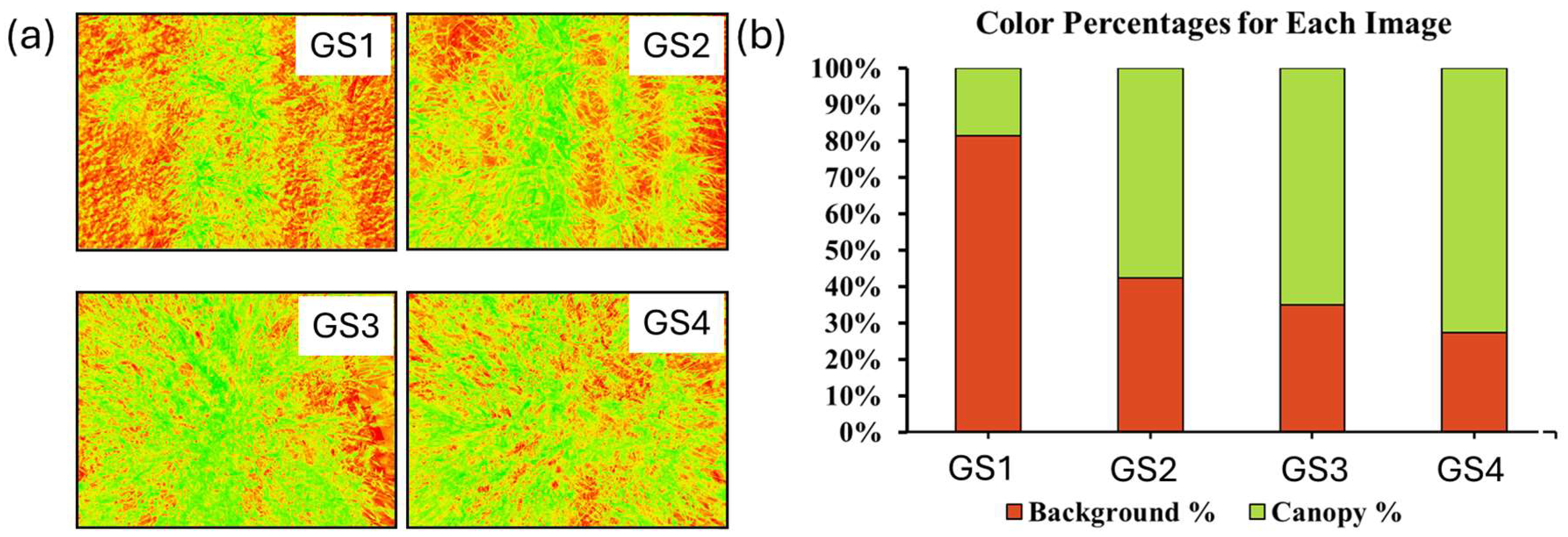

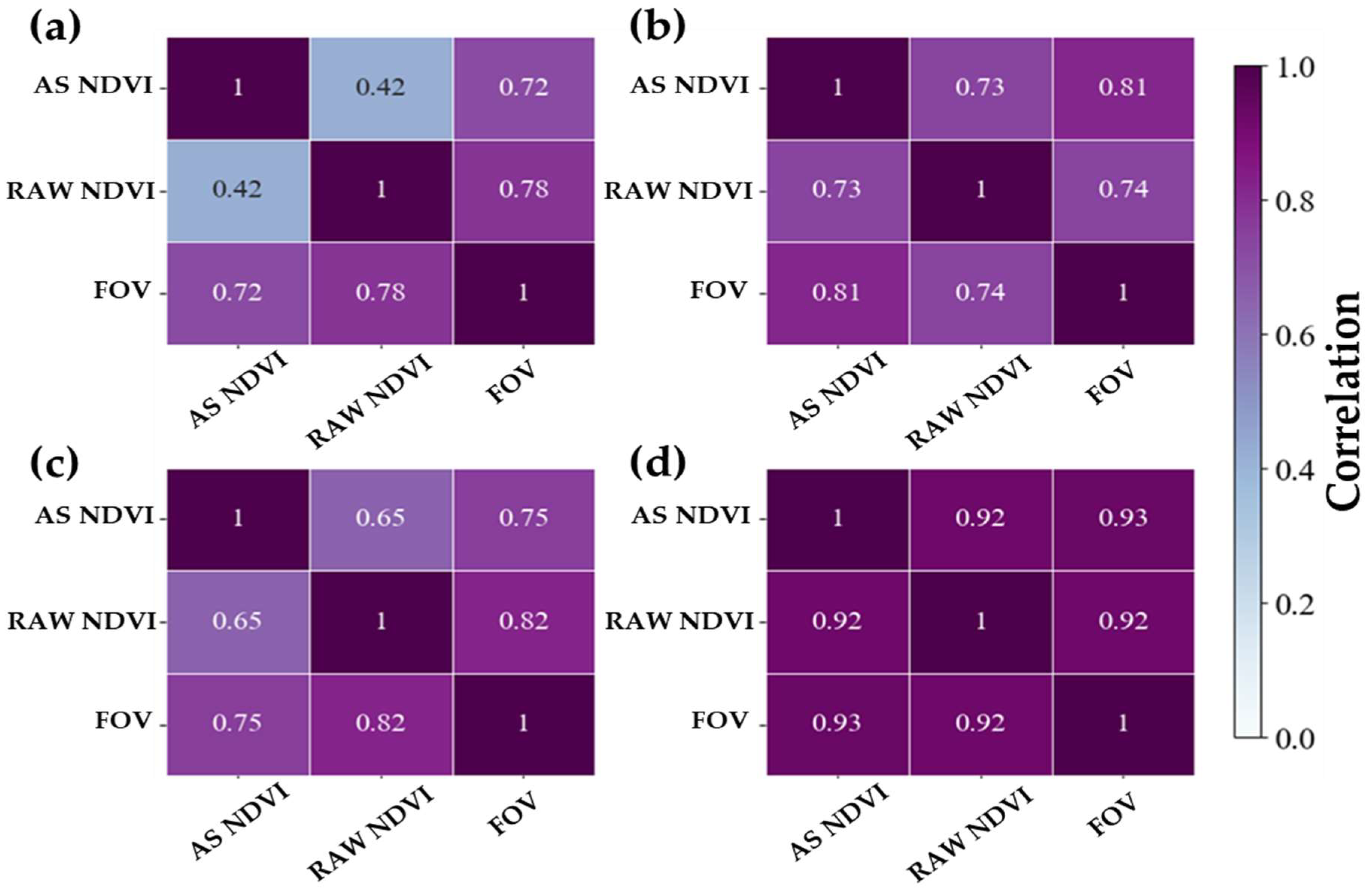

3.1. Data Characterization

3.2. Measurement Accuracy

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Item | Passive Multispectral Sensor | Active Reflectometry Sensor |
|---|---|---|
| Model | MicaSense RedEdge MX | Crop Circle ACS-435 |
| Sensor type | Passive sensor | Active sensor |
| Dimensions (m) | 0.121 × 0.066 × 0.046 | 0.038 × 0.178 × 0.076 |
| Weight | 150 g | 385 g |
| Sensing interval | 1 s | 1–10 s |
| Center wavelength (nm) | Blue (475), Green (560), Red (668), NIR (840), RedEdge (717) | Red (670), RedEdge (730), NIR (780) |
| Resolution | 1280 × 960 pixels | |
| Focal length | 5.4 mm | |
| FOV | 47.2° (HFOV), 35.4° (VFOV) | 40–45° (HFOV), 6–10° (VFOV) |
| Ground sampling distance @ 60 m | ~4 cm/pixel per band | |
| Ground sampling distance @ 3 m | ~2.5 mm/pixel per band | |
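The ground sampling distance (GSD) of an imaging sensor follows from its field of view, its pixel count along the same axis, and its height above the target. The sketch below is a minimal illustration, assuming an ideal nadir-pointing pinhole camera with no lens distortion; the function name `ground_sampling_distance` is hypothetical, and the inputs are the RedEdge MX HFOV and horizontal resolution listed above.

```python
import math


def ground_sampling_distance(height_m: float, fov_deg: float, n_pixels: int) -> float:
    """Ground distance covered by one pixel (metres) for a nadir-looking pinhole camera."""
    # The image swath along one axis is 2 * h * tan(FOV / 2) ...
    swath_m = 2.0 * height_m * math.tan(math.radians(fov_deg) / 2.0)
    # ... and each of the n_pixels along that axis covers an equal share of it.
    return swath_m / n_pixels


# RedEdge MX values from the table: 47.2 deg horizontal FOV over 1280 pixels.
for height in (60.0, 3.0):
    gsd_m = ground_sampling_distance(height, 47.2, 1280)
    print(f"{height:4.0f} m above target -> {gsd_m * 1000:.1f} mm/pixel")
```

Under these assumptions the formula gives about 41 mm (4.1 cm) per pixel at 60 m and about 2.0 mm per pixel at 3 m; GSD scales linearly with height, and real values also depend on lens distortion and the exact mounting height.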

| Growth Stage | Data Collection Date | Days After Sowing | Data Collection Start Time | Data Collection End Time |
|---|---|---|---|---|
| GS1 | 20 March 2023 | 10 | 14:38 | 15:01 |
| GS2 | 14 April 2023 | 34 | 14:46 | 15:10 |
| GS3 | 10 May 2023 | 70 | 15:01 | 15:26 |
| GS4 | 24 May 2023 | 84 | 14:54 | 15:18 |

| Band Images | uly | ulx | lry | lrx |
|---|---|---|---|---|
| 1 | 522 | 104 | 522 | 1110 |
| 2 | 522 | 150 | 522 | 1156 |
| 3 | 552 | 150 | 552 | 1156 |
| 4 | 552 | 104 | 552 | 1110 |
| 5 | 537 | 127 | 537 | 1133 |
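The per-band coordinates above describe crop windows that shift each band image so that all five cover the same ground area; in every row the column offsets differ (ulx from 104 to 150) while the window width stays at 1006 pixels. The sketch below shows crop-based FOV alignment followed by NDVI = (NIR − Red) / (NIR + Red). It assumes each row is a pixel box from (uly, ulx) to (lry, lrx) with exclusive lower-right bounds; that reading, the helper names `crop_align` and `ndvi`, and the small synthetic frames are all illustrative assumptions rather than the authors' implementation.

```python
import numpy as np


def crop_align(bands, boxes):
    """Crop each band to its own window so all windows show the same scene.

    bands: list of 2-D arrays, one per spectral band.
    boxes: list of (uly, ulx, lry, lrx) pixel boxes, one per band (assumed
           convention); lower-right bounds are exclusive, as in NumPy slicing.
    """
    sizes = {(lry - uly, lrx - ulx) for uly, ulx, lry, lrx in boxes}
    if len(sizes) != 1:
        raise ValueError(f"crop windows differ in size: {sizes}")
    return np.stack([b[uly:lry, ulx:lrx] for b, (uly, ulx, lry, lrx) in zip(bands, boxes)])


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); pixels where NIR + Red == 0 become NaN."""
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    denom = nir + red
    out = np.full(denom.shape, np.nan)
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out


# Synthetic demo (hypothetical values, not study data): the same 10x10 canopy
# patch appears at different offsets in a red frame and a NIR frame.
rng = np.random.default_rng(0)
red_patch = rng.uniform(0.03, 0.10, size=(10, 10))
nir_patch = rng.uniform(0.30, 0.50, size=(10, 10))

red_frame = np.zeros((16, 16))
red_frame[1:11, 2:12] = red_patch
nir_frame = np.zeros((16, 16))
nir_frame[4:14, 5:15] = nir_patch

aligned = crop_align([red_frame, nir_frame], [(1, 2, 11, 12), (4, 5, 14, 15)])
ndvi_map = ndvi(nir=aligned[1], red=aligned[0])
print(ndvi_map.shape, round(float(ndvi_map.mean()), 3))
```

Computing NDVI on the uncropped frames would instead pair red and NIR values from different ground locations, which is the misregistration that a fixed crop offset is meant to remove. A fixed crop only compensates a constant shift between lenses; it does not correct rotation or scale differences.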

| Growth Stage | Enhancement Technique | Sample Number | Average NDVI | Standard Deviation (SD) | Equation | R² | RMSE |
|---|---|---|---|---|---|---|---|
| GS1 | Raw data | 77 | 0.26 | 0.04 | y = 0.30x + 0.15 | 0.18 | 0.03 |
| GS1 | FOV | 77 | 0.32 | 0.08 | y = 1.09x − 0.07 | 0.51 | 0.06 |
| GS2 | Raw data | 77 | 0.36 | 0.06 | y = 0.53x − 0.01 | 0.54 | 0.04 |
| GS2 | FOV | 77 | 0.46 | 0.09 | y = 0.94x − 0.19 | 0.66 | 0.05 |
| GS3 | Raw data | 77 | 0.43 | 0.07 | y = 0.80x − 0.18 | 0.42 | 0.04 |
| GS3 | FOV | 77 | 0.57 | 0.11 | y = 1.42x − 0.50 | 0.57 | 0.06 |
| GS4 | Raw data | 77 | 0.35 | 0.08 | y = 0.75x − 0.07 | 0.84 | 0.03 |
| GS4 | FOV | 77 | 0.43 | 0.08 | y = 0.92x − 0.09 | 0.87 | 0.03 |
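The Equation, R² and RMSE columns summarize a linear fit between two sets of paired NDVI values. The sketch below shows how such statistics are commonly computed, assuming ordinary least squares and assuming x is the reference (Crop Circle) NDVI and y the image-derived NDVI; the table does not state which variable is which, and `fit_metrics` is a hypothetical helper. RMSE is taken here as the root-mean-square of the residuals about the fitted line, which is one of several definitions in use.

```python
import numpy as np


def fit_metrics(x, y):
    """Fit y = a*x + b by ordinary least squares and report R^2 and RMSE.

    R^2 is the coefficient of determination of the fitted line; RMSE is the
    root-mean-square of the residuals y - (a*x + b).
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    a, b = np.polyfit(x, y, deg=1)  # np.polyfit returns slope first, then intercept
    residuals = y - (a * x + b)
    r2 = 1.0 - np.sum(residuals ** 2) / np.sum((y - y.mean()) ** 2)
    rmse = float(np.sqrt(np.mean(residuals ** 2)))
    return float(a), float(b), float(r2), rmse


# Synthetic demo (not study data): 77 paired NDVI readings with small noise.
rng = np.random.default_rng(42)
x_ref = rng.uniform(0.2, 0.7, size=77)                      # e.g. reference-sensor NDVI
y_img = 0.9 * x_ref + 0.02 + rng.normal(0, 0.03, size=77)   # e.g. image-derived NDVI
a, b, r2, rmse = fit_metrics(x_ref, y_img)
print(f"y = {a:.2f}x {'+' if b >= 0 else '-'} {abs(b):.2f}, R2 = {r2:.2f}, RMSE = {rmse:.2f}")
```

A slope near 1 and an intercept near 0 indicate that the two NDVI sources agree in scale and offset, while R² measures how much of the variation in y the line explains; the two can move independently, so both are worth reporting.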

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Haque, M.A.; Reza, M.N.; Karim, M.R.; Ali, M.R.; Samsuzzaman; Lee, K.-D.; Kang, Y.H.; Chung, S.-O. Geometric Alignment Improves Wheat NDVI Calculation from Ground-Based Multispectral Images. Remote Sens. 2025, 17, 743. https://doi.org/10.3390/rs17050743
