1. Introduction

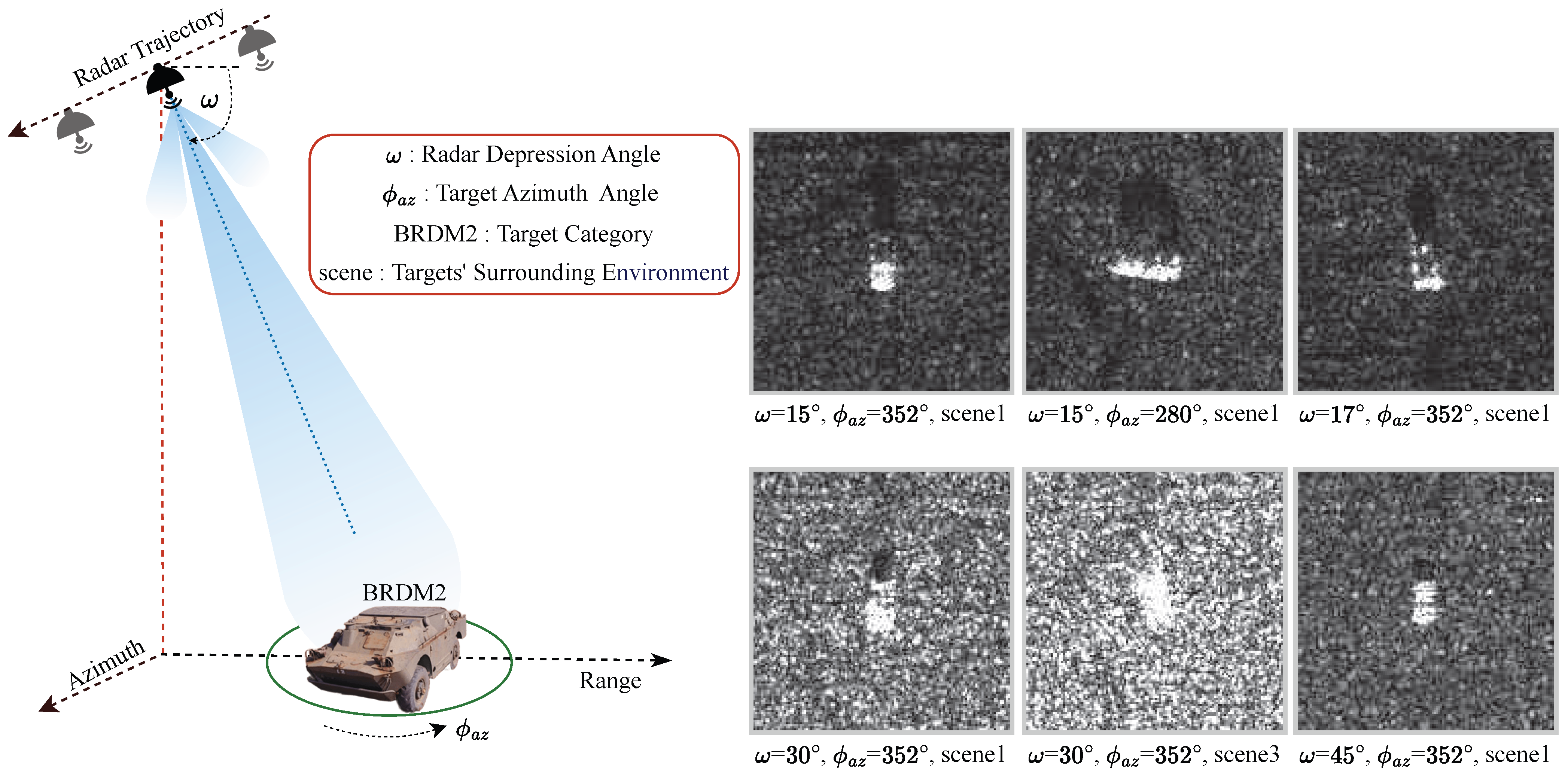

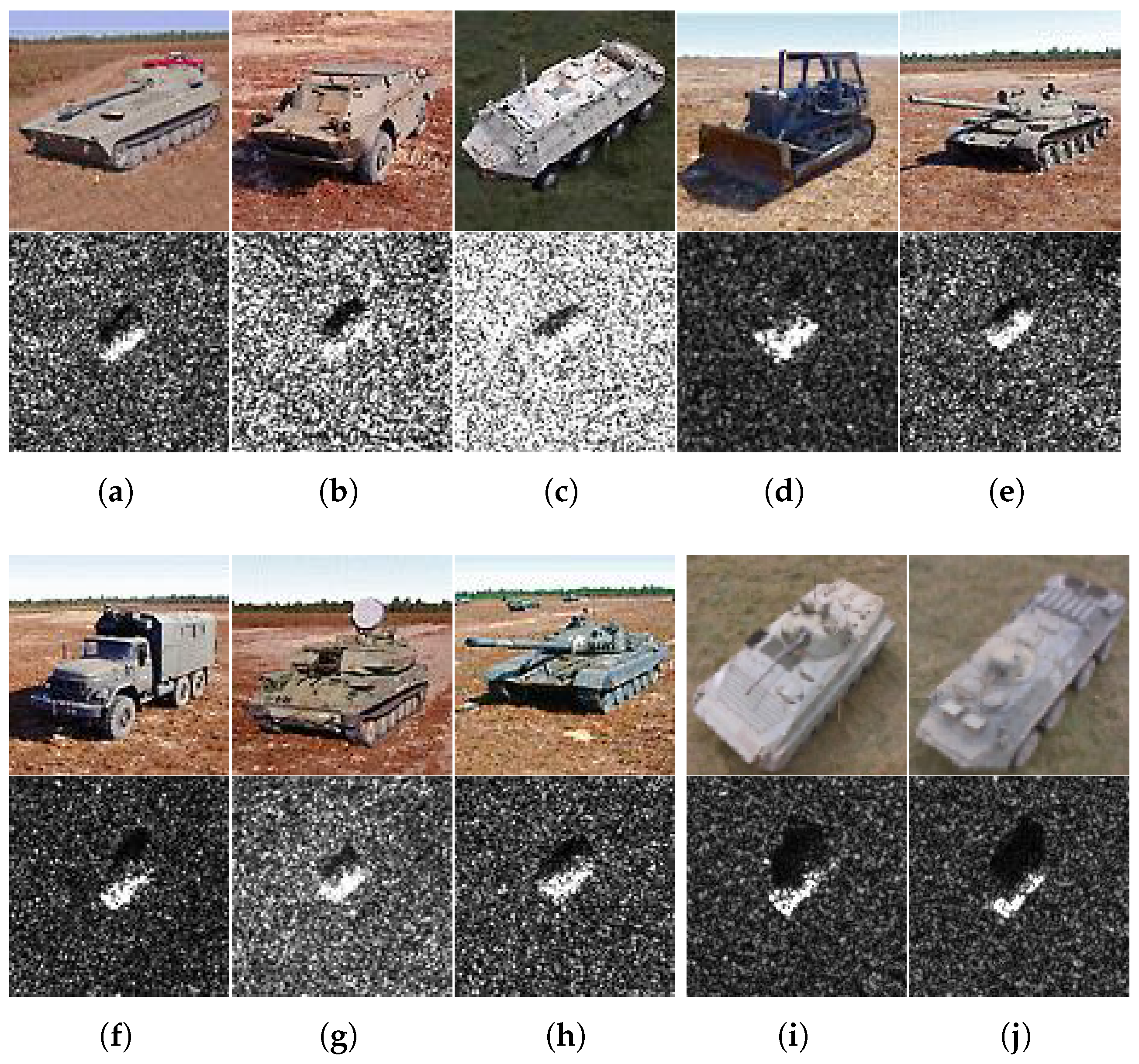

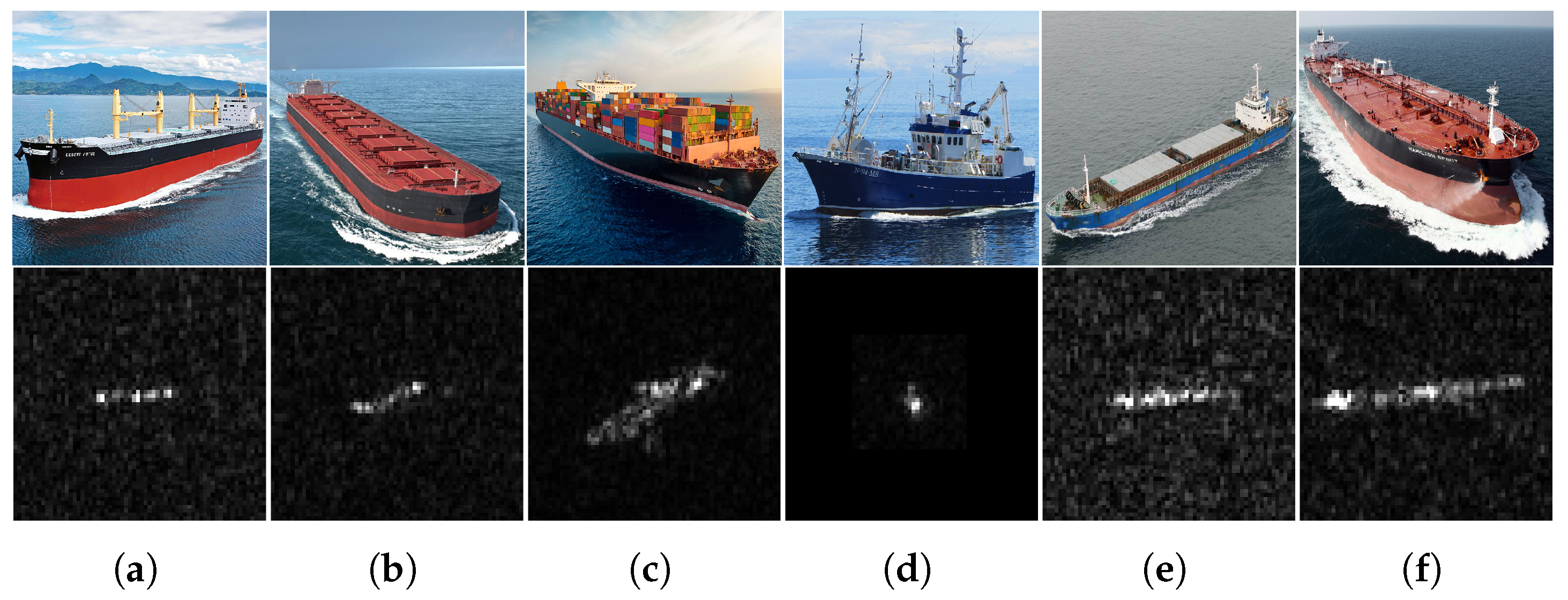

Synthetic aperture radar (SAR), with its all-weather and day-and-night operational advantages, has become a crucial means for remote sensing [1]. However, interpreting SAR images remains relatively challenging [2], because their characteristics are significantly shaped by operating conditions, including radar parameters, target signature and scene (e.g., background clutter). Moreover, the reflectivity of materials within commonly used radar frequency bands is not intuitive to human vision [3,4], as illustrated in Figure 1, which shows SAR images of a BRDM2 armored personnel carrier under different operating conditions.

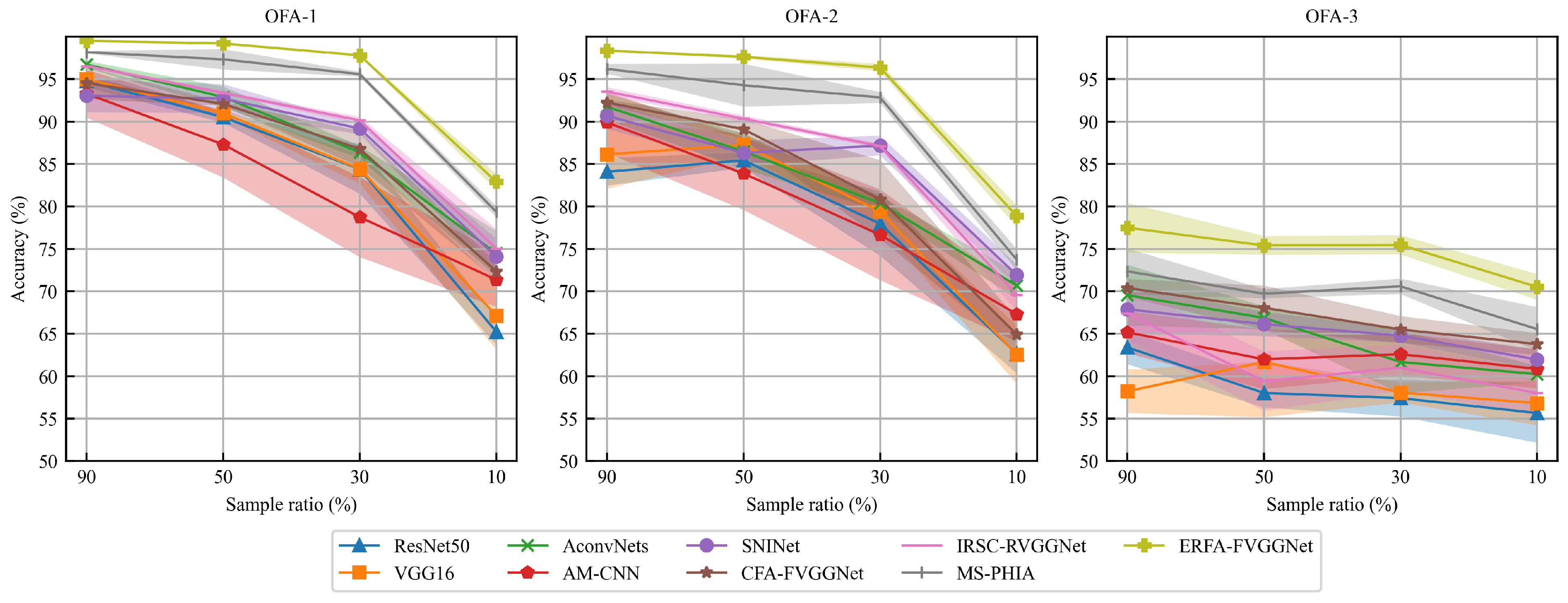

In recent years, advances in deep learning have substantially propelled SAR target recognition, achieving accuracy rates exceeding 95% under standard operating conditions (SOCs), in which the training and test sets share homogeneous conditions. Numerous convolutional neural networks (CNNs) originally designed for optical image recognition, such as VGG [5] and ResNet [6], have been successfully applied to SAR automatic target recognition (ATR). However, these networks have large parameter counts suited to massive optical training sets, so directly applying them to SAR ATR with limited samples often induces overfitting. Chen et al. [7] proposed all-convolutional networks (A-ConvNets), which replace fully connected layers with convolutional layers to reduce parameters and demonstrate superior generalization in SAR ATR. The attention mechanism CNN (AM-CNN) [8] inserts a lightweight convolutional block attention module (CBAM) after each convolutional layer of A-ConvNets for SAR ATR. Transfer learning has also been leveraged to improve generalization under limited samples. Huang et al. [9,10] experimentally demonstrated that shallow features in transfer learning networks exhibit strong generality, whereas deep features are highly task-specific because of fundamental disparities in imaging mechanisms between optical and SAR modalities [11]. Building on VGG16 pretrained on ImageNet [12], Zhang et al. [13,14] redesigned the deep classifiers while retaining the shallow weights, proposing the reduced VGG network (RVGGNet) and the modified VGG network (MVGGNet), both of which achieve high recognition performance in SAR ATR. However, the operating condition space is vast, and when deep learning algorithms overfit specific operating conditions, the resulting models suffer from poor robustness and interpretability [15,16]. For instance, deep learning models may rely mainly on background clutter for classification [17,18]. Our previous experiments in [19] showed that A-ConvNets, AM-CNN, and MVGGNet all rely on background clutter as their primary decision-making basis, potentially compromising model robustness.

To enhance robustness, the speckle-noise-invariant network (SNINet) [20] employs a regularized contrastive loss to align SAR images before and after despeckling, mitigating speckle noise effects. The contrastive feature alignment (CFA) method [21] employs a channel-weighted mean square error (CWMSE) loss to align deep features before and after background clutter perturbation, thereby reducing clutter dependency and enhancing robustness at the cost of reduced recognition performance. Although current deep learning SAR ATR methods perform well under SOCs, their efficacy deteriorates under extended operating conditions (EOCs), in which substantial disparities exist between the training and test sets. To achieve high-performance target recognition, researchers have exploited a core characteristic of SAR imagery: its complex-valued data encode electromagnetic scattering mechanisms [22,23,24]. Multi-stream complex-valued networks (MS-CVNets) [25] decompose SAR complex images into real and imaginary components to fully exploit the complex imaging features of SAR, constructing complex-valued convolutional neural networks that extract richer information from SAR images and demonstrate superior performance under EOCs [25].

To further enhance robustness and interpretability, recent studies incorporate electromagnetic features [22], such as scattering centers [24] and attributed scattering centers (ASCs), to improve recognition performance across operating conditions. Zhang et al. [26] integrate electromagnetic scattering features with image features for SAR ATR. Liao et al. [27] design an end-to-end physics-informed interpretable network for scattering center feature extraction and target recognition under EOCs. Furthermore, Zhang et al. [13] enhance recognition capability by concatenating deep features extracted by RVGGNet with independent ASC component patches. Huang et al. [28] integrate ASC components into a physics-inspired hybrid attention (PIHA) module within MS-CVNets, guiding the attention mechanism to focus on physics-aware semantic information in target regions and demonstrating superior recognition performance across diverse operating conditions. However, electromagnetic feature-based recognition methods depend heavily on the quality of reconstruction and extraction. Reconstruction and extraction algorithms fall primarily into two categories: image-domain methods [29,30], which are computationally efficient but yield inaccurate results due to coarse segmentation, and frequency-domain methods [31,32], which achieve higher precision but incur greater computational complexity that limits their practical application.

To address the issues above, we propose an electromagnetic reconstruction feature alignment (ERFA) method to improve model robustness, generalization, and interpretability. ERFA uses an orthogonal matching pursuit with image-domain cropping-optimization (OMP-IC) algorithm to reconstruct target ASC components, a fully convolutional VGG network (FVGGNet) for deep feature extraction, and a contrastive loss inspired by contrastive language-image pretraining (CLIP) [33,34] to align features between SAR images and the reconstructed components. The main contributions of this paper are summarized as follows:

- A novel electromagnetic reconstruction and extraction algorithm, OMP-IC, is proposed, which integrates image-domain priors into the frequency-domain OMP algorithm to balance reconstruction accuracy and computational efficiency.
- A novel feature extraction network, FVGGNet, is proposed, which combines the generic feature extraction capability of transfer learning with the inherent generalization of fully convolutional architectures, yielding enhanced discriminability and generalization.
- A dual-loss mechanism combining contrastive and classification losses is proposed, which enables the ERFA module to suppress background clutter and enhance discriminative features, thus improving the robustness and interpretability of FVGGNet.

The rest of this article is organized as follows: Section 2 presents preliminaries on ASC reconstruction and extraction. The ERFA-FVGGNet architecture is presented in Section 3, followed by comprehensive experiments in Section 4 validating the method's robustness, generalization and interpretability. Finally, Section 5 concludes this article.

3. Proposed Methods

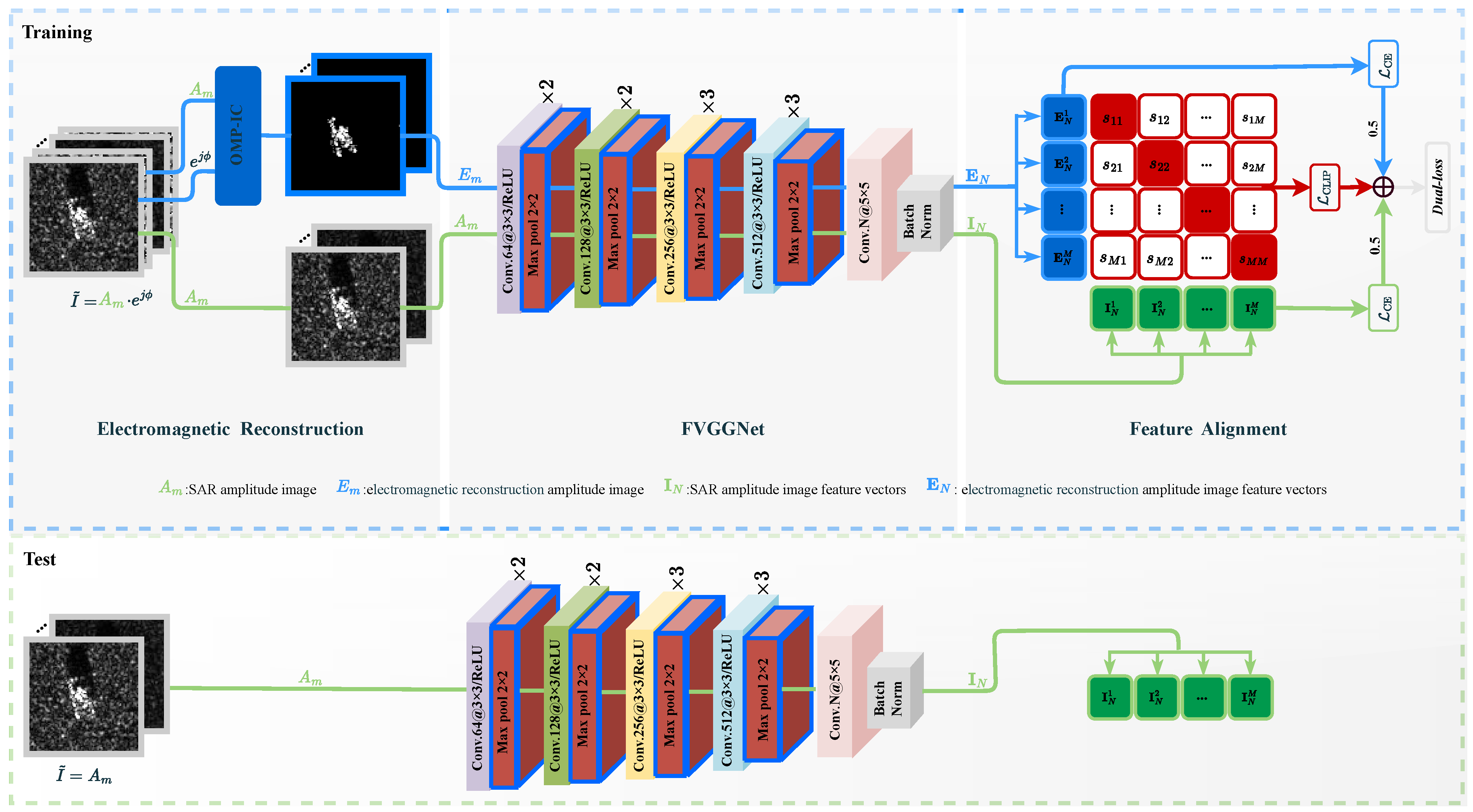

This section introduces the proposed ERFA-FVGGNet for trustworthy SAR target recognition, as shown in Figure 3. The input is a SAR complex image, characterized by its amplitude and phase. Section 3.1 describes the electromagnetic reconstruction module based on OMP-IC, which generates the source-domain data for feature alignment. Section 3.2.1 presents FVGGNet, which extracts deep features from both SAR images and electromagnetic reconstructions. Finally, Section 3.2.2 details how the CLIP contrastive loss aligns deep SAR image features with deep electromagnetic reconstruction features while suppressing background clutter.

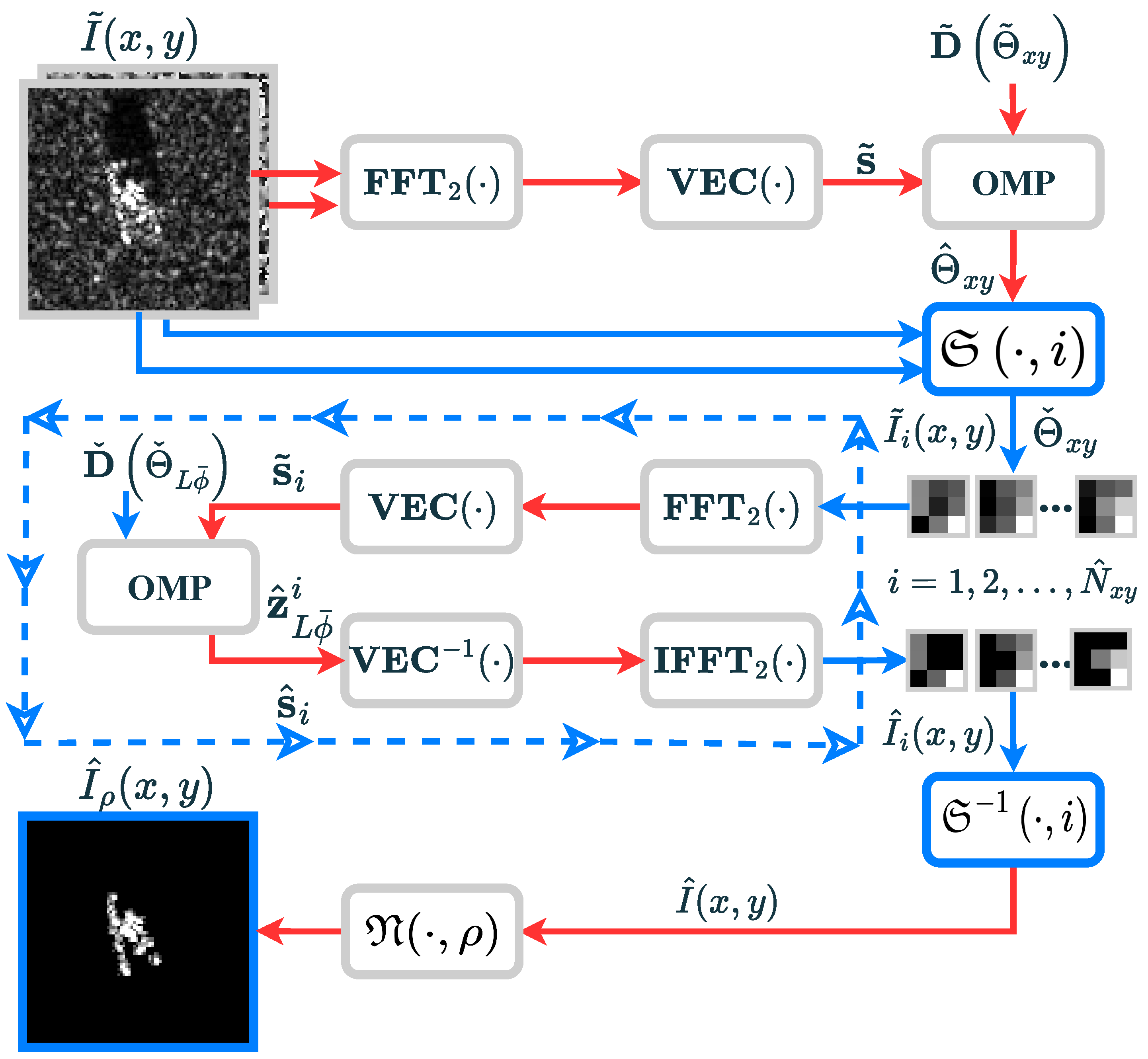

3.1. Electromagnetic Reconstruction

The original OMP reconstruction algorithm introduced in Section 2.2 incurs a heavy computational load and high memory consumption, which results in low computational efficiency. Specifically, in the parameter estimation of step (3), the dictionary is constructed over the full SAR image from both the estimated position parameter set and the candidate set of the parameters being estimated, so its number of atoms grows with the sizes of both sets. The computational speed of the OMP algorithm is primarily determined by atom selection and matrix inversion: each iteration correlates the residual with every atom of this large dictionary and computes the pseudo-inverse of a matrix built from full-image atoms, making each iteration computationally intensive.
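To make this cost structure concrete, the following is a minimal NumPy sketch of generic orthogonal matching pursuit. It assumes a dictionary `D` whose columns are unit-norm atoms and is not the authors' ASC dictionary or implementation; the function name `omp` and its arguments are our own. Each iteration performs the two operations named above: correlating the residual with every atom, whose cost is proportional to the signal length times the number of atoms, and a least-squares solve over the selected atoms, which plays the role of the pseudo-inverse.

```python
import numpy as np


def omp(D, y, n_iter, tol=1e-10):
    """Generic orthogonal matching pursuit (illustrative sketch, not the paper's code).

    D: (m, K) dictionary with unit-norm columns (atoms).
    y: (m,) measurement vector (e.g., vectorized echo samples).
    Returns a length-K coefficient vector with at most n_iter nonzeros.
    """
    m, K = D.shape
    residual = y.astype(complex)
    support = []
    coef = np.zeros(0, dtype=complex)
    for _ in range(n_iter):
        # Atom selection: one inner product per atom, i.e., O(m * K) work per iteration.
        correlations = np.abs(D.conj().T @ residual)
        correlations[support] = 0.0  # never reselect an atom already in the support
        support.append(int(np.argmax(correlations)))
        # Least-squares fit on the selected atoms (pseudo-inverse of an m x |support| matrix).
        sub = D[:, support]
        coef, *_ = np.linalg.lstsq(sub, y, rcond=None)
        residual = y - sub @ coef
        if np.linalg.norm(residual) < tol:
            break
    x = np.zeros(K, dtype=complex)
    x[support] = coef
    return x
```

Both costs scale with the number of atoms and with the length of each atom, which is why shrinking either one, as OMP-IC does by working on small image blocks, directly reduces the per-iteration work.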

To reduce computation, block compressed sensing [37] divides the entire image into multiple independent, uniform blocks and reconstructs all blocks using the same dictionary, effectively reducing storage pressure and computational complexity. However, this disjoint blocking severs inter-block correlations and causes blocking artifacts that degrade reconstruction quality. To reduce computation while suppressing blocking artifacts [38], we propose the OMP-IC algorithm, which preserves overlapping regions between adjacent blocks to strengthen inter-block correlations.
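The difference between disjoint blocking and overlapping, scatterer-centered cropping can be illustrated with a toy example. The sketch below assumes a small real-valued 2-D image containing one spread-out response that straddles a block boundary; the function names and sizes are hypothetical. Disjoint blocks split the response across four blocks, whereas a crop centered on the scatterer keeps it whole.

```python
import numpy as np


def disjoint_blocks(image, block):
    """Split an image into non-overlapping block x block tiles (block compressed sensing style)."""
    h, w = image.shape
    return {(r, c): image[r:r + block, c:c + block]
            for r in range(0, h, block) for c in range(0, w, block)}


def centered_crop(image, center, size):
    """Crop a size x size window centered at integer `center`, zero-padding outside the image."""
    half = size // 2
    padded = np.pad(image, half)
    # Pixel (r, c) of the image sits at (r + half, c + half) in `padded`,
    # so the window centered on it starts at (r, c).
    r, c = center
    return padded[r:r + size, c:c + size]


image = np.zeros((32, 32))
image[13:18, 13:18] = 1.0  # a 5 x 5 response centered at (15, 15), crossing the 16-pixel boundary
blocks = disjoint_blocks(image, 16)
crop = centered_crop(image, (15, 15), 9)
print("largest share in one disjoint block:", max(b.sum() for b in blocks.values()), "of", image.sum())
print("energy inside the centered crop:", crop.sum())
```

When two scatterer centers are closer than the crop size, their crops overlap, which is how centered cropping keeps the correlation between neighboring regions that disjoint tiling discards.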

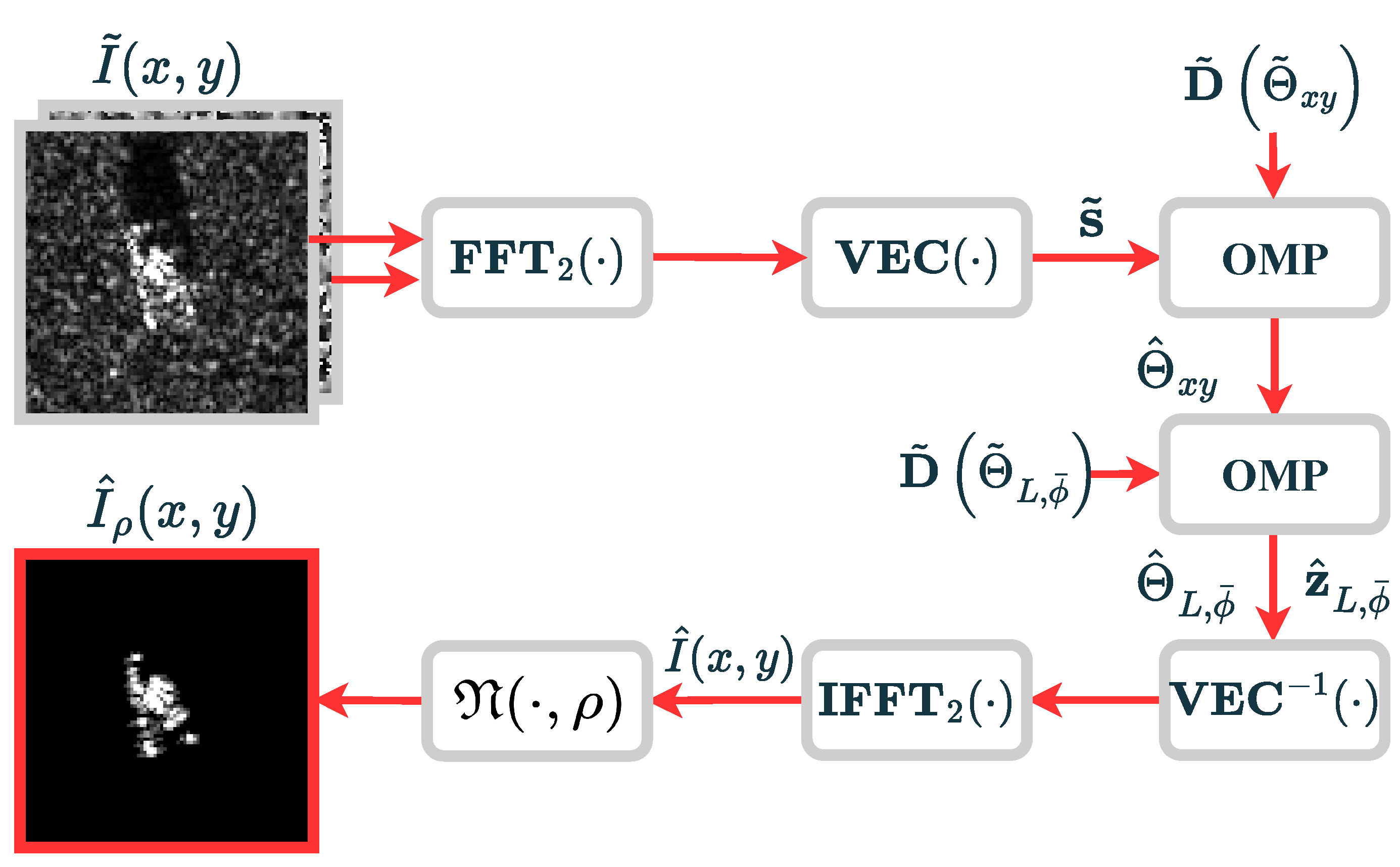

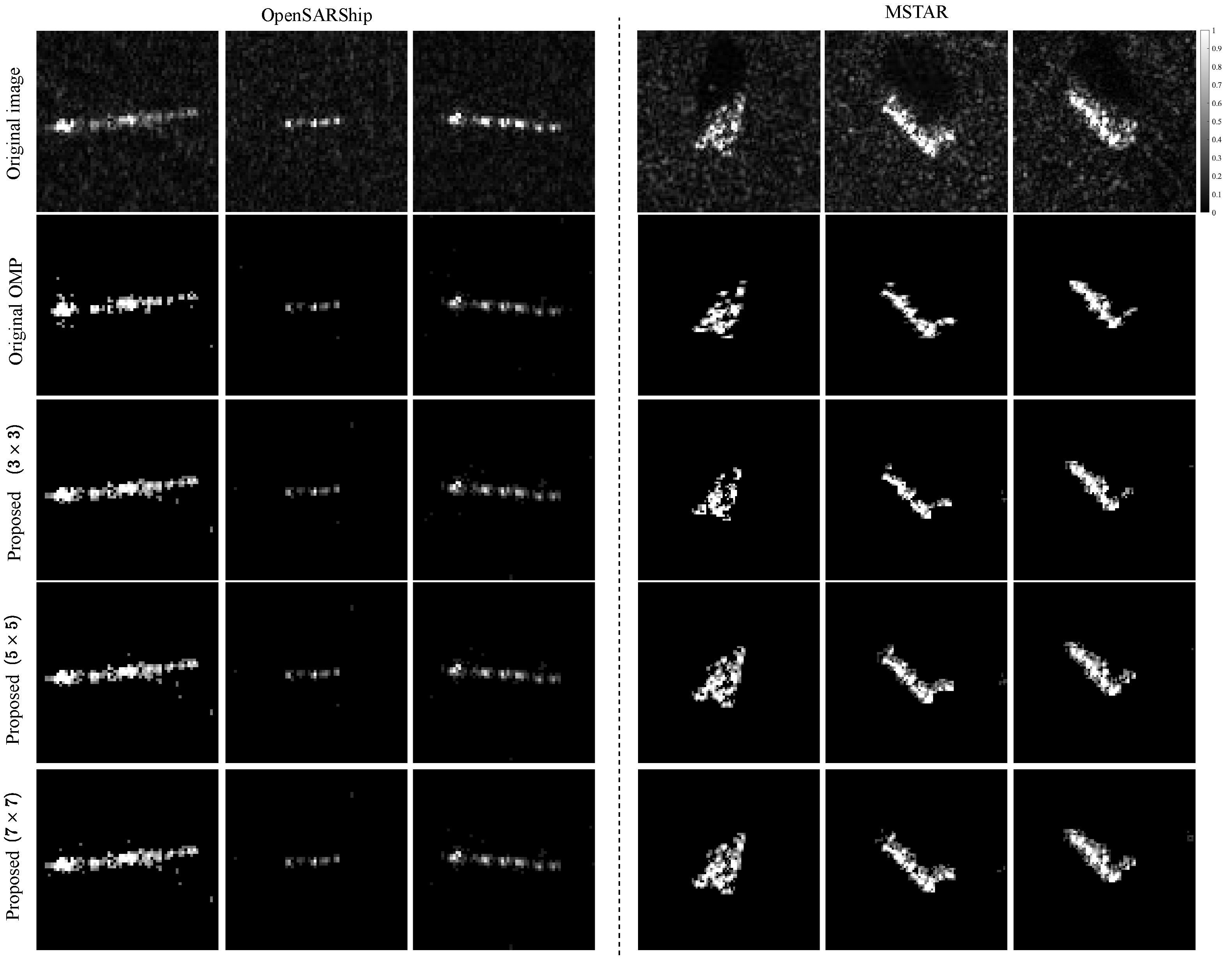

Specifically, as illustrated in Figure 4, OMP-IC shares steps (1) and (2) with the original OMP-based reconstruction and extraction method in Section 2.2. Before the parameters of step (3) are estimated, the original SAR image is cropped in the image domain into overlapping blocks centered on the position coordinates estimated in step (2), which preserves inter-block correlations and suppresses artifacts. This cropping relies on the translational property of ASCs in the image domain [39]: two ASCs whose parameters are identical except for their positions exhibit identical waveforms, up to a spatial shift.
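The translational property can be checked numerically on a simplified scattering model. The sketch below assumes a rectangular frequency grid (i.e., data already interpolated from polar format) on which a scatterer's position enters only through a linear phase ramp, while an arbitrary position-independent `envelope` stands in for the remaining ASC terms; it illustrates the principle and is not the ASC model of [39]. By the Fourier shift theorem, moving the scatterer by an integer number of pixels circularly shifts its image-domain waveform without changing its shape.

```python
import numpy as np


def scatterer_image(envelope, x0, y0):
    """Image-domain response of a scatterer at integer pixel position (x0, y0).

    envelope: (H, W) complex frequency-domain response that does not depend on position
    (a hypothetical stand-in for the non-positional ASC terms).
    """
    H, W = envelope.shape
    ky = np.fft.fftfreq(H)[:, None]  # normalized spatial frequencies along rows (y)
    kx = np.fft.fftfreq(W)[None, :]  # normalized spatial frequencies along columns (x)
    phase_ramp = np.exp(-2j * np.pi * (ky * y0 + kx * x0))  # position enters only here
    return np.fft.ifft2(envelope * phase_ramp)


rng = np.random.default_rng(1)
envelope = rng.standard_normal((32, 32)) + 1j * rng.standard_normal((32, 32))
a = scatterer_image(envelope, x0=3, y0=5)
b = scatterer_image(envelope, x0=10, y0=12)
# Shifting a by (7 rows, 7 columns) reproduces b exactly: same waveform, new position.
print(np.allclose(np.roll(a, (7, 7), axis=(0, 1)), b))
```

Because the waveform depends on position only through this shift, a block centered on any scatterer looks the same in block-relative coordinates, which is what permits one dictionary to serve all blocks.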

Meanwhile, ASCs exhibit an additive property in the image domain [39]: a scattering center of length L can be represented by the linear superposition of multiple ASCs.

The translational property allows all cropped image blocks to share the same block-relative position parameters, enabling dictionary reuse and reducing the memory required for dictionary storage. At the same time, the additive property ensures that a complete ASC can be represented as the linear superposition of multiple cropped ASCs, which justifies image-domain cropping.
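The additive property rests on the linearity of the imaging operator. As a minimal illustration under a simplified model (rectangular frequency grid, position entering only via a linear phase ramp, and a hypothetical square low-pass band), a distributed scatterer modeled as a row of point-like sub-responses is split into two groups, for example the parts falling into two different crops. Imaging each group separately and adding the results reproduces the image of the whole. This is only a linearity check, not the ASC length parameterization of [39]; `image_of` is a hypothetical name.

```python
import numpy as np


def image_of(positions, H=32, W=32, band=0.25):
    """Band-limited image of unit point scatterers at integer (x, y) positions."""
    ky = np.fft.fftfreq(H)[:, None]
    kx = np.fft.fftfreq(W)[None, :]
    passband = (np.abs(ky) < band) & (np.abs(kx) < band)  # finite bandwidth spreads each response
    spectrum = sum(np.exp(-2j * np.pi * (ky * y + kx * x)) for x, y in positions)
    return np.fft.ifft2(passband * spectrum)


segment = [(x, 16) for x in range(10, 22)]  # a 12-pixel-long scatterer along x
left, right = segment[:6], segment[6:]      # e.g., the portions seen by two different crops
whole = image_of(segment)
print(np.allclose(image_of(left) + image_of(right), whole))
```

The same argument holds for any split of the sub-responses, so overlapping crops that each capture part of a long scatterer can be recombined without loss, provided every part is accounted for exactly once.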

OMP-IC modifies step (3) of Section 2.2 as follows. Using the position parameters estimated in step (2), the full SAR image is cropped into image blocks of fixed size, one centered at each estimated ASC location, with adjacent blocks overlapping; for the i-th ASC, the cropping operation extracts the block centered at its location. Through the additive property, the overlapping regions maintain structural continuity, enabling seamless block fusion in the next step. Subsequently, echoes are generated for each image block, as shown in Figure 4, where each block contains at least one ASC. Leveraging the translational property, all ASC positions within a block are represented by a common overcomplete position parameter set. The same dictionary is therefore reused by the OMP algorithm for every block to estimate the scattering coefficients.
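The cropping in the modified step (3) can be sketched as follows, assuming integer pixel centers from step (2), an odd block size, and zero-padding at the image border (border handling is not specified in this section). The function name `crop_blocks` is hypothetical. Because every block has the same shape and is centered on an ASC, the block-relative position grid is identical for all blocks, so a single dictionary built on that grid can be applied to each of them.

```python
import numpy as np


def crop_blocks(image, centers, size):
    """Crop one size x size block per ASC center; neighboring blocks may overlap.

    image: (H, W) complex SAR image.
    centers: list of integer (row, col) ASC positions estimated in step (2).
    size: odd block side length.
    """
    half = size // 2
    padded = np.pad(image, half)  # zero-pad so blocks near the border keep full size
    # In padded coordinates the original pixel (r, c) sits at (r + half, c + half),
    # so the block centered on it starts at (r, c).
    return [padded[r:r + size, c:c + size] for r, c in centers]


img = np.arange(100, dtype=complex).reshape(10, 10)
blocks = crop_blocks(img, [(4, 4), (5, 6)], 5)  # two overlapping blocks
print([b.shape for b in blocks], blocks[0][2, 2], blocks[1][2, 2])
```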

OMP-IC modifies step (4) of Section 2.2 as follows: the echoes of each block are reconstructed in turn and transformed to the image domain, and an image block restoration operation then repositions all reconstructed blocks at their original locations within a blank image of the same dimensions as the original SAR image. Finally, the restored image is passed through the activation function in Equation (4) to obtain the final reconstruction.
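Block restoration in the modified step (4) can be sketched as the inverse of the image-domain cropping of step (3). How overlapping regions are fused is an assumption here: the sketch sums overlapping contributions, which is consistent with the additive property but may differ from the authors' implementation. The activation function of Equation (4) is not reproduced because its form is not given in this section, and `restore_blocks` is a hypothetical name.

```python
import numpy as np


def restore_blocks(blocks, centers, shape):
    """Place reconstructed blocks back at their original positions in a blank image.

    blocks: list of (size, size) arrays, one per ASC center (odd size).
    centers: integer (row, col) centers used when cropping.
    shape: (H, W) of the original SAR image.
    Overlapping contributions are summed (an assumed fusion rule).
    """
    size = blocks[0].shape[0]
    half = size // 2
    # Work on a padded canvas so blocks that extend past the border can be placed whole.
    canvas = np.zeros((shape[0] + 2 * half, shape[1] + 2 * half), dtype=blocks[0].dtype)
    for block, (r, c) in zip(blocks, centers):
        canvas[r:r + size, c:c + size] += block  # original (r, c) maps to padded (r + half, c + half)
    return canvas[half:half + shape[0], half:half + shape[1]]  # drop the padding margin


restored = restore_blocks([np.ones((3, 3)), np.ones((3, 3))], [(2, 2), (2, 3)], (6, 6))
print(restored)
```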

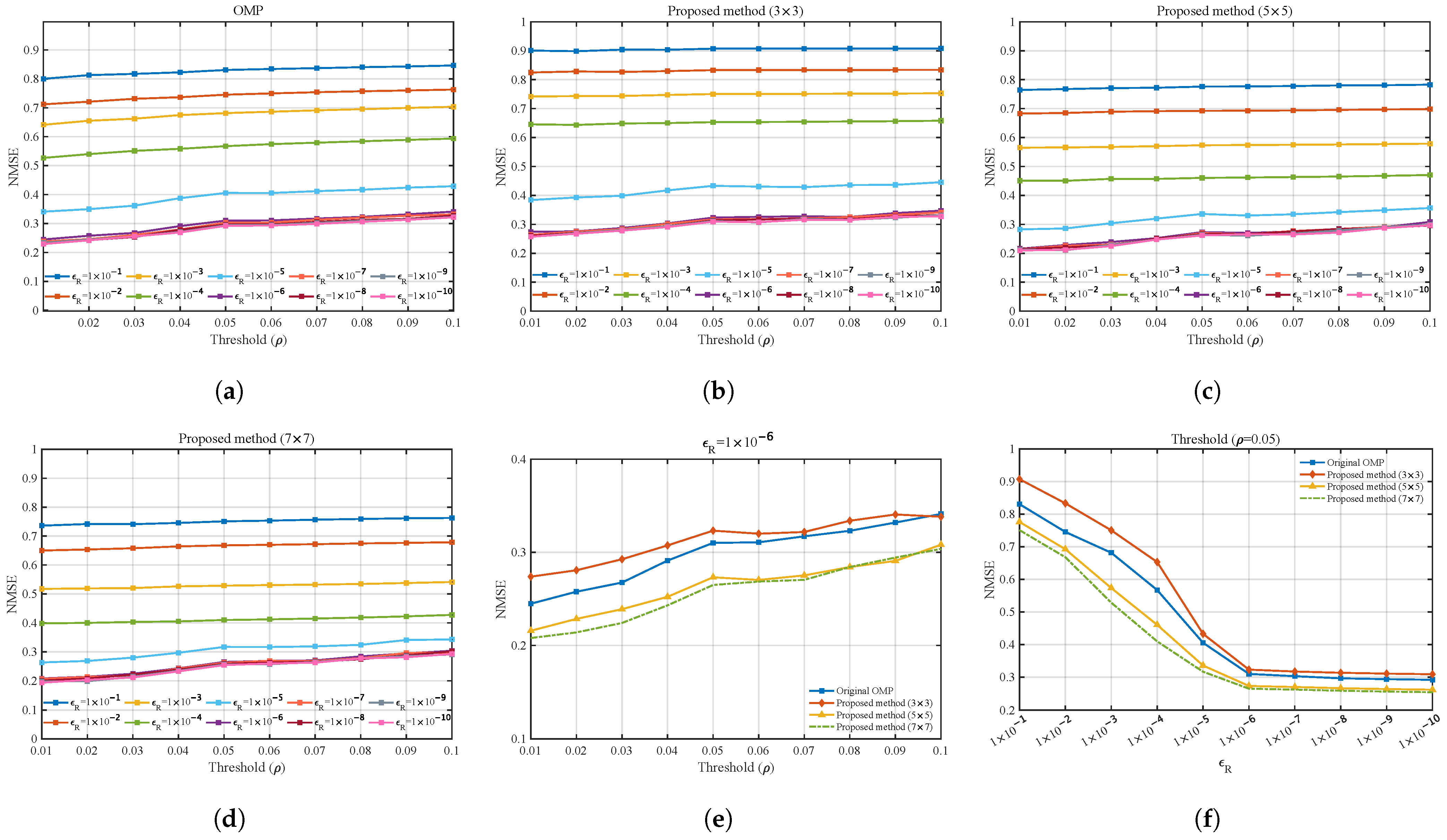

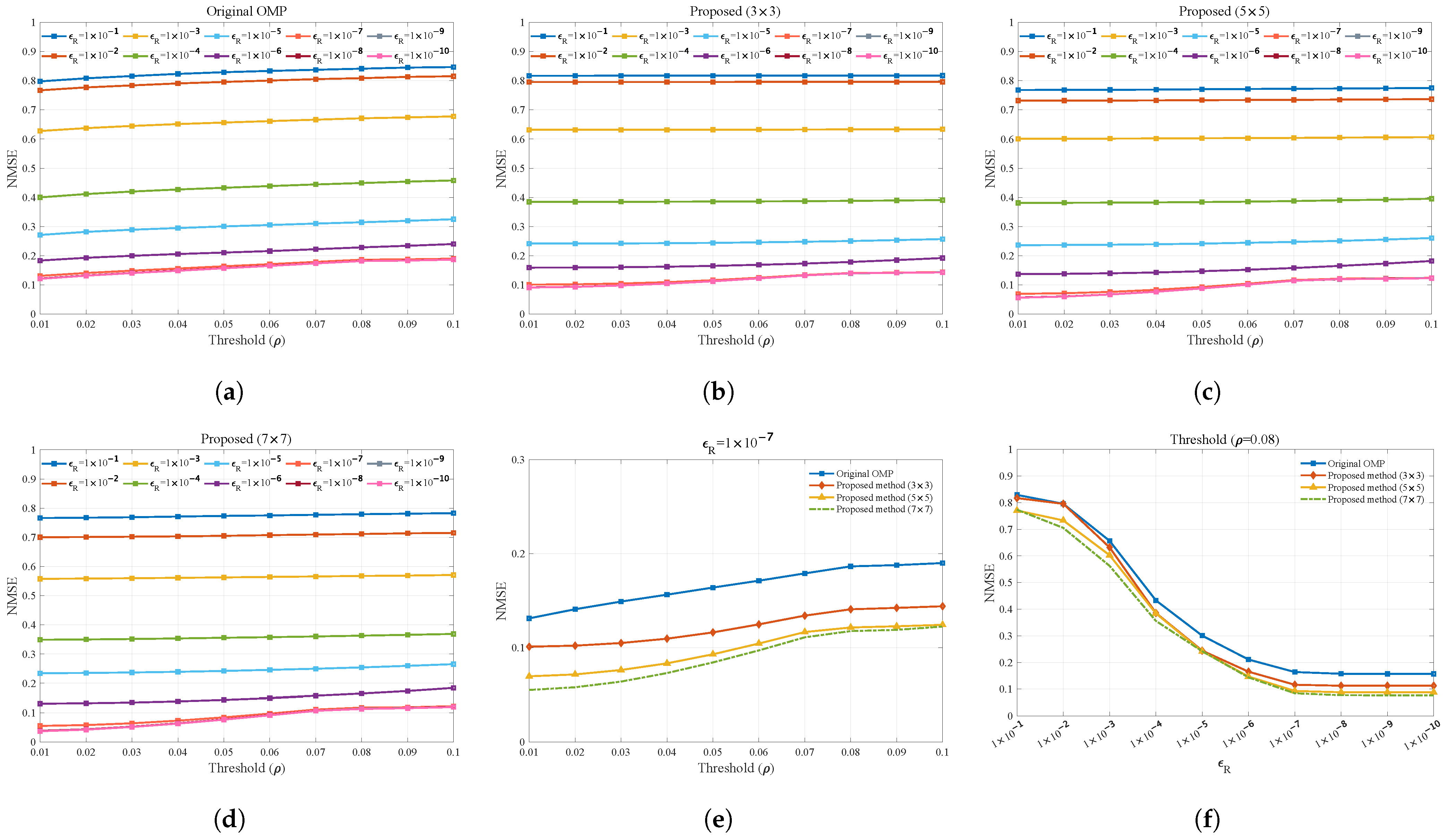

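By incorporating image-domain cropping, the number of dictionary atoms required for the parameter estimation of step (3) is reduced, because the positions are drawn from the small common overcomplete set of block-relative positions rather than from the full image. Since each iteration now operates on a small image block instead of the full image, both the atom-selection cost per OMP iteration and the size of the matrix whose pseudo-inverse is computed in each iteration decrease accordingly. As a result, OMP-IC enables efficient reconstruction of SAR target components.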
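The scale of the savings can be illustrated with back-of-the-envelope arithmetic under the standard OMP cost model, in which atom selection costs roughly (samples per atom) × (number of atoms) multiply-accumulates per iteration. All sizes below are hypothetical values chosen for illustration, not the settings used in the paper. The sketch also assumes, purely for illustration, that the full-image dictionary spans a position grid over the whole image while the block dictionary spans a grid over one block, each combined with the same number of candidate values for the remaining parameters.

```python
def selection_cost(signal_len, n_atoms):
    """Multiply-accumulates for one OMP atom-selection step: one inner product per atom."""
    return signal_len * n_atoms


def dictionary_bytes(signal_len, n_atoms, bytes_per_entry=16):
    """Memory for a dense complex128 dictionary (16 bytes per entry)."""
    return signal_len * n_atoms * bytes_per_entry


# Hypothetical sizes for illustration only (not the paper's settings).
n_other = 20                       # candidate values of the non-position parameters
full_len = 128 * 128               # samples per atom for a full 128 x 128 image
block_len = 17 * 17                # samples per atom for a 17 x 17 crop
full_atoms = full_len * n_other    # assumed: one candidate position per image pixel
block_atoms = block_len * n_other  # assumed: one candidate position per block pixel

full_cost = selection_cost(full_len, full_atoms)
block_cost = selection_cost(block_len, block_atoms)
print(f"per-iteration selection cost ratio: {full_cost / block_cost:.0f}x")
print(f"dictionary memory: {dictionary_bytes(full_len, full_atoms) / 1e9:.1f} GB (full) vs "
      f"{dictionary_bytes(block_len, block_atoms) / 1e6:.1f} MB (shared block dictionary)")
```

Under these assumed sizes, the block-wise cost remains far smaller even after multiplying it by the number of blocks (one per ASC, typically tens), and the shared block dictionary is stored only once.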

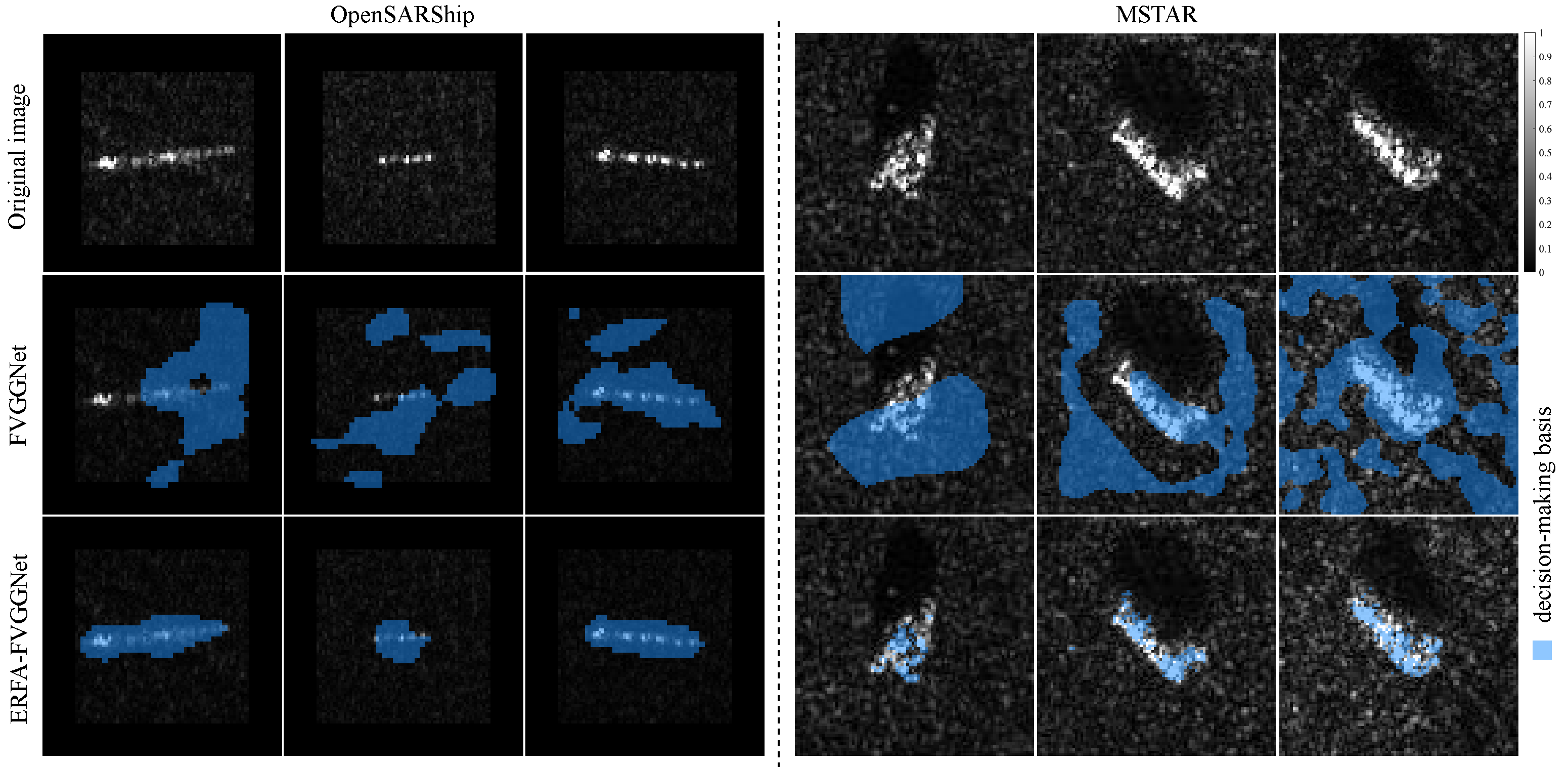

3.2. Feature Extraction and Alignment

To enhance feature discriminability and suppress background clutter using the electromagnetic reconstructions produced by OMP-IC, we propose a feature alignment framework that integrates the pretrained FVGGNet with a CLIP contrastive loss.

3.2.1. FVGGNet

As mentioned in Section 1, the shallow layers of pretrained models extract general information from images, and their generalization stems from pervasive similarities across images. In contrast, deep layers depend strongly on domain-specific characteristics, which results in redundant parameters when the model is transferred to SAR ATR. Moreover, SAR ATR often suffers from limited training samples, so models easily overfit and lose generalization. A-ConvNets [7] mitigate this by removing the parameter-heavy fully connected layers, thereby reducing model complexity and enhancing generalization [40]. Furthermore, small convolutional kernels typically exhibit superior feature extraction capabilities [41].

Therefore, we propose a fully convolutional VGG network (FVGGNet), which leverages the feature extraction advantages of pretrained shallow layers and improves generalization under limited samples by replacing the fully connected layers with convolutional layers using small kernels, as illustrated in Figure 3 and Table 1. In Table 1, “Conv”, “MaxPool”, and “BatchNorm” denote convolutional, max-pooling, and batch normalization layers, respectively. The network takes the first 14 layers of VGG16 pretrained on the ImageNet dataset [12] and uses this shallow structure to extract features from SAR images. Subsequently, a convolutional layer with N kernels and a stride of 1, followed by batch normalization, serves as the classifier, where N is the number of target categories.
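To make the architecture concrete, the sketch below tracks feature-map sizes and trainable parameter counts for an FVGGNet-like stack in plain Python. It rests on assumptions not stated in this section: that the "first 14 layers" of VGG16 are its first 10 convolutional layers (3×3, padding 1) and 4 max-pooling layers, i.e., the stack up to pool4; a 3-channel 128×128 input (e.g., a SAR amplitude chip replicated across channels); a classifier kernel equal to the final feature-map size, so that the output is one score per class; and 10 target classes. Table 1 gives the actual configuration. For comparison, it also counts the parameters of VGG16's original fully connected head, which FVGGNet discards.

```python
# Assumed VGG16 prefix: ("conv", out_channels) uses 3x3 kernels with padding 1;
# "pool" is 2x2 max pooling with stride 2. 10 conv + 4 pool = 14 layers.
VGG16_PREFIX = [("conv", 64), ("conv", 64), "pool",
                ("conv", 128), ("conv", 128), "pool",
                ("conv", 256), ("conv", 256), ("conv", 256), "pool",
                ("conv", 512), ("conv", 512), ("conv", 512), "pool"]


def backbone_summary(in_channels=3, side=128):
    """Return (parameter count, output channels, output side) of the assumed 14-layer prefix."""
    params, channels = 0, in_channels
    for layer in VGG16_PREFIX:
        if layer == "pool":
            side //= 2  # pooling halves the spatial size and has no parameters
        else:
            _, out = layer
            params += channels * out * 3 * 3 + out  # 3x3 weights + biases
            channels = out
    return params, channels, side


def classifier_params(in_channels, kernel, n_classes):
    """Conv classifier (n_classes kernels of size kernel x kernel, with bias) + BatchNorm scale/shift."""
    return in_channels * n_classes * kernel * kernel + n_classes + 2 * n_classes


def vgg16_fc_head_params(n_classes=1000):
    """Parameters of VGG16's original fully connected head (7x7x512 input, two 4096-unit layers)."""
    return (7 * 7 * 512 * 4096 + 4096) + (4096 * 4096 + 4096) + (4096 * n_classes + n_classes)


backbone, channels, side = backbone_summary()
head = classifier_params(channels, kernel=side, n_classes=10)  # hypothetical 10 classes
print(f"backbone: {backbone:,} params, output {channels} x {side} x {side}")
print(f"conv classifier: {head:,} params vs original FC head: {vgg16_fc_head_params():,}")
```

Under these assumptions the convolutional classifier adds about 0.33 M parameters, compared with roughly 124 M in VGG16's fully connected head, which is the reduction in model complexity the text attributes to the fully convolutional design.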

3.2.2. Feature Alignment

As shown in Figure 3, a batch of SAR amplitude images and the corresponding electromagnetic reconstruction amplitude images are input into FVGGNet to extract the image features and the electromagnetic reconstruction features, each consisting of M feature vectors of length N. Here, N denotes the number of target categories (i.e., the length of each feature vector), and M denotes the batch size. To suppress interference from background clutter while maintaining recognition performance, the contrastive loss [33] is introduced. It first computes the normalized cosine similarity between the image features and the electromagnetic reconstruction features across the batch.
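A minimal NumPy sketch of this batch similarity computation follows. It assumes the image features and electromagnetic reconstruction features are stored as M × N arrays with one row per sample, and that the similarity is the CLIP-style cosine similarity between every image/reconstruction pair; the function name is hypothetical.

```python
import numpy as np


def cosine_similarity_matrix(img_feats, em_feats, eps=1e-12):
    """Pairwise cosine similarity between two batches of feature vectors.

    img_feats, em_feats: (M, N) arrays; row i of each comes from the i-th SAR image.
    Returns an (M, M) matrix whose (i, j) entry compares image i with reconstruction j.
    """
    a = img_feats / (np.linalg.norm(img_feats, axis=1, keepdims=True) + eps)  # L2-normalize rows
    b = em_feats / (np.linalg.norm(em_feats, axis=1, keepdims=True) + eps)
    return a @ b.T


img_feats = np.array([[1.0, 0.0], [0.0, 2.0]])
em_feats = np.array([[3.0, 0.0], [1.0, 1.0]])
print(cosine_similarity_matrix(img_feats, em_feats))
```

The diagonal holds the similarities of matching pairs (an image and its own reconstruction); the off-diagonal entries are the non-matching pairs the contrastive loss treats as negatives.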

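The bidirectional contrastive loss is then applied. In the image-to-reconstruction direction, the i-th image feature should match only the i-th electromagnetic reconstruction feature (the reconstruction of the same SAR image) among all electromagnetic reconstruction features in the batch. Similarly, in the reconstruction-to-image direction, the i-th electromagnetic reconstruction feature should match only the i-th image feature among all image features.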
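The bidirectional objective can be sketched as two softmax cross-entropy terms over the temperature-scaled similarity matrix: one over rows (each image against all reconstructions) and one over columns (each reconstruction against all images), with the matching pairs on the diagonal as targets. This follows the published CLIP formulation [33]; averaging the two directions and the function names below are our assumptions rather than details given in this section.

```python
import numpy as np


def log_softmax(x, axis):
    """Numerically stable log-softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def bidirectional_contrastive_loss(img_feats, em_feats, temperature):
    """CLIP-style symmetric contrastive loss; pair i (image i, reconstruction i) is the positive."""
    a = img_feats / np.linalg.norm(img_feats, axis=1, keepdims=True)
    b = em_feats / np.linalg.norm(em_feats, axis=1, keepdims=True)
    logits = (a @ b.T) / temperature  # (M, M) scaled cosine similarities
    # Image -> reconstruction: each row is a distribution over all reconstructions.
    loss_i2e = -np.mean(np.diag(log_softmax(logits, axis=1)))
    # Reconstruction -> image: each column is a distribution over all images.
    loss_e2i = -np.mean(np.diag(log_softmax(logits, axis=0)))
    return 0.5 * (loss_i2e + loss_e2i)


aligned = bidirectional_contrastive_loss(np.eye(3), np.eye(3), temperature=0.01)
shuffled = bidirectional_contrastive_loss(np.eye(3), np.roll(np.eye(3), 1, axis=0), temperature=0.01)
print(aligned, shuffled)
```

When every image feature is closest to its own reconstruction, both directional terms approach zero; when the pairing is scrambled, the loss becomes large, which is the signal that pulls each image feature toward its electromagnetic counterpart.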

The temperature coefficient in these losses is learnable and adjusts the alignment strength between features. When the temperature is smaller, image features and electromagnetic features are aligned more tightly. This may lead to over-penalization, which increases the distance between different samples of the same target in the feature space and can cause model overfitting. Conversely, when the temperature is larger, feature alignment becomes more relaxed. Although this avoids excessive penalization, samples from different targets may lack sufficient discrimination in the feature space, compromising recognition performance [42]. The final contrastive loss combines the image-to-reconstruction and reconstruction-to-image terms.
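The effect of the temperature described above can be seen on a single row of similarities. Dividing by a small temperature sharpens the softmax so that the matching pair dominates and every non-matching sample, including a different sample of the same class, is pushed away strongly; a large temperature flattens the distribution. The similarity values below are made-up numbers for illustration only.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax of a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()


# Hypothetical cosine similarities of one image feature with four reconstructions:
# entry 0 is the matching pair, entry 1 a different sample of the same target class.
sims = np.array([0.9, 0.8, 0.1, -0.2])
for temperature in (0.05, 1.0):
    probs = softmax(sims / temperature)
    print(f"temperature={temperature}: p(match)={probs[0]:.3f}, p(same class)={probs[1]:.3f}")
```

With the small temperature, nearly all probability mass goes to the matching pair and the same-class sample is heavily penalized, which is the over-penalization risk; with the large temperature, the distribution is nearly flat, which weakens discrimination.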

Here, the learnable temperature coefficient adaptively regulates the feature alignment strength and thereby balances alignment against recognition performance. The dual loss then combines this contrastive loss with the classification loss, where the cross-entropy loss function is used for target classification against the ground-truth labels; the corresponding term is obtained similarly. The proposed dual loss is designed to adaptively adjust the alignment between electromagnetic reconstruction features and image features, suppress model overfitting to background clutter, and preserve recognition accuracy.
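A forward-pass sketch of a dual loss is given below. The exact combination is not recoverable from this section, so the sketch assumes, hypothetically, an unweighted sum of the cross-entropy on the image features, the cross-entropy on the electromagnetic reconstruction features, and the bidirectional contrastive loss. The temperature is stored as a log-parameterized scalar (as in CLIP) so that it stays positive when learned. In actual training these would be framework tensors with gradients; NumPy is used here only to show the arithmetic, and all function names are hypothetical.

```python
import numpy as np


def log_softmax(x, axis):
    """Numerically stable log-softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def cross_entropy(logits, labels):
    """Mean softmax cross-entropy; logits (M, N) class scores, labels (M,) integer class ids."""
    return -np.mean(log_softmax(logits, axis=1)[np.arange(len(labels)), labels])


def contrastive_loss(img_feats, em_feats, temperature):
    """Symmetric CLIP-style loss with matching pairs on the diagonal."""
    a = img_feats / np.linalg.norm(img_feats, axis=1, keepdims=True)
    b = em_feats / np.linalg.norm(em_feats, axis=1, keepdims=True)
    logits = (a @ b.T) / temperature
    return -0.5 * (np.mean(np.diag(log_softmax(logits, axis=1)))
                   + np.mean(np.diag(log_softmax(logits, axis=0))))


def dual_loss(img_feats, em_feats, labels, log_temperature):
    """Hypothetical dual loss: two classification terms plus the contrastive alignment term."""
    temperature = np.exp(log_temperature)  # log-parameterization keeps the learned temperature positive
    return (cross_entropy(img_feats, labels)
            + cross_entropy(em_feats, labels)
            + contrastive_loss(img_feats, em_feats, temperature))


feats = 10.0 * np.eye(3)  # confident, correctly classified, perfectly aligned features
print(dual_loss(feats, feats, np.array([0, 1, 2]), log_temperature=np.log(0.01)))
```

Because the N-dimensional FVGGNet outputs serve both as class scores and as alignment features, the classification terms keep the features discriminative while the contrastive term ties each image to its electromagnetic reconstruction.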