DualRecon: Building 3D Reconstruction from Dual-View Remote Sensing Images

Highlights

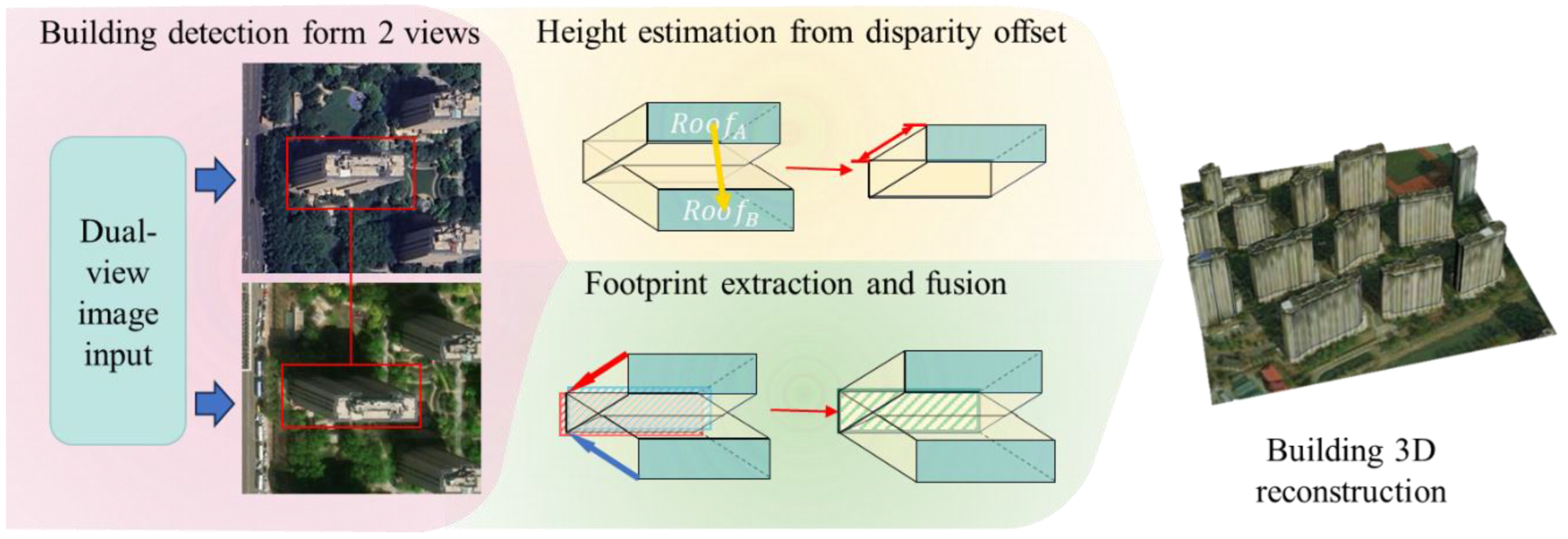

- DualRecon can detect buildings from two remote sensing images and estimate building heights based on disparity, enabling 3D building reconstruction.

- DualRecon achieves state-of-the-art accuracy in dual-view building reconstruction.

- It provides a reconstruction method for large-scale, time-sensitive applications in urban areas.

- It provides a practical design recipe for building reconstruction from dual-view remote sensing images.

Abstract

1. Introduction

- 1.

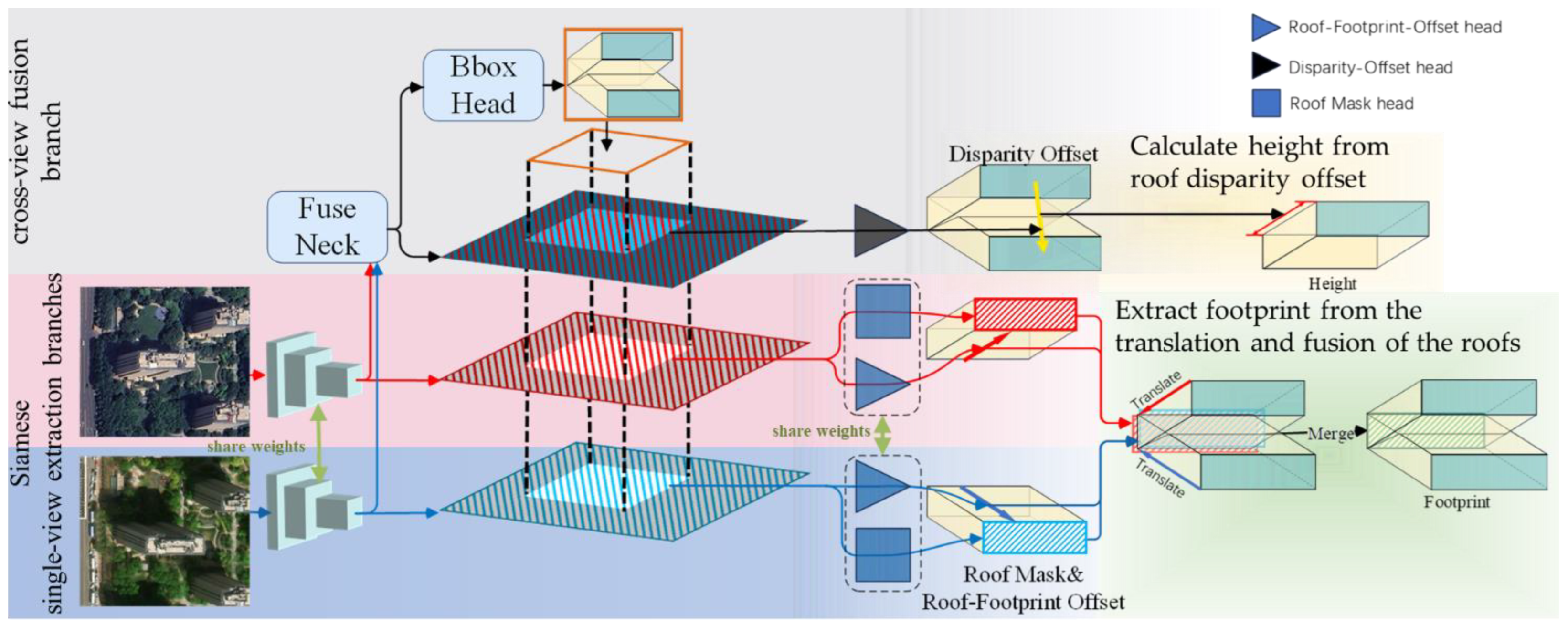

- We propose DualRecon, which, to the best of our knowledge, is the first method specifically designed for building 3D reconstruction from dual-view remote sensing imagery. DualRecon detects building instances based on two remote sensing images from different view angles, and then utilizes the roof disparity offset to estimate building height, thereby addressing the inaccurate building reconstruction in existing off-nadir-based methods caused by occlusion.

- 2.

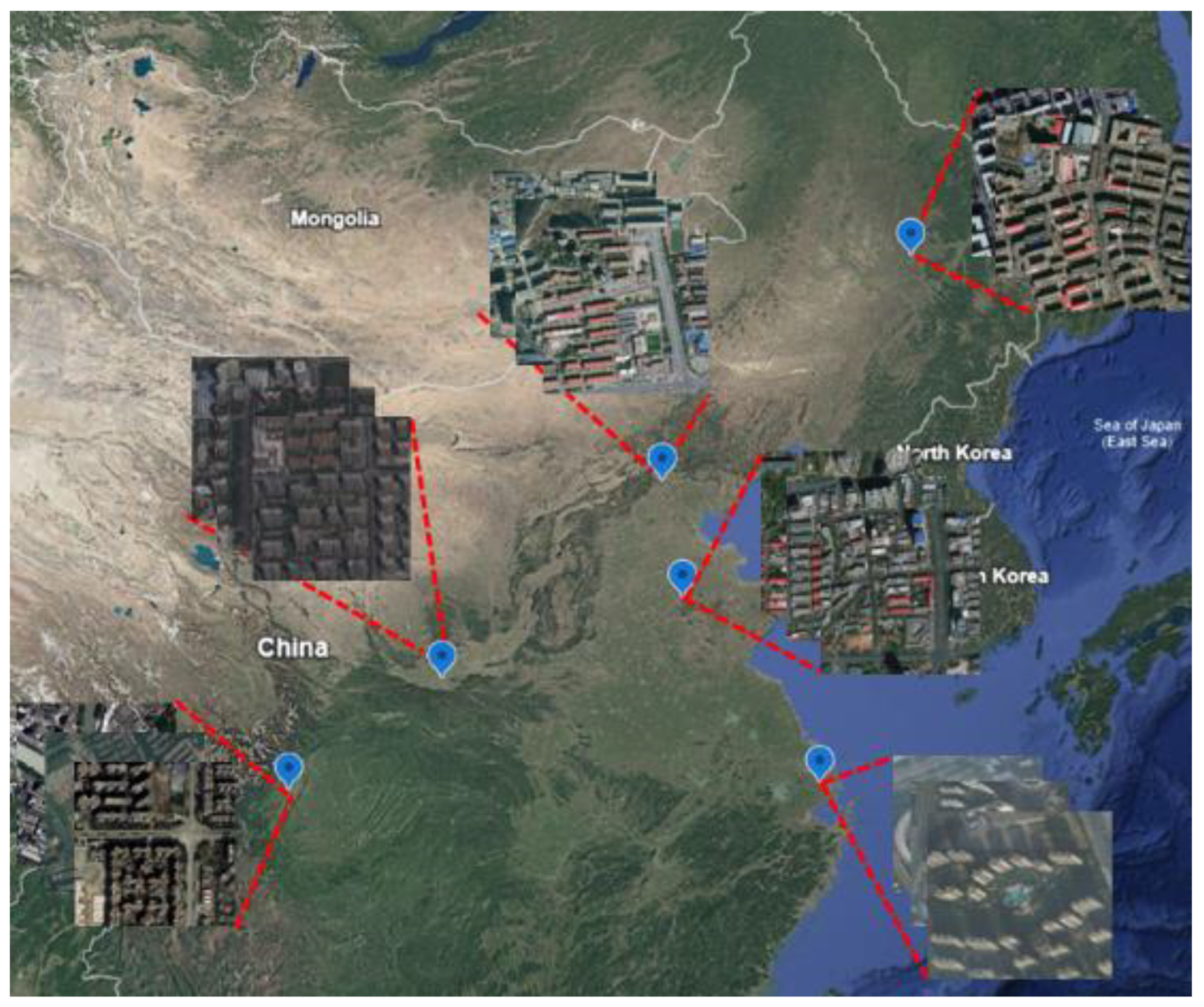

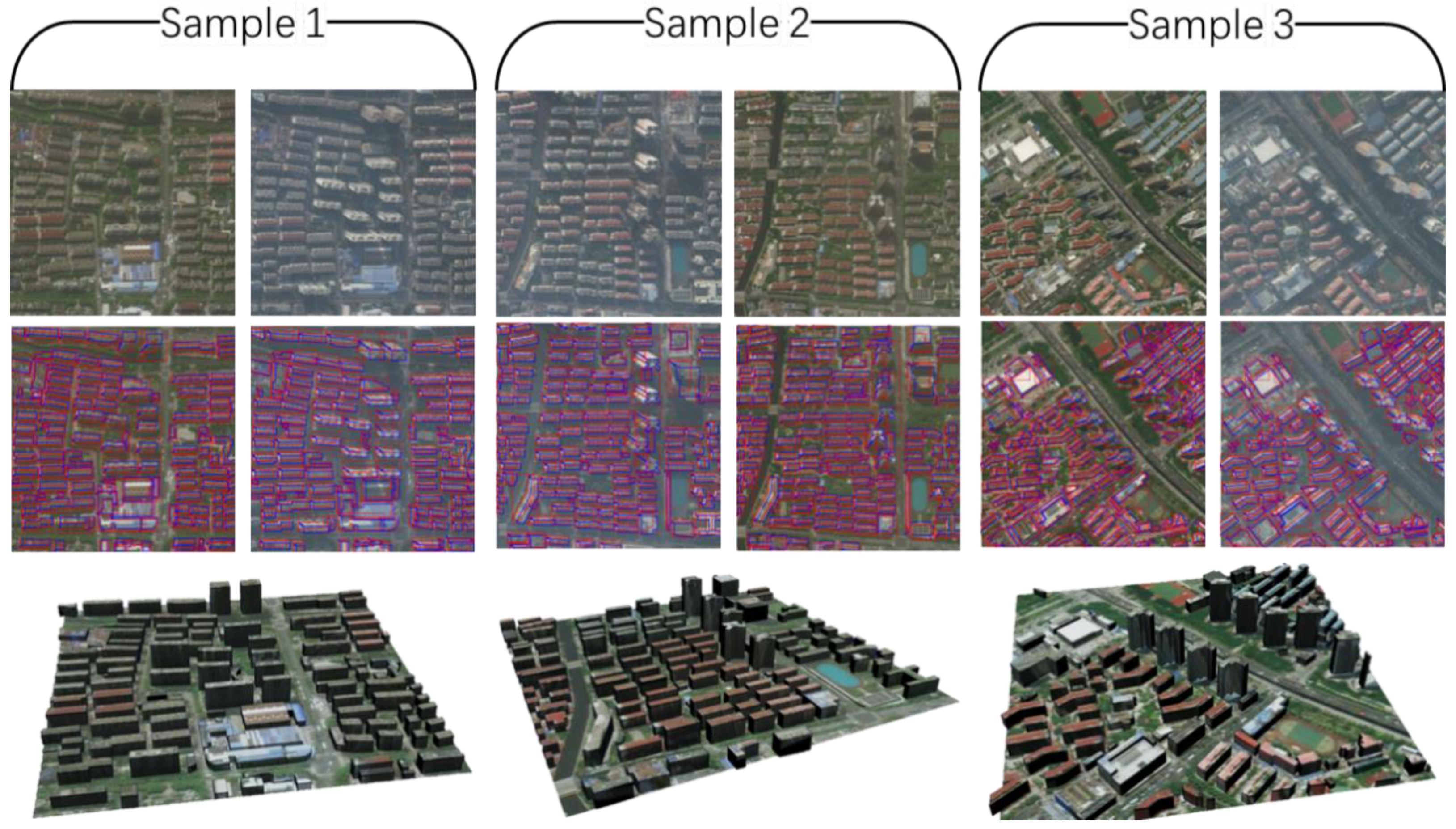

- We construct a dual-view remote sensing dataset for building 3D reconstruction, named BuildingDual. In BuildingDual, building instances in both views are annotated, including their distinct roofs in the two views, roof-to-footprint offsets, footprints, and roof disparity offsets.

3. Extensive experiments show that DualRecon outperforms existing methods in reconstruction accuracy on the task of 3D building reconstruction from dual-view remote sensing imagery.
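As a rough illustration of how a roof disparity offset can constrain building height, the sketch below assumes a simple relief-displacement model that is not taken from the paper: in each view, a roof point is shifted from its footprint by `height * tan(off_nadir) / gsd` pixels along the view azimuth, so the roof disparity between two views equals the height times the difference of the two per-metre shift vectors. The function names, the (off-nadir, azimuth) parameterisation, and the ground sampling distance `gsd_m` are all hypothetical; DualRecon itself learns this relationship with a network rather than applying a closed form.

```python
import math

def displacement_per_metre(off_nadir_deg, azimuth_deg, gsd_m):
    """Assumed model: pixel shift of a roof point per metre of building height."""
    scale = math.tan(math.radians(off_nadir_deg)) / gsd_m
    az = math.radians(azimuth_deg)
    return (scale * math.sin(az), scale * math.cos(az))

def height_from_disparity(disparity_px, view_a, view_b, gsd_m):
    """Least-squares height h such that disparity ~= h * (t_a - t_b).

    disparity_px: (dx, dy) roof offset between the two views, in pixels.
    view_a, view_b: hypothetical (off_nadir_deg, azimuth_deg) tuples.
    """
    ta = displacement_per_metre(*view_a, gsd_m)
    tb = displacement_per_metre(*view_b, gsd_m)
    bx, by = ta[0] - tb[0], ta[1] - tb[1]
    denom = bx * bx + by * by
    if denom == 0.0:
        raise ValueError("identical viewing geometry: height is unobservable")
    # Project the observed disparity onto the expected disparity direction.
    return (disparity_px[0] * bx + disparity_px[1] * by) / denom

# Synthetic round trip: simulate a 30 m building, then recover its height.
view_a, view_b, gsd_m = (20.0, 90.0), (5.0, 270.0), 0.5
ta = displacement_per_metre(*view_a, gsd_m)
tb = displacement_per_metre(*view_b, gsd_m)
disparity = (30.0 * (ta[0] - tb[0]), 30.0 * (ta[1] - tb[1]))
recovered = height_from_disparity(disparity, view_a, view_b, gsd_m)
print(round(recovered, 3))
```

Under this assumed model the height becomes unobservable when both views share the same geometry, which is why the sketch raises an error in that case.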

2. Related Work

2.1. Traditional Satellite Imagery–Based 3D Reconstruction

2.2. Deep Learning–Based 3D Reconstruction from Remote Sensing Imagery

3. Methodology

3.1. DualRecon Network

3.2. Loss Functions

4. Experiment

4.1. Dataset

4.2. Implementation Details

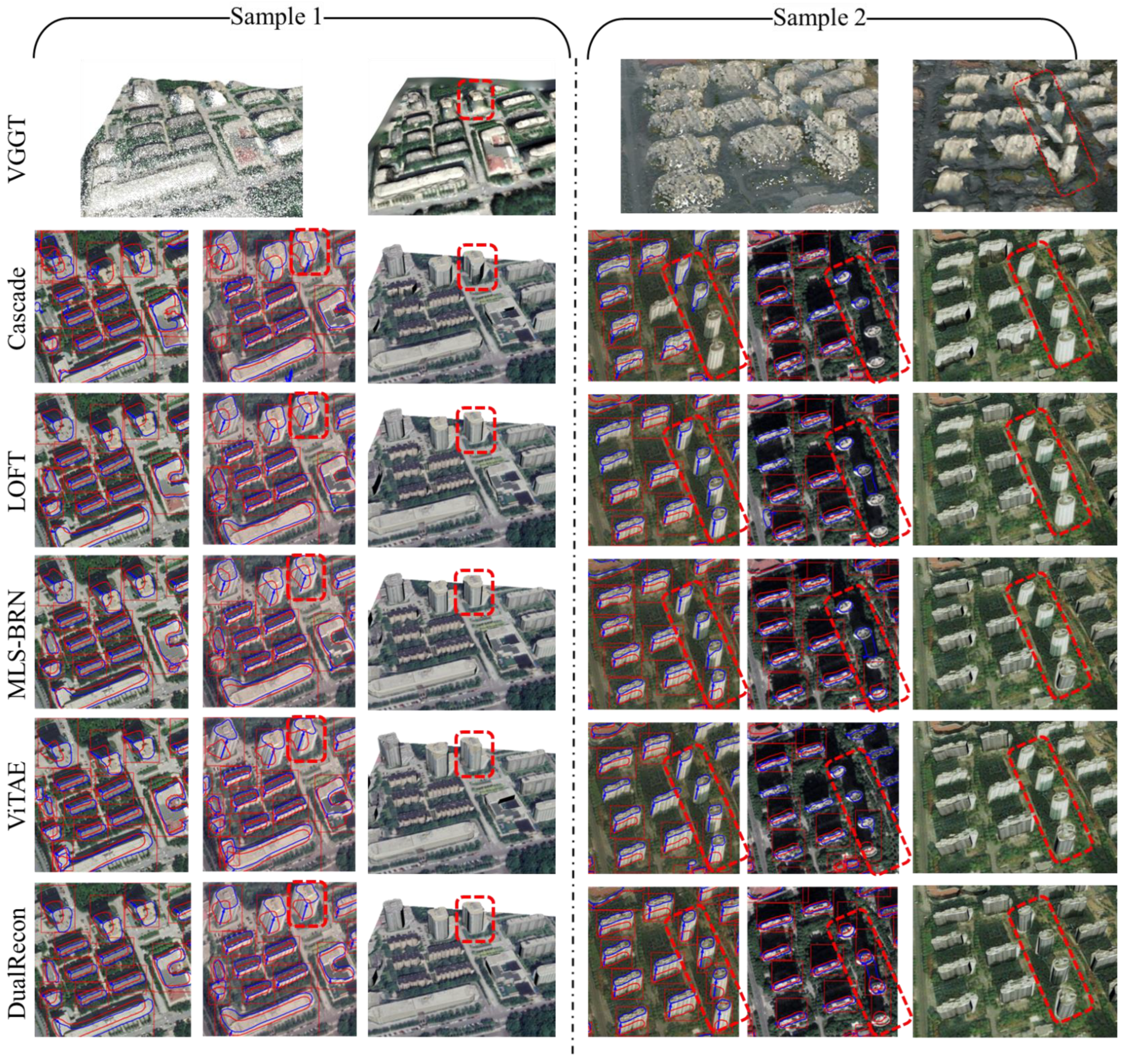

4.3. Comparative Experiments

- MLS-BRN (CVPR 2024) [15]: A method specifically designed for 3D building reconstruction from off-nadir imagery.

- LoFT (TPAMI 2022) [14]: A method developed for extracting 3D building information from off-nadir imagery.

- ViTAE (TGRS 2022) [51]: A foundation model designed for the analysis of remote sensing data. In our experiments, we built upon its framework for building detection and added an offset head for offset estimation.

- Cascade Mask R-CNN (CVPR 2018) [52]: Originally developed for multi-object detection in images. In our adaptation, an offset head is added for the estimation of roof-to-footprint offsets.

- Single-View Input: In the first protocol, we feed only one of the two images from a pair into the baseline model. This lets each model operate in the single-view setting it was designed for.

- IoU-Based Merging: The second protocol utilizes both images. We first apply the baseline model to each image independently to extract two separate sets of 3D building information. Then, we match the buildings from these two sets based on their footprint overlap. A building is considered a valid detection only if the Intersection over Union (IoU) of its footprints from the two views exceeds a predefined threshold of 0.3; otherwise, it is discarded. For the matched buildings, the outputs from both views are fused to produce the final 3D model. We posit that through this operation, the building extraction accuracy of an existing single-view method can be enhanced by fusing the extracted information from two views.
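The IoU-based merging protocol can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: footprints are simplified to axis-aligned boxes `(xmin, ymin, xmax, ymax)`, matching is greedy one-to-one by descending IoU, and fusion simply averages the two boxes and the two heights. The text only specifies the 0.3 threshold and that matched outputs are fused; the helper names `box_iou` and `merge_views` are hypothetical.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (xmin, ymin, xmax, ymax)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union

def merge_views(view1, view2, iou_threshold=0.3):
    """Match buildings across views by footprint IoU and fuse matched pairs.

    Each building is a (footprint_box, height) tuple. Buildings without a
    partner whose IoU exceeds the threshold are discarded.
    """
    pairs = sorted(
        ((box_iou(b1[0], b2[0]), i, j)
         for i, b1 in enumerate(view1)
         for j, b2 in enumerate(view2)),
        reverse=True,
    )
    used1, used2, merged = set(), set(), []
    for iou, i, j in pairs:
        if iou <= iou_threshold:
            break  # remaining pairs overlap too little to count as matches
        if i in used1 or j in used2:
            continue
        used1.add(i)
        used2.add(j)
        (box1, h1), (box2, h2) = view1[i], view2[j]
        # Assumed fusion rule: average the footprint coordinates and heights.
        fused_box = tuple((p + q) / 2 for p, q in zip(box1, box2))
        merged.append((fused_box, (h1 + h2) / 2))
    return merged

view1 = [((0, 0, 10, 10), 20.0), ((50, 50, 60, 60), 8.0)]
view2 = [((1, 0, 11, 10), 22.0), ((100, 100, 110, 110), 5.0)]
result = merge_views(view1, view2)
print(result)
```

In this toy input only the first building of each view overlaps enough to be kept; the unmatched detections are dropped. That matches the drop in AR and rise in AP that the IoU-merge rows report relative to the single-view rows.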

4.4. Ablation Study

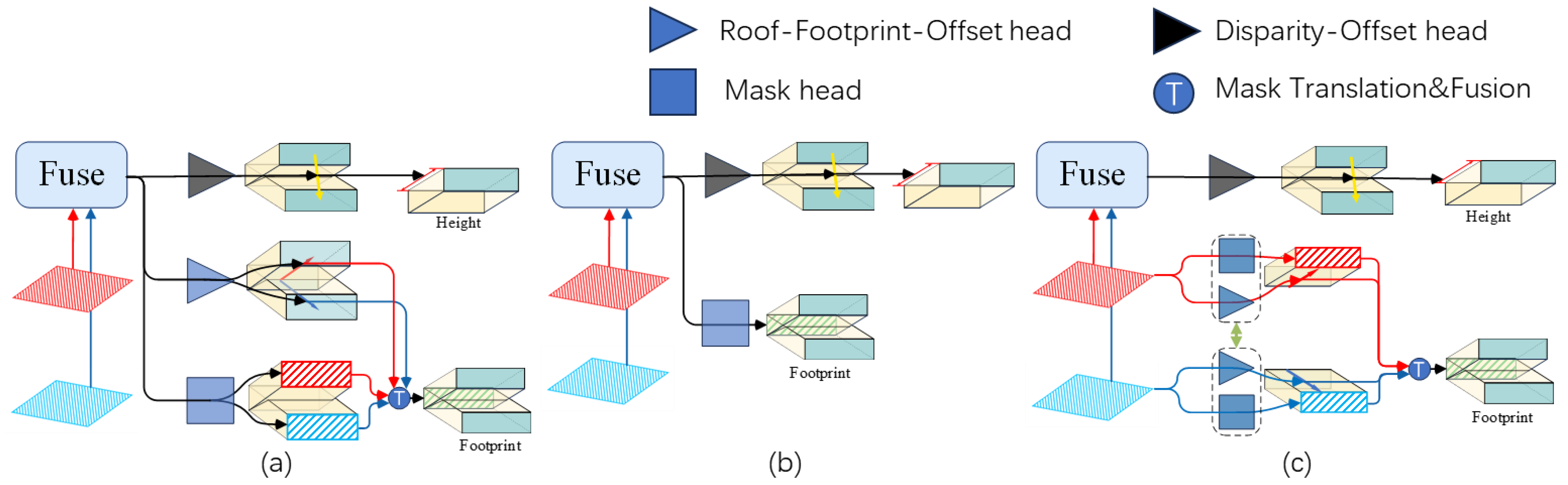

4.4.1. The Network Architectures to Extract Single-View Information (Roof-to-Footprint Offset and Roof)

4.4.2. Weight of the Disparity Offset Loss

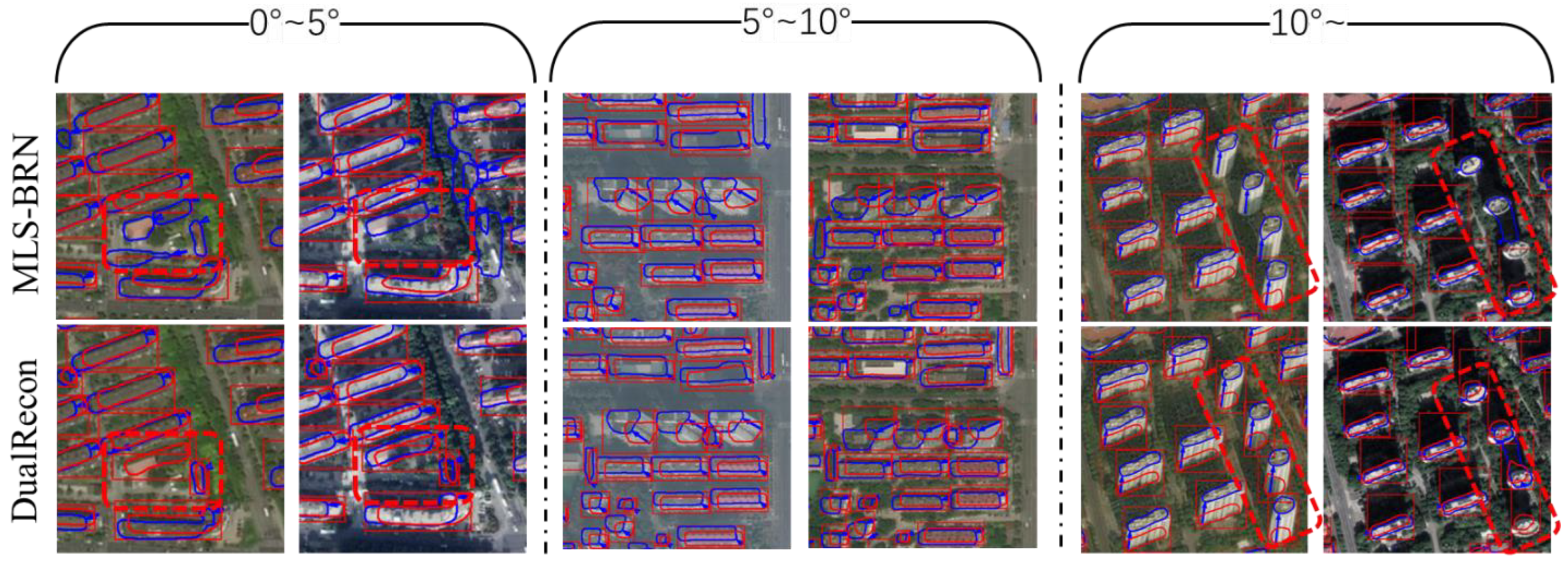

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Domingo, D.; van Vliet, J.; Hersperger, A.M. Long-Term Changes in 3D Urban Form in Four Spanish Cities. Landsc. Urban Plan. 2023, 230, 104624.

2. Zi, W.; Li, J.; Chen, H.; Chen, L.; Du, C. UrbanSegNet: An Urban Meshes Semantic Segmentation Network Using Diffusion Perceptron and Vertex Spatial Attention. Int. J. Appl. Earth Obs. Geoinf. 2024, 129, 103841.

3. Zi, W.; Chen, H.; Li, J.; Wu, J. MambaMeshSeg-Net: A Large-Scale Urban Mesh Semantic Segmentation Method Using a State Space Model with a Hybrid Scanning Strategy. Remote Sens. 2025, 17, 1653.

4. Cheng, M.-L.; Matsuoka, M.; Liu, W.; Yamazaki, F. Near-Real-Time Gradually Expanding 3D Land Surface Reconstruction in Disaster Areas by Sequential Drone Imagery. Autom. Constr. 2022, 135, 104105.

5. Liang, F.; Yang, B.; Dong, Z.; Huang, R.; Zang, Y.; Pan, Y. A Novel Skyline Context Descriptor for Rapid Localization of Terrestrial Laser Scans to Airborne Laser Scanning Point Clouds. ISPRS J. Photogramm. Remote Sens. 2020, 165, 120–132.

6. Bizjak, M.; Mongus, D.; Žalik, B.; Lukač, N. Novel Half-Spaces Based 3D Building Reconstruction Using Airborne LiDAR Data. Remote Sens. 2023, 15, 1269.

7. Chen, X.; Song, Z.; Zhou, J.; Xie, D.; Lu, J. Camera and LiDAR Fusion for Urban Scene Reconstruction and Novel View Synthesis via Voxel-Based Neural Radiance Fields. Remote Sens. 2023, 15, 4628.

8. Yan, Y.; Wang, Z.; Xu, C.; Su, N. Geop-Net: Shape Reconstruction of Buildings from LiDAR Point Clouds. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6502005.

9. Fan, L.; Yang, Q.; Wang, H.; Deng, B. Robust Ground Moving Target Imaging Using Defocused ROI Data and Sparsity-Based ADMM Autofocus Under Terahertz Video SAR. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–16.

10. Fan, L.; Yang, Q.; Wang, H.; Qin, Y.; Deng, B. Sequential Ground Moving Target Imaging Based on Hybrid ViSAR-ISAR Image Formation in Terahertz Band. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 8738–8753.

11. Yu, D.; Wei, S.; Liu, J.; Ji, S. Advanced Approach for Automatic Reconstruction of 3D Buildings from Aerial Images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 541–546.

12. Yang, B.; Ali, F.; Zhou, B.; Li, S.; Yu, Y.; Yang, T.; Liu, X.; Liang, Z.; Zhang, K. A Novel Approach of Efficient 3D Reconstruction for Real Scene Using Unmanned Aerial Vehicle Oblique Photogrammetry with Five Cameras. Comput. Electr. Eng. 2022, 99, 107804.

13. Bullinger, S.; Bodensteiner, C.; Arens, M. 3D Surface Reconstruction from Multi-Date Satellite Images. In Proceedings of the International Society for Photogrammetry and Remote Sensing (ISPRS Congress) 2021, Nice, France, 5–9 July 2021.

14. Wang, J.; Meng, L.; Li, W.; Yang, W.; Yu, L.; Xia, G.-S. Learning to Extract Building Footprints from Off-Nadir Aerial Images. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 1294–1301.

15. Li, W.; Yang, H.; Hu, Z.; Zheng, J.; Xia, G.-S.; He, C. 3D Building Reconstruction from Monocular Remote Sensing Images with Multi-Level Supervisions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 27728–27737.

16. Shao, R.; Wu, J.; Li, J.; Peng, S.; Chen, H.; Du, C. SingleRecon: Reconstructing Building 3D Models of LoD1 from a Single Off-Nadir Remote Sensing Image. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 19588–19600.

17. Niu, Y.; Chen, H.; Li, J.; Du, C.; Wu, J.; Zhang, Y. Lgfaware-Meshing: Online Mesh Reconstruction from LiDAR Point Cloud with Awareness of Local Geometric Features. Geo-Spat. Inf. Sci. 2025, 1–19.

18. Elhashash, M.; Qin, R. Cross-View SLAM Solver: Global Pose Estimation of Monocular Ground-Level Video Frames for 3D Reconstruction Using a Reference 3D Model from Satellite Images. ISPRS J. Photogramm. Remote Sens. 2022, 188, 62–74.

19. Cui, H.; Shen, S.; Gao, W.; Hu, Z. Efficient Large-Scale Structure from Motion by Fusing Auxiliary Imaging Information. IEEE Trans. Image Process. 2015, 24, 3561–3573.

20. Kuhn, A.; Hirschmüller, H.; Scharstein, D.; Mayer, H. A TV Prior for High-Quality Scalable Multi-View Stereo Reconstruction. Int. J. Comput. Vis. 2016, 124, 2–17.

21. Xu, Q.; Kong, W.; Tao, W.; Pollefeys, M. Multi-Scale Geometric Consistency Guided and Planar Prior Assisted Multi-View Stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4945–4963.

22. Kazhdan, M.; Bolitho, M.; Hoppe, H. Poisson Surface Reconstruction. In Proceedings of the Fourth Eurographics Symposium on Geometry Processing, Sardinia, Italy, 26–28 June 2006.

23. Cazals, F.; Giesen, J. Delaunay Triangulation Based Surface Reconstruction. In Effective Computational Geometry for Curves and Surfaces; Springer: Berlin/Heidelberg, Germany, 2006; pp. 231–276.

24. Waechter, M.; Moehrle, N.; Goesele, M. Let There Be Color! Large-Scale Texturing of 3D Reconstructions. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 836–850.

25. Yang, B.; Bao, C.; Zeng, J.; Bao, H.; Zhang, Y.; Cui, Z.; Zhang, G. NeuMesh: Learning Disentangled Neural Mesh-Based Implicit Field for Geometry and Texture Editing. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 597–614.

26. Zhao, L.; Wang, H.; Zhu, Y.; Song, M. A Review of 3D Reconstruction from High-Resolution Urban Satellite Images. Int. J. Remote Sens. 2023, 44, 713–748.

27. Jeong, J. Imaging Geometry and Positioning Accuracy of Dual Satellite Stereo Images: A Review. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 235–242.

28. Facciolo, G.; De Franchis, C.; Meinhardt-Llopis, E. Automatic 3D Reconstruction from Multi-Date Satellite Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 57–66.

29. LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

30. Gao, Z.; Sun, W.; Lu, Y.; Zhang, Y.; Song, W.; Zhang, Y.; Zhai, R. Joint Learning of Semantic Segmentation and Height Estimation for Remote Sensing Image Leveraging Contrastive Learning. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5614015.

31. Buyukdemircioglu, M.; Kocaman, S.; Kada, M. Deep Learning for 3D Building Reconstruction: A Review. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLIII-B2-2022, 359–366.

32. Costa, C.J.; Tiwari, S.; Bhagat, K.; Verlekar, A.; Kumar, K.M.C.; Aswale, S. Three-Dimensional Reconstruction of Satellite Images Using Generative Adversarial Networks. In Proceedings of the 2021 International Conference on Technological Advancements and Innovations (ICTAI), Tashkent, Uzbekistan, 10–12 November 2021; pp. 121–126.

33. Christie, G.; Abujder, R.R.R.M.; Foster, K.; Hagstrom, S.; Hager, G.D.; Brown, M.Z. Learning Geocentric Object Pose in Oblique Monocular Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 14512–14520.

34. Chang, J.-R.; Chen, Y.-S. Pyramid Stereo Matching Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5410–5418.

35. Yang, G.; Manela, J.; Happold, M.; Ramanan, D. Hierarchical Deep Stereo Matching on High-Resolution Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5515–5524.

36. Le Saux, B.; Yokoya, N.; Hansch, R.; Brown, M.; Hager, G. 2019 Data Fusion Contest [Technical Committees]. IEEE Geosci. Remote Sens. Mag. 2019, 7, 103–105.

37. Wu, T.; Vallet, B.; Pierrot-Deseilligny, M.; Rupnik, E. A New Stereo Dense Matching Benchmark Dataset for Deep Learning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 405–412.

38. Marí, R.; Ehret, T.; Facciolo, G. Disparity Estimation Networks for Aerial and High-Resolution Satellite Images: A Review. Image Process. Online 2022, 12, 501–526.

39. Yang, L.; Kang, B.; Huang, Z.; Xu, X.; Feng, J.; Zhao, H. Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 10371–10381.

40. Wang, J.; Chen, M.; Karaev, N.; Vedaldi, A.; Rupprecht, C.; Novotny, D. VGGT: Visual Geometry Grounded Transformer. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 5294–5306.

41. Günaydın, E.; Yakar, İ.; Bakırman, T.; Selbesoğlu, M.O. Evaluation of Depth Anything Models for Satellite-Derived Bathymetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2025, 48, 101–106.

42. Wu, X.; Landgraf, S.; Ulrich, M.; Qin, R. An Evaluation of DUSt3R/MASt3R/VGGT 3D Reconstruction on Photogrammetric Aerial Blocks. arXiv 2025, arXiv:2507.14798.

43. Biljecki, F.; Ledoux, H.; Stoter, J. An Improved LoD Specification for 3D Building Models. Comput. Environ. Urban Syst. 2016, 59, 25–37.

44. Stucker, C.; Schindler, K. ResDepth: A Deep Residual Prior for 3D Reconstruction from High-Resolution Satellite Images. ISPRS J. Photogramm. Remote Sens. 2022, 183, 560–580.

45. Yu, D.; Ji, S.; Liu, J.; Wei, S. Automatic 3D Building Reconstruction from Multi-View Aerial Images with Deep Learning. ISPRS J. Photogramm. Remote Sens. 2021, 171, 155–170.

46. Li, W.; Meng, L.; Wang, J.; He, C.; Xia, G.-S.; Lin, D. 3D Building Reconstruction from Monocular Remote Sensing Images. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

47. Li, W.; Zhao, W.; Yu, J.; Zheng, J.; He, C.; Fu, H.; Lin, D. Joint Semantic–Geometric Learning for Polygonal Building Segmentation from High-Resolution Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2023, 201, 26–37.

48. Li, W.; Hu, Z.; Meng, L.; Wang, J.; Zheng, J.; Dong, R.; He, C.; Xia, G.-S.; Fu, H.; Lin, D. Weakly-Supervised 3D Building Reconstruction from Monocular Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5615315.

49. Li, Z.; Ji, S.; Fan, D.; Yan, Z.; Wang, F.; Wang, R. Reconstruction of 3D Information of Buildings from Single-View Images Based on Shadow Information. ISPRS Int. J. Geo-Inf. 2024, 13, 62.

50. Ling, J.; Wang, Z.; Xu, F. ShadowNeuS: Neural SDF Reconstruction by Shadow Ray Supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 175–185.

51. Wang, D.; Zhang, J.; Du, B.; Xia, G.-S.; Tao, D. An Empirical Study of Remote Sensing Pretraining. IEEE Trans. Geosci. Remote Sens. 2022, 61, 5608020.

52. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

| Method | Input protocol | Footprint AR ↑ | Footprint AP ↑ | Footprint F1 ↑ | Height MAE ↓ |
|---|---|---|---|---|---|
| VGGT + PSR | | \ | \ | \ | 4.670 |
| LoFT | single | 0.629 | 0.755 | 0.686 | 2.337 |
| MLS-BRN | single | 0.640 | 0.769 | 0.698 | 2.330 |
| ViTAE | single | 0.617 | 0.727 | 0.668 | 2.417 |
| CMRCNN | single | 0.547 | 0.646 | 0.592 | 2.861 |
| LoFT | IoU-merge | 0.614 | 0.787 | 0.690 | 2.279 |
| MLS-BRN | IoU-merge | 0.633 | 0.808 | 0.709 | 2.230 |
| ViTAE | IoU-merge | 0.592 | 0.765 | 0.668 | 2.348 |
| CMRCNN | IoU-merge | 0.494 | 0.750 | 0.596 | 2.769 |
| DualRecon | | 0.652 | 0.790 | 0.714 | 2.059 |
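The Footprint F1 column appears to be the harmonic mean of Footprint AP and Footprint AR. The short check below, which assumes that definition (it is not stated in the surviving text), recomputes F1 from the reported AR and AP values and compares it with the reported F1, allowing for rounding to three decimals.

```python
def f1_score(ar, ap):
    """Harmonic mean of recall-like (AR) and precision-like (AP) scores."""
    return 2 * ar * ap / (ar + ap)

# (AR, AP, reported F1) copied from the comparison table.
reported = {
    "LoFT (single)":       (0.629, 0.755, 0.686),
    "MLS-BRN (single)":    (0.640, 0.769, 0.698),
    "ViTAE (single)":      (0.617, 0.727, 0.668),
    "CMRCNN (single)":     (0.547, 0.646, 0.592),
    "LoFT (IoU-merge)":    (0.614, 0.787, 0.690),
    "MLS-BRN (IoU-merge)": (0.633, 0.808, 0.709),
    "ViTAE (IoU-merge)":   (0.592, 0.765, 0.668),
    "CMRCNN (IoU-merge)":  (0.494, 0.750, 0.596),
    "DualRecon":           (0.652, 0.790, 0.714),
}

# Inputs are themselves rounded, so allow a small tolerance.
max_gap = 0.0
for name, (ar, ap, f1_reported) in reported.items():
    gap = abs(f1_score(ar, ap) - f1_reported)
    max_gap = max(max_gap, gap)
    print(f"{name:<22} recomputed={f1_score(ar, ap):.4f} reported={f1_reported:.3f}")

all_consistent = max_gap < 1e-3
print("all rows consistent within 0.001:", all_consistent)
```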

| Architecture | Footprint AR ↑ | Footprint AP ↑ | Footprint F1 ↑ | Height MAE ↓ |
|---|---|---|---|---|
| Architecture (a) | 0.639 | 0.768 | 0.698 | 2.568 |
| Architecture (b) | 0.654 | 0.801 | 0.719 | 2.162 |
| DualRecon (c) | 0.652 | 0.790 | 0.714 | 2.059 |

| Disparity offset loss weight | Footprint AR ↑ | Footprint AP ↑ | Footprint F1 ↑ | Height MAE ↓ |
|---|---|---|---|---|
| 32 | 0.646 | 0.784 | 0.708 | 2.063 |
| 24 | 0.652 | 0.778 | 0.710 | 2.063 |
| 16 | 0.652 | 0.790 | 0.714 | 2.059 |
| 8 | 0.651 | 0.785 | 0.711 | 2.062 |

| Method | Inter-Camera Angle (°) | Footprint AR ↑ | Footprint AP ↑ | Footprint F1 ↑ | Height MAE ↓ |
|---|---|---|---|---|---|
| MLS-BRN | <5 | 0.635 | 0.812 | 0.713 | 2.199 |
| MLS-BRN | 5~10 | 0.633 | 0.796 | 0.705 | 2.139 |
| MLS-BRN | >10 | 0.632 | 0.835 | 0.719 | 1.825 |
| DualRecon | <5 | 0.649 | 0.803 | 0.718 | 2.095 |
| DualRecon | 5~10 | 0.653 | 0.776 | 0.709 | 1.946 |
| DualRecon | >10 | 0.654 | 0.809 | 0.723 | 1.655 |
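To make the trend across inter-camera angles easier to read, the following sketch computes the relative reduction in Height MAE of DualRecon over MLS-BRN for each angle bin. It uses only the values reported in the table; the helper name `relative_reduction` is illustrative.

```python
# Height MAE values copied from the table, keyed by inter-camera angle bin.
height_mae = {
    "<5":   {"MLS-BRN": 2.199, "DualRecon": 2.095},
    "5~10": {"MLS-BRN": 2.139, "DualRecon": 1.946},
    ">10":  {"MLS-BRN": 1.825, "DualRecon": 1.655},
}

def relative_reduction(baseline, ours):
    """Fraction by which `ours` lowers the error relative to `baseline`."""
    return (baseline - ours) / baseline

reductions = {
    angle_bin: relative_reduction(v["MLS-BRN"], v["DualRecon"])
    for angle_bin, v in height_mae.items()
}

for angle_bin, r in reductions.items():
    print(f"{angle_bin:>5} deg: Height MAE reduced by {100 * r:.1f}%")
```

Both methods achieve lower Height MAE as the inter-camera angle grows, and the relative gain of DualRecon is larger in the two wider-angle bins than in the <5° bin.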

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shao, R.; Chen, H.; Li, J.; Ma, M.; Du, C. DualRecon: Building 3D Reconstruction from Dual-View Remote Sensing Images. Remote Sens. 2025, 17, 3793. https://doi.org/10.3390/rs17233793
