Abstract

Remote sensing image classification has achieved remarkable success in environmental monitoring and urban planning using deep neural networks (DNNs). However, the performance of these models is significantly impacted by domain shifts due to seasonal changes, varying atmospheric conditions, and different geographical locations. Existing solutions, including rehearsal-based and prompt-based methods, face limitations such as data privacy concerns, high computational overhead, and unreliable feature embeddings due to domain gaps. To address these challenges, we propose DACL (dual-pool architecture with contrastive learning), a novel framework for domain incremental learning in remote sensing image classification. DACL introduces three key components: (1) a dual-pool architecture comprising a prompt pool for domain-specific tokens and an adapter pool for feature adaptation, enabling efficient domain-specific feature extraction; (2) a dual loss mechanism that combines image-attracting loss and text-separating loss to enhance intra-domain feature discrimination while maintaining clear class boundaries; and (3) a K-means-based domain selector that efficiently matches unknown domain features with existing domain representations using cosine similarity. Our approach eliminates the need for storing historical data while maintaining minimal computational overhead. Extensive experiments on six widely used datasets demonstrate that DACL consistently outperforms state-of-the-art methods in domain incremental learning for remote sensing image classification scenarios, achieving an average accuracy improvement of 4.07% over the best baseline method.

1. Introduction

In recent years, with the rapid development of deep neural networks (DNNs), applying DNNs in remote sensing image analysis has attracted massive attention and achieved promising progress [1,2]. With the increasing availability of satellite imagery, DNN-based land use and land cover (LULC) classification has become widely used in environmental monitoring and urban planning [3,4]. For example, monitoring deforestation in the Amazon Rainforest, which has lost significant coverage since 2019, requires efficient and accurate classification of massive satellite imagery [5]. By employing DNNs for land cover change detection, DNN-based remote sensing image analysis significantly improves the efficiency and accuracy of environmental monitoring, especially for large-scale areas [6].

Despite these outstanding achievements [1], most current DNNs adhere to the closed-world assumption, which requires that the classes, distribution, modalities, etc., of the testing data be identical to those of the training data [7]. In real-world applications, however, DNN models face dynamic environments, seasonal changes, and varying atmospheric conditions. For instance, the spectral signatures of vegetation types change across seasons and geographical locations. In temperate regions, deciduous forests show distinct spectral characteristics between summer and winter [8]. In tropical regions, the same vegetation types may present different features due to varying rainfall patterns and soil conditions [9]. The distribution shifts (i.e., the domain gaps) caused by changes in atmospheric conditions, seasonal variations, and sensor characteristics in real-world scenarios make it difficult to obtain a robust model through closed-set training. Therefore, there is an urgent technical need to enable DNN models to continuously and incrementally learn new knowledge from new domains while maintaining performance on old ones, i.e., domain incremental learning (DIL).

Traditional, non-deep-learning methods for remote sensing image classification, including support vector machines (SVM), random forests (RF), and k-nearest neighbors (k-NN), have been widely used for decades [10]. While these methods have demonstrated success in certain scenarios, they face several inherent limitations. First, these conventional approaches rely heavily on hand-crafted features, which require significant domain expertise and may not capture complex spatial–spectral patterns effectively. Second, their performance degrades notably when dealing with large-scale datasets or when encountering complex scenes with high intra-class variability and low inter-class separability. Third, these methods often struggle to exploit the spatial context information that is crucial for accurate remote sensing image interpretation [11].

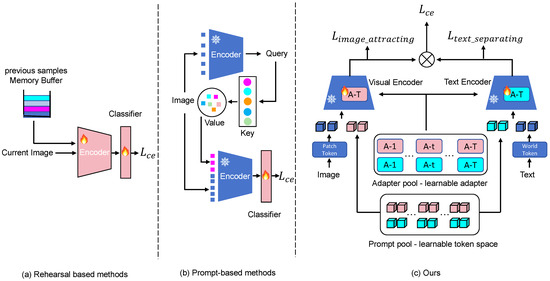

As shown in Figure 1a,b, existing deep neural network approaches to address the DIL problem can be broadly categorized into two groups: rehearsal-based methods [12,13,14] and prompt-based methods [15,16,17]. Rehearsal-based methods maintain a buffer to store representative samples or features from previous domains for replay during new learning sessions. However, these methods face significant challenges in remote sensing applications: (1) data privacy and commercial restrictions often prevent access to historical satellite imagery, and (2) the storage and transmission of high-resolution satellite images demand substantial computational resources. Prompt-based methods, on the other hand, leverage frozen pre-trained models with key–query-matching mechanisms for prompt generation. While these methods effectively mitigate catastrophic forgetting without storing previous data, they encounter two main limitations: (1) the substantial domain gap between pre-training data and remote sensing imagery leads to unreliable feature embeddings and subsequent inaccurate prompt selection, and (2) the requirement of dual forward passes introduces considerable computational overhead.

Figure 1.

(a) Rehearsal-based methods maintain a buffer to store representative samples or features from previous domains for replay during new learning sessions. (b) Prompt-based methods leverage frozen pre-trained models with key–query-matching mechanisms for prompt generation. (c) Our proposed DACL framework introduces a novel dual-pool architecture combined with a contrastive learning strategy. The framework efficiently manages domain-specific knowledge through complementary prompt and adapter pools while utilizing dual loss mechanisms (image-attracting loss and text-separating loss) to enhance feature discrimination.

To tackle these limitations, we propose a novel dual-pool architecture with contrastive learning (DACL) framework for domain incremental learning in remote sensing image classification, as shown in Figure 1c. The proposed DACL uses CLIP (contrastive language-image pre-training) [18] as the backbone and contains three major components: a dual-pool design with a prompt pool and an adapter pool to handle domain-specific feature extraction, a dual loss mechanism combining image-attracting loss and text-separating loss, and a K-means-based domain selector.

(1) In the proposed dual-pool architecture, we establish two complementary components, a prompt pool and an adapter pool, which work together to capture domain-specific characteristics. The prompt pool maintains a collection of learnable tokens, where each domain is assigned a unique set of tokens that encode domain-specific information. Concurrently, the adapter pool houses corresponding domain-adaptive modules that facilitate feature transformation and adaptation. For each domain, we learn and store a set of image prompt tokens and text prompt tokens in the prompt pool and their corresponding image adapter modules and text adapter modules in the adapter pool. The prompt tokens serve as learned domain descriptors that guide the feature extraction process, while the adapter modules provide domain-specific transformation capabilities, collectively enhancing the model’s ability to capture and distinguish domain-specific features.

(2) In the dual loss mechanism, we propose two complementary loss functions: an image-attracting loss and a text-separating loss. Both losses operate within each domain to enhance intra-domain feature learning. The image-attracting loss pulls together image features from the same class within a domain, increasing intra-class compactness. The text-separating loss pushes apart text features of different classes within the same domain, enhancing inter-class discriminability. This dual-loss design improves the model’s ability to learn discriminative features while maintaining clear class boundaries within each domain.

(3) To facilitate efficient domain adaptation, we propose a K-means-based domain selector that uses cosine similarity to establish correspondences between unknown domain features and existing domain representations. During the training phase, we construct a representative feature prototype for each domain through centroid computation. At inference time, when the domain of an input image is unknown, we first employ the pre-trained CLIP model to extract feature embeddings. Subsequently, we compute the cosine similarity between these extracted features and the established domain prototypes. The domain identifier is then determined by selecting the prototype that exhibits the highest similarity score. Based on the identified domain, the selector activates the corresponding prompt–adapter pair from the dual pools, enabling domain-specific feature processing. This mechanism ensures efficient and accurate domain adaptation without requiring explicit storage of historical data. The proposed selection strategy is particularly effective for domain incremental learning in remote sensing applications, where the model must adapt to evolving domain characteristics while maintaining performance on previously encountered domains.

Our approach offers several advantages over conventional methods that rely on sample rehearsal or architectural modifications. First, it eliminates the need for storing historical training samples, significantly reducing memory requirements. Second, the lightweight selection mechanism enables rapid domain adaptation with minimal computational overhead. Finally, the modular design ensures scalability as new domains are incorporated, making it well-suited for handling the dynamic nature of remote sensing data distributions.

To demonstrate the effectiveness of DACL, we conducted extensive experiments on various benchmark datasets. Overall, we achieved a significant improvement over previous methods for remote sensing image classification. We summarize our contributions as follows:

- We propose a novel dual-pool architecture, where each domain is assigned its specific prompt tokens and adapter modules. This domain-specific design enables effective feature extraction and adaptation for each individual domain while maintaining minimal computational overhead.

- We introduce a dual loss mechanism that enhances intra-domain classification performance through image feature attraction and text embedding separation, improving the model’s ability to learn discriminative features for each domain’s classification task.

- Extensive experiments show that our proposed method consistently outperforms state-of-the-art methods in the DIL setting.

2. Related Work

2.1. Remote Sensing Scene Classification

Leveraging the powerful capabilities of deep learning in feature extraction and pattern recognition, remote sensing image classification has seen remarkable advancements in recent years. Deep neural networks (DNNs) are particularly effective in automatically learning relevant features from input images, making them highly suitable for remote sensing image classification tasks. These advancements can be grouped into three major approaches: CNN-based models, Transformer-based models, and hybrid CNN-Transformer architectures.

Several studies, such as [19,20], have proposed training CNN-based remote sensing classification models from scratch. This approach relies entirely on the task-specific dataset to learn relevant features without any pre-existing knowledge or pre-trained weights. While training from scratch requires a significant amount of labeled data and computational resources, it offers flexibility to tailor the model to the unique characteristics of remote sensing images, such as large spatial resolutions and diverse environmental conditions. Alternatively, many works, such as [21,22,23], have adopted transfer learning by leveraging CNN models pre-trained on large-scale image datasets like ImageNet. Reference [23] not only employed pre-trained CNNs for feature extraction from the NWPU-RESISC45 dataset but also fine-tuned the learning rate in the final layer to achieve improved classification results. By fine-tuning these models for remote sensing classification tasks, researchers can take advantage of the pre-trained features learned from large and diverse datasets, significantly improving performance, especially when labeled remote sensing data are scarce. This method reduces training time and mitigates the challenge of insufficient annotated data.

Transformer models, particularly Vision Transformers (ViTs), have shown great promise in remote sensing image classification due to their ability to capture global contextual information and long-range dependencies. ViT’s architecture is based on the self-attention mechanism, which allows the model to focus on various regions of the image and process them simultaneously, improving the capture of spatial relations in the image. ViTs, as explored by [24,25,26], are especially well-suited for remote sensing tasks because they excel at handling long-range dependencies and global contextual features. Unlike CNNs, which focus primarily on local features, ViTs can model relationships across the entire image, making them effective for complex scene recognition where context and spatial relationships are crucial. As a result, ViTs have yielded promising classification results in various remote sensing applications, including land-use mapping and urban planning.

Recent research has explored combining the strengths of CNNs and Transformers, recognizing that CNNs excel at local feature extraction, while Transformers are superior at modeling global dependencies. This hybrid approach leverages the advantages of both architectures, leading to improved performance in remote sensing classification tasks. For instance, [26,27] proposed hybrid models that integrate CNNs for efficient local feature extraction with Transformers for capturing long-range contextual information. This combination allows the model to effectively analyze remote sensing images by first extracting fine-grained details through CNNs and then considering the broader contextual relationships through Transformers. This hybrid approach has demonstrated substantial improvements in classification accuracy, particularly in complex scenarios such as multi-class and multi-scale image classification.

One major challenge in remote sensing image classification is the scarcity of labeled data, which can limit the performance of deep learning models. To address this, generative adversarial networks (GANs) have been introduced to augment the dataset by generating synthetic data that mimic real-world remote sensing images. GANs, as explored by [28,29,30], can generate realistic, high-quality synthetic images based on existing data, which can then be used to expand the training dataset. This approach helps alleviate the issue of limited labeled data by providing additional samples for training, thus improving the robustness and generalization capability of the model. Furthermore, GANs can be employed to create rare or underrepresented classes in remote sensing datasets, improving the overall classification performance, especially in imbalanced datasets.

2.2. Domain Incremental Learning

Domain incremental learning (DIL) addresses scenarios where data arriving sequentially share the same categorical space but exhibit distribution shifts across different domains. In this paradigm, while the fundamental classification task remains unchanged, the underlying data distribution evolves as new domains are encountered. The primary challenge in DIL is to effectively transfer knowledge between related but distinct domains while preventing catastrophic forgetting of previously acquired domain expertise. For example, in image classification, a model initially trained on digital photos might need to adapt to sketches, paintings, or other artistic renditions of the same object categories. Despite the visual disparities between these domains, the core semantic concepts remain consistent. The overarching objectives of DIL are to maintain performance on previously encountered domains without requiring access to their historical data, and efficiently adapt to new domains by leveraging knowledge accumulated from prior domains. Existing approaches to DIL can be broadly categorized into two main streams.

Rehearsal-based methods store data samples from previous tasks and replay them when learning new tasks, helping the model retain knowledge from earlier tasks. These stored samples, known as exemplars, can include raw images [31], feature representations [32,33], or samples from a generative model [34,35,36]. By combining exemplars from old tasks with data from new tasks, the model can maintain its memory of previous tasks without the need to retrain the entire model. The experience replay (ER) method [37] utilizes a memory buffer to store samples from previous sessions and incorporates them into the new data. DER [38] improves upon ER by storing the outputs of the old model and applying a loss function to align these outputs with those of the new model.

Some methods add constraints or regularization to parameters during model training, on top of storing old data, to prevent the model from forgetting the knowledge of old tasks when learning new tasks. Some of these methods regularize parameters [39,40,41,42], while others use knowledge distillation [43,44,45,46]. EWC [47] is a classic incremental learning method that imposes regularization constraints on model parameters to prevent excessive changes in the learned old task knowledge when learning new tasks. EWC_on [43] retains the basic idea of EWC and dynamically adjusts the regularization term of the parameters according to the loss of the current task and the output of the learned task. The core idea of LwF [44] is to ensure, through knowledge distillation, that the learning of new tasks does not destroy the knowledge of old tasks.

Based on storing previous data, some methods change or extend the architecture of the model so that it can effectively learn new tasks while retaining its memory of old tasks. Some methods expand the network by adding new neurons or connections to handle new tasks.
Others allocate independent network modules for each new task, allowing the knowledge of different tasks to coexist in the model in parallel, thereby avoiding interference between tasks [45,48,49].

Methods based on prompts are widely used in the fields of NLP [50,51,52] and CV [15,53,54]. L2P [55] has pioneered the integration of prompt learning into the field of continual learning. It proposes a key–query-matching strategy to select prompts from a prompt pool, which are then incorporated into pre-trained models. Later research expands upon this key–query-matching approach. DualPrompt [56] utilizes a set of task-specific prompts and dynamically selects the most relevant one during inference based on the similarity between the input and these prompts. By designing different prompts for different tasks, this method allows the model to choose the most suitable prompt based on the characteristics of the input, thereby improving its performance and adaptability in multi-task scenarios. Recent developments have further advanced prompt-based methods. VPT [15] introduces visual prompt tuning, which adapts pre-trained vision models to downstream tasks by prepending trainable tokens to the input sequence. This approach maintains the frozen backbone while achieving competitive performance through lightweight prompt optimization. CPT [57] proposes conditional prompt tuning that generates dynamic prompts based on input conditions, enabling more flexible and context-aware feature extraction. Additionally, Prograd [58] introduces a gradient-based prompt selection mechanism that automatically determines the most relevant prompts through gradient analysis, reducing the computational overhead of traditional key–query matching. CODA [59] applies key–query matching to assign weights to prompts, facilitating end-to-end optimization. This weighted approach allows for more nuanced prompt selection and better handling of ambiguous cases. S-Prompts [16] focuses on domain incremental learning by collecting distinct prompts for each domain and using KNN to select the appropriate prompt during inference. 
Building upon this, MPT [60] proposes a multi-modal prompt tuning framework that leverages both visual and textual prompts to enhance cross-domain adaptation capabilities. In the context of efficiency and scalability, recent works have explored lightweight prompt architectures. Adapter-Tuning [61] combines prompts with efficient adapter modules to reduce parameter overhead while maintaining performance. Similarly, Tip [62] introduces a learning-free inference technique that significantly reduces computational costs during inference while preserving the benefits of incremental learning.

Among multi-layer fusion methods for CNN-based remote sensing scene classification, Chen et al. [63] proposed a multi-scale feature fusion network that hierarchically combines features from different CNN layers to enhance scale-invariant representation. Xu et al. [64] introduced a pyramid feature attention network (PFAN) that effectively fuses multi-scale features with attention mechanisms. Yuan et al. [65] developed a deep feature fusion framework that integrates both shallow and deep features through adaptive weight learning. Among attention-based CNN methods, Hou et al. [66] designed a spatial-channel attention module specifically for remote sensing scene understanding. Cui et al. [67] proposed a dual-attention fusion network that simultaneously captures spatial and channel dependencies. Kim et al. [68] introduced a multi-attention feature fusion network that selectively emphasizes informative features across different scales.

Domain incremental learning has emerged as a crucial paradigm for adapting models to new domains while preserving performance on previously learned domains [69]. Traditional DIL approaches primarily rely on knowledge distillation and regularization techniques to mitigate catastrophic forgetting. For instance, learning without forgetting (LwF) employs knowledge distillation to preserve responses on old domains while learning new ones. However, these methods often struggle with the stability–plasticity dilemma, leading to suboptimal performance across domains. More recent approaches have explored parameter-efficient adaptation strategies. Adapter-based methods [70] introduce small, domain-specific modules while keeping the backbone network frozen. While these methods show promise in parameter efficiency, they typically lack mechanisms for explicit domain-aware feature learning, potentially limiting their effectiveness in capturing domain-specific characteristics.

Our DACL framework advances beyond these existing approaches in several key aspects. Unlike conventional DIL methods that focus solely on preventing forgetting, DACL actively promotes domain-aware feature learning through contrastive learning. We introduce a novel dual-adaptation mechanism that combines both domain-specific and shared adaptations, enabling more flexible and efficient domain adaptation. Our approach maintains a better balance between domain-specific feature learning and cross-domain knowledge transfer, addressing the limitations of previous adapter-based methods.

2.3. Vision-Language Models

Recently, vision-language models have emerged as a powerful paradigm in computer vision and multimodal understanding. These models learn joint representations of visual and textual information through large-scale pre-training. CLIP [18] pioneered this direction by training on 400 million image–text pairs using contrastive learning, achieving impressive zero-shot transfer capabilities. Following CLIP’s success, Florence [71] proposed a unified vision-language representation space that further enhanced the model’s ability to understand complex visual scenarios. ALIGN [72] scaled up the training to even larger datasets with noisy image–text pairs from the internet, demonstrating the robustness of vision-language pre-training. These models have shown remarkable potential in bridging the semantic gap between visual and textual modalities, opening new possibilities for various vision tasks.

3. Method

3.1. Problem Formulation

We address the challenge of domain incremental learning (DIL) in remote sensing image classification. Given a sequence of $T$ domains arriving sequentially, where $T$ represents the number of incremental sessions, the model needs to continuously adapt to new domain knowledge while retaining previously learned information. For each domain $t$, we have a training dataset $\mathcal{D}_t^{\mathrm{train}} = \{(x_i^t, y_i^t)\}_{i=1}^{N_t}$ and a testing dataset $\mathcal{D}_t^{\mathrm{test}}$, where $x_i^t$ represents the $i$-th image from domain $t$, $y_i^t$ denotes its corresponding label, and $N_t$ is the total number of images in domain $t$. At session $t$, $\mathcal{D}_t^{\mathrm{train}}$ is the only accessible training set. After this training is completed, the model must be evaluated on the test data of all domains seen so far, i.e., $\mathcal{D}_1^{\mathrm{test}}, \dots, \mathcal{D}_t^{\mathrm{test}}$.
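The session protocol described above can be sketched as a simple loop. This is a minimal, dependency-free illustration rather than the authors' code: `train_on_domain` and `evaluate` are hypothetical callables supplied by the caller, and domains are indexed from 0 for convenience.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Sample = Tuple[object, int]  # (image, label); the image type is left abstract


def run_dil_sessions(
    train_sets: Sequence[List[Sample]],
    test_sets: Sequence[List[Sample]],
    train_on_domain: Callable[[int, List[Sample]], None],
    evaluate: Callable[[List[Sample]], float],
) -> Dict[int, List[float]]:
    """Train on one domain per session, then test on every domain seen so far."""
    results: Dict[int, List[float]] = {}
    for t, train_set in enumerate(train_sets):
        # Only the current domain's training data is accessible in session t.
        train_on_domain(t, train_set)
        # Evaluate on the test sets of domains 0..t; no old training data is reused.
        results[t] = [evaluate(test_sets[k]) for k in range(t + 1)]
    return results
```

Each entry `results[t]` holds one score per domain seen up to session `t`, so averaging the last entry gives a final average accuracy over all domains.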

3.2. Framework Overview

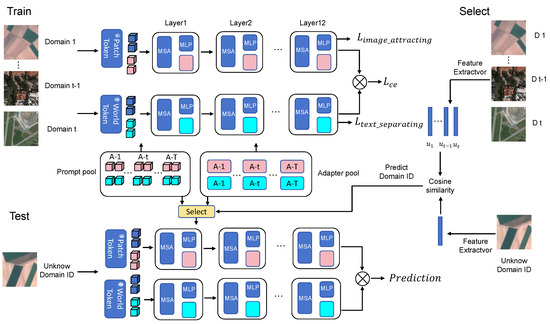

As shown in Figure 2, we propose DACL (dual-pool architecture with contrastive learning), a novel framework for domain incremental learning. Built upon the CLIP architecture, DACL consists of three key components. First, a dual-pool architecture combines a prompt pool, which stores learnable tokens for domain-specific information encoding, with an adapter pool containing domain-adaptive modules for feature transformation. Together, these pools enable effective domain-specific feature extraction. Second, a dual loss mechanism incorporates an image-attracting loss, which consolidates intra-class features, and a text-separating loss, which maximizes inter-class distances. This dual objective enhances feature discrimination while maintaining clear class boundaries. Third, a K-means domain selector builds domain prototypes during training, uses cosine similarity to match unknown inputs with existing domain representations, and activates the corresponding prompt–adapter pair for domain-specific processing.

In our architecture, prompts are implemented as learnable tokens, while adapters are constructed as MLP modules placed in parallel with the frozen MLP layers in each CLIP Transformer block. The framework starts with empty prompt and adapter pools. Upon encountering data from a novel domain, the system initializes domain-specific prompts and adapters to capture domain-specific features. Following domain-specific training, these optimized parameters are archived in their respective pools, alongside a domain prototype computed as the mean CLIP feature representation of that domain. During inference, the domain selector identifies the most similar domain prototype for each test image and activates the corresponding prompt–adapter parameter set for prediction, ensuring efficient domain-specific processing.

Figure 2.

Overview of the proposed DACL framework. During training, images from different domains are processed through domain-specific prompt–adapter combinations, with the dual loss mechanism (image-attracting loss and text-separating loss) optimizing feature discrimination. During testing, unknown domain images are first matched with the most suitable domain parameters through the K-means domain selector, and then processed through the corresponding prompt–adapter path for final prediction. The prompt pool and adapter pool collectively store and manage domain-specific knowledge, enabling efficient domain adaptation without requiring historical data storage.

3.3. Prompt Design

Our framework employs a dual-prompt strategy consisting of image prompts and language prompts, both designed to enhance domain adaptation capabilities while maintaining independence across different domains.

3.3.1. Image Prompt Design

For domain $t$, we introduce a set of learnable continuous parameters as image prompts $P_t^{I} \in \mathbb{R}^{L_I \times D_I}$, where $L_I$ and $D_I$ represent the prompt length and embedding dimension, respectively. The complete image embedding has the following format:

$$\tilde{x} = [x_{\mathrm{img}};\, P_t^{I};\, x_{\mathrm{cls}}]$$

where $x_{\mathrm{img}}$ denotes the original image tokens and $x_{\mathrm{cls}}$ represents the classification token inherited from the CLIP image encoder. This extended embedding sequence serves as input to the Transformer blocks, maintaining the CLIP image encoder processing pipeline.

As new domains arrive sequentially, we establish an image prompt pool containing domain-specific prompts:

$$\mathcal{P}^{I} = \{P_1^{I}, P_2^{I}, \dots, P_T^{I}\}$$

where $P_t^{I}$ represents the image prompt for domain $t$, and $T$ denotes the total number of domains. Each prompt set maintains independence from other domains, ensuring specialized feature extraction capabilities.
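As a rough illustration of the image prompt and its pool, the sketch below concatenates patch tokens, a domain prompt, and the class token along the sequence axis, and keeps one prompt per domain in a dictionary. The token ordering, the Gaussian initialization, and all names (`init_prompt`, `build_image_sequence`, `image_prompt_pool`) are assumptions made for the example, not details confirmed by the text.

```python
import random
from typing import Dict, List

Token = List[float]  # one embedding vector


def init_prompt(length: int, dim: int, seed: int = 0) -> List[Token]:
    """Create `length` prompt tokens of size `dim` with small random values."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(length)]


def build_image_sequence(
    img_tokens: List[Token], prompt: List[Token], cls_token: Token
) -> List[Token]:
    """Concatenate image tokens, the domain prompt, and the class token.

    The ordering is an assumption; only the concatenation is stated in the text.
    """
    return img_tokens + prompt + [cls_token]


# Image prompt pool: one independent prompt per domain id.
image_prompt_pool: Dict[int, List[Token]] = {
    0: init_prompt(length=4, dim=8, seed=0),
    1: init_prompt(length=4, dim=8, seed=1),
}

patches = [[0.0] * 8 for _ in range(16)]  # 16 dummy patch embeddings
cls = [1.0] * 8
sequence = build_image_sequence(patches, image_prompt_pool[1], cls)
print(len(sequence))  # 16 patches + 4 prompt tokens + 1 class token
```

Because each domain owns its entry in the pool, training a new domain only adds a key and never overwrites the prompts of earlier domains.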

3.3.2. Language Prompt Design

For the language modality, we propose learnable context prompts to replace conventional hand-crafted prompts in CLIP. For domain $t$, we define a sequence of $M$ learnable vectors as the language prompt:

$$P_t^{L} = [p_1, p_2, \dots, p_M] \in \mathbb{R}^{M \times D_L}$$

where $M$ and $D_L$ denote the prompt length and embedding dimension for language prompts. These prompts are designed to complement the image prompts and enhance domain-specific text representation.

For class $j$ in domain $t$, the complete text input is structured as follows:

$$w_j^{t} = [P_t^{L};\, c_j]$$

where $c_j$ represents the word embeddings of the $j$-th class name. Similar to image prompts, we maintain a language prompt pool across all domains:

$$\mathcal{P}^{L} = \{P_1^{L}, P_2^{L}, \dots, P_T^{L}\}$$

This domain-specific prompt design enables better adaptation of the pre-trained CLIP model to various domains while maintaining independence between domains, which is crucial for incremental learning scenarios.
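The text input construction can be illustrated in a few lines: the same learnable context vectors of a domain are prepended to the word embeddings of every class name. This is a hedged sketch; placing the prompt before the class name, the helper name `build_text_inputs`, and the toy embeddings are assumptions for the example.

```python
from typing import Dict, List

Vector = List[float]


def build_text_inputs(
    prompt: List[Vector], class_embeddings: Dict[str, List[Vector]]
) -> Dict[str, List[Vector]]:
    """Prepend the shared domain prompt to the word embeddings of each class name."""
    return {name: prompt + tokens for name, tokens in class_embeddings.items()}


dim = 4
domain_prompt = [[0.1] * dim for _ in range(3)]  # M = 3 learnable context vectors
class_embeddings = {
    "forest": [[1.0] * dim],                           # one word token
    "dense residential": [[2.0] * dim, [3.0] * dim],   # two word tokens
}
text_inputs = build_text_inputs(domain_prompt, class_embeddings)
```

Only `domain_prompt` would be trained in such a setup; the class-name embeddings come from the frozen text encoder's vocabulary.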

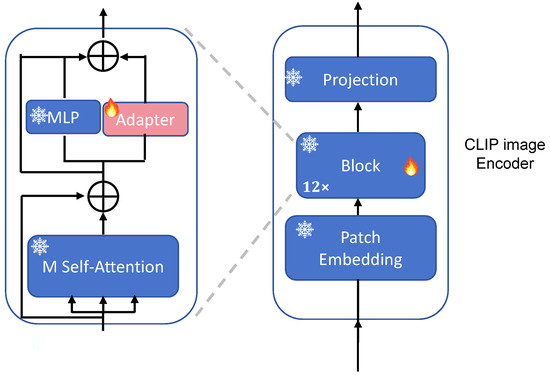

3.4. Adapter Pool Design

Our framework employs separate adapter pools for visual and textual streams to capture both domain-specific and layer-specific characteristics while maintaining cross-modal alignment. In each Transformer block, we insert a learnable adapter next to the MLP. The location of the adapter is shown in Figure 3.

Figure 3.

Adapter location diagram.

3.4.1. Visual Adapter Architecture

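For domain $t$ and Transformer layer $l$, we maintain a visual adapter pool containing domain-specific and layer-specific visual adapters:

$$\mathcal{A}^{V} = \{A_{t,l}^{V}\}, \quad t = 1, \dots, T$$

Each visual adapter $A_{t,l}^{V}$ consists of a visual down-projection $W_{\mathrm{down}}^{V} \in \mathbb{R}^{d_v \times r_v}$, a visual up-projection $W_{\mathrm{up}}^{V} \in \mathbb{R}^{r_v \times d_v}$, and an activation function (ReLU), where $d_v$ is the visual feature dimension and $r_v$ is the visual bottleneck dimension ($r_v \ll d_v$).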

3.4.2. Textual Adapter Architecture

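Similarly, we maintain a textual adapter pool for domain-specific and layer-specific language adaptation:

$$\mathcal{A}^{L} = \{A_{t,l}^{L}\}, \quad t = 1, \dots, T$$

Each textual adapter $A_{t,l}^{L}$ comprises a textual down-projection $W_{\mathrm{down}}^{L} \in \mathbb{R}^{d_l \times r_l}$, a textual up-projection $W_{\mathrm{up}}^{L} \in \mathbb{R}^{r_l \times d_l}$, and an activation function (ReLU), where $d_l$ is the text feature dimension and $r_l$ is the textual bottleneck dimension ($r_l \ll d_l$).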
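A bottleneck adapter of this kind can be sketched as down-projection, ReLU, and up-projection, run in parallel with the frozen MLP of a Transformer block. The sketch below is illustrative only: summing the two branch outputs, the zero initialization of the up-projection, and the names `BottleneckAdapter` and `block_mlp_with_adapter` are assumptions, and the same class would serve for both the visual and textual pools.

```python
import random
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major, applied to a vector as matrix @ x


def matvec(w: Matrix, x: Vector) -> Vector:
    """Compute w @ x for a row-major matrix."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


class BottleneckAdapter:
    """Down-projection -> ReLU -> up-projection, with bottleneck much smaller than dim."""

    def __init__(self, dim: int, bottleneck: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Stored as (bottleneck x dim) rows so that matvec maps dim -> bottleneck.
        self.w_down: Matrix = [
            [rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(bottleneck)
        ]
        # Zero-initialised up-projection (an assumption): the adapter starts as a no-op.
        self.w_up: Matrix = [[0.0] * bottleneck for _ in range(dim)]

    def __call__(self, x: Vector) -> Vector:
        hidden = [max(0.0, h) for h in matvec(self.w_down, x)]  # ReLU
        return matvec(self.w_up, hidden)


def block_mlp_with_adapter(
    x: Vector, frozen_mlp: Callable[[Vector], Vector], adapter: BottleneckAdapter
) -> Vector:
    """Run the frozen MLP and the adapter in parallel and sum them (assumed fusion)."""
    return [m + a for m, a in zip(frozen_mlp(x), adapter(x))]


# Adapter pool keyed by (domain, layer), mirroring the domain- and layer-specific design.
adapter_pool: Dict[Tuple[int, int], BottleneckAdapter] = {
    (t, l): BottleneckAdapter(dim=8, bottleneck=2, seed=10 * t + l)
    for t in range(2)
    for l in range(3)
}
```

With a bottleneck of 2 and a feature size of 8, each adapter here holds 32 weights, which illustrates why storing one adapter per domain and layer stays cheap compared with duplicating the backbone.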

3.5. Training Objectives

Our training framework employs three complementary loss components targeting intra-class image feature attraction, inter-class text feature repulsion, and cross-modal alignment, as follows:

3.5.1. Image Feature Attraction Loss

To enhance intra-class compactness, we employ an attraction loss that pulls together image features from the same class:

where $v_i$, $v_j$ are the visual features of two image samples, $y_i$, $y_j$ are their corresponding class labels, $\mathbb{1}[y_i = y_j]$ is an indicator function that equals 1 when $y_i = y_j$, and $N$ is the batch size.
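To make the idea of an attraction loss concrete, the sketch below assumes one plausible form: the average cosine distance over all distinct same-class pairs in a batch. The choice of cosine distance and the pair-count normalization are assumptions made for illustration and may differ from the loss actually used.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0.0 else 0.0


def image_attraction_loss(features: List[Vector], labels: List[int]) -> float:
    """Average (1 - cosine similarity) over distinct same-class pairs (assumed form)."""
    total, pairs = 0.0, 0
    for i in range(len(features)):
        for j in range(i + 1, len(features)):
            if labels[i] == labels[j]:  # indicator: only same-class pairs contribute
                total += 1.0 - cosine(features[i], features[j])
                pairs += 1
    return total / pairs if pairs else 0.0
```

Under this form the loss is zero when all same-class features point in the same direction and grows as they spread apart, which matches the stated goal of intra-class compactness.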

3.5.2. Text Feature Repulsion Loss

To enhance inter-class discriminability, we introduce a repulsion loss that pushes apart text features from different classes:

where $u_i$, $u_j$ are the textual features of two text samples, m is the margin parameter, and $\mathbb{1}[y_i \neq y_j]$ is an indicator function that equals 1 when $y_i \neq y_j$.
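To make the two pairwise terms concrete, the following sketch shows one plausible instantiation. The exact distance function and normalization used by DACL are not recoverable from the text here, so the squared Euclidean distance, the squared hinge on the margin, and the division by N² are assumptions in the style of a classic contrastive loss; the function names are hypothetical.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def attraction_loss(image_feats, labels):
    """Assumed form: squared distance summed over same-class pairs, divided by N^2."""
    n = len(image_feats)
    total = 0.0
    for i in range(n):
        for j in range(n):
            if i != j and labels[i] == labels[j]:
                total += euclidean(image_feats[i], image_feats[j]) ** 2
    return total / (n * n)


def repulsion_loss(text_feats, labels, margin):
    """Assumed form: squared hinge on different-class pairs closer than the margin."""
    n = len(text_feats)
    total = 0.0
    for i in range(n):
        for j in range(n):
            if labels[i] != labels[j]:
                gap = margin - euclidean(text_feats[i], text_feats[j])
                total += max(0.0, gap) ** 2
    return total / (n * n)
```

Under these assumptions, the attraction term is zero when all same-class image features coincide, and the repulsion term is zero once every pair of different-class text features is at least m apart.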

3.5.3. Cross-Modal Alignment Loss

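To optimize the alignment between image and text features within the same class, we employ a cross-entropy loss:

$$\mathcal{L}_{\mathrm{ce}} = -\frac{1}{N}\sum_{i=1}^{N} \log \frac{\exp\left(\langle v_i, u_{y_i} \rangle / \tau\right)}{\sum_{j=1}^{N} \exp\left(\langle v_i, u_{y_j} \rangle / \tau\right)}$$

where $\langle \cdot, \cdot \rangle$ denotes the inner product between image and text features, $\tau$ is the temperature parameter, the summation in the denominator is over all image–text pairs in the batch, and $u_{y_i}$ represents the category text feature corresponding to image i.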
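The cross-modal term can be sketched as a temperature-scaled softmax cross-entropy over the batch. This is an illustrative reading of the description above, not the authors' code: `text_feats[i]` is assumed to hold the category text feature paired with image i, and the function name is hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_modal_loss(image_feats, text_feats, temperature):
    """Mean cross-entropy where image i should match text_feats[i].

    The denominator runs over the text features of every pair in the batch.
    """
    total = 0.0
    for i, feat in enumerate(image_feats):
        logits = [dot(feat, text) / temperature for text in text_feats]
        peak = max(logits)  # subtract the max for numerical stability
        log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_norm - logits[i]
    return total / len(image_feats)
```

A smaller temperature sharpens the softmax, so mismatched pairs with even moderately high inner products are penalized more strongly.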

3.6. K-Means Domain Selector

During the training phase, we constructed domain prototypes to facilitate automatic domain selection for test images with unknown domain labels. For each domain, we computed a representative prototype by averaging the features of all training images within that domain:

$$\mu_t = \frac{1}{N_t}\sum_{i=1}^{N_t} E_v(x_i^t)$$

where $\mu_t$ represents the domain prototype for the t-th domain, $N_t$ is the number of training images in the t-th domain, and $E_v(x_i^t)$ denotes the CLIP visual encoder applied to the i-th image. This process results in a set of domain prototypes $\{\mu_1, \dots, \mu_T\}$, where T represents the total number of domains.

Domain Selection and Inference

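To select the correct domain prototype, we first extract the feature of the input image $x$:

$$f_x = E_v(x)$$

where $E_v$ denotes the CLIP visual encoder. The domain whose prototype has the highest cosine similarity to this feature is selected:

$$\hat{t} = \arg\max_{t \in \{1, \dots, T\}} \cos(f_x, \mu_t)$$

where $\mu_t$ is the prototype of the t-th domain.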

We retrieve the corresponding domain-specific parameters from the dual pools: the visual prompt from the image prompt pool, the language prompt from the language prompt pool, the visual adapter from the visual adapter pool, and the textual adapter from the textual adapter pool.

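The similarity scores between the visual feature and the text features of all classes are calculated as follows:

$$s_j = \cos(v, u_j), \quad j = 1, \dots, C$$

where $v$ is the adapted visual feature, $u_j$ is the text feature of the j-th class, and C is the number of classes. The final prediction is based on the highest similarity score, as follows:

$$\hat{y} = \arg\max_{j} s_j$$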

This inference mechanism effectively leverages our dual-pool architecture to enhance both visual and textual representations, enabling accurate cross-modal alignment and predictions across different domains. The overall procedure is summarized in Algorithm 1.
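The prototype construction and selection steps of this section can be sketched in a few lines of pure Python. The sketch assumes that features have already been extracted by the frozen visual encoder and that a single mean prototype is kept per domain, as described above; the function names and the toy domain keys are hypothetical.

```python
import math


def mean_vector(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def build_prototypes(features_by_domain):
    """Average the training features of each domain into one prototype."""
    return {d: mean_vector(feats) for d, feats in features_by_domain.items()}


def select_domain(feature, prototypes):
    """Return the domain whose prototype is most cosine-similar to the feature."""
    return max(prototypes, key=lambda d: cosine(feature, prototypes[d]))


def predict_class(visual_feature, class_text_feats):
    """Return the index of the class text feature with the highest similarity."""
    scores = [cosine(visual_feature, t) for t in class_text_feats]
    return max(range(len(scores)), key=scores.__getitem__)
```

In use, the selected domain key would index the prompt and adapter pools before the adapted visual and text features are passed to `predict_class`.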

Algorithm 1: Domain incremental learning with dynamic component selection.

4. Experiment

4.1. Experimental Setup

4.1.1. Datasets

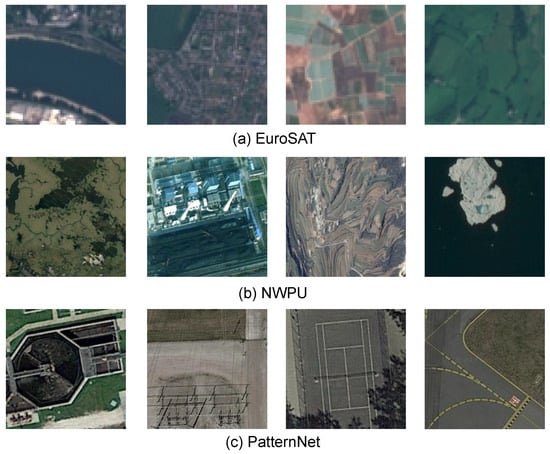

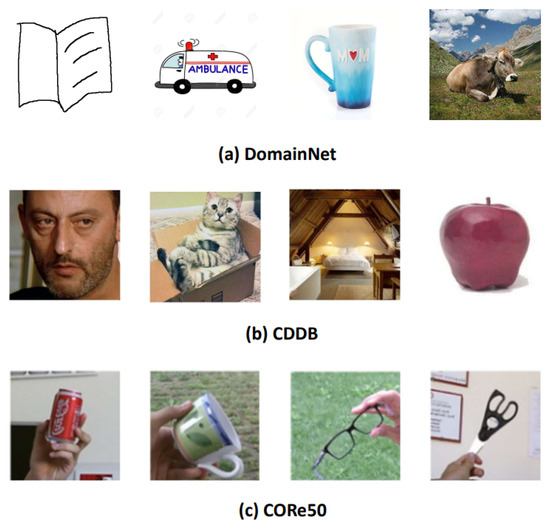

We conducted a series of experiments on three widely recognized benchmark datasets (see Figure 4) for remote sensing image classification tasks and on three natural datasets (see Figure 5).

Figure 4.

Sample images from EuroSAT, NWPU, and PatternNet datasets.

Figure 5.

Sample images from DomainNet, CDDB, and CORe50 datasets.

EuroSAT [73] is a well-established dataset for land use and land cover classification, widely used in remote sensing and machine learning research. It is derived from Sentinel-2 satellite imagery, which provides high-resolution, multi-spectral data useful for analyzing various types of land cover across the globe. The dataset includes 13 spectral bands, spanning from visible to infrared wavelengths, offering comprehensive information that is critical for distinguishing different land cover types. This makes EuroSAT particularly valuable for developing and evaluating models that require multi-spectral input to identify patterns in land use. The EuroSAT dataset contains 27,000 labeled and georeferenced images, each representing a specific geographical area and land use type. These images are annotated into 10 distinct classes, which include common land cover categories such as forests, agricultural areas, urban regions, water bodies, and roads. The labels are designed to represent both natural and man-made environments, enabling a diverse range of classification tasks. The 10 classes in EuroSAT are as follows: Annual Crop, Forest, Herbaceous Vegetation, Highway, Industrial, Pasture, Permanent Crop, Residential, River, and Sea/Lake.

NWPU [23] is another prominent benchmark dataset designed for remote sensing image scene classification. It has become a widely adopted resource in the remote sensing and computer vision communities for evaluating and comparing classification models. The dataset consists of a total of 31,500 high-resolution images, carefully collected and categorized into 45 distinct scene categories. These categories span a wide variety of land scenes and environments, making the dataset highly valuable for training and evaluating models that need to classify complex, real-world geographic settings. Each of the 45 categories in the dataset is represented by 700 images, resulting in a balanced distribution of images across all categories. The dataset is specifically designed to cover a diverse range of land cover types and geographic environments, including both natural landscapes and human-made structures. The scene categories in the NWPU dataset include (but are not limited to) urban areas, agricultural land, forests, mountains, deserts, water bodies, and various infrastructure types such as airports, roads, bridges, and ports. This diversity allows researchers to explore a broad array of remote sensing applications, from environmental monitoring to urban planning and disaster management. The images in the NWPU dataset are geo-referenced, meaning they include spatial metadata, making it particularly useful for geospatial analysis and geographic information system (GIS) applications. The dataset was compiled from various sources of remote sensing imagery, including satellite imagery, aerial photography, and drone-captured images, all processed to standardize their resolution and size for consistency across the dataset.

PatternNet [74] is a specialized dataset created for land use classification in remote sensing imagery. It includes images from 38 distinct land use categories, with each category containing 800 images, resulting in a total of 30,400 high-resolution images. These images are collected from a wide range of cities across the United States, providing a diverse set of land use examples that are typical of real-world remote sensing applications. One of the key features of PatternNet is its large scale. The dataset is designed to support the development and evaluation of deep learning models, which require vast amounts of labeled data. It serves as an alternative to other datasets, such as the UC Merced dataset (UCMD), and aims to push the boundaries of remote sensing image retrieval (RSIR), particularly for deep learning-based methods that depend on large, well-annotated datasets for training. Moreover, PatternNet is characterized by both high intra-class diversity and high inter-class similarity, which presents a unique challenge for classification models. Because each land use category contains images with significant variations in appearance, models trained on PatternNet are exposed to complex and varied scenarios, improving their robustness and ability to generalize to new data.

DomainNet [75] is widely used for cross-domain learning tasks. It consists of 6 distinct image domains, with 345 categories in each domain, including Clipart (vector art images), Infograph (infographics combining images and text), Painting (hand-painted artworks), Quick, Draw! (simple sketches from Google Quick, Draw!), Real (real-world photographs), and Sketch (hand-drawn sketches with abstract lines and shapes). The training set of DomainNet contains over 400,000 images, which are distributed across the six domains, providing a rich source of labeled data for training. The test set is composed of more than 170,000 images, enabling rigorous evaluation of models’ generalization capabilities across domains. The dataset is structured in a way that enables the evaluation of domain adaptation methods, where models are typically trained on one domain (the source domain) and tested on another (the target domain). Furthermore, DomainNet is also beneficial for exploring the limits of deep learning models in handling highly varied and large-scale datasets. It has served as a benchmark in multiple papers proposing novel domain adaptation methods, including moment matching, adversarial learning, and contrastive learning techniques. With its large size and diverse set of domains, it remains one of the most challenging datasets for testing cross-domain learning approaches.

CDDB [76] is a deepfake detection benchmark dataset designed to evaluate the performance of detection models on deepfakes generated by multiple generative models. This dataset is particularly valuable for testing the robustness of deepfake detection algorithms in realistic scenarios, where the generated content may vary significantly in quality, complexity, and source model. To highlight the diverse range of generative techniques used to create deepfakes, CDDB is organized into different tracks, each focusing on a unique subset of deepfake generation methods. Among these tracks, we focus on the challenging hard track, which includes a diverse set of deepfake generation models that vary in their complexity and the level of realism they can produce. The hard track includes models such as GauGAN, BigGAN, WildDeepfake, WhichFaceReal, and SAN. Each of these models represents a distinct approach to generating deepfakes, thereby presenting different challenges for detection algorithms.

CORe50 [77] is a comprehensive and challenging dataset designed for studying object recognition in dynamic, real-world environments. It contains a total of 50 object classes, covering a wide variety of common household objects, such as toys, electronics, and tools. The dataset is divided into 11 distinct domains, with 8 domains dedicated to training and 3 domains reserved for testing. Each domain consists of approximately 15,000 images, offering a rich variety of data for model training and evaluation. The CORe50 dataset stands out due to the extensive variations in environmental conditions under which the images are captured. The samples span different lighting conditions, backgrounds, viewpoints, and motion blur effects, ensuring that models are exposed to realistic, real-world variability. This feature makes CORe50 particularly well-suited for evaluating the generalization ability of machine learning models, especially when exposed to changing or unseen environmental factors.

4.1.2. Evaluation Metrics

We use four metrics to evaluate the training effect of the model:

(1) Following the training phase encompassing S domains, the model’s performance is evaluated using the accuracy metric on the respective test sets across all domains:

$$A_s = \frac{n_s^{\mathrm{correct}}}{n_s^{\mathrm{total}}}$$

where $n_s^{\mathrm{correct}}$ indicates the number of samples that were correctly classified and $n_s^{\mathrm{total}}$ represents the total sample size. For different domains, we have a series of corresponding accuracies: $A_1, A_2, \dots, A_S$. The average accuracy quantifies the model’s cross-domain generalization capability by computing the mean performance across individual domain test sets:

$$A_{\mathrm{avg}} = \frac{1}{S}\sum_{s=1}^{S} A_s$$

Specifically, $A_{\mathrm{avg}}$ represents the arithmetic mean of domain-specific classification accuracies, providing a comprehensive metric for assessing the model’s efficacy in managing multiple domains within the domain-incremental learning paradigm.

(2) We use the forgetting degree as a measure of how much the model loses memory of previously learned domain knowledge when it learns new domains. The forgetting degree for a specific domain can be expressed as follows:

$$F_i = A_i^{\mathrm{first}} - A_i$$

where $A_i^{\mathrm{first}}$ represents the accuracy obtained when the i-th domain is introduced into the training process and trained for the first time, and $A_i$ is the accuracy on the same domain after all S domains have been learned. Specifically, $F_i$ quantifies the extent to which the model’s performance on old domains deteriorates as it adapts to new domains. A higher forgetting degree indicates a greater loss of accuracy on the old domains after training on new ones, reflecting the model’s inability to retain previously acquired knowledge. Conversely, a lower forgetting degree suggests that the model can maintain its performance on old domains while successfully learning new tasks, demonstrating its capacity for better knowledge retention and adaptability in a domain-incremental learning setting.

(3) “Parameters” refers to the total number of additional trainable parameters introduced in the model, excluding those in the frozen backbone.

These parameters are typically added to the model during incremental learning to adapt it to new tasks or domains. By tracking the number of parameters, we can measure the model’s complexity and the resources required for learning new knowledge without altering the core, pre-trained components. This helps assess how well the model scales when continuously learning from new data while maintaining the stability of the backbone network.

(4) “Buffer size” refers to the number of samples from previous domains that the model stores during the learning process. If no samples from previous domains are used, then the buffer size is 0. This buffer is used to mitigate the problem of catastrophic forgetting by retaining representative examples from old domains. These stored samples are periodically reintroduced into the training process to help the model maintain its performance on earlier tasks while learning new ones. The buffer size directly impacts the model’s ability to balance memory retention and resource efficiency, as a larger buffer can preserve more knowledge but requires more storage and computational resources. If the model achieves relatively high accuracy with a smaller buffer size, it indicates that the model has high performance, as it can retain essential knowledge from previous domains without needing a large memory buffer. This suggests that the model is effective at generalizing and minimizing the loss of knowledge when learning new tasks.
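Metrics (1) and (2) above reduce to a few lines of arithmetic. The sketch below assumes that accuracies are expressed in percent and that forgetting is the drop from the accuracy measured when a domain is first learned to its accuracy after the final session; the function names and the sample numbers in the usage note are hypothetical, not results from this paper.

```python
def accuracy(num_correct, num_total):
    """Per-domain accuracy in percent."""
    return 100.0 * num_correct / num_total


def average_accuracy(final_accs):
    """Arithmetic mean of the per-domain accuracies after the last session."""
    return sum(final_accs) / len(final_accs)


def forgetting(first_accs, final_accs):
    """Per-domain drop from first-trained accuracy to final accuracy."""
    return [first - final for first, final in zip(first_accs, final_accs)]
```

For example, with hypothetical first-trained accuracies of 92.0 and 80.0 and final accuracies of 90.0 and 80.0, the forgetting degrees are 2.0 and 0.0, and the average accuracy is 85.0.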

4.1.3. Implementation Details

For fairness, all methods use the pre-trained CLIP ViT-B/16 model as the backbone. For each incremental session, the model is trained with the SGD optimizer for 30 epochs with a batch size of 128, except for DomainNet, where the batch size is 256. The learning rate is 0.01, the learning rate decay is 0.1, the weight decay is $2 \times 10^{-4}$, and the number of workers is 16.
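For reproducibility, the hyperparameters listed above can be collected in a small configuration object. This is only a sketch: the class name, field names, and helper are hypothetical, and the schedule on which the learning rate decay of 0.1 is applied is not specified in the text, so it is stored as a plain value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    backbone: str = "CLIP ViT-B/16"
    optimizer: str = "SGD"
    epochs: int = 30
    batch_size: int = 128
    learning_rate: float = 0.01
    lr_decay: float = 0.1        # decay factor; schedule not stated in the text
    weight_decay: float = 2e-4
    num_workers: int = 16


def config_for(dataset_name):
    """DomainNet uses a batch size of 256; all other datasets use 128."""
    if dataset_name.lower() == "domainnet":
        return TrainConfig(batch_size=256)
    return TrainConfig()
```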

In the context of domain incremental learning for remote sensing imagery, we evaluate our proposed DACL framework against several state-of-the-art methods: VPT [15], CoOp [17], CLIP-Adapter [70], CoCoOp [57], DAPT [78], and S-Prompts [16]. VPT exclusively addresses visual adaptation, while CoOp emphasizes learning task-specific text prompts. CLIP-Adapter introduces adaptation layers on top of the CLIP model, allowing the model to flexibly adapt to downstream tasks while retaining the strong representation capabilities of the original model. CoCoOp builds upon CoOp by introducing a lightweight neural network to generate input-conditional tokens (vectors) for each image. These tokens are added to the learnable vectors from CoOp, enabling better image-specific adaptation. DAPT leverages a dynamic adapter mechanism that assigns a unique scale to each token, ensuring that tokens are adjusted based on their “importance scores” to better adapt to 3D samples. Additionally, DAPT proposes an internal prompt strategy, which directly utilizes the adapter’s output to construct prompts. This approach helps the model capture both instance-specific features and global perspectives. S-Prompts adopts a more comprehensive approach by simultaneously optimizing both text and visual prompt tokens to enhance cross-domain adaptation capabilities.

For evaluation on the three classic DIL benchmark datasets, S-Prompts serves as the primary baseline. EWC [39] and L2P [55], which do not require storing samples from previous domains, are important points of comparison for both accuracy and parameter efficiency. The comparison also includes rehearsal-based methods such as DyTox [12]. However, because they need substantial memory to cache samples, their results are reported primarily for reference.

4.2. Comparison Results

4.2.1. Results on Remote Sensing Datasets

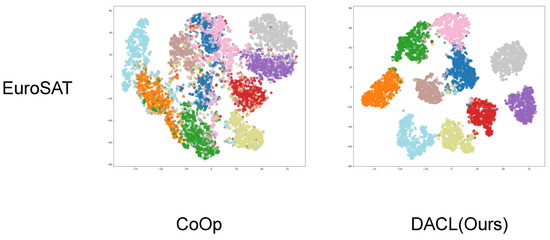

Overall Performance: Tables 1–6 show the per-domain results in the final session for six different domain orders. Figure 6 shows a visual comparison of DACL and CoOp. Across all six orders, the proposed method consistently outperforms every baseline.

Table 1.

Domain-incremental results on order 1 on the final session. The accuracy (%) on each dataset is reported, as well as the average accuracy (All). The best results are marked in bold.

Table 2.

Domain-incremental results on order 2 on the final session. The accuracy (%) on each dataset is reported, as well as the average accuracy (All). The best results are marked in bold.

Table 3.

Domain-incremental results on order 3 on the final session. The accuracy (%) on each dataset is reported, as well as the average accuracy (All). The best results are marked in bold.

Table 4.

Domain-incremental results on order 4 on the final session. The accuracy (%) on each dataset is reported, as well as the average accuracy (All). The best results are marked in bold.

Table 5.

Domain-incremental results on order 5 on the final session. The accuracy (%) on each dataset is reported, as well as the average accuracy (All). The best results are marked in bold.

Table 6.

Domain-incremental results on order 6 on the final session. The accuracy (%) on each dataset is reported, as well as the average accuracy (All). The best results are marked in bold.

Figure 6.

Feature visualization comparison on the EuroSAT dataset using t-SNE. From left to right: the original feature distribution, features learned by CoOp, and features learned by our proposed DACL method. The visualization demonstrates that DACL achieves better feature separation and more compact clusters compared to CoOp, indicating superior domain-specific feature learning and class discrimination capabilities. Different colors represent different classes, and the more distinct boundaries between clusters in DACL suggest improved classification performance.

During the training process, as multiple domains are incrementally introduced for training, we use KNN clustering to classify the instances. With each new domain added, a corresponding set of cluster centers is also added. This approach allows us to efficiently determine the category of each instance during testing. As shown in Table 7, our method achieves a high classification accuracy across different domains, demonstrating its effectiveness in handling domain-specific variations. As observed, due to the larger number of categories in the NWPU dataset, the classification task is more challenging. As a result, the corresponding KNN classification accuracy is slightly lower compared to other datasets.

Table 7.

Accuracy of category classification using KNN clustering on order 1.

Conventional methodologies encounter significant scalability constraints as buffer requirements expand with progressive incremental sessions, resulting in increased memory utilization and computational complexity. In contrast, our proposed framework circumvents these limitations, rendering it particularly advantageous for extended incremental learning scenarios. While elevated forgetting rates are observed in instances of domain overlap between the NWPU and PatternNet datasets, this phenomenon does not significantly compromise the framework’s efficacy in mitigating catastrophic forgetting.

Traditional approaches predominantly rely on pre-trained architectures optimized for natural image processing, which exhibit suboptimal performance when applied to remote sensing imagery. Our end-to-end framework offers enhanced computational efficiency while maintaining robust classification performance metrics.

Evaluation of Different Orders: The proposed method achieves mean accuracies of 88.95%, 89.08%, 89.32%, 89.14%, 89.39%, and 88.87% across orders 1 through 6, respectively, consistently surpassing the compared methods, including S-Prompts. Notably, our framework exhibits substantial margins over the second-best method, with improvements of 4.07%, 3.74%, 3.70%, 4.38%, 4.58%, and 3.82% across the respective orders.

The framework’s robust performance across varying sequential orders can be attributed to its architectural design, specifically the independent branch structure comprising the adapter-pool and prompt-pool components. This modular architecture enables independent domain knowledge acquisition, conferring order-robustness and invariance properties across different continual learning sequences.
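The order-robustness claim can be checked directly from the six mean accuracies and margins reported above. The short sketch below only summarizes those reported numbers; the variable names are illustrative.

```python
from statistics import mean

# Mean accuracies (%) and margins over the second-best method, orders 1-6,
# as reported in the text above.
order_accs = [88.95, 89.08, 89.32, 89.14, 89.39, 88.87]
margins = [4.07, 3.74, 3.70, 4.38, 4.58, 3.82]

mean_acc = mean(order_accs)                      # average over the six orders
acc_range = max(order_accs) - min(order_accs)    # spread between best and worst order
mean_margin = mean(margins)                      # average gain over the runner-up
```

The spread between the best and worst order is about 0.52 percentage points around a mean of roughly 89.1%, while the average margin over the second-best method is about 4.05 percentage points, so the choice of domain order matters far less than the choice of method.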

Evaluation of Different Domains: Empirical observations indicate that comparative methodologies demonstrate satisfactory performance on EuroSAT and PatternNet datasets while exhibiting significant performance degradation on the NWPU dataset. Our framework, through the preservation of previously acquired parameters and domain-specific fine-tuning of the adapter and prompt learner parameters, achieves superior classification outcomes.

On the EuroSAT dataset, our methodology surpasses the second-highest-performing approach by margins of 2.23%, 2.40%, 2.02%, 2.23%, 1.78%, and 2.04% across orders 1 through 6, respectively. For the PatternNet dataset, performance improvements of 0.59%, 0.44%, 0.26%, 0.23%, 0.43%, and 0.58% are observed across the respective orders. Given the pronounced inter-class distinctions within these datasets, while all comparative methods achieved notable classification accuracy, our proposed approach consistently demonstrated superior performance metrics. The substantial class separability in these datasets contributed to elevated performance metrics across all methodologies, yet our framework maintained consistent superiority in classification precision.

The NWPU dataset evaluation reveals more substantial performance differentials, with our methodology surpassing the second-highest-performing approach by 4.80%, 8.38%, 7.59%, 9.96%, 10.55%, and 7.23% across orders 1 through 6, respectively. This dataset presents more nuanced inter-class variations and heightened challenges in class discrimination, with intricate class boundaries rendering precise categorization particularly demanding. While conventional approaches exhibited suboptimal performance under these challenging conditions, our framework maintains robust classification capabilities, achieving substantially superior results despite the inherent complexity of the classification task.

Training Across All Domains: As shown in Table 8, to evaluate the performance of our proposed method, we conduct joint training using datasets from all domains and test the trained model on all samples to obtain the average accuracy. As shown, our method achieves an accuracy that is 3.41% higher than the second-best method, S-Prompts. This indicates that, even without considering incremental learning, the adapter structure we proposed is highly effective.

Table 8.

Results when all domains are used jointly as training samples. The average accuracy (%) is reported. The best results are marked in bold.

4.2.2. Results on Classic DIL Datasets

We conducted a comprehensive evaluation comparing our proposed DACL framework against state-of-the-art DIL methods. Our comparison focused on both average accuracy and forgetting metrics to assess the ability of each method to maintain high performance while mitigating catastrophic forgetting over time. In addition, we explored the extra parametric overhead introduced by tuning-based approaches, providing a deeper understanding of their efficiency in real-world applications.

One of the key strengths of DACL lies in its efficient design, which strategically limits the number of parameters requiring optimization. Specifically, DACL exclusively trains the adapter pool and the prompt pool, enabling it to effectively capture domain-specific characteristics during incremental learning. This targeted approach ensures that the model adapts to new domains and tasks while keeping the number of trainable parameters to a minimum, thus preventing unnecessary complexity.

Moreover, this streamlined architecture allows DACL to substantially reduce the parametric burden when scaling to scenarios involving numerous classes and domains. As the number of tasks grows, DACL continues to provide a resource-efficient solution, outperforming existing methods in terms of both accuracy and computational efficiency. This makes DACL particularly well-suited for large-scale incremental learning tasks, where memory and computational resources may be limited.

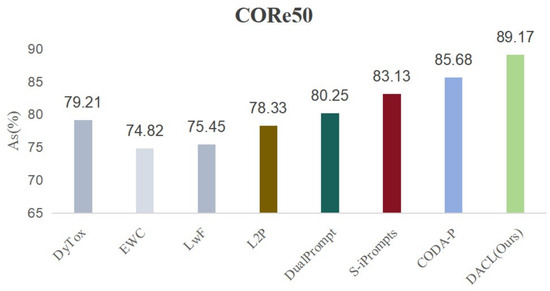

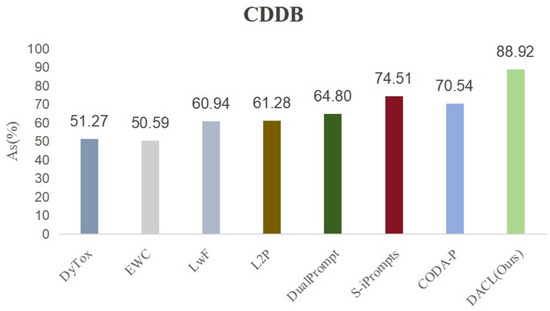

Table 9 shows the comparison of results on the DomainNet dataset. Our model achieves an accuracy improvement of +2.64% while having only slightly more trainable parameters than the SOTA method. In terms of the forgetting degree, DACL also achieves SOTA results. Figure 7 shows the accuracy results on the CORe50 dataset. Note that the forgetting degree does not apply to the CORe50 dataset because the training and test sets of CORe50 are from non-overlapping domains. Figure 8 shows the accuracy results on CDDB-Hard. CDDB is a deepfake detection dataset with only two classes: real and fake. The CDDB-Hard track contains only five domains, each with these two classes. On the CORe50 and CDDB-Hard datasets, our accuracy is still significantly higher than that of other methods.

Table 9.

Comparison results on the DomainNet dataset. The domain ID of the test image is unknown in the DIL setting. The average accuracy (%) is measured after training on all S tasks. The best exemplar-free DIL results are marked in bold.

Figure 7.

The comparison results on the CORe50 dataset.

Figure 8.

The comparison results on the hard track of the CDDB dataset.

4.3. Ablations

4.3.1. Ablation Study on Loss Components

This section presents an ablation analysis conducted on order 1 to evaluate the differential impact of loss function components on model performance. As demonstrated in Table 10, optimal performance is achieved with the complete loss function implementation, exhibiting a 0.88% performance margin over the second-highest configuration. The systematic degradation in model accuracy observed with partial loss function implementations underscores the significance of comprehensive loss component integration.

Table 10.

Loss analysis on order 1. The best result is indicated in bold.

The consistency of this performance pattern across varied configurations emphasizes the critical nature of maintaining complete loss component integration for optimal performance metrics. The ablation analysis provides compelling evidence for the substantial contribution of each component to the model’s overall efficacy. Component removal or modification results in diminished feature capture capabilities, manifesting in quantifiable performance deterioration. This observation suggests that the loss components exhibit non-additive interactions that enhance model robustness and generalization capabilities.

Furthermore, the performance differential between the complete model and its ablated variants indicates the fundamental importance of integrated loss components in addressing complex computational tasks. Each component addresses specific aspects of the learning process, encompassing convergence optimization, feature discrimination enhancement, and training stability maintenance. The empirical evidence supports the conclusion that the synergistic interaction of all components is instrumental in achieving optimal model performance.

4.3.2. Component Ablations

Table 11 presents the ablation studies of the adapter pool and prompt pool on order 1, demonstrating the effectiveness of the two components. From the second and third rows, we can observe that using either component alone does not yield the best classification performance. Specifically, the accuracy achieved with the adapter pool alone is lower than that of the prompt pool alone. As shown in the fourth row of the table, using both components simultaneously yields an average accuracy that is 4.07% higher than the second-highest accuracy, which is significantly better than using either component alone. The results show that increasing the number of trainable parameters leads to more significant improvements in classification performance. This confirms that the model benefits from both the tailored inputs (prompts) and the specialized transformations (adapters) that are designed to optimize the model’s capacity for each specific domain. When either of these components is missing, the model is unable to fully leverage the domain-specific information, resulting in suboptimal performance.

Table 11.

Adapter-pool and prompt-pool ablation experiments on order 1. We report the accuracy (%) on each domain test set and the average accuracy (%).

In conclusion, the ablation experiment highlights the importance of incorporating both the prompt pool and adapter pool in domain-incremental tasks. The results confirm that using the best-performing prompts and adapters for each domain is a key factor in improving the model’s ability to generalize and perform well across different domains. Future work could explore further refinements to these pools or investigate alternative strategies for domain adaptation, but the current findings clearly establish the value of dynamic, domain-specific selection for optimal model performance.

4.3.3. Dimensional Ablation in Adapter Architectures

As shown in Table 12, we conduct ablation experiments to explore the impact of the adapter’s dimensionality on order 1. It is important to clarify that the dimensionality here refers to the size of the bottleneck structure within the adapter, specifically the input and output dimensions of the projection layers. To control the number of parameters, adapters typically use a “bottleneck” architecture that first reduces the input dimensionality before expanding it back to the original size via a projection layer. This design helps to lower both the computational cost and the total number of parameters while maintaining the model’s capacity.

Table 12.

Ablation experiments on adapter dimension on order 1. We report the accuracy (%) on each domain test set and the average accuracy (%).

In this ablation study, we investigated the impact of the adapter bottleneck dimensionality by comparing two configurations: 32 and 64 dimensions. The 64-dimensional setting consistently achieved higher accuracy than its 32-dimensional counterpart. Based on these experimental findings, we adopted 64 as the bottleneck dimensionality for the adapter architecture in subsequent experiments.
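The parameter cost of the two bottleneck sizes can be estimated with simple arithmetic. The sketch below assumes one adapter per Transformer layer in the visual stream, a down-projection and an up-projection with bias terms, and the standard CLIP ViT-B/16 visual encoder shape (width 768, 12 layers); the function name is hypothetical and the counts are estimates under these assumptions, not figures reported in the paper.

```python
def adapter_param_count(feature_dim, bottleneck_dim, num_layers, with_bias=True):
    """Trainable parameters of one domain's adapters in a single stream."""
    # Down-projection and up-projection weight matrices.
    per_adapter = 2 * feature_dim * bottleneck_dim
    if with_bias:
        # One bias vector per projection.
        per_adapter += bottleneck_dim + feature_dim
    return per_adapter * num_layers


# CLIP ViT-B/16 visual encoder: width 768, 12 Transformer layers.
params_r32 = adapter_param_count(768, 32, 12)   # 599,424
params_r64 = adapter_param_count(768, 64, 12)   # 1,189,632
```

Under these assumptions, doubling the bottleneck from 32 to 64 roughly doubles the per-domain adapter parameters, which remain small compared with the frozen backbone.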

4.3.4. Ablation Study of Loss Function Coefficients

For the two additional components in the loss function, we explore the impact of different coefficients on the training performance. As shown in Table 13, for the coefficients of the image feature attraction loss and the text feature repulsion loss, we test a range of values: 0.1, 0.3, 0.5, 0.7, and 1.0. When investigating the effect of one coefficient, we fix the other coefficient at 1.0. Table 13 reports the average accuracy obtained for each setting. For consistency across experiments, we set both coefficients to 1.0 in all subsequent tests. Additionally, it is clear that the two loss components we introduced significantly enhance the model’s performance.
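The one-at-a-time sweep described above can be enumerated programmatically. The sketch assumes that the total objective is the cross-modal loss plus a weighted sum of the attraction and repulsion terms; this weighted-sum form and the function names are assumptions for illustration.

```python
def total_loss(ce, attraction, repulsion, coeff_att, coeff_rep):
    """Assumed combination: cross-modal loss plus weighted auxiliary terms."""
    return ce + coeff_att * attraction + coeff_rep * repulsion


def sweep_configs(values=(0.1, 0.3, 0.5, 0.7, 1.0), fixed=1.0):
    """Vary one coefficient over `values` while the other stays at `fixed`."""
    configs = []
    for v in values:
        for pair in ((v, fixed), (fixed, v)):
            if pair not in configs:   # (1.0, 1.0) is shared by both sweeps
                configs.append(pair)
    return configs
```

With five candidate values per coefficient and the shared (1.0, 1.0) setting counted once, the sweep covers nine distinct configurations.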

Table 13.

Ablation experiments of loss function coefficients on order 1. We report the average accuracy (%).

5. Conclusions

This paper presented DACL, a novel framework for domain incremental learning in remote sensing image classification that combines a dual-pool architecture with contrastive learning. Our extensive experiments demonstrated that DACL effectively addresses the challenges of domain adaptation while maintaining minimal computational overhead and ensuring data privacy. The key contributions and findings of this work include the development of a dual-pool architecture that efficiently manages domain-specific knowledge without storing historical data, the implementation of a novel dual loss mechanism for enhanced feature discrimination, and the design of a K-means-based domain selector for efficient parameter selection during inference.

Despite its promising performance, DACL faces several limitations. The framework’s effectiveness may decrease when dealing with extreme domain shifts, such as severe weather conditions or dramatic seasonal changes. The current domain selection mechanism might become less efficient with a large number of domains, and the model’s performance is somewhat sensitive to the choice of hyperparameters in the contrastive learning component. Additionally, resource requirements increase linearly with the number of domains, though at a lower rate compared to existing methods.

Several promising directions for future research emerge from this work. DACL could be extended to handle more complex domain shifts by developing adaptive mechanisms for extreme weather conditions, incorporating temporal dynamics for seasonal variations, and exploring multi-modal domain adaptation. To enhance scalability and efficiency, future work should investigate more compact parameter-sharing strategies and develop hierarchical domain selection mechanisms. The framework could also be extended to other remote sensing tasks such as object detection and segmentation, with potential applications in real-time monitoring systems. Furthermore, improving robustness through uncertainty quantification methods, active learning for domain adaptation, and enhanced resilience to noisy or incomplete domain information represents another important direction for future research. These advancements would further strengthen DACL’s capability to address the evolving challenges in remote sensing applications while maintaining its core advantages of efficiency and privacy preservation. The continued development of such domain incremental learning approaches is crucial for the advancement of robust and adaptable remote sensing systems.

Author Contributions

Conceptualization, Y.S. and Y.L.; methodology, Y.S. and X.H.; software, Y.S. and Y.L.; validation, X.H.; writing—original draft preparation, Y.S. and Y.L.; writing—review and editing, Y.S. and X.H.; supervision, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS-J. Photogramm. Remote Sens. 2019, 152, 166–177.

- Khan, A.H.; Fraz, M.M.; Shahzad, M. Deep learning based land cover and crop type classification: A comparative study. In Proceedings of the 2021 International Conference on Digital Futures and Transformative Technologies (ICoDT2), Islamabad, Pakistan, 20–21 May 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

- Zang, N.; Cao, Y.; Wang, Y.; Huang, B.; Zhang, L.; Mathiopoulos, P.T. Land-use mapping for high-spatial resolution remote sensing image via deep learning: A review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5372–5391.

- Singh, G.; Singh, S.; Sethi, G.; Sood, V. Deep learning in the mapping of agricultural land use using Sentinel-2 satellite data. Geographies 2022, 2, 691–700.

- Prudente, V.H.R.; Skakun, S.; Oldoni, L.V.; Xaud, H.A.; Xaud, M.R.; Adami, M.; Sanches, I.D. Multisensor approach to land use and land cover mapping in Brazilian Amazon. ISPRS-J. Photogramm. Remote Sens. 2022, 189, 95–109.

- Zhang, C.; Sargent, I.; Pan, X.; Li, H.; Gardiner, A.; Hare, J.; Atkinson, P.M. Joint Deep Learning for land cover and land use classification. Remote Sens. Environ. 2019, 221, 173–187.

- Bai, L.; Du, S.; Zhang, X.; Wang, H.; Liu, B.; Ouyang, S. Domain adaptation for remote sensing image semantic segmentation: An integrated approach of contrastive learning and adversarial learning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Wang, M.; Zheng, Y.; Huang, C.; Meng, R.; Pang, Y.; Jia, W.; Zhou, J.; Huang, Z.; Fang, L.; Zhao, F. Assessing Landsat-8 and Sentinel-2 spectral-temporal features for mapping tree species of northern plantation forests in Heilongjiang Province, China. For. Ecosyst. 2022, 9, 100032.

- Mello, F.A.; Demattê, J.A.; Bellinaso, H.; Poppiel, R.R.; Rizzo, R.; de Mello, D.C.; Rosin, N.A.; Rosas, J.T.; Silvero, N.E.; Rodríguez-Albarracín, H.S. Remote sensing imagery detects hydromorphic soils hidden under agriculture system. Sci. Rep. 2023, 13, 10897.

- Ghamisi, P.; Plaza, J.; Chen, Y.; Li, J.; Plaza, A.J. Advanced spectral classifiers for hyperspectral images: A review. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–32.

- Foody, G.M.; Mathur, A. A relative evaluation of multiclass image classification by support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1335–1343.

- Douillard, A.; Ramé, A.; Couairon, G.; Cord, M. Dytox: Transformers for continual learning with dynamic token expansion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9285–9295.

- Zhu, K.; Zhai, W.; Cao, Y.; Luo, J.; Zha, Z.J. Self-sustaining representation expansion for non-exemplar class-incremental learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9296–9305.

- Peng, L.; He, Y.; Wang, S.; Song, X.; Dong, S.; Wei, X.; Gong, Y. Global self-sustaining and local inheritance for source-free unsupervised domain adaptation. Pattern Recognit. 2024, 155, 110679.

- Jia, M.; Tang, L.; Chen, B.C.; Cardie, C.; Belongie, S.; Hariharan, B.; Lim, S.N. Visual prompt tuning. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 709–727.

- Wang, Y.; Huang, Z.; Hong, X. S-prompts learning with pre-trained transformers: An occam’s razor for domain incremental learning. Adv. Neural Inf. Process. Syst. 2022, 35, 5682–5695.

- Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Learning to prompt for vision-language models. Int. J. Comput. Vis. 2022, 130, 2337–2348.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

- He, N.; Fang, L.; Li, S.; Plaza, J.; Plaza, A. Skip-connected covariance network for remote sensing scene classification. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 1461–1474.

- Zhang, F.; Du, B.; Zhang, L. Scene classification via a gradient boosting random convolutional network framework. IEEE Trans. Geosci. Remote Sens. 2015, 54, 1793–1802.

- Penatti, O.A.; Nogueira, K.; Dos Santos, J.A. Do deep features generalize from everyday objects to remote sensing and aerial scenes domains? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 44–51.

- Dong, R.; Xu, D.; Jiao, L.; Zhao, J.; An, J. A fast deep perception network for remote sensing scene classification. Remote Sens. 2020, 12, 729.

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

- Bazi, Y.; Bashmal, L.; Rahhal, M.M.A.; Dayil, R.A.; Ajlan, N.A. Vision transformers for remote sensing image classification. Remote Sens. 2021, 13, 516.

- Bashmal, L.; Bazi, Y.; Al Rahhal, M. Deep vision transformers for remote sensing scene classification. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium—IGARSS, Brussels, Belgium, 11–16 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 2815–2818.

- Xu, K.; Deng, P.; Huang, H. Vision transformer: An excellent teacher for guiding small networks in remote sensing image scene classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Wang, G.; Zhang, N.; Liu, W.; Chen, H.; Xie, Y. MFST: A multi-level fusion network for remote sensing scene classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Lin, D.; Fu, K.; Wang, Y.; Xu, G.; Sun, X. MARTA GANs: Unsupervised representation learning for remote sensing image classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2092–2096.

- Ma, D.; Tang, P.; Zhao, L. SiftingGAN: Generating and sifting labeled samples to improve the remote sensing image scene classification baseline in vitro. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1046–1050.

- Wei, Y.; Luo, X.; Hu, L.; Peng, Y.; Feng, J. An improved unsupervised representation learning generative adversarial network for remote sensing image scene classification. Remote Sens. Lett. 2020, 11, 598–607.

- Perkonigg, M.; Hofmanninger, J.; Langs, G. Continual active learning for efficient adaptation of machine learning models to changing image acquisition. In Proceedings of the International Conference on Information Processing in Medical Imaging, Virtual, 28–30 June 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 649–660.

- Hayes, T.L.; Kafle, K.; Shrestha, R.; Acharya, M.; Kanan, C. Remind your neural network to prevent catastrophic forgetting. In Proceedings of the European Conference on Computer Vision, Virtual, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 466–483.

- Iscen, A.; Zhang, J.; Lazebnik, S.; Schmid, C. Memory-efficient incremental learning through feature adaptation. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XVI 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 699–715.

- Shin, H.; Lee, J.K.; Kim, J.; Kim, J. Continual learning with deep generative replay. Adv. Neural Inf. Process. Syst. 2017, 30.

- Van De Ven, G.M.; Li, Z.; Tolias, A.S. Class-incremental learning with generative classifiers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 3611–3620.

- Ostapenko, O.; Puscas, M.; Klein, T.; Jahnichen, P.; Nabi, M. Learning to remember: A synaptic plasticity driven framework for continual learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11321–11329.

- Riemer, M.; Cases, I.; Ajemian, R.; Liu, M.; Rish, I.; Tu, Y.; Tesauro, G. Learning to learn without forgetting by maximizing transfer and minimizing interference. arXiv 2018, arXiv:1810.11910.

- Buzzega, P.; Boschini, M.; Porrello, A.; Abati, D.; Calderara, S. Dark experience for general continual learning: A strong, simple baseline. Adv. Neural Inf. Process. Syst. 2020, 33, 15920–15930.

- Kirkpatrick, J.; Pascanu, R.; Rabinowitz, N.; Veness, J.; Desjardins, G.; Rusu, A.A.; Milan, K.; Quan, J.; Ramalho, T.; Grabska-Barwinska, A.; et al. Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. USA 2017, 114, 3521–3526.

- Lopez-Paz, D.; Ranzato, M. Gradient episodic memory for continual learning. Adv. Neural Inf. Process. Syst. 2017, 30, 1–17.

- Aljundi, R.; Babiloni, F.; Elhoseiny, M.; Rohrbach, M.; Tuytelaars, T. Memory aware synapses: Learning what (not) to forget. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 139–154.

- Zenke, F.; Poole, B.; Ganguli, S. Continual learning through synaptic intelligence. In Proceedings of the International Conference on Machine Learning—PMLR, Sydney, Australia, 6–11 August 2017; pp. 3987–3995.

- Schwarz, J.; Czarnecki, W.; Luketina, J.; Grabska-Barwinska, A.; Teh, Y.W.; Pascanu, R.; Hadsell, R. Progress & compress: A scalable framework for continual learning. In Proceedings of the International Conference on Machine Learning—PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 4528–4537.

- Li, Z.; Hoiem, D. Learning without forgetting. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2935–2947.

- Serra, J.; Suris, D.; Miron, M.; Karatzoglou, A. Overcoming catastrophic forgetting with hard attention to the task. In Proceedings of the International Conference on Machine Learning—PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 4548–4557.

- Rebuffi, S.A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. iCaRL: Incremental classifier and representation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2001–2010.

- Liu, Y.; Schiele, B.; Sun, Q. Rmm: Reinforced memory management for class-incremental learning. Adv. Neural Inf. Process. Syst. 2021, 34, 3478–3490.

- Mallya, A.; Lazebnik, S. Packnet: Adding multiple tasks to a single network by iterative pruning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7765–7773.

- Golkar, S.; Kagan, M.; Cho, K. Continual learning via neural pruning. arXiv 2019, arXiv:1903.04476.

- Devlin, J. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.