Integrated Geomatic Approaches for the 3D Documentation and Analysis of the Church of Saint Andrew in Orani, Sardinia

Abstract

Highlights

- Panoramic photogrammetry provides centimeter-level accuracy in complex spaces.

- Apple LiDAR generates reliable 3D models comparable to those from close-range photogrammetry (CRP), but with lower point density.

- Panoramic photogrammetry offers a fast, low-cost alternative for cultural heritage surveys.

- Apple LiDAR enables accessible, accurate 3D documentation of small structures.


1. Introduction

2. Materials and Methods

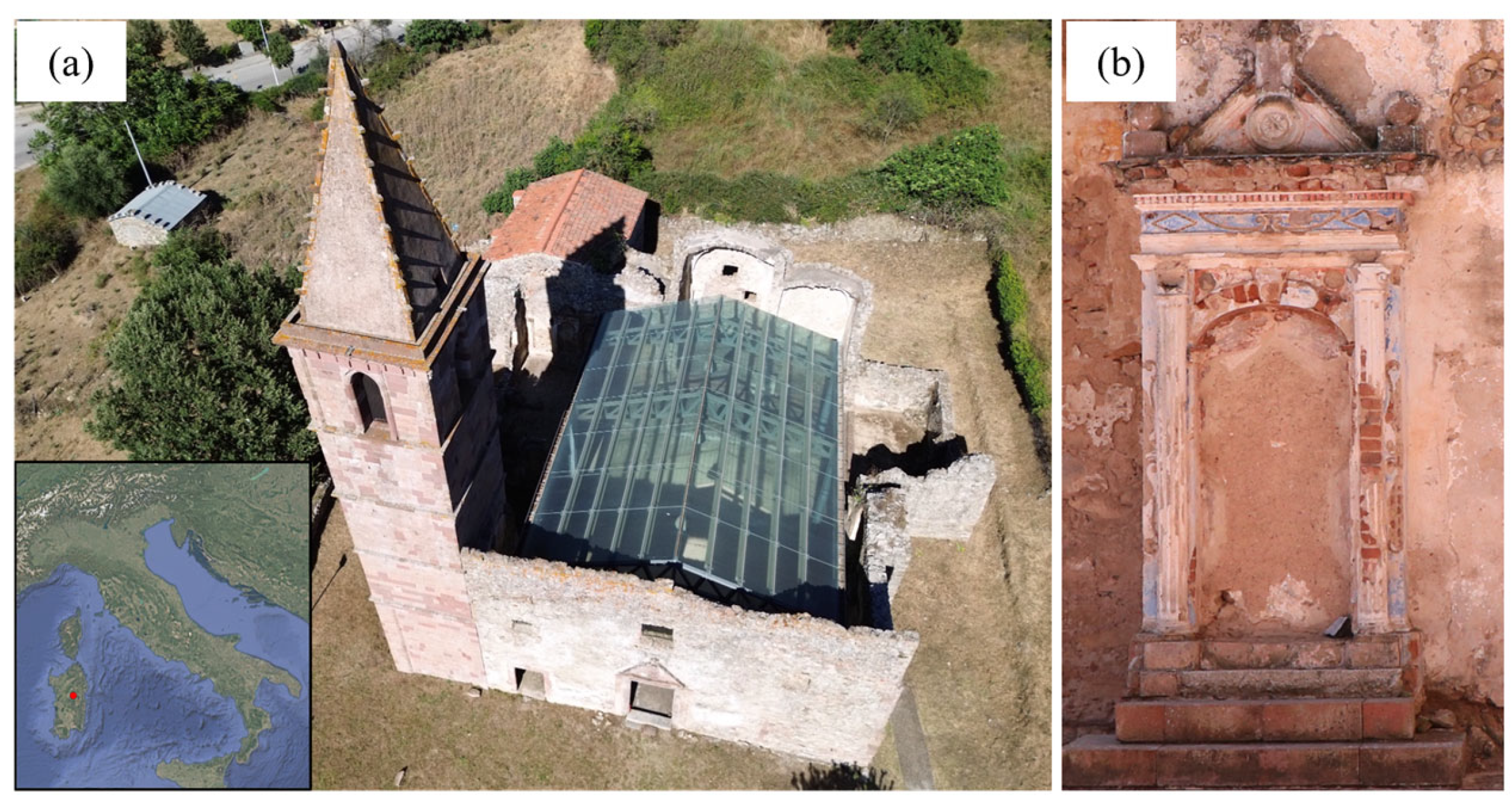

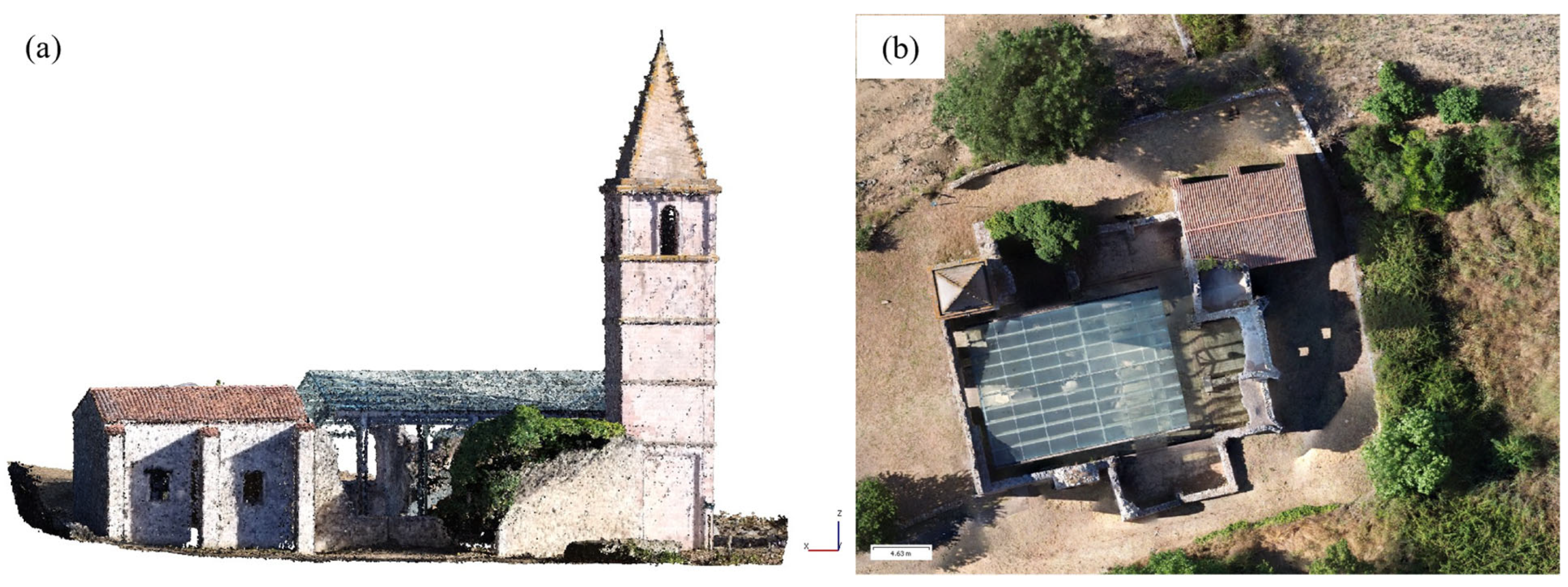

2.1. Case Study

2.2. Acquired Data

- Terrestrial Laser Scanner (TLS): interior and exterior of the bell tower, inner and outer areas of the courtyard.

- 360° Camera: interior of the bell tower.

- Unmanned Aerial Vehicle (UAV) photogrammetry: exterior of the bell tower (particularly its upper parts), open areas of the courtyard, and a wider surrounding area.

- Close-Range Photogrammetry (CRP): niche.

- Apple LiDAR: niche.

2.2.1. Terrestrial Laser Scanner (TLS)

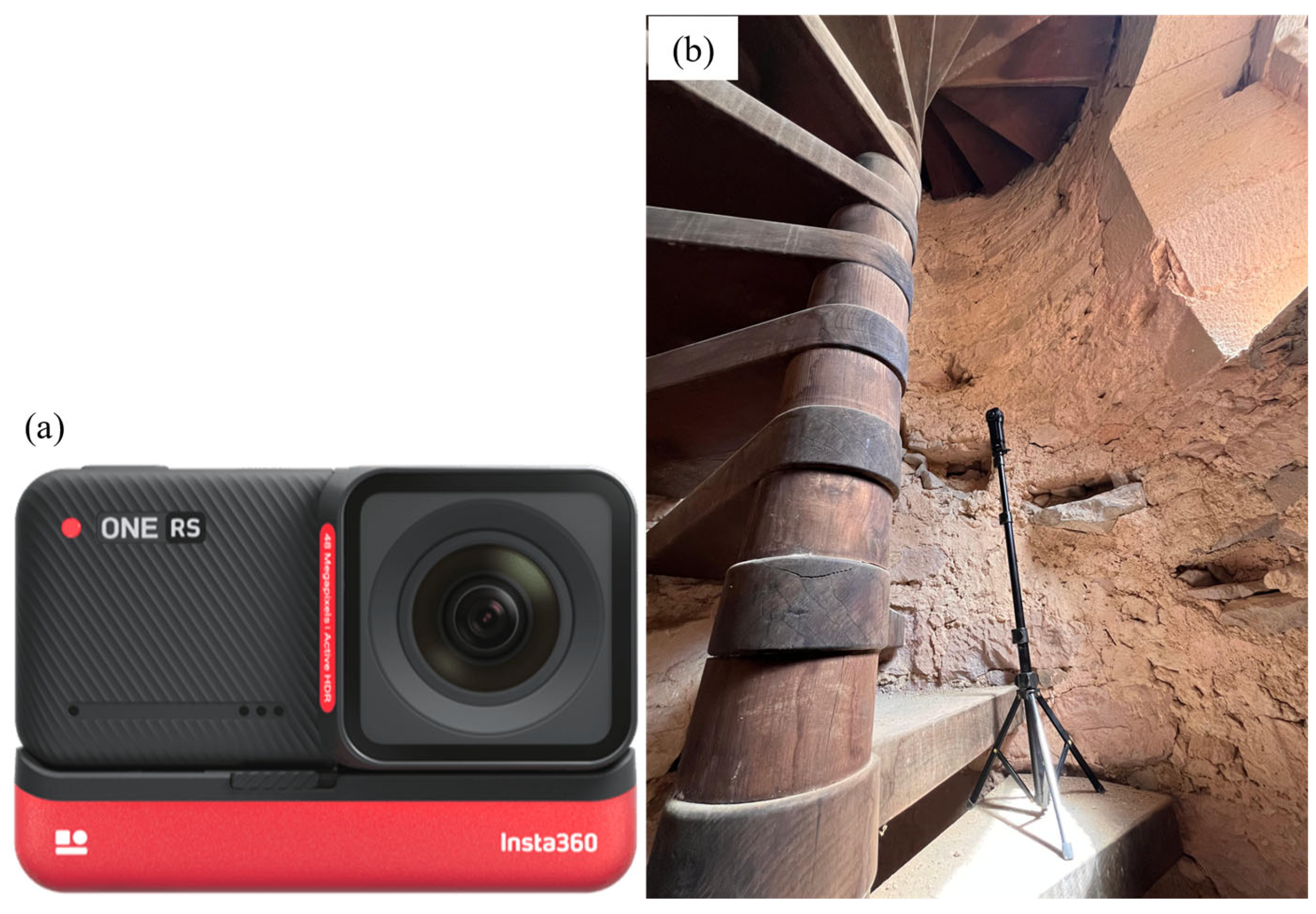

2.2.2. 360° Digital Camera

2.2.3. UAV Flight Survey

2.2.4. Close Range Photogrammetry (CRP)

2.2.5. Apple LiDAR

3. Data Processing

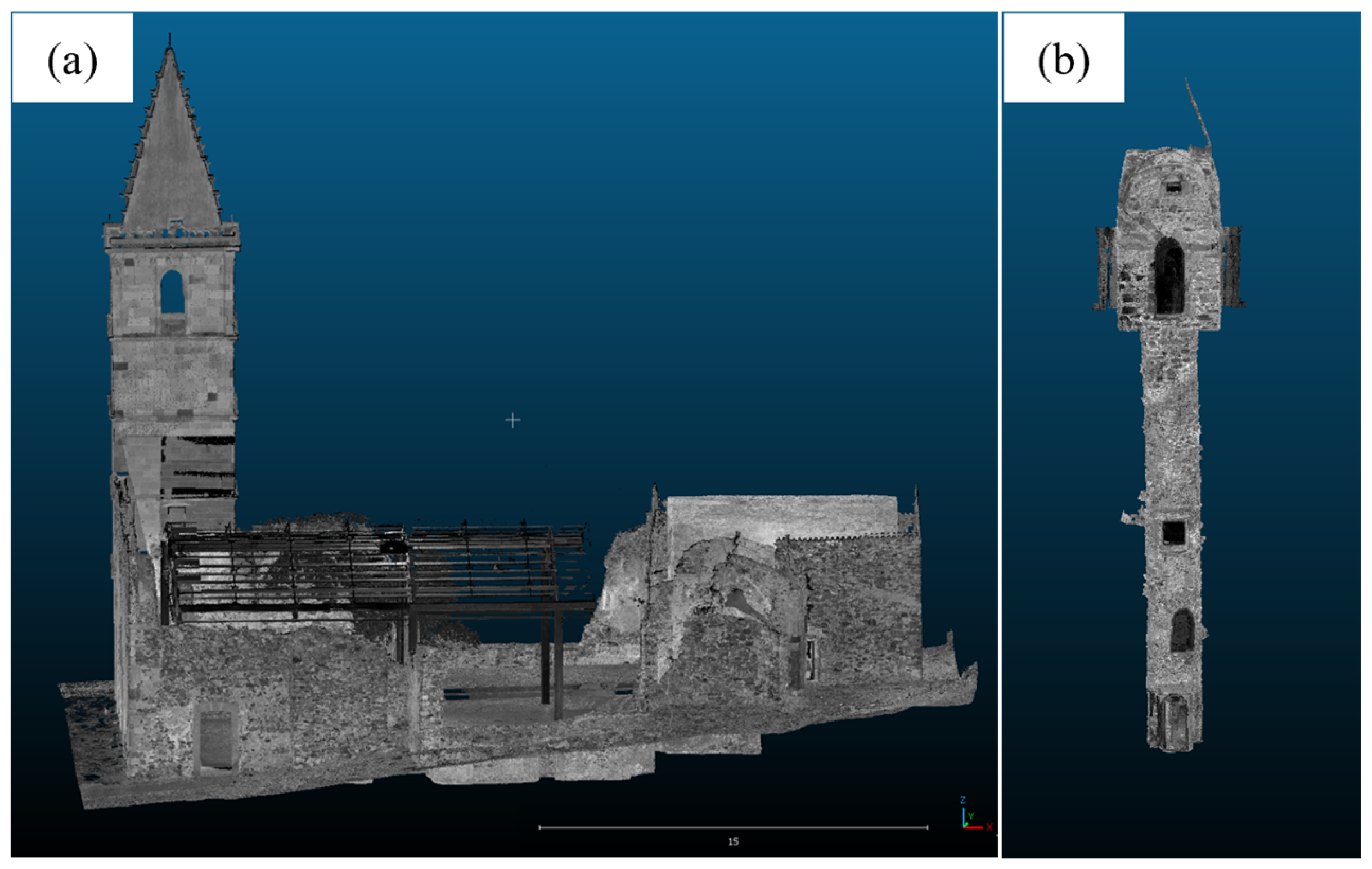

3.1. TLS Processing

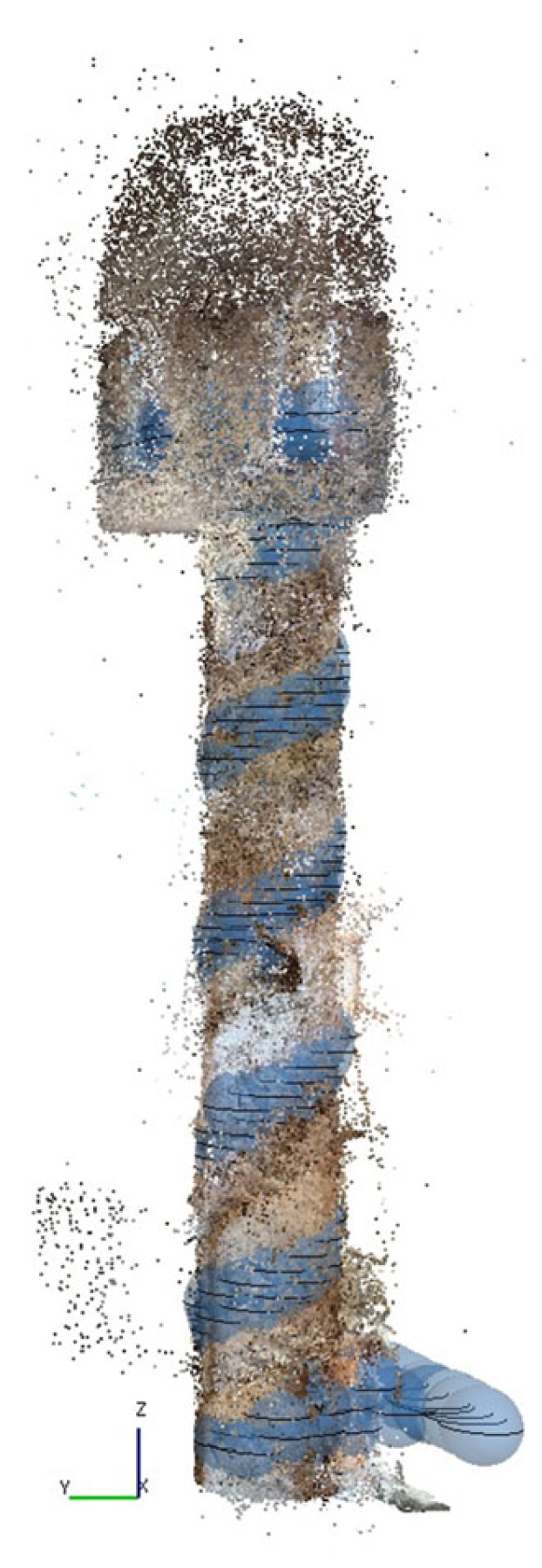

3.2. Panoramic Photogrammetry

3.3. UAV Photogrammetry

3.4. CRP Processing

3.5. Apple LiDAR Processing

4. Results

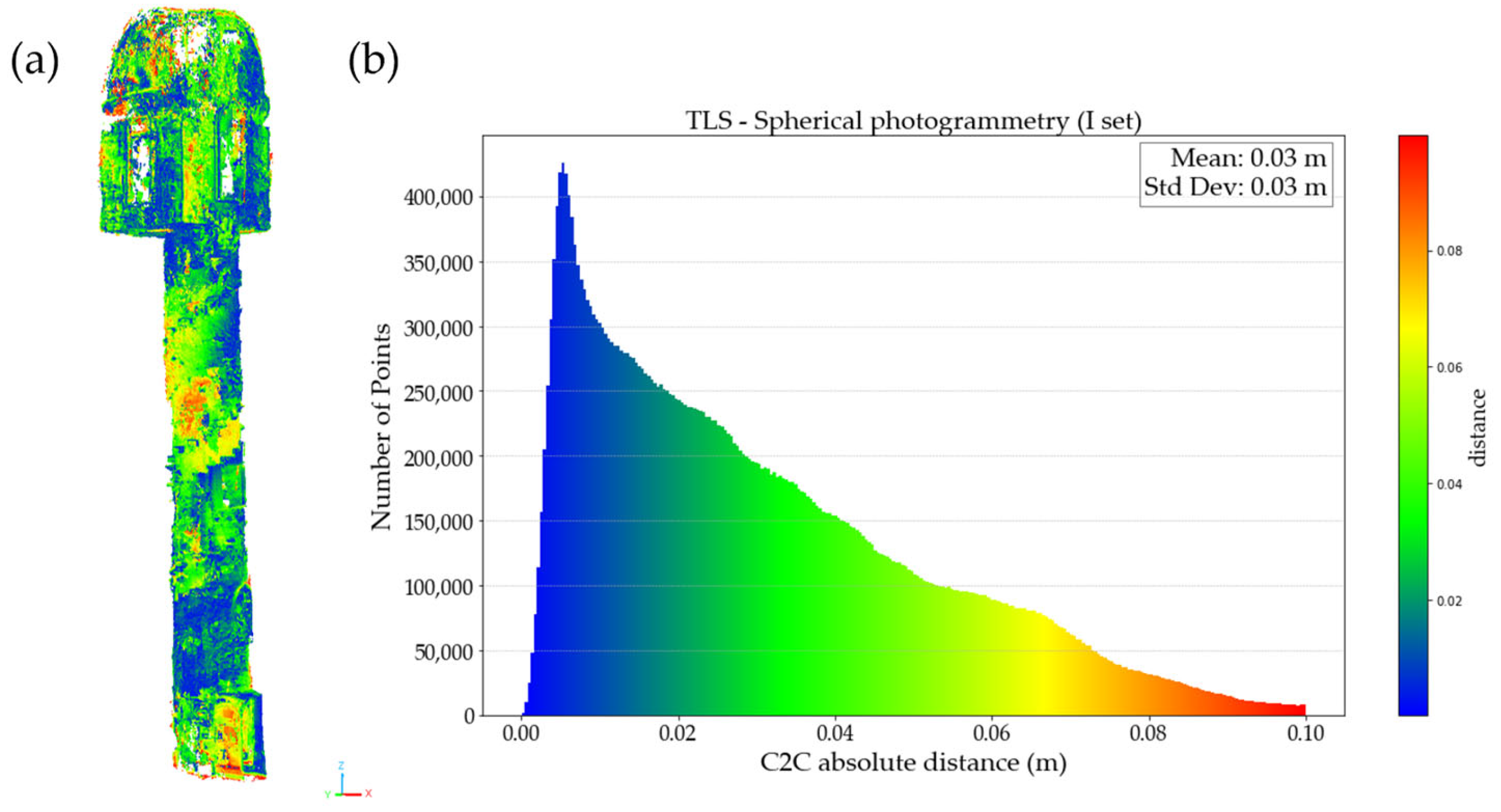

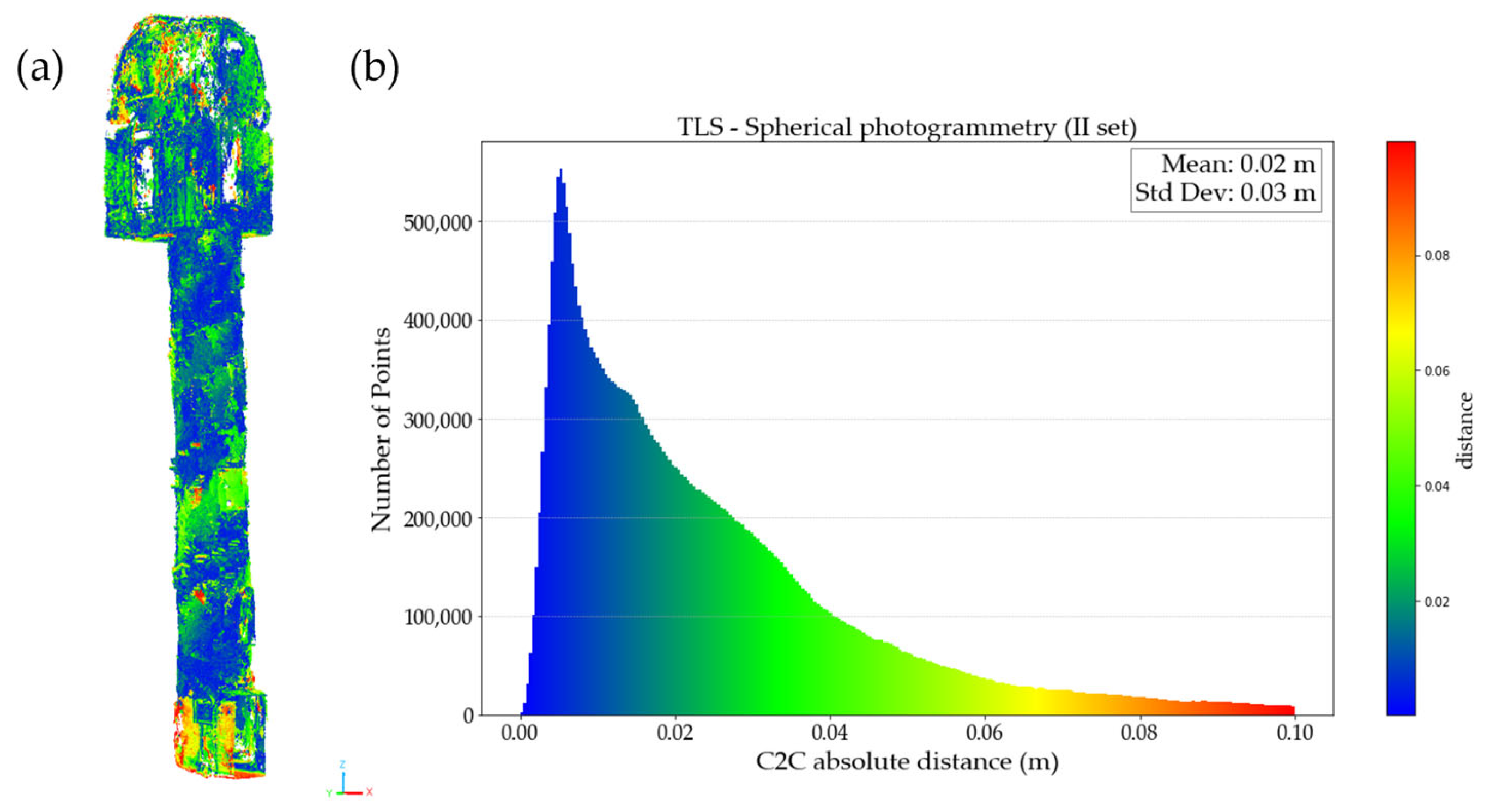

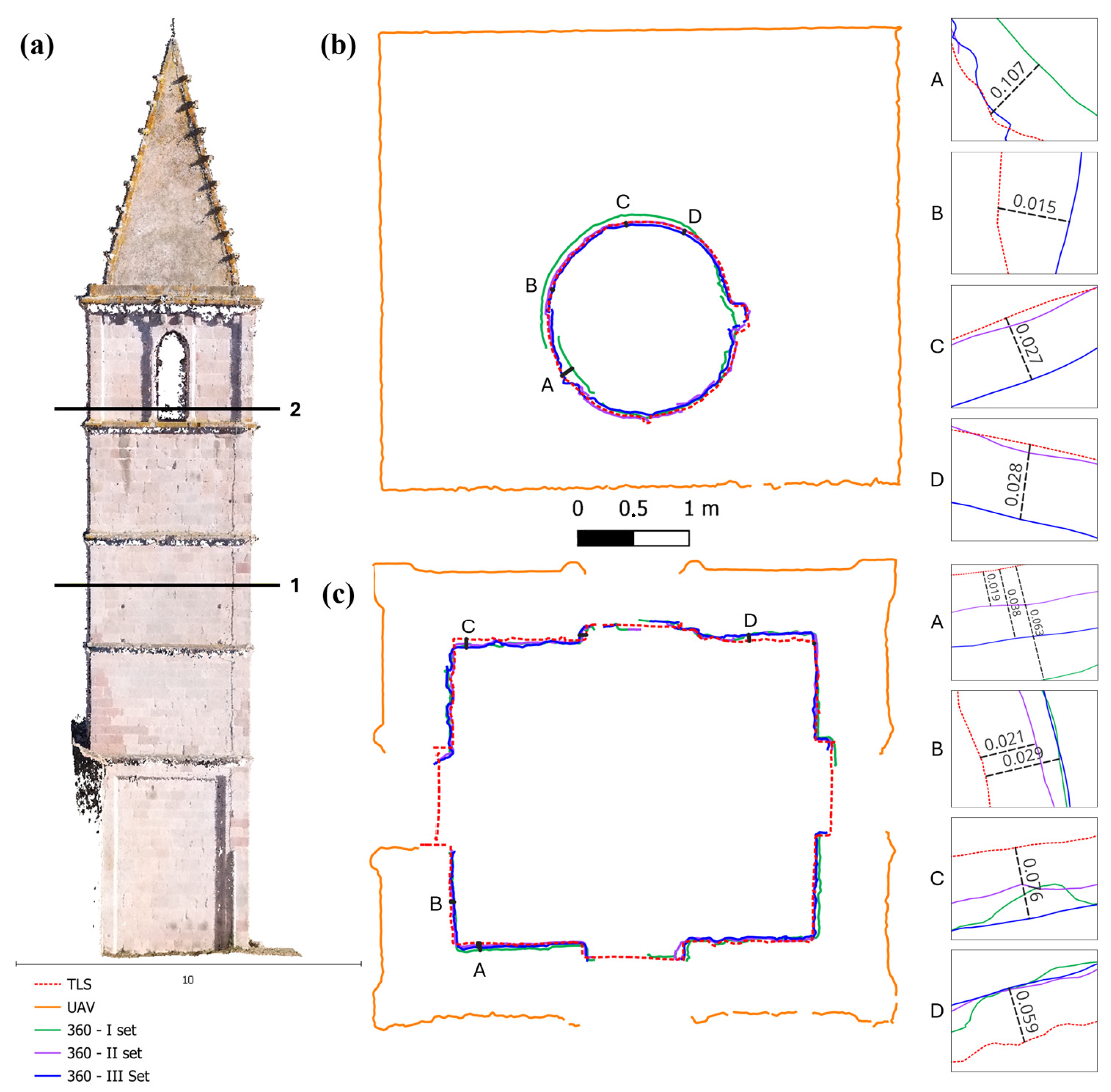

4.1. Validation of the 360° Survey of the Bell Tower Interior

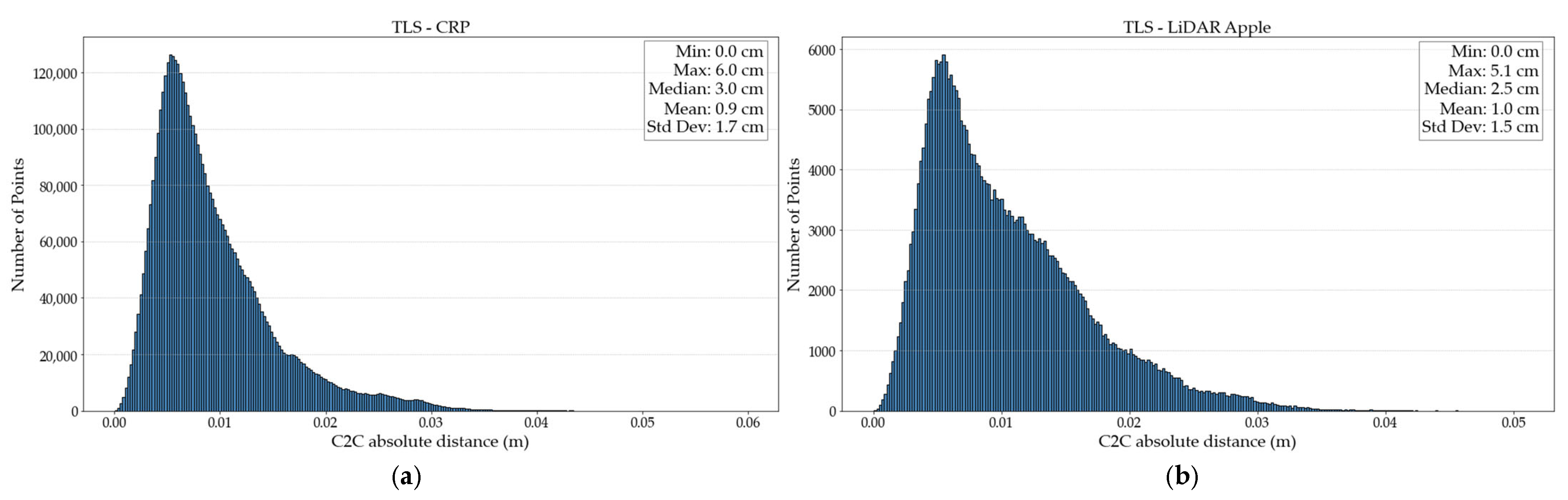

4.2. Different Surveys on the Niche

4.3. Sections from Different Techniques

1. Reading sections as polylines: Sections are read as polylines, and any outlier vertices are removed.

2. Calculating the centroids: The geometric centroid of each horizontal section is calculated with the Shapely package, treating the section as a closed 2D polygon, and the centroid coordinates are stored. In our georeferenced model, the planimetric coordinates of each centroid are expressed as Northing and Easting in the ETRF2000-UTM32N reference system.

3. Establishing the vertical line: The reference vertical line passes through the centroid of the base section and keeps the same planimetric coordinates at every height.

4. Computing intersection points: For each horizontal section, the intersection of the vertical line with the plane defined by that section is calculated, and its coordinates are stored.

5. Assessing the deviation: The differences between each section centroid's coordinates and the corresponding ideal intersection point on the vertical line are calculated to evaluate any deviation, expressed as (|ΔN|, |ΔE|).
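The five steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' script: the centroid is computed with the shoelace formula (for a valid simple polygon this gives the same point as Shapely's `Polygon(...).centroid`), each section is assumed to arrive as an already-cleaned closed polyline of (E, N, Z) vertices with the base section first, and the section elevation is assumed to be the mean vertex height. The outlier filtering of step 1 is not reproduced because its criterion is not specified, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Point3D = Tuple[float, float, float]  # (Easting, Northing, height) in metres


def polygon_centroid(ring: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Area-weighted centroid of a simple closed polygon (shoelace formula)."""
    # Work relative to the first vertex: UTM coordinates are large, and a
    # local origin limits floating-point cancellation in the cross products.
    ox, oy = ring[0]
    pts = [(x - ox, y - oy) for x, y in ring]
    area2 = cx = cy = 0.0
    for i, (x0, y0) in enumerate(pts):
        x1, y1 = pts[(i + 1) % len(pts)]  # wrap around to close the ring
        cross = x0 * y1 - x1 * y0
        area2 += cross                    # twice the signed area
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if area2 == 0.0:
        raise ValueError("degenerate section: zero enclosed area")
    # Centroid = (cx / 6A, cy / 6A) with A = area2 / 2, i.e. divide by 3 * area2.
    return ox + cx / (3.0 * area2), oy + cy / (3.0 * area2)


def verticality_deviations(sections: List[List[Point3D]]) -> List[Dict[str, float]]:
    """|dN|, |dE| of each section centroid from the vertical line through the
    base-section centroid; sections[0] is the base section."""
    centroids = []
    for sec in sections:
        e, n = polygon_centroid([(p[0], p[1]) for p in sec])
        z = sum(p[2] for p in sec) / len(sec)  # assumed: mean vertex height
        centroids.append((e, n, z))

    # Step 3: the vertical reference line keeps the base centroid's (E, N).
    e0, n0, _ = centroids[0]

    results = []
    for e, n, z in centroids:
        # Step 4: a vertical line meets any non-vertical section plane at
        # planimetric position (e0, n0); for a horizontal section the
        # intersection point is (e0, n0, z).
        # Step 5: absolute planimetric deviations of the centroid from it.
        results.append({"z": z, "dN": abs(n - n0), "dE": abs(e - e0)})
    return results


if __name__ == "__main__":
    base = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 2.0, 0.0), (0.0, 2.0, 0.0)]
    top = [(x + 0.03, y, 10.0) for x, y, _ in base]  # same square, 3 cm further east
    for row in verticality_deviations([base, top]):
        print(row)
```

Because the reference line is vertical, the planimetric deviation does not depend on a slight tilt of the section plane; the plane only affects the elevation of the intersection point.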

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sensor | Image Resolution (px) | Dimensions | 35 mm Equiv. Focal Length |
|---|---|---|---|
| Dual 1” sensors | 6528 × 3264 (2:1) | 52.4 × 48.6 × 49.4 mm | 7.2 mm |
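A 6528 × 3264 image with a 2:1 aspect ratio is consistent with an equirectangular panorama, in which each pixel column corresponds to a longitude and each row to a latitude on the viewing sphere; this is what lets spherical images be oriented photogrammetrically. The sketch below maps a pixel to a unit viewing ray and estimates the object-space size of one pixel. It assumes the common equirectangular convention described in the comments; axis and origin conventions differ between software packages, so the function names and conventions here are hypothetical and illustrative, not the model used in the processing software.

```python
import math
from typing import Tuple

WIDTH, HEIGHT = 6528, 3264  # panorama size from the table above (2:1)


def pixel_to_ray(u: float, v: float, width: int = WIDTH,
                 height: int = HEIGHT) -> Tuple[float, float, float]:
    """Map pixel (u, v) of an equirectangular image to a unit direction vector.

    Assumed convention: longitude runs from -pi to pi left to right, latitude
    from +pi/2 (top row) to -pi/2 (bottom row); x points right, y up and
    z forward at the image centre.
    """
    lon = (u / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v / height) * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return x, y, z


def footprint_per_pixel(distance_m: float, width: int = WIDTH) -> float:
    """Approximate object-space size (m) of one pixel on the horizon at a given range."""
    return distance_m * 2.0 * math.pi / width


if __name__ == "__main__":
    print(pixel_to_ray(WIDTH / 2, HEIGHT / 2))  # ray through the image centre
    print(f"{footprint_per_pixel(5.0) * 1000:.1f} mm per pixel at 5 m")
```

For example, at a hypothetical range of 5 m, one pixel on the horizon spans about 5 × 2π / 6528 ≈ 4.8 mm, which gives a feel for how residuals expressed in pixels relate to object-space distances inside a narrow bell tower.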

| Average Scan-to-Scan Residual (m) | Mean Georeferencing Error (m) |
|---|---|
| 0.0015 | 0.0186 |

| Set | Type | n | X Error (cm) | Y Error (cm) | Z Error (cm) | Total (cm) | Image (pix) |
|---|---|---|---|---|---|---|---|
| I (outward) | Control points | 20 | 2.94 | 3.37 | 3.82 | 5.88 | 11.43 |
| I (outward) | Check points | 17 | 3.45 | 4.55 | 6.58 | 8.71 | 6.78 |
| II (return) | Control points | 20 | 2.70 | 1.84 | 1.40 | 3.55 | 13.78 |
| II (return) | Check points | 17 | 3.16 | 3.26 | 4.26 | 6.23 | 10.83 |
| III (all images) | Control points | 20 | 2.15 | 2.53 | 3.14 | 4.57 | 13.54 |
| III (all images) | Check points | 17 | 3.28 | 4.00 | 6.08 | 7.99 | 11.57 |
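The Total column is consistent with the root sum of squares of the three per-axis errors; for the first row, √(2.94² + 3.37² + 3.82²) ≈ 5.88 cm. The short check below recomputes the totals of this table under that assumption. Differences of up to about 0.01 cm are expected because the per-axis values are themselves rounded (the last row gives 7.98 cm against the reported 7.99 cm). The `rmse` helper shows how a per-axis value is typically obtained from point residuals; that the per-axis figures are RMS values is an assumption, since the headers only say "Error". The two smaller control/check point tables that follow appear to obey the same relation.

```python
import math
from typing import Sequence


def rmse(residuals: Sequence[float]) -> float:
    """Root mean square of per-point residuals along one axis."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def total_error(ex: float, ey: float, ez: float) -> float:
    """Combine per-axis errors into a 3D total (root sum of squares)."""
    return math.sqrt(ex ** 2 + ey ** 2 + ez ** 2)


# (set, point type, X, Y, Z, reported total), all in cm, copied from the table above
ROWS = [
    ("I", "control", 2.94, 3.37, 3.82, 5.88),
    ("I", "check", 3.45, 4.55, 6.58, 8.71),
    ("II", "control", 2.70, 1.84, 1.40, 3.55),
    ("II", "check", 3.16, 3.26, 4.26, 6.23),
    ("III", "control", 2.15, 2.53, 3.14, 4.57),
    ("III", "check", 3.28, 4.00, 6.08, 7.99),
]

if __name__ == "__main__":
    for set_id, kind, ex, ey, ez, reported in ROWS:
        computed = total_error(ex, ey, ez)
        print(f"{set_id:>3} {kind:<7} computed {computed:.2f} cm, reported {reported:.2f} cm")
```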

| Type | n | X Error (cm) | Y Error (cm) | Z Error (cm) | Total (cm) | Image (pix) |
|---|---|---|---|---|---|---|
| Control points | 4 | 0.62 | 0.49 | 1.47 | 1.67 | 1.02 |
| Check points | 1 | 1.20 | 2.43 | 0.90 | 2.86 | 1.79 |

| Type | n | X Error (mm) | Y Error (mm) | Z Error (mm) | Total (mm) | Image (pix) |
|---|---|---|---|---|---|---|
| Control points | 5 | 4.00 | 2.14 | 2.71 | 5.28 | 1.12 |
| Check points | 1 | 5.50 | 0.45 | 0.81 | 5.57 | 0.60 |

| Distance Range | CRP | Apple LiDAR |
|---|---|---|
| 0–1 cm | 64.68% | 55.71% |
| 1–2 cm | 28.02% | 34.67% |
| 2–3 cm | 5.04% | 7.34% |
| 3–4 cm | 0.54% | 0.76% |
| 4–5 cm | 0.03% | 0.03% |
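Read cumulatively, the table shows 92.70% of CRP points and 90.38% of Apple LiDAR points within 2 cm, and 97.74% and 97.72%, respectively, within 3 cm; the listed classes sum to 98.31% and 98.51%, so about 1.7% and 1.5% of the points are not covered by the classes shown. Assuming the percentages are shares of points whose distance to the reference cloud falls in each class, such a table can be produced from per-point distances as sketched below. The half-open 1 cm classes, the use of absolute distances in metres and the function name are assumptions for illustration.

```python
import math
from typing import Iterable, List


def distance_class_percentages(distances_m: Iterable[float], n_classes: int = 5,
                               width_m: float = 0.01) -> List[float]:
    """Share (%) of points in each class [k*w, (k+1)*w) for k = 0 .. n_classes-1.

    Distances are taken in absolute value, so signed distances are folded.
    Points at or beyond n_classes * width_m count toward the total but are
    not reported, so the returned percentages need not sum to 100.
    Values lying exactly on a class edge may fall on either side because of
    floating-point rounding.
    """
    dists = [abs(d) for d in distances_m]
    if not dists:
        raise ValueError("no distances given")
    counts = [0] * n_classes
    for d in dists:
        k = math.floor(d / width_m)  # class index of this point
        if k < n_classes:
            counts[k] += 1
    return [100.0 * c / len(dists) for c in counts]


if __name__ == "__main__":
    shares = distance_class_percentages([0.004, 0.012, 0.009, 0.031, 0.018])
    for k, share in enumerate(shares):
        print(f"{k}-{k + 1} cm: {share:.2f}%")
```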

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Vacca, G.; Vecchi, E. Integrated Geomatic Approaches for the 3D Documentation and Analysis of the Church of Saint Andrew in Orani, Sardinia. Remote Sens. 2025, 17, 3376. https://doi.org/10.3390/rs17193376


Chicago/Turabian Style: Vacca, Giuseppina, and Enrica Vecchi. 2025. "Integrated Geomatic Approaches for the 3D Documentation and Analysis of the Church of Saint Andrew in Orani, Sardinia" Remote Sensing 17, no. 19: 3376. https://doi.org/10.3390/rs17193376
