Tree Health Assessment Using Mask R-CNN on UAV Multispectral Imagery over Apple Orchards

Abstract

Highlights

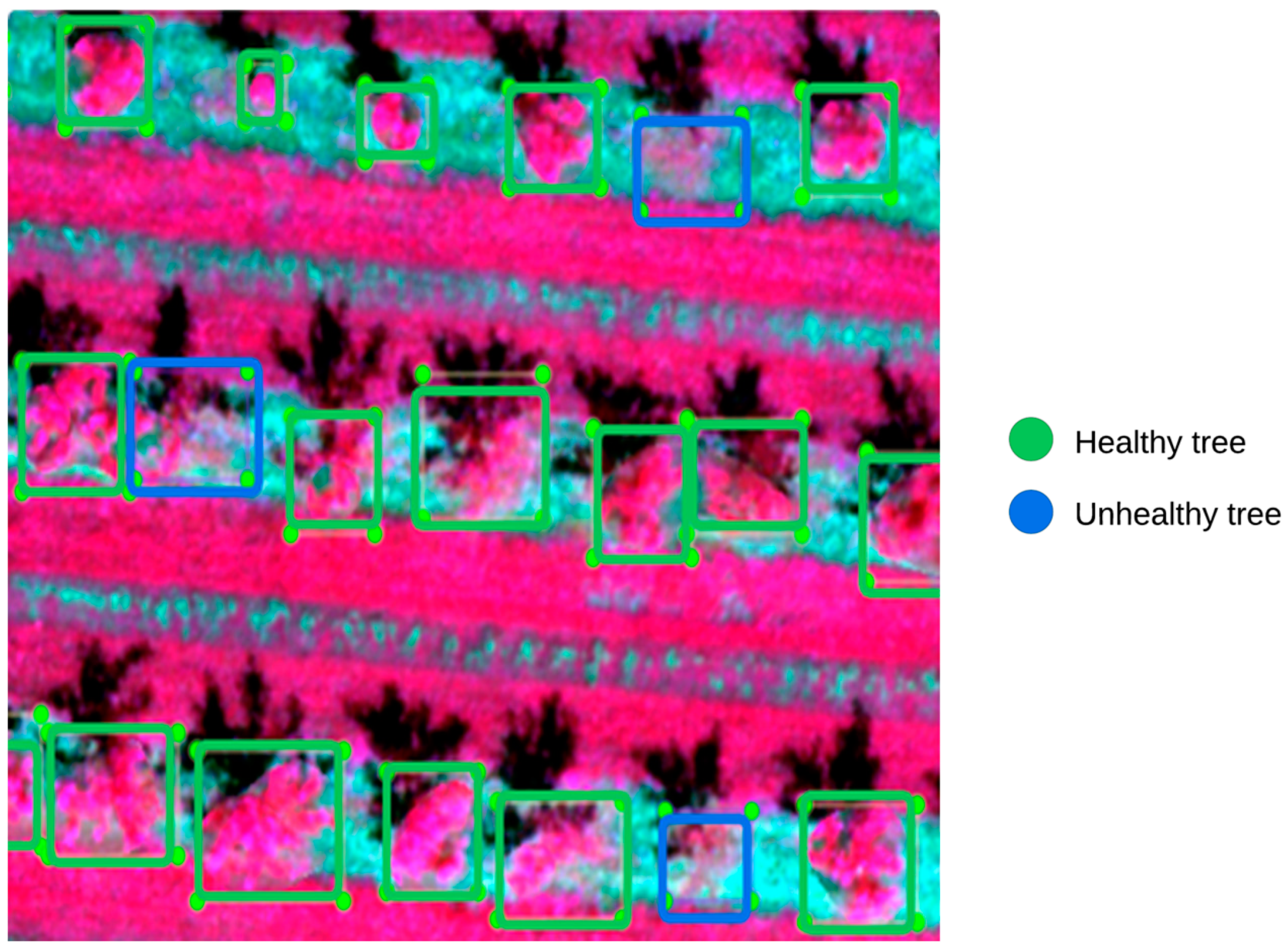

- A one-step Mask R-CNN with a ResNeXt-101 backbone on 5-band UAV multispectral imagery best distinguishes healthy vs. unhealthy apple trees (F1 = 85.70%, mIoU = 92.85%).

- Multispectral imagery (including the Red-Edge and NIR bands) generally outperforms RGB and PCA-compressed inputs; adding vegetation indices via three principal components (3PCs) did not surpass 5-band performance.

- The 5-band approach enables accurate, single-step orchard health assessment suitable for precision agriculture.

- Handling class imbalance with class weights + focal loss substantially improves minority class detection (AP for the unhealthy class: 39.32% → 42.76%; Macro-F1: 76.22% → 83.10%; Weighted-F1: 93.60% → 94.76%; TP for unhealthy doubled: 12 → 24).


1. Introduction

1.1. Tree Detection

| Imagery Type | Input Feature (*) | Method | F1-Score (%) | Species | Crown Size (m) | Pixel Size (cm) | Reference |
|---|---|---|---|---|---|---|---|
| RGB | Reflectance | CART | 73.40 | Apricot | 8–12 | 1.955 | [20] |
| | | ELM spectral-spatial classifier | 93.61 | Banana | 2–4 | N/A | [28] |
| | | | 85.12 | Coconut | 6–8 | | |
| | | | 75.49 | Mango | 10–15 | | |
| | | HOG-SVM | 99.90 | Oil Palm | 12–18 | 5 | [20] |
| | | YOLOv5 | 92.40 | Fir | 4–8 | 3 | [29] |
| | | YOLOv5 with HNM | 84.81 | Apple | 3–6 | 7 | [3] |
| | | YOLOv7 | 89.20 | | | | [30] |
| | | DeepForest with HNM | 86.24 | | | | [3] |
| | | FC-DenseNet | 96.10 | Cumbaru | 10–15 | 1 | [26] |
| | | FCNN | 97.55 | Oil Palm | 3–4 | 4 | [27] |
| | | | 92.04 | Palm | 6 | | |
| | | U-Net | 95.20 | Apricot | 8–12 | 1.955 | [21] |
| | | Mask R-CNN | 99.10 | | | | |
| | | | 96.00 | Almond | 3–5 | 4 | [22] |
| | | | 95.00 | Oil Palm | <12 | 5 | [23] |
| | | | 94.68 | Fir | 1–4 | 3 | [24] |
| | | | 94.51 | Olive | 6–10 | 3 | [25] |
| | | | 93.00 | Walnut | 10–15 | 6 | [22] |
| | | | 84.00 | Olive | 6–10 | 1 | |
| | | | 75.61 | Olive | 6–10 | 13 | [25] |
| RGB & CHM | GLI, VARI, NDTI, RGBVI, ExG, GLCM | SVM | 93.00 | Oak | 6–9 | N/A | [32] |
| RGB & TIR | Binary Map | U-Net | 96.51 | Various | N/A | RGB: 2.3; TIR: 10.8 | [31] |
| Multispectral | NDVI | CNN | 99.80 | Citrus | 3–6 | 5 | [34] |
| | | Circular Hough Transform | 96.00 | Palm | <12 | 30 | [33] |
| | | Mask R-CNN | 82.58 | Olive | 6–10 | 13 | [25] |
| | GNDVI | Mask R-CNN | 77.38 | Olive | 6–10 | 13 | [25] |

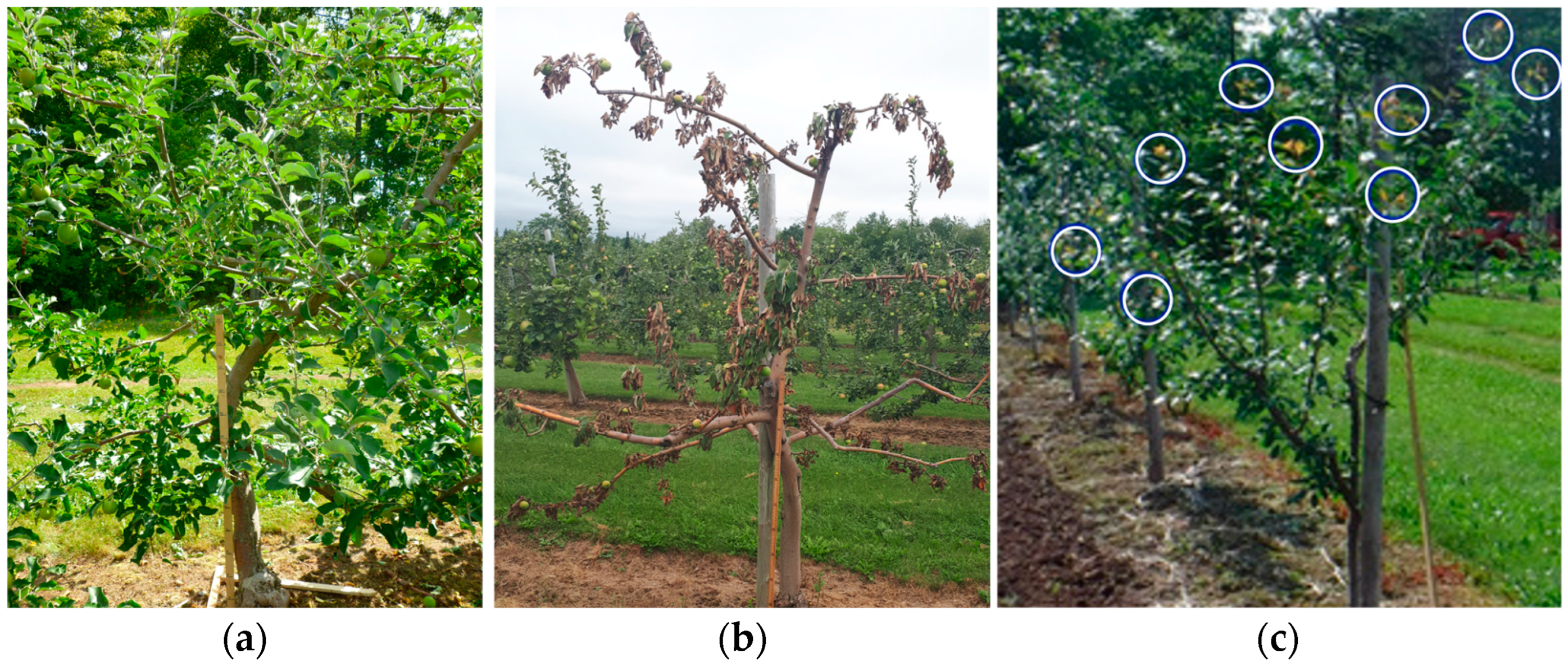

1.2. Tree Health Status Assessment

1.3. 1-Step Method

2. Materials and Methods

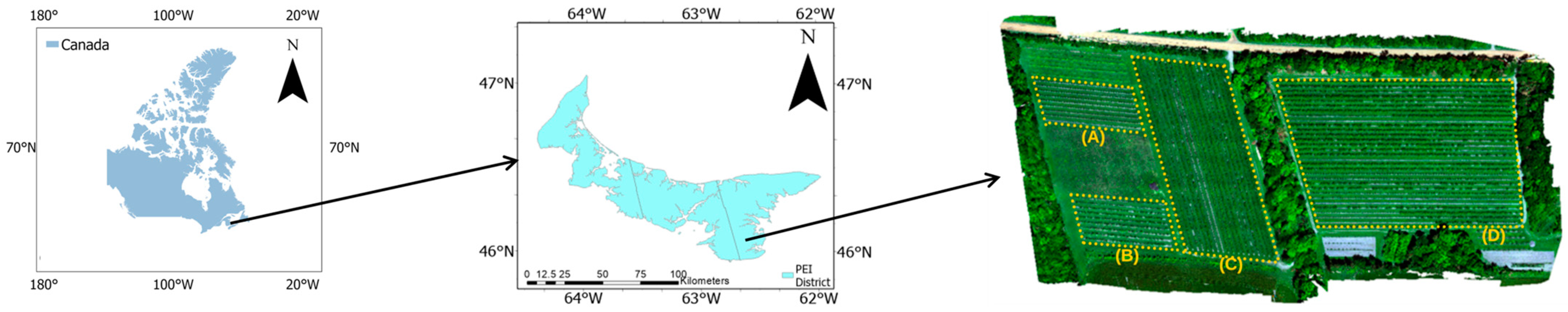

2.1. Study Area and UAV Imagery

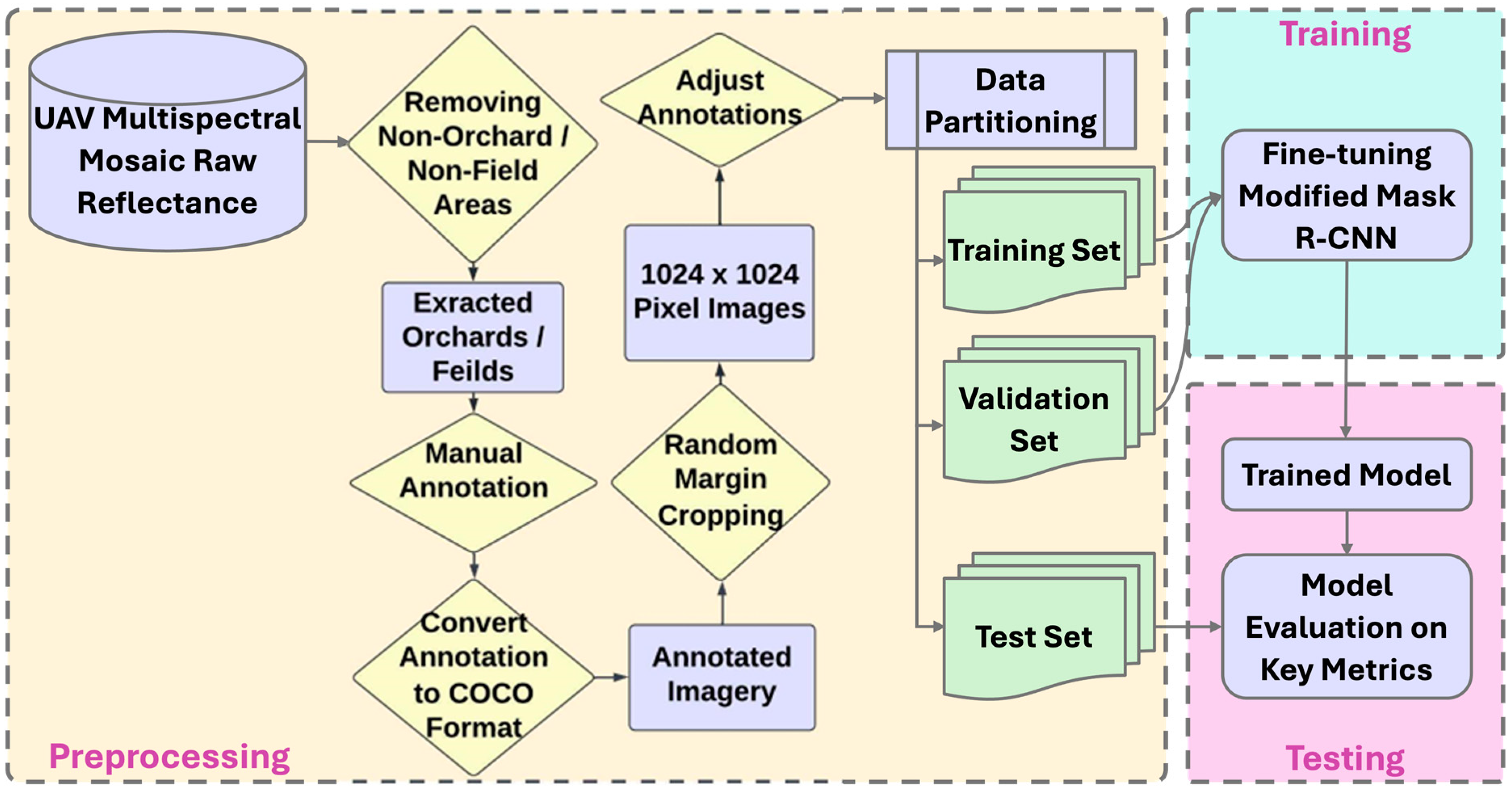

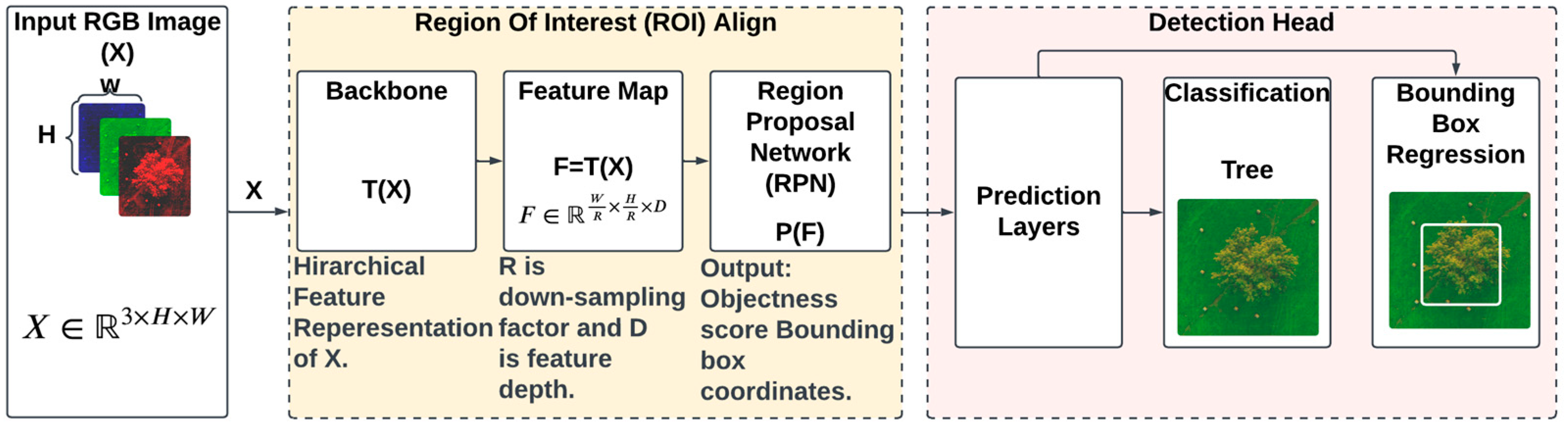

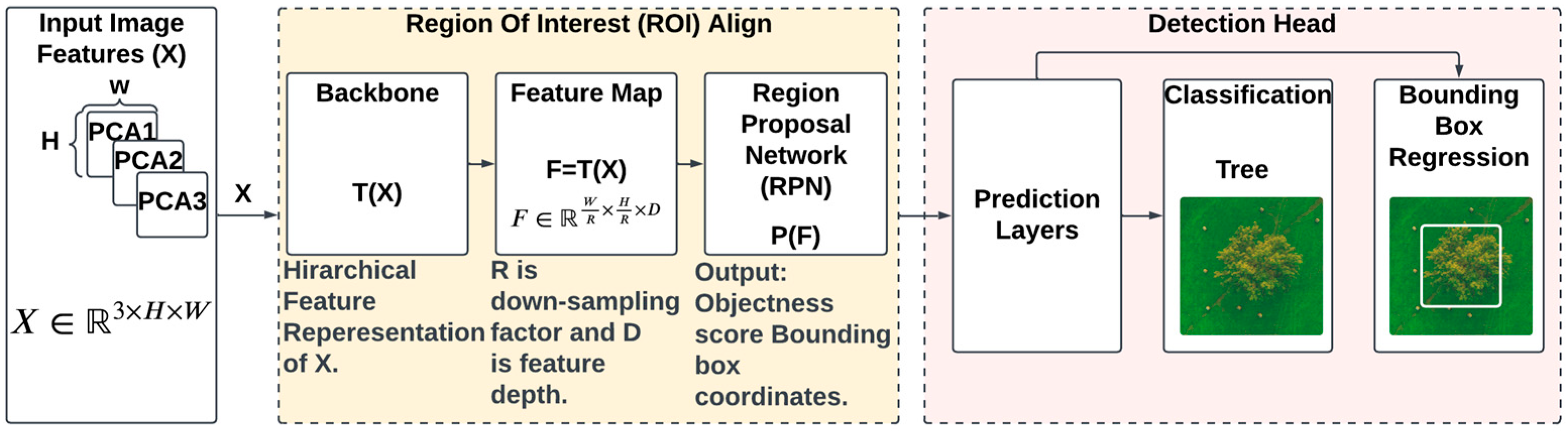

2.2. Methodology

2.2.1. Preprocessing

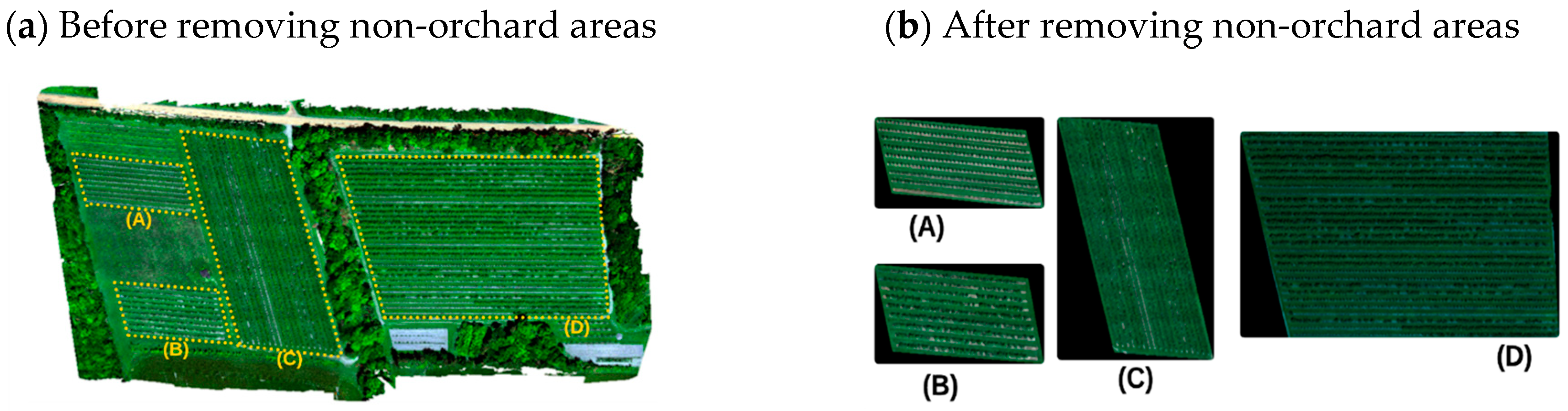

Removing Non-Orchard Areas

Annotation

Random Margin Cropping

- Producing patches of a consistent 1024 × 1024-pixel size that match the model input requirements, so that they enter training without additional resizing (which could introduce distortion) or other preprocessing steps.

- Producing enough samples to train a model that generalizes well, while minimizing bias during training and validation.

- Including contextual information surrounding the trees.

- Supporting data augmentation by creating variability in the extracted patches and increasing sample diversity.
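The cropping objectives above can be illustrated with a minimal sketch. It assumes, since the procedure is not spelled out here, that each patch is cut around an annotated tree crown's bounding box and that the "random margin" comes from drawing the patch origin uniformly among all positions that keep the whole crown inside the 1024 × 1024 window and the window inside the image. `random_margin_crop` is a hypothetical helper, not the authors' code.

```python
import numpy as np

PATCH = 1024  # target patch size in pixels, as stated in the text


def random_margin_crop(image, box, patch=PATCH, rng=None):
    """Cut a patch x patch window that fully contains `box` at a random offset.

    Hypothetical helper: `image` is an (H, W, C) array and `box` is
    (row_min, col_min, row_max, col_max) in pixels, max bounds exclusive.
    Returns the patch and its (row, col) origin in the source image.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    r0, c0, r1, c1 = box
    if r1 - r0 > patch or c1 - c0 > patch:
        raise ValueError("object is larger than the patch")
    if h < patch or w < patch:
        raise ValueError("image is smaller than the patch")
    # Valid origins keep the box inside the window and the window inside the image.
    row_lo, row_hi = max(0, r1 - patch), min(r0, h - patch)
    col_lo, col_hi = max(0, c1 - patch), min(c0, w - patch)
    row = int(rng.integers(row_lo, row_hi + 1))  # upper bound is exclusive
    col = int(rng.integers(col_lo, col_hi + 1))
    return image[row:row + patch, col:col + patch], (row, col)
```

Because the origin varies from call to call, repeated crops of the same tree show it at different positions with different surrounding context, which is one way to obtain the variability and contextual information listed above without resizing.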

Data Partitioning

2.2.2. Model Training

- Scenario 1 uses RGB images, as in the original Mask R-CNN;

- Scenario 2 is based on multispectral 5-band imagery;

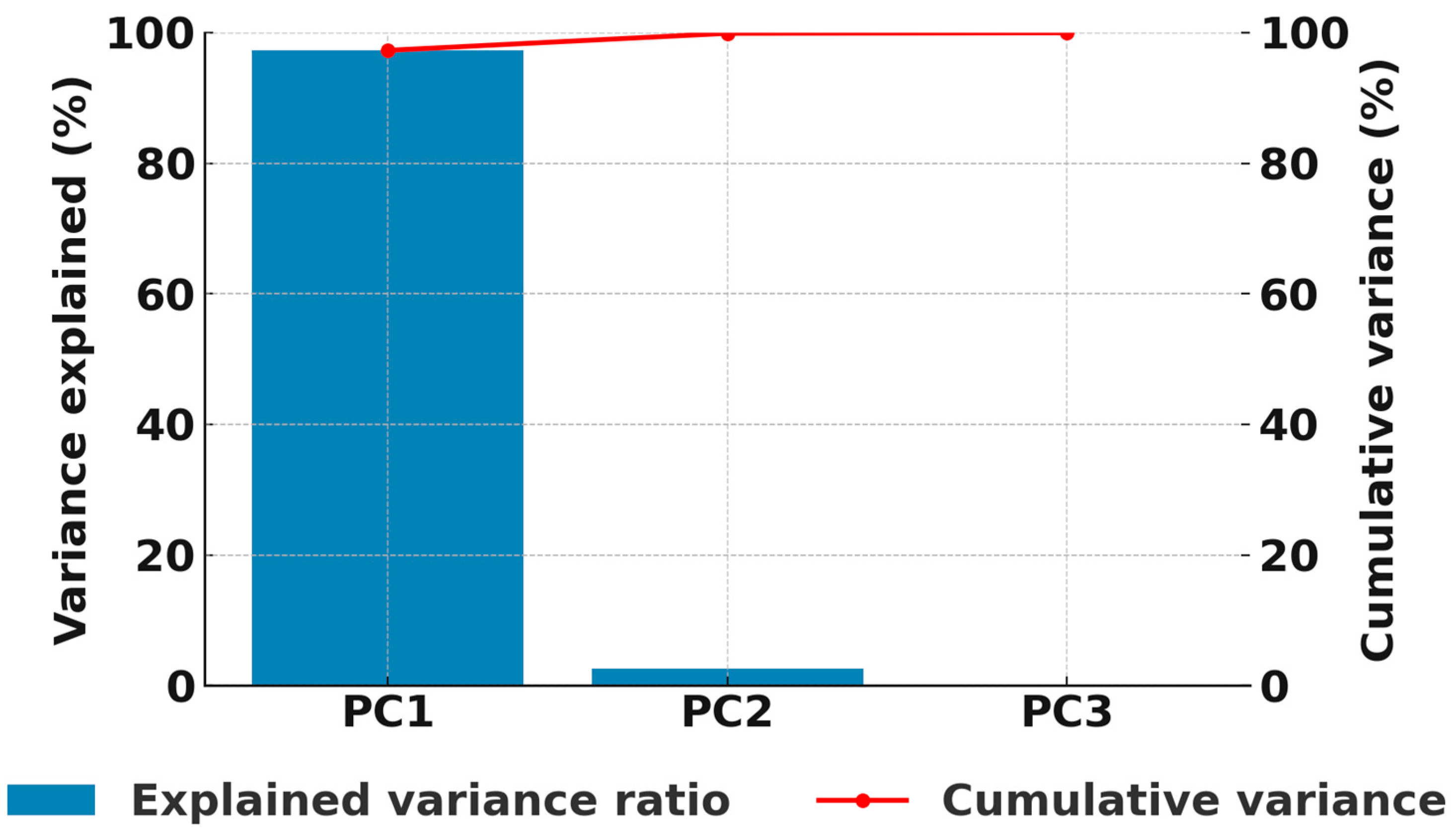

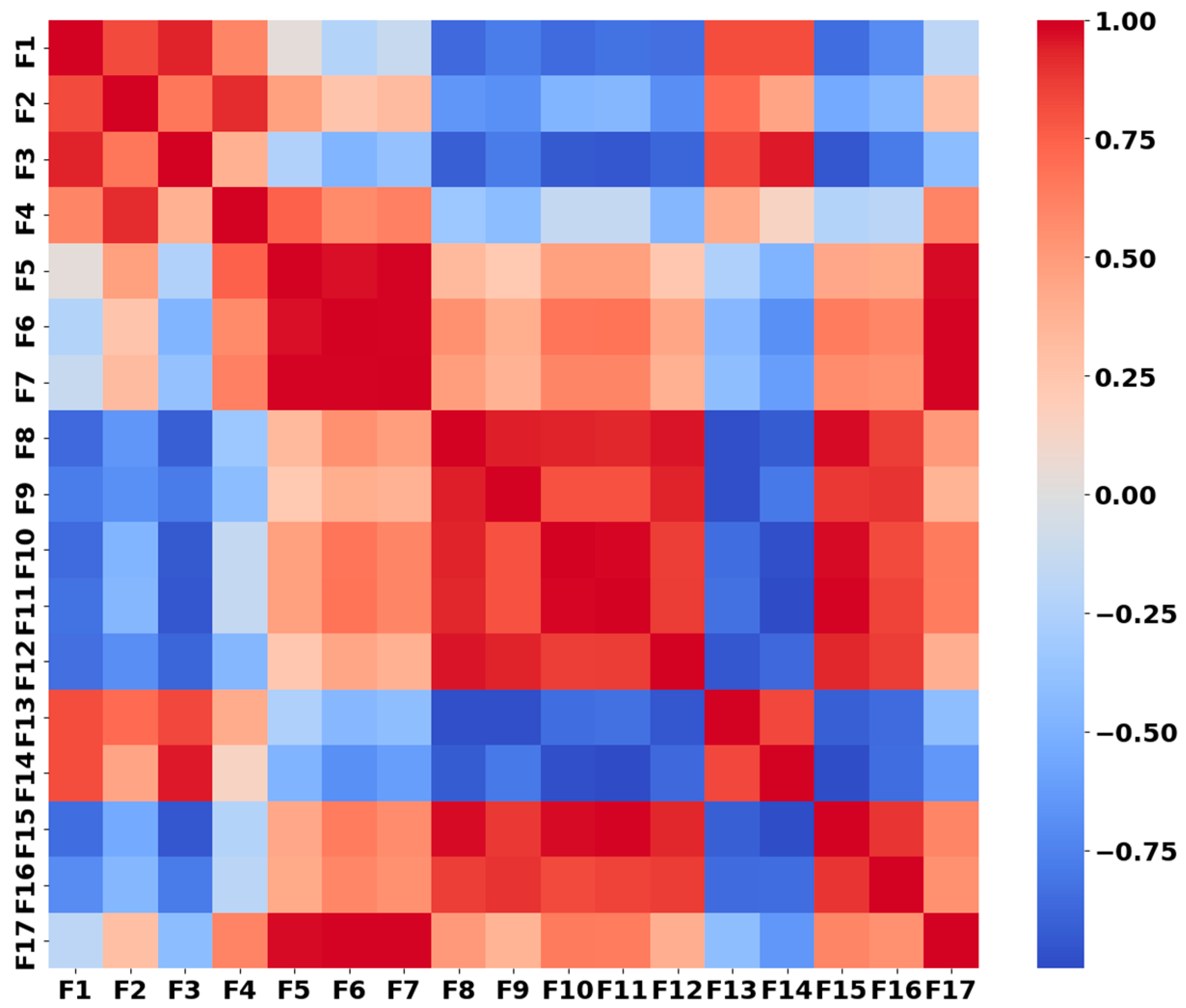

- Scenario 3 involves three principal components that were computed using the multispectral 5-band reflectance images and their associated vegetation index images.
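Scenario 3 can be sketched as a per-pixel principal component analysis over the stacked reflectance bands and vegetation-index layers, keeping the first three components as a 3-channel input. This is an illustration under stated assumptions: each layer is standardized before PCA (the source does not say whether the covariance or the correlation matrix was used), and `principal_components` is a hypothetical name.

```python
import numpy as np


def principal_components(stack, n_components=3):
    """Project an (H, W, L) stack of bands/indices onto its leading principal components.

    Each pixel is one observation and each layer one variable. Layers are
    standardized first (assumption), so indices and reflectances with very
    different ranges contribute comparably.
    Returns the (H, W, n_components) component image and the explained-variance ratios.
    """
    h, w, n_layers = stack.shape
    x = stack.reshape(-1, n_layers).astype(np.float64)
    x = (x - x.mean(axis=0)) / (x.std(axis=0) + 1e-12)
    cov = np.cov(x, rowvar=False)                 # (L, L) covariance between layers
    eigvals, eigvecs = np.linalg.eigh(cov)        # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    pcs = x @ eigvecs[:, order]                   # scores on the leading components
    explained = eigvals[order] / eigvals.sum()
    return pcs.reshape(h, w, n_components), explained
```

For example, a stack of the five reflectance bands plus the vegetation-index layers would be passed as one (H, W, L) array; the returned 3-channel image then has the same shape as an RGB input, which is what lets it reuse a 3-channel backbone.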

Scenario 1: RGB Imagery

Scenario 2: Multispectral Imagery

- Conv1 channel expansion: The ImageNet conv1 weight tensor (64, 3, 7, 7) was replaced with (64, 5, 7, 7) so the backbone directly ingests 5 channels.

- Weight initialization: The RGB slices of conv1 were copied from the pretrained weights, and the two additional slices (Red-Edge, NIR) were initialized to zero. During fine-tuning, the network learns band-specific filters for these channels. In practice, this zero initialization proved more stable than initializing the added slices with random values.

- Preprocessing and ordering: Bands are stacked in the order [B, G, R, RE, NIR] and standardized per band using training-set means and standard deviations.
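The conv1 expansion and per-band standardization above can be sketched with NumPy arrays standing in for framework tensors. This is a minimal sketch, not the authors' code: `expand_conv1`, `fit_band_stats`, and `standardize` are hypothetical names, and the channel mapping assumes the pretrained kernel expects RGB channel order (as torchvision's ImageNet weights do; Caffe-derived weights expect BGR). The mapping matters because the bands are stacked as [B, G, R, RE, NIR], so a naive slice copy would pair the pretrained red filter with the blue band.

```python
import numpy as np

BAND_ORDER = ("B", "G", "R", "RE", "NIR")  # stacking order given in the text


def expand_conv1(rgb_weight, pretrained_order=("R", "G", "B"), band_order=BAND_ORDER):
    """Expand a (64, 3, 7, 7) pretrained conv1 kernel to one input slice per band.

    Visible bands copy the pretrained slice of the same color; bands the
    pretrained kernel never saw (Red-Edge, NIR) are zero-initialized.
    """
    out_ch, in_ch, kh, kw = rgb_weight.shape
    if in_ch != len(pretrained_order):
        raise ValueError("pretrained_order must list one name per input channel")
    new = np.zeros((out_ch, len(band_order), kh, kw), dtype=rgb_weight.dtype)
    for i, band in enumerate(band_order):
        if band in pretrained_order:
            new[:, i] = rgb_weight[:, pretrained_order.index(band)]
    return new


def fit_band_stats(train_images):
    """Per-band mean and standard deviation over (H, W, bands) training arrays."""
    pixels = np.concatenate([img.reshape(-1, img.shape[-1]) for img in train_images])
    return pixels.mean(axis=0), pixels.std(axis=0)


def standardize(image, mean, std, eps=1e-8):
    """Standardize any split with the training-set statistics (broadcast over bands)."""
    return (image - mean) / (std + eps)
```

Because the Red-Edge and NIR slices start at zero, conv1's response at initialization depends only on the visible bands, so fine-tuning starts from the pretrained RGB behavior and the new filters grow from there. Computing the statistics on the training split only and reusing them for validation and test avoids leaking information across splits.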

Scenario 3: Multispectral Imagery & Vegetation Indices

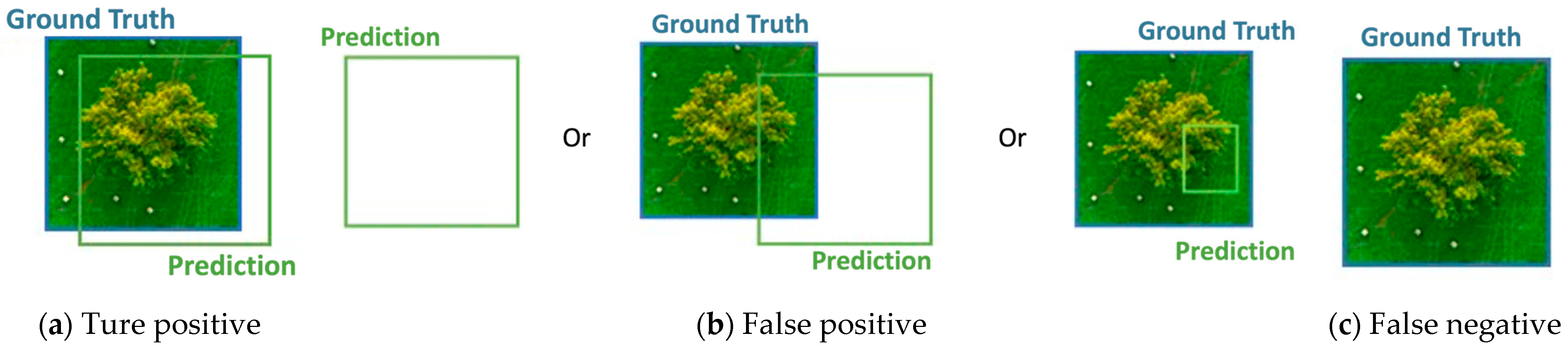

2.2.3. Performance Evaluation

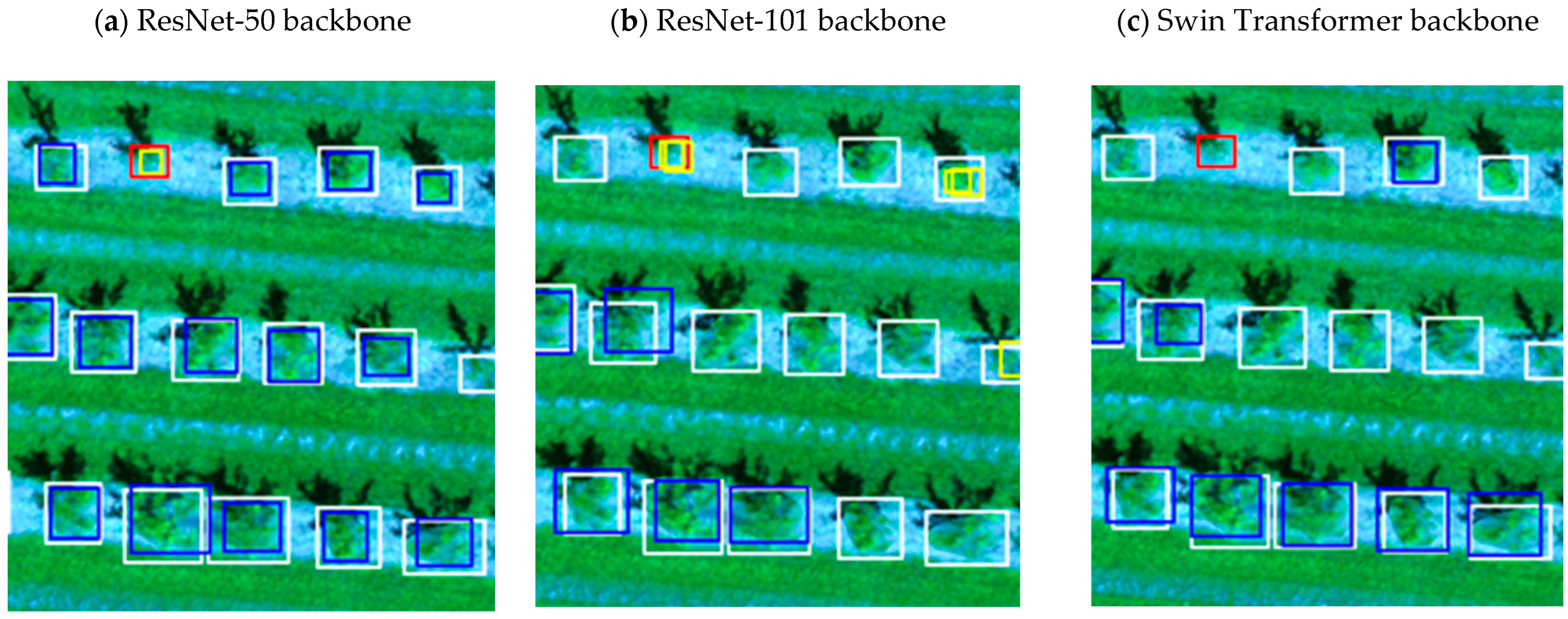

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANOVA | Analysis of Variance |
| BC-DPC | Betweenness Centrality-Density Peak Clustering |
| BGRI | Blue Green Red Index = Green/(Blue + Red) |
| BNDVI | Blue Normalized Difference Vegetation Index = (NIR − Blue)/(NIR + Blue) |
| Bright | Crown Brightness = Red + Green + Blue |
| CHM | Canopy Height Model |
| CIG | Chlorophyll Index Green = NIR/Green − 1 |
| CIRE | Chlorophyll Index Red Edge = NIR/RedEdge − 1 |
| CNN | Convolutional Neural Network |
| CPA | Crown Projection Area |
| CRI | Carotenoid Reflectance Index = (1/R510) − (1/R550) |
| CropdocNet | Novel end-to-end deep learning model |
| CVI | Chlorophyll Vegetation Index = (NIR × Red)/Green² |
| DT | Decision Tree |
| DVI | Difference Vegetation Index = NIR − Red |
| EGI | Excessive Green Index = 2 × Green − Red − Blue |
| EGMRI | Excessive Green Minus Red Index = 3 × Green − 2.4 × Red − Blue |
| ELM | Extreme Learning Machine |
| EPF | Edge-Preserving Filter |
| ER | Excessive Red = 1.4 × Red − Green |
| EVI | Enhanced Vegetation Index = 2.5 × (NIR − Red)/(NIR + 6 × Red − 7.5 × Blue + 1) |
| EVI2 | Two-band Enhanced Vegetation Index = 2.5 × (NIR − Red)/(NIR + 2.4 × Red + 1) |
| ExG | Excess Green Index = 2 × Green − Red − Blue |
| ExGR | Excess Green minus Excess Red = ExG − (1.4 × Red − Green) |
| ExRE | Excess Red Edge = 2 × RedEdge − Green − Blue |
| FC-DenseNet | Fully Convolutional DenseNet |
| G/R | Green to Red ratio = Green/Red |
| GBVI | Green-Blue Vegetation Index = Green − Blue |
| GDVI | Green Difference Vegetation Index = NIR − Green |
| GLCM | Gray Level Co-occurrence Matrix |
| GLI | Green Leaf Index = (2 × Green − Blue − Red)/(2 × Green + Blue + Red) |
| GNDVI | Green Normalized Difference Vegetation Index = (NIR − Green)/(NIR + Green) |
| GRVI | Green-Red Vegetation Index = Green − Red |
| GSAVI | Green Soil-Adjusted Vegetation Index = (NIR − Green)/(NIR + Green + 0.5) × 1.5 |
| HNM | Hard Negative Mining |
| HOG | Histogram of Oriented Gradients |
| ITC | Individual Tree Crown |
| KNN | K-Nearest Neighbors |
| LAI | Leaf area index = − (1/k) ln (a (1 − bEVI2)) |
| LMT | Logistic Model Tree |
| AdaBoost | Adaptive Boosting |
| M-CR | MultiConvolution Residual |
| MGRVI | Modified Green Red Vegetation Index = (Green² − Red²)/(Green² + Red²) |
| Morph. Ops | Morphological Operations |
| MSAVI | Modified Soil-Adjusted Vegetation Index = ((NIR − Red) × 1.5)/(NIR + Red + 0.5) |
| NCI | Normalized Color Intensities = (Blue − Green)/(Blue + Green) |
| NDAVI | Normalized Difference Aquatic Vegetation Index = (NIR − Blue)/(NIR + Blue) |
| NDI | Normalized Difference Index = (Green − Red)/(Green + Red) |
| NDRE | Normalized Difference Red Edge Index = (NIR − RedEdge)/(NIR + RedEdge) |
| NDREI | Normalized Difference Red Edge Index = (NIR − RedEdge)/(NIR + RedEdge) |
| NDTI | Normalized Difference Turbidity Index = (NIR − Red)/(NIR + Red) |
| NDVI | Normalized Difference Vegetation Index = (NIR − Red)/(NIR + Red) |
| NDVI textures | Energy, Entropy, Correlation, Inverse difference moment, Inertia |
| NDVI_GLCM | Texture (Mean, Variance, Homogeneity, Contrast, Dissimilarity, Entropy, Second Moment, Correlation) |
| NDVIRE | Red Edge Normalized Difference Vegetation Index = (NIR − RedEdge)/(NIR + RedEdge) |
| NG | Normalized Green = Green/(NIR + Red + Green) |
| NGB | Green Normalized by Blue = (Green − Blue)/(Green + Blue) |
| NGRVI | Normalized Green-Red Vegetation Index = (Green − Red)/(Green + Red) |
| NIR | Near InfraRed |
| NIR textures | Mean, Variance, Difference variance, Difference entropy, IC1, IC2 |
| NLI | Non-Linear Index = (NIR² − Red)/(NIR² + Red) |
| NNIR | Normalized Near-InfraRed = NIR/(NIR + Red + Green) |
| NR | Normalized Red = Red/(NIR + Red + Green) |
| NRB | Red Normalized by Blue = (Red − Blue)/(Red + Blue) |
| OSAVI | Optimized Soil Adjusted Vegetation Index = ((NIR − Red)/(NIR + Red + 0.15)) × (1 + 0.5) |
| OVCS | Overlapped Contour Separation |
| PG | Percent Greenness = Green/(Red + Green + Blue) |
| Pixel Size | Ground pixel size = (sensor pixel pitch × UAV flight height)/camera focal length |
| R/B | Red to Blue ratio = Red/Blue |
| R-CNN | Regions with Convolutional Neural Networks |
| REGNDVI | Green RENVI = (RedEdge − Green)/(RedEdge + Green) |
| RENDVI | Red Edge Normalized Difference Vegetation Index = (NIR − RedEdge)/(NIR + RedEdge) |
| RERNDVI | Red RENVI = (RedEdge − Red)/(RedEdge + Red) |
| RGBVI | Red Green Blue Vegetation Index = ((Green × Green) − (Red × Blue))/((Green × Green) + (Red × Blue)) |
| RGBVI | RGB Vegetation Index = (Green − Red + Blue)/(Green + Red + Blue) |
| SAVI | Soil-Adjusted Vegetation Index = ((NIR − Red)/(NIR + Red + L)) × (1 + L), where L is the soil brightness correction factor; with L = 0.5: (NIR − Red)/(NIR + Red + 0.5) × 1.5 |
| SCCCI | Simplified Canopy Chlorophyll Content Index = NDREI/NDVI |
| SEG | Semantic Segmentation |
| SMOTE | Synthetic Minority Oversampling Technique |
| SR | Simple Ratio = NIR/Red |
| SSF | Scale-Space Filtering |
| SVM | Support Vector Machine |
| TIR | Thermal InfraRed |
| VARI | Visible Atmospherically Resistant Index = (Green − Red)/(Green + Red − Blue) |
| VARIg | Vegetation Index Green = (Green − Red)/(Green + Red − Blue) |
| VI | Vegetation Index |
| VNIR | Visible and Near-Infrared |
| WBI | Water Band Index = R900/R970 |
| WI | Woebbecke Index = (Green − Blue)/(Red − Green) |

References

1. Eugenio, F.C.; Schons, C.T.; Mallmann, C.L.; Schuh, M.S.; Fernandes, P.; Badin, T.L. Remotely piloted aircraft systems and forests: A global state of the art and future challenges. Can. J. For. Res. 2020, 50, 705–716.

2. Ecke, S.; Dempewolf, J.; Frey, J.; Schwaller, A.; Endres, E.; Klemmt, H.J.; Tiede, D.; Seifert, T. UAV-based forest health monitoring: A systematic review. Remote Sens. 2022, 14, 3205.

3. Jemaa, H.; Bouachir, W.; Leblon, B.; LaRocque, A.; Haddadi, A.; Bouguila, N. UAV-based computer vision system for orchard apple tree detection and health assessment. Remote Sens. 2023, 15, 3558.

4. Li, H.; Chen, L.; Yao, Z.; Li, N.; Long, L.; Zhang, X. Intelligent identification of pine wilt disease infected individual trees using UAV-based hyperspectral imagery. Remote Sens. 2023, 15, 3295.

5. Miraki, M.; Sohrabi, H.; Fatehi, P.; Kneubuehler, M. Detection of mistletoe infected trees using UAV high spatial resolution images. J. Plant Dis. Prot. 2021, 128, 1679–1689.

6. Guerra-Hernández, J.; Díaz-Varela, R.A.; Álvarez-González, J.G.; Rodríguez-González, P.M. Assessing a novel modelling approach with high resolution UAV imagery for monitoring health status in priority riparian forests. For. Ecosyst. 2021, 8, 61.

7. Naseri, M.H.; Shataee Jouibary, S.; Habashi, H. Analysis of forest tree dieback using UltraCam and UAV imagery. Scand. J. For. Res. 2023, 38, 392–400.

8. Brovkina, O.; Cienciala, E.; Surový, P.; Janata, P. Unmanned Aerial Vehicles (UAV) for assessment of qualitative classification of Norway spruce in temperate forest stands. Geo-Spat. Inf. Sci. 2018, 21, 12–20.

9. Moriya, É.A.; Imai, N.N.; Tommaselli, A.M.; Berveglieri, A.; Santos, G.H.; Soares, M.A.; Marino, M.; Reis, T.T. Detection and mapping of trees infected with citrus gummosis using UAV hyperspectral data. Comput. Electron. Agric. 2021, 188, 106298.

10. Näsi, R.; Honkavaara, E.; Lyytikäinen-Saarenmaa, P.; Blomqvist, M.; Litkey, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Tanhuanpää, T.; Holopainen, M. Using UAV-based photogrammetry and hyperspectral imaging for mapping bark beetle damage at tree-level. Remote Sens. 2015, 7, 15467–15493.

11. Näsi, R.; Honkavaara, E.; Blomqvist, M.; Lyytikäinen-Saarenmaa, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Holopainen, M. Remote sensing of bark beetle damage in urban forests at individual tree level using a novel hyperspectral camera from UAV and aircraft. Urban For. Urban Green. 2018, 30, 72–83.

12. Zhang, N.; Wang, Y.; Zhang, X. Extraction of tree crowns damaged by Dendrolimus tabulaeformis Tsai et Liu via spectral-spatial classification using UAV-based hyperspectral images. Plant Methods 2020, 16, 135.

13. Honkavaara, E.; Näsi, R.; Oliveira, R.; Viljanen, N.; Suomalainen, J.; Khoramshahi, E.; Hakala, T.; Nevalainen, O.; Markelin, L.; Vuorinen, M.; et al. Using multitemporal hyper- and multispectral UAV imaging for detecting bark beetle infestation on Norway spruce. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 429–434.

14. Barmpoutis, P.; Stathaki, T.; Kamperidou, V. Monitoring of trees’ health condition using a UAV equipped with low-cost digital camera. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2019), Brighton, UK, 12–17 May 2019.

15. Kent, O.W.; Chun, T.W.; Choo, T.L.; Kin, L.W. Early symptom detection of basal stem rot disease in oil palm trees using a deep learning approach on UAV images. Comput. Electron. Agric. 2023, 213, 108192.

16. Jiang, X.; Wu, Z.; Han, S.; Yan, H.; Zhou, B.; Li, J. A multi-scale approach to detecting standing dead trees in UAV RGB images based on improved faster R-CNN. PLoS ONE 2023, 18, e0281084.

17. Dolgaia, L.; Illarionova, S.; Nesteruk, S.; Krivolapov, I.; Baldycheva, A.; Somov, A.; Shadrin, D. Apple tree health recognition through the application of transfer learning for UAV imagery. In Proceedings of the IEEE 28th International Conference on Emerging Technologies and Factory Automation (ETFA 2023), Sinaia, Romania, 12–15 September 2023.

18. Hofinger, P.; Klemmt, H.J.; Ecke, S.; Rogg, S.; Dempewolf, J. Application of YOLOv5 for point label based object detection of black pine trees with vitality losses in UAV data. Remote Sens. 2023, 15, 1964.

19. Puliti, S.; Astrup, R. Automatic detection of snow breakage at single tree level using YOLOv5 applied to UAV imagery. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102946.

20. Wang, Y.; Zhu, X.; Wu, B. Automatic detection of individual oil palm trees from UAV images using HOG features and an SVM classifier. Int. J. Remote Sens. 2019, 40, 7356–7370.

21. Erdem, F.; Ocer, N.E.; Matci, D.K.; Kaplan, G.; Avdan, U. Apricot tree detection from UAV-images using mask R-CNN and U-NET. Photogramm. Eng. Remote Sens. 2023, 89, 89–96.

22. Șandric, I.; Irimia, R.; Petropoulos, G.P.; Anand, A.; Srivastava, P.K.; Pleșoianu, A.; Faraslis, I.; Stateras, D.; Kalivas, D. Tree’s detection & health’s assessment from ultra-high resolution UAV imagery and deep learning. Geocarto Int. 2022, 37, 10459–10479.

23. Gibril, M.B.; Shafri, H.Z.; Shanableh, A.; Al-Ruzouq, R.; Wayayok, A.; Hashim, S.J.; Sachit, M.S. Deep convolutional neural networks and Swin transformer-based frameworks for individual date palm tree detection and mapping from large-scale UAV images. Geocarto Int. 2022, 37, 18569–18599.

24. Yu, K.; Hao, Z.; Post, C.J.; Mikhailova, E.A.; Lin, L.; Zhao, G.; Tian, S.; Liu, J. Comparison of classical methods and mask R-CNN for automatic tree detection and mapping using UAV imagery. Remote Sens. 2022, 14, 295.

25. Safonova, A.; Guirado, E.; Maglinets, Y.; Alcaraz-Segura, D.; Tabik, S. Olive tree biovolume from UAV multi-resolution image segmentation with Mask R-CNN. Sensors 2021, 21, 1617.

26. Lobo Torres, D.; Queiroz Feitosa, R.; Nigri Happ, P.; Elena Cué La Rosa, L.; Marcato Junior, J.; Martins, J.; Olã Bressan, P.; Gonçalves, W.N.; Liesenberg, V. Applying fully convolutional architectures for semantic segmentation of a single tree species in urban environment on high resolution UAV optical imagery. Sensors 2020, 20, 563.

27. Ferreira, M.P.; de Almeida, D.R.; de Almeida Papa, D.; Minervino, J.B.; Veras, H.F.; Formighieri, A.; Santos, C.A.; Ferreira, M.A.; Figueiredo, E.O.; Ferreira, E.J. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manag. 2020, 475, 118397.

28. Kestur, R.; Angural, A.; Bashir, B.; Omkar, S.N.; Anand, G.; Meenavathi, M.B. Tree crown detection, delineation and counting in UAV remote sensed images: A neural network based spectral–spatial method. J. Indian Soc. Remote Sens. 2018, 46, 991–1004.

29. Wang, J.; Zhang, H.; Liu, Y.; Zhang, H.; Zheng, D. Tree-Level Chinese Fir Detection Using UAV RGB Imagery and YOLO-DCAM. Remote Sens. 2024, 16, 335.

30. Kaviani, M.; Akilan, T.; Leblon, B.; Amishev, D.; Haddadi, A.; LaRocque, A. Comparison of YOLOv7 and YOLOv8n for tree detection on UAV RGB imagery. In Proceedings of the 45th Canadian Symposium on Remote Sensing, Halifax, NS, Canada, 10–13 June 2024.

31. Moradi, F.; Javan, F.D.; Samadzadegan, F. Potential evaluation of visible-thermal UAV image fusion for individual tree detection based on convolutional neural network. Int. J. Appl. Earth Obs. Geoinf. 2022, 113, 103011.

32. Ghasemi, M.; Latifi, H.; Pourhashemi, M. A novel method for detecting and delineating coppice trees in UAV images to monitor tree decline. Remote Sens. 2022, 14, 5910.

33. Al Mansoori, S.; Kunhu, A.; Al Ahmad, H. Automatic palm trees detection from multispectral UAV data using normalized difference vegetation index and circular Hough transform. In Proceedings of the SPIE Remote Sensing, Berlin, Germany, 10–13 September 2018.

34. Ampatzidis, Y.; Partel, V. UAV-based high throughput phenotyping in citrus utilizing multispectral imaging and artificial intelligence. Remote Sens. 2019, 11, 410.

35. Suab, S.A.; Syukur, M.S.; Avtar, R.; Korom, A. Unmanned Aerial Vehicle (UAV) derived normalised difference vegetation index (NDVI) and crown projection area (CPA) to detect health conditions of young oil palm trees for precision agriculture. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 611–614.

36. Anisa, M.N.; Hernina, R. UAV application to estimate oil palm trees health using Visible Atmospherically Resistant Index (VARI) (Case study of Cikabayan Research Farm, Bogor City). In Proceedings of the 2nd International Conference on Sustainable Agriculture and Food Security (ICSAFS), West Java, Indonesia, 28–29 October 2020.

37. Marques, P.; Pádua, L.; Adão, T.; Hruška, J.; Peres, E.; Sousa, A.; Sousa, J.J. UAV-based automatic detection and monitoring of chestnut trees. Remote Sens. 2019, 11, 855.

38. Wu, Y.; Li, X.; Zhang, Q.; Zhou, X.; Qiu, H.; Wang, P. Recognition of spider mite infestations in jujube trees based on spectral-spatial clustering of hyperspectral images from UAVs. Front. Plant Sci. 2023, 14, 1078676.

39. Safonova, A.; Tabik, S.; Alcaraz-Segura, D.; Rubtsov, A.; Maglinets, Y.; Herrera, F. Detection of fir trees (Abies sibirica) damaged by the bark beetle in unmanned aerial vehicle images with deep learning. Remote Sens. 2019, 11, 643.

40. Bergmüller, K.O.; Vanderwel, M.C. Predicting tree mortality using spectral indices derived from multispectral UAV imagery. Remote Sens. 2022, 14, 2195.

41. Hu, G.; Yin, C.; Wan, M.; Zhang, Y.; Fang, Y. Recognition of diseased Pinus trees in UAV images using deep learning and AdaBoost classifier. Biosyst. Eng. 2020, 194, 138–151.

42. Iqbal, M.S.; Ali, H.; Tran, S.N.; Iqbal, T. Coconut trees detection and segmentation in aerial imagery using mask region-based convolution neural network. IET Comput. Vis. 2021, 15, 428–439.

43. Mo, J.; Lan, Y.; Yang, D.; Wen, F.; Qiu, H.; Chen, X.; Deng, X. Deep learning-based instance segmentation method of litchi canopy from UAV-acquired images. Remote Sens. 2021, 13, 3919.

44. Elharrouss, O.; Akbari, Y.; Almadeed, N.; Al-Maadeed, S. Backbones-review: Feature extractor networks for deep learning and deep reinforcement learning approaches in computer vision. Comput. Sci. Rev. 2024, 53, 100645.

45. Li, Y.; Wang, H.; Dang, L.M.; Song, H.K.; Moon, H. Orcnn-x: Attention-driven multiscale network for detecting small objects in complex aerial scenes. Remote Sens. 2023, 15, 3497.

46. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021.

47. Jeevan, P.; Sethi, A. Which backbone to use: A resource-efficient domain specific comparison for computer vision. arXiv 2024, arXiv:2406.05612.

48. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

49. Arjoune, Y.; Peri, S.; Sugunaraj, N.; Biswas, A.; Sadhukhan, D.; Ranganathan, P. An instance segmentation and clustering model for energy audit assessments in built environments: A multi-stage approach. Sensors 2021, 21, 4375.

50. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

51. Dash, J.P.; Watt, M.S.; Pearse, G.D.; Heaphy, M.; Dungey, H.S. Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak. ISPRS J. Photogramm. Remote Sens. 2017, 131, 1–14.

52. Cogato, A.; Pagay, V.; Marinello, F.; Meggio, F.; Grace, P.; De Antoni Migliorati, M. Assessing the feasibility of using Sentinel-2 imagery to quantify the impact of heatwaves on irrigated vineyards. Remote Sens. 2019, 11, 2869.

53. Hawryło, P.; Bednarz, B.; Wężyk, P.; Szostak, M. Estimating defoliation of Scots pine stands using machine learning methods and vegetation indices of Sentinel-2. Eur. J. Remote Sens. 2018, 51, 194–204.

54. Kobayashi, N.; Tani, H.; Wang, X.; Sonobe, R. Crop classification using spectral indices derived from Sentinel-2A imagery. J. Inf. Telecommun. 2020, 4, 67–90.

55. Zarco-Tejada, P.J.; Miller, J.R.; Mohammed, G.H.; Noland, T.L.; Sampson, P.H. Vegetation stress detection through chlorophyll a+b estimation and fluorescence effects on hyperspectral imagery. J. Environ. Qual. 2002, 31, 1433–1441.

56. Barry, K.M.; Stone, C.; Mohammed, C.L. Crown-scale evaluation of spectral indices for defoliated and discoloured eucalypts. Int. J. Remote Sens. 2008, 29, 47–69.

57. Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, W.S.; Rasmussen, J.; Ehsani, R. Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 2013, 91, 106–115.

58. Verbesselt, J.; Robinson, A.; Stone, C.; Culvenor, D. Forecasting tree mortality using change metrics derived from MODIS satellite data. For. Ecol. Manag. 2009, 258, 1166–1173.

59. Oumar, Z.; Mutanga, O. Using WorldView-2 bands and indices to predict bronze bug (Thaumastocoris peregrinus) damage in plantation forests. Int. J. Remote Sens. 2013, 34, 2236–2249.

60. Datt, B. Remote sensing of chlorophyll a, chlorophyll b, chlorophyll a+b, and total carotenoid content in eucalyptus leaves. Remote Sens. Environ. 1998, 66, 111–121.

61. Deng, X.; Guo, S.; Sun, L.; Chen, J. Identification of short-rotation eucalyptus plantation at large scale using multi-satellite imageries and cloud computing platform. Remote Sens. 2020, 12, 2153.

62. Bajwa, S.G.; Tian, L. Multispectral CIR image calibration for cloud shadow and soil background influence using intensity normalization. Appl. Eng. Agric. 2002, 18, 627.

63. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

64. Sripada, R.P.; Heiniger, R.W.; White, J.G.; Meijer, A.D. Aerial color infrared photography for determining early in-season nitrogen requirements in corn. Agron. J. 2006, 98, 968–977.

65. Buschmann, C.; Nagel, E. In vivo spectroscopy and internal optics of leaves as basis for remote sensing of vegetation. Int. J. Remote Sens. 1993, 14, 711–722.

66. Villa, P.; Mousivand, A.; Bresciani, M. Aquatic vegetation indices assessment through radiative transfer modeling and linear mixture simulation. Int. J. Appl. Earth Obs. Geoinf. 2014, 30, 113–127.

67. Barnes, E.M.; Clarke, T.R.; Richards, S.E.; Colaizzi, P.D.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T.; et al. Coincident detection of crop water stress, nitrogen status, and canopy density using ground-based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000.

68. Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In Proceedings of the Third ERTS Symposium, Washington, DC, USA, 10–14 December 1973; Volume 1A, pp. 309–317.

69. Chen, G.; Qian, S.E. Simultaneous dimensionality reduction and denoising of hyperspectral imagery using bivariate wavelet shrinking and principal component analysis. Can. J. Remote Sens. 2008, 34, 447–454.

70. Food and Agriculture Organization of the United Nations (FAO). Understanding the Context | Pest and Pesticide Management. FAO. Available online: https://www.fao.org/pest-and-pesticide-management/about/understanding-the-context/en/ (accessed on 25 August 2025).

71. Shadrin, D.; Pukalchik, M.; Uryasheva, A.; Tsykunov, E.; Yashin, G.; Rodichenko, N.; Tsetserukou, D. Hyper-spectral NIR and MIR data and optimal wavebands for detection of apple tree diseases. arXiv 2020, arXiv:2004.02325.

| Imagery Type | Input Feature (*) | Method (*) | Classification Accuracy (%) | Number of Classes | Species | Reference |
|---|---|---|---|---|---|---|
| RGB | Textural Features | Linear Dynamic System | 93.80 | 3 | Fir | [14] |
| | ExG, ExGR, NGRDI, NGB, NRB, VARI, WI, R/B, G/R | Random Forest | 87.00 | 2 | Various | [5] |
| Multispectral | DVI, GDVI, GNDVI, GRVI, NDAVI, NDVI, NDRE, NG, NR, NNIR | Random Forest | 97.52 | 2 | Apple | [3] |
| | NDVI, GNDVI, RENDVI, REGNDVI, RERNDVI, NGRVI, NLI, OSAVI, NDVI_GLCM | Logistic Regression | 94.00 | 2 | Various forest tree species | [6] |
| | | Random Forest | 91.00 | | | |
| | Blue, Green, Red, NIR, Mean, Variance, Entropy, Second Moment, NDVI, GNDVI | Naïve Bayes | 91.20 | 4 | Various | [7] |
| | VNIR, NDVI | Qualitative Classification | 78.40 | 9 | Norway spruce, Beech, Fir | [8] |
| | PG, ER, NDI, EGI, EGMRI, VARI, GLI, NCI, Bright, NDVI, NDRE | Random Forest | 85.20 | 2 | Lodgepole pine | [40] |
| | | | 77.80 | | White spruce | |
| | | | 73.30 | | Trembling aspen | |
| Hyperspectral | NDVI, ANOVA-based band selection, 22-band spectra | KNN | 94.29 | 2 | Norway spruce | [10] |
| | Reflectance (25 spectral bands) | Spectral Angle Mapper Classification | 94.00 | 2 | Citrus | [9] |
| | 24 spectral bands, VI | SVM | 93.00 | 2 | Spruce | [11] |
| | Reflectance (125 spectral bands) | EPF + SVM | 93.17 | 4 | Pinus tabulaeformis | [12] |
| | Reflectance (8 spectral bands) | Prototypical Network Classification | 74.89 | 4 | Pine | [4] |
| | 46 spectral bands, VI | Random Forest | 40–55 | 3 | Norway spruce | [13] |

| Method (*) | F1-Score (%) | Pixel Size (cm) | Species | Region | Reference |
|---|---|---|---|---|---|
| M-CR U-NET with OVCS | 98.55 | N/A | Oil Palm | Indonesia | [15] |
| Faster R-CNN | 93.90 | 5.1 | Broadleaved trees, Conifers | China | [16] |
| YOLOv5 | 70.80 | 1.5 | Apple | Russia | [17] |
| | 67–77 | 2–4 | Pine | Germany | [18] |
| | 61.46 | 3 | Forest tree species | Norway | [19] |

| Backbone | Parameters (Million) | GFLOPs* per 1024 × 1024-Pixel Image (3-Band) | GFLOPs* per 1024 × 1024-Pixel Image (5-Band) |
|---|---|---|---|
| ResNet-50 | 26 | 41.30 | 424.85 |
| ResNet-101 | 44 | 60.20 | 557.80 |
| ResNeXt-101 (32 × 8d) | 89 | 104.60 | 930.70 |
| Swin Transformer (Base) | 88 | 924.97 | 924.97 |

| Vegetation Index | Equation | Reference |
|---|---|---|
| Difference Vegetation Index | DVI = Near-infrared (NIR) − Red | [63] |
| Green Difference Vegetation Index | GDVI = NIR − Green | [64] |
| Green Normalized Difference Vegetation Index | GNDVI = (NIR − Green)/(NIR + Green) | [65] |
| Green Ratio Vegetation Index | GRVI = NIR/Green | [64] |
| Normalized Difference Aquatic Vegetation Index | NDAVI = (NIR − Blue)/(NIR + Blue) | [66] |
| Normalized Difference Vegetation Index | NDVI = (NIR − Red)/(NIR + Red) | [63] |
| Normalized Difference Red-Edge | NDRE = (NIR − RedEdge)/(NIR + RedEdge) | [67] |
| Normalized Green | NG = Green/(NIR + Red + Green) | [62] |
| Normalized Red | NR = Red/(NIR + Red + Green) | [62] |
| Normalized NIR | NNIR = NIR/(NIR + Red + Green) | [62] |
| Red Simple Ratio Vegetation Index | RVI = NIR/Red | [68] |
| Water Adjusted Vegetation Index | WAVI = (1.5 × (NIR − Blue))/((NIR + Blue) + 0.5) | [66] |
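The vegetation indices tabulated above reduce to element-wise arithmetic on co-registered reflectance bands. A minimal NumPy sketch, assuming reflectance arrays of equal shape; the small `eps` added to denominators is an assumption for numerical safety over dark pixels (WAVI already has +0.5 in its denominator, so it is left unguarded), and `vegetation_indices` is a hypothetical helper.

```python
import numpy as np


def vegetation_indices(blue, green, red, red_edge, nir, eps=1e-10):
    """Compute the twelve tabulated indices from same-shaped reflectance arrays.

    Formulas follow the vegetation-index table; `eps` (assumption) keeps
    ratios finite where a denominator is zero.
    """
    b, g, r, re, n = (np.asarray(a, dtype=np.float64) for a in (blue, green, red, red_edge, nir))
    vis_nir = n + r + g  # shared denominator of NG, NR, NNIR
    return {
        "DVI": n - r,
        "GDVI": n - g,
        "GNDVI": (n - g) / (n + g + eps),
        "GRVI": n / (g + eps),
        "NDAVI": (n - b) / (n + b + eps),
        "NDVI": (n - r) / (n + r + eps),
        "NDRE": (n - re) / (n + re + eps),
        "NG": g / (vis_nir + eps),
        "NR": r / (vis_nir + eps),
        "NNIR": n / (vis_nir + eps),
        "RVI": n / (r + eps),
        "WAVI": 1.5 * (n - b) / (n + b + 0.5),
    }
```

Stacking the returned arrays with the five bands, for example `np.dstack([blue, green, red, red_edge, nir, *vegetation_indices(blue, green, red, red_edge, nir).values()])`, gives the multi-layer input from which principal components could then be derived.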

| Dataset | Backbone | Average Precision (%) | Average Recall (%) | Average F1-Score (%) | Average mIoU (%) |
|---|---|---|---|---|---|
| RGB | ResNet-50 | 73.96 | 80.84 | 74.36 | 91.26 |
| | ResNet-101 | 48.28 | 17.77 | 24.40 | 63.58 |
| | ResNeXt-101 | 82.39 | 87.65 | 83.46 | 92.18 |
| | Swin Transformer | 45.86 | 9.66 | 15.96 | 42.67 |
| Multispectral | ResNet-50 | 80.21 | 79.40 | 77.92 | 91.28 |
| | ResNet-101 | 73.26 | 45.04 | 53.25 | 84.13 |
| | ResNeXt-101 | 83.93 | 87.28 | 84.48 | 92.11 |
| | Swin Transformer | 75.23 | 56.59 | 60.42 | 88.02 |
| 3PCs | ResNet-50 | 75.67 | 61.97 | 66.77 | 82.20 |
| | ResNet-101 | 67.91 | 38.50 | 46.95 | 69.93 |
| | ResNeXt-101 | 82.88 | 87.58 | 83.67 | 92.85 |
| | Swin Transformer | 14.93 | 44.35 | 17.87 | 72.84 |

| Dataset | Backbone | Average Precision (%) | Average Recall (%) | Average F1-Score (%) | Average mIoU (%) |
|---|---|---|---|---|---|
| RGB | ResNet-50 | 78.54 | 85.65 | 80.53 | 90.68 |
| | ResNet-101 | 48.06 | 17.63 | 24.83 | 76.05 |
| | ResNeXt-101 | 82.71 | 87.00 | 83.64 | 92.23 |
| | Swin Transformer | 48.53 | 13.45 | 21.06 | 47.12 |
| Multispectral | ResNet-50 | 79.84 | 79.78 | 77.92 | 91.07 |
| | ResNet-101 | 68.22 | 34.83 | 43.37 | 82.02 |
| | ResNeXt-101 | 85.15 | 88.18 | 85.70 | 92.85 |
| | Swin Transformer | 75.55 | 62.06 | 63.94 | 86.21 |
| 3PCs | ResNet-50 | 75.98 | 73.01 | 73.78 | 88.86 |
| | ResNet-101 | 73.63 | 58.69 | 64.42 | 87.55 |
| | ResNeXt-101 | 82.46 | 86.22 | 82.75 | 92.93 |
| | Swin Transformer | 54.52 | 22.91 | 31.25 | 84.10 |
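The results tables report an average mIoU, but no formula is given alongside them. One common reading, assumed here, is the mask IoU between each predicted crown and its matched ground-truth crown, averaged over matched pairs; how predictions are matched to ground truth (for example, at an IoU threshold of 0.5, as in mAP@50) is outside this minimal sketch, and both helper names are hypothetical.

```python
import numpy as np


def mask_iou(pred, gt):
    """Intersection over union of two same-shaped binary masks."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # convention: two empty masks agree perfectly
    return float(np.logical_and(pred, gt).sum() / union)


def mean_iou(pairs):
    """Average mask IoU over already-matched (prediction, ground truth) pairs."""
    return float(np.mean([mask_iou(p, g) for p, g in pairs]))
```

For instance, a predicted mask covering the top-left 2 × 2 pixels of a crown that actually spans the top two rows of a 4 × 4 tile overlaps 4 of 8 union pixels, giving an IoU of 0.5.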

| Loss Function | Prediction | Ground Truth: Healthy Trees | Ground Truth: Unhealthy Trees | Accuracy (%) |
|---|---|---|---|---|
| Cross Entropy | Healthy trees | 467 | 5 | 95.99 |
| | Unhealthy trees | 15 | 12 | |
| Focal | Healthy trees | 452 | 13 | 95.58 |
| | Unhealthy trees | 9 | 24 | |
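The accuracy column, and the macro F1-scores reported in the loss-function comparison that follows, can be recovered from these counts once the orientation is fixed (rows are predictions, columns are ground truth). A minimal sketch with a hypothetical helper `metrics_from_confusion`; the weighted-average F1 is left out because its weighting scheme is not stated here.

```python
import numpy as np


def metrics_from_confusion(cm):
    """Accuracy and per-class / macro F1 from a square confusion matrix.

    Layout follows the table: rows are predictions, columns are ground truth.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=1) - tp  # predicted as the class but belonging to another
    fn = cm.sum(axis=0) - tp  # belonging to the class but predicted as another
    f1 = 2 * tp / (2 * tp + fp + fn)
    return {"accuracy": tp.sum() / cm.sum(), "f1_per_class": f1, "macro_f1": f1.mean()}


cross_entropy = [[467, 5], [15, 12]]  # [healthy, unhealthy] predictions x ground truth
focal = [[452, 13], [9, 24]]
for name, cm in (("cross entropy", cross_entropy), ("focal", focal)):
    m = metrics_from_confusion(cm)
    print(f"{name}: accuracy={m['accuracy']:.2%}, macro F1={m['macro_f1']:.2%}")
# cross entropy: accuracy=95.99%, macro F1=76.22%
# focal: accuracy=95.58%, macro F1=83.10%
```

The per-class F1 values make the effect of the loss visible: the unhealthy-class F1 rises from about 0.55 with cross-entropy to about 0.69 with focal loss, while the healthy-class F1 stays near 0.98, which is why the macro average moves much more than overall accuracy.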

| Loss Function | Average Precision (%): Healthy Trees | Average Precision (%): Unhealthy Trees | mAP@50 (%) | Average F1-Score (%) | Macro F1-Score (%) | Weighted Average F1-Score (%) |
|---|---|---|---|---|---|---|
| Cross Entropy | 90.18 | 39.32 | 64.75 | 85.70 | 76.22 | 93.60 |
| Focal | 90.73 | 42.76 | 66.74 | 84.18 | 83.10 | 94.76 |
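The class-weights-plus-focal-loss setup compared above can be illustrated with a minimal NumPy sketch of a class-weighted softmax focal loss (the focal loss of Lin et al., 2017, with its α term generalized to per-class weights). Where exactly the loss replaces cross-entropy inside Mask R-CNN (for example, the box-classification head) and the authors' γ and weight values are not given here; `gamma=2.0` is only the common default from the focal-loss paper, and `weighted_focal_loss` is a hypothetical name.

```python
import numpy as np


def weighted_focal_loss(logits, targets, class_weights, gamma=2.0):
    """Mean class-weighted focal loss over N samples and K classes.

    logits: (N, K) raw scores; targets: (N,) integer labels;
    class_weights: (K,) per-class weights w. For each sample,
        loss = -w[y] * (1 - p_y) ** gamma * log(p_y),
    where p_y is the softmax probability of the true class.
    With gamma = 0 and unit weights this is ordinary cross-entropy.
    """
    logits = np.asarray(logits, dtype=np.float64)
    targets = np.asarray(targets, dtype=np.int64)
    # Log-softmax with the row maximum subtracted for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    log_pt = log_probs[np.arange(targets.size), targets]
    pt = np.exp(log_pt)
    w = np.asarray(class_weights, dtype=np.float64)[targets]
    # (1 - p_t) ** gamma shrinks the contribution of easy, well-classified samples.
    return float(np.mean(-w * (1.0 - pt) ** gamma * log_pt))
```

The two factors act differently: the class weight scales every unhealthy-tree sample up regardless of difficulty, while the focal term shrinks the many easy healthy-tree samples, so the rare class contributes a larger share of the gradient.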

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kaviani, M.; Leblon, B.; Akilan, T.; Amishev, D.; LaRocque, A.; Haddadi, A. Tree Health Assessment Using Mask R-CNN on UAV Multispectral Imagery over Apple Orchards. Remote Sens. 2025, 17, 3369. https://doi.org/10.3390/rs17193369
