Highlights

What are the main findings?

- A novel hybrid model for segmentation of remote sensing images is proposed.

- Our model achieves high-precision land-cover classification.

What is the implication of the main finding?

- Enables more reliable mapping of complex land-cover patterns.

- Provides a robust foundation for environmental monitoring and resource management.

Abstract

High-precision semantic segmentation of remote sensing imagery is crucial in geospatial analysis and plays an important role in fields such as urban governance, environmental monitoring, and natural resource management. However, when confronted with complex objects (such as winding roads and dispersed buildings), existing semantic segmentation methods still suffer from inadequate target recognition and weak multi-scale representation. This paper proposes a neural network model, LMVMamba (LoRA Multi-scale Vision Mamba), for semantic segmentation of remote sensing images. The model integrates the advantages of convolutional neural networks (CNNs), Transformers, and state-space models (Mamba) with a multi-scale feature fusion strategy, so that it simultaneously captures global contextual information and fine-grained local features. Specifically, in the encoder stage, the ResT Transformer serves as the backbone network and is adapted with a LoRA fine-tuning strategy that improves accuracy while training only the introduced low-rank matrix pairs. The extracted features are then passed to a U-shaped Mamba decoder. In this stage, a Multi-Scale Post Block (MPB), consisting of depthwise separable convolutions and residual connections, is placed after the VSS block to extract multi-scale features and enhance local detail extraction. Additionally, a Local Attention Supervision (LAS) module is added at the end of each decoder block. LAS integrates the SimAM attention mechanism, further enhancing the model’s multi-scale feature fusion and local detail segmentation capabilities. In extensive comparative experiments, LMVMamba achieves superior performance on the OpenEarthMap (mIoU 67.9%, OA 80.3%, mF1 80.5%) and LoveDA (mIoU 52.3%, OA 69.8%, mF1 68.0%) datasets, and ablation experiments validate the effectiveness of each module. These results indicate that LMVMamba is well suited to high-precision land-cover classification and provides an effective solution for precise semantic segmentation of high-resolution remote sensing imagery.

1. Introduction

Semantic segmentation of remote sensing images is crucial in geospatial analysis. This technique classifies each pixel of an image into a specific semantic category and thereby generates segmentation maps that carry semantic information [1]. It has many application areas and plays an important role in urban governance [2], environmental monitoring [3], disaster emergency response [4], and natural resource management [5]. Traditional semantic segmentation methods primarily depend on manual feature extraction, using spectral indices (e.g., NDVI) and texture descriptors (e.g., GLCM) to represent features of different categories. Classical machine learning models then assign category labels based on these features; common models include the support vector machine (SVM) [6], random forest (RF) [7], and the maximum likelihood classifier. However, these methods exhibit significant limitations. They rely on manual feature design based on specialized prior knowledge, which is both time-consuming and labor-intensive. When confronted with complex scenarios such as intricate urban development patterns, fragmented road networks, and finely divided green belts, they struggle to capture high-level semantic information [8]. Moreover, their processing workflow is complex and inefficient, with limited generalization capability [9]. These shortcomings prevent traditional semantic segmentation techniques from keeping up with the demands of large-scale remote sensing interpretation in terms of accuracy, efficiency, and adaptability.

In recent years, deep learning has been widely used in various fields, such as object detection and recognition [10], feature change detection [11], and environmental management [12]. In semantic segmentation of remote sensing images in particular, deep learning has made significant breakthroughs. Convolutional neural networks (CNNs) [13] not only overcome the limitations of traditional methods reliant on handcrafted features, but have also improved the recognition accuracy of multi-scale objects. CNN-based approaches such as DeepLabv3+ [14], A2FPN [15], and MANet [16] have all advanced remote sensing segmentation. However, when confronted with continuous objects, CNN-based methods still struggle to model long-range feature correlations, which frequently results in segmentation discontinuities. The Vision Transformer (ViT) [17] further enhances global context modeling, substantially boosting segmentation performance for long linear features such as rivers and roads [18]. Representative models such as DC-Swin [19], UNetFormer [20], and EMRT [21] have established Transformers as a significant force within the field. In addition, numerous studies [22,23] utilize complementary multi-modal images and construct dual-branch models that extract features from different modalities and fuse them to enhance segmentation. These investigations represent a highly promising research direction. Compared to multi-modal data, however, unimodal data are more readily available, and numerous open-source datasets already exist for researchers to use. Moreover, ViT’s global attention mechanism is effective but computationally heavy, hindering efficient processing of high-resolution imagery [24]. Additionally, limited labeled data constrain generalization, impeding cross-regional applications. These limitations originate from data and labeling challenges: remote sensing data acquisition is constrained by meteorological conditions and sensors, while labeling depends heavily on professional knowledge and is expensive. Further complexity arises because objects of the same class exhibit significant spectral and textural variations, while objects of different classes are easily confused [25]. Additionally, spatiotemporal dynamics (e.g., farmland cultivation and urban construction) heighten analytical complexity, so the recognition ability of deep learning remains insufficient.

In this context, through the innovative design of the state-space model (SSM), the Mamba model not only realizes long-range dependency modeling, but also reduces computational complexity [26]. Mamba can adjust its receptive field dynamically, capturing fine local features while efficiently modeling long-range spatial correlations between pixels [27]. For instance, when segmenting a winding road, Mamba can follow the road along its whole path, avoiding the segmentation breaks caused by limited receptive fields. Meanwhile, its linear-time scanning algorithm significantly boosts processing speed for high-resolution images while maintaining accuracy [28]. Methods based on Mamba, such as RS3Mamba [29], Efficient PyramidMamba [30], and RTMamba [31], have demonstrated significant potential in remote sensing segmentation. Although these advantages position Mamba as a promising solution to the current limitations of remote sensing image segmentation, the model still has drawbacks: it struggles with fine-grained tasks that require detailed processing [32], and its performance in precision-sensitive scenarios needs further improvement.

Hybrid architectures integrating Transformers, CNNs, and Mamba have emerged as a new trend in semantic segmentation of remote sensing imagery. However, existing approaches often lack targeted mechanisms to address the unique challenges of remote sensing imagery, such as the multi-scale distribution of ground objects, spectral confusion, and limited annotated data. Based on this analysis, this paper proposes a remote sensing semantic segmentation method based on ResT Transformer [33] and Mamba [26] modules, employing a multi-scale feature fusion strategy. This method combines the strengths of CNNs, Transformers, and Mamba with the following specific improvements. First, during the encoder stage of the ResT feature extraction, we employ a LoRA fine-tuning strategy [34] to train the model exclusively on introduced low-rank matrix pairs. This approach effectively mitigates overfitting risks arising from sparse annotated data. Secondly, we introduce a novel Multi-Scale Post Block (MPB) following the core Visual State Space (VSS Block) in the Mamba architecture. This block employs depthwise separable convolutions and residual cascades to efficiently extract multi-scale features from the model, enhancing local detail extraction post-VSS block. This significantly improves the performance on fine-grained objects. Finally, a Local Attention Supervision (LAS) module incorporating the SimAM attention mechanism [35] is introduced at the end of each decoder block. This design mitigates spectral confusion, enabling more precise boundary delineation and category discrimination. This hybrid encoder–decoder architecture leverages the strengths of ViT in global context representation and Mamba in efficient long-sequence processing, achieving an optimal balance between computational efficiency and the recovery of fine-grained features.

The main contributions of this study are summarized as follows:

- (1) An innovative hybrid model combining the ViT, Mamba, and CNN models is constructed for remote sensing semantic segmentation tasks. The model has the advantages of global modeling from ViT, long-sequence dependency processing from Mamba and local feature extraction from CNN. The performance of LMVMamba in terms of land-cover segmentation is superior to several state-of-the-art segmentation models.

- (2) A low-rank adaptation fine-tuning strategy is incorporated into the ResT encoder. This can preserve the capabilities of ViT and can enhance its capability for remote sensing segmentation tasks by updating a limited number of trainable parameters.

- (3) Two modules for multi-scale feature fusion, MPB and LAS, are designed. These modules collectively enhance local feature representation and enable more efficient fusion across multiple scales, which is particularly beneficial for remote sensing images rich in semantic information.

2. Related Works

2.1. Deep Learning Methods in Semantic Segmentation

In recent years, deep learning methods have achieved remarkable progress in remote sensing image semantic segmentation, owing to their powerful automated feature extraction and end-to-end modeling capabilities. For example, to enhance long-range dependency modeling, Deng et al. [36] proposed the joint CNN–ViT framework CTNet, which achieved accuracy rates of 95.49% and 97.70% on the NWPU-RESISC45 and AID datasets, respectively. However, this performance gain comes with a more complex model structure, increased computation, and reduced running speed. MANet [16] achieved high-precision semantic segmentation in remote sensing by proposing a kernel attention mechanism and employing ResNeXt-101, yet it still suffers from a large number of parameters and high computational complexity. Li et al. [15] proposed an innovative framework based on Feature Pyramid Networks (FPNs) and Attention Aggregation Modules (AAMs) to enhance multi-scale feature learning and address the inherent shortcomings of a traditional FPN in feature extraction and fusion. Fang et al. [37] developed a Contextual Representation Enhancement Network (CRENet), which aims to enhance the global and local context modeling capabilities in high-level features. Meng et al. [38] designed the category-guided Swin Transformer (CG-Swin), which further improved the Transformer and effectively enhanced segmentation accuracy. Compared to traditional global modeling approaches, the Criss-Cross Network (CCNet) developed by Huang et al. [39] and the UNet-based Transformer network (UNetFormer) proposed by Wang et al. [20] achieve a balance between accuracy and efficiency in semantic segmentation tasks. To fully leverage contextual information, Wang et al. [19] introduced the Swin Transformer as the backbone architecture and designed a novel decoder (DCFAM), enabling fine-grained segmentation of high-resolution remote sensing imagery. Although deep learning has made significant progress in semantic segmentation of remote sensing images, most studies have not explicitly addressed the effect of noise on model performance, and the feature extraction ability of the models needs further improvement. To address these issues, we use an improved Mamba model to further enhance feature extraction while suppressing the interference of noise on model performance.

2.2. Efficient Fine-Tuning with Low-Rank Adaptation

As deep learning models continue to grow, problems such as high training and computation costs and overfitting in data-scarce scenarios have emerged. To break through this bottleneck, parameter-efficient fine-tuning (PEFT) has become a hot research topic in recent years. Among PEFT techniques, Low-Rank Adaptation (LoRA) (Hu et al.) [34] is a widely used method. Compared with full-parameter fine-tuning, it freezes the pre-trained parameters and updates only low-rank adaptation matrices, which reduces the computational cost while maintaining accuracy and effectively suppresses overfitting. For example, Xue et al. [40] incorporated LoRA into the ViT encoder of the SAM model to achieve efficient fine-tuning and improve the accuracy of semantic segmentation of remote sensing images. Xu et al. [41] used LoRA to fine-tune only the optimization adapters, which reduced the number of trainable parameters and greatly improved the quality of code optimization. Similarly, Xiong et al. [42] used low-rank matrices to update a pre-trained model, fusing the model with plant protection knowledge and improving its generalization ability. LoRA thus plays an important role in different fields and has inspired a series of improvements. For instance, Hu et al. [43] proposed a structure-aware low-rank adaptation method called SaLoRA, in which the model automatically removes zero-rank components and learns how much rank to use for each incremental matrix. Generalized LoRA (Chavan et al.) [44] further improves LoRA by optimizing the pre-trained model weights and adjusting intermediate activations to enable more flexible parameter tuning. Huang et al. [45] introduced the LoraHub framework to achieve cross-task generalization and improve the model’s adaptation to new tasks. Although these studies confirmed the effectiveness of LoRA, its applications are still not fully explored, especially in semantic segmentation of remote sensing images. In light of these approaches, we propose an architecture that combines Mamba with LoRA. The proposed architecture aims to exploit the synergy between the two to fine-tune the larger model and improve its performance without heavy modifications.

2.3. Mamba Models

Mamba has great application value in semantic segmentation because it establishes long-range dependencies while maintaining linear computational complexity (Zhu et al.) [26]. Building on this, researchers have fused Mamba with different modules to further enhance segmentation performance. For example, Ma et al. [29] proposed a novel dual-branch network, RS3Mamba, which employs VSS blocks to address long-range modeling challenges and introduces a CCM module to enhance feature fusion between the dual encoders. To strengthen multi-scale feature representation, Wang et al. [30] constructed a novel segmentation network termed PyramidMamba, which achieved state-of-the-art performance across three datasets: OpenEarthMap, Vaihingen, and Potsdam. To balance computational efficiency and segmentation accuracy, the multimodal fusion network MFMamba (Wang et al.) [46] and the efficient UNet-like model UNetMamba (Zhu et al.) [47] both further improve Mamba, increasing segmentation accuracy while reducing computational complexity. By introducing a foreground-aware mechanism and a semantic flow aggregation module, the SF-Mamba model of Li et al. [48] improves the semantic segmentation performance on remote sensing images and confirms the model’s advantage in dealing with small targets. To achieve real-time semantic segmentation of remote sensing images, Ding et al. [31] introduced RTMamba, which uses Visual State Space blocks to extract deep features while filtering redundant information through the Inverted Triangle Pyramid Pooling module. This approach demonstrates the potential of Mamba for real-time segmentation. Mu et al. [49] developed a new model named PPMamba based on the Mamba architecture, which fuses Resblock and PPMamba blocks in an encoder–decoder framework to achieve powerful global modeling. Du et al. [50] aimed at a lightweight model and therefore constructed the hybrid ECMNet (Efficient CNN-Mamba Network), which shows clear advantages in lightweight semantic segmentation tasks through an enhanced dual-attention module and multi-scale attention units. Although the above studies have improved Mamba, most of them still handle fine details poorly. In this paper, we add a multi-scale feature fusion module after the VSS layer to improve segmentation performance.

3. Method

In this section, we first give an overview of the proposed model and then describe in detail the improved modules that contribute to the network’s performance.

3.1. Overview

In this study, a novel hybrid LMVMamba is proposed to address the deficiencies of current remote sensing image processing techniques. The proposed model addresses the challenges posed by the weak global modeling capability, the difficulty of identifying fine targets, and the limitations in multi-scale feature extraction during the classification process. The model aims to facilitate high-precision and automated classification of feature types in remote sensing images, thus contributing to the enhancement of image analysis and interpretation capabilities.

As Figure 1 shows, LMVMamba adopts a U-shaped network structure that progressively downsamples the input to extract features at different scales. At the encoder stage, we adopt ResT as the core architecture, leveraging its robust global context modeling capabilities to establish a stable and semantically rich feature foundation for the model. High-resolution remote sensing imagery contains extensive terrain feature information, and many features, such as roads, rivers, and coastlines, exhibit strong continuity. Segmenting these features well requires long-range modeling, and relying solely on Transformers proves insufficient for capturing their dependencies effectively. Therefore, at the decoder stage, we introduce the Mamba module, whose linear computational complexity allows the model to capture long-range dependencies within high-resolution feature maps efficiently. Moreover, LoRA is embedded into each ResT block to fine-tune the model and enhance its ability to acquire high-level semantic features. The PEVP Block in the decoder consists of a Patch Expanding module, two VSS Blocks, and a Post Block, and it extracts local semantic features while efficiently modeling long-range dependencies. Specifically, within each block, the Patch Expanding module first upsamples the feature maps derived from the encoder to a higher spatial resolution. The expanded features are then fed into the Visual State Space (VSS) blocks to capture long-range dependencies. Subsequently, the Multi-scale Post Block (MPB) enhances local feature extraction and multi-scale representations through depthwise separable convolutions and residual connections. In the final stage of the model, a Local Attention Supervision (LAS) module with an attention mechanism is incorporated as an auxiliary branch. This module enhances the model’s capacity to segment small targets, thereby improving segmentation accuracy.

Figure 1. Overall structure of LMVMamba.

3.2. LoRA Fine-Tuning Technology

The ResT backbone models long-range relationships for global information within images and thus serves as a fundamental component within the Vision Transformer framework. However, when confronted with information-rich remote sensing imagery, its feature extraction capabilities face certain limitations. The extensive parameterization of ResT renders the model susceptible to overfitting during training, and end-to-end training of the full ResT Transformer incurs substantial computational and memory costs. LoRA confines updates to a low-rank space, which helps prevent overfitting, enhances the model’s generalization ability, and reduces the training expense. Moreover, the attention layers within ResT may exhibit insufficient sensitivity towards complex features or small objects, which impairs the model’s ability to represent such features and diminishes overall segmentation accuracy. Consequently, our selection of LoRA does not signify abandoning full-parameter training capabilities, but rather stems from a comprehensive consideration of efficiency, generalizability, and precision.

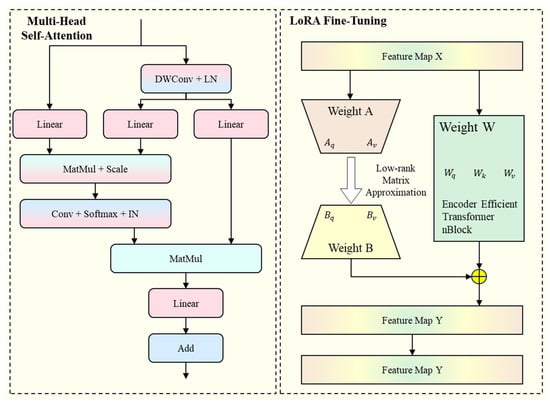

The multi-head self-attention in ResT splits the features into several heads and processes them in parallel to capture the spatial relationships among them; its structure is shown in Figure 2. In this study, we introduce LoRA fine-tuning into the multi-head self-attention of the ResT backbone to improve the precision of semantic segmentation on remote sensing images. As illustrated in Figure 2, the LoRA strategy freezes the original weight matrix during training so that the original model’s predictions are preserved. Instead of updating the original weight matrix directly, the weight update is expressed as the product of two low-rank matrices $A$ and $B$, and only these two small matrices are trained, which enhances the model’s accuracy while preserving the original performance. Specifically, the input feature map $X$ generates the query ($Q$), key ($K$), and value ($V$) through linear layers, and the LoRA layer adds the low-rank matrices $A$ and $B$ to each of these projections. In the original linear layer, the weight matrix $W$ transforms the input features $X$ into the output $Y$ according to the following linear function:

$$Y = XW$$

where $X \in \mathbb{R}^{N \times C_{in}}$, $W \in \mathbb{R}^{C_{in} \times C_{out}}$, and $Y \in \mathbb{R}^{N \times C_{out}}$. $C_{in}$ is the dimension of the input token, $C_{out}$ is the dimension of the output token, and $N$ denotes the number of training samples. LoRA updates the weight matrix $W$ into $\hat{W}$ via $A$ and $B$ as follows:

$$\hat{W} = W + \Delta W = W + AB$$

where $\Delta W$ denotes the weight increment, which is the product of $A \in \mathbb{R}^{C_{in} \times r}$ and $B \in \mathbb{R}^{r \times C_{out}}$ with rank $r \ll \min(C_{in}, C_{out})$. In our LMVMamba model, we apply LoRA to the multi-head self-attention component of the ResT backbone. The calculation of multi-head self-attention can be articulated as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $d_k$ is the dimension of each attention head.

Figure 2. Overview of the LoRA fine-tuning strategy.

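LoRA is applied to the Q, K, and V projections of the multi-head self-attention, which are calculated as follows:

$$Q = X(W_q + A_q B_q), \quad K = X(W_k + A_k B_k), \quad V = X(W_v + A_v B_v)$$

where $W_q$, $W_k$, and $W_v$ are the linear projection weights of the Q, K, and V matrices, respectively, which remain frozen during training, and $A_q, B_q$, $A_k, B_k$, and $A_v, B_v$ are the corresponding trainable low-rank matrix pairs.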
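To make the parameter saving concrete, the following minimal sketch implements a single LoRA-adapted linear projection in plain Python. It is an illustration only, not the implementation used in LMVMamba: the class name `LoRALinear`, the layer width (64), the rank (4), and the initialization scales are assumptions chosen for the example. One factor is zero-initialized so that the low-rank update starts at zero and the adapted layer initially reproduces the frozen projection.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


class LoRALinear:
    """Frozen weight W (c_in x c_out) plus a trainable low-rank update A @ B."""

    def __init__(self, c_in, c_out, rank, seed=0):
        rng = random.Random(seed)
        # Frozen projection weight (hypothetical random values for the demo).
        self.W = [[rng.gauss(0, 0.02) for _ in range(c_out)] for _ in range(c_in)]
        # Trainable low-rank factors; B starts at zero so A @ B = 0 initially.
        self.A = [[rng.gauss(0, 0.02) for _ in range(rank)] for _ in range(c_in)]
        self.B = [[0.0] * c_out for _ in range(rank)]

    def forward(self, x):
        # Y = X (W + A B), computed as X W + (X A) B so the full
        # c_in x c_out update matrix is never materialized.
        return add(matmul(x, self.W), matmul(matmul(x, self.A), self.B))

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def frozen_params(self):
        return len(self.W) * len(self.W[0])


layer = LoRALinear(c_in=64, c_out=64, rank=4)
x = [[1.0] * 64 for _ in range(2)]  # two tokens of width 64
y = layer.forward(x)
print(layer.trainable_params(), layer.frozen_params())  # 512 4096
```

With these illustrative sizes, the two low-rank factors hold 512 trainable values against 4096 frozen ones, i.e., one eighth of the projection's parameters.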

3.3. Multi-Scale Post Block

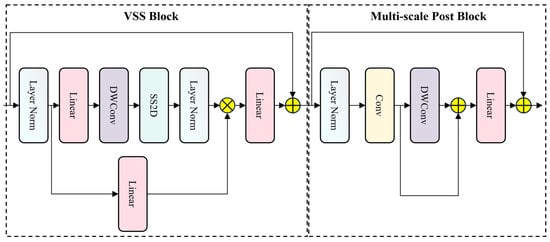

The ResT encoder provides effective target feature extraction, and the incorporation of LoRA further enhances the model’s accuracy. In addition, we introduce the VSS block from the Mamba model into the decoder to enhance the model’s long-range modeling capabilities. However, the Transformer mainly focuses on global features and is therefore not sensitive to texture, shape, and edge features. High-resolution remote sensing images contain a large number of small targets, which makes accurate segmentation difficult. To address this limitation, we propose a Multi-scale Post Block that complements the global context captured by the Transformer and Mamba with local detail information from a CNN. The block is designed to enhance model stability and local detail extraction, primarily through depthwise separable convolution and residual connections. The Multi-scale Post Block, together with the Patch Expanding module and two VSS Blocks, constitutes the PEVP module.

The structure of the VSS block and the MPB is shown in Figure 3. Features are modeled over long distances by SS2D in the VSS block. Subsequent to the VSS block, the pointwise convolution initiates the compression of features in the channel dimension. A depthwise separable convolution is then employed to facilitate the efficient extraction of local features while minimizing the number of parameters. The input and output of the DWConv are fused through a residual connection, while a GELU activation function is employed to enhance the model’s capacity to learn and represent complex nonlinear mapping relationships. To further enhance small targets segmentation, channel-wise feature fusion is performed by a pointwise convolution, followed by a residual integration to preserve detailed representation. Moreover, the residual connections in this block have potential to address the issue of gradient vanishing, enabling the model to be stable while training.

Figure 3. Structure of proposed VSS block and Multi-Scale Post Block.

In the MPB, the model achieves multi-scale feature extraction, with a particular emphasis on enhancing the focus on small, localized targets. This allows the model to have both the global perception of the Transformer and the VSS blocks, while concurrently exhibiting the capability to discern edges, small targets, and details.
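The parameter saving of the depthwise separable convolution used in the MPB can be checked with simple arithmetic. The sketch below compares the parameter count of a standard convolution with that of a depthwise convolution followed by a pointwise convolution; the channel width (96) and kernel size (3) are hypothetical values chosen for illustration and are not taken from the LMVMamba configuration.

```python
def conv_params(c_in, c_out, k, bias=False):
    """Parameter count of a standard k x k convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def depthwise_separable_params(c_in, c_out, k, bias=False):
    """Depthwise k x k convolution (one filter per input channel)
    followed by a 1 x 1 pointwise convolution."""
    depthwise = c_in * k * k + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


c, k = 96, 3  # hypothetical channel width and kernel size
standard = conv_params(c, c, k)
separable = depthwise_separable_params(c, c, k)
print(standard, separable)  # 82944 10080
```

For these sizes the separable variant needs roughly one eighth of the parameters of the standard convolution, which is why it suits a lightweight post-processing block.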

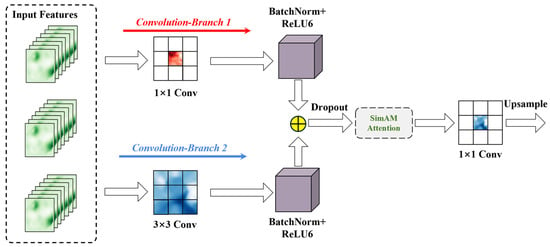

3.4. Local Attention Supervision

After the improved PEVP block, the feature map initially integrates information at different scales effectively, facilitating the fusion of multi-level information. The SS2D with a large receptive field in the VSS block significantly enhances the global contextual modeling capability of the model. However, high-resolution remote sensing images frequently contain a plethora of intricate details that are pivotal in determining the precision of semantic segmentation models. Consequently, we propose the LAS module to further enhance the model’s segmentation ability for local details. The structure of the LAS module is demonstrated in Figure 4.

Figure 4. Structure of proposed LAS module.

In the LAS module, the input passes through two parallel convolution branches with kernel sizes of 1 and 3, respectively. Such small kernels are well suited to extracting features of tiny-scale targets in remote sensing images. Each convolution is followed by a batch normalization layer and a ReLU6 activation function. The outputs of the two branches are then fused, as described by the following equations:

$$F_1 = \mathrm{ReLU6}(\mathrm{BN}(\mathrm{Conv}_{1\times1}(X)))$$

$$F_3 = \mathrm{ReLU6}(\mathrm{BN}(\mathrm{Conv}_{3\times3}(X)))$$

$$F = F_1 + F_3$$

where $\mathrm{Conv}_{1\times1}$ and $\mathrm{Conv}_{3\times3}$ denote convolution with kernel sizes 1 and 3, respectively, and $F$ is the sum of the two branches’ outputs. To further enhance the feature representation capability of the model, the SimAM attention mechanism is introduced in the module. SimAM dynamically adjusts the importance of the features and emphasizes key feature information through an energy function, without adding any parameters. The structure of SimAM is illustrated in Figure 5.

Figure 5. Structure of SimAM attention mechanism.
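As a minimal sketch of how a parameter-free energy function can reweight features, the code below applies the commonly published SimAM formulation to a single channel in plain Python. The regularization constant `lam` (1e-4) and the toy 3 × 3 input are assumptions for illustration; how SimAM is configured inside LAS is not specified here.

```python
import math


def simam(channel, lam=1e-4):
    """Apply SimAM to one channel given as a 2-D list (H x W, at least 2 values)."""
    values = [v for row in channel for v in row]
    n = len(values) - 1  # number of neurons other than the target one
    mean = sum(values) / len(values)
    # Squared deviation of every position from the channel mean.
    sq_dev = [[(v - mean) ** 2 for v in row] for row in channel]
    variance = sum(d for row in sq_dev for d in row) / n
    out = []
    for row, dev_row in zip(channel, sq_dev):
        out_row = []
        for v, d in zip(row, dev_row):
            # Inverse energy: larger for positions that stand out from the rest.
            inv_energy = d / (4 * (variance + lam)) + 0.5
            # Reweight the activation with sigmoid(inv_energy).
            out_row.append(v / (1 + math.exp(-inv_energy)))
        out.append(out_row)
    return out


# Toy channel: one salient value on a flat background.
toy = [[1.0, 1.0, 1.0], [1.0, 9.0, 1.0], [1.0, 1.0, 1.0]]
weighted = simam(toy)
print(weighted[1][1] / 9.0 > weighted[0][0] / 1.0)  # True: the salient position gets the larger weight
```

The position that differs most from its neighbours receives the largest attention weight, which is the behaviour that helps emphasize small, distinctive targets.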

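Finally, a 1 × 1 convolution maps the channel dimension of the feature map to the number of categories, and upsampling scales the feature map to the same size as the input image. This process can be represented by the following equation:

$$Y_{\mathrm{LAS}} = \mathrm{Upsample}(\mathrm{Conv}_{1\times1}(\mathrm{SimAM}(F)))$$

where $F$ is the fused output of the two convolution branches and $Y_{\mathrm{LAS}}$ is the output of the LAS module.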

4. Experimental Results

4.1. Experimental Dataset Description

To validate the effectiveness of the proposed LMVMamba model, we used two publicly available remote sensing semantic segmentation datasets in this study: the OpenEarthMap dataset [51] and the LoveDA dataset [52]. Their details are as follows:

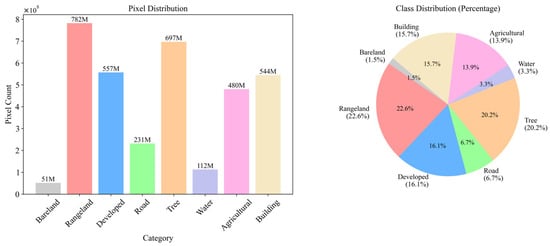

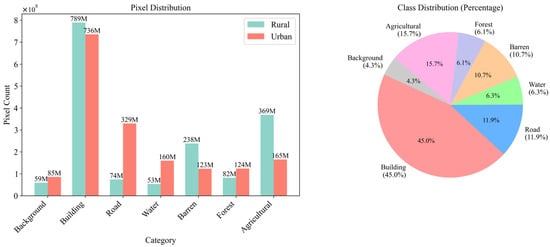

OpenEarthMap: The OpenEarthMap dataset comprises 5000 images captured from aerial photography and satellite imagery, encompassing 97 regions across 44 nations on six continents. The resolution of the images in this dataset is 0.25–0.5 m. Since all images in the dataset have a 1:1 aspect ratio but differ in size, we uniformly resized them to 1024 × 1024 pixels. The dataset contains eight land-cover categories: bareland, rangeland, developed space, road, tree, water, agriculture land and building. The total count and percentage of pixels in each category are shown in Figure 6. We split the OpenEarthMap dataset in accordance with the stipulated guidelines: the training, validation, and testing subsets contain 3000, 500 and 1500 images, respectively.

Figure 6. Details of the OpenEarthMap dataset.

LoveDA: The LoveDA dataset contains 5987 rural and urban high-resolution remote sensing images captured in China. The resolution of the images in this dataset is 0.3 m. The dataset contains seven semantic categories: background, building, road, water, barren, forest, and agriculture. The dataset is notable for its substantial quantity of multi-scale objects, complex background samples, and inconsistent sample distribution, which collectively present significant challenges to semantic segmentation models. The LoveDA dataset emphasizes the difference between urban and rural areas; the count and percentage of pixels in each category are shown in Figure 7. The dataset is split into 2522 training, 1669 validation and 1796 testing images. A detailed overview of the division of the two datasets is shown in Table 1.

Figure 7. Details of the LoveDA dataset.

Table 1. Distribution of datasets.

The experiments were conducted using PyTorch 2.1.0 and CUDA 11.8 on an NVIDIA RTX 4080 GPU. No pre-trained weights were employed, and all models were trained from scratch. The models were optimized using the AdamW optimizer and trained for 100 epochs with a batch size of four.

4.2. Evaluation Metrics

In this study, we use mean intersection over union (mIoU), mean F1 score (mF1), and overall accuracy (OA) as evaluation metrics for remote sensing semantic segmentation, employing them to assess the performance of the models across a range of scenarios. All the evaluation metrics are shown in Table 2. In Table 2, $TP_i$ refers to the number of correctly identified positive samples in category $i$, $FP_i$ refers to the number of negative samples incorrectly identified as positive in category $i$, and $FN_i$ refers to the number of positive samples of category $i$ that were missed.

Table 2.

Definitions of evaluation metrics.

The mIoU is defined as the mean of the degree of overlap between the predicted and actual labels, reflecting the model’s segmentation performance across all categories. The mIoU metric is defined mathematically as follows:

The mF1 represents a comprehensive evaluation metric that considers both precision and recall simultaneously, offering a rigorous assessment of a model’s performance in segmentation tasks. The mF1 can be determined by the following formula

The OA is defined as the proportion of correctly classified pixels across all pixels in the dataset. The definition of OA is as follows:
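All three metrics can be derived from a single confusion matrix. The following Python snippet is a minimal sketch of that computation, not the evaluation code used in the experiments; the function name `segmentation_metrics` and the three-class toy matrix are illustrative assumptions.

```python
def segmentation_metrics(conf):
    """Compute mIoU, mF1 and OA from a square confusion matrix.

    conf[i][j] is the number of pixels whose true class is i and whose
    predicted class is j (rows: ground truth, columns: prediction).
    """
    n = len(conf)
    total = sum(sum(row) for row in conf)
    ious, f1s, correct = [], [], 0
    for k in range(n):
        tp = conf[k][k]
        fn = sum(conf[k]) - tp                       # true class k, predicted as another class
        fp = sum(conf[i][k] for i in range(n)) - tp  # predicted k, true class differs
        correct += tp
        iou_den = tp + fp + fn
        f1_den = 2 * tp + fp + fn                    # F1 = 2PR / (P + R) = 2TP / (2TP + FP + FN)
        ious.append(tp / iou_den if iou_den else 0.0)
        f1s.append(2 * tp / f1_den if f1_den else 0.0)
    return {
        "mIoU": sum(ious) / n,
        "mF1": sum(f1s) / n,
        "OA": correct / total if total else 0.0,
    }


# Hypothetical 3-class confusion matrix (100 pixels in total).
toy = [
    [50, 2, 3],
    [4, 30, 1],
    [6, 0, 4],
]
metrics = segmentation_metrics(toy)
print({name: round(value, 4) for name, value in metrics.items()})
```

In the toy matrix the third class is rare and poorly segmented, so it lowers mIoU and mF1 far more than OA, which is why the class-averaged metrics are reported alongside OA.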

4.3. Comparison Results

To evaluate the performance of the proposed LMVMamba model against established models, comparative experiments were conducted on the OpenEarthMap and LoveDA datasets. We selected several types of semantic segmentation models: (1) CNN-based models, MANet [16] and A2FPN [15]; (2) Transformer-based models, DC-Swin [19] and UNetFormer [20]; and (3) Mamba-based models, RS3Mamba [29] and PyramidMamba [30]. For a fair comparison, all models were trained with the same loss functions. Specifically, a joint loss comprising SoftCrossEntropy and Dice is used as the primary loss function, and SoftCrossEntropy is used as the auxiliary loss function.
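To make the joint loss concrete, the sketch below combines a label-smoothed cross-entropy term with a soft Dice term on a handful of pixels. It is an illustration under stated assumptions rather than the training code: it assumes that SoftCrossEntropy denotes cross-entropy with label smoothing, that the two terms are summed with equal weight, and that the smoothing factor is 0.05. None of these values are given in the text, and the auxiliary loss is not covered.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one pixel's class logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


def soft_cross_entropy(probs, labels, smooth=0.05):
    """Label-smoothed cross-entropy averaged over pixels (smooth is assumed)."""
    n_cls = len(probs[0])
    loss = 0.0
    for p, y in zip(probs, labels):
        for c in range(n_cls):
            # Smoothed target: (1 - smooth) on the true class plus smooth / n_cls everywhere.
            target = ((1.0 - smooth) if c == y else 0.0) + smooth / n_cls
            loss -= target * math.log(p[c] + 1e-12)
    return loss / len(probs)


def dice_loss(probs, labels, eps=1e-7):
    """Soft Dice loss averaged over the classes present in the labels."""
    n_cls = len(probs[0])
    losses = []
    for c in range(n_cls):
        true_sum = sum(1 for y in labels if y == c)
        if true_sum == 0:
            continue  # skip classes absent from the ground truth
        inter = sum(p[c] for p, y in zip(probs, labels) if y == c)
        pred_sum = sum(p[c] for p in probs)
        losses.append(1.0 - (2.0 * inter + eps) / (pred_sum + true_sum + eps))
    return sum(losses) / len(losses)


def joint_loss(probs, labels):
    """Equal-weight sum of the two terms (the weighting is an assumption)."""
    return soft_cross_entropy(probs, labels) + dice_loss(probs, labels)


# Four hypothetical pixels with three classes each.
logits = [[4.0, 0.5, 0.1], [0.2, 3.5, 0.3], [0.1, 0.4, 5.0], [2.5, 2.0, 0.1]]
labels = [0, 1, 2, 1]
probs = [softmax(row) for row in logits]
loss = joint_loss(probs, labels)
print(f"joint loss: {loss:.4f}")
```

The cross-entropy term penalizes each pixel independently, while the Dice term measures region overlap per class, so the combination is less dominated by large classes than cross-entropy alone.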

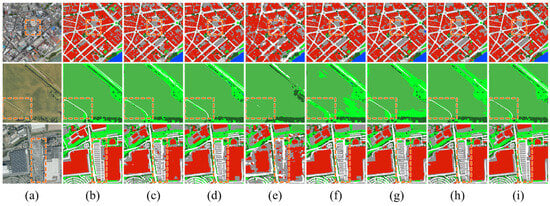

We compare the performance of LMVMamba against the six selected models. As can be seen in Table 3, LMVMamba outperformed all other models in overall performance on the OpenEarthMap dataset, achieving the highest mIoU (67.9%), OA (80.3%), and mF1 (80.5%). Furthermore, our model attained the highest accuracy in every category. The CNN-based models, which are suited to extracting local features, and the Transformer- and Mamba-based models, which are adept at global modeling, all underperformed compared to the proposed model. This finding suggests that LMVMamba strikes a good balance between fine detail and global feature extraction. The qualitative comparison on the OpenEarthMap dataset is illustrated in Figure 8, which shows that LMVMamba provided more accurate segmentation results than the other methods, with better detail and boundary extraction.

Table 3.

Results of comparison experiments on the OpenEarthMap dataset. The best result is shown in bold.

Figure 8.

Visualization of comparison experiment results on the OpenEarthMap dataset. (a) Original Image; (b) Groundtruth; (c) MANet; (d) A2FPN; (e) DC-Swin; (f) UNetFormer; (g) Efficient PyramidMamba; (h) RS3Mamba; and (i) Proposed LMVMamba. Colors represent different land-cover categories: dark red for bareland, bright green for rangeland, gray for developed space, white for roads, dark green for trees, blue for water, light green for agricultural land, and orange-red for buildings. The red box is used to highlight areas requiring particular emphasis.

Table 4 presents the results of the comparative experiments on the LoveDA dataset. LMVMamba achieved the best overall performance, with 52.3%, 69.8%, and 68.0% in mIoU, OA, and mF1, respectively. These values are higher than those of all other models. Notably, the mIoU is 3.5 percentage points above the second-best result (48.8%, achieved by MANet and RS3Mamba), the OA is 1.7 percentage points ahead of the second-best model (68.1%, MANet), and the mF1 is 3.2 percentage points above the second-best result (64.8%, MANet).

Table 4.

Results of comparison experiments on the LoveDA dataset. The best result is shown in bold.

Our model also demonstrates a clear advantage in individual categories, achieving the highest segmentation accuracy in the background, road, water, barren, and forest categories. In the barren category, the proposed model improved by 21% compared to A2FPN. LMVMamba trailed the best model (MANet) by only 0.1% and 2.9% in the building and agriculture categories, respectively. Nevertheless, its higher mF1 indicates that our model performs more consistently across land-cover categories. The visualization of the comparative results is shown in Figure 9.

Figure 9.

Visualization of comparison experiment results on the LoveDA dataset. (a) Original Image; (b) Groundtruth; (c) MANet; (d) A2FPN; (e) DC-Swin; (f) UNetFormer; (g) Efficient PyramidMamba; (h) RS3Mamba; (i) Proposed LMVMamba. Colors represent different land-cover categories: white for background, red for buildings, yellow for roads, blue for water, purple for barren land, green for forest, and orange for agricultural land. The red box is used to highlight areas requiring particular emphasis.

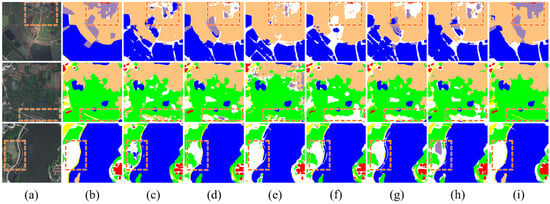

We selected a complex image from OpenEarthMap to test the performance of the proposed model in intricate scenarios. The image was taken in Dortmund, in western Germany, and contains a variety of terrain features, including densely packed buildings, intersecting roads, and extensive urban greenery. These features present considerable challenges for segmentation.

In remote sensing imagery, linear features such as roads, rivers, and coastlines exhibit strong spatial continuity, extending and connecting across the landscape. During segmentation, it is essential that such continuous features are not broken into isolated points or patches. As shown in Figure 10, most methods produce noticeable discontinuities in road segmentation, with roads appearing broken in the segmented output. The proposed method preserves road geometry even in the presence of substantial urban greenery and building interference.

Figure 10.

Visualization of comparison experiment results on a complex scene. (a) Original Image; (b) Groundtruth; (c) MANet; (d) A2FPN; (e) DC-Swin; (f) UNetFormer; (g) Efficient PyramidMamba; (h) RS3Mamba; and (i) Proposed LMVMamba. Colors represent different land-cover categories: white for background, red for buildings, yellow for roads, blue for water, purple for barren land, green for forest, and orange for agricultural land. The red box is used to highlight areas requiring particular emphasis.

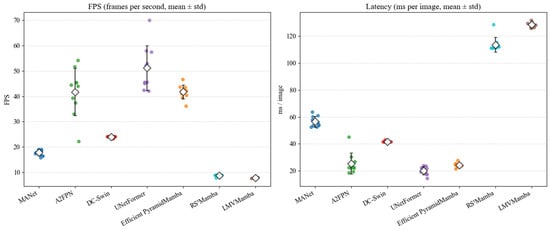

To compare the inference speed of LMVMamba with that of the other models, we measured frames per second (FPS) and latency. For each model, ten separate inference runs were performed, and the FPS and latency of each run were recorded. The means and standard deviations were then calculated to assess the stability of each model’s inference speed.
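The measurement protocol can be sketched as follows. This is a simplified illustration rather than the benchmarking script used in the experiments: `benchmark` and `dummy_model` are hypothetical names, and the stand-in model runs on the CPU. On a GPU, warm-up iterations and device synchronization would normally be needed before reading the clock.

```python
import statistics
import time


def benchmark(infer, image, runs=10):
    """Time `runs` single-image inferences and summarize latency (ms) and FPS."""
    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(image)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    fps = [1000.0 / ms for ms in latencies_ms]  # one image per run
    return {
        "latency_mean_ms": statistics.mean(latencies_ms),
        "latency_std_ms": statistics.stdev(latencies_ms),
        "fps_mean": statistics.mean(fps),
        "fps_std": statistics.stdev(fps),
    }


def dummy_model(image):
    # Hypothetical stand-in for a segmentation network's forward pass.
    return [[sum(pixel) for pixel in row] for row in image]


# Small synthetic RGB image used only to exercise the timing loop.
image = [[(x % 256, y % 256, 0) for x in range(64)] for y in range(64)]
stats = benchmark(dummy_model, image)
for name, value in stats.items():
    print(f"{name}: {value:.3f}")
```

Reporting the standard deviation alongside the mean is what allows the stability of each model's inference speed to be compared, not only its average speed.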

As shown in Figure 11, the proposed model was slower than the alternative models, achieving an average of approximately 7.8 FPS across the ten inference runs, with a latency of around 128 ms per image. However, it showed robust computational stability, with minimal variation in inference speed across runs. UNetFormer and A2FPN were faster owing to their model designs, achieving average speeds of over 50 FPS and 40 FPS, respectively. However, their inference speeds vary considerably across runs, indicating insufficient stability. RS3Mamba’s inference speed is comparable to that of the proposed model.

Figure 11.

Comparison of FPS and latency (ms per image) for different models.

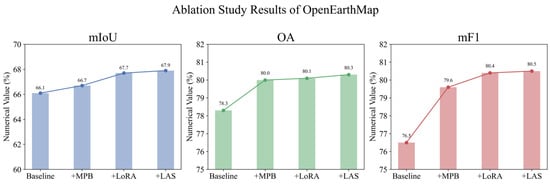

4.4. Ablation Experiments and Results

In this study, the effectiveness of each proposed module was evaluated through ablation experiments on the OpenEarthMap and LoveDA datasets. In the ablation experiments, we added the MPB, LoRA, and LAS modules to the baseline model one at a time and validated each configuration experimentally.

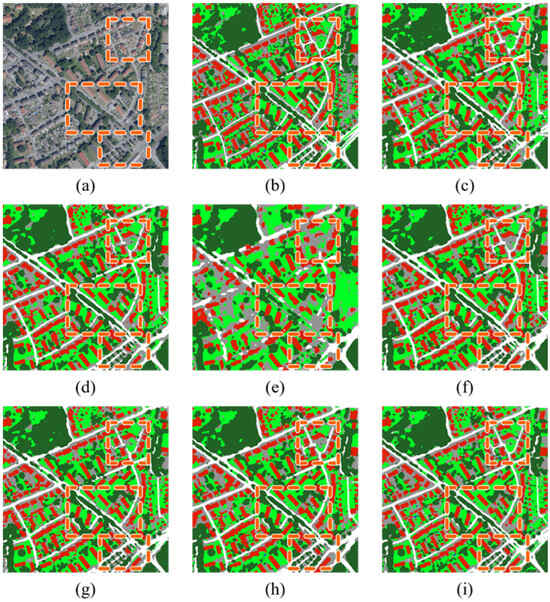

Table 5 presents the results of the ablation experiments conducted with LMVMamba on the OpenEarthMap dataset, while Figure 12 visualizes these results. When only the MPB module is added to the baseline model, the mIoU, OA, and mF1 increase by 0.6%, 1.7%, and 3.1%, reaching 66.7%, 80.0%, and 79.6%, respectively. All segmentation metrics improve further after adding the LoRA fine-tuning strategy. With the incorporation of the LAS module, the final mIoU, OA, and mF1 improve by 1.8% (from 66.1% to 67.9%), 2.0% (from 78.3% to 80.3%), and 4.0% (from 76.5% to 80.5%) compared to the baseline model.

Table 5.

Ablation experiments on the OpenEarthMap dataset. ✓ indicates that this module has been utilised. The best result is shown in bold.

Figure 12.

Visualization of ablation experiment results on the OpenEarthMap dataset.

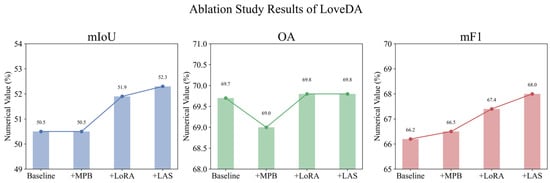

Table 6 presents the results of the ablation experiments on the LoveDA dataset, and Figure 13 visualizes these results. On this dataset, adding the MPB module to the baseline model does not significantly improve segmentation performance: the mF1 increases by 0.3%, but the mIoU remains unchanged and the OA drops by 0.7%. When both MPB and LoRA are incorporated, mIoU improves by 1.4% (from 50.5% to 51.9%), OA rises to 69.8%, and mF1 increases by 1.2% to 67.4%. With all modules added, mIoU and mF1 each increase by 1.8% over the baseline.

Table 6.

Ablation experiments on the LoveDA dataset. ✓ indicates that this module has been utilised. The best result is shown in bold.

Figure 13.

Visualization of ablation experiment results on the LoveDA dataset.

In summary, the ablation experiments demonstrate the effectiveness of the LMVMamba modules, which contribute performance gains in remote sensing semantic segmentation across both datasets.

5. Discussion and Future Work

This paper proposes a hybrid U-shaped network, LMVMamba, for semantic segmentation of remote sensing imagery. The model employs ResT as the backbone network of its encoder, integrated with a Mamba-based decoder. We designed the MPB and LAS modules to enhance local multi-scale feature extraction while preserving the model’s inherent global modeling capabilities, and adopted a LoRA fine-tuning strategy to optimize model performance and reduce training costs. Through the combined effect of these designs, LMVMamba demonstrated superior performance on the OpenEarthMap and LoveDA datasets, providing an effective solution for high-precision land-cover classification. First, the combination of the ResT encoder and LoRA reduces training costs while mitigating overfitting in the small-sample scenarios typical of remote sensing. Second, the MPB module compensates for Mamba’s limitations in capturing local details, improving segmentation accuracy for small objects such as scattered buildings. Third, the integration of the LAS module with SimAM attention alleviates the challenge of spectral confusion in remote sensing imagery. Moreover, in experiments on complex scenes, our model produced robust segmentation results and maintained excellent road continuity, which stems from the long-range modeling capabilities of the Transformer and Mamba architectures.

Despite its strong performance in remote sensing image segmentation, LMVMamba still has some limitations. The present study does not address lightweighting techniques for the model, which limits its application to real-time tasks: in the inference-speed experiments, our model exhibited lower FPS than the other models, although with greater stability. In addition, the model has so far been tested only on RGB remote sensing images, whereas remote sensing data are highly varied and include synthetic aperture radar (SAR), hyperspectral, and infrared imagery. The applicability of the model in multimodal scenarios still needs further validation.
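As a rough illustration of why the LoRA strategy discussed above lowers training cost, the sketch below wraps a frozen weight matrix with a trainable low-rank pair and counts the trainable parameters. It is a minimal sketch, not the LMVMamba implementation: the class name `LoRALinear`, the layer width of 64, the rank of 4, and the scaling factor `alpha = 8` are all hypothetical values chosen for the example.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight, rank=4, alpha=8.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen: never updated during fine-tuning
        # A starts with small random values and B with zeros, so the
        # wrapped layer initially reproduces the frozen layer exactly.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.b, matvec(self.a, x))
        return [y + self.scale * u for y, u in zip(base, update)]

    def trainable_parameters(self):
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])


rng = random.Random(1)
d = 64  # hypothetical layer width
weight = [[rng.uniform(-1.0, 1.0) for _ in range(d)] for _ in range(d)]
x = [rng.uniform(-1.0, 1.0) for _ in range(d)]

layer = LoRALinear(weight, rank=4, alpha=8.0)
full = d * d
trainable = layer.trainable_parameters()
print(f"trainable parameters: {trainable} of {full} ({trainable / full:.1%})")
```

For a square layer of width d and rank r, the low-rank pair holds 2rd parameters instead of d², so the saving grows with the layer width.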

Despite these limitations, LMVMamba provides an effective solution for semantic segmentation of remote sensing images. For instance, automatic segmentation of newly acquired remote sensing images can reduce labor requirements, and the high-precision segmentation results can provide data support for fields such as urban planning, land-use monitoring, and natural resource management.

In the future, our work will focus on lightweighting techniques for models that combine global modeling, multi-scale feature representation, and real-time segmentation capabilities, which will enable the model to be deployed on edge devices and used in real-world applications. We will also continue to explore the application of the model to multimodal data, fusing feature information from different modalities so that the model can handle different remote sensing scenarios with improved robustness, transferability, and generalization performance.

6. Conclusions

This study proposes a hybrid U-shaped network, LMVMamba, which addresses the common challenges of identifying fine-grained objects and insufficient multi-scale feature extraction in remote sensing imagery. It demonstrates significant potential for semantic segmentation of high-resolution remote sensing imagery. We introduce three innovations. First, the model integrates the strengths of ViT’s global modeling, Mamba’s handling of long sequence dependencies, and CNN’s local feature extraction. Second, this study incorporates LoRA within the ResT encoder, enhancing its adaptability and generalization capabilities for remote sensing segmentation tasks. Third, the designed MPB and LAS modules together strengthen local feature representation and multi-scale fusion efficiency. Extensive experimental results on two public datasets validate the superior performance of the proposed LMVMamba. The model achieves an mIoU of 67.9%, OA of 80.3%, and mF1 of 80.5% on the OpenEarthMap dataset, and 52.3%, 69.8%, and 68.0%, respectively, on the LoveDA dataset. Future work will fuse multimodal data, such as hyperspectral and SAR images, and incorporate model lightweighting strategies to enhance cross-task segmentation ability.

Author Contributions

Conceptualization, H.K.; Methodology, H.W.; Validation, G.Z.; Formal analysis, F.L.; Data curation, J.S.; Supervision, X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Young Foundation of China under Grant 41806117; the Jiangsu Provincial Natural Resources Science and Technology Project under Grant JSZRKJ202421; the National Natural Science Foundation of China under Grant 62071207; and the State Key Laboratory of Geo-Information Engineering and Key Laboratory of Surveying and Mapping Science and Geospatial Information Technology of MNR, CASM under Grant 2024-01-08.

Data Availability Statement

The data will be made available upon request from the corresponding author, as the dataset is currently being used for ongoing research.

Acknowledgments

The authors would like to thank the editors and anonymous reviewers for their valuable comments that greatly improved our manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Yuan, X.; Shi, J.; Gu, L. A review of deep learning methods for semantic segmentation of remote sensing imagery. Expert Syst. Appl. 2021, 169, 114417.

2. Yang, H.; Yu, B.; Luo, J.; Chen, F. Semantic segmentation of high spatial resolution images with deep neural networks. GISci. Remote Sens. 2019, 56, 749–768.

3. Zhang, F.; Guo, A.; Hu, Z.; Liang, Y. A novel image fusion method based on UAV and Sentinel-2 for environmental monitoring. Sci. Rep. 2025, 15, 27256.

4. Zhu, H.; Yao, J.; Meng, J.; Cui, C.; Wang, M.; Yang, R. A method to construct an environmental vulnerability model based on multi-source data to evaluate the hazard of short-term precipitation-induced flooding. Remote Sens. 2023, 15, 1609.

5. Tamás, J.; Louis, A.; Fehér, Z.Z.; Nagy, A. Land Cover Mapping Using High-Resolution Satellite Imagery and a Comparative Machine Learning Approach to Enhance Regional Water Resource Management. Remote Sens. 2025, 17, 2591.

6. Guo, Y.; Jia, X.; Paull, D. Effective sequential classifier training for SVM-based multitemporal remote sensing image classification. IEEE Trans. Image Process. 2018, 27, 3036–3048.

7. Adugna, T.; Xu, W.; Fan, J. Comparison of random forest and support vector machine classifiers for regional land cover mapping using coarse resolution FY-3C images. Remote Sens. 2022, 14, 574.

8. Song, X.; Chen, M.; Rao, J.; Luo, Y.; Lin, Z.; Zhang, X.; Li, S.; Hu, X. MFPI-Net: A Multi-Scale Feature Perception and Interaction Network for Semantic Segmentation of Urban Remote Sensing Images. Sensors 2025, 25, 4660.

9. Wang, F.; Zhang, L.; Jiang, T.; Li, Z.; Wu, W.; Kuang, Y. An Improved Segformer for Semantic Segmentation of UAV-Based Mine Restoration Scenes. Sensors 2025, 25, 3827.

10. Wang, H.; Shi, J.; Karimian, H.; Liu, F.; Wang, F. YOLOSAR-Lite: A lightweight framework for real-time ship detection in SAR imagery. Int. J. Digit. Earth 2024, 17, 2405525.

11. Chen, J.; Yuan, Z.; Peng, J.; Chen, L.; Huang, H.; Zhu, J.; Liu, Y.; Li, H. DASNet: Dual attentive fully convolutional Siamese networks for change detection in high-resolution satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1194–1206.

12. Tao, Y.; Karimian, H.; Shi, J.; Wang, H.; Yang, X.; Xu, Y.; Yang, Y. MobileYOLO-Cyano: An Enhanced Deep Learning Approach for Precise Classification of Cyanobacterial Genera in Water Quality Monitoring. Water Res. 2025, 285, 124081.

13. Song, J.; Gao, S.; Zhu, Y.; Ma, C. A survey of remote sensing image classification based on CNNs. Big Earth Data 2019, 3, 232–254.

14. Peng, H.; Xue, C.; Shao, Y.; Chen, K.; Xiong, J.; Xie, Z.; Zhang, L. Semantic segmentation of litchi branches using DeepLabV3+ model. IEEE Access 2020, 8, 164546–164555.

15. Li, R.; Wang, L.; Zhang, C.; Duan, C.; Zheng, S. A2-FPN for semantic segmentation of fine-resolution remotely sensed images. Int. J. Remote Sens. 2022, 43, 1131–1155.

16. Li, R.; Zheng, S.; Zhang, C.; Duan, C.; Su, J.; Wang, L.; Atkinson, P.M. Multiattention network for semantic segmentation of fine-resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607713.

17. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

18. Panboonyuen, T.; Jitkajornwanich, K.; Lawawirojwong, S.; Srestasathiern, P.; Vateekul, P. Transformer-based decoder designs for semantic segmentation on remotely sensed images. Remote Sens. 2021, 13, 5100.

19. Wang, L.; Li, R.; Duan, C.; Zhang, C.; Meng, X.; Fang, S. A novel transformer based semantic segmentation scheme for fine-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6506105.

20. Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

21. Xiao, T.; Liu, Y.; Huang, Y.; Li, M.; Yang, G. Enhancing multiscale representations with transformer for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5605116.

22. Wei, T.; Chen, H.; Liu, W.; Chen, L.; Wang, J. Retain and Enhance Modality-Specific Information for Multimodal Remote Sensing Image Land Use/Land Cover Classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5635318.

23. Wang, Z.; Li, J.; Xu, N.; You, Z. Combining feature compensation and GCN-based reconstruction for multimodal remote sensing image semantic segmentation. Inf. Fusion 2025, 122, 103207.

24. Hatamizadeh, A.; Heinrich, G.; Yin, H.; Tao, A.; Alvarez, J.M.; Kautz, J.; Molchanov, P. FasterViT: Fast vision transformers with hierarchical attention. arXiv 2023, arXiv:2306.06189.

25. Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114.

26. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

27. Zhao, S.; Chen, H.; Zhang, X.; Xiao, P.; Bai, L.; Ouyang, W. RS-Mamba for large remote sensing image dense prediction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5633314.

28. Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.

29. Ma, X.; Zhang, X.; Pun, M.-O. RS3Mamba: Visual state space model for remote sensing image semantic segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6011405.

30. Wang, L.; Li, D.; Dong, S.; Meng, X.; Zhang, X.; Hong, D. PyramidMamba: Rethinking pyramid feature fusion with selective space state model for semantic segmentation of remote sensing imagery. arXiv 2024, arXiv:2406.10828.

31. Ding, H.; Xia, B.; Liu, W.; Zhang, Z.; Zhang, J.; Wang, X.; Xu, S. A novel Mamba architecture with a semantic transformer for efficient real-time remote sensing semantic segmentation. Remote Sens. 2024, 16, 2620.

32. He, H.; Zhang, J.; Cai, Y.; Chen, H.; Hu, X.; Gan, Z.; Wang, Y.; Wang, C.; Wu, Y.; Xie, L. MobileMamba: Lightweight multi-receptive visual Mamba network. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 4497–4507.

33. Zhang, Q.; Yang, Y.-B. ResT: An efficient transformer for visual recognition. Adv. Neural Inf. Process. Syst. 2021, 34, 15475–15485.

34. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. ICLR 2022, 1, 3.

35. Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Nashville, TN, USA, 19–25 June 2021; pp. 11863–11874.

36. Deng, P.; Xu, K.; Huang, H. When CNNs meet vision transformer: A joint framework for remote sensing scene classification. IEEE Geosci. Remote Sens. Lett. 2021, 19, 8020305.

37. Fang, L.; Zhou, P.; Liu, X.; Ghamisi, P.; Chen, S. Context enhancing representation for semantic segmentation in remote sensing images. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 4138–4152.

38. Meng, X.; Yang, Y.; Wang, L.; Wang, T.; Li, R.; Zhang, C. Class-guided Swin transformer for semantic segmentation of remote sensing imagery. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6517505.

39. Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. CCNet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 603–612.

40. Xue, B.; Cheng, H.; Yang, Q.; Wang, Y.; He, X. Adapting segment anything model to aerial land cover classification with low-rank adaptation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 2502605.

41. Xu, C.; Guo, H.; Cen, C.; Chen, M.; Tao, X.; He, J. Efficient program optimization through knowledge-enhanced LoRA fine-tuning of large language models. J. Supercomput. 2025, 81, 1006.

42. Xiong, J.; Pan, L.; Liu, Y.; Zhu, L.; Zhang, L.; Tan, S. Enhancing Plant Protection Knowledge with Large Language Models: A Fine-Tuned Question-Answering System Using LoRA. Appl. Sci. 2025, 15, 3850.

43. Hu, Y.; Xie, Y.; Wang, T.; Chen, M.; Pan, Z. Structure-aware low-rank adaptation for parameter-efficient fine-tuning. Mathematics 2023, 11, 4317.

44. Chavan, A.; Liu, Z.; Gupta, D.; Xing, E.; Shen, Z. One-for-all: Generalized LoRA for parameter-efficient fine-tuning. arXiv 2023, arXiv:2306.07967.

45. Huang, C.; Liu, Q.; Lin, B.Y.; Pang, T.; Du, C.; Lin, M. LoraHub: Efficient cross-task generalization via dynamic LoRA composition. arXiv 2023, arXiv:2307.13269.

46. Wang, Y.; Cao, L.; Deng, H. MFMamba: A Mamba-based multi-modal fusion network for semantic segmentation of remote sensing images. Sensors 2024, 24, 7266.

47. Zhu, E.; Chen, Z.; Wang, D.; Shi, H.; Liu, X.; Wang, L. UNetMamba: An efficient UNet-like Mamba for semantic segmentation of high-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2024, 22, 6001205.

48. Li, M.; Xing, Z.; Wang, H.; Jiang, H.; Xie, Q. SF-Mamba: A Semantic-flow Foreground-aware Mamba for Semantic Segmentation of Remote Sensing Images. IEEE Multimed. 2025, 32, 85–95.

49. Mu, J.; Zhou, S.; Sun, X. PPMamba: Enhancing Semantic Segmentation in Remote Sensing Imagery by SS2D. IEEE Geosci. Remote Sens. Lett. 2024, 22, 6001705.

50. Du, F.; Wu, S. ECMNet: Lightweight Semantic Segmentation with Efficient CNN-Mamba Network. arXiv 2025, arXiv:2506.08629.

51. Xia, J.; Yokoya, N.; Adriano, B.; Broni-Bediako, C. OpenEarthMap: A benchmark dataset for global high-resolution land cover mapping. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023; pp. 6254–6264.

52. Wang, J.; Zheng, Z.; Ma, A.; Lu, X.; Zhong, Y. LoveDA: A remote sensing land-cover dataset for domain adaptive semantic segmentation. arXiv 2021, arXiv:2110.08733.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).