S2M-Net: A Novel Lightweight Network for Accurate Small Ship Recognition in SAR Images

Abstract

Highlights

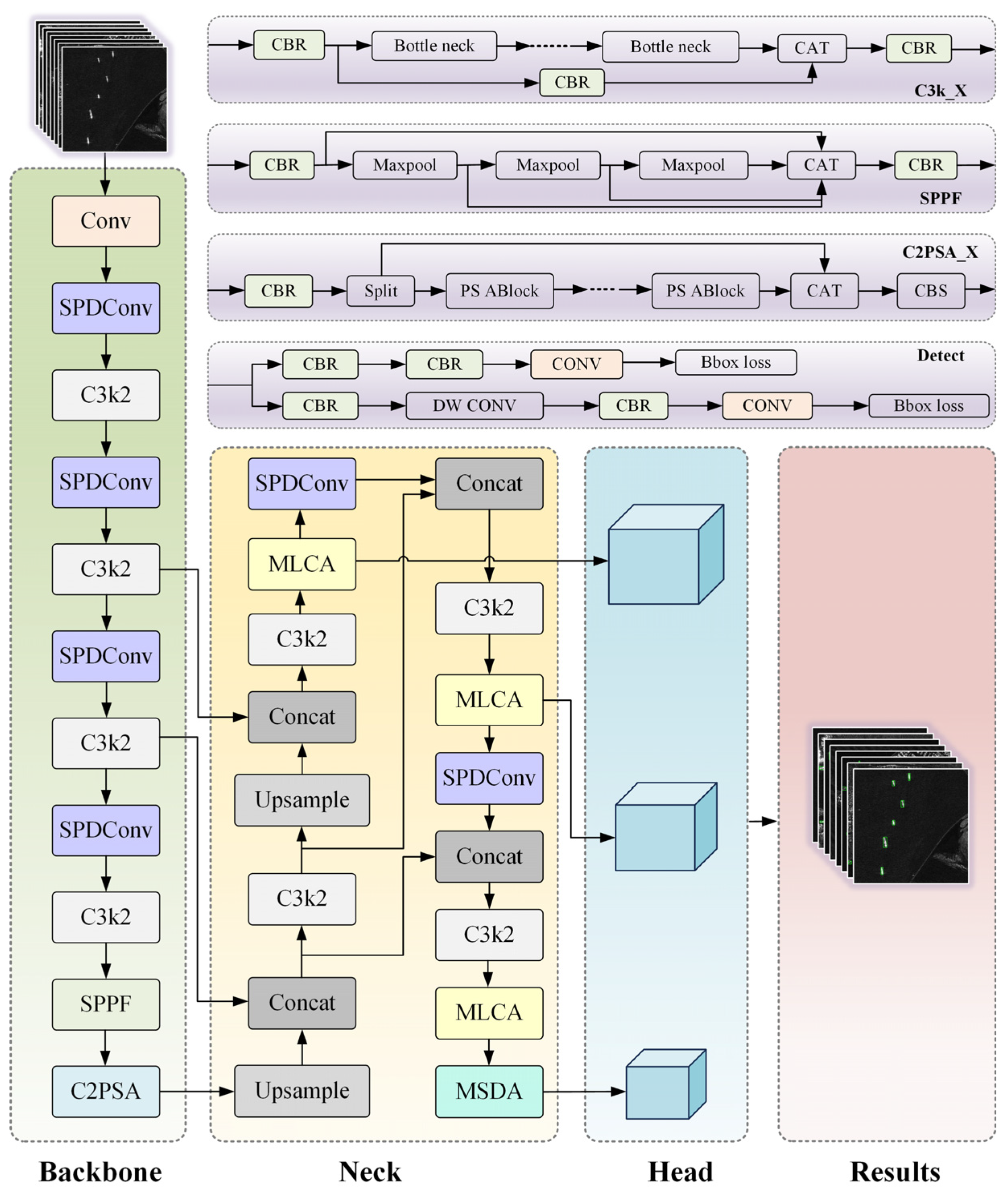

- We proposed a lightweight network, S2M-Net, for accurate small-ship recognition.

- We optimized convolution and attention mechanisms to reduce computational cost and parameter count.

- We constructed a multi-scale fusion module to enhance small-ship perception.

- We demonstrated higher mAP50, F1, and FPS than the compared state-of-the-art detectors on SSDD and HRSID, with a lightweight design of 2.475 M parameters and 5.7 GFLOPs.


1. Introduction

- We propose S2M-Net, a novel lightweight network for detecting small ships in SAR images. By optimizing the network structure and convolution strategy, S2M-Net reduces the parameter count from 2.582 M to 2.475 M and the computational cost from 6.3 to 5.7 GFLOPs relative to the ablation baseline, while improving mAP50 on SSDD, HRSID, and SARDet-100K. This makes it a practical candidate for deployment.

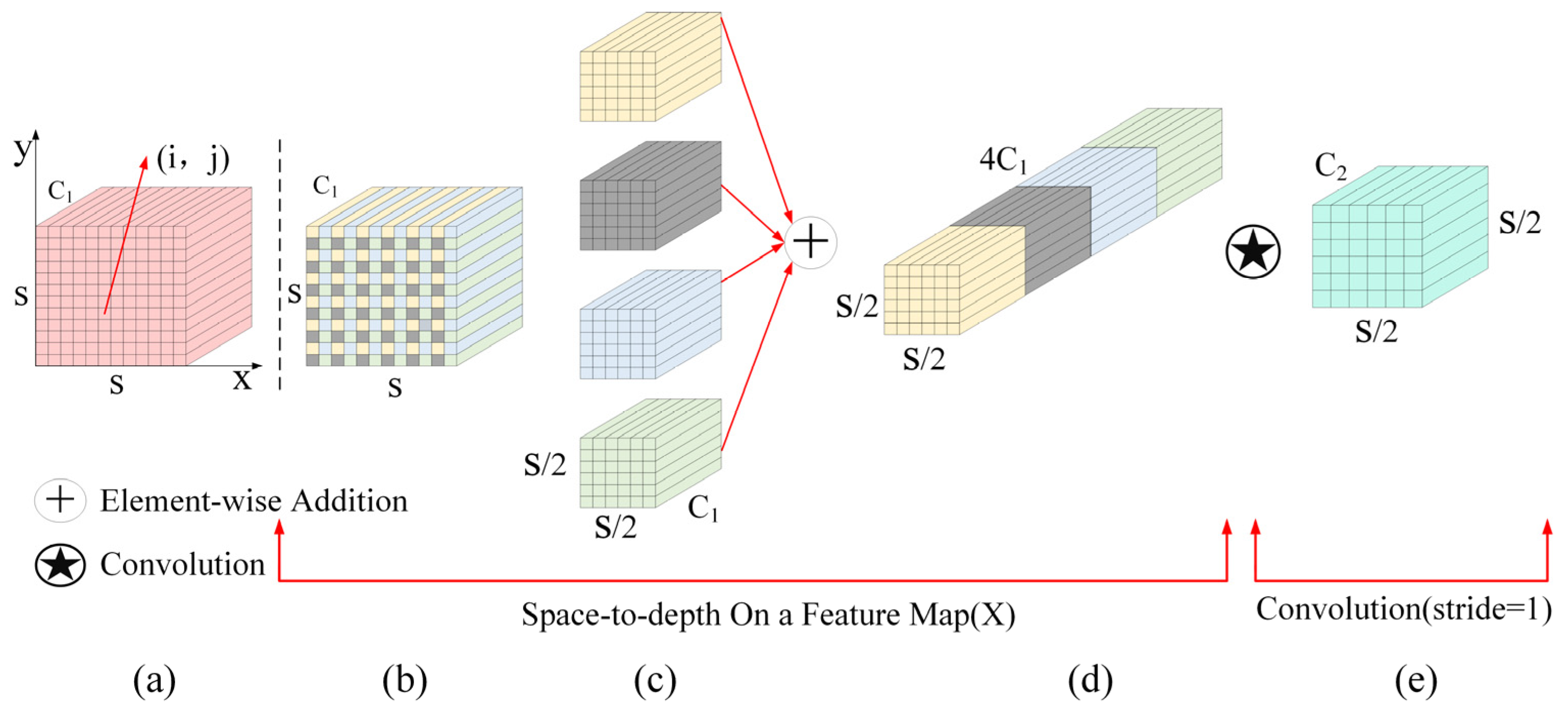

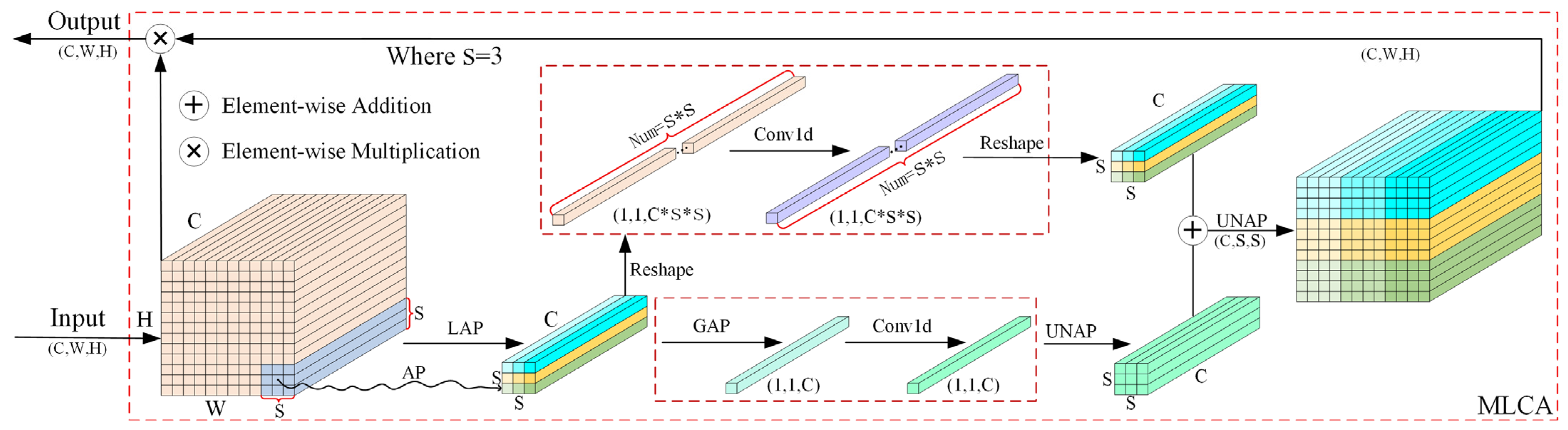

- We designed a three-stage processing strategy of feature extraction, feature enhancement, and feature selection. The Space-to-Depth Convolution (SPD-Conv) module preserves fine-grained information during downsampling by moving spatial detail into the channel dimension instead of discarding it through strided convolution or pooling. The Mixed Local-Channel Attention (MLCA) module combines local spatial attention with channel attention, and the output stage models the positional and categorical relationships of small targets in fine detail. Together, these components balance detection accuracy against inference speed and improve SAR small-ship recognition.

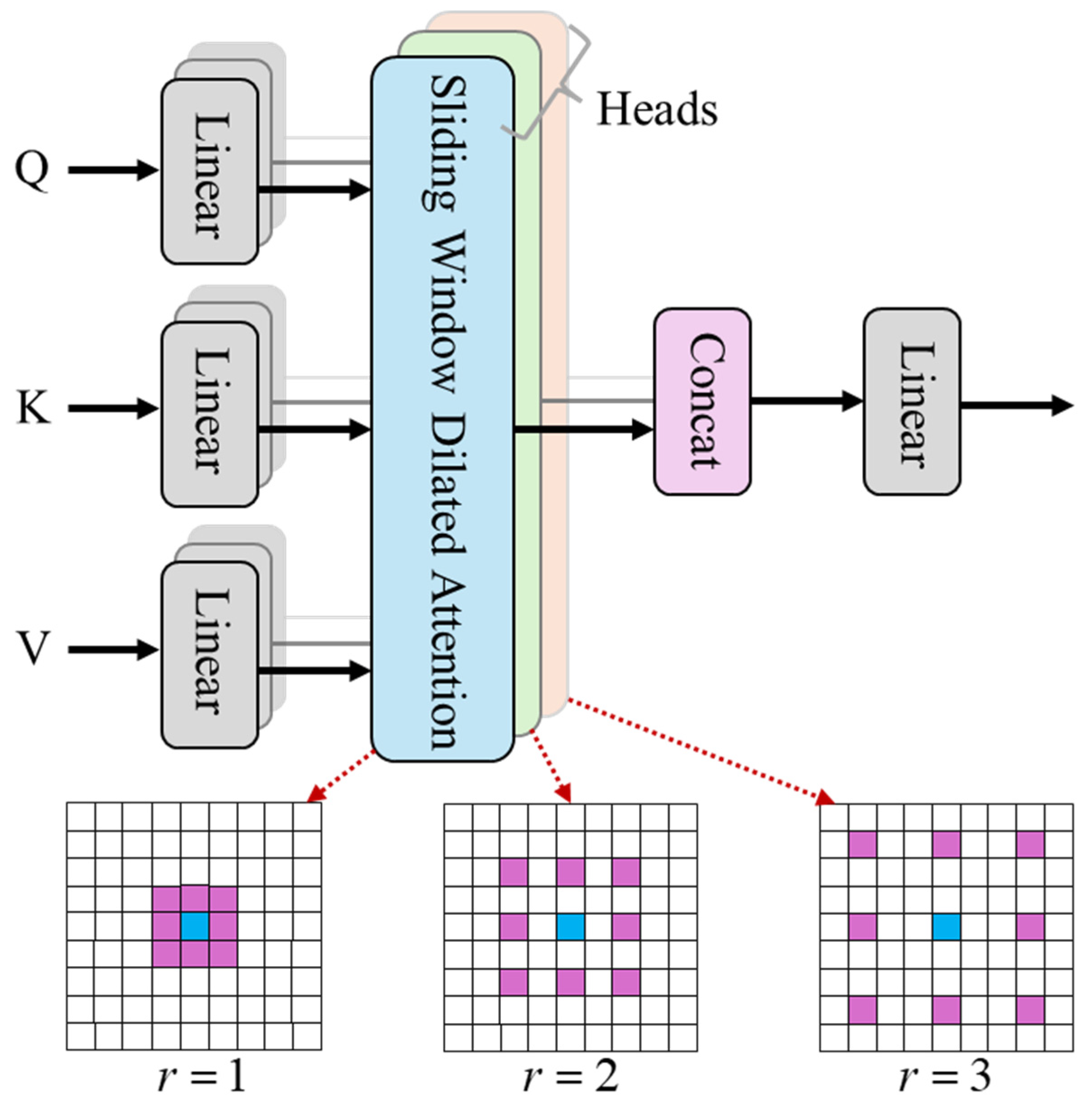

- We constructed the Multi-Scale Dilated Attention (MSDA) module to enhance the perception of small targets of different sizes during multi-scale feature fusion while suppressing noise in SAR images. This improves detection accuracy in complex backgrounds: in the ablation study, adding MSDA alone raises mAP50 from 0.976 to 0.987 on SSDD and from 0.930 to 0.944 on HRSID.

2. Materials and Methods

2.1. Space-to-Depth Convolution
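SPD-Conv is described above as preserving fine-grained information during downsampling. The pure-Python sketch below illustrates the space-to-depth rearrangement used by the original SPD-Conv design: a feature map is split into scale² interleaved sub-maps that are stacked along the channel axis, so every value survives, whereas plain sampling at stride 2 keeps only one value from each 2 × 2 block. The helper names (`space_to_depth`, `strided_sample`, `pointwise_conv`) are hypothetical, and the stride-1 convolution that follows the rearrangement is simplified here to a 1 × 1 kernel with hand-picked weights; this is an illustration, not the authors' implementation.

```python
from typing import List

Matrix = List[List[float]]  # one H x W feature map


def space_to_depth(channels: List[Matrix], scale: int = 2) -> List[Matrix]:
    """Rearrange a C x H x W map into (C * scale**2) x (H / scale) x (W / scale).

    Every input value is copied into exactly one output sub-map, so no spatial
    information is discarded; resolution moves into the channel dimension.
    """
    for fmap in channels:
        if len(fmap) % scale or len(fmap[0]) % scale:
            raise ValueError("H and W must be divisible by scale")
    out: List[Matrix] = []
    # Sub-map order follows x[::2, ::2], x[1::2, ::2], x[::2, 1::2], x[1::2, 1::2]
    # for scale = 2 (row offset varies fastest), each taken over all channels.
    for col_off in range(scale):
        for row_off in range(scale):
            for fmap in channels:
                out.append([row[col_off::scale] for row in fmap[row_off::scale]])
    return out


def strided_sample(fmap: Matrix, stride: int = 2) -> Matrix:
    """Plain stride-2 subsampling: one value from every stride x stride block."""
    return [row[::stride] for row in fmap[::stride]]


def pointwise_conv(channels: List[Matrix], weights: List[List[float]]) -> List[Matrix]:
    """Stride-1 1x1 convolution: each output map is a weighted sum of all inputs."""
    h, w = len(channels[0]), len(channels[0][0])
    return [
        [[sum(wk[c] * channels[c][i][j] for c in range(len(channels)))
          for j in range(w)]
         for i in range(h)]
        for wk in weights
    ]


# Usage: a single 4 x 4 channel whose value at (i, j) is 4 * i + j.
x = [[[float(4 * i + j) for j in range(4)] for i in range(4)]]
y = space_to_depth(x)            # 4 channels of 2 x 2
kept = strided_sample(x[0])      # 1 channel of 2 x 2
mixed = pointwise_conv(y, [[0.25, 0.25, 0.25, 0.25]])
print(len(y), "sub-maps hold", sum(len(r) for m in y for r in m), "values")
print("stride-2 sampling keeps", sum(len(r) for r in kept), "values")
```

The 16 input values all reappear across the four sub-maps, while subsampling retains 4 of them. PyTorch's `torch.nn.PixelUnshuffle` performs the same rearrangement, with a different channel ordering.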

2.2. Mixed Local-Channel Attention
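MLCA is described above as integrating local and channel attention. The sketch below shows one simplified way such a block can work, loosely following the published MLCA design: each channel is average-pooled to a coarse s × s grid (local branch) and to a single value (global branch), a small 1-D convolution mixes neighboring channels in each branch, the two sigmoid-activated results are blended with `local_weight`, and the blended weights are expanded back to the input size to rescale the features. The kernel values, grid size `s`, and all function names are illustrative assumptions; learned weights, batching, and the exact tensor layout of the original module are omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def avg_pool_grid(fmap: Matrix, s: int) -> Matrix:
    """Average-pool an H x W map to an s x s grid (H and W divisible by s)."""
    bh, bw = len(fmap) // s, len(fmap[0]) // s
    return [[sum(fmap[i][j]
                 for i in range(a * bh, (a + 1) * bh)
                 for j in range(b * bw, (b + 1) * bw)) / (bh * bw)
             for b in range(s)]
            for a in range(s)]


def conv1d_channels(vals: List[float], kernel: List[float]) -> List[float]:
    """Zero-padded 1-D cross-correlation along the channel axis (ECA-style)."""
    r = len(kernel) // 2
    n = len(vals)
    return [sum(kernel[t] * vals[c + t - r]
                for t in range(len(kernel)) if 0 <= c + t - r < n)
            for c in range(n)]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def mlca_sketch(x: List[Matrix], kernel: List[float], s: int = 2,
                local_weight: float = 0.5) -> List[Matrix]:
    """Rescale a C x H x W map with blended local and global channel attention."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    local = [avg_pool_grid(fm, s) for fm in x]                # C x s x s
    glob = [sum(map(sum, grid)) / (s * s) for grid in local]  # C global means
    g_att = [sigmoid(v) for v in conv1d_channels(glob, kernel)]
    att = [[[0.0] * s for _ in range(s)] for _ in range(c)]
    for a in range(s):
        for b in range(s):
            # Local branch: mix neighboring channels within one grid cell.
            cell = conv1d_channels([local[k][a][b] for k in range(c)], kernel)
            for k in range(c):
                att[k][a][b] = (local_weight * sigmoid(cell[k])
                                + (1.0 - local_weight) * g_att[k])
    bh, bw = h // s, w // s
    # Expand each grid cell's weight over its block and rescale the input.
    return [[[x[k][i][j] * att[k][i // bh][j // bw] for j in range(w)]
             for i in range(h)]
            for k in range(c)]


feat = [[[1.0, 2.0, 3.0, 4.0] for _ in range(4)],
        [[0.5] * 4 for _ in range(4)],
        [[2.0] * 4 for _ in range(4)]]
out = mlca_sketch(feat, kernel=[0.2, 1.0, 0.2], s=2)
```

Because every attention weight is a convex blend of two sigmoids, it lies strictly between 0 and 1, so the block can only attenuate or preserve features, never amplify them. For brevity both branches share one kernel here; a trained module would learn separate weights.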

2.3. Multi-Scale Dilated Attention
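The MSDA module presumably follows the multi-scale dilated attention introduced in DilateFormer: each query attends only to a small window of keys sampled around it with a dilation rate, and different channel groups (heads) use different dilation rates, so one block covers several receptive-field sizes. The pure-Python sketch below illustrates that idea under stated simplifications: queries, keys, and values are the input slice itself (no learned or output projections), and window positions outside the map are skipped rather than zero-padded. Function names and the dilation rates in the example are hypothetical, not the configuration used in S2M-Net.

```python
import math
from typing import List

Grid = List[List[List[float]]]  # H x W grid of D-dimensional feature vectors


def dilated_window_attention(q: Grid, k: Grid, v: Grid, dilation: int,
                             window: int = 3) -> Grid:
    """Each query attends to a window x window set of keys spaced `dilation` apart."""
    h, w, d = len(q), len(q[0]), len(q[0][0])
    half = window // 2
    out: Grid = []
    for i in range(h):
        row = []
        for j in range(w):
            # Dilated neighborhood centered on (i, j); out-of-range taps are skipped.
            nbrs = [(i + dilation * a, j + dilation * b)
                    for a in range(-half, half + 1)
                    for b in range(-half, half + 1)
                    if 0 <= i + dilation * a < h and 0 <= j + dilation * b < w]
            # Scaled dot-product scores, then a numerically stable softmax.
            scores = [sum(qc * kc for qc, kc in zip(q[i][j], k[y][x])) / math.sqrt(d)
                      for y, x in nbrs]
            top = max(scores)
            weights = [math.exp(s - top) for s in scores]
            total = sum(weights)
            row.append([sum(wt * v[y][x][c] for wt, (y, x) in zip(weights, nbrs)) / total
                        for c in range(d)])
        out.append(row)
    return out


def msda(x: Grid, dilations: List[int], window: int = 3) -> Grid:
    """Split channels into one head per dilation rate, attend, then concatenate."""
    d = len(x[0][0])
    g = d // len(dilations)
    if g * len(dilations) != d:
        raise ValueError("channel count must divide evenly among the heads")
    heads = []
    for idx, r in enumerate(dilations):
        part = [[vec[idx * g:(idx + 1) * g] for vec in row] for row in x]
        # Simplification: Q = K = V = this head's slice of the input.
        heads.append(dilated_window_attention(part, part, part, r, window))
    return [[[val for head in heads for val in head[i][j]]
             for j in range(len(x[0]))]
            for i in range(len(x))]


spike = [[[0.0], [0.0], [1.0], [0.0], [0.0]]]  # 1 x 5 map, one channel
near = msda(spike, [1])[0][0]  # position 0 cannot reach the spike two steps away
far = msda(spike, [2])[0][0]   # dilation 2 places the spike inside the window
print(near, far)
```

With the same 3 × 3 window and the same cost per query, raising the dilation rate widens what each head can see, which is why mixing rates across heads gives a multi-scale view of small targets.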

3. Experiments

3.1. Experimental Details

3.2. Datasets

3.3. Evaluation Metrics
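The result tables report mAP50, F1, and FPS. As a minimal sketch of the standard definitions of the first two for a single class, the code below computes box IoU, greedily matches score-sorted detections to ground truth at IoU ≥ 0.5, integrates all-point interpolated AP from the precision-recall curve, and computes F1 = 2PR/(P + R). The matching rule, interpolation scheme, and the confidence threshold at which F1 is reported are assumptions; evaluation toolkits differ in these details (COCO-style evaluation, for example, samples 101 recall points). With several classes, mAP50 is the mean of the per-class AP50 values.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                     thr: float = 0.5) -> List[bool]:
    """Greedy matching in descending score order; True marks a true positive."""
    used = [False] * len(gts)
    flags = []
    for _, box in sorted(dets, key=lambda d: -d[0]):
        best_iou, best_j = thr, -1
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not used[j] and overlap >= best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0:
            used[best_j] = True
        flags.append(best_j >= 0)
    return flags


def average_precision(tp_flags: List[bool], n_gt: int) -> float:
    """All-point interpolated area under the precision-recall curve."""
    tp = fp = 0
    recall, precision = [], []
    for hit in tp_flags:
        tp += hit
        fp += not hit
        recall.append(tp / n_gt)
        precision.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precision) - 2, -1, -1):
        precision[i] = max(precision[i], precision[i + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recall, precision):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2PR / (P + R), written equivalently as 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 31, 31))]
flags = match_detections(dets, gts)
ap50 = average_precision(flags, len(gts))
tp = sum(flags)
f1 = f1_score(tp, len(flags) - tp, len(gts) - tp)
print(flags, round(ap50, 4), round(f1, 4))
```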

4. Results

4.1. Ablation Experiments

4.2. Comparison Experiments

4.2.1. Quantitative Comparison

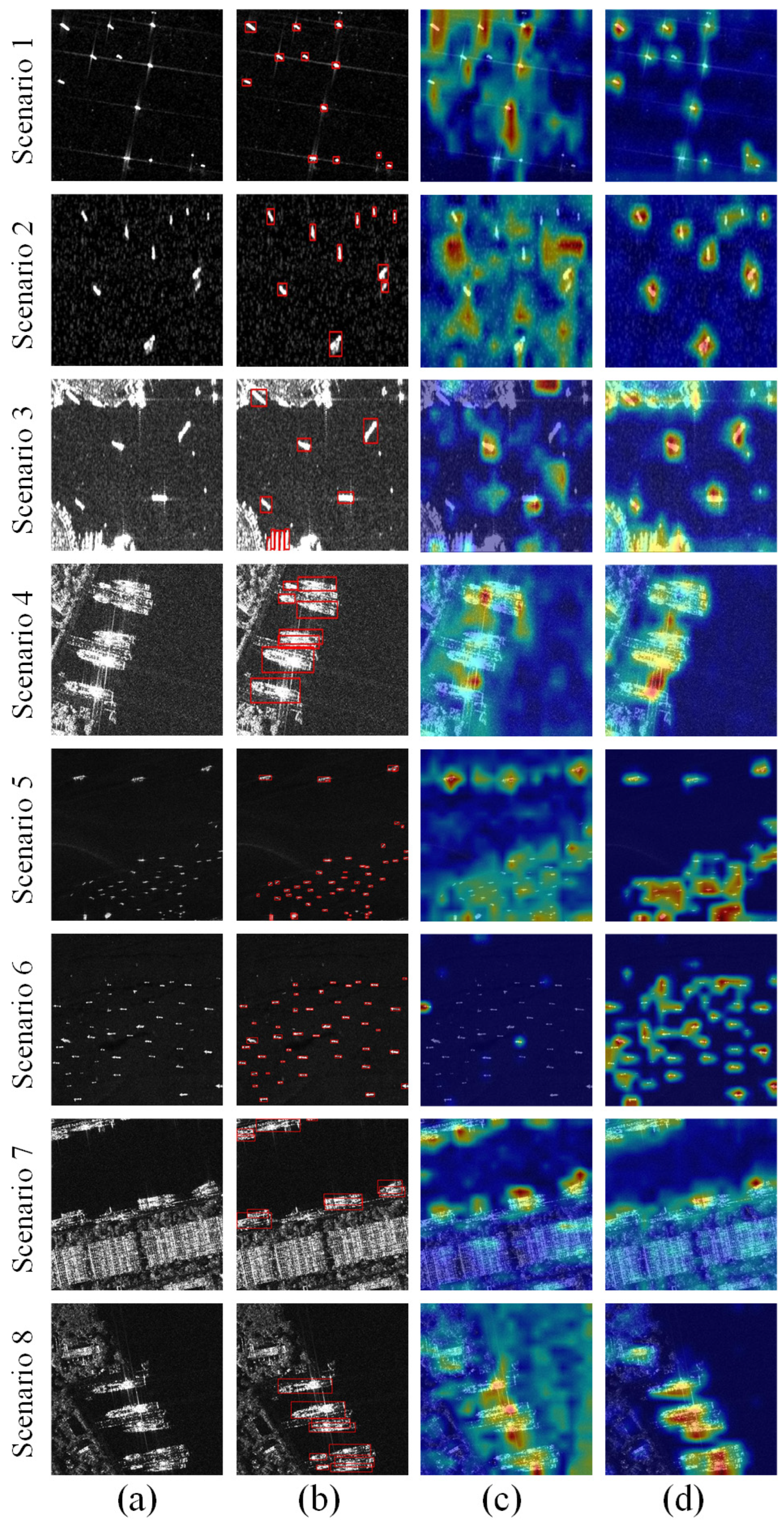

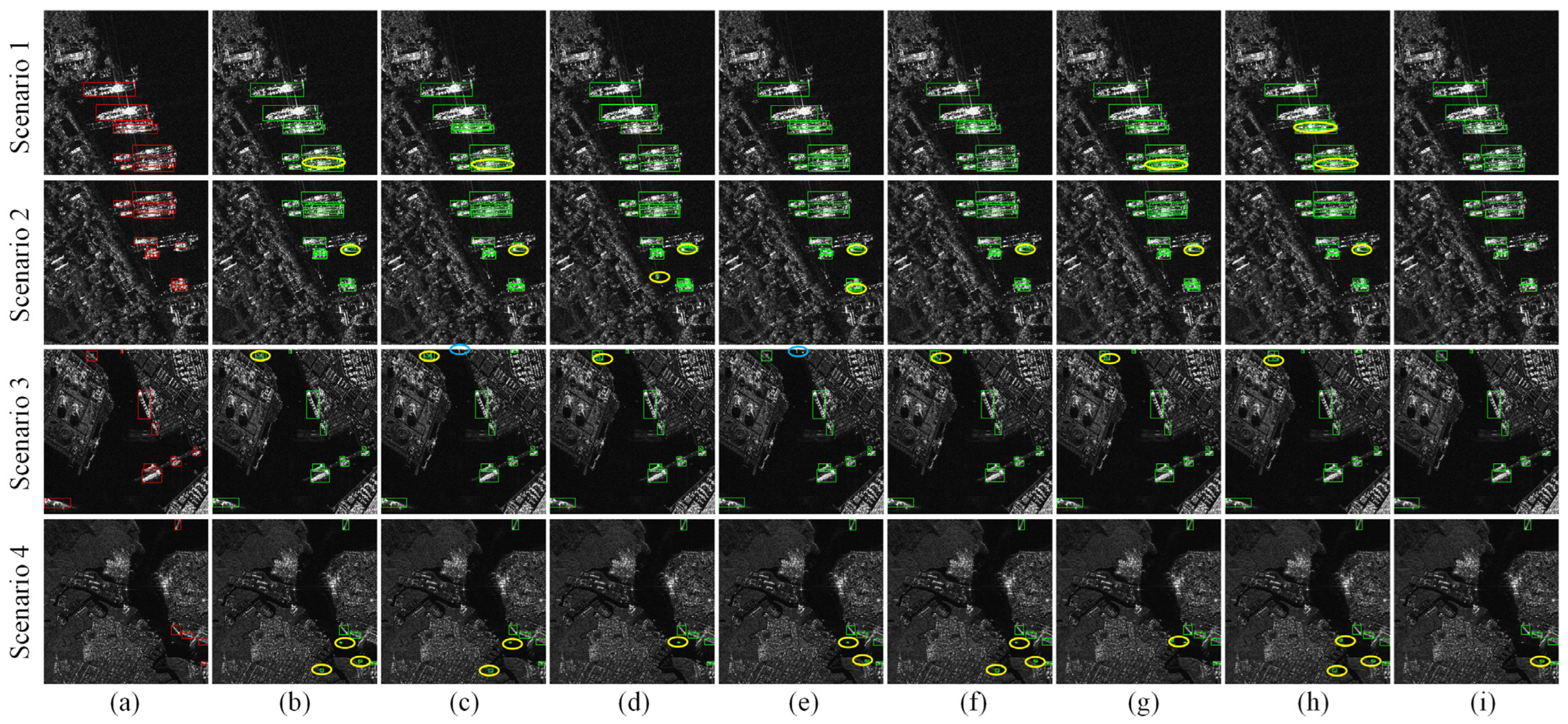

4.2.2. Qualitative Comparison

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Configuration Item | Configuration Value |
|---|---|
| CPU | Intel i9-13900K |
| Memory | 256 GB |
| GPU | NVIDIA GeForce RTX 3060 |
| Graphics memory | 16 GB |
| Operating system | Ubuntu 20.04 |
| CUDA version | 11.8 |
| Python version | 3.9 |
| Deep learning framework | PyTorch 2.6.0 |

| Parameter | Value |
|---|---|
| Dataset split ratio | 8:2 |
| Epochs | 200 |
| Batch size | 16 |
| Max. learning rate | 0.01 |
| Min. learning rate | 0.0001 |
| Optimizer | SGD |
| Learning rate schedule | Cosine |
| Input image size | 640 × 640 |
| Data augmentation | Mosaic |
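The training settings specify SGD with a cosine learning-rate schedule from 0.01 down to 0.0001 over 200 epochs. Assuming a standard per-epoch cosine annealing curve without warm-up (any warm-up phase is not specified), the learning rate at epoch e of E is lr(e) = lr_min + 0.5 (lr_max - lr_min)(1 + cos(π e / E)). The function name `cosine_lr` below is hypothetical and only reproduces that formula with the tabulated values as defaults.

```python
import math


def cosine_lr(epoch: int, total_epochs: int = 200,
              lr_max: float = 0.01, lr_min: float = 0.0001) -> float:
    """Cosine-annealed learning rate: lr_max at epoch 0, lr_min at total_epochs."""
    if not 0 <= epoch <= total_epochs:
        raise ValueError("epoch must lie in [0, total_epochs]")
    cos_term = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return lr_min + (lr_max - lr_min) * cos_term


for e in (0, 50, 100, 150, 200):
    print(f"epoch {e:3d}: lr = {cosine_lr(e):.6f}")
```

The rate stays near its maximum early on, passes the midpoint value of 0.00505 at epoch 100, and flattens toward 0.0001 at the end. In PyTorch, the same closed-form curve is produced by `torch.optim.lr_scheduler.CosineAnnealingLR` with `T_max=200` and `eta_min=1e-4` when stepped once per epoch.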

| SPD-Conv | MLCA | MSDA | SSDD mAP50 | SSDD F1 | SSDD FPS | HRSID mAP50 | HRSID F1 | HRSID FPS | SARDet-100K mAP50 | SARDet-100K F1 | SARDet-100K FPS | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| – | – | – | 0.976 | 0.971 | 250 | 0.930 | 0.884 | 256 | 0.874 | 0.851 | 240 | 2.582 | 6.3 |
| √ | – | – | 0.978 | 0.960 | 244 | 0.932 | 0.898 | 250 | 0.870 | 0.836 | 260 | 2.212 | 5.4 |
| – | √ | – | 0.986 | 0.975 | 400 | 0.943 | 0.897 | 400 | 0.879 | 0.852 | 238 | 2.582 | 6.4 |
| – | – | √ | 0.987 | 0.981 | 345 | 0.944 | 0.895 | 385 | 0.875 | 0.872 | 242 | 3.269 | 8.3 |
| √ | √ | – | 0.991 | 0.975 | 385 | 0.948 | 0.904 | 385 | 0.856 | 0.846 | 265 | 2.212 | 5.5 |
| √ | – | √ | 0.988 | 0.972 | 357 | 0.947 | 0.901 | 357 | 0.865 | 0.849 | 255 | 2.475 | 5.6 |
| – | √ | √ | 0.988 | 0.977 | 263 | 0.951 | 0.905 | 233 | 0.866 | 0.867 | 248 | 2.800 | 7.2 |
| √ | √ | √ | 0.989 | 0.982 | 385 | 0.955 | 0.908 | 385 | 0.883 | 0.869 | 258 | 2.475 | 5.7 |
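The ablation table reports absolute parameter and FLOP counts. Expressing each configuration relative to the baseline row (no modules enabled) makes the cost of each module easier to compare; the short script below does this using values copied from the table. The dictionary keys are descriptive labels chosen for this sketch, and because FLOPs are tabulated to one decimal place, the FLOP percentages are only approximate.

```python
# Params (M) and FLOPs (G) per configuration, copied from the ablation table.
ABLATION = {
    "baseline": (2.582, 6.3),
    "SPD-Conv": (2.212, 5.4),
    "MLCA": (2.582, 6.4),
    "MSDA": (3.269, 8.3),
    "SPD-Conv + MLCA": (2.212, 5.5),
    "SPD-Conv + MSDA": (2.475, 5.6),
    "MLCA + MSDA": (2.800, 7.2),
    "all three": (2.475, 5.7),
}


def relative_change(value: float, base: float) -> float:
    """Percentage change of `value` with respect to `base`."""
    return 100.0 * (value - base) / base


base_params, base_flops = ABLATION["baseline"]
changes = {
    name: (relative_change(p, base_params), relative_change(f, base_flops))
    for name, (p, f) in ABLATION.items()
}
for name, (dp, df) in changes.items():
    print(f"{name:16s} params {dp:+6.1f} %   FLOPs {df:+6.1f} %")
```

With these values, the full model has about 4.1% fewer parameters and 9.5% fewer FLOPs than the baseline, while MSDA on its own adds roughly 27% parameters and 32% FLOPs, a cost that SPD-Conv more than offsets when the two are combined.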

| Model | SSDD mAP50 | SSDD F1 | SSDD FPS | HRSID mAP50 | HRSID F1 | HRSID FPS | SARDet-100K mAP50 | SARDet-100K F1 | SARDet-100K FPS | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | 0.943 | 0.902 | 12 | 0.937 | 0.896 | 11 | 0.880 | 0.855 | 8 | 270.000 | 180.500 |
| RetinaNet | 0.941 | 0.897 | 14 | 0.934 | 0.892 | 13 | 0.875 | 0.850 | 9 | 303.000 | 165.200 |
| FCOS | 0.946 | 0.905 | 18 | 0.936 | 0.894 | 17 | 0.880 | 0.860 | 12 | 256.200 | 142.300 |
| YOLOX-tiny | 0.950 | 0.912 | 200 | 0.945 | 0.902 | 195 | 0.860 | 0.830 | 150 | 40.600 | 14.800 |
| YOLOv5n | 0.961 | 0.965 | 263 | 0.942 | 0.899 | 278 | 0.886 | 0.862 | 238 | 2.503 | 7.100 |
| YOLOv8n | 0.945 | 0.977 | 244 | 0.938 | 0.894 | 240 | 0.887 | 0.854 | 217 | 3.006 | 8.100 |
| YOLOv8s | 0.985 | 0.968 | 55 | 0.945 | 0.904 | 138 | 0.891 | 0.863 | 208 | 11.126 | 28.400 |
| YOLOv10n | 0.978 | 0.945 | 334 | 0.930 | 0.882 | 341 | 0.872 | 0.850 | 230 | 2.265 | 6.500 |
| YOLOv10s | 0.974 | 0.945 | 209 | 0.922 | 0.899 | 211 | 0.877 | 0.862 | 227 | 7.218 | 21.400 |
| YOLOv11s | 0.978 | 0.968 | 166 | 0.942 | 0.895 | 236 | 0.871 | 0.861 | 213 | 9.413 | 21.300 |
| YOLOv12n | 0.972 | 0.966 | 222 | 0.930 | 0.885 | 213 | 0.873 | 0.845 | 238 | 2.527 | 5.800 |
| YOLOv12s | 0.981 | 0.962 | 144 | 0.932 | 0.901 | 182 | 0.881 | 0.872 | 169 | 9.111 | 19.300 |
| ShipDeNet-20 * | 0.971 | 0.924 | 233 | – | – | – | – | – | – | 0.165 | 0.00033 |
| Ours | 0.989 | 0.982 | 385 | 0.955 | 0.908 | 385 | 0.883 | 0.869 | 258 | 2.475 | 5.700 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, G.; Zhang, R.; He, J.; Tang, Y.; Wang, Y.; He, Y.; Gong, X.; Ye, J. S2M-Net: A Novel Lightweight Network for Accurate Small Ship Recognition in SAR Images. Remote Sens. 2025, 17, 3347. https://doi.org/10.3390/rs17193347


Wang, Guobing, Rui Zhang, Junye He, Yuxin Tang, Yue Wang, Yonghuan He, Xunqiang Gong, and Jiang Ye. 2025. "S2M-Net: A Novel Lightweight Network for Accurate Small Ship Recognition in SAR Images." Remote Sensing 17, no. 19: 3347. https://doi.org/10.3390/rs17193347
