1. Introduction

As artificial satellite technology progresses, satellite imagery is increasingly used for Earth observation. Annually, approximately 67% of the Earth’s surface experiences cloud cover [1]. Over 30% of the land surface is affected by seasonal snow, with 10% being permanently snow-covered [2]. The presence of clouds and snow in satellite images significantly impacts the effectiveness of Earth observation. In plateau areas, heavy snowfall causes severe losses to animal husbandry every year, and timely detection of snow cover can substantially reduce the personnel and material losses caused by snowstorms. However, clouds and snow have highly similar spectral characteristics in the panchromatic band, which presents a technical challenge for cloud and snow recognition in satellite images.

On the one hand, cloud and snow coverage complicates remote sensing by obstructing targets or altering surface reflectance, thereby affecting image interpretation and processing. On the other hand, their highly similar visual and spectral properties make them difficult to tell apart, especially in cloud segmentation [3] and snow reflection estimation [4]. Hence, developing accurate cloud and snow detection techniques is crucial for enhancing the reliability of remote sensing-based analysis.

Recent advances in deep learning have revolutionized remote sensing image analysis, achieving significant breakthroughs in cloud and snow detection [5,6,7,8]. Many models have been developed in recent years to address these challenges [9,10,11], pushing the field forward considerably. However, even state-of-the-art solutions have limitations.

Prior to the rise of deep learning, detection techniques were generally divided into three main categories: heuristic-based, temporal-reflectance comparison, and classical machine learning approaches. Heuristic or rule-based methods rely on spectral and thermal differences between land covers (such as clouds, snow, and vegetation), typically using thresholding strategies. Notable examples include the Automatic Cloud Cover Assessment (ACCA) [12], the Normalized Difference Snow Index (NDSI), Fmask [13], and snow decision tree algorithms [14]. These approaches, while straightforward, are primarily grounded in low-level spectral cues and exhibit strong dependence on shortwave infrared and thermal imaging [15], making them highly sensitive to sensor variability and reducing their cross-platform robustness [16].
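As a concrete instance of such a threshold rule, the NDSI contrasts green and shortwave-infrared (SWIR) reflectance: NDSI = (Green − SWIR) / (Green + SWIR). The sketch below is illustrative only. The function names, the 0.4 threshold (a commonly quoted default, not a value from this paper), and the sample reflectances are all assumptions.

```python
def ndsi(green: float, swir: float) -> float:
    """Normalized Difference Snow Index for one pixel (reflectances in [0, 1])."""
    denom = green + swir
    if denom == 0:
        return 0.0  # avoid division by zero on empty pixels
    return (green - swir) / denom


def is_snow(green: float, swir: float, threshold: float = 0.4) -> bool:
    """Rule-based snow test; the threshold is an assumed, commonly used default."""
    return ndsi(green, swir) > threshold


# Hypothetical reflectances: snow is bright in green and dark in SWIR,
# whereas clouds stay bright in both bands.
snow_pixel = (0.80, 0.10)
cloud_pixel = (0.75, 0.60)
print(is_snow(*snow_pixel), is_snow(*cloud_pixel))
```

The rule needs a SWIR band, which illustrates why such methods transfer poorly to sensors that only provide visible and near-infrared channels.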

Temporal-reflectance-based approaches (or multi-temporal methods) track radiometric changes over time to detect transient events like clouds or recent snowfall. Algorithms such as Tmask [17], CS [18], and ATSA [19] represent this class. Despite their temporal advantage, these techniques often face issues such as high dependency on dense time-series data, limited ability to identify persistent snow cover, and vulnerability to natural surface changes, all of which constrain their scalability.

Supervised machine learning strategies, including support vector machines [20], random forests [21], and Bayesian classifiers [22], aim to learn discriminative features from labeled samples. Shallow neural networks [17] have also been applied. However, these models often struggle with feature complexity and fail to generalize well in challenging environments, falling short when compared to more recent, deep learning-based alternatives.

The introduction of deep learning has substantially improved detection accuracy, generalizability, and processing efficiency. In particular, Convolutional Neural Networks (CNNs) have become the standard for large-scale segmentation of clouds and snow [23]. Lightweight architectures like RS-Net [16] and streamlined variants of U-Net [24] are designed for fast inference while maintaining high accuracy. By utilizing U-Net [25] as a backbone and optimizing network depth, these models achieve reduced computational costs and parameter overheads, yet still significantly outperform traditional solutions such as Fmask in terms of precision and robustness.

Efforts to enhance prediction accuracy are primarily focused on refining existing modules, introducing auxiliary components, or redesigning the overall network architecture. One common strategy involves incorporating advanced modules into conventional frameworks. For instance, CloudNet [26] employs ResNet18 as its backbone and integrates an improved Atrous Spatial Pyramid Pooling (ASPP) module. This module, placed after the backbone feature extraction, captures multi-scale contextual features from the deepest layers, thereby refining the segmentation of cloud and shadow boundaries. The overall performance surpasses that of DeepLabv2 [27].

Another approach centers on rethinking the network design itself, especially in the encoder-decoder structure. CDUNet [28] enhances the traditional UNet by introducing a booster branch (comprising convolution and dropout layers) during training. This addition facilitates more effective loss computation and contributes to faster network convergence. In the decoding stage, rather than relying on the basic hierarchical fusion strategy used in UNet, the authors propose a novel feature fusion layer that simultaneously integrates three different feature maps. This design not only strengthens the extraction of fine-grained texture cues but also suppresses high-frequency noise. As a result, CDUNet yields more precise segmentation at object boundaries and offers stronger global contextual awareness, which improves its adaptability across different spatial environments. The model has demonstrated superior generalization on multiple satellite datasets.

Beyond these accuracy-driven strategies, some research also explores lightweight architectures to balance prediction precision and computational efficiency. For example, SGBNet [29] significantly reduces model complexity and enhances runtime performance. However, when applied to cloud and snow segmentation tasks, it shows limitations in maintaining high segmentation accuracy.

Recent research efforts [30,31,32] have extended the use of Transformers (originally developed for natural language processing) to vision-related tasks by leveraging their ability to capture long-range dependencies. Transformer models [33] utilize a multi-head self-attention mechanism, enabling them to aggregate information across the entire input and emphasize salient regions. The essence of this mechanism lies in modeling the interrelations among all pixels, where each pixel participates in the computation but contributes to varying degrees. This enables the model to achieve a global perceptual field and prioritize contextually important features.
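The idea that every pixel participates but contributes to a varying degree can be illustrated with scaled dot-product attention. The following is a minimal single-head sketch in pure Python, not the multi-head module used in this paper. The token values are hypothetical and the query, key, and value projections are assumed to be identity maps for brevity.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q, k, v):
    """Single-head scaled dot-product attention.

    q, k, v are lists of token vectors (one vector per pixel or patch).
    Returns the attended outputs and the attention weight matrix.
    """
    d = len(q[0])
    weights = []
    for qi in q:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights.append(softmax(scores))
    # Each output is a weighted average of all value vectors.
    out = [
        [sum(w * vj[c] for w, vj in zip(row, v)) for c in range(len(v[0]))]
        for row in weights
    ]
    return out, weights


# Three hypothetical 2-D tokens; identity projections are assumed, so Q = K = V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attn = self_attention(tokens, tokens, tokens)
for row in attn:
    print([round(w, 3) for w in row])
```

Every attention weight is strictly positive, so each token influences every output, while tokens that are more similar to the query receive larger weights.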

Building on this, Dosovitskiy et al. [34] proposed the Vision Transformer (ViT), which applies a pure Transformer architecture directly to sequences of image patches for classification tasks. This patch-based modeling approach has shown superior performance over conventional convolutional architectures in multi-class image classification scenarios. However, despite its success in classification, ViT exhibits limitations when directly applied to dense prediction tasks like semantic segmentation, where maintaining spatial detail and local context is crucial.

To extend Transformer models to dense vision problems like detection and segmentation, including object localization and semantic parsing, Wang et al. [35] introduced the Pyramid Vision Transformer (PVT). This approach adopts ViT as its foundation while incorporating a hierarchical design that progressively reduces the spatial resolution of feature maps, thereby decreasing both memory consumption and computational cost. This makes PVT particularly suitable for pixel-level prediction tasks. Similarly, Wu et al. [36] proposed the Convolutional Vision Transformer (CvT), which embeds convolutional operations into the Transformer framework to improve representational capacity and overall performance. Furthermore, Zamir et al. [37] presented Restormer, a Transformer-based model tailored for image restoration tasks such as denoising, motion deblurring, and defocus correction, leveraging long-range dependencies to enhance reconstruction quality.

Despite their success in general vision tasks, these models encounter considerable difficulties when applied to remote sensing scenarios involving cloud and snow segmentation. The main challenge lies in the frequent occlusion of surface details by cloud and snow layers, which leads to degraded image quality. Additionally, the interaction between these elements and background features introduces substantial noise. The primary shortcomings of current Transformer-based approaches in this domain include:

(1) Limited robustness to noise and complex surface features, often resulting in false positives;

(2) Ineffective detection of small, isolated targets, contributing to omissions;

(3) Coarse delineation at cloud and snow boundaries, which hinders precise edge segmentation and affects overall accuracy.

This study tackles the aforementioned challenges by introducing a Transformer-driven multi-branch architecture for the detection and segmentation of clouds and snow in remote sensing imagery. After extensive experimentation and refinement, we present an enhanced Transformer framework for both the encoder and decoder stages, termed the Transformer Encoder Module (TEM) and the Transformer Fusion Module (TFM). The core of our network integrates TEM with the ResNet18 [38] convolutional backbone. While the Transformer contributes global self-attention, context modeling, and strong generalization capabilities [39], the convolutional branch provides robustness to geometric transformations such as translation, scaling, and distortion [40,41]. By fusing these complementary advantages, our dual-branch design ensures more effective semantic representation and spatial detail extraction.

In this study, we focus on the specific spectral bands of the Landsat-8 satellite, which are essential for cloud and snow classification. Specifically, the visible bands—such as the Blue (Band 2), Green (Band 3), and Red (Band 4) bands, along with the Near-Infrared (NIR, Band 5)—are primarily used. These bands are crucial because the contrast between snow and clouds is most evident in the visible and near-infrared regions. The Blue and Red bands help distinguish clouds and snow from their surroundings, while the NIR band is particularly sensitive to snow, allowing for better separation of snow from other land cover types.

To further boost feature representation, a Feature-Enhancement Module (FEM) is positioned between the Transformer and convolutional pathways, enabling mutual guidance and adaptive information exchange. This architecture improves the model’s ability to capture subtle and scattered cloud-snow structures. Furthermore, a Deep Feature-Extraction Module (DFEM) is integrated at the deepest convolutional layer to enhance channel-level representations. By emphasizing salient feature dimensions, DFEM improves the model’s ability to capture abstract representations and leads to more precise delineation along cloud and snow contours.

In the decoder, the Transformer Fusion Module (TFM) and the Strip Pooling Auxiliary Module (SPAM) jointly process multi-scale features from both encoder branches. This collaborative decoding strategy facilitates the integration of high-level semantics with low-level spatial cues, enhancing resistance to background interference and reducing classification errors. Consequently, the network delivers clearer and more reliable segmentation, particularly in complex cloud-snow boundary regions.

2. Methodology

2.1. Backbone

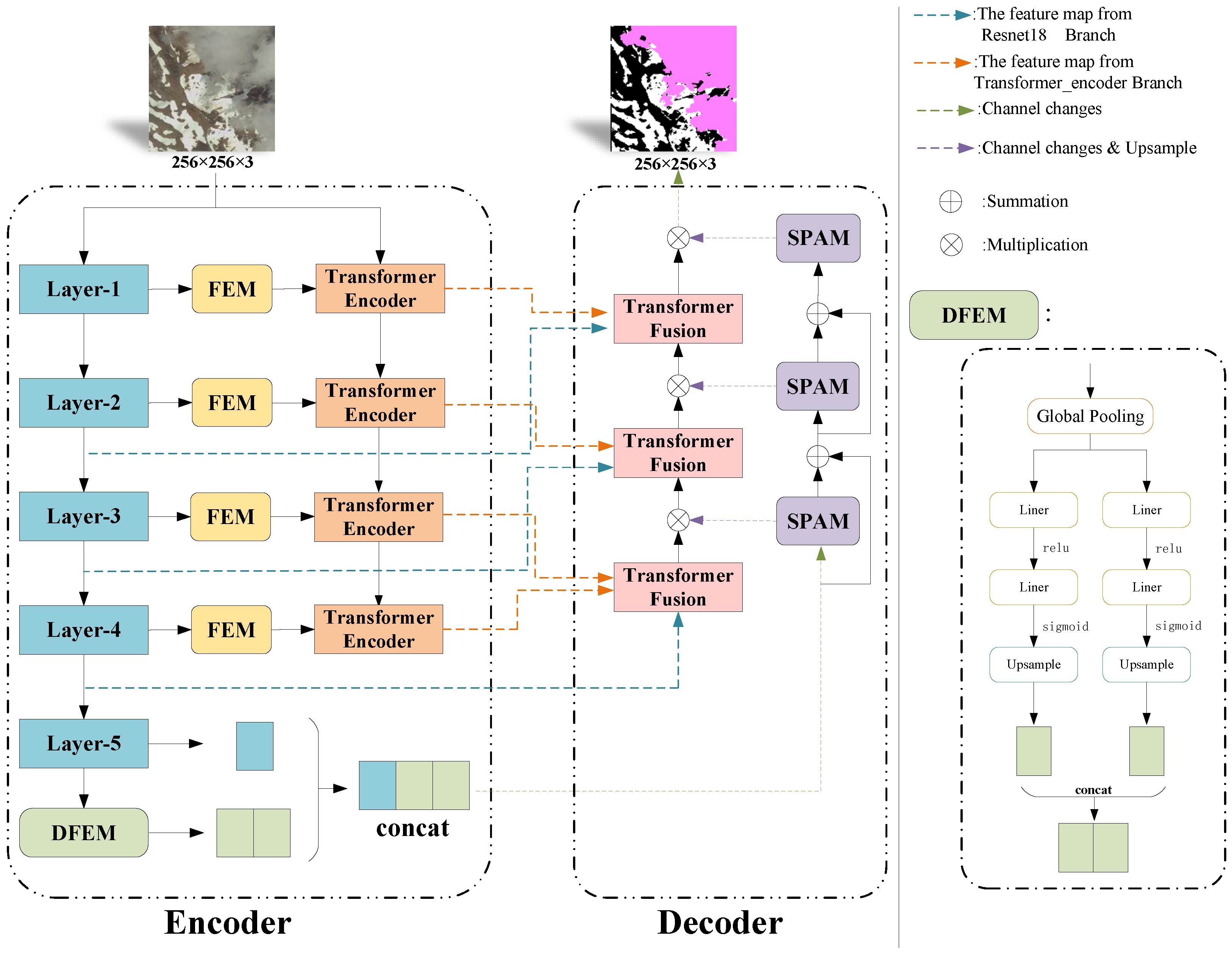

To capture multi-scale features during encoding, we adopt a hybrid architecture that integrates both a Transformer-based branch and a convolutional branch, as illustrated in Figure 1. Convolutional neural networks (CNNs) excel at modeling local spatial correlations by operating on localized receptive fields, which helps minimize parameter count, reduce overfitting risk, and enhance the model’s ability to learn translation-invariant representations [42]. In contrast, the Transformer architecture is adept at capturing long-range dependencies through its self-attention mechanism, offering a more stable alternative to recurrent neural networks (RNNs), which are prone to vanishing or exploding gradients when processing long sequences. By leveraging this mechanism, the model can dynamically prioritize informative input regions, thereby improving contextual understanding.

To take full advantage of these complementary strengths, we propose a Transformer Encoder Module (TEM), incorporated into the encoder stage. Its structure is shown in Figure 2a. By unifying local feature learning from CNNs with the global modeling capacity of Transformers, the proposed design achieves superior performance compared to architectures relying solely on either convolution or attention mechanisms.

The integration of CNNs and Transformers within the TEM allows the network to effectively capture both local spatial features and long-range dependencies. The convolutional branch excels in learning fine-grained spatial information, which is crucial for tasks requiring precise localization, such as segmenting objects with well-defined boundaries like clouds. In contrast, the Transformer branch leverages its global self-attention mechanism to model long-range dependencies, improving the network’s ability to understand contextual relationships across the entire image. This hybrid design helps the model handle visually similar regions, such as snow and clouds, by combining detailed local features with broader contextual understanding, ultimately leading to more accurate segmentation in complex areas.

The dual-branch structure leverages the complementary advantages of both architectures, allowing for more effective extraction of spatial details and semantic context. To tackle the challenge posed by the visual similarity between clouds and snow—both of which often share comparable shapes and spectral characteristics—we enhance the conventional ResNet18 convolutional pathway by integrating a Transformer Encoder Module (TEM). This addition significantly increases the network’s ability to suppress mutual interference, thereby enhancing the precision of cloud and snow segmentation.

While the Transformer-ResNet18 hybrid architecture we propose effectively captures both local and global dependencies, it is worth noting that alternative hybrid models, such as the Swin Transformer combined with CNNs, also have their own advantages. The Swin Transformer [40], for instance, adopts a shifted window mechanism, which is more computationally efficient and scalable compared to traditional Transformers. However, the Swin Transformer may not capture local spatial details as effectively as CNNs, especially in highly detailed regions like cloud and snow boundaries. In contrast, our approach benefits from the detailed feature extraction of ResNet18 and the global attention capabilities of the Transformer, offering a well-rounded solution that improves segmentation accuracy in challenging scenarios.

Details of the network architecture are provided in Table 1. Feature maps are organized into stages one through five according to their spatial dimensions. The proposed TEM incorporates multiple enhancements compared to conventional Transformer modules, especially in the Multi-Head Self-Attention (MHSA) and MLP components. Within the MHSA mechanism, a matrix fusion strategy is employed between the query (Q) and key (K) vectors to enhance the extraction of relevant image-level dependencies. This improves the model’s ability to handle the complex dependencies between the cloud and snow regions, addressing their visual similarity more effectively.

Meanwhile, the standard MLP component in Transformer architectures typically consists of a linear transformation followed by a non-linear activation function. We revise this component into a Convolutional Feedforward Perceptron (CFP), which incorporates 2D convolution operations with the Swish activation. Compared to traditional fully connected layers, the convolutional structure benefits from local connectivity and weight sharing, which reduces the overall number of trainable parameters and computational load. Additionally, this convolutional design allows the model to better preserve local spatial features during downsampling, further improving its ability to capture fine-grained details.

To further improve generalization, dropout is incorporated into the CFP. Specifically, a 1 × 1 convolution is used to encode inter-channel contextual information at the pixel level, and dropout with a probability of 0.1 is applied during training to randomly deactivate a portion of the neurons. This stochastic regularization helps reduce overfitting and marginally improves segmentation performance. These modifications make our model more resilient to noise and able to segment complex cloud and snow regions more accurately.
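A convolutional feed-forward block of this kind can be sketched in PyTorch as follows. This is not the authors' implementation: the class name, the layer order, the expansion ratio, and the 3 × 3 depthwise kernel are assumptions made for illustration. Only the 1 × 1 convolution, the Swish activation, and the dropout probability of 0.1 come from the description above.

```python
import torch
from torch import nn


class ConvFeedForward(nn.Module):
    """Hypothetical CFP-style block: 1x1 conv -> depthwise 3x3 conv -> Swish -> dropout -> 1x1 conv."""

    def __init__(self, channels: int, expansion: int = 2, drop: float = 0.1):
        super().__init__()
        hidden = channels * expansion  # assumed expansion ratio
        self.expand = nn.Conv2d(channels, hidden, kernel_size=1)  # mixes channels per pixel
        self.depthwise = nn.Conv2d(hidden, hidden, kernel_size=3, padding=1, groups=hidden)
        self.act = nn.SiLU()  # SiLU is PyTorch's name for Swish with beta = 1
        self.drop = nn.Dropout(drop)  # active only in training mode
        self.project = nn.Conv2d(hidden, channels, kernel_size=1)

    def forward(self, x):
        x = self.expand(x)
        x = self.act(self.depthwise(x))
        x = self.drop(x)
        return self.project(x)


cfp = ConvFeedForward(channels=64)
cfp.eval()  # disables dropout so the output is deterministic
features = torch.randn(2, 64, 32, 32)
out = cfp(features)
print(out.shape)
```

Unlike a fully connected MLP applied to flattened tokens, this block keeps the feature map in its (B, C, H, W) layout, so spatial neighborhoods remain available to the depthwise convolution.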

In summary, while alternative hybrid architectures such as the Swin Transformer + CNN may offer advantages in computational efficiency and scalability, the combination of ResNet18 and Transformer in our model provides a balanced solution that excels in both local and global feature extraction. The empirical results presented later demonstrate that our approach outperforms these alternatives in specific segmentation tasks, making it a more suitable choice for remote sensing applications that involve complex cloud and snow regions.

The mathematical formulation of the TEM module is as follows:

In this context, and represent the inputs to the Transformer Encoder Module (TEM). The operation refers to a 2D convolution with a kernel, while denotes a 2D convolution with a kernel. MHSA stands for Multi-Head Self-Attention, Norm refers to layer normalization, and CFP represents the Convolutional Feedforward Perceptron.

The calculation process of MHSA is outlined as follows:

The calculation process of CFP is outlined as follows:

Here, , , and are derived from the original tensor after reshaping. refers to a 2D convolution with a kernel, while represents a depthwise separable 2D convolution with a kernel. R denotes the rearrangement operation, and is a learnable scaling factor that adjusts the pointwise product between and before applying the softmax function. Softmax refers to the normalized exponential function. Swish represents the Swish activation function, and Drop indicates the dropout operation.

Accurate edge segmentation of clouds and snow remains a major challenge in target detection and segmentation tasks. To address this, we take advantage of the fact that the deepest layers of the backbone network contain a high number of channels, which capture rich contextual and semantic information. Accordingly, we introduce a Deep Feature-Extraction Module (DFEM) at the bottom of the backbone, as shown in Figure 1.

This module starts by compressing the spatial dimensions to produce a representation, which summarizes each channel’s global context through global average pooling, resulting in C global descriptors. These descriptors are then processed through two parallel transformation paths. In each path, a fully connected layer reduces the channel dimension, followed by a ReLU activation. The channel dimensions are then restored through another fully connected layer and refined with a sigmoid activation, helping the model focus on the most informative channels.

Next, spatial resolution is recovered, and to reinforce semantic and contextual cues—especially for delineating fine cloud and snow edges—the outputs of the two branches are merged by stacking features across channels. This fusion strategy encourages the network to focus more precisely on boundary localization. The above process can be formally described as:

here,

x and

denote the module’s input and the output after feature mapping, respectively.

refers to global average pooling, and

indicates concatenation along the channel dimension.
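The DFEM description above reads like two parallel squeeze-and-excitation paths whose outputs are concatenated. The sketch below follows that reading and is only an approximation. The class name, the two reduction ratios, and the choice to recover spatial resolution by broadcasting the channel weights over the input are assumptions, not details confirmed by the text.

```python
import torch
from torch import nn


class DualPathChannelGate(nn.Module):
    """Hypothetical DFEM-style block: two channel-gating paths, concatenated along channels."""

    def __init__(self, channels: int, reductions=(4, 16)):
        super().__init__()
        self.paths = nn.ModuleList([
            nn.Sequential(
                nn.Linear(channels, channels // r),  # reduce the channel dimension
                nn.ReLU(),
                nn.Linear(channels // r, channels),  # restore the channel dimension
                nn.Sigmoid(),                        # per-channel weights between 0 and 1
            )
            for r in reductions  # assumed reduction ratios
        ])

    def forward(self, x):
        b, c, _, _ = x.shape
        descriptors = x.mean(dim=(2, 3))  # global average pooling -> (B, C)
        # Broadcast each path's channel weights back over the spatial grid.
        gated = [x * path(descriptors).view(b, c, 1, 1) for path in self.paths]
        return torch.cat(gated, dim=1)  # stack both paths along the channel axis


dfem = DualPathChannelGate(channels=512)  # ResNet18's last stage has 512 channels
deep = torch.randn(2, 512, 8, 8)
out = dfem(deep)
print(out.shape)
```

Under these assumptions the output has twice as many channels as the input, so a following layer would need to accept 2C channels or project them back to C.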

2.2. Feature-Enhancement Module (FEM)

Detecting and segmenting thin clouds and scattered small snow patches is challenging due to their wide dispersion and small size, making them prone to missed detections.

We believe this issue stems from insufficient fusion of location and category feature information. To improve accuracy, we weight the low-level features from the convolution branch alongside the high-level semantic features from the Transformer Encoder Module (TEM) branch. While the convolution branch preserves more spatial detail, the TEM branch captures higher-level features, making the convolution branch essential for mining deeper feature information and guiding the TEM branch with spatial context.

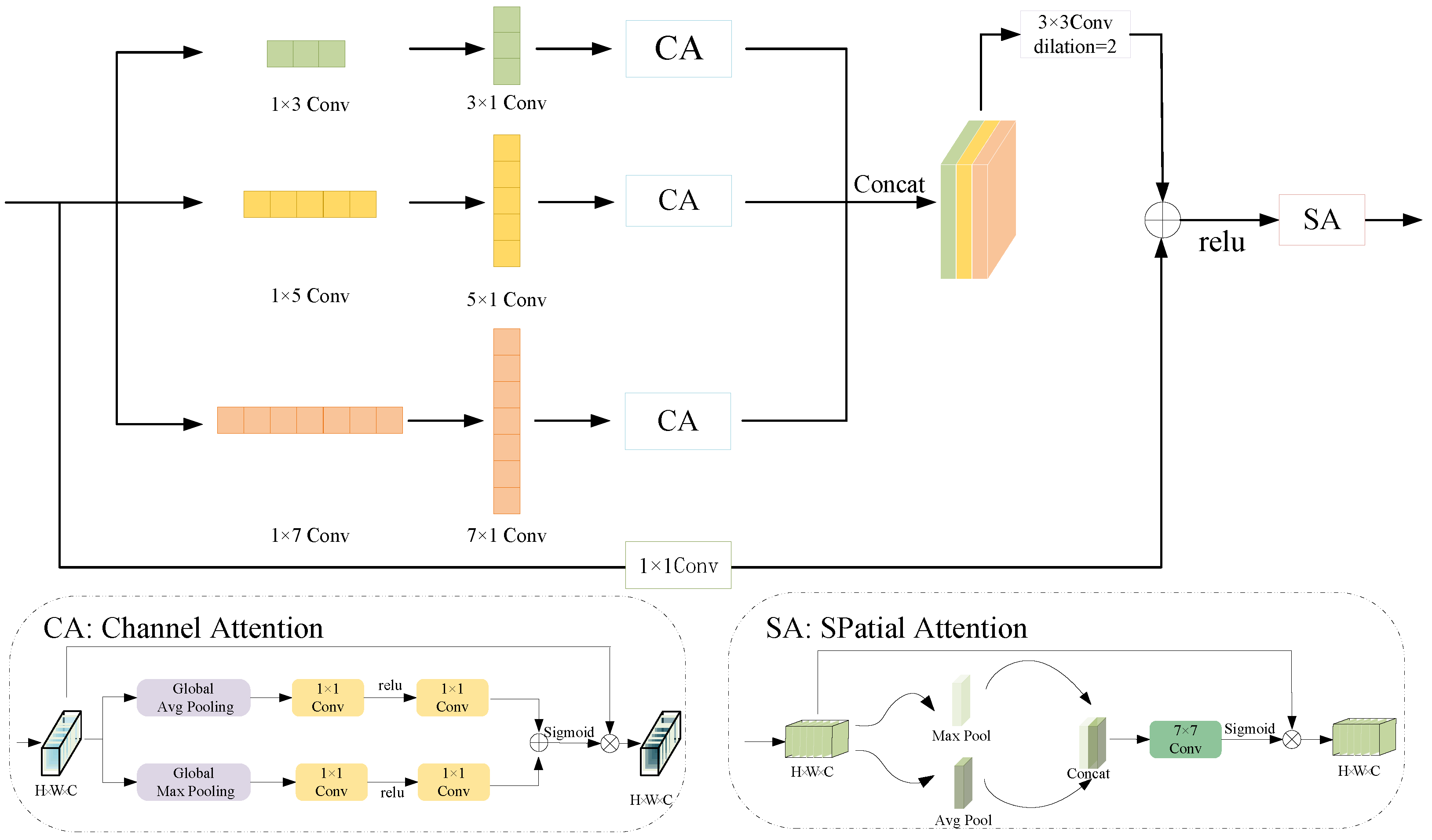

To enhance the low-level features, we introduced a Feature-Enhancement Module (FEM), as shown in Figure 3. This module strengthens spatial context, extracts multi-scale features, and highlights key elements. Consequently, it improves the model’s ability to detect target boundaries and manage targets at various scales, boosting overall performance. The features from all three branches are concatenated and subsequently processed using depthwise separable convolution (dilation = 2), which restores channel capacity while broadening the effective receptive area, thereby improving the model’s grasp of both local context and overall scene structure.

Initial input weights for the FEM are adjusted via a convolution. The output from the depthwise separable convolution is then added for feature fusion, followed by a ReLU activation function for nonlinear transformation and spatial attention. This allows the model to focus on specific spatial positions, improving the segmentation’s spatial accuracy.

The channel attention module leverages both global max pooling and global average pooling to extract high-level features, enabling the capture of richer and more diverse semantic representations. A pointwise (1 × 1) convolution is subsequently employed to reduce the number of channels to one-sixteenth of the original, serving as a channel-wise feature selector that highlights the relative importance of each dimension. This operation is formulated in Equations (18) and (19):

where

x denotes the input tensor.

and

perform maximum and average pooling across spatial dimensions, respectively.

applies a pointwise convolution for channel-wise transformation. After feature extraction, the ReLU activation function is applied to the feature map, helping suppress neuron activations without feedback, which enhances the model’s sparse representation capability, noise resistance, and generalization. The channel dimensions are then restored through another

convolution. The weight vector from the global average pooling branch is added to the result from the global maximum pooling. The Sigmoid function is applied to recalibrate the feature map, which is subsequently combined with the original channel attention through element-wise multiplication. This operation is described by formula (20):

where

denotes the channel attention output, ReLU is the ReLU activation function, and

refers to the Sigmoid activation function.

To enhance feature extraction, the spatial attention module integrates both maximum and average pooling. The resulting feature maps are then fused along the channel dimension through concatenation. After concatenation, a convolution with a

kernel reduces the number of channels from two to one. This large convolution kernel enables the extraction of a broader receptive field. The detailed computation process of the spatial attention module is presented in formula (21):

where

denotes the spatial attention output,

represents the Sigmoid activation function,

is a 2D convolution with a

kernel, and ⊕ indicates concatenation along the channel axis. MP and AP refer to maximum pooling and average pooling, respectively.
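The channel and spatial attention steps described above can be sketched together in PyTorch. This is a sketch under stated assumptions rather than the paper's code. The class names are hypothetical, the two pooled descriptors are assumed to share one pair of 1 × 1 convolutions, the 7 × 7 spatial kernel is an assumed value for the "large" kernel, and each attention map is assumed to rescale its input by element-wise multiplication.

```python
import torch
from torch import nn


class ChannelAttention(nn.Module):
    """Gate channels using globally max-pooled and average-pooled descriptors."""

    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        self.mlp = nn.Sequential(
            nn.Conv2d(channels, channels // reduction, kernel_size=1),  # C -> C/16
            nn.ReLU(),
            nn.Conv2d(channels // reduction, channels, kernel_size=1),  # C/16 -> C
        )

    def forward(self, x):
        max_desc = torch.amax(x, dim=(2, 3), keepdim=True)  # (B, C, 1, 1)
        avg_desc = torch.mean(x, dim=(2, 3), keepdim=True)  # (B, C, 1, 1)
        gate = torch.sigmoid(self.mlp(max_desc) + self.mlp(avg_desc))
        return x * gate


class SpatialAttention(nn.Module):
    """Gate spatial positions using channel-wise max and average maps."""

    def __init__(self, kernel_size: int = 7):  # assumed kernel size
        super().__init__()
        self.conv = nn.Conv2d(2, 1, kernel_size, padding=kernel_size // 2)

    def forward(self, x):
        max_map = torch.amax(x, dim=1, keepdim=True)  # (B, 1, H, W)
        avg_map = torch.mean(x, dim=1, keepdim=True)  # (B, 1, H, W)
        stacked = torch.cat([max_map, avg_map], dim=1)  # two channels -> one after conv
        gate = torch.sigmoid(self.conv(stacked))
        return x * gate


x = torch.randn(2, 64, 32, 32)
refined = SpatialAttention()(ChannelAttention(64)(x))
print(refined.shape)
```

Both gates lie between 0 and 1, so the modules can only attenuate features. They re-weight channels and positions without changing the tensor shape.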

2.3. Transformer Fusion Module (TFM)

When large areas of snow and cloud overlap in the 2D image, cloud shadows may be cast onto the snow layer, creating significant color differences, and surface elements that resemble both clouds and snow add further interference. As a result, the network attends to snow incorrectly, which leads to misclassification. To address this, a Transformer-driven fusion block is incorporated during decoding, as illustrated in Figure 2b. This module integrates the upsampled output from the decoder with multi-level feature data from the encoder branches. By leveraging diverse feature information, it strengthens the feature representation and enhances model performance. Additionally, it improves the network’s ability to resist interference, particularly in regions where snow is covered by cloud shadows.

In this module, the weights output by the Multi-Head Self-Attention (MHSA) are passed through a convolutional embedding layer and combined with the three original weights input to the TFM. This integration allows the model to extract diverse feature information across different levels, optimizing the use of semantic details. By merging low-level and high-level features, the model achieves more comprehensive feature representations. In the MLP section, we replace the standard linear layer in most Transformers with 2D and depthwise separable convolutions, creating a Convolutional Feedforward Perceptron (CFP). This convolutional method extracts local patterns and spatial context via a sliding window, improving the network’s capacity for fine-grained feature analysis. The use of depthwise separable convolution reduces the number of parameters, improving training efficiency compared to traditional fully connected layers.

The detailed calculation process of the TFM is outlined as follows:

where

,

, and

are the inputs to the TFM.

denotes a 2D convolution with a

kernel. MHSA stands for Multi-head Self-Attention, Norm refers to layer normalization, and CFP represents Convolutional Feedforward Perceptron.

The detailed computation process of the CFP is as follows:

where

,

, and

are obtained by reshaping the original tensor of size

.

R denotes the rearrangement operation, and

serves as a trainable scaling factor that controls the dot product magnitude between

and

before the softmax.

indicates a 2D convolution using a

kernel, while

refers to a 2D depthwise separable convolution with a

kernel.

is the GELU activation function, BN represents batch normalization, ⊙ stands for element-wise multiplication, and Drop refers to the Dropout function.

2.4. Strip Pooling Auxiliary Module (SPAM)

Precisely distinguishing clouds and snow in satellite imagery is a challenge due to their similar colors and shapes. Existing methods often struggle to define precise boundaries, especially after down-sampling and up-sampling operations, which can result in the loss of fine details. To address these issues, we introduce the Strip Pooling Auxiliary Module (SPAM) in the decoding stage, as shown in Figure 2c.

SPAM consists of two parallel strip average pooling branches that extract spatial information from the feature map. These branches use convolution kernels of and , respectively, to capture average values along the horizontal and vertical axes. This dual pooling mechanism enables the model to focus on both width and height dimensions, improving its ability to capture the shape and size of the target. The averaging process across both axes generates statistical features that better represent the spatial characteristics of the target, improving the smoothness and integrity of the feature map, and refining the segmentation of target boundaries.

After combining the outputs from both pooling branches, dropout with a probability of 0.1 is applied. This regularization technique helps prevent overfitting by randomly deactivating neurons during training, leading to more accurate segmentation results. The operations of SPAM are formally defined in formulas (33) and (34).

where

x and

y denote the input and output values of the module, respectively.

and

refer to average pooling layers with kernel sizes of

and

, respectively.

indicates a 2D convolution with a

kernel. Drop refers to the Dropout operation.
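Strip pooling as described above can be approximated with two adaptive average pooling layers that collapse one spatial axis each. The sketch below is a simplified illustration, not the SPAM module itself. The class name, the fusion by broadcast addition, and the single 1 × 1 convolution are assumptions, while the dropout probability of 0.1 follows the text.

```python
import torch
from torch import nn


class StripPooling(nn.Module):
    """Hypothetical strip-pooling block with one row-wise and one column-wise branch."""

    def __init__(self, channels: int, drop: float = 0.1):
        super().__init__()
        self.pool_rows = nn.AdaptiveAvgPool2d((None, 1))  # average over width  -> (B, C, H, 1)
        self.pool_cols = nn.AdaptiveAvgPool2d((1, None))  # average over height -> (B, C, 1, W)
        self.fuse = nn.Conv2d(channels, channels, kernel_size=1)
        self.drop = nn.Dropout(drop)  # active only in training mode

    def forward(self, x):
        # Broadcasting the two strips yields a full (B, C, H, W) map in which
        # each position combines its row average and its column average.
        strips = self.pool_rows(x) + self.pool_cols(x)
        return self.drop(self.fuse(strips))


spam = StripPooling(channels=32)
spam.eval()  # disables dropout for a deterministic forward pass
x = torch.randn(2, 32, 16, 24)
y = spam(x)
print(y.shape)
```

Because each strip spans a full row or column, the block aggregates long-range context along one axis while staying narrow along the other, which is the property strip pooling is usually chosen for.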

3. Experiments

3.1. Dataset Introduction

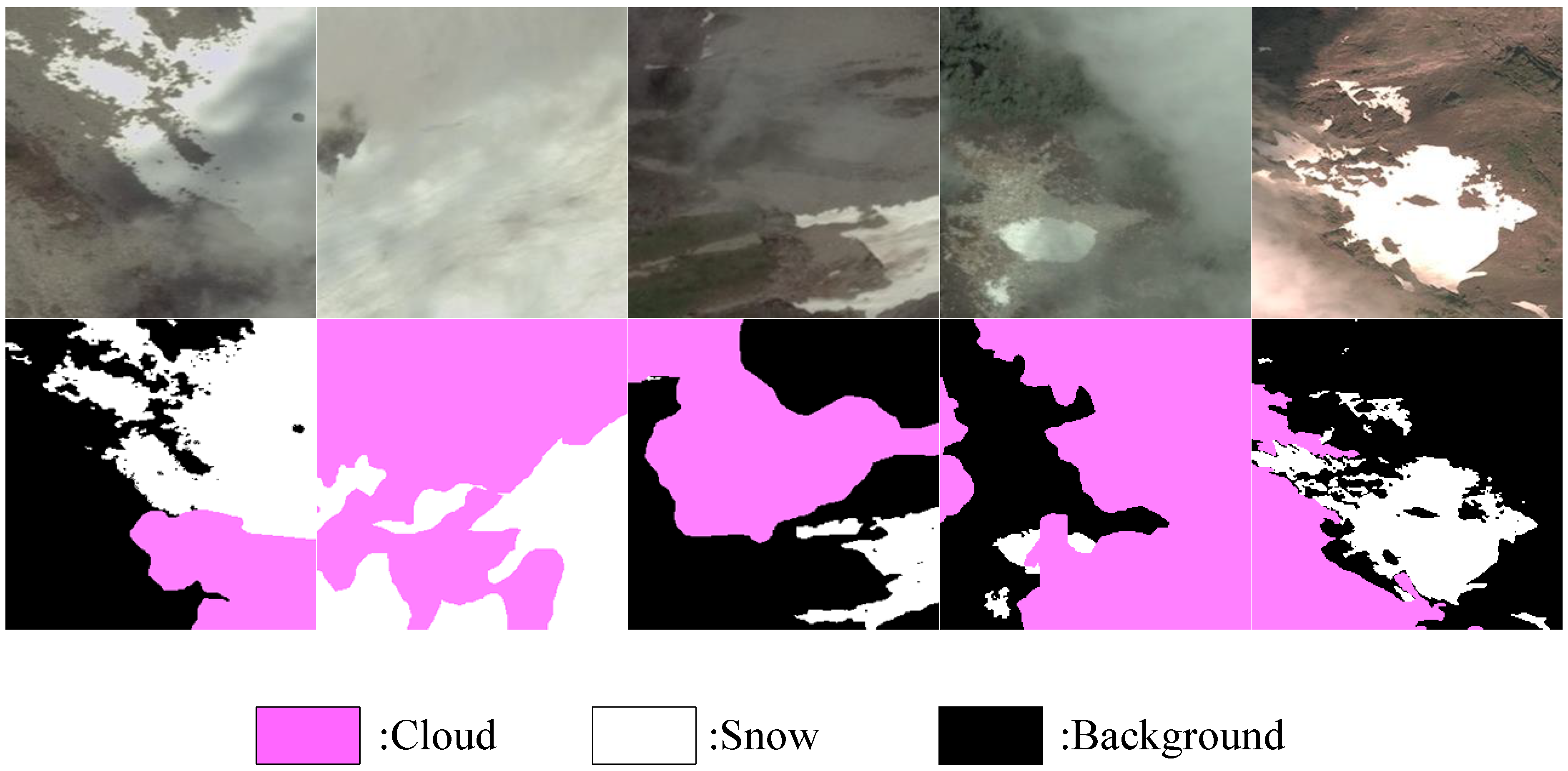

(1) The Cloud and Snow (CSWV) Dataset [43] is used to evaluate the generalization performance of the proposed network. It consists of 27 high-resolution WorldView2 images, collected between June 2014 and July 2016 in the Cordillera Mountains, North America. The dataset features diverse landscapes, including forests, grasslands, ridges, and deserts, with cloud types (cirrus, cumulus, altocumulus, stratus) and snow forms (permanent, stable, discontinuous). These variations in shape, size, and texture make the dataset both comprehensive and challenging.

Each image is partitioned into 256 × 256 pixel patches, resulting in 3200 samples, which are divided into training (80%) and validation (20%) sets. To address the common issue of limited data in deep learning, augmentation techniques, such as translation, flipping, and random rotation, are applied, expanding the dataset to 10,240 training and 2560 validation images.

Figure 4 displays sample images with cloud (pink), snow (white), and background (black) labels.

Regarding the satellite data used in this study, we focus on specific spectral bands of the Landsat-8 satellite. Specifically, the visible bands—such as the Blue (Band 2), Green (Band 3), and Red (Band 4) bands, along with the Near-Infrared (NIR, Band 5)—are primarily used for cloud and snow classification. These bands are crucial because the contrast between snow and clouds is most evident in the visible and near-infrared regions. The Blue and Red bands help distinguish clouds and snow from their surroundings, while the NIR band is particularly sensitive to snow, allowing for better separation of snow from other land cover types.

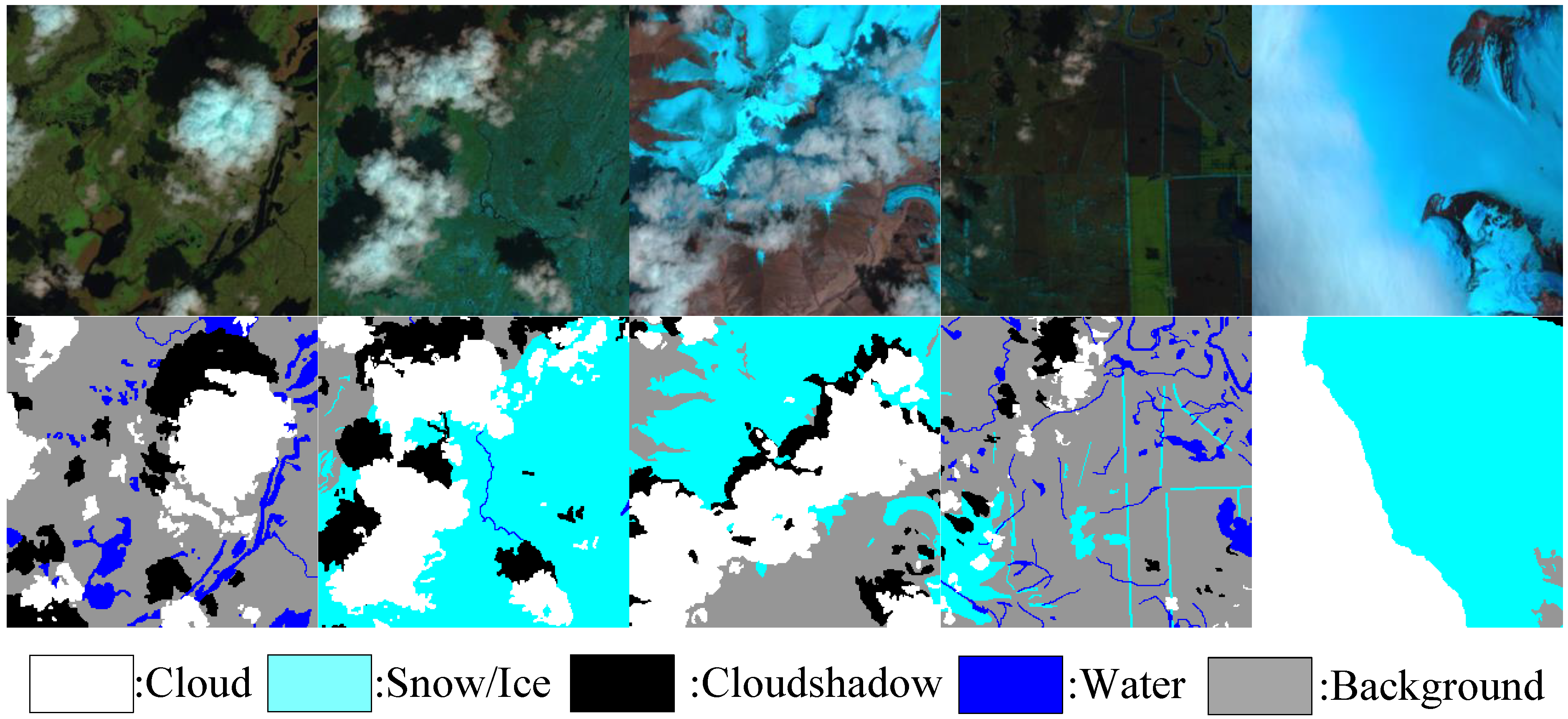

(2) The SPARCS Dataset [17] serves to evaluate the effectiveness of our method in multi-spectral image analysis. Developed by M. Joseph Hughes, it includes 80 Landsat-8 images sized 1000 × 1000 pixels, annotated with classes like clouds, cloud shadows, snow/ice, water, and background.

Due to GPU memory constraints, these images were cropped into smaller patches of 256 × 256 pixels, yielding 2000 samples. These were then divided into training and validation subsets with an 80:20 split. To enhance model generalization, data augmentation (incorporating translation, flipping, and rotation) expanded the training set to 6400 images and the validation set to 1600 images. Examples from the SPARCS dataset are presented in Figure 5, where cloud regions appear white, cloud shadows black, snow/ice light blue, water dark blue, and background gray.
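The sample counts reported for both datasets are consistent with an 80:20 split followed by a fourfold expansion through augmentation. The factor of four is inferred from the reported numbers and is not stated explicitly in the text. The helper name below is hypothetical, and the snippet only checks the arithmetic.

```python
def split_and_augment(total: int, train_fraction: float = 0.8, factor: int = 4):
    """Return (train, validation) counts after splitting and augmenting.

    `factor` is the assumed augmentation multiplier inferred from the paper's totals.
    """
    train = round(total * train_fraction)
    val = total - train
    return train * factor, val * factor


cswv = split_and_augment(3200)    # CSWV: 3200 patches before augmentation
sparcs = split_and_augment(2000)  # SPARCS: 2000 patches before augmentation
print(cswv, sparcs)
```

With these inputs the helper reproduces the reported 10,240 and 2560 images for CSWV and the 6400 and 1600 images for SPARCS.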

For the SPARCS dataset, we also use Landsat-8 spectral bands, specifically the visible bands (Blue, Green, Red) and the Near-Infrared (NIR) band. These bands are chosen because they effectively highlight the differences between snow and cloud regions, which are crucial for accurate classification in multi-spectral image analysis.

3.2. Experimental Details

We conducted experiments using PyTorch 2.2.2 with CUDA 12.1 support for GPU acceleration [44]. The learning rate followed the StepLR schedule, computed as $lr_N = lr_0 \cdot \gamma^{\lfloor N/s \rfloor}$, where $lr_0$ is the initial learning rate, $\gamma$ is the decay factor, $s$ is the step size in epochs, and $N$ is the current epoch. Initially set to 0.001, the learning rate decays by a factor of 0.98 every 3 epochs. We used the Adam optimizer [45], which is known for its stable and rapid convergence, with $\beta_1$ and $\beta_2$ set to 0.9 and 0.999, respectively. The experiments were performed on an NVIDIA GeForce RTX 3070 with 8 GB of memory, with a batch size of 4 due to GPU limitations. Training was carried out over 200 epochs. Performance on the CSWV and SPARCS datasets was assessed using key evaluation metrics: precision (P), recall (R), F1 score, pixel accuracy (PA), FWIoU, and MIoU. The corresponding formulas are listed as follows:

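$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F_1 = \frac{2 \cdot P \cdot R}{P + R}$$

$$PA = \frac{\sum_{i=0}^{k} p_{ii}}{\sum_{i=0}^{k}\sum_{j=0}^{k} p_{ij}}$$

$$MIoU = \frac{1}{k+1}\sum_{i=0}^{k} \frac{p_{ii}}{\sum_{j=0}^{k} p_{ij} + \sum_{j=0}^{k} p_{ji} - p_{ii}}$$

$$FWIoU = \frac{1}{\sum_{i=0}^{k}\sum_{j=0}^{k} p_{ij}} \sum_{i=0}^{k} \frac{p_{ii}\sum_{j=0}^{k} p_{ij}}{\sum_{j=0}^{k} p_{ij} + \sum_{j=0}^{k} p_{ji} - p_{ii}}$$

In these formulas, $TP$ represents the correctly predicted cloud (or snow) pixels, while $FP$ refers to pixels incorrectly predicted as cloud (or snow). $FN$ indicates cloud (or snow) pixels that were misclassified as another class. The number of categories, excluding the background, is denoted by $k$. For each category $i$, $p_{ii}$ denotes the true positives, while $p_{ij}$ represents pixels of category $i$ predicted as category $j$.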
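As an illustration of how the metrics listed above relate to one another, the following minimal sketch computes them from a confusion matrix in which entry `cm[i][j]` counts pixels of category i predicted as category j. The function name `segmentation_metrics`, the helper `_safe_div`, and the 3 × 3 example matrix are hypothetical and are not taken from the experiments; the per-class precision and recall follow the usual one-vs-rest convention, which is assumed here.

```python
def _safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def segmentation_metrics(cm: list[list[int]]) -> dict:
    """Compute P, R, F1 (per class) and PA, MIoU, FWIoU from a confusion matrix.

    cm[i][j] is the number of pixels of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    true_count = [sum(cm[i]) for i in range(n)]                       # sum_j p_ij
    pred_count = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # sum_j p_ji
    tp = [cm[i][i] for i in range(n)]                                 # p_ii

    precision = [_safe_div(tp[i], pred_count[i]) for i in range(n)]
    recall = [_safe_div(tp[i], true_count[i]) for i in range(n)]
    f1 = [_safe_div(2 * precision[i] * recall[i], precision[i] + recall[i])
          for i in range(n)]
    iou = [_safe_div(tp[i], true_count[i] + pred_count[i] - tp[i]) for i in range(n)]

    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "iou": iou,
        "pa": _safe_div(sum(tp), total),
        "miou": sum(iou) / n,
        # Each class IoU is weighted by the fraction of pixels truly in that class.
        "fwiou": sum(_safe_div(true_count[i], total) * iou[i] for i in range(n)),
    }


# Hypothetical 3-class example (rows: true class, columns: predicted class).
example_cm = [
    [50, 2, 3],
    [4, 30, 1],
    [1, 2, 7],
]
metrics = segmentation_metrics(example_cm)
print({k: v for k, v in metrics.items() if k in ("pa", "miou", "fwiou")})
```

Because FWIoU weights each class by its pixel frequency, it tends to sit closer to PA than MIoU does when a rare class is segmented poorly, as with the third class in this example.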

3.3. Ablation Experiment

We conducted ablation studies on the CSWV cloud and snow dataset to evaluate the contribution of each module. Initially, we used the ResNet18 convolutional branch as the backbone, applying upsampling at each layer before connecting them for output. Then, we progressively added the modules (FEM, TEM, DFEM, SPAM, TFM) to assess their individual and collective impact. As shown in Table 2, the performance of each module was evaluated using MIoU, and the results demonstrate clear improvements with the inclusion of each module. To better visualize the effects of each module, we performed a heat map visualization experiment, which is illustrated in Figure 6.

FEM Ablation: To achieve precise localization and segmentation of thin clouds and small scattered snow patches, we designed the Feature-Enhancement Module (FEM) to facilitate cross-level connections between the two encoder branches. This module enhances the exchange of information and feature fusion. As shown in Table 2, the inclusion of FEM increased the network’s MIoU to 86.33%, an improvement of 0.5 percentage points. The heat map visualization in Figure 6d demonstrates that FEM improves the network’s focus on cloud regions, enhances the detection of small, scattered targets, and reduces both missed and false detections.

TEM Ablation: Snow cover can interfere with cloud detection, reducing network attention to clouds and causing missegmentation. A single convolutional or transformer branch cannot fully extract the necessary features for accurate segmentation of both cloud and snow. To overcome this, we introduced the Transformer Encoder Module (TEM) as a parallel branch to the ResNet18 convolution branch, creating a dual-branch structure. This setup leverages the transformer’s ability to capture long-range dependencies and the convolution’s capability for extracting local details. The result is improved multi-scale feature extraction and better resistance to cloud-snow interference. As shown in Table 2, incorporating TEM increased the MIoU to 87.52%, an improvement of 1.19 percentage points. Figure 6e illustrates that the TEM module significantly refines the focus on cloud prediction, improving segmentation accuracy.

DFEM Ablation: The Deep Feature-Extraction Module (DFEM) was introduced at the base of the encoder’s convolution branch, which holds the largest number of channels and contains rich semantic and contextual information. DFEM compresses and restores channels via linear layers, concatenates the output feature maps from the two parallel branches along the channel dimension, and maximizes the extraction of semantic and contextual information. This process enhances edge and texture details, improving the accuracy of edge segmentation for detection targets. As indicated in Table 2, the addition of DFEM raised the MIoU to 87.64%, an increase of 0.12 percentage points. The heat map visualization in Figure 6f shows that the DFEM module helps the network focus on edge details, leading to more precise segmentation.

SPAM Ablation: The Strip Pooling Auxiliary Module (SPAM) was incorporated into the decoding stage to enhance the network’s ability to perceive the shape, size, and boundaries of detected targets. This helps achieve precise segmentation of complex cloud and snow junctions. As shown in Table 2, adding SPAM increased the MIoU to 87.80%, an improvement of 0.16 percentage points. Figure 6g highlights that SPAM enables the network to focus better on the cloud-snow junction, refining edge details and improving segmentation accuracy.

TFM Ablation: The Transformer Fusion Module (TFM) was designed in the decoding stage to fuse the features output by the upsampling decoder with the features extracted from the two encoder branches at different levels. This fusion process enhances feature mining, fully extracts spatial and semantic information, improves the network’s resistance to interference, and increases focus on the detection target. As seen in Table 2, the addition of TFM raised the MIoU to 89.23%, an increase of 1.43 percentage points. The heat map visualizations in Figure 6h show that TFM significantly improves the network’s attention to snow covered by cloud shadows in large-area snow images, reducing misjudgments and missed detections, while enhancing the robustness of the network.

3.4. Comparative Testing of Cloud and Snow (CSWV) Dataset

In this section, we compare our proposed network with several top-performing models, such as FCN, PAN, PSPNet, DeepLabV3Plus, BiSeNetV2, and others, to demonstrate its effectiveness. Each of these networks has distinct strengths. FCN uses a fully convolutional structure for pixel-wise classification. PSPNet captures multi-scale semantic information through pooling layers of different sizes, while DeepLabV3Plus incorporates an ASPP module with atrous convolutions at varying rates. BiSeNetV2, designed for real-time semantic segmentation, employs a dual-branch architecture to separately extract detailed and semantic features. Among the Transformer-based models, PVT integrates feature pyramids with Transformers to leverage the strengths of both, improving feature representation and small target detection. CvT enhances performance by introducing convolution operations within the Transformer framework. DBNet, a dual-branch model combining Transformer and convolutional networks, targets both semantic and spatial details to reduce false and missed detections in cloud detection and segmentation tasks.

Table 3 presents a comparison of the networks. For cloud detection, our network outperforms the others in both recall (R) and F1 score, achieving 91.64% and 92.19%, respectively. Similarly, our network attains a recall of 93.59% and an F1 score of 94.25% for snow detection, surpassing the other methods. While our network does not achieve the highest precision (P) in either cloud or snow detection, the gap compared to the top-performing method is minimal. Moreover, the proposed method achieves top performance in pixel accuracy (PA), frequency-weighted intersection over union (FWIoU), and mean intersection over union (MIoU), with values of 94.81%, 90.19%, and 89.23%, respectively. These results indicate that our model segments cloud and snow more accurately overall than the compared networks.

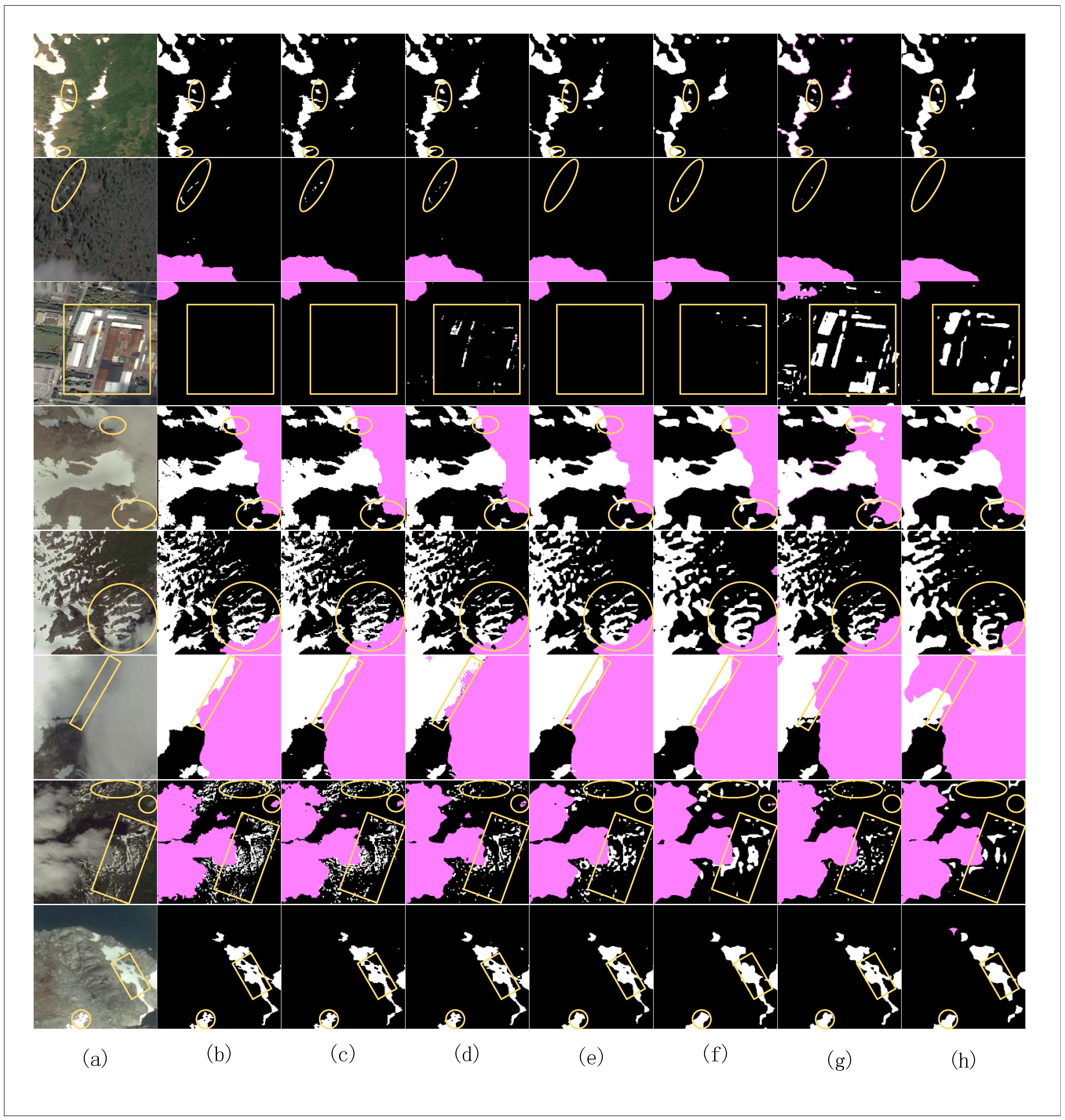

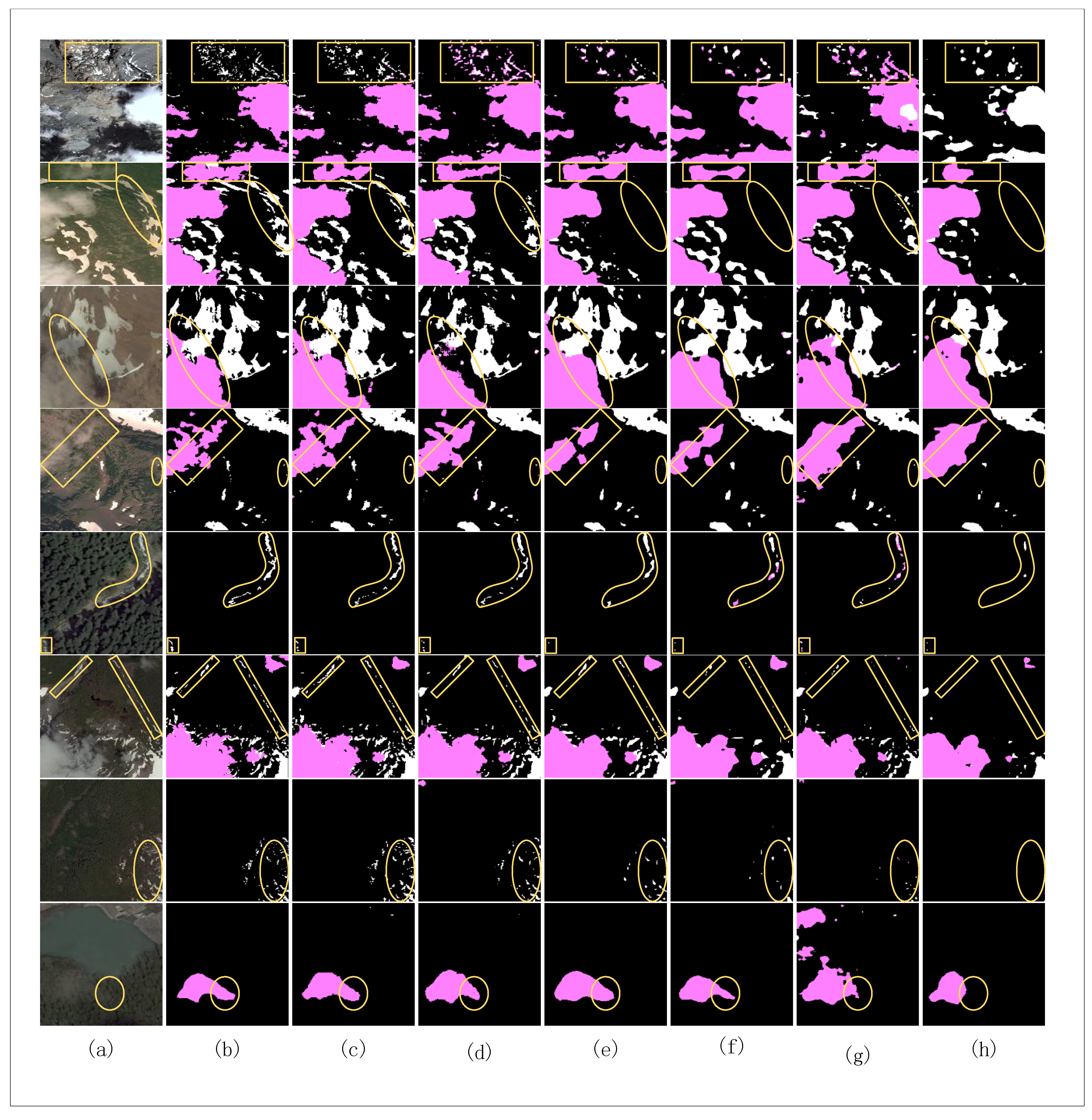

Figure 7 presents examples from various representative scenarios. In the selected examples, we mark the same location in each network’s segmentation result with a yellow box for easy comparison. In scenes with forest, grassland, and desert backgrounds, FCN8s, PAN, PSPNet, DeepLabV3Plus, and BiSeNetV2 miss or incorrectly detect scattered small clouds and snow. In contrast, our network accurately detects and segments nearly all clouds and snow in the image. In the third row, featuring urban backgrounds, our network’s TFM module, with its self-attention mechanism, effectively fuses and decodes feature information. It extracts semantic details from supplementary hierarchical context, minimizing the impact of interference factors and enhancing segmentation accuracy and robustness. PAN also performs well by constructing a feature pyramid that captures multi-scale semantic information, improving robustness to size and position changes. As a result, only our network and PAN avoid misclassifying the white roof in the image as snow, while the other networks make errors. In the fourth and fifth rows, our network shows higher segmentation accuracy, especially at the irregular junctions of cloud and snow. In the sixth row, when large areas of snow and clouds overlap and cloud shadows are cast onto the snow, our network more accurately segments the snow beneath the cloud shadow. Finally, in the seventh and eighth rows, our network, aided by the DFEM module, provides more detailed edge segmentation of clouds and snow compared to other networks. These results demonstrate the superior performance and robustness of our proposed network across various backgrounds.

In the cloud and snow segmentation task, we observed a significant presence of thin clouds and scattered small cloud clusters and snow patches in remote sensing images. To address this, we selected relevant images and segmentation results for comparative analysis, as shown in Figure 8. The results indicate that networks such as BiSeNetV2, DeepLabV3Plus, and PSPNet produce many missed and false detections on thin clouds and small snow patches. This is primarily due to the noise present on the cloud boundaries, which can confuse the model’s decision-making process. These methods fail to extract sufficient semantic and spatial information, leading to poor performance on small-scale, scattered clouds and snow. In contrast, our network demonstrates superior detection accuracy for these types of targets. As illustrated in the third, fourth, and eighth rows, our network performs better in both cloud-detection accuracy and edge detail segmentation. The FEM module, by weighting low-level features from the convolution branch and combining them with high-level features rich in semantic information from the TEM branch, guides the network with location information. This feature fusion allows our network to more accurately predict and segment thin clouds and scattered small snow patches.

3.5. Generalization Experiment of SPARCS Dataset

To further assess our method’s segmentation capabilities on multi-spectral satellite imagery, we performed generalization tests with the SPARCS dataset. The results are presented in Table 4. On the left side of the table, we can observe that our network achieves the highest F1 scores for the snow/ice, water, and land categories, reaching 94.07%, 91.22%, and 95.72%, respectively. Although our F1 score for cloud and cloud shadow detection is not the highest, it is very close to that of the best-performing network. On the right side of the table, our network outperforms the others in terms of PA, FWIoU, and MIoU, demonstrating its effectiveness and strong generalization ability.

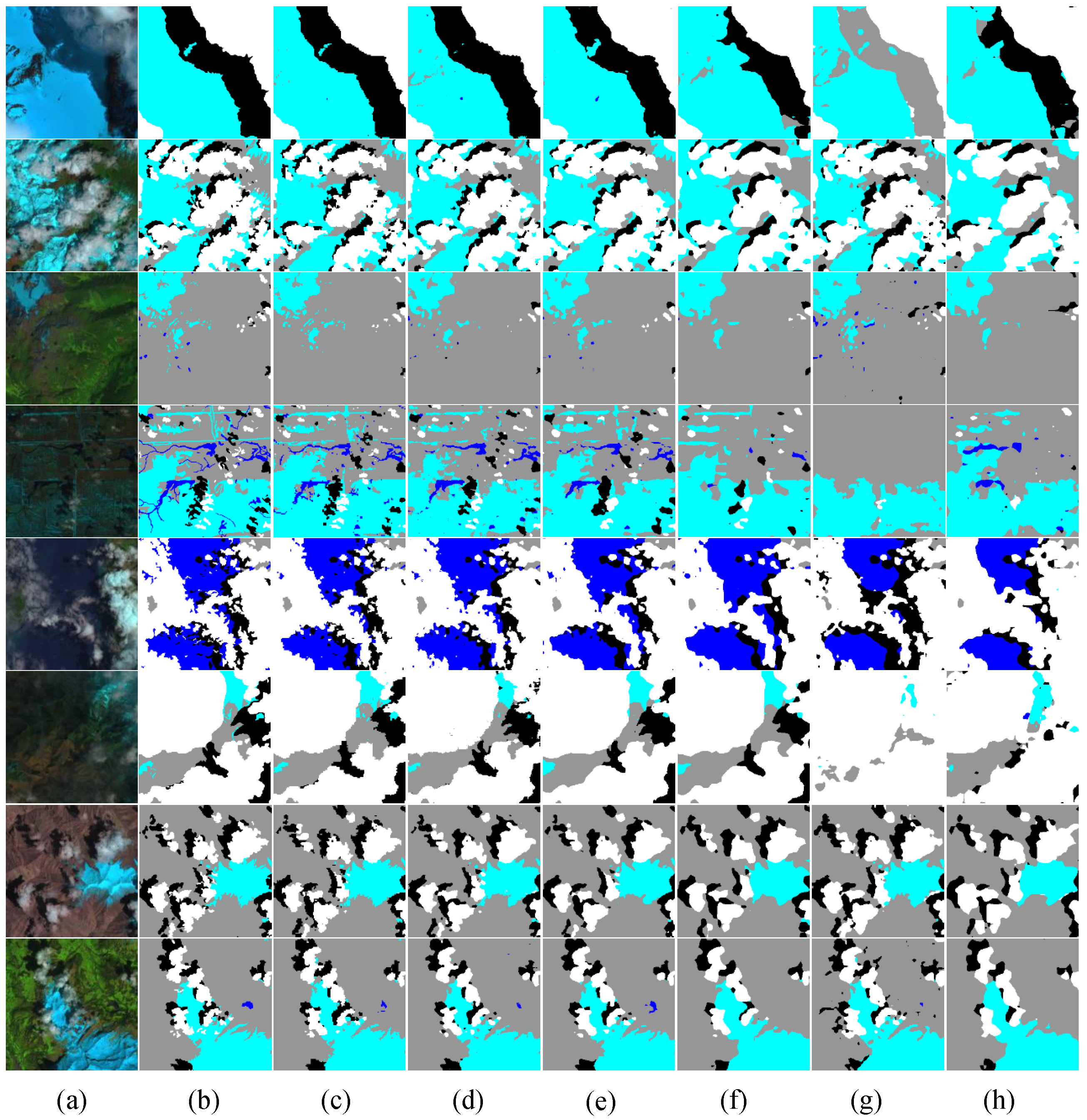

Figure 9 presents the segmentation performance of various networks on the SPARCS dataset across different scenarios. The third, fourth, and seventh rows focus on the segmentation of scattered small-scale clouds and snow, while the fifth and sixth rows highlight the segmentation of thin clouds. From the results, it is clear that BiSeNetV2 and PSPNet suffer from large-scale misdetections, insufficient small target detection, and rough edge segmentation. While FCN8s performs better overall, it still produces some false detections, particularly at the cloud-snow junction, where details are lacking.

Our network, however, benefits from a dual-branch design that combines convolution and transformer branches in the encoding stage. By leveraging the strengths of both, we enhance the feature-extraction process and improve decoding. This significantly boosts the network’s robustness and anti-interference capabilities, leading to more accurate segmentation. The FEM module, placed between the convolution and transformer branches, further strengthens cross-level information exchange, improving the network’s ability to detect thin clouds and small targets.

The first and second rows demonstrate the network’s ability to handle large snow and cloud areas, where cloud shadows are projected onto the snow layer in the remote sensing image. Our network effectively fuses multi-level feature information thanks to the TFM module, improving feature representation and segmentation accuracy. This results in better segmentation of clouds, snow, and cloud shadows, outperforming other networks. Additionally, the SPAM module extracts feature map averages in both horizontal and vertical directions, providing width and height information to enhance the network’s ability to perceive the shape, size, and boundaries of the target. This contributes to a more accurate segmentation of complex junctions between cloud, snow, and cloud shadow. Compared to other networks, our approach demonstrates superior segmentation accuracy in these intricate regions.
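To make the idea of horizontal and vertical averaging concrete, the following minimal sketch applies strip pooling to a single-channel feature map. It illustrates the general technique only and is not the SPAM implementation: the learned convolutions and gating that a full strip pooling block would normally apply are omitted, and the function name `strip_pool` and the toy input are hypothetical.

```python
def strip_pool(feature: list[list[float]]) -> list[list[float]]:
    """Combine row-wise and column-wise averages of an H x W feature map.

    Each output cell (i, j) is the mean of row i plus the mean of column j,
    so it carries context from the full width and full height of the map.
    """
    h, w = len(feature), len(feature[0])

    # One average per row: summarizes each row across the full width (H values).
    row_avg = [sum(row) / w for row in feature]

    # One average per column: summarizes each column across the full height (W values).
    col_avg = [sum(feature[i][j] for i in range(h)) / h for j in range(w)]

    # Broadcast both strips back to H x W and add them.
    return [[row_avg[i] + col_avg[j] for j in range(w)] for i in range(h)]


toy_map = [
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
]
pooled = strip_pool(toy_map)
print(pooled)
```

Because each strip spans an entire row or column, elongated structures such as cloud bands or snow boundaries influence every position along that strip, which is the intuition behind using this kind of pooling for shape and boundary perception.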

These results confirm that our network outperforms others in the five-category multispectral remote sensing image-segmentation task, showcasing its effectiveness in complex semantic segmentation scenarios.