Highlights

What are the main findings?

- This study generated a novel ship semantic segmentation dataset for high-resolution remote sensing images.

- To improve segmentation precision for small objects, a novel Mamba-style segmentation model was proposed.

What is the implication of the main finding?

- Experiments on large-scale real-world scenarios demonstrate the excellent performance of the proposed model.

- The generated dataset and advanced model have a significant impact on ocean monitoring and other intelligent interpretation fields.

Abstract

High-resolution remote sensing imagery is crucial for ship extraction in ocean-related applications. Existing object detection and semantic segmentation methods for ship extraction have limitations: the former cannot precisely obtain ship shapes, while the latter struggles with small targets and complex backgrounds. This study addresses these issues by constructing two datasets, DIOR_SHIP and LEVIR_SHIP, using the SAM model and morphological operations. A novel network, MambaSegNet, is then designed based on the advanced Mamba architecture. It is an encoder–decoder network with MambaLayer and ResMambaBlock modules for effective multi-scale feature processing. In comparative experiments with seven mainstream models, MambaSegNet achieved the best performance, with an IoU of 0.8208, an Accuracy of 0.9176, a Precision of 0.9276, a Recall of 0.9076, and an F1-score of 0.9176. This research offers a valuable dataset and a novel model for ship extraction, with potential for cross-domain application.

1. Introduction

Ship extraction technology plays a crucial role in marine monitoring, maritime traffic management, and marine resource development. With the rapid advancement of satellite remote sensing technology, unprecedented opportunities have emerged in the field of ocean surveillance. High-resolution remote sensing images provide abundant data resources for ship extraction. Currently, existing ship extraction methods can be broadly categorized into object detection and semantic segmentation approaches [1].

At present, remote sensing ship detection primarily focuses on object detection methods. Traditional object detection methods rely on bounding box regression to quickly locate the target. Although they perform well in terms of timeliness, these methods struggle to accurately capture the geometric boundaries of the target. Their primary focus is on target localization rather than precise geometric representation, which renders them inadequate in tasks where boundary accuracy is paramount.

In stark contrast, semantic segmentation offers a novel approach for ship detection. It provides pixel-level prediction capabilities, enabling a detailed depiction of the shape and structure of ships. This method not only locates the target but also comprehensively describes its shape and details, offering distinct advantages in fine-grained tasks. However, applying semantic segmentation to remote sensing ship detection still faces numerous challenges. The high variability of ship targets in appearance, orientation, and quantity, along with the interference from oceanic backgrounds such as waves, clouds, and low contrast, poses significant challenges to the model’s generalization ability, particularly in small target detection and handling complex backgrounds.

Additionally, the field of satellite remote sensing for ship segmentation faces a significant challenge: the lack of high-quality datasets specifically designed for ship segmentation. Table 1 summarizes some publicly available remote sensing image segmentation datasets, such as Dataset [2], HRSID [3], and MMShip [4]. The majority of existing datasets for ship targets in remote sensing are limited to the visible spectrum, with only a small number incorporating data from other spectral bands. Furthermore, these datasets often consist of original remote sensing images that have been cropped into smaller sizes, resulting in segmented ship images with incomplete boundaries or poor image clarity. Such limitations severely impact the accuracy of subsequent analysis and model training.

Table 1. Comparison of remote sensing ship datasets.

In light of the foregoing, this work endeavors to bridge the existing lacuna in the field of ship segmentation. Initially, we devised and constructed an automated sample generation method for ship segmentation, culminating in the creation of two distinct ship segmentation datasets—DIOR_SHIP and LEVIR_SHIP. In contrast to previous efforts, the datasets established herein not only preserve the original dimensions of the imagery but also meticulously safeguard the integrity and clarity of ship boundaries during the segmentation process. Such characteristics render these datasets invaluable for the training of ship detection models, as their high-caliber annotations and precise segmentation furnish a robust foundation for subsequent research, while simultaneously bolstering the performance of ship target detection systems.

Furthermore, building upon the advanced Mamba architecture, we have pioneered the development of a high-precision ship extraction model that is tailored specifically to enhance long-range dependency modeling and address complex scenarios inherent in maritime contexts. This approach marks a significant departure from existing methodologies, as no prior research has applied the Mamba model to ship extraction tasks. Our innovation lies not only in adapting a proven architecture from the medical domain, renowned for its success in handling intricate data structures, but also in refining it for the unique challenges posed by ship detection in maritime environments. In contrast to traditional methods, our model achieves remarkable advancements in both accuracy and inference efficiency. The ultimate aim is to realize the precise extraction of ship targets, thus laying a solid foundation for subsequent, detailed analyses. In doing so, we contribute to the practical advancement and theoretical development of ship extraction technologies, furthering their application in maritime surveillance and other related fields. The main contributions of this article are as follows.

(1) To address the issue of the lack of high-quality datasets specifically designed for ship segmentation, we propose a high-precision dataset construction system that integrates the SAM model with advanced morphological operations. Our method faithfully preserves the native dimensions of the original imagery during the segmentation process, while rigorously maintaining the integrity and clarity of ship boundaries. Drawing on the well-established techniques of edge-assisted methods for capturing intricate details, our dataset construction strategy merges the advantages of edge extraction with morphological correction. This innovative fusion effectively mitigates the common problem of information loss encountered in traditional pixel-level comparisons.

(2) To tackle the formidable challenge posed by the diverse target types, stochastic spatial distributions, and varying quantities of ship targets, which significantly affect model generalization, we have developed MambaSegNet—a high-resolution remote sensing image segmentation network based on the Mamba architecture. Within an encoder–decoder framework, this network incorporates advanced deep learning concepts such as branch fusion and skip connections, focusing on multi-scale feature extraction and integration. By employing residual connections to enable lossless information transfer between downsampling and upsampling stages, the network adeptly avoids the notorious issue of gradient vanishing. Striking a delicate balance between capturing long-range dependencies and local details, MambaSegNet excels in complex backgrounds and small target extraction, showcasing the remarkable ability of modern architectures to merge the strengths of CNNs and Transformers.

(3) At the core of our design lies the ResMambaBlock module, which injects new vitality into feature representation and spatial detail recovery through state-space construction. Inspired by the exemplary performance of self-attention and cross-attention mechanisms in encapsulating global information, this module’s state-space paradigm seamlessly integrates high-frequency local details with global semantics. Much like the bi-temporal image transformer network (BIT) that excels in modeling spatiotemporal dependencies, ResMambaBlock not only preserves delicate edge information but also enhances the discriminative capability for small targets, thereby providing a robust technical guarantee for precise segmentation in complex scenarios. The introduction of this module significantly elevates the network’s sensitivity to subtle variations and fortifies the overall expressiveness of features, ensuring that the model retains superior robustness when confronting diverse targets.

The rest of this article is organized as follows. Section 2 provides a brief review of related work. Section 3 describes the specific details of the proposed framework. Section 4 presents the evaluation results on public datasets and compares them with current state-of-the-art algorithms. Section 5 discusses the proposed approach, and finally, Section 6 concludes this article.

2. Related Work

2.1. Object Detection and Ship Orientation Handling

Object detection technology enables rapid identification by locating target regions, offering high time efficiency. Li et al. proposed an improved R3Det algorithm [5] that introduces rotation bounding boxes to address the low detection accuracy in optical remote sensing images, significantly enhancing the recognition ability in areas with complex ship orientations. Ali et al. improved YOLOv4 [6] by incorporating a multi-scale feature fusion module, which boosted the detection accuracy of small ship targets. Fu et al. introduced the Dual Attention Network (DANet) [7], which adjusts the feature weights within the network, effectively reducing the interference of noise in maritime environments. We have adopted this attention mechanism to enhance the model’s robustness in noisy conditions.

2.2. Semantic Segmentation for Ship Extraction

Semantic segmentation provides a novel approach for ship extraction by enabling pixel-level predictions and precise object boundary delineation. This method not only locates targets but also fully describes their shape and details, offering a unique advantage in fine-grained tasks. In recent years, significant progress has been made in applying semantic segmentation for ship extraction. Diakogiannis et al. proposed the ResUNet-a framework [8], which establishes conditional relationships between tasks, significantly improving segmentation performance, especially in complex marine backgrounds and scenarios with blurred target features. Liu et al. introduced TMFNet [9], a Transformer-based architecture that integrates multi-modal features and Digital Surface Model (DSM) height information, enhancing the model’s ability to understand different class features, particularly in multispectral remote sensing images. Additionally, Sun et al. proposed HRNet [10], a network that achieves hierarchical fusion of high-resolution features, demonstrating exceptional robustness in capturing ship boundary details. MiSSNet introduces a memory-inspired approach for class-incremental semantic segmentation [11], leveraging Local Semantic Distillation (LSD) and Class-Specific Regularization (CSR) to address semantic distribution shifts in the background class, while maintaining performance on old and new classes. Gao et al. introduced a novel framework for generalized few-shot semantic segmentation (GFSS) in remote sensing images, called FoMA [12], which combines foundation models with few-shot learning. It leverages the semantic knowledge from foundation models to improve segmentation accuracy for both base and novel classes by using strategies like Support Label Enrichment (SLE), Distillation of General Knowledge (DGK), and Voting Fusion of Experts (VFE). Chen et al. proposed a method for arbitrary-direction ship detection based on Kullback–Leibler divergence regression [13], addressing the challenges of dense ship arrangements, directional variations, and complex backgrounds. They also introduced P2RNet [14], which generates region proposals using key points and achieves efficient, real-time maritime object detection based on a lightweight YOLOv5 network. Furthermore, they proposed a small ship target detection method [15] for low-resolution remote sensing images based on diffusion models (DMs). This method effectively enhances the detection accuracy of small ships in low-resolution images by incorporating cognitive condition inputs, a low-level super-resolution (L2SR) module, and a spatial refinement module (SRM). We have adopted this method to improve the model’s ability to capture ship boundaries in low-resolution images.

2.3. Mamba Long-Range Dependency Modeling

In recent years, the Mamba architecture [16] has gained widespread attention for its high precision and powerful long-range dependency modeling capabilities (Table 2). Hatamizadeh et al. proposed the Mamba-Transformer backbone [17], which combines convolutional and Transformer features to overcome the performance degradation of traditional methods in complex backgrounds. Ma et al. developed U-Mamba [18], a universal network for biomedical image segmentation that introduces long-range dependency modeling to advance biomedical image analysis. Liu et al. proposed VMamba [19], which utilizes State Space Models (SSM) and two-dimensional selective scanning (SS2D) to bridge the gap between one-dimensional sequence data processing and the non-sequential two-dimensional structure of visual data, achieving efficient computation with linear time complexity. Wang et al. combined the advantages of U-Net and Mamba to propose Mamba-UNet [20], which has shown promising potential in medical image segmentation, particularly in capturing precise boundaries and long-range dependencies. Zhang et al. introduced the CDMamba backbone [21], which synergistically integrates convolutional layers with the Mamba architecture to effectively capture both local details and global context for remote sensing change detection. In addition, they proposed an Adaptive Global-Local Guided Fusion block to further enhance the interaction of bi-temporal features by dynamically fusing temporal features with guidance from both global and local cues. Zhao et al. proposed the RS-Mamba (RSM) backbone [22], which integrates a state space model with an omnidirectional selective scan module to achieve efficient global context modeling in very-high-resolution remote sensing images with linear complexity. Ma et al. introduced RS3Mamba [23], a novel dual-branch network that integrates Visual State Space (VSS) blocks for global information enhancement in remote sensing image semantic segmentation, effectively overcoming the limitations of CNNs and Transformers. It also proposes the Collaborative Completion Module (CCM) to fuse features from both branches, improving the representation learning of remote sensing images. We have drawn inspiration from this design, creatively applying the Mamba architecture to remote sensing ship processing by combining the local detail capturing ability of U-Net with the long-range modeling advantages of Mamba, thereby enhancing the precision of ship extraction tasks.

Table 2. Quantitative comparison.

3. Data and Methods

3.1. Data

Through the following workflow, we successfully constructed a high-quality ship segmentation dataset, laying a solid foundation for subsequent ship extraction model training. The entire process, from data filtering and SAM-based segmentation to morphological correction, is illustrated in Figure 1.

Figure 1. The generation flowchart of the proposed ship segmentation dataset.

In this study, we utilized two publicly available remote sensing datasets—DIOR [24] and LEVIR [25]. These datasets contain a wide range of object categories, including airplanes, bridges, chimneys, dams, ports, ships, and storage tanks. To focus specifically on the task of ship extraction, we performed a rigorous filtering process on the images and annotations. First, images and labels unrelated to ships were removed according to the dataset category tags. Subsequently, each image was manually inspected to ensure both sufficient image quality and the clarity of the ship targets. This procedure ensured that the curated dataset only contained accurate and high-quality ship images, thereby enhancing the precision and robustness of the subsequent tasks.

Next, we applied the SAM model [26,27] to segment the filtered images. The input to SAM consisted of the image and the corresponding label coordinates. To generate these labels, we unified the original annotations provided in the public datasets and manually corrected cases where the segmentation results were unsatisfactory. After balancing accuracy, memory requirements, and segmentation quality, we selected the ViT-L variant of the encoder for our experiments. SAM leveraged the input coordinates to identify and localize the ship targets within the image and then generated masks to extract them. Although this process effectively captured the ship targets, the resulting segmentation masks still exhibited potential defects or noise, which could adversely affect subsequent model training. To address this issue, we incorporated morphological post-processing [28] to refine the masks and further improve data quality.

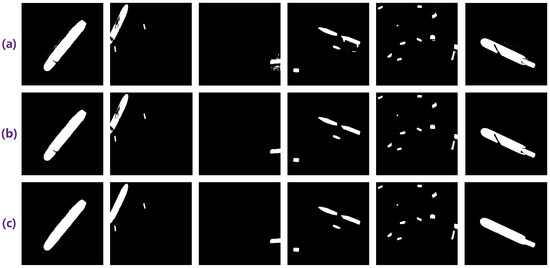

During the morphological correction stage, an opening operation was first applied to suppress isolated bright noise, followed by a closing operation to fill small holes in the masks, thereby improving the overall consistency and completeness of the segmentation results. Formally, the opening operation $A \circ S = (A \ominus S) \oplus S$, defined as erosion followed by dilation of the mask $A$ with a structuring element $S$, removes small bright spots and fragmented noise while preserving the primary structure of the target. Conversely, the closing operation $A \bullet S = (A \oplus S) \ominus S$, defined as dilation followed by erosion, bridges small boundary gaps and fills local voids, resulting in smoother and more continuous object contours. The combination of opening and closing thus suppresses noise-induced artifacts and repairs segmentation discontinuities, yielding more stable ship masks. Given the heterogeneity of ship images, however, parameter settings for these operations varied across samples. Therefore, each corrected mask was manually reviewed and, where necessary, adjusted to ensure that the final dataset met the required quality standards. A comparison of the masks before and after morphological correction is shown in Figure 2.

Figure 2. Comparison of the results before and after morphological correction, where (a) represents the image before processing, (b) represents the image after the opening operation, and (c) represents the image after the subsequent closing operation.
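The opening-then-closing refinement described above can be sketched in a few lines. This is a minimal illustration in pure Python, not the authors' pipeline: it assumes a binary mask stored as a list of lists, a square structuring element, and a border policy that simply ignores out-of-image pixels. The function names (`erode`, `dilate`, `opening`, `closing`, `refine_mask`) are hypothetical.

```python
def _apply(mask, size, combine):
    """Slide a size x size square window over a binary mask (list of lists of 0/1)."""
    h, w = len(mask), len(mask[0])
    r = size // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Assumption: pixels outside the image are ignored rather than padded.
            window = [
                mask[j][i]
                for j in range(max(0, y - r), min(h, y + r + 1))
                for i in range(max(0, x - r), min(w, x + r + 1))
            ]
            out[y][x] = combine(window)
    return out


def erode(mask, size=3):
    # A pixel survives only if every pixel under the window is foreground.
    return _apply(mask, size, min)


def dilate(mask, size=3):
    # A pixel becomes foreground if any pixel under the window is foreground.
    return _apply(mask, size, max)


def opening(mask, size=3):
    # Erosion followed by dilation: removes small isolated bright spots.
    return dilate(erode(mask, size), size)


def closing(mask, size=3):
    # Dilation followed by erosion: fills small holes and bridges narrow gaps.
    return erode(dilate(mask, size), size)


def refine_mask(mask, size=3):
    # Opening first, then closing, in the order described in the text.
    return closing(opening(mask, size), size)
```

The window size plays the role of the structuring element and would need tuning per image, which matches the observation that parameter settings varied across samples.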

We classified the dataset based on the perimeter of the ships, where those under 450 pixels are classified as small ships, those between 450 and 900 pixels as medium ships, and those over 900 pixels as large ships. Table 3 shows the number of each type of ship in the DIOR_SHIP and LEVIR_SHIP datasets.

Table 3. The number of each type of ship.
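The perimeter-based size classes can be expressed as a small helper. This is only a sketch: the function name `classify_ship` is hypothetical, and treating perimeters of exactly 450 and 900 pixels as medium is an assumption, since the text does not say how the boundary values are handled.

```python
def classify_ship(perimeter_px):
    """Map a ship's perimeter in pixels to a size class."""
    if perimeter_px < 0:
        raise ValueError("perimeter must be non-negative")
    if perimeter_px < 450:
        return "small"
    # Assumption: the boundary values 450 and 900 are counted as medium.
    if perimeter_px <= 900:
        return "medium"
    return "large"
```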

3.2. MambaSegNet

3.2.1. Framework Overview

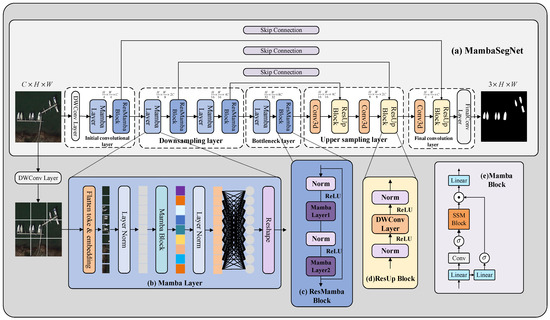

MambaSegNet is a novel lightweight encoder–decoder network designed for efficient and precise image segmentation tasks, particularly suited for remote sensing image segmentation. The model integrates two core modules: MambaLayer and ResMambaBlock. These modules are built upon the State Space Model (SSM) [29,30,31], which effectively enhances feature representation capabilities and spatial detail recovery.

The input to the model is a three-channel color image of size $H \times W \times 3$. The image first passes through a depthwise convolutional layer and is then partitioned into discrete patches (tokens), which are flattened into a one-dimensional sequence. A linear embedding layer then projects the features to the embedding dimension used by the rest of the network. As the sequence passes through the network, hierarchical features are built up progressively by multiple ResMamba Blocks and token merging layers.

These merging layers act as downsampling modules, while the Mamba Layers and ResMamba Blocks enrich the representation by expanding the feature dimensions. The inverse process, upsampling, relies on Conv3d operations and ResUp Blocks to restore the spatial resolution. At the core of the system is the ResMamba Block, designed to extract expressive feature representations efficiently, thereby improving the model’s ability to discern intricate patterns and detect small targets in complex scenes. The encoder produces outputs at four stages of progressively lower resolution.

Mirroring the encoder in structure but reversing its direction, the decoder integrates multiple Mamba Layers and ResMamba Blocks, along with token expansion layers, to reconstruct feature maps that match the spatial dimensions of the input. Skip connections restore the spatial detail lost during downsampling, combining the semantic richness of deeper layers with the fine details of earlier representations. This design not only improves computational efficiency but also mitigates the gradient vanishing problem that often affects conventional deep networks. It is particularly effective in segmentation tasks that involve the complex geometry of remote sensing imagery. The Mamba Layer, the ResMamba Block, the encoder’s merging strategy, and the decoder’s expansion scheme are described in detail in the following subsections. The overall architecture is illustrated in Figure 3.

Figure 3. The model architecture of the proposed MambaSegNet, where (a) depicts the MambaSegNet network architecture; (b) illustrates the Mamba Layer module; (c) shows the ResMamba Block; (d) presents the ResUp Block; (e) highlights the Mamba module within the Mamba Layer.

3.2.2. Convolutional Layers

The convolutional design in MambaSegNet comprises two principal components: the initial convolutional layer and the final convolutional layer. The initial stage employs depthwise convolution [32] enabling each input channel to undergo convolution independently. Such an architectural choice profoundly reduces the parameter count and markedly enhances computational efficiency. In contrast, the final convolutional layer performs a mapping of high-dimensional feature representations onto the desired number of output channels, thereby producing the segmentation result. This configuration demonstrates remarkable adaptability. It is not only well-suited for binary classification tasks but also robust in addressing multi-class segmentation challenges. Its versatility empowers the model to interpret complex scenes and heterogeneous object types characteristic of remote sensing imagery with both precision and nuance.
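To make the parameter saving of depthwise convolution concrete, the following sketch counts trainable parameters for a standard convolution and for a depthwise convolution with the same kernel size. The function names and the 64-channel example are illustrative assumptions, not values taken from MambaSegNet.

```python
def conv_params(c_in, c_out, k, bias=True):
    """Parameters of a standard k x k convolution: every output channel sees every input channel."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def depthwise_conv_params(channels, k, bias=True):
    """Parameters of a depthwise k x k convolution: one k x k filter per channel."""
    return channels * k * k + (channels if bias else 0)


if __name__ == "__main__":
    # Hypothetical layer: 64 channels in and out, 3 x 3 kernel.
    standard = conv_params(64, 64, 3)
    depthwise = depthwise_conv_params(64, 3)
    print(standard, depthwise, round(standard / depthwise, 1))
```

For this hypothetical layer the standard convolution has 36,928 parameters and the depthwise one has 640. A depthwise layer does not mix channels, so in practice it is often paired with a pointwise (1 × 1) convolution; whether MambaSegNet does so is not stated here.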

3.2.3. Mamba Layer and ResMamba Block

The Mamba Layer begins by flattening and embedding the image, partitioning it into several tokens. These tokens are then mapped from their original high-dimensional space to another feature space via embedding and the Mamba module (Figure 3e). At the heart of MambaSegNet lies the ResMamba Block, a core module built upon the visual Mamba module. Within this block, input features undergo an initial linear embedding before being divided into two processing paths. The first path includes feature normalization, depthwise convolution, and ReLU activation [33,34], followed by further convolution operations and feature extraction through the Mamba Layer module. Finally, the output of this path undergoes layer normalization and is merged with the features from the second path.

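The model can be formulated as linear ordinary differential equations (ODEs), as in Equation (1):

$$h'(t) = A\,h(t) + B\,x(t), \qquad y(t) = C\,h(t) \tag{1}$$

The discrete version of this linear model can be obtained by a zero-order hold given a timescale parameter $\Delta$:

$$h_t = \bar{A}\,h_{t-1} + \bar{B}\,x_t, \qquad y_t = C\,h_t$$

where $\bar{A} = \exp(\Delta A)$ and $\bar{B} = (\Delta A)^{-1}\left(\exp(\Delta A) - I\right)\Delta B$. The approximation of $\bar{B}$ is refined using the first-order Taylor series, $\bar{B} \approx \Delta B$.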
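The zero-order-hold discretization and the resulting recurrence can be illustrated with a scalar state. This is a simplified sketch under stated assumptions: the state, input, and parameters are scalars (a diagonal state matrix reduces to this case per element), the parameters are fixed rather than input-dependent as in the selective Mamba module, and all function names are hypothetical.

```python
import math


def discretize_zoh(a, b, delta):
    """Exact zero-order-hold discretization of h'(t) = a*h(t) + b*x(t) for scalars."""
    a_bar = math.exp(delta * a)
    if a == 0.0:
        # Limit of (exp(delta*a) - 1) / a * b as a -> 0.
        b_bar = delta * b
    else:
        # Scalar form of (delta*a)^-1 * (exp(delta*a) - 1) * delta*b.
        b_bar = (a_bar - 1.0) / a * b
    return a_bar, b_bar


def discretize_taylor(a, b, delta):
    """Same a_bar, but b_bar uses the first-order Taylor approximation delta*b."""
    return math.exp(delta * a), delta * b


def ssm_scan(xs, a_bar, b_bar, c):
    """Run the discrete recurrence h_t = a_bar*h_{t-1} + b_bar*x_t, y_t = c*h_t."""
    h = 0.0
    ys = []
    for x in xs:
        h = a_bar * h + b_bar * x
        ys.append(c * h)
    return ys
```

For a small step size the two discretizations of the input term nearly coincide, which is why the first-order form is commonly used; the recurrence itself costs one update per token, so the scan is linear in sequence length.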

In contrast to conventional visual Transformer architectures, the ResMamba Block eliminates the multi-head attention mechanism and simplifies the structure by omitting the multi-layer perceptron (MLP) stage. This streamlined design allows for the stacking of additional modules within the same computational budget, thereby enhancing both computational efficiency and feature representation capacity. Moreover, the incorporation of the Mamba Layer enables the network to effectively learn complex image features, demonstrating marked advantages in processing small objects and handling intricate scenes, especially within remote sensing image analysis.

3.2.4. Encoder

In the encoder, after downsampling, the tokenized input features pass through two consecutive ResMamba Block modules. These blocks learn feature representations while preserving the feature dimensions and resolution. The downsampling process begins by partitioning the input into four quadrants (1/4 segments), which are then merged. Following this, layer normalization is applied to standardize the dimensions, halving the token count. After each downsampling step, convolution operations are employed to merge the tokens, reducing the spatial resolution of the feature map while simultaneously doubling the feature dimensions. This stepwise downsampling process enables the encoder to iteratively extract hierarchical features, ultimately producing the deepest feature map rich with semantic information.
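The spatial regrouping behind token merging can be sketched as follows. This is an assumption-laden illustration in the style of 2 × 2 patch merging, not the paper's exact layer: it concatenates the channels of each 2 × 2 neighborhood, which halves height and width and yields four times the channels. The learned convolution or projection that would then reduce the channels to twice the input width is deliberately left out, and `merge_tokens_2x2` is a hypothetical name.

```python
def merge_tokens_2x2(feature_map):
    """Merge each 2 x 2 neighborhood of an H x W x C map into one token with 4C channels.

    feature_map is a nested list indexed as [row][col][channel]; H and W must be even.
    """
    h, w = len(feature_map), len(feature_map[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    merged = []
    for y in range(0, h, 2):
        row = []
        for x in range(0, w, 2):
            # Concatenate channels in the order: top-left, top-right, bottom-left, bottom-right.
            row.append(
                feature_map[y][x]
                + feature_map[y][x + 1]
                + feature_map[y + 1][x]
                + feature_map[y + 1][x + 1]
            )
        merged.append(row)
    return merged
```

Applied to a 4 × 4 map with 2 channels, the function returns a 2 × 2 map with 8 channels per token.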

3.2.5. Decoder

The decoder is responsible for progressively restoring the spatial resolution of the image through upsampling [35,36] and convolution operations. Similarly to the encoder, the decoder employs consecutive ResUp Block modules to incrementally recover the resolution of the feature maps.

Additionally, skip connections are utilized to merge the shallow features extracted by the encoder with the deeper features reconstructed by the decoder. This architectural choice preserves more spatial information, facilitating the recovery of finer resolution and details. In this context, skip connections play a pivotal role within the decoder, effectively integrating features from different levels of the encoder, enabling the decoder to recover a richer set of spatial details.

3.2.6. Bottleneck and Skip Connections

The bottleneck of MambaSegNet consists of two ResMamba Block modules. Skip connections serve as a bridge between the encoder and decoder, fusing the shallow features extracted by the encoder with the deeper features reconstructed by the decoder. This helps retain spatial information and improves the model’s ability to recover fine details. Skip connections also alleviate the gradient vanishing problem commonly encountered in deep networks. The benefit grows with network depth, where such connections notably improve both training stability and convergence speed.

In deep neural networks, two primary issues often emerge as the network deepens:

- Degradation problem: As the network depth increases, training errors may no longer decrease, or even increase, leading to saturation or decline in accuracy.

- Gradient vanishing/explosion: During backpropagation, when gradients are multiplied across layers, they may become exceedingly small (vanishing) or excessively large (exploding).

To address these challenges, residual modules were introduced in [37]. These modules support the construction of deep network architectures while resolving issues of accuracy degradation and gradient abnormalities. Additionally, residual modules offer advantages such as reduced computational complexity and fast processing speed. Features are learned through convolutional blocks, with convolutional kernels performing dimensionality reduction and expansion, and the original feature matrix is then added to the learned feature matrix to generate the final feature representation. This concept, known as residual learning, allows the model to learn new features on top of the original ones, ultimately improving overall performance.

The mathematical representation of a residual unit is as follows:

$$y = \sigma\left(F(x, W) + x\right)$$

where $y$ and $x$ represent the output and input of the residual unit, respectively. $F$ denotes the residual function, $W$ represents the weights associated with the residual function, and $\sigma$ is the ReLU activation function.
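A residual unit of this form, in which the input is added to the output of the residual function before a ReLU, can be sketched on plain vectors. The sketch assumes the residual function preserves the vector length; `residual_unit` and `relu` are hypothetical names, and the residual function is passed in as an ordinary Python callable rather than a trained convolutional block.

```python
def relu(values):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, v) for v in values]


def residual_unit(x, residual_fn):
    """Compute relu(residual_fn(x) + x) for a vector x."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        # The identity shortcut requires matching shapes.
        raise ValueError("residual function must preserve the input length")
    return relu([f + xi for f, xi in zip(fx, x)])
```

If the residual function outputs zeros, the unit reduces to a ReLU of its input, which is the property that lets very deep stacks fall back to near-identity mappings.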

3.3. Loss Function

The loss function plays a critical role in training deep learning models. By measuring the difference between predicted results and ground truth labels, it guides model optimization, thereby ensuring that predictions approach true values and ultimately improving the model’s performance. In image segmentation tasks, commonly used loss functions include Cross-Entropy Loss and Intersection over Union (IoU) Loss.

Cross-entropy loss is widely used in classification tasks, aiming to minimize the difference between predicted and actual class distributions. It is particularly effective in pixel-level classification problems as it quantifies the discrepancy between predicted and true class probabilities. On the other hand, IoU Loss directly optimizes the overlap between predicted and ground truth regions. This makes IoU Loss more suitable for image segmentation tasks, especially in scenarios where there is a significant imbalance between target regions and the background, such as when ships occupy a small area in remote sensing images. Combining Cross-Entropy Loss [38] with IoU Loss effectively balances classification accuracy and region overlap, resulting in more precise segmentation outcomes.

The formula for Cross-Entropy Loss is as follows:

$$L_{CE} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right]$$

where $y_i$ represents the ground truth label (0 or 1), $p_i$ is the predicted probability, and $N$ is the total number of pixels.

The formula for IoU Loss is:

$$L_{IoU} = 1 - \frac{|P \cap G|}{|P \cup G|}$$

where $P$ denotes the predicted region and $G$ represents the ground truth region.

The combined loss function is formulated as:

$$L = \lambda_1 L_{CE} + \lambda_2 L_{IoU}$$

where $\lambda_1$ and $\lambda_2$ control the weights of the Cross-Entropy Loss and IoU Loss, respectively. In addition, $\lambda_2$ can be represented as $1 - \lambda_1$. This combination strategy balances the effects of both losses, enabling the model to achieve precise classification while effectively capturing target regions during training. In this article, $\lambda_1$ is set to 0.5.
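A weighted sum of Cross-Entropy Loss and IoU Loss can be sketched for a flat list of per-pixel probabilities. Several choices here are assumptions rather than details from the article: the IoU term uses a soft (probability-weighted) intersection and union so that it stays differentiable, probabilities are clipped by a small `eps` to avoid `log(0)`, the two weights are assumed to sum to one, and the function names are hypothetical.

```python
import math


def cross_entropy_loss(probs, labels, eps=1e-7):
    """Mean binary cross-entropy over pixels; probs in [0, 1], labels in {0, 1}."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to keep the logarithms finite
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(probs)


def soft_iou_loss(probs, labels, eps=1e-7):
    """1 - IoU, with intersection and union computed from probabilities (assumed relaxation)."""
    inter = sum(p * y for p, y in zip(probs, labels))
    union = sum(probs) + sum(labels) - inter
    return 1.0 - (inter + eps) / (union + eps)


def combined_loss(probs, labels, ce_weight=0.5):
    """Weighted sum of the two losses; the IoU weight is assumed to be 1 - ce_weight."""
    return (
        ce_weight * cross_entropy_loss(probs, labels)
        + (1.0 - ce_weight) * soft_iou_loss(probs, labels)
    )
```

With `ce_weight=0.5` both terms contribute equally, which corresponds to the weight of 0.5 reported in the text.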

3.4. Evaluation Metrics

Evaluation metrics are essential standards for assessing the performance of image segmentation models on test datasets, particularly in classification tasks. Commonly used metrics include theoretical FLOPs (Theo FLOPs), parameter count, inference speed, Recall, Precision, Accuracy, F1-Score, and Intersection over Union (IoU) [39,40,41]. Theo FLOPs measures the computational complexity of a model by quantifying the total floating-point operations required during inference, indicating the model’s hardware demands. Models with lower FLOPs tend to be more efficient, resulting in faster processing and reduced computational costs. The parameter count is the sum of all trainable weights and biases in a model, defining its capacity and complexity. While more parameters enable a model to learn more complex features, they also increase memory usage and computational demands and can lead to overfitting, especially when the dataset is small or training runs too long. Inference speed, measured in frames processed per second, reflects the model’s responsiveness; it is crucial for time-sensitive tasks such as autonomous driving or real-time video analysis and for resource-limited environments such as mobile devices.

Precision measures the proportion of predicted positive samples that are correctly identified, focusing on the model’s ability to accurately predict positive classes, which is particularly useful in tasks prone to high false positive rates. Recall evaluates the proportion of actual positive samples that are correctly identified, emphasizing the model’s ability to capture positive samples, and is especially important in tasks where identifying as many positive samples as possible is critical. Accuracy reflects the proportion of all samples that are classified correctly, though it may fail to adequately represent the performance of minority classes when the data distribution is imbalanced. The F1-Score, as the harmonic mean of Precision and Recall, is suitable for tasks requiring a balance between the two metrics, making it particularly significant in small target segmentation tasks. IoU measures the overlap between predicted and ground truth regions, making it especially applicable to object detection and segmentation tasks by evaluating the model’s precision in locating and segmenting target regions. Collectively, these evaluation metrics are complementary and offer a multidimensional assessment of model performance, which makes evaluations more representative across different tasks and scenarios.
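To make the parameter and FLOPs notions concrete, the sketch below counts the weights and multiply-accumulate operations of a single convolutional layer. It is only an illustration under stated assumptions: the helper names are hypothetical, the layer sizes are invented, the output feature map is assumed to have the given height and width, and one multiply-accumulate is counted as one operation, whereas some tools report twice that figure.

```python
def conv_params(c_in, c_out, k, bias=True):
    """Trainable parameters of a k x k convolution (weights plus optional bias)."""
    return c_in * c_out * k * k + (c_out if bias else 0)

def conv_macs(h, w, c_in, c_out, k):
    """Multiply-accumulates for a k x k convolution producing an h x w output map."""
    return h * w * c_in * c_out * k * k

# Hypothetical layer: 3 -> 64 channels, 3 x 3 kernel, 256 x 256 feature map.
params = conv_params(3, 64, 3)
macs = conv_macs(256, 256, 3, 64, 3)
print(params, macs)  # 1792 113246208
```

Summing these quantities over all layers gives a rough view of why the parameter count mainly drives memory use while the operation count mainly drives inference time.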

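The formulas for the metrics are as follows:

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{F1-Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

$$\text{IoU} = \frac{TP}{TP + FP + FN}$$

In the FLOPs and inference speed calculations, $H$ and $W$ are the height and width of the input image, $C_{in}$ and $C_{out}$ represent the number of input and output channels, and $K$ is the size of the convolution kernel. Throughput refers to the number of operations that the hardware can execute per second. $TP$ (True Positive) is the number of pixels correctly predicted as positive, $FP$ (False Positive) is the number of pixels incorrectly predicted as positive, $FN$ (False Negative) is the number of pixels incorrectly predicted as negative, and $TN$ (True Negative) is the number of pixels correctly predicted as negative.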
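The pixel-level metrics can be sketched directly from the four confusion counts. The example below uses the standard definitions of Precision, Recall, Accuracy, F1-Score, and IoU; the function names and the tiny flattened masks are hypothetical, and the code assumes at least one positive pixel is present in both the prediction and the ground truth so that no denominator is zero.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN for two flattened binary masks of equal length."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    return tp, fp, fn, tn

def segmentation_metrics(tp, fp, fn, tn):
    """Standard pixel-level metrics from confusion counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    return {"precision": precision, "recall": recall,
            "accuracy": accuracy, "f1": f1, "iou": iou}

# Hypothetical 8-pixel masks (1 = ship, 0 = background).
pred = [1, 1, 0, 0, 1, 0, 0, 0]
truth = [1, 0, 0, 0, 1, 1, 0, 0]
counts = confusion_counts(pred, truth)
metrics = segmentation_metrics(*counts)
print(counts, metrics)
```

Here the counts are TP = 2, FP = 1, FN = 1, and TN = 4, so Accuracy is 0.75 while IoU is only 0.5, which illustrates how background pixels can inflate Accuracy when ships occupy a small share of the image.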

4. Results

4.1. Comparative Model Selection

To validate the effectiveness of the proposed MambaSegNet model in remote sensing ship extraction tasks, we compared its performance with eleven mainstream segmentation models: Unet [42], Unet++ [43], DeepLabV3+ [44,45], SegNet [46], BiseNetV2 [47,48], Segformer [49], SegNext [50], SwinTransformer [35,51], PIDNet [52], DCSAUnet [53], and TransUnet [54]. Segmentation experiments were conducted on the DIOR_SHIP and LEVIR_SHIP datasets to evaluate the performance of these models, and the results were compared with the segmentation performance of MambaSegNet. The key parameter configurations for each model are as follows: the optimizer is Adam, with a learning rate of 0.0001 and momentum of 0.9. The weight decay is 0.0010, and the batch size is 4. The model undergoes 100 iterations, with an input size of , providing foundational data to support the subsequent discussion and analysis of experimental results.

4.2. Results Presentation

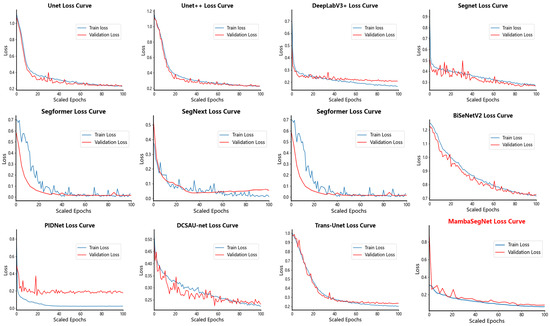

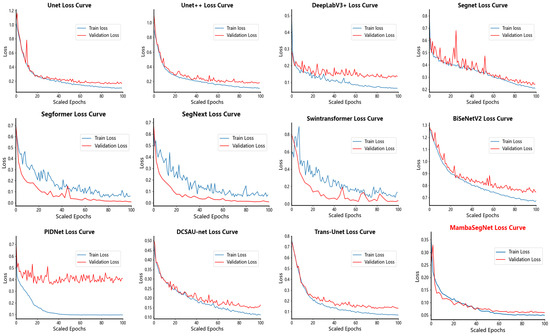

The DIOR_SHIP and LEVIR_SHIP datasets were divided into training, validation, and test sets in a 7:2:1 ratio. The training and validation sets were used for model training and validation, respectively, while the test set was utilized for final performance evaluation. The training loss values obtained from the experiments are shown in Figure 4 and Figure 5.
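A 7:2:1 split of this kind can be sketched as follows. The file names, the fixed random seed, and the choice to assign any rounding remainder to the test set are assumptions made for illustration; the paper does not describe how the split was implemented.

```python
import random

def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Shuffle items and split them into train/val/test by integer ratios."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded for a reproducible split
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test

# Hypothetical image names standing in for dataset samples.
names = [f"ship_{i:04d}.png" for i in range(100)]
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))  # 70 20 10
```

Shuffling before slicing keeps the three subsets disjoint while avoiding any ordering bias in the original file list.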

Figure 4.

Training and validation loss of each model on the DIOR_SHIP dataset.

Figure 5.

Training and validation loss of each model on the LEVIR_SHIP dataset.

The loss curves on the DIOR_SHIP and LEVIR_SHIP datasets show that MambaSegNet converges both quickly and smoothly compared with the other architectures. Throughout training, its training loss decreases steadily, and its validation loss follows a similarly smooth, continuous downward trajectory. In contrast, the other models reduce the loss relatively quickly during the early epochs but often fluctuate considerably, particularly in the validation loss, which suggests overfitting or poor generalization beyond the training set. MambaSegNet keeps its training and validation losses closely aligned, and its validation curve remains stable in the later epochs, indicating better generalization. This pattern holds on both datasets, suggesting that the model handles different data distributions well and can converge efficiently while mitigating overfitting.

This study was conducted on a Windows 11 Professional operating system. The software environment included Python 3.10.13, PyTorch 2.1.1, and CUDA 11.8. The experiments were performed using an NVIDIA GeForce RTX 3090 Ti GPU and a 12th Gen Intel(R) Core(TM) i9-12900KF CPU.

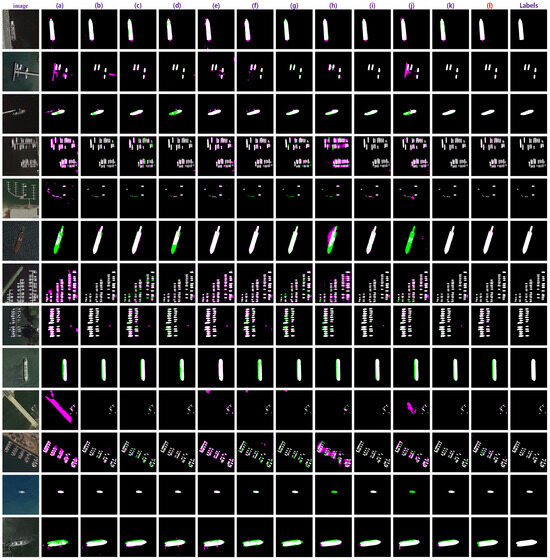

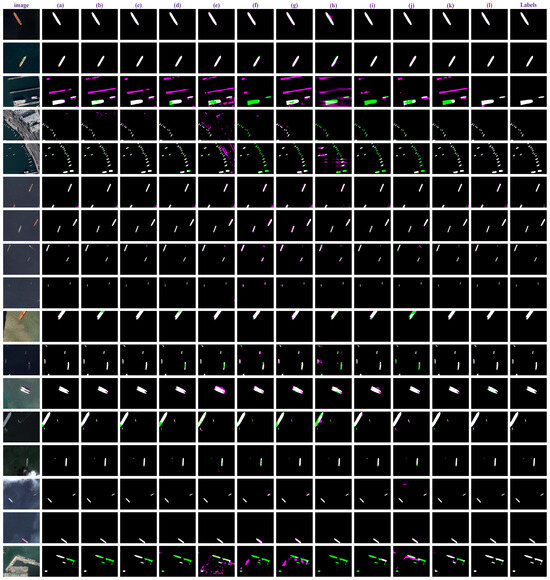

The segmentation results obtained on the test set with the trained weight files are shown in Figure 6 and Figure 7. MambaSegNet visibly outperforms all of the comparison models. Compared to the label images generated by the SAM model, the segmentation results of MambaSegNet not only closely align with the ground truth but, in certain cases, even exceed the overall quality of the label images.

Figure 6.

Qualitative results on the DIOR_SHIP dataset. TP (white), TN (black), FP (magenta), and FN (green). (a) Unet, (b) Unet++, (c) DeepLabV3+, (d) SegNet, (e) SegFormer, (f) SegNeXt, (g) Swin Transformer, (h) BiseNetV2, (i) DCSAUnet, (j) PIDNet, (k) TransUnet, and (l) MambaSegNet.

Figure 7.

Qualitative results on the LEVIR_SHIP dataset. TP (white), TN (black), FP (magenta), and FN (green). (a) Unet, (b) Unet++, (c) DeepLabV3+, (d) SegNet, (e) SegFormer, (f) SegNeXt, (g) Swin Transformer, (h) BiseNetV2, (i) DCSAUnet, (j) PIDNet, (k) TransUnet, and (l) MambaSegNet.

4.3. Quantitative Accuracy Comparison

To further assess the performance of MambaSegNet in remote sensing ship segmentation tasks, this study compares its results with those of various segmentation models, including Unet, DeepLabV3+, and SegNet, on the DIOR_SHIP and LEVIR_SHIP datasets. Table 4 summarizes the architecture, strengths, and limitations of each model, while Table 5 and Table 6 present the key performance metrics for each model on the DIOR_SHIP and LEVIR_SHIP datasets, respectively, including inference speed, IoU, precision, and recall. These data provide the basis for a comparative analysis of model performance and highlight the advantages of MambaSegNet in both accuracy and robustness. All comparison models use the same parameter settings as MambaSegNet (see Section 4.1).

Table 4.

Advantages and disadvantages of each model.

Table 5.

DIOR_SHIP dataset training accuracy comparison results.

Table 6.

LEVIR_SHIP dataset training accuracy comparison results.

4.4. Ablation Study

To empirically validate the effectiveness of the proposed architecture, we conducted a series of ablation experiments aimed at elucidating the critical role of the core token-mixing mechanism—State Space Model (SSM)—embedded within MambaSegNet. In this controlled experiment, we constructed a variant model, MambaSegNet-C [55], which retained the overall structural configuration of the original architecture but replaced the SSM module with a convolutional layer. This variant model provides a vital benchmark, enabling us to carefully assess the indispensability of the SSM in high-precision maritime target segmentation tasks.

We conducted experiments on the DIOR_SHIP and LEVIR_SHIP datasets, comparing the performance of both MambaSegNet and MambaSegNet-C. The results (Table 7) clearly demonstrate that while MambaSegNet-C outperforms many mainstream semantic segmentation models, its performance remains significantly inferior to that of the fully configured MambaSegNet. The absence of the SSM module notably diminishes the model’s ability to capture long-range dependencies and hinders its precision in recognizing small and densely distributed targets. This is especially evident in complex, heavily occluded scenes. A marked decline in metrics such as Intersection over Union (IoU), recall, and F1 score provides solid quantitative evidence of this performance gap.

Table 7.

Efficiency comparison of different methods.

Through this rigorous analysis, the theoretical advantages of the MambaSegNet architecture were strongly validated. Furthermore, the suitability of SSM for ship segmentation algorithms stems from its unique scanning mechanism. In ship segmentation scenarios, ship targets are typically densely distributed with complex and varied textures. The SSM module, through its recursive scanning process, gradually updates states and transmits information at each time step. This scanning approach enables the SSM to capture long-range dependencies effectively, integrating complex relationships between ship targets, surrounding environments, and other ships. In contrast, traditional convolutional layers, constrained by their receptive field, struggle to dynamically scan and integrate long-range image information. The scanning characteristics of the SSM remedy this limitation, providing significant advantages in handling ship segmentation tasks involving long-range dependencies and complex scenes.
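The contrast between recursive scanning and a fixed receptive field can be illustrated with a toy one-dimensional example. The sketch below is not the selective scan used in Mamba or MambaSegNet; it is a scalar linear state-space recurrence with hypothetical constants `a`, `b`, and `c`, compared against a three-tap convolution with an arbitrary kernel. An impulse at the first position still influences the state-space output many steps later, while the convolution output is zero once the impulse leaves its window.

```python
def ssm_scan(x, a=0.9, b=1.0, c=1.0):
    """Scalar linear state-space recurrence: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t."""
    h = 0.0
    ys = []
    for x_t in x:
        h = a * h + b * x_t  # the state carries information from all earlier steps
        ys.append(c * h)
    return ys

def conv1d_same(x, kernel=(0.25, 0.5, 0.25)):
    """Three-tap convolution with zero padding; each output sees only its neighbours."""
    padded = [0.0] + list(x) + [0.0]
    return [sum(k * padded[i + j] for j, k in enumerate(kernel))
            for i in range(len(x))]

impulse = [1.0] + [0.0] * 9  # a single signal at position 0
y_ssm = ssm_scan(impulse)
y_conv = conv1d_same(impulse)
print(y_ssm[9], y_conv[9])  # the scan still responds at step 9; the convolution does not
```

A single convolutional layer needs stacking or dilation to reach distant positions, whereas the recurrence propagates information along the entire scan path in one pass.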

The shape and contextual semantic features of ships in remote sensing images are complex, and the downsampling operations in the feature extraction stage may destroy the high-level features of small objects. In MambaSegNet, we used three upsampling blocks to recover the semantic features of objects; to strengthen the feature representation of ships, we used a depth-wise convolution kernel and two normalization layers. For comparison, we built another Mamba-style model, named MambaSegNet-U, in which upsampling relies only on an interpolation method such as nearest-neighbor sampling. The quantitative results of the various models are also listed in Table 7. MambaSegNet-U achieved better results than MambaSegNet-C but worse results than the original MambaSegNet, which demonstrates that the carefully designed upsampling block helps the whole model learn effective semantic features.
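For reference, plain nearest-neighbor interpolation of the kind used as the baseline upsampling in MambaSegNet-U can be sketched in a few lines. The function name, the integer scale factor of 2, and the tiny input grid are assumptions for illustration; the actual variant operates on multi-channel feature maps inside the network.

```python
def upsample_nearest(feature_map, scale=2):
    """Nearest-neighbour upsampling of a 2-D grid by an integer factor."""
    out = []
    for row in feature_map:
        wide = [value for value in row for _ in range(scale)]  # repeat each column
        out.extend(list(wide) for _ in range(scale))           # repeat each row
    return out

coarse = [[1, 2],
          [3, 4]]
fine = upsample_nearest(coarse)
for row in fine:
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

Because every output value is a copy of an input value, this operation has no learnable parameters, which is the key difference from an upsampling block that includes convolution and normalization layers.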

5. Discussion

5.1. MambaSegNet’s Outstanding Performance and Overall Advantages in Semantic Segmentation

In terms of accuracy and efficiency, MambaSegNet performs strongly on the DIOR_SHIP and LEVIR_SHIP datasets, with precision and stability exceeding those of the mainstream models it was compared against. On the DIOR_SHIP dataset, MambaSegNet achieved better precision and F1-score than the other models, while also striking a balance between speed and accuracy. Although DeepLabV3+ came close in terms of Recall, it did not achieve the best overall performance. Meanwhile, models such as SegNet and Segformer performed poorly across multiple key metrics, exposing notable weaknesses.

On the LEVIR_SHIP dataset, MambaSegNet once again demonstrated absolute superiority in all metrics, further underscoring its strong adaptability to complex and dynamic scenarios. In contrast, other models exhibited significant performance fluctuations. For instance, the IoU dropped to 0.598, and DeepLabV3+ continued to show inadequate global metric balance, while Swin Transformer fell behind comprehensively across all evaluation metrics. These results further highlight MambaSegNet’s comprehensive advantages in accuracy, efficiency, and stability.

It is noteworthy that MambaSegNet’s outstanding performance is not limited to excelling in a single metric but is instead the result of a systematic improvement in overall performance driven by architectural design and technical optimization. The model achieves a perfect balance between accuracy, efficiency, and stability. Whether in terms of single-dataset accuracy comparisons or cross-dataset generalization capabilities, MambaSegNet exhibits a high degree of consistency. MambaSegNet not only redefines the performance benchmarks for semantic segmentation tasks but also provides clear guidance for future research and technological optimization, serving as a landmark achievement in the field.

5.2. The Broad Applicability and Future Expansion Potential of the Dataset

The high-precision ship segmentation dataset constructed in this study addresses the lack of annotated datasets for ship segmentation in the field of remote sensing. Through high-quality annotations and automated processing methods, this dataset significantly reduces manual annotation costs while ensuring the precision and consistency of segmentation results.

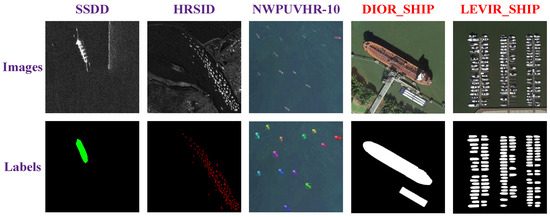

Compared to existing similar datasets such as SSDD [56], HRSID [3], and NWPU VHR-10 [57], the proposed dataset demonstrates significant improvements in image clarity, boundary integrity, and saliency, as illustrated in Figure 8. The dataset offers accurate, precise annotations and generalizes well, making it applicable to a wide range of practical scenarios, including marine traffic monitoring, port management, and ship emission monitoring.

Figure 8.

Comparison of Segmented Images from Different Datasets.

Additionally, this dataset provides a solid foundation for comparative experiments on ship segmentation algorithms, optimization under complex scenarios, and multi-target extensions, driving technological development and innovation. As technology advances, this dataset can be extended to multi-modal remote sensing imagery tasks, further enriching and enhancing the data resources available in the remote sensing field. By establishing an open and shared platform, this dataset has the potential to promote the development and application of remote sensing segmentation technologies globally, becoming a key driver of progress in the field.

5.3. Evaluation of MambaSegNet’s Performance on Real-World Remote Sensing Imagery

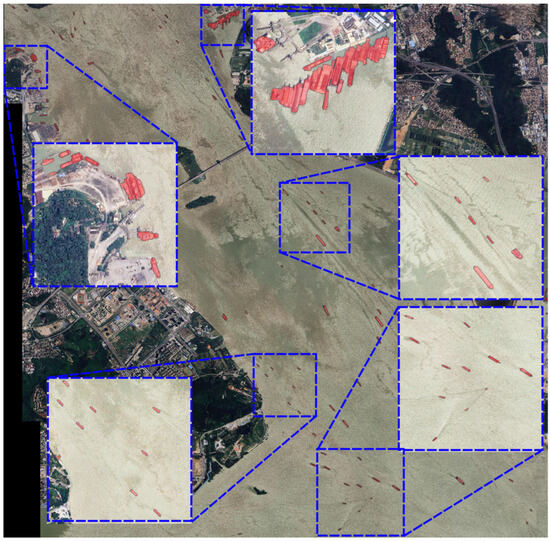

In the experimental section, the application of the MambaSegNet model for ship extraction in remote sensing imagery was explored. However, the suitability of the model in real-world remote sensing scenarios was not thoroughly investigated. To address this gap, we selected an exceptionally large remote sensing satellite image, applying the model to process it and assess its performance in practical settings.

In this experiment, the remote sensing satellite image was subjected to processing using the MambaSegNet model, resulting in a high-resolution output. This outcome vividly illustrates the model’s ability to handle small ship targets amidst complex backgrounds and confirms its effectiveness when applied to real-world remote sensing data. The processed image serves as a direct visual representation of MambaSegNet’s proficiency in extracting ships from intricate scenes, further validating the model’s precision and robustness.

Beyond the model’s performance on the benchmark datasets, the results obtained from real-world imagery were equally compelling, showing that it delivers satisfactory segmentation even when confronted with the challenges inherent in large-scale remote sensing imagery. Figure 9 presents the processed result, providing a clear depiction of the model’s performance in a complex maritime environment.

Figure 9.

Processing Results of the Real-World Extracted Remote Sensing Image.

5.4. Robust Performance of MambaSegNet in Complex Maritime Environments

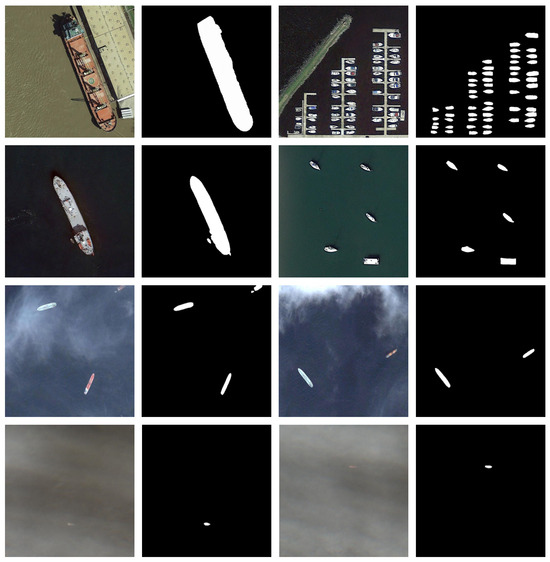

The MambaSegNet model demonstrates exceptional precision and adaptability across a variety of maritime environments. As shown in Figure 10, the model maintains remarkable accuracy in segmenting ships, regardless of environmental challenges. Whether dealing with ships of different sizes (first row), under varying lighting conditions (second row), amidst cloud cover (third row), or in dense fog (fourth row), MambaSegNet consistently delivers highly precise segmentation results. This highlights its superior ability to handle complex segmentation tasks across diverse conditions.

Figure 10.

Ship segmentation results under various maritime environments.

What distinguishes MambaSegNet from traditional models is its ability to navigate challenging environments where others may falter. Unlike conventional models, which often struggle with cloud cover or fog, MambaSegNet excels in these difficult settings. The model’s advanced multi-scale processing and long-range dependency modeling allow it to efficiently adapt to a variety of ship sizes and environmental interferences. This robustness not only underscores MambaSegNet’s precision but also establishes it as a leader in remote sensing ship extraction technologies.

In addition, the model’s consistent performance in complex conditions emphasizes its practical potential for real-world applications in maritime monitoring, traffic regulation, and environmental studies. MambaSegNet’s ability to maintain high accuracy despite environmental variability sets it apart as an invaluable tool, establishing new benchmarks for segmentation quality and generalization.

5.5. Impact of Training Set Sample Size on Model Weights and Performance

Experimental results indicate a close correlation between the quality of model weights and the sample size of the training dataset. When training was confined to either the DIOR_SHIP or the LEVIR_SHIP dataset alone, the model’s performance during the testing phase was somewhat constrained. However, when the two datasets were combined for training, the resulting weights showed a marked improvement in segmentation accuracy. Table 8 compares the accuracy metrics obtained when the datasets are processed separately versus jointly. This observation indicates that enlarging the training set enables the model to learn richer and more diverse feature representations, thereby improving the quality of the learned weights.

Table 8.

Accuracy of the MambaSegNet Model in Processing Different Datasets.

Larger datasets not only provide a more diverse feature distribution but also improve the model’s generalization ability in complex scenarios. In future training dataset construction, combining multiple high-quality datasets and further expanding the sample size will effectively enhance model performance.

5.6. Limitations

In the domain of high-resolution remote sensing image segmentation, MambaSegNet excels in ship segmentation yet faces deployment challenges on mobile and lightweight devices due to its heavy reliance on substantial hardware resources. Additionally, it struggles with compatibility on legacy devices, mainly attributable to the Mamba framework’s design characteristics. The core issue lies in MambaSegNet’s visual state space model, which, despite effectively capturing long-range dependencies and handling complex scenes, entails significant computational complexity. The multi-layered image encoder and decoder require intensive processing power, hindering real-time performance on resource-constrained devices. For instance, alternatives like MobileSAM adopt decoupled distillation and a simplified encoder design to reduce computational overhead. Even with depthwise separable convolutions to lower computational demands, MambaSegNet’s parameter count and memory footprint remain high, leading to performance drops when memory and GPU resources are limited. Furthermore, deploying MambaSegNet on legacy hardware is problematic as manufacturers like NVIDIA discontinue CUDA support for older architectures (e.g., Maxwell, Pascal, Volta), and legacy GPUs with limited computational and memory capabilities cannot meet the model’s high-performance requirements. The need for updated CUDA versions and modern operating systems further complicates deployment on outdated devices.

6. Conclusions

This study presents an innovative approach to constructing a ship segmentation dataset and introduces the high-performance MambaSegNet segmentation model. The dataset was constructed by leveraging automated annotation processes and morphological correction techniques, which significantly enhanced the accuracy of data labeling while ensuring consistency and completeness in segmentation labels. This dataset addresses the challenges of limited data for fine-grained ship segmentation tasks in high-resolution remote sensing, thus providing a robust foundation for future research and applications.

The MambaSegNet model, grounded in the Visual State Space Model, integrates advanced multi-scale feature extraction and reconstruction mechanisms. It exhibits superior performance in segmentation tasks, particularly in handling boundary details, small targets, and multi-scale information, thereby outperforming existing mainstream models on various performance metrics. In experiments, MambaSegNet not only reproduced fine segmentation details but, in certain cases, even surpassed the quality of the original labels, demonstrating its capability to handle complex targets in remote sensing imagery. However, due to the high computational demands and large model size, it cannot achieve real-time performance on resource-constrained devices, facing deployment challenges on mobile and legacy devices. Future work should focus on optimizing the model’s efficiency and expanding compatibility to overcome these challenges.

Author Contributions

Conceptualization, R.W. and Y.Y.; methodology, R.W. and Y.Y.; software, R.W. and Y.Y.; validation, R.W., X.X. and Y.Y.; formal analysis, R.W. and X.X.; investigation, R.W. and Y.Y.; resources, R.W., X.X. and Y.Y.; data curation, R.W., X.X., Z.C. and Y.Y.; writing—original draft preparation, R.W., X.X. and Y.Y.; writing—review and editing, R.W., S.Y. and Z.W.; visualization, R.W. and Y.Y.; supervision, S.Y., Z.W., H.Z.; project administration, R.W., Z.W., Z.C. and S.Y.; funding acquisition, S.Y. and Z.W. R.W., H.Z., Y.Y., and X.X. contributed equally. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Natural Science Foundation of Jiangsu Province Project (BK20230312), the Chongqing Science and Technology Bureau technology innovation and application development special project (cstc2021jscx-gksb0116), the Natural Science Foundation of Hunan Province Project (2025JJ80009), and the National Students’ Platform for Innovation and Entrepreneurship Training Program (Project No.: 202510291009).

Data Availability Statement

The code of the proposed approach can be found at https://github.com/wzp8023391/Ship_Segmentation (accessed on 19 September 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, X.N.; Chen, P.; Yang, J.S.; An, W.T.; Zheng, G.; Luo, D.; Lu, A.Y.; Wang, Z.M. TKP-Net: A Three Keypoint Detection Network for Ships Using SAR Imagery. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2024, 17, 364–376. [Google Scholar] [CrossRef]

- Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. A SAR dataset of ship detection for deep learning under complex backgrounds. Remote Sens. 2019, 11, 765. [Google Scholar] [CrossRef]

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 2020, 8, 120234–120254. [Google Scholar] [CrossRef]

- Li, C.; LI, L.; Wang, S.; Gao, S.; Ye, X. MMShip: Medium resolution multispectral satellite imagery ship dataset. Opt. Precis. Eng. 2023, 31, 1962–1972. [Google Scholar] [CrossRef]

- Li, J.; Li, Z.; Chen, M.; Wang, Y.; Luo, Q. A New Ship Detection Algorithm in Optical Remote Sensing Images Based on Improved R3Det. Remote Sens. 2022, 14, 5048. [Google Scholar] [CrossRef]

- Ali, S.; Siddique, A.; Ateş, H.F.; Güntürk, B.K. Improved YOLOv4 for aerial object detection. In Proceedings of the 2021 29th Signal Processing and Communications Applications Conference (SIU), Istanbul, Turkey, 9–11 June 2021; pp. 1–4. [Google Scholar]

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3141–3149. [Google Scholar]

- Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114. [Google Scholar] [CrossRef]

- Liu, Y.; Gao, K.; Wang, H.; Yang, Z.; Wang, P.; Ji, S.; Huang, Y.; Zhu, Z.; Zhao, X. A Transformer-based multi-modal fusion network for semantic segmentation of high-resolution remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2024, 133, 104083. [Google Scholar] [CrossRef]

- Sun, K.; Zhao, Y.; Jiang, B.; Cheng, T.; Xiao, B.; Liu, D.; Mu, Y.; Wang, X.; Liu, W.; Wang, J. High-Resolution Representations for Labeling Pixels and Regions. arXiv 2019, arXiv:1904.04514. [Google Scholar] [CrossRef]

- Xie, J.; Pan, B.; Xu, X.; Shi, Z. MiSSNet: Memory-Inspired Semantic Segmentation Augmentation Network for Class-Incremental Learning in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5607913. [Google Scholar] [CrossRef]

- Gao, T.; Ao, W.; Wang, X.-a.; Zhao, Y.; Ma, P.; Xie, M.; Fu, H.; Ren, J.; Gao, Z. Enrich Distill and Fuse: Generalized Few-Shot Semantic Segmentation in Remote Sensing Leveraging Foundation Model’s Assistance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 2771–2780. [Google Scholar]

- Chen, Y.; Wang, J.; Zhang, Y.; Liu, Y. Arbitrary-oriented ship detection based on Kullback-Leibler divergence regression in remote sensing images. Earth Sci. Inform. 2023, 16, 3243–3255. [Google Scholar] [CrossRef]

- Chen, Y.; Wang, J.; Zhang, Y.; Liu, Y.; Wang, J. P2RNet: Fast Maritime Object Detection From Key Points to Region Proposals in Large-Scale Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2024, 17, 9294–9308. [Google Scholar] [CrossRef]

- Chen, Y.; Yan, J.; Liu, Y.; Gao, Z. LRS2-DM: Small Ship Target Detection in Low-Resolution Remote Sensing Images Based on Diffusion Models. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5628615. [Google Scholar] [CrossRef]

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752. [Google Scholar] [CrossRef]

- Hatamizadeh, A.; Kautz, J. MambaVision: A Hybrid Mamba-Transformer Vision Backbone. arXiv 2024, arXiv:2407.08083. [Google Scholar] [CrossRef]

- Ma, J.; Li, F.; Wang, B. U-Mamba: Enhancing Long-range Dependency for Biomedical Image Segmentation. arXiv 2024, arXiv:2401.04722. [Google Scholar] [CrossRef]

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. VMamba: Visual State Space Model. arXiv 2024, arXiv:2401.10166. [Google Scholar] [CrossRef]

- Wang, Z.; Zheng, J.-Q.; Zhang, Y.; Cui, G.; Li, L. Mamba-UNet: UNet-Like Pure Visual Mamba for Medical Image Segmentation. arXiv 2024, arXiv:2402.05079. [Google Scholar] [CrossRef]

- Zhang, H.; Chen, K.; Liu, C.; Chen, H.; Zou, Z.; Shi, Z. CDMamba: Remote sensing image change detection with mamba. arXiv 2024, arXiv:2406.04207. [Google Scholar] [CrossRef]

- Zhao, S.; Chen, H.; Zhang, X.; Xiao, P.; Bai, L.; Ouyang, W. RS-Mamba for Large Remote Sensing Image Dense Prediction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5633314. [Google Scholar] [CrossRef]

- Ma, X.; Zhang, X.; Pun, M.O. RS3Mamba: Visual State Space Model for Remote Sensing Image Semantic Segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6011405. [Google Scholar] [CrossRef]

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307. [Google Scholar] [CrossRef]

- Zou, Z.; Shi, Z. Random access memories: A new paradigm for target detection in high resolution aerial remote sensing images. IEEE Trans. Image Process. 2017, 27, 1100–1111. [Google Scholar] [CrossRef] [PubMed]

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 4015–4026. [Google Scholar]

- Ravi, N.; Gabeur, V.; Hu, Y.-T.; Hu, R.; Ryali, C.; Ma, T.; Khedr, H.; Rädle, R.; Rolland, C.; Gustafson, L.; et al. SAM 2: Segment Anything in Images and Videos. arXiv 2024, arXiv:2408.00714. [Google Scholar] [CrossRef] [PubMed]

- Ma, N.; Liu, C. Multi-Feature FCM Segmentation Algorithm Combining Morphological Reconstruction and Superpixels. Comput. Syst. Appl. 2021, 30, 194–200. [Google Scholar] [CrossRef]

- Gu, A. Modeling Sequences with Structured State Spaces; Stanford University: Stanford, CA, USA, 2023. [Google Scholar]

- Mehta, H.; Gupta, A.; Cutkosky, A.; Neyshabur, B. Long range language modeling via gated state spaces. arXiv 2022, arXiv:2206.13947. [Google Scholar] [CrossRef]

- Wang, J.; Zhu, W.; Wang, P.; Yu, X.; Liu, L.; Omar, M.; Hamid, R. Selective structured state-spaces for long-form video understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6387–6397. [Google Scholar]

- Howard, A.G. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar] [CrossRef]

- Eckle, K.; Schmidt-Hieber, J. A comparison of deep networks with ReLU activation function and linear spline-type methods. Neural Netw. 2019, 110, 232–242. [Google Scholar] [CrossRef]

- Shazeer, N. Glu variants improve transformer. arXiv 2020, arXiv:2002.05202. [Google Scholar] [CrossRef]

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-unet: Unet-like pure transformer for medical image segmentation. In Proceedings of the European conference on computer vision, Tel Aviv, Israel, 23–27 October 2022; pp. 205–218. [Google Scholar]

- Zhang, Y.; Zhao, W.; Sun, B.; Zhang, Y.; Wen, W. Point cloud upsampling algorithm: A systematic review. Algorithms 2022, 15, 124. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Li, X.; He, M.; Li, H.; Shen, H. A combined loss-based multiscale fully convolutional network for high-resolution remote sensing image change detection. IEEE Geosci. Remote Sens. Lett. 2021, 19, 8017505. [Google Scholar] [CrossRef]

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666. [Google Scholar]

- Cheng, B.; Girshick, R.; Dollár, P.; Berg, A.C.; Kirillov, A. Boundary IoU: Improving object-centric image segmentation evaluation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15334–15342.

- Yacouby, R.; Axman, D. Probabilistic extension of precision, recall, and F1 score for more thorough evaluation of classification models. In Proceedings of the First Workshop on Evaluation and Comparison of NLP Systems, Online, 20 November 2020; pp. 79–91.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Granada, Spain, 20 September 2018; pp. 3–11.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. arXiv 2018, arXiv:1802.02611.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. BiSeNet V2: Bilateral network with guided aggregation for real-time semantic segmentation. Int. J. Comput. Vis. 2021, 129, 3051–3068.

- Zhang, Y.; Zhang, M. LUN-BiSeNetV2: A lightweight unstructured network based on BiSeNetV2 for road scene segmentation. Comput. Sci. Inf. Syst. 2023, 20, 1749–1770.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. arXiv 2021, arXiv:2105.15203.

- Guo, M.-H.; Lu, C.-Z.; Hou, Q.; Liu, Z.; Cheng, M.-M.; Hu, S.-M. SegNeXt: Rethinking convolutional attention design for semantic segmentation. Adv. Neural Inf. Process. Syst. 2022, 35, 1140–1156.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Xu, J.; Xiong, Z.; Bhattacharyya, S.P. PIDNet: A real-time semantic segmentation network inspired by PID controllers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 19529–19539.

- Xu, Q.; Ma, Z.; He, N.; Duan, W. DCSAU-Net: A deeper and more compact split-attention U-Net for medical image segmentation. Comput. Biol. Med. 2023, 154, 106626.

- Chen, J.; Mei, J.; Li, X.; Lu, Y.; Yu, Q.; Wei, Q.; Luo, X.; Xie, Y.; Adeli, E.; Wang, Y.; et al. TransUNet: Rethinking the U-Net architecture design for medical image segmentation through the lens of transformers. Med. Image Anal. 2024, 97, 103280.

- Yu, W.; Wang, X. MambaOut: Do we really need Mamba for vision? arXiv 2024, arXiv:2405.07992.

- Su, H.; Wei, S.; Liu, S.; Liang, J.; Wang, C.; Shi, J.; Zhang, X. HQ-ISNet: High-quality instance segmentation for remote sensing imagery. Remote Sens. 2020, 12, 989.

- Su, H.; Wei, S.; Yan, M.; Wang, C.; Shi, J.; Zhang, X. Object detection and instance segmentation in remote sensing imagery based on precise Mask R-CNN. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1454–1457.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).