1. Introduction

Detecting ocean mesoscale eddies (OMEs) under harsh sea states, characterized by conditions such as significant wave heights (SWHs) exceeding 4 m and surface wind speeds surpassing 17 m/s, is of great significance but faces numerous challenges. Such conditions are commonly accompanied by significant fluctuations of sea surface height (SSH), anomalous distributions of sea surface temperature (SST), and intense ocean surface currents, all of which are often further modulated by eddies [1,2]. Conversely, these extreme sea states can significantly alter the dynamic behavior of eddies [3]. For example, intense wind stress induced by typhoons can lead to rapid changes in the morphology and position of eddies and even to the formation of secondary eddies [4]. Moreover, the strong noise that arises under harsh sea states, for example from storm surges and surface waves, complicates OME detection based on remote sensing data or numerical simulations. OME detection under harsh sea states is essential for understanding the interactions among typhoons, waves, and the ocean, and it provides scientific support for maritime safety, extreme weather prevention, and disaster warning systems. Despite its importance, reliable OME detection under such conditions remains a significant technical challenge. To our knowledge, the majority of research has focused on developing detection methods for normal sea states, where signals are clearer and less affected by noise.

Generally, detecting OMEs can be regarded as marking the regions where OMEs occur in an image. In earlier years, traditional methods for OME detection relied on manual annotations, mathematical or physical knowledge, and image processing techniques [5]. With the increase in ocean observation data and advancements in computational power, deep learning has gradually shown unique advantages in OME detection. Despite this progress, dealing with the interference that harsh sea states induce on the ocean surface and on data collection remains challenging.

Traditional Detection Methods: The most widely used physics-based method is the Okubo–Weiss (OW) parameter method [6,7], which introduced a parameter to describe eddy activity in a flow field. It compared the strain of the flow (normal and shear components) with its relative vorticity to describe the dynamic characteristics of the flow. The OW parameter method depended strongly on the choice of threshold and on experience, which often resulted in substantial misclassifications. The Winding-Angle (WA) [8] and Vector Geometry (VG) [9,10] algorithms did not rely on such parameter selection; instead, they used the global topological properties of the flow field for OME detection. Later, an automatic eddy detection method based on SSH data [11,12,13] from satellite altimeters emerged as a more accurate, threshold-free eddy detection technique; however, it was susceptible to strong noise in the flow field, leading to misclassifications. In summary, the traditional physics-based approaches either relied on the choice of parameters and experience or struggled to generalize to complex oceanic states, motivating the exploration of AI-based methods for more robust and adaptive OME detection.
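To make the OW criterion concrete, the following is a minimal sketch, assuming the standard definition W = s_n² + s_s² − ω² (normal strain, shear strain, relative vorticity) evaluated with central differences on a regular grid. The function name `okubo_weiss`, the grid layout, and the two idealized test flows are illustrative assumptions and are not taken from the cited works.

```python
def okubo_weiss(u, v, dx=1.0, dy=1.0):
    """Okubo-Weiss parameter on the interior points of a regular grid.

    u, v are 2D lists indexed as [row j (y direction)][column i (x direction)].
    Central differences are used, so the one-cell border is skipped.
    """
    ny, nx = len(u), len(u[0])
    w = [[0.0] * (nx - 2) for _ in range(ny - 2)]
    for j in range(1, ny - 1):
        for i in range(1, nx - 1):
            du_dx = (u[j][i + 1] - u[j][i - 1]) / (2 * dx)
            du_dy = (u[j + 1][i] - u[j - 1][i]) / (2 * dy)
            dv_dx = (v[j][i + 1] - v[j][i - 1]) / (2 * dx)
            dv_dy = (v[j + 1][i] - v[j - 1][i]) / (2 * dy)
            s_n = du_dx - dv_dy    # normal strain
            s_s = dv_dx + du_dy    # shear strain
            omega = dv_dx - du_dy  # relative vorticity
            w[j - 1][i - 1] = s_n ** 2 + s_s ** 2 - omega ** 2
    return w


n = 5
# Idealized eddy core: solid-body rotation, u = -y, v = x (vorticity dominates).
u_rot = [[-float(j) for i in range(n)] for j in range(n)]
v_rot = [[float(i) for i in range(n)] for j in range(n)]
w_rot = okubo_weiss(u_rot, v_rot)

# Idealized strain field: u = x, v = -y (strain dominates).
u_str = [[float(i) for i in range(n)] for j in range(n)]
v_str = [[-float(j) for i in range(n)] for j in range(n)]
w_str = okubo_weiss(u_str, v_str)
```

In this sketch the rotating flow yields negative W everywhere and the straining flow yields positive W, which is why OW-based detectors mark regions below a negative threshold as eddy candidates; the choice of that threshold is exactly the sensitivity discussed above.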

Deep Learning-based Research: Following previous studies, OME detection is typically framed as a semantic segmentation task [14]. For instance, Lguensat et al. [15] proposed EddyNet, a U-Net-based semantic segmentation architecture that used SSH data for pixel-level eddy classification. Subsequently, Du et al. [16] proposed DeepEddy for the classification of SAR images, which combined the Principal Component Analysis Network [17] with Spatial Pyramid Pooling. Xu et al. applied PSPNet [18], a semantic segmentation architecture, to OME detection. Duo et al. [19] applied bounding boxes to OME detection based on SLA data, focusing on object detection rather than pixel-level classification to obtain accurate eddy localization. DeepLabV3 [20] employed Atrous Spatial Pyramid Pooling (ASPP), in which parallel atrous convolutions with varying dilation rates extract multiscale features and integrate contextual information. Zhao et al. [21] utilized a Pyramid Split Attention (PSA) U-Net to extract OMEs from remote sensing images; their PSA_EDUNet [21], based on the UNet architecture, incorporated the PSA module and skip connections to capture eddy spatial information at different scales from both channel and spatial attention perspectives. DUNet [22] adopted a dual encoder–decoder structure to mitigate overfitting in deep learning models. In recent work, Ding et al. [23] established four foundational design principles for large-kernel convolutional networks (ConvNets), systematically revealing their potential across various new domains. In such architectures, a larger receptive field enables the simultaneous capture of multiscale contextual features, which facilitates the learning of eddy structures characterized by intricate spatial patterns and localized morphological details. It also helps the model better understand the relationship between the global structure and local variations in complex sea surface environments.

However, most existing deep learning models were trained on data from relatively stable meteorological or ocean conditions (i.e., calm or light sea states). This creates a significant domain shift when the models are applied to real-world scenarios involving extreme weather, and the shift severely restricts the models’ generalization ability. Specifically, the visual characteristics of eddies and of the surrounding sea surface change dramatically under high-noise conditions, which prevents models from extracting robust and valid features and thereby raises the rate of false detections. Bridging this domain gap is therefore essential for developing a truly reliable OME detection system, which necessitates the introduction of domain adaptation strategies.

Domain adaptation aims to transfer knowledge acquired from a source domain to a target domain [24]. The two domains contain similar objects but exhibit different data distributions. In many practical scenarios, training data come from a source domain that is well annotated but exhibits a domain shift from the real-world target domain, which typically has scarce or even no annotations. From this viewpoint, if we conceptualize harsh sea states as specific modes of ocean dynamics, the harsh sea states problem can be framed as a cross-domain challenge, with normal sea states as the source domain and harsh sea states as the target domain. Directly applying a model trained on the source domain to the target domain often results in substantial performance degradation. Domain adaptation addresses this issue by establishing a mechanism that mitigates the distribution discrepancy between the source and target domains, thereby enhancing the model’s generalization ability on the target domain. Depending on the availability of labeled data in the target domain, domain adaptation methods can be categorized into supervised, self-supervised, semi-supervised, and unsupervised approaches. Among them, Unsupervised Domain Adaptation (UDA) is the most challenging [25], as it relies solely on labeled data from the source domain and unlabeled data from the target domain during training. Recently, this technique has been extensively applied to semantic segmentation tasks [26,27,28]. Adversarial training is one of the UDA methods; it aligns the distributions of the source and target domains at the feature and output levels within a Generative Adversarial Network (GAN) framework. Discriminators that use multiscale or multi-category information can further refine the alignment process. Inspired by this, we develop an eddy segmentation architecture that incorporates an adversarial learning framework (ADF) to ensure effective OME segmentation under harsh sea states.

Specific Contributions of Our Work:

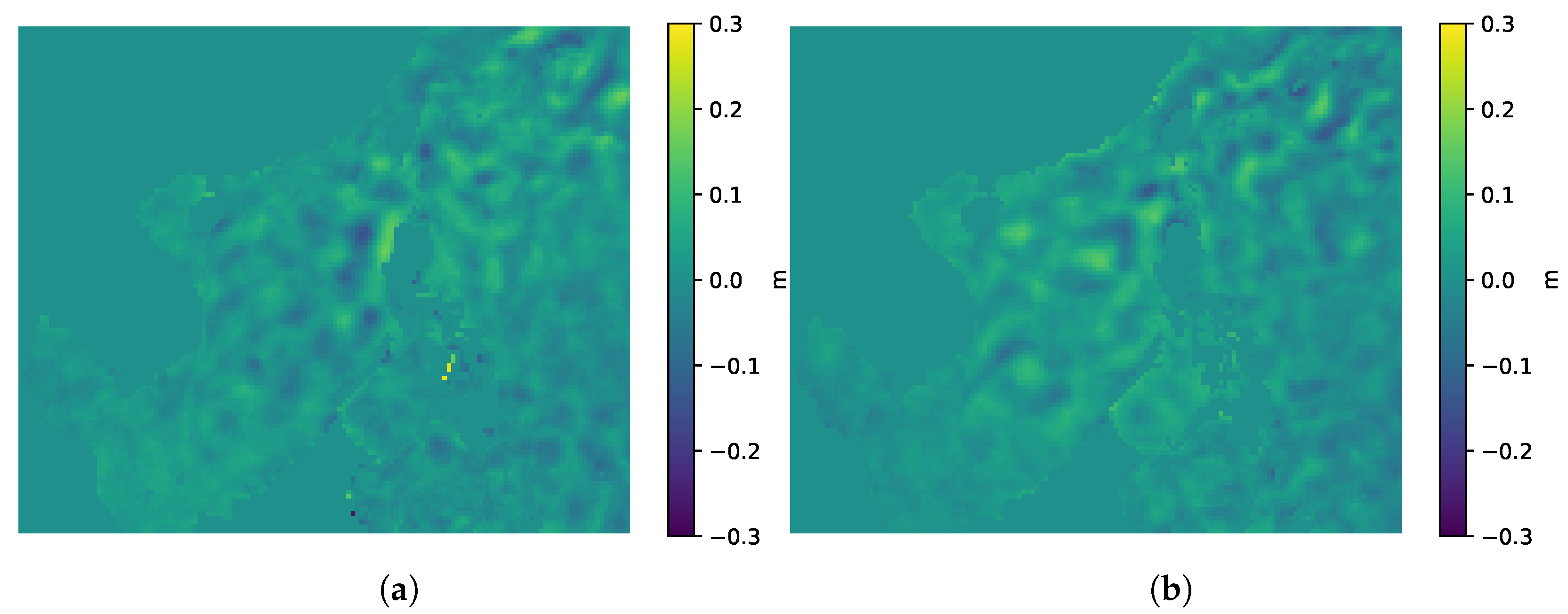

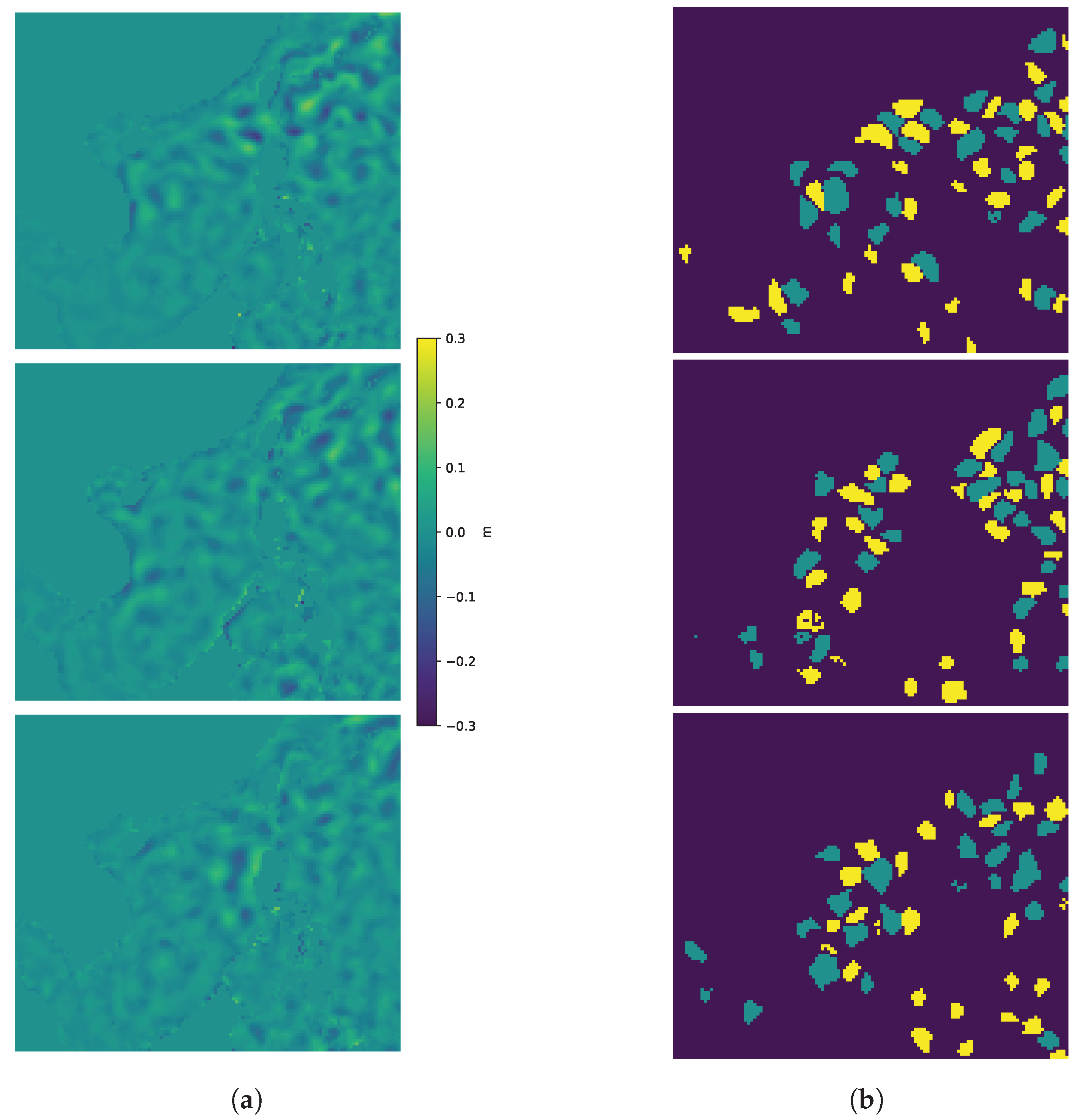

Investigating changes in sea surface data under harsh sea states: We have developed a dataset for OME detection, including sea level anomaly (SLA) data in normal sea states, which we constructed based on the reanalysis dataset of the South China Sea (REDOS) [29], and SLA data in harsh sea states obtained using a two-dimensional (2D) Gaussian function (GF), along with corresponding annotations.

Introducing an adversarial learning framework (ADF): We conceptualize harsh sea states as specific modes of ocean variability, thereby framing the harsh sea states problem as a cross-domain challenge. Thus, domain adaptation can be applied to obtain promising results in both normal and harsh sea states. By incorporating a domain discrimination module, we introduce an ADF to learn domain-invariant feature representations between the source (normal sea states) and target (harsh sea states) domains. This addresses the challenges of abnormal sea surface disturbances and substantial background noise under harsh sea states. On harsh sea state datasets, the proposed method outperforms models without the domain discrimination module.

Proposing a novel end-to-end eddy segmentation model with large kernel convolution (LCNN): This model utilizes large kernel convolution to systematically expand its effective receptive field and increase the level of spatial abstraction. This helps the model cope with complex fluctuations and various irregular eddy forms on the sea surface in harsh sea states. It also introduces more learnable parameters and nonlinear elements to increase the model’s representation capacity.

2. Proposed Method

Adversarial domain adaptation leverages adversarial learning to enable a model to learn domain-invariant feature representations between the source (normal sea states) and target (harsh sea states) domains, thereby achieving cross-domain knowledge transfer. The architecture integrates a domain discrimination module with a Gradient Reversal Layer, so that the gradient from the domain discriminator is reversed before it is propagated back to the model. This hinders the domain discriminator from identifying the data source and encourages the model to extract domain-invariant features that are indistinguishable between the source and target domains. Ultimately, adversarial training prompts the model to produce similar feature representations and outputs in both domains, which improves its generalization ability on the target domain and its detection effectiveness under harsh sea states. We leverage this mechanism to design our training approach.
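The behavior of a Gradient Reversal Layer can be illustrated with a minimal sketch in plain Python. It assumes the usual formulation: identity in the forward pass, and multiplication of the incoming gradient by a negative factor in the backward pass. The class name `GradientReversal` and the scaling factor `lam` are hypothetical and are not taken from the authors' implementation.

```python
class GradientReversal:
    """Identity on the way forward; flips the sign of gradients on the way back."""

    def __init__(self, lam=1.0):
        self.lam = lam  # assumed reversal strength (hypothetical value)

    def forward(self, features):
        # Features pass through unchanged to the domain discriminator.
        return list(features)

    def backward(self, grad_from_discriminator):
        # The feature extractor receives the negated, scaled gradient, so a step
        # that helps the discriminator becomes a step that hinders it.
        return [-self.lam * g for g in grad_from_discriminator]


grl = GradientReversal(lam=0.5)
features = [0.2, -1.0, 3.0]
out = grl.forward(features)
grad_to_extractor = grl.backward([1.0, -2.0, 4.0])
```

Because the forward pass is untouched, the discriminator still learns to separate the domains, while the reversed gradient pushes the upstream features toward being indistinguishable.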

Our approach defines a source domain S to describe ocean dynamics in normal sea states and a target domain H to represent the variability in harsh sea states. The source domain is derived from the SLA dataset we constructed based on the REDOS [29] in normal sea states, while the target domain is a processed REDOS dataset with SLA under harsh sea states obtained using a 2D GF. Although they share similar underlying data structures (i.e., maritime region, data types), the source and target domains exhibit distinct data distributions. Therefore, the proposed method consists of two distinct modules: a domain discriminator and a segmentation model, LCNN. Adversarial learning employs the domain discriminator to align LCNN’s output distributions between the source and target domains, thereby achieving domain adaptation from S to H.
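As an illustration of how a 2D Gaussian function can turn a normal-state SLA field into a perturbed one, the sketch below adds a single Gaussian-shaped anomaly to a small grid. This is only an assumption about the form of the perturbation: the text does not specify how the GF is applied, and the function names, grid size, amplitude, and widths used here are hypothetical.

```python
import math


def gaussian_2d(x, y, x0, y0, sigma_x, sigma_y, amplitude=1.0):
    """Value of a 2D Gaussian centred at (x0, y0)."""
    exponent = ((x - x0) ** 2) / (2 * sigma_x ** 2) + ((y - y0) ** 2) / (2 * sigma_y ** 2)
    return amplitude * math.exp(-exponent)


def perturb_sla(sla, x0, y0, sigma_x, sigma_y, amplitude):
    """Return a copy of the SLA grid with a Gaussian anomaly added (assumed scheme)."""
    return [
        [sla[j][i] + gaussian_2d(i, j, x0, y0, sigma_x, sigma_y, amplitude)
         for i in range(len(sla[0]))]
        for j in range(len(sla))
    ]


# Hypothetical 5 x 5 calm field (metres) and a 0.3 m anomaly at the grid centre.
calm = [[0.0] * 5 for _ in range(5)]
harsh = perturb_sla(calm, x0=2, y0=2, sigma_x=1.0, sigma_y=1.0, amplitude=0.3)
```

The perturbed field peaks at the chosen centre and decays smoothly with distance, which changes the data distribution of the target domain while leaving the grid and variable type unchanged.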

Figure 1 illustrates the complete architecture of the proposed method.

Below, we detail the modules and strategies involved in our method.

LCNN: The encoder–decoder architecture has been proven effective for eddy segmentation in various studies. Based on this framework, we develop an eddy segmentation architecture with an ADF, which is referred to as LCNN in this study. The proposed model consists of an attention module, encoder, pyramid pooling module, decoder, 1 × 1 convolution, and a softmax activation function. It progressively encodes the high-dimensional features of the input data, decodes them to restore the segmentation results, and ensures that the model can not only extract deep features of OMEs but also output accurate pixel-level OME masks at high resolution. An attention module is applied to extract spatial weights, enhance the ability to focus on local features in harsh sea states, and avoid false or missed detections caused by strong noise or disturbance. Finally, the 1 × 1 convolution is adopted to merge channels, and the softmax activation function is applied to obtain the segmentation result for each pixel.
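The final two operations, the 1 × 1 convolution and the softmax, act independently on each pixel. The following minimal sketch shows them for a single pixel; the four input channels, the three output classes, and the weight values are hypothetical and serve only to illustrate the mechanics.

```python
import math


def conv1x1(pixel_features, weights, bias):
    """Mix channels at one pixel: one weighted sum per output class."""
    return [
        sum(w * f for w, f in zip(row, pixel_features)) + b
        for row, b in zip(weights, bias)
    ]


def softmax(logits):
    """Numerically stable softmax over the class dimension."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical decoder output at one pixel (4 channels) and 3 assumed classes.
features = [1.0, 2.0, 0.5, -1.0]
weights = [
    [0.1, 0.0, 0.0, 0.0],  # class 0
    [0.0, 1.0, 0.0, 0.0],  # class 1
    [0.0, 0.0, 1.0, 1.0],  # class 2
]
bias = [0.0, 0.0, 0.0]

logits = conv1x1(features, weights, bias)
probs = softmax(logits)
label = max(range(len(probs)), key=probs.__getitem__)
```

Applying the same two functions at every pixel yields the per-pixel class probabilities, and taking the most probable class gives the segmentation mask.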

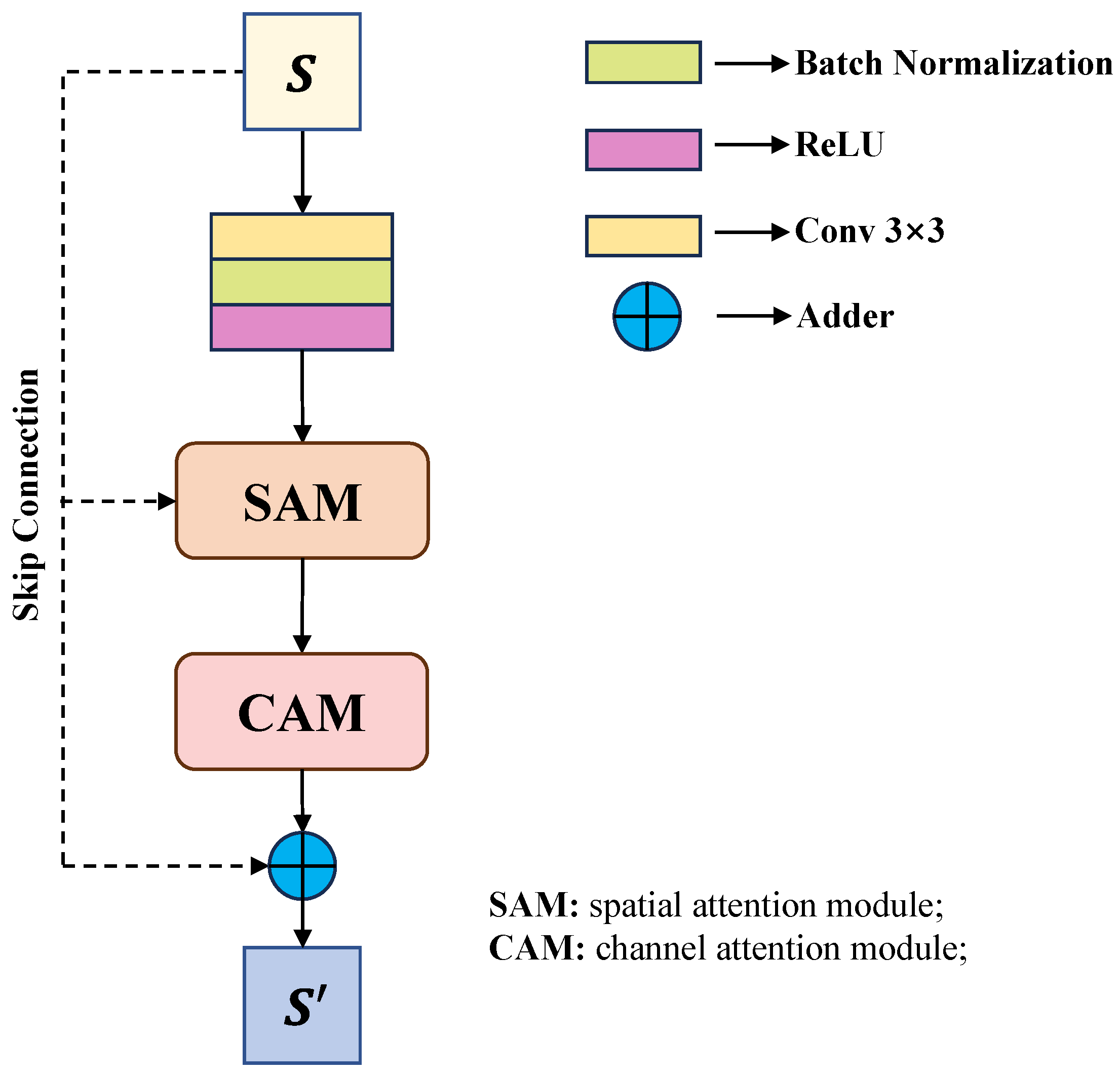

Attention Module:

Figure 2 illustrates the overall structure of the attention module, which is composed of convolutional layers, residual connections (also called skip connections), spatial attention modules (SAMs), and channel attention modules (CAMs). Ahead of the attention block, a 3 × 3 convolutional layer is adopted to convert the original input into a C-channel feature map, where C is a hyperparameter of the architecture.

SAM: The SAM uses a spatial multi-head attention mechanism. As shown in Figure 3, it constructs multiple parallel attention matrices from the feature maps of the convolutional block, revealing pixel relationships in the spatial domain. To construct the attention matrices, an input feature map is projected into Query (Q), Key (K), and Value (V) tensors using three independent convolutional layers, making the projections spatially context-aware. The Q, K, and V tensors are then split into multiple parallel heads H (Mul in Figure 3). Critically, unlike standard spatial attention, our mechanism calculates attention across the feature dimensions within each head [30], thereby modeling the intercorrelations of the learned features. The outputs from all heads are concatenated, fused via a final convolutional layer, and then integrated back using a residual connection. In our implementation, we set the number of heads H to 8 and the hidden feature dimension to 128. The spatial attention is applied to the original pixel matrices through element-wise multiplication, enabling the model to measure similarity among input elements. This process assigns varying levels of importance to each input, which allows the model to focus on the most pertinent information. The multi-head self-attention mechanism captures spatial attention representations by applying weighting within individual channels, while the convolutional layers primarily operate on the spatial dimension, extracting local features and spatial patterns. This is crucial for detecting eddy boundaries, rotation directions, and SSH anomalies in the eddy core region, covering both the local and overall eddy structures.
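The idea of computing attention across feature dimensions within a head, rather than across pixel positions, can be sketched as follows. This is a simplified illustration under stated assumptions: each head holds d feature rows with n pixel values each, the attention matrix is d × d, and the scaling by the square root of n is an assumed choice. The helper names are hypothetical, and the convolutional projections and residual connection are omitted.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def split_heads(channels, num_heads):
    """Split a list of channels into equally sized heads."""
    per_head = len(channels) // num_heads
    return [channels[h * per_head:(h + 1) * per_head] for h in range(num_heads)]


def feature_attention(q, k, v):
    """One head: q, k, v are lists of d feature rows with n pixel values each."""
    n = len(q[0])
    scale = 1.0 / math.sqrt(n)  # assumed scaling factor
    # d x d similarity between feature rows, computed over all pixels.
    scores = [[scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]
              for q_row in q]
    attn = [softmax(r) for r in scores]
    # Each output feature row is a weighted mixture of the value rows.
    out = [[sum(w * v_row[p] for w, v_row in zip(attn_row, v)) for p in range(n)]
           for attn_row in attn]
    return attn, out


# Toy head with d = 2 features and n = 3 pixels.
q = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
k = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
v = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
attn, out = feature_attention(q, k, v)

# With a hidden dimension of 128 and 8 heads, each head holds 16 features.
heads = split_heads(list(range(128)), 8)
```

Because the attention matrix has size d × d per head instead of n × n, its cost does not grow quadratically with the number of pixels, which is one common motivation for attending over features.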

CAM: Each channel is generally considered an independent feature map, and this independence assumption prevents the model from capturing the relationships between various channels. Different channels commonly carry information with different characteristics. The ability of the CAM to enhance or suppress certain features helps to extract significant representations more effectively.

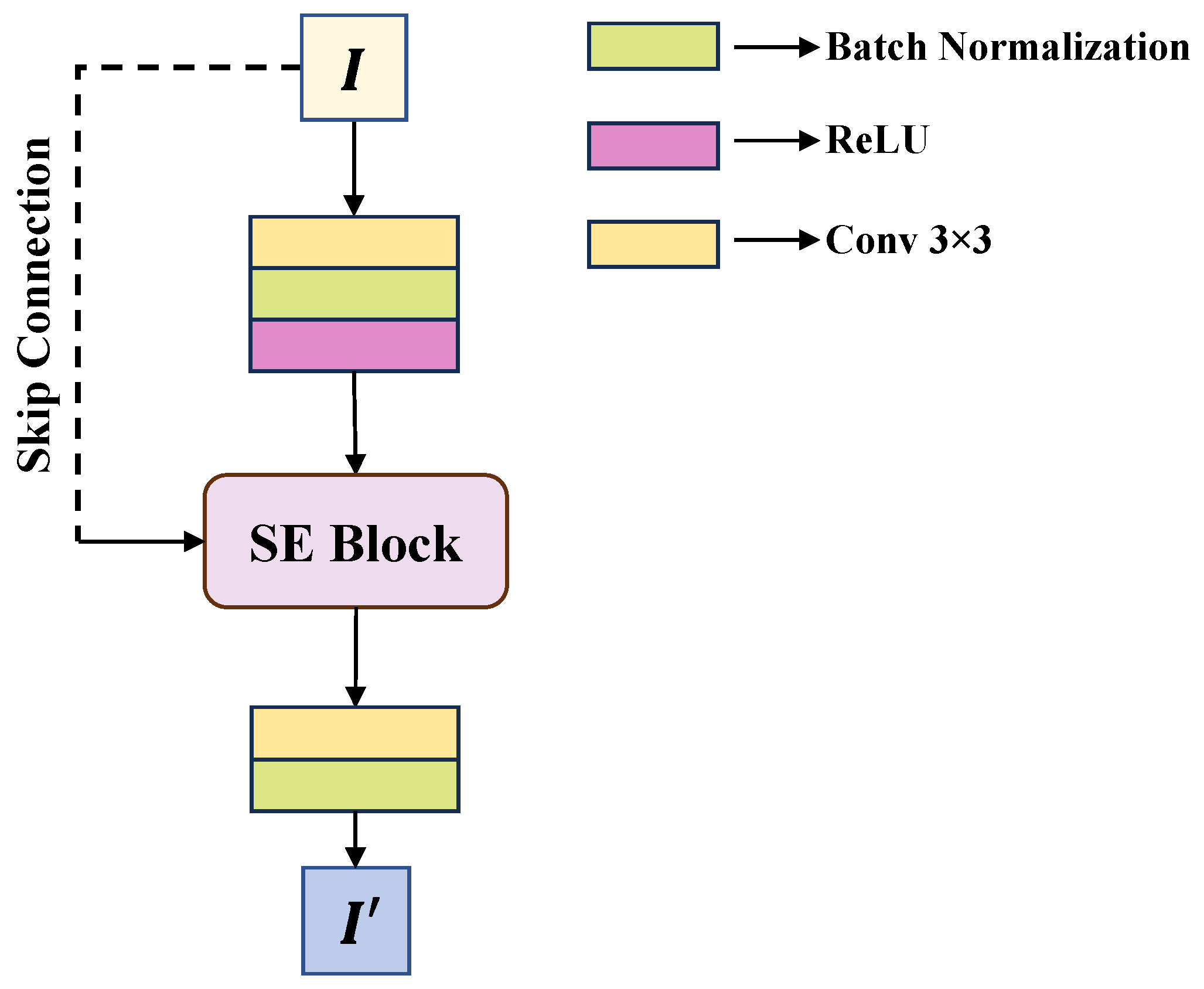

Our proposed CAM, illustrated in Figure 4, is implemented as a residual block integrated with a channel attention mechanism. Specifically, for a given input feature map, the module first processes it through a primary path containing a 3 × 3 convolution, a Rectified Linear Unit (ReLU) activation, and a Batch Normalization layer; the ReLU introduces nonlinearity into the model by setting all negative values to zero. In parallel, a shortcut connection processes the original input through a projection layer (a 1 × 1 convolution) to match feature dimensions. The outputs of the primary path and the shortcut are then fused via element-wise addition. This fused feature map is subsequently fed into a Squeeze-and-Excitation (SE) block [31], which dynamically determines the weight of each channel. The SE block first applies global average pooling to ‘squeeze’ spatial information. Then, an ‘excitation’ step applies a two-layer fully connected network to learn channel-wise relationships. Crucially, this network employs a bottleneck architecture controlled by a reduction ratio. Finally, a 1 × 1 convolution maps the re-weighted features to the desired number of output channels.

With this composite CAM structure, the model can autonomously assign weights to different channels, optimize feature extraction more efficiently, enhance the importance of eddy-related features, and reduce the interference of strong wind-induced background flow. This leads to more precise and reliable eddy segmentation under harsh sea states.
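The squeeze and excitation steps can be written out in a few lines. The sketch below is a simplified illustration: biases are omitted, the four channels, the reduction ratio of 2, and the weight values are hypothetical, and the surrounding convolutions of the CAM are not included.

```python
import math


def se_block(feature_map, w1, w2):
    """feature_map: C channels, each a 2D list. w1: (C/r) x C, w2: C x (C/r)."""
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_map]
    # Excitation, layer 1: bottleneck projection followed by ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    # Excitation, layer 2: back to C channels, sigmoid gives gates in (0, 1).
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Re-weight every value of a channel by that channel's gate.
    scaled = [[[g * val for val in row] for row in ch]
              for ch, g in zip(feature_map, gates)]
    return gates, scaled


# Hypothetical input: C = 4 channels of size 2 x 2, reduction ratio r = 2.
fmap = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[2.0, 0.0], [0.0, 2.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[4.0, 4.0], [4.0, 4.0]],
]
w1 = [[0.5, 0.0, 0.0, 0.5], [0.0, 1.0, 1.0, 0.0]]
w2 = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [1.0, 1.0]]
gates, scaled = se_block(fmap, w1, w2)
```

The bottleneck keeps the number of parameters in the two fully connected layers small, while the sigmoid gates let the block amplify or suppress whole channels.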

Figure 4 illustrates the CAM proposed in this study.

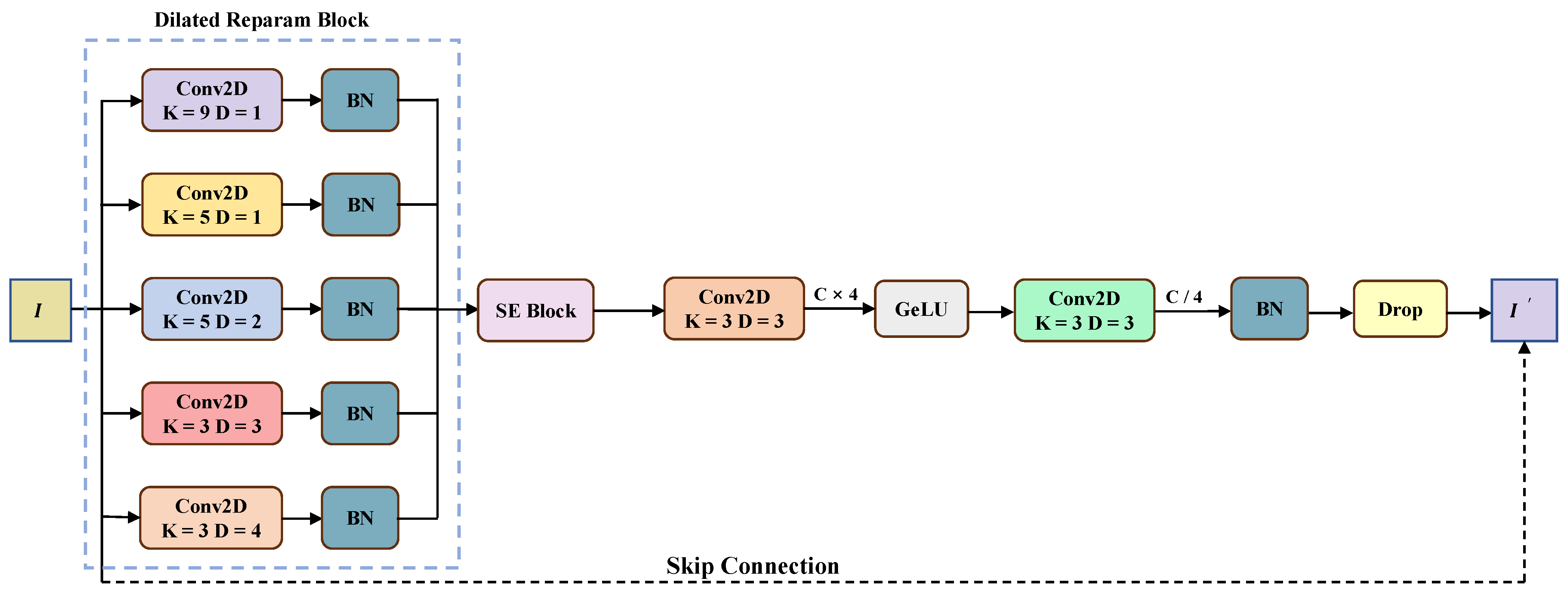

Encoder: The encoder is composed of stacked feature extraction modules (FEMs). As shown in Figure 5, each FEM consists of a Dilated Reparam Block, an SE Block, and a feedforward neural network. The Dilated Reparam Block (DRB) proposed by Ding et al. [23] consists of five parallel dilated convolutions and batch normalization layers. From a parameter perspective, a dilated convolutional layer is equivalent to a standard convolutional layer with a larger, sparsely populated kernel, so the entire DRB acts as a large kernel convolution (LKC) operator. Recent research has shown that LKC is crucial for unlocking strong performance of existing model architectures in areas where they were not originally adept. Using LKC increases the model’s receptive field and enhances the abstraction level of spatial patterns when dealing with complex fluctuations and various irregular eddy forms on the sea surface in harsh sea states. Additionally, it introduces more learnable parameters and nonlinear elements to increase the model’s representation capacity. Furthermore, a dropout layer is placed at the end of the transition block to reduce the risk of overfitting.
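The equivalence between a dilated convolution and a larger, sparsely populated kernel can be checked directly. The sketch below is illustrative only: it inserts zeros between the taps of a small kernel and verifies on a toy image that both forms give the same result. The helper names and the 3 × 3 kernel with dilation 2 are hypothetical choices, not the DRB configuration.

```python
def dilated_to_dense(kernel, dilation):
    """Expand a k x k kernel with the given dilation into its sparse dense form."""
    k = len(kernel)
    size = (k - 1) * dilation + 1  # equivalent kernel size
    dense = [[0.0] * size for _ in range(size)]
    for a in range(k):
        for b in range(k):
            dense[a * dilation][b * dilation] = kernel[a][b]
    return dense


def correlate_valid(image, kernel, dilation=1):
    """Cross-correlation without padding, as used in convolutional layers."""
    k = len(kernel)
    span = (k - 1) * dilation + 1
    h, w = len(image), len(image[0])
    return [
        [sum(kernel[a][b] * image[j + a * dilation][i + b * dilation]
             for a in range(k) for b in range(k))
         for i in range(w - span + 1)]
        for j in range(h - span + 1)
    ]


small = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
dense = dilated_to_dense(small, dilation=2)  # 5 x 5 kernel with 9 non-zero taps

image = [[float(j * 7 + i) for i in range(6)] for j in range(6)]
out_dilated = correlate_valid(image, small, dilation=2)
out_dense = correlate_valid(image, dense, dilation=1)
```

Since every dilated branch can be rewritten as a dense kernel of this kind, parallel branches with different dilation rates can in principle be summed into one large kernel, which is the sense in which the block behaves as a single large kernel operator.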

The encoder comprises three stages. In each stage, the FEM uses a DRB to perform a 2-fold channel expansion on the feature map; therefore, the numbers of channels in the three stages are C, 2C, and 4C, respectively. Skip connections were proposed for ResNet [32] as a structure in deep neural networks that allows the output of a specific layer to skip over one or more intermediate layers and connect directly to the input of a subsequent layer. In this study, we design skip connections spanning the entire block, which allow the original input I to be added directly to the block output. This addresses the issues of vanishing and exploding gradients and thus enables the effective training of deeper model architectures.

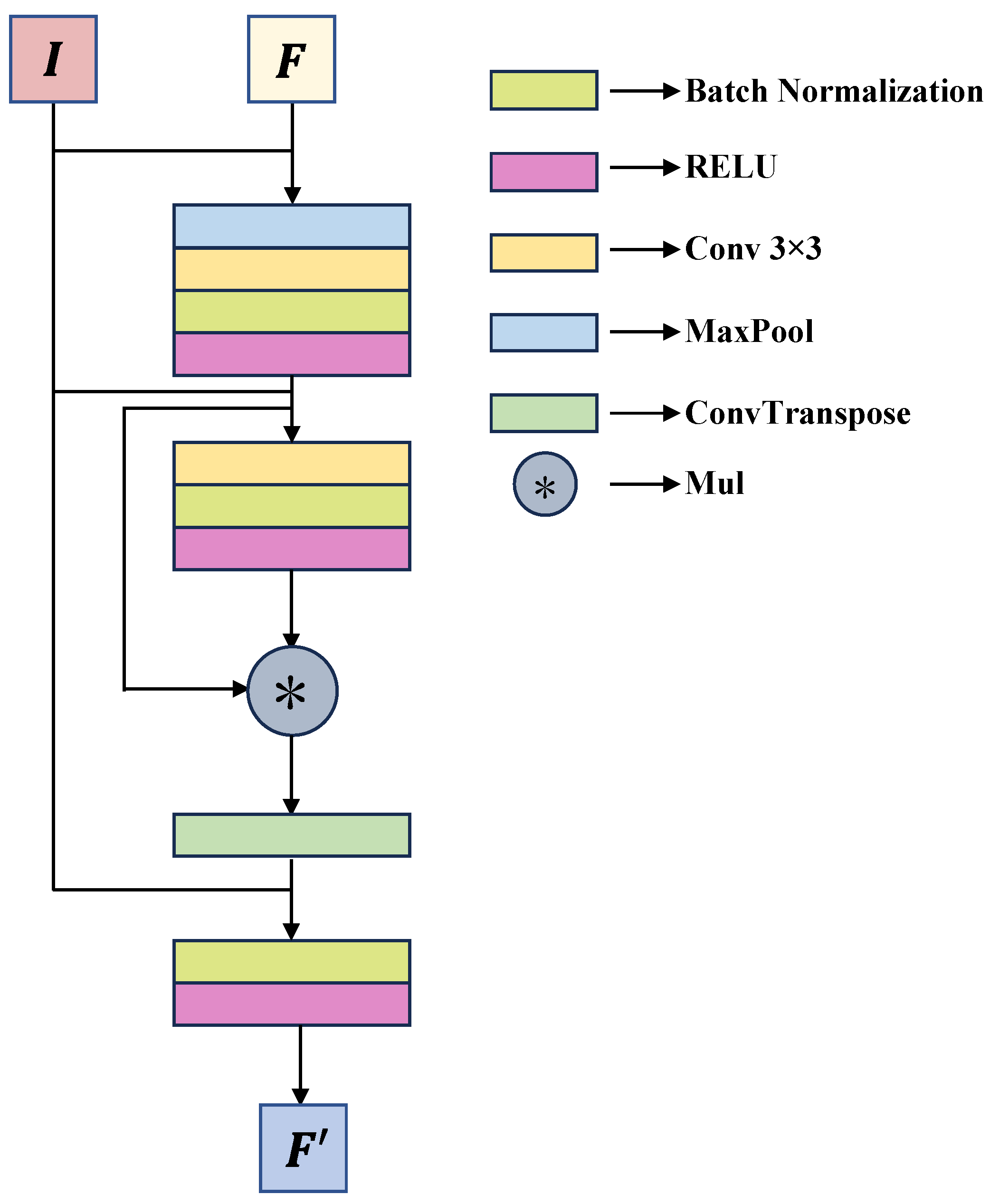

Decoder: The decoder is built from stacked feature segmentation modules (FSMs), shown in Figure 6. Each FSM consists of batch normalization, ReLU activation, 3 × 3 convolution, max pooling, and transposed convolution operations. In particular, the input feature F merges with the skip connection feature I originating from the encoder. Max pooling is applied to reduce redundant information and to enlarge the receptive field, while a 3 × 3 convolution enhances the local feature representation. Then, features are normalized and passed through a nonlinear activation. Finally, features are upsampled with a transposed convolution to restore the spatial resolution. During this process, feature I is concatenated with the features processed by the FSM, compensating for the detail loss caused by pooling and enhancing the precision of the segmentation boundaries. This design balances computational efficiency and feature retention.

Discriminator: Inspired by the method in [26], we train the discriminator and LCNN using an ADF in the output space, where the discriminator determines whether the segmentation outputs come from the source or the target domain. This process aligns the features in normal sea states with those in harsh sea states. In this study, we modify the architecture from [26] to design a new discriminator, which comprises four downsampling blocks and a classification layer; each downsampling block includes a convolutional layer that doubles the number of channels, followed by a downsampling layer. Consequently, the numbers of channels in the convolutional layers of the downsampling blocks are 64, 128, 256, and 512, respectively.

Each convolutional layer is followed by a batch normalization layer [33] and a LeakyReLU activation function [34]. The LeakyReLU layer addresses the ‘neuron death’ problem associated with the standard ReLU activation function. When the input is less than zero, LeakyReLU lets the negative value pass through scaled by a small slope (e.g., 0.01) instead of setting it directly to zero. This design keeps the gradient nonzero for negative inputs, keeping neurons active during training and facilitating the learning of more complex feature mappings. The final classification layer uses a convolution to merge the channels and produce the discrimination results.
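The two ingredients described above, the LeakyReLU activation and the channel progression of the four downsampling blocks, can be sketched in a few lines. The slope of 0.01 and the channel counts 64 to 512 come from the text; halving the spatial size in every block and the 64 × 64 input are assumptions made only for this illustration.

```python
def leaky_relu(x, negative_slope=0.01):
    """Pass positive inputs unchanged; scale negative inputs by a small slope."""
    return x if x >= 0 else negative_slope * x


def discriminator_shapes(height, width, base_channels=64, num_blocks=4):
    """Track (channels, height, width) after each downsampling block.

    Assumes each block halves the spatial size; the actual factor is not
    stated in the text.
    """
    shapes = []
    channels = base_channels
    for _ in range(num_blocks):
        height, width = height // 2, width // 2
        shapes.append((channels, height, width))
        channels *= 2
    return shapes


activations = [leaky_relu(v) for v in (-2.0, 0.0, 3.0)]
shapes = discriminator_shapes(64, 64)
```

For the hypothetical 64 × 64 input this gives feature maps of 64, 128, 256, and 512 channels with progressively smaller spatial extent, after which the classification layer merges the channels.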

The training of LCNN and the discriminator alternates between two steps. In the first step, with LCNN’s weights frozen, the discriminator is trained with a binary cross-entropy loss to distinguish segmentation outputs of the source domain (normal sea states) from those of the target domain (harsh sea states). In the second step, the discriminator’s weights are frozen, and LCNN is updated. LCNN’s total loss combines its primary segmentation loss with an adversarial component: to encourage LCNN to generate outputs that fool the discriminator, the adversarial loss, weighted by a hyperparameter, is subtracted from the segmentation loss, and LCNN is updated on this combined loss. This alternating schedule promotes stable training and aligns the feature distributions across the two domains.
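The two losses involved in the alternating scheme can be sketched numerically. The following is a minimal illustration, assuming that the discriminator outputs the probability of an input coming from the source domain, that source outputs are labeled 1 and target outputs 0, and that the adversarial term is the discriminator's binary cross-entropy. The function names and the weight `lam = 0.1` are hypothetical and are not the authors' settings.

```python
import math


def bce(prob, target, eps=1e-7):
    """Binary cross-entropy for one prediction, clipped for numerical safety."""
    p = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(p_source, p_target):
    # Step 1 (LCNN frozen): source outputs are labelled 1, target outputs 0.
    return bce(p_source, 1.0) + bce(p_target, 0.0)


def lcnn_loss(seg_loss, p_source, p_target, lam):
    # Step 2 (discriminator frozen): the weighted adversarial term is
    # subtracted, so LCNN is rewarded when the discriminator is confused.
    return seg_loss - lam * discriminator_loss(p_source, p_target)


lam = 0.1  # hypothetical weight
loss_when_separable = lcnn_loss(1.0, p_source=0.9, p_target=0.1, lam=lam)
loss_when_confused = lcnn_loss(1.0, p_source=0.5, p_target=0.5, lam=lam)
```

With the same segmentation loss, the total LCNN loss is lower when the discriminator cannot tell the two domains apart, which is the pressure that drives the output distributions of the two domains together.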

The pseudocode of the used discriminator is shown in Algorithm 1.

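| Algorithm 1 Discriminator |

1: Input: tensor x of shape B × C × H × W, where B is the batch size, C is the number of channels, H and W are the height and width, and n is the number of hidden feature channels.

2: Initialize Layers:

3: Block1 ← Conv(C → n), BatchNorm, LeakyReLU, Downsample

4: Block2 ← Conv(n → 2n), BatchNorm, LeakyReLU, Downsample

5: Block3 ← Conv(2n → 4n), BatchNorm, LeakyReLU, Downsample

6: Block4 ← Conv(4n → 8n), BatchNorm, LeakyReLU, Downsample

7: Classifier ← Conv merging the 8n channels into the discrimination output

8: Forward Pass:

9: x ← Block1(x)

10: x ← Block2(x)

11: x ← Block3(x)

12: x ← Block4(x)

13: x ← Classifier(x)

14: return: x (output tensor)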

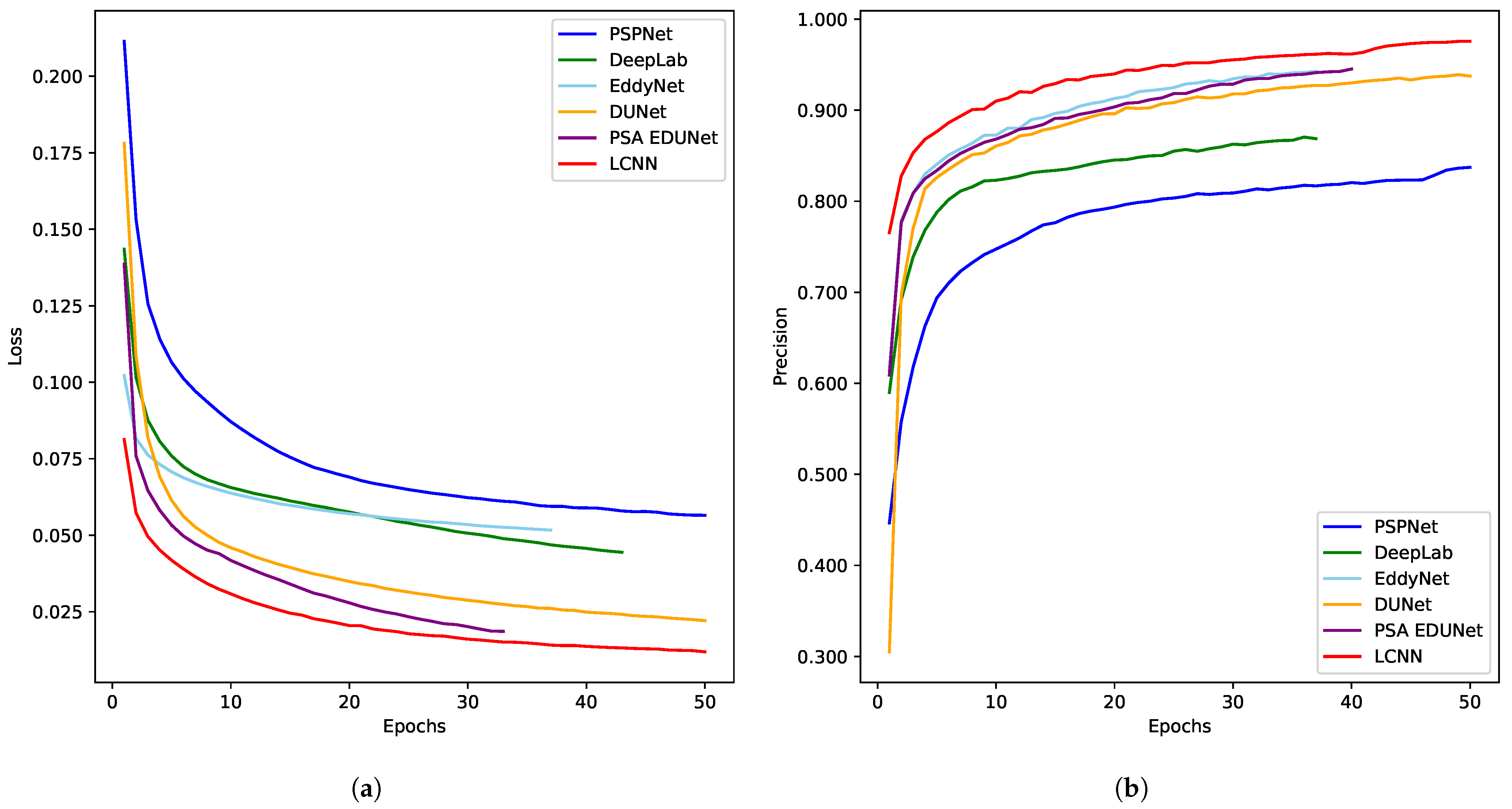

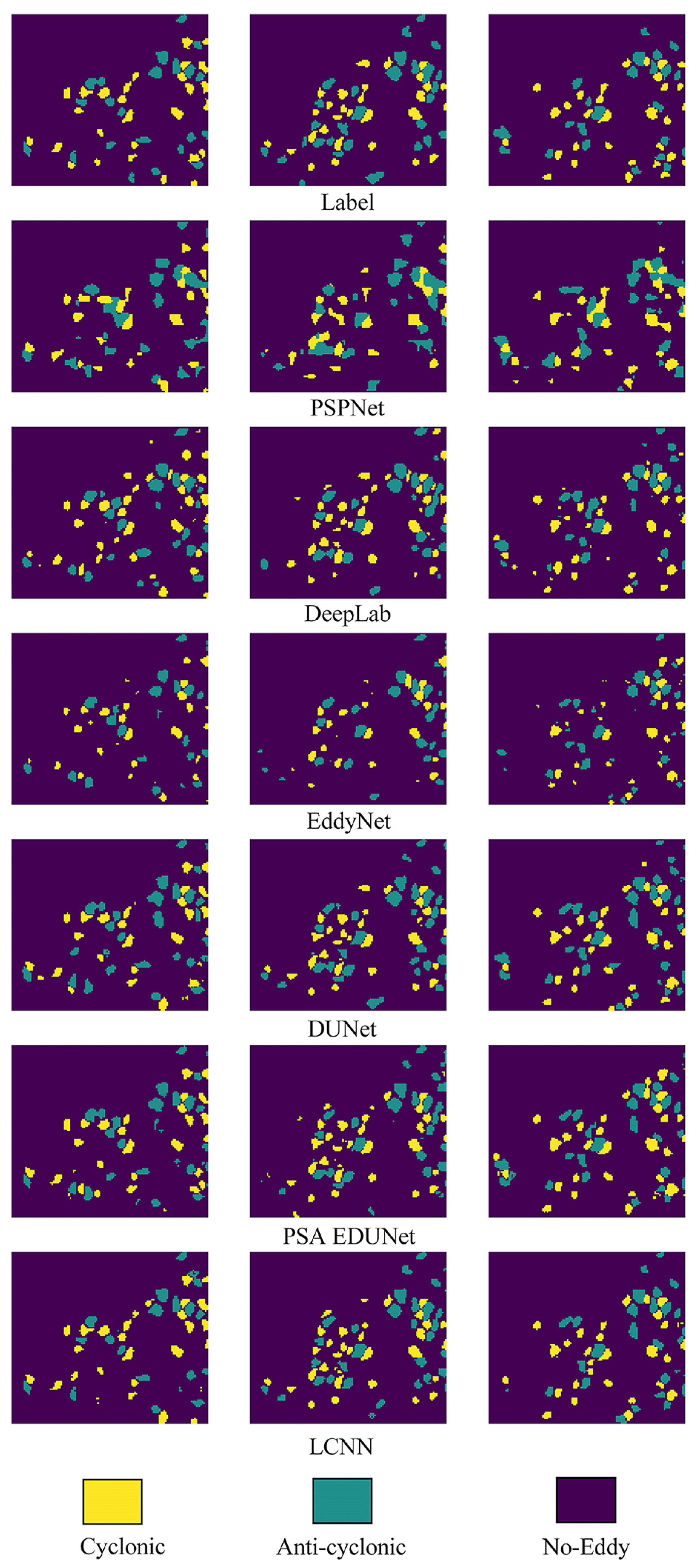

4. Discussion

The results of this study strongly indicate that framing the challenge of OME detection under harsh sea states as a domain adaptation problem is a highly effective strategy. The superior performance of the proposed model (LCNN), particularly the 7.2% improvement in mIoU over a strong baseline, is not merely an incremental gain. It highlights the efficacy of the model’s core components in handling the specific challenges of this task. The large kernel convolution expands the model’s receptive field to capture the distorted and irregular shapes of eddies in severe weather more accurately. At the same time, the channel–spatial attention mechanism focuses on the most salient features. Most critically, the success of the adversarial learning framework (ADF) confirms our working hypothesis: explicitly learning domain-invariant features is essential to bridge the significant distribution gap between normal and harsh sea states. By contrast, previous approaches are effective in normal conditions but lack a dedicated mechanism to counteract the domain shift induced by extreme weather, which explains their performance degradation.

The primary contribution of this study is to provide a new paradigm for this specific oceanographic challenge. While other studies have explored multimodal data to enhance eddy detection [39], our research demonstrates that addressing the fundamental problem of domain shift can yield substantial improvements even with single-modal data. The ability to reliably identify OMEs in severe weather has significant scientific and practical implications. It opens a new avenue for studying critical phenomena, such as typhoon–eddy interactions, and provides a more robust tool for applications requiring high-precision ocean forecasting and maritime safety alerts.

Nevertheless, our study has limitations, which also point toward clear directions for future research. Firstly, the model’s reliance on SLA data alone, while powerful, could be a constraint. We plan to develop a multimodal OME detection method that incorporates other oceanic or atmospheric variables, such as SST and flow velocity, to better capture the complex interplay of factors during harsh sea states. Secondly, the use of a 2D Gaussian function to mathematically simulate the impact of harsh sea states is a simplification that does not represent the underlying physical processes. Although effective, it is a source of uncertainty. To address this, we will seek to integrate coupled atmosphere–ocean models or advanced numerical ocean models in future work. This will provide a more realistic simulation of the nonlinear dynamics of typhoon-induced wind and waves, further closing the gap between synthetic training data and real-world conditions.