HSSTN: A Hybrid Spectral–Structural Transformer Network for High-Fidelity Pansharpening

Abstract

Highlights

- A Hybrid Spectral–Structural Transformer Network (HSSTN) is proposed, using an asymmetric architecture and hierarchical fusion to reduce spectral distortion and spatial degradation.

- HSSTN is evaluated on three satellite datasets (GaoFen-2, QuickBird, and WorldView-3) against eleven competing methods, achieving the best or near-best scores on most quantitative metrics, together with sharper details and fewer artefacts in visual comparisons.

- This study confirms that an asymmetric, hybrid architecture tailored to different data modalities is a highly effective strategy to overcome the inherent performance trade-offs of single-paradigm models, successfully resolving the core conflict between spatial detail enhancement and spectral fidelity.

- The proposed hierarchical fusion network provides a flexible and powerful blueprint for integrating features from heterogeneous remote sensing sources. This progressive fusion approach offers a promising pathway for developing more robust multimodal and multi-scale models in the future.

1. Introduction

- A novel end-to-end pansharpening framework, the Hybrid Spectral–Structural Transformer Network (HSSTN), is proposed. HSSTN is built on a three-stage synergistic enhancement strategy comprising an asymmetric dual-stream encoder, a hierarchical fusion network, and a multi-objective loss function, which together ensure a balanced restoration of spectral and spatial information.

- An asymmetric architecture is designed to address the inherent modality gap between PAN and MS imagery. It includes a dual-stream encoder that assigns specialised pathways to PAN and MS data: the PAN branch uses a pure Transformer to maximise spatial detail extraction, while the MS branch employs a hybrid CNN–Transformer design to prioritise spectral preservation before contextual modelling. A hierarchical fusion network then progressively integrates these optimised features, moving from fine-grained detail injection to multi-scale structural aggregation, thereby ensuring controlled spatial enhancement while mitigating spectral distortion.

- A synergistic optimisation framework is introduced via a multi-objective loss function. This loss function simultaneously constrains pixel-level fidelity (L1), structural similarity (SSIM), and global spectral integrity (ERGAS), guiding the network to achieve a comprehensive and balanced optimisation across all critical quality metrics during the training process.
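
The way the three loss terms combine can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the weights `w_l1`, `w_ssim`, and `w_ergas`, the single-window (global) SSIM, the SSIM constants, and the resolution ratio of 4 are all assumptions, since this section does not state the actual weights or SSIM windowing. Images are represented as a list of bands, each a flat list of pixel values in [0, 1].

```python
import math

def l1_loss(pred, target):
    """Mean absolute error over all bands and pixels."""
    diffs = [abs(p - t) for pb, tb in zip(pred, target) for p, t in zip(pb, tb)]
    return sum(diffs) / len(diffs)

def global_ssim(x, y, data_range=1.0):
    """SSIM of one band from global statistics (no sliding window; a simplification)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx * mx + my * my + c1) * (vx + vy + c2))

def ergas(pred, target, ratio=4):
    """ERGAS = (100 / ratio) * sqrt(mean over bands of MSE_k / mean_k^2)."""
    terms = []
    for pb, tb in zip(pred, target):
        mse = sum((p - t) ** 2 for p, t in zip(pb, tb)) / len(tb)
        mean_t = sum(tb) / len(tb)  # assumed non-zero
        terms.append(mse / (mean_t ** 2))
    return 100.0 / ratio * math.sqrt(sum(terms) / len(terms))

def total_loss(pred, target, w_l1=1.0, w_ssim=0.1, w_ergas=0.01):
    """Weighted sum of L1, (1 - SSIM), and ERGAS; the weights are hypothetical."""
    ssim_mean = sum(global_ssim(p, t) for p, t in zip(pred, target)) / len(target)
    return (w_l1 * l1_loss(pred, target)
            + w_ssim * (1.0 - ssim_mean)
            + w_ergas * ergas(pred, target))
```

All three terms vanish when the prediction equals the reference, so the combined loss is zero only for a perfect reconstruction. In a training setting the same structure would be written with a differentiable tensor library and a windowed SSIM.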

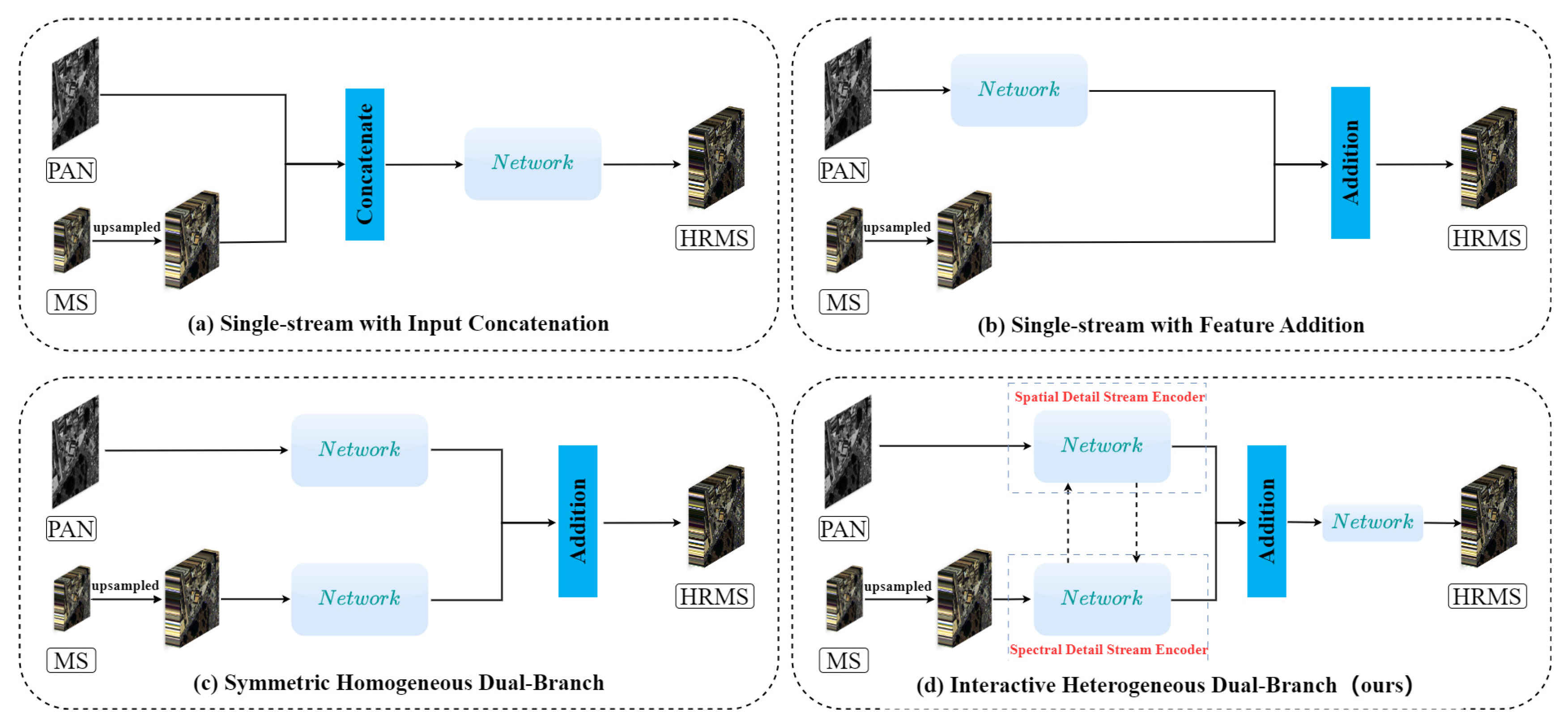

2. Related Work

2.1. Supervised Pansharpening

2.2. Unsupervised Pansharpening

3. Proposed Methods

3.1. Overview of the Proposed Approach

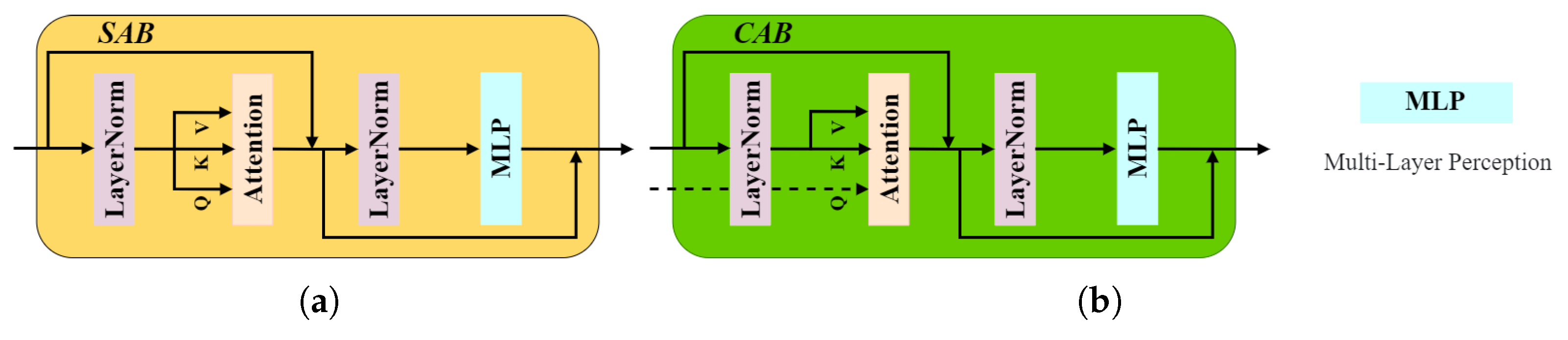

3.2. Dual-Stream Feature Extractor

3.2.1. Panchromatic Detail Stream Encoder

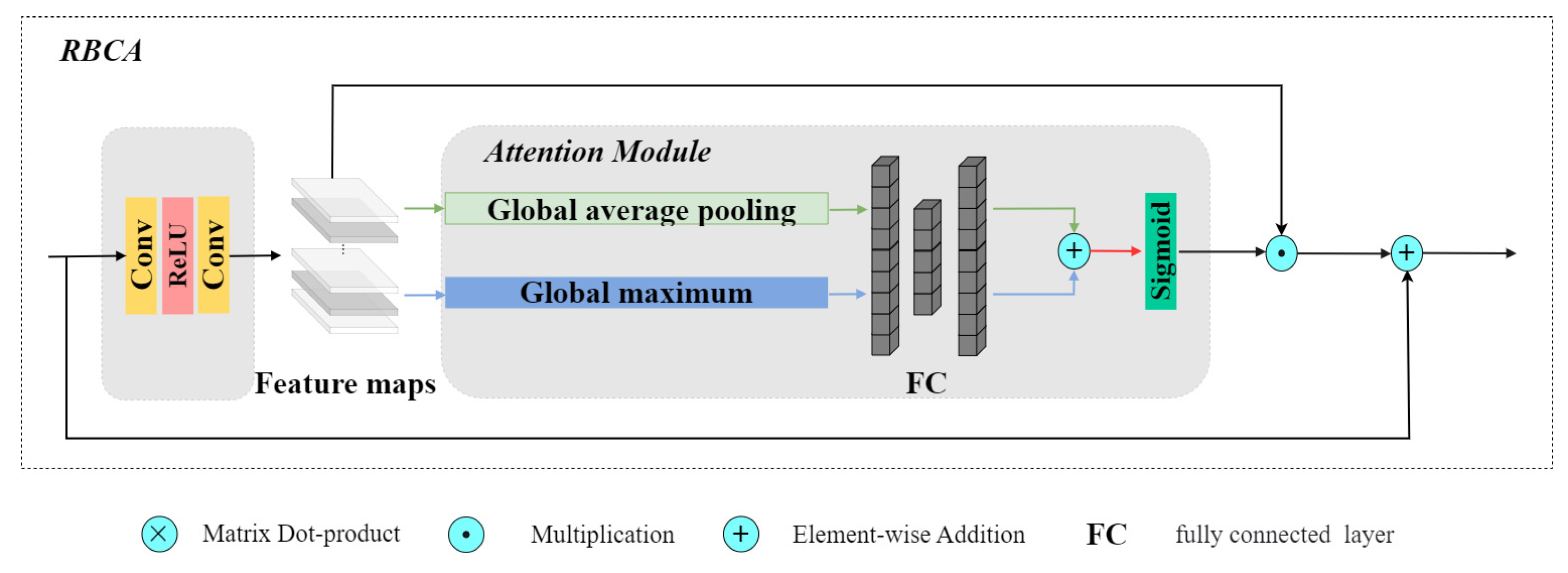

3.2.2. MS-RBCA Spectral Encoder

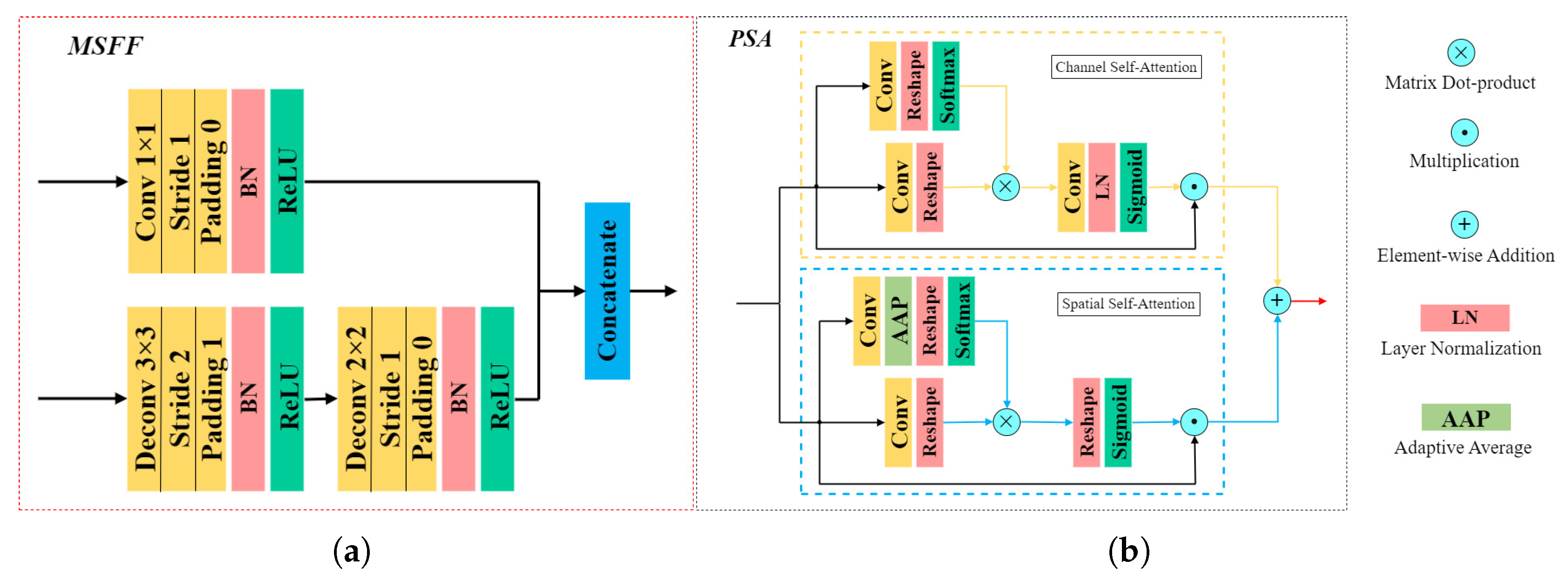

3.3. Hierarchical Fusion Network

3.3.1. Shallow Fusion

3.3.2. Mid-Level Fusion

3.3.3. Deep Fusion

3.4. Image Reconstruction Head

3.4.1. Image Reconstruction Architecture

3.4.2. Synergistic Optimisation Loss Function

4. Experiments and Results

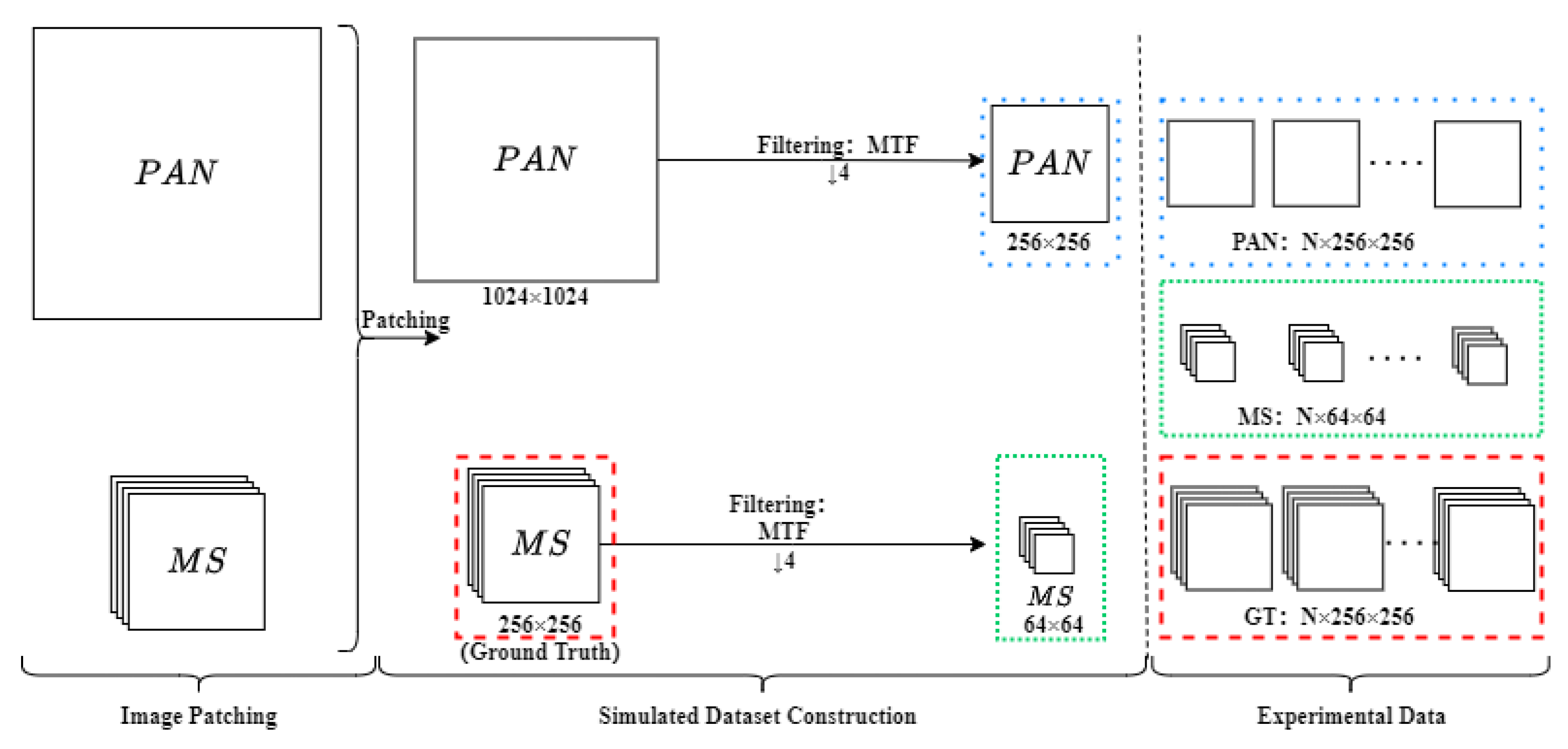

4.1. Datasets

- QuickBird: This dataset covers Vidhansabha, Jaipur, Rajasthan, India, and was acquired on 18 October 2013, with a resolution of 2.8 m for MS and 0.7 m for PAN. The PAN image is 256 × 256 pixels and the MS image is 64 × 64 pixels.

- GaoFen-2: This dataset covers an urban area of Guangzhou, China, and was acquired in August 2014. The GaoFen-2 satellite provides a PAN image with a spatial resolution of 0.8 m and a 4-band MS image with a resolution of 3.2 m. The sensor records data at 10-bit depth in the Blue, Green, Red, and NIR spectral bands.

- WorldView-3: This dataset covers an urban area of Adelaide, South Australia, and was acquired on 27 November 2014 by the WorldView-3 sensor, which provides a high-resolution PAN channel at 0.3 m and an 8-band MS bundle at 1.2 m. The pansharpened MS image to be estimated has 256 × 256 pixels in each of its 8 bands.
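
The resolutions quoted in the list above fix the MS-to-PAN scale ratio, and hence the relationship between PAN and MS patch sizes. A small sketch of that arithmetic, using only the figures stated in the list (the helper names `scale_ratio` and `ms_patch_size` are hypothetical):

```python
def scale_ratio(ms_res_m, pan_res_m):
    """Ratio between MS and PAN ground sampling distances, rounded to an integer."""
    return round(ms_res_m / pan_res_m)

def ms_patch_size(pan_size, ratio):
    """Side length of the MS patch covering the same footprint as a PAN patch."""
    return pan_size // ratio

# (MS resolution, PAN resolution) in metres, as quoted in the dataset list.
sensors = {
    "QuickBird": (2.8, 0.7),
    "GaoFen-2": (3.2, 0.8),
    "WorldView-3": (1.2, 0.3),
}

for name, (ms_res, pan_res) in sensors.items():
    ratio = scale_ratio(ms_res, pan_res)
    print(name, "ratio:", ratio, "MS patch side for a 256-pixel PAN patch:", ms_patch_size(256, ratio))
```

All three sensors give a ratio of 4, which matches the 256 × 256 PAN and 64 × 64 MS patch sizes quoted for QuickBird.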

4.2. Evaluation Indicators and Comparison Methods

4.3. Experimental Details

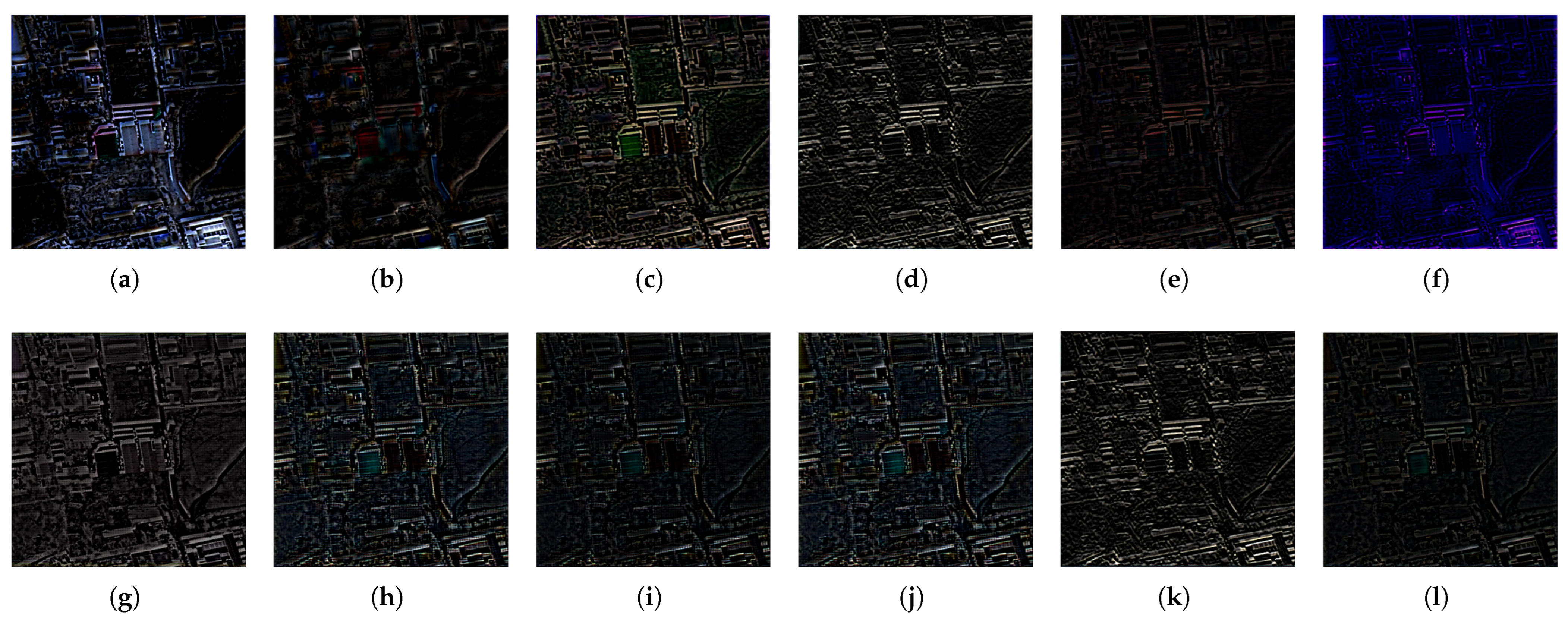

4.4. Experimental Results

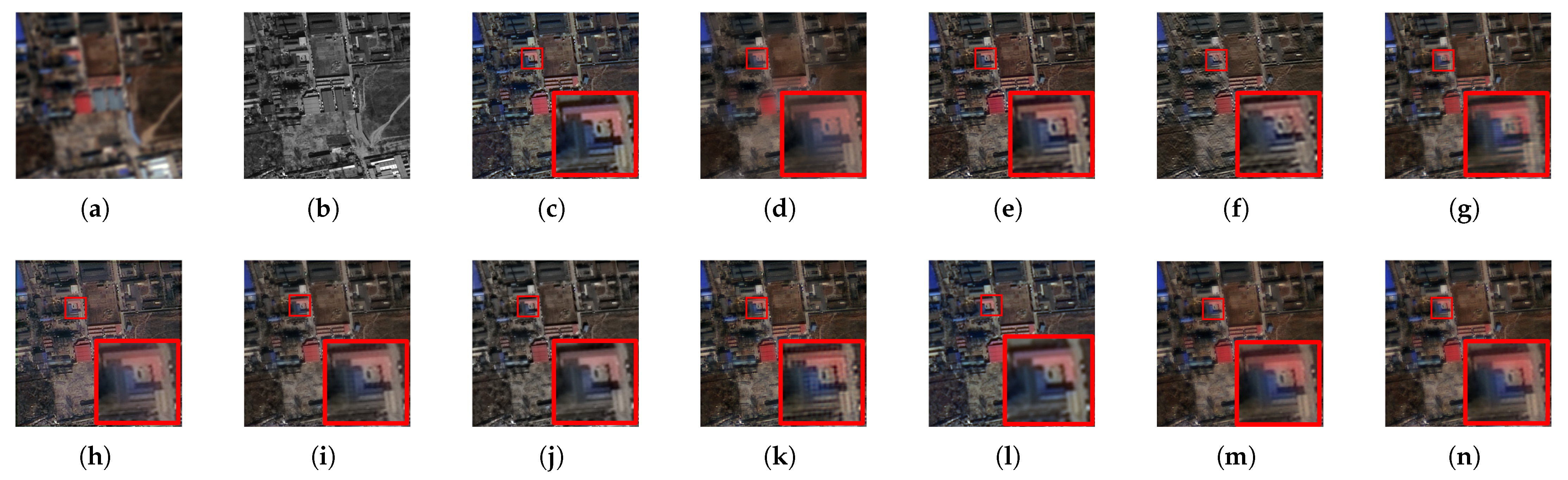

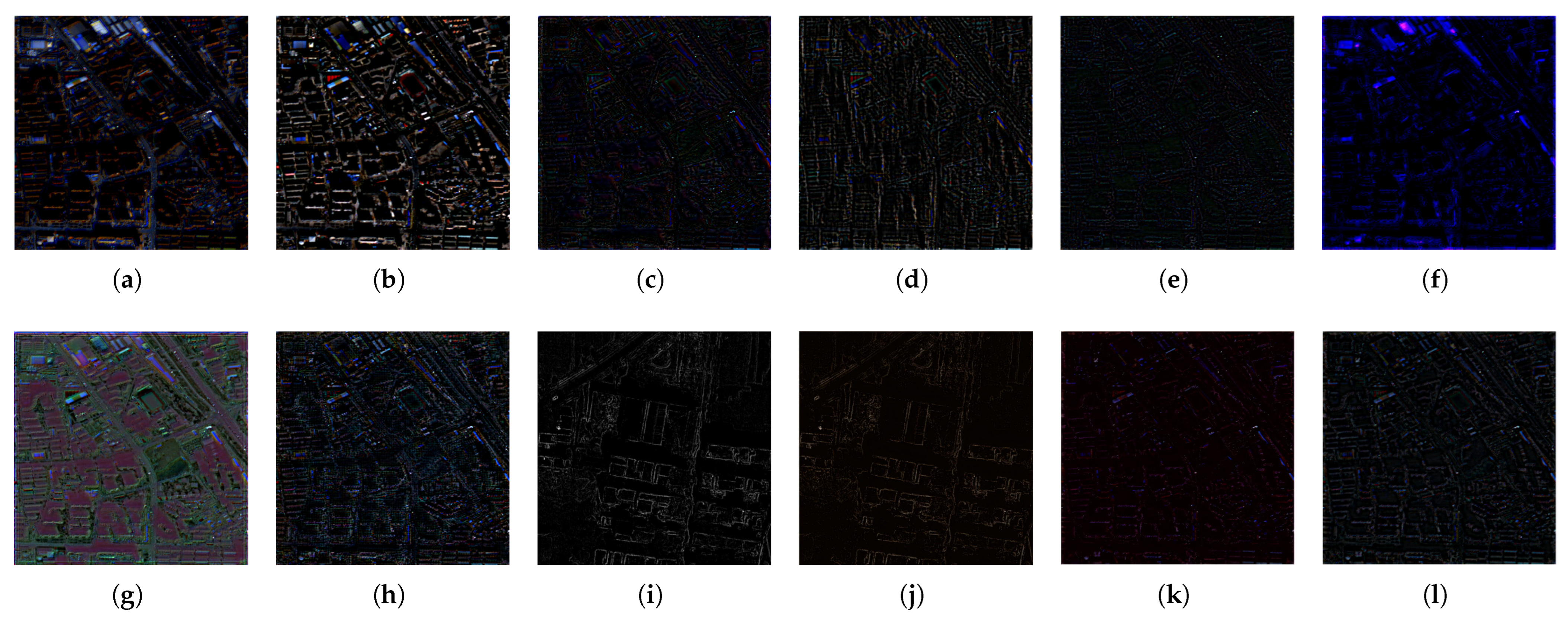

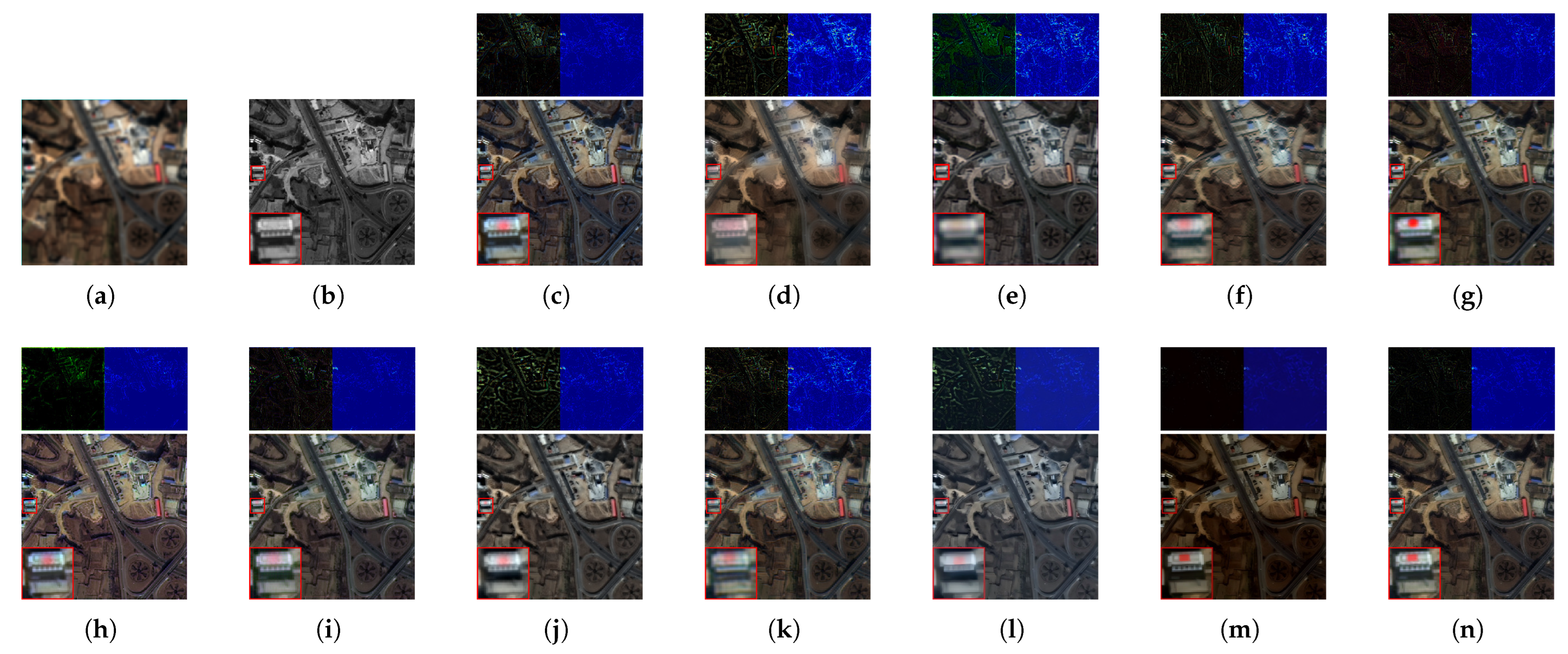

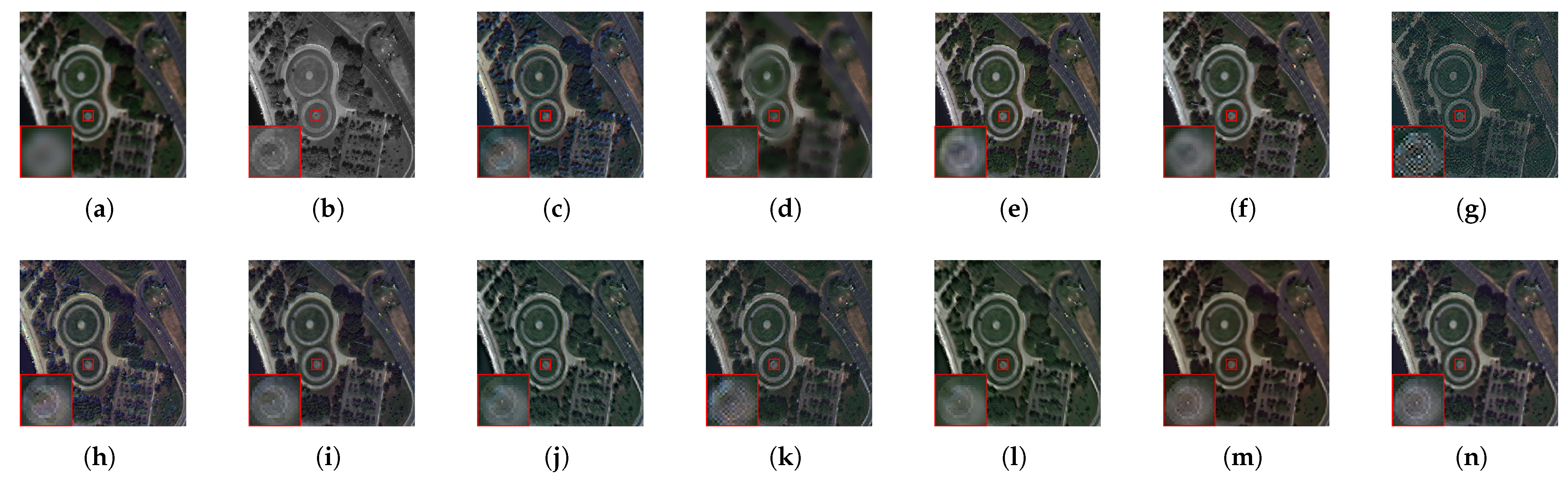

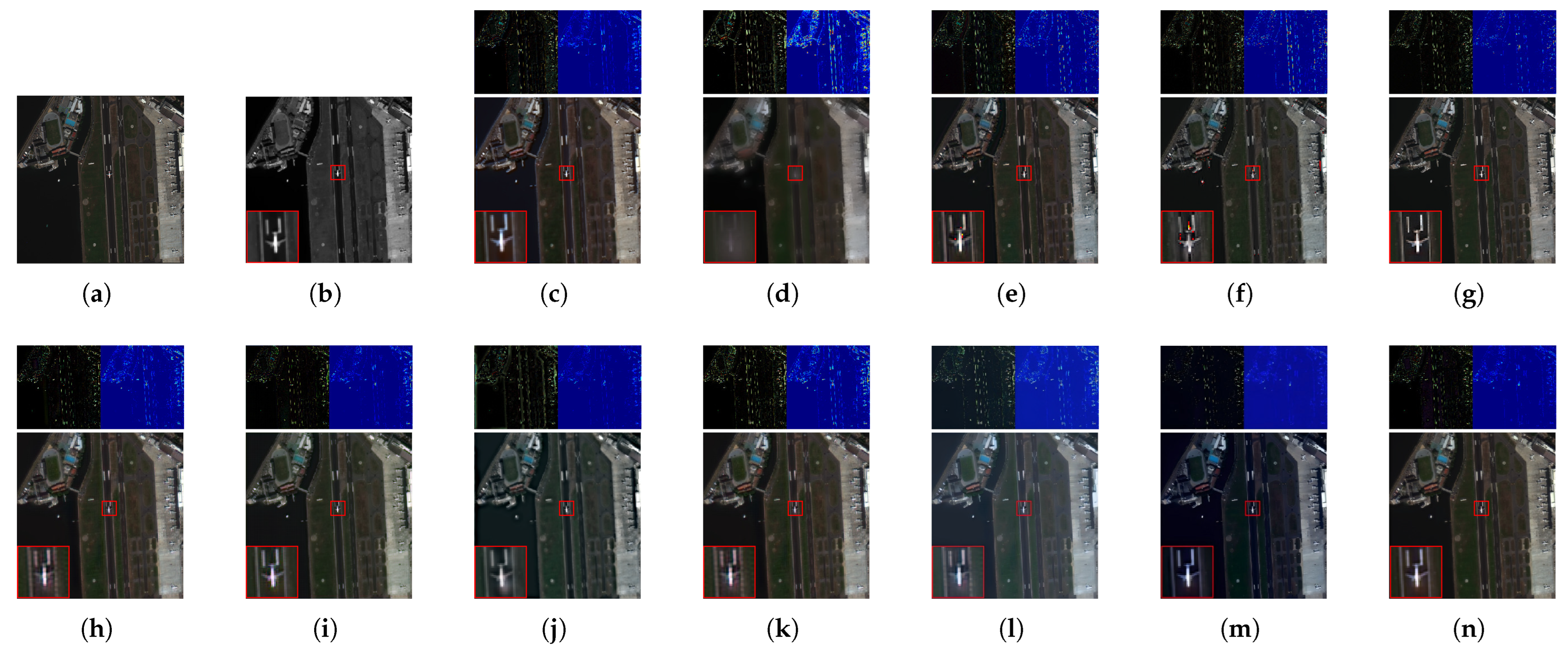

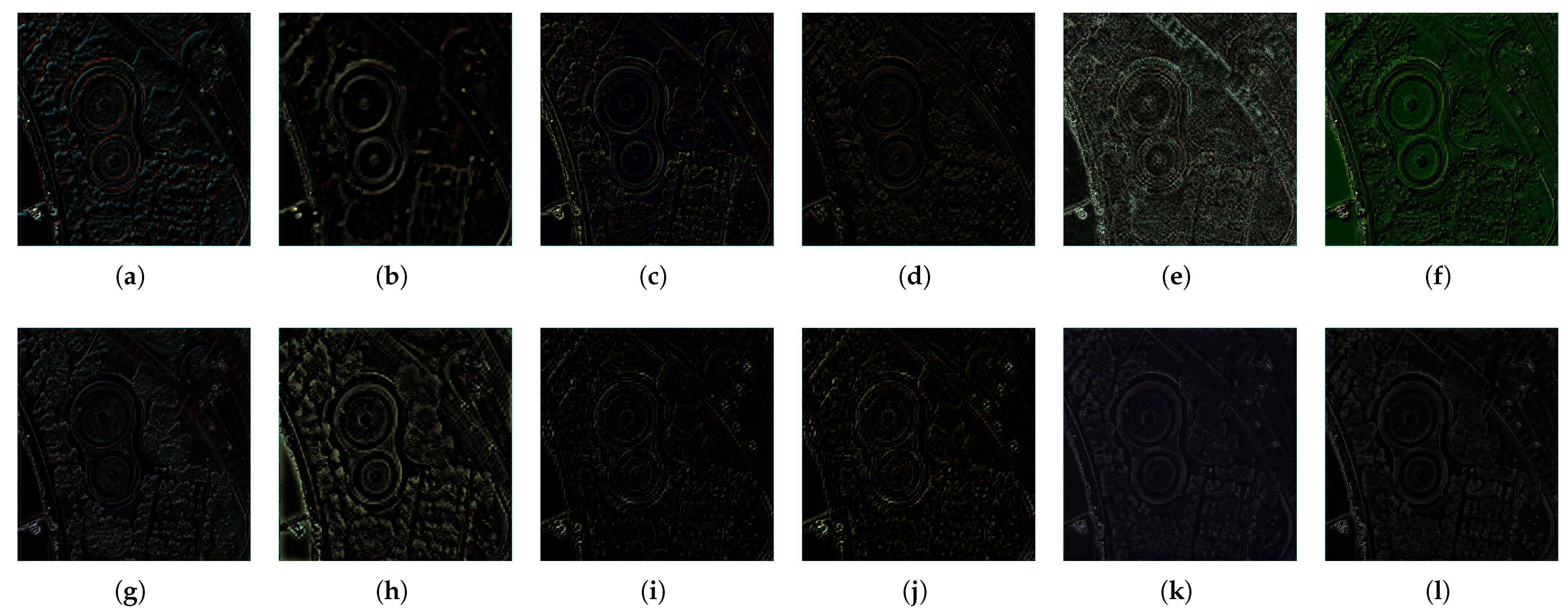

4.4.1. GaoFen-2 Dataset

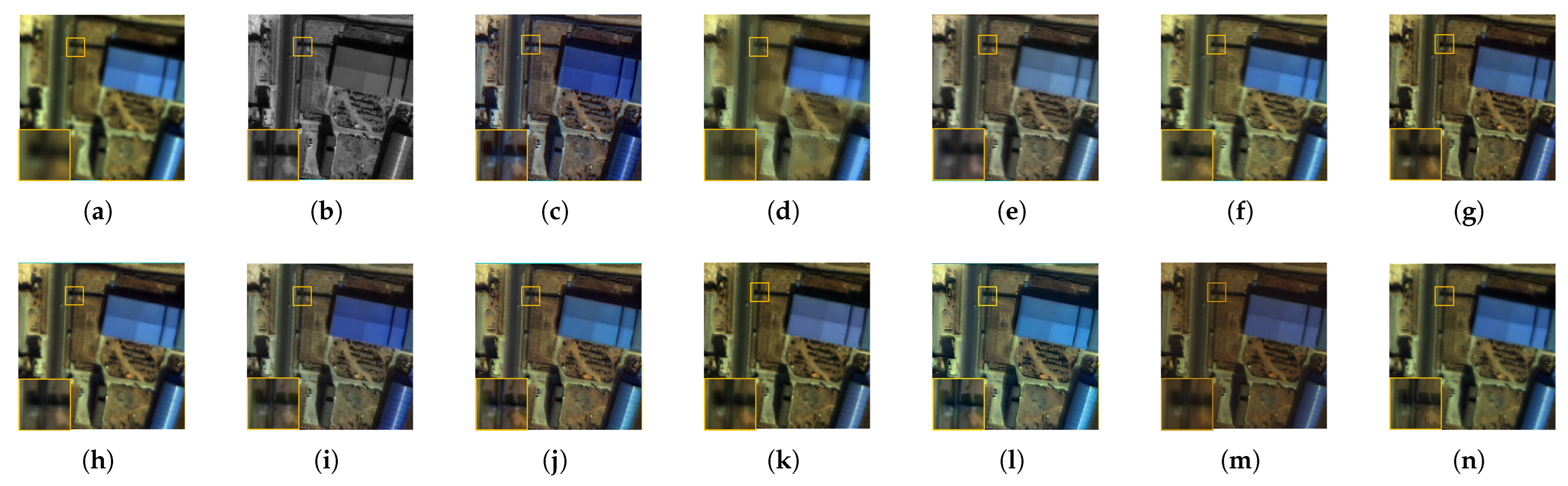

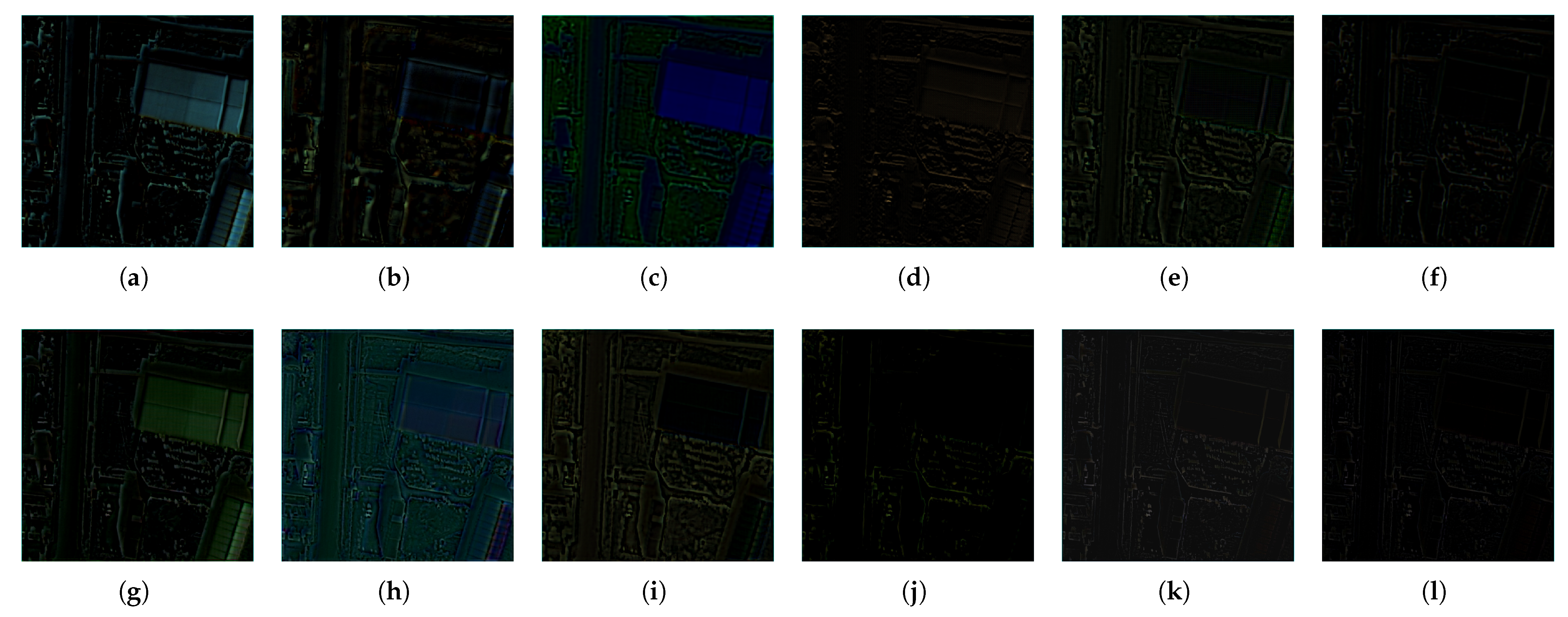

4.4.2. QuickBird Dataset

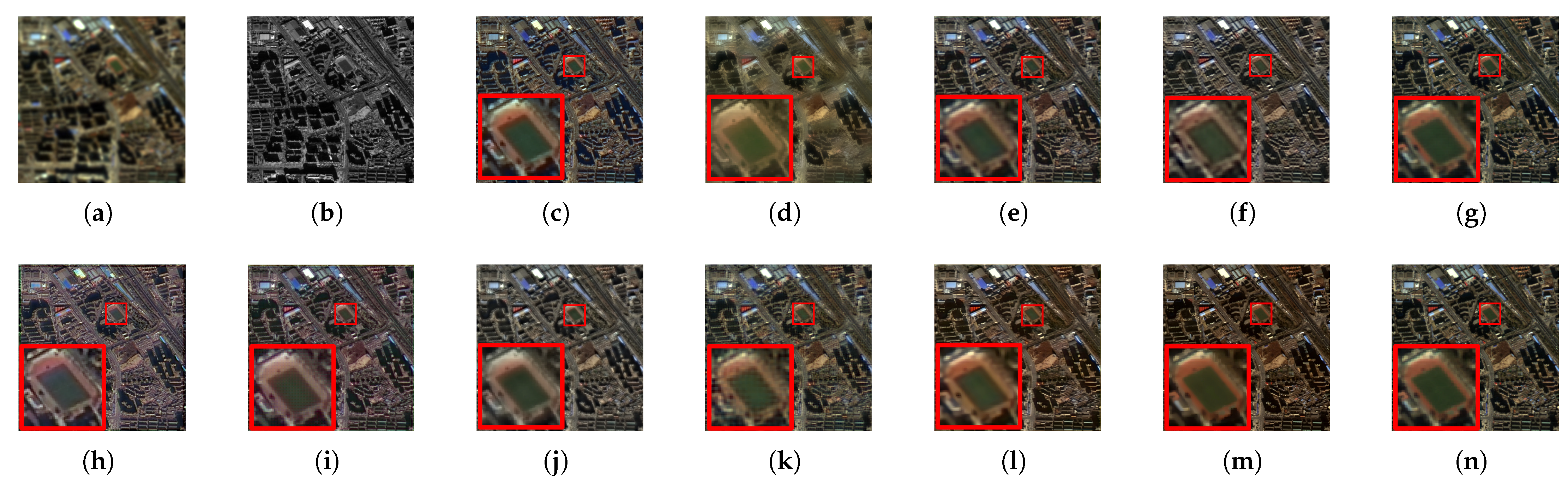

4.4.3. WorldView-3 Dataset

4.4.4. Efficiency Analysis

4.5. Ablation Study

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Perretta, M.; Delogu, G.; Funsten, C.; Patriarca, A.; Caputi, E.; Boccia, L. Testing the Impact of Pansharpening Using PRISMA Hyperspectral Data: A Case Study Classifying Urban Trees in Naples, Italy. Remote Sens. 2024, 16, 3730.

2. Wang, N.; Chen, S.; Huang, J.; Frappart, F.; Taghizadeh, R.; Zhang, X.; Wigneron, J.P.; Xue, J.; Xiao, Y.; Peng, J.; et al. Global soil salinity estimation at 10 m using multi-source remote sensing. J. Remote Sens. 2024, 4, 0130.

3. Zhang, X.; Chen, H.; Zhao, Y.; He, M.; Han, X. Change detection of buildings in remote sensing images using a spatially and contextually aware Siamese network. Expert Syst. Appl. 2025, 276, 127110.

4. Wang, S.; Zou, X.; Li, K.; Xing, J.; Cao, T.; Tao, P. Towards robust pansharpening: A large-scale high-resolution multi-scene dataset and novel approach. Remote Sens. 2024, 16, 2899.

5. Deng, L.J.; Vivone, G.; Paoletti, M.E.; Scarpa, G.; He, J.; Zhang, Y.; Chanussot, J.; Plaza, A. Machine learning in pansharpening: A benchmark, from shallow to deep networks. IEEE Geosci. Remote Sens. Mag. 2022, 10, 279–315.

6. Zini, S.; Barbato, M.P.; Piccoli, F.; Napoletano, P. Deep Learning Hyperspectral Pansharpening on Large-Scale PRISMA Dataset. Remote Sens. 2024, 16, 2079.

7. Ciotola, M.; Guarino, G.; Vivone, G.; Poggi, G.; Chanussot, J.; Plaza, A.; Scarpa, G. Hyperspectral Pansharpening: Critical review, tools, and future perspectives. IEEE Geosci. Remote Sens. Mag. 2025, 13, 311–338.

8. Li, D.; Wang, M.; Guo, H.; Jin, W. On China’s earth observation system: Mission, vision and application. Geo-Spat. Inf. Sci. 2024, 28, 303–321.

9. Cliche, F. Integration of the SPOT panchromatic channel into its multispectral mode for image sharpness enhancement. Photogramm. Eng. Remote Sens. 1985, 51, 311–316.

10. Javan, F.D.; Samadzadegan, F.; Mehravar, S.; Toosi, A.; Khatami, R.; Stein, A. A review of image fusion techniques for pan-sharpening of high-resolution satellite imagery. ISPRS J. Photogramm. Remote Sens. 2021, 171, 101–117.

11. Cui, Y.; Liu, P.; Ma, Y.; Chen, L.; Xu, M.; Guo, X. Pixel-Wise Ensembled Masked Autoencoder for Multispectral Pansharpening. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–22.

12. Arienzo, A.; Alparone, L.; Garzelli, A.; Lolli, S. Advantages of nonlinear intensity components for contrast-based multispectral pansharpening. Remote Sens. 2022, 14, 3301.

13. Gao, H.; Li, S.; Li, J.; Dian, R. Multispectral Image Pan-Sharpening Guided by Component Substitution Model. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5406413.

14. Xie, G.; Nie, R.; Cao, J.; Li, H.; Li, J. A Deep Multiresolution Representation Framework for Pansharpening. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5517216.

15. Xu, S.; Zhong, S.; Li, H.; Gong, C. Spectral–Spatial Attention-Guided Multi-Resolution Network for Pansharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 7559–7571.

16. Cao, X.; Chen, Y.; Cao, W. Proximal PanNet: A model-based deep network for pansharpening. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36, pp. 176–184.

17. Palsson, F.; Ulfarsson, M.O.; Sveinsson, J.R. Model-based reduced-rank pansharpening. IEEE Geosci. Remote Sens. Lett. 2019, 17, 656–660.

18. Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 2016, 8, 594.

19. Liu, Q.; Meng, X.; Shao, F.; Li, S. Supervised-unsupervised combined deep convolutional neural networks for high-fidelity pansharpening. Inf. Fusion 2023, 89, 292–304.

20. Ye, Y.; Wang, T.; Fang, F.; Zhang, G. MSCSCformer: Multiscale Convolutional Sparse Coding-Based Transformer for Pansharpening. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5405112.

21. Liu, X.; Liu, Q.; Wang, Y. Remote sensing image fusion based on two-stream fusion network. Inf. Fusion 2020, 55, 1–15.

22. Yang, Y.; Li, M.; Huang, S.; Lu, H.; Tu, W.; Wan, W. Multi-scale spatial-spectral attention guided fusion network for pansharpening. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 3346–3354.

23. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

24. Zhang, K.; Wang, A.; Zhang, F.; Diao, W.; Sun, J.; Bruzzone, L. Spatial and spectral extraction network with adaptive feature fusion for pansharpening. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5410814.

25. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

26. Zhou, H.; Liu, Q.; Wang, Y. PanFormer: A transformer based model for pan-sharpening. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo, Taipei, Taiwan, 18–22 July 2022; pp. 1–6.

27. Bandara, W.G.C.; Patel, V.M. HyperTransformer: A textural and spectral feature fusion transformer for pansharpening. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1767–1777.

28. Li, Z.; Li, J.; Ren, L.; Chen, Z. Transformer-based dual-branch multiscale fusion network for pan-sharpening remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 614–632.

29. Zhang, F.; Zhang, K.; Sun, J.; Wang, J.; Bruzzone, L. DRFormer: Learning Disentangled Representation for Pan-Sharpening via Mutual Information-Based Transformer. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5400115.

30. Li, S.; Guo, Q.; Li, A. Pan-sharpening based on CNN+ pyramid transformer by using no-reference loss. Remote Sens. 2022, 14, 624.

31. Meng, Y.; Zhu, H.; Yi, X.; Hou, B.; Wang, S.; Wang, Y.; Chen, K.; Jiao, L. FAFormer: Frequency-Analysis-Based Transformer Focusing on Correlation and Specificity for Pansharpening. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5403413.

32. Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

33. Yuan, Q.; Wei, Y.; Meng, X.; Shen, H.; Zhang, L. A multiscale and multidepth convolutional neural network for remote sensing imagery pan-sharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 978–989.

34. Yang, J.; Fu, X.; Hu, Y.; Huang, Y.; Ding, X.; Paisley, J. PanNet: A deep network architecture for pan-sharpening. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5449–5457.

35. Dian, R.; Shan, T.; He, W.; Liu, H. Spectral Super-Resolution via Model-Guided Cross-Fusion Network. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 10059–10070.

36. Dian, R.; Liu, Y.; Li, S. Hyperspectral Image Fusion via a Novel Generalized Tensor Nuclear Norm Regularization. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 7437–7448.

37. Liu, Q.; Zhou, H.; Xu, Q.; Liu, X.; Wang, Y. PSGAN: A generative adversarial network for remote sensing image pan-sharpening. IEEE Trans. Geosci. Remote Sens. 2020, 59, 10227–10242.

38. Liu, Y.; Dian, R.; Li, S. Low-rank transformer for high-resolution hyperspectral computational imaging. Int. J. Comput. Vis. 2025, 133, 809–824.

39. Dian, R.; Liu, Y.; Li, S. Spectral Super-Resolution via Deep Low-Rank Tensor Representation. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 5140–5150.

40. He, X.; Cao, K.; Zhang, J.; Yan, K.; Wang, Y.; Li, R.; Xie, C.; Hong, D.; Zhou, M. Pan-Mamba: Effective pan-sharpening with state space model. Inf. Fusion 2025, 115, 102779.

41. Lu, P.; Jiang, X.; Zhang, Y.; Liu, X.; Cai, Z.; Jiang, J.; Plaza, A. Spectral–spatial and superpixelwise unsupervised linear discriminant analysis for feature extraction and classification of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5530515.

42. Ciotola, M.; Guarino, G.; Scarpa, G. An Unsupervised CNN-Based Pansharpening Framework with Spectral-Spatial Fidelity Balance. Remote Sens. 2024, 16, 3014.

43. Luo, S.; Zhou, S.; Feng, Y.; Xie, J. Pansharpening via unsupervised convolutional neural networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4295–4310.

44. Xiong, Z.; Liu, N.; Wang, N.; Sun, Z.; Li, W. Unsupervised pansharpening method using residual network with spatial texture attention. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5402112.

45. Ni, J.; Shao, Z.; Zhang, Z.; Hou, M.; Zhou, J.; Fang, L.; Zhang, Y. LDP-Net: An unsupervised pansharpening network based on learnable degradation processes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5468–5479.

46. Uezato, T.; Hong, D.; Yokoya, N.; He, W. Guided deep decoder: Unsupervised image pair fusion. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: New York, NY, USA, 2020; pp. 87–102.

47. Ma, J.; Yu, W.; Chen, C.; Liang, P.; Guo, X.; Jiang, J. Pan-GAN: An unsupervised pan-sharpening method for remote sensing image fusion. Inf. Fusion 2020, 62, 110–120.

48. Zhou, H.; Liu, Q.; Wang, Y. PGMAN: An unsupervised generative multiadversarial network for pansharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6316–6327.

49. Dian, R.; Guo, A.; Li, S. Zero-shot hyperspectral sharpening. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12650–12666.

50. Rui, X.; Cao, X.; Pang, L.; Zhu, Z.; Yue, Z.; Meng, D. Unsupervised hyperspectral pansharpening via low-rank diffusion model. Inf. Fusion 2024, 107, 102325.

51. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

52. Li, Y.; Wei, F.; Zhang, Y.; Chen, W.; Ma, J. HS2P: Hierarchical spectral and structure-preserving fusion network for multimodal remote sensing image cloud and shadow removal. Inf. Fusion 2023, 94, 215–228.

53. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

54. Zhang, Y.; Zhang, T.; Wu, C.; Tao, R. Multi-scale spatiotemporal feature fusion network for video saliency prediction. IEEE Trans. Multimed. 2023, 26, 4183–4193.

55. Zhang, H.; Zu, K.; Lu, J.; Zou, Y.; Meng, D. EPSANet: An efficient pyramid squeeze attention block on convolutional neural network. In Proceedings of the Asian Conference on Computer Vision, Macau, China, 4–8 December 2022; pp. 1161–1177.

56. Restaino, R. Pansharpening Techniques: Optimizing the Loss Function for Convolutional Neural Networks. Remote Sens. 2024, 17, 16.

57. Wu, X.; Feng, J.; Shang, R.; Wu, J.; Zhang, X.; Jiao, L.; Gamba, P. Multi-task multi-objective evolutionary network for hyperspectral image classification and pansharpening. Inf. Fusion 2024, 108, 102383.

58. Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

59. Pushparaj, J.; Hegde, A.V. Evaluation of pan-sharpening methods for spatial and spectral quality. Appl. Geomat. 2017, 9, 1–12.

60. Zhao, W.; Dai, Q.; Zheng, Y.; Wang, L. A new pansharpen method based on guided image filtering: A case study over Gaofen-2 imagery. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 3766–3769.

61. Snehmani; Gore, A.; Ganju, A.; Kumar, S.; Srivastava, P.; RP, H.R. A comparative analysis of pansharpening techniques on QuickBird and WorldView-3 images. Geocarto Int. 2017, 32, 1268–1284.

62. Zhou, J.; Civco, D.L.; Silander, J.A. A wavelet transform method to merge Landsat TM and SPOT panchromatic data. Int. J. Remote Sens. 1998, 19, 743–757.

63. Du, Q.; Younan, N.H.; King, R.; Shah, V.P. On the performance evaluation of pan-sharpening techniques. IEEE Geosci. Remote Sens. Lett. 2007, 4, 518–522.

64. Arienzo, A.; Vivone, G.; Garzelli, A.; Alparone, L.; Chanussot, J. Full-Resolution Quality Assessment of Pansharpening: Theoretical and hands-on approaches. IEEE Geosci. Remote Sens. Mag. 2022, 10, 168–201.

65. Yuhas, R.H.; Goetz, A.F.; Boardman, J.W. Discrimination among semi-arid landscape endmembers using the spectral angle mapper (SAM) algorithm. In Proceedings of the JPL, Summaries of the Third Annual JPL Airborne Geoscience Workshop, Pasadena, CA, USA, 1–5 June 1992; pp. 147–149.

66. Khan, S.S.; Ran, Q.; Khan, M.; Ji, Z. Pan-sharpening framework based on Laplacian sharpening with Brovey. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing, Chongqing, China, 11–13 December 2019; pp. 1–5.

67. Gangkofner, U.G.; Pradhan, P.S.; Holcomb, D.W. Optimizing the high-pass filter addition technique for image fusion. Photogramm. Eng. Remote Sens. 2007, 73, 1107–1118.

68. Gao, Z.; Huang, J.; Chen, J.; Zhou, H. FAformer: Parallel Fourier-attention architectures benefits EEG-based affective computing with enhanced spatial information. Neural Comput. Appl. 2024, 36, 3903–3919.

| Sensor | Attribute | Specification |
|---|---|---|
| GaoFen-2 [60] | Acquired Date | August 2014 |
| | Area Covered | Guangzhou, China (Urban City) |
| | Spatial Resolution | MS: 3.2 m; PAN: 0.8 m |
| | Spectral Bands | 4 (Blue, Green, Red, NIR) |
| | Bit Depth | 10-bit |
| | Train Set | PAN: ; MS: ; HRMS: ; Number: 25,000 |
| | Test Set | PAN: ; MS: ; HRMS: ; Number: 286 |
| QuickBird [61] | Acquired Date | 18 October 2013 |
| | Area Covered | Vidhansabha, Rajasthan, India |
| | Spatial Resolution | MS: 2.40 m; PAN: 0.70 m |
| | Spectral Bands | 4 (Blue, Green, Red, NIR) |
| | Bit Depth | 11-bit |
| | Train Set | PAN: ; MS: ; HRMS: ; Number: 4000 |
| | Test Set | PAN: ; MS: ; HRMS: ; Number: 32 |
| WorldView-3 [61] | Acquired Date | 27 November 2014 |
| | Area Covered | Adelaide, Australia |
| | Spatial Resolution | MS: 1.20 m; PAN: 0.30 m |
| | Spectral Bands | 8 (Coastal, Blue, Green, Yellow, Red, Red Edge, NIR1, NIR2) |
| | Bit Depth | 11-bit |
| | Train Set | PAN: ; MS: ; HRMS: ; Number: 18,000 |
| | Test Set | PAN: ; MS: ; HRMS: ; Number: 308 |

Quantitative comparison on the GaoFen-2 dataset.

| Model | SCC | ERGAS | Q4 | SAM | SSIM | HQNR | $D_\lambda$ | $D_S$ |
|---|---|---|---|---|---|---|---|---|
| Brovey | 0.8962 | 3.4862 | 0.8415 | 2.5380 | 0.8290 | 0.8516 | 0.0667 | 0.0875 |
| HPF | 0.9454 | 4.1064 | 0.8682 | 2.6328 | 0.8097 | 0.8643 | 0.0304 | 0.1086 |
| PNN | 0.9450 | 1.5146 | 0.9670 | 1.7236 | 0.9636 | 0.9215 | 0.0372 | 0.0429 |
| PanNet | 0.9713 | 1.3399 | 0.9814 | 1.6753 | 0.9696 | 0.8939 | 0.0289 | 0.0795 |
| PSGAN | 0.9576 | 1.1736 | 0.9858 | 1.5862 | 0.9759 | 0.9384 | 0.0060 | 0.0559 |
| PGMAN | 0.9676 | 1.1659 | 0.9862 | 1.9027 | 0.9712 | 0.9293 | 0.0335 | 0.0385 |
| LDP-Net | 0.9659 | 1.0147 | 0.9813 | 2.3435 | 0.9862 | 0.9588 | 0.0035 | 0.0378 |
| PanFormer | 0.9812 | 0.9641 | 0.9905 | 1.6804 | 0.9819 | 0.9562 | 0.0234 | 0.0209 |
| FAFormer | 0.9757 | 0.9247 | 0.9912 | 0.9362 | 0.9843 | 0.9730 | 0.0075 | 0.0196 |
| PLRDiff | 0.9762 | 0.9328 | 0.9915 | 1.1028 | 0.9635 | 0.9813 | 0.0068 | 0.0120 |
| Pan-Mamba | 0.9785 | 0.9152 | 0.9932 | 1.1652 | 0.9871 | 0.9791 | 0.0056 | 0.0154 |
| Ours | 0.9877 | 0.8789 | 0.9962 | 1.0887 | 0.9860 | 0.9808 | 0.0043 | 0.0150 |
| Reference | 1 | 0 | 1 | 0 | 1 | 1 | 0 | 0 |
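
The two trailing columns of the table above behave like the spectral and spatial distortion indices that make up HQNR. A minimal check, assuming the common unit-exponent form HQNR = (1 − D_λ)(1 − D_S); which column holds D_λ and which holds D_S is an assumption here, although the product does not depend on the order:

```python
def hqnr(d_lambda, d_s):
    """Hybrid QNR with unit exponents: (1 - D_lambda) * (1 - D_S)."""
    return (1.0 - d_lambda) * (1.0 - d_s)

# (reported HQNR, first trailing column, second trailing column) copied from the table.
rows = {
    "Brovey": (0.8516, 0.0667, 0.0875),
    "FAFormer": (0.9730, 0.0075, 0.0196),
    "Ours": (0.9808, 0.0043, 0.0150),
}

for name, (reported, d_lambda, d_s) in rows.items():
    recomputed = hqnr(d_lambda, d_s)
    print(f"{name}: reported {reported:.4f}, recomputed {recomputed:.4f}")
```

For these three rows the recomputed value agrees with the reported HQNR to four decimal places, which supports reading the last two columns as the distortion components.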

Quantitative comparison on the QuickBird dataset.

| Model | SCC | ERGAS | Q4 | SAM | SSIM | HQNR | $D_\lambda$ | $D_S$ |
|---|---|---|---|---|---|---|---|---|
| Brovey | 0.9341 | 2.7190 | 0.8929 | 2.3076 | 0.8600 | 0.8962 | 0.0642 | 0.0423 |
| HPF | 0.9525 | 3.1126 | 0.8489 | 3.2678 | 0.8486 | 0.8924 | 0.0131 | 0.0958 |
| PNN | 0.9246 | 3.3448 | 0.9020 | 3.4870 | 0.9018 | 0.9021 | 0.0518 | 0.0486 |
| PanNet | 0.9633 | 1.7329 | 0.9581 | 1.7976 | 0.9435 | 0.9164 | 0.0507 | 0.0347 |
| PSGAN | 0.9527 | 2.2682 | 0.9426 | 1.9756 | 0.9266 | 0.9589 | 0.0218 | 0.0197 |
| PGMAN | 0.9586 | 2.1039 | 0.9498 | 2.1998 | 0.9102 | 0.9708 | 0.0042 | 0.0251 |
| LDP-Net | 0.9475 | 2.1043 | 0.9505 | 2.2361 | 0.9221 | 0.9326 | 0.0468 | 0.0216 |
| PanFormer | 0.9731 | 1.5726 | 0.9672 | 1.8840 | 0.9262 | 0.9813 | 0.0019 | 0.0168 |
| FAFormer | 0.9748 | 1.7402 | 0.9649 | 1.7980 | 0.9499 | 0.9786 | 0.0036 | 0.0179 |
| PLRDiff | 0.9651 | 1.8240 | 0.9496 | 2.0028 | 0.9267 | 0.9881 | 0.0021 | 0.0098 |
| Pan-Mamba | 0.9783 | 1.6073 | 0.9692 | 1.6893 | 0.9401 | 0.9777 | 0.0053 | 0.0171 |
| Ours | 0.9800 | 1.5921 | 0.9722 | 1.7889 | 0.9448 | 0.9892 | 0.0026 | 0.0082 |
| Reference | 1 | 0 | 1 | 0 | 1 | 1 | 0 | 0 |

Quantitative comparison on the WorldView-3 dataset.

| Model | SCC | ERGAS | Q8 | SAM | SSIM | HQNR | $D_\lambda$ | $D_S$ |
|---|---|---|---|---|---|---|---|---|
| Brovey | 0.8983 | 3.6241 | 0.9019 | 2.4689 | 0.7390 | 0.8961 | 0.0457 | 0.0610 |
| HPF | 0.9008 | 3.7012 | 0.9072 | 2.3458 | 0.8652 | 0.9162 | 0.0483 | 0.0873 |
| PNN | 0.9673 | 3.3726 | 0.9306 | 2.0541 | 0.9352 | 0.9023 | 0.0315 | 0.0684 |
| PanNet | 0.9693 | 3.5024 | 0.9385 | 1.5627 | 0.9483 | 0.9119 | 0.0409 | 0.0492 |
| PSGAN | 0.9686 | 3.4696 | 0.9209 | 1.6923 | 0.9592 | 0.9108 | 0.0415 | 0.0498 |
| PGMAN | 0.9703 | 2.9621 | 0.9571 | 1.0649 | 0.9598 | 0.9528 | 0.0176 | 0.0301 |
| LDP-Net | 0.9793 | 2.8756 | 0.9529 | 1.3642 | 0.9629 | 0.9097 | 0.0609 | 0.0813 |
| PanFormer | 0.9744 | 2.8889 | 0.9416 | 0.8607 | 0.9693 | 0.9570 | 0.0189 | 0.0246 |
| FAFormer | 0.9857 | 2.9362 | 0.9486 | 0.8562 | 0.9697 | 0.9583 | 0.0227 | 0.0194 |
| PLRDiff | 0.9792 | 2.9062 | 0.9493 | 0.8735 | 0.9609 | 0.9771 | 0.0062 | 0.0168 |
| Pan-Mamba | 0.9806 | 2.6815 | 0.9608 | 0.7006 | 0.9883 | 0.9592 | 0.0195 | 0.0217 |
| Ours | 0.9887 | 2.3152 | 0.9673 | 0.6529 | 0.9830 | 0.9764 | 0.0028 | 0.0209 |
| Reference | 1 | 0 | 1 | 0 | 1 | 1 | 0 | 0 |

| Model | Inference Time (s) | Parameters (M) | FLOPs (G) |
|---|---|---|---|
| Brovey [66] | 0.05 | - | - |
| HPF [67] | 0.07 | - | - |
| PNN [18] | 0.08 | 0.10 | 1.56 |
| PanNet [34] | 0.09 | 0.08 | 1.22 |
| PSGAN [37] | 0.13 | 2.44 | 33.79 |
| PGMAN [48] | 0.11 | 3.91 | 12.77 |
| LDP-Net [45] | 0.10 | 0.11 | 0.14 |
| PanFormer [26] | 0.40 | 1.62 | 61.01 |
| FAFormer [68] | 0.34 | 2.32 | 2.69 |
| PLRDiff [50] | 25.80 | 391.05 | 5552.80 |
| Pan-Mamba [40] | 0.10 | 0.49 | 16.02 |
| Ours | 0.13 | 0.38 | 6.10 |

| Methods | QuickBird HQNR | QuickBird ERGAS | QuickBird Q4 | GaoFen-2 HQNR | GaoFen-2 ERGAS | GaoFen-2 Q4 |
|---|---|---|---|---|---|---|
| Ours | 0.9892 | 1.5921 | 0.9722 | 0.9808 | 0.8789 | 0.9962 |
| Removal Ablation Studies | | | | | | |
| w/o RBCA | 0.9862 | 1.8533 | 0.9650 | 0.9783 | 0.9492 | 0.9947 |
| w/o MSFF | 0.9732 | 1.7245 | 0.9532 | 0.9592 | 0.9608 | 0.9922 |
| w/o PSA | 0.9804 | 1.6890 | 0.9588 | 0.9668 | 0.8992 | 0.9937 |
| Replacement Ablation Studies | | | | | | |
| RBCA → 3 × 3 Conv | 0.9845 | 1.6234 | 0.9685 | 0.9765 | 0.9012 | 0.9925 |
| MSFF → 1 × 1 Conv + Concat | 0.9823 | 1.6445 | 0.9668 | 0.9734 | 0.9156 | 0.9898 |
| PSA → Spatial Attention | 0.9876 | 1.5934 | 0.9712 | 0.9794 | 0.8823 | 0.9952 |
| Reference | 1 | 0 | 1 | 1 | 0 | 1 |
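
One way to read the ablation figures is as a relative change against the full model. The sketch below does this for QuickBird ERGAS in the removal experiments; the helper name `relative_change` is hypothetical and the inputs are copied from the table above.

```python
def relative_change(ablated, full):
    """Percentage change of a metric relative to the full model."""
    return 100.0 * (ablated - full) / full

full_ergas = 1.5921  # "Ours" on QuickBird
ablated_ergas = {"w/o RBCA": 1.8533, "w/o MSFF": 1.7245, "w/o PSA": 1.6890}

for variant, value in ablated_ergas.items():
    print(f"{variant}: ERGAS {relative_change(value, full_ergas):+.1f}%")
```

On these figures, removing RBCA raises QuickBird ERGAS by roughly 16%, compared with roughly 8% for MSFF and 6% for PSA. Since ERGAS is an error measure, a larger increase indicates a larger contribution from the removed module on this metric.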

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kang, W.; Feng, Y.; Ding, Y.; Xiang, H.; Liu, X.; Cai, Y. HSSTN: A Hybrid Spectral–Structural Transformer Network for High-Fidelity Pansharpening. Remote Sens. 2025, 17, 3271. https://doi.org/10.3390/rs17193271